WO2021024083A1 - Semiconductor device - Google Patents

Semiconductor device

- Publication number

- WO2021024083A1 (PCT/IB2020/057051)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- circuit

- transistor

- memory

- data

- cpu

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Classifications

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F7/00—Methods or arrangements for processing data by operating upon the order or content of the data handled

- G06F7/38—Methods or arrangements for performing computations using exclusively denominational number representation, e.g. using binary, ternary, decimal representation

- G06F7/48—Methods or arrangements for performing computations using exclusively denominational number representation, e.g. using binary, ternary, decimal representation using non-contact-making devices, e.g. tube, solid state device; using unspecified devices

- G06F7/544—Methods or arrangements for performing computations using exclusively denominational number representation, e.g. using binary, ternary, decimal representation using non-contact-making devices, e.g. tube, solid state device; using unspecified devices for evaluating functions by calculation

- G06F7/5443—Sum of products

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10D—INORGANIC ELECTRIC SEMICONDUCTOR DEVICES

- H10D30/00—Field-effect transistors [FET]

- H10D30/60—Insulated-gate field-effect transistors [IGFET]

- H10D30/67—Thin-film transistors [TFT]

- H10D30/674—Thin-film transistors [TFT] characterised by the active materials

- H10D30/6755—Oxide semiconductors, e.g. zinc oxide, copper aluminium oxide or cadmium stannate

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F12/00—Accessing, addressing or allocating within memory systems or architectures

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11C—STATIC STORES

- G11C11/00—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor

- G11C11/21—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements

- G11C11/34—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements using semiconductor devices

- G11C11/40—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements using semiconductor devices using transistors

- G11C11/401—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements using semiconductor devices using transistors forming cells needing refreshing or charge regeneration, i.e. dynamic cells

- G11C11/403—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements using semiconductor devices using transistors forming cells needing refreshing or charge regeneration, i.e. dynamic cells with charge regeneration common to a multiplicity of memory cells, i.e. external refresh

- G11C11/404—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements using semiconductor devices using transistors forming cells needing refreshing or charge regeneration, i.e. dynamic cells with charge regeneration common to a multiplicity of memory cells, i.e. external refresh with one charge-transfer gate, e.g. MOS transistor, per cell

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11C—STATIC STORES

- G11C11/00—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor

- G11C11/21—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements

- G11C11/34—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements using semiconductor devices

- G11C11/40—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements using semiconductor devices using transistors

- G11C11/401—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements using semiconductor devices using transistors forming cells needing refreshing or charge regeneration, i.e. dynamic cells

- G11C11/403—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements using semiconductor devices using transistors forming cells needing refreshing or charge regeneration, i.e. dynamic cells with charge regeneration common to a multiplicity of memory cells, i.e. external refresh

- G11C11/405—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements using semiconductor devices using transistors forming cells needing refreshing or charge regeneration, i.e. dynamic cells with charge regeneration common to a multiplicity of memory cells, i.e. external refresh with three charge-transfer gates, e.g. MOS transistors, per cell

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11C—STATIC STORES

- G11C11/00—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor

- G11C11/21—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements

- G11C11/34—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements using semiconductor devices

- G11C11/40—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements using semiconductor devices using transistors

- G11C11/401—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using electric elements using semiconductor devices using transistors forming cells needing refreshing or charge regeneration, i.e. dynamic cells

- G11C11/4063—Auxiliary circuits, e.g. for addressing, decoding, driving, writing, sensing or timing

- G11C11/407—Auxiliary circuits, e.g. for addressing, decoding, driving, writing, sensing or timing for memory cells of the field-effect type

- G11C11/409—Read-write [R-W] circuits

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11C—STATIC STORES

- G11C11/00—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor

- G11C11/54—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor using elements simulating biological cells, e.g. neuron

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11C—STATIC STORES

- G11C14/00—Digital stores characterised by arrangements of cells having volatile and non-volatile storage properties for back-up when the power is down

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11C—STATIC STORES

- G11C16/00—Erasable programmable read-only memories

- G11C16/02—Erasable programmable read-only memories electrically programmable

- G11C16/06—Auxiliary circuits, e.g. for writing into memory

- G11C16/10—Programming or data input circuits

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11C—STATIC STORES

- G11C16/00—Erasable programmable read-only memories

- G11C16/02—Erasable programmable read-only memories electrically programmable

- G11C16/06—Auxiliary circuits, e.g. for writing into memory

- G11C16/26—Sensing or reading circuits; Data output circuits

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11C—STATIC STORES

- G11C7/00—Arrangements for writing information into, or reading information out from, a digital store

- G11C7/10—Input/output [I/O] data interface arrangements, e.g. I/O data control circuits, I/O data buffers

- G11C7/1051—Data output circuits, e.g. read-out amplifiers, data output buffers, data output registers, data output level conversion circuits

- G11C7/1069—I/O lines read out arrangements

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11C—STATIC STORES

- G11C7/00—Arrangements for writing information into, or reading information out from, a digital store

- G11C7/10—Input/output [I/O] data interface arrangements, e.g. I/O data control circuits, I/O data buffers

- G11C7/1078—Data input circuits, e.g. write amplifiers, data input buffers, data input registers, data input level conversion circuits

- G11C7/1096—Write circuits, e.g. I/O line write drivers

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11C—STATIC STORES

- G11C7/00—Arrangements for writing information into, or reading information out from, a digital store

- G11C7/12—Bit line control circuits, e.g. drivers, boosters, pull-up circuits, pull-down circuits, precharging circuits, equalising circuits, for bit lines

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11C—STATIC STORES

- G11C7/00—Arrangements for writing information into, or reading information out from, a digital store

- G11C7/14—Dummy cell management; Sense reference voltage generators

-

- H—ELECTRICITY

- H03—ELECTRONIC CIRCUITRY

- H03K—PULSE TECHNIQUE

- H03K3/00—Circuits for generating electric pulses; Monostable, bistable or multistable circuits

- H03K3/02—Generators characterised by the type of circuit or by the means used for producing pulses

- H03K3/027—Generators characterised by the type of circuit or by the means used for producing pulses by the use of logic circuits, with internal or external positive feedback

- H03K3/037—Bistable circuits

-

- H—ELECTRICITY

- H03—ELECTRONIC CIRCUITRY

- H03K—PULSE TECHNIQUE

- H03K3/00—Circuits for generating electric pulses; Monostable, bistable or multistable circuits

- H03K3/02—Generators characterised by the type of circuit or by the means used for producing pulses

- H03K3/353—Generators characterised by the type of circuit or by the means used for producing pulses by the use, as active elements, of field-effect transistors with internal or external positive feedback

- H03K3/356—Bistable circuits

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10B—ELECTRONIC MEMORY DEVICES

- H10B12/00—Dynamic random access memory [DRAM] devices

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10B—ELECTRONIC MEMORY DEVICES

- H10B12/00—Dynamic random access memory [DRAM] devices

- H10B12/50—Peripheral circuit region structures

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10D—INORGANIC ELECTRIC SEMICONDUCTOR DEVICES

- H10D84/00—Integrated devices formed in or on semiconductor substrates that comprise only semiconducting layers, e.g. on Si wafers or on GaAs-on-Si wafers

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10D—INORGANIC ELECTRIC SEMICONDUCTOR DEVICES

- H10D84/00—Integrated devices formed in or on semiconductor substrates that comprise only semiconducting layers, e.g. on Si wafers or on GaAs-on-Si wafers

- H10D84/01—Manufacture or treatment

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10D—INORGANIC ELECTRIC SEMICONDUCTOR DEVICES

- H10D84/00—Integrated devices formed in or on semiconductor substrates that comprise only semiconducting layers, e.g. on Si wafers or on GaAs-on-Si wafers

- H10D84/01—Manufacture or treatment

- H10D84/0123—Integrating together multiple components covered by H10D12/00 or H10D30/00, e.g. integrating multiple IGBTs

- H10D84/0126—Integrating together multiple components covered by H10D12/00 or H10D30/00, e.g. integrating multiple IGBTs the components including insulated gates, e.g. IGFETs

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10D—INORGANIC ELECTRIC SEMICONDUCTOR DEVICES

- H10D84/00—Integrated devices formed in or on semiconductor substrates that comprise only semiconducting layers, e.g. on Si wafers or on GaAs-on-Si wafers

- H10D84/01—Manufacture or treatment

- H10D84/02—Manufacture or treatment characterised by using material-based technologies

- H10D84/03—Manufacture or treatment characterised by using material-based technologies using Group IV technology, e.g. silicon technology or silicon-carbide [SiC] technology

- H10D84/038—Manufacture or treatment characterised by using material-based technologies using Group IV technology, e.g. silicon technology or silicon-carbide [SiC] technology using silicon technology, e.g. SiGe

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10D—INORGANIC ELECTRIC SEMICONDUCTOR DEVICES

- H10D84/00—Integrated devices formed in or on semiconductor substrates that comprise only semiconducting layers, e.g. on Si wafers or on GaAs-on-Si wafers

- H10D84/80—Integrated devices formed in or on semiconductor substrates that comprise only semiconducting layers, e.g. on Si wafers or on GaAs-on-Si wafers characterised by the integration of at least one component covered by groups H10D12/00 or H10D30/00, e.g. integration of IGFETs

- H10D84/82—Integrated devices formed in or on semiconductor substrates that comprise only semiconducting layers, e.g. on Si wafers or on GaAs-on-Si wafers characterised by the integration of at least one component covered by groups H10D12/00 or H10D30/00, e.g. integration of IGFETs of only field-effect components

- H10D84/83—Integrated devices formed in or on semiconductor substrates that comprise only semiconducting layers, e.g. on Si wafers or on GaAs-on-Si wafers characterised by the integration of at least one component covered by groups H10D12/00 or H10D30/00, e.g. integration of IGFETs of only field-effect components of only insulated-gate FETs [IGFET]

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11C—STATIC STORES

- G11C11/00—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor

- G11C11/005—Digital stores characterised by the use of particular electric or magnetic storage elements; Storage elements therefor comprising combined but independently operative RAM-ROM, RAM-PROM, RAM-EPROM cells

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11C—STATIC STORES

- G11C2211/00—Indexing scheme relating to digital stores characterized by the use of particular electric or magnetic storage elements; Storage elements therefor

- G11C2211/56—Indexing scheme relating to G11C11/56 and sub-groups for features not covered by these groups

- G11C2211/564—Miscellaneous aspects

- G11C2211/5641—Multilevel memory having cells with different number of storage levels

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10D—INORGANIC ELECTRIC SEMICONDUCTOR DEVICES

- H10D86/00—Integrated devices formed in or on insulating or conducting substrates, e.g. formed in silicon-on-insulator [SOI] substrates or on stainless steel or glass substrates

- H10D86/40—Integrated devices formed in or on insulating or conducting substrates, e.g. formed in silicon-on-insulator [SOI] substrates or on stainless steel or glass substrates characterised by multiple TFTs

- H10D86/421—Integrated devices formed in or on insulating or conducting substrates, e.g. formed in silicon-on-insulator [SOI] substrates or on stainless steel or glass substrates characterised by multiple TFTs having a particular composition, shape or crystalline structure of the active layer

- H10D86/423—Integrated devices formed in or on insulating or conducting substrates, e.g. formed in silicon-on-insulator [SOI] substrates or on stainless steel or glass substrates characterised by multiple TFTs having a particular composition, shape or crystalline structure of the active layer comprising semiconductor materials not belonging to the Group IV, e.g. InGaZnO

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10D—INORGANIC ELECTRIC SEMICONDUCTOR DEVICES

- H10D86/00—Integrated devices formed in or on insulating or conducting substrates, e.g. formed in silicon-on-insulator [SOI] substrates or on stainless steel or glass substrates

- H10D86/40—Integrated devices formed in or on insulating or conducting substrates, e.g. formed in silicon-on-insulator [SOI] substrates or on stainless steel or glass substrates characterised by multiple TFTs

- H10D86/60—Integrated devices formed in or on insulating or conducting substrates, e.g. formed in silicon-on-insulator [SOI] substrates or on stainless steel or glass substrates characterised by multiple TFTs wherein the TFTs are in active matrices

Definitions

- one aspect of the present invention is not limited to the above technical fields.

- Examples of technical fields of one aspect of the present invention disclosed in this specification and the like include semiconductor devices, imaging devices, display devices, light-emitting devices, power storage devices, storage devices, display systems, electronic devices, lighting devices, input devices, input/output devices, their driving methods, and their manufacturing methods.

- BNN: Binary Neural Network

- TNN: Ternary Neural Network

- In a TNN, the amount of calculation and the number of parameters can be greatly reduced by compressing data originally expressed with 32-bit or 16-bit precision into the three values "+1", "0", or "-1". Since BNNs and TNNs are effective for reducing circuit scale and power consumption, they are considered well suited to applications that require low power consumption with limited hardware resources, such as embedded chips.

- Ternary data is used for TNN calculation.

- SRAM: Static RAM

- When ternary data is stored in SRAM, the number of transistors in the memory cell increases. Therefore, miniaturizing the semiconductor device may become difficult.
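The compression of higher-precision values into "+1", "0", or "-1" described above can be illustrated with a minimal Python sketch. The source does not state the quantization rule, so the threshold-based rule, the `threshold` value, the function names, and the use of +1/-1 for the binary case are all assumptions made for illustration.

```python
def ternarize(weights, threshold=0.05):
    """Map each real-valued weight to +1, 0, or -1.

    The threshold rule is an assumed, illustrative quantizer;
    the source does not specify how values are compressed.
    """
    out = []
    for w in weights:
        if w > threshold:
            out.append(1)
        elif w < -threshold:
            out.append(-1)
        else:
            out.append(0)
    return out


def binarize(weights):
    """Map each weight to +1 or -1 by sign (assumed BNN-style encoding)."""
    return [1 if w >= 0 else -1 for w in weights]


weights = [0.42, -0.01, -0.73, 0.03, 0.10]
print(ternarize(weights))  # [1, 0, -1, 0, 1]
print(binarize(weights))   # [1, -1, -1, 1, 1]
```

Each ternary value needs three distinguishable states per stored element, which is the reason the memory cell design matters in the discussion that follows.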

- The data stored in the memory may be switched between binary data and ternary data. In this case, in a configuration in which separate memory cells are prepared for each type of data, the number of transistors in the memory cells increases. Therefore, miniaturizing the semiconductor device may become difficult.

- The power consumption of the semiconductor device is dominated by the number of data transfers in the CPU. Therefore, to reduce the power consumption or suppress heat generation of the semiconductor device, it is important to suppress an increase in the number of data transfers.

- An object of one aspect of the present invention is to reduce the size of a semiconductor device. Alternatively, an object is to reduce the power consumption of the semiconductor device. Alternatively, an object is to suppress heat generation of the semiconductor device. Alternatively, an object is to reduce the number of data transfers between the CPU and the semiconductor device that functions as a memory. Alternatively, an object is to provide a semiconductor device having a new configuration.

- One aspect of the present invention does not necessarily have to solve all of the above problems, as long as it can solve at least one of them. Moreover, the description of the above problems does not preclude the existence of other problems. Problems other than these will become apparent from the description of the specification, claims, drawings, and the like, and can be extracted from that description.

- One aspect of the present invention is a semiconductor device including a CPU and an accelerator, in which the accelerator has a first memory circuit and an arithmetic circuit, and the first memory circuit has a first transistor.

- The first transistor has a semiconductor layer having a metal oxide in the channel forming region.

- The arithmetic circuit has a second transistor.

- The second transistor has a semiconductor layer having silicon in the channel forming region.

- The first transistor and the second transistor are provided in a stacked manner.

- One aspect of the present invention is a semiconductor device including a CPU and an accelerator, in which the accelerator has a first memory circuit, a drive circuit, and an arithmetic circuit, and the first memory circuit has a first transistor.

- The first transistor has a semiconductor layer having a metal oxide in the channel forming region.

- The drive circuit has a write circuit and a read circuit.

- The write circuit has a function of switching the data to be written to the first memory circuit to a binary or ternary voltage value in accordance with a switching signal, a write control signal, and a data signal, and outputting it.

- The read circuit reads the data held in the first memory circuit in accordance with a first reference voltage and a second reference voltage.

- The drive circuit and the arithmetic circuit have a second transistor, and the second transistor has a semiconductor layer having silicon in the channel forming region.

- The first transistor and the second transistor are provided in a stacked manner.
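As a rough behavioral illustration of the write and read circuits in this aspect, the following Python sketch switches between binary and ternary voltage levels on write and compares a held voltage against two reference voltages on read. The numeric voltage levels, the function and parameter names, and the choice of the midpoint of the two references as the binary threshold are hypothetical; the source gives none of these details.

```python
# Hypothetical voltage levels; the source does not give numeric values.
V_LOW, V_MID, V_HIGH = 0.0, 0.6, 1.2


def write_voltage(data, ternary_mode, write_enable=True):
    """Return the voltage to write to the memory cell.

    ternary_mode stands in for the switching signal, write_enable for
    the write control signal, and data for the data signal.
    Returns None when writing is disabled.
    """
    if not write_enable:
        return None
    if ternary_mode:
        return {-1: V_LOW, 0: V_MID, 1: V_HIGH}[data]
    return {0: V_LOW, 1: V_HIGH}[data]


def read_data(voltage, vref1, vref2, ternary_mode):
    """Decode a held voltage using two reference voltages (vref1 < vref2)."""
    if ternary_mode:
        if voltage < vref1:
            return -1
        if voltage < vref2:
            return 0
        return 1
    # Assumed binary rule: one threshold at the midpoint of the references.
    return 1 if voltage >= (vref1 + vref2) / 2 else 0


for value in (-1, 0, 1):
    held = write_voltage(value, ternary_mode=True)
    print(value, "->", held, "->", read_data(held, 0.3, 0.9, ternary_mode=True))
```

The point of the sketch is that the same cell and the same pair of references can serve both data formats, so no separate memory cells are needed per format.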

- One aspect of the present invention is a semiconductor device including a CPU and an accelerator, in which the accelerator has a first memory circuit and an arithmetic circuit, and the first memory circuit has a first transistor. The first transistor has a semiconductor layer having a metal oxide in the channel forming region.

- The arithmetic circuit has a second transistor, and the second transistor has a semiconductor layer having silicon in the channel forming region.

- The CPU has a CPU core having a flip-flop provided with a backup circuit, the backup circuit has a third transistor, and the third transistor has a semiconductor layer having a metal oxide in the channel forming region.

- The first transistor and the second transistor are provided in a stacked manner.

- One aspect of the present invention is a semiconductor device including a CPU and an accelerator, in which the accelerator has a first memory circuit, a drive circuit, and an arithmetic circuit, and the first memory circuit has a first transistor.

- The first transistor has a semiconductor layer having a metal oxide in the channel forming region.

- The drive circuit has a write circuit and a read circuit.

- The write circuit has a function of switching the data to be written to the first memory circuit to a binary or ternary voltage value in accordance with a switching signal, a write control signal, and a data signal, and outputting it.

- The read circuit reads the data held in the first memory circuit in accordance with a first reference voltage and a second reference voltage.

- The arithmetic circuit has a second transistor, and the second transistor has a semiconductor layer having silicon in the channel forming region. The CPU has a CPU core having a flip-flop provided with a backup circuit, the backup circuit has a third transistor, and the third transistor has a semiconductor layer having a metal oxide in the channel forming region. The first transistor and the second transistor are provided in a stacked manner.

- In one aspect of the present invention, the backup circuit preferably has a function of holding the data held in the flip-flop while the supply of the power supply voltage is stopped when the CPU is not operating.

- In one aspect of the present invention, the arithmetic circuit is preferably a circuit that performs a product-sum calculation.

- In one aspect of the present invention, the metal oxide preferably contains In, Ga, and Zn.

- In one aspect of the present invention, the first transistor is preferably electrically connected to the read bit line, and the read bit line is preferably electrically connected to the arithmetic circuit via wiring provided substantially perpendicular to the surface of the substrate on which the second transistor is provided.
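The product-sum calculation mentioned above becomes especially simple when the weights are ternary, since each multiplication reduces to an add, a subtract, or a skip. The following Python sketch shows this under stated assumptions: the function name and the integer input values are illustrative only and do not describe the actual arithmetic circuit.

```python
def product_sum(inputs, weights):
    """Accumulate sum(x * w) for ternary weights w in {+1, 0, -1}.

    No multiplier is needed: +1 adds the input, -1 subtracts it,
    and 0 skips it.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    acc = 0
    for x, w in zip(inputs, weights):
        if w == 1:
            acc += x
        elif w == -1:
            acc -= x
        elif w != 0:
            raise ValueError("weights must be +1, 0, or -1")
    return acc


print(product_sum([3, 5, 2, 7], [1, 0, -1, 1]))  # 3 - 2 + 7 = 8
```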

- One aspect of the present invention can reduce the size of a semiconductor device. Alternatively, one aspect of the present invention can reduce the power consumption of the semiconductor device. Alternatively, one aspect of the present invention can suppress heat generation of the semiconductor device. Alternatively, one aspect of the present invention can reduce the number of data transfers between the CPU and the semiconductor device that functions as a memory. Alternatively, a semiconductor device having a new configuration can be provided.

- FIGS. 1A and 1B are diagrams for explaining a configuration example of a semiconductor device.

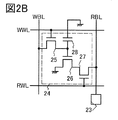

- FIGS. 2A and 2B are diagrams for explaining a configuration example of the semiconductor device.

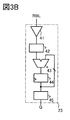

- FIGS. 3A and 3B are diagrams for explaining a configuration example of the semiconductor device.

- FIG. 4 is a diagram illustrating a configuration example of the semiconductor device.

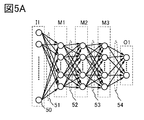

- FIGS. 5A and 5B are diagrams for explaining a configuration example of the semiconductor device.

- FIGS. 6A and 6B are diagrams for explaining a configuration example of the semiconductor device.

- FIGS. 7A and 7B are diagrams for explaining a configuration example of the semiconductor device.

- FIGS. 8A and 8B are diagrams for explaining a configuration example of the semiconductor device.

- FIG. 9 is a diagram illustrating a configuration example of the semiconductor device.

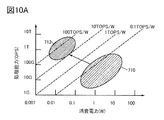

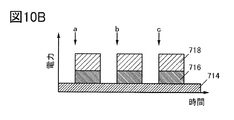

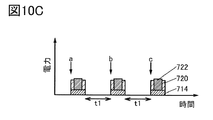

- FIGS. 10A, 10B, and 10C are diagrams for explaining the relationship between the processing performance of the semiconductor device and the power consumption.

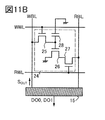

- FIGS. 11A and 11B are diagrams for explaining a configuration example of the semiconductor device.

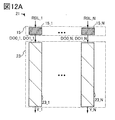

- FIGS. 12A and 12B are diagrams for explaining a configuration example of the semiconductor device.

- FIG. 13 is a diagram illustrating a configuration example of the semiconductor device.

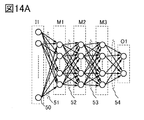

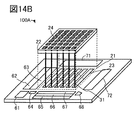

- FIGS. 14A and 14B are diagrams for explaining a configuration example of the semiconductor device.

- FIGS. 15A and 15B are diagrams for explaining a configuration example of the semiconductor device.

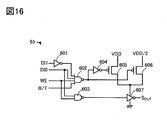

- FIG. 16 is a diagram illustrating a configuration example of a semiconductor device.

- FIG. 17 is a diagram illustrating a configuration example of the semiconductor device.

- FIGS. 18A and 18B are diagrams for explaining a configuration example of the semiconductor device.

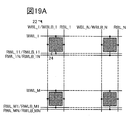

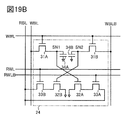

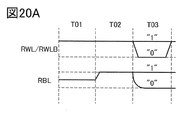

- FIGS. 19A and 19B are diagrams for explaining a configuration example of the semiconductor device.

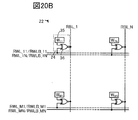

- FIGS. 20A and 20B are diagrams for explaining a configuration example of the semiconductor device.

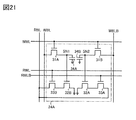

- FIG. 21 is a diagram illustrating a configuration example of a semiconductor device.

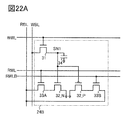

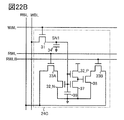

- FIGS. 22A and 22B are diagrams for explaining a configuration example of the semiconductor device.

- FIG. 23 is a diagram illustrating a configuration example of the semiconductor device.

- FIG. 24 is a diagram illustrating a configuration example of the semiconductor device.

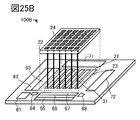

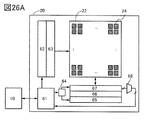

- FIGS. 25A and 25B are diagrams for explaining a configuration example of the semiconductor device.

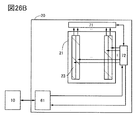

- FIGS. 26A and 26B are diagrams for explaining a configuration example of the semiconductor device.

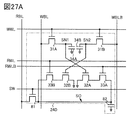

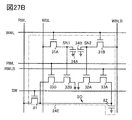

- FIGS. 27A and 27B are diagrams illustrating a configuration example of the semiconductor device.

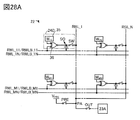

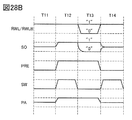

- FIGS. 28A and 28B are diagrams for explaining a configuration example of the semiconductor device.

- FIG. 29 is a diagram illustrating a configuration example of the semiconductor device.

- FIG. 30 is a diagram illustrating a configuration example of a CPU.

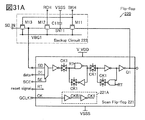

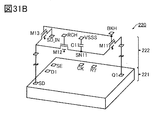

- FIGS. 31A and 31B are diagrams for explaining a configuration example of a CPU.

- FIG. 32 is a diagram illustrating a configuration example of the CPU.

- FIG. 33 is a diagram illustrating a configuration example of an integrated circuit.

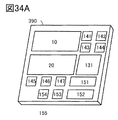

- FIGS. 34A and 34B are diagrams illustrating a configuration example of an integrated circuit.

- FIGS. 35A and 35B are diagrams illustrating application examples of integrated circuits.

- FIGS. 36A and 36B are diagrams illustrating application examples of integrated circuits.

- FIGS. 37A, 37B, and 37C are diagrams illustrating application examples of integrated circuits.

- FIG. 38 is a diagram illustrating an application example of an integrated circuit.

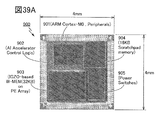

- FIG. 39A is an external photograph of the semiconductor device.

- FIG. 39B is a cross-sectional TEM photograph of the semiconductor device.

- FIG. 40 is a block diagram illustrating a system configuration of a semiconductor device.

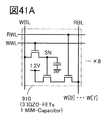

- FIG. 41A is a circuit diagram of a memory cell.

- FIG. 41B is a timing chart showing an operation example of the memory cell.

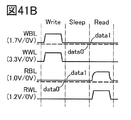

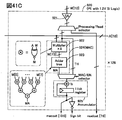

- FIG. 41C is a block diagram showing the configuration of the arithmetic unit.

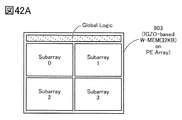

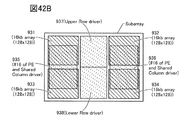

- FIGS. 42A and 42B are block diagrams illustrating the configuration of the semiconductor device.

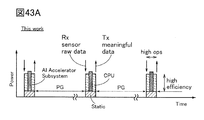

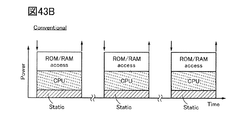

- FIGS. 43A and 43B are conceptual diagrams illustrating changes in power consumption that occur during the operating period of the semiconductor device.

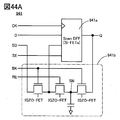

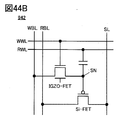

- FIGS. 44A and 44B are circuit diagrams of an information holding circuit.

- FIG. 45A is a diagram showing an operation waveform after executing the simulation.

- FIG. 45B is a diagram showing a neural network model assumed in the simulation.

- The ordinal numbers "first", "second", and "third" are added to avoid confusion among components. They therefore limit neither the number of components nor their order. For example, a component referred to as "first" in one embodiment of this specification and the like may be referred to as "second" in another embodiment or in the claims. Likewise, a component referred to as "first" in one embodiment of this specification and the like may be omitted in another embodiment or in the claims.

- The power supply potential VDD may be abbreviated as potential VDD, VDD, etc. The same applies to other components (e.g., signals, voltages, circuits, elements, electrodes, wiring, etc.).

- Identification codes such as "_1", "_2", "[n]", and "[m, n]" may be added to the reference signs of components.

- For example, the second wiring GL is described as the wiring GL[2].

- the semiconductor device refers to all devices that can function by utilizing the semiconductor characteristics.

- Semiconductor elements such as transistors, as well as semiconductor circuits, arithmetic units, and storage devices, are each one aspect of the semiconductor device.

- A display device (liquid crystal display device, light-emitting display device, etc.), projection device, lighting device, electro-optical device, power storage device, storage device, semiconductor circuit, image pickup device, electronic device, and the like may be said to have a semiconductor device.

- the semiconductor device 100 includes a CPU 10, an accelerator 20, and a bus 30.

- the accelerator 20 has an arithmetic processing unit 21 and a memory unit 22.

- the arithmetic processing unit 21 has an arithmetic circuit 23.

- the memory unit 22 has a memory circuit 24.

- the memory unit 22 may be referred to as a device memory or a shared memory.

- the memory circuit 24 has a transistor 25 having a semiconductor layer 29 having a channel forming region.

- the arithmetic circuit 23 and the memory circuit 24 are electrically connected via the wiring 31.

- the CPU 10 has a function of performing general-purpose processing such as execution of an operating system, control of data, execution of various operations and programs.

- the CPU 10 has one or more CPU cores.

- Each CPU core has a data holding circuit that can hold data even when the supply of the power supply voltage is stopped.

- the supply of the power supply voltage can be controlled by electrical disconnection from the power supply domain (power domain) by a power switch or the like.

- the power supply voltage may be referred to as a drive voltage.

- a memory having a transistor (OS transistor) having an oxide semiconductor in the channel formation region is suitable.

- the oxide semiconductor is also referred to as a metal oxide.

- the configuration of the CPU core including the data holding circuit including the OS transistor will be described in the fifth embodiment.

- the accelerator 20 has a function of executing a program (also called a kernel or a kernel program) called from a host program.

- the accelerator 20 can perform, for example, parallel processing of matrix operations in graphic processing, parallel processing of product-sum operations of neural networks, parallel processing of floating-point operations in scientific and technological calculations, and the like.

- the memory unit 22 has a function of storing data processed by the accelerator 20. Specifically, it is possible to store data input or output to the arithmetic processing unit 21, such as weight data used for parallel processing of the product-sum operation of the neural network.

- the memory circuit 24 is electrically connected to the arithmetic circuit 23 of the arithmetic processing unit 21 via wiring 31, and has a function of holding a binary or ternary digital value.

- the semiconductor layer 29 included in the transistor 25 is an oxide semiconductor. That is, the transistor 25 is an OS transistor.

- the memory circuit 24 is preferably a memory having an OS transistor (hereinafter, also referred to as an OS memory).

- Since the bandgap of the metal oxide is 2.5 eV or more, the OS transistor has an extremely small off-state current. As an example, at a source-drain voltage of 3.5 V and room temperature (25 °C), the off-state current per 1 µm of channel width can be less than 1 × 10⁻²⁰ A, less than 1 × 10⁻²² A, or less than 1 × 10⁻²⁴ A. That is, the on/off ratio of the drain current can be 20 digits or more and 150 digits or less. The OS memory therefore leaks an extremely small amount of charge from the holding node via the OS transistor. Since the OS memory can thus function as a non-volatile memory circuit, power gating of the accelerator becomes possible.
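
The link between off-state current and data retention can be sketched with the relation t = C × ΔV / I. The off-state current values below are the upper bounds quoted above, so the results are lower bounds on retention; the holding capacitance, the tolerable voltage drop, and the channel width are illustrative assumptions that do not come from this document.

```python
# Rough retention-time estimate for a holding node behind an OS transistor.
# The off-state currents per 1 um of channel width are taken from the text;
# C_NODE, DELTA_V, and WIDTH_UM are assumed values for illustration only.

SECONDS_PER_DAY = 24 * 3600

def retention_seconds(c_node_f, delta_v, i_off_per_um, width_um):
    """Time for leakage to change the node voltage by delta_v: t = C * dV / I."""
    return c_node_f * delta_v / (i_off_per_um * width_um)

C_NODE = 1e-15    # assumed holding capacitance: 1 fF
DELTA_V = 0.1     # assumed tolerable voltage drop: 0.1 V
WIDTH_UM = 0.05   # assumed channel width: 50 nm

for i_off in (1e-20, 1e-22, 1e-24):
    t = retention_seconds(C_NODE, DELTA_V, i_off, WIDTH_UM)
    print(f"I_off < {i_off:.0e} A/um -> retention > {t / SECONDS_PER_DAY:.3g} days")
```

Under these assumptions, the first case gives roughly 2 × 10⁵ s (a little over two days), and each factor of 100 in off-state current extends the retention by the same factor.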

- Highly integrated semiconductor devices may generate heat as their circuits are driven. This heat raises the temperature of the transistors, which may change their characteristics, resulting in a change in field-effect mobility and a decrease in operating frequency. Since the OS transistor has higher heat resistance than the Si transistor, its field-effect mobility is less likely to change with temperature and its operating frequency is less likely to decrease. Further, even when the temperature rises, the OS transistor tends to maintain the characteristic that the drain current increases exponentially with the gate-source voltage. Therefore, using the OS transistor enables stable operation in a high-temperature environment.

- the metal oxides applied to the OS transistor are Zn oxide, Zn-Sn oxide, Ga-Sn oxide, In-Ga oxide, In-Zn oxide, and In-M-Zn oxide (M is: Ti, Ga, Y, Zr, La, Ce, Nd, Sn or Hf) and the like.


- the transistor can be obtained with excellent electrical characteristics such as field effect mobility by adjusting the ratio of the elements.

- Oxides containing indium and zinc may further include one or more elements selected from aluminum, gallium, yttrium, copper, vanadium, beryllium, boron, silicon, titanium, iron, nickel, germanium, zirconium, molybdenum, lanthanum, cerium, neodymium, hafnium, tantalum, tungsten, magnesium, and the like.

- the metal oxide applied to the semiconductor layer is preferably a metal oxide having a crystal portion such as CAAC-OS, CAC-OS, and nc-OS.

- CAAC-OS is an abbreviation for c-axis-aligned crystalline oxide semiconductor.

- CAC-OS is an abbreviation for Cloud-Aligned Composite Oxide Semiconductor.

- nc-OS is an abbreviation for nanocrystalline oxide semiconductor.

- CAAC-OS has a c-axis orientation and has a distorted crystal structure in which a plurality of nanocrystals are connected in the ab plane direction.

- The strain refers to a portion, within the region where a plurality of nanocrystals are connected, in which the orientation of the lattice arrangement changes between a region with one aligned lattice arrangement and another region with a different aligned lattice arrangement.

- the CAC-OS has a function of flowing electrons (or holes) as carriers and a function of not flowing electrons as carriers. By separating the function of flowing electrons and the function of not flowing electrons, both functions can be maximized. That is, by using CAC-OS in the channel formation region of the OS transistor, both a high on-current and an extremely low off-current can be realized.

- Since metal oxides have a large bandgap, electrons are less likely to be excited, and the effective mass of holes is large, OS transistors may be less likely to undergo avalanche breakdown than general Si transistors. Therefore, for example, hot-carrier degradation caused by avalanche breakdown can be suppressed. Because hot-carrier degradation is suppressed, the OS transistor can be driven with a high drain voltage.

- The OS transistor is an accumulation-type transistor in which electrons are the majority carriers. Therefore, the influence of DIBL (Drain-Induced Barrier Lowering), which is one of the short-channel effects, is smaller than in an inversion-type transistor (typically, a Si transistor) having a pn junction. That is, the OS transistor has higher resistance to the short-channel effect than the Si transistor.

- Since the OS transistor has high resistance to the short-channel effect, the channel length can be reduced without degrading the reliability of the OS transistor. Therefore, the degree of circuit integration can be increased by using the OS transistor.

- the drain electric field becomes stronger as the channel length becomes finer, but as mentioned above, the OS transistor is less likely to undergo avalanche breakdown than the Si transistor.

- Since the OS transistor has high resistance to the short-channel effect, its gate insulating film can be made thicker than that of the Si transistor. For example, even in a fine transistor having a channel length and a channel width of 50 nm or less, it may be possible to provide a thick gate insulating film of about 10 nm. A thicker gate insulating film reduces the parasitic capacitance, so the operating speed of the circuit can be improved. A thicker gate insulating film also reduces the leakage current through the gate insulating film, which reduces static current consumption.

- the accelerator 20 can hold data even if the supply of the power supply voltage is stopped by having the memory circuit 24 which is the OS memory. Therefore, the power gating of the accelerator 20 becomes possible, and the power consumption can be significantly reduced.

- The memory circuit 24 composed of OS transistors can be stacked on the arithmetic circuit 23, which can be configured with Si CMOS. Therefore, it can be arranged without increasing the circuit area.

- the memory circuit 24 and the arithmetic circuit 23 are electrically connected via a wiring 31 extending in a direction substantially perpendicular to the surface of the substrate on which the arithmetic circuit 23 is provided.

- approximately vertical means a state in which the objects are arranged at an angle of 85 degrees or more and 95 degrees or less.

- the memory circuit 24 can have a NOSRAM circuit configuration.

- NOSRAM is a registered trademark.

- NOSRAM refers to a memory in which the memory cell is a 2-transistor type (2T) or 3-transistor type (3T) gain cell and the access transistor is an OS transistor.

- the OS transistor has an extremely small leakage current, that is, a current flowing between the source and the drain in the off state.

- the NOSRAM can be used as a non-volatile memory by holding the electric charge corresponding to the data in the memory circuit by using the characteristic that the leakage current is extremely small.

- Since NOSRAM can read the held data without destroying it (non-destructive reading), it is suitable for parallel processing of the product-sum operation of a neural network, in which only the data reading operation is repeated in large quantities.

- the arithmetic processing unit 21 has a function of performing arithmetic processing using digital values. Digital values are less susceptible to noise. Therefore, the accelerator 20 is suitable for performing arithmetic processing that requires highly accurate arithmetic results.

- the arithmetic processing unit 21 is preferably composed of Si CMOS, that is, a transistor (Si transistor) having silicon in the channel forming region. With this configuration, it can be provided by stacking with an OS transistor.

- The arithmetic circuit 23 has a function of performing any one of processes such as integer arithmetic, single-precision floating-point arithmetic, and double-precision floating-point arithmetic, using the digital value data held in each of the memory circuits 24 of the memory unit 22.

- the arithmetic circuit 23 has a function of repeatedly executing the same processing such as a product-sum operation.

- One arithmetic circuit 23 is provided for each read bit line of the memory circuit 24, that is, for each column (Column-Parallel Calculation).

- the data for one line of the memory circuit 24 (up to all bit lines) can be arithmetically processed in parallel.

- The calculation is not limited by the data bus size (32 bits, etc.) between the CPU and the memory. Therefore, in Column-Parallel Calculation, the degree of parallelism of the calculation can be significantly increased. This can improve the calculation efficiency of enormous arithmetic workloads such as deep neural network learning (deep learning), which is an AI technology, and scientific and technological calculations that perform floating-point arithmetic.
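
The effect of column-parallel calculation on the number of steps can be illustrated with a toy cycle count. This is only a sketch: the two cost models, the function names, and the example array size are assumptions made for illustration, not figures from this document.

```python
import math

def cycles_bus_limited(rows, cols, bus_bits=32):
    """Assumed model: every 1-bit value in a row crosses a bus of bus_bits width."""
    return rows * math.ceil(cols / bus_bits)

def cycles_column_parallel(rows, cols):
    """Assumed model: one row is read per cycle and all columns are processed at once."""
    return rows

rows, cols = 256, 256  # hypothetical array size
print(cycles_bus_limited(rows, cols))      # 2048
print(cycles_column_parallel(rows, cols))  # 256
```

In this simplified model the column-parallel count does not depend on the bus width at all, which is the point made above.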

- The power required for memory access (data transfer between the CPU and the memory, and calculation by the CPU) can be reduced, so heat generation and an increase in power consumption can be suppressed. Further, by bringing the arithmetic circuit 23 and the memory circuit 24 physically close to each other, for example by stacking them to shorten the wiring distance, the parasitic capacitance on the signal lines can be reduced, so the power consumption can be reduced.

- the bus 30 electrically connects the CPU 10 and the accelerator 20. That is, the CPU 10 and the accelerator 20 can transmit data via the bus 30.

- One aspect of the present invention can reduce the size of a semiconductor device that functions as an accelerator for AI technology and the like, which has a huge amount of calculation and a large number of parameters.

- one aspect of the present invention can reduce the power consumption of a semiconductor device that functions as an accelerator for AI technology and the like, which has a huge amount of calculation and a large number of parameters.

- one aspect of the present invention can suppress heat generation in a semiconductor device that functions as an accelerator such as AI technology having a huge amount of calculation and a large number of parameters.

- One aspect of the present invention can reduce the number of data transfers between the CPU and the semiconductor device functioning as a memory, in a semiconductor device that functions as an accelerator for AI technology and the like, which involves a huge amount of calculation and a large number of parameters.

- A semiconductor device that functions as an accelerator for AI technology and the like, which involves a huge amount of calculation and a large number of parameters, has a non-von Neumann architecture and, compared with a von Neumann architecture in which power consumption increases as the processing speed increases, can perform parallel processing with extremely low power consumption.

- FIG. 2A is a diagram illustrating a circuit configuration example applicable to the memory unit 22 included in the semiconductor device 100 of the present invention.

- FIG. 2A shows the write word lines WWL_1 to WWL_M and the read bit lines RBL_1 to RBL_N arranged in a matrix of M rows and N columns (M and N are natural numbers of 2 or more), together with the memory circuits 24 connected to each word line and bit line.

- FIG. 2B is a diagram illustrating a circuit configuration example applicable to the memory circuit 24.

- the memory circuit 24 includes a transistor 25, a transistor 26, a transistor 27, and a capacitance element 28 (also referred to as a capacitor).

- One of the source and drain of the transistor 25 is connected to the writing bit line WBL.

- the gate of the transistor 25 is connected to the writing word line WWL.

- the other of the source or drain of the transistor 25 is connected to one electrode of the capacitive element 28 and the gate of the transistor 26.

- One of the source or drain of the transistor 26 and the other electrode of the capacitive element 28 are connected to a wire that provides a fixed potential, eg, a ground potential.

- the other of the source or drain of the transistor 26 is connected to one of the source or drain of the transistor 27.

- the gate of the transistor 27 is connected to the read word line RWL.

- the other of the source or drain of the transistor 27 is connected to the read bit line RBL.

- the read bit line RBL is connected to the arithmetic circuit 23 via a wiring 31 or the like extending in a direction substantially perpendicular to the surface of the substrate on which the arithmetic circuit 23 is provided.
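
The connections described above can be summarized in a small behavioral model. This is a minimal sketch, not the circuit itself: it assumes n-channel behavior, ideal switches, a read bit line that is precharged high before reading, and 0/1 logic levels, none of which are specified in the text above. The class and method names are hypothetical.

```python
class GainCell3T:
    """Behavioral sketch of the cell built from transistors 25, 26, 27 and capacitor 28."""

    def __init__(self):
        # Level held on capacitor 28 and the gate of transistor 26.
        self.node = 0

    def write(self, wwl, wbl):
        # Transistor 25 conducts only while WWL is high, copying WBL onto the node.
        if wwl:
            self.node = wbl

    def read(self, rwl, rbl_precharged=1):
        # RBL is pulled to the fixed (ground) potential only when both
        # transistor 27 (RWL high) and transistor 26 (node high) conduct.
        if rwl and self.node:
            return 0
        return rbl_precharged


cell = GainCell3T()
cell.write(wwl=1, wbl=1)   # store a 1
first = cell.read(rwl=1)   # RBL discharged
second = cell.read(rwl=1)  # same result: the read does not disturb the node
print(first, second, cell.node)
```

Because the read path only senses the gate of transistor 26, repeated reads leave the stored level unchanged in this model, which mirrors the non-destructive reading mentioned earlier.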

- The circuit configuration of the memory circuit 24 shown in FIG. 2B corresponds to a NOSRAM with a 3-transistor type (3T) gain cell.

- the transistor 25 to the transistor 27 are OS transistors.

- the OS transistor has an extremely small leakage current, that is, a current flowing between the source and the drain in the off state.

- the NOSRAM can be used as a non-volatile memory by holding the electric charge corresponding to the data in the memory circuit by using the characteristic that the leakage current is extremely small.

- FIG. 3A is a diagram illustrating an example of a circuit configuration applicable to the arithmetic processing unit 21 included in the semiconductor device 100 of the present invention.

- the arithmetic processing unit 21 has N arithmetic circuits 23_1 to 23_N.

- Each of the N arithmetic circuits 23_1 to 23_N receives a signal of any one of N read bit lines RBL_1 to read bit lines RBL_N, and outputs output signals Q_1 to Q_N.

- the signal of the read bit line RBL_1 to the read bit line RBL_N may be amplified and read by a sense amplifier or the like.

- the output signals Q_1 to Q_N correspond to the data obtained by performing the product-sum operation using the data held in the memory circuit 24.

- FIG. 3B is a diagram illustrating a circuit configuration example of the arithmetic circuit 23 applicable to the arithmetic circuit 23_1 to the arithmetic circuit 23_N.

- FIG. 4 is a circuit for executing arithmetic processing based on the architecture of Binary Neural Network (BNN).

- the calculation circuit 23 includes a read circuit 41 to which a signal of the read bit line RBL is given, a bit product sum calculation unit 42, an accumulator 43, a latch circuit 44, and a coding circuit 45 that outputs an output signal Q.

- FIG. 4 shows a configuration example showing more details about the configuration of the arithmetic circuit 23 shown in FIG. 3B.

- As an example, a configuration is shown that performs the product-sum calculation of 8-bit signals (W[0] to W[7], A[0] to A[7]) and outputs a 1-bit output signal Q and an 11-bit output signal (acout[10:0]).

- M products of the same kind and their sum can be executed as 8 parallel × 1 bit × M/8 rows, so M/8 clock cycles are required. In the configuration of FIG. 4, executing the product-sum operation in parallel therefore shortens the calculation time and improves the calculation efficiency.

- The bit product-sum calculator 42 has multipliers to which the 8-bit signals (W[0] to W[7], A[0] to A[7]) are input, and adders to which the values obtained by the multipliers are input.

- The products of the 1-bit signals calculated in 8 parallel paths are shown as WA0 to WA7, their pairwise sums as WA10, WA32, WA54, and WA76, and the sums of those as WA3210 and WA7654.

- the accumulator 43 functioning as an adder outputs the sum of the signal of the bit multiply-accumulate calculator 42 and the output signal of the latch circuit 44 to the latch circuit 44.
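
The data flow through the bit product-sum calculator and the accumulator can be sketched as follows. The signal names WA0 to WA7, WA10 to WA76, WA3210, and WA7654 follow the text; treating the 1-bit product as a logical AND is an assumption (the text only says "product"), and the function names are hypothetical.

```python
def bit_product_sum(w, a):
    """8 parallel 1-bit products followed by a three-level adder tree."""
    assert len(w) == len(a) == 8
    wa = [wi & ai for wi, ai in zip(w, a)]     # WA0 .. WA7 (AND is an assumption)
    wa10, wa32 = wa[1] + wa[0], wa[3] + wa[2]  # first adder level
    wa54, wa76 = wa[5] + wa[4], wa[7] + wa[6]
    wa3210, wa7654 = wa32 + wa10, wa76 + wa54  # second adder level
    return wa3210 + wa7654                     # value passed to the accumulator


def product_sum(weights, activations):
    """Accumulate M one-bit products, 8 per clock, and count the clocks used."""
    assert len(weights) == len(activations) and len(weights) % 8 == 0
    total, clocks = 0, 0
    for i in range(0, len(weights), 8):
        # The running total plays the role of accumulator 43 plus latch circuit 44.
        total += bit_product_sum(weights[i:i + 8], activations[i:i + 8])
        clocks += 1
    return total, clocks


total, clocks = product_sum([1] * 16, [1, 0] * 8)
print(total, clocks)  # 8 2
```

With M = 16 the loop runs M/8 = 2 times, matching the clock count given above.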

- the accumulator 43 switches the signal to be input to the adder according to the control signal TxD_EN.

- TxD_EN 0

- the control signal TxD_EN 1

- TxD_EN 1

- The logic circuit 47, composed of AND circuits, adds the data for batch normalization after the product-sum calculation of the signals A[0] to A[7] and the signals W[0] to W[7] is completed. Specifically, the signal W[7] is added while being switched by the switching signal (th select[10:0]).

- the data for batch normalization may be configured to be simultaneously read and selected from signals W [0] to W [6] other than the signal W [7], for example.

- Batch normalization is an operation that adjusts the distribution of the output data of each layer in a neural network so that it stays constant. For example, for image data often used in neural network calculations, the distribution of the data used for training tends to vary, so it may differ from the distribution of the prediction data (input data).

- Batch normalization can improve the accuracy of learning in a neural network by normalizing the distribution of input data to the intermediate layer of the neural network to a Gaussian distribution with an average of 0 and a variance of 1.
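
The normalization to mean 0 and variance 1 can be sketched in a few lines. This is a generic illustration and not the hardware method described here (which adds threshold data in the accumulator); the learned scale and shift used in many batch normalization layers are omitted, and `eps` is an assumed small constant that avoids division by zero.

```python
from statistics import fmean, pvariance

def batch_normalize(values, eps=1e-5):
    """Shift and scale a batch so that its mean is about 0 and its variance about 1."""
    mean = fmean(values)
    var = pvariance(values, mu=mean)
    return [(v - mean) / (var + eps) ** 0.5 for v in values]

normalized = batch_normalize([1.0, 2.0, 3.0, 4.0])
print(normalized)
```

Note that this operation fixes the mean and variance of the batch; it does not by itself change the shape of the distribution.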


- the latch circuit 44 holds the output signal (acout [10: 0]) of the accumulator 43.

- the binary data passed to the layer (NN layer) in the next neural network by batch normalization becomes the most significant bit of the product-sum operation result held by the latch circuit 44.

- The signal of the most significant bit (acout10) represents the sign of the latched data expressed in two's complement. Since positive data is passed to the next NN layer as 1 and negative data as 0, this bit is inverted by the inverter circuit 46, which functions as a coding circuit, and is output as the output signal Q. Since Q is the output of an intermediate layer, it is temporarily held in the buffer memory (also referred to as an input buffer) in the accelerator 20 and then used for the calculation of the next layer.
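
The sign-bit encoding can be checked with a short sketch. The 11-bit width follows acout[10:0]; the helper names are hypothetical, and zero is treated as non-negative, which follows from two's complement rather than from an explicit statement in the text.

```python
WIDTH = 11  # acout[10:0]

def to_twos_complement(value, width=WIDTH):
    """Wrap a signed integer into a width-bit two's complement pattern."""
    return value & ((1 << width) - 1)

def output_q(acout, width=WIDTH):
    """Q is the inverted most significant (sign) bit of the latched value."""
    msb = (acout >> (width - 1)) & 1  # 0 for non-negative, 1 for negative
    return msb ^ 1                    # inverter: non-negative -> 1, negative -> 0

for value in (5, 0, -3):
    print(value, output_q(to_twos_complement(value)))
```

A positive accumulated value therefore yields Q = 1 and a negative one yields Q = 0, which is the binary activation handed to the next layer.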

- FIG. 5A illustrates a hierarchical neural network based on the Binary Neural Network (BNN) architecture.

- FIG. 5A illustrates a fully connected neural network of a neuron 50, an input layer 1 layer (I1), an intermediate layer 3 layers (M1 to M3), and an output layer 1 layer (O1).

- the number of neurons in the input layer I1 is 784

- the number of neurons in the intermediate layers M1 to M3 is 256

- the number of neurons in the output layer O1 is 10

- The number of connections across the layers (layer 51, layer 52, layer 53, and layer 54) is (784 × 256) + (256 × 256) + (256 × 256) + (256 × 10), for a total of 334,336. That is, since the weight parameters required for the neural network calculation total about 330 Kbits, the required memory capacity is small enough to be implemented even in a small-scale system.
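
The connection count can be reproduced directly from the layer sizes. The sketch assumes one bit per weight, as in a binary neural network, and uses 1 Kbit = 1024 bits for the conversion, which is an assumption about the unit.

```python
# Neurons per layer: input I1, intermediate M1 to M3, output O1.
layer_sizes = [784, 256, 256, 256, 10]

# Fully connected: each pair of adjacent layers contributes (inputs x outputs) weights.
connections = [n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]
total = sum(connections)

print(connections)   # [200704, 65536, 65536, 2560]
print(total)         # 334336
print(total / 1024)  # 326.5 Kbit at one bit per weight
```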

- FIG. 5B shows a detailed block diagram of the semiconductor device 100 capable of calculating the neural network shown in FIG. 5A.

- In FIG. 5B, in addition to the arithmetic processing unit 21, the arithmetic circuit 23, the memory unit 22, the memory circuit 24, and the wiring 31 described with reference to FIGS. 1A and 1B, a configuration example of the peripheral circuits that drive each configuration shown in FIGS. 1A and 1B is shown.

- FIG. 5B illustrates a controller 61, a row decoder 62, a word line driver 63, a column decoder 64, a write driver 65, a precharge circuit 66, a sense amplifier 67, a selector 68, an input buffer 71, and an arithmetic control circuit 72.

- FIG. 6A is a diagram in which blocks for controlling the memory unit 22 are extracted for each configuration shown in FIG. 5B.

- the controller 61, the row decoder 62, the word line driver 63, the column decoder 64, the write driver 65, the precharge circuit 66, the sense amplifier 67, and the selector 68 are extracted and shown.

- the controller 61 processes an input signal from the outside to generate a control signal for the row decoder 62 and the column decoder 64.

- the input signal from the outside is a control signal for controlling the memory unit 22 such as a write enable signal and a read enable signal. Further, the controller 61 inputs / outputs data written to the memory unit 22 or data read from the memory unit 22 via a bus with the CPU 10.

- The row decoder 62 generates a signal for driving the word line driver 63.

- the word line driver 63 generates a signal to be given to the writing word line WWL and the reading word line RWL.

- the column decoder 64 generates a signal for driving the sense amplifier 67 and the write driver 65.

- the sense amplifier 67 amplifies the potential of the read bit line RBL.

- The write driver 65 generates a signal for controlling the read bit line RBL and the write bit line WBL.

- the precharge circuit 66 has a function of precharging a read bit line RBL or the like.

- the signal read from the memory circuit 24 of the memory unit 22 is input to the arithmetic circuit 23 and can be output via the selector 68.

- the selector 68 can sequentially read data corresponding to the bus width and output necessary data to the CPU 10 or the like via the controller 61.

- FIG. 6B is a diagram in which blocks for controlling the arithmetic processing unit 21 are extracted for each configuration shown in FIG. 5B.

- the controller 61 processes the input signal from the outside to generate the control signal of the arithmetic control circuit 72. Further, the controller 61 generates various signals for controlling the arithmetic circuit 23 included in the arithmetic processing unit 21. Further, the controller 61 inputs / outputs data related to the calculation result via the input buffer 71. By using the input buffer 71, parallel calculation of the number of bits equal to or larger than the data bus width of the CPU becomes possible. Further, since the number of times that a huge number of weight parameters are transferred to and from the CPU 10 can be reduced, power consumption can be reduced.

- One aspect of the present invention can reduce the size of a semiconductor device that functions as an accelerator for AI technology and the like, which has a huge amount of calculation and a large number of parameters.

- one aspect of the present invention can reduce the power consumption of a semiconductor device that functions as an accelerator for AI technology and the like, which has a huge amount of calculation and a large number of parameters.

- one aspect of the present invention can suppress heat generation in a semiconductor device that functions as an accelerator such as AI technology having a huge amount of calculation and a large number of parameters.

- One aspect of the present invention can reduce the number of data transfers between the CPU and the semiconductor device functioning as a memory, in a semiconductor device that functions as an accelerator for AI technology and the like, which involves a huge amount of calculation and a large number of parameters.

- A semiconductor device that functions as an accelerator for AI technology and the like, which involves a huge amount of calculation and a large number of parameters, has a non-von Neumann architecture and, compared with a von Neumann architecture in which power consumption increases as the processing speed increases, can perform parallel processing with extremely low power consumption.

- FIGS. 7A and 7B are diagrams for explaining the semiconductor device 100A, which is one aspect of the present invention.

- the CPU 10 has a CPU core 11 and a backup circuit 12.

- the accelerator 20 has an arithmetic processing unit 21 and a memory unit 22.

- the arithmetic processing unit 21 has a drive circuit 15 and an arithmetic circuit 23.

- the drive circuit 15 is a circuit for driving the memory unit 22.

- the memory unit 22 has a memory circuit 24.

- the memory unit 22 may be referred to as a device memory or a shared memory.

- the memory circuit 24 has a transistor 25 having a semiconductor layer 29 having a channel forming region.

- the drive circuit 15 and the memory circuit 24 are electrically connected via the wiring 31.

- the memory circuit 24 is electrically connected to the arithmetic circuit 23 of the arithmetic processing unit 21 via the wiring 31 and the drive circuit 15.

- the memory circuit 24 has a function of holding binary or ternary data as an analog voltage value.

- the arithmetic processing unit 21 can efficiently perform arithmetic processing based on architectures such as Binary Neural Network (BNN) and Ternary Neural Network (TNN).


- the drive circuit 15 has a write circuit for writing data to the memory unit 22 and a read circuit for reading data from the memory unit 22.

- The write circuit has a function of converting the binary or ternary data to be written to the memory circuit 24 in the memory unit 22 into a voltage value and outputting it, according to various signals such as a switching signal for switching between writing binary and ternary data signals, a write control signal, and a data signal.

- the writing circuit is composed of a logic circuit in which a plurality of signals are input.

- the read circuit has a function of switching the voltage value held in the memory circuit 24 in the memory unit 22 into a binary or ternary data signal and reading the data using a plurality of reference voltages.
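
Reading with a plurality of reference voltages can be sketched as a pair of comparisons. The mapping of voltage ranges to the values -1, 0, and +1, the reference voltage values, and the function names are all assumptions for illustration; the text only states that several reference voltages are used.

```python
def read_binary(v_cell, v_ref):
    """One comparison: return 1 if the held voltage is at or above v_ref, else 0."""
    return 1 if v_cell >= v_ref else 0

def read_ternary(v_cell, v_ref_low, v_ref_high):
    """Two comparisons: return -1, 0, or +1 depending on where v_cell falls."""
    if v_cell < v_ref_low:
        return -1
    if v_cell < v_ref_high:
        return 0
    return 1

# Hypothetical levels: 0.0 V, 0.6 V, and 1.2 V held in the cell,
# with references placed halfway between them.
for v in (0.0, 0.6, 1.2):
    print(v, read_ternary(v, v_ref_low=0.3, v_ref_high=0.9))
```

Switching between binary and ternary reading then amounts to choosing how many reference comparisons are applied to the same held voltage.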

- the readout circuit has the function of a sense amplifier.

- The memory circuit 24 composed of OS transistors and the drive circuit 15 are electrically connected via the wiring 31 extending in a direction substantially perpendicular to the surface of the substrate on which the drive circuit 15 and the arithmetic circuit 23 are provided.

- the term "approximately vertical" means a state in which the objects are arranged at an angle of 85 degrees or more and 95 degrees or less.

- When the bit lines connected to the memory circuit 24 are a write bit line and a read bit line, it is preferable that they are connected via separate wirings.

- the write bit line is connected to the write circuit via wiring (first wiring) provided substantially perpendicular to the surface of the substrate on which the drive circuit 15 and the arithmetic circuit 23 are provided.

- the read bit line is connected to the read circuit via a wiring (second wiring) provided substantially perpendicular to the surface of the substrate on which the drive circuit 15 and the arithmetic circuit 23 are provided.

- In FIG. 8A, in addition to the configuration of the semiconductor device 100A described with reference to FIGS. 7A and 7B, the OS memory 300 connected to the bus 30 and the main memory 400 composed of a DRAM or the like are shown. Further, in FIG. 8A, the data between the OS memory 300 and the CPU 10 is shown as data D_CPU, and the data between the OS memory 300 and the accelerator 20 is shown as data D_ACC.

- The accelerator 20 can continue to hold the binary or ternary analog voltage value as data, and can be configured to output the calculation result obtained by the arithmetic circuit to the CPU 10. Therefore, the data D_ACC from the OS memory 300 for arithmetic processing can be reduced. Further, since the amount of arithmetic processing of the CPU 10 can be reduced, the data D_CPU between the OS memory 300 and the CPU 10 can also be reduced. That is, in the configuration of one aspect of the present invention, it is possible to reduce the number of accesses via the bus 30 and the amount of data to be transferred.

- The backup circuit 12 in the CPU 10 and the memory unit 22 in the accelerator 20 can be stacked on the CPU core 11 and the arithmetic processing unit 21, which can be configured with Si CMOS. Therefore, they can be arranged without increasing the circuit area.

- DOSRAM is an abbreviation for "Dynamic Oxide Semiconductor Random Access Memory (RAM)" and refers to a RAM having a 1T (transistor) 1C (capacity) type memory cell.


- DOSRAM is a memory that utilizes the low off-current of the OS transistor.

- the DOSRAM is a DRAM formed by using an OS transistor, and the DOSRAM is a memory that temporarily stores information sent from the outside.

- the DOSRAM has a memory cell including an OS transistor and a read circuit unit including a Si transistor (a transistor having silicon in a channel forming region). Since the memory cell and the read circuit unit can be provided in different stacked layers, the overall circuit area of the DOSRAM can be reduced. In addition, the DOSRAM can divide the memory cell array into small pieces and arrange them efficiently.

- By stacking layers having OS transistors, the OS memory 300 can be formed as an OS memory 300N in which DOSRAM is highly integrated, so that the storage capacity per unit area can be increased. In this case, the main memory 400 provided separately from the semiconductor device 100A can be omitted.

- FIG. 9 shows a schematic diagram of a semiconductor device 100A that functions as a SoC in which a CPU 10, an accelerator 20, and an OS memory 300N are tightly coupled.

- the backup circuit 12 can be provided in the layer having the OS transistor on the upper layer of the CPU core 11.

- the memory unit 22 can be provided on the layer having the OS transistor on the upper layer of the arithmetic processing unit 21.

- the stacked OS memory 300N can be arranged in the same manner as the memory unit 22.

- a control circuit 500 having a Si transistor, a logic circuit 600 having an OS transistor, and the like can be provided.

- the logic circuit 600 is preferably a simple logic circuit such as a changeover switch that can be replaced with an OS transistor.

- The OS transistor is suitable because its electrical characteristics fluctuate less with heat than those of a Si transistor. Further, by integrating the circuits in the three-dimensional direction as shown in FIG. 9, the parasitic capacitance can be reduced compared with a stacked structure using through-silicon vias (TSV) or the like. This reduces the power consumption required for charging and discharging each wiring. Therefore, the calculation processing efficiency can be improved.

- FIG. 10A is a diagram for explaining the relationship between the processing performance (OPS: Operations Per Second) and the power consumption (W).

- The vertical axis represents the processing performance, and the horizontal axis represents the power consumption.

- Lines of 0.1 TOPS/W (Tera Operations Per Second per watt), 1 TOPS/W, 10 TOPS/W, and 100 TOPS/W are indicated by broken lines as indexes of calculation efficiency.
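
The efficiency index used in FIG. 10A is the ratio of processing performance to power consumption. A minimal Python sketch of that arithmetic follows; the function names and the sample figures are illustrative assumptions, not values taken from the specification.

```python
def tops_per_watt(ops_per_second: float, power_watts: float) -> float:
    """Calculation efficiency in TOPS/W (tera-operations per second per watt)."""
    if power_watts <= 0:
        raise ValueError("power must be positive")
    return ops_per_second / power_watts / 1e12


def ops_on_iso_line(efficiency_tops_per_watt: float, power_watts: float) -> float:
    """Processing performance (OPS) on a constant-efficiency line at a given power."""
    return efficiency_tops_per_watt * 1e12 * power_watts


# Hypothetical operating points, chosen only to illustrate the broken lines.
conventional = tops_per_watt(ops_per_second=1e12, power_watts=10.0)
proposed = tops_per_watt(ops_per_second=1e13, power_watts=0.1)
print(conventional, proposed)
```

Each broken line in the figure corresponds to a fixed value returned by `tops_per_watt`; moving up and to the left in the plot crosses lines of higher efficiency.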

- the region 710 shows the region including the conventional general-purpose AI accelerator (Von Neumann type), and the region 712 shows the region including the semiconductor device of one aspect of the present invention.

- The region 710 includes, for example, a CPU (Central Processing Unit), a GPU (Graphics Processing Unit), an FPGA (Field-Programmable Gate Array), and the like.

- By applying the semiconductor device of one aspect of the present invention, the power consumption can be reduced by about two orders of magnitude and the processing performance can be significantly improved (for example, by 1000 times or more) compared with the conventional general-purpose AI accelerator (von Neumann type). A calculation efficiency of 100 TOPS/W or more can be expected.

- FIG. 10B shows an image diagram of the power consumption of the semiconductor device having the conventional configuration in image recognition

- FIG. 10C shows an image diagram of the power consumption of the semiconductor device using the configuration of one aspect of the present invention in image recognition.

- the vertical axis represents electric power and the horizontal axis represents time.

- In FIG. 10B, the power 714 indicates the leak power, the power 716 indicates the CPU power, and the power 718 indicates the memory power.

- In FIG. 10C, the power 714 indicates the leak power, the power 720 indicates the CPU power, and the power 722 indicates the accelerator power.

- the electric power 722 also includes the electric power used for the arithmetic circuit and the memory circuit.

- the arrows a, b, and c represent signals in image recognition, respectively. It is assumed that the semiconductor device starts arithmetic processing such as image recognition when the signals of the arrows a, b, and c are input.

- In FIG. 10B, a constant leak power (power 714) is generated over time.

- In FIG. 10C, by contrast, the leak power (power 714) is generated while the CPU power (power 720) and the accelerator power (power 722) are in use, but during the period in which they are not in use, normally-off driving can be performed so that the leak power (power 714) is not generated (the period t1 shown in FIG. 10C). This makes it possible to significantly reduce power consumption. That is, it is possible to provide a semiconductor device having extremely low power consumption.
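
The effect of normally-off driving on energy can be sketched with a simple model in which leak power is drawn during idle periods only when the device is not powered off. The function name and all numeric values below are hypothetical and serve only to illustrate the comparison between FIG. 10B and FIG. 10C.

```python
def energy_joules(active_power_w: float, leak_power_w: float,
                  active_time_s: float, idle_time_s: float,
                  normally_off: bool) -> float:
    """Energy over one active period followed by one idle period.

    Leak power is always drawn while active. During idle time it is drawn
    only when normally-off driving is NOT used (modeling assumption).
    """
    active = (active_power_w + leak_power_w) * active_time_s
    idle = 0.0 if normally_off else leak_power_w * idle_time_s
    return active + idle


# Hypothetical numbers: 1 W active, 0.1 W leak, 1 s active, 9 s idle.
always_on = energy_joules(1.0, 0.1, 1.0, 9.0, normally_off=False)
powered_off = energy_joules(1.0, 0.1, 1.0, 9.0, normally_off=True)
print(always_on, powered_off)
```

The longer the idle period relative to the active period, the larger the share of energy that normally-off driving removes in this model.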

- FIG. 11A is a diagram illustrating an example of a circuit configuration applicable to the memory unit 22 included in the semiconductor device 100A of the present invention.

- Write word lines WWL_1 to WWL_M, read word lines RWL_1 to RWL_M, write bit lines WBL_1 to WBL_N, and read bit lines RBL_1 to RBL_N, arranged in a matrix of M rows and N columns (M and N are natural numbers of 2 or more), are shown in the figure.

- the memory circuit 24 connected to each word line and bit line is illustrated.

- FIG. 11B is a diagram illustrating a circuit configuration example applicable to the memory circuit 24.

- the memory circuit 24 includes a transistor 25, a transistor 26, a transistor 27, and a capacitance element 28 (also referred to as a capacitor).

- One of the source or drain of the transistor 25 is connected to the write bit line WBL.

- The gate of the transistor 25 is connected to the write word line WWL.

- The other of the source or drain of the transistor 25 is connected to one electrode of the capacitive element 28 and the gate of the transistor 26.

- One of the source or drain of the transistor 26 and the other electrode of the capacitive element 28 are connected to a wiring that provides a fixed potential, e.g., a ground potential.

- the other of the source or drain of the transistor 26 is connected to one of the source or drain of the transistor 27.

- the gate of the transistor 27 is connected to the read word line RWL.

- the other of the source or drain of the transistor 27 is connected to the read bit line RBL.

- The write bit line WBL and the read bit line RBL are connected to the drive circuit 15 via wiring or the like extending in a direction substantially perpendicular to the surface of the substrate on which the arithmetic circuit 23 is provided.

- The drive circuit 15 outputs a data signal S_OUT, which is a binary or ternary analog voltage value. Further, the drive circuit 15 is given the voltage of the read bit line RBL corresponding to the data read from the memory circuit 24, and outputs data signals DO0 and DO1 corresponding to that voltage.

- The circuit configuration of the memory circuit 24 shown in FIG. 11B corresponds to a NOSRAM with a 3-transistor (3T) gain cell.

- The transistors 25 to 27 are OS transistors.

- the OS transistor has an extremely small leakage current, that is, a current flowing between the source and the drain in the off state.

- the NOSRAM can be used as a non-volatile memory by holding the electric charge corresponding to the data in the memory circuit by using the characteristic that the leakage current is extremely small.
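
The write, hold, and read behavior of the 3T gain cell described above can be sketched as a purely behavioral model. The class below is illustrative only: it assumes binary data, n-channel transistors, and a read bit line that is precharged high before reading, none of which is stated in this passage, and it ignores all analog effects.

```python
class GainCell3T:
    """Behavioral sketch of the memory circuit 24 (binary data only)."""

    def __init__(self) -> None:
        # Charge held on the capacitive element 28 / gate of transistor 26.
        self.storage_node = 0

    def write(self, wwl: int, wbl: int) -> None:
        """Transistor 25 passes WBL to the storage node only while WWL is high."""
        if wwl:
            self.storage_node = wbl
        # With WWL low, the very small off-state current lets the node hold its value.

    def read(self, rwl: int, precharged_rbl: int = 1) -> int:
        """Return the RBL level after a read attempt (assumed precharged high).

        If RWL turns on transistor 27 and the stored value turns on
        transistor 26, RBL is pulled toward the fixed (ground) potential.
        """
        if rwl and self.storage_node:
            return 0
        return precharged_rbl


cell = GainCell3T()
cell.write(wwl=1, wbl=1)   # store a 1
cell.write(wwl=0, wbl=0)   # WWL low: stored value is retained
print(cell.read(rwl=1))    # RBL discharged, indicating a stored 1
```

In this sketch a stored 1 appears as a low level on RBL, so the drive circuit would have to interpret the read bit line voltage accordingly.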

- each transistor may have a back gate. By having a back gate, it is possible to improve the transistor characteristics.

- FIG. 12A is a diagram illustrating an example of a circuit configuration applicable to the arithmetic processing unit 21 included in the semiconductor device 100A of the present invention.

- the arithmetic processing unit 21 has a drive circuit 15 and an arithmetic circuit 23.

- the drive circuit 15 has N drive circuits 15_1 to 15_N.

- the arithmetic circuit 23 has N arithmetic circuits 23_1 to 23_N.

- The signal of one of the N read bit lines RBL_1 to RBL_N is input to each of the N drive circuits 15_1 to 15_N, which output the data signals DO0_1 to DO0_N and/or the data signals DO1_1 to DO1_N.

- the data signals DO0_1 to DO0_N and / or the data signals DO1_1 to DO1_N are input to the arithmetic circuits 23_1 to 23_N to obtain output signals Y_1 to Y_N.

- the output signals Y_1 to Y_N correspond to the data obtained by performing the product-sum operation using the data held in the memory circuit 24.

- FIG. 12B is a diagram illustrating a circuit configuration example of the arithmetic circuit 23 applicable to the arithmetic circuit 23_1 to the arithmetic circuit 23_N.

- FIG. 13 is a circuit for executing arithmetic processing based on the architecture of Binary Neural Network (BNN) or Ternary Neural Network (TNN).

- The arithmetic circuit 23 includes a read circuit 41 to which the data signal DO0 and/or the data signal DO1 is input, a bit product-sum calculator 42, an accumulator 43, a latch circuit 44, and a coding circuit 45 that outputs the output signal Y.

- FIG. 13 shows a configuration example showing more details about the configuration of the arithmetic circuit 23 shown in FIG. 12B.

- As an example, a configuration is shown that performs the product-sum operation of 8-bit signals (W[0] to W[7], A[0] to A[7]) and outputs the output signal Y and an 11-bit output signal (acout[10:0]).

- M such products and their sum can be executed as 8 parallel × 1 bit × M/8 rows, so M/8 clock cycles are required. Therefore, in the configuration of FIG. 13, executing the product-sum operation in parallel shortens the calculation time and improves the calculation efficiency.

- The bit product-sum calculator 42 has multipliers to which the 8-bit signals (W[0] to W[7], A[0] to A[7]) are input and adders to which the values obtained by the multipliers are input.

- the product of 1-bit signals calculated in 8 parallels is shown as WA0 to WA7, the sum thereof is shown as WA10, WA32, WA54, WA76, and the sum thereof is shown as WA3210, WA7654.

- The accumulator 43, which functions as an adder, outputs the sum of the output signal of the bit product-sum calculator 42 and the output signal of the latch circuit 44 to the latch circuit 44.
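
The data flow through the bit product-sum calculator 42 and the accumulator 43 can be sketched as follows. This is a minimal behavioral sketch under stated assumptions: the 1-bit product is modeled as a logical AND of 0/1 values (the text does not specify the bit encoding), M is assumed to be a multiple of 8, and the function names are hypothetical. The intermediate names mirror WA0 to WA7, WA10 to WA76, and WA3210 and WA7654.

```python
from typing import List, Tuple


def bit_product_sum(w: List[int], a: List[int]) -> int:
    """One clock cycle: eight 1-bit products reduced by a three-level adder tree."""
    assert len(w) == 8 and len(a) == 8
    wa = [wi & ai for wi, ai in zip(w, a)]        # WA0 .. WA7 (AND is an assumption)
    wa10, wa32 = wa[0] + wa[1], wa[2] + wa[3]     # first adder level
    wa54, wa76 = wa[4] + wa[5], wa[6] + wa[7]
    wa3210, wa7654 = wa10 + wa32, wa54 + wa76     # second adder level
    return wa3210 + wa7654                        # final sum for this cycle


def accumulate(weights: List[int], activations: List[int]) -> Tuple[int, int]:
    """Accumulate M products in groups of 8; returns (sum, clock cycles used)."""
    assert len(weights) == len(activations) and len(weights) % 8 == 0
    latch = 0       # value held by the latch circuit 44
    cycles = 0
    for i in range(0, len(weights), 8):
        # Accumulator 43: add the new partial sum to the latched value.
        latch = latch + bit_product_sum(weights[i:i + 8], activations[i:i + 8])
        cycles += 1
    return latch, cycles


total, cycles = accumulate([1] * 16, [1, 0] * 8)
print(total, cycles)
```

For M = 16 inputs the loop runs twice, matching the M/8 clock cycles stated for the 8-parallel configuration.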

- the accumulator 43 switches the signal to be input to the adder according to the control signal TxD_EN.

- TxD_EN 0

- the control signal TxD_EN 1

- TxD_EN 1

- After the product-sum operation of the signals A[0] to A[7] and the signals W[0] to W[7] is completed, the logic circuit 47 composed of AND circuits adds the data for batch normalization, specifically the signal W[7], while switching it with the switching signal (th select[10:0]).

- the data for batch normalization may be configured to be simultaneously read and selected from signals W [0] to W [6] other than the signal W [7], for example.

- Batch normalization is an operation that adjusts the distribution of the output data of each layer in a neural network so that it is constant. For example, for image data, which is often used in neural network calculations, the distribution of the data used for training tends to vary and may therefore differ from the distribution of the prediction data (input data).

- Batch normalization can improve the accuracy of learning in a neural network by normalizing the distribution of input data to the intermediate layer of the neural network to a Gaussian distribution with an average of 0 and a variance of 1.
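
The normalization to mean 0 and variance 1 can be sketched in a few lines of Python. This illustrates the general definition only, not the threshold-based hardware realization in the arithmetic circuit 23; the small constant `eps`, added for numerical stability, and the function name are assumptions.

```python
import math
from typing import List


def batch_normalize(values: List[float], eps: float = 1e-5) -> List[float]:
    """Shift and scale a batch so that its mean is 0 and its variance is about 1."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    scale = math.sqrt(variance + eps)   # eps avoids division by zero
    return [(v - mean) / scale for v in values]


normalized = batch_normalize([1.0, 2.0, 3.0, 4.0])
print(normalized)
```

Practical batch normalization layers usually apply a learned scale and offset after this step; those parameters are omitted here for brevity.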

- the latch circuit 44 holds the output signal (acout [10: 0]) of the accumulator 43.

- The binary data passed to the next layer of the neural network (NN layer) after batch normalization is the most significant bit of the product-sum operation result held by the latch circuit 44.

- The most significant bit (acout10) represents the sign of the latch data expressed in two's complement. So that positive data is passed to the NN layer as 1 and negative data as 0, this bit is inverted by the inverter circuit 46, which functions as a coding circuit, and is output as the output signal Y. Since Y is the output of the intermediate layer, it is temporarily stored in the buffer memory (also referred to as an input buffer) in the accelerator 20 and then used for the calculation of the next layer.
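
The sign-bit encoding can be sketched as follows, assuming the 11-bit two's complement accumulator output acout[10:0] described above. The helper names are hypothetical.

```python
ACC_BITS = 11  # width of acout[10:0]


def to_twos_complement(value: int, bits: int = ACC_BITS) -> int:
    """Encode a signed integer as an unsigned two's complement bit pattern."""
    lo, hi = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    if not lo <= value <= hi:
        raise ValueError("value does not fit in the accumulator width")
    return value & ((1 << bits) - 1)


def output_y(acout: int) -> int:
    """Invert the most significant bit (acout10), as the inverter circuit 46 does."""
    sign_bit = (acout >> (ACC_BITS - 1)) & 1   # 1 for negative values
    return sign_bit ^ 1                        # 1 for non-negative, 0 for negative


print(output_y(to_twos_complement(5)), output_y(to_twos_complement(-3)))
```

In this sketch a result of exactly zero has a sign bit of 0 and is therefore passed on as Y = 1.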

- FIG. 14A illustrates a hierarchical neural network based on the architecture of Binary Neural Network (BNN) or Ternary Neural Network (TNN).

- FIG. 14A illustrates a fully connected neural network composed of neurons 50, with one input layer (I1), three intermediate layers (M1 to M3), and one output layer (O1). Assuming that the input layer I1 has 784 neurons, each of the intermediate layers M1 to M3 has 256 neurons, and the output layer O1 has 10 neurons, then in a Binary Neural Network (BNN), for example, the number of connections in the layers (layer 51, layer 52, layer 53, and layer 54) is (784 × 256) + (256 × 256) + (256 × 256) + (256 × 10), for a total of 334336. That is, since the weight parameters required for the neural network calculation total about 330 Kbits, the memory capacity can be implemented even in a small-scale system.
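
The connection count can be checked with a short calculation. The sketch below uses the layer sizes that appear in the sum (784 input neurons, three intermediate layers of 256, and 10 outputs) and assumes one bit per weight, as in a BNN; the variable and function names are illustrative.

```python
from typing import List

# Neurons per layer: I1, M1, M2, M3, O1
LAYER_SIZES = [784, 256, 256, 256, 10]


def connection_counts(sizes: List[int]) -> List[int]:
    """Connections between each pair of adjacent fully connected layers."""
    return [n_in * n_out for n_in, n_out in zip(sizes, sizes[1:])]


counts = connection_counts(LAYER_SIZES)
total_weights = sum(counts)
print(counts, total_weights)
```

With one bit per weight, `total_weights` is also the number of bits of weight storage required, which is where the figure of about 330 Kbits comes from.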

- FIG. 14B shows a detailed block diagram of the semiconductor device 100A capable of calculating the neural network shown in FIG. 14A.

- In addition to the arithmetic processing unit 21, the arithmetic circuit 23, the memory unit 22, the memory circuit 24, and the wiring 31 described with reference to FIGS. 7A and 7B, FIG. 14B illustrates a configuration example of the peripheral circuits that drive each of the components shown in FIGS. 7A and 7B.