WO2019131692A1 - Game device, control method, control program, and computer-readable recording medium recording the control program - Google Patents

Game device, control method, control program, and computer-readable recording medium recording the control program

- Publication number

- WO2019131692A1 (PCT/JP2018/047690)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- unit

- operation information

- information

- game

- acceleration

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Classifications

-

- A—HUMAN NECESSITIES

- A63—SPORTS; GAMES; AMUSEMENTS

- A63F—CARD, BOARD, OR ROULETTE GAMES; INDOOR GAMES USING SMALL MOVING PLAYING BODIES; VIDEO GAMES; GAMES NOT OTHERWISE PROVIDED FOR

- A63F13/00—Video games, i.e. games using an electronically generated display having two or more dimensions

- A63F13/40—Processing input control signals of video game devices, e.g. signals generated by the player or derived from the environment

- A63F13/42—Processing input control signals of video game devices, e.g. signals generated by the player or derived from the environment by mapping the input signals into game commands, e.g. mapping the displacement of a stylus on a touch screen to the steering angle of a virtual vehicle

- A63F13/428—Processing input control signals of video game devices, e.g. signals generated by the player or derived from the environment by mapping the input signals into game commands, e.g. mapping the displacement of a stylus on a touch screen to the steering angle of a virtual vehicle involving motion or position input signals, e.g. signals representing the rotation of an input controller or a player's arm motions sensed by accelerometers or gyroscopes

-

- A—HUMAN NECESSITIES

- A63—SPORTS; GAMES; AMUSEMENTS

- A63F—CARD, BOARD, OR ROULETTE GAMES; INDOOR GAMES USING SMALL MOVING PLAYING BODIES; VIDEO GAMES; GAMES NOT OTHERWISE PROVIDED FOR

- A63F13/00—Video games, i.e. games using an electronically generated display having two or more dimensions

- A63F13/20—Input arrangements for video game devices

- A63F13/21—Input arrangements for video game devices characterised by their sensors, purposes or types

- A63F13/211—Input arrangements for video game devices characterised by their sensors, purposes or types using inertial sensors, e.g. accelerometers or gyroscopes

-

- A—HUMAN NECESSITIES

- A63—SPORTS; GAMES; AMUSEMENTS

- A63F—CARD, BOARD, OR ROULETTE GAMES; INDOOR GAMES USING SMALL MOVING PLAYING BODIES; VIDEO GAMES; GAMES NOT OTHERWISE PROVIDED FOR

- A63F13/00—Video games, i.e. games using an electronically generated display having two or more dimensions

- A63F13/20—Input arrangements for video game devices

- A63F13/23—Input arrangements for video game devices for interfacing with the game device, e.g. specific interfaces between game controller and console

-

- A—HUMAN NECESSITIES

- A63—SPORTS; GAMES; AMUSEMENTS

- A63F—CARD, BOARD, OR ROULETTE GAMES; INDOOR GAMES USING SMALL MOVING PLAYING BODIES; VIDEO GAMES; GAMES NOT OTHERWISE PROVIDED FOR

- A63F13/00—Video games, i.e. games using an electronically generated display having two or more dimensions

- A63F13/55—Controlling game characters or game objects based on the game progress

- A63F13/57—Simulating properties, behaviour or motion of objects in the game world, e.g. computing tyre load in a car race game

-

- A—HUMAN NECESSITIES

- A63—SPORTS; GAMES; AMUSEMENTS

- A63F—CARD, BOARD, OR ROULETTE GAMES; INDOOR GAMES USING SMALL MOVING PLAYING BODIES; VIDEO GAMES; GAMES NOT OTHERWISE PROVIDED FOR

- A63F13/00—Video games, i.e. games using an electronically generated display having two or more dimensions

- A63F13/80—Special adaptations for executing a specific game genre or game mode

- A63F13/818—Fishing

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F3/00—Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements

- G06F3/01—Input arrangements or combined input and output arrangements for interaction between user and computer

-

- A—HUMAN NECESSITIES

- A63—SPORTS; GAMES; AMUSEMENTS

- A63F—CARD, BOARD, OR ROULETTE GAMES; INDOOR GAMES USING SMALL MOVING PLAYING BODIES; VIDEO GAMES; GAMES NOT OTHERWISE PROVIDED FOR

- A63F2300/00—Features of games using an electronically generated display having two or more dimensions, e.g. on a television screen, showing representations related to the game

- A63F2300/60—Methods for processing data by generating or executing the game program

- A63F2300/6045—Methods for processing data by generating or executing the game program for mapping control signals received from the input arrangement into game commands

-

- A—HUMAN NECESSITIES

- A63—SPORTS; GAMES; AMUSEMENTS

- A63F—CARD, BOARD, OR ROULETTE GAMES; INDOOR GAMES USING SMALL MOVING PLAYING BODIES; VIDEO GAMES; GAMES NOT OTHERWISE PROVIDED FOR

- A63F2300/00—Features of games using an electronically generated display having two or more dimensions, e.g. on a television screen, showing representations related to the game

- A63F2300/80—Features of games using an electronically generated display having two or more dimensions, e.g. on a television screen, showing representations related to the game specially adapted for executing a specific type of game

- A63F2300/8035—Virtual fishing

Definitions

- the present disclosure relates to a game device, a control method, a control program, and a computer readable recording medium recording the control program.

- Game devices that advance a game based on operation information from a controller held by a player are known.

- In such a game device, operation information from a motion sensor incorporated in the controller is transmitted to the device body by wired or wireless communication, and the device body can execute game processing based on the received operation information.

- Patent Document 1 describes a game system having a controller having an acceleration sensor and a game device.

- In this game system, when the player holds the controller and performs various motions, measurement data measured by the acceleration sensor is transmitted to the game device as operation data.

- the game device executes operation control of the operation target object based on the received operation data.

- This allows the movement of the player's arms and hands to be reflected in the game, giving the player a feeling of being immersed in the virtual game space.

- However, when playing a game using a plurality of operation units, the player has to set in the game device which operation target object each operation unit corresponds to. For example, before the start of the game or during game play, the player has to perform a complicated setting operation to associate each operation unit with the object to be operated. There has therefore been a need to automate this setting operation before the start of the game or during game play.

- The present disclosure has been made to solve such a problem. Its object is to provide a game device, a control method, a control program, and a computer-readable recording medium recording the control program that automate the setting operation and improve the operability of the operation unit.

- The game device includes a storage unit for storing an image of a first object, a display processing unit for displaying at least the image of the first object, first and second operation units for outputting operation information based on a motion of the player, a determination unit that determines whether the output operation information satisfies a predetermined condition, an identification unit that identifies, as an object operation unit, the operation unit among the first and second operation units that output the operation information satisfying the predetermined condition, and an object control unit that changes the first object according to operation information from the object operation unit.

- Preferably, each of the first and second operation units has an output unit for outputting operation information measured by a motion sensor, and the predetermined condition is that the operation information output from the output unit exceeds a predetermined numerical value.

- Preferably, the storage unit stores information related to a virtual space, the object control unit moves a second object related to the first object in the virtual space based on the operation information from the object operation unit, and the display processing unit displays an image of the second object moving in the virtual space.

- Preferably, the object control unit calculates the velocity of the first object based on the operation information from the object operation unit, and calculates the movement distance of the second object in the virtual space based on the calculated velocity.

- Preferably, the object control unit calculates the movement direction of the object operation unit based on the operation information from the object operation unit, and calculates the movement direction of the second object in the virtual space based on the calculated direction.
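The two preferred features above (a movement distance derived from a speed, and a movement direction derived from the motion of the object operation unit) can be illustrated with a minimal Python sketch. The source does not give formulas, so everything here is an assumption: the function name `cast_displacement`, the simple summation of acceleration samples into a velocity, and the proportionality constant `distance_per_speed` are hypothetical choices made only for illustration.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def cast_displacement(
    accel_samples: Sequence[Vec3],
    dt: float,
    distance_per_speed: float = 2.0,  # hypothetical tuning constant, not from the source
) -> Vec3:
    """Return a displacement for the second object in the virtual space.

    Assumption: velocity is estimated by summing acceleration samples
    multiplied by the sampling interval dt; the distance is taken to be
    proportional to the resulting speed.
    """
    vx = vy = vz = 0.0
    for ax, ay, az in accel_samples:
        vx += ax * dt
        vy += ay * dt
        vz += az * dt

    speed = math.sqrt(vx * vx + vy * vy + vz * vz)
    if speed == 0.0:
        # No motion detected, so the second object does not move.
        return (0.0, 0.0, 0.0)

    # Unit vector giving the movement direction of the operation unit.
    direction = (vx / speed, vy / speed, vz / speed)
    distance = distance_per_speed * speed
    return (distance * direction[0], distance * direction[1], distance * direction[2])
```

Separating the direction (a unit vector) from the distance (a scalar) mirrors the way the text treats them as two independently calculated quantities.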

- Preferably, the information related to the virtual space includes information on the movable range of the second object, and when the object control unit determines that the position of the second object deviates from the movable range, it corrects the position to fall within the movable range.
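The range correction described above can be sketched as a simple clamp. This is only an illustration under the assumption that the movable range is an axis-aligned box; the source does not specify the shape of the range, and the class name `MovableRange` and its fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class MovableRange:
    """Axis-aligned box (an assumed shape) bounding the second object's position."""
    min_corner: Vec3
    max_corner: Vec3

    def contains(self, position: Vec3) -> bool:
        # True when every coordinate lies inside the box.
        return all(
            lo <= v <= hi
            for v, lo, hi in zip(position, self.min_corner, self.max_corner)
        )

    def correct(self, position: Vec3) -> Vec3:
        # Clamp each coordinate so the position falls within the range.
        x, y, z = (
            min(max(v, lo), hi)
            for v, lo, hi in zip(position, self.min_corner, self.max_corner)
        )
        return (x, y, z)
```

For example, with a range from `(0, 0, 0)` to `(10, 5, 10)`, a position of `(12, -1, 3)` is outside the range and is corrected to `(10, 0, 3)`.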

- The control method is a control method performed by a game device including a storage unit for storing an image of a first object, and first and second operation units for outputting operation information based on a motion of a player. The method includes displaying the image of the first object, determining whether the output operation information satisfies a predetermined condition, identifying, as an object operation unit, the operation unit among the first and second operation units that output the operation information satisfying the predetermined condition, and changing the first object according to operation information from the object operation unit.

- The control program is a control program for a game device including a storage unit for storing an image of a first object, and first and second operation units for outputting operation information based on a motion of a player. The control program causes the game device to display the image of the first object, determine whether the output operation information satisfies a predetermined condition, identify, as an object operation unit, the operation unit among the first and second operation units that output the operation information satisfying the predetermined condition, and change the first object according to operation information from the object operation unit.

- The computer-readable recording medium records a control program for a game device including a storage unit for storing an image of a first object, and first and second operation units for outputting operation information based on a motion of a player. The control program causes the game device to display the image of the first object, determine whether the output operation information satisfies a predetermined condition, identify, as an object operation unit, the operation unit among the first and second operation units that output the operation information satisfying the predetermined condition, and change the first object according to operation information from the object operation unit.

- The game device, the control method performed by the game device, the control program, and the computer-readable recording medium recording the control program can improve the operability of the operation unit of the game device.

- FIG. 1 is a diagram for explaining an example of a game provided by the game apparatus 1.

- The game apparatus 1 is a home game machine such as a console game machine, a personal computer, a multifunctional mobile phone (a so-called "smartphone"), a tablet terminal, a tablet PC, or the like.

- the game device 1 in at least some embodiments of the present invention displays a virtual space including at least one or more types of objects.

- the virtual space is defined by three coordinate axes, and various objects having three-dimensional coordinates are arranged in the virtual space.

- the virtual space may be defined by two coordinate axes. In this case, various objects having two-dimensional coordinates are arranged in the virtual space.

- the shape of the object is a shape imitating a predetermined object (a car, a tree, a person, an animal, a building, etc.).

- the shape of the object may be cubic, rectangular, cylindrical, spherical or plate-like.

- the shape of the object may be deformed according to the passage of time.

- a virtual camera is disposed at a predetermined position in the virtual space.

- Three-dimensional coordinates of various objects arranged in the virtual space are projected on a predetermined two-dimensional screen surface arranged in the viewing direction of the virtual camera.
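The projection of three-dimensional object coordinates onto the two-dimensional screen surface can be sketched as a basic perspective projection. The source does not state which projection is used, so this is an assumed pinhole model in which the virtual camera looks along the +Z axis and the screen surface lies at `screen_distance` in front of it; the function name and parameters are hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]


def project_to_screen(
    point: Vec3,
    camera_pos: Vec3,
    screen_distance: float = 1.0,  # assumed distance from the camera to the screen surface
) -> Optional[Vec2]:
    """Project a 3D point onto a 2D screen surface in front of the camera.

    Assumption: the camera's viewing direction is the +Z axis. Returns None
    for points at or behind the camera, which cannot be projected.
    """
    # Express the point relative to the camera position.
    x = point[0] - camera_pos[0]
    y = point[1] - camera_pos[1]
    z = point[2] - camera_pos[2]
    if z <= 0.0:
        return None

    # Similar triangles: screen coordinates shrink in proportion to depth.
    scale = screen_distance / z
    return (x * scale, y * scale)
```

A camera with an arbitrary viewing direction would first rotate the point into camera coordinates; that step is omitted here to keep the sketch short.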

- the game device displays the projected two-dimensional screen surface on a display unit or the like.

- Certain specific ones of the objects in the virtual space are controlled to change in accordance with operation information based on the motion of the player.

- the change of the object is, for example, movement of the object in the virtual space, deformation of the external shape of the object, and the like.

- the game apparatus 1 includes a game processing apparatus 2 including a display unit 23, and a first operating device 3 and a second operating device 4.

- the game processing apparatus 2 acquires operation information based on the motion of the player, and generates image data for displaying a virtual space including the first object OJ1 that changes in accordance with the acquired operation information.

- the game processing device 2 displays an image indicating the virtual space on the display unit 23 based on the generated image data.

- the first operating device 3 and the second operating device 4 include motion sensors.

- The player holds the first operating device 3 in the left hand and the second operating device 4 in the right hand, and performs various motions related to the game provided by the game apparatus 1.

- The motion sensor provided in each of the first operating device 3 and the second operating device 4 generates operation information according to the various motions of the player, and each of the first operating device 3 and the second operating device 4 outputs the generated operation information to the game processing device 2.

- the player may hold the first operating device 3 with the right hand and hold the second operating device 4 with the left hand.

- The first operating device 3 and the second operating device 4 may be worn on either the left or right upper arm or forearm, on either the left or right leg, or the like.

- the first operating device 3 and the second operating device 4 may be attached to any part of the player's body, as long as the operation of the player can be detected.

- The first operating device 3 is an example of a first operation unit, and the second operating device 4 is an example of a second operation unit.

- the motion sensor is a sensor for measuring the motion of the player, and is, for example, an acceleration sensor.

- the motion sensor may be an angular velocity sensor, a displacement sensor, an orientation measuring sensor, an image sensor, an optical sensor or the like.

- the angular velocity sensor is, for example, a gyro sensor.

- The orientation measuring sensor is, for example, a geomagnetic sensor.

- the motion sensor may be provided with a plurality of sensors for measuring the motion of the player.

- The motion sensors of the first operating device 3 and the second operating device 4 output the operation information of the first operating device 3 and the second operating device 4, respectively.

- When the motion sensor is an acceleration sensor, the motion sensor of the first operating device 3 detects the acceleration of the first operating device 3 and outputs acceleration information indicating the detected acceleration. Likewise, the motion sensor of the second operating device 4 detects the acceleration of the second operating device 4 and outputs acceleration information indicating the detected acceleration.

- the first operating device 3 and the second operating device 4 output the operation information from the motion sensor to the game processing device 2.

- the game processing device 2 changes the object corresponding to the operation information, and generates image data for displaying a virtual space including the changed object. For example, when acceleration information is output as operation information, the game processing device 2 calculates the moving speed of the object corresponding to the acceleration information, and moves the object in the virtual space based on the calculated moving speed.
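The step from acceleration information to a moving speed and then to a new position can be sketched as one numerical integration step per update. The source only says the moving speed "corresponds to" the acceleration information, so the semi-implicit Euler update below, the function name `step_object`, and the time step `dt` are assumptions for illustration.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def step_object(
    position: Vec3, velocity: Vec3, accel: Vec3, dt: float
) -> Tuple[Vec3, Vec3]:
    """Advance an object by one time step (semi-implicit Euler, an assumed scheme).

    The velocity is updated from the acceleration first, and the position is
    then updated from the new velocity.
    """
    new_velocity = (
        velocity[0] + accel[0] * dt,
        velocity[1] + accel[1] * dt,
        velocity[2] + accel[2] * dt,
    )
    new_position = (
        position[0] + new_velocity[0] * dt,
        position[1] + new_velocity[1] * dt,
        position[2] + new_velocity[2] * dt,
    )
    return new_position, new_velocity
```

Calling `step_object` once per received acceleration sample, with `dt` equal to the sampling interval, moves the object along with the player's motion.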

- the game processing device 2 displays an image indicating the virtual space on the display unit 23 based on the generated image data.

- The first object OJ1 displayed on the display unit 23 changes based on operation information from whichever of the first operating device 3 and the second operating device 4 satisfies the first operation information condition.

- the first operation information condition is, for example, a condition that, when the motion sensor is an acceleration sensor, acceleration information output by the motion sensor exceeds a predetermined value.

- In the following, a case where the game provided by the game device 1 is a fishing game that displays an image of a first object OJ1 imitating a fishing rod is described as an example.

- the player holds one of the first operating device 3 and the second operating device 4 as a fishing rod and performs, for example, a casting operation.

- The casting operation is the operation of throwing a lure, bait, or the like onto the water surface (sea, lake, or river) in fishing.

- The lure or bait is tied to a fishing line, and the fishing line is wound on a reel provided on a fishing rod gripped by an angler.

- The casting operation is, for example, an operation in which the angler swings the fishing rod back over the head and then flicks it forward.

- The motion sensor of the operating device held as a fishing rod generates operation information, and the operating device outputs the operation information to the game processing device 2.

- The game processing device 2 identifies the operating device that has output the operation information as the object operating device. For example, in the case where acceleration information is output as operation information, when the acquired acceleration information exceeds a predetermined numerical value, the operating device that has output the acceleration information is identified as the object operating device. Thereafter, the object operating device functions as the operating device for operating the first object OJ1 that simulates a fishing rod.
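The identification step can be sketched as a threshold check over the latest acceleration sample from each operating device. This is a minimal illustration, not the patented implementation: the threshold value, the use of the acceleration magnitude as the compared quantity, and the device identifiers are all assumptions, since the source only refers to "a predetermined numerical value".

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical threshold in m/s^2; the source does not give a concrete value.
ACCEL_THRESHOLD = 15.0


def identify_object_device(
    samples: Sequence[Tuple[str, Vec3]],
    threshold: float = ACCEL_THRESHOLD,
) -> Optional[str]:
    """Return the ID of the first device whose acceleration exceeds the threshold.

    samples holds (device_id, acceleration) pairs, one per operating device.
    Returns None when no device satisfies the condition.
    """
    for device_id, (ax, ay, az) in samples:
        # Assumption: the condition is evaluated on the acceleration magnitude.
        if math.sqrt(ax * ax + ay * ay + az * az) > threshold:
            return device_id
    return None
```

Once a device ID is returned, later operation information from that device would be routed to the control of the first object OJ1, while input from the other device is not used for that object.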

- the object operating device is an example of an object operating unit.

- the game processing device 2 changes (moves or deforms, etc.) the image of the first object OJ1 based on the operation information from the object operating device.

- the game processing device 2 generates image data for displaying a virtual space including the changed object, and displays an image indicating the virtual space on the display unit 23 based on the generated image data.

- As described above, when the operation information from an operating device operated by the player satisfies the first operation information condition, that operating device is automatically identified as the object operating device for controlling the first object OJ1. Thus, even when the game apparatus 1 includes a plurality of operating devices, the object operating device can be set automatically without the player having to designate any of them. The game apparatus 1 can thereby improve the operability of its operating devices and prevent the player from losing the sense of immersion in the game.

- FIG. 2 is a view showing an example of a schematic configuration of the game apparatus 1.

- the game device 1 is a home-use game machine such as a console game machine, and includes a game processing device 2, a first operation device 3, and a second operation device 4.

- the home gaming machine may be stationary or portable.

- the game apparatus 1 may be a business-use game machine such as an arcade game machine installed in a specific facility.

- The specific facilities are attraction facilities such as amusement facilities and event facilities.

- the game device 1 may have three or more operating devices.

- the game processing device 2 may be any information processing device as long as it can be connected to the operation device.

- the game processing apparatus 2 may be a personal computer, a multifunctional mobile phone (so-called “smart phone”), a tablet terminal, a tablet PC, a mobile phone (so-called “feature phone”), a portable music player or a notebook PC.

- the game processing device 2 acquires operation information based on the player's operation from the first operation device 3 and the second operation device 4 and displays an image showing a virtual space including an object that changes according to the acquired operation information.

- the game processing device 2 includes a communication unit 21, a storage unit 22, a display unit 23, and a processing unit 24.

- the communication unit 21, the storage unit 22, the display unit 23, and the processing unit 24 provided in the game processing apparatus 2 will be described with reference to FIG.

- FIG. 3 is a diagram showing an example of a schematic configuration of the game processing apparatus 2.

- The communication unit 21 has an interface circuit for performing near field wireless communication according to a communication system such as Bluetooth (registered trademark), and receives radio waves broadcast from the first operating device 3 and the second operating device 4 described later. Note that the interface circuit included in the communication unit 21 is not limited to one for performing near field wireless communication. For example, the communication unit 21 may have a receiving circuit for receiving various signals transmitted by infrared communication or the like. The communication unit 21 demodulates the radio waves broadcast from the first operating device 3 and the second operating device 4 into a predetermined signal and supplies the signal to the processing unit 24.

- the storage unit 22 includes, for example, a semiconductor memory device such as a read only memory (ROM) or a random access memory (RAM).

- the storage unit 22 stores an operating system program, a driver program, an application program, data, and the like used for processing in the processing unit 24.

- the driver program stored in the storage unit 22 is a communication device driver program for controlling the communication unit 21, an output device driver program for controlling the display unit 23 or the like.

- the application program stored in the storage unit 22 is a control program or the like that controls the progress of the game.

- the data stored in the storage unit 22 is various data and the like used by the processing unit 24 and the like during the execution of the game.

- the storage unit 22 may temporarily store temporary data related to a predetermined process.

- The information stored in the storage unit 22 is, for example, information on the virtual space (three-dimensional coordinates indicating the position of the virtual camera, information on the line-of-sight direction and field of view of the virtual camera, coordinates, etc.) and information on various objects in the virtual space (information on three-dimensional coordinates indicating the shape of the object, information on three-dimensional coordinates indicating the arrangement position of the object, etc.).

- the information stored in the storage unit 22 may be various game information (scores of the player, information on the player's character (HP (Hit Point), MP (Magic Point), etc.)) as the game progresses.

- the display unit 23 is a liquid crystal display.

- the display unit 23 may be an organic EL (Electro-Luminescence) display or the like.

- the display unit 23 displays an image according to the image data supplied from the processing unit 24.

- the image data is still image data or moving image data, and the displayed image is a still image or moving image.

- the display unit 23 may display a video according to the video data supplied from the processing unit 24.

- The processing unit 24 is circuitry including one or more processors operating according to a computer program (software), one or more dedicated hardware circuits executing at least a part of various processes, or a combination thereof.

- the processor includes an arithmetic processing unit such as a central processing unit (CPU), a micro processing unit (MPU), or a graphics processing unit (GPU), and a storage medium such as a RAM or a ROM.

- the game processing apparatus 2 further includes a storage medium (memory) which is a storage such as a hard disk drive (HDD) or a solid state drive (SSD). At least one of these storage media stores program code or instructions configured to cause the CPU to execute processing.

- Storage media or computer readable media include any available media that can be accessed by a general purpose or special purpose computer.

- the processing unit 24 includes a game progress control unit 241, a determination unit 242, an identification unit 243, an object control unit 244, a parameter control unit 245, and a display processing unit 246.

- Each of these units is a functional module realized by a program executed by a processor included in the processing unit 24. Alternatively, these units may be implemented in the game processing apparatus 2 as firmware.

- the processing unit 24 may include one or more processors and their peripheral circuits. In this case, the processing unit 24 centrally controls the overall operation of the game processing apparatus 2 and is, for example, a CPU.

- The processing unit 24 executes various information processing in an appropriate procedure based on the program stored in the storage unit 22 and the operation information from the first operating device 3 and the second operating device 4, and controls the operation of the display unit 23.

- the processing unit 24 executes various information processing based on the operating system program, the driver program, and the application program stored in the storage unit 22. Also, the processing unit 24 can execute a plurality of programs in parallel.

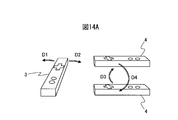

- FIG. 4 is a diagram showing an example of a schematic configuration of the first controller 3.

- The first operating device 3 has a function of outputting operation information based on the player's motion to the game processing device 2. To this end, the first operating device 3 includes a first communication unit 31, a first storage unit 32, a first input unit 33, a first acceleration detection unit 34, a first angular velocity detection unit 35, and a first processing unit 36.

- The first communication unit 31, the first storage unit 32, the first input unit 33, the first acceleration detection unit 34, the first angular velocity detection unit 35, and the first processing unit 36 provided in the first operating device 3 are described below.

- The first communication unit 31 has an interface circuit for performing near field wireless communication according to a communication method such as Bluetooth (registered trademark), establishes inter-terminal wireless communication with the game processing apparatus 2, and performs direct wireless communication.

- The interface circuit of the first communication unit 31 is not limited to one for performing near field wireless communication.

- the first communication unit 31 may have a transmission circuit for transmitting various signals by infrared communication or the like.

- the first communication unit 31 modulates the signal acquired from the first processing unit 36 into a predetermined radio wave and broadcasts it.

- the first storage unit 32 includes a semiconductor memory device such as a ROM and a RAM.

- the first storage unit 32 stores programs, data, parameters, and the like used for processing in the first processing unit 36.

- the program stored in the first storage unit 32 is a communication device driver program or the like that controls the first communication unit 31.

- the data stored in the first storage unit 32 is operating device identification information or the like for identifying the first operating device 3.

- the first input unit 33 is a key or a button that can be pressed by the player.

- The first input unit 33 includes, for example, a force sensor. When the player presses the first input unit 33 in a predetermined direction, the force sensor detects the resulting pressing force. Each time the pressing force is detected by the force sensor, the first input unit 33 outputs, to the first processing unit 36, input unit operation information corresponding to the first input unit 33 in which the pressing force is detected.

- the first acceleration detection unit 34 is an acceleration sensor, and detects an acceleration applied to the first operation device 3 at predetermined time intervals in each of three axial directions.

- The acceleration sensor is, for example, a piezoresistive three-axis acceleration sensor utilizing the piezoresistive effect, or a capacitive three-axis acceleration sensor utilizing a change in capacitance.

- the first acceleration detection unit 34 outputs acceleration information indicating the detected acceleration to the first processing unit 36 at predetermined time intervals (for example, 1/100 second intervals).

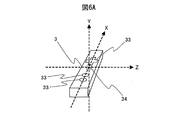

- FIG. 6A is a schematic view for explaining an example of the detection direction of the acceleration in the first acceleration detection unit 34.

- The first acceleration detection unit 34 detects the acceleration in each of the X-axis, Y-axis, and Z-axis directions.

- the X-axis direction is a predetermined direction in the first operating device 3 (for example, a longitudinal direction or the like in the case where the first operating device 3 is rod-like).

- the Y-axis direction is, for example, a direction perpendicular to both the predetermined plane on which part or all of the first input unit 33 is disposed in the first operating device 3 and the X-axis direction.

- the Z-axis direction is a direction perpendicular to both the X-axis direction and the Y-axis direction.

- the first acceleration detection unit 34 may detect an acceleration on one axis, two axes, or four or more axes.

- the three-dimensional coordinate system by the X axis, the Y axis, and the Z axis may be referred to as a sensor coordinate system.

- the first angular velocity detection unit 35 is a gyro sensor, and detects an angular velocity (rotation angle per unit time) at which the first operation device 3 rotates at a predetermined time interval.

- the angular velocity detected by the first angular velocity detection unit 35 is, for example, an angular velocity centered on each of three axes.

- the gyro sensor is, for example, a vibrating gyro sensor using a MEMS (Micro Electro Mechanical System).

- the first angular velocity detection unit 35 outputs angular velocity information indicating the detected angular velocity to the first processing unit 36 at predetermined time intervals (for example, 1/100 second intervals).

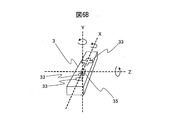

- FIG. 6B is a schematic view for explaining an example of the axis of the angular velocity detected by the first angular velocity detection unit 35.

- the first angular velocity detection unit 35 detects the angular velocity about each of the X axis, the Y axis, and the Z axis.

- the X, Y, and Z axes pass through the predetermined position of the first angular velocity detector 35 and are parallel to the X, Y, and Z axes of the sensor coordinate system in the first angular velocity detector 35.

- the axis of the angular velocity detected by the first angular velocity detection unit 35 may be one, two, or four or more.

- the first processing unit 36 includes one or more processors and their peripheral circuits.

- the first processing unit 36 centrally controls the overall operation of the first operating device 3 and is, for example, a CPU.

- the first processing unit 36 controls the operations of the first transmission unit 361 and the like so that the various processes of the first operating device 3 are executed in an appropriate procedure according to the program stored in the first storage unit 32 or the like.

- the first transmission unit 361 is a functional module realized by a program executed by a processor included in the first processing unit 36.

- the first processing unit 36 may be mounted on the first controller 3 as firmware.

- FIG. 5 is a view showing an example of a schematic configuration of the second operating device 4. Similar to the first operating device 3, the second operating device 4 has a function of outputting, to the game processing apparatus 2, operation information based on the operation of the player. To that end, the second operating device 4 includes a second communication unit 41, a second storage unit 42, a second input unit 43, a second acceleration detection unit 44, a second angular velocity detection unit 45, and a second processing unit 46.

- the second communication unit 41 has a configuration similar to that of the first communication unit 31.

- the second communication unit 41 modulates the signal acquired from the second processing unit 46 into a predetermined radio wave and broadcasts it.

- the second storage unit 42 has a configuration similar to that of the first storage unit 32.

- the second storage unit 42 stores programs, data, parameters, and the like used for processing in the second processing unit 46.

- the program stored in the second storage unit 42 is a communication device driver program or the like that controls the second communication unit 41.

- the data stored in the second storage unit 42 is operating device identification information or the like for identifying the second operating device 4.

- the second input unit 43 has a configuration similar to that of the first input unit 33.

- the second input unit 43 outputs, to the second processing unit 46, input unit operation information corresponding to the second input unit 43 in which the pressing force is detected each time the pressing force is detected by the force sensor.

- the second acceleration detection unit 44 has a configuration similar to that of the first acceleration detection unit 34, and detects the acceleration applied to the second operation device 4 at predetermined time intervals in each of the three axial directions.

- the axis from which the second acceleration detection unit 44 detects acceleration may be one, two, or four or more axes.

- the second acceleration detection unit 44 outputs acceleration information indicating the detected acceleration to the second processing unit 46.

- the second angular velocity detection unit 45 has a configuration similar to that of the first angular velocity detection unit 35, and detects an angular velocity (rotational angle per unit time) at which the second operating device 4 rotates at predetermined time intervals.

- the axis of the angular velocity detected by the second angular velocity detection unit 45 may be one, two, or four or more.

- the second angular velocity detection unit 45 outputs angular velocity information indicating the detected angular velocity to the second processing unit 46.

- the second processing unit 46 integrally controls the overall operation of the second operating device 4 and has a configuration similar to that of the first processing unit 36.

- the second processing unit 46 controls the operations of the second transmission unit 461 and the like so that the various processes of the second operating device 4 are executed in an appropriate procedure according to the program stored in the second storage unit 42 or the like.

- the second transmission unit 461 is a functional module realized by a program executed by a processor included in the second processing unit 46.

- the second processing unit 46 may be mounted on the second controller 4 as firmware.

- FIG. 7 is a diagram showing an example of a data structure of operation information output by the first operation device and the second operation device.

- the operation information is output at a predetermined time interval (for example, an interval of 1/100 second).

- the operation information includes the various information output, in response to the operation of the player, by the first input unit 33, the first acceleration detection unit 34, and the first angular velocity detection unit 35, or by the second input unit 43, the second acceleration detection unit 44, and the second angular velocity detection unit 45.

- the operation information shown in FIG. 7 includes operating device identification information, X-axis direction acceleration information, Y-axis direction acceleration information, Z-axis direction acceleration information, X-axis angular velocity information, Y-axis angular velocity information, Z-axis angular velocity information, and input unit operation information.

- the operating device identification information is identification information for identifying the first operating device 3 or identification information for identifying the second operating device 4. Identification information for identifying the first operating device 3 is stored in the first storage unit 32, and identification information for identifying the second operating device 4 is stored in the second storage unit 42.

- the X-axis direction acceleration information is acceleration information indicating the acceleration in the X-axis direction detected by the first acceleration detection unit 34, or acceleration information indicating the acceleration in the X-axis direction detected by the second acceleration detection unit 44.

- the Y-axis direction acceleration information is acceleration information indicating the acceleration in the Y-axis direction detected by the first acceleration detection unit 34, or acceleration information indicating the acceleration in the Y-axis direction detected by the second acceleration detection unit 44.

- the Z-axis direction acceleration information is acceleration information indicating the acceleration in the Z-axis direction detected by the first acceleration detection unit 34, or acceleration information indicating the acceleration in the Z-axis direction detected by the second acceleration detection unit 44.

- the X-axis angular velocity information is angular velocity information indicating the angular velocity about the X axis detected by the first angular velocity detection unit 35, or angular velocity information indicating the angular velocity about the X axis detected by the second angular velocity detection unit 45.

- the Y-axis angular velocity information is angular velocity information indicating the angular velocity about the Y axis detected by the first angular velocity detection unit 35, or angular velocity information indicating the angular velocity about the Y axis detected by the second angular velocity detection unit 45.

- the Z-axis angular velocity information is angular velocity information indicating the angular velocity about the Z axis detected by the first angular velocity detection unit 35, or angular velocity information indicating the angular velocity about the Z axis detected by the second angular velocity detection unit 45.

- the input unit operation information is included only when input unit operation information is output from the first input unit 33 or the second input unit 43. For example, when the player presses the first input unit 33 for one second, the operation information output during that second includes the input unit operation information output from the first input unit 33. When the operation information is output at 1/100-second intervals, every piece of operation information output during the one second in which the player presses the first input unit 33 includes the input unit operation information output from the first input unit 33.
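
The record described for FIG. 7 can be pictured as a small data structure. The following is a minimal sketch, not the layout used by the actual devices: the class name `OperationInfo`, its field names, the `"button"` value, and the helper `records_for_press` are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class OperationInfo:
    """One operation-information record (hypothetical field layout)."""
    device_id: str                      # operating device identification information
    accel: Tuple[float, float, float]   # X/Y/Z-axis direction acceleration information
    gyro: Tuple[float, float, float]    # X/Y/Z-axis angular velocity information
    input_op: Optional[str] = None      # present only while an input unit is pressed


def records_for_press(device_id: str, press_seconds: float, rate_hz: int = 100):
    """Build the records emitted while a button is held (sensor values zeroed)."""
    count = round(press_seconds * rate_hz)
    zero = (0.0, 0.0, 0.0)
    return [OperationInfo(device_id, zero, zero, input_op="button")
            for _ in range(count)]
```

With a 1/100-second output interval, a one-second press yields 100 records, each carrying the input unit operation information.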

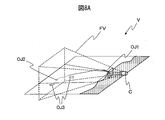

- FIG. 8A is a schematic view showing an example of a virtual space V created by the game processing apparatus 2.

- the virtual space V includes, for example, a first object OJ1 simulating a fishing rod, a second object OJ2 simulating a lure or bait connected to the fishing rod by a fishing line, and a third object OJ3 simulating a fish. An object that simulates a fishing line, an object that simulates seawater, lake water, a river, or the like, and an object that simulates land, an island, or the like may also be included.

- the first object OJ1 may be any object as long as it is operated by the object operating device.

- the second object OJ2 may be any object as long as it is associated with the first object.

- the third object OJ3 is an object that moves along a path that is automatically determined in advance or a path that is randomly determined.

- the third object OJ3 may be disposed at a specific point without moving.

- the virtual camera C is disposed at a predetermined position near the first object OJ1.

- the viewing direction of the virtual camera C is controlled such that at least a portion of each of the first object OJ1, the second object OJ2, and the third object OJ3 is included in the field of view FV of the virtual camera C.

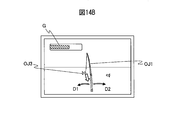

- FIG. 8B is a diagram showing an example of an image displayed on the display unit 23 of the game processing device 2.

- the image shown in FIG. 8B is an image showing the virtual space V in the field of view FV, which is projected on a predetermined two-dimensional screen surface arranged in the viewing direction of the virtual camera C.

- an image including the first object OJ1, the second object OJ2, and the third object OJ3 is displayed.

- FIG. 9A is a schematic view for explaining an example of the movement of the controller device.

- the movement of the operating device shown in FIG. 9A is the movement made when the player holds one of the first operating device 3 and the second operating device 4 as a fishing rod and performs a casting operation (overhand throw).

- when the controller held by the player to perform the casting operation is the first controller 3, the first controller 3 moves from the first position P1 to the second position P2 while rotating, for example, about the Z axis.

- the acceleration information output by the first acceleration detection unit 34 while the first operation device 3 moves from the first position P1 to the second position P2 is the X-axis direction acceleration information, Y-axis direction acceleration information, and Z-axis direction acceleration information of an acceleration obtained by combining the gravitational acceleration and the acceleration in the moving direction.

- the angular velocity information output by the first angular velocity detection unit 35 of the first operating device 3 while the first operating device 3 moves from the first position P1 to the second position P2 is the first angular position information from the first position P1.

- the first transmission unit 361 of the first operation device 3 acquires the acceleration information and the angular velocity information output from the first acceleration detection unit 34 and the first angular velocity detection unit 35 at predetermined time intervals. Then, the first transmission unit 361 transmits operation information including the acquired acceleration information and angular velocity information to the game processing apparatus 2 via the first communication unit 31 at predetermined time intervals.

- the first transmission unit 361 is an example of an output unit.

- in the same manner as the first transmission unit 361, the second transmission unit 461 of the second controller 4 acquires, at predetermined time intervals, the acceleration information and the angular velocity information output from the second acceleration detection unit 44 and the second angular velocity detection unit 45.

- the second transmission unit 461 transmits the operation information including the acquired acceleration information and angular velocity information to the game processing apparatus 2 via the second communication unit 41 at predetermined time intervals.

- the second transmission unit 461 is an example of an output unit.

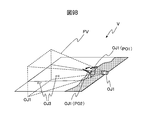

- FIG. 9B is a schematic view showing an example of the virtual space V created by the game processing device 2.

- the virtual space V changes based on the operation information output when the player holds one of the first operating device 3 and the second operating device 4 as a fishing rod and performs a casting operation (overhand throw).

- each time the game processing device 2 receives the operation information transmitted from the first operation device 3 and the second operation device 4 at predetermined time intervals, it determines whether the received operation information satisfies the first operation information condition. When it is determined that the received operation information satisfies the first operation information condition, the game processing device 2 identifies the operation device that transmitted the operation information as the object operating device.

- the first operation information condition is, for example, when X-axis direction acceleration information, Y-axis direction acceleration information, and Z-axis direction acceleration information are output as operation information, a condition that the combined acceleration of the acceleration in the X-axis direction, the acceleration in the Y-axis direction, and the acceleration in the Z-axis direction indicated by that information exceeds a predetermined numerical value.

- the first operation information condition may be a condition that the acceleration indicated by the acceleration information of any one axis exceeds a predetermined numerical value when the acceleration information of one or more axes is output as the operation information.
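
The two variants of the first operation information condition can be sketched as follows. This is an illustration under assumptions: the function names are hypothetical, the threshold of 20.0 is an arbitrary placeholder for the "predetermined numerical value", and taking the absolute value in the single-axis variant is an assumption the text does not state.

```python
import math


def combined_acceleration(ax: float, ay: float, az: float) -> float:
    """Magnitude of the acceleration combined from the three axis components."""
    return math.sqrt(ax * ax + ay * ay + az * az)


def satisfies_first_condition(ax, ay, az, threshold=20.0) -> bool:
    """True when the combined acceleration exceeds the (placeholder) threshold."""
    return combined_acceleration(ax, ay, az) > threshold


def satisfies_single_axis_condition(accels, threshold=20.0) -> bool:
    """Variant: true when any one axis exceeds the threshold (sign ignored, assumed)."""
    return any(abs(a) > threshold for a in accels)
```

A device at rest reports roughly gravitational acceleration only and does not satisfy the condition, whereas a fast swing does.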

- the first object OJ1 included in the virtual space V changes based on the operation information from the object operating device. For example, when it is determined that the operation information from the object operating device satisfies the first operation information condition, the first object OJ1 moves from the predetermined first object position PO1 to the second object position PO2.

- the information on the three-dimensional coordinates indicating the first object position PO1 is stored in the storage unit 22 of the game processing apparatus 2 as the information on the first object.

- the second object position PO2 is a predetermined position or a position based on operation information from the object operating device.

- the information on the three-dimensional coordinates indicating the second object position PO2 is stored in the storage unit 22 of the game processing device 2 as the information on the first object.

- the second object position PO2 is calculated based on the acceleration information included in the operation information.

- the acceleration indicated by the acceleration information included in the operation information is, for example, a combined acceleration obtained by combining the acceleration indicated by the X-axis direction acceleration information, the acceleration indicated by the Y-axis direction acceleration information, and the acceleration indicated by the Z-axis direction acceleration information.

- the first movement distance of the first object OJ1 in the virtual space V corresponding to the movement distance of the object manipulation device is calculated.

- the second object position PO2 is calculated based on the first object position PO1 and the first movement distance.

- the second object position PO2 may be calculated based on angular velocity information included in the operation information. For example, based on all or part of the acceleration information and angular velocity information in the target period, vectors from the first position P1 of the object operating device to the position of the object operating device corresponding to each piece of operation information are calculated at predetermined time intervals.

- three-dimensional coordinates indicating the position of the first object OJ1 according to the calculated vector are calculated. Then, while rotating based on angular velocity information, the first object OJ1 moves based on the three-dimensional coordinates.
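
One way to obtain such per-sample vectors is to integrate the acceleration twice. The sketch below is only an illustration and makes strong assumptions that the text does not state: the samples are already expressed in a fixed frame with gravity removed, rotation is ignored, and the `scale` factor mapping device displacement to virtual-space displacement is hypothetical.

```python
def displacement_vectors(accels, dt=0.01):
    """Displacement from the start position after each sample (Euler integration)."""
    vel = [0.0, 0.0, 0.0]
    pos = [0.0, 0.0, 0.0]
    vectors = []
    for sample in accels:
        for i in range(3):
            vel[i] += sample[i] * dt   # acceleration -> velocity
            pos[i] += vel[i] * dt      # velocity -> position
        vectors.append(tuple(pos))
    return vectors


def object_position(start, displacement, scale=1.0):
    """Map a device displacement onto the first object's position (assumed linear)."""
    return tuple(s + scale * d for s, d in zip(start, displacement))
```

Each returned vector would then be mapped onto the first object position PO1 to give the coordinates of the first object OJ1 at that sample.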

- FIGS. 10A and 10B are diagrams showing examples of images displayed on the display unit 23 of the game processing device 2.

- FIG. 10A is an image showing the virtual space V in the field of view FV, projected onto a predetermined two-dimensional screen surface arranged in the viewing direction of the virtual camera C, when the first object OJ1 is at the first object position PO1.

- FIG. 10B is an image showing the virtual space V in the field of view FV, projected onto a predetermined two-dimensional screen surface arranged in the viewing direction of the virtual camera C, when the first object OJ1 is at the second object position PO2.

- when the first object OJ1 reaches the second object position PO2, the second object OJ2 starts moving from a predetermined location on the first object OJ1 (for example, when the first object simulates a fishing rod, the position of the rod's top guide). Alternatively, the second object OJ2 may start moving from a predetermined location on the first object OJ1 while the first object OJ1 is moving from the first object position PO1 to the second object position PO2.

- the movement start position of the second object OJ2 is not limited to the predetermined portion of the first object OJ1, but may be a position within a predetermined range from the first object OJ1.

- an image including the moving second object OJ2 is displayed.

- the second movement distance and movement route of the second object OJ2 are calculated based on the operation information, and the second object OJ2 moves along the movement route from the movement start position to the arrival position PO3, which is separated from it by the second movement distance.

- the second movement distance of the second object OJ2 is calculated based on, for example, acceleration information included in the operation information.

- the moving speed of the second object OJ2 at the movement start time of the second object is calculated based on all or part of the acceleration information in the target period.

- a second movement distance corresponding to the calculated movement speed is calculated.

- the moving speed used to calculate the second moving distance may be an average moving speed in a period from the movement start time to a predetermined time before.

- a predetermined curve (for example, a quadratic curve or the like)

- the second movement distance of the second object OJ2 may be calculated based on the movement angle of the direction of acceleration indicated by the acceleration information included in the operation information.

- the acceleration indicated by the acceleration information included in the operation information is, for example, a combined acceleration obtained by combining the acceleration indicated by the X-axis direction acceleration information, the acceleration indicated by the Y-axis direction acceleration information, and the acceleration indicated by the Z-axis direction acceleration information.

- the direction of the acceleration indicated by the acceleration information included in each piece of operation information in the sensor coordinate system is calculated for each piece of operation information.

- the second movement distance of the second object OJ2 may be calculated based on angular velocity information included in the operation information. For example, an integral value is calculated by integrating all or part of the angular velocity information in the target period, and the second movement distance is obtained from that integral value by referring to a correspondence table between integral values and second movement distances (the correspondence table is stored, for example, in the storage unit 22 of the game processing apparatus 2).
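
The table lookup can be sketched as below. The table contents, the units (degrees per second, arbitrary distance units), and the choice of a step lookup (largest table key not exceeding the integral) are all assumptions for illustration; the text does not specify them.

```python
import bisect

# Hypothetical correspondence table:
# (integrated rotation angle in degrees, second movement distance)
TABLE = [(0.0, 0.0), (45.0, 10.0), (90.0, 25.0), (180.0, 40.0)]


def integrate_angular_velocity(samples, dt=0.01):
    """Rectangle-rule integral of angular velocity samples taken every dt seconds."""
    return sum(samples) * dt


def lookup_distance(integral, table=TABLE):
    """Return the distance for the largest table key that is <= integral."""
    keys = [k for k, _ in table]
    i = max(bisect.bisect_right(keys, integral) - 1, 0)
    return table[i][1]
```

For example, a rotation of about 100 degrees falls in the 90-degree row of this placeholder table.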

- the angle (elevation angle) A of the movement direction of the second object OJ2 from the horizontal plane in the virtual space V is calculated so as to decrease as the movement angle increases and to increase as the movement angle decreases.

- FIG. 11 is a schematic view showing an example of a movement route of the second object OJ2 in the virtual space V.

- the movement route R and the second movement distance D of the second object OJ2 are calculated based on the movement speed of the second object OJ2 at the movement start time of the second object, the calculated angle A, and a function representing a predetermined curve (for example, a quadratic curve or the like).
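
As an illustration of a route built from a start speed, the angle A, and a quadratic curve, the sketch below uses a simple parabola of projectile motion. This is one possible choice of curve, not necessarily the one intended; the gravitational constant `g`, the equal start and landing heights, and the function name are assumptions.

```python
import math


def movement_route(speed, angle_deg, g=9.8, steps=20):
    """Sample (horizontal distance, height) points on a parabolic route.

    Returns the sampled route R and the horizontal distance D travelled.
    """
    a = math.radians(angle_deg)
    vx, vy = speed * math.cos(a), speed * math.sin(a)
    flight_time = 2.0 * vy / g          # time until the height returns to zero
    distance = vx * flight_time
    route = []
    for i in range(steps + 1):
        t = flight_time * i / steps
        route.append((vx * t, vy * t - 0.5 * g * t * t))
    return route, distance
```

Under these assumptions the distance equals `speed**2 * sin(2A) / g`, so a faster cast or an angle nearer 45 degrees lands farther away.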

- the angle (elevation angle) A of the movement direction of the second object OJ2 from the horizontal plane in the virtual space V may be calculated based on the acceleration information and the angular velocity information included in the operation information.

- the angle A is calculated based on the acceleration information and the angular velocity information included in the operation information.

- a first difference angle between the direction of acceleration immediately after the start of movement of the first object OJ1 and the reference direction is calculated.

- a second difference angle between the direction of acceleration of the first object at the movement start time point of the second object OJ2 and the reference direction is calculated. Then, the difference between the first difference angle and the second difference angle is calculated as the angle A.
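
The two difference angles and their difference can be sketched as follows. The reference direction `(0, -1, 0)` and the use of the absolute difference are assumptions made for the example; the text only states that the difference between the two angles is taken as the angle A.

```python
import math


def angle_between(u, v):
    """Angle in degrees between two 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    cos_angle = max(-1.0, min(1.0, dot / (nu * nv)))  # guard against rounding
    return math.degrees(math.acos(cos_angle))


def angle_a(accel_start, accel_release, reference=(0.0, -1.0, 0.0)):
    """Difference between the first and second difference angles."""
    first = angle_between(accel_start, reference)     # just after movement starts
    second = angle_between(accel_release, reference)  # when OJ2 starts moving
    return abs(first - second)
```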

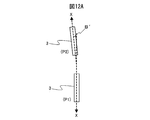

- FIG. 12A is a schematic view for explaining an example of the movement of the object operating device.

- a difference angle B between a line segment obtained by projecting the X axis of the object operating device at the first position P1 onto a horizontal plane and a line segment obtained by projecting the X axis of the object operating device at the second position P2 onto the horizontal plane is calculated based on angular velocity information and acceleration information included in the operation information.

- the difference angle B is calculated based on angular velocity information and acceleration information included in the operation information.

- vertical downward is determined based on acceleration information, and a plane perpendicular to the vertical downward is determined as a horizontal plane.

- a line segment obtained by projecting the X axis of the object operating device at the first position P1 on a horizontal plane is determined.

- a line segment obtained by projecting the X axis of the object operating device at the second position P2 onto the horizontal plane is determined, and the difference angle B between that line segment and the line segment obtained by projecting the X axis of the object operating device at the first position P1 onto the horizontal plane is calculated.
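
The projection steps above can be sketched as follows. The sketch assumes that the two X-axis directions are already expressed in one common frame (for example, by tracking the device orientation from the angular velocity information) and that `down` is the vertically downward direction estimated from the acceleration information; the function names are hypothetical.

```python
import math


def project_onto_horizontal(v, down):
    """Remove from v its component along the vertical direction `down`."""
    norm = math.sqrt(sum(d * d for d in down))
    unit = [d / norm for d in down]
    along = sum(a * u for a, u in zip(v, unit))
    return [a - along * u for a, u in zip(v, unit)]


def difference_angle_b(x_axis_p1, x_axis_p2, down):
    """Angle in degrees between the horizontal projections of the two X axes."""
    p1 = project_onto_horizontal(x_axis_p1, down)
    p2 = project_onto_horizontal(x_axis_p2, down)
    n1 = math.sqrt(sum(a * a for a in p1))
    n2 = math.sqrt(sum(a * a for a in p2))
    if n1 == 0.0 or n2 == 0.0:
        raise ValueError("X axis is vertical; its projection is undefined")
    cos_b = sum(a * b for a, b in zip(p1, p2)) / (n1 * n2)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_b))))
```

Note that this returns an unsigned angle; deciding whether the cast veers left or right would need a signed variant.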

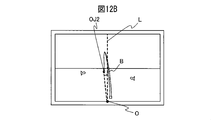

- FIG. 12B is an image showing the virtual space V in the field of view FV, which is projected on a predetermined two-dimensional screen surface arranged in the direction of the line of sight of the virtual camera C when the second object OJ2 moves.

- the difference angle B is set as the direction obtained by projecting the movement direction of the second object OJ2 onto the horizontal plane in the virtual space V.

- the position of the second object moving according to the movement direction may be corrected within the movable range.

- the movable range is a range within the field of view FV of the virtual camera C.

- FIG. 13 is a schematic diagram for explaining an example of processing in which the position of the second object OJ2 is corrected in the virtual space V created by the game processing apparatus 2.

- the reaching position PO3 is moved into the movable range, and a corrected route connecting the movement start position to the moved reaching position PO3 is recalculated.

- the angle A in the movement route to be corrected is used to calculate the correction route.
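
A heavily simplified sketch of such a correction follows. It assumes the parabolic route model `D = v**2 * sin(2A) / g`, reduces the movable range to a single maximum horizontal distance, and requires an angle A strictly between 0 and 90 degrees. None of these simplifications come from the text, which only states that the angle A of the route being corrected is reused.

```python
import math


def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def corrected_route_params(distance, angle_deg, max_distance, g=9.8):
    """Clamp the arrival distance and return the speed that reaches it at angle A."""
    new_distance = clamp(distance, 0.0, max_distance)
    speed = math.sqrt(new_distance * g / math.sin(math.radians(2.0 * angle_deg)))
    return new_distance, speed
```

Keeping the angle A fixed means only the start speed changes, so the corrected route keeps the same launch direction as the original one.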

- the predetermined relationship is, for example, a positional relationship in which the position of the second object OJ2 and the position of the third object OJ3 are in contact with each other.

- the predetermined relationship may be another relationship, and may be, for example, a positional relationship in which the position of the third object OJ3 is included within a predetermined range centered on the position of the second object OJ2.
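
Both positional relationships reduce to a distance test. The sketch below is an illustration only: modelling the objects as spheres with hypothetical radii for the contact check is an assumption, as are the function names.

```python
import math


def in_contact(p2, p3, radius2=0.5, radius3=0.5):
    """Contact check, treating both objects as spheres (assumed shapes)."""
    return math.dist(p2, p3) <= radius2 + radius3


def within_range(p2, p3, radius):
    """True when OJ3 lies within `radius` of the position of OJ2."""
    return math.dist(p2, p3) <= radius
```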

- the third object OJ3 moves in the direction toward the first object OJ1 based on the operation information based on the player's operation.

- FIG. 14A is a schematic view for explaining an example of the movement of the controller device.

- the movement of the controller is the movement of the second controller 4 when the player holds the second controller 4 as a handle of the reel and winds the reel.

- when the first operating device 3, acting as the object operating device, moves in the direction D1, the first object OJ1 similarly moves in the direction D1 according to the operation information including the angular velocity information detected by the first angular velocity detection unit.

- when the first operating device 3 moves in the direction D2, the first object OJ1 also moves in the direction D2 according to the operation information including the angular velocity information detected by the first angular velocity detection unit.

- the predetermined movement is, for example, periodic movement.

- the periodic motion is reciprocating motion or vibration motion in which the operation device repeats movement in the first direction D3 and movement in the second direction D4, or circular movement around a predetermined axis.

- the predetermined axis of the circular motion may be displaced or rotated, and the trajectory of the circular motion may not be a true circle.

- the predetermined movement is not limited to the periodic movement, and may be any movement according to the specific movement of the player.

- each time the game processing device 2 receives the operation information transmitted from the first operation device 3 and the second operation device 4 at predetermined time intervals, it determines whether the received operation information satisfies the second operation information condition. When it is determined that the received operation information satisfies the second operation information condition, the game processing device 2 identifies the operation device that transmitted the operation information as the parameter operation device.

- the second operation information condition is, for example, a condition that, based on the acceleration information included in the operation information, the operating device that transmitted the operation information is determined to be performing a periodic motion that repeats movement in the first direction D3 and movement in the second direction D4.

- during such motion, the direction of the acceleration detected by the acceleration detection unit (the first acceleration detection unit 34 or the second acceleration detection unit 44) of the operation device reverses on at least one axis of the sensor coordinate system. That is, when the acceleration detection unit detects a motion in which the direction of acceleration repeatedly reverses, it is determined that periodic motion is being performed.
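
Detecting repeated reversal of the acceleration direction on one axis can be sketched as a sign-change counter. The dead zone that ignores small readings and the minimum number of reversals are hypothetical tuning values, not values from the text.

```python
def count_reversals(samples, dead_zone=0.5):
    """Count sign changes of one axis' acceleration, ignoring small readings."""
    last_sign = 0
    reversals = 0
    for a in samples:
        if abs(a) < dead_zone:
            continue                     # treat near-zero readings as noise
        sign = 1 if a > 0 else -1
        if last_sign != 0 and sign != last_sign:
            reversals += 1
        last_sign = sign
    return reversals


def is_periodic(samples, min_reversals=4, dead_zone=0.5):
    """Judge the motion periodic when enough reversals are observed."""
    return count_reversals(samples, dead_zone) >= min_reversals
```

In practice the check would be run over a sliding window of recent samples for each axis of the sensor coordinate system.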

- in the following, the case where the periodic motion is a reciprocating motion is described as an example.

- the operating device gripped to perform the reel winding operation may be an operating device gripped as an object operating device.

- when the player performs a reciprocating motion that repeats movement of the first operation device 3 in the first direction D3 and movement in the second direction D4, the game processing device 2 identifies the first operation device 3, which transmitted the operation information, as the parameter operation device.

- the second controller 4 is identified as an object controller.

- FIG. 14B is a view showing an example of an image displayed on the display unit 23 of the game processing device 2.

- an image showing the gauge G is displayed together with an image showing the virtual space V including at least the first object OJ1 and the third object OJ3 moving according to the reciprocating motion of the parameter operation device.

- the shape of the gauge G changes to a display mode according to the cycle of the reciprocation of the parameter manipulation device.

- the cycle of reciprocating motion of the parameter manipulation device is a cycle of movement in the first direction D3 and movement in the second direction D4.

- the gauge G is in the shape of a bar graph.

- the shape of the gauge G is not limited to the shape of a bar graph.

- the value of the parameter is set by the game processing device 2 to be larger the shorter the cycle of the reciprocating motion of the parameter manipulation device (that is, the greater the number of reciprocating motions per unit time), and smaller the longer the cycle of the reciprocating motion.

- the length of the bar graph of gauge G changes according to the value of the parameter. For example, the larger the value of the parameter, the longer the length of the bar graph, and the smaller the value of the parameter, the shorter the length of the bar graph.
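
The relationship between the reciprocation cycle, the parameter, and the bar length can be sketched as follows. The inverse-proportional mapping, the `scale` factor, and the maximum parameter and bar length are assumptions; the text only requires that a shorter cycle give a larger parameter and a longer bar.

```python
def parameter_from_period(period_s, scale=1.0):
    """Shorter reciprocation cycle -> larger parameter (assumed inverse mapping)."""
    if period_s <= 0:
        raise ValueError("period must be positive")
    return scale / period_s


def gauge_length(parameter, max_parameter=10.0, max_length=200):
    """Bar length in pixels, proportional to the parameter and capped at max_length."""
    ratio = min(max(parameter / max_parameter, 0.0), 1.0)
    return round(ratio * max_length)
```

For instance, halving the cycle from 0.5 s to 0.25 s doubles the parameter and, below the cap, the bar length.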

- the value of the parameter may be set by the game processing device 2 to be larger as the displacement amount of one cycle in the reciprocating motion of the parameter operation device is larger. As a result, even when the reciprocation cycle is long, the player can wind in a large amount of fishing line by moving the arm in large motions.

- the length of the bar graph of the gauge G may change based on the value of the parameter and the information on the game progress.

- the information on the game progress is, for example, information on the direction and/or the moving speed in which the third object OJ3 moves automatically.

- the length of the bar graph of the gauge G is a length corresponding to the value calculated by multiplying the value of the parameter by a predetermined coefficient equal to or greater than 1. The predetermined coefficient may be increased as the moving speed at which the third object OJ3 automatically moves is faster.

- the length of the bar graph of the gauge G is a length corresponding to the value calculated by multiplying the value of the parameter by a predetermined coefficient less than one.

- the moving speed of the third object OJ3 toward the first object OJ1 changes in accordance with the value of the parameter.

- the moving speed of the third object OJ3 toward the first object OJ1 is set to a higher speed as the parameter value is larger, and to a slower speed as the parameter value is smaller. Thereby, the faster the reciprocating motion of the parameter operation device, the sooner the player can draw the third object OJ3 in.
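
A minimal sketch of a parameter-dependent approach speed is given below. The linear scale factor `speed_per_unit`, the two-dimensional positions, and the function name `step_toward` are assumptions for illustration; the specification does not state how the speed is derived from the parameter.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def step_toward(pos: Vec2, target: Vec2, parameter: float, dt: float,
                speed_per_unit: float = 0.05) -> Vec2:
    """Move pos (third object OJ3) toward target (first object OJ1).

    The speed grows linearly with the parameter value (an assumed mapping),
    so a larger parameter brings OJ3 to OJ1 sooner.
    """
    speed = parameter * speed_per_unit
    dx, dy = target[0] - pos[0], target[1] - pos[1]
    dist = math.hypot(dx, dy)
    step = speed * dt
    if dist == 0 or step >= dist:
        return target  # arrived, do not overshoot
    return (pos[0] + dx / dist * step, pos[1] + dy / dist * step)
```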

- the game progress control unit 241 reads information related to various objects stored in the storage unit 22 and creates a virtual space V in which various objects are arranged based on the read information.

- the objects arranged in the virtual space V are, for example, a first object OJ1, a second object OJ2, and a third object OJ3. Further, in the virtual space V, a virtual camera C is disposed.

- the game progress control unit 241 receives, via the communication unit 21, the operation information output from the first operation device 3 and the second operation device 4 at predetermined time intervals.

- the game progress control unit 241 determines whether or not the received operation information includes input unit operation information instructing the start of the game.

- when the game progress control unit 241 determines that the received operation information includes the input unit operation information instructing the game start, it starts the game and instructs the determination unit 242, the identification unit 243, and the object control unit 244 to execute the object change process.

- the game progress control unit 241 determines whether or not an instruction to start the game again is included in the input unit operation information of the received operation information.

- when the game progress control unit 241 determines that the instruction to start the game is included in the input unit operation information of the received operation information, it starts the game again and instructs the determination unit 242, the identification unit 243, and the object control unit 244 to execute the object change process.

- the game progress control unit 241 instructs the determination unit 242, the identification unit 243, the object control unit 244, and the parameter control unit 245 to execute the parameter change process.

- the game progress control unit 241 determines whether the game is over. For example, the game progress control unit 241 determines that the game is over when the third object OJ3 moves within the range of the end determination distance of the first object OJ1. In addition, the game progress control unit 241 determines that the game is ended when the input unit operation information of the received operation information includes an instruction to end the game.
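
The two end conditions described above can be sketched as a single predicate. The coordinate representation and the names `is_game_over`, `end_distance`, and `end_requested` are hypothetical and chosen only for this illustration.

```python
import math
from typing import Sequence


def is_game_over(oj3_pos: Sequence[float], oj1_pos: Sequence[float],
                 end_distance: float, end_requested: bool = False) -> bool:
    """Return True when the game should end.

    The game ends when the operation information carries an end instruction,
    or when OJ3 is within the end determination distance of OJ1.
    """
    if end_requested:
        return True
    return math.dist(oj3_pos, oj1_pos) <= end_distance
```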

- the determination unit 242 determines whether the received operation information satisfies the first operation information condition. In addition, each time the determination unit 242 receives the transmitted operation information, the determination unit 242 determines whether the received operation information satisfies the second operation information condition.

- when the determination unit 242 determines that the received operation information satisfies the first operation information condition, the identification unit 243 identifies the operation device that has transmitted the operation information as the object operation device. Further, when the determination unit 242 determines that the received operation information satisfies the second operation information condition, the identification unit 243 identifies the operation device that has transmitted the operation information as the parameter operation device.

- the identification unit 243 determines whether the operating device identified as the parameter operating device is the object operating device. When the operating device identified as the parameter operating device is the object operating device, the identification unit 243 no longer identifies that operating device as the object operating device, and instead identifies an operating device different from the one identified as the parameter operating device as the object operating device.
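
The role assignment performed by the identification unit 243 can be sketched as a small pure function. The `Roles` data class, the use of the reference numerals 3 and 4 as device identifiers, and the function name `identify` are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

FIRST_DEVICE = 3   # first operation device 3 (identifier is an assumption)
SECOND_DEVICE = 4  # second operation device 4 (identifier is an assumption)


@dataclass(frozen=True)
class Roles:
    object_device: Optional[int] = None
    parameter_device: Optional[int] = None


def identify(roles: Roles, sender: int,
             satisfies_first: bool, satisfies_second: bool,
             devices: Tuple[int, ...] = (FIRST_DEVICE, SECOND_DEVICE)) -> Roles:
    """Update device roles from one piece of received operation information."""
    obj, par = roles.object_device, roles.parameter_device
    if satisfies_first:
        obj = sender  # sender becomes the object operating device
    if satisfies_second:
        par = sender  # sender becomes the parameter operating device
        if obj == par:
            # The same device cannot hold both roles: hand the object role
            # to a device other than the parameter operating device.
            obj = next(d for d in devices if d != par)
    return Roles(obj, par)
```

With two devices, a player who casts with one device and then starts the reciprocating motion with that same device ends up with the other device controlling the object.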

- the object control unit 244 changes the first object OJ1 based on the operation information transmitted from the object operating device at predetermined time intervals. When it is determined that the operation information from the object operating device satisfies the first operation information condition, the object control unit 244 may move the first object OJ1 from a predetermined first object position PO1 to a predetermined second object position PO2.

- the object control unit 244 calculates the moving direction and moving distance of the second object OJ2 based on the operation information transmitted from the object operating device at predetermined time intervals, and moves the second object OJ2 based on the calculated moving direction and moving distance.

- the object control unit 244 moves the second object based on the parameter changed by the parameter control unit 245 described later.

- the object control unit 244 reads the information on the line-of-sight direction and the field of view of the virtual camera C stored in the storage unit 22 as the information on the movable range, and, if the second object OJ2 moved based on the calculated moving direction and moving distance deviates from the movable range, corrects the position of the second object OJ2 to within the movable range.
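
The move-then-correct step can be sketched as a clamp. For simplicity this sketch assumes the movable range has already been reduced to an axis-aligned rectangle; deriving that rectangle from the line-of-sight direction and field of view of the virtual camera C is not shown, and the names used are hypothetical.

```python
from typing import Tuple

Vec2 = Tuple[float, float]
Bounds = Tuple[Vec2, Vec2]  # ((min_x, min_y), (max_x, max_y)), assumed shape


def move_and_clamp(pos: Vec2, direction: Vec2, distance: float,
                   bounds: Bounds) -> Vec2:
    """Move the second object OJ2, then correct it into the movable range.

    direction is assumed to be a unit vector.
    """
    (min_x, min_y), (max_x, max_y) = bounds
    x = pos[0] + direction[0] * distance
    y = pos[1] + direction[1] * distance
    # Correct the position if the move left the movable range.
    return (min(max(x, min_x), max_x), min(max(y, min_y), max_y))
```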

- the parameter control unit 245 changes the parameter based on the operation information transmitted from the parameter operating device at predetermined time intervals.

- the display processing unit 246 generates an image showing the virtual space V in the field of view FV, which is projected on a predetermined two-dimensional screen surface arranged in the line-of-sight direction of the virtual camera C, and displays the generated image on the display unit 23.

- when an object in the virtual space V changes (moves, deforms, or the like) and the changed object is included in the field of view FV, the display processing unit 246 generates an image showing the virtual space V in the field of view FV including the changed object, and displays the generated image on the display unit 23.

- when the identification unit 243 identifies the operation device identified as the parameter operation device as the object operation device, the display processing unit 246 also superimposes an image showing a gauge G corresponding to the parameter changed by the parameter control unit 245 on the image showing the virtual space V and displays it on the display unit 23.

- FIG. 15 is a diagram showing an example of an operation flow of game progression processing by the processing unit 24 of the game processing device 2.

- the game progress control unit 241 receives the operation information output from the first operating device 3 and the second operating device 4 through the communication unit 21, and determines whether the received operation information includes input unit operation information instructing the start of the game (step S101).

- the operation information including the input unit operation information instructing the start of the game is transmitted from the first transmission unit 361 or the second transmission unit 461 via the first communication unit 31 or the second communication unit 41 when the player operates the predetermined first input unit 33 of the first operation device 3 or the predetermined second input unit 43 of the second operation device 4.

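- if the received operation information does not include input unit operation information instructing the start of the game (step S101-No), the game progress control unit 241 returns the process to step S101.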

- if the received operation information includes input unit operation information instructing the start of the game (step S101-Yes), the determination unit 242, the identification unit 243, and the object control unit 244 execute the object change process (step S102). The details of the object change process will be described later.

- next, the game progress control unit 241, the determination unit 242, the identification unit 243, the object control unit 244, and the parameter control unit 245 execute the parameter change process (step S103).

- the details of the parameter change process will be described later.

- the game progress control unit 241 receives the operation information output from the first operating device 3 and the second operating device 4 via the communication unit 21, and determines whether the input unit operation information of the received operation information includes an instruction to start the game again (step S104). If it is determined that the input unit operation information of the received operation information includes an instruction to start the game again (step S104-Yes), the game progress control unit 241 returns the process to step S102.

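- if it is determined that the input unit operation information of the received operation information does not include an instruction to start the game again (step S104-No), the game progress control unit 241 ends the game progress processing.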
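
The flow of steps S101 to S104 can be sketched as a simple loop. Modelling the incoming operation information as a stream of booleans ("does this message contain a start instruction?") and passing the two sub-processes in as callables are simplifications assumed for this sketch; the name `run_game_progress` is hypothetical.

```python
from typing import Callable, Iterable


def run_game_progress(start_requests: Iterable[bool],
                      object_change: Callable[[], None],
                      parameter_change: Callable[[], None]) -> int:
    """Run the game progression flow and return the number of rounds played."""
    requests = iter(start_requests)

    # Step S101: repeat until a start instruction is received.
    for requested in requests:
        if requested:
            break
    else:
        return 0  # input ended without any start instruction

    rounds = 0
    while True:
        object_change()     # step S102
        parameter_change()  # step S103
        rounds += 1
        # Step S104: restart requested? Yes returns to S102, No ends.
        if not next(requests, False):
            return rounds
```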

- FIG. 16 is a diagram showing an example of an operation flow of object change processing by the determination unit 242, the identification unit 243, and the object control unit 244 of the game processing device 2.

- the object change process shown in FIG. 16 is executed in step S102 of FIG. 15.

- each time the determination unit 242 receives the operation information transmitted from the first operating device 3 and the second operating device 4 at predetermined time intervals, it determines whether the received operation information satisfies the first operation information condition (step S201).

- if it is not determined that the received operation information satisfies the first operation information condition (step S201-No), the determination unit 242 returns the process to step S201.

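- if it is determined that the received operation information satisfies the first operation information condition (step S201-Yes), the identification unit 243 identifies the operating device that has transmitted the operation information as the object operating device (step S202).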

- the object control unit 244 changes the first object OJ1 based on the operation information transmitted from the object operating device at predetermined time intervals (step S203).

- when the process of step S203 is executed, the object change process ends.
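
Steps S201 to S203 can be sketched as a scan over received operation information. The tuple representation `(device_id, info)`, the predicate `first_condition`, and the callback `change_first_object` are hypothetical; the content of the first operation information condition is defined elsewhere in the specification and is not reproduced here.

```python
from typing import Callable, Iterable, Optional, Tuple, TypeVar

Info = TypeVar("Info")


def object_change_process(
        received: Iterable[Tuple[int, Info]],
        first_condition: Callable[[Info], bool],
        change_first_object: Callable[[Info], None]) -> Optional[int]:
    """Return the identifier of the device identified as the object device."""
    for device_id, info in received:
        if not first_condition(info):  # step S201-No: keep waiting
            continue
        object_device = device_id      # step S202: identify the sender
        change_first_object(info)      # step S203: change OJ1
        return object_device
    return None  # input ended before the condition was satisfied
```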

- FIG. 17 is a diagram showing an example of an operation flow of parameter change processing by the game progress control unit 241, the determination unit 242, the identification unit 243, the object control unit 244, and the parameter control unit 245 of the game processing apparatus 2.

- the parameter change process shown in FIG. 17 is executed in step S103 of FIG. 15.

- the determination unit 242 determines whether the operation information received from the first controller device 3 and the second controller device 4 at predetermined time intervals satisfies the second operation information condition (step S301).

- if it is not determined that the received operation information satisfies the second operation information condition (step S301-No), the determination unit 242 returns the process to step S301.

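- if it is determined that the received operation information satisfies the second operation information condition (step S301-Yes), the identification unit 243 identifies the operation device that has transmitted the operation information as the parameter operation device (step S302).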

- the identifying unit 243 determines whether the controller identified as the parameter controller in step S302 is an object controller (step S303).

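- if the operating device identified as the parameter operating device is not the object operating device (step S303-No), step S305 described later is executed by the parameter control unit 245.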

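- if the operating device identified as the parameter operating device is the object operating device (step S303-Yes), the identification unit 243 identifies an operating device different from the operating device identified as the parameter operating device as the object operating device (step S304).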

- the parameter control unit 245 changes the parameter based on the operation information transmitted from the parameter operating device at predetermined time intervals (step S305).

- the object control unit 244 moves the second object based on the changed parameter (step S306).

- the game progress control unit 241 determines whether the game is over (step S307).

- if it is determined that the game is not over (step S307-No), the process returns to step S301.

- if it is determined that the game is over (step S307-Yes), the parameter change process ends.
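
Steps S301 to S307 can be sketched as one loop. As before, the `(device_id, info)` tuples, the predicate `second_condition`, and the callbacks `update_parameter`, `move_object`, and `is_game_over` are hypothetical stand-ins for the units described above, not names from the specification.

```python
from typing import Callable, Iterable, Optional, Sequence, Tuple


def parameter_change_process(
        received: Iterable[Tuple[int, float]],
        second_condition: Callable[[float], bool],
        object_device: Optional[int],
        devices: Sequence[int],
        update_parameter: Callable[[float], float],
        move_object: Callable[[float], None],
        is_game_over: Callable[[], bool],
) -> Tuple[Optional[int], Optional[int]]:
    """Return (object_device, parameter_device) after the process ends."""
    parameter_device = None
    for device_id, info in received:
        if not second_condition(info):          # step S301-No
            continue
        parameter_device = device_id            # step S302
        if parameter_device == object_device:   # step S303-Yes
            # Step S304: another device takes over the object role.
            object_device = next(d for d in devices if d != parameter_device)
        parameter = update_parameter(info)      # step S305
        move_object(parameter)                  # step S306
        if is_game_over():                      # step S307-Yes
            break
    return object_device, parameter_device
```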

- as described above, when the player operates any one of the plurality of operating devices, the operation target object can be operated automatically. Therefore, the player can enjoy the game without configuring the operating devices, and the game system of at least some embodiments of the present invention can improve the operability of the operating devices.