EP0847198B1 - Method, device and disc for recording and reproducing an interleaved bitstream on and from the disc

- Publication number

- EP0847198B1 EP19960932020 EP96932020A

- Authority

- EP

- European Patent Office

- Prior art keywords

- ilvu

- data

- vob

- video

- angle

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Expired - Lifetime

Classifications

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N5/00—Details of television systems

- H04N5/76—Television signal recording

- H04N5/91—Television signal processing therefor

- H04N5/92—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N9/00—Details of colour television systems

- H04N9/79—Processing of colour television signals in connection with recording

- H04N9/80—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback

- H04N9/804—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components

- H04N9/8042—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components involving data reduction

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B20/00—Signal processing not specific to the method of recording or reproducing; Circuits therefor

- G11B20/00007—Time or data compression or expansion

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B20/00—Signal processing not specific to the method of recording or reproducing; Circuits therefor

- G11B20/10—Digital recording or reproducing

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B20/00—Signal processing not specific to the method of recording or reproducing; Circuits therefor

- G11B20/10—Digital recording or reproducing

- G11B20/12—Formatting, e.g. arrangement of data block or words on the record carriers

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B27/00—Editing; Indexing; Addressing; Timing or synchronising; Monitoring; Measuring tape travel

- G11B27/02—Editing, e.g. varying the order of information signals recorded on, or reproduced from, record carriers

- G11B27/031—Electronic editing of digitised analogue information signals, e.g. audio or video signals

- G11B27/034—Electronic editing of digitised analogue information signals, e.g. audio or video signals on discs

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B27/00—Editing; Indexing; Addressing; Timing or synchronising; Monitoring; Measuring tape travel

- G11B27/10—Indexing; Addressing; Timing or synchronising; Measuring tape travel

- G11B27/102—Programmed access in sequence to addressed parts of tracks of operating record carriers

- G11B27/105—Programmed access in sequence to addressed parts of tracks of operating record carriers of operating discs

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B27/00—Editing; Indexing; Addressing; Timing or synchronising; Monitoring; Measuring tape travel

- G11B27/10—Indexing; Addressing; Timing or synchronising; Measuring tape travel

- G11B27/19—Indexing; Addressing; Timing or synchronising; Measuring tape travel by using information detectable on the record carrier

- G11B27/28—Indexing; Addressing; Timing or synchronising; Measuring tape travel by using information detectable on the record carrier by using information signals recorded by the same method as the main recording

- G11B27/30—Indexing; Addressing; Timing or synchronising; Measuring tape travel by using information detectable on the record carrier by using information signals recorded by the same method as the main recording on the same track as the main recording

- G11B27/3027—Indexing; Addressing; Timing or synchronising; Measuring tape travel by using information detectable on the record carrier by using information signals recorded by the same method as the main recording on the same track as the main recording used signal is digitally coded

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B27/00—Editing; Indexing; Addressing; Timing or synchronising; Monitoring; Measuring tape travel

- G11B27/10—Indexing; Addressing; Timing or synchronising; Measuring tape travel

- G11B27/19—Indexing; Addressing; Timing or synchronising; Measuring tape travel by using information detectable on the record carrier

- G11B27/28—Indexing; Addressing; Timing or synchronising; Measuring tape travel by using information detectable on the record carrier by using information signals recorded by the same method as the main recording

- G11B27/32—Indexing; Addressing; Timing or synchronising; Measuring tape travel by using information detectable on the record carrier by using information signals recorded by the same method as the main recording on separate auxiliary tracks of the same or an auxiliary record carrier

- G11B27/327—Table of contents

- G11B27/329—Table of contents on a disc [VTOC]

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B2220/00—Record carriers by type

- G11B2220/20—Disc-shaped record carriers

- G11B2220/23—Disc-shaped record carriers characterised in that the disc has a specific layer structure

- G11B2220/235—Multilayer discs, i.e. multiple recording layers accessed from the same side

- G11B2220/237—Multilayer discs, i.e. multiple recording layers accessed from the same side having exactly two recording layers

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B2220/00—Record carriers by type

- G11B2220/20—Disc-shaped record carriers

- G11B2220/25—Disc-shaped record carriers characterised in that the disc is based on a specific recording technology

- G11B2220/2537—Optical discs

- G11B2220/2562—DVDs [digital versatile discs]; Digital video discs; MMCDs; HDCDs

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N5/00—Details of television systems

- H04N5/76—Television signal recording

- H04N5/84—Television signal recording using optical recording

- H04N5/85—Television signal recording using optical recording on discs or drums

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N9/00—Details of colour television systems

- H04N9/79—Processing of colour television signals in connection with recording

- H04N9/80—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback

- H04N9/804—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components

- H04N9/806—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components with processing of the sound signal

- H04N9/8063—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components with processing of the sound signal using time division multiplex of the PCM audio and PCM video signals

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N9/00—Details of colour television systems

- H04N9/79—Processing of colour television signals in connection with recording

- H04N9/80—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback

- H04N9/804—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components

- H04N9/806—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components with processing of the sound signal

- H04N9/8063—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components with processing of the sound signal using time division multiplex of the PCM audio and PCM video signals

- H04N9/8066—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components with processing of the sound signal using time division multiplex of the PCM audio and PCM video signals with insertion of the PCM audio signals in the vertical blanking interval of the PCM video signal

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N9/00—Details of colour television systems

- H04N9/79—Processing of colour television signals in connection with recording

- H04N9/80—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback

- H04N9/82—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback the individual colour picture signal components being recorded simultaneously only

- H04N9/8205—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback the individual colour picture signal components being recorded simultaneously only involving the multiplexing of an additional signal and the colour video signal

- H04N9/8227—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback the individual colour picture signal components being recorded simultaneously only involving the multiplexing of an additional signal and the colour video signal the additional signal being at least another television signal

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N9/00—Details of colour television systems

- H04N9/79—Processing of colour television signals in connection with recording

- H04N9/87—Regeneration of colour television signals

- H04N9/877—Regeneration of colour television signals by assembling picture element blocks in an intermediate memory

Definitions

- the present invention relates to a method and apparatus for interleaving a bitstream for use in an authoring system for variously processing a data bitstream comprising the video data, audio data, and sub-picture data constituting each of plural program titles containing related video data, audio data, and sub-picture data content to generate a bitstream from which a new title containing the content desired by the user can be reproduced, and efficiently recording and reproducing said generated bitstream using a particular recording medium.

- Video-CDs in particular are able to record video data to a CD format disk, which was originally designed with an approximately 600 MB recording capacity for storing digital audio data only, by using such high efficiency video compression techniques as MPEG.

- MPEG: high efficiency video compression techniques

- karaoke titles and other conventional laser disk applications are gradually being transferred to the video CD format.

- each title must be composed from bitstreams with an increasingly deep hierarchical structure.

- the data size of multimedia titles written with bitstreams having such deep hierarchical structures is ten or more times greater than the data size of less complex titles.

- the need to edit small image (title) details also makes it necessary to process and control the bitstream using low order hierarchical data units.

- One way to increase the storage capacity of the optical disk is to reduce the spot diameter D of the optical (laser) beam. If the wavelength of the laser beam is λ and the aperture of the objective lens is NA, then the spot diameter D is proportional to λ/NA, and the storage capacity can be efficiently improved by decreasing λ and increasing NA.
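The proportionality above can be illustrated with a short calculation. This is only a sketch: the wavelength and aperture pairs are the commonly quoted CD and DVD figures rather than values taken from this patent, and the assumption that capacity scales as 1/D² (areal density) is added here for illustration.

```python
# Illustrative sketch: D is proportional to wavelength / NA.
# Assumption (not from the patent): capacity scales with 1 / D**2.

def relative_spot_diameter(wavelength_nm: float, na: float) -> float:
    """Spot diameter up to a constant factor."""
    return wavelength_nm / na


def capacity_gain(old_wavelength_nm: float, old_na: float,
                  new_wavelength_nm: float, new_na: float) -> float:
    """Areal-density gain obtained by shrinking the spot."""
    d_old = relative_spot_diameter(old_wavelength_nm, old_na)
    d_new = relative_spot_diameter(new_wavelength_nm, new_na)
    return (d_old / d_new) ** 2


# Commonly quoted optics: CD about 780 nm / NA 0.45, DVD about 650 nm / NA 0.60.
gain = capacity_gain(780.0, 0.45, 650.0, 0.60)
print(round(gain, 2))  # 2.56
```

Under these assumptions the optics alone account for roughly a 2.6-fold density gain; the rest of the capacity increase of a real disc format would have to come from other measures such as coding efficiency.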

- tilt: coma caused by a relative tilt between the disk surface and the optical axis of the laser beam

- NA: aperture of the objective lens

- To reduce tilt-induced coma, the transparent substrate must be made very thin. The problem is that the mechanical strength of the disk is low when the transparent substrate is very thin.

- MPEG1: the conventional method of recording and reproducing video, audio, and graphic signal data

- MPEG2: the more robust method, which can transfer large data volumes at a higher rate.

- the compression method and data format of the MPEG2 standard differ somewhat from those of MPEG1.

- the specific content of and differences between MPEG1 and MPEG2 are described in detail in the ISO-11172 and ISO-13818 MPEG standards, and further description thereof is omitted below.

- the object of the present invention is to provide an effective authoring system for controlling a multimedia data bitstream with advanced hardware and software requirements using a data unit smaller than the title to better address advanced user requirements.

- the object of the present invention is therefore to provide a data structure enabling discrete scene data to be seamlessly reproduced, i.e., without intermittence in the data presentation, even when reproducing such multi-scene data; a method for generating a system stream having said data structure; a recording apparatus and a reproduction apparatus for recording and reproducing said system stream; and a medium to which said system stream can be recorded and from which said system stream can be reproduced by said recording apparatus and reproduction apparatus.

- a further problem can be expected when the multi-scene data is multi-angle scene data, i.e., scene data showing substantially the same subject from different angles.

- this multi-angle scene data may be obtained by recording a baseball batter, for example, with cameras in different locations. The problem is thus that when the user selects one of these plural angle scenes during data presentation, data from different angles is connected where the angle changes, and a natural, seamless presentation cannot be expected.

- WO - A - 95/12179 discloses a data stream which contains two alternatively reproducible video presentations.

- the data stream disclosed in this document contains three types of data blocks. Two types of data blocks belong to different versions of video presentations, whereas a third type of data block is common to both versions. If a particular video version is to be replayed, the non-selected video data units have to be jumped over in order to go to the next block.

- the object of the present invention is to provide a data structure whereby natural, seamless reproduction without scene data intermitting can be achieved even in such multi-angle scene periods; a method for generating a system stream having said data structure; a recording apparatus and a reproduction apparatus for recording and reproducing said system stream; and a medium to which said system stream can be recorded and from which said system stream can be reproduced by said recording apparatus and reproduction apparatus.

- according to the present invention, a bit stream generating method generates a bit stream from a plurality of video objects VOB containing compressed video data to be stored on an optical disc which is reproducible by a predetermined disc reproducing apparatus, said bit stream generating method being characterized by the steps of: providing m video objects VOB with identical video data presentation time, wherein m>1; segmenting each of said m video objects VOB into v interleave units ILVU, wherein v>1; and interleaving said interleave units ILVU of said m segmented video objects VOB in a sequence $\mathrm{ILVU}_{11}, \mathrm{ILVU}_{21}, \ldots, \mathrm{ILVU}_{(m-1)1}, \mathrm{ILVU}_{m1}, \ldots$

- an optical disc containing a bit stream which comprises a plurality of video objects VOB along a spiral track containing compressed video data for being reproduced by a predetermined disc reproducing apparatus, wherein said disc comprises a video object storage area for storing one or more video objects VOB, characterized in that said optical disk M contains a management information storage area for storing presentation sequence information indicative of a video object VOB presentation sequence, and group information indicative of one or more groups of alternatively reproducible video objects VOB, and in that said video objects VOB contain at least m video objects VOB with identical video data presentation time, wherein each of said m video objects VOB are alternatively reproducible, and are segmented into v interleave units ILVU, and wherein said interleave units ILVU of said m segmented video objects VOB are interleaved along the storage and reproduction track in a sequence $\mathrm{ILVU}_{11}, \mathrm{ILVU}_{21}, \ldots$

- each interleave unit ILVU comprises one or more sub regions VOBU to which video data and management information NV are stored.

- an optical disc M comprising a video object storage area for storing one or more video objects VOB along a spiral track containing compressed video data, characterized in that said video objects VOB contain at least m video objects VOB with identical video data presentation time, wherein each of said m video objects VOB are alternatively reproducible, and segmented into v interleave units ILVU, and wherein said interleave units ILVU of said m segmented video objects VOB are interleaved along the storage and reproduction track in a sequence $\mathrm{ILVU}_{11}, \mathrm{ILVU}_{21}, \ldots, \mathrm{ILVU}_{(m-1)1}, \mathrm{ILVU}_{m1}, \mathrm{ILVU}_{12}, \mathrm{ILVU}_{22}, \ldots$

- each interleave unit ILVU comprises one or more sub regions VOBU to which video data and management information NV are stored

- said management information NV comprises end address information ILVU_EA indicative of an end address of an associated interleave unit ILVU, linking information NT_ILVU_SA indicative of a position on the disc M of a next interleave unit ILVU to be reproduced, and scene viewing angle linking information SML_AGL_C#1-9_DSTA indicative of a position on the optical disc M of a next interleave unit ILVU to be reproduced for each video object VOB during alternative reproduction.
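The interleaved layout recited above (m video objects with identical presentation time, each cut into v interleave units and laid along the track unit by unit) can be sketched as a simple ordering function. The function name and the `(vob, unit)` tuple representation are illustrative choices, not part of the patent.

```python
from typing import List, Tuple


def interleave_order(m: int, v: int) -> List[Tuple[int, int]]:
    """Return the track order of interleave units as (vob, unit) pairs.

    Unit j of every video object is placed before unit j + 1 of any
    video object, which reproduces ILVU_11, ILVU_21, ..., ILVU_m1,
    ILVU_12, ILVU_22, ..., ILVU_mv.
    """
    if m <= 1 or v <= 1:
        raise ValueError("the claims require m > 1 and v > 1")
    return [(vob, unit)
            for unit in range(1, v + 1)
            for vob in range(1, m + 1)]


print(interleave_order(3, 2))
# [(1, 1), (2, 1), (3, 1), (1, 2), (2, 2), (3, 2)]
```

With this ordering, the units of one video object are never adjacent on the track, so a player following a single video object has to skip the m - 1 units of the other video objects between consecutive reads.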

- a disc reproducing apparatus comprises a means for reading presentation sequence information, group information, and a video object VOB from a disc M according to the present invention, wherein said disk stores at least m video objects VOB with identical video presentation time being segmented into v interleave units ILVU in a sequence $\mathrm{ILVU}_{11}, \mathrm{ILVU}_{21}, \ldots, \mathrm{ILVU}_{(m-1)1}, \mathrm{ILVU}_{m1}, \mathrm{ILVU}_{12}, \mathrm{ILVU}_{22}, \ldots, \mathrm{ILVU}_{(m-1)2}, \mathrm{ILVU}_{m2}, \ldots, \mathrm{ILVU}_{1(v-1)}, \mathrm{ILVU}_{2(v-1)}, \ldots, \mathrm{ILVU}_{(m-1)(v-1)}, \mathrm{ILVU}_{m(v-1)}, \ldots$

- a reproduction method for a disc wherein said disk stores at least m video objects VOB with identical video presentation time being segmented into v interleave units ILVU in a sequence $\mathrm{ILVU}_{11}, \mathrm{ILVU}_{21}, \ldots, \mathrm{ILVU}_{(m-1)1}, \mathrm{ILVU}_{m1}, \mathrm{ILVU}_{12}, \mathrm{ILVU}_{22}, \ldots, \mathrm{ILVU}_{(m-1)2}, \mathrm{ILVU}_{m2}, \ldots, \mathrm{ILVU}_{1(v-1)}, \mathrm{ILVU}_{2(v-1)}, \ldots, \mathrm{ILVU}_{(m-1)(v-1)}, \mathrm{ILVU}_{m(v-1)}, \mathrm{ILVU}_{1v}, \mathrm{ILVU}_{2v}, \ldots, \mathrm{ILVU}_{(m-1)v}, \mathrm{ILVU}_{mv}$, wherein v>1 and m>1, comprising steps for: selecting a video object to be presented, reading the selected video object, retrieving and storing the management information NV from the selected video object, presenting the selected video object, detecting during the presentation of the selected video object whether presentation of the video object has continued to a position indicated by end address information ILVU_EA in the management information NV, and resuming video object presentation from a position indicated by a scene-viewing angle linking information SML_AGL_C#1-9_DSTA when video object presentation has been completed to the position indicated by end address information ILVU_EA of the management information NV.
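The reproduction steps above can be sketched as a small simulation. Everything below is a simplified model under stated assumptions: addresses are treated as absolute sector numbers, every interleave unit has the same hypothetical size, and the `NV` class holds only the three fields named in the text (`ILVU_EA`, `NT_ILVU_SA`, and the per-angle `SML_AGL_C#n_DSTA` entries). It is not the on-disc format.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class NV:
    ilvu_ea: int                           # end address of this interleave unit
    nt_ilvu_sa: Optional[int]              # next unit of the same video object
    sml_agl_dsta: Dict[int, Optional[int]]  # angle number -> next unit for that angle


def build_disc(m: int, v: int, size: int = 4) -> Dict[int, Tuple[Tuple[int, int], NV]]:
    """Lay out m angles x v units in interleaved order (hypothetical unit size)."""
    def start(vob: int, unit: int) -> int:
        return ((unit - 1) * m + (vob - 1)) * size

    disc = {}
    for unit in range(1, v + 1):
        for vob in range(1, m + 1):
            last = unit == v
            nv = NV(
                ilvu_ea=start(vob, unit) + size - 1,
                nt_ilvu_sa=None if last else start(vob, unit + 1),
                sml_agl_dsta={k: None if last else start(k, unit + 1)
                              for k in range(1, m + 1)},
            )
            disc[start(vob, unit)] = ((vob, unit), nv)
    return disc


def play(disc, angle: int, requests: Dict[int, int]) -> List[Tuple[int, int]]:
    """Present units until no link remains; requests maps unit index -> new angle."""
    addr = next(a for a, (label, _) in sorted(disc.items()) if label == (angle, 1))
    played = []
    while addr is not None:
        label, nv = disc[addr]
        played.append(label)  # "present" sectors addr .. nv.ilvu_ea
        new_angle = requests.get(label[1], angle)
        # On reaching ILVU_EA, follow the link for the currently selected angle.
        addr = nv.nt_ilvu_sa if new_angle == angle else nv.sml_agl_dsta[new_angle]
        angle = new_angle
    return played


disc = build_disc(m=3, v=3)
print(play(disc, 1, {1: 3}))  # [(1, 1), (3, 2), (3, 3)]
```

In this model an angle change requested during unit 1 takes effect only at the unit boundary, which is the point of storing the link targets in the management information of every unit.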

- one title refers to the combination of video and audio data expressing program content recognized by a user for education, entertainment, or other purpose.

- With reference to a motion picture (movie), one title may correspond to the content of an entire movie, or to just one scene within said movie.

- a video title set comprises the bitstream data containing the information for a specific number of titles. More specifically, each VTS comprises the video, audio, and other reproduction data representing the content of each title in the set, and control data for controlling the content data.

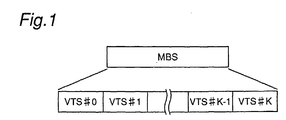

- the video zone VZ is the video data unit processed by the authoring system, and comprises a specific number of video title sets. More specifically, each video zone is a linear sequence of K + 1 video title sets numbered VTS #0 - VTS #K where K is an integer value of zero or greater.

- One video title set, preferably the first video title set VTS #0, is used as the video manager describing the content information of the titles contained in each video title set.

- the multimedia bitstream MBS is the largest control unit of the multimedia data bitstream handled by the authoring system of the present invention, and comprises plural video zones VZ.
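The containment hierarchy described above (multimedia bitstream MBS, video zones VZ, video title sets VTS #0 to VTS #K) can be sketched as plain data classes. The class and field names are illustrative, and treating VTS #0 as the video manager follows the preferred arrangement in the text rather than a requirement.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VideoTitleSet:
    number: int                                  # index within the zone: 0..K
    titles: List[str] = field(default_factory=list)


@dataclass
class VideoZone:
    title_sets: List[VideoTitleSet]

    def __post_init__(self) -> None:
        # A zone is a linear sequence VTS #0 .. VTS #K with K >= 0.
        if not self.title_sets:
            raise ValueError("a video zone needs at least VTS #0")
        for expected, vts in enumerate(self.title_sets):
            if vts.number != expected:
                raise ValueError("video title sets must be numbered 0..K in order")

    @property
    def video_manager(self) -> VideoTitleSet:
        # Preferred arrangement in the text: VTS #0 acts as the video manager.
        return self.title_sets[0]


@dataclass
class MultimediaBitstream:
    zones: List[VideoZone]                       # plural video zones VZ


def make_zone(k: int) -> VideoZone:
    """Build a zone holding K + 1 empty title sets."""
    return VideoZone([VideoTitleSet(n) for n in range(k + 1)])


mbs = MultimediaBitstream([make_zone(2), make_zone(0)])
print([len(zone.title_sets) for zone in mbs.zones])  # [3, 1]
```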

- A preferred embodiment of the authoring encoder EC according to the present invention for generating a new multimedia bitstream MBS by re-encoding the original multimedia bitstream MBS according to the scenario desired by the user is shown in Fig. 2.

- the original multimedia bitstream MBS comprises a video stream St1 containing the video information, a sub-picture stream St3 containing caption text and other auxiliary video information, and the audio stream St5 containing the audio information.

- the video and audio streams are the bitstreams containing the video and audio information obtained from the source within a particular period of time.

- the sub-picture stream is a bitstream containing momentary video information relevant to a particular scene.

- the sub-picture data encoded to a single scene may be captured to video memory and displayed continuously from the video memory for plural scenes as may be necessary.

- when this multimedia source data St1, St3, and St5 is obtained from a live broadcast, the video and audio signals are supplied in real-time from a video camera or other imaging source; when the multimedia source data is reproduced from a video tape or other recording medium, the audio and video signals are not real-time signals.

- while the multimedia source stream is shown in Fig. 2 as comprising these three source signals, this is for convenience only; the multimedia source stream may contain more than three types of source signals, and may contain source data for different titles. Multimedia source data with audio, video, and sub-picture data for plural titles are referred to below as multi-title streams.

- the authoring encoder EC comprises a scenario editor 100, encoding system controller 200, video encoder 300, video stream buffer 400, sub-picture encoder 500, sub-picture stream buffer 600, audio encoder 700, audio stream buffer 800, system encoder 900, video zone formatter 1300, recorder 1200, and recording medium M.

- the video zone formatter 1300 comprises video object (VOB) buffer 1000, formatter 1100, and volume and file structure formatter 1400.

- VOB: video object

- the bitstream encoded by the authoring encoder EC of the present embodiment is recorded by way of example only to an optical disk.

- the scenario editor 100 of the authoring encoder EC outputs the scenario data, i.e., the user-defined editing instructions.

- the scenario data controls editing the corresponding parts of the multimedia bitstream MBS according to the user's manipulation of the video, sub-picture, and audio components of the original multimedia title.

- This scenario editor 100 preferably comprises a display, speaker(s), keyboard, CPU, and source stream buffer.

- the scenario editor 100 is connected to an external multimedia bitstream source from which the multimedia source data St1, St3, and St5 are supplied.

- the user is thus able to reproduce the video and audio components of the multimedia source data using the display and speaker to confirm the content of the generated title.

- the user is then able to edit the title content according to the desired scenario using the keyboard, mouse, and other command input devices while confirming the content of the title on the display and speakers.

- the result of this multimedia data manipulation is the scenario data St7.

- the scenario data St7 is basically a set of instructions describing what source data is selected from all or a subset of the source data containing plural titles within a defined time period, and how the selected source data is reassembled to reproduce the scenario (sequence) intended by the user.

- the CPU codes the position, length, and the relative time-based positions of the edited parts of the respective multimedia source data streams St1, St3, and St5 to generate the scenario data St7.

- the source stream buffer has a specific capacity, and is used to delay the multimedia source data streams St1, St3, and St5 a known time Td and then output streams St1, St3, and St5.

- This delay is required for synchronization with the editor encoding process. More specifically, when data encoding and user generation of scenario data St7 are executed simultaneously, i.e., when encoding immediately follows editing, time Td is required to determine the content of the multimedia source data editing process based on the scenario data St7 as will be described further below. As a result, the multimedia source data must be delayed by time Td to synchronize the editing process during the actual encoding operation. Because this delay time Td is limited to the time required to synchronize the operation of the various system components in the case of sequential editing as described above, the source stream buffer is normally achieved by means of a high speed storage medium such as semiconductor memory.

- the source stream buffer may be a low speed, high capacity storage medium such as video tape, magnetic disk, or optical disk.

- the structure (type) of media used for the source stream buffer may therefore be determined according to the delay time Td required and the allowable manufacturing cost.
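The role of the source stream buffer, holding St1, St3, and St5 back by a known time Td before releasing them, can be sketched as a fixed-length FIFO. Measuring Td in whole "units" pushed per call is a simplification introduced here; the class and method names are hypothetical.

```python
from collections import deque
from typing import Deque, Optional, Tuple

Sample = Tuple[str, str, str]  # one unit each of St1 (video), St3 (sub-picture), St5 (audio)


class SourceStreamBuffer:
    """Delay line: whatever is pushed comes back out delay_units pushes later."""

    def __init__(self, delay_units: int) -> None:
        if delay_units < 0:
            raise ValueError("delay must be non-negative")
        self._delay = delay_units
        self._fifo: Deque[Sample] = deque()

    def push(self, st1: str, st3: str, st5: str) -> Optional[Sample]:
        self._fifo.append((st1, st3, st5))
        # Nothing is released until Td worth of data has accumulated.
        if len(self._fifo) > self._delay:
            return self._fifo.popleft()
        return None


buf = SourceStreamBuffer(delay_units=2)
outputs = [buf.push(f"v{t}", f"s{t}", f"a{t}") for t in range(4)]
print(outputs)
# [None, None, ('v0', 's0', 'a0'), ('v1', 's1', 'a1')]
```

The required capacity grows with Td, which mirrors the trade-off in the text between a small, fast semiconductor buffer and a slower, higher-capacity medium.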

- the encoding system controller 200 is connected to the scenario editor 100 and receives the scenario data St7 therefrom. Based on the time-base position and length information of the edit segment contained in the scenario data St7, the encoding system controller 200 generates the encoding parameter signals St9, St11, and St13 for encoding the edit segment of the multimedia source data.

- the encoding signals St9, St11, and St13 supply the parameters used for video, sub-picture, and audio encoding, including the encoding start and end timing.

- multimedia source data St1, St3, and St5 are output after delay time Td by the source stream buffer, and are therefore synchronized to encoding parameter signals St9, St11, and St13.

- encoding parameter signal St9 is the video encoding signal specifying the encoding timing of video stream St1 to extract the encoding segment from the video stream St1 and generate the video encoding unit.

- Encoding parameter signal St11 is likewise the sub-picture stream encoding signal used to generate the sub-picture encoding unit by specifying the encoding timing for sub-picture stream St3.

- Encoding parameter signal St13 is the audio encoding signal used to generate the audio encoding unit by specifying the encoding timing for audio stream St5.
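How the encoding system controller might derive the three encoding parameter signals from the scenario data can be sketched as follows. The representation of St7 as a list of edit segments with a start position and length, and the reduction of each parameter signal to start and end timing only, are assumptions made for illustration; the text lists further parameters such as bit rate and material type.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class EditSegment:
    stream: str    # "St1" (video), "St3" (sub-picture) or "St5" (audio)
    start: float   # time-base position of the edit segment, in seconds
    length: float  # length of the edit segment, in seconds


@dataclass(frozen=True)
class EncodeParams:
    start: float   # encoding start timing
    end: float     # encoding end timing


# Source stream -> encoding parameter signal, as named in the text.
SIGNAL_FOR_STREAM = {"St1": "St9", "St3": "St11", "St5": "St13"}


def encoding_parameter_signals(st7: List[EditSegment]) -> Dict[str, List[EncodeParams]]:
    """Turn scenario data St7 into per-encoder start/end timing."""
    signals: Dict[str, List[EncodeParams]] = {"St9": [], "St11": [], "St13": []}
    for seg in st7:
        signal = SIGNAL_FOR_STREAM[seg.stream]
        signals[signal].append(EncodeParams(seg.start, seg.start + seg.length))
    return signals


st7 = [
    EditSegment("St1", 10.0, 5.0),
    EditSegment("St5", 10.0, 5.0),
    EditSegment("St3", 12.0, 1.5),
]
signals = encoding_parameter_signals(st7)
print(signals["St11"])  # [EncodeParams(start=12.0, end=13.5)]
```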

- Based on the time-base relationship between the encoding segments of streams St1, St3, and St5 in the multimedia source data contained in scenario data St7, the encoding system controller 200 generates the timing signals St21, St23, and St25 for arranging the encoded multimedia stream in the specified time-base relationship.

- the encoding system controller 200 also generates the reproduction time information IT defining the reproduction time of the title editing unit (video object, VOB), and the stream encoding data St33 defining the system encode parameters for multiplexing the encoded multimedia stream containing video, audio, and sub-picture data. Note that the reproduction time information IT and stream encoding data St33 are generated for the video object VOB of each title in one video zone VZ.

- the encoding system controller 200 also generates the title sequence control signal St39, which declares the formatting parameters for formatting the title editing units VOB of each of the streams in a particular time-base relationship as a multimedia bitstream. More specifically, the title sequence control signal St39 is used to control the connections between the title editing units (VOB) of each title in the multimedia bitstream MBS, or to control the sequence of the interleaved title editing unit (VOBs) interleaving the title editing units VOB of plural reproduction paths.

- VOB: title editing unit

- VOBs: interleaved title editing unit

- the video encoder 300 is connected to the source stream buffer of the scenario editor 100 and to the encoding system controller 200, and receives therefrom the video stream St1 and video encoding parameter signal St9, respectively.

- Encoding parameters supplied by the video encoding signal St9 include the encoding start and end timing, bit rate, the encoding conditions for the encoding start and end, and the material type. Possible material types include NTSC or PAL video signal, and telecine converted material.

- Based on the video encoding parameter signal St9, the video encoder 300 encodes a specific part of the video stream St1 to generate the encoded video stream St15.

- the sub-picture encoder 500 is similarly connected to the source stream buffer of the scenario editor 100 and to the encoding system controller 200, and receives therefrom the sub-picture stream St3 and sub-picture encoding parameter signal St11, respectively. Based on the sub-picture encoding parameter signal St11, the sub-picture encoder 500 encodes a specific part of the sub-picture stream St3 to generate the encoded sub-picture stream St17.

- the audio encoder 700 is also connected to the source stream buffer of the scenario editor 100 and to the encoding system controller 200, and receives therefrom the audio stream St5 and audio encoding parameter signal St13, which supplies the encoding start and end timing. Based on the audio encoding parameter signal St13, the audio encoder 700 encodes a specific part of the audio stream St5 to generate the encoded audio stream St19.

- the video stream buffer 400 is connected to the video encoder 300 and to the encoding system controller 200.

- the video stream buffer 400 stores the encoded video stream St15 input from the video encoder 300, and outputs the stored encoded video stream St15 as the time-delayed encoded video stream St27 based on the timing signal St21 supplied from the encoding system controller 200.

- the sub-picture stream buffer 600 is similarly connected to the sub-picture encoder 500 and to the encoding system controller 200.

- the sub-picture stream buffer 600 stores the encoded sub-picture stream St17 output from the sub-picture encoder 500, and then outputs the stored encoded sub-picture stream St17 as time-delayed encoded sub-picture stream St29 based on the timing signal St23 supplied from the encoding system controller 200.

- the audio stream buffer 800 is similarly connected to the audio encoder 700 and to the encoding system controller 200.

- the audio stream buffer 800 stores the encoded audio stream St19 input from the audio encoder 700, and then outputs the encoded audio stream St19 as the time-delayed encoded audio stream St31 based on the timing signal St25 supplied from the encoding system controller 200.

- the system encoder 900 is connected to the video stream buffer 400, sub-picture stream buffer 600, audio stream buffer 800, and the encoding system controller 200, and is respectively supplied thereby with the time-delayed encoded video stream St27, time-delayed encoded sub-picture stream St29, time-delayed encoded audio stream St31, and the stream encoding data St33.

- the system encoder 900 is a multiplexer that multiplexes the time-delayed streams St27, St29, and St31 based on the stream encoding data St33 (timing signal) to generate title editing unit (VOB) St35.

- the stream encoding data St33 contains the system encoding parameters, including the encoding start and end timing.
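
The buffering and multiplexing stage described above can be pictured with a minimal Python sketch. It is only an illustration under stated assumptions: the `Packet` type, its `timestamp` field, and the `multiplex` function are hypothetical names introduced here, and ordering packets by timestamp is an assumption, since the text does not specify how the stream encoding data St33 orders the multiplexed output.

```python
import heapq
from dataclasses import dataclass, field
from typing import Iterable, List


@dataclass(order=True)
class Packet:
    """Hypothetical unit of an encoded stream (not defined in the source)."""
    timestamp: int                                   # assumed ordering key
    stream: str = field(compare=False)               # "video", "sub-picture", or "audio"
    payload: bytes = field(compare=False, default=b"")


def multiplex(video: Iterable[Packet],
              sub_picture: Iterable[Packet],
              audio: Iterable[Packet]) -> List[Packet]:
    """Merge three time-ordered packet sequences (standing in for St27, St29,
    and St31) into one sequence standing in for the title editing unit St35."""
    # heapq.merge expects each input to be sorted already; ties keep input order.
    return list(heapq.merge(video, sub_picture, audio))


video = [Packet(0, "video"), Packet(2, "video")]
sub_picture = [Packet(1, "sub-picture")]
audio = [Packet(1, "audio")]
vob = multiplex(video, sub_picture, audio)
print([(p.timestamp, p.stream) for p in vob])
```

A merge of pre-sorted inputs was chosen here because each stream buffer already releases its packets in time order; a real system encoder would also have to respect buffer and bit-rate constraints that this sketch ignores.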

- the video zone formatter 1300 is connected to the system encoder 900 and the encoding system controller 200 from which the title editing unit (VOB) St35 and title sequence control signal St39 (timing signal) are respectively supplied.

- the title sequence control signal St39 contains the formatting start and end timing, and the formatting parameters used to generate (format) a multimedia bitstream MBS.

- the video zone formatter 1300 rearranges the title editing units (VOB) St35 in one video zone VZ in the scenario sequence defined by the user based on the title sequence control signal St39 to generate the edited multimedia stream data St43.

- the multimedia bitstream MBS St43 edited according to the user-defined scenario is then sent to the recorder 1200.

- the recorder 1200 processes the edited multimedia stream data St43 to the data stream St45 format of the recording medium M, and thus records the formatted data stream St45 to the recording medium M.

- the multimedia bitstream MBS recorded to the recording medium M contains the volume file structure VFS, which includes the physical address of the data on the recording medium generated by the video zone formatter 1300.

- the encoded multimedia bitstream MBS St35 may be output directly to the decoder to immediately reproduce the edited title content. It will be obvious that the output multimedia bitstream MBS will not in this case contain the volume file structure VFS.

- a preferred embodiment of the authoring decoder DC used to decode the multimedia bitstream MBS edited by the authoring encoder EC of the present invention, and thereby reproduce the content of each title unit according to the user-defined scenario, is described next below with reference to Fig. 3. Note that in the preferred embodiment described below the multimedia bitstream St45 encoded by the authoring encoder EC is recorded to the recording medium M.

- the authoring decoder DC comprises a multimedia bitstream producer 2000, scenario selector 2100, decoding system controller 2300, stream buffer 2400, system decoder 2500, video buffer 2600, sub-picture buffer 2700, audio buffer 2800, synchronizer 2900, video decoder 3800, sub-picture decoder 3100, audio decoder 3200, synthesizer 3500, video data output terminal 3600, and audio data output terminal 3700.

- the bitstream producer 2000 comprises a recording media drive unit 2004 for driving the recording medium M; a reading head 2006 for reading the information recorded to the recording medium M and producing the binary read signal St57; a signal processor 2008 for variously processing the read signal St57 to generate the reproduced bitstream St61; and a reproduction controller 2002.

- the reproduction controller 2002 is connected to the decoding system controller 2300 from which the multimedia bitstream reproduction control signal St53 is supplied, and in turn generates the reproduction control signals St55 and St59 respectively controlling the recording media drive unit (motor) 2004 and signal processor 2008.

- the authoring decoder DC comprises a scenario selector 2100 for selecting and reproducing the corresponding scenes (titles).

- the scenario selector 2100 then outputs the selected titles as scenario data to the authoring decoder DC.

- the scenario selector 2100 preferably comprises a keyboard, CPU, and monitor. Using the keyboard, the user then inputs the desired scenario based on the content of the scenario input by the authoring encoder EC. Based on the keyboard input, the CPU generates the scenario selection data St51 specifying the selected scenario.

- the scenario selector 2100 is connected by an infrared communications device, for example, to the decoding system controller 2300, to which it inputs the scenario selection data St51.

- Based on the scenario selection data St51, the decoding system controller 2300 then generates the bitstream reproduction control signal St53 controlling the operation of the bitstream producer 2000.

- the stream buffer 2400 has a specific buffer capacity used to temporarily store the reproduced bitstream St61 input from the bitstream producer 2000, extract the address information and initial synchronization data SCR (system clock reference) for each stream, and generate bitstream control data St63.

- the stream buffer 2400 is also connected to the decoding system controller 2300, to which it supplies the generated bitstream control data St63.

- the synchronizer 2900 is connected to the decoding system controller 2300 from which it receives the system clock reference SCR contained in the synchronization control data St81 to set the internal system clock STC and supply the reset system clock St79 to the decoding system controller 2300.

- Based on this system clock St79, the decoding system controller 2300 also generates the stream read signal St65 at a specific interval and outputs the read signal St65 to the stream buffer 2400.

- Based on the supplied read signal St65, the stream buffer 2400 outputs the reproduced bitstream St61 at a specific interval to the system decoder 2500 as bitstream St67.

- Based on the scenario selection data St51, the decoding system controller 2300 generates the decoding signal St69 defining the stream IDs for the video, sub-picture, and audio bitstreams corresponding to the selected scenario, and outputs it to the system decoder 2500.

- the system decoder 2500 respectively outputs the video, sub-picture, and audio bitstreams input from the stream buffer 2400 to the video buffer 2600, sub-picture buffer 2700, and audio buffer 2800 as the encoded video stream St71, encoded sub-picture stream St73, and encoded audio stream St75.
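
The routing performed by the system decoder can be sketched as follows. This is a simplified illustration, not the decoder's actual implementation: the `(stream_type, stream_id, payload)` tuple layout and the `demultiplex` function are hypothetical, and the dictionary of selected IDs merely stands in for the decoding signal St69.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical packet layout: (stream type, stream ID, payload bytes).
PacketTuple = Tuple[str, int, bytes]


def demultiplex(packets: Iterable[PacketTuple],
                selected_ids: Dict[str, int]) -> Dict[str, List[bytes]]:
    """Route packets whose stream ID matches the selected scenario into
    per-type buffers (standing in for buffers 2600, 2700, and 2800)."""
    buffers: Dict[str, List[bytes]] = {"video": [], "sub-picture": [], "audio": []}
    for stream_type, stream_id, payload in packets:
        # Packets belonging to streams that were not selected are dropped.
        if selected_ids.get(stream_type) == stream_id:
            buffers[stream_type].append(payload)
    return buffers


packets = [
    ("video", 0, b"v0"),
    ("audio", 0, b"a-en"),
    ("audio", 1, b"a-fr"),
    ("sub-picture", 2, b"s2"),
]
buffers = demultiplex(packets, {"video": 0, "audio": 1, "sub-picture": 2})
print(buffers)
```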

- the system decoder 2500 detects the presentation time stamp PTS and decoding time stamp DTS of the smallest control unit in each bitstream St67 to generate the time information signal St77.

- This time information signal St77 is supplied to the synchronizer 2900 through the decoding system controller 2300 as the synchronization control data St81.

- the synchronizer 2900 determines the decoding start timing whereby each of the bitstreams will be arranged in the correct sequence after decoding, and then generates and inputs the video stream decoding start signal St89 to the video decoder 3800 based on this decoding timing.

- the synchronizer 2900 also generates and supplies the sub-picture decoding start signal St91 and audio stream decoding start signal St93 to the sub-picture decoder 3100 and audio decoder 3200, respectively.

- the video decoder 3800 generates the video output request signal St84 based on the video stream decoding start signal St89, and outputs to the video buffer 2600.

- the video buffer 2600 outputs the video stream St83 to the video decoder 3800.

- the video decoder 3800 thus detects the presentation time information contained in the video stream St83, and disables the video output request signal St84 when the length of the received video stream St83 is equivalent to the specified presentation time.

- a video stream equal in length to the specified presentation time is thus decoded by the video decoder 3800, which outputs the reproduced video signal St104 to the synthesizer 3500.

- the sub-picture decoder 3100 similarly generates the sub-picture output request signal St86 based on the sub-picture decoding start signal St91, and outputs to the sub-picture buffer 2700.

- the sub-picture buffer 2700 outputs the sub-picture stream St85 to the sub-picture decoder 3100.

- the sub-picture decoder 3100 decodes a length of the sub-picture stream St85 corresponding to the specified presentation time to reproduce and supply to the synthesizer 3500 the sub-picture signal St99.

- the synthesizer 3500 superimposes the video signal St104 and sub-picture signal St99 to generate and output the multi-picture video signal St105 to the video data output terminal 3600.

- the audio decoder 3200 generates and supplies to the audio buffer 2800 the audio output request signal St88 based on the audio stream decoding start signal St93.

- the audio buffer 2800 thus outputs the audio stream St87 to the audio decoder 3200.

- the audio decoder 3200 decodes a length of the audio stream St87 corresponding to the specified presentation time based on the presentation time information contained in the audio stream St87, and outputs the decoded audio stream St101 to the audio data output terminal 3700.

- the authoring decoder DC is able to reproduce the title content desired by the user in the desired sequence by reproducing the multimedia bitstream MBS corresponding to the selected scenario.

- the multimedia bitstream thus encoded can then be reproduced according to the one scenario selected from among plural possible scenarios. It is also possible to change scenarios while playback is in progress, i.e., to select a different scenario and dynamically generate a new multimedia bitstream according to the most recently selected scenario. It is also possible to dynamically select and reproduce any of plural scenes while reproducing the title content according to a desired scenario.

- An example of a digital video disk (DVD) with only one recording surface (a single-sided DVD) is shown in Fig. 4.

- the DVD recording medium RC1 in the preferred embodiment of the invention comprises a data recording surface RS1 to and from which data is written and read by emitting laser beam LS, and a protective layer PL1 covering the data recording surface RS1.

- a backing layer BL1 is also provided on the back of data recording surface RS1.

- the side of the disk on which protective layer PL1 is provided is therefore referred to below as side SA (commonly "side A"), and the opposite side (on which the backing layer BL1 is provided) is referred to as side SB ("side B").

- A digital video disk recording medium having a single data recording surface RS1 on only one side, such as this DVD recording medium RC1, is commonly called a single-sided, single-layer disk.

- A detailed illustration of area C1 in Fig. 4 is shown in Fig. 5.

- the data recording surface RS1 is formed by applying a metallic thin film or other reflective coating as a data layer 4109 on a first transparent layer 4108 having a particular thickness T1.

- This first transparent layer 4108 also functions as the protective layer PL1.

- a second transparent substrate 4111 of a thickness T2 functions as the backing layer BL1, and is bonded to the first transparent layer 4108 by means of an adhesive layer 4110 disposed therebetween.

- a printing layer 4112 for printing a disk label may also be disposed on the second transparent substrate 4111 as necessary.

- the printing layer 4112 does not usually cover the entire surface area of the second transparent substrate 4111 (backing layer BL1), but only the area needed to print the text and graphics of the disk label.

- the area of second transparent substrate 4111 to which the printing layer 4112 is not formed may be left exposed.

- Light reflected from the data layer 4109 (metallic thin film) forming the data recording surface RS1 can therefore be directly observed where the label is not printed when the digital video disk is viewed from side SB.

- When the metallic thin film is an aluminum thin film, for example, the background appears silver-white, and the printed text and graphics appear to float over it.

- A detailed illustration of area C2 in Fig. 5 is shown in Fig. 6.

- Pits and lands are molded to the common contact surface between the first transparent layer 4108 and the data layer 4109 on side SA from which data is read by emitting a laser beam LS, and data is recorded by varying the lengths of the pits and lands (i.e., the length of the intervals between the pits). More specifically, the pit and land configuration formed on the first transparent layer 4108 is transferred to the data layer 4109. The lengths of the pits and lands are shorter, and the pitch of the data tracks formed by the pit sequences is narrower, than with a conventional Compact Disc (CD). The surface recording density is therefore greatly improved.

- the second transparent substrate 4111 is for reinforcement, and is a transparent panel made from the same material as the first transparent layer 4108 with both sides flat.

- Thicknesses T1 and T2 are preferably equal and commonly approximately 0.6 mm, but the invention shall not be so limited.

- Because the objective lens aperture NA can be large and the wavelength λ of the light beam small in a digital video disk system, the diameter of the light spot Ls used can be reduced to approximately 1/1.6 the diameter of the light spot needed to read a CD. Note that this means the resolution of the laser beam LS in the DVD system is approximately 1.6 times the resolution of a conventional CD system.

- The optical system used to read data from the digital video disk uses a short-wavelength 650 nm red semiconductor laser and an objective lens with a numerical aperture NA of 0.6. By thus also reducing the thickness T of the transparent panels to 0.6 mm, more than 5 GB of data can be stored to one side of a 120 mm diameter optical disk.
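
The factor of approximately 1.6 can be checked with a short calculation, given here as a hedged sketch. It assumes that the diffraction-limited spot diameter scales with wavelength divided by numerical aperture, that the 0.6 figure for the DVD objective lens is its (dimensionless) numerical aperture, and that a conventional CD pickup uses a 780 nm laser with a numerical aperture of 0.45. The CD values are typical figures and are not taken from this text.

```python
def spot_scale(wavelength_nm: float, numerical_aperture: float) -> float:
    """Quantity proportional to the focused spot diameter (wavelength / NA)."""
    return wavelength_nm / numerical_aperture


# Assumed typical CD optics (not stated in the source).
cd_scale = spot_scale(780.0, 0.45)
# DVD optics as described in the text, reading 0.6 as the numerical aperture.
dvd_scale = spot_scale(650.0, 0.60)

ratio = cd_scale / dvd_scale
print(f"CD spot / DVD spot = {ratio:.2f}")  # about 1.6
```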

- the digital video disk is therefore well-suited as a recording medium for video images.

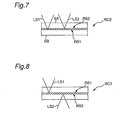

- a digital video disk recording medium with plural recording surfaces RS as described above is shown in Figs. 7 and 8.

- the DVD recording medium RC2 shown in Fig. 7 comprises two recording surfaces, i.e., first recording surface RS1 and semi-transparent second recording surface RS2, on the same side, i.e., side SA, of the disk. Data can be simultaneously recorded or reproduced from these two recording surfaces by using different laser beams LS1 and LS2 for the first recording surface RS1 and the second recording surface RS2. It is also possible to read/write both recording surfaces RS1 and RS2 using only one of the laser beams LS1 or LS2. Note that recording media thus comprised are called "single-sided, dual-layer disks."

- the DVD recording medium RC3 shown in Fig. 8 has the recording surfaces on opposite sides of the disk, i.e., has the first data recording surface RS1 on side SA and the second data recording surface RS2 on side SB. It will also be obvious that while only two recording surfaces are shown on one digital video disk in this example, more than two recording surfaces may also be formed on a double-sided digital video disk. As with the recording medium shown in Fig. 7, it is also possible to provide two separate laser beams LS1 and LS2 for recording surfaces RS1 and RS2, or to read/write both recording surfaces RS1 and RS2 using a single laser beam.

- This type of digital video disk is called a "double-sided, dual-layer disk." It will also be obvious that a double-sided digital video disk can be comprised with two or more recording surfaces per side. This type of disk is called a "double-sided, multi-layer disk."

- A plan view from the laser beam LS irradiation side of the recording surface RS of the DVD recording medium RC is shown in Fig. 9 and Fig. 10. Note that a continuous spiral data recording track TR is provided from the inside circumference to the outside circumference of the DVD. The data recording track TR is divided into plural sectors each having the same known storage capacity. Note that for simplicity only the data recording track TR is shown in Fig. 9 with more than three sectors per revolution.

- the data recording track TR is normally formed clockwise inside to outside (see arrow DrA) from the inside end point IA at the inside circumference of disk RCA to the outside end point OA at the outside circumference of the disk with the disk RCA rotating counterclockwise RdA.

- This type of disk RCA is called a clockwise disk, and the recording track formed thereon is called a clockwise track TRA.

- the recording track TRB may be formed clockwise from outside to inside circumference (see arrow DrB in Fig. 10) from the outside end point OB at the outside circumference of disk RCB to the inside end point IB at the inside circumference of the disk with the disk RCB rotating clockwise RdB.

- Because the recording track appears to wind counterclockwise when viewed from the inside circumference to the outside circumference on disks with the recording track formed in the direction of arrow DrB, these disks are referred to as counterclockwise disk RCB with counterclockwise track TRB to distinguish them from disk RCA in Fig. 9.

- track directions DrA and DrB are the track paths along which the laser beam travels when scanning the tracks for recording and playback.

- Direction of disk rotation RdA in which disk RCA turns is thus opposite the direction of track path DrA, and direction of disk rotation RdB in which disk RCB turns is thus opposite the direction of track path DrB.

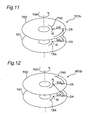

- An exploded view of the single-sided, dual-layer disk RC2 shown in Fig. 7 is shown as disk RC2o in Fig. 11. Note that the recording tracks formed on the two recording surfaces run in opposite directions. Specifically, a clockwise recording track TRA as shown in Fig. 9 is formed in clockwise direction DrA on the (lower) first data recording surface RS1, and a counterclockwise recording track TRB formed in counterclockwise direction DrB as shown in Fig. 10 is provided on the (upper) second data recording surface RS2. As a result, the outside end points OA and OB of the first and second (top and bottom) tracks are at the same radial position relative to the center axis of the disk RC2o.

- track paths DrA and DrB of tracks TR are also the data read/write directions to disk RC.

- the first and second (top and bottom) recording tracks thus wind opposite each other with this disk RC, i.e., the track paths DrA and DrB of the top and bottom recording layers are opposite track paths.

- Opposite track path type, single-sided, dual-layer disks RC2o rotate in direction RdA corresponding to the first recording surface RS1 with the laser beam LS traveling along track path DrA to trace the recording track on the first recording surface RS1.

- the laser beam LS can be refocused to end point OB on the second recording surface RS2 to continue tracing the recording track from the first to the second recording surface uninterrupted.

- the physical distance between the recording tracks TRA and TRB on the first and second recording surfaces RS1 and RS2 can thus be instantaneously eliminated by simply adjusting the focus of the laser beam LS.

- the tracks on recording surfaces RS1 and RS2 can be wound in the directions opposite those described above, i.e., the counterclockwise track TRB may be provided on the first recording surface RS1 and the clockwise track TRA on the second recording surface RS2.

- the direction of disk rotation is also changed to a clockwise rotation RdB, thereby enabling the two recording surfaces to be used as comprising a single continuous recording track as described above.

- a further example of this type of disk is therefore neither shown nor described below.

- It is therefore possible by thus constructing the digital video disk to record the multimedia bitstream MBS for a feature-length title to a single opposite track path type, single-sided, dual-layer disk RC2o. Note that this type of digital video disk medium is called a single-sided dual-layer disk with opposite track paths.

- Another example of the single-sided, dual-layer DVD recording medium RC2 shown in Fig. 7 is shown as disk RC2p in Fig. 12.

- the recording tracks formed on both first and second recording surfaces RS1 and RS2 are clockwise tracks TRA as shown in Fig. 9.

- the single-sided, dual-layer disk RC2p rotates counterclockwise in the direction of arrow RdA, and the direction of laser beam LS travel is the same as the direction of the track spiral, i.e., the track paths of the top and bottom recording surfaces are mutually parallel (parallel track paths).

- the outside end points OA of both top and bottom tracks are again preferably positioned at the same radial position relative to the center axis of the disk RC2p as described above.

- the access point can be instantaneously shifted from outside end point OA of track TRA on the first recording surface RS1 to the outside end point OA of track TRA on the second recording surface RS2 by appropriately adjusting the focus of the laser beam LS at outside end point OA.

- the recording medium RC2p must be driven in the opposite direction (clockwise, opposite direction RdA).

- the laser beam LS is therefore moved from the outside end point OA of the track on the first recording surface RS1 to the inside end point IA of the track on the second recording surface RS2 to use these physically discrete recording tracks as one logically continuous recording track.

- This type of digital video disk recording medium is called a "single-sided, dual-layer disk with parallel track paths."
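
The practical difference between the opposite and parallel track path layouts at the layer change can be summarized in a small sketch. It is a toy model under stated assumptions: radial position is normalized so that 0 is the inside end point and 1 is the outside end point, reading of the first recording surface is taken to finish at the outside end point OA, and the `TrackPath` enum and `layer_switch_jump` function are hypothetical names introduced only for this illustration.

```python
from enum import Enum


class TrackPath(Enum):
    OPPOSITE = "opposite"   # disk RC2o: second layer starts at outside end point OB
    PARALLEL = "parallel"   # disk RC2p: second layer starts at inside end point IA


def layer_switch_jump(kind: TrackPath) -> float:
    """Normalized radial distance the beam must travel when continuing
    from the end of the first layer to the start of the second layer."""
    end_of_first = 1.0  # outside end point OA of the first recording surface
    start_of_second = 1.0 if kind is TrackPath.OPPOSITE else 0.0
    return abs(end_of_first - start_of_second)


for kind in TrackPath:
    print(kind.value, layer_switch_jump(kind))
```

In this model the opposite track path disk needs only a refocus (zero radial travel), while the parallel track path disk needs a full-radius move, which matches the description of the beam being moved from the outside end point OA to the inside end point IA.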

- the single-sided, dual-layer disk RC2p with parallel track paths thus described is well-suited to storing on a single disk encyclopedia and similar multimedia bitstreams comprising multiple titles that are frequently and randomly accessed.

- An exploded view of the dual-sided single-layer DVD recording medium RC3 comprising one recording surface layer RS1 and RS2 on each side as shown in Fig. 8 is shown as DVD recording medium RC3s in Fig. 13.

- Clockwise recording track TRA is provided on the one recording surface RS1, and a counterclockwise recording track TRB is provided on the other recording surface RS2.

- the outside end points OA and OB of the recording tracks on each recording surface are preferably positioned at the same radial position relative to the center axis of the DVD recording medium RC3s.

- This type of recording medium is therefore known as a double-sided dual layer disk with symmetrical track paths.

- This double-sided dual layer disk with symmetrical track paths RC3s rotates in direction RdA when reading/writing the first recording surface RS1.

- the track path on the second recording surface RS2 on the opposite side is opposite the direction DrB in which the track winds, i.e., direction DrA.

- Accessing both recording surfaces RS1 and RS2 using a single laser beam LS is therefore not realistic irrespective of whether access is continuous or non-continuous.

- a multimedia bitstream MBS is separately recorded to the recording surfaces on the first and second sides of the disk.

- A different example of the double-sided single layer disk RC3 shown in Fig. 8 is shown in Fig. 14 as disk RC3a.

- this disk comprises clockwise recording tracks TRA as shown in Fig. 9 on both recording surfaces RS1 and RS2.

- the outside end points OA and OA of the recording tracks on each recording surface are preferably positioned at the same radial position relative to the center axis of the DVD recording medium RC3a.

- the tracks on these recording surfaces RS1 and RS2 are asymmetrical. This type of disk is therefore known as a double-sided dual layer disk with asymmetrical track paths.

- This double-sided dual layer disk with asymmetrical track paths RC3a rotates in direction RdA when reading/writing the first recording surface RS1.

- the track path on the second recording surface RS2 on the opposite side is opposite the direction DrA in which the track winds, i.e., direction DrB.

- both sides of the recording medium RC3a can be read/written without turning the disk over and without providing different laser beams for the two sides.

- the track paths for recording surfaces RS1 and RS2 are also the same with this double-sided dual layer disk with asymmetrical track paths RC3a.

- this recording medium remains functionally identical even if counterclockwise recording track TRB is provided in place of clockwise recording track TRA on both recording surfaces RS1 and RS2.

- the true value of a DVD system whereby the storage capacity of the recording medium can be easily increased by using a multiple layer recording surface is realized in multimedia applications whereby plural video data units, plural audio data units, and plural graphics data units recorded to a single disk are reproduced through interactive operation by the user.

- the digital video disk recording medium is close to solving these problems.

- One interactive operation widely sought in multimedia applications today is for the user to be able to change the position from which a scene is viewed during reproduction of that scene. This capability is achieved by means of the multiple angle function.

- the available angles may include a position behind the backstop centered on the catcher, batter, and pitcher; one from behind the backstop centered on a fielder, the pitcher, and the catcher; and one from center field showing the view to the pitcher and catcher.

- the digital video disk system uses MPEG, the same basic standard format used with Video-CDs to record the video, audio, graphics, and other signal data. Because of the differences in storage capacity, transfer rates, and signal processing performance within the reproduction apparatus, DVD uses MPEG2, the compression method and data format of which differ slightly from the MPEG1 format used with Video-CDs.

- a fully functional and practical parental lock playback function and multi-angle scene playback function must enable the user to modify the system output in minor, subtle ways while still presenting substantially the same video and audio output. If these functions are achieved by preparing and recording separate titles satisfying each of the many possible parental lock and multi-angle scene playback requests, titles that are substantially identical and differ in only minor ways must be recorded to the recording medium. This results in identical data being repeatedly recorded to the larger part of the recording medium, and significantly reduces the utilization efficiency of the available storage capacity. More particularly, it is virtually impossible to record discrete titles satisfying every possible request even using the massive capacity of the digital video disk medium. While it may be concluded that this problem can be easily solved by increasing the capacity of the recording medium, this is an obviously undesirable solution when the effective use of available system resources is considered.

- By using multi-scene control, the concept of which is described in another section below, in a DVD system, it is possible to dynamically construct titles for numerous variations of the same basic content using the smallest possible amount of data, and thereby effectively utilize the available system resources (recording medium). More specifically, titles that can be played back with numerous variations are constructed from basic (common) scene periods containing data common to each title, and multi-scene periods comprising groups of different scenes corresponding to the various requests. During reproduction, the user is able to freely and at any time select particular scenes from the multi-scene periods to dynamically construct a title conforming to the desired content, e.g., a title omitting certain scenes using the parental lock control function.
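
The idea of building a title from common scene periods and multi-scene periods can be illustrated with a minimal sketch. The data layout is an assumption made for illustration only: a common scene is a plain string, a multi-scene period is a `(name, options)` pair, and an option value of `None` models a scene omitted under parental lock. The function name `build_title` and all scene names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple, Union

MultiScene = Tuple[str, Dict[str, Optional[str]]]
Segment = Union[str, MultiScene]


def build_title(segments: List[Segment], choices: Dict[str, str]) -> List[str]:
    """Assemble a playback sequence from common scenes and the scene
    selected in each multi-scene period."""
    playlist: List[str] = []
    for segment in segments:
        if isinstance(segment, str):
            playlist.append(segment)          # common scene, shared by all titles
        else:
            name, options = segment
            scene = options[choices[name]]    # scene chosen for this period
            if scene is not None:             # None models an omitted scene
                playlist.append(scene)
    return playlist


segments: List[Segment] = [
    "intro",
    ("fight", {"adult": "fight_full", "child": None}),
    "ending",
]
print(build_title(segments, {"fight": "adult"}))
print(build_title(segments, {"fight": "child"}))
```

Only the scenes that differ are stored per variant; the common scenes are recorded once and reused, which is the storage saving the text describes.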

- The data structure used in the authoring system of a digital video disk system according to the present invention is shown in Fig. 22.

- this digital video disk system divides the recording medium into three major recording areas, the lead-in area LI, the volume space VS, and the lead-out area LO.

- the lead-in area LI is provided at the inside circumference area of the optical disk. In the disks described with reference to Figs. 9 and 10, the lead-in area LI is positioned at the inside end points IA and IB of each track. Data for stabilizing the operation of the reproducing apparatus when reading starts is written to the lead-in area LI.

- the lead-out area LO is correspondingly located at the outside circumference of the optical disk, i.e., at outside end points OA and OB of each track in the disks described with reference to Figs. 9 and 10. Data identifying the end of the volume space VS is recorded in this lead-out area LO.

- the volume space VS is located between the lead-in area LI and lead-out area LO, and is recorded as a one-dimensional array of n+1 (where n is an integer greater than or equal to zero) 2048-byte logic sectors LS.

- the logic sectors LS are sequentially numbered #0, #1, #2, ... #n.

- the volume space VS is also divided into a volume and file structure management area VFS and a file data structure area FDS.

- the volume and file structure management area VFS comprises m+1 logic sectors LS#0 to LS#m (where m is an integer greater than or equal to zero and less than n).

- the file data structure FDS comprises n-m logic sectors LS #m+1 to LS #n.
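
The sector arithmetic above can be made concrete with a short sketch. It assumes only what the text states (2048-byte logic sectors numbered #0 to #n, with the VFS occupying LS#0 to LS#m and the FDS occupying LS#m+1 to LS#n); the function names and the idea of a byte offset measured from the start of the volume space are illustrative assumptions.

```python
from typing import Tuple

SECTOR_SIZE = 2048  # bytes per logic sector, as stated in the text


def volume_layout(n: int, m: int) -> Tuple[range, range]:
    """Return the logic sector ranges of the VFS and FDS areas
    for a volume space of n+1 sectors."""
    if not 0 <= m < n:
        raise ValueError("require 0 <= m < n")
    vfs = range(0, m + 1)        # LS#0 .. LS#m   (m+1 sectors)
    fds = range(m + 1, n + 1)    # LS#m+1 .. LS#n (n-m sectors)
    return vfs, fds


def sector_offset(index: int) -> int:
    """Byte offset of a logic sector from the start of the volume space."""
    return index * SECTOR_SIZE


vfs, fds = volume_layout(n=9, m=3)
print(len(vfs), len(fds), sector_offset(fds[0]))
```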

- this file data structure area FDS corresponds to the multimedia bitstream MBS shown in Fig. 1 and described above.

- the volume file structure VFS is the file system for managing the data stored to the volume space VS as files, and is divided into logic sectors LS#0 - LS#m where m is the number of sectors required to store all data needed to manage the entire disk, and is a natural number less than n.

- Information for the files stored to the file data structure area FDS is written to the volume file structure VFS according to a known specification such as ISO-9660 or ISO-13346.

- The file data structure area FDS comprises n-m logic sectors LS#m+1 - LS#n, and contains a video manager VMG sized to an integer multiple of the logic sector (2048 x I, where I is a known integer) and k video title sets VTS #1 - VTS #k (where k is a natural number less than 100).

- the video manager VMG stores the title management information for the entire disk, and information for building a volume menu used to set and change reproduction control of the entire volume.

- Any video title set VTS #k is also called a "video file" representing a title comprising video, audio, and/or still image data.

- each video title set VTS comprises VTS information VTSI describing the management information for the entire disk, and the VTS title video objects VOB (VTSTT_VOBS), i.e., the system stream of the multimedia bitstream.

- VTSTT_VOBS: VTS title video objects

- the VTS information VTSI is described first below, followed by the VTS title VOBS.

- the VTS information primarily includes the VTSI management table VTSI_MAT and VTSPGC information table VTS_PGCIT.

- the VTSI management table VTSI_MAT stores such information as the internal structure of the video title set VTS, the number of selectable audio streams contained in the video title set VTS, the number of sub-pictures, and the video title set VTS location (storage address).

- the VTSPGC information table VTS_PGCIT records i (where i is a natural number) program chain (PGC) data blocks VTS_PGCI #1 - VTS_PGCI #i for controlling the playback sequence.

- Each of the table entries VTS_PGCI #i is a data entry expressing the program chain, and comprises j (where j is a natural number) cell playback information blocks C_PBI #1 - C_PBI #j.

- Each cell playback information block C_PBI #j contains the playback sequence of the cell and playback control information.

- the program chain PGC is a conceptual structure describing the story of the title content, and therefore defines the structure of each title by describing the cell playback sequence. Note that these cells are described in detail below.
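The nesting of the VTS_PGCIT table, its VTS_PGCI entries, and their C_PBI blocks might be modeled roughly as follows. This is a sketch, not the on-disk layout: the class names and the `cell_id` field are hypothetical and serve only to show how a program chain yields a cell playback sequence.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CellPlaybackInfo:
    """One C_PBI block; cell_id is a hypothetical reference to the cell."""
    cell_id: int


@dataclass
class ProgramChainInfo:
    """One VTS_PGCI entry holding j cell playback information blocks."""
    cells: List[CellPlaybackInfo] = field(default_factory=list)

    def playback_sequence(self) -> List[int]:
        """Cells in the order in which the program chain plays them."""
        return [cell.cell_id for cell in self.cells]


@dataclass
class PgcInfoTable:
    """VTS_PGCIT: the table of i program chain entries."""
    chains: List[ProgramChainInfo] = field(default_factory=list)

    def get(self, number: int) -> ProgramChainInfo:
        """Return VTS_PGCI #number, using the 1-based numbering of the text."""
        if not 1 <= number <= len(self.chains):
            raise IndexError(f"no VTS_PGCI #{number}")
        return self.chains[number - 1]
```

For example, a table whose first chain lists cells 1, 2, and 3 returns that order from `get(1).playback_sequence()`.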

- the video title set information VTSI is stored to a buffer in the playback device when playback starts. If the user then presses a MENU button on a remote control device, for example, during playback, the playback device references the buffer to fetch the menu information and display the top menu #1. If the menus are hierarchical, the main menu stored as program chain information VTS_PGCI #1 may be displayed, for example, by pressing the MENU button, VTS_PGCI #2 - #9 may correspond to submenus accessed using the numeric keypad on the remote control, and VTS_PGCI #10 and higher may correspond to additional submenus further down the hierarchy. Alternatively, VTS_PGCI #1 may be the top menu displayed by pressing the MENU button, while VTS_PGCI #2 and higher may be voice guidance reproduced by pressing the corresponding numeric key.

- the menus themselves are expressed by the plural program chains defined in this table.

- the menus may be freely constructed in various ways, and shall not be limited to hierarchical or non-hierarchical menus or menus containing voice guidance.

- The video title set information VTSI is stored to a buffer in the playback device when playback starts; the playback device then references the cell playback sequence described by the program chain PGC and reproduces the system stream.

- The "cells" referenced here may be all or part of the system stream, and are used as access points during playback. Cells can therefore be used, for example, as the "chapters" into which a title may be divided.

- Each of the PGC information entries C_PBI #j contains both cell playback processing information and a cell information table.

- the cell playback processing information comprises the processing information needed to reproduce the cell, such as the presentation time and number of repetitions. More specifically, this information includes the cell block mode CBM, cell block type CBT, seamless playback flag SPF, interleaved allocation flag IAF, STC resetting flag STCDF, cell presentation time C_PBTM, seamless angle change flag SACF, first cell VOBU start address C_FVOBU_SA, and the last cell VOBU start address C_LVOBU_SA.

- seamless playback refers to the reproduction in a digital video disk system of multimedia data including video, audio, and sub-picture data without intermittent breaks in the data or information. Seamless playback is described in detail in another section below with reference to Fig. 23 and Fig. 24.

- the cell block mode CBM indicates whether plural cells constitute one functional block.

- the cell playback information of each cell in a functional block is arranged consecutively in the PGC information.

- The cell block mode CBM of the first cell playback information in this sequence contains a value identifying the first cell in the block.

- The cell block mode CBM of the last cell playback information in this sequence contains a value identifying the last cell in the block.

- the cell block mode CBM of each cell arrayed between these first and last cells contains a value indicating that the cell is a cell between these first and last cells in that block.
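How the cell block mode CBM marks the first, intermediate, and last cells of a functional block can be sketched as below. The enum and its numeric values are assumptions made for illustration; the text only states that distinct values identify these three positions.

```python
from enum import Enum
from typing import List


class CellBlockMode(Enum):
    # Numeric values are hypothetical; the text does not specify them.
    NOT_IN_BLOCK = 0
    FIRST = 1
    MIDDLE = 2
    LAST = 3


def assign_cbm(block_size: int) -> List[CellBlockMode]:
    """CBM values for the consecutive cell playback entries of one block."""
    if block_size < 1:
        raise ValueError("a block needs at least one cell")
    if block_size == 1:
        # A single cell does not form a functional block of plural cells.
        return [CellBlockMode.NOT_IN_BLOCK]
    middle = [CellBlockMode.MIDDLE] * (block_size - 2)
    return [CellBlockMode.FIRST] + middle + [CellBlockMode.LAST]
```

A three-angle block, for instance, would carry the sequence FIRST, MIDDLE, LAST across its three consecutive cell playback entries.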

- the cell block type CBT identifies the type of the block indicated by the cell block mode CBM. For example, when a multiple angle function is enabled, the cell information corresponding to each of the reproducible angles is programmed as one of the functional blocks mentioned above, and the type of these functional blocks is defined by a value identifying "angle" in the cell block type CBT for each cell in that block.

- the seamless playback flag SPF simply indicates whether the corresponding cell is to be linked and played back seamlessly with the cell or cell block reproduced immediately therebefore. To seamlessly reproduce a given cell with the preceding cell or cell block, the seamless playback flag SPF is set to 1 in the cell playback information for that cell; otherwise SPF is set to 0.

- the interleaved allocation flag IAF stores a value identifying whether the cell exists in a contiguous or interleaved block. If the cell is part of an interleaved block, the flag IAF is set to 1; otherwise it is set to 0.

- the STC resetting flag STCDF identifies whether the system time clock STC used for synchronization must be reset when the cell is played back; when resetting the system time clock STC is necessary, the STC resetting flag STCDF is set to 1.

- the seamless angle change flag SACF stores a value indicating whether a cell in a multi-angle period should be connected seamlessly at an angle change. If the angle change is seamless, the seamless angle change flag SACF is set to 1; otherwise it is set to 0.

- the cell presentation time C_PBTM expresses the cell presentation time with video frame precision.

- the first cell VOBU start address C_FVOBU_SA is the VOBU start address of the first cell in a block, and is also expressed as the distance from the logic sector of the first cell in the VTS title VOBS (VTSTT_VOBS) as measured by the number of sectors.

- the last cell VOBU start address C_LVOBU_SA is the VOBU start address of the last cell in the block.

- the value of this address is expressed as the distance from the logic sector of the first cell in the VTS title VOBS (VTSTT_VOBS) as measured by the number of sectors.
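Because both addresses are given as sector distances from the start of the VTS title VOBS, a player would have to add the location of VTSTT_VOBS to reach a position on the disk. The sketch below assumes a hypothetical `vobs_start_sector` parameter holding that location; only the 2048-byte sector size comes from the text.

```python
SECTOR_SIZE = 2048  # bytes per logic sector, as stated earlier in the text


def absolute_sector(vobs_start_sector: int, relative_sectors: int) -> int:
    """Logic sector number for an address such as C_FVOBU_SA or C_LVOBU_SA.

    vobs_start_sector: assumed logic sector at which VTSTT_VOBS begins.
    relative_sectors:  distance from that sector, counted in sectors.
    """
    if vobs_start_sector < 0 or relative_sectors < 0:
        raise ValueError("sector values must be non-negative")
    return vobs_start_sector + relative_sectors


def absolute_byte_offset(vobs_start_sector: int, relative_sectors: int) -> int:
    """Byte offset of the addressed VOBU from the start of the volume space."""
    return absolute_sector(vobs_start_sector, relative_sectors) * SECTOR_SIZE
```

With VTSTT_VOBS assumed to start at sector 1000, a relative address of 25 resolves to sector 1025.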

- The VTS title VOBS (VTSTT_VOBS), i.e., the multimedia system stream data, is described next.

- the system stream data VTSTT_VOBS comprises i (where i is a natural number) system streams SS, each of which is referred to as a "video object" (VOB).

- Each video object VOB #1 - VOB #i comprises at least one video data block interleaved with up to eight audio data blocks and up to 32 sub-picture data blocks.

- Each video object VOB comprises q (where q is a natural number) cells C#1 - C#q.

- Each cell C comprises r (where r is a natural number) video object units VOBU #1 - VOBU #r.

- Each video object unit VOBU comprises plural group_of_pictures GOP, and the audio and sub-pictures corresponding to the playback of said plural group_of_pictures GOP. Note that the group_of_pictures GOP corresponds to the video encoding refresh cycle.

- Each video object unit VOBU also starts with an NV pack, i.e., the control data for that VOBU.

- the internal structure of the video zone VZ (see Fig. 22), i.e., the system stream St35 encoded by the authoring encoder EC described with reference to Fig. 25, is described with reference to Fig. 17.

- the encoded video stream St15 shown in Fig. 17 is the compressed one-dimensional video data stream encoded by the video encoder 300.

- the encoded audio stream St19 is likewise the compressed one-dimensional audio data stream multiplexing the right and left stereo audio channels encoded by the audio encoder 700.

- the audio signal shall not be limited to a stereo signal, and may also be a multichannel surround-sound signal.

- The system stream (title editing unit VOB) St35 is a one-dimensional array of packs with a byte size corresponding to the logic sectors LS #n having a 2048-byte capacity as described using Fig. 21.

- a stream control pack is placed at the beginning of the title editing unit (VOB) St35, i.e., at the beginning of the video object unit VOBU. This stream control pack is called the "navigation pack NV", and records the data arrangement in the system stream and other control information.

- the encoded video stream St15 and the encoded audio stream St19 are packetized in byte units corresponding to the system stream packs. These packets are shown in Fig. 17 as packets V1, V2, V3, V4... and A1, A2, A3.... As shown in Fig. 17, these packets are interleaved in the appropriate sequence as system stream St35, thus forming a packet stream, with consideration given to the decoder buffer size and the time required by the decoder to expand the video and audio data packets. In the example shown in Fig. 17, the packet stream is interleaved in the sequence V1, V2, A1, V3, V4, A2....
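The packet order in this example can be reproduced with a simple fixed-ratio interleaver. This is a deliberate simplification: the function and its `video_per_audio` parameter are hypothetical, and an actual multiplexer would schedule packets from the decoder buffer size and decoding time rather than from a fixed ratio.

```python
from typing import List


def interleave(video: List[str], audio: List[str], video_per_audio: int = 2) -> List[str]:
    """Emit one audio packet after every `video_per_audio` video packets."""
    if video_per_audio < 1:
        raise ValueError("video_per_audio must be at least 1")
    stream: List[str] = []
    audio_iter = iter(audio)
    for count, packet in enumerate(video, start=1):
        stream.append(packet)
        if count % video_per_audio == 0:
            next_audio = next(audio_iter, None)
            if next_audio is not None:
                stream.append(next_audio)
    # Any audio packets left over are appended at the end of the stream.
    stream.extend(audio_iter)
    return stream
```

Called with `["V1", "V2", "V3", "V4"]` and `["A1", "A2"]`, the function returns the sequence V1, V2, A1, V3, V4, A2 given in the text.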

- Fig. 17 interleaves one video data unit with one audio data unit.

- Significantly increased recording/playback capacity, high speed recording/playback, and performance improvements in the signal processing LSI enable the DVD system to record plural audio data and plural sub-picture data (graphics data) to one video data unit in a single interleaved MPEG system stream, and thereby enable the user to select the specific audio data and sub-picture data to be reproduced during playback.

- the structure of the system stream used in this type of DVD system is shown in Fig. 18 and described below.

- the packetized encoded video stream St15 is shown in Fig. 18 as V1, V2, V3, V4, ... In this example, however, there is not just one encoded audio stream St19, but three encoded audio streams St19A, St19B, and St19C input as the source data. There are also two encoded sub-picture streams St17A and St17B input as the source data sub-picture streams. These six compressed data streams, St15, St19A, St19B, St19C, St17A and St17B, are interleaved to a single system stream St35.

- the video data is encoded according to the MPEG specification with the group_of_pictures GOP being the unit of compression.

- each group_of_pictures GOP contains 15 frames in the case of an NTSC signal, but the specific number of frames compressed to one GOP is variable.

- The stream management pack, which describes the management data containing, for example, the relationship between interleaved data, is also interleaved at the GOP unit interval. Because the group_of_pictures GOP unit is based on the video data, changing the number of video frames per GOP unit changes the interval of the stream management packs. This interval is expressed in terms of the presentation time on the digital video disk within a range from 0.4 sec. to 1.0 sec. referenced to the GOP unit. If the presentation time of contiguous plural GOP units is less than 1 sec., the management data packs for the video data of the plural GOP units are interleaved to a single stream.

- These management data packs are referred to as navigation packs NV in the digital video disk system.

- the data from one navigation pack NV to the packet immediately preceding the next navigation pack NV forms one video object unit VOBU.
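The rule that a video object unit VOBU runs from one navigation pack NV up to the pack immediately before the next one can be sketched as a simple grouping step. Packs are represented here as plain strings, with the label "NV" standing for a navigation pack; both are illustrative assumptions.

```python
from typing import List


def split_into_vobus(packs: List[str]) -> List[List[str]]:
    """Group a pack sequence into VOBUs, each starting with an NV pack."""
    vobus: List[List[str]] = []
    for pack in packs:
        if pack == "NV":
            # A navigation pack opens a new video object unit.
            vobus.append([pack])
        elif vobus:
            vobus[-1].append(pack)
        else:
            raise ValueError("the stream must begin with an NV pack")
    return vobus
```

For example, the sequence NV, V1, A1, NV, V2 splits into two units: NV, V1, A1 and NV, V2.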

- one contiguous playback unit that can be defined as one scene is called a video object VOB, and each video object VOB contains plural video object units VOBU.

- Data sets of plural video objects VOB form a VOB set (VOBS). Note that these data units were first used in the digital video disk.

- Each group_of_pictures GOP is normally a unit containing approximately 0.5 sec. of video data, which is equivalent to the presentation time required for 12 - 15 frames, and one navigation pack NV is generally interleaved with the number of data packets required for this presentation time.
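The figure of roughly 0.5 sec. can be checked with a short calculation. The frame rates used here are assumptions not stated in the text: 30000/1001 frames per second for NTSC, and 25 frames per second as one plausible rate for a 12-frame GOP. The 0.4 sec. to 1.0 sec. range is the navigation pack interval given earlier.

```python
from fractions import Fraction

NTSC_FPS = Fraction(30000, 1001)  # assumed NTSC frame rate (about 29.97 fps)
MIN_INTERVAL = 0.4                # sec., lower bound of the NV pack interval
MAX_INTERVAL = 1.0                # sec., upper bound of the NV pack interval


def presentation_time(frames: int, fps) -> float:
    """Presentation time in seconds of `frames` video frames at `fps`."""
    if frames < 0:
        raise ValueError("frame count must be non-negative")
    return float(Fraction(frames) / Fraction(fps))


def within_nv_interval(seconds: float) -> bool:
    """True if a presentation time lies in the 0.4 to 1.0 sec. range."""
    return MIN_INTERVAL <= seconds <= MAX_INTERVAL
```

Under these assumptions a 15-frame NTSC GOP lasts about 0.5005 sec. and a 12-frame GOP at 25 frames per second lasts 0.48 sec., so both fall inside the stated range.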

- The stream management information contained in the interleaved video, audio, and sub-picture data packets constituting the system stream is described below with reference to Fig. 19.

- the data contained in the system stream is recorded in a format packed or packetized according to the MPEG2 standard.

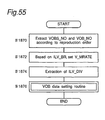

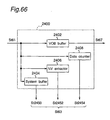

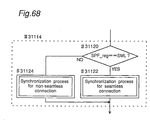

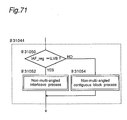

- the packet structure is essentially the same for video, audio, and sub-picture data.