WO2011064909A1 - Video information playback method and system, and video information content - Google Patents

Video information playback method and system, and video information content

- Publication number

- WO2011064909A1 (PCT/JP2010/002707)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- video information

- playback

- time

- content

- playback device

- Prior art date


Classifications

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N9/00—Details of colour television systems

- H04N9/79—Processing of colour television signals in connection with recording

- H04N9/80—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback

- H04N9/804—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components

- H04N9/8042—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components involving data reduction

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B20/00—Signal processing not specific to the method of recording or reproducing; Circuits therefor

- G11B20/10—Digital recording or reproducing

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B20/00—Signal processing not specific to the method of recording or reproducing; Circuits therefor

- G11B20/10—Digital recording or reproducing

- G11B20/10527—Audio or video recording; Data buffering arrangements

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/20—Servers specifically adapted for the distribution of content, e.g. VOD servers; Operations thereof

- H04N21/23—Processing of content or additional data; Elementary server operations; Server middleware

- H04N21/242—Synchronization processes, e.g. processing of PCR [Program Clock References]

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/40—Client devices specifically adapted for the reception of or interaction with content, e.g. set-top-box [STB]; Operations thereof

- H04N21/43—Processing of content or additional data, e.g. demultiplexing additional data from a digital video stream; Elementary client operations, e.g. monitoring of home network or synchronising decoder's clock; Client middleware

- H04N21/432—Content retrieval operation from a local storage medium, e.g. hard-disk

- H04N21/4325—Content retrieval operation from a local storage medium, e.g. hard-disk by playing back content from the storage medium

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/40—Client devices specifically adapted for the reception of or interaction with content, e.g. set-top-box [STB]; Operations thereof

- H04N21/43—Processing of content or additional data, e.g. demultiplexing additional data from a digital video stream; Elementary client operations, e.g. monitoring of home network or synchronising decoder's clock; Client middleware

- H04N21/44—Processing of video elementary streams, e.g. splicing a video clip retrieved from local storage with an incoming video stream or rendering scenes according to encoded video stream scene graphs

- H04N21/44016—Processing of video elementary streams, e.g. splicing a video clip retrieved from local storage with an incoming video stream or rendering scenes according to encoded video stream scene graphs involving splicing one content stream with another content stream, e.g. for substituting a video clip

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/60—Network structure or processes for video distribution between server and client or between remote clients; Control signalling between clients, server and network components; Transmission of management data between server and client, e.g. sending from server to client commands for recording incoming content stream; Communication details between server and client

- H04N21/63—Control signaling related to video distribution between client, server and network components; Network processes for video distribution between server and clients or between remote clients, e.g. transmitting basic layer and enhancement layers over different transmission paths, setting up a peer-to-peer communication via Internet between remote STB's; Communication protocols; Addressing

- H04N21/633—Control signals issued by server directed to the network components or client

- H04N21/6332—Control signals issued by server directed to the network components or client directed to client

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/80—Generation or processing of content or additional data by content creator independently of the distribution process; Content per se

- H04N21/85—Assembly of content; Generation of multimedia applications

- H04N21/854—Content authoring

- H04N21/8547—Content authoring involving timestamps for synchronizing content

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N5/00—Details of television systems

- H04N5/76—Television signal recording

- H04N5/91—Television signal processing therefor

- H04N5/92—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N5/00—Details of television systems

- H04N5/76—Television signal recording

- H04N5/91—Television signal processing therefor

- H04N5/93—Regeneration of the television signal or of selected parts thereof

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N9/00—Details of colour television systems

- H04N9/79—Processing of colour television signals in connection with recording

- H04N9/80—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback

-

- G—PHYSICS

- G09—EDUCATION; CRYPTOGRAPHY; DISPLAY; ADVERTISING; SEALS

- G09G—ARRANGEMENTS OR CIRCUITS FOR CONTROL OF INDICATING DEVICES USING STATIC MEANS TO PRESENT VARIABLE INFORMATION

- G09G2340/00—Aspects of display data processing

- G09G2340/02—Handling of images in compressed format, e.g. JPEG, MPEG

-

- G—PHYSICS

- G09—EDUCATION; CRYPTOGRAPHY; DISPLAY; ADVERTISING; SEALS

- G09G—ARRANGEMENTS OR CIRCUITS FOR CONTROL OF INDICATING DEVICES USING STATIC MEANS TO PRESENT VARIABLE INFORMATION

- G09G2370/00—Aspects of data communication

- G09G2370/10—Use of a protocol of communication by packets in interfaces along the display data pipeline

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B20/00—Signal processing not specific to the method of recording or reproducing; Circuits therefor

- G11B20/10—Digital recording or reproducing

- G11B20/10527—Audio or video recording; Data buffering arrangements

- G11B2020/1062—Data buffering arrangements, e.g. recording or playback buffers

- G11B2020/1075—Data buffering arrangements, e.g. recording or playback buffers the usage of the buffer being restricted to a specific kind of data

- G11B2020/10759—Data buffering arrangements, e.g. recording or playback buffers the usage of the buffer being restricted to a specific kind of data content data

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B20/00—Signal processing not specific to the method of recording or reproducing; Circuits therefor

- G11B20/10—Digital recording or reproducing

- G11B20/10527—Audio or video recording; Data buffering arrangements

- G11B2020/1062—Data buffering arrangements, e.g. recording or playback buffers

- G11B2020/10805—Data buffering arrangements, e.g. recording or playback buffers involving specific measures to prevent a buffer overflow

-

- G—PHYSICS

- G11—INFORMATION STORAGE

- G11B—INFORMATION STORAGE BASED ON RELATIVE MOVEMENT BETWEEN RECORD CARRIER AND TRANSDUCER

- G11B20/00—Signal processing not specific to the method of recording or reproducing; Circuits therefor

- G11B20/10—Digital recording or reproducing

- G11B20/10527—Audio or video recording; Data buffering arrangements

- G11B2020/1062—Data buffering arrangements, e.g. recording or playback buffers

- G11B2020/10814—Data buffering arrangements, e.g. recording or playback buffers involving specific measures to prevent a buffer underrun

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/20—Servers specifically adapted for the distribution of content, e.g. VOD servers; Operations thereof

- H04N21/23—Processing of content or additional data; Elementary server operations; Server middleware

- H04N21/24—Monitoring of processes or resources, e.g. monitoring of server load, available bandwidth, upstream requests

- H04N21/2401—Monitoring of the client buffer

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/40—Client devices specifically adapted for the reception of or interaction with content, e.g. set-top-box [STB]; Operations thereof

- H04N21/43—Processing of content or additional data, e.g. demultiplexing additional data from a digital video stream; Elementary client operations, e.g. monitoring of home network or synchronising decoder's clock; Client middleware

- H04N21/4302—Content synchronisation processes, e.g. decoder synchronisation

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/60—Network structure or processes for video distribution between server and client or between remote clients; Control signalling between clients, server and network components; Transmission of management data between server and client, e.g. sending from server to client commands for recording incoming content stream; Communication details between server and client

- H04N21/63—Control signaling related to video distribution between client, server and network components; Network processes for video distribution between server and clients or between remote clients, e.g. transmitting basic layer and enhancement layers over different transmission paths, setting up a peer-to-peer communication via Internet between remote STB's; Communication protocols; Addressing

- H04N21/64—Addressing

- H04N21/6405—Multicasting

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N9/00—Details of colour television systems

- H04N9/79—Processing of colour television signals in connection with recording

- H04N9/80—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback

- H04N9/804—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components

- H04N9/806—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components with processing of the sound signal

- H04N9/8063—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback involving pulse code modulation of the colour picture signal components with processing of the sound signal using time division multiplex of the PCM audio and PCM video signals

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N9/00—Details of colour television systems

- H04N9/79—Processing of colour television signals in connection with recording

- H04N9/80—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback

- H04N9/82—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback the individual colour picture signal components being recorded simultaneously only

- H04N9/8205—Transformation of the television signal for recording, e.g. modulation, frequency changing; Inverse transformation for playback the individual colour picture signal components being recorded simultaneously only involving the multiplexing of an additional signal and the colour video signal

Definitions

- the present invention relates to a video information playback method and a video information playback system for playing back encoded data distributed via a network and encoded data read from a storage medium.

- the invention also relates to content distributed over a network or read from a storage medium.

- Devices that play back MPEG data from storage media, such as DVD and Blu-ray players, read data from the storage medium so that the decoder buffer does not underflow or overflow, and therefore do not need to correct the time of the system clock using PCR. As a result, when data read from such storage media is sent to a plurality of playback devices and displayed on them, the synchronization may drift after several hours because of variations in the system clocks.

- The present invention has been made to solve the above problem. Its purpose is to keep playback synchronized between the playback devices when data is read from a storage medium and played back on a plurality of video information playback devices in a situation where the system clock cannot be corrected using PCR.

- In the video information playback method of the present invention, a plurality of video information playback devices receive, from a content server connected via a network or from storage media, content that includes time stamp information and is composed of a sequence containing at least intra-frame encoded image information and inter-frame forward prediction encoded image information, and each video information playback device decodes and plays back the content. The method comprises a detection step and an adjustment step.

- In the detection step, a video information playback device serving as the reference for playback time is determined from among the plurality of video information playback devices, and the error in playback time between each video information playback device and the reference video information playback device is detected.

- In the adjustment step, the number of frames to be played back is adjusted. If a device is delayed relative to the reference video information playback device, the number of frames of encoded content corresponding to the delay time is subtracted from the number of frames played back by the reference video information playback device. If it is ahead, the number of frames corresponding to the advance time is added to the number of frames played back by the reference video information playback device.

- the reproduction time can be synchronized with high accuracy among a plurality of video information reproduction devices.
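The adjustment rule summarized above can be illustrated with a minimal Python sketch. The function name `frames_to_play`, the sign convention for `offset_seconds`, and the rounding to whole frames are assumptions made for illustration; the patent text only states that frames are subtracted for a delayed device and added for an advanced one.

```python
def frames_to_play(reference_frames: int, offset_seconds: float, fps: float) -> int:
    """Return how many adjustment frames a device should play.

    offset_seconds > 0 means the device is delayed relative to the
    reference device; offset_seconds < 0 means it is ahead.
    (Hypothetical convention, not taken from the patent.)
    """
    # Convert the detected time error into a whole number of frames.
    adjustment = round(abs(offset_seconds) * fps)
    if offset_seconds > 0:
        # Delayed device: play fewer frames than the reference device.
        return max(reference_frames - adjustment, 0)
    # Advanced (or perfectly aligned) device: play more (or the same) frames.
    return reference_frames + adjustment


if __name__ == "__main__":
    # Reference device plays 5 adjustment frames at 30 fps.
    print(frames_to_play(5, 0.1, 30))   # device 0.1 s behind
    print(frames_to_play(5, -0.1, 30))  # device 0.1 s ahead
```

A delayed device therefore finishes the content earlier and an advanced device later, which pulls both back toward the reference device.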

- FIG. 1 It is a block diagram which shows roughly the structural example of the video information reproducing

- FIG. 3 is a diagram illustrating an example of the transition of the remaining amount of the buffer memory of the playback device whose playback time is most advanced and of the playback device whose playback time is most delayed in the video information playback system of FIG. 2.

- A diagram showing an example of the structure of a PES packet of MPEG data according to Embodiment 1 of the present invention.

- A diagram showing an example of the structure of a packet of a TS stream according to Embodiment 1 of the invention.

- A diagram showing an example of the syntax of the packet for synchronization adjustment of FIG.

- A flowchart showing the video information reproduction

- FIG. 1 is a block diagram schematically showing a configuration example of a video information playback apparatus used for carrying out the video information playback method according to Embodiment 1 of the present invention.

- the illustrated video information reproducing apparatus is an apparatus that reproduces content distributed from a content server via a network.

- FIG. 2 is a block diagram schematically showing the entire video information playback system including the video information playback device and content server of FIG.

- Data is distributed from the content server 41 to each video information reproducing device 42 via a network 43 constituted by, for example, Ethernet (registered trademark).

- the following description is based on the assumption that data is distributed on the network 43 by broadcast distribution using UDP (User Datagram Protocol).

- the video information reproducing device 42 shown in FIG. 2 is configured as shown in FIG. 1, for example.

- the video information playback apparatus shown in FIG. 1 includes a playback unit 10 as a playback unit for data distributed from the content server 41 and a CPU 21 as a playback control unit for controlling the entire apparatus.

- The playback unit 10 has a data reception unit 11 that receives the data distributed from the content server 41, a buffer memory 12 that temporarily stores the data received by the data reception unit 11, a demultiplexer 13 that separates the data read from the buffer memory 12 into video information and audio information, a video decoder 14 that decodes the video information, and an audio decoder 15 that decodes the audio information.

- the video signal output from the video decoder 14 is sent to the external display device 31, and the video is displayed by the external display device 31.

- the audio signal output from the audio decoder 15 is sent to an audio output device (not shown), and audio is output.

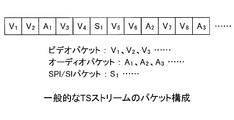

- the data distributed to the data receiving unit 11 is assumed to be a TS (Transport Stream).

- The video information and the audio information are divided into PES (Packetized Elementary Stream) packets and further divided into TS packets, and the video information and the audio information are distributed in multiplexed form.

- A PES packet is formed by adding PES header information to an ES (Elementary Stream) encoded with MPEG-2, H.264, or the like.

- the PES packet is packetized in units of time management for reproduction, and for example, one image frame (picture) is regarded as one PES packet in the video information.

- the header information of the PES packet includes a time stamp, for example, a PTS (Presentation Time Stamp) which is time information for reproduction.

- the TS packet has a fixed length (188 bytes), and a unique PID (Packet ID) is assigned to each data type in the header portion of each TS packet. With this PID, it is possible to identify whether it is video information, audio information, or system information (reproduction control information or the like).

- the demultiplexer 13 reads the PID, identifies whether it is video information or audio information, and distributes the data to each decoder.
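As a rough illustration of the PID-based routing described above, the following Python sketch extracts the 13-bit PID from a 188-byte TS packet header and classifies the packet. The sync byte 0x47 and the PID bit layout follow the MPEG-2 transport stream format; the function names and the PID values 0x100 and 0x101 are hypothetical, and treating every other PID as system information is a simplification.

```python
TS_PACKET_SIZE = 188  # fixed TS packet length in bytes
SYNC_BYTE = 0x47      # first byte of every MPEG-2 TS packet


def ts_pid(packet: bytes) -> int:
    """Extract the 13-bit PID from the header of one TS packet."""
    if len(packet) != TS_PACKET_SIZE or packet[0] != SYNC_BYTE:
        raise ValueError("not a valid TS packet")
    # The PID is the low 5 bits of byte 1 followed by all 8 bits of byte 2.
    return ((packet[1] & 0x1F) << 8) | packet[2]


def route(packet: bytes, video_pid: int, audio_pid: int) -> str:
    """Decide which consumer a packet goes to (simplified demultiplexer)."""
    pid = ts_pid(packet)
    if pid == video_pid:
        return "video"
    if pid == audio_pid:
        return "audio"
    return "system"  # simplification: anything else is treated as system info


if __name__ == "__main__":
    # Hypothetical packet carrying PID 0x100, padded to 188 bytes.
    pkt = bytes([0x47, 0x01, 0x00, 0x10]) + bytes(184)
    print(hex(ts_pid(pkt)), route(pkt, video_pid=0x100, audio_pid=0x101))
```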

- Any format other than TS may be used, as long as the data format allows video information to be distinguished from audio information. Further, when there is no audio information and only video information is distributed, the data may be left as PES (Packetized Elementary Stream) without being divided into TS packets. In this case, the demultiplexer 13 and the audio decoder 15 of the playback unit 10 are not necessary.

- The CPU 21 operates as reception data control means that constantly monitors the capacity of the buffer memory 12 and performs control so that the buffer memory 12 does not underflow or overflow.

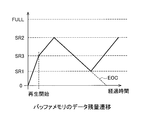

- FIG. 3 is a diagram showing the transition of the capacity of the buffer memory 12.

- The CPU 21 constantly monitors the capacity of the buffer memory 12 and sequentially notifies the content server 41 of the remaining amount of the buffer memory 12.

- When the playback device performs playback while the content server 41 is not transmitting data and, as a result, the remaining amount of data in the buffer memory 12 decreases to the lower limit (first predetermined threshold) SR1, the content server 41 starts (restarts) transmission of data to the playback device 42. When the remaining amount of the buffer memory 12 reaches the upper limit (second predetermined threshold) SR2, the content server 41 stops transmitting data to the playback device 42.

- When the remaining amount again decreases to the lower limit SR1, the content server 41 resumes data transmission.

- In this way, the transmission of data is controlled so that the remaining amount of data in the buffer memory 12 stays between the upper limit SR2 and the lower limit SR1.
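The start/stop behaviour between SR1 and SR2 is a hysteresis control, which might be sketched as follows. The function name `server_should_send` and the sample buffer levels are assumptions for illustration only.

```python
def server_should_send(remaining: float, sending: bool, sr1: float, sr2: float) -> bool:
    """Hysteresis rule: start at or below SR1, stop at or above SR2.

    Between the two thresholds the server keeps doing whatever it was
    already doing.
    """
    if remaining <= sr1:
        return True   # buffer has drained to the lower limit: (re)start sending
    if remaining >= sr2:
        return False  # buffer has filled to the upper limit: stop sending
    return sending    # in between: keep the current state


if __name__ == "__main__":
    # Hypothetical buffer levels in Mbit, with SR1 = 3 and SR2 = 6.
    sending = False
    for level in [5.0, 4.0, 3.0, 4.5, 6.0, 5.0]:
        sending = server_should_send(level, sending, sr1=3.0, sr2=6.0)
        print(level, "sending" if sending else "stopped")
```

Keeping the previous state between the thresholds avoids rapid toggling of the server when the buffer level hovers near a single threshold.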

- The CPU 21 also monitors the playback time of the video decoder 14 in its own playback device 42 and notifies the content server 41 of that time. It further operates as means for increasing or decreasing the size of the data transferred from the content server 41 to the buffer memory 12 according to the difference between the reference playback time notified by the content server 41 and the playback time of the video decoder 14 in the same playback device 42.

- The content server 41 notifies each playback device 42 of its deviation from the playback time of the reference playback device 42. If the playback time of a playback device 42 is ahead of that of the reference playback device 42, the CPU 21 increases the amount of data, out of the data received by the data receiving unit 11, that is stored in the buffer memory 12. If the playback time is behind that of the reference playback device 42, the CPU 21 reduces the amount of data stored in the buffer memory 12. The control of the amount of data stored in the buffer memory 12 is described in detail later.

- FIG. 4 is a diagram showing a data structure of TS data distributed from the content server 41.

- Video information and audio information are divided into 188-byte packets (TS packets). Video packets storing video data, audio packets storing audio data, and PSI/SI packets storing system information such as PAT/PMT are multiplexed so that video and audio playback is not interrupted.

- When a playback start request is issued, the content server 41 starts broadcasting TS data to each playback device 42.

- This playback start request may be made by sending a signal from any of the playback devices, or may be given by a separate control device not shown.

- Each reproduction device 42 receives the distributed TS data by the data receiving unit 11 and starts accumulation in the buffer memory 12.

- The content server 41 arbitrarily selects one of the playback devices 42 as the representative device and sequentially monitors the remaining amount of data in the buffer memory 12 of that representative playback device 42.

- When the content server 41 detects that the remaining amount of the buffer memory 12 exceeds the third predetermined threshold (initial playback start value) SR3, which is larger than the lower limit SR1 and smaller than the upper limit SR2 in the figure, the playback devices 42 are instructed to start playback (start of display and output on the playback device).

- Each packet of TS data in the buffer memory 12 is sent to the demultiplexer 13 and separated into video information, audio information, and PSI/SI information according to the PID of the packet.

- the separated video information is sent to the video decoder 14, and the decoded video signal is output to the external display device 31.

- the separated audio information is sent to the audio decoder 15, and the decoded audio signal is sent to an audio output device (not shown) to output audio.

- The CPU 21 sequentially notifies the content server 41 of the remaining amount of the buffer memory 12, and when the content server 41 detects that the remaining amount of the buffer memory 12 has reached the upper limit SR2, the content server 41 stops data transfer.

- The remaining amount of the buffer memory 12 of each playback device 42 gradually decreases as data is consumed by the decoder.

- When the content server 41 detects that the remaining amount of the buffer memory 12 of the representative playback device 42 has reached the lower limit SR1, the content server 41 resumes data distribution.

- In this way, playback continues without the buffer memory 12 underflowing or overflowing and without the audio or video being interrupted.

- When the remaining amount of the buffer memory 12 has reached the lower limit SR1 but there is no more data to be transmitted, the remaining amount decreases further, as shown by the dotted line EOC in the figure, and finally reaches zero.

- The threshold values SR1, SR2, and SR3 should be set to optimum values depending on the capacity of the buffer memory 12, the bit rate to be played back, and so on, but since this is not directly related to the present application, it is not discussed here.

- Each PES is played back when the time specified by the PTS included in the header information of the PES (a time relative to the moment the head of the content is played back) matches the time generated from the crystal oscillator in the playback device 42.

- That is, the image of each PES is output at the time specified by the PTS included in its header information (the relative time from the start of content playback), either by decoding while checking the time or by adjusting the time (delaying) after decoding.

- The difference in playback time mentioned above means the difference between the time, measured by the clock circuit 22 in one playback device, at which a PES packet containing a certain PTS is played back on that playback device and the time, measured by the clock circuit 22 in another playback device, at which the PES packet containing the same PTS is played back on that other playback device.

- The error in the frequency of the crystal oscillator causes the playback times of the playback devices 42 to drift apart over time. For example, if the accuracy of the crystal oscillator is ±20 ppm, an error of 40 ppm arises between the playback device 42 whose display is most advanced and the playback device 42 whose display is most delayed, and if playback continues for 24 hours, a time lag of about 3 seconds may occur.
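The drift figure quoted above can be checked with a short calculation. This is only a worked example; the function name `worst_case_drift_seconds` is hypothetical, and it assumes one device runs at the fast extreme and another at the slow extreme of the stated accuracy.

```python
def worst_case_drift_seconds(accuracy_ppm: float, hours: float) -> float:
    """Worst-case time lag between the fastest and slowest device.

    One oscillator at +accuracy_ppm and another at -accuracy_ppm differ
    by 2 * accuracy_ppm in relative frequency.
    """
    relative_error = 2 * accuracy_ppm * 1e-6
    return relative_error * hours * 3600


if __name__ == "__main__":
    # +/-20 ppm over 24 hours: 40e-6 * 86400 s, i.e. roughly 3.5 s.
    print(round(worst_case_drift_seconds(20, 24), 3))
```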

- The content server 41 controls data distribution while monitoring the remaining amount of data in the buffer memory 12 of one playback device 42 chosen as the representative from among all the playback devices 42.

- If a playback device 42 with a large error is used as the representative, there is a high possibility that an underflow or overflow will occur in the buffer memory 12 of another playback device 42.

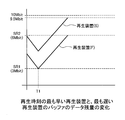

- FIG. 5 shows the transition of the remaining buffer amount of the playback device (F) whose time advances fastest and of the playback device (S) whose time advances slowest, when the video playback device 42 whose time advances fastest is selected as the representative, 20 hours after the start of playback.

- Data delivery from the content server 41 is resumed at time T1, when the remaining amount of the fastest playback device (F) reaches the lower limit SR1, but at this time more data remains in the slowest playback device (S).

- Suppose, for example, that the video encoding bit rate is 10 Mbps. Because of the time lag described above, 3.5 Mbit more data remains in the playback device (S) than in the playback device (F). If the capacity of the buffer memory 12 is 10 Mbit, the lower limit SR1 is 3 Mbit, and the upper limit SR2 is 6 Mbit, then when the remaining amount of the playback device (F) reaches 6 Mbit, the remaining amount of the playback device (S) is 9.5 Mbit, which is approaching the limit of the buffer memory capacity.
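The buffer arithmetic in this example can be written out as a small check. The numbers are the ones given in the text; the function name `slow_device_headroom` is an assumption for illustration.

```python
def slow_device_headroom(capacity: float, sr2: float, surplus: float) -> float:
    """Free space left in the slow device (S) when the fast device (F) hits SR2.

    All values are in Mbit. The slow device holds `surplus` more data than
    the fast, representative device at the same moment.
    """
    slow_level = sr2 + surplus    # level of device (S) when (F) reaches SR2
    return capacity - slow_level  # remaining free space before overflow


if __name__ == "__main__":
    # 10 Mbit buffer, SR2 = 6 Mbit, 3.5 Mbit surplus in device (S).
    print(slow_device_headroom(capacity=10.0, sr2=6.0, surplus=3.5))
```

With the figures from the text only 0.5 Mbit of free space remains, which is why a slightly larger surplus would overflow the buffer of device (S).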

- FIG. 6 is a diagram showing an example of an array of PES packets in the improved video information for carrying out the video information reproducing method according to the embodiment of the present invention.

- Among the PES packets, those indicated by the symbol “I” consist of an I picture, those indicated by “P” consist of a P picture, and those indicated by “B” consist of a B picture. The subscripts “1”, “2”, “3”, etc. attached to the symbols “I”, “P”, “B” distinguish the individual I, P, and B pictures from one another and also indicate the playback order.

- An I picture is image information that is intra-frame encoded (intra-frame encoded image information) and can be decoded independently.

- A P picture is image information that has been forward motion-compensated predictive encoded with reference to a previous picture (an I picture or another P picture) (inter-frame forward prediction encoded image information). A B picture is image information that has been bidirectionally motion-compensated predictive encoded with reference to a previous picture and a subsequent picture (an I picture, a P picture, or another B picture) (inter-frame bidirectional prediction encoded image information).

- If the TS data playback time is 60 sec and the frame rate is 30 fps, the main part of the TS data consists of 1800 PES packets. For playback synchronization adjustment, 10 frames of PES packets are added to the main part (the part included in the TS data even when the synchronization adjustment of the present invention is not performed), so that the TS data consists of a total of 1810 PES packets.

- The added 10 frames of video are encoded so as to repeatedly display the same video as the final frame of the 60-second main part.

- The frames (group) for synchronization adjustment consist of one frame of I picture data made up of the same video data as the last frame, followed by nine frames of P picture data for continuing to display the same video.
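The frame counts and the layout of the adjustment group described above can be sketched as follows. The picture types are represented as plain strings purely for illustration; the names are hypothetical.

```python
FRAME_RATE = 30        # fps
MAIN_SECONDS = 60      # playback time of the main part
ADJUST_FRAMES = 10     # frames appended for synchronization adjustment


def adjustment_group(n: int = ADJUST_FRAMES) -> list:
    """One I picture repeating the last frame, followed by P pictures only."""
    return ["I"] + ["P"] * (n - 1)


main_frames = FRAME_RATE * MAIN_SECONDS
total_frames = main_frames + len(adjustment_group())
print(main_frames, total_frames)   # 1800 1810
print(adjustment_group())
```

Because the group contains no B pictures, cutting it after any frame leaves a prefix that can be decoded and displayed as is.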

- The frames for synchronization adjustment may be held in the content server 41 in a pre-authored form, or may be added by the content server 41 at distribution time using MPEG dummy pictures.

- The first of the added PES packets is an I picture (in the case of H.264 it is an IDR picture, but this is also intra-frame encoded image information), and the subsequent PES packets contain no B pictures and consist of P pictures or I pictures (IDR pictures). Since no B pictures are included, the display order of the 10 frames added for synchronization adjustment is the same as their data arrangement order.

- PES packets are arranged in the order of “I 1 ”, “P 1 ”, “B 1 ”, “B 2 ” in the head portion of the TS data in FIG.

- the display order is “I 1 ”, “B 1 ”, “B 2 ”, “P 1 ”, and the arrangement order is different from the display order.

- FIG. 7 is a diagram showing an arrangement of TS packets of TS data improved in order to implement the video information reproducing method according to the embodiment.

- The difference from the TS data in FIG. 4 is that adjustment information packets are superimposed. These packets describe adjustment packet information indicating, for each of the added 10 frames of PES packets, the packet number, counted from the beginning of the TS data, of the TS packet in which that PES packet begins.

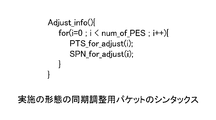

- FIG. 8 is a diagram illustrating the syntax of the synchronization adjustment packet.

- “PTS_for_adjust(i)” indicates the leading PTS value of each PES packet that can be used for synchronization adjustment.

- “SPN_for_adjust(i)” is a value indicating the packet number, counted from the beginning of the TS data, of the TS packet that contains the beginning of the PES packet indicated by “PTS_for_adjust(i)”.
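The fields of the synchronization adjustment packet can be modeled as a small data structure. This is a minimal sketch: the class and method names are hypothetical, the SPN values in the example are made up, and the PTS values assume the standard 90 kHz MPEG clock with a stream starting at PTS 0.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AdjustInfo:
    pts_for_adjust: List[int]   # leading PTS of each adjustment PES packet
    spn_for_adjust: List[int]   # TS packet number where each PES packet begins

    @property
    def num_of_pes(self) -> int:
        """Total number of PES packets (frames) available for adjustment."""
        return len(self.pts_for_adjust)

    def first_discarded_spn(self, frames_to_keep: int) -> Optional[int]:
        """TS packet number from which data is discarded, or None to keep all."""
        if not 0 <= frames_to_keep <= self.num_of_pes:
            raise ValueError("frames_to_keep out of range")
        if frames_to_keep == self.num_of_pes:
            return None
        return self.spn_for_adjust[frames_to_keep]


# Hypothetical example: 10 adjustment frames after 1800 main frames at 30 fps.
info = AdjustInfo(
    pts_for_adjust=[(1800 + i) * 3000 for i in range(10)],
    spn_for_adjust=[50000 + 20 * i for i in range(10)],
)
print(info.num_of_pes, info.first_discarded_spn(3))  # 10 50060
```

Keeping `n` frames then amounts to discarding every TS packet from `SPN_for_adjust(n)` onward, which matches the way the text uses `SPN_for_adjust(0)`, `(3)`, `(5)`, and `(8)`.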

- In this embodiment, the adjustment packet information is superimposed on the TS packets and sent to the playback device 42. However, it need not be superimposed on the TS packets; it may instead be carried in a proprietary command and sent to the playback device 42.

- The content server 41 holds a plurality of TS data configured as shown in FIG. 7 and distributes them continuously to each video information playback device 42.

- Distribution of the TS data from the content server 41 is started in the order instructed in advance.

- During normal playback, the CPU 21 uses the adjustment information packet data contained in the TS data to watch for the arrival of the TS packets that contain the added 10 frames of PES packets. TS packets from SPN_for_adjust(0) onward are discarded and are not stored in the buffer memory 12.

- FIG. 9 is a diagram showing a processing sequence for synchronizing reproduction between the video information reproducing apparatuses 42. Steps ST5 and ST6 indicate processing in the content server 41, and other steps indicate processing in the playback device 42.

- Every time a predetermined time elapses, for example about every several tens of minutes (ST2), the content server 41 queries each playback device 42 for its current playback time (ST3).

- In response to the playback time query from the content server 41, each playback device 42 notifies the content server 41 of its current playback time (ST4).

- The content server 41 aggregates the notified times and determines whether the playback times need to be adjusted (ST5). To do so, it determines whether the difference between the most advanced playback time and the most delayed playback time is greater than or equal to a predetermined value, for example one frame.

- If the difference in playback times is one frame or more and adjustment is judged to be necessary, the playback device 42 whose playback time is the average value among the playback times is set as the reference playback device 42, and its playback time is notified to all playback devices 42 as the reference time (ST6).
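The server-side decision in steps ST5 and ST6 can be sketched as below. This is an illustration under stated assumptions, not the patent's implementation: the function names are hypothetical, playback times are given in milliseconds, and "the average value" is interpreted as the device whose time is closest to the mean.

```python
FRAME_MS = 1000 / 30   # one frame period at 30 fps


def needs_adjustment(times_ms: dict, threshold_ms: float = FRAME_MS) -> bool:
    """ST5: is the spread between fastest and slowest device >= one frame?"""
    return max(times_ms.values()) - min(times_ms.values()) >= threshold_ms


def pick_reference(times_ms: dict) -> tuple:
    """ST6: choose the device whose playback time is closest to the mean."""
    mean = sum(times_ms.values()) / len(times_ms)
    ref = min(times_ms, key=lambda dev: abs(times_ms[dev] - mean))
    return ref, times_ms[ref]


# Hypothetical playback times reported in ST4
reported = {"A": 120000, "B": 120060, "C": 119940, "D": 120010}
if needs_adjustment(reported):
    print(pick_reference(reported))  # ('A', 120000)
```

The reference time returned here is what the server would broadcast to every playback device.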

- Each playback device 42 that has received the reference time recognizes, from the difference between the playback time it reported earlier and the reference time notified by the content server 41, whether it is playing back behind or ahead of the reference playback device 42, and adjusts its playback time (ST7).

- Of the 10 frames added for synchronization adjustment, the reference playback device 42 plays back up to the fifth frame, and the CPU 21 discards the data from SPN_for_adjust(5) onward. Therefore, the total playback time of the TS data currently being played back is the main part + 5 frames, and the final frame of the main part is displayed continuously for the last six frames. After the first five added frames have been played back, playback continues with the TS data of the next content.

- FIG. 10 is a diagram showing in detail the adjustment sequence in step ST7 of FIG. First, it is determined whether or not playback is delayed by one frame or more from the playback time (reference time) of the playback device 42 as a reference (ST8).

- A playback device 42 that has determined that it is delayed by one frame or more from the reference time calculates the delay time from the reference time and its own playback time, works out the number of frames corresponding to the delay time, and calculates the data size to be stored in the buffer memory 12 by subtracting that number of frames from 5 frames. For example, when the delay time is 60 msec (2 frames), only the first 3 of the 10 frames added to the end of the TS data are stored in the buffer memory 12, and the data from SPN_for_adjust(3) onward is discarded (ST9).

- As a result, the total playback time of the TS data currently being played back is the main part + 3 frames, and the final frame of the main part is displayed continuously for the last four frames. After the first three added frames have been played back, playback continues with the TS data of the next content.

- In step ST10, it is determined whether playback is advanced by one frame or more relative to the playback time of the reference playback device 42.

- A playback device 42 that has determined that it is advanced by one frame or more calculates the advance time from the reference time and its own playback time, works out the number of frames corresponding to the advance time, and calculates the data size to be stored in the buffer memory 12 by adding that number of frames to 5 frames (ST11). For example, when the advance time is 90 msec (3 frames), only the first 8 of the 10 frames added to the end of the TS data are stored in the buffer memory 12, and the data from SPN_for_adjust(8) onward is discarded.

- As a result, the total playback time of the TS data currently being played back is the main part + 8 frames, and the final frame of the main part is displayed continuously for the last nine frames. After the first eight added frames have been played back, playback continues with the TS data of the next content.

- A playback device 42 that has determined in step ST10 that its advance time is within one frame plays back up to the fifth of the 10 frames added to the end of the TS data and discards the data from SPN_for_adjust(5) onward (ST12). Therefore, the total playback time of the TS data currently being played back is the main part + 5 frames, and the final frame of the main part is displayed continuously for the last six frames. After the first five added frames have been played back, playback continues with the TS data of the next content.
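The per-device adjustment in steps ST8 to ST12 reduces to a small calculation. The sketch below is illustrative only: the function name is hypothetical, times are in milliseconds, the frame count is obtained by rounding to the nearest frame, and clamping the result to the 0 to 10 range is an added assumption that the text does not state.

```python
FRAME_MS = 1000 / 30   # one frame period at 30 fps
BASE_FRAMES = 5        # adjustment frames played by the reference device
ADDED_FRAMES = 10      # adjustment frames appended to the TS data


def frames_to_keep(own_ms: float, ref_ms: float) -> int:
    """Number of adjustment frames this device stores and plays back."""
    diff = own_ms - ref_ms            # > 0: ahead of reference, < 0: behind
    if abs(diff) < FRAME_MS:          # within one frame: same as reference (ST12)
        return BASE_FRAMES
    n = round(abs(diff) / FRAME_MS)   # frames corresponding to the offset
    keep = BASE_FRAMES + n if diff > 0 else BASE_FRAMES - n   # ST11 / ST9
    return max(0, min(ADDED_FRAMES, keep))   # assumption: clamp to available frames


print(frames_to_keep(940, 1000))    # 60 msec behind -> 3
print(frames_to_keep(1090, 1000))   # 90 msec ahead  -> 8
print(frames_to_keep(1010, 1000))   # within a frame -> 5
```

The returned count `n` is then used as the index into the adjustment table: data from `SPN_for_adjust(n)` onward is discarded.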

- As a result, in a playback device 42 that is delayed from the reference time, the total playback time of the content currently being played back is shorter than that of the reference playback device 42 by two frames.

- In a playback device 42 whose playback time is advanced, the total playback time of the content currently being played back is longer than that of the reference playback device 42 by two frames.

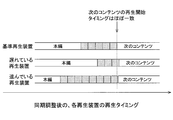

- FIG. 11 is a diagram showing the transition of the playback time of each playback device 42 before and after content switching.

- A playback device 42 that is delayed with respect to the reference time makes the total playback time of the TS data currently being played back shorter than that of the reference playback device 42 by the amount of its delay.

- A playback device 42 that is advanced makes the total playback time of the TS data currently being played back longer than that of the reference playback device 42 by the amount of its advance. In this way, the start timing of playback of the TS data of the next content can be brought to a time difference of one frame or less.

- FIG. 12 is a diagram showing a general arrangement of MPEG frames.

- The content server 41 distributes data in the order “I1”, “P1”, “B1”, “B2”, ..., but the display order is “I1”, “B1”, “B2”, “P1”, ...

- The data for four frames, “I1”, “P1”, “B1”, “B2”, needs to be input to the buffer memory 12. Since the decoder 14 then reproduces the four frames “I1”, “B1”, “B2”, and “P1”, the number of frames displayed exceeds the number originally intended by two frames.

- FIG. 13 shows a frame arrangement devised so that synchronization can be achieved with an accuracy of one frame.

- The difference from FIG. 12 is that encoding is performed without using B frames, so the frame arrangement order matches the playback order. For this reason, if only three frames are to be played back, it suffices to store the data for the three frames “I1”, “P1”, and “P2” in the buffer memory and discard the subsequent frames.

- Synchronization can thus be adjusted with frame accuracy.
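The effect of B frames on truncation can be illustrated by comparing the first `k` frames in data order with the first `k` frames in display order. This is a simplified model with a hypothetical helper; frames are just labels.

```python
def prefix_matches_display(data_order: list, display_order: list, k: int) -> bool:
    """True if the first k delivered frames are exactly the first k displayed."""
    return set(data_order[:k]) == set(display_order[:k])


# With B frames (FIG. 12): delivery order differs from display order.
with_b_data = ["I1", "P1", "B1", "B2"]
with_b_display = ["I1", "B1", "B2", "P1"]

# Without B frames (FIG. 13): both orders are identical.
no_b = ["I1", "P1", "P2", "P3"]

print(prefix_matches_display(with_b_data, with_b_display, 2))  # False
print(prefix_matches_display(no_b, no_b, 3))                   # True
```

When the check fails, cutting the stream after `k` delivered frames does not yield the first `k` displayed frames, which is why frame-accurate adjustment is only possible in the arrangement without B frames.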

- When B frames are not used, however, the encoding efficiency is lowered, and the data size increases in order to ensure the same image quality as when B frames are used.

- It is also possible to encode the main part using B frames and the adjustment frames without using B frames, but encoding the main part and the adjustment frames according to different rules has the problem that encoding becomes complicated. Therefore, in a system that requires synchronization accuracy, the adjustment frames are encoded without using B frames; when high synchronization accuracy is not required, encoding using B frames may be performed.

- In the description above, the playback time of the playback device 42 whose playback time is close to the average of the playback times of all playback devices 42 is set as the reference time.

- The reference playback time does not necessarily need to be close to the average value; the median value may be used. Alternatively, the playback device 42 with the most advanced playback time may be used as the reference: that playback device 42 plays back all 10 added frames, and the other playback devices 42 reduce the number of additional frames they play back from 10 according to the magnitude of their error from the reference playback time. The same effect can be obtained in this way.

- Conversely, the playback device 42 with the most delayed playback time may be used as the reference: that playback device 42 plays back none of the added frames, and the other playback devices 42 increase the number of additional frames they play back according to the magnitude of their error from the reference playback time. The same effect can be obtained in this way.
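The alternative reference choices can be summarized as a mapping from strategy to reference time and to the number of adjustment frames the reference device itself plays. This is a hedged sketch: the strategy names and function are hypothetical, and using half of the added frames for the median case is an assumption carried over from the average case.

```python
import statistics


def reference_plan(times_ms: dict, strategy: str, added_frames: int = 10) -> tuple:
    """Return (reference time, adjustment frames played by the reference)."""
    values = list(times_ms.values())
    if strategy == "mean":
        return sum(values) / len(values), added_frames // 2
    if strategy == "median":          # assumption: same base as the mean case
        return statistics.median(values), added_frames // 2
    if strategy == "most_advanced":   # reference plays all added frames
        return max(values), added_frames
    if strategy == "most_delayed":    # reference plays none of the added frames
        return min(values), 0
    raise ValueError(f"unknown strategy: {strategy}")


times = {"A": 1000, "B": 1060, "C": 940}
print(reference_plan(times, "most_advanced"))  # (1060, 10)
print(reference_plan(times, "most_delayed"))   # (940, 0)
```

With the most advanced device as reference the other devices can only subtract frames, and with the most delayed device they can only add frames, so the full 10-frame range is available in one direction.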

- The present invention can be applied to display devices that display on a plurality of screens in, for example, an automobile, a train, or a building.

- Embodiment 2. Embodiment 1 described the adjustment method for the case where the variation in playback time between the playback devices 42 is within the number of frames prepared for adjustment.

- However, if a sudden load is applied to the CPU 21 in a playback device 42, or the playback device 42 is reset due to electrical noise, and data distribution from the content server 41 starts before the playback device 42 is ready for playback, playback synchronization may be greatly shifted. In this case the shift can amount to several seconds, so it may take several tens of minutes to several hours until synchronization is achieved by the adjustment method of Embodiment 1.

- FIG. 14 is a sequence diagram improved in order to make adjustments in a short time when the reproduction time has deviated significantly in this way.

- steps ST18 and ST19 are processes performed in the content server 41, and the other steps are processes performed in each playback device 42.

- First, it is determined whether the amount of deviation from the playback time of the reference playback device 42 is within the range that can be synchronized by the adjustment method described in Embodiment 1 (ST13).

- If it is within that range, normal adjustment (the adjustment described with reference to FIG. 10) is performed in step ST14. The details of the adjustment in step ST14 were described in Embodiment 1 and are not repeated here. If it is determined that the amount of deviation in the playback time is outside the range in which synchronization is possible, playback of the content currently being played back is interrupted and all data stored in the buffer memory 12 is cleared (ST15).

- The playback device 42 that interrupted playback waits until delivery of the TS data of the content currently being played back has finished and the first TS packet of the next content is delivered (ST16).

- the CPU 21 starts storing data in the buffer memory 12 (ST17).

- The content server 41 instructs the reference playback device 42 to notify the content server 41 when playback of the first frame of the next content starts (ST18).

- On receiving that notification, the content server 41 issues a playback start instruction to the playback device 42 that has stopped playback (ST19), and playback of the content resumes in the playback device 42 that interrupted playback.

- Between the time the content server 41 is notified of the start of playback and the time the playback start instruction is transmitted from the content server 41 to the playback device 42 that interrupted playback, there are delays due to the network and to software processing time. There is a further delay from when the playback instruction is issued to the video decoder 14 until the first frame is displayed. At this point, therefore, the playback time of the playback device 42 that interrupted playback is several frames behind the other playback devices 42. However, since this delay is within the range that can be adjusted by the normal synchronization adjustment sequence, synchronization can be achieved in the next adjustment sequence.

- As described above, for a playback device 42 whose playback time is significantly shifted, playback is temporarily stopped and then restarted when the reference playback device 42 starts playback of the first frame of the next content. This makes it possible to synchronize the playback time quickly through the adjustment performed on the next content.
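The resynchronization sequence of Embodiment 2 can be modeled as a small state machine on the playback device side. This is only a sketch under stated assumptions: the class, method, and state names are hypothetical, and the adjustable range is assumed to be five frames in either direction (the reference plays 5 of the 10 added frames).

```python
FRAME_MS = 1000 / 30
ADJUSTABLE_MS = 5 * FRAME_MS   # assumption: +/-5 frames can be absorbed normally


class PlaybackDevice:
    def __init__(self):
        self.state = "playing"
        self.buffer = []

    def check_deviation(self, own_ms: float, ref_ms: float) -> str:
        """ST13: choose normal adjustment (ST14) or a full resync (ST15)."""
        if abs(own_ms - ref_ms) <= ADJUSTABLE_MS:
            return "normal_adjust"
        self.buffer.clear()                    # ST15: interrupt and clear buffer
        self.state = "waiting_next_content"    # ST16: wait for next content
        return "resync"

    def on_packet(self, packet, first_of_next_content: bool = False) -> None:
        """ST17: start storing data once the next content begins to arrive."""
        if self.state == "waiting_next_content" and first_of_next_content:
            self.state = "buffering"
        if self.state in ("buffering", "playing"):
            self.buffer.append(packet)

    def on_start_instruction(self) -> None:
        """ST19: the server relays that the reference device started playback."""
        if self.state == "buffering":
            self.state = "playing"


dev = PlaybackDevice()
print(dev.check_deviation(0, 3000))   # resync
dev.on_packet("old-content")          # ignored while waiting
dev.on_packet("next-0", first_of_next_content=True)
dev.on_start_instruction()
print(dev.state, dev.buffer)          # playing ['next-0']
```

After the restart the device is typically still a few frames behind, which the normal adjustment path then absorbs in the next cycle.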

- The present invention can be applied to display devices that display on a plurality of screens in, for example, an automobile, a train, or a building, and provides the effect that the playback times of the plurality of display devices can be synchronized in a short time.

Landscapes

- Engineering & Computer Science (AREA)

- Signal Processing (AREA)

- Multimedia (AREA)

- Computer Security & Cryptography (AREA)

- Databases & Information Systems (AREA)

- Two-Way Televisions, Distribution Of Moving Picture Or The Like (AREA)

- Television Signal Processing For Recording (AREA)

- Controls And Circuits For Display Device (AREA)

- Compression Or Coding Systems Of Tv Signals (AREA)

Abstract

Description

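A video information playback method in which content that is composed of a sequence including at least intra-frame encoded image information and inter-frame forward prediction encoded image information, and that includes time stamp information, is received by a plurality of video information playback devices from a content server connected via a network or from storage media, and is decoded and played back by the video information playback devices, the method being characterized by comprising:

a detection step of selecting, from among the plurality of video information playback devices, a video information playback device to serve as the playback time reference, and detecting the playback time error between each video information playback device and the reference video information playback device; and

an adjustment step of adjusting the number of frames to be played back such that, when a device is behind the reference video information playback device, a number of frames of encoded content corresponding to the delay time is subtracted from the number of frames played back by the reference video information playback device, and, when a device is ahead of the reference video information playback device, a number of frames of encoded content corresponding to the advance time is added to the frames played back by the reference video information playback device.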

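FIG. 1 is a block diagram schematically showing a configuration example of a video information playback device used to implement the video information playback method according to Embodiment 1 of the present invention. The illustrated video information playback device plays back content distributed from a content server via a network.

The video information playback device shown in FIG. 1 has a playback unit 10 serving as means for playing back data distributed from the content server 41, and a CPU 21 serving as playback control means that controls the entire device. The playback unit 10 has a data receiving unit 11 that receives the data distributed from the content server 41, a buffer memory 12 that temporarily stores the data received by the data receiving unit 11, a demultiplexer 13 that separates the data read from the buffer memory 12 into video information and audio information, a video decoder 14 that decodes the video information, and an audio decoder 15 that decodes the audio information. The video signal output from the video decoder 14 is sent to an external display device 31, which displays the video. The audio signal output from the audio decoder 15 is sent to an audio output device (not shown), which outputs the audio.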

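When the playback device plays back while the content server 41 is not transmitting data, and the amount of data remaining in the buffer memory 12 consequently decreases to the lower limit (first predetermined threshold) SR1, the content server 41 starts (resumes) transmitting data to the playback device 42. When the remaining amount in the buffer memory 12 reaches the upper limit (second predetermined threshold) SR2, the content server 41 stops transmitting data to the playback device 42.

When the remaining amount in the buffer memory 12 next decreases to the lower limit SR1, the content server 41 resumes data transmission.

By repeating the above operation, the transmitted data is controlled so that the amount of data remaining in the buffer memory 12 stays between the upper limit SR2 and the lower limit SR1.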
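The start and stop behavior around SR1 and SR2 is a form of hysteresis control, which can be sketched as follows. The class name and interface are hypothetical, and the threshold defaults are the example values used elsewhere in the description (3 Mbit and 6 Mbit).

```python
class FlowControl:
    """Server-side decision on whether to send data to the representative."""

    def __init__(self, sr1_mbit: float = 3.0, sr2_mbit: float = 6.0):
        self.sr1 = sr1_mbit      # lower limit: (re)start transmission
        self.sr2 = sr2_mbit      # upper limit: stop transmission
        self.sending = False

    def update(self, remaining_mbit: float) -> bool:
        """Update the sending state from the reported buffer level."""
        if not self.sending and remaining_mbit <= self.sr1:
            self.sending = True
        elif self.sending and remaining_mbit >= self.sr2:
            self.sending = False
        return self.sending


fc = FlowControl()
print([fc.update(level) for level in [5, 3, 4.5, 6, 4, 3]])
# [False, True, True, False, False, True]
```

Between the two thresholds the controller keeps its previous state, which is what holds the buffer level inside the SR1 to SR2 band.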

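When data transfer is stopped, the amount remaining in the buffer memory 12 of each playback device 42 gradually decreases as the decoder consumes data. When the content server 41 detects that the amount remaining in the buffer memory 12 of the representative playback device 42 has reached the lower limit SR1, the content server 41 resumes data distribution.

At the end of each content item, however, even if it is detected that the amount remaining in the buffer memory 12 has reached the lower limit SR1, there is no more data to transmit, so the remaining amount decreases further, as indicated by the dotted line EOC in FIG. 3, and finally reaches zero.

If the capacity of the buffer memory 12 is 10 Mbit, the lower limit SR1 is 3 Mbit, and the upper limit SR2 is 6 Mbit, then when the remaining amount in the playback device (F) reaches 6 Mbit, the remaining amount in the playback device (S) is 9.5 Mbit, which is approaching the limit of the buffer memory capacity. If playback (and the data transmission for it) continues in this state, then once the capacity of the buffer memory 12 of the playback device (S) is exceeded, data that should be played back is discarded and video with some frames missing is displayed.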

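Among the PES packets, those indicated by the symbol “I” consist of an I picture, those indicated by “P” consist of a P picture, and those indicated by “B” consist of a B picture. The subscripts “1”, “2”, “3”, etc. attached to the symbols “I”, “P”, “B” distinguish the individual I, P, and B pictures from one another and also indicate the playback order.

“num_of_PES” is the total number of PES packets (frames) added to the main part that can be used for synchronization adjustment. The loop that follows is repeated “num_of_PES” times. When there are 10 frames, “num_of_PES” = 10.

“PTS_for_adjust(i)” indicates the leading PTS value of each PES packet that can be used for synchronization adjustment.

“SPN_for_adjust(i)” is a value indicating the packet number, counted from the beginning of the TS data, of the TS packet that contains the beginning of the PES packet indicated by “PTS_for_adjust(i)”.

In this embodiment, the adjustment packet information is superimposed on the TS packets and sent to the playback device 42. However, it need not be superimposed on the TS packets; it may instead be carried in a proprietary command and sent to the playback device 42.

During normal playback, the CPU 21 uses the adjustment information packet data contained in the TS data to watch for the arrival of the TS packets that contain the added 10 frames of PES packets. TS packets from SPN_for_adjust(0) onward are discarded and are not stored in the buffer memory 12.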

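If the difference in playback times is one frame or more and adjustment is judged to be necessary, the playback device 42 whose playback time is the average value among the playback times is set as the reference playback device 42, and its playback time is notified to all playback devices 42 as the reference time (ST6).

Each playback device 42 that has received the reference time recognizes, from the difference between the playback time it reported earlier and the reference time notified by the content server 41, whether it is playing back behind or ahead of the reference playback device 42, and adjusts its playback time (ST7).

A playback device 42 that has determined that it is delayed by one frame or more from the reference time calculates the delay time from the reference time and its own playback time, works out the number of frames corresponding to the delay time, and calculates the data size to be stored in the buffer memory 12 by subtracting that number of frames from 5 frames. For example, when the delay time is 60 msec (2 frames), only the first 3 of the 10 frames added to the end of the TS data are stored in the buffer memory 12, and the data from SPN_for_adjust(3) onward is discarded (ST9).

As a result, the total playback time of the TS data currently being played back is the main part + 3 frames, and the final frame of the main part is displayed continuously for the last four frames. After the first three added frames have been played back, playback continues with the TS data of the next content.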

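Embodiment 1 described the adjustment method for the case where the variation in playback time between the playback devices 42 is within the number of frames prepared for adjustment. However, if a sudden load is applied to the CPU 21 in a playback device 42, or the playback device 42 is reset due to electrical noise, and data distribution from the content server 41 starts before the playback device 42 is ready for playback, playback synchronization may be greatly shifted. In this case the shift can amount to several seconds, so it may take several tens of minutes to several hours until synchronization is achieved by the adjustment method of Embodiment 1.

First, it is determined whether the amount of deviation from the playback time of the reference playback device 42 is within the range that can be synchronized by the adjustment method described in Embodiment 1 (ST13).

If it is determined that the amount of deviation in the playback time is outside the range in which synchronization is possible, playback of the content currently being played back is interrupted and all data stored in the buffer memory 12 is cleared (ST15).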

Claims (14)

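- A video information playback method in which content that is composed of a sequence including at least intra-frame encoded image information and inter-frame forward prediction encoded image information, and that includes time stamp information, is received by a plurality of video information playback devices from a content server connected via a network or from storage media, and is decoded and played back by the video information playback devices, the method being characterized by comprising:

a detection step of selecting, from among the plurality of video information playback devices, a video information playback device to serve as the playback time reference, and detecting the playback time error between each video information playback device and the reference video information playback device; and

an adjustment step of adjusting the number of frames to be played back such that, when a device is behind the reference video information playback device, a number of frames of encoded content corresponding to the delay time is subtracted from the number of frames played back by the reference video information playback device, and, when a device is ahead of the reference video information playback device, a number of frames of encoded content corresponding to the advance time is added to the frames played back by the reference video information playback device.

- The video information playback method according to claim 1, characterized in that, in the step of detecting the playback time error between each video information playback device and the reference video information playback device, the content server aggregates the playback times reported by the video information playback devices and takes as the reference the video information playback device whose playback time is closest to the average value.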

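- The video information playback method according to claim 1, characterized in that, in the step of detecting the playback time error between each video information playback device and the reference video information playback device, the content server aggregates the playback times reported by the video information playback devices and takes as the reference the video information playback device with the most delayed playback time.

- The video information playback method according to claim 1, characterized in that, in the step of detecting the playback time error between each video information playback device and the reference video information playback device, the content server aggregates the playback times reported by the video information playback devices and takes as the reference the video information playback device with the most advanced playback time.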

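- The video information playback method according to claim 1, characterized by further comprising:

a determination step of determining whether the playback time error detected in the detection step is within a range in which synchronization is possible through the adjustment performed in the adjustment step;

a discarding step of, when it is determined in the determination step that the error is not within the range in which synchronization is possible, interrupting playback of the content currently being played back and discarding the content data stored in the buffer memory;

a storing step of, in the video information playback device that interrupted playback, storing content data in the buffer memory from the time the next content is received; and

a start control step in which the video information playback device that interrupted playback is notified by the content server of the timing at which the reference video information playback device started playback of the next content and, on receiving that notification, starts playback of the next content.

- The video information playback method according to any one of claims 1 to 5, characterized in that the intra-frame encoded image information and the inter-frame forward prediction encoded image information are encoded in MPEG2 or H.264.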

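- A video information playback system in which content that is composed of a sequence including at least intra-frame encoded image information and inter-frame forward prediction encoded image information, and that includes time stamp information, is received by a plurality of video information playback devices from a content server connected via a network or from storage media, and is decoded and played back by the video information playback devices, the system being characterized by comprising:

means for selecting, from among the plurality of video information playback devices, a video information playback device to serve as the playback time reference, and detecting the playback time error between each video information playback device and the reference video information playback device; and

means for adjusting the number of frames to be played back such that, when a device is behind the reference video information playback device, a number of frames of encoded content corresponding to the delay time is subtracted from the number of frames played back by the reference video information playback device, and, when a device is ahead of the reference video information playback device, a number of frames of encoded content corresponding to the advance time is added to the frames played back by the reference video information playback device.

- The video information playback system according to claim 7, characterized in that, in the means for detecting the playback time error between each video information playback device and the reference video information playback device, the content server aggregates the playback times reported by the video information playback devices and takes as the reference the video information playback device whose playback time is closest to the average value.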

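- The video information playback system according to claim 7, characterized in that, in the means for detecting the playback time error between each video information playback device and the reference video information playback device, the content server aggregates the playback times reported by the video information playback devices and takes as the reference the video information playback device with the most delayed playback time.

- The video information playback system according to claim 7, characterized in that, in the means for detecting the playback time error between each video information playback device and the reference video information playback device, the content server aggregates the playback times reported by the video information playback devices and takes as the reference the video information playback device with the most advanced playback time.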

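- The video information playback system according to claim 7, characterized by further comprising:

determination means for determining whether the playback time error detected by the detection means is within a range in which synchronization is possible through the adjustment performed by the adjustment means;

discarding means for, when the determination means determines that the error is not within the range in which synchronization is possible, interrupting playback of the content currently being played back and discarding the content data stored in the buffer memory;

storing means for, in the video information playback device that interrupted playback, storing content data in the buffer memory from the time the next content is received; and

start control means for starting playback of the next content when the video information playback device that interrupted playback is notified by the content server of the timing at which the reference video information playback device started playback of the next content.

- The video information playback system according to any one of claims 7 to 11, characterized in that the intra-frame encoded image information and the inter-frame forward prediction encoded image information are encoded in MPEG2 or H.264.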

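- Video information content that is distributed from a content server connected via a network, or supplied from storage media, that is composed of a sequence including at least intra-frame encoded image information and inter-frame forward prediction encoded image information, and that includes time stamp information, the content being characterized in that:

intra-frame encoded image information and inter-frame forward prediction encoded image information for displaying video with the same content as the last frame of the main part are added, as a plurality of frames for playback synchronization adjustment, after the last frame of the main part.

- The video information content according to claim 13, characterized in that the intra-frame encoded image information and the inter-frame forward prediction encoded image information are encoded in MPEG2 or H.264.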

Priority Applications (5)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| KR1020127013418A KR101443470B1 (ko) | 2009-11-27 | 2010-04-14 | Video information playback method and system, and computer-readable recording medium on which video information content is recorded |
| EP10832765.1A EP2506570B1 (en) | 2009-11-27 | 2010-04-14 | Method and system for playing video information, and video information content |
| US13/498,243 US9066061B2 (en) | 2009-11-27 | 2010-04-14 | Video information reproduction method and system, and video information content |
| CN201080053315.2A CN102640511B (zh) | 2009-11-27 | 2010-04-14 | Video information reproduction method and system, and video information content |
| HK12112071.3A HK1171591A1 (en) | 2009-11-27 | 2012-11-26 | Method and system for playing video information, and video information content |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| JP2009-270330 | 2009-11-27 | | |
| JP2009270330A JP5489675B2 (ja) | 2009-11-27 | 2009-11-27 | Video information playback method and system, and video information content |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| WO2011064909A1 (ja) | 2011-06-03 |

Family

ID=44066024

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| PCT/JP2010/002707 WO2011064909A1 (ja) | Video information playback method and system, and video information content | 2009-11-27 | 2010-04-14 |

Country Status (7)

| Country | Link |

|---|---|

| US (1) | US9066061B2 (ja) |

| EP (1) | EP2506570B1 (ja) |

| JP (1) | JP5489675B2 (ja) |

| KR (1) | KR101443470B1 (ja) |

| CN (1) | CN102640511B (ja) |

| HK (1) | HK1171591A1 (ja) |

| WO (1) | WO2011064909A1 (ja) |

Families Citing this family (28)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| KR101987756B1 (ko) | 2012-07-24 | 2019-06-11 | 삼성전자주식회사 | 미디어 재생 방법 및 미디어 장치 |

| JP5542892B2 (ja) * | 2012-10-18 | 2014-07-09 | 株式会社東芝 | コンテンツ処理装置、及びコンテンツ同期方法 |

| US8978076B2 (en) * | 2012-11-05 | 2015-03-10 | Comcast Cable Communications, Llc | Methods and systems for content control |

| JP6376526B2 (ja) * | 2012-12-03 | 2018-08-22 | 株式会社コナミデジタルエンタテインメント | 出力タイミング制御装置、出力タイミング制御システム、出力タイミング制御方法、およびプログラム |

| JP6051847B2 (ja) * | 2012-12-25 | 2016-12-27 | 三菱電機株式会社 | 映像情報再生方法及びシステム |

| JP2015015706A (ja) * | 2013-07-03 | 2015-01-22 | パナソニック インテレクチュアル プロパティ コーポレーション オブアメリカPanasonic Intellectual Property Corporation of America | データ送信方法、データ再生方法、データ送信装置およびデータ再生装置 |

| KR101782453B1 (ko) * | 2013-07-08 | 2017-09-28 | 후아웨이 테크놀러지 컴퍼니 리미티드 | 비디오 재생 제어 방법, 장치 및 시스템 |

| EP3020204B1 (en) | 2013-07-09 | 2022-04-27 | Koninklijke KPN N.V. | Synchronized data processing between receivers |

| KR20150033827A (ko) * | 2013-09-24 | 2015-04-02 | 삼성전자주식회사 | 영상표시장치, 서버 및 그 동작방법 |

| KR20150037372A (ko) * | 2013-09-30 | 2015-04-08 | 삼성전자주식회사 | 영상표시장치, 컨텐츠 동기화 서버 및 그 동작방법 |

| JP6510205B2 (ja) * | 2013-10-11 | 2019-05-08 | パナソニック インテレクチュアル プロパティ コーポレーション オブ アメリカPanasonic Intellectual Property Corporation of America | 送信方法、受信方法、送信装置および受信装置 |

| JP6136993B2 (ja) * | 2014-03-05 | 2017-05-31 | 三菱電機株式会社 | 映像情報再生システム、この映像情報再生システムを備えた映像情報表示システム及びこの映像情報再生システムに設けられた車両内映像再生装置 |

| CN104822008B (zh) * | 2014-04-25 | 2019-01-08 | 腾讯科技(北京)有限公司 | 视频同步方法及装置 |

| EP3151577B1 (en) * | 2014-05-28 | 2019-12-04 | Sony Corporation | Information processing apparatus, information processing method, and program |

| KR102336709B1 (ko) | 2015-03-11 | 2021-12-07 | 삼성디스플레이 주식회사 | 타일드 표시 장치 및 그 동기화 방법 |

| US20160295256A1 (en) * | 2015-03-31 | 2016-10-06 | Microsoft Technology Licensing, Llc | Digital content streaming from digital tv broadcast |

| US10021438B2 (en) | 2015-12-09 | 2018-07-10 | Comcast Cable Communications, Llc | Synchronizing playback of segmented video content across multiple video playback devices |

| US11165683B2 (en) * | 2015-12-29 | 2021-11-02 | Xilinx, Inc. | Network interface device |

| US11044183B2 (en) | 2015-12-29 | 2021-06-22 | Xilinx, Inc. | Network interface device |

| JP6623888B2 (ja) * | 2016-03-29 | 2019-12-25 | セイコーエプソン株式会社 | 表示システム、表示装置、頭部装着型表示装置、表示制御方法、表示装置の制御方法、及び、プログラム |

| US10560730B2 (en) | 2016-11-09 | 2020-02-11 | Samsung Electronics Co., Ltd. | Electronic apparatus and operating method thereof |

| CN110557675A (zh) * | 2018-05-30 | 2019-12-10 | 北京视连通科技有限公司 | 一种对视频节目内容进行分析、标注及时基校正的方法 |

| JP7229696B2 (ja) * | 2018-08-02 | 2023-02-28 | キヤノン株式会社 | 情報処理装置、情報処理方法、及びプログラム |

| KR102650340B1 (ko) * | 2019-06-07 | 2024-03-22 | 주식회사 케이티 | 재생 시각을 정밀하게 제어하는 동영상 재생 단말, 방법 및 서버 |

| KR20210087273A (ko) * | 2020-01-02 | 2021-07-12 | 삼성전자주식회사 | 전자장치 및 그 제어방법 |

| CN112153445B (zh) * | 2020-09-25 | 2022-04-12 | 四川湖山电器股份有限公司 | 一种分布式视频显示系统同步解码播放方法及系统 |

| CN112675531A (zh) * | 2021-01-05 | 2021-04-20 | 深圳市欢太科技有限公司 | 数据同步方法、装置、计算机存储介质及电子设备 |

| US11968417B2 (en) * | 2021-12-30 | 2024-04-23 | Comcast Cable Communications, Llc | Systems, methods, and apparatuses for buffer management |

Citations (5)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP2005244605A (ja) * | 2004-02-26 | 2005-09-08 | Nippon Telegr & Teleph Corp <Ntt> | ストリーミングコンテンツ配信制御システム、プログラム及び該プログラムを格納した記録媒体 |

| JP2006287642A (ja) * | 2005-03-31 | 2006-10-19 | Nec Corp | Mpegコンテンツの同期再生方法、クライアント端末、mpegコンテンツの同期再生プログラム |

| JP2009071632A (ja) * | 2007-09-13 | 2009-04-02 | Yamaha Corp | 端末装置およびデータ配信システム |

| JP2009081654A (ja) * | 2007-09-26 | 2009-04-16 | Nec Corp | ストリーム同期再生システム及び方法 |

| JP2009205396A (ja) * | 2008-02-27 | 2009-09-10 | Sanyo Electric Co Ltd | 配信装置および受信装置 |

Family Cites Families (9)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US6061399A (en) * | 1997-05-28 | 2000-05-09 | Sarnoff Corporation | Method and apparatus for information stream frame synchronization |

| US6034731A (en) * | 1997-08-13 | 2000-03-07 | Sarnoff Corporation | MPEG frame processing method and apparatus |

| US6324217B1 (en) * | 1998-07-08 | 2001-11-27 | Diva Systems Corporation | Method and apparatus for producing an information stream having still images |

| US6507616B1 (en) * | 1998-10-28 | 2003-01-14 | Lg Information & Communications, Ltd. | Video signal coding method |

| JP4039417B2 (ja) * | 2004-10-15 | 2008-01-30 | 株式会社日立製作所 | 記録再生装置 |

| WO2006112508A1 (ja) | 2005-04-20 | 2006-10-26 | Matsushita Electric Industrial Co., Ltd. | ストリームデータ記録装置、ストリームデータ編集装置、ストリームデータ再生装置、ストリームデータ記録方法、及びストリームデータ再生方法 |

| JP2008096756A (ja) | 2006-10-12 | 2008-04-24 | Sharp Corp | 多画面表示システムおよびその表示方法 |

| US20090213777A1 (en) | 2008-02-27 | 2009-08-27 | Sanyo Electric Co., Ltd. | Distribution apparatus and receiving apparatus for reproducing predetermined contents |

| KR20120080122A (ko) * | 2011-01-06 | 2012-07-16 | 삼성전자주식회사 | 경쟁 기반의 다시점 비디오 부호화/복호화 장치 및 방법 |

- 2009
  - 2009-11-27 JP JP2009270330A patent/JP5489675B2/ja not_active Expired - Fee Related
- 2010
  - 2010-04-14 KR KR1020127013418A patent/KR101443470B1/ko active IP Right Grant
  - 2010-04-14 US US13/498,243 patent/US9066061B2/en not_active Expired - Fee Related
  - 2010-04-14 WO PCT/JP2010/002707 patent/WO2011064909A1/ja active Application Filing
  - 2010-04-14 EP EP10832765.1A patent/EP2506570B1/en not_active Not-in-force
  - 2010-04-14 CN CN201080053315.2A patent/CN102640511B/zh not_active Expired - Fee Related
- 2012
  - 2012-11-26 HK HK12112071.3A patent/HK1171591A1/xx not_active IP Right Cessation

Non-Patent Citations (1)

| Title |

|---|

| See also references of EP2506570A4 * |

Also Published As

| Publication number | Publication date |

|---|---|

| EP2506570A1 (en) | 2012-10-03 |

| JP2011114681A (ja) | 2011-06-09 |

| KR101443470B1 (ko) | 2014-09-22 |

| US20120207215A1 (en) | 2012-08-16 |

| HK1171591A1 (en) | 2013-03-28 |

| CN102640511A (zh) | 2012-08-15 |

| EP2506570A4 (en) | 2013-08-21 |

| CN102640511B (zh) | 2014-11-19 |

| JP5489675B2 (ja) | 2014-05-14 |

| EP2506570B1 (en) | 2017-08-23 |

| US9066061B2 (en) | 2015-06-23 |

| KR20120068048A (ko) | 2012-06-26 |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

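| JP5489675B2 (ja) | | Video information playback method and system, and video information content |

| JP4118232B2 (ja) | | Video data processing method and video data processing apparatus |

| JP2011114681A5 (ja) | | |

| US8526501B2 (en) | | Decoder and decoding method based on video and audio time information |

| JP2005531245A (ja) | | Robust method for achieving audio/video synchronization in MPEG decoders in personal video recording applications |

| JP2004297577A (ja) | | Image playback device |

| JP2006186580A (ja) | | Playback apparatus and decode control method |

| CN101207764A (zh) | | Recording apparatus, recording method, and reproducing apparatus |

| JP6051847B2 (ja) | | Video information playback method and system |

| KR100981378B1 (ko) | | Device and method for read-synchronization of video data and ancillary data, and associated products |

| US20090046994A1 (en) | | Digital television broadcast recording and reproduction apparatus and reproduction method thereof |

| JP4933145B2 (ja) | | Network receiver |

| JP4987034B2 (ja) | | Video display device |

| JP3583977B2 (ja) | | Digital video playback device |

| JP2005151434A (ja) | | Receiving apparatus and method, recording medium, and program |

| JP2004350237A (ja) | | Stream switching device, stream switching method, stream switching program, and display time correction device |

| JPH099215A (ja) | | Data multiplexing method, data transmission method, multiplexed data decoding method, and multiplexed data decoding apparatus |

| JP6464647B2 (ja) | | Moving image processing method, moving image transmission apparatus, moving image processing system, and moving image processing program |

| US20050265369A1 (en) | | Network receiving apparatus and network transmitting apparatus |

| JP3671969B2 (ja) | | Data multiplexing method and multiplexed data decoding method |

| JP4188402B2 (ja) | | Video receiving apparatus |

| JP4390666B2 (ja) | | Method and apparatus for decoding and reproducing compressed video data and compressed audio data |

| JP4719602B2 (ja) | | Video output system |

| JP2005102192A (ja) | | Content receiving apparatus, video/audio output timing control method, and content providing system |

| JP5720285B2 (ja) | | Streaming system |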

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| | WWE | WIPO information: entry into national phase | Ref document number: 201080053315.2; Country of ref document: CN |

| | 121 | EP: the EPO has been informed by WIPO that EP was designated in this application | Ref document number: 10832765; Country of ref document: EP; Kind code of ref document: A1 |

| | REEP | Request for entry into the European phase | Ref document number: 2010832765; Country of ref document: EP |

| | WWE | WIPO information: entry into national phase | Ref document number: 2010832765; Country of ref document: EP |

| | WWE | WIPO information: entry into national phase | Ref document number: 13498243; Country of ref document: US |

| | ENP | Entry into the national phase | Ref document number: 20127013418; Country of ref document: KR; Kind code of ref document: A |

| | NENP | Non-entry into the national phase | Ref country code: DE |