WO2014024751A1 - 音声応答装置 - Google Patents

音声応答装置 Download PDFInfo

- Publication number

- WO2014024751A1 WO2014024751A1 PCT/JP2013/070756 JP2013070756W WO2014024751A1 WO 2014024751 A1 WO2014024751 A1 WO 2014024751A1 JP 2013070756 W JP2013070756 W JP 2013070756W WO 2014024751 A1 WO2014024751 A1 WO 2014024751A1

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- voice

- input

- schedule

- person

- unit

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Links

Images

Classifications

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10L—SPEECH ANALYSIS TECHNIQUES OR SPEECH SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING TECHNIQUES; SPEECH OR AUDIO CODING OR DECODING

- G10L15/00—Speech recognition

- G10L15/22—Procedures used during a speech recognition process, e.g. man-machine dialogue

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10L—SPEECH ANALYSIS TECHNIQUES OR SPEECH SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING TECHNIQUES; SPEECH OR AUDIO CODING OR DECODING

- G10L17/00—Speaker identification or verification techniques

- G10L17/26—Recognition of special voice characteristics, e.g. for use in lie detectors; Recognition of animal voices

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10L—SPEECH ANALYSIS TECHNIQUES OR SPEECH SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING TECHNIQUES; SPEECH OR AUDIO CODING OR DECODING

- G10L15/00—Speech recognition

- G10L15/22—Procedures used during a speech recognition process, e.g. man-machine dialogue

- G10L2015/226—Procedures used during a speech recognition process, e.g. man-machine dialogue using non-speech characteristics

- G10L2015/227—Procedures used during a speech recognition process, e.g. man-machine dialogue using non-speech characteristics of the speaker; Human-factor methodology

Definitions

- the present invention relates to a voice response device that makes a response to an input voice by voice.

- One aspect of the present invention is to improve the usability for a user in a voice response device that performs voice response to input voice.

- the invention of the first aspect A voice response device that makes a response to an input voice by voice, An audio feature recording unit for recording the features of the input audio; A voice coincidence determining unit that determines whether or not a feature of the input voice matches a feature of the voice previously recorded by the voice feature recording unit; An audio output unit that outputs a response different from that when the audio feature is determined to match when the audio match determination unit determines that the audio feature does not match; It is provided with.

- a voice response device when the person who inputted the voice is different from the previous one, the person who inputted the voice can return a different response from the same case as before. Therefore, compared with the case where the same answer is made regardless of whether or not the person who inputted the voice is the same as before, it is possible to improve the usability for the user.

- a person identifying unit that identifies a person who has input the sound based on the characteristics of the input sound;

- a control unit that controls the controlled unit according to the input voice, When receiving a contradicting instruction from a different person, the control unit may perform control by giving priority to an instruction by a person having a higher priority according to a priority set in advance for each person.

- a voice response device even if a contradictory instruction is received from a different person, it is possible to control the controlled unit according to the priority order.

- the contradiction may be pointed out by a voice response, or an alternative may be presented.

- a response including the weather may be output.

- a person identifying unit that identifies a person who has input the sound based on the characteristics of the input sound;

- a schedule recording unit that records a schedule based on the input voice for each person.

- a schedule can be managed for each person.

- the schedule recording unit can be subordinate to the invention according to the second aspect except for the person specifying unit.

- the priority of the schedule may be changed according to the attribute of the schedule.

- the scheduled attribute is classified by, for example, whether or not it can be changed (whether or not there is an influence on the partner).

- the schedules of these plurality of persons are not available. It is also possible to search for a certain time zone and set a meeting at this time. Further, when there is no vacant time zone, the already registered schedule may be changed according to the schedule attribute.

- the predetermined contact address You may be asked to contact.

- the inquiry source and the inquiry destination may be specified using the position information.

- the correct content can be obtained by inquiring to the mother or when the content of the old man cannot be heard, by contacting the family of the elderly. Therefore, it is possible to ensure the accuracy of the input voice.

- the voice response device is provided with a plurality of databases prepared in advance according to age information indicating the age or age group of the user (the person who inputted the voice) as in the invention of the fifth aspect.

- a database to be used may be selected according to the user's age information, and voice may be recognized according to the selected database.

- the database to be referred to at the time of voice recognition is changed according to the age. Therefore, if the words frequently used according to the age, the wording of the words are registered, the voice recognition is performed. Accuracy can be improved.

- the age of the user for example, it may be estimated according to the characteristics of the input voice (voice waveform, voice pitch, etc.), or the camera may be used when the user inputs the voice.

- the image may be estimated by imaging the user's face by an imaging unit such as the above.

- the present invention may be applied to a face-to-face device such as an automatic teller machine. In this case, identity verification such as age authentication can be performed using the present invention.

- the present invention may be applied to a vehicle.

- the configuration for specifying the person can be used as a configuration that replaces the vehicle key.

- the said invention demonstrated as a voice response apparatus, you may comprise as a voice recognition apparatus provided with the structure which recognizes the input audio

- the invention of each aspect does not have to be based on other inventions, and can be made as independent as possible.

- FIG. 1 is a block diagram showing a schematic configuration of a voice response system to which the present invention is applied. It is a block diagram which shows schematic structure of a terminal device. It is a flowchart which shows the voice response terminal process which MPU of a terminal device performs. It is a flowchart which shows the voice response server process (the 1) which the calculating part of a server performs. It is a flowchart which shows a voice response server process (the 2).

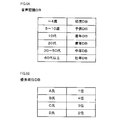

- FIG. 6A is an explanatory diagram showing a voice recognition DB.

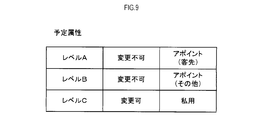

- FIG. 6B is an explanatory diagram of the priority DB. It is a flowchart which shows the schedule input process among audio

- SYMBOLS 1 Terminal device, 10 ... Behavior sensor unit, 11 ... Three-dimensional acceleration sensor, 13 ... 3-axis gyro sensor, 15 ... Temperature sensor, 17 ... Humidity sensor, 19 ... Temperature sensor, 21 ... Humidity sensor, 23 ... Illuminance sensor, 25 ... Wetting sensor, 27 ... GPS receiver, 29 ... Wind speed sensor, 33 ... Electrocardiographic sensor, 35 ... Heart sound sensor, 37 ... Microphone, 39 ... Memory, 41 ... Camera, 50 ... Communication unit, 53 ... Wireless telephone unit, 55 ... Contact memory, 60 ... Notification unit, 61 ... Display, 63 ... Illumination, 65 ... Speaker, 70 ... Operation unit, 71 ...

- the voice response system 100 to which the present invention is applied is a system configured to generate an appropriate response at the server 90 and output the response by voice at the terminal device 1 with respect to the voice input at the terminal device 1. It is. Further, when a command is included in the input voice, the control command is output to the target device (the controlled unit 95). Further, it has a function of managing the user's schedule.

- the voice response system 100 includes a plurality of terminal devices 1, various devices (controlled units 95) such as an air conditioner mounted on a vehicle, and a server 90 with communication base stations 80 and 81. And are configured to be able to communicate with each other via the Internet network 85.

- the terminal device 1 may be configured to directly communicate with other terminal devices 1 and the controlled unit 95.

- the server 90 has a function as a normal server device.

- the server 90 includes a calculation unit 101 and various databases (DB).

- the calculation unit 101 is configured as a well-known calculation device including a CPU and a memory such as a ROM and a RAM. Based on a program in the memory, the calculation unit 101 communicates with the terminal device 1 and the like via the Internet network 85, Various processes such as voice recognition and response generation for performing reading / writing of data in various DBs or conversation with a user using the terminal device 1 are performed.

- the various DBs include a voice recognition DB 102, a prediction conversion DB 103, a voice DB 104, a response candidate DB 105, a personality DB 106, a learning DB 107, a preference DB 108, a news DB 109, a weather DB 110, a priority DB 111, a schedule DB 112, a terminal.

- An information DB 113, an emotion determination DB 114, a health determination DB 115, a report destination DB 117, and the like are provided. The details of these DBs will be described every time the processing is described.

- the terminal device 1 includes a behavior sensor unit 10, a communication unit 50, a notification unit 60, and an operation unit 70 provided in a predetermined housing.

- the behavior sensor unit 10 includes a well-known MPU 31 (microprocessor unit), a memory 39 such as a ROM and a RAM, and various sensors.

- the MPU 31 includes sensor elements that constitute various sensors to be inspected (humidity, wind speed, etc.). For example, processing such as driving a heater for optimizing the temperature of the sensor element is performed so that the detection can be performed satisfactorily.

- the behavior sensor unit 10 includes, as various sensors, a three-dimensional acceleration sensor 11 (3DG sensor), a three-axis gyro sensor 13, a temperature sensor 15 disposed on the back surface of the housing, and humidity disposed on the back surface of the housing.

- a wetness sensor 25 a GPS receiver 27 that detects the current location of the terminal device 1, and a wind speed sensor 29.

- the behavior sensor unit 10 also includes an electrocardiogram sensor 33, a heart sound sensor 35, a microphone 37, and a camera 41 as various sensors.

- the temperature sensors 15 and 19 and the humidity sensors 17 and 21 measure the temperature or humidity of the outside air of the housing as an inspection target.

- the three-dimensional acceleration sensor 11 measures accelerations applied to the terminal device 1 in three orthogonal directions (vertical direction (Z direction), width direction of the casing (Y direction), and thickness direction of the casing (X direction)). Detect and output the detection result.

- the three-axis gyro sensor 13 has an angular velocity applied to the terminal device 1 as a vertical direction (Z direction), two arbitrary directions orthogonal to the vertical direction (a width direction of the casing (Y direction), and a casing Angular acceleration (thickness direction (X direction)) (counterclockwise speed in each direction is positive) is detected, and the detection result is output.

- the temperature sensors 15 and 19 include, for example, a thermistor element whose electric resistance changes according to temperature.

- the temperature sensors 15 and 19 detect the Celsius temperature, and all temperature displays described in the following description are performed at the Celsius temperature.

- the humidity sensors 17 and 21 are configured as, for example, known polymer film humidity sensors.

- This polymer film humidity sensor is configured as a capacitor in which the amount of moisture contained in the polymer film changes in accordance with the change in relative humidity and the dielectric constant changes.

- the illuminance sensor 23 is configured as a well-known illuminance sensor including a phototransistor, for example.

- the wind speed sensor 29 is, for example, a well-known wind speed sensor, and calculates the wind speed from electric power (heat radiation amount) necessary for maintaining the heater temperature at a predetermined temperature.

- the heart sound sensor 35 is configured as a vibration sensor that captures vibrations caused by the beat of the heart of the user.

- the MPU 31 considers the detection result of the heart sound sensor 35 and the heart sound input from the microphone 37, Distinguish between noise and other vibrations and noise.

- the wetness sensor 25 detects water droplets on the surface of the housing, and the electrocardiographic sensor 33 detects the user's heartbeat.

- the camera 41 is arranged in the casing of the terminal device 1 so that the outside of the terminal device 1 is an imaging range. In particular, in the present embodiment, the camera 41 is arranged at a position where the user of the terminal device 1 can be imaged.

- the communication unit 50 includes a well-known MPU 51, a wireless telephone unit 53, and a contact memory 55, and can acquire detection signals from various sensors constituting the behavior sensor unit 10 via an input / output interface (not shown). It is configured. And MPU51 of the communication part 50 performs the process according to the detection result by this behavior sensor unit 10, the input signal input via the operation part 70, and the program stored in ROM (illustration omitted).

- the MPU 51 of the communication unit 50 functions as an operation detection device that detects a specific operation performed by the user, a function as a positional relationship detection device that detects a positional relationship with the user, and is performed by the user.

- the function as an exercise load detection device for detecting the exercise load and the function of transmitting the processing result by the MPU 51 are executed.

- the radio telephone unit 53 is configured to be able to communicate with, for example, a mobile phone base station, and the MPU 51 of the communication unit 50 outputs a processing result by the MPU 51 to the notification unit 60 or via the radio telephone unit 53. To a preset destination (a contact recorded in the contact memory 55).

- the contact address memory 55 functions as a storage area for storing location information of the user's visit destination.

- the contact address memory 55 stores information on contact information (such as a telephone number) to be contacted when an abnormality occurs in the user.

- the notification unit 60 includes, for example, a display 61 configured as an LCD or an organic EL display, an electrical decoration 63 made of LEDs that can emit light in, for example, seven colors, and a speaker 65.

- a display 61 configured as an LCD or an organic EL display

- an electrical decoration 63 made of LEDs that can emit light in, for example, seven colors

- a speaker 65 Each part which comprises the alerting

- the operation unit 70 includes a touch pad 71, a confirmation button 73, a fingerprint sensor 75, and a rescue request lever 77.

- the touch pad 71 outputs a signal corresponding to the position and pressure touched by the user (user, user's guardian, etc.).

- the confirmation button 73 is configured so that the contact of the built-in switch is closed when pressed by the user, and the communication unit 50 can detect that the confirmation button 73 is pressed. Yes.

- the fingerprint sensor 75 is a well-known fingerprint sensor, and is configured to be able to read a fingerprint using, for example, an optical sensor.

- a means for recognizing a human physical feature such as a sensor for recognizing the shape of a palm vein (means capable of biometric authentication: identifying an individual) If it is a possible means), it can be adopted.

- the voice response terminal process performed in the terminal device 1 is a process of receiving voice input by the user, sending the voice to the server 90, and playing back the voice response when receiving a response to be output from the server 90. . This process is started when the user inputs a voice input via the operation unit 70.

- the input from the microphone 37 is accepted (ON state) (S2), and imaging (recording) by the camera 41 is started (S4). Then, it is determined whether or not there is a voice input (S6).

- the timeout indicates that the allowable time for waiting for processing has been exceeded, and here the allowable time is set to about 5 seconds, for example.

- the process returns to S10. If the voice input is not completed (S12: NO), the process returns to S10. If the voice input has been completed (S12: YES), data such as an ID for identifying itself, a voice, and a captured image are packet-transmitted to the server 90 (S14). Note that the process of transmitting data may be performed between S10 and S12.

- S16 it is determined whether or not the data transmission is completed. If transmission has not been completed (S16: NO), the process returns to S14. If the transmission has been completed (S16: YES), it is determined whether or not data (packet) transmitted by the voice response server process described later has been received (S18). If no data has been received (S18: NO), it is determined whether or not a timeout has occurred (S20).

- reception is completed S24. If reception has not been completed (S24: NO), it is determined whether or not a timeout has occurred (S26). If timeout has occurred (S26: YES), the fact that an error has occurred is output via the notification unit 60, and the voice response terminal process is terminated. If the time has not expired (S26: NO), the process returns to S22.

- the voice response server process is a process of receiving voice from the terminal device 1, performing voice recognition for converting the voice into character information, and generating a response to the voice and returning it to the terminal device 1.

- the communication partner terminal device 1 is specified (S44). In this process, the terminal device 1 is specified by the ID of the terminal device 1 included in the packet.

- an image captured by the camera 41 included in the packet is acquired (S70), and the audio feature included in the packet is detected (S72).

- features such as voice waveform features (voice prints) and pitches are detected.

- the age group of the person who inputted the voice is specified from the picked-up image of the user and the characteristics of the voice (S74).

- the voice characteristics and the tendency of the age group are stored in the voice recognition DB 102 in advance, and the age group is specified by referring to the voice recognition DB 102.

- a well-known technique for estimating the age of the user from the captured image is also used.

- the voice recognition DB 102 stores voice characteristics for each person in advance corresponding to the name of the person, and in this process, the person is specified by referring to the voice recognition DB 102.

- the voice and the detected voice feature are recorded in the voice recognition DB 102 (S78), and a database used for voice recognition is selected (S80).

- the speech recognition DB 102 is an infant DB for up to 4 years old, a child DB for 5 to 10 years old, and a teenager (10 to 19 years old).

- Each DB has a dictionary database for correlating speech waveforms and characters (sounds or words) for recognizing speech as characters.

- speech feature tendency how the user speaks (speech feature tendency), words that tend to be used in the age group, and the like are recorded as different information.

- each DB is set to have a narrower age range for younger ages.

- the reason for this is that younger ages have a higher ability to speak and the ability to create new words so that they can respond immediately to these changes.

- one database (any one shown in FIG. 6A) matching the age group is selected and set according to the estimated age of the user. Subsequently, the voice included in the packet is recognized (S46).

- the predictive conversion DB 103 is associated with words that tend to be used following a certain word.

- a known speech recognition process is performed by referring to the selected database in the speech recognition DB 102 and the prediction conversion DB 103, and speech is converted into character information.

- an object in the captured image is specified by performing image processing on the captured image (S48). Then, the user's emotion is determined based on the waveform of the voice or the ending of the word (S50).

- the user is referred to by referring to the emotion determination DB 114 in which a speech waveform (voice color), a word ending, and the like are usually associated with emotion categories such as anger, joy, confusion, sadness, and elevation. It is determined whether or not the emotion falls in any category, and the determination result is recorded in the memory. Subsequently, by referring to the learning DB 107, a word often spoken by the user is searched, and a portion where the character information generated by the speech recognition is ambiguous is corrected.

- a speech waveform voice color

- a word ending, and the like are usually associated with emotion categories such as anger, joy, confusion, sadness, and elevation. It is determined whether or not the emotion falls in any category, and the determination result is recorded in the memory.

- the learning DB 107 a word often spoken by the user is searched, and a portion where the character information generated by the speech recognition is ambiguous is corrected.

- the learning DB 107 user features such as words often spoken by the user and habits during pronunciation are recorded for each user. Further, addition / correction of data to the learning DB 107 is performed in a conversation with the user. Further, in the predictive conversion DB 103, the emotion determination DB 104, and the like, data may be held by being classified for each age group, like the voice recognition DB 102.

- the corrected character information is specified as the input character information (S54). Then, as a result of these processes, it is determined whether or not the voice has been recognized as character information (S82). In this process, when there is a defect in the sentence (for example, when there is a grammatical error), it is considered that the sentence cannot be recognized even if the sentence is completed. If it is not recognized as character information (S82: NO), a predetermined voice (for example, “the following words” is added to a predetermined contact registered in advance in the notification destination DB 117 (a contact set for each terminal device 1). The recorded voice is played back, so please say the correct sentence. "And the voice entered by the user are sent to make an inquiry (S84).

- S84 the voice entered by the user are sent to make an inquiry

- a schedule input process for managing the schedule is performed (S90). In this process, as shown in FIG. 7, first, a schedule of a specific person for which a schedule is input is extracted (S102).

- schedule data in which specific persons and times are arranged in a matrix is extracted from the schedule DB 112 (S102), and the input schedule (time zone, schedule content, location information) Are temporarily registered (S104).

- the travel time until moving to the place where the provisionally registered schedule is implemented is calculated (S110).

- a time required for movement is calculated using a known transfer guidance program. For example, it takes about 2 hours to travel from Marunouchi in Tokyo to Nagoya.

- a schedule attribute is set for each schedule recorded in the schedule DB 112, and the schedule attribute is set at a level corresponding to the importance as shown in FIG. For example, level A corresponds to an appointment with a customer (a meeting appointment), and the schedule cannot be changed.

- level B corresponds to an appointment in the company other than the customer, for example, and the schedule cannot be changed.

- level C will be handled by private matters, and the schedule can be changed.

- the schedule attribute is recognized from the contents, and the schedule attribute is also registered. Further, the schedule attribute is recognized in this process for the temporarily registered schedule.

- the change plan is a plan in which a changeable schedule (that is, a schedule belonging to level C) is moved, and there is no conflict and the user (target person) can move between places where the schedule is executed. Present.

- the change flag is set to ON (S132), and the schedule input process is terminated. If the preceding and following schedules or the temporarily registered schedule cannot be changed (S128: NO), the fact that the schedules are duplicated is recorded (S134), and the schedule input process is terminated.

- an operation input process is performed (S94).

- This process is a process of controlling the operation of the controlled unit 95 according to the input voice. Specifically, as shown in FIG. 10, first, the command content is recognized (S202). Examples of the command contents include changing the reception channel and volume of the television receiver corresponding to the controlled unit 95, and increasing the set temperature of the air conditioner of the vehicle corresponding to the controlled unit 95 by 1 ° C. To do.

- this past command is extracted (S206), and it is determined whether there is a contradiction with the past command (S208).

- the contradiction is, for example, when there is a past command to lower the set temperature by 1 ° C. when the air conditioner of the vehicle corresponds to the controlled portion 95, which contradicts this command. For example, a command to increase the set temperature of 1 ° C. is input.

- the volume is further changed immediately after the reception channel is changed, when a command to change to another reception channel is received, or immediately after the volume is changed. This is the case when a command to execute is input.

- the priority order DB 111 As shown in FIG. 6B, a person and a priority order are recorded in association with each other. For example, when Mr. A and Mr. B input inconsistent commands, Mr. A's first place and Mr. B's fourth place are acquired from the priority order DB 111.

- Mr. A who has the highest priority gives a command to “increase the set temperature of the air conditioner of the vehicle by 1 ° C.”, and Mr. B who has the highest priority ranks “Decreases the set temperature of the air conditioner of the vehicle by 1 ° C.” ”Command is applied, Mr. B command is invalidated.

- the set command is transmitted to the controlled unit 95 (S218), and the operation input process is terminated. Also, in the process of S210, if the persons who have input contradictory commands match (S210: YES), the most recently input command is set (S216), the process of S218 described above is performed, and the operation is performed. The input process is terminated.

- the change confirmation process is a process of confirming the user's intention whether the schedule may be changed as in the presented change proposal when the schedule change proposal is presented.

- the voice “Today's * weather is *” is output.

- the portion “*” is acquired by accessing the weather DB 110 in which the region name and the weather forecast for several days in the region are associated with each other.

- the response content is converted into voice (S62).

- a process for outputting response contents (character information) as a voice is performed.

- the generated response (voice) is packet-transmitted to the communication partner terminal device 1 (S64).

- the packet may be transmitted while generating the voice of the response content.

- the conversation content is recorded (S68).

- the input character information and the output response contents are recorded in the learning DB 107 as conversation contents.

- keywords words recorded in the speech recognition DB 102 included in the conversation content, pronunciation characteristics, and the like are recorded in the learning DB 107.

- the voice response server processing ends.

- the server 90 (calculation unit 101) records the characteristics of the input voice, and the characteristics of the input voice match the previously recorded voice characteristics. It is determined whether or not. When the server 90 determines that the voice features do not match, the server 90 outputs a response different from the case where it is determined that the voice features match.

- a voice response system 100 when the person who inputted the voice is different from the previous one, the person who inputted the voice can return a response different from the same case as before. Therefore, compared with the case where the same answer is made regardless of whether or not the person who inputted the voice is the same as before, it is possible to improve the usability for the user.

- the server 90 identifies a person who has inputted a voice based on the characteristics of the inputted voice, and controls the controlled unit 95 according to the inputted voice. At this time, if the server 90 receives an inconsistent instruction from a different person, the server 90 performs control by giving priority to an instruction from a higher-priority person according to a priority order set in advance for each person.

- the control of the controlled unit 95 can be performed according to the priority order.

- the server 90 records a schedule based on the inputted voice for each person. According to such a voice response system 100, a schedule can be managed for each person.

- the server 90 changes the priority of the schedule according to the schedule attribute.

- the scheduled attributes are classified according to, for example, whether they can be changed (whether they have an influence on the other party).

- the schedule registered earlier is changed according to the schedule attribute, or the schedule registered later is registered at an available time. Also, when registering a schedule, consider the location of the previous schedule and the location of the subsequent schedule, search for the time to move between them, and set the travel time to move between them. Register a schedule that will be registered later.

- the server 90 when a plurality of persons share the same schedule as in the case where a plurality of persons managed by the system 100 perform a meeting, the schedule of the plurality of persons. Search for a free time zone, and set up a meeting at this time. If the schedule is not available, the already registered schedule is changed according to the schedule attribute.

- the server 90 when the schedule is changed in this way, the server 90 outputs that effect as a voice response. According to such a voice response system, the user can be confirmed when changing the schedule.

- the server 90 inquires the content of the message to a predetermined contact when the input voice cannot be heard (that is, when there is an error as a sentence when converted into characters). Also, the voice that could not be heard is recorded, the voice is transmitted to a predetermined contact, and the voice is again input to the person of the contact.

- the mother can be inquired. Accuracy can be guaranteed.

- the inquiry source and the inquiry destination may be specified using the position information.

- the server 90 includes a plurality of databases prepared in advance according to age information indicating the age or age group of the user (the person who has input the voice).

- the database to be used is selected according to the age information of the person, and the voice is recognized according to the selected database.

- the database to be referred to at the time of voice recognition is changed according to the age. Therefore, if a frequently used word, wording, etc. are registered according to the age, the voice Recognition accuracy can be improved.

- the age of the user (the person who inputted the voice) is estimated, and the estimated age is used as age information.

- the age of the user for example, it may be estimated according to the characteristics of the input voice (voice waveform, voice pitch, etc.), or the camera may be used when the user inputs the voice. It estimates by imaging a user's face by imaging parts, such as.

- voice response system 100 voice can be recognized more accurately.

- Embodiments of the present invention are not limited to the above-described embodiments, and can take various forms as long as they belong to the technical scope of the present invention.

- a period (schedule and time zone) is specified as “between September 1st and 3rd”, and the schedule setting is left to the voice response system 100. May be.

- a period such as “Meeting with Mr. B and Mr. C on September 1 for one hour” is designated between the processes of S102 and S104. It is determined whether or not there is a schedule setting request (S103).

- a weather forecast may be acquired (S232), and an alternative may be set according to the weather forecast (S234). For example, as a result of obtaining a weather forecast, if the temperature tends to rise from now on, a proposal to lower the set temperature of the air conditioner is proposed, and if the temperature tends to fall from now on, a plan to increase the set temperature of the air conditioner suggest. Also, if it is going to rain in the future, we will suggest closing the window.

- voice recognition is used as a configuration for inputting character information.

- the input is not limited to voice recognition, and may be input using an input unit (operation unit 70) such as a keyboard or a touch panel.

- operation unit 70 such as a keyboard or a touch panel.

- the calculation unit 101 learns (records and analyzes) the user's behavior (conversation, the place where the user moved, and what is reflected in the camera) to compensate for the lack of words in the user's conversation. You may do it.

- the server 90 may acquire response candidates from a predetermined server or the Internet.

- response candidates can be acquired not only from the server 90 but also from any device connected via the Internet, a dedicated line, or the like.

- the present invention may be applied to a face-to-face device such as an automatic teller machine.

- identity verification such as age authentication can be performed using the present invention.

- the present invention may be applied to a vehicle.

- the configuration for specifying the person can be used as a configuration that replaces the vehicle key.

- the said invention demonstrated as the voice response system 100, you may comprise as a voice recognition apparatus which recognizes the input audio

- the terminal device 1 and the server 90 communicate, the one part or all the processes (process shown by a flowchart) are performed with the terminal device 1. May be implemented. In this case, the process regarding the communication between the terminal device 1 and the server 90 can be omitted.

- the controlled unit 95 corresponds to an arbitrary device that performs control according to a command from the outside. Furthermore, in the voice response system 100, an identification sound that is a sound indicating a mechanical sound may be included in the uttered voice. This is to make it possible to distinguish mechanical sounds from voices spoken by people. In this case, the identification sound may include an identifier indicating which sound is generated by the device, and in this way, the generation source of a plurality of types of mechanical sounds can be specified.

- Such an identification sound may be an audible sound or a non-audible sound.

- the identification sound is a non-audible sound

- the identifier may be embedded in the sound using a digital watermark technique.

- the response corresponding to the input voice is output by voice.

- the response corresponding to this input may be output by voice without being limited to voice input.

- a camera for detecting a change in the shape of the user's mouth may be provided, and a means for estimating what words the user is speaking based on the shape of the user's mouth may be provided.

- the correspondence relationship between the mouth shape and the sound is prepared as a database, the sound is estimated from the mouth shape, and the word is estimated from the sound. According to such a configuration, the user can input voice without actually making a sound.

- the shape of the mouth may be used as an assist when performing input using voice. In this way, voice recognition can be performed more reliably even when the user's smooth tongue is bad. Further, in preparation for the case where the user cannot input voice, it may be configured such that input in place of voice can be performed by selecting the input history by the user on the display. In this case, the history may be simply displayed in the new order, or it is estimated that there is a high possibility of being used in consideration of the frequency of use of the input content included in the history, the time zone when the input content is input, and the like. You may make it display in order from the content.

- the terminal device 1 when the terminal device 1 is mounted on a vehicle, a specific operation such as unlocking in response to a call from the owner (user) is performed in response to a call to the vehicle. Also good. In this way, the voice can be used as a key, and even if the owner of the vehicle loses sight of his / her vehicle in a large parking lot or the like, the user can find his / her vehicle by calling the vehicle.

- the voice response system 100 in the present embodiment corresponds to an example of a voice response device according to the present invention.

- the process of S74 corresponds to an example of the person specifying unit referred to in the present invention

- the process of S78 corresponds to an example of the audio feature recording unit referred to in the present invention.

- the process of S210 corresponds to an example of a voice match determination unit according to the present invention

- the processes of S214 and S216 correspond to an example of a voice output unit according to the present invention

- the processing of S208 and S218 corresponds to an example of a control unit referred to in the present invention

- the processing of S90 corresponds to an example of a schedule recording unit referred to in the present invention.

Landscapes

- Engineering & Computer Science (AREA)

- Computational Linguistics (AREA)

- Health & Medical Sciences (AREA)

- Audiology, Speech & Language Pathology (AREA)

- Human Computer Interaction (AREA)

- Physics & Mathematics (AREA)

- Acoustics & Sound (AREA)

- Multimedia (AREA)

- Telephonic Communication Services (AREA)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| JP2014529447A JPWO2014024751A1 (ja) | 2012-08-10 | 2013-07-31 | 音声応答装置 |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| JP2012-178454 | 2012-08-10 | ||

| JP2012178454 | 2012-08-10 |

Publications (1)

| Publication Number | Publication Date |

|---|---|

| WO2014024751A1 true WO2014024751A1 (ja) | 2014-02-13 |

Family

ID=50067982

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| PCT/JP2013/070756 Ceased WO2014024751A1 (ja) | 2012-08-10 | 2013-07-31 | 音声応答装置 |

Country Status (2)

| Country | Link |

|---|---|

| JP (4) | JPWO2014024751A1 (enExample) |

| WO (1) | WO2014024751A1 (enExample) |

Cited By (10)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP2017054241A (ja) * | 2015-09-08 | 2017-03-16 | 株式会社東芝 | 表示制御装置、方法及びプログラム |

| WO2017199486A1 (ja) * | 2016-05-16 | 2017-11-23 | ソニー株式会社 | 情報処理装置 |

| CN109036406A (zh) * | 2018-08-01 | 2018-12-18 | 深圳创维-Rgb电子有限公司 | 一种语音信息的处理方法、装置、设备和存储介质 |

| WO2019123775A1 (ja) * | 2017-12-22 | 2019-06-27 | ソニー株式会社 | 情報処理装置、情報処理システム、および情報処理方法、並びにプログラム |

| CN109960754A (zh) * | 2019-03-21 | 2019-07-02 | 珠海格力电器股份有限公司 | 一种语音设备及其语音交互方法、装置和存储介质 |

| CN113096654A (zh) * | 2021-03-26 | 2021-07-09 | 山西三友和智慧信息技术股份有限公司 | 一种基于大数据的计算机语音识别系统 |

| JP2022515513A (ja) * | 2018-12-26 | 2022-02-18 | ジョン イ、チョン | スケジュール管理サービスシステム及び方法 |

| JP2022044590A (ja) * | 2017-10-03 | 2022-03-17 | グーグル エルエルシー | センサーベースの検証を用いた車両機能制御 |

| WO2023185004A1 (zh) * | 2022-03-29 | 2023-10-05 | 青岛海尔空调器有限总公司 | 一种音色切换方法及装置 |

| JP2024096819A (ja) * | 2020-02-11 | 2024-07-17 | ディズニー エンタープライゼス インコーポレイテッド | デジタルコンテンツを認証するためのシステム |

Families Citing this family (4)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP6659514B2 (ja) | 2016-10-12 | 2020-03-04 | 東芝映像ソリューション株式会社 | 電子機器及びその制御方法 |

| WO2019198186A1 (ja) * | 2018-04-11 | 2019-10-17 | 東芝映像ソリューション株式会社 | 電子機器及びその制御方法 |

| JP7286368B2 (ja) * | 2019-03-27 | 2023-06-05 | 本田技研工業株式会社 | 車両機器制御装置、車両機器制御方法、およびプログラム |

| US11257493B2 (en) * | 2019-07-11 | 2022-02-22 | Soundhound, Inc. | Vision-assisted speech processing |

Citations (3)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JPH06186996A (ja) * | 1992-12-18 | 1994-07-08 | Sony Corp | 電子機器 |

| JP2002372991A (ja) * | 2001-06-13 | 2002-12-26 | Olympus Optical Co Ltd | 音声制御装置 |

| JP2004033624A (ja) * | 2002-07-05 | 2004-02-05 | Nti:Kk | ペット型ロボットによる遠隔制御装置 |

Family Cites Families (26)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US6081782A (en) * | 1993-12-29 | 2000-06-27 | Lucent Technologies Inc. | Voice command control and verification system |

| JPH11259085A (ja) * | 1998-03-13 | 1999-09-24 | Toshiba Corp | 音声認識装置及び認識結果提示方法 |

| US8355912B1 (en) * | 2000-05-04 | 2013-01-15 | International Business Machines Corporation | Technique for providing continuous speech recognition as an alternate input device to limited processing power devices |

| JP2002182895A (ja) * | 2000-12-14 | 2002-06-28 | Sony Corp | 対話方法、情報提供サーバでの対話方法、情報提供サーバ、記憶媒体及びコンピュータプログラム |

| JP2003255991A (ja) * | 2002-03-06 | 2003-09-10 | Sony Corp | 対話制御システム、対話制御方法及びロボット装置 |

| JP3715584B2 (ja) * | 2002-03-28 | 2005-11-09 | 富士通株式会社 | 機器制御装置および機器制御方法 |

| JP2004094077A (ja) * | 2002-09-03 | 2004-03-25 | Nec Corp | 音声認識装置及び制御方法並びにプログラム |

| JP2004163541A (ja) * | 2002-11-11 | 2004-06-10 | Mitsubishi Electric Corp | 音声応答装置 |

| JP4109091B2 (ja) * | 2002-11-19 | 2008-06-25 | 株式会社山武 | 予定管理装置および方法、プログラム |

| JP2004212533A (ja) * | 2002-12-27 | 2004-07-29 | Ricoh Co Ltd | 音声コマンド対応機器操作装置、音声コマンド対応機器、プログラム、及び記録媒体 |

| JP3883066B2 (ja) * | 2003-03-07 | 2007-02-21 | 日本電信電話株式会社 | 音声対話システム及び方法、音声対話プログラム並びにその記録媒体 |

| JP2004286805A (ja) * | 2003-03-19 | 2004-10-14 | Sony Corp | 話者識別装置および話者識別方法、並びにプログラム |

| JP2005147925A (ja) * | 2003-11-18 | 2005-06-09 | Hitachi Ltd | 車載端末装置および車両向け情報提示方法 |

| JP2005227510A (ja) * | 2004-02-12 | 2005-08-25 | Ntt Docomo Inc | 音声認識装置及び音声認識方法 |

| US20050229185A1 (en) * | 2004-02-20 | 2005-10-13 | Stoops Daniel S | Method and system for navigating applications |

| JP2005300958A (ja) * | 2004-04-13 | 2005-10-27 | Mitsubishi Electric Corp | 話者照合装置 |

| JP4539149B2 (ja) * | 2004-04-14 | 2010-09-08 | ソニー株式会社 | 情報処理装置および情報処理方法、並びに、プログラム |

| JP4385949B2 (ja) * | 2005-01-11 | 2009-12-16 | トヨタ自動車株式会社 | 車載チャットシステム |

| JP5011686B2 (ja) * | 2005-09-02 | 2012-08-29 | トヨタ自動車株式会社 | 遠隔操作システム |

| US8788589B2 (en) * | 2007-10-12 | 2014-07-22 | Watchitoo, Inc. | System and method for coordinating simultaneous edits of shared digital data |

| JP4869268B2 (ja) * | 2008-03-04 | 2012-02-08 | 日本放送協会 | 音響モデル学習装置およびプログラム |

| JP2010066519A (ja) * | 2008-09-11 | 2010-03-25 | Brother Ind Ltd | 音声対話装置、音声対話方法、および音声対話プログラム |

| JP2010107614A (ja) * | 2008-10-29 | 2010-05-13 | Mitsubishi Motors Corp | 音声案内応答方法 |

| CN102549653B (zh) * | 2009-10-02 | 2014-04-30 | 独立行政法人情报通信研究机构 | 语音翻译系统、第一终端装置、语音识别服务器装置、翻译服务器装置以及语音合成服务器装置 |

| JP2012088370A (ja) * | 2010-10-15 | 2012-05-10 | Denso Corp | 音声認識システム、音声認識端末、およびセンター |

| JP2012141449A (ja) * | 2010-12-28 | 2012-07-26 | Toshiba Corp | 音声処理装置、音声処理システム及び音声処理方法 |

-

2013

- 2013-07-31 WO PCT/JP2013/070756 patent/WO2014024751A1/ja not_active Ceased

- 2013-07-31 JP JP2014529447A patent/JPWO2014024751A1/ja active Pending

-

2017

- 2017-09-22 JP JP2017182574A patent/JP2018036653A/ja active Pending

-

2018

- 2018-11-01 JP JP2018206748A patent/JP2019049742A/ja active Pending

-

2020

- 2020-08-06 JP JP2020133867A patent/JP2020194184A/ja active Pending

Patent Citations (3)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JPH06186996A (ja) * | 1992-12-18 | 1994-07-08 | Sony Corp | 電子機器 |

| JP2002372991A (ja) * | 2001-06-13 | 2002-12-26 | Olympus Optical Co Ltd | 音声制御装置 |

| JP2004033624A (ja) * | 2002-07-05 | 2004-02-05 | Nti:Kk | ペット型ロボットによる遠隔制御装置 |

Cited By (20)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP2017054241A (ja) * | 2015-09-08 | 2017-03-16 | 株式会社東芝 | 表示制御装置、方法及びプログラム |

| JPWO2017199486A1 (ja) * | 2016-05-16 | 2019-03-14 | ソニー株式会社 | 情報処理装置 |

| WO2017199486A1 (ja) * | 2016-05-16 | 2017-11-23 | ソニー株式会社 | 情報処理装置 |

| JP2022044590A (ja) * | 2017-10-03 | 2022-03-17 | グーグル エルエルシー | センサーベースの検証を用いた車両機能制御 |

| US12154567B2 (en) | 2017-10-03 | 2024-11-26 | Google Llc | Vehicle function control with sensor based validation |

| US11651770B2 (en) | 2017-10-03 | 2023-05-16 | Google Llc | Vehicle function control with sensor based validation |

| JP7337901B2 (ja) | 2017-10-03 | 2023-09-04 | グーグル エルエルシー | センサーベースの検証を用いた車両機能制御 |

| WO2019123775A1 (ja) * | 2017-12-22 | 2019-06-27 | ソニー株式会社 | 情報処理装置、情報処理システム、および情報処理方法、並びにプログラム |

| JPWO2019123775A1 (ja) * | 2017-12-22 | 2020-10-22 | ソニー株式会社 | 情報処理装置、情報処理システム、および情報処理方法、並びにプログラム |

| JP7276129B2 (ja) | 2017-12-22 | 2023-05-18 | ソニーグループ株式会社 | 情報処理装置、情報処理システム、および情報処理方法、並びにプログラム |

| CN109036406A (zh) * | 2018-08-01 | 2018-12-18 | 深圳创维-Rgb电子有限公司 | 一种语音信息的处理方法、装置、设备和存储介质 |

| JP2022515513A (ja) * | 2018-12-26 | 2022-02-18 | ジョン イ、チョン | スケジュール管理サービスシステム及び方法 |

| JP7540023B2 (ja) | 2018-12-26 | 2024-08-26 | ジョン イ、チョン | スケジュール管理サービスシステム及び方法 |

| JP7228699B2 (ja) | 2018-12-26 | 2023-02-24 | ジョン イ、チョン | スケジュール管理サービスシステム及び方法 |

| JP2023053250A (ja) * | 2018-12-26 | 2023-04-12 | ジョン イ、チョン | スケジュール管理サービスシステム及び方法 |

| CN109960754A (zh) * | 2019-03-21 | 2019-07-02 | 珠海格力电器股份有限公司 | 一种语音设备及其语音交互方法、装置和存储介质 |

| JP2024096819A (ja) * | 2020-02-11 | 2024-07-17 | ディズニー エンタープライゼス インコーポレイテッド | デジタルコンテンツを認証するためのシステム |

| JP7754982B2 (ja) | 2020-02-11 | 2025-10-15 | ディズニー エンタープライゼス インコーポレイテッド | デジタルコンテンツを認証するためのシステム |

| CN113096654A (zh) * | 2021-03-26 | 2021-07-09 | 山西三友和智慧信息技术股份有限公司 | 一种基于大数据的计算机语音识别系统 |

| WO2023185004A1 (zh) * | 2022-03-29 | 2023-10-05 | 青岛海尔空调器有限总公司 | 一种音色切换方法及装置 |

Also Published As

| Publication number | Publication date |

|---|---|

| JP2018036653A (ja) | 2018-03-08 |

| JPWO2014024751A1 (ja) | 2016-07-25 |

| JP2020194184A (ja) | 2020-12-03 |

| JP2019049742A (ja) | 2019-03-28 |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| JP2018036653A (ja) | 音声応答装置 | |

| JP7766379B2 (ja) | 音声応答装置用サーバ、車両、及び音声応答システム | |

| CN110288987B (zh) | 用于处理声音数据的系统和控制该系统的方法 | |

| US10991374B2 (en) | Request-response procedure based voice control method, voice control device and computer readable storage medium | |

| US10832674B2 (en) | Voice data processing method and electronic device supporting the same | |

| KR102740847B1 (ko) | 사용자 입력 처리 방법 및 이를 지원하는 전자 장치 | |

| WO2021008538A1 (zh) | 语音交互方法及相关装置 | |

| US20180232645A1 (en) | Alias resolving intelligent assistant computing device | |

| US9299350B1 (en) | Systems and methods for identifying users of devices and customizing devices to users | |

| CN110088833A (zh) | 语音识别方法和装置 | |

| CN111258529B (zh) | 电子设备及其控制方法 | |

| US11380325B2 (en) | Agent device, system, control method of agent device, and storage medium | |

| BR112015018905B1 (pt) | Método de operação de recurso de ativação por voz, mídia de armazenamento legível por computador e dispositivo eletrônico | |

| CN110100277A (zh) | 语音识别方法和装置 | |

| CN111919248A (zh) | 用于处理用户发声的系统及其控制方法 | |

| US20200286479A1 (en) | Agent device, method for controlling agent device, and storage medium | |

| WO2020202862A1 (ja) | 応答生成装置及び応答生成方法 | |

| JP2001083984A (ja) | インタフェース装置 | |

| WO2019024602A1 (zh) | 移动终端及其情景模式的触发方法、计算机可读存储介质 | |

| CN111739524B (zh) | 智能体装置、智能体装置的控制方法及存储介质 | |

| US11398221B2 (en) | Information processing apparatus, information processing method, and program | |

| CN111352501A (zh) | 业务交互方法及装置 | |

| KR20200056754A (ko) | 개인화 립 리딩 모델 생성 방법 및 장치 | |

| KR20220118698A (ko) | 사용자와 대화하는 인공 지능 에이전트 서비스를 지원하는 전자 장치 | |

| CN108174030B (zh) | 定制化语音控制的实现方法、移动终端及可读存储介质 |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| 121 | Ep: the epo has been informed by wipo that ep was designated in this application |

Ref document number: 13828716 Country of ref document: EP Kind code of ref document: A1 |

|

| ENP | Entry into the national phase |

Ref document number: 2014529447 Country of ref document: JP Kind code of ref document: A |

|

| NENP | Non-entry into the national phase |

Ref country code: DE |

|

| 122 | Ep: pct application non-entry in european phase |

Ref document number: 13828716 Country of ref document: EP Kind code of ref document: A1 |