WO2012147839A1 - Server device, server device control method, program, and recording medium - Google Patents

Server device, server device control method, program, and recording medium

- Publication number

- WO2012147839A1 (application PCT/JP2012/061186)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- image

- specified

- image processing

- character string

- information

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Classifications

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F3/00—Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements

- G06F3/01—Input arrangements or combined input and output arrangements for interaction between user and computer

- G06F3/048—Interaction techniques based on graphical user interfaces [GUI]

- G06F3/0484—Interaction techniques based on graphical user interfaces [GUI] for the control of specific functions or operations, e.g. selecting or manipulating an object, an image or a displayed text element, setting a parameter value or selecting a range

- G06F3/04842—Selection of displayed objects or displayed text elements

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F16/00—Information retrieval; Database structures therefor; File system structures therefor

- G06F16/90—Details of database functions independent of the retrieved data types

- G06F16/95—Retrieval from the web

- G06F16/957—Browsing optimisation, e.g. caching or content distillation

- G06F16/9577—Optimising the visualization of content, e.g. distillation of HTML documents

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F40/00—Handling natural language data

- G06F40/40—Processing or translation of natural language

- G06F40/58—Use of machine translation, e.g. for multi-lingual retrieval, for server-side translation for client devices or for real-time translation

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V30/00—Character recognition; Recognising digital ink; Document-oriented image-based pattern recognition

- G06V30/10—Character recognition

- G06V30/14—Image acquisition

- G06V30/142—Image acquisition using hand-held instruments; Constructional details of the instruments

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F2218/00—Aspects of pattern recognition specially adapted for signal processing

- G06F2218/08—Feature extraction

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V30/00—Character recognition; Recognising digital ink; Document-oriented image-based pattern recognition

- G06V30/10—Character recognition

Definitions

- the present invention relates to a server device, a control method for the server device, a program, and a recording medium, and more particularly to translation of a character string in an image.

- the present invention solves the problems described above, and aims to provide a server device, a server device control method, a program, and a recording medium suitable for translating characters of an image in a WEB page in real time.

- the server device comprises: a request receiving unit that receives, from a terminal, a request in which identification information of an image and parameters for image processing are specified; an image processing unit that acquires the image based on the identification information specified in the received request, applies the image processing to the acquired image using the parameters specified in the received request, and outputs extraction information extracted from the image; and a response transmission unit that transmits, to the terminal, a response in which the extraction information output by the image processing unit is specified. When extraction information is extracted, the image processing unit caches it in association with the image identification information and the parameters used. When extraction information associated with the identification information and parameters specified in a received request is already cached, the image processing unit obtains the cached extraction information instead of acquiring the image and applying the image processing, and outputs it.

- the parameter specified in the request includes a specified area

- the image processing by the image processing unit includes an extraction process that performs character recognition on the designated area, included in the parameter, of the image and outputs a recognized character string recognized from that area. When the recognized character string is extracted, the image processing unit caches it in association with the image identification information and the designated area. If a recognized character string associated with the identification information specified in the received request and the designated area included in the parameter is cached, the image processing unit obtains the cached recognized character string instead of performing the character recognition, and outputs it.

- the extracted information includes the recognized character string.

- when the identification information associated with a cached recognized character string matches the identification information specified in the received request, and the designated area associated with that cached recognized character string overlaps the designated area included in the parameter specified in the received request, the image processing unit determines that extraction information associated with the identification information and the designated area specified in the received request is cached.

- the image processing unit corrects the designated area to the recognized area, within the designated area, in which the recognized character string was recognized, and then caches the recognized character string.
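The overlap-based cache check described above can be sketched as follows. This is only an illustration under stated assumptions: the `Rect`, `Entry`, and `find_cached` names are hypothetical, areas are taken to be axis-aligned rectangles in pixel coordinates, and any positive-area intersection counts as an overlap (the text does not say how much overlap is required).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rect:
    """Axis-aligned area in image pixel coordinates (hypothetical layout)."""
    left: int
    top: int
    right: int
    bottom: int

    def overlaps(self, other: "Rect") -> bool:
        # Two rectangles overlap when their extents intersect on both axes.
        return (self.left < other.right and other.left < self.right
                and self.top < other.bottom and other.top < self.bottom)


@dataclass
class Entry:
    image_url: str
    area: Rect   # assumed to be stored after correction to the recognized area
    text: str    # recognized character string


def find_cached(entries: List[Entry], image_url: str, area: Rect) -> Optional[Entry]:
    """Return a cached entry for the same image whose area overlaps the request."""
    for entry in entries:
        if entry.image_url == image_url and entry.area.overlaps(area):
            return entry
    return None
```

Because the cached area is the corrected (tighter) recognized area, a later request whose designated area only roughly covers the same text can still be treated as a cache hit.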

- the parameters specified in the request include a recognition language, and character recognition by the image processing unit is performed in that recognition language.

- the parameters specified in the request include conversion information;

- the image processing includes a conversion process that converts the output recognized character string using the conversion information included in the parameter specified in the received request, and outputs the resulting converted character string,

- the extracted information includes the converted character string.

- the image processing unit caches the converted character string in association with the image identification information, the designated area, the recognition language, the recognized character string, and the conversion information. When a converted character string associated with these is already cached, the image processing unit obtains the cached converted character string instead of performing the conversion process, and outputs it.

- the conversion process is characterized in that the converted character string is obtained by translating the recognized character string into a translation destination language specified in the conversion information.
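As a rough sketch of the conversion cache described above, the converted character string can be keyed on the five associated items. The `ConversionCache` class and the `translate` callable are hypothetical stand-ins, and the conversion information is assumed here to be just the translation destination language.

```python
from typing import Callable, Dict, Tuple

Area = Tuple[int, int, int, int]            # (left, top, right, bottom), assumed
ConversionKey = Tuple[str, Area, str, str, str]


class ConversionCache:
    """Caches converted strings by URL, area, recognition language,
    recognized string, and conversion information (target language)."""

    def __init__(self, translate: Callable[[str, str], str]) -> None:
        self._translate = translate          # hypothetical translation back end
        self._store: Dict[ConversionKey, str] = {}

    def convert(self, image_url: str, area: Area, recognition_language: str,
                recognized: str, target_language: str) -> str:
        key = (image_url, area, recognition_language, recognized, target_language)
        if key not in self._store:
            # Cache miss: run the conversion process once and remember the result.
            self._store[key] = self._translate(recognized, target_language)
        # Cache hit (or freshly stored): return without translating again.
        return self._store[key]
```

Including the target language in the key means the same recognized string can be cached separately for each translation destination.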

- the server device further comprises: an inquiry receiving unit that receives, from the terminal, an inquiry in which identification information of an image is designated; and an answer transmission unit that, when extraction information associated with the identification information specified in the received inquiry is not cached, transmits to the terminal an answer specifying a message that prompts transmission of a request in which the image identification information and the image processing parameters are specified, and, when such extraction information is cached, transmits to the terminal an answer in which the cached extraction information is specified.

- the server device further comprises: an inquiry receiving unit that receives, from the terminal, an inquiry in which identification information of an image and a position in the image are designated; and an answer transmission unit that, when a recognized character string associated with the identification information specified in the received inquiry and with a designated area including the position specified in the received inquiry is cached by the image processing unit, transmits to the terminal an answer in which the cached recognized character string is designated.
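A position-based inquiry of this kind might be handled as in the sketch below. The `answer_inquiry` function, the dictionary layout of the cache, and the shape of the answer are all assumptions made for illustration; the fallback message mirrors the prompt described for the case where nothing is cached.

```python
from typing import Dict, List, Tuple

Area = Tuple[int, int, int, int]  # (left, top, right, bottom), assumed layout


def answer_inquiry(cache: Dict[str, List[Tuple[Area, str]]],
                   image_url: str, x: int, y: int) -> dict:
    """Answer an inquiry that designates an image and a position in it."""
    for (left, top, right, bottom), text in cache.get(image_url, []):
        # The cached area must contain the position selected on the terminal.
        if left <= x < right and top <= y < bottom:
            return {"cached": True, "recognized": text}
    # Nothing cached for this position: prompt the terminal to send a request.
    return {"cached": False,
            "message": "send a request with image identification information and parameters"}
```

A terminal could issue such an inquiry whenever a position is selected, and fall back to a full request only when the answer reports that nothing is cached.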

- the server device further comprises: an inquiry receiving unit that receives, from the terminal, an inquiry in which identification information of an image, a position in the image, and conversion information are designated; and an answer transmission unit that, when a converted character string associated with the identification information and conversion information specified in the received inquiry and with a designated area including the position specified in the received inquiry is cached by the image processing unit, transmits to the terminal an answer in which the cached converted character string is designated.

- the program according to the second aspect of the present invention is executed by a computer that includes a display unit that displays, on a screen, an image identified by identification information, and that can communicate with the server device according to the above aspect. The program causes the computer to function as: a position detection unit that detects selection of a position in the image displayed on the screen; an inquiry transmission unit that, when selection of the position is detected, transmits to the server device an inquiry in which the identification information and the selected position are designated; and an answer receiving unit that receives an answer from the server device.

- the display unit further displays, on the screen, the extraction information specified in the received answer. The program also causes the computer to function as: an area detection unit that detects selection of an area in the image displayed on the screen; a setting unit that prompts the user to set parameters for image processing; a request transmission unit that, when selection of the area is detected, transmits to the server device a request in which the identification information, the set parameters, and the selected area are specified; and a response receiving unit that receives a response from the server device. The display unit further displays, on the screen, the extraction information specified in the received response.

- the server device control method comprises: a request receiving step of receiving, from a terminal, a request in which identification information of an image and parameters for image processing are specified; an image processing step of acquiring the image based on the identification information specified in the received request, applying the image processing to the acquired image using the parameters specified in the received request, and outputting extraction information extracted from the image; and a response transmission step of transmitting, to the terminal, a response in which the output extraction information is specified. In the image processing step, when extraction information is extracted, it is cached in association with the image identification information and the parameters used; and when extraction information associated with the identification information and parameters specified in the received request is already cached, the cached extraction information is obtained and output instead of acquiring the image and applying the image processing.

- the program according to the fourth aspect of the present invention causes a computer to function as: a request receiving unit that receives, from a terminal, a request in which identification information of an image and parameters for image processing are specified; an image processing unit that acquires the image based on the identification information specified in the received request, applies the image processing to the acquired image using the parameters specified in the received request, and outputs extraction information extracted from the image; and a response transmission unit that transmits, to the terminal, a response in which the extraction information output by the image processing unit is specified. When extraction information is extracted, the image processing unit caches it in association with the image identification information and the parameters used. When extraction information associated with the identification information and parameters specified in the received request is already cached, the image processing unit obtains the cached extraction information instead of acquiring the image and applying the image processing, and outputs it.

- a computer-readable recording medium according to the fifth aspect of the present invention records a program that causes a computer to function as: a request receiving unit that receives, from a terminal, a request in which identification information of an image and parameters for image processing are specified; an image processing unit that acquires the image based on the identification information specified in the received request, applies the image processing to the acquired image using the parameters specified in the received request, and outputs extraction information extracted from the image; and a response transmission unit that transmits, to the terminal, a response in which the extraction information output by the image processing unit is specified. When extraction information is extracted, the image processing unit caches it in association with the image identification information and the parameters used. When extraction information associated with the identification information and parameters specified in the received request is already cached, the image processing unit obtains the cached extraction information instead of acquiring the image and applying the image processing, and outputs it.

- the above program can be distributed and sold via a computer communication network independently of the computer on which the program is executed.

- the recording medium can be distributed and sold independently from the computer.

- a non-transitory recording medium means a tangible recording medium.

- non-transitory recording media are, for example, compact disks, flexible disks, hard disks, magneto-optical disks, digital video disks, magnetic tapes, semiconductor memories, and the like.

- a transitory recording medium refers to the transmission medium (propagation signal) itself.

- a transitory recording medium is, for example, an electric signal, an optical signal, an electromagnetic wave, or the like.

- the temporary storage area is an area for temporarily storing data and programs, and is, for example, a volatile memory such as a RAM (Random Access Memory).

- the present invention can provide a server device, a control method for the server device, a program, and a recording medium that are suitable for translating characters of an image in a WEB page in real time.

- FIG. 1 is a diagram showing the relationship among the server device according to an embodiment of the present invention, a WEB server device, and a terminal device. A diagram showing the schematic configuration of a typical information processing apparatus in which the server device or terminal device according to the embodiment is implemented.

- A diagram for explaining an example of an image in a WEB page. A diagram for explaining the extraction information table. A diagram for explaining an example of an image in a WEB page.

- FIG. 5 is a flowchart for explaining control processing performed by each unit of the server device according to the first embodiment. A diagram for explaining a designation

- FIG. 10 is a flowchart for explaining control processing performed by each unit of the server device according to the second embodiment. A diagram for explaining an example of an image in a WEB page. A diagram for explaining the extraction information table. A diagram for explaining an example of an image in a WEB page.

- FIG. 10 is a flowchart for explaining control processing performed by each unit of the server device according to the third embodiment. A diagram for explaining the schematic configuration of the server device of the fourth embodiment. A diagram for explaining an example of an image in a WEB page.

- FIG. 9 is a flowchart for explaining control processing performed by each unit of the server device according to the fourth embodiment. A diagram for explaining an example of an image in a WEB page.

- FIG. 10 is a flowchart for explaining control processing performed by each unit of the server device according to the fifth embodiment. A diagram for explaining the extraction information table. A diagram for explaining an example of an image in a WEB page.

- FIG. 10 is a flowchart for explaining control processing performed by each unit of the server device according to the sixth embodiment. A diagram for explaining the schematic configuration of the terminal device on which the program of the seventh embodiment operates.

- FIG. 10 is a flowchart for explaining control processing performed by each unit of the terminal device according to the seventh embodiment.

- the server device 100 is connected to the Internet 300 as shown in FIG. 1. Also connected to the Internet 300 are a plurality of terminal devices 211, 212 to 21n (hereinafter collectively referred to as “terminal device 200”) operated by users, and a WEB server device 400.

- the WEB server device 400 provides a predetermined WEB page to the terminal device 200 in response to a request from the terminal device 200.

- the server apparatus 100 translates a WEB page in response to a request.

- the server apparatus 100 and the WEB server apparatus 400 are connected via the Internet 300 as shown in FIG. 1, but the present invention is not limited to this example.

- the server device 100 and the WEB server device 400 may be realized by the same device.

- the server device 100 and the WEB server device 400 may be directly connected.

- the information processing apparatus 500 includes a CPU (Central Processing Unit) 501, a ROM (Read Only Memory) 502, a RAM (Random Access Memory) 503, a NIC (Network Interface Card) 504, an image processing unit 505, an audio processing unit 506, a DVD-ROM (Digital Versatile Disc ROM) drive 507, an interface 508, an external memory 509, a controller 510, a monitor 511, and a speaker 512.

- the CPU 501 controls the overall operation of the information processing apparatus 500 and is connected to each component to exchange control signals and data.

- the ROM 502 stores an IPL (Initial Program Loader) that is executed immediately after the power is turned on, and when this is executed, a predetermined program is read into the RAM 503 and execution of the program by the CPU 501 is started.

- the ROM 502 stores an operating system program and various data necessary for operation control of the entire information processing apparatus 500.

- the RAM 503 is for temporarily storing data and programs, and holds programs and data read from the DVD-ROM and other data necessary for communication.

- the NIC 504 connects the information processing apparatus 500 to a computer communication network such as the Internet 300. It is constituted by a device conforming to the 10BASE-T / 100BASE-T standard used when configuring a LAN (Local Area Network), an analog modem for connecting to the Internet over a telephone line, an ISDN (Integrated Services Digital Network) modem, an ADSL (Asymmetric Digital Subscriber Line) modem, a cable modem for connecting to the Internet over a cable television line, or the like, together with an interface (not shown) that mediates between these and the CPU 501.

- the image processing unit 505 processes data read from the DVD-ROM or the like using the CPU 501 or an image arithmetic processor (not shown) provided in the image processing unit 505, and then records the processed data in a frame memory (not shown) provided in the image processing unit 505.

- the image information recorded in the frame memory is converted into a video signal at a predetermined synchronization timing and output to the monitor 511. Thereby, various page displays are possible.

- the audio processing unit 506 converts audio data read from a DVD-ROM or the like into an analog audio signal, and outputs the analog audio signal from the speaker 512 connected thereto. Further, under the control of the CPU 501, it generates sounds to be produced during the processing performed by the information processing apparatus 500 and outputs the corresponding audio from the speaker 512.

- a program for realizing the server device 100 is stored in the DVD-ROM mounted on the DVD-ROM drive 507.

- under the control of the CPU 501, the DVD-ROM drive 507 performs a reading process on the DVD-ROM mounted on it to read out necessary programs and data, which are temporarily stored in the RAM 503 or the like.

- the external memory 509, the controller 510, the monitor 511, and the speaker 512 are detachably connected to the interface 508.

- the external memory 509 stores data related to the user's personal information in a rewritable manner.

- the controller 510 accepts an operation input performed when various settings of the information processing apparatus 500 are performed.

- the user of the information processing apparatus 500 can record these data in the external memory 509 as appropriate by inputting instructions via the controller 510.

- the monitor 511 presents the data output by the image processing unit 505 to the user of the information processing apparatus 500.

- the speaker 512 presents the audio data output by the audio processing unit 506 to the user of the information processing apparatus 500.

- the information processing apparatus 500 may be configured to use a large-capacity external storage device such as a hard disk to perform the same functions as the ROM 502, the RAM 503, the external memory 509, the DVD-ROM mounted on the DVD-ROM drive 507, and the like.

- the server apparatus 100 or the terminal apparatus 200 according to the embodiment, realized in the information processing apparatus 500, is described below with reference to the figures.

- a program that causes the information processing apparatus 500 to function as the server apparatus 100 or the terminal apparatus 200 according to the embodiment is executed, thereby realizing the server apparatus 100 or the terminal apparatus 200 according to the embodiment.

- the WEB page 600 includes images 601, 602, and 603 and texts 604, 605, and 606.

- the images 601 and 603 are images including characters, and the image 602 is an image not including characters.

- the image URLs of the images 601, 602, and 603 are assumed to be “http://xxx.601.jpg”, “http://xxx.602.jpg”, and “http://xxx.603.jpg”, respectively.

- when translating a character string included in an image, the server device 100 according to the first embodiment can omit image acquisition and character recognition processing if the character recognition processing has already been performed on the image.

- the server device 100 includes a request receiving unit 101, an image processing unit 102, and a response transmitting unit 103, as shown in FIG.

- the request receiving unit 101 receives from the terminal device 200 a request in which image identification information and image processing parameters are specified.

- the image identification information is, for example, an image URL.

- Image processing is character recognition processing for recognizing characters in an image, for example.

- the parameter for image processing is, for example, a recognition language indicating which language the characters in the image are written in. Character recognition is performed on the assumption that the characters in the image are in the designated recognition language.

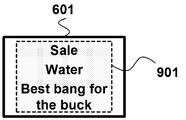

- a case where the user requests the server device 100 to translate the image 601 of the WEB page 600 will be described as an example.

- a pop-up 801 for requesting specification of a recognition language is displayed as shown in FIG.

- when the user clicks the button 802 with the cursor 701 as shown in FIG. 5B, a pull-down menu is displayed.

- the user selects the language (recognition language) of the character string in the image 601 from the menu.

- when the recognition language “English” is designated as shown in FIG. 5C, a request in which the image URL “http://xxx.601.jpg” of the image 601 and the recognition language “English” are designated is transmitted to the server device 100.

- the request receiving unit 101 of the server device 100 receives the request.

- the CPU 501 and the NIC 504 cooperate to function as the request reception unit 101.

- the image processing unit 102 acquires an image based on the identification information specified in the received request, and applies image processing to the acquired image using the parameters specified in the received request, thereby outputting extraction information extracted from the image.

- the extracted information is, for example, a character string in an image obtained as a result of character recognition (hereinafter referred to as “recognized character string”).

- the image processing unit 102 first acquires the image 601 from the WEB server device 400 based on the image URL. Then, the image processing unit 102 performs character recognition on the assumption that the characters included in the acquired image 601 are English. When, as a result of character recognition, the characters in the image 601 are determined to be “Sale Water Best bang for the buck”, the image processing unit 102 outputs them as the recognized character string extracted from the image 601.

- the image processing unit 102 caches the extracted information in association with the image identification information and the parameters used to extract it.

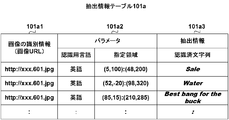

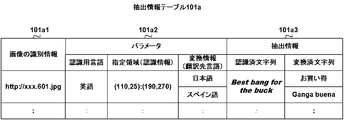

- the RAM 503 stores an extraction information table 101a as shown in FIG.

- in the extraction information table 101a, image identification information (image URL) 101a1, parameters (recognition language) 101a2, and extraction information (recognized character string) 101a3 are registered in association with each other.

- for example, when the image processing unit 102 obtains the recognized character string “Sale Water Best bang for the buck” by character recognition, the recognized character string, the image URL “http://xxx.601.jpg” of the image 601, and the recognition language “English” are registered in the extraction information table 101a in association with each other.

- the CPU 501 and the image processing unit 505 cooperate to function as the image processing unit 102.

- the response transmission unit 103 transmits a response specifying the extraction information output by the image processing unit 102 to the terminal device 200.

- the response transmission unit 103 transmits a response in which the recognized character string “Sale Water Best bang for the buck” is designated to the terminal device 200.

- the response includes, for example, an instruction to add a tag for displaying an arbitrary figure superimposed on an image in which character recognition in the WEB page displayed on the terminal device 200 is completed.

- a translucent rectangle 901 is displayed over the image 601 after the character recognition is completed.

- the CPU 501 and the NIC 504 cooperate to function as the response transmission unit 103.

- when extraction information associated with the identification information and parameters specified in the received request is cached, the image processing unit 102 obtains the cached extraction information instead of acquiring the image and applying the image processing, and outputs it.

- for example, when the request receiving unit 101 receives a request in which the image URL of the image 601 and the recognition language “English” are specified, the image processing unit 102 refers to the extraction information table 101a. Since the recognized character string “Sale Water Best bang for the buck” corresponding to the identification information of the image 601 and the recognition language “English” is registered, the image processing unit 102 omits acquiring the image 601 and performing character recognition processing, and outputs the recognized character string to the response transmission unit 103.

- the request receiving unit 101 receives from the terminal device 200 a request in which image identification information (image URL) and parameters for image processing (recognition language) are designated (step S101). For example, when the user places the cursor 701 on the image 601 and designates the recognition language “English” by the procedure shown in FIGS. 5A to 5C, the request receiving unit 101 receives a request in which the image URL “http://xxx.601.jpg” and the recognition language “English” are designated.

- the image processing unit 102 determines whether or not the identification information specified in the received request and the extracted information (recognized character string) associated with the recognition language are cached (step S102).

- when the image processing unit 102 determines that the recognized character string is cached (step S102; Yes), it outputs the cached recognized character string (step S103). For example, the image processing unit 102 outputs the recognized character string “Sale Water Best bang for the buck” associated with the image URL “http://xxx.601.jpg” and the recognition language “English” to the response transmission unit 103.

- When the image processing unit 102 determines that the recognized character string is not cached (step S102; No), it acquires the image at the image URL specified in the received request (step S105). For example, when no recognized character string associated with the image URL “http://xxx.601.jpg” and the recognition language “English” is registered in the extraction information table 101a, the image processing unit 102 accesses the WEB server apparatus 400 and acquires the image 601 corresponding to the image URL “http://xxx.601.jpg”.

- After obtaining the image, the image processing unit 102 performs character recognition using the recognition language specified in the request, and extracts a character string (recognized character string) from the image (step S106). For example, the image processing unit 102 performs character recognition on the image 601 on the assumption that the characters in the image 601 are English, and obtains the recognized character string “Sale Water Best bang for the buck”.

- The image processing unit 102 caches the image identification information, the recognition language, and the recognized character string in association with each other (step S107). For example, as shown in FIG. 6, the image processing unit 102 registers the image URL “http://xxx.601.jpg”, the recognition language “English”, and the recognized character string “Sale Water Best bang for the buck” in the extraction information table 101a in association with each other.

- the image processing unit 102 outputs the extracted recognized character string (step S108). For example, the image processing unit 102 outputs a recognized character string “Sale Water Best bang for the buck” to the response transmission unit 103 as a result of character recognition.

- the response transmission unit 103 transmits a response specifying the recognized character string output in step S103 or step S108 to the terminal device 200 (step S104). For example, the response transmission unit 103 transmits a response in which the recognized character string “Sale Water Best bang for the buck” is designated to the terminal device 200.
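
The flow of steps S101 to S108 can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation of the server device 100: the dictionary standing in for the extraction information table 101a, and the `fetch_image` and `recognize` callables standing in for image acquisition from the WEB server apparatus 400 and for character recognition, are hypothetical.

```python
from typing import Callable, Dict, Tuple

# Hypothetical stand-in for the extraction information table 101a:
# (image URL, recognition language) -> recognized character string.
ExtractionTable = Dict[Tuple[str, str], str]


def handle_request(
    table: ExtractionTable,
    image_url: str,
    language: str,
    fetch_image: Callable[[str], bytes],
    recognize: Callable[[bytes, str], str],
) -> str:
    """Return the recognized character string for one request."""
    key = (image_url, language)
    if key in table:                    # step S102; Yes
        return table[key]               # step S103: fetch and recognition are skipped
    image = fetch_image(image_url)      # step S105
    text = recognize(image, language)   # step S106
    table[key] = text                   # step S107
    return text                         # step S108
```

With this shape, a second request for the same image URL and recognition language is answered from the table without calling `fetch_image` or `recognize` again.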

- When translating a character string included in an image, the server apparatus 100 according to the second embodiment can omit image acquisition and character recognition processing when character recognition processing has already been performed for a specified region in the image.

- the server device 100 includes a request reception unit 101, an image processing unit 102, and a response transmission unit 103 as in the first embodiment (FIG. 4).

- However, the information handled by each unit differs from that in the first embodiment, as described in detail below.

- the request receiving unit 101 receives from the terminal device 200 a request in which image identification information and a specified area in the image are specified.

- the designated area is, for example, an area in the image of the WEB page designated by the user operating the terminal device 200.

- the designated area is defined by a rectangle and is specified by the coordinates of the upper left corner and the lower right corner of the rectangle.

- the user selects a region 902 in the image 601 by dragging the cursor 701 on the terminal device 200.

- the coordinates of the upper left corner 911 (referred to as “(ordinate, abscissa)”) of the image 601 are set to “(0, 0)”, and the coordinates of the lower right corner 912 are set to (200, 300).

- the coordinates of the upper left corner of the area 902 are (5, 100) and the coordinates of the lower right corner are (48, 200).

- The terminal device 200 transmits to the server device 100 a request in which the image URL “http://xxx.601.jpg” of the image 601 and the designated area “(5, 100): (48, 200)” are designated.

- the request receiving unit 101 of the server device 100 receives the request.

- the method for designating the designated area is not limited to the above.

- a rectangle 903 having a predetermined size is displayed around the cursor 701, and the area of the rectangle 903 is set as the designated area.

- the designated area is not limited to a rectangle, but may be a circle or the like.

- The image processing unit 102 acquires an image based on the identification information specified in the received request, performs character recognition on the specified area in the acquired image, and outputs the recognized character string recognized from the specified area in the image.

- For example, when a request in which the image URL “http://xxx.601.jpg” and the designated area “(5, 100): (48, 200)” are designated is received, the image processing unit 102 acquires the image 601 from the WEB server device 400 based on the image URL.

- the image processing unit 102 estimates a recognition language for character recognition. For example, if the URL of the WEB page 600 includes a domain code indicating the country, the language of characters included in the image is estimated from the domain code. For example, if “.UK” is included in the domain code, the recognition language is estimated as “English”.
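
The domain-code estimation described above can be sketched as follows. This is an illustrative sketch only: the function name and the mapping table are assumptions, and apart from the “.UK” to “English” example given above, the table entries are hypothetical.

```python
from typing import Optional
from urllib.parse import urlparse

# Hypothetical mapping from country domain code to recognition language.
# Only the "uk" -> "English" pair comes from the description; the others are assumed.
DOMAIN_CODE_TO_LANGUAGE = {"uk": "English", "jp": "Japanese", "de": "German"}


def estimate_recognition_language(page_url: str) -> Optional[str]:
    """Estimate the recognition language from the last label of the host name."""
    host = urlparse(page_url).hostname or ""
    domain_code = host.rsplit(".", 1)[-1].lower()
    # None means that no estimate could be made from the domain code.
    return DOMAIN_CODE_TO_LANGUAGE.get(domain_code)
```

A caller would need some fallback, such as asking the user for the recognition language, when the function returns `None`.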

- the image processing unit 102 caches the recognized character string in association with the image identification information and the designated area for the recognized character string.

- For example, the image processing unit 102 registers the image URL “http://xxx.601.jpg” of the image 601, the recognition language “English”, the designated area “(5, 100): (48, 200)”, and the recognized character string “Sale” in the extraction information table 101a in association with each other.

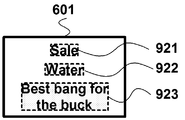

- The image processing unit 102 may correct the designated area to the recognition area, that is, the area within the designated area in which the recognized character string was recognized, and then cache the recognized character string.

- the recognition area is, for example, a rectangle including a recognized character string, and is an area specified by a rectangle having a minimum length in the vertical and horizontal directions.

- For example, the recognition area of “Sale” (area 921 in FIG. 11) is “(8, 110): (45, 170)”, the recognition area of “Water” (area 922 in FIG. 11) is “(60, 120): (90, 180)”, and the recognition area of “Best bang for the buck” is “(110, 25): (190, 270)”.

- Suppose that the area 902 is designated as the designated area and a request specifying the designated area “(5, 100): (48, 200)” is received. The image processing unit 102 performs character recognition on the designated area, extracts the character string “Sale”, and obtains the recognition area including the character string. Then, as shown in FIG. 12, the image processing unit 102 registers the image URL “http://xxx.601.jpg”, the recognition language “English”, the designated area (recognition area) “(8, 110): (45, 170)”, and the recognized character string “Sale” in the extraction information table 101a in association with each other.

- Suppose that an area 904 that protrudes from the image 601 is designated as the designated area and a request specifying the designated area “(52, -20): (98, 320)” is received. In this case, as shown in FIG. 12, the image processing unit 102 registers the image URL “http://xxx.601.jpg” of the image 601, the recognition language “English”, the designated area (recognition area) “(60, 120): (90, 180)”, and the recognized character string “Water” in the extraction information table 101a in association with each other.

- Suppose that an area 905 that crosses the character string in the image 601 is designated as the designated area and a request specifying the designated area “(85, 15): (210, 285)” is received. In this case, as shown in FIG. 12, the image processing unit 102 registers the image URL “http://xxx.601.jpg” of the image 601, the recognition language “English”, the designated area (recognition area) “(110, 25): (190, 270)”, and the recognized character string “Best bang for the buck” in the extraction information table 101a in association with each other.

- the response transmission unit 103 transmits a response specifying the recognized character string output from the image processing unit 102 to the terminal device 200.

- For example, a semi-transparent rectangle 931 is displayed superimposed on the recognition area of the recognized character string “Sale”, for which character recognition has been completed. Similarly, semi-transparent rectangles 932 and 933 are displayed superimposed on their respective recognition areas.

- When the recognized character string is cached, the image processing unit 102 obtains the cached recognized character string instead of acquiring the image and performing character recognition, and outputs the obtained recognized character string.

- If the identification information associated with the cached recognized character string matches the identification information specified in the received request, and the designated area associated with the cached recognized character string overlaps the designated area specified in the received request, the image processing unit 102 determines that the extracted information associated with the identification information and the designated area specified in the received request is cached.

- For example, suppose that the request receiving unit 101 receives a request in which the image URL of the image 601 and the designated area “(5, 35): (52, 200)” are specified.

- The image processing unit 102 refers to the extraction information table 101a and determines that the image URL of the image 601 matches and that the designated area “(5, 35): (52, 200)” specified in the request overlaps the cached designated area “(8, 110): (45, 170)”, for which a recognized character string is registered. Accordingly, the image processing unit 102 omits image acquisition and character recognition processing, and outputs the recognized character string “Sale” to the response transmission unit 103.

- When the designated area specified in the request (areas 906 and 907) contains the entire cached designated area (recognition area; rectangles 931 and 932), it is determined that the areas overlap.

- As shown in FIG. 13C, when the designated area specified in the request (area 908) and the cached designated area (recognition area; rectangle 933) only partially overlap, it may be determined that they do not overlap.

- Alternatively, when the recognition area and the designated area specified in the request overlap by at least a predetermined ratio of the area of the recognition area, it may be determined that they overlap.

- As shown in FIG. 13D, when the cursor 701 is over the recognition area (rectangle 933), it may also be determined that the areas overlap.
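
The overlap criteria above (full containment, overlap by a predetermined ratio, and cursor position) can be sketched as follows. This is an illustrative sketch: the function names and the rectangle representation are assumptions, and coordinates follow the “(ordinate, abscissa)” order used above.

```python
from typing import Tuple

Point = Tuple[int, int]        # (ordinate, abscissa)
Rect = Tuple[Point, Point]     # (upper left corner, lower right corner)


def contains(designated: Rect, cached: Rect) -> bool:
    """True if the designated area contains the whole cached recognition area."""
    (dt, dl), (db, dr) = designated
    (ct, cl), (cb, cr) = cached
    return dt <= ct and dl <= cl and db >= cb and dr >= cr


def overlap_ratio(designated: Rect, cached: Rect) -> float:
    """Fraction of the cached recognition area covered by the designated area."""
    (dt, dl), (db, dr) = designated
    (ct, cl), (cb, cr) = cached
    height = max(0, min(db, cb) - max(dt, ct))
    width = max(0, min(dr, cr) - max(dl, cl))
    cached_area = (cb - ct) * (cr - cl)
    return (height * width) / cached_area if cached_area > 0 else 0.0


def contains_point(cached: Rect, point: Point) -> bool:
    """True if a position such as the cursor lies inside the recognition area."""
    (ct, cl), (cb, cr) = cached
    y, x = point
    return ct <= y <= cb and cl <= x <= cr
```

A ratio-based check would compare `overlap_ratio(...)` against a threshold chosen by the implementer; the description above only says that the ratio is predetermined.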

- The request receiving unit 101 receives from the terminal device 200 a request in which the image identification information and the designated area are specified (step S201). For example, when the user designates the area 902 in FIG. 9A, the request receiving unit 101 receives a request specifying the image URL “http://xxx.601.jpg” and the designated area “(5, 100): (48, 200)”.

- The image processing unit 102 determines whether the extracted information (recognized character string) associated with the identification information (image URL) and the designated area specified in the received request is cached (step S202).

- When the image processing unit 102 determines that the recognized character string is cached (step S202; Yes), it outputs the cached recognized character string (step S203). For example, the image processing unit 102 refers to the extraction information table 101a shown in FIG. 12 and determines that the image URLs match and that the designated area “(5, 100): (48, 200)” specified in the request overlaps the cached designated area (recognition area) “(8, 110): (45, 170)”. In this case, the image processing unit 102 outputs the recognized character string “Sale” associated with the image URL and the recognition area to the response transmission unit 103.

- When the image processing unit 102 determines that the recognized character string is not cached (step S202; No), it acquires the image at the image URL specified in the received request (step S205). For example, if the image URL “http://xxx.601.jpg” does not match, or if the designated area specified in the request and the cached designated area do not overlap, the image processing unit 102 accesses the WEB server apparatus 400 and acquires the image 601 corresponding to the image URL “http://xxx.601.jpg”.

- After acquiring the image, the image processing unit 102 estimates a recognition language, performs character recognition using the recognition language, and extracts a recognized character string from the image (step S206). For example, the image processing unit 102 performs character recognition on the designated area (area 902) of the image 601 on the assumption that the characters in that area are English, and obtains the recognized character string “Sale”.

- the image processing unit 102 caches the image identification information, the recognition language, the designated area, and the recognized character string in association with each other (step S207).

- For example, the image processing unit 102 registers the image URL “http://xxx.601.jpg”, the recognition language “English”, the designated area “(5, 100): (48, 200)”, and the recognized character string “Sale” in the extraction information table 101a in association with each other.

- the designated area may be corrected to the recognition area “(8, 110) :( 45, 170)” and registered as shown in FIG.

- the image processing unit 102 outputs the extracted recognized character string (step S208). For example, the image processing unit 102 outputs a recognized character string “Sale” to the response transmission unit 103 as a result of character recognition.

- the response transmission unit 103 transmits a response specifying the recognized character string output in step S203 or step S208 to the terminal device 200 (step S204). For example, the response transmission unit 103 transmits a response in which the recognized character string “Sale” is designated to the terminal device 200.
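
The cache determination of step S202 can be sketched as follows, using the entries registered in the example above. This is a minimal sketch under stated assumptions: the `Entry` record and the `lookup` function are hypothetical, and overlap is judged by full containment, which is only one of the criteria described earlier.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Rect = Tuple[Tuple[int, int], Tuple[int, int]]  # ((top, left), (bottom, right))


@dataclass
class Entry:
    """One hypothetical row of the extraction information table 101a."""
    image_url: str
    language: str
    area: Rect          # designated area corrected to the recognition area
    text: str           # recognized character string


def covers(designated: Rect, cached: Rect) -> bool:
    (dt, dl), (db, dr) = designated
    (ct, cl), (cb, cr) = cached
    return dt <= ct and dl <= cl and db >= cb and dr >= cr


def lookup(table: List[Entry], image_url: str, designated: Rect) -> Optional[str]:
    """Return the cached recognized character string, or None if not cached."""
    for entry in table:
        if entry.image_url == image_url and covers(designated, entry.area):
            return entry.text       # step S202; Yes
    return None                     # step S202; No: fetch and recognize instead
```

When `lookup` returns `None`, the caller would proceed to image acquisition and character recognition (steps S205 to S207) and append a new entry.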

- According to this embodiment, when character recognition has already been performed for the designated area, the image acquisition and character recognition processing can be omitted. By translating the character string obtained as a result of character recognition, the time required for translating the character string in the image can then be shortened.

- The server device 100 of the next embodiment can omit image acquisition, character recognition, and translation processing when character recognition and translation processing have already been performed for the specified region in the image.

- the server device 100 includes a request reception unit 101, an image processing unit 102, and a response transmission unit 103, as in the second embodiment (FIG. 4).

- However, the information handled by each unit differs from that in the second embodiment, as described in detail below.

- the request receiving unit 101 receives from the terminal device 200 a request in which image identification information, a recognition language, a specified area, and conversion information are specified.

- the conversion information is, for example, a language after translation of a character string included in an image (hereinafter referred to as “translation destination language”).


- the recognized character string extracted from the image using the recognition language is translated into the target language.

- When the button 805 for the translation destination language is clicked, the language into which the character string in the area 909 is to be translated (translation destination language) is selected from the menu.

- When the recognition language “English” and the translation destination language “Japanese” are designated, a request in which the designated area “(100, 20): (200, 280)”, the recognition language “English”, and the translation destination language “Japanese” are designated is transmitted to the server apparatus 100.

- the request receiving unit 101 of the server device 100 receives the request.

- The image processing unit 102 acquires an image based on the identification information specified in the received request, performs character recognition on the specified area in the acquired image, and outputs the recognized character string recognized from the specified area in the image.

- The image processing unit 102 performs a conversion process on the output recognized character string using the conversion information (translation destination language) specified in the received request, and outputs the converted character string obtained from the recognized character string.

- the image processing unit 102 acquires an image 601 corresponding to the image URL “http: //xxx.601.jpg” specified in the request from the WEB server device 400. Next, the image processing unit 102 extracts a recognized character string “Best bang for the buck” using the recognition language “English” for the designated area “(100, 20): (200, 280)”. Then, the image processing unit 102 converts the recognized character string into the translation destination language “Japanese”, and outputs the converted character string “Bargain” to the response transmission unit 103.

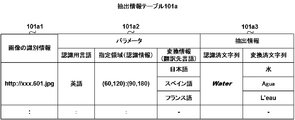

- The image processing unit 102 caches the converted character string in association with the image identification information, the designated area, the recognition language, the recognized character string, and the conversion information.

- the designated area to be cached is a recognition area.

- For example, the image processing unit 102 registers the image URL “http://xxx.601.jpg” of the image 601, the recognition language “English”, the designated area “(110, 25): (190, 270)”, the translation destination language “Japanese”, the recognized character string “Best bang for the buck”, and the converted character string “Bargain” in the extraction information table 101a in association with each other. Note that the information to be registered is not limited to this combination. For example, the translation destination language and the converted character string may be left unregistered.

- When a converted character string associated with the identification information (image URL), the designated area, the recognition language, and the conversion information (translation destination language) specified in the received request is cached, the image processing unit 102 obtains the cached converted character string instead of performing the conversion process, and outputs the obtained converted character string.

- For example, suppose that the user designates an area 910 (coordinates “(90, 0): (200, 290)”) and designates the recognition language “English” and the translation destination language “Japanese” in the pop-up 806. The request receiving unit 101 then receives a request in which the image URL of the image 601, the designated area “(90, 0): (200, 290)”, the recognition language “English”, and the translation destination language “Japanese” are specified.

- The image processing unit 102 refers to the extraction information table 101a in FIG. 16 and determines that the image URL, recognition language, and translation destination language specified in the request match the cached information and that the designated area specified in the request overlaps the cached designated area. Accordingly, the image processing unit 102 omits image acquisition, character recognition, and conversion processing, and outputs the converted character string “Bargain” to the response transmission unit 103.

- When only the translation destination language differs from the cached information, the image processing unit 102 may omit only image acquisition and character recognition processing, and convert the recognized character string registered in the extraction information table 101a into the translation destination language to obtain a converted character string.

- For example, suppose that the request receiving unit 101 receives a request in which the image URL of the image 601, the designated area “(90, 0): (200, 290)”, the recognition language “English”, and the translation destination language “German” are specified. In this case, the cached recognized character string “Best bang for the buck” is converted into German to obtain the converted character string.

- the response transmission unit 103 transmits to the terminal device 200 a response in which the converted character string output by the image processing unit 102 is specified.

- A pop-up 807 containing the converted character string “Bargain” is displayed on the WEB page 600.

- Alternatively, a rectangle 941 in which the converted character string is written may be displayed superimposed on the recognition area.

- The request receiving unit 101 receives from the terminal device 200 a request in which image identification information (image URL), a designated area, a recognition language, and conversion information (translation destination language) are specified (step S301). For example, when the user designates the area 909 in FIG. 15A and designates the recognition language and the translation destination language in the pop-up 803 as shown in FIG. 15C, the request receiving unit 101 receives a request specifying the image URL “http://xxx.601.jpg”, the designated area “(100, 20): (200, 280)”, the recognition language “English”, and the translation destination language “Japanese”.

- The image processing unit 102 determines whether the converted character string associated with the identification information, the designated area, the recognition language, and the translation destination language specified in the received request is cached (step S302).

- When the image processing unit 102 determines that the converted character string is cached (step S302; Yes), it outputs the cached converted character string (step S303). For example, the image processing unit 102 refers to the extraction information table 101a illustrated in FIG. 16 and determines that the image URL, the recognition language, and the translation destination language match and that the designated area “(100, 20): (200, 280)” specified in the request overlaps the cached designated area (recognition area) “(110, 25): (190, 270)”. The image processing unit 102 then outputs the converted character string “Bargain” associated with the image URL, the designated area, the recognition language, and the translation destination language to the response transmission unit 103.

- When the image processing unit 102 determines in step S302 that the converted character string is not cached (step S302; No), it determines whether the recognized character string associated with the image URL, the designated area, and the recognition language is cached (step S305).

- When the image processing unit 102 determines that the recognized character string is cached (step S305; Yes), it converts the cached recognized character string into the translation destination language and obtains the converted character string (step S306). For example, if the translation destination language “Japanese” and the converted character string “Bargain” are not registered in the extraction information table 101a of FIG. 16, the image processing unit 102 converts the recognized character string “Best bang for the buck” into the translation destination language “Japanese” and obtains the converted character string “Bargain”.

- When the image processing unit 102 determines that the recognized character string is not cached (step S305; No), it acquires the image at the image URL specified in the received request (step S309), performs character recognition on the designated area in the image, and extracts a recognized character string (step S310). For example, if the image URL “http://xxx.601.jpg” does not match, or if the designated area specified in the request and the cached designated area do not overlap, the image processing unit 102 accesses the WEB server apparatus 400 and acquires the image 601 corresponding to the image URL “http://xxx.601.jpg”. Character recognition is then performed on the designated area using the recognition language “English” designated in the request, and the recognized character string “Best bang for the buck” is extracted.

- When a recognized character string is extracted in step S310, the image processing unit 102 performs the conversion process on the recognized character string (step S306).

- the image processing unit 102 caches the image identification information, the recognition language, the translation destination language, the designated area, the recognized character string, and the converted character string in association with each other (step S307). Then, the acquired converted character string is output to the response transmission unit 103 (step S308).

- For example, the image processing unit 102 registers the image URL “http://xxx.601.jpg”, the recognition language “English”, the translation destination language “Japanese”, the designated area (recognition area) “(110, 25): (190, 270)”, the recognized character string “Best bang for the buck”, and the converted character string “Bargain” in the extraction information table 101a in association with each other. Then, the converted character string “Bargain” is output to the response transmission unit 103.

- The response transmission unit 103 transmits a response specifying the converted character string output in step S303 or step S308 to the terminal device 200 (step S304).

- the response transmission unit 103 transmits a response in which the converted character string “Bargain” is designated to the terminal device 200.

- According to this embodiment, the image acquisition, the character recognition process, and the conversion process can be omitted. Thereby, the time required for the translation of the character string in the image can be shortened.
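
The two-stage determination of steps S302 and S305 can be sketched as follows. This is an illustrative sketch, not the implementation described here: the `Entry` record, the per-entry dictionary of converted character strings, and the `ocr` and `translate` callables are hypothetical, and overlap is judged by full containment only.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Rect = Tuple[Tuple[int, int], Tuple[int, int]]  # ((top, left), (bottom, right))


@dataclass
class Entry:
    image_url: str
    language: str                   # recognition language
    area: Rect                      # recognition area
    recognized: str                 # recognized character string
    # Assumed layout: translation destination language -> converted character string.
    converted: Dict[str, str] = field(default_factory=dict)


def covers(designated: Rect, cached: Rect) -> bool:
    (dt, dl), (db, dr) = designated
    (ct, cl), (cb, cr) = cached
    return dt <= ct and dl <= cl and db >= cb and dr >= cr


def translate_region(
    table: List[Entry], image_url: str, area: Rect, language: str, target: str,
    ocr: Callable[[str, Rect, str], Tuple[str, Rect]],
    translate: Callable[[str, str], str],
) -> str:
    entry = next((e for e in table if e.image_url == image_url
                  and e.language == language and covers(area, e.area)), None)
    if entry is not None and target in entry.converted:   # step S302; Yes
        return entry.converted[target]                    # step S303
    if entry is None:                                     # step S305; No
        # Hypothetical helper: fetches the image and recognizes the area (S309, S310).
        recognized, recognition_area = ocr(image_url, area, language)
        entry = Entry(image_url, language, recognition_area, recognized)
        table.append(entry)
    text = translate(entry.recognized, target)            # step S306
    entry.converted[target] = text                        # step S307
    return text                                           # step S308
```

Keeping the recognized character string separate from the converted strings is what lets a request for a new translation destination language skip image acquisition and character recognition.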

- the server device 100 presents to the user whether or not the character recognition process has been completed for the image.

- The server device 100 includes a request reception unit 101, an image processing unit 102, a response transmission unit 103, an inquiry reception unit 104, and an answer transmission unit 105.

- the request reception unit 101, the image processing unit 102, and the response transmission unit 103 of this embodiment have the same functions as those of the first embodiment.

- the inquiry reception unit 104 and the answer transmission unit 105 having different functions will be described.

- the inquiry receiving unit 104 receives an inquiry in which image identification information is designated from the terminal device 200.

- For example, when the user moves the cursor 701 over the image 601 (a mouse-over), the terminal device 200 sends an inquiry specifying the image URL of the image 601 to the server device 100.

- the inquiry receiving unit 104 of the server device 100 receives the inquiry.

- the CPU 501 and the NIC 504 cooperate to function as the inquiry reception unit 104.

- When the extracted information associated with the identification information specified in the received inquiry is cached, the answer transmission unit 105 transmits an answer in which the extracted information is specified to the terminal device 200. When it is not cached, the answer transmission unit 105 transmits to the terminal device 200 an answer in which a message prompting transmission of a request specifying the image identification information and the image processing parameters is specified.

- the image processing parameter is a recognition language for character recognition

- the extracted information is a recognized character string.

- For example, suppose that the recognized character string “Sale Water Best bang for the buck” corresponding to the image URL “http://xxx.601.jpg” of the image 601 specified in the inquiry is registered in the extraction information table 101a. In this case, the answer transmission unit 105 transmits an answer in which the recognized character string is designated to the terminal device 200. When the terminal device 200 receives the answer, a pop-up 809 including the recognized character string is displayed on the WEB page displayed on the terminal device 200.

- a message indicating that a recognized character string is obtained may be specified in the reply sent when the recognized character string is cached.

- a pop-up 810 including a message indicating that a recognized character string has been obtained is displayed as shown in FIG.

- When the recognized character string is not cached, the answer transmission unit 105 transmits to the terminal device 200 an answer in which a message prompting transmission of a request specifying the image URL of the image 601 and the recognition language is specified.

- a pop-up 801 for requesting input of a recognition language is displayed on the WEB page displayed on the terminal device 200 as shown in FIG.

- the CPU 501 and the NIC 504 cooperate to function as the answer transmission unit 105.

- the inquiry receiving unit 104 receives an inquiry in which image identification information is designated from the terminal device 200 (step S401). For example, an inquiry specifying the image URL of the image 601 is received.

- The answer transmission unit 105 determines whether the recognized character string associated with the identification information specified in the inquiry is cached (step S402).

- When the answer transmission unit 105 determines that the recognized character string is cached (step S402; Yes), it transmits an answer in which the cached recognized character string is designated to the terminal device 200 (step S403).

- When the answer transmission unit 105 determines that the recognized character string is not cached (step S402; No), it transmits to the terminal device 200 an answer in which a message prompting specification of a recognition language is specified (step S404). For example, the answer transmission unit 105 transmits to the terminal device 200 an answer in which a message prompting transmission of a request specifying the image URL of the image 601 and the recognition language is specified.
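
The inquiry handling of steps S401 to S404 can be sketched as follows. This is a minimal sketch: the dictionary keyed by image URL, the answer format, and the message text are all assumptions made for illustration.

```python
from typing import Dict


def answer_inquiry(cache: Dict[str, str], image_url: str) -> Dict[str, str]:
    """Build the answer for an inquiry that specifies only an image URL."""
    text = cache.get(image_url)                  # step S402
    if text is not None:
        # step S403: the cached recognized character string is returned directly.
        return {"recognized": text}
    # step S404: prompt the terminal to send a request with a recognition language.
    return {"message": "Specify a recognition language and send a request."}
```

On the terminal side, the first form of answer would lead to a pop-up with the recognized character string, and the second to a pop-up requesting input of a recognition language.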

- the server device 100 starts the control processing shown in the flowchart of FIG. 8, for example.

- the server device 100 presents to the user whether or not the character recognition process has been completed for the region in the image.

- The server device 100 includes a request reception unit 101, an image processing unit 102, a response transmission unit 103, an inquiry reception unit 104, and an answer transmission unit 105.

- the request reception unit 101, the image processing unit 102, and the response transmission unit 103 of the present embodiment have the same functions as those of the second embodiment.

- the inquiry reception unit 104 and the answer transmission unit 105 having different functions will be described.

- the inquiry receiving unit 104 receives an inquiry in which the identification information of the image and the position in the image are specified from the terminal device 200.

- The terminal device 200 transmits to the server apparatus 100 an inquiry in which the image URL of the image 601 and the coordinates “(75: 175)” of the position of the cursor 701 are designated.

- the inquiry receiving unit 104 of the server device 100 receives the inquiry.

- When a recognized character string associated with the identification information specified in the received inquiry and with a designated area including the position specified in the received inquiry has been cached by the image processing unit 102, the answer transmission unit 105 transmits an answer specifying the cached recognized character string to the terminal device 200.

- For example, as shown in FIG. 22A, the coordinates “(75: 175)” of the position specified in the inquiry are included in the recognition area 922. In the extraction information table 101a, the recognized character string “Water” corresponding to the image URL “http://xxx.601.jpg” of the image 601 specified in the inquiry and the designated area (recognition area 922) including the coordinates of the specified position is registered. Therefore, the answer transmission unit 105 transmits an answer in which the recognized character string is designated to the terminal device 200.

- a pop-up 811 including the recognized character string “Water” is displayed on the WEB page displayed on the terminal device 200 as shown in FIG.

- When the recognized character string is cached, the answer may instead specify a message that prompts transmission of a request in which a translation destination language is specified.

- a pop-up 812 for requesting specification of the translation destination language is displayed as shown in FIG.

- When no recognized character string is cached for the designated area, the answer transmission unit 105 transmits to the terminal device 200 an answer specifying a message that prompts transmission of a request in which the image URL of the image 601, a position in the image, and a recognition language are specified.

- a pop-up 813 for requesting input of a recognition language is displayed on the WEB page displayed on the terminal device 200, for example, as shown in FIG.

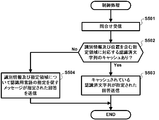

- The inquiry reception unit 104 receives an inquiry in which the identification information of the image and the position in the image are specified from the terminal device 200 (step S501). For example, an inquiry specifying the image URL of the image 601 and the position “(75: 175)” is received.

- The answer transmission unit 105 determines whether a recognized character string associated with the identification information specified in the inquiry and with the specified area including the specified position is cached (step S502).

- When the answer transmission unit 105 determines that the recognized character string is cached (step S502; Yes), it transmits an answer in which the cached recognized character string is designated to the terminal device 200 (step S503).

- For example, if the recognized character string “Water” corresponding to the image URL of the image 601 and the designated area including the designated position “(75: 175)” is registered in the extraction information table 101a stored in the server device 100 (FIG. 12), the answer transmission unit 105 transmits to the terminal device 200 an answer specifying the recognized character string.

- When the answer transmission unit 105 determines that the recognized character string is not cached (step S502; No), it transmits to the terminal device 200 an answer specifying a message that prompts specification of a recognition language for the specified area of the image (step S504).

- For example, the answer transmission unit 105 transmits to the terminal device 200 an answer specifying a message that prompts transmission of a request in which the image URL of the image 601, the designated area, and the recognition language are designated.
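
The inquiry handling of steps S501 to S504 can be illustrated with a minimal Python sketch. This is not the patented implementation: the names `Region`, `Entry`, `handle_inquiry`, and `TABLE`, the answer format, the example URL, and the rectangle used for the cached area are all assumptions introduced for illustration. The sketch assumes that a designated area is an axis-aligned rectangle and that the cache is a simple list standing in for the extraction information table 101a.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Region:
    """Axis-aligned rectangle given by two corner points (assumed layout)."""
    left: int
    top: int
    right: int
    bottom: int

    def contains(self, x: int, y: int) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


@dataclass(frozen=True)
class Entry:
    """One row of a stand-in for the extraction information table."""
    image_url: str
    region: Region
    recognized: str


def handle_inquiry(table: List[Entry], image_url: str, x: int, y: int) -> Dict[str, str]:
    """Answer from the cache, or prompt for a recognition language (S501-S504)."""
    for entry in table:  # S502: is a recognized string cached for this position?
        if entry.image_url == image_url and entry.region.contains(x, y):
            return {"status": "cached", "recognized": entry.recognized}  # S503
    return {  # S504: nothing cached, so ask the user to send a request
        "status": "not_cached",
        "message": "Select an area and a recognition language, then send a request.",
    }


# Hypothetical table contents; the rectangle for the cached area is made up.
TABLE = [Entry("http://example.com/601.jpg", Region(50, 150, 100, 200), "Water")]
```

Under these assumptions, an inquiry for the position (75, 175) of the example image is answered from the cache, while any position outside the stored rectangle yields the prompt message.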

- the server device 100 according to the sixth embodiment presents to the user whether or not the conversion process has been completed for the region in the image.

- The server device 100 includes a request reception unit 101, an image processing unit 102, a response transmission unit 103, an inquiry reception unit 104, and an answer transmission unit 105.

- the request reception unit 101, the image processing unit 102, and the response transmission unit 103 of the present embodiment have the same functions as those of the third embodiment.

- The inquiry reception unit 104 and the answer transmission unit 105, whose functions differ, are described below.

- the inquiry reception unit 104 receives, from the terminal device 200, an inquiry in which image identification information, a position in the image, and conversion information are specified.

- a pop-up 812 for requesting specification of the translation destination language is displayed as shown in FIG. 22B.

- The terminal device 200 transmits to the server device 100 an inquiry in which the image URL of the image 601, the coordinates “(75: 175)” of the position of the cursor 701, and the translation destination language “Spanish” are designated.

- the inquiry receiving unit 104 of the server device 100 receives the inquiry.

- If a converted character string associated with the identification information and conversion information specified in the received inquiry and with the specified area including the position specified in the received inquiry is cached, the answer transmission unit 105 transmits an answer in which the cached converted character string is designated to the terminal device 200.

- In the extraction information table 101a, the converted character string “Agua” is registered in association with the image URL “http: //xxx.601.jpg” of the image 601 specified in the inquiry, the specified area (recognition area 922) including the coordinates of the position specified in the inquiry, and the translation destination language “Spanish”. Therefore, the answer transmission unit 105 transmits an answer in which the converted character string is designated to the terminal device 200.

- a pop-up 814 including the converted character string “Agua” is displayed on the WEB page displayed on the terminal device 200 as shown in FIG.

- When the converted character string is not cached, the answer transmission unit 105 transmits to the terminal device 200 an answer specifying a message indicating that the converted character string is not cached.

- On the WEB page displayed on the terminal device 200, a pop-up 815 including a message indicating that the converted character string is not cached is displayed, for example, as shown in FIG. 25B.

- the inquiry reception unit 104 receives an inquiry in which the identification information of the image, the position in the image, and the conversion information are specified from the terminal device 200 (step S601). For example, an inquiry specifying the image URL of the image 601, the position “(75: 175)”, and the translation destination language “Spanish” is received.

- The answer transmission unit 105 determines whether a converted character string associated with the identification information designated in the inquiry, the designated area including the position, and the translation destination language is cached (step S602).

- When the answer transmission unit 105 determines that the converted character string is cached (step S602; Yes), it transmits an answer in which the cached converted character string is designated to the terminal device 200 (step S603).

- For example, if the converted character string “Agua” corresponding to the image URL of the image 601, the designated area including the designated position “(75: 175)”, and the translation destination language “Spanish” is registered in the extraction information table 101a stored in the server device 100, the answer transmission unit 105 transmits to the terminal device 200 an answer specifying the converted character string.

- When the answer transmission unit 105 determines that the converted character string is not cached (step S602; No), it transmits to the terminal device 200 an answer specifying a message indicating that the converted character string is not cached (step S604).

- For example, if no converted character string corresponding to the image URL of the image 601, the designated area including the designated position, and the translation destination language “Spanish” is registered in the extraction information table 101a stored in the server device 100, the answer transmission unit 105 transmits to the terminal device 200 an answer specifying a message indicating that the converted character string is not cached.
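
The decision in steps S601 to S604 can be sketched in Python as follows. The sketch is illustrative only: the names `Rect`, `Key`, `contains`, `handle_conversion_inquiry`, and `CACHE`, the answer format, the example URL, and the rectangle coordinates are assumptions, and the cache is modelled as a dictionary keyed by image URL, designated area, and translation destination language.

```python
from typing import Dict, Tuple

Rect = Tuple[int, int, int, int]  # (left, top, right, bottom), an assumed layout
Key = Tuple[str, Rect, str]       # (image URL, designated area, target language)


def contains(rect: Rect, x: int, y: int) -> bool:
    """Return True when the point (x, y) lies inside the rectangle."""
    left, top, right, bottom = rect
    return left <= x <= right and top <= y <= bottom


def handle_conversion_inquiry(cache: Dict[Key, str], image_url: str,
                              x: int, y: int, language: str) -> Dict[str, str]:
    """Return the cached translation or a 'not cached' message (S601-S604)."""
    for (url, rect, lang), converted in cache.items():  # S602
        if url == image_url and lang == language and contains(rect, x, y):
            return {"status": "cached", "converted": converted}  # S603
    return {"status": "not_cached",
            "message": "The converted character string is not cached."}  # S604


# Hypothetical cache contents; the rectangle is made up for illustration.
CACHE: Dict[Key, str] = {
    ("http://example.com/601.jpg", (50, 150, 100, 200), "Spanish"): "Agua",
}
```

With this example cache, an inquiry for the position (75, 175) and the language "Spanish" returns "Agua", whereas the same position with a language that has not been cached returns the message.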

- Alternatively, the inquiry reception unit 104 may receive an inquiry in which an image URL and a position are specified, and the answer transmission unit 105 may transmit an answer specifying the converted character strings corresponding to the image URL and the specified area including the position.

- The answer transmission unit 105 refers to the extraction information table 101a in FIG.

- the converted character strings “water”, “Agua”, and “L'eau” corresponding to the designated area including the position are acquired.

- the reply transmission unit 105 transmits a reply in which all these converted character strings are designated to the terminal device 200.

- a popup 816 as shown in FIG. 25C is displayed on the terminal device 200, for example.

- The answer transmission unit 105 may instead select the converted character string corresponding to the translation destination language that has been specified most frequently in requests so far, and transmit an answer specifying that converted character string.

- Alternatively, the answer transmission unit 105 may select one of the converted character strings registered in the extraction information table 101a at random and transmit an answer specifying it.
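
The three ways of choosing what to return when the inquiry names no translation destination language (all cached strings, the most frequently requested language, or a random pick) might be sketched as below. The function names, the `TRANSLATIONS` dictionary, and the request log are hypothetical. The labels "English" and "French" for “water” and “L'eau” are also assumptions, since the text names only “Spanish” explicitly.

```python
import random
from collections import Counter
from typing import Dict, List, Optional


def all_translations(translations: Dict[str, str]) -> List[str]:
    """Return every cached converted string for the area."""
    return list(translations.values())


def most_requested(translations: Dict[str, str],
                   request_log: List[str]) -> Optional[str]:
    """Pick the string whose language was specified most often in past requests."""
    counts = Counter(lang for lang in request_log if lang in translations)
    if not counts:
        return None  # no usable history, so no choice can be made this way
    language, _ = counts.most_common(1)[0]
    return translations[language]


def random_translation(translations: Dict[str, str],
                       rng: Optional[random.Random] = None) -> str:
    """Pick one cached converted string at random."""
    if rng is None:
        rng = random.Random()
    return rng.choice(list(translations.values()))


# Hypothetical cached strings for one designated area, keyed by language.
TRANSLATIONS = {"English": "water", "Spanish": "Agua", "French": "L'eau"}
```

For instance, with a hypothetical request history of `["Spanish", "French", "Spanish"]`, `most_requested` selects “Agua”.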

- the program according to the seventh embodiment causes a terminal device capable of communicating with the server device to function so as to display the result of character recognition or conversion processing performed by the server device.

- The terminal device 200 on which the program according to the present embodiment operates includes a display unit 201, a position detection unit 202, an inquiry transmission unit 203, an answer reception unit 204, an area detection unit 205, a setting unit 206, a request transmission unit 207, and a response reception unit 208.

- the display unit 201 displays an image identified by the identification information on the screen.

- the display unit 201 displays an image 601 designated as an image URL “http: //xxx.601.jpg” as shown in FIG.

- the CPU 501 and the image processing unit 505 cooperate to function as the display unit 201.

- the position detection unit 202 detects selection of a position in the image displayed on the screen.

- the position detection unit 202 detects the coordinates of the position of the cursor 701 when the cursor 701 is superimposed on the image.

- the CPU 501 and the image processing unit 505 cooperate to function as the position detection unit 202.

- the inquiry transmission unit 203 transmits an inquiry in which the identification information and the selected position are specified to the server device 100.

- the inquiry transmission unit 203 transmits an inquiry in which the image URL of the image 601 and the position coordinates “(75: 175)” are designated to the server apparatus 100.

- the CPU 501 and the NIC 504 cooperate to function as the inquiry transmission unit 203.

- the answer receiving unit 204 receives an answer from the server device 100. Then, the display unit 201 further displays the extraction information specified in the received answer on the screen.

- the answer receiving unit 204 receives an answer in which the recognized character string “Water” is designated.

- the display unit 201 displays a pop-up 811 including the recognized character string as shown in FIG.

- the CPU 501 and the NIC 504 cooperate to function as the answer reception unit 204.

- the area detection unit 205 detects selection of an area in the image displayed on the screen.

- the area detection unit 205 detects the coordinates of the dragged area (area 902).

- the CPU 501 and the image processing unit 505 cooperate to function as the area detection unit 205.

- the setting unit 206 prompts the user to set image processing parameters.

- the setting unit 206 prompts the user to set a recognition language used for character recognition.

- the CPU 501 functions as the setting unit 206.

- the request transmission unit 207 transmits a request in which the identification information, the set parameter, and the selected area are specified to the server apparatus 100.

- The request transmission unit 207 transmits to the server device 100 a request in which the image URL “http: //xxx.601.jpg”, the recognition language “English”, and the coordinates “(5, 100): (48, 200)” of the selected area are designated.

- the CPU 501 and the NIC 504 cooperate to function as the request transmission unit 207.

- the response receiving unit 208 receives a response from the server device 100. Then, the display unit 201 further displays the extraction information specified in the received response on the screen.

- the response receiving unit 208 receives a response in which the recognized character string “Sale” is designated.

- the display unit 201 displays a translucent rectangle 931 superimposed on the recognized character string “Sale”.

- the CPU 501 and the NIC 504 cooperate to function as the response reception unit 208.

- the display unit 201 displays an image identified by the identification information on the screen (step S701).

- the position detection unit 202 determines whether or not selection of a position in the image displayed on the screen has been detected (step S702).

- For example, the display unit 201 displays the image 601 designated by the image URL “http: //xxx.601.jpg”, and when the cursor 701 is placed over the image 601, the position detection unit 202 detects the coordinates “(75: 175)” of the position of the cursor 701 (FIG. 22A).

- The inquiry transmission unit 203 transmits an inquiry in which the identification information and the selected position are specified to the server device 100 (step S703). Then, the answer reception unit 204 receives an answer to the inquiry from the server device 100 (step S704). The display unit 201 further displays the extraction information specified in the received answer on the screen (step S705).
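
The terminal-side sequence of steps S701 to S705 can be outlined with the following Python sketch. It is a simplified model, not the program of the embodiment: the `Terminal` class, the `fake_server` function, the dictionary-based inquiry and answer formats, and the example URL are hypothetical, and network transfer is replaced by a plain function call.

```python
from typing import Callable, Dict, List, Optional

Inquiry = Dict[str, object]
Answer = Dict[str, str]


class Terminal:
    """Toy model of the terminal device for the position-selection path."""

    def __init__(self, send_inquiry: Callable[[Inquiry], Answer]) -> None:
        self.send_inquiry = send_inquiry  # stands in for units 203 and 204
        self.screen: List[str] = []       # stands in for the display unit 201
        self.image_url: Optional[str] = None

    def show_image(self, image_url: str) -> None:
        """S701: display the image identified by its URL."""
        self.image_url = image_url
        self.screen.append(f"image:{image_url}")

    def on_position_selected(self, x: int, y: int) -> None:
        """S702 (position detected) to S705: inquire, receive, display."""
        inquiry = {"image_url": self.image_url, "position": (x, y)}  # S703
        answer = self.send_inquiry(inquiry)                          # S704
        text = answer.get("recognized") or answer.get("message", "")
        self.screen.append(f"popup:{text}")                          # S705


def fake_server(inquiry: Inquiry) -> Answer:
    """Hypothetical stand-in for the server device; always answers 'Water'."""
    return {"recognized": "Water"}
```

A usage example under these assumptions: after `show_image` is called with the example URL and `on_position_selected(75, 175)` is invoked, the simulated screen holds the image entry followed by a pop-up entry containing “Water”.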