US20120249797A1 - Head-worn adaptive display - Google Patents

- Publication number

- US20120249797A1 (application US13/429,721)

- Authority

- US

- United States

- Prior art keywords

- eyepiece

- user

- light

- optical

- image

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Abandoned

Classifications

- G02B27/0093—Optical systems or apparatus not provided for by any of the groups G02B1/00 - G02B26/00, G02B30/00 with means for monitoring data relating to the user, e.g. head-tracking, eye-tracking

- G01C21/3629—Guidance using speech or audio output, e.g. text-to-speech

- G02B27/017—Head mounted

- G02B27/0172—Head mounted characterised by optical features

- G04G21/025—Detectors of external physical values, e.g. temperature for measuring physiological data

- G04G21/04—Input or output devices integrated in time-pieces using radio waves

- G04G21/08—Touch switches specially adapted for time-pieces

- G06F1/163—Wearable computers, e.g. on a belt

- G06F3/005—Input arrangements through a video camera

- G06F3/011—Arrangements for interaction with the human body, e.g. for user immersion in virtual reality

- G06F3/013—Eye tracking input arrangements

- G06F3/016—Input arrangements with force or tactile feedback as computer generated output to the user

- G06F3/017—Gesture based interaction, e.g. based on a set of recognized hand gestures

- G06F3/0304—Detection arrangements using opto-electronic means

- G06F3/03547—Touch pads, in which fingers can move on a surface

- G06F3/0428—Digitisers, e.g. for touch screens or touch pads, characterised by the transducing means by opto-electronic means by sensing at the edges of the touch surface the interruption of optical paths, e.g. an illumination plane, parallel to the touch surface which may be virtual

- G06Q30/02—Marketing; Price estimation or determination; Fundraising

- G02B2027/0138—Head-up displays characterised by optical features comprising image capture systems, e.g. camera

- G02B2027/014—Head-up displays characterised by optical features comprising information/image processing systems

- G02B2027/0178—Eyeglass type

- G02B2027/0187—Display position adjusting means not related to the information to be displayed slaved to motion of at least a part of the body of the user, e.g. head, eye

- G02C7/08—Auxiliary lenses; Arrangements for varying focal length

- G02C7/086—Auxiliary lenses located directly on a main spectacle lens or in the immediate vicinity of main spectacles

- G02C9/00—Attaching auxiliary optical parts

- G06F2203/0331—Finger worn pointing device

- G06F2203/0338—Fingerprint track pad, i.e. fingerprint sensor used as pointing device tracking the fingertip image

- G06V20/20—Scenes; Scene-specific elements in augmented reality scenes

- G06V40/166—Detection; Localisation; Normalisation using acquisition arrangements

Definitions

- The present disclosure relates to an augmented reality eyepiece, associated control technologies, and applications for its use, and more specifically to software applications running on the eyepiece.

- The eyepiece may include an internal software application running on an integrated multimedia computing facility that has been adapted for 3D augmented reality (AR) content display and interaction with the eyepiece.

- 3D AR software applications may be developed in conjunction with mobile applications and distributed through application stores, or developed as stand-alone applications that specifically target the eyepiece as the end-use platform and are distributed through a dedicated 3D AR eyepiece store.

- Internal software applications may interface with input and output facilities provided by the eyepiece through facilities internal and external to the eyepiece, such as inputs initiated from the surrounding environment, sensing devices, user action capture devices, internal processing facilities, internal multimedia processing facilities, other internal applications, a camera, sensors, a microphone, a transceiver, a tactile interface, external computing facilities, external applications, event and/or data feeds, external devices, third parties, and the like.

- Command and control modes operating in conjunction with the eyepiece may be initiated by sensing inputs through input devices, user action, external device interaction, reception of events and/or data feeds, internal application execution, external application execution, and the like.
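As a rough illustration of how the sensed inputs listed above might initiate command and control modes, the sketch below routes (source, event name) pairs to mode names through a lookup table. Everything in it (`InputSource`, `InputEvent`, `CommandModeRouter`, and the example bindings such as a "nod" selecting an item) is hypothetical and not taken from the disclosure; a real eyepiece would also need priorities, conflict resolution, and application-specific handlers.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Dict, List, Optional, Tuple


class InputSource(Enum):
    """Hypothetical categories of inputs that may initiate a command mode."""
    INPUT_DEVICE = auto()     # e.g. camera, sensors, microphone, tactile interface
    USER_ACTION = auto()      # e.g. a head nod or hand gesture
    EXTERNAL_DEVICE = auto()
    EVENT_FEED = auto()
    INTERNAL_APP = auto()
    EXTERNAL_APP = auto()


@dataclass(frozen=True)
class InputEvent:
    source: InputSource
    name: str


@dataclass
class CommandModeRouter:
    """Hypothetical lookup table from sensed inputs to command-and-control modes."""
    bindings: Dict[Tuple[InputSource, str], str] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)

    def bind(self, source: InputSource, name: str, mode: str) -> None:
        self.bindings[(source, name)] = mode

    def dispatch(self, event: InputEvent) -> Optional[str]:
        """Return the mode bound to this event, or None if the event is unbound."""
        mode = self.bindings.get((event.source, event.name))
        if mode is not None:
            self.history.append(mode)
        return mode


router = CommandModeRouter()
router.bind(InputSource.USER_ACTION, "nod", "select")
router.bind(InputSource.EVENT_FEED, "new_message", "notify")
```

A table-driven design keeps the mapping between inputs and modes in data, so new input sources or modes can be added without changing the dispatch logic.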

- FIG. 1 depicts an illustrative embodiment of the optical arrangement.

- FIG. 2 depicts an RGB LED projector.

- FIG. 3 depicts the projector in use.

- FIG. 4 depicts an embodiment of the waveguide and correction lens disposed in a frame.

- FIG. 5 depicts a design for a waveguide eyepiece.

- FIG. 6 depicts an embodiment of the eyepiece with a see-through lens.

- FIG. 7 depicts an embodiment of the eyepiece with a see-through lens.

- FIGS. 8A-C depict embodiments of the eyepiece arranged in a flip-up/flip-down configuration.

- FIGS. 8D-E depict embodiments of snap-fit elements of a secondary optic.

- FIG. 8F depicts embodiments of flip-up/flip-down electro-optics modules.

- FIG. 9 depicts an electrochromic layer of the eyepiece.

- FIG. 10 depicts the advantages of the eyepiece in real-time image enhancement, keystone correction, and virtual perspective correction.

- FIG. 11 depicts a plot of responsivity versus wavelength for three substrates.

- FIG. 12 illustrates the performance of the black silicon sensor.

- FIG. 13A depicts an incumbent night vision system.

- FIG. 13B depicts the night vision system of the present disclosure.

- FIG. 13C illustrates the difference in responsivity between the two.

- FIG. 14 depicts a tactile interface of the eyepiece.

- FIG. 14A depicts motions in an embodiment of the eyepiece featuring nod control.

- FIG. 15 depicts a ring that controls the eyepiece.

- FIG. 15AA depicts a ring with an integrated camera that controls the eyepiece; in an embodiment, the camera may allow the user to provide a video image of themselves as part of a videoconference.

- FIG. 15A depicts hand mounted sensors in an embodiment of a virtual mouse.

- FIG. 15B depicts a facial actuation sensor as mounted on the eyepiece.

- FIG. 15C depicts a hand pointing control of the eyepiece.

- FIG. 15D depicts a hand pointing control of the eyepiece.

- FIG. 15E depicts an example of eye tracking control.

- FIG. 15F depicts a hand positioning control of the eyepiece.

- FIG. 16 depicts a location-based application mode of the eyepiece.

- FIG. 17 shows the difference in image quality between A) a flexible platform of uncooled CMOS image sensors capable of VIS/NIR/SWIR imaging and B) an image-intensified night vision system.

- FIG. 18 depicts an augmented reality-enabled custom billboard.

- FIG. 19 depicts an augmented reality-enabled custom advertisement.

- FIG. 20 depicts an augmented reality-enabled custom artwork.

- FIG. 20A depicts a method for posting messages to be transmitted when a viewer reaches a certain location.

- FIG. 21 depicts an alternative arrangement of the eyepiece optics and electronics.

- FIG. 22 depicts an alternative arrangement of the eyepiece optics and electronics.

- FIG. 22A depicts the eyepiece with an example of eyeglow.

- FIG. 22B depicts a cross-section of the eyepiece with a light control element for reducing eyeglow.

- FIG. 23 depicts an alternative arrangement of the eyepiece optics and electronics.

- FIG. 24 depicts a lock position of a virtual keyboard.

- FIG. 24A depicts an embodiment of a virtually projected image on a part of the human body.

- FIG. 25 depicts a detailed view of the projector.

- FIG. 26 depicts a detailed view of the RGB LED module.

- FIG. 27 depicts a gaming network.

- FIG. 28 depicts a method for gaming using augmented reality glasses.

- FIG. 29 depicts an exemplary electronic circuit diagram for an augmented reality eyepiece.

- FIG. 29A depicts a control circuit for eye-tracking control of an external device.

- FIG. 29B depicts a communication network among users of augmented reality eyepieces.

- FIG. 30 depicts partial image removal by the eyepiece.

- FIG. 31 depicts a flowchart for a method of identifying a person based on speech of the person as captured by microphones of the augmented reality device.

- FIG. 32 depicts a typical camera for use in video calling or conferencing.

- FIG. 33 illustrates an embodiment of a block diagram of a video calling camera.

- FIG. 34 depicts embodiments of the eyepiece for optical or digital stabilization.

- FIG. 35 depicts an embodiment of a classic Cassegrain configuration.

- FIG. 36 depicts the configuration of the micro-Cassegrain telescoping folded optic camera.

- FIG. 37 depicts a swipe process with a virtual keyboard.

- FIG. 38 depicts a target marker process for a virtual keyboard.

- FIG. 38A depicts an embodiment of a visual word translator.

- FIG. 39 illustrates glasses for biometric data capture according to an embodiment.

- FIG. 40 illustrates iris recognition using the biometric data capture glasses according to an embodiment.

- FIG. 41 depicts face and iris recognition according to an embodiment.

- FIG. 42 illustrates use of dual omni-microphones according to an embodiment.

- FIG. 43 depicts the directionality improvements with multiple microphones.

- FIG. 44 shows the use of adaptive arrays to steer the audio capture facility according to an embodiment.

- FIG. 45 shows the mosaic finger and palm enrollment system according to an embodiment.

- FIG. 46 illustrates the traditional optical approach used by other finger and palm print systems.

- FIG. 47 shows the approach used by the mosaic sensor according to an embodiment.

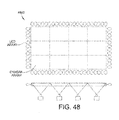

- FIG. 48 depicts the device layout of the mosaic sensor according to an embodiment.

- FIG. 49 illustrates the camera field of view and number of cameras used in a mosaic sensor according to another embodiment.

- FIG. 50 shows the bio-phone and tactical computer according to an embodiment.

- FIG. 51 shows the use of the bio-phone and tactical computer in capturing latent fingerprints and palm prints according to an embodiment.

- FIG. 52 illustrates a typical DOMEX collection.

- FIG. 53 shows the relationship between the biometric images captured using the bio-phone and tactical computer and a biometric watch list according to an embodiment.

- FIG. 54 illustrates a pocket bio-kit according to an embodiment.

- FIG. 55 shows the components of the pocket bio-kit according to an embodiment.

- FIG. 56 depicts the fingerprint, palm print, geo-location and POI enrollment device according to an embodiment.

- FIG. 57 shows a system for multi-modal biometric collection, identification, geo-location, and POI enrollment according to an embodiment.

- FIG. 58 illustrates a fingerprint, palm print, geo-location, and POI enrollment forearm wearable device according to an embodiment.

- FIG. 59 shows a mobile folding biometric enrollment kit according to an embodiment.

- FIG. 60 is a high level system diagram of a biometric enrollment kit according to an embodiment.

- FIG. 61 is a system diagram of a folding biometric enrollment device according to an embodiment.

- FIG. 62 shows a thin-film finger and palm print sensor according to an embodiment.

- FIG. 63 shows a biometric collection device for finger, palm, and enrollment data collection according to an embodiment.

- FIG. 64 illustrates capture of a two stage palm print according to an embodiment.

- FIG. 65 illustrates capture of a fingertip tap according to an embodiment.

- FIG. 66 illustrates capture of a slap and roll print according to an embodiment.

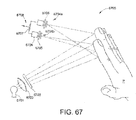

- FIG. 67 depicts a system for taking contactless fingerprints, palmprints or other biometric prints.

- FIG. 68 depicts a process for taking contactless fingerprints, palmprints or other biometric prints.

- FIG. 69 depicts an embodiment of a watch controller.

- FIGS. 70A-D depict embodiments of cases for the eyepiece, including capabilities for charging and an integrated display.

- FIG. 71 depicts an embodiment of a ground stake data system.

- FIG. 72 depicts a block diagram of a control mapping system including the eyepiece.

- FIG. 73 depicts a biometric flashlight.

- FIG. 74 depicts a helmet-mounted version of the eyepiece.

- FIG. 75 depicts an embodiment of situational awareness glasses.

- FIG. 76A depicts an assembled 360° imager and FIG. 76B depicts a cutaway view of the 360° imager.

- FIG. 77 depicts an exploded view of the multi-coincident view camera.

- FIG. 78 depicts a flight eye.

- FIG. 79 depicts an exploded top view of the eyepiece.

- FIG. 80 depicts an exploded electro-optic assembly.

- FIG. 81 depicts an exploded view of the shaft of the electro-optic assembly.

- FIG. 82 depicts an embodiment of an optical display system utilizing a planar illumination facility with a reflective display.

- FIG. 83 depicts a structural embodiment of a planar illumination optical system.

- FIG. 84 depicts an embodiment assembly of a planar illumination facility and a reflective display with laser speckle suppression components.

- FIG. 85 depicts an embodiment of a planar illumination facility with grooved features for redirecting light.

- FIG. 86 depicts an embodiment of a planar illumination facility with grooved features and ‘anti-grooved’ features paired to reduce image aberrations.

- FIG. 87 depicts an embodiment of a planar illumination facility fabricated from a laminate structure.

- FIG. 88 depicts an embodiment of a planar illumination facility with a wedged optic assembly for redirecting light.

- FIG. 89 depicts a block diagram of an illumination module, according to an embodiment of the invention.

- FIG. 90 depicts a block diagram of an optical frequency converter, according to an embodiment of the invention.

- FIG. 91 depicts a block diagram of a laser illumination module, according to an embodiment of the invention.

- FIG. 92 depicts a block diagram of a laser illumination system, according to another embodiment of the invention.

- FIG. 93 depicts a block diagram of an imaging system, according to an embodiment of the invention.

- FIGS. 94A & B depict a lens with a photochromic element and a heater element in a top down and side view, respectively.

- FIG. 95 depicts an embodiment of an LCoS front light design.

- FIG. 96 depicts optically bonded prisms with a polarizer.

- FIG. 97 depicts optically bonded prisms with a polarizer.

- FIG. 98 depicts multiple embodiments of an LCoS front light design.

- FIG. 99 depicts a wedge plus OBS overlaid on an LCoS.

- FIG. 100 depicts two versions of a wedge.

- FIG. 101 depicts a curved PBS film over the LCoS chip.

- FIG. 102 depicts an embodiment of an optical assembly.

- FIG. 103 depicts an embodiment of an image source.

- FIG. 104 depicts an embodiment of an image source.

- FIG. 105 depicts embodiments of image sources.

- FIG. 106 depicts a top-level block diagram showing software application facilities and markets in conjunction with functional and control aspects of the eyepiece in an embodiment of the present invention.

- FIG. 107 depicts a functional block diagram of the eyepiece application development environment in an embodiment of the present invention.

- FIG. 108 depicts a platform elements development stack in relation to software applications for the eyepiece in an embodiment of the present invention.

- FIG. 109 is an illustration of a head mounted display with see-through capability according to an embodiment of the present invention.

- FIG. 110 is an illustration of a view of an unlabeled scene as viewed through the head mounted display depicted in FIG. 109.

- FIG. 111 is an illustration of a view of the scene of FIG. 110 with 2D overlaid labels.

- FIG. 112 is an illustration of 3D labels of FIG. 111 as displayed to the viewer's left eye.

- FIG. 113 is an illustration of 3D labels of FIG. 111 as displayed to the viewer's right eye.

- FIG. 114 is an illustration of the left and right 3D labels of FIG. 111 overlaid on one another to show the disparity.

- FIG. 115 is an illustration of the view of a scene of FIG. 110 with the 3D labels.

- FIG. 116 is an illustration of stereo images captured of the scene of FIG. 110.

- FIG. 118 is an illustration of the scene of FIG. 110 showing the overlaid 3D labels.

- FIG. 119 is a flowchart for a depth cue method embodiment of the present invention for providing 3D labels.

- FIG. 120 is a flowchart for another depth cue method embodiment of the present invention for providing 3D labels.

- FIG. 121 is a flowchart for yet another depth cue method embodiment of the present invention for providing 3D labels.

- FIG. 122 is a flowchart for still another depth cue method embodiment of the present invention for providing 3D labels.

- The present disclosure relates to eyepiece electro-optics.

- The eyepiece may include projection optics suitable for projecting an image onto a see-through or translucent lens, enabling the wearer of the eyepiece to view the surrounding environment as well as the displayed image.

- The projection optics, also known as a projector, may include an RGB LED module that uses field sequential color. With field sequential color, a single full-color image may be broken down into color fields based on the primary colors red, green, and blue, and each field may be imaged individually by an LCoS (liquid crystal on silicon) optical display 210. As each color field is imaged by the optical display 210, the corresponding LED color is turned on. When these color fields are displayed in rapid sequence, a full-color image may be seen.
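The field sequential color scheme can be sketched in a few lines of Python. The example splits a frame into red, green, and blue fields, simulates lighting only the matching LED for each field, and computes how fast a single panel must switch fields. The function names are hypothetical, the 60 Hz frame rate in the usage note is an assumed figure rather than one given in the source, and the timing ignores panel settling and LED switching overhead.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]          # (red, green, blue) levels
Frame = List[List[Pixel]]
Field = List[List[int]]


def split_color_fields(frame: Frame) -> List[Tuple[str, Field]]:
    """Break one full-color frame into red, green, and blue color fields."""
    fields = []
    for index, led_color in enumerate(("red", "green", "blue")):
        field = [[pixel[index] for pixel in row] for row in frame]
        fields.append((led_color, field))
    return fields


def field_timing(frame_rate_hz: float, fields_per_frame: int = 3) -> Tuple[float, float]:
    """Return (field rate in Hz, time slot per field in milliseconds).

    A single panel must show every color field within one frame period,
    so the field rate is the frame rate multiplied by the number of fields.
    """
    field_rate_hz = frame_rate_hz * fields_per_frame
    return field_rate_hz, 1000.0 / field_rate_hz


def display_sequence(frame: Frame) -> List[str]:
    """Simulate one frame: load each field, then light only the matching LED."""
    steps = []
    for led_color, field in split_color_fields(frame):
        # Hardware would write `field` to the LCoS panel at this point.
        steps.append(f"load {led_color} field, {led_color} LED on")
    return steps
```

For example, `field_timing(60)` gives a field rate of 180 Hz, or roughly 5.6 ms per color field, which is why a single-panel field sequential display needs a fast-switching panel.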

- The resulting projected image in the eyepiece can be corrected for chromatic aberration by shifting the red image relative to the blue and/or green images, and so on.
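A minimal sketch of this channel-shift correction, assuming the color misregistration can be approximated by a fixed whole-pixel offset per channel, with green used as the reference. Real lateral chromatic aberration usually grows toward the edge of the field, so a practical correction may need sub-pixel, position-dependent shifts. The function names and offsets are hypothetical.

```python
from typing import List, Tuple

Channel = List[List[int]]


def shift_channel(channel: Channel, dx: int, dy: int, fill: int = 0) -> Channel:
    """Translate one color channel by (dx, dy) whole pixels.

    Positive dx moves content right; positive dy moves content down.
    Pixels shifted in from outside the image are set to `fill`.
    """
    height = len(channel)
    width = len(channel[0]) if height else 0
    out = [[fill] * width for _ in range(height)]
    for y in range(height):
        src_y = y - dy
        if not 0 <= src_y < height:
            continue
        for x in range(width):
            src_x = x - dx
            if 0 <= src_x < width:
                out[y][x] = channel[src_y][src_x]
    return out


def correct_lateral_ca(red: Channel, green: Channel, blue: Channel,
                       red_offset: Tuple[int, int] = (0, 0),
                       blue_offset: Tuple[int, int] = (0, 0)) -> Tuple[Channel, Channel, Channel]:
    """Register red and blue to the green channel using fixed per-channel offsets."""
    return shift_channel(red, *red_offset), green, shift_channel(blue, *blue_offset)
```

In practice the offsets would come from a calibration of the optics, for example by measuring where a test pattern's red and blue edges land relative to its green edges.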

- The image may then be reflected into a two-surface freeform waveguide, in which the image light undergoes total internal reflection (TIR) until it reaches the active viewing area of the lens, where the user sees the image.
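TIR at each waveguide surface occurs only when a ray meets the surface at more than the critical angle, θc = arcsin(n2/n1), measured from the surface normal, where n1 is the refractive index of the waveguide material and n2 that of the surrounding medium. The sketch below checks that condition. The function names are hypothetical, and the index of 1.5 used in the usage note is an assumed, generic value for optical glass or polymer, not a figure from the disclosure.

```python
import math


def critical_angle_deg(n_core: float, n_outside: float = 1.0) -> float:
    """Critical angle (degrees from the surface normal) for TIR at a core/outside interface."""
    if n_outside >= n_core:
        raise ValueError("TIR requires the guiding medium to have the higher index")
    return math.degrees(math.asin(n_outside / n_core))


def is_totally_internally_reflected(incidence_deg: float, n_core: float,
                                    n_outside: float = 1.0) -> bool:
    """True if a ray striking the surface at `incidence_deg` from the normal undergoes TIR."""
    return incidence_deg > critical_angle_deg(n_core, n_outside)
```

With `n_core=1.5` in air, the critical angle is about 41.8°, so rays striking the surfaces at 45° from the normal stay trapped in the guide, while rays at 30° escape.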

- A processor, which may include a memory and an operating system, may control the LED light source and the optical display.

- The projector may also include, or be optically coupled to, a display coupling lens, a condenser lens, a polarizing beam splitter, and a field lens.

- Referring to FIG. 1, an illustrative embodiment of the augmented reality eyepiece 100 is depicted. It will be understood that some embodiments of the eyepiece 100 may not include all of the elements depicted in FIG. 1, while other embodiments may include additional or different elements.

- The optical elements may be embedded in the arm portions 122 of the frame 102 of the eyepiece. Images may be projected with a projector 108 onto at least one lens 104 disposed in an opening of the frame 102.

- One or more projectors 108, such as a nanoprojector, picoprojector, microprojector, femtoprojector, laser-based projector, holographic projector, and the like, may be disposed in an arm portion of the eyepiece frame 102. In embodiments, both lenses 104 are see-through or translucent, while in other embodiments only one lens 104 is translucent and the other is opaque or missing. In embodiments, more than one projector 108 may be included in the eyepiece 100.

- The eyepiece 100 may also include at least one articulating ear bud 120, a radio transceiver 118, and a heat sink 114 that absorbs heat from the LED light engine, keeping it cool and allowing it to operate at full brightness.

- TI OMAP4 open multimedia applications processors

- The projector 200 may be an RGB projector.

- The projector 200 may include a housing 202, a heatsink 204, and an RGB LED engine or module 206.

- The RGB LED engine 206 may include LEDs, dichroics, concentrators, and the like.

- A digital signal processor (DSP) (not shown) may convert the images or video stream into control signals, such as voltage drops/current modifications, pulse width modulation (PWM) signals, and the like, to control the intensity, duration, and mixing of the LED light.

- The DSP may control the duty cycle of each PWM signal to set the average current flowing through each LED, thereby generating a plurality of colors.
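The PWM relationship can be made concrete: with an ideal switched drive, the average LED current is the duty cycle times the peak current, I_avg = D × I_peak. The sketch below maps 8-bit levels for each LED to duty cycles, average currents, and on-times. The names are hypothetical, the peak current and PWM frequency in the usage note are assumed example values, and the sketch ignores display gamma, the nonlinear light output of real LEDs, and driver rise times.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class PwmChannel:
    duty_cycle: float          # fraction of each PWM period the LED is on (0.0 to 1.0)
    average_current_ma: float  # I_avg = duty_cycle * I_peak
    on_time_us: float          # on-time within one PWM period


def pwm_for_color(target: Dict[str, int], peak_current_ma: float,
                  pwm_frequency_hz: float, max_level: int = 255) -> Dict[str, PwmChannel]:
    """Map per-LED intensity levels to PWM settings with a linear duty-cycle model."""
    period_us = 1e6 / pwm_frequency_hz
    channels = {}
    for led, level in target.items():
        if not 0 <= level <= max_level:
            raise ValueError(f"{led} level {level} outside 0..{max_level}")
        duty = level / max_level
        channels[led] = PwmChannel(duty, duty * peak_current_ma, duty * period_us)
    return channels
```

For example, `pwm_for_color({"red": 255, "green": 0, "blue": 51}, peak_current_ma=20.0, pwm_frequency_hz=10_000)` drives red at 100% duty (20 mA average, on for the full 100 µs period) and blue at 20% duty (4 mA average), mixing a magenta-leaning color.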

- A still-image co-processor of the eyepiece may perform noise filtering, image/video stabilization, face detection, and image enhancement.
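The source does not say which noise filter the co-processor uses. As one common example, a 3×3 median filter replaces each pixel with the median of its neighborhood, which removes isolated impulse noise while preserving edges better than simple averaging. The sketch below, with a hypothetical function name, works on a grayscale image stored as nested lists; at the borders it uses only the neighbors that exist and takes the upper median of even-sized windows.

```python
from typing import List

Image = List[List[int]]


def median_filter_3x3(image: Image) -> Image:
    """Denoise a grayscale image with a 3x3 median filter."""
    height = len(image)
    width = len(image[0]) if height else 0
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Collect the in-bounds neighbors (including the pixel itself).
            window = sorted(
                image[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
            )
            out[y][x] = window[len(window) // 2]
    return out
```

A single bright "hot pixel" surrounded by uniform values is replaced by the surrounding value, since it can never be the middle element of a window it shares with at least three other pixels.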

- An audio back-end processor of the eyepiece may perform buffering, sample rate conversion (SRC), equalization, and the like.
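SRC (sample rate conversion) changes the sample rate of an audio stream, for example from 48 kHz to 44.1 kHz. The simplest form is linear interpolation between neighboring input samples, sketched below with a hypothetical function name. This is not necessarily what the eyepiece uses; production converters typically use polyphase filters with anti-aliasing instead.

```python
from typing import List, Sequence


def resample_linear(samples: Sequence[float], src_rate: int, dst_rate: int) -> List[float]:
    """Convert `samples` from src_rate to dst_rate by linear interpolation.

    No anti-aliasing filter is applied, so downsampling can alias.
    """
    if src_rate <= 0 or dst_rate <= 0:
        raise ValueError("sample rates must be positive")
    if not samples:
        return []
    n_out = (len(samples) * dst_rate) // src_rate
    step = src_rate / dst_rate  # input samples advanced per output sample
    out = []
    for i in range(n_out):
        pos = i * step
        left = int(pos)
        frac = pos - left
        right = min(left + 1, len(samples) - 1)  # clamp at the final sample
        out.append(samples[left] * (1.0 - frac) + samples[right] * frac)
    return out
```

Converting a 10 ms buffer of 480 samples at 48 kHz to 44.1 kHz with this function yields 441 samples, matching the ratio of the two rates.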

- The projector 200 may include an optical display 210, such as an LCoS display, and a number of components as shown.

- The projector 200 may be designed with a single-panel LCoS display 210; however, a three-panel display may be possible as well.

- The display 210 is illuminated sequentially with red, blue, and green light (i.e., field sequential color).

- The projector 200 may make use of alternative optical display technologies, such as a back-lit liquid crystal display (LCD), a front-lit LCD, a transflective LCD, an organic light emitting diode (OLED) display, a field emission display (FED), a ferroelectric LCoS (FLCoS) display, liquid crystal technologies mounted on sapphire, transparent liquid-crystal micro-displays, quantum-dot displays, and the like.

- the eyepiece may be powered by any power supply, such as battery power, solar power, line power, and the like.

- the power may be integrated in the frame 102 or disposed external to the eyepiece 100 and in electrical communication with the powered elements of the eyepiece 100 .

- a solar energy collector may be placed on the frame 102 , on a belt clip, and the like.

- Battery charging may occur using a wall charger or a car charger, on a belt clip, in an eyepiece case, and the like.

- the projector 200 may include the LED light engine 206, which may be mounted on heat sink 204 and holder 208 to ensure vibration-free mounting of the LED light engine, as well as a hollow tapered light tunnel 220, a diffuser 212, and a condenser lens 214.

- Hollow tunnel 220 helps to homogenize the rapidly-varying light from the RGB LED light engine.

- hollow light tunnel 220 includes a silvered coating.

- the diffuser lens 212 further homogenizes and mixes the light before the light is led to the condenser lens 214 .

- the light leaves the condenser lens 214 and then enters the polarizing beam splitter (PBS) 218 .

- the LED light is propagated and split into polarization components before it is refracted to a field lens 216 and the LCoS display 210 .

- the LCoS display provides the image for the microprojector.

- the image is then reflected from the LCoS display and back through the polarizing beam splitter, and then reflected ninety degrees.

- the image leaves microprojector 200 in about the middle of the microprojector.

- the light then is led to the coupling lens 504 , described below.

- FIG. 2 depicts an embodiment of the projector assembly along with other supporting figures as described herein, but one skilled in the art will appreciate that other configurations and optical technologies may be employed.

- transparent structures, such as sapphire substrates, may be utilized to implement the optical path of the projector system instead of reflective optics, thus potentially altering and/or eliminating optical components such as the beam splitter, redirecting mirror, and the like.

- the system may use a backlit configuration, where the LED RGB triplet may be the light source directed to pass light through the display. As a result, the backlight and the display may be mounted adjacent to the waveguide, or there may be collimating/directing optics after the display to get the light to properly enter the optic.

- the display may be mounted on the top, the side, and the like, of the waveguide.

- a small transparent display may be implemented with a silicon active backplane on a transparent substrate (e.g. sapphire), transparent electrodes controlled by the silicon active backplane, a liquid crystal material, a polarizer, and the like.

- the function of the polarizer may be to correct for depolarization of light passing through the system to improve the contrast of the display.

- the system may utilize a spatial light modulator that imposes some form of spatially varying modulation on the light path, such as a micro-channel spatial light modulator in which membrane-mirror light shutters are based on micro-electromechanical systems (MEMS).

- the system may also utilize other optical components, such as a tunable optical filter (e.g. with a deformable membrane actuator), a high angular deflection micro-mirror system, a discrete phase optical element, and the like.

- the eyepiece may utilize OLED displays, quantum-dot displays, and the like, that provide higher power efficiency, brighter displays, less costly components, and the like.

- display technologies such as OLED and quantum-dot displays may allow for flexible displays, thereby allowing greater packaging efficiency that may reduce the overall size of the eyepiece.

- OLED and quantum-dot display materials may be printed through stamping techniques onto plastic substrates, thus creating a flexible display component.

- the OLED (organic LED) display may be a flexible, low-power display that does not require backlighting. It can be curved, as in standard eyeglass lenses.

- the OLED display may be or provide for a transparent display.

- the eyepiece may utilize a planar illumination facility 8208 in association with a reflective display 8210 , where light source(s) 8202 are coupled 8204 with an edge of the planar illumination facility 8208 , and where the planar side of the planar illumination facility 8208 illuminates the reflective display 8210 that provides imaging of content to be presented to the eye 8222 of the wearer through transfer optics 8212 .

- the reflective display 8210 may be an LCD, an LCD on silicon (LCoS), cholesteric liquid crystal, guest-host liquid crystal, polymer dispersed liquid crystal, phase retardation liquid crystal, and the like, or other liquid crystal technology known in the art.

- the reflective display 8210 may be a bi-stable display, such as electrophoretic, electrofluidic, electrowetting, electrokinetic, cholesteric liquid crystal, and the like, or any other bi-stable display known to the art.

- the reflective display 8210 may also be a combination of an LCD technology and a bi-stable display technology.

- the coupling 8204 between a light source 8202 and the ‘edge’ of the planar illumination facility 8208 may be made through other surfaces of the planar illumination facility 8208 and then directed into the plane of the planar illumination facility 8208 , such as initially through the top surface, bottom surface, an angled surface, and the like. For example, light may enter the planar illumination facility from the top surface, but into a 45° facet such that the light is bent into the direction of the plane. In an alternate embodiment, this bending of direction of the light may be implemented with optical coatings.

- the light source 8202 may be an RGB LED source (e.g. an LED array) coupled 8204 directly to the edge of the planar illumination facility.

- the light entering the edge of the planar illumination facility may then be directed to the reflective display for imaging, such as described herein.

- Light may enter the reflective display to be imaged, and then redirected back through the planar illumination facility, such as with a reflecting surface at the backside of the reflective display.

- Light may then enter the transfer optics 8212 for directing the image to the eye 8222 of the wearer, such as through a lens 8214 , reflected by a beam splitter 8218 to a reflective surface 8220 , back through the beam splitter 8218 , and the like, to the eye 8222 .

- although the transfer optics 8212 have been described in terms of the lens 8214, beam splitter 8218, and reflective surface 8220, it will be appreciated by one skilled in the art that the transfer optics 8212 may include any known transfer optics configuration, including more complex or simpler configurations than described herein. For instance, with a different focal length in the field lens 8214, the beam splitter 8218 could bend the image directly towards the eye, thus eliminating the curved mirror 8220 and achieving a simpler design implementation.

- the light source 8202 may be an LED light source, a laser light source, a white light source, and the like, or any other light source known in the art.

- the light coupling mechanism 8204 may be direct coupling between the light source 8202 and the planar illumination facility 8208 , or through coupling medium or mechanism, such as a waveguide, fiber optic, light pipe, lens, and the like.

- the planar illumination facility 8208 may receive and redirect the light to a planar side of its structure through an interference grating, optical imperfections, scattering features, reflective surfaces, refractive elements, and the like.

- the planar illumination facility 8208 may be a cover glass over the reflective display 8210 , such as to reduce the combined thickness of the reflective display 8210 and the planar illumination facility 8208 .

- the planar illumination facility 8208 may further include a diffuser located on the side nearest the transfer optics 8212 , to expand the cone angle of the image light as it passes through the planar illumination facility 8208 to the transfer optics 8212 .

- the transfer optics 8212 may include a plurality of optical elements, such as lenses, mirrors, beam splitters, and the like, or any other optical transfer element known to the art.

- FIG. 83 presents an embodiment of an optical system 8302 for the eyepiece 8300 , where a planar illumination facility 8310 and reflective display 8308 mounted on substrate 8304 are shown interfacing through transfer optics 8212 including an initial diverging lens 8312 , a beam splitter 8314 , and a spherical mirror 8318 , which present the image to the eyebox 8320 where the wearer's eye receives the image.

- the flat beam splitter 8314 may be a wire-grid polarizer, a metal partially transmitting mirror coating, and the like.

- the spherical reflector 8318 may use a series of dielectric coatings to provide a partial mirror on its surface.

- the coating on the spherical mirror 8318 may be a thin metal coating to provide a partially transmitting mirror.

- FIG. 84 shows a planar illumination facility 8408 as part of a ferroelectric light-wave circuit (FLC) 8404, including a configuration that utilizes laser light sources 8402 coupling to the planar illumination facility 8408 through a waveguide wavelength converter 8420, 8422, where the planar illumination facility 8408 utilizes a grating technology to present the incoming light from the edge of the planar illumination facility to the planar surface facing the reflective display 8410. The image light from the reflective display 8410 is then redirected back through the planar illumination facility 8408, through a hole 8412 in the supporting structure 8414, to the transfer optics.

- the FLC also utilizes optical feedback to reduce speckle from the lasers, by broadening the laser spectrum as described in U.S. Pat. No. 7,265,896.

- the laser source 8402 is an IR laser source, where the FLC combines the beams into RGB, and back reflection causes the laser light to hop, producing a broadened bandwidth that provides the speckle suppression.

- the speckle suppression occurs in the wave-guides 8420 .

- the laser light from laser sources 8402 is coupled to the planar illumination facility 8408 through a multi-mode interference combiner (MMI) 8422 .

- Each laser source port is positioned such that the light traversing the MMI combiner superimposes on one output port to the planar illumination facility 8408 .

- the grating of the planar illumination facility 8408 produces uniform illumination for the reflective display.

- the grating elements may use a very fine pitch (e.g. interferometric) to produce the illumination to the reflective display, which is reflected back with very low scatter off the grating as the light passes through the planar illumination facility to the transfer optics. That is, light comes out aligned such that the grating is nearly fully transparent.

- the optical feedback utilized in this embodiment is due to the use of laser light sources, and when LEDs are utilized, speckle suppression may not be required because the LEDs are already broadband enough.

- An embodiment of an optics system utilizing a planar illumination facility 8502 that includes optical imperfections, in this case a ‘grooved’ configuration, is shown in FIG. 85.

- the light source(s) 8202 are coupled 8204 directly to the edge of the planar illumination facility 8502 .

- Light then travels through the planar illumination facility 8502 and encounters small grooves 8504 A-D in the planar illumination facility material, such as grooves in a piece of polymethyl methacrylate (PMMA).

- the grooves 8504 A-D may vary in spacing as they progress away from the input port (e.g. less ‘aggressive’ as they progress from 8504 A to 8504 D), vary in heights, vary in pitch, and the like.

- the light is then redirected by the grooves 8504 A-D to the reflective display 8210 as an incoherent array of light sources, producing fans of rays traveling to the reflective display 8210 , where the reflective display 8210 is far enough away from the grooves 8504 A-D to produce illumination patterns from each groove that overlap to provide uniform illumination of the area of the reflective display 8210 .

- there may be an optimum spacing for the grooves where the number of grooves per pixel on the reflective display 8210 may be increased to make the light more incoherent (more fill), but where in turn this produces lower contrast in the image provided to the wearer with more grooves to interfere within the provided image. While this embodiment has been discussed with respect to grooves, other optical imperfections, such as dots, are also possible.

- counter ridges 8604 may be applied into the grooves of the planar illumination facility, such as in a ‘snap-on’ ridge assembly 8602 .

- the counter ridges 8604 are positioned in the grooves 8504 A-D such that there is an air gap between the groove sidewalls and the counter ridge sidewalls. This air gap provides a defined change in refractive index as perceived by the light as it travels through the planar illumination facility that promotes a reflection of the light at the groove sidewall.

- the application of counter ridges 8604 reduces aberrations and deflections of the image light caused by the grooves.

- image light reflected from reflective display 8210 is refracted by the groove sidewall and as such it changes direction because of Snell's law.

- the refraction of the image light is compensated for and the image light is redirected toward the transfer optics 8214 .
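
Two effects are described above: reflection promoted by the air gap at a groove sidewall, and deflection of image light by Snell's law. Both can be checked numerically with a short sketch. It assumes a refractive index of about 1.49 for PMMA and 1.0 for air; these values and the helper names are illustrative assumptions, not parameters given in this description.

```python
import math
from typing import Optional

N_PMMA = 1.49  # assumed approximate refractive index of PMMA in the visible
N_AIR = 1.00


def refraction_angle_deg(theta_in_deg: float, n1: float, n2: float) -> Optional[float]:
    """Snell's law, n1*sin(t1) = n2*sin(t2), with angles measured from the surface normal.

    Returns None when no transmitted ray exists (total internal reflection).
    """
    s = n1 / n2 * math.sin(math.radians(theta_in_deg))
    if abs(s) > 1.0:
        return None
    return math.degrees(math.asin(s))


def critical_angle_deg(n1: float, n2: float) -> float:
    """Incidence angle above which light travelling in n1 is totally reflected at n2 (n2 < n1)."""
    if n2 >= n1:
        raise ValueError("total internal reflection requires n2 < n1")
    return math.degrees(math.asin(n2 / n1))


print(critical_angle_deg(N_PMMA, N_AIR))          # about 42 degrees
print(refraction_angle_deg(20.0, N_PMMA, N_AIR))  # bent away from the normal
print(refraction_angle_deg(60.0, N_PMMA, N_AIR))  # None: reflected at the air gap
```

Under these assumed indices, a ray meeting a PMMA/air sidewall at more than roughly 42° from the normal is totally reflected. Shallower rays are transmitted but deflected, which is the deflection the counter ridges are meant to compensate.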

- the planar illumination facility 8702 may be a laminate structure created out of a plurality of laminating layers 8704 wherein the laminating layers 8704 have alternating different refractive indices.

- the planar illumination facility 8702 may be cut across two diagonal planes 8708 of the laminated sheet. In this way, the grooved structure shown in FIGS. 85 and 86 is replaced with the laminate structure 8702 .

- the laminating sheet may be made of similar materials (PMMA 1 versus PMMA 2, where the difference is in the molecular weight of the PMMA). As long as the layers are fairly thick, they may exhibit no interference effects and act as a clear sheet of plastic. In the configuration shown, the diagonal laminations will redirect a small percentage of the light from light source 8202 to the reflective display, where the pitch of the lamination is selected to minimize aberration.
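
The reason two slightly different PMMA grades redirect only a small percentage of the light can be estimated with the normal-incidence Fresnel reflectance, R = ((n1 − n2)/(n1 + n2))², applied at each interface. The indices below are hypothetical, since the description gives none. Real diagonal laminations see oblique, polarization-dependent incidence, so this is only an order-of-magnitude sketch. Reflections are summed incoherently, matching the thick-layer, no-interference case described above.

```python
def fresnel_reflectance_normal(n1: float, n2: float) -> float:
    """Power reflectance at normal incidence between media of index n1 and n2."""
    return ((n1 - n2) / (n1 + n2)) ** 2


def fraction_redirected(n1: float, n2: float, interfaces: int) -> float:
    """Fraction removed from the direct beam after `interfaces` identical interfaces,
    treating reflections incoherently and ignoring multiple reflections."""
    return 1.0 - (1.0 - fresnel_reflectance_normal(n1, n2)) ** interfaces


# Hypothetical indices for two PMMA grades differing slightly in molecular weight.
r = fresnel_reflectance_normal(1.490, 1.495)
print(f"per-interface reflectance: {r:.2e}")
print(f"after 100 interfaces: {fraction_redirected(1.490, 1.495, 100):.2e}")
```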

- FIG. 88 shows a planar illumination facility 8802 utilizing a ‘wedge’ configuration.

- the light source(s) are coupled 8204 directly to the edge of the planar illumination facility 8802 .

- Light then travels through the planar illumination facility 8802 and encounters the slanted surface of the first wedge 8804 , where the light is redirected to the reflective display 8210 , and then back to the illumination facility 8802 and through both the first wedge 8804 and the second wedge 8812 and on to the transfer optics.

- multi-layer coatings 8808 and 8810 may be applied to the wedges to improve transfer properties.

- the wedge may be made from PMMA, with dimensions of ½ mm high and 10 mm wide, spanning the entire reflective display, with an angle of 1 to 1.5 degrees, and the like.

- the light may go through multiple reflections within the wedge 8804 before passing through the wedge 8804 to illuminate the reflective display 8210 .

- if the wedge 8804 is coated with highly reflecting coatings 8808 and 8810, the ray may make many reflections inside wedge 8804 before turning around and coming back out to the light source 8202 again.

- with multi-layer coatings 8808 and 8810 on the wedge 8804, such as with SiO2, niobium pentoxide, and the like, light may be directed to illuminate the reflective display 8210.

- the coatings 8808 and 8810 may be designed to reflect light at a specified wavelength over a wide range of angles, but transmit light within a certain range of angles (e.g. theta out angles). In embodiments, the design may allow the light to reflect within the wedge until it reaches a transmission window for presentation to the reflective display 8210 , where the coating is then configured to enable transmission.
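
The wedge behavior described above follows from a simple geometric property: rays reflect repeatedly until they reach a transmission window, or turn around if every angle is reflected. Each reflection off the inclined face changes the ray's angle to the flat-face normal by twice the wedge angle. The sketch below is an idealized two-dimensional ray model. It assumes an 80° starting angle, a 1.25° wedge angle (within the 1 to 1.5 degree range mentioned above), and a hypothetical coating that transmits below 30°. It ignores coating spectral behavior, absorption, and refraction at the exit.

```python
from typing import Optional, Tuple


def round_trips_until_window(theta0_deg: float, wedge_deg: float, window_deg: float,
                             max_trips: int = 10_000) -> Optional[Tuple[int, float]]:
    """Idealized 2-D wedge light guide.

    theta is the ray angle from the normal of the flat face. Each round trip (one reflection
    off each face) reduces theta by twice the wedge angle. The hypothetical coating is assumed
    to reflect for theta >= window_deg and to transmit for 0 <= theta < window_deg.
    Returns (round_trips, exit_angle_deg), or None if the ray turns around (theta < 0) first.
    """
    if wedge_deg <= 0:
        raise ValueError("wedge angle must be positive")
    theta = theta0_deg
    for trips in range(max_trips + 1):
        if 0.0 <= theta < window_deg:
            return trips, theta
        if theta < 0.0:
            return None  # ray reverses and heads back toward the light source
        theta -= 2.0 * wedge_deg
    return None


print(round_trips_until_window(80.0, 1.25, 30.0))  # (21, 27.5)
# With a coating that reflects at every angle (no window) the ray turns back instead:
print(round_trips_until_window(80.0, 1.25, 0.0))   # None
```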

- the angle of the wedge directs light from an LED lighting system to uniformly irradiate a reflective image display to produce an image that is reflected through the illumination system.

- the image provided to the wearer's eye has uniform brightness, with brightness variations determined only by the image content.

- the see-through optics system including a planar illumination facility 8208 and reflective display 8210 as described herein may be applied to any head-worn device known to the art, such as the eyepiece as described herein, but also helmets (e.g. military helmets, pilot helmets, bike helmets, motorcycle helmets, deep sea helmets, space helmets, and the like), ski goggles, eyewear, water diving masks, dust masks, respirators, HazMat headgear, virtual reality headgear, simulation devices, and the like.

- the optics system and protective covering associated with the head-worn device may incorporate the optics system in a plurality of ways, including inserting the optics system into the head-worn device in addition to optics and covering traditionally associated with the head-worn device.

- the optics system may be included in a ski goggle as a separate unit, providing the user with projected content, but where the optics system doesn't replace any component of the ski goggle, such as the see-through covering of the ski goggle (e.g. the clear or colored plastic covering that is exposed to the outside environment, keeping the wind and snow from the user's eyes).

- the optics system may replace, at least in part, certain optics traditionally associated with the head-worn gear.

- certain optical elements of the transfer optics 8212 may replace the outer lens of an eyewear application.

- a beam splitter, lens, or mirror of the transfer optics 8212 could replace the front lens in an eyewear application (e.g. sunglasses), for example if the curved reflection mirror 8220 is extended to cover the glasses, eliminating the need for a separate cover lens.

- the see-through optics system including a planar illumination facility 8208 and reflective display 8210 may be located in the head-worn gear so as to be unobtrusive to the function and aesthetic of the head-worn gear.

- the optics system may be located in proximity with an upper portion of the lens, such as in the upper portion of the frame.

- a planar illumination facility may provide light in a plurality of colors including Red-Green-Blue (RGB) light and/or white light.

- the light from the illumination module may be directed to a 3LCD system, a Digital Light Processing (DLP®) system, a Liquid Crystal on Silicon (LCoS) system, or other micro-display or micro-projection systems.

- the illumination module may use wavelength combining and nonlinear frequency conversion with nonlinear feedback to the source to provide a source of high-brightness, long-life, speckle-reduced or speckle-free light.

- the illumination modules described herein may be used in the optical assembly for the eyepiece 100 .

- One embodiment of the invention includes a system comprising a laser, LED, or other light source configured to produce an optical beam at a first wavelength; a planar lightwave circuit coupled to the light source and configured to guide the optical beam; and a waveguide optical frequency converter coupled to the planar lightwave circuit and configured to receive the optical beam at the first wavelength and convert it into an output optical beam at a second wavelength.

- the system may provide optically coupled feedback which is nonlinearly dependent on the power of the optical beam at the first wavelength to the laser.

- Another embodiment of the invention includes a system comprising a substrate, a light source, such as a laser diode array or one or more LEDs disposed on the substrate and configured to emit a plurality of optical beams at a first wavelength, a planar lightwave circuit disposed on the substrate and coupled to the light source, and configured to combine the plurality of optical beams and produce a combined optical beam at the first wavelength, and a nonlinear optical element disposed on the substrate and coupled to the planar lightwave circuit, and configured to convert the combined optical beam at the first wavelength into an optical beam at a second wavelength using nonlinear frequency conversion.

- the system may provide optically coupled feedback which is nonlinearly dependent on a power of the combined optical beam at the first wavelength to the laser diode array.

- Another embodiment of the invention includes a system comprising a light source, such as a semiconductor laser array or one or more LEDs configured to produce a plurality of optical beams at a first wavelength, an arrayed waveguide grating coupled to the light source and configured to combine the plurality of optical beams and output a combined optical beam at the first wavelength, a quasi-phase matching wavelength-converting waveguide coupled to the arrayed waveguide grating and configured to use second harmonic generation to produce an output optical beam at a second wavelength based on the combined optical beam at the first wavelength.

- Nonlinear feedback may reduce the sensitivity of the output power from the wavelength conversion device to variations in the nonlinear coefficients of the device, because the feedback power increases if a nonlinear coefficient decreases.

- the increased feedback tends to increase the power supplied to the wavelength conversion device, thus mitigating the effect of the reduced nonlinear coefficient.

- a processor 10902 may provide display sequential frames 10924 for image display through a display component 10928 (e.g. an LCoS display component) of the eyepiece 100 .

- the sequential frames 10924 may be produced with or without a display driver 10912 as an intermediate component between the processor 10902 and the display component 10928 .

- the processor 10902 may include a frame buffer 10904 and a display interface 10908 (e.g. a mobile industry processor interface (MIPI), with a display serial interface (DSI)).

- the display interface 10908 may provide per-pixel RGB data 10910 to the display driver 10912 as an intermediate component between the processor 10902 and the display component 10928 , where the display driver 10912 accepts the per-pixel RGB data 10910 and generates individual full frame display data for red 10918 , green 10920 , and blue 10922 , thus providing the display sequential frames 10924 to the display component 10928 .

- the display driver 10912 may provide timing signals, such as to synchronize the delivery of the full frames 10918, 10920, and 10922 as display sequential frames 10924 to the display component 10928.

- the display interface 10930 may be configured to eliminate the display driver 10912 by providing full frame display data for red 10934 , green 10938 , and blue 10940 directly to the display component 10928 as display sequential frames 10924 .

- timing signals 10932 may be provided directly from the display interface 10930 to the display component 10928. This configuration may provide significantly lower power consumption by removing the need for a display driver. Not only may this direct panel information remove the need for a driver, but it may also simplify the overall logic of the configuration and remove the redundant memory otherwise required to re-form panel information from pixels, generate pixel information from frames, and the like.
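
The reorganization performed by the display driver 10912, or by the display interface 10930 when the driver is eliminated, turns per-pixel RGB data into three full-frame color planes shown in sequence. The sketch below models a frame as nested lists of (r, g, b) tuples and assumes the frame period is divided equally among the three colors. The function names and the 60 Hz example rate are hypothetical and do not describe any MIPI DSI API.

```python
from typing import Iterator, List, Tuple

Pixel = Tuple[int, int, int]   # (r, g, b) values for one pixel
Frame = List[List[Pixel]]      # rows of pixels, as held in a frame buffer
Plane = List[List[int]]        # one full-frame color plane


def split_color_planes(frame: Frame) -> Tuple[Plane, Plane, Plane]:
    """Reorganize per-pixel RGB data into full-frame red, green and blue planes."""
    red = [[p[0] for p in row] for row in frame]
    green = [[p[1] for p in row] for row in frame]
    blue = [[p[2] for p in row] for row in frame]
    return red, green, blue


def sequential_schedule(frame: Frame,
                        frame_rate_hz: float = 60.0) -> Iterator[Tuple[str, Plane, float, float]]:
    """Yield (color, plane, start_s, duration_s) for each color field of one frame,
    assuming the frame period is split equally among red, green and blue."""
    field_s = 1.0 / (3.0 * frame_rate_hz)
    planes = split_color_planes(frame)
    for i, (color, plane) in enumerate(zip(("red", "green", "blue"), planes)):
        yield color, plane, i * field_s, field_s


for color, plane, start, duration in sequential_schedule([[(255, 0, 10), (0, 128, 20)]]):
    print(f"{color:5s} start={start * 1e3:.2f} ms for {duration * 1e3:.2f} ms: {plane}")
```

At a 60 Hz frame rate, each color field lasts 1/180 s. This is why field-sequential panels need a field rate three times the frame rate.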

- FIG. 89 is a block diagram of an illumination module, according to an embodiment of the invention.

- Illumination module 8900 comprises an optical source, a combiner, and an optical frequency converter, according to an embodiment of the invention.

- An optical source 8902 , 8904 emits optical radiation 8910 , 8914 toward an input port 8922 , 8924 of a combiner 8906 .

- Combiner 8906 has a combiner output port 8926 , which emits combined radiation 8918 .

- Combined radiation 8918 is received by an optical frequency converter 8908 , which provides output optical radiation 8928 .

- Optical frequency converter 8908 may also provide feedback radiation 8920 to combiner output port 8926 .

- Combiner 8906 splits feedback radiation 8920 to provide source feedback radiation 8912 emitted from input port 8922 and source feedback radiation 8916 emitted from input port 8924 .

- Source feedback radiation 8912 is received by optical source 8902.

- source feedback radiation 8916 is received by optical source 8904 .

- Optical radiation 8910 and source feedback radiation 8912 between optical source 8902 and combiner 8906 may propagate in any combination of free space and/or guiding structure (e.g., an optical fiber or any other optical waveguide).

- Optical radiation 8914 , source feedback radiation 8916 , combined radiation 8918 and feedback radiation 8920 may also propagate in any combination of free space and/or guiding structure.

- Suitable optical sources 8902 and 8904 include one or more LEDs or any source of optical radiation having an emission wavelength that is influenced by optical feedback.

- suitable sources include lasers, such as semiconductor diode lasers.

- optical sources 8902 and 8904 may be elements of an array of semiconductor lasers. Sources other than lasers may also be employed (e.g., an optical frequency converter may be used as a source). Although two sources are shown on FIG. 89 , the invention may also be practiced with more than two sources.

- Combiner 8906 is shown in general terms as a three port device having ports 8922 , 8924 , and 8926 . Although ports 8922 and 8924 are referred to as input ports, and port 8926 is referred to as a combiner output port, these ports may be bidirectional and may both receive and emit optical radiation as indicated above.

- Combiner 8906 may include a wavelength dispersive element and optical elements to define the ports. Suitable wavelength dispersive elements include arrayed waveguide gratings, reflective diffraction gratings, transmissive diffraction gratings, holographic optical elements, assemblies of wavelength-selective filters, and photonic band-gap structures. Thus, combiner 8906 may be a wavelength combiner, where each of the input ports i has a corresponding, non-overlapping input port wavelength range for efficient coupling to the combiner output port.
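
The defining property of the wavelength combiner is that each input port has its own non-overlapping wavelength range. That same property determines how feedback radiation returning through the combiner output port is split back among the sources, as described for source feedback radiation 8912 and 8916. A minimal data-structure sketch follows. The port labels reuse the reference numerals, but the wavelength ranges are made-up example values.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class InputPort:
    name: str
    lo_nm: float  # lower edge of the port's wavelength range (inclusive)
    hi_nm: float  # upper edge of the port's wavelength range (exclusive)


def check_non_overlapping(ports: List[InputPort]) -> None:
    """Raise ValueError if any two input-port wavelength ranges overlap."""
    ordered = sorted(ports, key=lambda p: p.lo_nm)
    for a, b in zip(ordered, ordered[1:]):
        if b.lo_nm < a.hi_nm:
            raise ValueError(f"ranges of ports {a.name} and {b.name} overlap")


def port_for(ports: List[InputPort], wavelength_nm: float) -> Optional[str]:
    """Input port that light at this wavelength couples to through the combiner output port.
    Backward-traveling light (e.g. feedback) at the same wavelength returns to the same port."""
    for p in ports:
        if p.lo_nm <= wavelength_nm < p.hi_nm:
            return p.name
    return None


ports = [InputPort("8922", 1060.0, 1062.0), InputPort("8924", 1062.0, 1064.0)]
check_non_overlapping(ports)
print(port_for(ports, 1061.0), port_for(ports, 1063.5))
```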

- Various optical processes may occur within optical frequency converter 8908, including but not limited to harmonic generation, sum frequency generation (SFG), second harmonic generation (SHG), difference frequency generation, parametric generation, parametric amplification, parametric oscillation, three-wave mixing, four-wave mixing, stimulated Raman scattering, stimulated Brillouin scattering, stimulated emission, acousto-optic frequency shifting, and/or electro-optic frequency shifting.

- optical frequency converter 8908 accepts optical inputs at an input set of optical wavelengths and provides an optical output at an output set of optical wavelengths, where the output set differs from the input set.
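
For the three-wave processes listed above, photon energy conservation fixes the output wavelength. In sum frequency generation, 1/λ3 = 1/λ1 + 1/λ2. Second harmonic generation is the special case λ1 = λ2, which halves the wavelength. Difference frequency generation uses 1/λ3 = 1/λ1 − 1/λ2. The sketch applies these relations to vacuum wavelengths in nanometres; the example wavelengths are illustrative and are not taken from this description.

```python
def sfg_nm(l1: float, l2: float) -> float:
    """Sum frequency generation: 1/l3 = 1/l1 + 1/l2."""
    return 1.0 / (1.0 / l1 + 1.0 / l2)


def shg_nm(l: float) -> float:
    """Second harmonic generation: the special case of SFG with l1 = l2, giving l / 2."""
    return sfg_nm(l, l)


def dfg_nm(l1: float, l2: float) -> float:
    """Difference frequency generation: 1/l3 = 1/l1 - 1/l2 (l1 is the shorter wavelength)."""
    if not l1 < l2:
        raise ValueError("l1 must be the shorter (higher-frequency) wavelength")
    return 1.0 / (1.0 / l1 - 1.0 / l2)


print(shg_nm(1064.0))          # about 532 nm (green)
print(sfg_nm(1064.0, 1550.0))
print(dfg_nm(532.0, 1064.0))   # about 1064 nm
```

SHG of infrared light is one way an IR laser source can supply visible illumination, as in the FLC embodiment described earlier.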

- Optical frequency converter 8908 may include nonlinear optical materials such as lithium niobate, lithium tantalate, potassium titanyl phosphate, potassium niobate, quartz, silica, silicon oxynitride, gallium arsenide, lithium borate, and/or beta-barium borate.

- Optical interactions in optical frequency converter 8908 may occur in various structures including bulk structures, waveguides, quantum well structures, quantum wire structures, quantum dot structures, photonic bandgap structures, and/or multi-component waveguide structures.

- this nonlinear optical process is preferably phase-matched.

- phase-matching may be birefringent phase-matching or quasi-phase-matching.

- Quasi-phase matching may include methods disclosed in U.S. Pat. No. 7,116,468 to Miller, the disclosure of which is hereby incorporated by reference.
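
For quasi-phase-matched second harmonic generation, the first-order poling period that compensates the phase mismatch is Λ = λ / (2(n_2ω − n_ω)). Here λ is the fundamental vacuum wavelength, and n_ω and n_2ω are the refractive indices at the fundamental and second harmonic. The indices used below are approximate extraordinary indices of lithium niobate near 1064 nm and 532 nm. They are included only as assumed example values; real designs use temperature-dependent dispersion data. This sketch is not the method of the cited patent.

```python
def qpm_period_um(fundamental_um: float, n_fund: float, n_shg: float, order: int = 1) -> float:
    """Poling period for m-th order quasi-phase-matched SHG.

    Phase mismatch: dk = k(2w) - 2 k(w) = (4 * pi / lambda) * (n_2w - n_w),
    so the first-order period 2 * pi / dk = lambda / (2 * (n_2w - n_w)).
    """
    dn = n_shg - n_fund
    if dn <= 0:
        raise ValueError("expected normal dispersion: n at the harmonic exceeds n at the fundamental")
    return order * fundamental_um / (2.0 * dn)


def coherence_length_um(fundamental_um: float, n_fund: float, n_shg: float) -> float:
    """Distance over which the harmonic grows before it starts to reconvert: half the first-order period."""
    return qpm_period_um(fundamental_um, n_fund, n_shg) / 2.0


# Assumed approximate extraordinary indices of lithium niobate at 1064 nm and 532 nm.
N_1064, N_532 = 2.156, 2.234
print(qpm_period_um(1.064, N_1064, N_532))        # roughly 6.8 micrometres
print(coherence_length_um(1.064, N_1064, N_532))
```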

- Optical frequency converter 8908 may also include various elements to improve its operation, such as a wavelength selective reflector for wavelength selective output coupling, a wavelength selective reflector for wavelength selective resonance, and/or a wavelength selective loss element for controlling the spectral response of the converter.

- multiple illumination modules as described in FIG. 89 may be associated to form a compound illumination module.

- FIG. 90 is a block diagram of an optical frequency converter, according to an embodiment of the invention.

- FIG. 90 illustrates how feedback radiation 8920 is provided by an exemplary optical frequency converter 8908 which provides parametric frequency conversion.

- Combined radiation 8918 provides forward radiation 9002 within optical frequency converter 8908 that propagates to the right on FIG. 90.

- parametric radiation 9004 is generated within optical frequency converter 8908, also propagating to the right on FIG. 90.

- there is a net power transfer from forward radiation 9002 to parametric radiation 9004 as the interaction proceeds (i.e., as the radiation propagates to the right in this example).

- a reflector 9008, which may have wavelength-dependent transmittance, is disposed in optical frequency converter 8908 to reflect (or partially reflect) forward radiation 9002 to provide backward radiation 9006; alternatively, the reflector may be disposed externally to optical frequency converter 8908 after endface 9010.

- Reflector 9008 may be a grating, an internal interface, a coated or uncoated endface, or any combination thereof. The preferred level of reflectivity for reflector 9008 is greater than 90%.

- a reflector located at an input interface 9012 provides purely linear feedback (i.e., feedback that does not depend on the process efficiency).

- a reflector located at an endface 9010 provides a maximum degree of nonlinear feedback, since the dependence of forward power on process efficiency is maximized at the output interface (assuming a phase-matched parametric interaction).
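
A toy model makes the linear/nonlinear distinction concrete. It assumes the converted fraction of fundamental power grows linearly with input power (η·P, as in low-conversion SHG, capped at 1). It also assumes the reflector returns a fraction R of whatever fundamental power reaches it, and it ignores conversion of the backward-traveling light. A reflector at the input interface sees the full input power. A reflector at the endface sees only the unconverted remainder, so its feedback rises when η falls, as stated above for a reduced nonlinear coefficient. The function name and numbers are hypothetical; this is not the device model of the described embodiments.

```python
def feedback_power_w(p_in_w: float, eta_per_w: float, reflectivity: float, at_endface: bool) -> float:
    """Fundamental power a reflector returns toward the source in a toy converter model.

    Assumptions (hypothetical): the converted fraction is min(1, eta_per_w * p_in_w), as in
    low-conversion SHG; conversion of the backward-traveling light is ignored.
    at_endface=False: reflector at input interface 9012, sees the full input (linear feedback).
    at_endface=True: reflector at endface 9010, sees only unconverted light (nonlinear feedback).
    """
    if not at_endface:
        return reflectivity * p_in_w
    converted_fraction = min(1.0, eta_per_w * p_in_w)
    return reflectivity * p_in_w * (1.0 - converted_fraction)


for eta in (0.5, 0.3):  # a lower eta stands in for a reduced nonlinear coefficient
    print(eta,
          feedback_power_w(1.0, eta, 0.9, at_endface=False),
          feedback_power_w(1.0, eta, 0.9, at_endface=True))
```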

- FIG. 91 is a block diagram of a laser illumination module, according to an embodiment of the invention. While lasers are used in this embodiment, it is understood that other light sources, such as LEDs, may also be used.

- Laser illumination module 9100 comprises an array of diode lasers 9102, waveguides 9104 and 9106, star couplers 9108 and 9110, and optical frequency converter 9114.

- An array of diode lasers 9102 has lasing elements coupled to waveguides 9104, which act as input ports (such as ports 8922 and 8924 in FIG. 89) to a planar waveguide star coupler 9108.

- Star coupler 9108 is coupled to another planar waveguide star coupler 9110 by waveguides 9106 which have different lengths.

- The combination of star couplers 9108 and 9110 with waveguides 9106 may form an arrayed waveguide grating, which acts as a wavelength combiner (e.g., combiner 8906 in FIG. 89) providing combined radiation 8918 to waveguide 9112.
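The differing lengths of waveguides 9106 are what make the arrayed waveguide grating wavelength-selective. As a hedged illustration using the standard AWG design relations (not values taken from the patent), the path-length increment ΔL between adjacent arms sets the center wavelength through m·λc = n_eff·ΔL, and the free spectral range is approximately λc²/(n_g·ΔL). The effective index, group index, and grating order used below are hypothetical.

```python
def arm_length_increment_um(lambda_c_um: float, n_eff: float, order: int) -> float:
    """Path-length difference between adjacent arms: delta_L = m * lambda_c / n_eff."""
    return order * lambda_c_um / n_eff


def center_wavelength_um(delta_l_um: float, n_eff: float, order: int) -> float:
    """Inverse relation: lambda_c = n_eff * delta_L / m."""
    return n_eff * delta_l_um / order


def free_spectral_range_um(lambda_c_um: float, delta_l_um: float, n_g: float) -> float:
    """Approximate AWG free spectral range: lambda_c^2 / (n_g * delta_L)."""
    return lambda_c_um ** 2 / (n_g * delta_l_um)


if __name__ == "__main__":
    # Hypothetical waveguide parameters near the ~830 nm diode band.
    n_eff, n_g, m = 1.50, 1.55, 30
    dl = arm_length_increment_um(0.830, n_eff, m)
    fsr_nm = free_spectral_range_um(0.830, dl, n_g) * 1000.0
    print(f"delta_L = {dl:.2f} um, FSR = {fsr_nm:.1f} nm")
```

The same relations run in reverse explain the feedback path described later: each input port of the grating maps to a distinct wavelength, which is what lets each diode laser lock to its own channel.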

- Waveguide 9112 provides combined radiation 8918 to optical frequency converter 9114 .

- An optional reflector 9116 provides a back reflection of combined radiation 8918. As indicated above in connection with FIG. 90, this back reflection provides nonlinear feedback according to embodiments of the invention.

- One or more of the elements described with reference to FIG. 91 may be fabricated on a common substrate using planar coating methods and/or lithography methods to reduce cost, parts count and alignment requirements.

- A second waveguide may be disposed such that its core is in close proximity to the core of the waveguide in optical frequency converter 8908.

- This arrangement of waveguides functions as a directional coupler, such that radiation in the second waveguide may provide additional radiation in optical frequency converter 8908.

- Significant coupling of forward radiation 9002 into the second waveguide may be avoided by providing the additional radiation at wavelengths other than those of forward radiation 9002; alternatively, the additional radiation may be coupled into optical frequency converter 8908 at a location where forward radiation 9002 is depleted.
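Why a wavelength offset suppresses coupling can be seen from standard coupled-mode theory for two parallel waveguides (a textbook model assumed here, not one given in the patent): the fraction of power transferred after length z is κ²/(κ²+δ²)·sin²(√(κ²+δ²)·z), where κ is the coupling coefficient and δ is half the propagation-constant mismatch. Radiation at a different wavelength sees a larger δ, so little of it crosses over. The numeric values below are hypothetical.

```python
import math


def transferred_fraction(kappa: float, delta: float, z: float) -> float:
    """Fraction of power coupled from one waveguide into the other after length z.

    kappa: coupling coefficient (1/length).
    delta: half the propagation-constant mismatch, (beta1 - beta2) / 2 (1/length).
    Standard coupled-mode result for two parallel waveguides.
    """
    g = math.sqrt(kappa ** 2 + delta ** 2)
    if g == 0.0:
        return 0.0  # no coupling and no mismatch: nothing is transferred
    return (kappa ** 2 / g ** 2) * math.sin(g * z) ** 2


if __name__ == "__main__":
    kappa = 1.0                     # hypothetical coupling coefficient, 1/mm
    z_full = math.pi / (2 * kappa)  # length giving complete transfer when delta = 0
    for delta in (0.0, 1.0, 10.0):
        frac = transferred_fraction(kappa, delta, z_full)
        print(f"delta={delta:5.1f}  transferred={frac:.3f}")
```

The peak transfer is capped at κ²/(κ²+δ²), so a large mismatch keeps the two wavelengths largely in their own waveguides.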

- Traveling-wave feedback configurations may also be used.

- In such configurations, the feedback re-enters the gain medium at a location different from the one from which the input power is emitted.

- FIG. 92 is a block diagram of a compound laser illumination module, according to another embodiment of the invention.

- Compound laser illumination module 9200 comprises one or more laser illumination modules 9100 described with reference to FIG. 91 .

- Although FIG. 92 illustrates compound laser illumination module 9200 including three laser illumination modules 9100 for simplicity, compound laser illumination module 9200 may include more or fewer laser illumination modules 9100.

- An array of diode lasers 9210 may include one or more arrays of diode lasers 9102, which may be an array of laser diodes, a diode laser array, and/or a semiconductor laser array configured to emit optical radiation within the infrared spectrum, i.e., with a wavelength shorter than radio waves and longer than visible light.

- Laser array output waveguides 9220 couple to the diode lasers in the array of diode lasers 9210 and direct the outputs of the array of diode lasers 9210 to star couplers 9108A-C.

- The laser array output waveguides 9220, the arrayed waveguide gratings 9230, and the optical frequency converters 9114A-C may be fabricated on a single substrate using a planar lightwave circuit, and may comprise silicon oxynitride waveguides and/or lithium tantalate waveguides.

- Arrayed waveguide gratings 9230 comprise the star couplers 9108A-C, waveguides 9106A-C, and star couplers 9110A-C.

- Waveguides 9112A-C provide combined radiation to optical frequency converters 9114A-C and feedback radiation to star couplers 9110A-C, respectively.

- Optical frequency converters 9114 A-C may comprise nonlinear optical (NLO) elements, for example optical parametric oscillator elements and/or quasi-phase matched optical elements.


- Compound laser illumination module 9200 may produce output optical radiation at a plurality of wavelengths.

- The plurality of wavelengths may be within the visible spectrum, i.e., with wavelengths shorter than infrared and longer than ultraviolet light.

- Waveguide 9240A may similarly provide output optical radiation between about 450 nm and about 470 nm.

- Waveguide 9240B may provide output optical radiation between about 525 nm and about 545 nm.

- Waveguide 9240C may provide output optical radiation between about 615 nm and about 660 nm.

- These ranges of output optical radiation may again be selected to provide visible wavelengths (for example, blue, green and red wavelengths, respectively) that are pleasing to a human viewer, and may again be combined to produce a white light output.

- The waveguides 9240A-C may be fabricated on the same planar lightwave circuit as the laser array output waveguides 9220, the arrayed waveguide gratings 9230, and the optical frequency converters 9114A-C.

- The output optical radiation provided by each of the waveguides 9240A-C may provide an optical power in a range between approximately 1 watt and approximately 20 watts.

- The optical frequency converter 9114 may comprise a quasi-phase-matching wavelength-converting waveguide configured to perform second harmonic generation (SHG) on the combined radiation at a first wavelength and to generate radiation at a second wavelength.

- The quasi-phase-matching wavelength-converting waveguide may be configured to use the radiation at the second wavelength to pump an optical parametric oscillator integrated into the quasi-phase-matching wavelength-converting waveguide to produce radiation at a third wavelength, which may optionally differ from the second wavelength.

- The quasi-phase-matching wavelength-converting waveguide may also produce feedback radiation that propagates via waveguide 9112 through the arrayed waveguide grating 9230 to the array of diode lasers 9210, thereby enabling each laser in the array of diode lasers 9210 to operate at a distinct wavelength determined by a corresponding port on the arrayed waveguide grating.

- Compound laser illumination module 9200 may be configured using an array of diode lasers 9210 nominally operating at approximately 830 nm to generate output optical radiation in the visible spectrum corresponding to any of the colors red, green, or blue.
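The wavelength bookkeeping behind the SHG-plus-OPO scheme follows from photon-energy conservation: SHG halves the wavelength (λ₂ = λ₁/2), and an optical parametric oscillator pumped at λ₂ splits each pump photon into a signal at λ₃ and an idler, with 1/λ₂ = 1/λ₃ + 1/λ_idler. The sketch below assumes the ~830 nm diodes mentioned above and picks illustrative signal wavelengths inside the blue, green, and red output ranges; those signal choices and the helper names are assumptions, not the patent's design.

```python
def shg_wavelength_nm(fundamental_nm: float) -> float:
    """Second harmonic generation doubles photon energy, halving the wavelength."""
    return fundamental_nm / 2.0


def opo_idler_nm(pump_nm: float, signal_nm: float) -> float:
    """Idler wavelength from energy conservation: 1/pump = 1/signal + 1/idler."""
    if signal_nm <= pump_nm:
        raise ValueError("signal wavelength must be longer than the pump wavelength")
    return 1.0 / (1.0 / pump_nm - 1.0 / signal_nm)


if __name__ == "__main__":
    pump = shg_wavelength_nm(830.0)  # second wavelength, about 415 nm
    # Illustrative signal (third) wavelengths chosen inside the blue, green and red ranges.
    for color, signal in (("blue", 460.0), ("green", 535.0), ("red", 635.0)):
        idler = opo_idler_nm(pump, signal)
        print(f"{color:5s} signal {signal:.0f} nm -> idler {idler:.0f} nm")
```

In practice the quasi-phase-matching period selects which signal/idler pair is generated; this sketch only checks that a given pair is energetically consistent.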

- Compound laser illumination module 9200 may be optionally configured to directly illuminate spatial light modulators without intervening optics.

- Compound laser illumination module 9200 may be configured using an array of diode lasers 9210 nominally operating at a single first wavelength to simultaneously produce output optical radiation at multiple second wavelengths, such as wavelengths corresponding to the colors red, green, and blue. Each different second wavelength may be produced by an instance of laser illumination module 9100.

- Compound laser illumination module 9200 may be configured to produce diffraction-limited white light by combining output optical radiation at multiple second wavelengths into a single waveguide using, for example, wavelength-selective taps (not shown).

- The array of diode lasers 9210, laser array output waveguides 9220, arrayed waveguide gratings 9230, waveguides 9112, optical frequency converters 9114, and frequency converter output waveguides 9240 may be fabricated on a common substrate using fabrication processes such as coating and lithography.

- Beam shaping element 9250 is coupled to compound laser illumination module 9200 by waveguides 9240A-C, described with reference to FIG. 92.

- Beam shaping element 9250 may be disposed on the same substrate as compound laser illumination module 9200.

- The substrate may, for example, comprise a thermally conductive material, a semiconductor material, or a ceramic material.

- The substrate may comprise copper-tungsten, silicon, gallium arsenide, lithium tantalate, silicon oxynitride, and/or gallium nitride, and may be processed using semiconductor manufacturing processes including coating, lithography, etching, deposition, and implantation.

- Some of the described elements, such as the array of diode lasers 9210, laser array output waveguides 9220, arrayed waveguide gratings 9230, waveguides 9112, optical frequency converters 9114, waveguides 9240, beam shaping element 9250, and various related planar lightwave circuits, may be passively coupled and/or aligned and, in some embodiments, passively aligned by height on a common substrate.

- Each of the waveguides 9240A-C may couple to a different instance of beam shaping element 9250, rather than to a single element as shown.

- Beam shaping element 9250 may be configured to shape the output optical radiation from waveguides 9240 A-C into an approximately rectangular diffraction-limited optical beam, and may further configure the output optical radiation from waveguides 9240 A-C to have a brightness uniformity greater than approximately 95% across the approximately rectangular beam shape.

- Beam shaping element 9250 may comprise an aspheric lens, such as a “top-hat” microlens, a holographic element, or an optical grating.

- The diffraction-limited optical beam output by beam shaping element 9250 produces substantially reduced speckle or no speckle.

- The optical beam output by beam shaping element 9250 may provide an optical power in a range between approximately 1 watt and approximately 20 watts, and a substantially flat phase front.
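The description does not define how the greater-than-95% brightness uniformity is measured. As one hedged interpretation, the sketch below takes uniformity as the ratio of minimum to maximum intensity across the central region of a beam profile, and compares a Gaussian profile with a super-Gaussian ("top-hat") profile of the kind a top-hat microlens aims to produce. The super-Gaussian model, the order values, and the 50% evaluation window are assumptions chosen for illustration.

```python
import math


def super_gaussian(x: float, half_width: float, order: int) -> float:
    """Normalized 1-D super-Gaussian intensity; order=1 is an ordinary Gaussian."""
    return math.exp(-2.0 * (abs(x) / half_width) ** (2 * order))


def min_max_uniformity(half_width: float, order: int, window_fraction: float = 0.5,
                       samples: int = 201) -> float:
    """Min/max intensity ratio sampled over |x| <= window_fraction * half_width."""
    edge = window_fraction * half_width
    xs = [-edge + 2.0 * edge * i / (samples - 1) for i in range(samples)]
    values = [super_gaussian(x, half_width, order) for x in xs]
    return min(values) / max(values)


if __name__ == "__main__":
    for order in (1, 4, 10):
        u = min_max_uniformity(half_width=1.0, order=order)
        print(f"order {order:2d}: uniformity {u:.3f}  above 95%: {u > 0.95}")
```

For an approximately rectangular beam, the same check can be applied independently along each axis, since a separable flat-top profile is the product of two 1-D profiles.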

- FIG. 93 is a block diagram of an imaging system, according to an embodiment of the invention.

- Imaging system 9300 comprises light engine 9310, optical beams 9320, spatial light modulator 9330, modulated optical beams 9340, and projection lens 9350.

- The light engine 9310 may be a compound optical illumination module, such as multiple illumination modules described in FIG. 89, a compound laser illumination module 9200, described with reference to FIG. 92, or a laser illumination system 9300, described with reference to FIG. 93.

- Spatial light modulator 9330 may be a 3LCD system, a DLP system, an LCoS system, a transmissive liquid crystal display (e.g., transmissive LCoS), a liquid-crystal-on-silicon array, a grating-based light valve, or another micro-display, micro-projection system, or reflective display.

- The spatial light modulator 9330 may be configured to spatially modulate the optical beam 9320.

- The spatial light modulator 9330 may be coupled to electronic circuitry configured to cause the spatial light modulator 9330 to modulate a video image, such as may be displayed by a television or a computer monitor, onto the optical beam 9320 to produce a modulated optical beam 9340.

- Modulated optical beam 9340 may be output from the same side of the spatial light modulator on which it receives optical beam 9320, using optical principles of reflection.

- Alternatively, modulated optical beam 9340 may be output from the side of the spatial light modulator opposite to the side on which it receives optical beam 9320, using optical principles of transmission.
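As a simplified, hypothetical model of the spatial modulation described above, the sketch below treats the illumination as a per-pixel intensity map and the spatial light modulator as a per-pixel reflectance (reflective case) or transmittance (transmissive case) set from an 8-bit video frame. Real devices such as DLP or LCoS panels modulate differently (for example, by time-multiplexed mirror states or polarization rotation); the class name and the linear gray-level mapping are assumptions.

```python
from dataclasses import dataclass
from typing import List

Frame = List[List[float]]


@dataclass
class SpatialLightModulator:
    reflective: bool  # True: output on the input side; False: output on the opposite side

    def modulate(self, beam: Frame, video_frame: List[List[int]]) -> Frame:
        """Scale each illumination pixel by its 8-bit video level (linear mapping assumed)."""
        if len(beam) != len(video_frame) or any(
                len(b_row) != len(v_row) for b_row, v_row in zip(beam, video_frame)):
            raise ValueError("beam and video frame must have the same dimensions")
        return [[intensity * (level / 255.0) for intensity, level in zip(b_row, v_row)]
                for b_row, v_row in zip(beam, video_frame)]

    @property
    def output_side(self) -> str:
        if self.reflective:
            return "same side as input (reflection)"
        return "opposite side (transmission)"


if __name__ == "__main__":
    uniform_beam = [[1.0, 1.0], [1.0, 1.0]]  # flat-top illumination, arbitrary units
    frame = [[0, 255], [128, 64]]            # tiny hypothetical video frame
    slm = SpatialLightModulator(reflective=True)
    print(slm.output_side, slm.modulate(uniform_beam, frame))
```

A uniform input beam matters here: any nonuniformity in the illumination multiplies directly into the displayed image, which is one motivation for the flat-top beam shaping described earlier.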

- The modulated optical beam 9340 may optionally be coupled into a projection lens 9350.

- The projection lens 9350 is typically configured to project the modulated optical beam 9340 onto a display, such as a video display screen.

- A method of illuminating a video display may be performed using a compound illumination module, such as one comprising multiple illumination modules 8900, a laser illumination module 9100, a compound laser illumination module 9200, or an imaging system 9300.

- In the method, a diffraction-limited output optical beam is generated using a compound illumination module, laser illumination module 9100, compound laser illumination module 9200, or light engine 9310.

- The output optical beam is directed using a spatial light modulator, such as spatial light modulator 9330, and optionally projection lens 9350.

- The spatial light modulator may project an image onto a display, such as a video display screen.

- The illumination module may be configured to emit any number of wavelengths, including one, two, three, four, five, six, or more, with the wavelengths spaced apart by varying amounts and having equal or unequal power levels.

- An illumination module may be configured to emit a single wavelength per optical beam, or multiple wavelengths per optical beam.
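One way to capture the configuration space described above (number of wavelengths, unequal spacing, equal or unequal power, one or several wavelengths per beam) is a small data structure. The following sketch is a hypothetical representation, not a format defined in the patent; the example wavelengths are the centers of the blue, green, and red ranges given earlier, and the power levels are illustrative.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class EmissionLine:
    wavelength_nm: float
    power_w: float


@dataclass
class OutputBeam:
    lines: List[EmissionLine] = field(default_factory=list)  # one or more wavelengths per beam


@dataclass
class IlluminationModuleSpec:
    beams: List[OutputBeam]

    def wavelengths(self) -> List[float]:
        """All emitted wavelengths across all beams, in ascending order."""
        return sorted(line.wavelength_nm for beam in self.beams for line in beam.lines)

    def spacings_nm(self) -> List[float]:
        """Gaps between adjacent emitted wavelengths (need not be uniform)."""
        w = self.wavelengths()
        return [b - a for a, b in zip(w, w[1:])]

    def total_power_w(self) -> float:
        return sum(line.power_w for beam in self.beams for line in beam.lines)


if __name__ == "__main__":
    # Three single-wavelength beams (blue, green, red) with unequal, illustrative powers.
    spec = IlluminationModuleSpec(beams=[
        OutputBeam([EmissionLine(460.0, 3.0)]),
        OutputBeam([EmissionLine(535.0, 5.0)]),
        OutputBeam([EmissionLine(637.5, 4.0)]),
    ])
    print(spec.wavelengths(), spec.spacings_nm(), spec.total_power_w())
```

Grouping emission lines per beam keeps the single-wavelength and multi-wavelength cases in one representation.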

- An illumination module may also comprise additional components and functionality, including a polarization controller, a polarization rotator, a power supply, power circuitry such as power FETs, electronic control circuitry, a thermal management system, a heat pipe, and a safety interlock.

- An illumination module may be coupled to an optical fiber or to a lightguide made of, for example, glass (e.g., BK7).