WO2018168095A1 - Person trend recording device, person trend recording method, and program - Google Patents

Person trend recording device, person trend recording method, and program

- Publication number

- WO2018168095A1 (PCT/JP2017/042505)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- person

- trend

- index

- psychological

- physiological


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased


Classifications

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06Q—INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR ADMINISTRATIVE, COMMERCIAL, FINANCIAL, MANAGERIAL OR SUPERVISORY PURPOSES; SYSTEMS OR METHODS SPECIALLY ADAPTED FOR ADMINISTRATIVE, COMMERCIAL, FINANCIAL, MANAGERIAL OR SUPERVISORY PURPOSES, NOT OTHERWISE PROVIDED FOR

- G06Q30/00—Commerce

- G06Q30/02—Marketing; Price estimation or determination; Fundraising

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/20—Analysis of motion

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V40/00—Recognition of biometric, human-related or animal-related patterns in image or video data

- G06V40/10—Human or animal bodies, e.g. vehicle occupants or pedestrians; Body parts, e.g. hands

- G06V40/16—Human faces, e.g. facial parts, sketches or expressions

- G06V40/168—Feature extraction; Face representation

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V40/00—Recognition of biometric, human-related or animal-related patterns in image or video data

- G06V40/10—Human or animal bodies, e.g. vehicle occupants or pedestrians; Body parts, e.g. hands

- G06V40/18—Eye characteristics, e.g. of the iris

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V40/00—Recognition of biometric, human-related or animal-related patterns in image or video data

- G06V40/20—Movements or behaviour, e.g. gesture recognition

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V40/00—Recognition of biometric, human-related or animal-related patterns in image or video data

- G06V40/70—Multimodal biometrics, e.g. combining information from different biometric modalities

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N7/00—Television systems

- H04N7/18—Closed-circuit television [CCTV] systems, i.e. systems in which the video signal is not broadcast

Definitions

- The present invention relates to a technique for recording and analyzing a person's behavior and psychological changes.

- Patent Document 1 proposes a system that automatically records the various waiting times that arise in customer service, with the aim of improving customer satisfaction and making store operation more efficient.

- Specifically, events such as a customer entering, being seated, ordering, being served, and paying (leaving) are detected from the video of cameras installed in the store and from POS system information, and the waiting time to be shown to a seat, the order waiting time, and the serving waiting time are calculated automatically from the differences between these event times.

- This system can collect objective data without burdening the customer, but the items it measures are limited to waiting times, so it cannot adequately evaluate customer satisfaction, level of interest, or how they change.

- The present invention has been made in view of the above circumstances, and its purpose is to provide a technique for automatically collecting and recording data useful for objectively evaluating customer satisfaction and level of interest without burdening the customer.

- To achieve this purpose, the invention adopts a configuration in which an event that has occurred to a certain person and the temporal change in that person's physiological psychological index are detected by moving image analysis, and data associating the two is recorded.

- Specifically, a person trend recording device comprises: an image acquisition unit that acquires moving image data obtained by photographing a target area; a person detection unit that detects and tracks a person acting in the target area by analyzing the moving image data; an event detection unit that detects, by analyzing the moving image data, at least one event occurring to the person during the period in which the person is tracked; a physiological psychological index detection unit that detects the temporal change in the person's physiological psychological index during that period; and a trend record generation unit that generates, as the person's trend record data, data that temporally associates the at least one event that occurred to the person with the temporal change in the person's physiological psychological index.

- With this configuration, trend record data in which an event occurring to a person is temporally associated with the temporal change in that person's physiological psychological index is generated automatically.

- Customer satisfaction and level of interest can therefore be evaluated objectively from the trends in the physiological psychological index.

- In addition, the trend record data can be collected and recorded automatically without imposing any physical or psychological burden on the customer.

- It is preferable to further include a psychological state estimation unit that calculates a psychological state index, which estimates the person's psychological state based on the temporal change in the physiological psychological index, and for the trend record data to further include the psychological state index calculated by the psychological state estimation unit. With this configuration, an index representing the person's psychological state and its change over time are recorded automatically, so highly useful data can be obtained. In this case, the psychological state estimation unit preferably calculates the psychological state index by combining a plurality of physiological psychological indices, because combining several indices can be expected to improve the accuracy and reliability of the psychological state estimate.

- The plurality of physiological psychological indices may include an index that the person can consciously control and an index that the person cannot consciously control.

- Combining these two kinds of index allows the person's psychological state to be calculated objectively and reliably.

- Examples of psychological state indices include the satisfaction level (also simply called satisfaction), indicating how satisfied the person is; the interest level (also called interest or concentration), indicating how interested the person is; and the comfort level, indicating the degree of comfort or discomfort (also called pleasantness/unpleasantness).

- The apparatus preferably further comprises a satisfaction level estimation unit that estimates the person's satisfaction level based on the temporal change in the physiological psychological index, and the trend record data preferably further includes information on the satisfaction level estimated by the satisfaction level estimation unit.

- The apparatus may further include an interest level estimation unit that estimates the person's interest level in an object within the target area based on the temporal change in the physiological psychological index, and the trend record data may further include information on the interest level estimated by the interest level estimation unit. With these configurations, the temporal changes in customer satisfaction and interest level are recorded automatically, so highly useful data can be obtained.

- The physiological psychological index preferably includes at least one of facial expression, smile level, pulse rate per unit time, blink count per unit time, and gaze level, because these indices can be detected from moving or still images with a certain degree of reliability.

- The physiological psychological index may also include items such as pupil diameter, eye movement, respiratory rate per unit time, body temperature, sweating, blood flow, and blood pressure. It is more preferable to use a combination of multiple physiological psychological index items.

- The apparatus may further include an attribute estimation unit that estimates the person's attributes by analyzing the moving image data, and the trend record data may further include the attribute information estimated by the attribute estimation unit.

- This makes it possible to analyze differences in trends by attribute (for example, age, sex, and body type).

- The apparatus preferably further includes a trend analysis result display unit that displays trend analysis results based on the trend record data, and the trend analysis results preferably include information for displaying, on a common time axis, one or more events that occurred to the person during the person's tracking period and the temporal change in the person's physiological psychological index. Such a display makes it possible to visualize the causal relationship between the person's actions (events) and changes in the physiological psychological index.

- When the target area is divided into a plurality of sub-areas, the trend analysis results may include information for displaying statistical values calculated for each sub-area. This makes it possible to evaluate the popularity (customer satisfaction and level of interest) of each sub-area.

- The apparatus preferably further comprises an attribute estimation unit that estimates the person's attributes by analyzing the moving image data, the trend record data preferably further includes the attribute information estimated by the attribute estimation unit, and the trend analysis results preferably include information for displaying statistical values calculated for each attribute. This allows trends in satisfaction and interest level to be evaluated for each attribute.

- The trend analysis results preferably include information for displaying statistical values calculated for each event type. This allows the causal relationship between each event type and satisfaction and interest level to be evaluated.

- The present invention can be understood as a person trend recording device having at least part of the above configurations or functions.

- The present invention can also be understood as a person trend recording method or a control method for a person trend recording device that includes at least part of the above processing, as a program for causing a computer to execute these methods, or as a computer-readable recording medium on which such a program is recorded.

- FIG. 1 is a block diagram schematically showing a hardware configuration and a functional configuration of the person trend recording apparatus.

- FIG. 2 is a diagram showing an installation example of the person trend recording device.

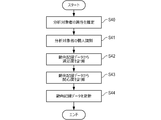

- FIG. 3 is a flowchart of the trend recording process.

- FIG. 4 is a flowchart of the trend analysis process.

- FIGS. 5A to 5C are diagrams illustrating an example of satisfaction estimation.

- FIG. 6 is an example of trend recording data.

- FIG. 7 is a display example of the trend analysis result.

- FIG. 8 is a display example of a trend analysis result.

- FIG. 9 is a display example of a trend analysis result.

- FIG. 10 is a display example of the trend analysis result.

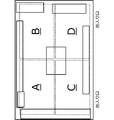

- FIG. 11 shows an example of area division.

- FIG. 12 is a display example of a trend analysis result.

- The present invention relates to a technique for automatically recording a person's trends (behavior and psychological changes). In particular, it detects events occurring to a person and the temporal change in that person's physiological psychological index by analyzing moving images, and records them in temporal association with each other. Such recorded data is useful for objectively evaluating customer satisfaction and interest and for analyzing the factors that influenced them.

- The technology according to the present invention can be suitably applied, for example, to systems that perform customer analysis, customer satisfaction surveys, marketing research, problem detection, and the like in stores or other places that provide services to customers.

- FIG. 1 is a block diagram schematically illustrating the hardware configuration and functional configuration of the person trend recording device 1.

- FIG. 2 is a diagram illustrating the store layout of a clothing store and an installation example of the imaging devices 10.

- The person trend recording device 1 has, as its main hardware components, imaging devices 10 installed in the store and an information processing device 11 that analyzes the moving image data captured by the imaging devices 10 and records customer trends.

- the imaging device 10 and the information processing device 11 are connected by wire or wireless.

- The imaging device 10 captures the target area 20 in the store to obtain moving image data.

- The target area 20 is the range in which customers are monitored, and it is set in advance based on the range in which customers can move about.

- As the imaging device 10, a monochrome or color camera can be used.

- A special camera such as a high-sensitivity (night vision) camera, an infrared camera, or a thermography camera may also be used as the imaging device 10.

- The imaging device 10 is installed on a ceiling, pillar, or the like so as to overlook the target area in the store. Although FIGS. 1 and 2 show two imaging devices 10, any number of imaging devices 10 may be used. The number and installation positions of the imaging devices 10 are preferably designed so that the target area 20 can be captured without blind spots.

- The information processing device 11 has a function of analyzing the moving image data captured by the imaging devices 10 and automatically detecting and recording the trends (behavior and psychological changes) of customers present in the target area 20.

- As specific functions, the information processing device 11 includes an image acquisition unit 110, a person detection unit 111, an event detection unit 112, a physiological psychological index detection unit 113, a satisfaction level estimation unit 114, an interest level estimation unit 115, an attribute estimation unit 116, a personal identification unit 117, a trend record generation unit 118, a trend analysis result display unit 119, and a storage unit 120.

- The information processing device 11 includes a CPU (processor), memory, storage (HDD, SSD, etc.), input devices (keyboard, mouse, touch panel, etc.), output devices (display, speaker, etc.), a communication interface, and the like.


- Each function of the information processing apparatus 11 described above is realized by the CPU executing a program stored in the storage or memory.

- The configuration of the information processing device 11 is not limited to this example. For example, processing may be distributed across multiple computers, some of the above functions may be executed by a cloud server, or some of the above functions may be executed by circuits such as an ASIC or FPGA.

- The image acquisition unit 110 has a function of acquiring, from the imaging devices 10, moving image data obtained by capturing the target area 20.

- The moving image data acquired by the image acquisition unit 110 is temporarily stored in memory or storage and used in subsequent processes such as person detection, trend recording, and trend analysis.

- The person detection unit 111 has a function of detecting and tracking a person acting in the target area 20 by analyzing the moving image data.

- When a new person is detected, the person detection unit 111 assigns a unique identifier (called a person ID) to the person and stores the person's position and features in association with the person ID. From the next frame onward, the person detection unit 111 tracks the person by searching for a person whose position and features are similar.
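The ID assignment and frame-to-frame matching described above can be sketched as a simple greedy tracker. This is a minimal sketch under assumptions not stated in the source: positions are 2-D image coordinates, features are fixed-length vectors compared by Euclidean distance, and the gating thresholds `max_distance` and `max_feature_dist` are hypothetical values.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class Track:
    person_id: int
    position: tuple[float, float]  # last known image position
    feature: list[float]           # last known appearance feature vector


@dataclass
class SimpleTracker:
    max_distance: float = 50.0      # hypothetical position gate (pixels)
    max_feature_dist: float = 0.5   # hypothetical appearance gate
    tracks: list[Track] = field(default_factory=list)
    next_id: int = 1

    def update(self, detections: list[tuple[tuple[float, float], list[float]]]) -> list[int]:
        """Assign a person ID to each (position, feature) detection in the new frame."""
        assigned: list[int] = []
        used: set[int] = set()
        for pos, feat in detections:
            best, best_cost = None, math.inf
            for track in self.tracks:
                if track.person_id in used:
                    continue  # each track matches at most one detection per frame
                d_pos = math.dist(pos, track.position)
                d_feat = math.dist(feat, track.feature)
                if d_pos <= self.max_distance and d_feat <= self.max_feature_dist:
                    cost = d_pos / self.max_distance + d_feat / self.max_feature_dist
                    if cost < best_cost:
                        best, best_cost = track, cost
            if best is None:
                # Unknown person: issue a new person ID and store position and feature.
                best = Track(self.next_id, pos, feat)
                self.tracks.append(best)
                self.next_id += 1
            else:
                # Known person: keep the ID and refresh the stored position and feature.
                best.position, best.feature = pos, feat
            used.add(best.person_id)
            assigned.append(best.person_id)
        return assigned
```

Greedy matching keeps the sketch short but depends on detection order; an optimal assignment (for example, the Hungarian method) would avoid that.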

- When multiple imaging devices 10 are used, the adjacency relationships between their imaging areas are defined in advance and the search range for a tracked person is extended to adjacent imaging areas, so that a person moving within the target area 20 can be tracked without interruption.
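The adjacency-based extension of the search range can be illustrated with a lookup table. The camera IDs and the `CAMERA_ADJACENCY` map are hypothetical; the sketch only shows which imaging areas would be searched for a person last seen by a given camera.

```python
# Hypothetical adjacency between camera imaging areas, defined in advance.
CAMERA_ADJACENCY: dict[str, set[str]] = {
    "cam1": {"cam2"},
    "cam2": {"cam1", "cam3"},
    "cam3": {"cam2"},
}


def search_cameras(last_seen_camera: str,
                   adjacency: dict[str, set[str]] = CAMERA_ADJACENCY) -> set[str]:
    """Cameras whose frames are searched for a tracked person: the camera where
    the person was last seen plus its predefined neighbours."""
    return {last_seen_camera} | adjacency.get(last_seen_camera, set())
```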

- For person detection, an algorithm that detects faces or human bodies with a classifier using Haar-like or HoG features, or with a Deep Learning-based classifier such as Faster R-CNN, can be suitably used.

- The event detection unit 112 has a function of detecting events that have occurred to a person during the person's tracking period by analyzing the moving image data.

- Here, an "event" refers to a factor that can affect a person's psychological state. Any event may be detected as long as it can be detected by image analysis.

- For a clothing store, for example, events such as "entered the store", "looked at a product", "was spoken to by a store clerk", "picked up a product", "tried something on", "visited the sale corner", "saw a new-product display", "it was crowded", "bumped into another person", "waited in line to pay", "paid", and "left the store" are assumed. The events to be detected are set in the event detection unit 112 in advance.

- Events such as "entered the store", "tried something on", "visited the sale corner", "paid", and "left the store" can be detected by recognizing where the customer is in the store. Events such as "looked at a product" and "saw a new-product display" can be detected by recognizing the customer's face orientation and line of sight from the image. Events such as "was spoken to by a store clerk", "picked up a product", and "waited in line to pay" can be detected by a classifier trained with Deep Learning on teacher data for these events. Events such as "bumped into another person" and "it was crowded" can be detected by recognizing the number and density of customers, the distances between them, and the like from the image.
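For the position-based events, one simple approach is to define zones on the floor plan and emit an event when a tracked person enters a zone; a crowding event can likewise be derived from the number of nearby people. This is a sketch under assumptions: the zone names, coordinates, `radius`, and `threshold` are hypothetical illustrations, not values from the source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    name: str  # event label emitted when a person enters this zone
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, p: tuple[float, float]) -> bool:
        x, y = p
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


# Hypothetical zones in floor-plan coordinates (metres).
ZONES = [
    Zone("visited the sale corner", 0, 0, 3, 3),
    Zone("tried something on", 8, 0, 10, 2),
    Zone("paid", 8, 6, 10, 8),
]


def zone_events(prev_pos, cur_pos, zones=ZONES) -> list[str]:
    """Events for zones the person entered between the previous and current frame."""
    return [z.name for z in zones
            if z.contains(cur_pos) and (prev_pos is None or not z.contains(prev_pos))]


def is_crowded(others, center, radius=1.5, threshold=4) -> bool:
    """Hypothetical 'it was crowded' rule: at least `threshold` other people
    within `radius` of the person at `center`."""
    cx, cy = center
    near = sum(1 for x, y in others if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2)
    return near >= threshold
```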

- The physiological psychological index detection unit 113 has a function of detecting the temporal change in the person's physiological psychological index during the person's tracking period by analyzing the moving image data.

- The manifestation of a person's psychological state or its change as a physiological phenomenon is called a physiological psychological reaction, and the measured, quantified value of that reaction is called a physiological psychological index. Because physiological psychological reactions appear unconsciously, observing physiological psychological indices makes it possible to grasp a person's true psychological state and its changes objectively.

- Physiological psychological indices include facial expression, smile level, pulse rate per unit time (hereinafter simply "pulse rate"), blink count per unit time (hereinafter simply "blink count"), gaze level (an index representing the proportion of time the line of sight is directed at a specific object), pupil diameter, eye movement, respiratory rate per unit time, body temperature, sweating, blood flow, blood pressure, and the like.


- One or more of these items may be used.

- For example, facial expression (five categories: joy, neutral, disgust, sadness, surprise), smile level [%], pulse rate [beats/min], instantane…

- Facial expression and smile level can be estimated from the shapes of the facial organs, the degree to which the eyes and mouth are open or closed, wrinkles, and the like.

- The pulse can be detected by capturing minute fluctuations in the green (G) value of the face (skin) region.
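The green-channel pulse measurement can be sketched as a frequency search over the per-frame mean green value of the face region. This assumes the face region has already been located and averaged per frame, and picks the candidate rate (in 1 beat/min steps over a hypothetical 40 to 180 beats/min band) with the largest discrete Fourier magnitude; practical pipelines typically add band-pass filtering and motion compensation, which are omitted here.

```python
import cmath
import math


def estimate_pulse_rate(green_means: list[float], fps: float,
                        bpm_range: tuple[int, int] = (40, 180)) -> int:
    """Estimate pulse rate [beats/min] from per-frame mean green values of the face."""
    n = len(green_means)
    mean = sum(green_means) / n
    x = [g - mean for g in green_means]  # remove the DC (average brightness) component
    best_bpm, best_power = bpm_range[0], -1.0
    for bpm in range(bpm_range[0], bpm_range[1] + 1):
        f = bpm / 60.0  # candidate frequency in Hz
        s = sum(v * cmath.exp(-2j * math.pi * f * k / fps) for k, v in enumerate(x))
        if abs(s) > best_power:
            best_bpm, best_power = bpm, abs(s)
    return best_bpm
```

For example, 10 seconds of a 1.2 Hz fluctuation sampled at 30 frames/s yields 72 beats/min.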

- Blinks can be detected by determining whether the eyes are open or closed based on the shape of the eyes, the presence or absence of the dark iris, and the like.
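Given per-frame open/closed judgments, the blink count per unit time follows from counting open-to-closed transitions. The eyelid determination itself is assumed to have been done already; `eye_closed` is a hypothetical per-frame boolean input.

```python
def blinks_per_minute(eye_closed: list[bool], fps: float) -> float:
    """Blink count per minute from per-frame eyelid states (True = eyes closed).

    A blink is counted at each open -> closed transition; eyes already closed
    in the first frame are not counted.
    """
    blinks = sum(1 for prev, cur in zip(eye_closed, eye_closed[1:]) if not prev and cur)
    minutes = len(eye_closed) / fps / 60.0
    return blinks / minutes
```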

- The gaze level can be calculated by estimating the gaze direction in each frame and taking the probability (proportion of frames) that the line of sight falls within a predetermined angular range centered on a given object.
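The gaze level computation can be sketched as the fraction of frames whose estimated gaze direction lies within an angular threshold of the direction toward the target. The per-frame 3-D direction vectors and the 10-degree default for `max_angle_deg` are assumptions for illustration.

```python
import math


def gaze_degree(gaze_dirs, target_dirs, max_angle_deg: float = 10.0) -> float:
    """Fraction of frames in which the gaze is within max_angle_deg of the target direction."""
    def angle_deg(u, v) -> float:
        dot = sum(a * b for a, b in zip(u, v))
        nu = math.sqrt(sum(a * a for a in u))
        nv = math.sqrt(sum(b * b for b in v))
        c = max(-1.0, min(1.0, dot / (nu * nv)))  # clamp against rounding error
        return math.degrees(math.acos(c))

    hits = sum(1 for g, t in zip(gaze_dirs, target_dirs) if angle_deg(g, t) <= max_angle_deg)
    return hits / len(gaze_dirs)
```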

- Methods are also known for detecting pupil diameter and eye movement from infrared images, body temperature and sweating from thermographic images, and respiratory rate, blood flow, and blood pressure from moving images, so these methods can be used to obtain the corresponding physiological psychological indices.

- In addition to collecting and recording the physiological psychological indices as described above, the person trend recording device 1 has a function of calculating a psychological state index, which estimates the person's psychological state based on the temporal change in the physiological psychological indices (this function is called a psychological state estimation unit).

- In this embodiment, two psychological state estimation units are provided: the satisfaction level estimation unit 114 and the interest level estimation unit 115.

- The satisfaction level estimation unit 114 has a function of estimating the "satisfaction level", one of the psychological state indices, based on the temporal change in the physiological psychological index.

- In this embodiment, the satisfaction level S(t) at time t is defined as in Equation (1) and is calculated from changes in the smile level Sm(t) and the pulse rate PR(t).

- The definition of satisfaction level is not limited to this, and it may be calculated in any way.

- Sm(t) is the smile level at time t.

- PR(t) is the pulse rate at time t.

- PRmin is the minimum pulse rate in a predetermined period that includes time t (for example, about 5 to 10 minutes), and PRmax is the maximum pulse rate in the same period.

- Because the satisfaction level is obtained from two kinds of index, one that a person can control (smile level) and one that is difficult to control consciously (pulse rate), its value can be calculated objectively and with high reliability.
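Equation (1) itself is not reproduced on this page, so the following is only a hypothetical stand-in built from the quantities it is defined over: PR(t) is min-max normalized using PRmin and PRmax taken over a window around t, and the result scales the smile level Sm(t). The product form and the 300-second default window are assumptions, not the patent's formula.

```python
def satisfaction_series(times, smile, pulse, window_s: float = 300.0) -> list[float]:
    """HYPOTHETICAL stand-in for Equation (1); the exact form is not given here.

    For each sample t, PRmin/PRmax are taken over samples within +/- window_s/2
    of t, PR(t) is normalized to [0, 1], and the result scales Sm(t) [%].
    """
    result = []
    for t, sm, pr in zip(times, smile, pulse):
        window = [p for tt, p in zip(times, pulse) if abs(tt - t) <= window_s / 2]
        pr_min, pr_max = min(window), max(window)
        norm = (pr - pr_min) / (pr_max - pr_min) if pr_max > pr_min else 0.0
        result.append(sm * norm)
    return result
```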

- The interest level estimation unit 115 has a function of estimating the "interest level" in a certain object within the target area 20 based on the temporal change in the physiological psychological index.

- The interest level is also a psychological state index.

- In this embodiment, the interest level I(t) at time t is defined as in Equation (2) and is calculated from changes in the gaze level At(t) and the blink count BF(t).

- The definition of interest level is not limited to this, and it may be calculated in any way.

- At(t) is the gaze level at time t.

- BF(t) is the blink count at time t.

- BFmin is the minimum blink count in a predetermined period that includes time t (for example, about 5 to 10 minutes).

- BFmax is the maximum blink count in the same period.

- Because the interest level is obtained from two kinds of index, one that a person can control (gaze level) and one that is difficult to control consciously (blink count), its value can be calculated objectively and with high reliability.
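Likewise, Equation (2) is not reproduced here. The sketch is a hypothetical combination of At(t) with a blink count min-max normalized over a window around t, assuming (for illustration only) that fewer blinks than usual indicate higher interest; the window length is also an assumption.

```python
def _minmax_in_window(times, values, t, window_s):
    """Minimum and maximum of `values` over samples within +/- window_s/2 of t."""
    w = [v for tt, v in zip(times, values) if abs(tt - t) <= window_s / 2]
    return min(w), max(w)


def interest_series(times, gaze, blinks, window_s: float = 300.0) -> list[float]:
    """HYPOTHETICAL stand-in for Equation (2): At(t) scaled by blink suppression."""
    result = []
    for t, at, bf in zip(times, gaze, blinks):
        bf_min, bf_max = _minmax_in_window(times, blinks, t, window_s)
        # 1.0 at the window's lowest blink count, 0.0 at its highest.
        suppression = (bf_max - bf) / (bf_max - bf_min) if bf_max > bf_min else 0.0
        result.append(at * suppression)
    return result
```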

- The attribute estimation unit 116 has a function of estimating a person's attributes by analyzing the moving image data.

- In this embodiment, three attributes are estimated: age (up to 10 / 10 to 20 / 20 to 30 / 30 to 40 / 40 and over), sex (male / female), and body type (lean / normal / heavy).

- Various algorithms have been proposed for specific methods of attribute estimation, and any algorithm may be used.

- The personal identification unit 117 has a function of identifying the individual detected in the image and acquiring information specific to that person.

- Personal identification can be performed by image recognition (face recognition) or by identifying the individual from an ID card carried by the person; either method may be used.

- Examples of the ID card include credit cards, electronic money cards, and point cards.

- For example, the personal identification unit 117 can identify the person by reading the ID card presented at the time of payment.

- "Information specific to the person" includes name, address, telephone number, e-mail address, age, occupation, workplace, family composition, purchase history, and the like.

- The trend record generation unit 118 has a function of generating trend record data for each person based on the information obtained by the functional units 111 to 117.

- The generated trend record data is stored in the storage unit 120 or on a cloud server (not shown). Details of the trend record data are described later.

- The trend analysis result display unit 119 has a function of displaying trend analysis results on the display based on the trend record data. Display examples of trend analysis results are described later.

- In the trend recording process (FIG. 3), the person detection unit 111 first refers to the latest frame of the moving image data and detects the persons present in the image (step S30).

- In step S31, one of the detected persons is selected (the selected person is referred to as the "recording target person").

- The person detection unit 111 checks the recording target person against known persons (persons already detected in past frames and being tracked). If the recording target person is being tracked, the same person ID is assigned; if the person is unknown (detected for the first time), a new person ID is assigned (step S32).

- The trend record generation unit 118 records the shooting time of the latest frame and the position information of the recording target person in that person's trend record data (step S33).

- The event detection unit 112 refers to the latest one or more frames of the moving image data and detects any event that has occurred to the recording target person (step S34).

- When an event is detected, the trend record generation unit 118 records information on the detected event in the recording target person's trend record data (step S36).

- The physiological psychological index detection unit 113 refers to the latest one or more frames of the moving image data and detects five indices of the recording target person: facial expression, smile level, pulse rate, blink count, and gaze level (step S37).

- Facial expression and smile level can be detected from a single frame, while pulse rate, blink count, and gaze level are detected from multiple frames of the moving image.

- The trend record generation unit 118 records the value of each index in the recording target person's trend record data (step S38).
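The per-frame recording in steps S33, S36, and S38 can be pictured as appending time-stamped entries to a per-person record. The class, field, and method names are hypothetical; the sketch only shows how events and index values end up aligned on a common time axis, in the spirit of FIG. 6.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TrendEntry:
    time: float                                              # frame shooting time (step S33)
    position: tuple[float, float]                            # person position (step S33)
    events: list[str] = field(default_factory=list)          # detected events (step S36)
    indices: dict[str, float] = field(default_factory=dict)  # index values (step S38)


@dataclass
class TrendRecord:
    person_id: int
    entries: list[TrendEntry] = field(default_factory=list)

    def record_frame(self, time, position, events, indices) -> TrendEntry:
        """Append one frame's time, position, events (possibly none) and index values."""
        entry = TrendEntry(time, position)
        entry.events.extend(events)
        entry.indices.update(indices)
        self.entries.append(entry)
        return entry

    def events_with_index(self, name: str):
        """(time, event, index value) triples: events aligned with one index in time."""
        return [(e.time, ev, e.indices.get(name)) for e in self.entries for ev in e.events]
```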

- In the trend analysis process (FIG. 4), in step S40 the attribute estimation unit 116 estimates the age, sex, and body type of the analysis target person by analyzing the moving image data. The personal identification unit 117 then identifies the analysis target person and, if identification succeeds, acquires information specific to that person (for example, purchase history) (step S41). Next, the satisfaction level estimation unit 114 calculates the satisfaction level at each time from the analysis target person's trend record data (step S42).

- FIGS. 5A to 5C show an example of satisfaction estimation.

- FIG. 5A is a graph of the smile level of the analysis subject, with the horizontal axis indicating time [sec] and the vertical axis indicating smile level [%].

- FIG. 5B shows an observed waveform of the pulse wave of the analysis subject, with the horizontal axis representing time [sec] and the vertical axis representing the green value of the face portion.

- FIG. 5C is a graph of the pulse rate calculated from FIG. 5B, where the horizontal axis indicates time [sec] and the vertical axis indicates the pulse rate [beats / minute].

- The interest level estimation unit 115 then calculates the interest level at each time from the analysis target person's trend record data (step S43). Finally, the trend record generation unit 118 records the information obtained in steps S40 to S43 in the analysis target person's trend record data (step S44).

- FIG. 6 shows an example of trend record data for person X.

- In this data, the events occurring to person X are temporally associated with the temporal changes in the physiological psychological indices (facial expression, smile level, pulse rate, blink count, gaze level) detected from person X.

- Person X's position information and the temporal changes in satisfaction and interest level are also associated with these data.

- FIG. 7 is a graph in which events occurring during the tracking period of the person X and temporal changes in the physiological psychological index of the person X are plotted on the same time axis.

- FIG. 8 is a graph in which events occurring during the tracking period of person X and the temporal changes in person X's satisfaction and interest level are plotted on the same time axis. These graphs make it possible to visualize the causal relationship between person X's actions (events) from entering to leaving the store and the changes in the physiological psychological indices, satisfaction, and interest level. Because person X's true psychological state is considered to appear in the physiological psychological indices, satisfaction, and interest level, the trend analysis results in FIGS. 7 and 8 can be used to evaluate, with a certain degree of reliability, whether product displays and the like are good or bad.

- FIG. 9 displays, on the store floor plan, the movement route of person X (broken line), the events that occurred to person X (white triangles), and the points where satisfaction and interest level were high (double circles and black stars). Such a floor plan display shows where person X took what actions and where satisfaction and interest level increased.

- FIG. 10 is a display example showing the trend of the trend record data of the person X.

- The trend analysis result display unit 119 can also calculate and display statistical values of the physiological psychological indices, satisfaction, and interest level from the trend record data of multiple persons.

- Statistical values include the average, minimum, maximum, median, and the like.

- For example, the target area in the store may be divided into four sub-areas A to D as shown in FIG. 11, and the statistical values for each sub-area may be calculated and displayed as shown in FIG. 12.

- This makes it possible to evaluate the popularity (customer satisfaction and interest level) of each sub-area.

- Similarly, statistics calculated by age group and body type allow evaluation for each attribute, and statistics calculated for each event allow the causal relationship between events and satisfaction and interest level to be evaluated.
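The per-sub-area statistics can be sketched as a group-by over (key, value) pairs using the standard `statistics` module. The same helper applies unchanged when the key is an attribute (age group, body type) or an event type; the function name and input layout are assumptions.

```python
import statistics
from collections import defaultdict


def stats_by_group(samples):
    """samples: iterable of (group_key, value), e.g. (sub-area, satisfaction)."""
    groups = defaultdict(list)
    for key, value in samples:
        groups[key].append(value)
    return {
        key: {
            "mean": statistics.mean(vals),
            "min": min(vals),
            "max": max(vals),
            "median": statistics.median(vals),
        }
        for key, vals in groups.items()
    }
```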

- trend record data that automatically associates an event that has occurred in a person with a temporal change in the physiological psychological index of the person is automatically generated. Is done.

- Customer satisfaction and interest level can be evaluated objectively from trends in the physiological psychological index.

- Trend record data can be collected and recorded automatically without imposing any physical or psychological burden on customers.

- The physiological psychological indices described above are examples, and indices other than these may be collected. From the viewpoint of simplifying the apparatus and reducing cost, it is desirable that all information be detectable from the moving image data, but depending on the index, some information may be extracted from sources other than the moving image data. For example, a person's body temperature may be measured from an image obtained by thermography.

Landscapes

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- General Physics & Mathematics (AREA)

- Multimedia (AREA)

- Business, Economics & Management (AREA)

- Human Computer Interaction (AREA)

- Health & Medical Sciences (AREA)

- Strategic Management (AREA)

- Development Economics (AREA)

- Finance (AREA)

- Accounting & Taxation (AREA)

- Computer Vision & Pattern Recognition (AREA)

- General Health & Medical Sciences (AREA)

- Economics (AREA)

- General Business, Economics & Management (AREA)

- Game Theory and Decision Science (AREA)

- Entrepreneurship & Innovation (AREA)

- Marketing (AREA)

- Psychiatry (AREA)

- Social Psychology (AREA)

- Oral & Maxillofacial Surgery (AREA)

- Signal Processing (AREA)

- Ophthalmology & Optometry (AREA)

- Measurement Of The Respiration, Hearing Ability, Form, And Blood Characteristics Of Living Organisms (AREA)

- Image Analysis (AREA)

- Closed-Circuit Television Systems (AREA)

- Management, Administration, Business Operations System, And Electronic Commerce (AREA)

Priority Applications (3)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| US16/461,912 US11106900B2 (en) | 2017-03-14 | 2017-11-28 | Person trend recording device, person trend recording method, and program |
| DE112017007261.1T DE112017007261T8 (de) | 2017-03-14 | 2017-11-28 | Personentrendaufzeichnungsvorrichtung, personentrendaufzeichnungsverfahren, und programm |
| CN201780071316.1A CN109983505B (zh) | 2017-03-14 | 2017-11-28 | 人物动向记录装置、人物动向记录方法及存储介质 |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| JP2017048828A JP6724827B2 (ja) | 2017-03-14 | 2017-03-14 | 人物動向記録装置 |
| JP2017-048828 | 2017-03-14 | | |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| WO2018168095A1 (ja) | 2018-09-20 |

Family

ID=63523766

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| PCT/JP2017/042505 Ceased WO2018168095A1 (ja) | 2017-03-14 | 2017-11-28 | 人物動向記録装置、人物動向記録方法、及びプログラム |

Country Status (5)

| Country | Link |
|---|---|
| US (1) | US11106900B2 |
| JP (1) | JP6724827B2 |
| CN (1) | CN109983505B |
| DE (1) | DE112017007261T8 |
| WO (1) | WO2018168095A1 |

Families Citing this family (16)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| JP7521175B2 (ja) * | 2019-03-19 | 2024-07-24 | 日本電気株式会社 | 顧客情報処理装置、顧客情報処理方法、及びプログラム |
| JP7251392B2 (ja) * | 2019-08-01 | 2023-04-04 | 株式会社デンソー | 感情推定装置 |
| JP7227884B2 (ja) * | 2019-10-10 | 2023-02-22 | 株式会社日立製作所 | 購買促進システムおよび購買促進方法 |
| CN110942055A (zh) * | 2019-12-31 | 2020-03-31 | 北京市商汤科技开发有限公司 | 展示区域的状态识别方法、装置、设备及存储介质 |
| JP7526955B2 (ja) * | 2020-02-05 | 2024-08-02 | パナソニックIpマネジメント株式会社 | 観客分析装置、観客分析方法、及び、コンピュータプログラム |
| CN111983210B (zh) * | 2020-06-29 | 2022-04-15 | 北京津发科技股份有限公司 | 基于时间同步的空间位置和多通道人机环境数据采集、时空行为分析方法和装置 |
| CN112487888B (zh) * | 2020-11-16 | 2023-04-07 | 支付宝(杭州)信息技术有限公司 | 一种基于目标对象的图像采集方法及装置 |
| WO2022153496A1 (ja) * | 2021-01-15 | 2022-07-21 | 日本電気株式会社 | 情報処理装置、情報処理方法、およびプログラム |
| JP2024075796A (ja) * | 2021-02-17 | 2024-06-05 | 株式会社Preferred Networks | 解析装置、解析システム、解析方法及びプログラム |
| JP7654429B2 (ja) | 2021-03-16 | 2025-04-01 | サトーホールディングス株式会社 | 表示システム、制御装置、及び制御プログラム |
| JP7453181B2 (ja) * | 2021-05-20 | 2024-03-19 | Lineヤフー株式会社 | 情報処理装置、情報処理方法、及び情報処理プログラム |
| US11887405B2 (en) * | 2021-08-10 | 2024-01-30 | Capital One Services, Llc | Determining features based on gestures and scale |
| JP7276419B1 (ja) | 2021-12-24 | 2023-05-18 | 富士通株式会社 | 情報処理プログラム、情報処理方法、および情報処理装置 |
| JP2023107647A (ja) * | 2022-01-24 | 2023-08-03 | 株式会社東芝 | 監視システム、及び監視方法 |
| EP4560559A4 (en) * | 2022-07-19 | 2025-06-18 | Fujitsu Limited | GENERATION PROGRAM, GENERATION METHOD AND INFORMATION PROCESSING DEVICE |
| JP7333570B1 (ja) | 2022-12-21 | 2023-08-25 | 株式会社Shift | プログラム、方法、情報処理装置、システム |

Citations (4)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| JP2006127057A (ja) * | 2004-10-27 | 2006-05-18 | Canon Inc | 推定装置、及びその制御方法 |
| JP2009037459A (ja) * | 2007-08-02 | 2009-02-19 | Giken Torasutemu Kk | 商品関心度計測装置 |
| JP2013537435A (ja) * | 2010-06-07 | 2013-10-03 | アフェクティヴァ,インコーポレイテッド | ウェブサービスを用いた心理状態分析 |
| JP2016103786A (ja) * | 2014-11-28 | 2016-06-02 | 日立マクセル株式会社 | 撮像システム |

Family Cites Families (11)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN101108125B (zh) * | 2007-08-02 | 2010-06-16 | 无锡微感科技有限公司 | 一种身体体征动态监测系统 |
| US8539359B2 (en) * | 2009-02-11 | 2013-09-17 | Jeffrey A. Rapaport | Social network driven indexing system for instantly clustering people with concurrent focus on same topic into on-topic chat rooms and/or for generating on-topic search results tailored to user preferences regarding topic |
| JP5822651B2 (ja) * | 2011-10-26 | 2015-11-24 | 株式会社ソニー・コンピュータエンタテインメント | 個体判別装置および個体判別方法 |
| JP5899472B2 (ja) * | 2012-05-23 | 2016-04-06 | パナソニックIpマネジメント株式会社 | 人物属性推定システム、及び学習用データ生成装置 |
| JP5314200B1 (ja) * | 2013-02-01 | 2013-10-16 | パナソニック株式会社 | 接客状況分析装置、接客状況分析システムおよび接客状況分析方法 |
| JP6071141B2 (ja) * | 2013-08-22 | 2017-02-01 | Kddi株式会社 | 多数のユーザの通信履歴からイベントの発生位置を推定する装置、プログラム及び方法 |
| US11494390B2 (en) * | 2014-08-21 | 2022-11-08 | Affectomatics Ltd. | Crowd-based scores for hotels from measurements of affective response |
| KR101648827B1 (ko) * | 2015-03-11 | 2016-08-17 | (주)중외정보기술 | 실시간 위치 기반 이벤트 발생 시스템 및 이를 이용한 단말 제어 방법 |
| JP6728863B2 (ja) * | 2016-03-25 | 2020-07-22 | 富士ゼロックス株式会社 | 情報処理システム |
| CN106264568B (zh) * | 2016-07-28 | 2019-10-18 | 深圳科思创动实业有限公司 | 非接触式情绪检测方法和装置 |
| CN106407935A (zh) * | 2016-09-21 | 2017-02-15 | 俞大海 | 基于人脸图像和眼动注视信息的心理测试方法 |


2017

- 2017-03-14 JP JP2017048828A patent/JP6724827B2/ja active Active

- 2017-11-28 US US16/461,912 patent/US11106900B2/en active Active

- 2017-11-28 DE DE112017007261.1T patent/DE112017007261T8/de active Active

- 2017-11-28 CN CN201780071316.1A patent/CN109983505B/zh active Active

- 2017-11-28 WO PCT/JP2017/042505 patent/WO2018168095A1/ja not_active Ceased


Also Published As

| Publication number | Publication date |
|---|---|
| CN109983505A (zh) | 2019-07-05 |
| JP6724827B2 (ja) | 2020-07-15 |
| DE112017007261T8 (de) | 2020-01-16 |
| US11106900B2 (en) | 2021-08-31 |
| CN109983505B (zh) | 2023-10-17 |
| JP2018151963A (ja) | 2018-09-27 |
| DE112017007261T5 (de) | 2019-11-28 |
| US20190332855A1 (en) | 2019-10-31 |

Similar Documents

| Publication | Title |
|---|---|
| JP6724827B2 (ja) | 人物動向記録装置 |
| US10380603B2 (en) | Assessing personality and mood characteristics of a customer to enhance customer satisfaction and improve chances of a sale |
| Generosi et al. | A deep learning-based system to track and analyze customer behavior in retail store |
| CN109740466B (zh) | 广告投放策略的获取方法、计算机可读存储介质 |
| US11320902B2 (en) | System and method for detecting invisible human emotion in a retail environment |
| JP2023171650A (ja) | プライバシーの保護を伴う人物の識別しおよび/または痛み、疲労、気分、および意図の識別および定量化のためのシステムおよび方法 |
| US10559102B2 (en) | Makeup simulation assistance apparatus, makeup simulation assistance method, and non-transitory computer-readable recording medium storing makeup simulation assistance program |
| US20120083675A1 (en) | Measuring affective data for web-enabled applications |
| US20130151333A1 (en) | Affect based evaluation of advertisement effectiveness |
| JP2007006427A (ja) | 映像監視装置 |
| US20130102854A1 (en) | Mental state evaluation learning for advertising |
| KR102799123B1 (ko) | 인간의 사회적 행동 분류를 결정하기 위한 방법 및 시스템 |
| JP7306152B2 (ja) | 感情推定装置、感情推定方法、プログラム、情報提示装置、情報提示方法及び感情推定システム |
| US20230043838A1 (en) | Method for determining preference, and device for determining preference using same |
| CN107210830A (zh) | 一种基于生物特征的对象呈现、推荐方法和装置 |
| US20130238394A1 (en) | Sales projections based on mental states |
| JP7259370B2 (ja) | 情報処理装置、情報処理方法、および情報処理プログラム |
| JP2020067720A (ja) | 人属性推定システム、それを利用する情報処理装置及び情報処理方法 |
| US20240382125A1 (en) | Information processing system, information processing method and computer program product |
| KR20230137529A (ko) | 사용자 관심 분석 기반 구매 유도 시스템 및 방법 |
| JP2025068442A (ja) | 情報処理装置、情報処理方法、及びプログラム |
| WO2025149652A1 (en) | Methods and systems for determining a physiological response of the eye of a user |
| WO2025229742A1 (ja) | 情報分析システム |
| JP2025021811A (ja) | 評価プログラム、評価方法および情報処理装置 |
| JP2022064726A (ja) | 心理状態推定装置およびプログラム |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | DPE2 | Request for preliminary examination filed before expiration of 19th month from priority date (PCT application filed from 20040101) | |
| | 121 | EP: the EPO has been informed by WIPO that EP was designated in this application | Ref document number: 17900588; Country of ref document: EP; Kind code of ref document: A1 |
| | 122 | EP: PCT application non-entry in European phase | Ref document number: 17900588; Country of ref document: EP; Kind code of ref document: A1 |