WO2014132474A1 - Image processing device, endoscope device, image processing method, and image processing program - Google Patents

Image processing device, endoscope device, image processing method, and image processing program

- Publication number

- WO2014132474A1 (application PCT/JP2013/075627)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- image

- subject

- known characteristic

- amount

- enhancement

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Classifications

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B1/00—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor

- A61B1/00002—Operational features of endoscopes

- A61B1/00004—Operational features of endoscopes characterised by electronic signal processing

- A61B1/00009—Operational features of endoscopes characterised by electronic signal processing of image signals during a use of endoscope

- A61B1/000095—Operational features of endoscopes characterised by electronic signal processing of image signals during a use of endoscope for image enhancement

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B1/00—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor

- A61B1/00002—Operational features of endoscopes

- A61B1/00004—Operational features of endoscopes characterised by electronic signal processing

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B1/00—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor

- A61B1/04—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor combined with photographic or television appliances

- A61B1/05—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor combined with photographic or television appliances characterised by the image sensor, e.g. camera, being in the distal end portion

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B1/00—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor

- A61B1/06—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor with illuminating arrangements

- A61B1/0638—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor with illuminating arrangements providing two or more wavelengths

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B1/00—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor

- A61B1/06—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor with illuminating arrangements

- A61B1/0646—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor with illuminating arrangements with illumination filters

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B1/00—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor

- A61B1/31—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor for the rectum, e.g. proctoscopes, sigmoidoscopes, colonoscopes

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/20—Analysis of motion

- G06T7/215—Motion-based segmentation

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N5/00—Details of television systems

- H04N5/222—Studio circuitry; Studio devices; Studio equipment

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B1/00—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor

- A61B1/00163—Optical arrangements

- A61B1/00193—Optical arrangements adapted for stereoscopic vision

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B1/00—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor

- A61B1/273—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor for the upper alimentary canal, e.g. oesophagoscopes, gastroscopes

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/30—Subject of image; Context of image processing

- G06T2207/30004—Biomedical image processing

Definitions

- the present invention relates to an image processing apparatus, an endoscope apparatus, an image processing method, an image processing program, and the like.

- Image enhancement is performed to make differences in the structure and color of the subject easier to observe.

- The technique disclosed in Patent Document 1 is known as a technique for enhancing the structure of a subject by image processing.

- The technique disclosed in Patent Document 2 is known as a technique for identifying a lesion site by color enhancement.

- It is possible to provide an image processing device, an endoscope device, an image processing method, an image processing program, and the like that can perform enhancement processing according to an observation state.

- One aspect of the present invention relates to an image processing apparatus that includes an image acquisition unit that acquires a captured image including an image of a subject, a motion amount acquisition unit that acquires a motion amount of the subject, a known characteristic information acquisition unit that acquires known characteristic information, which is information representing a known characteristic related to the subject, and an enhancement processing unit that performs enhancement processing based on the known characteristic information with processing content corresponding to the motion amount.

- the enhancement process based on the known characteristic information that is information representing the known characteristic related to the subject is performed with the processing content corresponding to the amount of movement of the subject. This makes it possible to perform enhancement processing according to the observation state.

- Another aspect of the present invention relates to an image processing method in which a captured image including an image of a subject is acquired, a motion amount of the subject is acquired, known characteristic information that is information representing a known characteristic related to the subject is acquired, and enhancement processing based on the known characteristic information is performed with processing content corresponding to the motion amount.

- Still another aspect of the present invention relates to an image processing program that causes a computer to execute steps of acquiring a captured image including an image of a subject, acquiring a motion amount of the subject, acquiring known characteristic information that is information representing a known characteristic related to the subject, and performing enhancement processing based on the known characteristic information with processing content corresponding to the motion amount.

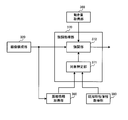

- FIG. 1 is a configuration example of an image processing apparatus.

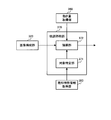

- FIG. 2 is a configuration example of an endoscope apparatus.

- FIG. 3 is a detailed configuration example of the image processing unit in the first embodiment.

- FIG. 4 is a detailed configuration example of a distance information acquisition unit.

- FIG. 5 is a modified configuration example of the endoscope apparatus.

- FIG. 6 is a detailed configuration example of the enhancement processing unit in the first embodiment.

- FIGS. 7A to 7D are explanatory diagrams of the process of extracting the extracted unevenness information by filter processing.

- FIGS. 8A to 8F are explanatory diagrams of the process of extracting the extracted unevenness information by morphological processing.

- FIG. 9 is a flowchart example of image processing in the first embodiment.

- FIG. 10 is a detailed configuration example of an image processing unit in the second embodiment.

- FIG. 11 is a detailed configuration example of an enhancement processing unit in the second embodiment.

- FIG. 12 is a flowchart example of image processing in the second embodiment.

- Since a pigment spraying operation is cumbersome for the doctor and a burden on the patient, attempts have been made to emphasize color or uneven structure by image processing in order to identify lesions (for example, Patent Documents 1 and 2).

- an image that is easier to see is obtained by changing the processing content in accordance with the observation state (observation technique). For example, in the case where the entire digestive tract is sequentially viewed by moving relatively quickly as in screening observation, the doctor observes a relatively large structure. Therefore, it is necessary to present on the image so as not to overlook the large structure. On the other hand, when the location of the target is specified by screening and the detailed structure is enlarged (scrutinized observation, proximity observation), the doctor observes the fine structure. Therefore, it is necessary to emphasize the fine structure so that it can be determined whether the target is benign or malignant.

- FIG. 1 shows a configuration example of an image processing apparatus that can solve such a problem.

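- This image processing apparatus includes an image acquisition unit 310 that acquires a captured image including an image of a subject, a motion amount acquisition unit 360 that acquires a motion amount of the subject, a known characteristic information acquisition unit 390 that acquires known characteristic information, which is information representing a known characteristic related to the subject, and an enhancement processing unit 370 that performs enhancement processing based on the known characteristic information with processing content corresponding to the motion amount.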

- the enhancement processing can be changed according to the observation state by performing the enhancement processing with the processing content corresponding to the amount of movement. Thereby, it is possible to selectively emphasize what should be emphasized in each observation state for easy viewing.

- the enhancement processing unit 370 performs enhancement processing with processing content corresponding to the amount of motion, using a subject that matches the characteristic specified by the known characteristic information as a target of enhancement processing.

- When the enhancement processing unit determines that the motion amount corresponds to screening observation, it selects a subject that matches the characteristic specified by the known characteristic information as the target of the enhancement process and performs enhancement processing with first processing content.

- When the enhancement processing unit determines that the motion amount corresponds to magnified (close-up) observation, it likewise selects a subject that matches the characteristic specified by the known characteristic information as the target of the enhancement process, and performs enhancement processing with second processing content that differs from the first processing content.

- The target is, for example, an uneven part, a reddened part, or a faded part of a living body, and each has features (for example, a large structure, a small structure, or a region of a predetermined color) that the user pays attention to in each observation state.

- In the enhancement process, it is only necessary to emphasize the feature that the user pays attention to in the current observation state. For example, it is possible to emphasize a relatively large uneven structure in screening observation, where the motion amount of the subject is large, and to emphasize a fine uneven structure in magnified observation, where the motion amount of the subject is small.

- the motion amount is an amount representing the motion of the subject in the captured image, and is, for example, the direction or distance of the motion of the subject on the captured image.

- the direction and distance may be acquired as a motion vector, or if only the speed of motion needs to be known, only the distance may be acquired as the amount of motion.

- Such a motion amount can be obtained, for example, by matching feature points of a captured image between two frames.

- the known characteristic information is information that can separate a useful structure in the present embodiment from a structure that is not so, among the structures on the surface of the subject.

- For example, the known characteristics are features of unevenness and color whose enhancement is useful (for example, useful for finding early lesions), such as the size of the unevenness and the hue and saturation characteristic of lesions.

- a subject that matches the known characteristic information is the target of the enhancement process.

- information on a structure that is not useful even if it is emphasized may be used as the known characteristic information.

- a subject that does not match the known characteristic information is to be emphasized.

- FIG. 2 shows a configuration example of the endoscope apparatus according to the first embodiment.

- the endoscope apparatus includes a light source unit 100, an imaging unit 200, a processor unit 300 (control device), a display unit 400, and an external I / F unit 500.

- the light source unit 100 includes a white light source 101, a rotating color filter 102 having a plurality of spectral transmittances, a rotation driving unit 103 that drives the rotating color filter 102, and a condensing lens 104 that condenses the light of each spectral characteristic from the rotating color filter 102 onto the incident end face of the light guide fiber 201.

- the rotation color filter 102 includes three primary color filters (a red filter, a green filter, and a blue filter) and a rotation motor.

- the rotation driving unit 103 rotates the rotating color filter 102 at a predetermined number of rotations in synchronization with the imaging period of the imaging element 206 of the imaging unit 200 based on a control signal from the control unit 302 of the processor unit 300. For example, if the rotating color filter 102 is rotated 20 times per second, each color filter crosses the incident white light at 1/60 second intervals. In this case, the observation target is irradiated with light of each of the three primary colors (R, G, or B) at 1/60 second intervals, and the image sensor 206 images the reflected light from the observation target. The captured image is transferred to the A / D conversion unit 209. That is, it is an example of an endoscope apparatus in which an R image, a G image, and a B image are captured in a frame sequential manner at 1/60 second intervals, and the actual frame rate is 20 fps.
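The timing arithmetic in this example can be checked with a few lines of Python. This is only an illustrative sketch; the function name `frame_sequential_timing` and its parameters are hypothetical and do not appear in the patent.

```python
from fractions import Fraction


def frame_sequential_timing(rotations_per_second, filters_per_rotation=3):
    """Return (interval between single-color exposures in seconds, full-color frame rate)."""
    # Each rotation passes every color filter through the white light once.
    exposures_per_second = rotations_per_second * filters_per_rotation
    interval = Fraction(1, exposures_per_second)
    # One full-color frame needs one exposure per filter (R, G and B).
    frame_rate = Fraction(exposures_per_second, filters_per_rotation)
    return interval, frame_rate
```

With 20 rotations per second and three filters, the sketch gives a 1/60 second exposure interval and 20 full-color frames per second, matching the figures in the text.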

- the present embodiment is not limited to the above-described frame sequential method.

- the subject may be irradiated with white light from the white light source 101 and may be imaged by an image sensor having a color filter with an RGB Bayer array.

- the imaging unit 200 is formed to be elongated and bendable so that it can be inserted into a body cavity such as the stomach or the large intestine.

- the imaging unit 200 includes a light guide fiber 201 for guiding the light condensed by the light source unit 100, and an illumination lens 203 that diffuses the light guided to the tip by the light guide fiber 201 and irradiates the observation target with it.

- the imaging unit 200 also includes an objective lens 204 that collects reflected light returning from the observation target, an imaging element 206 that detects the focused imaging light, and an A / D conversion unit 209 that converts the photoelectrically converted analog signal from the imaging element 206 into a digital signal.

- the imaging unit 200 includes a memory 210 in which unique information including scope ID information and manufacturing variation of the imaging unit 200 is recorded, and a connector 212 that allows the imaging unit 200 and the processor unit 300 to be attached and detached.

- the image sensor 206 is a monochrome single-plate image sensor, and for example, a CCD or a CMOS image sensor can be used.

- the A / D conversion unit 209 converts the analog signal output from the image sensor 206 into a digital signal, and outputs an image of the digital signal to the image processing unit 301.

- the memory 210 is connected to the control unit 302 and transfers scope ID information and unique information including manufacturing variations to the control unit 302.

- the processor unit 300 includes an image processing unit 301 that performs image processing on an image transferred from the A / D conversion unit 209, and a control unit 302 that controls each unit of the endoscope apparatus.

- the display unit 400 is a display device capable of displaying a moving image, and includes, for example, a CRT or a liquid crystal monitor.

- the external I / F unit 500 is an interface for performing input from the user to the endoscope apparatus.

- the external I / F unit 500 includes, for example, a power switch for turning the power on and off, a shutter button for starting a photographing operation, and a mode switching switch for switching between a photographing mode and various other modes (for example, a switch for selectively emphasizing the concavo-convex portions of a biological surface).

- the external I / F unit 500 outputs input information to the control unit 302.

- FIG. 3 shows a detailed configuration example of the image processing unit 301.

- the image processing unit 301 includes an image configuration unit 320, an image storage unit 330, a storage unit 350, a motion amount acquisition unit 360, an enhancement processing unit 370, a distance information acquisition unit 380, and a known characteristic information acquisition unit 390.

- the image configuration unit 320 corresponds to the image acquisition unit 310 in FIG.

- the image construction unit 320 performs predetermined image processing (for example, OB processing, gain processing, gamma processing, etc.) on the image captured by the imaging unit 200, and generates an image that can be output to the display unit 400.

- the image configuration unit 320 outputs the processed image to the image storage unit 330, the enhancement processing unit 370, and the distance information acquisition unit 380.

- the image storage unit 330 stores the image output from the image configuration unit 320 for a plurality of frames (a plurality of temporally continuous frames).

- the motion amount acquisition unit 360 calculates the motion amount of the subject in the captured image based on the plurality of frames of images stored in the image storage unit 330 and outputs the motion amount to the enhancement processing unit 370. For example, a matching process is performed between the image of the reference frame and the image of the next frame, and a motion vector between the two frame images is calculated. Then, a motion vector is sequentially calculated over a plurality of frames while shifting the reference image, and an average value of the plurality of motion vectors is calculated as a motion amount.
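The matching-and-averaging procedure described above can be sketched as follows. This is a minimal illustration, not the patent's implementation: it assumes a single global translation per frame pair, uses mean absolute difference as the matching cost, and the names `estimate_shift`, `motion_amount`, and `max_shift` are hypothetical.

```python
from statistics import mean


def estimate_shift(ref, nxt, max_shift=3):
    """Find the (dx, dy) for which nxt[y + dy][x + dx] best matches ref[y][x]."""
    h, w = len(ref), len(ref[0])
    best = None
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total, count = 0.0, 0
            # Only compare pixels that stay inside both frames after shifting.
            for y in range(max(0, -dy), min(h, h - dy)):
                for x in range(max(0, -dx), min(w, w - dx)):
                    total += abs(ref[y][x] - nxt[y + dy][x + dx])
                    count += 1
            cost = total / count
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best[1], best[2]


def motion_amount(frames, max_shift=3):
    """Average the motion vectors of consecutive frame pairs (reference frame shifts by one)."""
    vectors = [estimate_shift(a, b, max_shift) for a, b in zip(frames, frames[1:])]
    return mean(v[0] for v in vectors), mean(v[1] for v in vectors)
```

If only the speed of motion is needed, the magnitude of the averaged vector can be used as the motion amount.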

- the known characteristic information acquisition unit 390 reads (acquires) the known characteristic information stored in the storage unit 350 and outputs the known characteristic information to the enhancement processing unit 370.

- the known characteristic information is the size (dimension information such as width, height, depth, etc.) of the concavo-convex part unique to the living body to be specified as an emphasis target.

- the distance information acquisition unit 380 acquires distance information to the subject based on the captured image, and outputs the distance information to the enhancement processing unit 370.

- the distance information is information in which each position in the captured image is associated with the distance to the subject at each position.

- the distance information is a distance map.

- the distance map is, for example, a map in which the value at each point (for example, each pixel) on the XY plane is the distance (depth) to the subject in the Z-axis direction, where the optical axis direction of the imaging unit 200 is taken as the Z axis. Details of the distance information acquisition unit 380 will be described later.

- the enhancement processing unit 370 specifies a target based on the known characteristic information and the distance information, performs an enhancement process on the target according to the amount of motion, and outputs the processed image to the display unit 400. Specifically, an uneven portion that matches a desired dimension characteristic represented by the known characteristic information is extracted from the distance information, and the extracted uneven portion is specified as a target.

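- For example, when the motion amount is larger than a threshold value (for example, during screening observation), the uneven portions whose size (uneven pattern) is larger than the first size threshold among the extracted uneven portions are emphasized.

- When the motion amount is smaller than the threshold value (for example, during magnified observation), the uneven portions whose size (uneven pattern) is smaller than the second size threshold among the extracted uneven portions are emphasized.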

- the first size threshold and the second size threshold may be set in accordance with the size of the concavo-convex portion that is desired to be enhanced in each observation state (or the image after enhancement is easy to see).
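The switching of enhancement targets by observation state might be sketched as below. This is a hedged illustration: it assumes that screening observation is detected when the motion amount exceeds a hypothetical `motion_threshold`, that larger uneven portions are emphasized in that state, and that each uneven portion is represented only by a scalar size. All names are hypothetical.

```python
def select_enhancement_targets(sizes, motion, motion_threshold,
                               first_size_threshold, second_size_threshold):
    """Return the sizes of the uneven portions to emphasize for the current motion amount."""
    if motion > motion_threshold:
        # Assumed screening observation: keep only relatively large structures.
        return [s for s in sizes if s > first_size_threshold]
    # Assumed magnified observation: keep only fine structures.
    return [s for s in sizes if s < second_size_threshold]
```

Keeping two independent size thresholds allows a band of intermediate sizes to be emphasized in both states or in neither, depending on how the thresholds are set.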

- In the enhancement processing, for example, different color components are emphasized for concave portions and convex portions.

- The enhancement process is not limited to enhancing an identified target; it may be performed based on the known characteristic information without identifying a target.

- For example, a filter characteristic for structure enhancement (for example, the frequency band to be emphasized when enhancing a high-frequency component of the image) may be set based on the known characteristic information.

- the filter characteristics may be changed according to the amount of movement.

- FIG. 4 shows a detailed configuration example of the distance information acquisition unit 380.

- the distance information acquisition unit 380 includes a luminance signal calculation unit 323, a difference calculation unit 324, a secondary differential calculation unit 325, a blur parameter calculation unit 326, a storage unit 327, and an LUT storage unit 328.

- the luminance signal calculation unit 323 obtains the luminance signal Y (luminance value) from the captured image output from the image acquisition unit 310 using the following equation (1).

- the calculated luminance signal Y is transferred to the difference calculation unit 324, the secondary differential calculation unit 325, and the storage unit 327.

- the difference calculation unit 324 calculates the difference of the luminance signal Y from a plurality of images necessary for calculating the blur parameter.

- the secondary differential calculation unit 325 calculates a secondary differential of the luminance signal Y in the image, and calculates an average value of secondary differentials obtained from the plurality of luminance signals Y having different blurs.

- the blur parameter calculation unit 326 calculates the blur parameter by dividing the difference of the luminance signal Y of the images calculated by the difference calculation unit 324 by the average value of the second derivative calculated by the second derivative calculation unit 325.

- the storage unit 327 stores the luminance signal Y and the second derivative result of the first photographed image. Thereby, the distance information acquisition unit 380 can acquire the plurality of luminance signals Y at different times by disposing the focus lens at different positions via the control unit 302.

- the LUT storage unit 328 stores the relationship between the blur parameter and the subject distance in the form of a lookup table (LUT).

- the control unit 302 calculates an optimum focus lens position using a known contrast detection method, phase difference detection method, or the like, based on a shooting mode preset via the external I / F unit 500.

- the lens driving unit 250 drives the focus lens to the calculated focus lens position based on a signal from the control unit 302.

- the first image of the subject is acquired by the image sensor 206 at the driven focus lens position.

- the acquired image is stored in the storage unit 327 via the image acquisition unit 310 and the luminance signal calculation unit 323.

- the lens driving unit 250 drives the focus lens to a second focus lens position different from the focus lens position at which the first image was acquired, and the image sensor 206 acquires the second image of the subject.

- the second image acquired in this way is output to the distance information acquisition unit 380 via the image acquisition unit 310.

- the difference calculation unit 324 reads the luminance signal Y of the first image from the storage unit 327 and calculates the difference between it and the luminance signal Y of the second image output from the luminance signal calculation unit 323.

- the secondary differential calculation unit 325 calculates the secondary differential of the luminance signal Y in the second image output from the luminance signal calculation unit 323. Thereafter, the luminance signal Y in the first image is read from the storage unit 327, and its second derivative is calculated. Then, the average value of the second derivatives of the first and second images is calculated.

- the blur parameter calculation unit 326 calculates the blur parameter by dividing the difference calculated by the difference calculation unit 324 by the average value of the second derivative calculated by the second derivative calculation unit 325.

- the relationship between the blur parameter and the focus lens position is stored in the LUT storage unit 328 as a table.

- the blur parameter calculation unit 326 uses the blur parameter and information in the table stored in the LUT storage unit 328 to obtain the subject distance from the blur parameter with respect to the optical system by linear interpolation.

- the calculated subject distance is output to the enhancement processing unit 370 as distance information.
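The blur-parameter computation and table lookup described above can be sketched in one dimension. This is a minimal illustration under stated assumptions: the second derivative is approximated by a three-point finite difference, the lookup table is a list of hypothetical (blur parameter, subject distance) pairs sorted by blur parameter, and the names `second_derivative`, `blur_parameter`, and `lookup_distance` are not from the patent.

```python
def second_derivative(y):
    """Three-point finite-difference second derivative; border samples are left at 0."""
    out = [0.0] * len(y)
    for i in range(1, len(y) - 1):
        out[i] = y[i - 1] - 2.0 * y[i] + y[i + 1]
    return out


def blur_parameter(y1, y2, eps=1e-6):
    """Luminance difference divided by the averaged second derivative, per sample."""
    d1, d2 = second_derivative(y1), second_derivative(y2)
    params = []
    for i in range(len(y1)):
        avg = 0.5 * (d1[i] + d2[i])
        # Flat regions carry no blur information, so they are marked as None.
        params.append((y1[i] - y2[i]) / avg if abs(avg) > eps else None)
    return params


def lookup_distance(param, table):
    """Linearly interpolate the subject distance from sorted (parameter, distance) pairs."""
    if param <= table[0][0]:
        return table[0][1]
    if param >= table[-1][0]:
        return table[-1][1]
    for (p0, z0), (p1, z1) in zip(table, table[1:]):
        if p0 <= param <= p1:
            t = (param - p0) / (p1 - p0)
            return z0 + t * (z1 - z0)
```

Values outside the table range are clamped to the nearest entry here; that is a design choice of this sketch, not something the text specifies.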

- the distance information acquisition process is not limited to the above-described distance information acquisition process.

- the distance information may be obtained by a time-of-flight method using infrared light.

- Modifications of the time-of-flight method, such as using blue light instead of infrared light, are possible.

- distance information may be acquired by stereo matching.

- A configuration example of the endoscope apparatus in this case is shown in FIG.

- the imaging unit 200 includes an objective lens 205 and an imaging element 207.

- the objective lenses 204 and 205 are arranged at positions separated by a predetermined distance, and arranged so that a parallax image (hereinafter referred to as a stereo image) can be taken.

- a left image and a right image are formed on the image sensors 206 and 207, respectively.

- the A / D conversion unit 209 performs A / D conversion on the left image and the right image output from the imaging elements 206 and 207, and outputs the converted left image and right image to the image configuration unit 320 and the distance information acquisition unit 380.

- the distance information acquisition unit 380 uses the left image as the reference image, and performs a matching operation between the local region including the target pixel and the local region of the right image on the epipolar line passing through the target pixel of the reference image.

- the position having the maximum correlation in the matching calculation is calculated as a parallax, the parallax is converted into a distance in the depth direction (Z-axis direction of the distance map), and the distance information is output to the enhancement processing unit 370.
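The stereo matching step can be sketched for a single scanline. This illustration makes several assumptions that are not stated in the text: the images are rectified so that the epipolar line is a horizontal row, the matching cost is the sum of absolute differences (standing in for the correlation measure), and disparity is converted to depth with the pinhole relation Z = f·B / d. The names `disparity_at`, `depth_from_disparity`, `focal_px`, and `baseline_mm` are hypothetical.

```python
def disparity_at(left_row, right_row, x, half_window=1, max_disparity=4):
    """Disparity of pixel x in the left (reference) row, found by SAD block matching."""
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        cost, valid = 0.0, True
        for k in range(-half_window, half_window + 1):
            xl, xr = x + k, x + k - d
            if not (0 <= xl < len(left_row) and 0 <= xr < len(right_row)):
                valid = False  # Window falls outside one of the rows.
                break
            cost += abs(left_row[xl] - right_row[xr])
        if valid and cost < best_cost:
            best_cost, best_d = cost, d
    return best_d


def depth_from_disparity(d, focal_px, baseline_mm):
    """Convert disparity (pixels) to depth along the Z axis; zero disparity maps to infinity."""
    return focal_px * baseline_mm / d if d > 0 else float("inf")
```

Repeating this for every pixel of the reference image would yield the distance map that is passed on to the enhancement processing.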

- FIG. 6 shows a detailed configuration example of the enhancement processing unit 370.

- the enhancement processing unit 370 includes an object specifying unit 371 and an enhancement unit 372.

- FIG. 7A schematically shows an example of a distance map.

- a one-dimensional distance map is considered, and the distance axis is taken in the direction indicated by the arrow.

- the distance map includes both information on the rough structure of the living body (for example, shape information on the lumen and the folds 2, 3, 4 and the like) and information on the uneven portions of the living body surface layer (for example, the recessed portions 10 and 30 and the protruding portion 20).

- the known characteristic information acquisition unit 390 acquires the dimension information (size information of the concavo-convex part of the living body to be extracted) from the storage unit 350 as the known characteristic information, and determines the frequency characteristic of the low-pass filter processing based on the dimension information. As shown in FIG. 7B, the target specifying unit 371 performs low-pass filter processing with that frequency characteristic on the distance map and extracts information on the rough structure of the living body (shape information on the lumen, folds, etc.).

- the target specifying unit 371 subtracts the information on the rough structure of the living body from the distance map, and obtains the unevenness map (information on the unevenness portion of the desired size) that is the unevenness information of the biological surface layer.

- the horizontal direction in the image, distance map, and concavo-convex map is defined as the x axis

- the vertical direction is defined as the y axis.

- the target specifying unit 371 outputs the unevenness map obtained as described above to the enhancement unit 372.

- extracting the concavo-convex portion of the biological surface layer as the concavo-convex map corresponds to specifying the concavo-convex portion of the biological surface layer as an emphasis target.

- the target specifying unit 371 performs low-pass filter processing of a predetermined size (for example, N × N pixels, where N is a natural number of 2 or more) on the input distance information, and adaptively determines an extraction process parameter based on the distance information after the processing (the local average distance). More specifically, it determines low-pass filter characteristics that smooth out the lesion-induced uneven portions of the living body to be extracted while retaining the lumen and fold structures specific to the observation site.

- since the characteristics of the concavo-convex portion to be extracted and of the folds and lumen structure to be excluded are known from the known characteristic information, their spatial frequency characteristics are known, and the characteristics of the low-pass filter can be determined. Further, since the apparent size of a structure changes according to the local average distance, the characteristics of the low-pass filter are determined according to the local average distance as shown in FIG.

- the low-pass filter processing is realized by, for example, a Gaussian filter expressed by the following formula (3) or a bilateral filter expressed by the following formula (4).

- the frequency characteristics of these filters are controlled by σ, σc, and σν.

- a σ map that corresponds one-to-one to the pixels of the distance map may be created as an extraction processing parameter.

- a σ map of both or one of σc and σν may be created.

- σ is set to a value larger than a predetermined multiple α (> 1) of the inter-pixel distance D1 of the distance map corresponding to the size of the concavo-convex part unique to the living body to be extracted, and smaller than a predetermined multiple β (< 1) of the inter-pixel distance D2 of the distance map corresponding to the size of the lumen and the folds unique to the observation site.

- for example, σ = (α * D1 + β * D2) / 2 * Rσ may be set.

- Rσ is a function of the local average distance: the smaller the local average distance, the larger its value, and the larger the local average distance, the smaller its value.
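A minimal sketch of this parameter rule: take the midpoint between the two bounds (a multiple of D1 and a multiple of D2) and scale it by a factor that shrinks as the local average distance grows. The names `alpha`, `beta`, `r_sigma`, and `reference_distance`, and the inverse-proportional form of the scaling function, are assumptions for illustration; the source only states that the factor decreases with distance.

```python
def r_sigma(local_average_distance, reference_distance=10.0):
    """Hypothetical scaling factor: larger when the subject is closer."""
    if local_average_distance <= 0:
        raise ValueError("local average distance must be positive")
    return reference_distance / local_average_distance


def filter_sigma(d1, d2, alpha, beta, local_average_distance):
    """Midpoint of the bounds alpha*D1 and beta*D2, scaled by r_sigma."""
    if not (alpha > 1 and beta < 1):
        raise ValueError("expected alpha > 1 and beta < 1")
    lower = alpha * d1   # just above the scale of the target unevenness
    upper = beta * d2    # just below the scale of lumen and folds
    if lower >= upper:
        raise ValueError("bounds do not leave room for a filter width")
    return (lower + upper) / 2 * r_sigma(local_average_distance)
```

Evaluating `filter_sigma` per pixel with that pixel's local average distance would give one way to build a per-pixel parameter map.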

- the known characteristic information acquisition unit 390 may read, for example, dimension information corresponding to the observation site from the storage unit 350, and the target specifying unit 371 may specify a target corresponding to the observation site based on the dimension information.

- the observation site can be determined by, for example, the scope ID stored in the memory 210 of FIG.

- the dimension information corresponding to the esophagus, stomach, and duodenum which are observation sites is read out.

- part is read.

- the extraction unevenness information may be acquired by, for example, morphological processing without being limited to the extraction processing using the low-pass filter processing as described above.

- opening processing and closing processing of a predetermined kernel size are performed on the distance map.

- the extraction processing parameter is the size of the structural element. For example, when a sphere is used as the structural element, the diameter of the sphere is set smaller than the size of the site-specific lumen and folds based on the observation site information, and larger than the size of the lesion-induced uneven portion of the living body to be extracted. As shown in FIG.

- the diameter is increased as the local average distance is decreased, and the diameter is decreased as the local average distance is increased.

- a concave portion on the surface of the living body is extracted by taking the difference between the information obtained by the closing process and the original distance information.

- the convex portion of the surface of the living body is extracted by taking the difference between the information obtained by the opening process and the original distance information.
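The opening and closing differences can be illustrated on a one-dimensional profile. This sketch swaps the sphere for a flat structuring element of half-width `radius` for brevity, and it assumes the profile is expressed as surface height (larger values are closer to the imaging unit), which is an assumption about the sign convention rather than something stated in the source. All function names are hypothetical.

```python
def erode(profile, radius):
    """Grayscale erosion with a flat element: sliding-window minimum."""
    n = len(profile)
    return [min(profile[max(i - radius, 0): min(i + radius, n - 1) + 1])
            for i in range(n)]


def dilate(profile, radius):
    """Grayscale dilation with a flat element: sliding-window maximum."""
    n = len(profile)
    return [max(profile[max(i - radius, 0): min(i + radius, n - 1) + 1])
            for i in range(n)]


def opening(profile, radius):
    return dilate(erode(profile, radius), radius)


def closing(profile, radius):
    return erode(dilate(profile, radius), radius)


def extract_concave(height, radius):
    """Closing fills narrow pits; the difference is the pit depth."""
    return [c - h for c, h in zip(closing(height, radius), height)]


def extract_convex(height, radius):
    """Opening removes narrow bumps; the difference is the bump height."""
    return [h - o for h, o in zip(height, opening(height, radius))]
```

Structures wider than the structuring element survive both operations and therefore do not appear in either difference, which is how the element size separates small unevenness from folds and the lumen.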

- the enhancement unit 372 determines the observation state (observation method) based on the amount of motion, and performs enhancement processing corresponding to the observation state. Specifically, when the enhancement unit 372 determines that the amount of motion is large and screening observation is being performed, it emphasizes the large uneven portions among the extracted uneven portions. On the other hand, when it determines that the amount of motion is small and magnified observation is being performed, it emphasizes the small uneven portions among the extracted uneven portions.

- a process of emphasizing different colors between the concave portion and the convex portion will be described.

- a pixel with diff (x, y) < 0 is a convex portion

- a pixel with diff (x, y) > 0 is a concave portion.

- as the size of the concavo-convex portion, for example, the width of the convex region (number of pixels) and the width of the concave region (number of pixels) may be used, and the size of the concavo-convex portion may be determined by comparing that width with a threshold value.
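The sign test and the width measurement can be sketched on one row of the unevenness map as follows. The run-length approach, the tuple layout, and the function names are assumptions for illustration; the source only says that region widths in pixels may be compared with a threshold.

```python
def find_regions(diff_row, tolerance=0.0):
    """Split one row of diff values into runs of concave or convex pixels.

    Returns a list of (kind, start, width) tuples, where kind is
    'concave' for diff > tolerance and 'convex' for diff < -tolerance.
    """
    regions = []
    current_kind, start = None, 0
    # A trailing 0.0 acts as a sentinel that closes the last run.
    for x, value in enumerate(list(diff_row) + [0.0]):
        if value > tolerance:
            kind = "concave"
        elif value < -tolerance:
            kind = "convex"
        else:
            kind = None
        if kind != current_kind:
            if current_kind is not None:
                regions.append((current_kind, start, x - start))
            current_kind, start = kind, x
    return regions


def wider_than(regions, width_threshold):
    """Keep only regions whose width in pixels exceeds the threshold."""
    return [r for r in regions if r[2] > width_threshold]
```

A narrower-than filter for the magnified-observation case would be the mirror image of `wider_than`.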

- the present embodiment is not limited to this, and various enhancement processes can be applied. For example, a predetermined color (for example, blue) may be emphasized more strongly as diff (x, y) becomes larger (that is, deeper in the concave portion), reproducing the appearance of dye spraying with indigo carmine or the like. Further, the coloring method may be changed between screening observation and magnified observation.

- the target specifying unit 371 determines the extraction process parameter based on the known characteristic information, and specifies the uneven portion of the subject as the target of the enhancement process based on the determined extraction process parameter.

- processing target identification processing

- control is required so that information on the desired uneven portion is extracted from the information on various structures included in the distance information while other structures (for example, structures unique to the living body such as folds) are excluded.

- this control is realized by setting extraction processing parameters based on the known characteristic information.

- the target specifying unit 371 determines, as an extraction process parameter, the size of the structural element used for the opening process and the closing process based on the known characteristic information, and performs the opening process and the closing process using the structural element of the determined size to extract the uneven portion of the subject as extracted unevenness information.

- the extraction process parameter at that time is the size of the structural element used in the opening process and the closing process.

- the extraction processing parameter is a parameter representing the diameter of the sphere.

- the captured image is an in-vivo image obtained by imaging the inside of a living body, and the subject has a general three-dimensional structure of the living body, which is a luminal structure inside the living body, and a local concavo-convex structure that is formed on the luminal structure and is more local than the general three-dimensional structure.

- the target specifying unit 371 extracts, from the general three-dimensional structure and the concavo-convex portions included in the subject, the concavo-convex portion of the subject that matches the characteristics specified by the known characteristic information, as extracted unevenness information.

- a general three-dimensional structure in this case, a structure having a lower spatial frequency than the uneven portion

- an uneven portion smaller than that are included.

- the extraction process can be realized.

- the object specifying part 371 may extract the uneven part except for them. In this case, what is excluded becomes global (a structure with a low spatial frequency) and the extraction target is local (a structure with a high spatial frequency), so a spatial frequency corresponding to the middle is set as a boundary.

- each part of the processor unit 300 is configured by hardware.

- the present embodiment is not limited to this.

- for example, a CPU may perform the processing of each unit on image signals and distance information acquired in advance using an imaging device, with the processing realized as software by the CPU executing a program.

- a part of processing performed by each unit may be configured by software.

- the program stored in the information storage medium is read, and the read program is executed by a processor such as a CPU.

- the information storage medium (computer-readable medium) stores programs, data, and the like, and its function can be realized by an optical disk (DVD, CD, etc.), an HDD (hard disk drive), a memory (card-type memory, ROM, etc.), or the like.

- a processor such as a CPU performs various processes according to the present embodiment based on a program (data) stored in the information storage medium.

- the information storage medium stores a program for causing a computer (an apparatus including an operation unit, a processing unit, a storage unit, and an output unit) to function as each unit of the present embodiment (a program for causing the computer to execute the processing of each unit).

- FIG. 9 shows a flowchart when the processing performed by the image processing unit 301 is realized by software.

- a captured image is acquired (step S1)

- distance information when the captured image is captured is acquired (step S2).

- the target is specified by the above-described method (step S3). Further, the amount of motion of the subject is calculated from the captured image (step S4), and it is determined whether or not the amount of motion (for example, the magnitude of the motion vector) is larger than a threshold (step S5). If the amount of motion is larger than the threshold, it is determined that screening observation is being performed, the first enhancement processing is performed on the captured image (step S6), and the processed image is output (step S7). In the first enhancement processing, an object whose size is larger than the first size threshold Tk among the specified objects is emphasized.

- the second enhancement processing is performed on the captured image (step S8), and the processed image is output (step S9).

- in the second enhancement process, an object having a size smaller than the second size threshold Ts among the identified objects is emphasized.
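The branching in steps S4 to S9 can be sketched as below. The function names, the representation of targets as a plain list of sizes, and the handling of the case where the motion amount exactly equals the threshold (treated here as magnified observation) are assumptions for illustration.

```python
import math


def motion_amount(motion_vector):
    """Magnitude of a 2-D motion vector (dx, dy)."""
    return math.hypot(motion_vector[0], motion_vector[1])


def choose_enhancement(sizes, motion_vector, motion_threshold, tk, ts):
    """Pick the observation state and the target sizes to emphasize.

    sizes: sizes of the identified targets (for example, widths in pixels)
    tk: first size threshold (screening keeps targets larger than tk)
    ts: second size threshold (magnified keeps targets smaller than ts)
    """
    if motion_amount(motion_vector) > motion_threshold:
        return "screening", [s for s in sizes if s > tk]
    # Assumption: equality with the threshold falls into this branch.
    return "magnified", [s for s in sizes if s < ts]
```

For example, with target sizes 2, 5, 12, and 30, a fast-moving view keeps only the larger targets and a nearly still view keeps only the smaller ones.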

- the distance information acquisition unit 380 acquires distance information (distance map in a narrow sense) that represents the distance from the imaging unit 200 to the subject when the captured image is captured.

- the emphasis processing unit 370 performs the emphasis process with processing content corresponding to the motion amount, based on the distance information and the known characteristic information.

- the processing content can be changed according to the observation state.

- by using the distance information, it is possible, for example, to specify an observation target characterized by a three-dimensional structure or shape.

- the known characteristic information acquisition unit 390 acquires known characteristic information that is information indicating a known characteristic related to the structure of the subject (for example, the size of the structure characteristic of the lesion).

- the emphasis processing unit 370 performs emphasis processing on the uneven portion of the subject that matches the characteristics specified by the known characteristic information.

- the emphasis process can be changed according to the amount of movement so that the uneven portion that the user is paying attention to in each observation state is emphasized.

- the target specifying unit 371 extracts an uneven portion having a desired characteristic (for example, size) from the distance information, and specifies the extracted uneven portion as a target for the enhancement process.

- the enhancement processing unit 370 performs enhancement processing on the identified target.

- when the amount of motion (for example, the magnitude of the motion vector) is larger than the threshold, the enhancement processing unit 370 performs the emphasis process on those extracted uneven portions that are determined to have a size larger than the first size threshold Tk. On the other hand, when the amount of motion is smaller than the threshold, it performs the emphasis process on those extracted uneven portions that are determined to have a size smaller than the second size threshold Ts.

- the reddish part or the fading part is emphasized.

- the reddish portion and the fading portion may not have a shape characteristic as the uneven portion does, and it is therefore desirable to emphasize them by a method different from that used for the uneven portion.

- the reddish portion is a portion that is visually more reddish than the surrounding colors

- the fading portion is a portion that is visually less reddish than the surrounding colors.

- the endoscope apparatus can be configured similarly to the first embodiment.

- the same components as those in the first embodiment are denoted by the same reference numerals, and description thereof will be omitted as appropriate.

- FIG. 10 shows a detailed configuration example of the image processing unit 301 in the second embodiment.

- the image processing unit 301 includes an image configuration unit 320, an image storage unit 330, a storage unit 350, a motion amount acquisition unit 360, an enhancement processing unit 370, and a known characteristic information acquisition unit 390.

- the distance information acquisition unit 380 is omitted.

- FIG. 11 shows a detailed configuration example of the enhancement processing unit 370 in the second embodiment.

- the enhancement processing unit 370 includes an object specifying unit 371 and an enhancement unit 372.

- the known characteristic information acquisition unit 390 acquires color information of a living body (for example, a reddish part or a fading part) to be extracted as known characteristic information.

- the target specifying unit 371 specifies a region that matches the color represented by the known characteristic information as a target. Taking the reddish part as an example, an area deviated in the reddish direction from the color of the normal mucous membrane is specified as the reddish part. For example, an area where the ratio R / G of the red (R) pixel value to the green (G) pixel value is larger than the surrounding R / G is identified as a reddish part.

- information about how much (for example, how many times) it should be larger than the surrounding R / G is known characteristic information.
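A minimal sketch of the R / G comparison, assuming the image is given as two same-sized lists of rows (red and green channels). The image-wide mean of R / G stands in for "the surrounding R / G", which is a simplification; the function name, `ratio_factor`, and `eps` are hypothetical. `ratio_factor` plays the role of the stored "how many times larger" value.

```python
def reddish_mask(red, green, ratio_factor, eps=1e-6):
    """Mark pixels whose R/G exceeds ratio_factor times the reference R/G.

    red, green: 2-D lists of pixel values with identical shape.
    The reference is the image-wide mean R/G (an assumed stand-in for
    the R/G of the surrounding mucosa).
    """
    ratios = [[r / (g + eps) for r, g in zip(red_row, green_row)]
              for red_row, green_row in zip(red, green)]
    flat = [v for row in ratios for v in row]
    reference = sum(flat) / len(flat)
    return [[v > ratio_factor * reference for v in row] for row in ratios]
```

A local neighborhood mean instead of the image-wide mean would follow the text more closely at the cost of a longer example.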

- the range of R / G and hue value may be stored as known characteristic information, and an area that matches the range of R / G and hue value may be set as the redness portion.

- image frequency and shape information (for example, size and shape) characteristic of the reddish portion may also be used as known characteristic information.

- the emphasis unit 372 performs a process of emphasizing the color of the identified target when it determines that the amount of motion is large and screening observation is being performed. For example, the redness of the reddish part (for example, R / G or the saturation of the red hue range) is increased, and the redness of the fading part is reduced. On the other hand, when it determines that the amount of motion is small and magnified observation is being performed, processing for enhancing an edge component of the image and processing for enhancing a specific frequency region by frequency analysis are performed. Further, color enhancement similar to that during screening observation may be performed.
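The color-enhancement branch can be illustrated by scaling the red channel inside the identified region, which raises R / G there while leaving other pixels untouched. The gain value, the 8-bit clipping, and the function name are assumptions for illustration; a gain below 1 would reduce redness, as described for the fading part.

```python
def enhance_redness(red, mask, gain=1.2, max_value=255):
    """Scale red pixel values where mask is True, clipped to max_value.

    red: 2-D list of red-channel values
    mask: 2-D list of booleans marking the identified target
    gain: hypothetical strength (> 1 increases redness, < 1 reduces it)
    """
    return [[min(max_value, round(r * gain)) if m else r
             for r, m in zip(red_row, mask_row)]
            for red_row, mask_row in zip(red, mask)]
```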

- the present embodiment is not limited to this, and the emphasis process may be performed without specifying the target.

- the redness enhancement characteristic is stored as known characteristic information.

- the detection target is not limited to the reddish part and the fading part, but may be a polyp, for example.

- a shape (or color) peculiar to the polyp may be stored as known characteristic information, and the polyp may be detected by pattern matching (or color comparison). Then, when it is determined that the amount of motion is large and screening observation is being performed, the polyp may be emphasized by contour enhancement. When it is determined that the amount of motion is small and magnified observation is being performed, color enhancement may be performed in addition to the contour enhancement.

- FIG. 12 shows a flowchart when the processing performed by the image processing unit 301 is realized by software.

- a captured image is acquired (step S21).

- an object is specified by the above-described method (step S22).

- the amount of motion of the subject is calculated from the captured image (step S23), and it is determined whether or not the amount of motion (for example, the magnitude of the motion vector) is larger than a threshold (step S24). If the amount of motion is larger than the threshold, it is determined that screening observation is being performed, the first enhancement processing is performed on the captured image (step S25), and the processed image is output (step S26). In the first enhancement process, the color of the specified target is enhanced.

- the second enhancement process is performed on the captured image (step S27), and the processed image is output (step S28).

- the color and edge of the identified target are enhanced.

- the known characteristic information acquisition unit 390 acquires known characteristic information that is information representing a known characteristic related to the color of the subject.

- the enhancement process for the color can be performed with the content according to the observation state.

- by using the information regarding the color, it is possible, for example, to emphasize a lesion or the like whose color differs from that of the normal part so that it is easy to see.

- the emphasis processing unit 370 performs the emphasis process using a subject that matches the characteristic specified by the known characteristic information as an object of the emphasis process.

- the target specifying unit 371 specifies a target that matches a desired characteristic (for example, color) represented by the known characteristic information from the captured image, and the enhancement processing unit 370 emphasizes the specified target.

- the emphasis process may be performed based on the known characteristic information without specifying the target, without being limited to the case where the target specifying unit 371 is included.

- when the amount of motion (for example, the magnitude of the motion vector) is larger than the threshold, the enhancement processing unit 370 performs enhancement processing that enhances the color of the target (for example, R / G or the saturation of a predetermined hue). On the other hand, when the amount of motion is smaller than the threshold, it performs enhancement processing that enhances at least the structure of the target.

- the known characteristic information is information representing a known characteristic relating to the color of the reddish portion having a red component (for example, R / G) larger than the color of the normal mucous membrane.

- the enhancement processing unit 370 performs enhancement processing for enhancing the red component (for example, R / G or saturation of the red hue range) of the reddish portion specified as a target.

- the location of the lesioned part can be specified without overlooking the lesioned part having a characteristic color such as a reddish part or a fading part.

- in magnified observation, where motion is slow, not only the color but also the structure can be emphasized, so that it is easy to see the fine structure of the lesion that the user is paying attention to.

- the visibility in each observation state can be improved, and a reduction in overlooked lesions and an improvement in diagnostic accuracy can be realized.

Landscapes

- Health & Medical Sciences (AREA)

- Life Sciences & Earth Sciences (AREA)

- Engineering & Computer Science (AREA)

- Surgery (AREA)

- Physics & Mathematics (AREA)

- Heart & Thoracic Surgery (AREA)

- General Health & Medical Sciences (AREA)

- Optics & Photonics (AREA)

- Pathology (AREA)

- Radiology & Medical Imaging (AREA)

- Biophysics (AREA)

- Biomedical Technology (AREA)

- Veterinary Medicine (AREA)

- Medical Informatics (AREA)

- Molecular Biology (AREA)

- Animal Behavior & Ethology (AREA)

- Nuclear Medicine, Radiotherapy & Molecular Imaging (AREA)

- Public Health (AREA)

- Signal Processing (AREA)

- Multimedia (AREA)

- Computer Vision & Pattern Recognition (AREA)

- General Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Endoscopes (AREA)

- Instruments For Viewing The Inside Of Hollow Bodies (AREA)

- Image Processing (AREA)

Priority Applications (3)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| CN201380073719.1A CN105025775A (zh) | 2013-02-26 | 2013-09-24 | 图像处理装置、内窥镜装置、图像处理方法和图像处理程序 |

| EP13876108.5A EP2962621A4 (en) | 2013-02-26 | 2013-09-24 | IMAGE PROCESSING DEVICE, ENDOSCOPE DEVICE, IMAGE PROCESSING METHOD, AND IMAGE PROCESSING PROGRAM |

| US14/834,816 US20150363942A1 (en) | 2013-02-26 | 2015-08-25 | Image processing device, endoscope apparatus, image processing method, and information storage device |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| JP2013-035729 | 2013-02-26 | ||

| JP2013035729A JP6150554B2 (ja) | 2013-02-26 | 2013-02-26 | 画像処理装置、内視鏡装置、画像処理装置の作動方法及び画像処理プログラム |

Related Child Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| US14/834,816 Continuation US20150363942A1 (en) | 2013-02-26 | 2015-08-25 | Image processing device, endoscope apparatus, image processing method, and information storage device |

Publications (1)

| Publication Number | Publication Date |

|---|---|

| WO2014132474A1 (ja) | 2014-09-04 |

Family

ID=51427768

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| PCT/JP2013/075627 Ceased WO2014132474A1 (ja) | 2013-02-26 | 2013-09-24 | 画像処理装置、内視鏡装置、画像処理方法及び画像処理プログラム |

Country Status (5)

| Country | Link |

|---|---|

| US (1) | US20150363942A1 (en) |

| EP (1) | EP2962621A4 (en) |

| JP (1) | JP6150554B2 (en) |

| CN (1) | CN105025775A (en) |

| WO (1) | WO2014132474A1 (en) |

Cited By (1)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US9323978B2 (en) | 2013-06-27 | 2016-04-26 | Olympus Corporation | Image processing device, endoscope apparatus, and image processing method |

Families Citing this family (9)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP6401800B2 (ja) * | 2015-01-20 | 2018-10-10 | オリンパス株式会社 | 画像処理装置、画像処理装置の作動方法、画像処理装置の作動プログラムおよび内視鏡装置 |

| WO2017006404A1 (ja) | 2015-07-03 | 2017-01-12 | オリンパス株式会社 | 内視鏡システム |

| DE112016006299T5 (de) * | 2016-01-25 | 2018-10-11 | Sony Corporation | Medizinische Sicherheitssteuerungsvorrichtung, medizinisches Sicherheitssteuerungsverfahren und medizinisches Unterstützungssystem |

| JP6617124B2 (ja) * | 2017-07-20 | 2019-12-11 | セコム株式会社 | 物体検出装置 |

| CN111065315B (zh) * | 2017-11-02 | 2022-05-31 | Hoya株式会社 | 电子内窥镜用处理器以及电子内窥镜系统 |

| CN108154554A (zh) * | 2018-01-25 | 2018-06-12 | 北京雅森科技发展有限公司 | 基于脑部数据统计分析的立体图像生成方法及系统 |

| WO2021039438A1 (ja) | 2019-08-27 | 2021-03-04 | 富士フイルム株式会社 | 医療画像処理システム及びその動作方法 |

| CN117957567A (zh) | 2021-09-10 | 2024-04-30 | 波士顿科学医学有限公司 | 多个图像重建与配准的方法 |

| CN117940959A (zh) | 2021-09-10 | 2024-04-26 | 波士顿科学医学有限公司 | 鲁棒表面和深度估计的方法 |

Citations (5)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP2003088498A (ja) | 2001-09-19 | 2003-03-25 | Pentax Corp | 電子内視鏡装置 |

| JP2005342234A (ja) | 2004-06-03 | 2005-12-15 | Olympus Corp | 内視鏡装置 |

| JP2010005095A (ja) * | 2008-06-26 | 2010-01-14 | Fujinon Corp | 内視鏡装置における距離情報取得方法および内視鏡装置 |

| JP2012100909A (ja) * | 2010-11-11 | 2012-05-31 | Olympus Corp | 内視鏡装置及びプログラム |

| JP2012213552A (ja) * | 2011-04-01 | 2012-11-08 | Fujifilm Corp | 内視鏡システム、内視鏡システムのプロセッサ装置、及び画像処理方法 |

Family Cites Families (7)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP2003126030A (ja) * | 2001-10-23 | 2003-05-07 | Pentax Corp | 電子内視鏡装置 |

| JP4855868B2 (ja) * | 2006-08-24 | 2012-01-18 | オリンパスメディカルシステムズ株式会社 | 医療用画像処理装置 |

| WO2010024331A1 (ja) * | 2008-09-01 | 2010-03-04 | 株式会社 日立メディコ | 画像処理装置、及び画像処理方法 |

| JP5658931B2 (ja) * | 2010-07-05 | 2015-01-28 | オリンパス株式会社 | 画像処理装置、画像処理方法、および画像処理プログラム |

| JP5622461B2 (ja) * | 2010-07-07 | 2014-11-12 | オリンパス株式会社 | 画像処理装置、画像処理方法、および画像処理プログラム |

| JP5698476B2 (ja) * | 2010-08-06 | 2015-04-08 | オリンパス株式会社 | 内視鏡システム、内視鏡システムの作動方法及び撮像装置 |

| JP5611892B2 (ja) * | 2011-05-24 | 2014-10-22 | 富士フイルム株式会社 | 内視鏡システム及び内視鏡システムの作動方法 |

2013

- 2013-02-26 JP JP2013035729A patent/JP6150554B2/ja active Active

- 2013-09-24 CN CN201380073719.1A patent/CN105025775A/zh active Pending

- 2013-09-24 WO PCT/JP2013/075627 patent/WO2014132474A1/ja not_active Ceased

- 2013-09-24 EP EP13876108.5A patent/EP2962621A4/en not_active Withdrawn

2015

- 2015-08-25 US US14/834,816 patent/US20150363942A1/en not_active Abandoned

Non-Patent Citations (1)

| Title |

|---|

| See also references of EP2962621A4 |

Also Published As

| Publication number | Publication date |

|---|---|

| EP2962621A4 (en) | 2016-11-16 |

| CN105025775A (zh) | 2015-11-04 |

| EP2962621A1 (en) | 2016-01-06 |

| US20150363942A1 (en) | 2015-12-17 |

| JP6150554B2 (ja) | 2017-06-21 |

| JP2014161537A (ja) | 2014-09-08 |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| JP6150554B2 (ja) | 画像処理装置、内視鏡装置、画像処理装置の作動方法及び画像処理プログラム | |

| JP6112879B2 (ja) | 画像処理装置、内視鏡装置、画像処理装置の作動方法及び画像処理プログラム | |

| CN103561629B (zh) | 内窥镜装置及内窥镜装置的工作方法 | |

| JP6045417B2 (ja) | 画像処理装置、電子機器、内視鏡装置、プログラム及び画像処理装置の作動方法 | |

| JP6049518B2 (ja) | 画像処理装置、内視鏡装置、プログラム及び画像処理装置の作動方法 | |

| CN104883948B (zh) | 图像处理装置以及图像处理方法 | |

| WO2014119047A1 (ja) | 内視鏡用画像処理装置、内視鏡装置、画像処理方法及び画像処理プログラム | |

| JP6150555B2 (ja) | 内視鏡装置、内視鏡装置の作動方法及び画像処理プログラム | |

| WO2016136700A1 (ja) | 画像処理装置 | |

| JP6132901B2 (ja) | 内視鏡装置 | |

| JP6168876B2 (ja) | 検出装置、学習装置、検出方法、学習方法及びプログラム | |

| JP2014161355A (ja) | 画像処理装置、内視鏡装置、画像処理方法及びプログラム | |

| JP6128989B2 (ja) | 画像処理装置、内視鏡装置及び画像処理装置の作動方法 | |

| JP2014232470A (ja) | 検出装置、学習装置、検出方法、学習方法及びプログラム | |

| JP6168878B2 (ja) | 画像処理装置、内視鏡装置及び画像処理方法 |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| | WWE | Wipo information: entry into national phase | Ref document number: 201380073719.1; Country of ref document: CN |

| | 121 | Ep: the epo has been informed by wipo that ep was designated in this application | Ref document number: 13876108; Country of ref document: EP; Kind code of ref document: A1 |

| | NENP | Non-entry into the national phase | Ref country code: DE |

| | WWE | Wipo information: entry into national phase | Ref document number: 2013876108; Country of ref document: EP |