WO2014119047A1 - Endoscope image processing apparatus, endoscope apparatus, image processing method, and image processing program - Google Patents

Endoscope image processing apparatus, endoscope apparatus, image processing method, and image processing program

- Publication number

- WO2014119047A1 (application PCT/JP2013/077286)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- information

- unit

- subject

- image

- distance

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Classifications

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/0002—Inspection of images, e.g. flaw detection

- G06T7/0012—Biomedical image inspection

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B1/00—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor

- A61B1/00002—Operational features of endoscopes

- A61B1/00004—Operational features of endoscopes characterised by electronic signal processing

- A61B1/00009—Operational features of endoscopes characterised by electronic signal processing of image signals during a use of endoscope

- A61B1/000094—Operational features of endoscopes characterised by electronic signal processing of image signals during a use of endoscope extracting biological structures

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B1/00—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor

- A61B1/00002—Operational features of endoscopes

- A61B1/00004—Operational features of endoscopes characterised by electronic signal processing

- A61B1/00009—Operational features of endoscopes characterised by electronic signal processing of image signals during a use of endoscope

- A61B1/000095—Operational features of endoscopes characterised by electronic signal processing of image signals during a use of endoscope for image enhancement

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B1/00—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor

- A61B1/04—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor combined with photographic or television appliances

- A61B1/05—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor combined with photographic or television appliances characterised by the image sensor, e.g. camera, being in the distal end portion

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B1/00—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor

- A61B1/06—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor with illuminating arrangements

- A61B1/07—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor with illuminating arrangements using light-conductive means, e.g. optical fibres

-

- G—PHYSICS

- G01—MEASURING; TESTING

- G01B—MEASURING LENGTH, THICKNESS OR SIMILAR LINEAR DIMENSIONS; MEASURING ANGLES; MEASURING AREAS; MEASURING IRREGULARITIES OF SURFACES OR CONTOURS

- G01B11/00—Measuring arrangements characterised by the use of optical techniques

- G01B11/14—Measuring arrangements characterised by the use of optical techniques for measuring distance or clearance between spaced objects or spaced apertures

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/22—Matching criteria, e.g. proximity measures

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/24—Classification techniques

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/24—Classification techniques

- G06F18/241—Classification techniques relating to the classification model, e.g. parametric or non-parametric approaches

- G06F18/2413—Classification techniques relating to the classification model, e.g. parametric or non-parametric approaches based on distances to training or reference patterns

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T1/00—General purpose image data processing

- G06T1/0007—Image acquisition

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T5/00—Image enhancement or restoration

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/60—Analysis of geometric attributes

- G06T7/64—Analysis of geometric attributes of convexity or concavity

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V10/00—Arrangements for image or video recognition or understanding

- G06V10/40—Extraction of image or video features

- G06V10/44—Local feature extraction by analysis of parts of the pattern, e.g. by detecting edges, contours, loops, corners, strokes or intersections; Connectivity analysis, e.g. of connected components

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V10/00—Arrangements for image or video recognition or understanding

- G06V10/70—Arrangements for image or video recognition or understanding using pattern recognition or machine learning

- G06V10/764—Arrangements for image or video recognition or understanding using pattern recognition or machine learning using classification, e.g. of video objects

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/10—Image acquisition modality

- G06T2207/10024—Color image

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/10—Image acquisition modality

- G06T2207/10028—Range image; Depth image; 3D point clouds

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/10—Image acquisition modality

- G06T2207/10068—Endoscopic image

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/30—Subject of image; Context of image processing

- G06T2207/30004—Biomedical image processing

- G06T2207/30028—Colon; Small intestine

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/30—Subject of image; Context of image processing

- G06T2207/30004—Biomedical image processing

- G06T2207/30092—Stomach; Gastric

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V2201/00—Indexing scheme relating to image or video recognition or understanding

- G06V2201/03—Recognition of patterns in medical or anatomical images

- G06V2201/032—Recognition of patterns in medical or anatomical images of protuberances, polyps nodules, etc.

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V2201/00—Indexing scheme relating to image or video recognition or understanding

- G06V2201/03—Recognition of patterns in medical or anatomical images

- G06V2201/034—Recognition of patterns in medical or anatomical images of medical instruments

Definitions

- The present invention relates to an endoscope image processing apparatus, an endoscope apparatus, an image processing method, an image processing program, and the like.

- As a method of emphasizing a structure in a captured image (for example, a concavo-convex structure such as a groove), image processing is known, for example processing that emphasizes a specific spatial frequency, or the method disclosed in Patent Document 1 below.

- Instead of image processing, a method is also known in which some change (for example, dye spraying) is caused on the subject side and the changed subject is then imaged.

- Patent Document 1 discloses an approach that emphasizes a concavo-convex structure by comparing the luminance level of a target pixel in a local extraction area with the luminance level of its peripheral pixels and performing coloring processing when the target area is darker than the peripheral area.
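The luminance comparison attributed to Patent Document 1 can be illustrated with a minimal sketch. The source does not give the window size, the comparison statistic, or the color used, so the square window (`radius`), the comparison against the neighborhood mean, and the fixed blue `tint` below are all assumptions made for illustration only.

```python
def color_dark_pixels(lum, radius=1, tint=(0, 0, 255)):
    """Tint every pixel that is darker than the mean of its peripheral pixels.

    lum    : 2D list of luminance values (hypothetical input format).
    radius : half-width of the local extraction area (assumed square window).
    tint   : RGB color written to darker-than-surroundings pixels (assumption).
    """
    h, w = len(lum), len(lum[0])
    # Start from a gray RGB copy of the luminance image.
    out = [[(v, v, v) for v in row] for row in lum]
    for y in range(h):
        for x in range(w):
            # Collect the peripheral pixels, excluding the target pixel itself.
            neighbors = [
                lum[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
                if (j, i) != (y, x)
            ]
            if neighbors and lum[y][x] < sum(neighbors) / len(neighbors):
                out[y][x] = tint
    return out


image = [[10, 10, 10], [10, 2, 10], [10, 10, 10]]
result = color_dark_pixels(image)
print(result[1][1])  # the dark center pixel receives the tint
```

Because this kind of rule reacts to any locally dark pixel, it cannot by itself tell a groove in the mucous membrane from, say, a shadow on a treatment tool, which is the limitation the later bullets address.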

- An endoscope image processing apparatus, an endoscope apparatus, an image processing method, an image processing program, and the like that can apply enhancement processing to the subject that should be enhanced can be provided.

- One aspect of the present invention relates to an endoscope image processing apparatus including: an image acquisition unit that acquires a captured image including an image of a subject; a distance information acquisition unit that acquires distance information based on the distance from the imaging unit to the subject when the captured image is captured; an unevenness specifying unit that performs unevenness specifying processing, which specifies, based on the distance information and known characteristic information (information representing a known characteristic of the structure of the subject), the uneven portion of the subject that matches the characteristic specified by the known characteristic information; a biological mucous membrane specifying unit that specifies an area of the biological mucous membrane in the captured image; and an emphasis processing unit that performs emphasis processing on the specified area of the biological mucous membrane based on the information of the uneven portion specified by the unevenness specifying processing.

- According to this aspect, the area of the biological mucous membrane in the captured image is specified, and the specified area is emphasized based on the information of the uneven portion obtained from the known characteristic information and the distance information. This makes it possible to apply emphasis processing to the subject that should be emphasized.

- Another aspect of the present invention relates to an endoscope image processing apparatus including: an image acquisition unit that acquires a captured image including an image of a subject; a distance information acquisition unit that acquires distance information based on the distance from the imaging unit to the subject when the captured image is captured; an unevenness specifying unit that performs the unevenness specifying processing; an exclusion target specifying unit that specifies an exclusion target area in the captured image; and an emphasis processing unit that performs emphasis processing on the captured image based on the information of the uneven portion specified by the unevenness specifying processing, and that does not apply, or suppresses, the emphasis processing for the specified exclusion target area.

- According to this aspect, the exclusion target area in the captured image is specified, and the emphasis processing based on the information of the uneven portion, which is obtained from the known characteristic information and the distance information, is not applied to or is suppressed for the exclusion target area. This makes it possible to disable or suppress the emphasis processing for subjects that should not be emphasized and, as a result, to apply the emphasis processing to the subjects that should be emphasized.

- Yet another aspect of the present invention relates to an endoscope apparatus including the endoscope image processing apparatus described in any of the above.

- Yet another aspect of the present invention relates to an image processing method including: acquiring a captured image including an image of a subject; acquiring distance information based on the distance from the imaging unit to the subject when the captured image is captured; performing unevenness specifying processing that specifies, based on the distance information and known characteristic information (information representing a known characteristic of the structure of the subject), the uneven portion of the subject that matches the characteristic specified by the known characteristic information; specifying the area of the biological mucous membrane in the captured image; and emphasizing the specified area of the biological mucous membrane based on the information of the uneven portion specified by the unevenness specifying processing.

- Yet another aspect of the present invention relates to an image processing method including: acquiring a captured image including an image of a subject; acquiring distance information based on the distance from the imaging unit to the subject when the captured image is captured; performing unevenness specifying processing that specifies, based on the distance information and known characteristic information (information representing a known characteristic of the structure of the subject), the uneven portion of the subject that matches the characteristic specified by the known characteristic information; specifying an exclusion target area in the captured image; and, when performing emphasis processing on the captured image based on the information of the uneven portion specified by the unevenness specifying processing, not applying or suppressing the emphasis processing for the specified exclusion target area.

- Yet another aspect of the present invention relates to an image processing program that causes a computer to execute the steps of: acquiring a captured image including an image of a subject; acquiring distance information based on the distance from the imaging unit to the subject when the captured image is captured; performing unevenness specifying processing that specifies, based on the distance information and known characteristic information (information representing a known characteristic of the structure of the subject), the uneven portion of the subject that matches the characteristic specified by the known characteristic information; specifying the area of the biological mucous membrane in the captured image; and emphasizing the specified area of the biological mucous membrane based on the information of the uneven portion specified by the unevenness specifying processing.

- Yet another aspect of the present invention relates to an image processing program that causes a computer to execute the steps of: acquiring a captured image including an image of a subject; acquiring distance information based on the distance from the imaging unit to the subject when the captured image is captured; performing unevenness specifying processing that specifies, based on the distance information and known characteristic information (information representing a known characteristic of the structure of the subject), the uneven portion of the subject that matches the characteristic specified by the known characteristic information; specifying an exclusion target area in the captured image; and, when performing emphasis processing on the captured image based on the information of the uneven portion specified by the unevenness specifying processing, not applying or suppressing the emphasis processing for the specified exclusion target area.

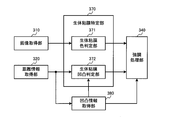

- FIG. 1 shows a first configuration example of the image processing apparatus.

- FIG. 2 shows a second configuration example of the image processing apparatus.

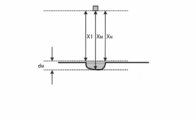

- FIG. 3 is a configuration example of the endoscope apparatus in the first embodiment.

- FIG. 4 is a detailed configuration example of the rotational color filter.

- FIG. 5 is a detailed configuration example of the image processing unit in the first embodiment.

- FIG. 6 is a detailed configuration example of a biological mucous membrane identification unit.

- FIG. 7A and FIG. 7B are explanatory diagrams of the emphasis amount in the emphasis processing.

- FIG. 8 is a detailed configuration example of the unevenness information acquisition unit.

- FIG. 9A to FIG. 9F are explanatory diagrams of the process of extracting the extracted unevenness information by morphological processing.

- FIGS. 15 (A) and 15 (B) are setting examples of the emphasis amount (gain coefficient) in the emphasizing process of the concave portion.

- FIG. 16 is a detailed configuration example of the distance information acquisition unit.

- FIG. 17 is a detailed configuration example of the image processing unit in the second embodiment.

- FIG. 18 is a detailed configuration example of the exclusion target identification unit.

- FIG. 19 is a detailed configuration example of the excluded subject identification unit.

- FIG. 20 shows an example of a captured image when inserting forceps.

- FIGS. 21 (A) to 21 (C) are explanatory diagrams of exclusion target identification processing in the case where the treatment tool is to be excluded.

- FIG. 22 is a detailed configuration example of the excluded scene identification unit.

- FIG. 23 is a detailed configuration example of the image processing unit in the third embodiment.

- FIG. 24A is a view showing the relationship between an imaging unit and an object when observing an abnormal part.

- FIG. 24B is an example of the acquired image.

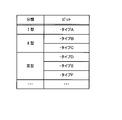

- FIG. 25 is an explanatory diagram of classification processing.

- FIG. 26 is a detailed configuration example of a biological mucous membrane identifying unit in the third embodiment.

- FIG. 27 is a detailed configuration example of the image processing unit in the first modified example of the third embodiment.

- FIG. 28 is a detailed configuration example of the image processing unit in the second modified example of the third embodiment.

- FIG. 29 is a detailed configuration example of the image processing unit in the fourth embodiment.

- FIG. 30 is a detailed structural example of the uneven

- FIGS. 31 (A) and 31 (B) are explanatory diagrams of processing performed by the surface shape calculation unit.

- FIG. 32 (A) shows an example of a basic pit.

- FIG. 32 (B) shows an example of a correction pit.

- FIG. 33 is a detailed configuration example of the surface shape calculation unit.

- FIG. 34 shows a detailed configuration example of a classification processing unit in the first classification processing method.

- FIGS. 35 (A) to 35 (F) are explanatory views of a specific example of classification processing.

- FIG. 36 shows a detailed configuration example of a classification processing unit in the second classification processing method.

- FIG. 37 shows an example of classification types in the case of using a plurality of classification types.

- FIGS. 38A to 38F show examples of pit patterns.

- Method of this embodiment. As a method of emphasizing the unevenness of a subject, there is a method of causing some change on the subject side and then imaging the changed subject.

- In an endoscope apparatus for living bodies, for example, there is a method of staining the living body itself, or of giving contrast to the surface mucous membrane, by spraying a dye such as indigo carmine.

- However, dye spraying is time-consuming and costly, the sprayed dye may impair the original color of the subject, and the visibility of structures other than unevenness may be reduced.

- In addition, spraying dye onto a living body may be highly invasive for the patient.

- Therefore, in the present embodiment, the unevenness of the subject is emphasized by image processing.

- Alternatively, rather than emphasizing only the uneven portion itself, the uneven portion may be classified and emphasis may be performed according to the classification result.

- As the emphasis processing, various methods can be adopted, for example reproducing the effect of the dye spraying described above or emphasizing high-frequency components.

- However, emphasis by image processing has the problem that the unevenness of subjects that should be emphasized and the unevenness of subjects that should not be emphasized are emphasized alike.

- Therefore, in the present embodiment, the emphasis processing is performed on the subject that should be emphasized.

- Alternatively, when the image captures a subject (or a scene) that should not be emphasized, the emphasis processing on that subject (or on the entire image) is excluded or suppressed.

- FIG. 1 shows a first configuration example of an image processing apparatus as a configuration example in the case of performing enhancement processing on a subject to be enhanced.

- The image processing apparatus includes an image acquisition unit 310, a distance information acquisition unit 320, an unevenness specifying unit 350, a biological mucous membrane specifying unit 370, and an emphasis processing unit 340.

- The image acquisition unit 310 acquires a captured image including an image of a subject.

- The distance information acquisition unit 320 acquires distance information based on the distance from the imaging unit to the subject when the captured image is captured.

- The unevenness specifying unit 350 performs unevenness specifying processing: based on the distance information and known characteristic information (information representing a known characteristic of the structure of the subject), it specifies the uneven portion of the subject that matches the characteristic specified by the known characteristic information.

- The biological mucous membrane specifying unit 370 specifies an area of the biological mucous membrane in the captured image.

- The emphasis processing unit 340 emphasizes the specified area of the biological mucous membrane based on the information of the uneven portion specified by the unevenness specifying processing.

- According to this configuration example, it is possible to specify the biological mucous membrane, which is the subject to be emphasized, and to perform emphasis processing on it. That is, emphasis processing can be applied to the biological mucous membrane while being withheld from, or suppressed in, areas other than the biological mucous membrane that do not need to be emphasized. This makes it easier for the user to distinguish the biological mucous membrane from other areas, which improves examination accuracy and reduces user fatigue.
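The data flow of this first configuration can be sketched as follows. This is only an illustration under stated assumptions: the function name, the representation of the uneven-portion information as a per-pixel recess strength in the range 0 to 1, the boolean mucosa mask, and the darkening rule with a `gain` factor are all hypothetical and are not taken from the source.

```python
def emphasize_mucosa(image, recess_strength, mucosa_mask, gain=0.5):
    """Darken recessed pixels, but only where the mucosa mask is True.

    image           : 2D list of pixel intensities (hypothetical format).
    recess_strength : 2D list in [0, 1], standing in for the uneven-portion
                      information from the unevenness specifying processing.
    mucosa_mask     : 2D list of booleans, standing in for the output of the
                      biological mucous membrane specifying unit.
    gain            : emphasis amount (assumed linear darkening).
    """
    out = []
    for img_row, rec_row, mask_row in zip(image, recess_strength, mucosa_mask):
        out_row = []
        for pixel, strength, is_mucosa in zip(img_row, rec_row, mask_row):
            if is_mucosa:
                # Emphasis is applied only inside the specified mucosa area.
                pixel = pixel * (1 - gain * strength)
            out_row.append(pixel)
        out.append(out_row)
    return out


image = [[100, 100], [100, 100]]
recess = [[1.0, 0.0], [1.0, 1.0]]
mask = [[True, True], [False, True]]
print(emphasize_mucosa(image, recess, mask))
```

The pixel at row 1, column 0 has a strong recess value but lies outside the mask, so it is left unchanged; this is the behavior the configuration is meant to provide.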

- The distance information is information in which each position in the captured image is associated with the distance to the subject at that position.

- For example, the distance information is a distance map.

- In the distance map, when the optical axis direction of the imaging unit 200 in FIG. 3 is taken as the Z axis, each point in the XY plane (for example, each pixel) holds as its value the distance (depth) in the Z-axis direction to the subject at that point.

- The distance information may be any of various kinds of information acquired based on the distance from the imaging unit 200 to the subject. For example, in the case of triangulation with a stereo optical system, a distance referenced to an arbitrary point on the plane connecting the two lenses that generate the parallax may be used as the distance information. Alternatively, when the Time of Flight method is used, a distance referenced to each pixel position on the image sensor surface, for example, may be acquired as the distance information.
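As an illustration of how a distance map might be obtained by stereo triangulation, the sketch below uses the standard rectified pinhole-stereo relation Z = f * B / d. The source does not state this formula or any parameters, so the function name, the pixel-unit focal length, the millimeter baseline, and the handling of zero disparity are assumptions.

```python
def disparity_to_distance_map(disparity_px, focal_length_px, baseline_mm):
    """Convert a disparity map to a distance map with Z = f * B / d.

    disparity_px    : 2D list of disparities in pixels (hypothetical input).
    focal_length_px : focal length in pixels (assumed known from calibration).
    baseline_mm     : spacing between the two lenses in millimeters (assumed).
    Pixels with no measurable disparity are assigned infinity.
    """
    return [
        [focal_length_px * baseline_mm / d if d > 0 else float("inf") for d in row]
        for row in disparity_px
    ]


disparity = [[10, 20], [0, 40]]
distance_map = disparity_to_distance_map(disparity, focal_length_px=500, baseline_mm=4)
print(distance_map)  # one Z-axis distance per pixel, as in the distance map above
```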

- In these examples the reference point for distance measurement is set in the imaging unit 200, but the reference point may instead be set at an arbitrary location other than the imaging unit 200, for example an arbitrary location in the three-dimensional space containing the imaging unit and the subject. Information obtained with such a reference point is also included in the distance information of the present embodiment.

- The distance from the imaging unit 200 to the subject may be, for example, the distance from the imaging unit 200 to the subject in the depth direction.

- For example, the distance in the optical axis direction of the imaging unit 200 may be used.

- Alternatively, when a viewpoint is set in a direction perpendicular to the optical axis of the imaging unit 200, the distance observed at that viewpoint (the distance from the imaging unit 200 to the subject along a line that passes through the viewpoint and is parallel to the optical axis) may be used.

- For example, the distance information acquisition unit 320 may use a known coordinate conversion process to convert the coordinates of each corresponding point in a first coordinate system, whose origin is a first reference point of the imaging unit 200, into the coordinates of that point in a second coordinate system, whose origin is a second reference point in the three-dimensional space, and may measure the distance based on the converted coordinates.

- In this case, the distance from the second reference point to each corresponding point in the second coordinate system is the distance from the first reference point to each corresponding point in the first coordinate system, that is, "the distance from the imaging unit to each corresponding point", and the two are the same.

- The distance information acquisition unit 320 may also place a virtual reference point at a position that preserves the same magnitude relationship between the distance values of the pixels as on the distance map acquired when the reference point is set in the imaging unit 200, and thereby acquire distance information based on the distance from the imaging unit 200 to the corresponding point. For example, when the actual distances from the imaging unit 200 to three corresponding points are "3", "4", and "5", the distance information acquisition unit 320 may acquire "1.5", "2", and "2.5", in which the distances are uniformly halved while the magnitude relationship of the distance values between the pixels is maintained. As described later in FIG.

- When the unevenness information acquisition unit 380 acquires unevenness information using an extraction processing parameter, it uses a different parameter as the extraction processing parameter than it would if the reference point were set in the imaging unit 200. Because the extraction processing parameter is determined using the distance information, the way the parameter is determined also changes when the representation of the distance information changes because the reference point of the distance measurement has changed. For example, when the extracted unevenness information is extracted by morphological processing as described later, the size of the structural element used for the extraction process (for example, the diameter of a sphere) is adjusted, and the uneven portion is extracted using the adjusted structural element.
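A minimal sketch of morphological extraction on a one-dimensional distance profile is given below. It is a simplification under stated assumptions: the source mentions a sphere as the structural element, whereas this sketch swaps in a flat element of odd `width` (in pixels), and it treats a recess as a narrow run of larger distance values. In practice the width would be chosen from the known characteristic information and adjusted according to the distance information; here it is simply passed in.

```python
def erode(profile, width):
    """Grayscale erosion with a flat element: sliding-window minimum."""
    half = width // 2
    n = len(profile)
    return [min(profile[max(0, i - half):min(n, i + half + 1)]) for i in range(n)]


def dilate(profile, width):
    """Grayscale dilation with a flat element: sliding-window maximum."""
    half = width // 2
    n = len(profile)
    return [max(profile[max(0, i - half):min(n, i + half + 1)]) for i in range(n)]


def extract_recesses(distance_profile, width):
    """Return per-sample recess depth for recesses narrower than `width`.

    Opening (erosion, then dilation) removes distance peaks narrower than the
    element; the difference from the original profile is what was removed.
    """
    opened = dilate(erode(distance_profile, width), width)
    return [d - o for d, o in zip(distance_profile, opened)]


narrow_groove = [10, 10, 10, 12, 10, 10, 10]
wide_undulation = [10, 12, 12, 12, 12, 12, 10]
print(extract_recesses(narrow_groove, 3))
print(extract_recesses(wide_undulation, 3))
```

With an element three samples wide, the one-sample groove is extracted while the five-sample undulation is left out, which illustrates why the element size has to track how the distance information is represented.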

- The known characteristic information is information that can separate, among the structures on the subject surface, the structures that are useful in the present embodiment from those that are not.

- Specifically, information on uneven portions that are useful to emphasize may be used as the known characteristic information, in which case a subject that matches the known characteristic information is the target of the emphasis processing.

- Alternatively, information on structures that are not useful to emphasize may be used as the known characteristic information, in which case a subject that does not match the known characteristic information is the target of the emphasis processing.

- Alternatively, information on both the useful uneven portions and the non-useful structures may be held so that the range of the useful uneven portions is set with high accuracy.

- Alternatively, the known characteristic information is information that can classify the structure of the subject into a particular type or state.

- For example, it is information for classifying structures of a living body into types such as blood vessels, polyps, cancer, and other lesions, namely information such as the shape, color, and size characteristic of those structures.

- It may also be information that makes it possible to determine whether a specific structure (for example, a pit pattern present in the large intestine mucosa) is normal or abnormal, namely information such as the shape, color, and size of the normal or abnormal structure.

- The area of the biological mucous membrane is not limited to the entire biological mucous membrane shown in the captured image; a part of it may be specified as the area of the biological mucous membrane. That is, it is sufficient to specify, as the area of the biological mucous membrane, the part of the biological mucous membrane on which the emphasis processing is to be performed.

- For example, a groove region that is a part of the surface of the living body is specified as the area of the biological mucous membrane, and that region is emphasized.

- Alternatively, a portion of the surface of the living body where a feature other than unevenness (for example, color) meets a predetermined condition is specified as the area of the biological mucous membrane.

- FIG. 2 shows a second configuration example of the image processing apparatus as a configuration example in the case of excluding or suppressing enhancement processing on a subject (or a scene) that should not be enhanced.

- the image processing apparatus includes an image acquisition unit 310, a distance information acquisition unit 320, an unevenness information acquisition unit 380, an exclusion target identification unit 330, and an emphasis processing unit 340.

- the image acquisition unit 310 acquires a captured image including an image of a subject.

- the distance information acquisition unit 320 acquires distance information based on the distance from the imaging unit to the subject when capturing a captured image.

- The unevenness specifying unit 350 performs unevenness specifying processing: based on the distance information and known characteristic information (information representing a known characteristic of the structure of the subject), it specifies the uneven portion of the subject that matches the characteristic specified by the known characteristic information.

- the emphasizing processing unit 340 performs emphasizing processing on the captured image based on the information of the concavo-convex portion specified by the concavo-convex specifying processing.

- the exclusion target specifying unit 330 specifies an exclusion target area in the captured image for which enhancement processing is not performed. At this time, the emphasizing processing unit 340 does not apply or suppresses emphasizing processing on the identified exclusion target area.

- the emphasizing process is applied to the area other than the exclusion target, and as a result, the emphasizing process can be performed on the biological mucous membrane to be emphasized. This makes it easy for the user to distinguish between the mucous membrane of the living body and the other area, thereby improving the examination accuracy and reducing the user's fatigue.

- the exclusion target is a subject or scene that does not need to be emphasized (for example, one that is not a living body) or a subject or scene that is not useful to emphasize (for example, one whose emphasis does not aid the doctor's medical examination).

- examples include a subject such as a residue, a blood clot, a treatment tool, a blocked-up shadow (blackout) area, or a blown-out highlight (whiteout) area, and a scene such as water supply or treatment with an IT knife.

- mist is generated when the knife cauterizes a living body. If an image in which such mist is captured is enhanced, there is a possibility that the image will be rather difficult to observe.

- in the case of an image in which a subject to be excluded appears, the enhancement processing is not applied to (or is suppressed for) the area of that subject; in the case of an image capturing a scene to be excluded, the enhancement processing is not applied to (or is suppressed for) the entire image.
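
- as an illustration, the exclusion behaviour described above can be sketched as a per-pixel blend. This is a minimal sketch under stated assumptions, not the apparatus's implementation: the function names, the boolean mask representation, and the `suppression` weight are all hypothetical.

```python
def apply_enhancement(original, enhanced, excluded, suppression=0.0):
    """Blend enhanced pixels into the original, skipping excluded pixels.

    original, enhanced: 2-D lists of pixel values of equal size.
    excluded: 2-D list of booleans, True inside the exclusion target area.
    suppression: weight of the enhancement inside the exclusion area
        (0.0 = not applied, between 0 and 1 = suppressed). Hypothetical knob.
    """
    result = []
    for row_o, row_e, row_x in zip(original, enhanced, excluded):
        out_row = []
        for o, e, x in zip(row_o, row_e, row_x):
            weight = suppression if x else 1.0
            out_row.append(o + weight * (e - o))
        result.append(out_row)
    return result


def apply_to_scene(original, enhanced, scene_excluded, suppression=0.0):
    """Treat the whole image as excluded when the scene is an exclusion target."""
    mask = [[scene_excluded] * len(row) for row in original]
    return apply_enhancement(original, enhanced, mask, suppression)
```

With `suppression=0.0` an excluded pixel keeps its original value; an intermediate weight corresponds to suppressing rather than skipping the enhancement.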

- a local uneven structure (e.g., polyp, fold, etc.)

- a desired size (e.g., width, height, depth, etc.)

- the extraction process is performed excluding the global structure (for example, the surface undulation larger than the scale).

- FIG. 3 shows an example of the configuration of the endoscope apparatus according to the first embodiment.

- the endoscope apparatus includes a light source unit 100, an imaging unit 200, a processor unit 300, a display unit 400, and an external I/F unit 500.

- the light source unit 100 includes a white light source 110, a light source diaphragm 120, a light source diaphragm driving unit 130 for driving the light source diaphragm 120, and a rotational color filter 140 having filters of a plurality of spectral transmittances.

- the light source unit 100 also includes a rotary drive unit 150 for driving the rotary color filter 140, and a condenser lens 160 for condensing light transmitted through the rotary color filter 140 on the incident end face of the light guide fiber 210.

- the light source diaphragm drive unit 130 adjusts the light amount by opening and closing the light source diaphragm 120 based on a control signal from the control unit 302 of the processor unit 300.

- FIG. 4 shows a detailed configuration example of the rotary color filter 140.

- the rotary color filter 140 is composed of a red (abbreviated as R) filter 701, a green (abbreviated as G) filter 702, and a blue (abbreviated as B) filter 703 for the three primary colors, and a rotary motor 704.

- the R filter 701 transmits light having a wavelength of 580 nm to 700 nm.

- the G filter 702 transmits light having a wavelength of 480 nm to 600 nm.

- the B filter 703 transmits light having a wavelength of 400 nm to 500 nm.

- the rotary drive unit 150 rotates the rotary color filter 140 at a predetermined rotation speed in synchronization with the imaging period of the imaging device 260, based on the control signal from the control unit 302. For example, if the rotary color filter 140 rotates 20 times per second, each color filter crosses the incident white light at intervals of 1/60 second. In this case, the imaging device 260 completes the imaging and transfer of the image signal at intervals of 1/60 second.

- the imaging device 260 is, for example, a monochrome single-plate imaging device, configured of, for example, a CCD or a CMOS image sensor. That is, in the present embodiment, field-sequential imaging is performed, in which an image of each of the three primary colors (R, G, or B) is captured at intervals of 1/60 second.
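
- the timing stated above follows from simple arithmetic: three filters per rotation and 20 rotations per second give 60 filter crossings per second. A minimal sketch of that calculation follows; the function names are hypothetical, and counting one complete R, G, B set per rotation is an assumption about how the fields are grouped.

```python
from fractions import Fraction


def filter_interval(rotations_per_second, filters_per_wheel=3):
    """Time in seconds between successive color filters crossing the light path."""
    return Fraction(1, rotations_per_second * filters_per_wheel)


def rgb_sets_per_second(rotations_per_second):
    """Each full rotation yields one R field, one G field and one B field."""
    return rotations_per_second


print(filter_interval(20))  # 1/60
```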

- the imaging unit 200 is formed to be elongated and bendable, for example, to allow insertion into a body cavity.

- the imaging unit 200 includes a light guide fiber 210 for guiding the light condensed by the light source unit 100 to an illumination lens 220, and the illumination lens 220, which diffuses the light guided to the tip by the light guide fiber 210 and irradiates the observation target with it.

- the imaging unit 200 also includes an objective lens 230 for condensing reflected light returning from the observation target, a focus lens 240 for adjusting the focal position, a lens driving unit 250 for moving the position of the focus lens 240, and an imaging device 260 for detecting the condensed reflected light.

- the lens driving unit 250 is, for example, a VCM (Voice Coil Motor), and is connected to the focus lens 240.

- the lens driving unit 250 adjusts the in-focus object position by moving the focus lens 240 over a continuous range of positions.

- the imaging unit 200 is provided with a switch 270 for allowing the user to turn the emphasizing process on and off.

- an on/off instruction signal for the enhancement processing is output from the switch 270 to the control unit 302.

- the imaging unit 200 also includes a memory 211 in which information of the imaging unit 200 is recorded.

- in the memory 211, a scope ID indicating the use of the imaging unit 200, information on the optical characteristics of the imaging unit 200, information on the functions of the imaging unit 200, and the like are recorded.

- the scope ID is, for example, an ID corresponding to a scope for the lower digestive tract (large intestine) or a scope for the upper digestive tract (esophagus, stomach).

- the information of the optical characteristic is, for example, information such as the magnification (angle of view) of the optical system.

- the information of the function is information representing the execution state of the function such as water supply provided in the scope.

- the processor unit 300 (control device) performs control of each unit of the endoscope apparatus and image processing.

- the processor unit 300 includes a control unit 302 and an image processing unit 301.

- the control unit 302 is bidirectionally connected to each unit of the endoscope apparatus, and controls each unit. For example, the control unit 302 transfers the control signal to the lens drive unit 250 to change the position of the focus lens 240.

- the image processing unit 301 performs a process of specifying an area of a biological mucous membrane from a captured image, an enhancement process of the specified area of a biological mucous membrane, and the like. Details of the image processing unit 301 will be described later.

- the display unit 400 displays the endoscopic image transferred from the processor unit 300.

- the display unit 400 is an image display device capable of displaying moving images, such as an endoscope monitor, for example.

- the external I/F unit 500 is an interface for performing input from the user to the endoscope apparatus.

- the external I/F unit 500 is configured to include, for example, a power switch for turning the power on and off, a mode switching button for switching the shooting mode and other various modes, an AF button for starting an autofocus operation that automatically focuses on the subject, and the like.

- FIG. 5 shows a configuration example of the image processing unit 301 in the first embodiment.

- the image processing unit 301 includes an image acquisition unit 310, a distance information acquisition unit 320, a biological mucous membrane identification unit 370, an emphasis processing unit 340, a post-processing unit 360, an unevenness identification unit 350, and a storage unit 390.

- the unevenness identification unit 350 includes an unevenness information acquisition unit 380.

- the image acquisition unit 310 is connected to the distance information acquisition unit 320, the biological mucous membrane identification unit 370, and the emphasis processing unit 340.

- the distance information acquisition unit 320 is connected to the biological mucous membrane identification unit 370 and the unevenness information acquisition unit 380.

- the biological mucous membrane identification unit 370 is connected to the emphasis processing unit 340.

- the emphasizing processing unit 340 is connected to the post-processing unit 360.

- the post-processing unit 360 is connected to the display unit 400.

- the unevenness information acquisition unit 380 is connected to the biological mucous membrane identification unit 370 and the emphasis processing unit 340.

- the storage unit 390 is connected to the unevenness information acquisition unit 380.

- the control unit 302 is bidirectionally connected to each unit of the image processing unit 301, and controls each unit. For example, the control unit 302 synchronizes the image acquisition unit 310, the post-processing unit 360, and the light source diaphragm drive unit 130. Further, the control unit 302 transfers the emphasis processing on/off instruction signal from the switch 270 (or the external I/F unit 500) to the emphasis processing unit 340.

- the image acquisition unit 310 converts an analog image signal transferred from the imaging device 260 into a digital image signal by A/D conversion processing. Then, OB clamp processing, gain correction processing, and WB correction processing are performed on the digital image signal using the OB clamp value, gain correction value, and WB coefficient value stored in advance in the control unit 302. Further, synchronization processing is performed on the R image, the G image, and the B image captured by the field-sequential method, and a color image having RGB pixel values for each pixel is acquired. The color image is transferred as an endoscopic image (captured image) to the distance information acquisition unit 320, the biological mucous membrane identification unit 370, and the emphasis processing unit 340.

- the A/D conversion process may be performed at a stage before the image processing unit 301 (for example, built into the imaging unit 200).
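
- the preprocessing chain of the image acquisition unit 310 can be sketched as follows. This is a minimal sketch under stated assumptions: the order of gain and WB correction, the clipping range, and all function and parameter names are hypothetical and are not taken from the source.

```python
def correct_field(raw, ob_clamp, gain, wb_coeff, max_value=255):
    """OB clamp, gain correction and WB correction for one monochrome field."""
    out = []
    for row in raw:
        out_row = []
        for v in row:
            v = max(v - ob_clamp, 0)           # subtract the optical-black level
            v = v * gain * wb_coeff            # gain, then white-balance coefficient
            out_row.append(min(v, max_value))  # clip to the valid range
        out.append(out_row)
    return out


def synchronize(r_field, g_field, b_field):
    """Merge three sequentially captured fields into one RGB tuple per pixel."""
    return [
        [(r, g, b) for r, g, b in zip(r_row, g_row, b_row)]
        for r_row, g_row, b_row in zip(r_field, g_field, b_field)
    ]
```

Each of the R, G, and B fields would pass through `correct_field` with its own WB coefficient before `synchronize` combines them into the color image.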

- the distance information acquisition unit 320 acquires distance information to the subject based on the endoscope image, and transfers the distance information to the biological mucous membrane identification unit 370 and the unevenness information acquisition unit 380.

- the distance information acquisition unit 320 detects the distance to the subject by calculating the blur parameter from the endoscopic image.

- the imaging unit 200 may have an optical system for capturing a stereo image, and the distance information acquisition unit 320 may perform stereo matching processing on the stereo image to detect the distance to the subject.

- the imaging unit 200 may have a sensor that detects TOF (Time Of Flight), and the distance information acquisition unit 320 may detect the distance to the subject based on the sensor output. The details of the distance information acquisition unit 320 will be described later.

- the distance information is, for example, a distance map having distance information corresponding to each pixel of the endoscopic image.

- the distance information includes both information representing the rough structure of the subject and information representing the unevenness relatively smaller than the rough structure.

- the information representing the rough structure corresponds to, for example, the rough structure of the luminal structure or mucous membrane originally possessed by the organ, and is, for example, a low frequency component of distance information.

- the information representing the unevenness corresponds to, for example, the unevenness of the mucous membrane surface or the lesion, and is, for example, a high frequency component of the distance information.
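
- the split between the rough structure (low-frequency component) and the smaller unevenness (high-frequency component) can be illustrated on a one-dimensional distance profile. This is a minimal sketch: the moving-average filter, the window radius, and the function names are assumptions chosen for illustration.

```python
def moving_average(profile, radius):
    """Low-pass filter: mean over a window that is truncated at the ends."""
    n = len(profile)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(sum(profile[lo:hi]) / (hi - lo))
    return out


def split_distance(profile, radius):
    """Return (rough, fine): low-frequency part and the residual detail."""
    rough = moving_average(profile, radius)
    fine = [d - r for d, r in zip(profile, rough)]
    return rough, fine
```

By construction the two components sum back to the original profile, so no distance information is lost by the split.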

- the unevenness information acquisition unit 380 extracts, from the distance information, extracted unevenness information representing the uneven portions on the surface of the living body, based on the known characteristic information stored in the storage unit 390. Specifically, the unevenness information acquisition unit 380 acquires, as known characteristic information, the size (dimension information such as width, height, and depth) of the uneven portions unique to the living body that are to be extracted, and extracts the uneven portions having the desired dimension characteristics represented by the known characteristic information. Details of the unevenness information acquisition unit 380 will be described later.

- the biological mucous membrane identification unit 370 identifies, in the endoscopic image, the region of the biological mucous membrane (for example, a part of the living body in which a lesion may be present) to be subjected to enhancement processing. As described later, for example, a region of the endoscopic image that matches the color feature of the biological mucous membrane is specified as the region of the biological mucous membrane.

- alternatively, among the unevenness represented by the extracted unevenness information, a region matching the feature of the biological mucous membrane to be emphasized (for example, a recess or a groove) is specified as the region of the biological mucous membrane.

- the biological mucous membrane identification unit 370 determines whether each pixel is biological mucous membrane, and outputs the positional information (coordinates) of the pixels determined to be biological mucous membrane to the emphasis processing unit 340.

- the set of pixels determined to be biological mucous membrane corresponds to the region of the biological mucous membrane.

- the emphasizing processing unit 340 performs emphasizing processing on the identified area of the mucous membrane of the living body, and outputs the endoscopic image to the post-processing unit 360.

- when the biological mucous membrane identification unit 370 specifies the region of the biological mucous membrane by color, the emphasis processing unit 340 emphasizes that region based on the extracted unevenness information.

- when the biological mucous membrane identification unit 370 specifies the region of the biological mucous membrane based on the extracted unevenness information, the emphasis processing unit 340 emphasizes that region. In either case, the emphasizing process is performed based on the extracted unevenness information.

- the emphasizing process may be, for example, a process of emphasizing a concavo-convex structure (for example, a high frequency component of an image) of a living mucous membrane, or a process of emphasizing a predetermined color component according to the concavo-convex of the living mucous membrane.

- processing for reproducing dye dispersion may be performed by making predetermined color components darker in concave portions than in convex portions.
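
- as a rough illustration of darkening predetermined color components in concave portions, the sketch below scales selected channels by a factor that decreases with recess depth. Which channels are darkened, the linear falloff, and every name and default value here are assumptions, not the method of the source.

```python
def darken_in_recess(rgb, recess_depth, strength=0.5, max_depth=1.0, channels=(0, 1)):
    """Darken the chosen channels of one pixel in proportion to recess depth.

    rgb: tuple of three channel values.
    recess_depth: positive inside a recess; zero or negative on flat or
        convex parts, which are left unchanged.
    strength: fraction removed from the channel at max_depth (hypothetical).
    channels: indices of the color components to darken (hypothetical).
    """
    ratio = min(max(recess_depth / max_depth, 0.0), 1.0)
    factor = 1.0 - strength * ratio
    return tuple(v * factor if i in channels else v for i, v in enumerate(rgb))
```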

- the post-processing unit 360 performs tone conversion processing, color processing, and edge enhancement processing on the endoscopic image transferred from the emphasis processing unit 340, using the tone conversion coefficients, color conversion coefficients, and edge enhancement coefficients stored in advance in the control unit 302.

- the post-processing unit 360 transfers the post-processed endoscopic image to the display unit 400.

- FIG. 6 shows a detailed configuration example of the biological mucous membrane identification unit 370.

- the biological mucous membrane identification unit 370 includes a biological mucous membrane color determination unit 371 and a biological mucous membrane unevenness determination unit 372.

- at least one of the biological mucous membrane color determination unit 371 and the biological mucous membrane unevenness determination unit 372 identifies the region of the biological mucous membrane.

- the endoscopic image is transferred from the image acquisition unit 310 to the biological mucous membrane color determination unit 371.

- the biological mucous membrane color determination unit 371 compares the hue value at each pixel of the endoscopic image with the range of hue values possessed by the biological mucous membrane, and determines whether each pixel corresponds to the biological mucous membrane.

- a pixel whose hue value H satisfies the following expression (1) is determined as a pixel corresponding to a biological mucous membrane (hereinafter referred to as a biological mucous membrane pixel).

  $10^\circ \le H \le 30^\circ \quad (1)$

- the hue value H is calculated from the RGB pixel values by the following equation (2), and takes a range of 0 ° to 360 °.

- max(R, G, B) is the maximum value among the R pixel value, the G pixel value, and the B pixel value.

- min(R, G, B) is the minimum value among the R pixel value, the G pixel value, and the B pixel value.
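
- the hue test can be sketched with the standard HSV hue, which is computed from the maximum and minimum of the R, G, and B pixel values. This sketch uses Python's `colorsys` module as a stand-in and only assumes that it corresponds to the hue equation referred to above; treating both ends of the 10° to 30° range as inclusive, the 8-bit value range, and the function names are likewise assumptions.

```python
import colorsys


def hue_degrees(r, g, b, max_value=255):
    """Hue of an RGB pixel in degrees, in the range 0 to 360."""
    h, _, _ = colorsys.rgb_to_hsv(r / max_value, g / max_value, b / max_value)
    return h * 360.0


def is_mucosa_pixel(r, g, b, h_min=10.0, h_max=30.0):
    """True when the hue lies in the assumed biological mucous membrane range."""
    return h_min <= hue_degrees(r, g, b) <= h_max
```

For example, a reddish-orange pixel such as (200, 120, 80) has a hue of about 20° and is classified as biological mucous membrane, whereas a pure green pixel (hue 120°) is not.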

- the emphasizing process can be limited to a region that can be determined to be biological mucous membrane from its color feature. As a result, subjects that do not need to be emphasized are not emphasized, and an emphasizing process suitable for medical examination can be performed.

- the distance information is transferred from the distance information acquisition unit 320 to the biological mucous membrane unevenness determination unit 372, and the extracted unevenness information is transferred from the unevenness information acquisition unit 380.

- the biological mucous membrane unevenness determination unit 372 determines whether each pixel corresponds to a biological mucous membrane based on the distance information and the extracted unevenness information. Specifically, based on the extracted unevenness information, a groove on the surface of the living body (for example, a recess with a width of 1000 μm or less and a depth of 100 μm or less) is detected.

- the above equation (4) represents that the pixel at the coordinates (p, q) is a pixel detected as a groove on the surface of the living body.

- D(x, y) is the distance to the subject at the pixel at coordinates (x, y).

- D(p, q) is the distance to the subject at the pixel at coordinates (p, q).

- Tneighbor is a threshold for the distance difference between pixels.

- the distance information acquisition unit 320 acquires a distance map as distance information.

- the distance map is, for example, a map in which, with the optical axis direction of the imaging unit 200 taken as the Z axis, the value at each point (for example, each pixel) in the XY plane is the distance (depth) to the subject in the Z-axis direction.

- the distance D(x, y) at the coordinates (x, y) of the endoscopic image is the value at the coordinates (x, y) of the distance map.
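
- one way D(x, y), D(p, q), and Tneighbor could combine is sketched below: a pixel is treated as biological mucous membrane when a detected groove pixel lies nearby and the two distances differ by less than Tneighbor. The expressions themselves are not reproduced in this text, so this combination, the square search window, and all names are assumptions made purely for illustration.

```python
def mucosa_from_grooves(distance_map, groove_mask, t_neighbor, window=1):
    """Mark pixels near a groove pixel whose distance is similar to it.

    distance_map: 2-D list, distance_map[y][x] plays the role of D(x, y).
    groove_mask: 2-D list of booleans, True at detected groove pixels (p, q).
    t_neighbor: threshold on the distance difference between pixels.
    window: half-width of the search window in pixels (hypothetical).
    """
    h, w = len(distance_map), len(distance_map[0])
    result = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for q in range(max(0, y - window), min(h, y + window + 1)):
                for p in range(max(0, x - window), min(w, x + window + 1)):
                    close = abs(distance_map[y][x] - distance_map[q][p]) < t_neighbor
                    if groove_mask[q][p] and close:
                        result[y][x] = True
    return result
```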

- the biological mucous membrane on which enhancement processing is to be performed is specified in the endoscopic image, and the unevenness information of the subject surface is emphasized for the specified biological mucous membrane.

- the emphasizing process can be applied only to the area requiring it, so the ability to distinguish between the area requiring the emphasizing process and the area not requiring it is improved, and the user's fatigue in observing the image when the emphasizing process is performed can be minimized.

- the biological mucous membrane identification unit 370 specifies, as the region of the biological mucous membrane, a region in which a feature amount based on the pixel values of the captured image satisfies a predetermined condition corresponding to the biological mucous membrane. More specifically, it specifies, as the region of the biological mucous membrane, a region in which color information (for example, a hue value) serving as the feature amount satisfies a predetermined condition on color information (for example, a range of hue values).

- the subject to be emphasized can be identified based on the feature amount of the image. That is, by setting the feature of the mucous membrane of the living body as the condition of the feature amount and detecting the region meeting the predetermined condition, it is possible to specify the subject to be emphasized. For example, by setting a color characteristic of the mucous membrane of the living body as a predetermined condition, it is possible to specify an area matching the color condition as a subject to be emphasized.

- the biological mucous membrane identification unit 370 specifies, as the region of the biological mucous membrane, a region in which the extracted unevenness information matches the unevenness characteristic given by the known characteristic information. More specifically, it acquires, as known characteristic information, dimension information representing at least one of the width and the depth of a recess (groove) of the subject, and extracts, from the unevenness included in the extracted unevenness information, the recesses that match the characteristics specified by the dimension information. Then, the recess region, which is the region on the captured image corresponding to the extracted recesses, and the region near the recess region are specified as the region of the biological mucous membrane.

- the subject to be emphasized can be identified based on the uneven shape of the subject. That is, by setting the feature of the mucous membrane of the living body as the condition of the concavo-convex shape and detecting the region meeting the predetermined condition, the subject to be emphasized can be specified. Further, by specifying the recessed area as the area of the mucous membrane of the living body, the emphasizing process can be performed on the recessed area. As will be described later, in the case of dye dispersion, since the concave portions on the surface of the living body tend to be dyed deeply, it is possible to reproduce the dye dispersion by image processing by emphasizing the concave portions.

- the concavo-convex characteristic is a characteristic of the concavo-convex portion specified by the concavo-convex characteristic information.

- the asperity characteristic information is information for specifying the asperity characteristic of the subject that is desired to be extracted from the distance information. Specifically, it includes at least one of information indicating the characteristics of the unevenness to be non-extracted and the information indicating the characteristics of the unevenness to be extracted among the unevenness included in the distance information.

- the emphasizing process is performed such that the emphasizing process is turned on for the area of the mucous membrane of the living body and the emphasizing process is turned off for the other areas.

- the emphasizing processing unit 340 performs emphasizing processing with an emphasizing amount that changes continuously at the boundary between the region of the mucous membrane of the body and the other region.

- the amount of enhancement is made multi-valued (for example, 0% to 100%) and continuous by applying a low-pass filter to the amount of enhancement at the boundary between the region of the biological mucous membrane and the other region.
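
- the effect of low-pass filtering the amount of enhancement can be seen on a single image row. This is a minimal sketch: the moving-average filter, its radius, and the function name are assumptions standing in for whatever low-pass filter is actually used.

```python
def smooth_enhancement_amount(mask_row, radius=2):
    """Turn a binary on/off row (1 = mucous membrane, 0 = other) into percent.

    A moving average is used as the low-pass filter, so the amount ramps
    gradually from 0% to 100% across the region boundary.
    """
    n = len(mask_row)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append(100.0 * sum(mask_row[lo:hi]) / (hi - lo))
    return out
```

Far from the boundary the amount stays at 0% or 100%; only pixels within the filter radius of the boundary receive intermediate values.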

- FIG. 8 shows a detailed configuration example of the unevenness information acquisition unit 380.

- the unevenness information acquisition unit 380 includes a known characteristic information acquisition unit 381, an extraction processing unit 383 and an extraction unevenness information output unit 385.

- an uneven portion having a desired dimension characteristic (in a narrow sense, an uneven portion whose width is in a desired range) is extracted as extracted unevenness information. Since the three-dimensional structure of the subject is reflected in the distance information, the distance information includes, in addition to the desired uneven portion, larger structures such as the fold structure and the rough surface structure corresponding to the wall surface of the lumen. That is, the extracted unevenness information acquisition process of the present embodiment can also be described as a process of excluding the fold structure and the lumen structure from the distance information.

- the extraction unevenness information acquisition process is not limited to this.

- the known characteristic information may not be used in the process of acquiring the extracted unevenness information.

- various modifications can be made as to what kind of information is used as the known characteristic information.

- for example, extraction processing may be performed that excludes information on the lumen structure from the distance information but leaves information on the fold structure.

- known characteristic information (for example, dimension information of a recess)

- the known characteristic information acquisition unit 381 acquires known characteristic information from the storage unit 390. Specifically, it acquires, as known characteristic information, the size of the unevenness inherent in the living body that is to be extracted because of a lesion (dimension information such as width, height, and depth), and the size of the site-specific lumen and folds based on the observation site information (dimension information such as width, height, and depth).

- the observation site information is information indicating a site to be observed, which is determined based on, for example, scope ID information, and the observation site information may also be included in the known characteristic information.

- for a scope for the upper digestive tract, the observation site is determined to be the esophagus, the stomach, and the duodenum; for a scope for the lower digestive tract, the observation site is determined to be the large intestine. Since the dimension information of the uneven portion to be extracted and the site-specific information on the lumen and folds differ from site to site, the known characteristic information acquisition unit 381 outputs information such as the standard sizes of the lumen and folds, acquired based on the observation site information, to the extraction processing unit 383.

- the observation site information is not limited to that determined by the scope ID information, and may be determined by another method, such as selection by the user with a switch on the external I/F unit 500.

- the extraction processing unit 383 determines an extraction processing parameter based on the known characteristic information, and performs extraction processing of the extracted unevenness information based on the determined extraction processing parameter.

- the extraction processing unit 383 performs low-pass filter processing of a predetermined size of N × N pixels on the input distance information to extract rough distance information. Then, based on the extracted rough distance information, an extraction processing parameter is determined adaptively. Details of the extraction processing parameters will be described later; they are, for example, the morphological kernel size (size of the structural element) adapted to the distance information at each planar position orthogonal to the distance direction of the distance map, the low-pass characteristic of a low-pass filter adapted to the distance information at that planar position, or the high-pass characteristic of a high-pass filter adapted to that planar position. That is, they are change information for changing an adaptive nonlinear or linear low-pass filter or high-pass filter according to the distance information.

- the low-pass filter processing here is intended to suppress the loss of extraction accuracy caused by frequent or extreme changes of the extraction processing parameters with position on the image; if that loss of accuracy is not a problem, the low-pass filter processing need not be performed.

- the extraction processing unit 383 performs extraction processing based on the determined extraction processing parameter, thereby extracting only the uneven portion of a desired size existing in the subject.

- the extracted unevenness information output unit 385 outputs the extracted uneven portions to the biological mucous membrane identification unit 370 or the emphasis processing unit 340 as extracted unevenness information (an unevenness image) of the same size as the captured image (the image to be enhanced).

- in FIGS. 9(A) to 9(F), the extraction processing parameter is the diameter of the structural element (sphere) used for the opening process and the closing process of the morphological processing.

- FIG. 9A is a view schematically showing a cross section in the vertical direction of the living body surface of the subject and the imaging unit 200.

- the folds 2, 3, and 4 on the living body surface are, for example, the folds of the stomach wall. Further, it is assumed that the early lesions 10, 20, and 30 are formed on the surface of the living body.

- the extraction processing parameter determination processing in the extraction processing unit 383 determines the extraction processing parameters for extracting only the early lesions 10, 20, and 30 from such a living body surface, without extracting the folds 2, 3, and 4.

- the diameter of the sphere is set smaller than the size of the site-specific lumen and folds based on the observation site information, and larger than the size of the unevenness inherent in the living body that is to be extracted because of a lesion. More specifically, it is preferable to set the diameter smaller than half the size of the folds and equal to or larger than the size of the unevenness inherent in the living body that is to be extracted because of a lesion.
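
- the preferred range for the sphere diameter can be written as a small helper. This is only a sketch: the source gives the bounds but not how to pick a value between them, so taking the midpoint is an arbitrary assumption, as is the function name.

```python
def choose_sphere_diameter(unevenness_size, fold_size):
    """Pick a diameter d with unevenness_size <= d < fold_size / 2.

    Both sizes must use the same unit. The midpoint of the allowed range
    is returned; that choice is an assumption made for illustration.
    """
    lower, upper = unevenness_size, fold_size / 2.0
    if lower >= upper:
        raise ValueError("no diameter separates the unevenness from the folds")
    return (lower + upper) / 2.0
```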

- an example in which a sphere satisfying the above conditions is used for the opening process and the closing process is depicted in FIGS. 9(A) to 9(F).

- FIG. 9(B) shows the surface of the living body after the closing process. It can be seen that, by determining appropriate extraction processing parameters (the size of the structural element), information is obtained in which the concave portions among the uneven portions of the dimension to be extracted are filled in, while the distance change due to the living body wall and structures such as folds are maintained.

- by taking the difference between the information obtained by the closing process and the original living body surface (corresponding to FIG. 9(A)), it is possible to extract only the recesses on the living body surface, as shown in FIG. 9(C).

- FIG. 9D shows the surface of the living body after the opening process, and it is understood that among the uneven portions of the dimension to be extracted, information in which the convex portion is scraped can be obtained. Therefore, by taking the difference between the information obtained by the opening process and the original living body surface, it is possible to extract only the convex portion of the living body surface as shown in FIG. 9 (E).
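
- the closing and opening differences described above can be tried on a one-dimensional cross section. This is a minimal sketch under stated assumptions: it uses a flat line segment instead of a sphere as the structural element, and it treats the profile as surface height (a recess is a lower value), so for a distance map the roles of opening and closing would swap. All function names are hypothetical.

```python
def erode(profile, radius):
    """Grayscale erosion: minimum over a window truncated at the ends."""
    n = len(profile)
    return [min(profile[max(0, i - radius):min(n, i + radius + 1)]) for i in range(n)]


def dilate(profile, radius):
    """Grayscale dilation: maximum over a window truncated at the ends."""
    n = len(profile)
    return [max(profile[max(0, i - radius):min(n, i + radius + 1)]) for i in range(n)]


def closing(profile, radius):
    """Dilation followed by erosion: fills recesses narrower than the element."""
    return erode(dilate(profile, radius), radius)


def opening(profile, radius):
    """Erosion followed by dilation: removes bumps narrower than the element."""
    return dilate(erode(profile, radius), radius)


def extract_unevenness(height, radius):
    """Return (recesses, bumps) as differences from the original profile."""
    closed = closing(height, radius)
    opened = opening(height, radius)
    recesses = [c - h for c, h in zip(closed, height)]
    bumps = [h - o for h, o in zip(height, opened)]
    return recesses, bumps
```

A structure wider than the structural element, such as a broad fold, survives both operations unchanged and therefore contributes nothing to either difference.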

- control may be performed to increase the diameter of the sphere when the distance information is close and reduce the diameter of the sphere when the distance information is far.

- control is performed such that the diameter of the sphere is changed with respect to average distance information in the case of performing opening processing and closing processing on the distance map. That is, in order to extract a desired uneven portion with respect to the distance map, it is necessary to correct with the optical magnification in order to make the real size of the living body surface coincide with the size of the pixel pitch on the image formed on the imaging device. Therefore, it is preferable that the extraction processing unit 383 acquire the optical magnification and the like of the imaging unit 200 determined based on the scope ID information.
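
- the dependence of the sphere diameter on distance can be sketched with a simple pinhole approximation, in which the magnification is the focal length divided by the subject distance. The source only states that the optical magnification is used for the correction; the pinhole model, the parameter names, and the units below are assumptions.

```python
def structuring_diameter_px(real_diameter_mm, distance_mm, focal_length_mm, pixel_pitch_mm):
    """Convert a real-world diameter into a diameter in pixels on the image.

    A closer subject is magnified more, so the same real size covers more
    pixels; a distant subject covers fewer.
    """
    if distance_mm <= 0:
        raise ValueError("distance must be positive")
    magnification = focal_length_mm / distance_mm  # pinhole approximation
    return real_diameter_mm * magnification / pixel_pitch_mm
```

This reproduces the stated behaviour: the diameter in pixels grows when the subject is near and shrinks when it is far.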

- that is, the size of the structural element, which is the extraction processing parameter, is determined so that, when processing by the structural element is applied to a shape that is not an extraction target, such as a fold (in FIGS. 9(A) to 9(F), when the sphere is slid along the surface), the shape is not collapsed (the sphere moves following the shape).

- conversely, when processing by the structural element is applied to an uneven portion to be extracted as extracted unevenness information, the size of the structural element may be determined so that the uneven portion is eliminated (the sphere does not enter the recess when slid from above, or does not enter the convex portion when slid from below).

- Morphological processing is a widely known method, so a detailed description is omitted.

- the asperity information acquisition unit 380 determines the extraction processing parameter based on the known characteristic information, and extracts the asperity portion of the subject as the extraction asperity information based on the determined extraction process parameter.

- The extraction process is, for example, a separation process.

- The specific method of the extraction process may be the morphological process described above, the filter process described later, or the like. In any case, to extract the extracted unevenness information accurately, control is needed that extracts information on the desired uneven portion from the information on the various structures included in the distance information while excluding other structures (for example, structures unique to a living body such as wrinkles).

- This control is realized by setting the extraction processing parameters based on the known characteristic information.

- the captured image is an in-vivo image obtained by imaging the inside of a living body

- The known characteristic information acquisition unit 381 may acquire, as the known characteristic information, site information indicating which site of the living body the subject corresponds to, and unevenness characteristic information, which is information on the uneven portions of the living body.

- the asperity information acquisition unit 380 determines an extraction processing parameter based on the part information and the asperity characteristic information.

- This makes it possible to acquire site information on the site of the subject of the in-vivo image as the known characteristic information.

- It is assumed that an uneven structure useful for detecting early lesions and the like is extracted as the extracted unevenness information.

- the characteristics (for example, dimension information) of the uneven portion may differ depending on the part.

- the structure unique to the living body to be excluded such as wrinkles naturally varies depending on the site. Therefore, if a living body is targeted, it is necessary to perform appropriate processing according to the site, and in the present embodiment, the process is performed based on the site information.

- The unevenness information acquisition unit 380 determines, as the extraction process parameter, the size of the structural element used for the opening process and the closing process based on the known characteristic information, and performs the opening process and the closing process described above using the structural element of the determined size to extract the uneven portions of the subject as the extracted unevenness information.

- the extraction process parameter at that time is the size of the structural element used in the opening process and the closing process. Since a sphere is assumed as a structural element in FIG. 9A, the extraction processing parameter is a parameter that represents the diameter of the sphere and the like.

- the extraction process of the present embodiment is not limited to the morphological process, and may be performed by a filter process.

- The characteristics of a low-pass filter are determined such that the unevenness inherent in the living body to be extracted, which is caused by a lesion, is smoothed out while the structures of the lumen and wrinkles specific to the observation site are retained. Since the characteristics of the uneven portions to be extracted, of the wrinkles to be excluded, and of the lumen structure are known from the known characteristic information, their spatial frequency characteristics are also known, and the characteristics of the low-pass filter can be determined.

- The low-pass filter may be a well-known Gaussian filter or bilateral filter, whose characteristics are controlled by σ, and a σ map corresponding to the pixels of the distance map may be created (in the case of the bilateral filter, the σ map may be created using either one or both of the σ of the luminance difference and the σ of the distance).

- the Gaussian filter can be expressed by the following equation (5)

- the bilateral filter can be expressed by the following equation (6).
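Equations (5) and (6) themselves are not reproduced in this text. For orientation only, the standard textbook forms of the two filters are given below. They are not necessarily written in the same notation as equations (5) and (6), and the symbols $x_0$ (pixel of interest), $N$ (normalization constant), $p(\cdot)$ (pixel value), $\sigma_c$ (σ of the distance) and $\sigma_v$ (σ of the luminance difference) are assumptions introduced here.

```latex
% Gaussian filter weight (standard form)
f(x) = \frac{1}{N} \exp\!\left( -\frac{(x - x_0)^2}{2\sigma^2} \right)

% Bilateral filter weight (standard form): spatial term times value-difference term
f(x) = \frac{1}{N}
       \exp\!\left( -\frac{(x - x_0)^2}{2\sigma_c^2} \right)
       \exp\!\left( -\frac{\bigl(p(x) - p(x_0)\bigr)^2}{2\sigma_v^2} \right)
```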

- A thinned-out σ map may be created, and the desired low-pass filter may be applied to the distance map using that σ map.

- The σ that determines the characteristics of the low-pass filter is set, for example, to a value larger than a predetermined multiple α (> 1) of the inter-pixel distance D1 of the distance map corresponding to the size of the unevenness unique to the living body to be extracted, and smaller than a predetermined multiple β (< 1) of the inter-pixel distance D2 of the distance map corresponding to the size of the lumen and wrinkles specific to the observation site.

- For example, σ = (α * D1 + β * D2) / 2 * Rσ may be used.

- The filter characteristic may also be controlled not by σ but by the cutoff frequency fc.

- Rσ is a function of the local average distance whose output value increases as the local average distance decreases and decreases as the local average distance increases.
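The rule for choosing σ and the behaviour of Rσ described above can be sketched as follows. The two multipliers are named `alpha` (> 1) and `beta` (< 1) in this sketch, and the inverse-proportional form chosen for the distance-dependent factor, the reference distance, and all numeric values are assumptions. The text only states that the factor grows as the local average distance shrinks.

```python
def r_sigma(local_avg_distance, reference_distance):
    """Distance-dependent scale factor (hypothetical form): larger for closer subjects."""
    return reference_distance / local_avg_distance


def lowpass_sigma(d1, d2, alpha, beta, local_avg_distance, reference_distance):
    """sigma = (alpha * D1 + beta * D2) / 2 * R_sigma, as stated in the text."""
    if not (alpha > 1 and beta < 1):
        raise ValueError("alpha must be > 1 and beta must be < 1")
    base = (alpha * d1 + beta * d2) / 2
    return base * r_sigma(local_avg_distance, reference_distance)


# Hypothetical values: D1 = 4 px (lesion unevenness), D2 = 40 px (wrinkles, lumen).
sigma_ref = lowpass_sigma(4, 40, 1.5, 0.5, local_avg_distance=10.0, reference_distance=10.0)
sigma_near = lowpass_sigma(4, 40, 1.5, 0.5, local_avg_distance=5.0, reference_distance=10.0)
print(sigma_ref, sigma_near)
```

At the reference distance the factor is 1, so σ is the midpoint of α·D1 and β·D2 and lies between them. For a closer subject the same structure spans more pixels, so σ is scaled up.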

- Rf is a function in which the output value decreases as the local average distance decreases and increases as the local average distance increases.

- the recess image can be output by subtracting the low pass processing result from the distance map not subjected to the low pass processing and extracting only a region that is negative. Further, the convex image can be output by subtracting the low-pass processing result from the distance map not subjected to the low-pass processing and extracting only a region that is positive.
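A minimal one-dimensional sketch of this subtraction follows. It replaces the Gaussian or bilateral low-pass filter with a plain moving average, and it assumes that the map values are lower inside a recess, so that the negative part of the difference is the recess as stated above. If the map stored a distance measured positively in the depth direction, the roles of the two signs would swap. All names and sample values are hypothetical.

```python
def moving_average(values, radius):
    """Simple low-pass filter: mean over a window, truncated at the borders."""
    n = len(values)
    out = []
    for i in range(n):
        window = values[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def split_unevenness(values, radius):
    """Subtract the low-pass result from the original and split the result by sign."""
    smooth = moving_average(values, radius)
    diff = [v - s for v, s in zip(values, smooth)]
    recess = [d if d < 0 else 0.0 for d in diff]   # negative regions only
    convex = [d if d > 0 else 0.0 for d in diff]   # positive regions only
    return recess, convex


# Hypothetical profile: flat at 10 with one small dip (index 5) and one small bump (index 11).
profile = [10] * 5 + [8] + [10] * 5 + [12] + [10] * 5
recess, convex = split_unevenness(profile, radius=2)
print(recess[5], convex[11])
```

Note that a plain moving average leaves small values of the opposite sign next to each dip or bump. A filter designed from the known characteristic information would be chosen to limit such side effects.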

- FIGS. 10 (A) to 10 (D) show explanatory views of a process of extracting a desired uneven portion derived from a lesion by a low pass filter.

- When filter processing using a low-pass filter is performed on the distance map of FIG. 10(A), information is obtained, as shown in FIG. 10(B), from which the uneven portions of the dimension to be extracted have been removed while the distance changes due to the living body wall surface and structures such as wrinkles are maintained.

- Since the low-pass filter result serves as the reference plane for extracting the desired uneven portions (FIG. 10(B)), even without performing the opening and closing processes described above, the uneven portions can be extracted by a subtraction process with the original distance map (FIG. 10(A)).

- An example is shown in FIG. 10(D).

- High-pass filter processing may be performed instead of low-pass filter processing. In that case, the characteristics of a high-pass filter are determined such that the unevenness inherent in the living body to be extracted, which is caused by a lesion, is retained and the structures of the lumen and wrinkles specific to the observation site are cut.

- The filter characteristic is controlled by the cutoff frequency fhc.

- the cutoff frequency fhc may be specified to pass the frequency F1 of the D1 cycle and to cut the frequency F2 of the D2 cycle.

- Rf is a function in which the output value decreases as the local average distance decreases, and increases as the local average distance increases.

- With the high-pass filter processing, the uneven portions to be extracted, which are caused by a lesion, can be extracted directly. Specifically, as shown in FIG. 10(C), the extracted unevenness information is acquired directly without taking a difference.

- The unevenness information acquisition unit 380 determines, as the extraction process parameter, the frequency characteristic of the filter used for the filtering process on the distance information based on the known characteristic information, and performs the filtering process using a filter having the determined frequency characteristic to extract the uneven portions of the subject as the extracted unevenness information.

- the extraction processing parameter at that time is the characteristic (spatial frequency characteristic in a narrow sense) of the filter used in the filter processing.

- The value of σ and the cutoff frequency may be determined based on the frequency corresponding to the exclusion target, such as wrinkles, and the frequency corresponding to the uneven portion.

- Biological mucous membrane unevenness determination processing and emphasis processing

- The process in which the biological mucous membrane unevenness determination unit 372 extracts recesses on the surface of the living body (hereinafter referred to as grooves) and their vicinity as the biological mucous membrane, and the emphasizing processor 340 emphasizes the area of the biological mucous membrane, will be described in detail.

- An image is created that simulates an image in which indigo carmine has been sprayed to improve the contrast of minute uneven portions on the surface of the living body.

- The pixel values of the groove area and its neighboring area are multiplied by a gain that increases their blueness.
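The gain step can be sketched as below for an RGB image stored as nested lists. The specific gain values, the choice to attenuate red and green slightly while boosting blue, and the function name `emphasize_grooves` are assumptions. The text only says that a gain increasing blueness is applied to the groove area and its neighborhood.

```python
def emphasize_grooves(image, mask, blue_gain=1.3, other_gain=0.9):
    """Return a copy of `image` with a bluish gain applied where `mask` is True.

    image: rows of (r, g, b) tuples with 8-bit values.
    mask:  rows of booleans marking groove and neighboring pixels.
    """
    out = []
    for row, mask_row in zip(image, mask):
        out_row = []
        for (r, g, b), selected in zip(row, mask_row):
            if selected:
                # Hypothetical gains: boost blue, slightly attenuate red and green.
                r = min(255, round(r * other_gain))
                g = min(255, round(g * other_gain))
                b = min(255, round(b * blue_gain))
            out_row.append((r, g, b))
        out.append(out_row)
    return out


# One-row example: the first pixel belongs to the groove area, the second does not.
image = [[(200, 100, 100), (200, 100, 100)]]
mask = [[True, False]]
result = emphasize_grooves(image, mask)
print(result)
```

Pixels outside the mask are returned unchanged, which relies on the one-to-one pixel correspondence between the extracted unevenness information and the endoscopic image.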

- the extracted asperity information transferred from the asperity information acquisition unit 380 is in one-to-one correspondence with the endoscopic image input from the image acquisition unit 310 for each pixel.

- FIG. 11 shows a detailed configuration example of the biological mucous membrane unevenness judgment unit 372.

- the biological mucous membrane unevenness determination unit 372 includes a dimension information acquisition unit 601, a recess extraction unit 602, and a vicinity extraction unit 604.

- the dimension information acquisition unit 601 acquires known characteristic information (here, particularly, dimension information) from the storage unit 390 or the like.

- the concave portion extraction unit 602 extracts the concave portion to be emphasized among the concave and convex portions included in the extracted concave and convex information based on the known characteristic information.

- the vicinity extraction unit 604 extracts the surface of the living body within (a vicinity of) a predetermined distance from the extracted recess.

- the groove of the biological body surface is detected from the extracted unevenness information based on the known characteristic information.

- the known characteristic information refers to the width and depth of the groove on the surface of the living body.

- the width of a minute groove on the surface of a living body is several thousand μm or less (inclusive), and the depth is several hundred μm or less (inclusive).

- the width and the depth of the groove on the surface of the living body are calculated from the extracted unevenness information.

- FIG. 12 shows one-dimensional extracted asperity information.

- the distance from the imaging element 260 to the surface of the living body is assumed to be a positive value in the depth direction, with the position of the imaging element 260 (imaging surface) being zero.

- FIG. 13 shows a method of calculating the width of the groove.

- The ends of a run of points that lie farther than the reference plane, that is, at the threshold distance x1 or more (inclusive) from the imaging plane, are detected (points A and B in FIG. 13).

- Here, the plane at the distance x1 is the reference plane.

- The number N of pixels lying between the detected points is calculated.

- the average value of the distances x1 to xN from the image pickup element is calculated with respect to the internal point, and is defined as xave.

- the equation for calculating the width w of the groove is shown in the following equation (7).

- p is the width per pixel of the imaging device 260

- K is the optical magnification corresponding to the distance xave from the imaging device on a one-to-one basis.

- $w = N \times p \times K$ (7)

- FIG. 14 shows a method of calculating the depth of the groove.

- the formula for calculating the depth d of the groove is shown in the following formula (8).

- the maximum value of x1 to xN is xM

- the smaller one of x1 and xN is xmin.

- $d = x_M - x_{\min}$ (8)

- The user may set an arbitrary value for the reference plane (the plane at the distance x1 from the imaging device) via the external I/F unit 500. If the calculated groove width and depth match the known characteristic information, the pixel position of the corresponding endoscopic image is determined to be a pixel of the groove area. Here, a pixel is determined to be a pixel of the groove area when the width of the groove is 3000 μm or less (inclusive) and the depth of the groove is 500 μm or less (inclusive).
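Equations (7) and (8) and the threshold test can be sketched together as follows. The sample distances, the pixel pitch, the value of K, and the assumption that all lengths are expressed in μm are hypothetical. In the text, K is obtained from the average distance xave rather than passed in directly.

```python
def groove_width(n_pixels, pixel_pitch, k):
    """Equation (7): w = N x p x K."""
    return n_pixels * pixel_pitch * k


def groove_depth(distances):
    """Equation (8): d = xM - xmin.

    xM is the maximum of x1..xN; xmin is the smaller of the two end values x1 and xN.
    """
    x_max = max(distances)
    x_min = min(distances[0], distances[-1])
    return x_max - x_min


def is_groove(width, depth, max_width=3000, max_depth=500):
    """Threshold test from the text: width <= 3000 um and depth <= 500 um (inclusive)."""
    return width <= max_width and depth <= max_depth


# Hypothetical distances (um) from the imaging device for the N = 5 points inside the groove.
xs = [10000, 10150, 10300, 10200, 10050]
w = groove_width(len(xs), pixel_pitch=2, k=50)
d = groove_depth(xs)
print(w, d, is_groove(w, d))
```

Both thresholds are keyword arguments because the text allows the user to set them through the external I/F unit 500.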

- the width and depth of the groove serving as the threshold may be set by the user via the external I / F unit 500.

- The vicinity extraction unit 604 detects, as a vicinity pixel, a pixel on the surface of the living body whose distance in the depth direction is within a predetermined distance from the groove area.