WO2020158231A1 - Control device, control target device, control method, and storage medium - Google Patents


- Publication number

- WO2020158231A1 (international application PCT/JP2019/049803)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- voice

- control

- quiet

- movable part

- controlled


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased


Classifications

- B—PERFORMING OPERATIONS; TRANSPORTING
  - B25—HAND TOOLS; PORTABLE POWER-DRIVEN TOOLS; MANIPULATORS
    - B25J—MANIPULATORS; CHAMBERS PROVIDED WITH MANIPULATION DEVICES
      - B25J9/00—Programme-controlled manipulators
        - B25J9/16—Programme controls
          - B25J9/1694—Programme controls characterised by use of sensors other than normal servo-feedback from position, speed or acceleration sensors, perception control, multi-sensor controlled systems, sensor fusion

- B—PERFORMING OPERATIONS; TRANSPORTING
  - B25—HAND TOOLS; PORTABLE POWER-DRIVEN TOOLS; MANIPULATORS
    - B25J—MANIPULATORS; CHAMBERS PROVIDED WITH MANIPULATION DEVICES
      - B25J13/00—Controls for manipulators
        - B25J13/003—Controls for manipulators by means of an audio-responsive input

- B—PERFORMING OPERATIONS; TRANSPORTING
  - B25—HAND TOOLS; PORTABLE POWER-DRIVEN TOOLS; MANIPULATORS
    - B25J—MANIPULATORS; CHAMBERS PROVIDED WITH MANIPULATION DEVICES
      - B25J13/00—Controls for manipulators
        - B25J13/08—Controls for manipulators by means of sensing devices, e.g. viewing or touching devices

- G—PHYSICS
  - G05—CONTROLLING; REGULATING
    - G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS
      - G05B19/00—Programme-control systems
        - G05B19/02—Programme-control systems electric
          - G05B19/04—Programme control other than numerical control, i.e. in sequence controllers or logic controllers
            - G05B19/042—Programme control other than numerical control, i.e. in sequence controllers or logic controllers using digital processors
              - G05B19/0426—Programming the control sequence

- G—PHYSICS
  - G06—COMPUTING OR CALCULATING; COUNTING
    - G06F—ELECTRIC DIGITAL DATA PROCESSING
      - G06F3/00—Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements
        - G06F3/16—Sound input; Sound output
          - G06F3/167—Audio in a user interface, e.g. using voice commands for navigating, audio feedback

- G—PHYSICS
  - G10—MUSICAL INSTRUMENTS; ACOUSTICS
    - G10L—SPEECH ANALYSIS TECHNIQUES OR SPEECH SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING TECHNIQUES; SPEECH OR AUDIO CODING OR DECODING
      - G10L15/00—Speech recognition

- G—PHYSICS
  - G10—MUSICAL INSTRUMENTS; ACOUSTICS
    - G10L—SPEECH ANALYSIS TECHNIQUES OR SPEECH SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING TECHNIQUES; SPEECH OR AUDIO CODING OR DECODING
      - G10L25/00—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00
        - G10L25/78—Detection of presence or absence of voice signals

- G—PHYSICS
  - G05—CONTROLLING; REGULATING
    - G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS
      - G05B2219/00—Program-control systems
        - G05B2219/20—Pc systems
          - G05B2219/23—Pc programming
            - G05B2219/23181—Use of sound, acoustic, voice

- G—PHYSICS
  - G05—CONTROLLING; REGULATING
    - G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS
      - G05B2219/00—Program-control systems
        - G05B2219/20—Pc systems
          - G05B2219/23—Pc programming
            - G05B2219/23386—Voice, vocal command or message

- G—PHYSICS
  - G10—MUSICAL INSTRUMENTS; ACOUSTICS
    - G10L—SPEECH ANALYSIS TECHNIQUES OR SPEECH SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING TECHNIQUES; SPEECH OR AUDIO CODING OR DECODING
      - G10L25/00—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00
        - G10L25/78—Detection of presence or absence of voice signals
          - G10L2025/783—Detection of presence or absence of voice signals based on threshold decision

- G—PHYSICS
  - G10—MUSICAL INSTRUMENTS; ACOUSTICS
    - G10L—SPEECH ANALYSIS TECHNIQUES OR SPEECH SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING TECHNIQUES; SPEECH OR AUDIO CODING OR DECODING
      - G10L25/00—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00
        - G10L25/03—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 characterised by the type of extracted parameters
          - G10L25/21—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 characterised by the type of extracted parameters the extracted parameters being power information

- G—PHYSICS
  - G10—MUSICAL INSTRUMENTS; ACOUSTICS
    - G10L—SPEECH ANALYSIS TECHNIQUES OR SPEECH SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING TECHNIQUES; SPEECH OR AUDIO CODING OR DECODING
      - G10L25/00—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00
        - G10L25/48—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 specially adapted for particular use
          - G10L25/51—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 specially adapted for particular use for comparison or discrimination

Definitions

- the present disclosure relates to a control device, a control target device, a control method, and a storage medium.

- an object of the present disclosure is to provide a technique for controlling the sound emitted by the movable part of a control target device.

- one embodiment of the present disclosure is a control device including one or more memories and one or more processors.

- the one or more processors are configured to acquire a voice, recognize the voice, determine whether the voice is a quiet voice, and control the movable part of the control target device according to the recognition result of the voice.

- the one or more processors control the control target device so that the sound pressure level of the sound emitted by its movable part when the determination result is a quiet voice is lower than the sound pressure level of the sound emitted by the movable part when the determination result is not a quiet voice.

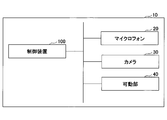

- FIG. 1 is a schematic diagram showing a robot apparatus according to an embodiment of the present disclosure.

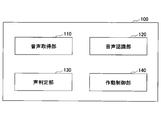

- FIG. 3 is a block diagram showing a hardware configuration of a robot apparatus according to an embodiment of the present disclosure.

- FIG. 3 is a block diagram showing a functional configuration of a control device according to an embodiment of the present disclosure.

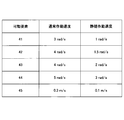

- 6 is a table that defines the operating speeds of the movable part at the normal operating speed and the quiet operating speed according to an embodiment of the present disclosure.

- FIG. 6 is a diagram showing operating speed patterns of a movable part in a normal operation mode and a quiet operation mode according to an embodiment of the present disclosure.

- FIG. 16 is a block diagram showing a functional configuration of a control device according to another embodiment of the present disclosure.

- FIG. 7 is a flowchart showing a control process of the robot apparatus according to an embodiment of the present disclosure.

- FIG. 6 is a schematic diagram illustrating a robot apparatus according to another embodiment of the present disclosure.

- FIG. 6 is a diagram showing a priority order of movable parts according to an embodiment of the present disclosure.

- 6 is a flowchart showing a control process of operating speed of a movable part according to an embodiment of the present disclosure.

- 8 is a flowchart showing a control process of the robot apparatus according to another embodiment of the present disclosure.

- FIG. 6 is a schematic diagram illustrating a robot apparatus according to another embodiment of the present disclosure.

- FIG. 3 is a block diagram showing a hardware configuration of a control device according to an embodiment of the present disclosure.

- the present disclosure describes a control device for controlling a device such as a robot device.

- based on paralinguistic information extracted from a voice uttered by a user, the control device adjusts the operating speed of a movable part (e.g., a joint or an end effector) of a device to be controlled (e.g., a robot device).

- the control device is built in the control target device or is external to the control target device and is communicatively connected to the control target device.

- the paralinguistic information refers to the loudness and pitch of a voice, the speaking speed, the type of phonation, and the like.

- the phonation type includes, for example, whispered voice and breathy voice.

- a voice with a low volume (a small voice) or a whispered voice is called a quiet voice.

- a small voice is defined as an utterance whose power, after compensating for the attenuation that depends on the distance between the user and the sound collection means (for example, a microphone) of the control target device, is equal to or less than a predetermined threshold.

- a voice with a high volume or a shouted voice is called a loud voice.

- when the control device determines that the voice uttered by the user is a quiet voice, it operates the robot device quietly, that is, it operates the movable part at a lower speed than during normal operation.

- when the control device determines that the voice uttered by the user is a loud voice, it operates the movable part of the robot device at a higher speed than during normal operation.
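The mapping from a voice class to an operating speed can be sketched as below. This is a minimal illustration, not the disclosed implementation: the three-class `VoiceClass` enum and the scale factors 0.5 and 1.5 are hypothetical placeholders, and the classification itself is assumed to happen elsewhere.

```python
from enum import Enum


class VoiceClass(Enum):
    """Hypothetical voice classes derived from paralinguistic information."""
    QUIET = "quiet"    # whispered voice or small voice
    NORMAL = "normal"
    LOUD = "loud"      # high-volume or shouted voice


# Assumed scale factors relative to the normal operating speed (placeholders).
SPEED_SCALE = {
    VoiceClass.QUIET: 0.5,
    VoiceClass.NORMAL: 1.0,
    VoiceClass.LOUD: 1.5,
}


def operating_speed(normal_speed: float, voice_class: VoiceClass) -> float:
    """Return the operating speed of the movable part for the given voice class."""
    return normal_speed * SPEED_SCALE[voice_class]
```

For example, with a normal speed of 2.0, a quiet voice yields 1.0 and a loud voice yields 3.0.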

- FIG. 1 is a schematic diagram showing a robot apparatus according to an embodiment of the present disclosure.

- a robot apparatus 10 can move an object according to a voice instruction from a user.

- the operation of the robot apparatus 10 is controlled by a control device 100.

- the control device 100 is mounted inside the robot device 10.

- according to a voice instruction from the user, the robot apparatus 10 grasps an object using the joints 41 to 44 and the end effector 45 (hereinafter each referred to as a movable element) and moves the grasped object to the destination specified in the instruction.

- the robot apparatus 10 according to the present disclosure is not limited to the specific configuration disclosed, and may be any apparatus including a movable portion or a movable element.

- the robot device 10 has a microphone 20, a camera 30, a movable part 40, and a control device 100, as shown in FIG. 2, for example.

- the microphone 20 functions as a sound collecting device, collects environmental sounds around the robot device 10, and collects voices uttered by the user.

- the microphone 20 transmits the collected voice data to the control device 100.

- the robot device 10 according to the present disclosure is not limited to the microphone 20 and may include another type of sound collecting device. Further, although only one microphone 20 is shown in the illustrated embodiment, the robot apparatus 10 according to the present disclosure may include a plurality of sound collecting devices, for example to perform array signal processing for sound source localization, sound source separation, and the like. Alternatively, the robot device 10 may omit the sound collecting device and acquire voice data or other data collected by another device.

- the camera 30 functions as an image capturing device, captures an image of the surroundings of the robot device 10, and transmits the captured image data to the control device 100.

- the robot device 10 according to the present disclosure is not limited to the camera 30; it may include another type of image capturing device, or it may omit the image capturing device and acquire image data or other data captured by another device.

- the movable portion 40 includes movable elements such as the joints 41 to 44 and the end effector 45, and the joints 41 to 44 and the end effector 45 each include an actuator that operates under the control of the control device 100.


- when a movable element operates, an operation sound is generated.

- typical operation sounds include the sound emitted by the actuator itself, sounds generated by the movement of parts other than the movable element, such as cables and the exterior, and the sound generated when the end effector 45 contacts the object while gripping it.

- the control device 100 controls the robot device 10; specifically, it controls each component, such as the microphone 20, the camera 30, and the movable portion 40, as described in detail below. When the control device 100 receives a voice instruction from the user collected by the microphone 20, it acquires information indicating the environmental conditions around the robot device 10 captured by the camera 30 (for example, image data showing objects around the robot device 10), determines an action plan for the movable unit 40 based on the acquired environmental conditions and the voice instruction, and controls the movable unit 40 according to the determined action plan. The control device 100 according to the present embodiment further determines whether the acquired voice instruction of the user is a quiet voice and adjusts the operating speed of the movable portion 40 according to the determination result, as described in detail below.

- the robot device 10 according to the present disclosure is not limited to the above hardware configuration, and may have any other appropriate hardware configuration.

- a control device according to the first embodiment of the present disclosure will be described with reference to FIGS. 3 to 7.

- in the following, the description focuses on the process by which the robot apparatus 10 moves an object.

- the process of the robot device 10 controlled by the control device 100 according to the present disclosure is not limited to this. It will be understood by those skilled in the art that the control process of the control device 100 according to the present disclosure can be applied to other processes depending on the application of the robot device 10.

- FIG. 3 is a block diagram showing a functional configuration of the control device 100 according to an embodiment of the present disclosure.

- the control device 100 includes a voice acquisition unit 110, a voice recognition unit 120, a voice determination unit 130, and an operation control unit 140.

- the voice acquisition unit 110 acquires voice. Specifically, the voice acquisition unit 110 acquires a voice collected by a sound collecting device such as the microphone 20, a voice stored in a memory, a voice transmitted via a communication connection, and the like.

- the voice recognition unit 120 recognizes the acquired voice. Specifically, the voice recognition unit 120 extracts voice data indicating a voice instruction from the user from the voice acquired by the voice acquisition unit 110, and performs voice recognition processing on the extracted voice data. The voice recognition process may be executed by using, for example, a well-known voice recognition technique that converts a voice instruction into text data. The voice recognition unit 120 transmits the recognition result (for example, a text instruction, a character string, a voice feature vector, a voice feature vector sequence, etc.) acquired from the voice data to the operation control unit 140.


- the voice determination unit 130 determines whether or not the voice is a quiet voice.

- the quiet voice according to the present disclosure is at least one of a whisper and a small (low) voice, and the voice determination unit 130 determines whether the voice instruction from the user acquired by the voice acquisition unit 110 is a whisper or a small voice.

- a whisper is defined as phonation without vocal-fold vibration. Whisper detection can be realized by existing detection methods, such as the one disclosed in G. Nisha et al., "Robust Whisper Activity Detection Using Long-Term Log Energy Variation of Sub-Band Signal," IEEE Signal Processing Letters, vol. 22, no. 11, 2015, or by detection methods using pitch extraction results.

- a small voice can be defined as a voice whose power, after compensating for the attenuation that depends on the distance between the microphone 20 and the user, is equal to or less than a predetermined threshold. If the distance between the microphone 20 and the user can be assumed not to change significantly, the attenuation need not be considered; in other words, an utterance whose power as measured by the microphone 20 is equal to or less than the predetermined threshold may be treated as a small voice.

- the voice determination unit 130 transmits the determination result to the operation control unit 140.
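The quiet-voice determination can be sketched as follows, under stated assumptions. This is not the Nisha et al. method: as a simple stand-in for "detection using pitch extraction results", a frame counts as voiced when its normalized autocorrelation peaks within an assumed 60 to 400 Hz pitch range, and an utterance with few voiced frames is treated as a whisper. For the small voice, free-field spreading loss of 20·log10(d / 1 m) dB is assumed. All thresholds and the dBFS level scale are hypothetical, and frames are assumed to be a few hundred samples long (e.g., 512 samples at 16 kHz).

```python
import math
from typing import List, Optional, Sequence


def level_db(samples: Sequence[float]) -> float:
    """RMS level in dB relative to full scale (an RMS of 1.0 gives 0 dB)."""
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return 20.0 * math.log10(max(rms, 1e-12))


def is_voiced(frame: Sequence[float], sample_rate: int,
              f0_min: float = 60.0, f0_max: float = 400.0,
              threshold: float = 0.5) -> bool:
    """Crude pitch check: does the normalized autocorrelation peak in the pitch-lag range?"""
    energy = sum(x * x for x in frame)
    lag_min = int(sample_rate / f0_max)
    lag_max = min(int(sample_rate / f0_min), len(frame) - 1)
    if energy == 0.0 or lag_max < lag_min:
        return False
    peak = max(
        sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag)) / energy
        for lag in range(lag_min, lag_max + 1)
    )
    return peak >= threshold


def distance_compensated_db(measured_db: float, distance_m: Optional[float],
                            reference_m: float = 1.0) -> float:
    """Undo spreading loss, assuming free-field 20*log10(d / d_ref) attenuation."""
    if distance_m is None:
        # Distance assumed roughly constant: use the measured level directly.
        return measured_db
    return measured_db + 20.0 * math.log10(distance_m / reference_m)


def is_quiet_voice(frames: List[Sequence[float]], sample_rate: int,
                   distance_m: Optional[float] = None,
                   small_voice_threshold_db: float = -30.0,
                   voiced_ratio_threshold: float = 0.3) -> bool:
    """Quiet voice = whisper (few voiced frames) or small voice (low compensated level)."""
    if not frames:
        raise ValueError("no frames")
    voiced = sum(1 for f in frames if is_voiced(f, sample_rate))
    whisper = voiced / len(frames) < voiced_ratio_threshold
    all_samples = [x for f in frames for x in f]
    compensated = distance_compensated_db(level_db(all_samples), distance_m)
    small = compensated <= small_voice_threshold_db
    return whisper or small
```

Passing `distance_m=None` corresponds to the case above in which the user-microphone distance is assumed not to change, so the measured level is compared with the threshold directly.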

- the operation control unit 140 controls the movable unit 40 of the robot apparatus 10 according to the voice recognition result, and controls the robot apparatus 10 so that the sound pressure level of the sound emitted by the movable unit 40 when the determination result of the voice determination unit 130 is a quiet voice is lower than the sound pressure level of the sound emitted by the movable unit 40 when the determination result is not a quiet voice.

- for example, the operation control unit 140 operates the movable unit 40 according to the recognition result at an operating speed that depends on whether the voice is a quiet voice, controlling the robot apparatus 10 so that the operating speed of the movable unit 40 when the determination result is a quiet voice is lower than its operating speed when the determination result is not a quiet voice.

- when the determination result is not a quiet voice, the operation control unit 140 sets the robot device 10 to the normal operation mode.

- the normal operation mode is a mode in which the operating speed of the movable part 40 (for example, its maximum value) is set to the normal operation speed.

- when the determination result is a quiet voice, the operation control unit 140 sets the robot apparatus 10 to the quiet operation mode.

- the quiet operation mode is a mode in which the operating speed of the movable portion 40 (for example, its maximum value) is set to a quiet operation speed lower than the normal operation speed.

- the operation control unit 140 may hold in advance an operation speed table that defines the normal operation speed and the quiet operation speed of the movable unit 40, as illustrated in FIG. 4, and set the maximum value of the operating speed of the movable unit 40 according to the table.

- in this case, changing the operation mode corresponds to changing which entry of the operation speed table is referenced.
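A lookup of this kind can be sketched as a small table keyed by movable element. The element names and speed values are hypothetical placeholders (FIG. 4's actual values are not reproduced in the text); the point shown is that switching the mode only switches which column is read.

```python
from enum import Enum
from typing import Dict, Tuple


class OperationMode(Enum):
    NORMAL = 0  # index of the normal-speed column
    QUIET = 1   # index of the quiet-speed column


# Hypothetical operation speed table: movable element -> (normal max, quiet max) speed.
# The numbers are placeholders, not the values of FIG. 4.
SPEED_TABLE: Dict[str, Tuple[float, float]] = {
    "joint_41": (90.0, 30.0),
    "joint_42": (90.0, 30.0),
    "joint_43": (120.0, 40.0),
    "joint_44": (120.0, 40.0),
    "end_effector_45": (60.0, 20.0),
}


def max_speeds(mode: OperationMode,
               table: Dict[str, Tuple[float, float]] = SPEED_TABLE) -> Dict[str, float]:
    """Changing the mode only changes which column of the table is referenced."""
    return {element: speeds[mode.value] for element, speeds in table.items()}
```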

- the adjustment of the operating speed is not limited to setting the maximum value of the operating speed; the operating speed pattern may also be adjusted, as shown in FIG. 5, for example. That is, the normal operating speed pattern may be capped at a constant maximum speed, or the pattern as a whole may be slowed down.
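The two adjustments can be contrasted on a rest-to-rest move. This sketch assumes a trapezoidal velocity profile, which the text does not specify: "capping" re-plans the profile with a lower cruise speed at the same acceleration, while "slowing down" time-scales the whole normal profile by a factor k, so speeds scale by k, accelerations by k², and duration by 1/k. Both cover the same distance.

```python
import math
from typing import Callable, Tuple

Profile = Tuple[Callable[[float], float], float]  # (velocity function v(t), duration)


def trapezoid_profile(distance: float, v_max: float, accel: float) -> Profile:
    """Rest-to-rest velocity profile whose speed never exceeds v_max."""
    t_acc = v_max / accel
    if accel * t_acc ** 2 >= distance:
        # Triangular profile: the cruise speed is never reached.
        t_acc = math.sqrt(distance / accel)
        v_peak, t_cruise = accel * t_acc, 0.0
    else:
        v_peak, t_cruise = v_max, (distance - accel * t_acc ** 2) / v_max
    duration = 2.0 * t_acc + t_cruise

    def v(t: float) -> float:
        if t <= 0.0 or t >= duration:
            return 0.0
        if t < t_acc:
            return accel * t                      # acceleration phase
        if t < t_acc + t_cruise:
            return v_peak                         # cruise phase
        return accel * (duration - t)             # deceleration phase

    return v, duration


def time_scaled(profile_v: Callable[[float], float], duration: float, k: float) -> Profile:
    """Slow a whole profile down: same path, speeds times k, duration divided by k."""
    if not 0.0 < k <= 1.0:
        raise ValueError("k must be in (0, 1]")
    return (lambda t: k * profile_v(k * t)), duration / k
```

For a 1.0 m move with a normal cruise speed of 0.5 m/s and 1.0 m/s² acceleration, capping at 0.25 m/s takes 4.25 s, while halving the whole pattern takes 5.0 s. The time-scaled version also softens accelerations, which may matter if actuator noise depends on acceleration as well as speed.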

- the operation control unit 140 determines the operation plan of the movable unit 40 based on the maximum value of the operation speed thus set and the voice recognition result, and operates the movable unit 40 according to the determined operation plan.

- the control device 100 may further include an image acquisition unit 150 that acquires an image.

- the image acquisition unit 150 acquires an image collected by an imaging device such as the camera 30, an image stored in a memory, an image transmitted via a communication connection, and the like.

- the operation control unit 140 may identify the name of the object to be moved and the movement destination from the voice recognition result, such as the text instruction acquired from the voice recognition unit 120, and determine the object to be moved and the movement destination in physical space based on the image acquired from the image acquisition unit 150.

- the operation control unit 140 determines an operation plan for moving the object to be moved from the movement source to the movement destination at the set operation speed, and operates the movable unit 40 according to the determined operation plan to move the object.

- the target object is moved from the source to the destination.

- the control device 100 may further include an image recognition unit 160 that recognizes the acquired image.

- the image recognition unit 160 executes the image recognition process on the image acquired by the image acquisition unit 150.

- the image recognition processing may be executed using any well-known image recognition technique, such as SSD (Single Shot MultiBox Detector), that detects objects around the robot apparatus 10 and predicts the name and position of each detected object.

- the image recognition unit 160 transmits the image recognition result (for example, the name and position of the object) acquired from the image to the operation control unit 140.
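Combining the text instruction with the image recognition result can be sketched as a name lookup over detections. This assumes the object name and destination name have already been extracted from the text instruction (that parsing step is out of scope here), and that each detection carries a name, a 3-D position, and a confidence score. `Detection`, `find_object`, and `resolve_move_task` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Position = Tuple[float, float, float]


@dataclass
class Detection:
    """One image recognition result (e.g. from an SSD-style detector)."""
    name: str
    position: Position
    score: float


def find_object(name: str, detections: List[Detection]) -> Optional[Detection]:
    """Highest-scoring detection whose name matches, or None."""
    matches = [d for d in detections if d.name == name]
    return max(matches, key=lambda d: d.score, default=None)


def resolve_move_task(object_name: str, destination_name: str,
                      detections: List[Detection]) -> Optional[Tuple[Position, Position]]:
    """Map names taken from the text instruction to positions in physical space."""
    source = find_object(object_name, detections)
    destination = find_object(destination_name, detections)
    if source is None or destination is None:
        return None  # e.g. ask the user again, or re-capture the image
    return source.position, destination.position
```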

- FIG. 7 is a flowchart showing a control process of the robot apparatus according to the embodiment of the present disclosure.

- the control process can be started in response to the control device 100 detecting a voice instruction from the user, for example.

- in the following, the image acquisition/recognition process is used to detect the object to be moved, but, as described above, the image acquisition/recognition process is not always necessary for the control process of the present disclosure.

- for example, when an industrial machine or the like is to perform a predetermined operation, operation control according to the voice recognition result can be realized without the image or the image recognition result.

- in step S101, the voice acquisition unit 110 acquires voice data. Specifically, the voice acquisition unit 110 acquires voice data indicating a voice instruction from the user from the microphone 20. Further, when the control device 100 has the image acquisition unit 150, the image acquisition unit 150 may acquire image data showing the periphery of the robot device 10 from the camera 30.

- in step S102, the voice recognition unit 120 executes voice recognition processing on the acquired voice data and converts the voice instruction into a text instruction.

- when the control device 100 includes the image recognition unit 160, the image recognition unit 160 executes image recognition processing on the acquired image data and detects the position of each object around the robot device 10; it may also detect the object names.

- in step S103, the voice determination unit 130 determines whether the acquired voice instruction of the user is a quiet voice, that is, a whisper or a small voice.

- if the voice instruction is not a quiet voice, the operation control unit 140 applies the normal operation speed as the operating speed of the movable unit 40 in step S104.

- if the voice instruction is a quiet voice, the operation control unit 140 applies the quiet operation speed, which is lower than the normal operation speed, as the operating speed of the movable unit 40 in step S105.

- in step S106, the operation control unit 140 determines an operation plan corresponding to the applied operating speed and the recognition result, and controls the movable unit 40 according to the determined operation plan. Specifically, the operation control unit 140 determines an operation plan for executing the recognized voice instruction and operates the movable unit 40 at the applied operating speed according to that plan.

- when the control device 100 includes the image acquisition unit 150, the operation control unit 140 may determine, based on the acquired image data, an operation plan for executing the recognized voice instruction, and operate the movable part 40 at the applied operating speed according to the determined operation plan.

- when the control device 100 includes the image recognition unit 160, the operation control unit 140 may determine, based on the image recognition result, an operation plan for executing the recognized voice instruction, and operate the movable part 40 at the applied operating speed according to the determined operation plan.
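Steps S101 to S106 can be sketched as a single control cycle in which the four functional units are injected as callables. This is an illustrative structure only: `ControlDevice`, its field names, and the callable signatures are hypothetical, and recognition, determination, and planning are stubbed out.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ControlDevice:
    acquire_voice: Callable[[], bytes]           # voice acquisition unit 110
    recognize: Callable[[bytes], str]            # voice recognition unit 120
    is_quiet_voice: Callable[[bytes], bool]      # voice determination unit 130
    execute_plan: Callable[[str, float], None]   # planning/actuation of operation control unit 140
    normal_speed: float
    quiet_speed: float

    def __post_init__(self) -> None:
        if not self.quiet_speed < self.normal_speed:
            raise ValueError("quiet speed must be lower than normal speed")

    def run_once(self) -> float:
        """One pass through S101-S106; returns the operating speed that was applied."""
        voice = self.acquire_voice()                 # S101: acquire voice data
        instruction = self.recognize(voice)          # S102: voice recognition
        if self.is_quiet_voice(voice):               # S103: quiet voice?
            speed = self.quiet_speed                 # S105: quiet operation speed
        else:
            speed = self.normal_speed                # S104: normal operation speed
        self.execute_plan(instruction, speed)        # S106: plan and operate at that speed
        return speed
```

Injecting the units as callables keeps the branch logic testable without a microphone or actuators; in a real system each callable would wrap the corresponding unit.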

- the operating speed of the movable portion 40 is not limited to the two discrete values of the normal operating speed and the quiet operating speed described above, and three or more discrete or continuous operating speeds may be set.

- the robot apparatus 10 may be set to have three or more stages of operation modes according to the degree of quietness, and the operation speed corresponding to each stage may be set.

- alternatively, a continuous operating speed corresponding to a numerical degree of quietness may be set.

- as the degree of quietness, the reliability of the quiet-voice determination may be used. The reliability may be calculated with an existing technique, such as the probability value of a two-class classification.
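Both variants can be sketched from a quiet-voice probability `p_quiet` in [0, 1], such as the output of a two-class classifier. Linear interpolation for the continuous case and equal-width bins for the staged case are assumptions; the text does not fix either mapping.

```python
from typing import Sequence


def _clamp01(x: float) -> float:
    return min(max(x, 0.0), 1.0)


def continuous_speed(p_quiet: float, normal_speed: float, quiet_speed: float) -> float:
    """Interpolate linearly: p_quiet = 0 -> normal speed, p_quiet = 1 -> quiet speed."""
    p = _clamp01(p_quiet)
    return normal_speed - p * (normal_speed - quiet_speed)


def staged_speed(p_quiet: float, stage_speeds: Sequence[float]) -> float:
    """Pick one of N stages; stage_speeds runs from least quiet (normal) to most quiet."""
    p = _clamp01(p_quiet)
    index = min(int(p * len(stage_speeds)), len(stage_speeds) - 1)
    return stage_speeds[index]
```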

- because the control device 100 determines from the user's voice instruction whether the user is speaking in a quiet voice, the user can make the robot device 10 operate in the quiet operation mode without explicitly including a quiet-operation instruction in the utterance.

- in the quiet operation mode described above, which is applied to the robot apparatus 10 when the voice is determined to be a quiet voice, the operating speed of each movable element of the movable portion 40 is uniformly set to the quiet operation speed.

- however, the movable elements generally produce different operating noises.

- therefore, the control device 100 may preferentially operate movable elements with relatively low operating noise. That is, the control device 100 determines the operation plan so as to execute the task, as far as possible, without operating movable elements with relatively high operating noise.

- FIG. 8 is a schematic diagram showing a robot apparatus 10 in which a movable element 46 is added to the configuration of FIG. 1 so that the entire apparatus can translate.

- the operation control unit 140 holds in advance priority order information indicating the priority of each movable element, as shown in FIG. 9, and when the voice determination unit 130 determines that the user's voice instruction is a quiet voice, it determines the operation plan of the movable unit 40 based on the priority information and the recognition result of the voice recognition unit 120.

- the illustrated priority information indicates that the movable element 45 having the priority 1 produces the smallest operating sound and the movable element 46 having the priority 6 produces the largest operating sound.

- first, the operation control unit 140 determines whether the task instructed by the user (for example, object movement processing) can be executed using only the movable element with priority 1. If so, it determines an operation plan that realizes the task by operating only the priority-1 element and operates only that element according to the plan.

- if the task cannot be executed by the priority-1 element alone, the operation control unit 140 determines whether the task can be realized by operating the movable elements with priorities 1 and 2.

- if so, the operation control unit 140 determines an operation plan that realizes the task by operating only the movable elements with priorities 1 and 2, and operates only those elements according to the plan.

- in the same manner, the operation control unit 140 adds movable elements to the combination to be operated one at a time, in priority order (1, 2, 3, ...), until it finds a combination of movable elements that can realize the task.

- the control process by the control device 100 for realizing the task by the robot device 10 described above may be executed according to a process flow as shown in FIG. 10, for example.

- the illustrated process flow focuses on control of the movable unit 40 when the user's voice instruction is a quiet voice. The voice recognition by the voice recognition unit 120 and the determination of whether the voice instruction is a quiet voice are the same as steps S101 to S103 in FIG. 7, and redundant description is omitted.

- in step S202, the operation control unit 140 adds the movable element of the movable unit 40 with priority i to the set M of movable elements to be combined. Since the index i is initially 1, the operation control unit 140 first adds the movable element with priority 1 to the set M.

- in step S203, the operation control unit 140 determines whether the task instructed by the user can be realized by combining the movable elements of the set M. For example, when the task is to move a specified object to a destination, the operation control unit 140 may determine whether operating the combination of movable elements allows the end effector 45 to grip the specified object, and whether the gripped object can be moved to the destination.

- if the task can be realized, in step S204 the operation control unit 140 determines an operation plan for executing the task with the movable elements of the current set M and operates the movable elements of the set M according to the determined plan.

- if the task cannot be realized, the operation control unit 140 increments the index i by 1 in step S205 and repeats step S202.

- each movable element may be operable at both a normal operation speed and a quiet operation speed, and a priority order for normal operation and a priority order for quiet operation may be defined for each movable element.
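The loop of steps S202 to S205 amounts to growing the set M in priority order until a feasibility check passes. In this sketch `can_realize` is a hypothetical callback standing in for the motion-planning check of step S203, and element identifiers are plain strings.

```python
from typing import Callable, FrozenSet, Optional, Sequence


def select_movable_elements(
        priority_order: Sequence[str],
        can_realize: Callable[[FrozenSet[str]], bool]) -> Optional[FrozenSet[str]]:
    """Grow set M in priority order until the task becomes feasible (cf. S202-S205).

    priority_order[0] has priority 1 (the quietest element).
    """
    m = set()                                # set M of movable elements
    for element in priority_order:           # i = 1, 2, ...
        m.add(element)                       # S202: add the element with priority i
        if can_realize(frozenset(m)):        # S203: is the task feasible with M?
            return frozenset(m)              # S204: plan and operate only M
        # otherwise S205: increment i and repeat S202
    return None                              # no combination realizes the task
```

Per-mode priorities, as in the last item above, fit by passing a different `priority_order` for normal and quiet operation. In the test, only the first (45) and last (46) positions follow FIG. 9 as described; the middle order is an assumption.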

- in another embodiment, the operation control unit 140 controls the robot apparatus 10 according to the sound pressure level of the environmental sound acquired by the voice acquisition unit 110.

- the control device 100 continues to acquire, via the microphone 20, the operation sound generated by the robot device 10 while the operation plan is being executed, and, when the voice instruction was given in a quiet voice, controls the movable part 40 so that the sound pressure level of the operation sound is equal to or less than a predetermined value.

- for example, the operation control unit 140 acquires the sound collected by the robot apparatus 10 during operation of the movable unit 40 and, when the voice instruction was given in a quiet voice, controls the operation of the movable part 40 so that the increase in the sound pressure level during operation over the sound pressure level while the movable unit 40 is not operating is equal to or less than a predetermined amount.

- the robot apparatus 10 may have the same configuration as that shown in FIG.

- FIG. 11 is a flowchart showing the control processing of the robot apparatus according to another embodiment of the present disclosure. It should be noted that in the following embodiments, the image acquisition/recognition process is used to detect the object to be moved, but the image acquisition/recognition process is not necessarily essential to the control process of the present disclosure, as described above.

- In step S301, the voice acquisition unit 110 and the image acquisition unit 150 acquire voice data and image data, respectively.

- the voice acquisition unit 110 continues to acquire voice data indicating a voice instruction from the user from the microphone 20 regardless of the operating state of the movable unit 40.

- This allows the sound pressure level of the environmental sound, that is, the sound pressure level when the movable portion 40 is not in operation, to be measured.

- the image acquisition unit 150 continues to acquire image data showing the periphery of the robot device 10 from the camera 30.

- In step S302, the voice recognition unit 120 determines whether or not the acquired voice data includes a voice instruction uttered by the user.

- the operation control unit 140 measures the sound pressure level L of the environmental sound collected by the microphone 20 in step S303.

- Some environmental sound exists around the robot apparatus 10 even when it is not operating, so this baseline must be measured in order to evaluate the operating noise generated by the operation. Further, since the environmental sound may fluctuate, the operation control unit 140 may measure the sound pressure level L of the environmental sound periodically. Because the measurement is repeated in this way, the process returns to step S301 after each measurement.

- In step S304, the voice recognition unit 120 and the image recognition unit 160 execute the voice recognition process and the image recognition process, respectively.

- the voice recognition unit 120 performs voice recognition processing on the acquired voice data and converts the voice instruction into a text instruction.

- the image recognition unit 160 also performs image recognition processing on the acquired image data to detect the position of each object around the robot apparatus 10. Further, the object name may be detected.

- In step S305, the voice determination unit 130 determines whether the acquired voice instruction of the user is a quiet voice, that is, a whisper or a low voice.

- If the voice is not a quiet voice (S305: NO), in step S306 the operation control unit 140 applies the normal operation speed as the operating speed of the movable unit 40, determines an operation plan corresponding to the normal operation speed and the recognition result, and controls the movable part 40 according to the determined operation plan.

- If the voice is a quiet voice (S305: YES), in step S307 the operation control unit 140 applies the quiet operation speed as the operating speed of the movable unit 40, determines an operation plan corresponding to the quiet operation speed and the recognition result, and starts controlling the movable part 40 according to the determined operation plan.

- In step S308, the operation control unit 140 measures the sound pressure level L′ around the robot apparatus 10.

- While the movable part 40 is operating, the sound around the robot apparatus 10, including the operating sound, is collected by the microphone 20, and the sound pressure level is measured from the collected sound.

- In step S309, the operation control unit 140 determines whether the increase of the sound pressure level L′ around the robot apparatus 10 during operation, which includes the acquired operating sound, over the sound pressure level L of the environmental sound when the movable unit 40 is not operating is less than a predetermined increase amount $\alpha$.

- If the sound pressure level L′ is lower than L + $\alpha$, that is, $L' < L + \alpha$ (S309: YES), the operation control unit 140 judges that the increase in sound pressure due to the operating sound is held below the predetermined increase amount $\alpha$, and the process proceeds to step S311 with the current operation plan maintained.

- If $L' \ge L + \alpha$ (S309: NO), in step S310 the operation control unit 140 determines that the increase in sound pressure due to the operating sound is too large and revises the operation plan so as to reduce the operating speed of the movable portion 40. Specifically, the operation control section 140 reduces the operating speed of the movable section 40 by a small amount $\delta$. Alternatively, the operation control section 140 may multiply the operating speed of the movable section 40 by $\beta$ ($\beta < 1$). A minimum value for the maximum operating speed of the movable portion 40 may also be determined in advance so that the robot device 10 does not stop operating. After the operation of the movable part 40 has been adjusted according to the revised operation plan, the process proceeds to step S311.

- In step S311, the operation control unit 140 determines whether the operation of the movable unit 40 based on the recognition result has been completed. If it has (S311: YES), the control process ends. If not (S311: NO), the process returns to steps S308 and S309, where the operation control unit 140 judges whether the revised operation plan keeps the increase in sound pressure level due to the operating sound below the predetermined threshold $\alpha$.

- The operation control unit 140 continues to monitor the sound pressure level until the operation of the movable unit 40 is completed, and repeats steps S308 to S310 until the condition $L' < L + \alpha$ is satisfied.
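A minimal Python sketch of the monitoring loop of steps S308 to S311 follows. It is illustrative only and rests on assumptions: levels are in dB, the speed is reduced either multiplicatively by a factor `beta` below 1 or subtractively by `delta` (the two variants named in step S310), `v_min` is the predetermined floor, and, because no microphone is available here, a hypothetical toy model in which the excess level grows linearly with speed (`noise_per_speed`) stands in for the measurement of step S308.

```python
from typing import Optional


def adjust_speed(v: float, l_op: float, l_env: float, alpha: float, v_min: float,
                 delta: Optional[float] = None, beta: Optional[float] = None) -> float:
    """One pass of steps S309/S310 (hypothetical helper).

    v: current maximum operating speed; l_op: level L' during operation (dB);
    l_env: environmental level L when not operating (dB); alpha: allowed increase (dB).
    """
    if l_op < l_env + alpha:      # S309: YES, keep the current operation plan
        return v
    if beta is not None:          # S310, multiplicative variant (beta < 1)
        v_new = v * beta
    elif delta is not None:       # S310, subtractive variant (small amount delta)
        v_new = v - delta
    else:
        raise ValueError("specify either delta or beta")
    return max(v_new, v_min)      # never drop below the predetermined minimum


def run_quiet_loop(v: float, l_env: float, alpha: float, v_min: float,
                   noise_per_speed: float, beta: float = 0.9, max_steps: int = 100) -> float:
    """Repeat S308 to S310 until L' < L + alpha or the speed floor is reached."""
    for _ in range(max_steps):
        l_op = l_env + noise_per_speed * v   # toy stand-in for the S308 measurement
        v_next = adjust_speed(v, l_op, l_env, alpha, v_min, beta=beta)
        if v_next == v:                      # condition met, or already at v_min
            return v
        v = v_next
    return v


# Settles at 0.9**12 (about 0.28), the first speed whose modelled excess is below 3 dB.
print(run_quiet_loop(v=1.0, l_env=40.0, alpha=3.0, v_min=0.1, noise_per_speed=10.0))
```

The floor `v_min` reflects the predetermined minimum of the maximum operating speed described in step S310, so the loop terminates even if the threshold can never be met.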

- the operation control unit 140 may decrease the operation speed of the movable unit 40 by individually adjusting each movable element when decreasing the operation speed in step S310.

- For example, the operating noise of the robot apparatus 10 as a whole may be reduced by decreasing the operating speed of, or stopping, movable elements that produce large operating noise, while operating movable elements that produce small operating noise according to the priority order.

- The operation control unit 140 may set the operating speed of the movable unit 40 to the quiet operation speed only when the voice is a quiet voice and the sound pressure level L of the environmental sound is less than a predetermined value. That is, when the sound pressure level L of the environmental sound while the movable unit 40 is not operating is equal to or higher than the predetermined value, the operation control unit 140 may set the operating speed of the movable unit 40 to the normal operating speed regardless of whether the voice is a quiet voice. In that case the robot apparatus 10 does not need to operate quietly, so even when a quiet voice is detected, the operation control unit 140 may operate the movable part 40 at the normal operation speed without measuring the sound pressure level L′ during operation.
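The gating rule above amounts to a small decision function, sketched here in Python. The function name, the dB units and the returned flag (whether L′ should be monitored during operation) are assumptions made for illustration.

```python
from typing import Tuple


def choose_mode(is_quiet_voice: bool, ambient_db: float, ambient_limit_db: float,
                v_normal: float, v_whisper: float) -> Tuple[float, bool]:
    """Return (operating speed, whether to monitor L' during operation).

    Quiet operation is used only when the instruction was a quiet voice AND the
    environmental level L measured while idle is below the predetermined value.
    """
    if is_quiet_voice and ambient_db < ambient_limit_db:
        return v_whisper, True    # quiet mode: keep checking L' < L + alpha
    return v_normal, False        # normal voice or loud surroundings: L' need not be measured


print(choose_mode(True, 35.0, 50.0, v_normal=1.0, v_whisper=0.3))  # (0.3, True)
print(choose_mode(True, 60.0, 50.0, v_normal=1.0, v_whisper=0.3))  # (1.0, False)
```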

- the robotic device 10 may be configured to include multiple microphones 20.

- the operation control unit 140 may use only one of the plurality of microphones 20 for measuring the sound pressure level of the environmental sound.

- the operation control unit 140 may use the maximum value of the sound pressure levels measured by the plurality of microphones 20 as the sound pressure level of the environmental sound.

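- When a plurality of microphones 20 are used, the operation control section 140 may adjust the operating speed of the movable section 40 so as to satisfy $\max_i \left(L'_i - (L_i + \alpha)\right) < 0$, where $L_i$ and $L'_i$ are the sound pressure levels measured by the $i$-th microphone when the movable section 40 is not operating and while it is operating, respectively.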
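With several microphones, the condition above says that no microphone may observe an increase of $\alpha$ or more. A Python sketch, assuming `l_env[i]` and `l_op[i]` hold the idle and in-operation levels (dB) of microphone i; the function name is hypothetical.

```python
from typing import Sequence


def within_limit(l_env: Sequence[float], l_op: Sequence[float], alpha: float) -> bool:
    """True when max_i (L'_i - (L_i + alpha)) < 0 across all microphones."""
    if len(l_env) != len(l_op) or not l_env:
        raise ValueError("need one idle and one in-operation level per microphone")
    return max(lo - (le + alpha) for le, lo in zip(l_env, l_op)) < 0


print(within_limit([40.0, 45.0], [42.0, 47.0], alpha=3.0))  # True: both rise by 2 dB
print(within_limit([40.0, 45.0], [44.0, 47.0], alpha=3.0))  # False: microphone 0 rises by 4 dB
```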

- This makes it possible to adjust the operating speed in consideration of the environmental sound while the robot apparatus 10 operates, and to operate the robot apparatus 10 quietly only when quiet operation is necessary.

- The operation control unit 140 controls the robot apparatus 10 according to the distance between the robot apparatus 10 and the speaker of the voice.

- the voice determination unit 130 corrects the voice according to the distance between the sound collection device and the user, and determines whether the corrected voice is a quiet voice. Whether or not the voice is a low voice is determined by whether or not the sound pressure level of the voice acquired from the microphone 20 is less than a predetermined value.

- the voice determination unit 130 may correct the sound pressure level of the voice to be determined according to the distance between the user and the microphone 20.

- The distance between the user and the microphone 20 may be estimated by any appropriate method, for example the distance estimation method using a plurality of microphone arrays described in Hui Liu and Evangelos Milios, "Acoustic positioning using multiple microphone arrays", The Journal of the Acoustical Society of America 117, 2772 (2005). Alternatively, the distance may be estimated from the size of the user's face in the image acquired from the camera 30, or measured with a distance sensor such as an infrared distance sensor or a laser distance sensor.

- Attenuation coefficients used to correct the sound pressure level may be specified in advance according to distance.

- The voice determination unit 130 may correct the sound pressure level measured by the microphone 20 using the attenuation coefficient corresponding to the estimated distance, and determine from the corrected sound pressure level whether the user's voice is a quiet voice.
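The disclosure leaves the attenuation coefficient to be specified in advance. As one possible choice, and purely as an assumption, the Python sketch below uses the free-field inverse-square law (about 6 dB lower level per doubling of distance) to refer the measured level back to a reference distance before comparing it with a quietness threshold. The 1 m reference distance and the function names are hypothetical.

```python
import math


def corrected_level(measured_db: float, distance_m: float, ref_distance_m: float = 1.0) -> float:
    """Refer a level measured at distance_m back to ref_distance_m.

    Assumes free-field spherical spreading (inverse-square law): the level
    falls by 20*log10(d2/d1) dB, i.e. about 6 dB per doubling of distance.
    """
    if distance_m <= 0 or ref_distance_m <= 0:
        raise ValueError("distances must be positive")
    return measured_db + 20.0 * math.log10(distance_m / ref_distance_m)


def is_quiet_voice(measured_db: float, distance_m: float, threshold_db: float,
                   ref_distance_m: float = 1.0) -> bool:
    """Quiet if the distance-corrected level is below the threshold."""
    return corrected_level(measured_db, distance_m, ref_distance_m) < threshold_db


print(round(corrected_level(44.0, 2.0), 2))          # 50.02
print(is_quiet_voice(44.0, 2.0, threshold_db=50.0))  # False: about 50 dB when referred to 1 m
```

Without the correction, the same 44 dB reading would be classed as quiet regardless of how far away the user stood.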

- The robot apparatus 10 further has a light emitting device, such as a light that illuminates the surroundings of the robot apparatus 10 or a display that presents information to the user. The operation control unit 140 controls the robot device 10 so that the light amount of its light emitting device when the determination result of the voice determination unit 130 is a quiet voice is smaller than the light amount when the determination result is not a quiet voice.

- When operating the robot apparatus 10 in the quiet operation mode, the operation control unit 140 causes the light to emit light with a lower light amount than in the normal operation mode, or turns the light off.

- When operating the robot apparatus 10 in the quiet operation mode, the operation control unit 140 may make the brightness of the display lower than in the normal operation mode, or turn the display off.

- the adjustment of the light amount or the brightness of the light emitting device may be performed in combination with the adjustment of the operating speed of the movable portion 40, or may be performed independently. Further, the operation control unit 140 may cause the light emitting device to emit light in different colors in the normal operation mode and the quiet operation mode.

- The operation control unit 140 may control the light emission amount of the robot apparatus 10 according to the determination result of the voice determination unit 130 without controlling the movable portion of the robot apparatus 10. This provides a technique for controlling the light emission amount of a controlled device based on paralinguistic information of the voice.

- the voice acquisition unit 110 acquires the voice collected by the sound collector, and the voice determination unit 130 determines whether or not the voice is a quiet voice.

- The robot apparatus 10 can move by moving means such as wheels or legs.

- The operation control unit 140 switches the operating speed of the movable unit 40 according to the distance between the moving robot apparatus 10 and the user. Specifically, when the robot apparatus 10 is movable and the voice uttered by the user near the robot apparatus 10 is a quiet voice, the operation control unit 140 first operates the movable unit 40 at the quiet operation speed.

- Once the robot apparatus 10 has moved away from the user, the movable unit 40 is operated at the normal operation speed.

- When the voice instruction from the user is a quiet voice, the operation control unit 140 first reduces the maximum value $v_{\max}$ of the operating speed of the movable unit 40 to the quiet operating speed $v_{\mathrm{whisper}}$, as in the first embodiment described above. After that, when the distance $d$ between the robot apparatus 10 and the user, or the position at which the voice instruction was received, becomes larger than a predetermined threshold $d_c$, the operation control unit 140 sets $v_{\max}$ to the normal operation speed $v_{\mathrm{normal}}$ ($> v_{\mathrm{whisper}}$). That is, the operation control unit 140 controls the maximum value $v_{\max}$ of the operating speed according to the following formula:

$$v_{\max} = \begin{cases} v_{\mathrm{whisper}} & (d \le d_c) \\ v_{\mathrm{normal}} & (d > d_c) \end{cases}$$

- The normal operation speed $v_{\mathrm{normal}}$, the quiet operation speed $v_{\mathrm{whisper}}$, and the threshold $d_c$ may be set for each movable element of the movable portion 40. Alternatively, only some of these three values may be set in common for all movable elements, or the values may be shared among a subset of the movable elements.

- The operating speed according to the present disclosure need not take only two discrete values such as the normal operating speed and the quiet operating speed; depending on the distance between the moving robot apparatus 10 and the user, it may take three or more discrete values or continuous values.
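The step rule for v_max and one possible continuous variant can be sketched in Python as follows. The linear ramp between `d_c` and a second distance `d_far` is an assumption made for illustration; the text only states that continuous values may be used. Both function names are hypothetical.

```python
def v_max_step(d: float, d_c: float, v_whisper: float, v_normal: float) -> float:
    """Two-level rule: quiet speed within d_c of the user, normal speed beyond it."""
    return v_whisper if d <= d_c else v_normal


def v_max_ramp(d: float, d_c: float, d_far: float, v_whisper: float, v_normal: float) -> float:
    """Hypothetical continuous variant: ramp linearly from v_whisper at d_c to v_normal at d_far."""
    if d_far <= d_c:
        raise ValueError("d_far must be larger than d_c")
    if d <= d_c:
        return v_whisper
    if d >= d_far:
        return v_normal
    t = (d - d_c) / (d_far - d_c)          # 0 at d_c, 1 at d_far
    return v_whisper + t * (v_normal - v_whisper)


print(v_max_step(1.5, d_c=2.0, v_whisper=0.2, v_normal=1.0))  # 0.2
print(v_max_ramp(3.0, d_c=2.0, d_far=4.0, v_whisper=0.2, v_normal=1.0))
```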

- the operation control unit 140 may reset the operation speed to the quiet operation speed.

- When the voice instruction from the user is a quiet voice, the operation control unit 140 first sets the threshold $\alpha$ of the sound pressure increase due to the operating sound to the quiet-operation-mode threshold $\alpha_{\mathrm{whisper}}$. After that, when the distance $d$ between the robot apparatus 10 and the user, or the position of the voice instruction, becomes larger than a predetermined threshold $d_c$, the operation control unit 140 sets $\alpha$ to the normal-operation-mode threshold $\alpha_{\mathrm{normal}}$ ($> \alpha_{\mathrm{whisper}}$). That is, the operation control unit 140 controls the threshold $\alpha$ of the sound pressure increase according to the following formula:

$$\alpha = \begin{cases} \alpha_{\mathrm{whisper}} & (d \le d_c) \\ \alpha_{\mathrm{normal}} & (d > d_c) \end{cases}$$

- The sound pressure increase threshold $\alpha$ need not take only these two discrete values; it may take three or more discrete values or continuous values.

- The voice determination unit 130 may determine whether or not the voice is a low voice based on the volume of the voice after correction according to the distance between the sound collector and the speaker.

- In this way, the robot apparatus 10 operates in the quiet operation mode near the user or the voice instruction position and in the normal operation mode far from them, so the task can be performed efficiently while quietness is maintained.

- the operation control unit 140 controls the robot apparatus 10 according to the determination result of the voice determination unit 130 and the current time. Specifically, the operation control unit 140 sets the operation speed of the movable unit 40 to the quiet operation speed when the voice instruction from the user is a quiet voice and the current time is within a predetermined time zone.

- For example, the user presets in the control device 100 a time period, such as midnight or early morning, during which the robot device 10 should operate quietly.

- The operation control unit 140 determines whether the voice instruction was issued within the set time period, and if so, sets the operating speed of the movable portion 40 to the quiet operating speed. On the other hand, when the voice instruction is issued outside the set time period, the operation control unit 140 sets the operating speed of the movable unit 40 to the normal operating speed.

- Thus, outside the time period in which quiet operation is required, the robot apparatus 10 operates in the normal operation mode regardless of whether the voice instruction is a quiet voice, so the robot apparatus 10 can operate efficiently.
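Checking whether an instruction falls inside a preset window needs care when the window spans midnight (for example 22:00 to 06:00). A minimal Python sketch using the standard `datetime.time` type; the window bounds and the function names are assumptions for illustration.

```python
from datetime import time


def in_window(now: time, start: time, end: time) -> bool:
    """True if now lies in [start, end); the window may wrap past midnight."""
    if start <= end:
        return start <= now < end
    return now >= start or now < end   # e.g. 22:00 to 06:00


def speed_for(is_quiet_voice: bool, now: time, start: time, end: time,
              v_normal: float, v_whisper: float) -> float:
    """Quiet speed only for a quiet voice issued inside the preset window."""
    if is_quiet_voice and in_window(now, start, end):
        return v_whisper
    return v_normal


night_start, night_end = time(22, 0), time(6, 0)   # hypothetical user setting
print(speed_for(True, time(23, 30), night_start, night_end, 1.0, 0.3))  # 0.3
print(speed_for(True, time(14, 0), night_start, night_end, 1.0, 0.3))   # 1.0
```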

- The voice determination unit 130 may determine whether or not the voice is a low-speech-rate utterance.

- The operation control unit 140 then controls the robot device 10 according to whether or not the voice is a low-speech-rate utterance.

- Specifically, the operation control unit 140 operates the movable part 40 according to the recognition result at an operating speed that depends on whether the voice is a low-speech-rate utterance.

- Here, a low-speech-rate utterance means an utterance spoken slowly, that is, with a small number of phonemes or morae per unit time.

- Specifically, the voice determination unit 130 calculates the number of phonemes or morae per unit time from the length of the phoneme sequence or mora sequence acquired by the voice acquisition unit 110 and the duration of the utterance, and determines whether the calculated number is less than a predetermined threshold. If it is less than the threshold, the voice determination unit 130 determines that the voice instruction is a low-speech-rate utterance; if it is equal to or greater than the threshold, the voice determination unit 130 determines that the voice instruction is not a low-speech-rate utterance.

- A threshold may be set for each speaker. This can be realized by identifying the speaker with a known speaker recognition technique and then using the threshold corresponding to the identified speaker.

- The threshold for each speaker is calculated from that speaker's voice input to the control device 100. For example, the average speaking speed of speaker x over the past time T is obtained, and the value obtained by subtracting a predetermined constant from that average is set as the threshold for speaker x.

- Here, T may be any positive value.

- Alternatively, the average over the past N utterances may be used (N is a natural number).
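The per-speaker rule can be sketched in Python using the past-N-utterances variant. This is an illustration under assumptions: the speaker label is supplied by some external speaker recognizer, rates are morae (or phonemes) per second, and the class name, the margin of 1.0 and the default threshold used before a speaker has any history are all hypothetical values.

```python
from collections import defaultdict, deque
from statistics import mean
from typing import DefaultDict, Deque


class SpeechRateJudge:
    """Hypothetical per-speaker low-speech-rate detector.

    rate = morae (or phonemes) per second of one utterance.
    threshold(x) = mean rate of speaker x's last n_history utterances - margin,
    or default_threshold while speaker x has no history yet.
    """

    def __init__(self, n_history: int = 10, margin: float = 1.0,
                 default_threshold: float = 6.0) -> None:
        self.margin = margin
        self.default_threshold = default_threshold
        self.history: DefaultDict[str, Deque[float]] = defaultdict(
            lambda: deque(maxlen=n_history))

    def threshold(self, speaker: str) -> float:
        past = self.history[speaker]
        return mean(past) - self.margin if past else self.default_threshold

    def is_slow(self, speaker: str, n_units: int, duration_s: float) -> bool:
        if duration_s <= 0:
            raise ValueError("utterance duration must be positive")
        rate = n_units / duration_s
        slow = rate < self.threshold(speaker)   # judge against past behaviour
        self.history[speaker].append(rate)      # then add this utterance
        return slow


judge = SpeechRateJudge(n_history=3)
print(judge.is_slow("x", 35, 5.0))  # False: 7.0 morae/s vs default 6.0
print(judge.is_slow("x", 40, 5.0))  # False: 8.0 vs 6.0 (mean 7.0 minus 1.0)
print(judge.is_slow("x", 30, 5.0))  # True: 6.0 vs 6.5 (mean 7.5 minus 1.0)
```

Judging before appending keeps an unusually slow utterance from lowering its own threshold.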

- When the voice is a low-speech-rate utterance, the operation control unit 140 may set the robot apparatus 10 to the quiet operation mode and operate the movable unit 40 at a low-speech-rate operating speed that is lower than the quiet operating speed.

- In the embodiments above, the operating speed of the movable portion 40 is set to two discrete values, the normal operating speed and the quiet operating speed, but the present invention is not limited to this; three or more discrete operating speeds, or continuous operating speeds, may be set.

- For example, the robot apparatus 10 may have three or more stages of operation modes according to the degree of quietness, with an operating speed set for each stage.

- Alternatively, a continuous operating speed corresponding to a numerical degree of quietness may be set.
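Both variants can be sketched in Python, assuming the voice determination unit 130 outputs a quietness degree q normalised to [0, 1], with 1 the quietest. The linear mapping, the stage boundaries, the per-stage speeds and the function names are hypothetical; the only property taken from the text is that a quieter voice yields a slower movable unit.

```python
from bisect import bisect_right
from typing import Sequence


def speed_from_quietness(q: float, v_normal: float, v_min: float) -> float:
    """Continuous variant: q = 0 gives v_normal, q = 1 gives v_min, linear in between."""
    if not 0.0 <= q <= 1.0:
        raise ValueError("quietness degree must lie in [0, 1]")
    return v_normal - q * (v_normal - v_min)


def stage_from_quietness(q: float, boundaries: Sequence[float]) -> int:
    """Stepped variant: boundaries [0.33, 0.66] give stages 0 (normal), 1 and 2 (quietest)."""
    return bisect_right(boundaries, q)


stage_speeds = [1.0, 0.5, 0.25]            # hypothetical speed per stage
print(stage_speeds[stage_from_quietness(0.8, [0.33, 0.66])])  # 0.25
```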

- The phrase "the voice determination unit 130 determines whether or not the voice is a quiet voice" is not limited to a binary determination of whether the voice is a quiet voice; it also includes calculating a degree of quietness of the voice. Furthermore, when the voice determination unit 130 calculates the degree of quietness, "the operation control unit 140 controls the controlled device so that the sound pressure level of the sound emitted by the movable part of the controlled device when the determination result of the voice determination unit 130 is a quiet voice is lower than the sound pressure level of the sound emitted by the movable part of the controlled device when the determination result of the voice determination unit 130 is not a quiet voice" includes "controlling the controlled device so that the sound pressure level of the sound emitted by the movable part of the controlled device when the calculated degree of quietness is high is lower than the sound pressure level of the sound emitted by the movable part of the controlled device when the calculated degree of quietness is low".

- The robot device 10 may be provided with a movable element of the movable unit 40 that is specialized for the quiet operation mode (for example, one that is fragile or costly to move), and this specialized movable element may be used in the quiet operation mode.

[Modification of robot device]

- A robot apparatus according to another embodiment of the present disclosure will be described with reference to FIG. 12.

- FIG. 12 is a schematic diagram showing a robot apparatus according to another embodiment of the present disclosure.

- The control device 100 may be external to the robot device 10. For example, the control device 100 may acquire the voice data and image data captured by the microphone 20 and the camera 30 via a wireless connection, and transmit to the robot apparatus 10, via the wireless connection, the operation instructions for the movable unit 40 determined by the above-described control processing based on those data.

- the robot apparatus 10 operates the movable section 40 according to the received operation instruction.

- the control device 100 does not necessarily have to be incorporated in the robot device 10.

- the robot device 10 may be remotely operated by the control device 100 connected by communication.

- each function may be a circuit including an analog circuit, a digital circuit, or an analog/digital mixed circuit.

- a control circuit for controlling each function may be provided.

- Each circuit may be mounted by an ASIC (Application Specific Integrated Circuit), an FPGA (Field Programmable Gate Array), or the like.

- The control device 100 may be configured by hardware or by software; in the latter case, a CPU (Central Processing Unit) or the like performs the information processing of the software.

- A program that implements the control device 100 or at least part of its functions may be stored in a storage medium and read and executed by a computer.

- The storage medium is not limited to a removable medium such as a magnetic disk (for example, a flexible disk) or an optical disk (for example, a CD-ROM or a DVD-ROM), and may be a fixed storage medium such as a hard disk device or an SSD (Solid State Drive) using memory.

- In this way, information processing by software may be concretely implemented using hardware resources. Further, the processing by software may be implemented in a circuit such as an FPGA and executed by hardware. Jobs may also be executed using an accelerator such as a GPU (Graphics Processing Unit).

- the computer can be used as the device of the above-described embodiment by the computer reading the dedicated software stored in the computer-readable storage medium.

- the type of storage medium is not particularly limited.

- the computer can be the device of the above-described embodiment by installing the dedicated software downloaded via the communication network by the computer. In this way, information processing by software is specifically implemented using hardware resources.

- FIG. 13 is a block diagram showing an example of the hardware configuration according to the embodiment of the present invention.

- The control device 100 includes a processor 101, a main storage device 102, an auxiliary storage device 103, a network interface 104, and a device interface 105, and can be realized as a computer device in which these components are connected via a bus 106.

- The control device 100 in FIG. 13 includes one of each component, but may include a plurality of the same component. Further, although one control device 100 is shown, the software may be installed on a plurality of computer devices, each of which executes a different part of the software's processing. In this case, the plurality of control devices 100 may communicate with one another via the network interface 104 or the like.

- The processor 101 is an electronic circuit (processing circuit or processing circuitry) that includes the control unit and the arithmetic unit of the control device 100.

- the processor 101 performs arithmetic processing based on data and programs input from each device of the internal configuration of the control device 100, and outputs a calculation result and a control signal to each device.

- the processor 101 controls each constituent element of the control device 100 by executing an OS (Operating System) of the control device 100, an application, or the like.

- the processor 101 is not particularly limited as long as it can perform the above processing.

- the control device 100 and each component thereof are realized by the processor 101.

- The processing circuit may refer to one or more electric circuits arranged on one chip, or one or more electric circuits arranged on two or more chips or devices. When a plurality of electronic circuits are used, they may communicate with one another by wire or wirelessly.

- the main storage device 102 is a storage device that stores instructions executed by the processor 101 and various data, and the information stored in the main storage device 102 is directly read by the processor 101.

- the auxiliary storage device 103 is a storage device other than the main storage device 102. Note that these storage devices mean arbitrary electronic components capable of storing electronic information, and may be a memory or a storage.

- the memory includes a volatile memory and a non-volatile memory, but either may be used.

- A memory that stores various data in the control device 100 may be realized by the main storage device 102 or the auxiliary storage device 103; for example, at least part of the memory may be implemented in either of them.

- When an accelerator is provided, at least part of the memory described above may be implemented in the memory provided in the accelerator.

- the network interface 104 is an interface for connecting to the communication network 200 wirelessly or by wire.

- the network interface 104 may be one that conforms to the existing communication standard.

- the network interface 104 may exchange information with the external device 300A that is communicatively connected via the communication network 200.

- The external device 300A includes, for example, a camera, a motion capture device, an output destination device, an external sensor, and an input source device. The external device 300A may also be a device having the functions of some of the components of the control device 100, and the control device 100 may then receive part of its processing results via the communication network 200, as with a cloud service.

- the device interface 105 is an interface such as a USB (Universal Serial Bus) directly connected to the external device 300B.

- the external device 300B may be an external storage medium or a storage device.

- the memory may be implemented by the external device 300B.

- the external device 300B may be an output device.

- the output device may be, for example, a display device for displaying an image, a device for outputting sound, or the like.

- the display device may be, for example, an LCD (Liquid Crystal Display), a CRT (Cathode Ray Tube), a PDP (Plasma Display Panel), or an organic EL (ElectroLuminescence) display.

- the external device 300B may be an input device.

- the input device includes devices such as a keyboard, a mouse, a touch panel, and a microphone, and gives information input by these devices to the control device 100.

- the signal from the input device is output to the processor 101.

- The voice acquisition unit 110, the voice recognition unit 120, the voice determination unit 130, the operation control unit 140, the image acquisition unit 150, the image recognition unit 160, and the like of the control device 100 according to the present embodiment may be realized by the processor 101.

- the memory of the control device 100 may be realized by the main storage device 102 or the auxiliary storage device 103.

- the control device 100 may include one or more memories.

- The expression "at least one of a, b and c" covers not only the combinations a, b, c, ab, ac, bc and abc, but also combinations containing multiple instances of the same element, such as aa, abb and aabbbc. It also covers configurations that include elements other than a, b and c, such as the combination abcd.

- The expression "at least one of a, b or c" covers not only the combinations a, b, c, ab, ac, bc and abc, but also combinations containing multiple instances of the same element, such as aa, abb and aabbbcc. It also covers configurations that include elements other than a, b and c, such as the combination abcd.

- 10 robot device, 20 microphone, 30 camera, 40 movable part, 100 control device, 101 processor, 102 main storage device, 103 auxiliary storage device, 104 network interface, 105 device interface, 110 voice acquisition unit, 120 voice recognition unit, 130 voice determination unit, 140 operation control unit, 150 image acquisition unit, 160 image recognition unit, 200 communication network, 300 external device

Landscapes

- Engineering & Computer Science (AREA)

- Multimedia (AREA)

- Human Computer Interaction (AREA)

- Physics & Mathematics (AREA)

- Audiology, Speech & Language Pathology (AREA)

- Health & Medical Sciences (AREA)

- Robotics (AREA)

- Mechanical Engineering (AREA)

- Computational Linguistics (AREA)

- Acoustics & Sound (AREA)

- General Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Signal Processing (AREA)

- Automation & Control Theory (AREA)

- General Health & Medical Sciences (AREA)

- General Engineering & Computer Science (AREA)

- Manipulator (AREA)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| US17/443,548 US20210354300A1 (en) | 2019-01-30 | 2021-07-27 | Controller, controlled apparatus, control method, and recording medium |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| JP2019-014743 | 2019-01-30 | ||

| JP2019014743A JP2020121375A (ja) | 2019-01-30 | 2019-01-30 | Control device, controlled device, control method, and program |

Related Child Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| US17/443,548 Continuation US20210354300A1 (en) | 2019-01-30 | 2021-07-27 | Controller, controlled apparatus, control method, and recording medium |

Publications (1)

| Publication Number | Publication Date |

|---|---|

| WO2020158231A1 (ja) | 2020-08-06 |

Family

ID=71840834

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| PCT/JP2019/049803 Ceased WO2020158231A1 (ja) | 2019-01-30 | 2019-12-19 | Control device, controlled device, control method, and storage medium |

Country Status (3)

| Country | Link |

|---|---|

| US (1) | US20210354300A1 |

| JP (1) | JP2020121375A |

| WO (1) | WO2020158231A1 |

Cited By (1)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN114690653A (zh) * | 2020-12-25 | 2022-07-01 | 丰田自动车株式会社 | Control device, task system, control method, and storage medium |

Families Citing this family (4)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US10866783B2 (en) * | 2011-08-21 | 2020-12-15 | Transenterix Europe S.A.R.L. | Vocally activated surgical control system |

| JP7339124B2 (ja) | 2019-02-26 | 2023-09-05 | 株式会社Preferred Networks | Control device, system, and control method |

| US20220152825A1 (en) * | 2020-11-13 | 2022-05-19 | Armstrong Robotics, Inc. | Automated manipulation of objects using a vision-based method for determining collision-free motion planning |

| JP7548095B2 (ja) * | 2021-03-26 | 2024-09-10 | トヨタ自動車株式会社 | Vehicle charging system and control method for a vehicle charging system |

Citations (2)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP2006095635A (ja) * | 2004-09-29 | 2006-04-13 | Honda Motor Co Ltd | Control device for a mobile robot |

| WO2017098713A1 (ja) * | 2015-12-07 | 2017-06-15 | 川崎重工業株式会社 | Robot system and method of operating the same |

Family Cites Families (7)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP2015181778A (ja) * | 2014-03-25 | 2015-10-22 | シャープ株式会社 | Self-propelled vacuum cleaner |

| JP2016095635A (ja) * | 2014-11-13 | 2016-05-26 | パナソニックヘルスケアホールディングス株式会社 | Aerial touch panel and surgical simulator display system including the same |

| JP2016153999A (ja) * | 2015-02-17 | 2016-08-25 | 横河電機株式会社 | Management device, management system, management method, management program, and recording medium |

| KR102521493B1 (ko) * | 2015-10-27 | 2023-04-14 | 삼성전자주식회사 | Cleaning robot and control method thereof |

| JP2017151517A (ja) * | 2016-02-22 | 2017-08-31 | 富士ゼロックス株式会社 | Robot control system |

| US10074359B2 (en) * | 2016-11-01 | 2018-09-11 | Google Llc | Dynamic text-to-speech provisioning |

| KR20180124564A (ko) * | 2017-05-12 | 2018-11-21 | 네이버 주식회사 | User command processing method and system for adjusting the output volume of a sound to be output based on the input volume of a received voice input |

- 2019

  - 2019-01-30: JP2019014743A (JP), published as JP2020121375A (ja); status: active, pending

  - 2019-12-19: PCT/JP2019/049803 (WO), published as WO2020158231A1 (ja); status: not active, ceased

- 2021

  - 2021-07-27: US17/443,548 (US), published as US20210354300A1 (en); status: not active, abandoned


Also Published As

| Publication number | Publication date |

|---|---|

| US20210354300A1 (en) | 2021-11-18 |

| JP2020121375A (ja) | 2020-08-13 |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| WO2020158231A1 (ja) | | Controller, controlled apparatus, control method, and storage medium |

| US11430428B2 (en) | | Method, apparatus, and storage medium for segmenting sentences for speech recognition |

| CN111868824B (zh) | | Device and method for context-aware control |

| JP5075664B2 (ja) | | Spoken dialogue device and support method |

| US11656837B2 (en) | | Electronic device for controlling sound and operation method therefor |

| JP6635049B2 (ja) | | Information processing device, information processing method, and program |

| US20210216134A1 (en) | | Information processing apparatus and method, and program |

| JP4204541B2 (ja) | | Interactive robot, speech recognition method for interactive robot, and speech recognition program for interactive robot |

| CN113168227B (zh) | | Method for performing a function of an electronic device, and electronic device using the same |

| US20210366506A1 (en) | | Artificial intelligence device capable of controlling operation of another device and method of operating the same |

| TWI871343B (zh) | | Activating speech recognition |

| US20190019513A1 (en) | | Information processing device, information processing method, and program |

| KR102853507B1 (ko) | | Acoustic model conditioning on sound characteristics |

| US20180033424A1 (en) | | Voice-controlled assistant volume control |

| JP6844608B2 (ja) | | Speech processing device and speech processing method |

| US12106754B2 (en) | | Systems and operation methods for device selection using ambient noise |

| US11769490B2 (en) | | Electronic apparatus and control method thereof |

| JP2011071702A (ja) | | Sound pickup processing device, sound pickup processing method, and program |

| JP2005352154A (ja) | | Emotional state reactive operation device |

| CN118354237A (zh) | | Wake-up method, apparatus, device, and storage medium for MEMS earphones |

| US12051412B2 (en) | | Control device, system, and control method |

| JPH06236196A (ja) | | Speech recognition method and apparatus |

| KR20210015234A (ko) | | Electronic device and method for controlling execution of a function according to a voice command thereof |

| JP2018045192A (ja) | | Spoken dialogue device and speech volume adjustment method |

| CN118447854A (zh) | | Construction noise prediction method based on voiceprint recognition |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| | 121 | EP: the EPO has been informed by WIPO that EP was designated in this application | Ref document number: 19913271; Country of ref document: EP; Kind code of ref document: A1 |

| | NENP | Non-entry into the national phase | Ref country code: DE |

| | 122 | EP: PCT application non-entry in European phase | Ref document number: 19913271; Country of ref document: EP; Kind code of ref document: A1 |