WO2015104745A1 - Processing apparatus, processing method, and program - Google Patents

Processing apparatus, processing method, and program

- Publication number

- WO2015104745A1 (application PCT/JP2014/005893; JP2014005893W)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- shape

- processing

- deformation

- image

- coordinate system

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Classifications

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B5/00—Measuring for diagnostic purposes; Identification of persons

- A61B5/05—Detecting, measuring or recording for diagnosis by means of electric currents or magnetic fields; Measuring using microwaves or radio waves

- A61B5/055—Detecting, measuring or recording for diagnosis by means of electric currents or magnetic fields; Measuring using microwaves or radio waves involving electronic [EMR] or nuclear [NMR] magnetic resonance, e.g. magnetic resonance imaging

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B5/00—Measuring for diagnostic purposes; Identification of persons

- A61B5/43—Detecting, measuring or recording for evaluating the reproductive systems

- A61B5/4306—Detecting, measuring or recording for evaluating the reproductive systems for evaluating the female reproductive systems, e.g. gynaecological evaluations

- A61B5/4312—Breast evaluation or disorder diagnosis

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B6/00—Apparatus or devices for radiation diagnosis; Apparatus or devices for radiation diagnosis combined with radiation therapy equipment

- A61B6/50—Apparatus or devices for radiation diagnosis; Apparatus or devices for radiation diagnosis combined with radiation therapy equipment specially adapted for specific body parts; specially adapted for specific clinical applications

- A61B6/502—Apparatus or devices for radiation diagnosis; Apparatus or devices for radiation diagnosis combined with radiation therapy equipment specially adapted for specific body parts; specially adapted for specific clinical applications for diagnosis of breast, i.e. mammography

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B6/00—Apparatus or devices for radiation diagnosis; Apparatus or devices for radiation diagnosis combined with radiation therapy equipment

- A61B6/52—Devices using data or image processing specially adapted for radiation diagnosis

- A61B6/5211—Devices using data or image processing specially adapted for radiation diagnosis involving processing of medical diagnostic data

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B8/00—Diagnosis using ultrasonic, sonic or infrasonic waves

- A61B8/08—Clinical applications

- A61B8/0825—Clinical applications for diagnosis of the breast, e.g. mammography

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B8/00—Diagnosis using ultrasonic, sonic or infrasonic waves

- A61B8/48—Diagnostic techniques

- A61B8/483—Diagnostic techniques involving the acquisition of a 3D volume of data

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B8/00—Diagnosis using ultrasonic, sonic or infrasonic waves

- A61B8/52—Devices using data or image processing specially adapted for diagnosis using ultrasonic, sonic or infrasonic waves

- A61B8/5207—Devices using data or image processing specially adapted for diagnosis using ultrasonic, sonic or infrasonic waves involving processing of raw data to produce diagnostic data, e.g. for generating an image

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F18/00—Pattern recognition

- G06F18/20—Analysing

- G06F18/22—Matching criteria, e.g. proximity measures

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/0002—Inspection of images, e.g. flaw detection

- G06T7/0012—Biomedical image inspection

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/10—Segmentation; Edge detection

- G06T7/13—Edge detection

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/30—Determination of transform parameters for the alignment of images, i.e. image registration

- G06T7/33—Determination of transform parameters for the alignment of images, i.e. image registration using feature-based methods

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/60—Analysis of geometric attributes

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/70—Determining position or orientation of objects or cameras

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V40/00—Recognition of biometric, human-related or animal-related patterns in image or video data

- G06V40/10—Human or animal bodies, e.g. vehicle occupants or pedestrians; Body parts, e.g. hands

- G06V40/103—Static body considered as a whole, e.g. static pedestrian or occupant recognition

-

- G—PHYSICS

- G16—INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR SPECIFIC APPLICATION FIELDS

- G16H—HEALTHCARE INFORMATICS, i.e. INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR THE HANDLING OR PROCESSING OF MEDICAL OR HEALTHCARE DATA

- G16H50/00—ICT specially adapted for medical diagnosis, medical simulation or medical data mining; ICT specially adapted for detecting, monitoring or modelling epidemics or pandemics

- G16H50/20—ICT specially adapted for medical diagnosis, medical simulation or medical data mining; ICT specially adapted for detecting, monitoring or modelling epidemics or pandemics for computer-aided diagnosis, e.g. based on medical expert systems

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B2576/00—Medical imaging apparatus involving image processing or analysis

- A61B2576/02—Medical imaging apparatus involving image processing or analysis specially adapted for a particular organ or body part

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/10—Image acquisition modality

- G06T2207/10116—X-ray image

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/30—Subject of image; Context of image processing

- G06T2207/30004—Biomedical image processing

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/30—Subject of image; Context of image processing

- G06T2207/30004—Biomedical image processing

- G06T2207/30068—Mammography; Breast

Definitions

- The present invention relates to a processing apparatus, a processing method, and a program for processing medical images captured by various medical image acquisition apparatuses (modalities), such as a nuclear magnetic resonance imaging apparatus (MRI), an X-ray computed tomography apparatus (X-ray CT), and an ultrasonic diagnostic imaging apparatus (US).

- A corresponding site may be identified on an image of another modality, and a diagnosis made by comparing the identified sites.

- When imaging positions differ between modalities, the shape of the subject at the time of imaging also differs, which makes it difficult to identify the corresponding site. Attempts have therefore been made to estimate the deformation of the subject between the two images (that is, to perform deformable alignment between the images). This makes it possible to estimate the position of the corresponding site from the position information of the target site, or to generate an image in which one image is deformed to have the same shape as the other.

- Non-Patent Document 1 discloses a technique for facilitating comparison of shapes before and after deformation of an object by normalizing the shape of the object with deformation. Specifically, a method is disclosed in which a geodesic distance matrix of the surface shape of an object is calculated and normalized by a multidimensional scaling method using the matrix. According to this method, it becomes possible to normalize the shape before and after the deformation to a form that can be directly compared with respect to the deformation that does not change the geodesic distance of the object surface. Thereby, comparison between deformation shapes of an object accompanied by deformation, object recognition based on the shape, and the like can be easily performed.

- If the method described in Non-Patent Document 1 is used, complicated shapes that undergo deformation can be expected to be aligned relatively easily, because the shapes before and after the deformation can be normalized. However, when the shape of the object is relatively monotonous and there are few landmarks that can be associated between the deformed shapes, the posture of the object after normalization remains unstable.

- the present invention has been made in view of the above-described problems, and an object thereof is to provide a mechanism that can easily and stably normalize different shapes. It is another object of the present invention to provide a mechanism for performing alignment between shapes based on normalization with high accuracy.

- A processing apparatus has the following configuration. That is, it includes: acquisition means for acquiring, from an image of a target object, the contour of a target region of the target object and a reference point on the contour; calculation means for calculating, at an arbitrary position on the contour, the distance and the azimuth from the reference point; and normalization means for generating, based on the distance and the azimuth, normalization conversion information for converting the shape of the target region of the target object into a predetermined reference shape.

- According to the present invention, it is possible to provide a mechanism that can easily and stably normalize different shapes. Further, it is possible to provide a mechanism for performing alignment between shapes based on normalization with high accuracy.

- FIG. 1 is a diagram showing a functional configuration of a processing system according to the first embodiment.

- FIG. 2 is a diagram showing a device configuration of the processing system according to the first embodiment.

- FIG. 3 is a flowchart showing a processing procedure of the processing apparatus according to the first embodiment.

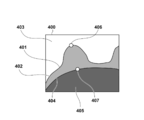

- FIG. 4 is a schematic diagram of a subject depicted on a prone position MRI image.

- FIG. 5 is a flowchart showing the processing procedure of step S320 according to the first embodiment.

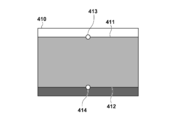

- FIG. 6A is a diagram for explaining a normalized coordinate system according to the first embodiment.

- FIG. 6B is a diagram for explaining a normalized coordinate system according to the first embodiment.

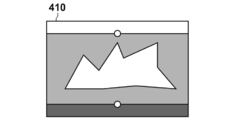

- FIG. 7A is a view for explaining image deformation processing according to the first embodiment.

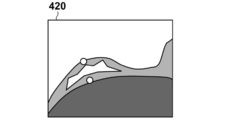

- FIG. 7B is a view for explaining image deformation processing according to the first embodiment.

- FIG. 7C is a view for explaining image deformation processing according to the first embodiment.

- FIG. 7D is a view for explaining image deformation processing according to the first embodiment.

- FIG. 8 is a diagram showing a functional configuration of the processing system according to the third embodiment.

- FIG. 9 is a flowchart showing the processing procedure of the learning phase of the processing device according to the third embodiment.

- FIG. 10 is a flowchart showing the processing procedure of step S580 of the processing apparatus according to the third embodiment.

- FIG. 11 is a flowchart showing the processing procedure of the deformation estimation phase of the processing device according to the third embodiment.

- FIG. 12 is a diagram showing a functional configuration of a processing system according to the fourth embodiment.

- FIG. 13 is a flowchart showing a processing procedure of the processing apparatus according to the fourth embodiment.

- FIG. 14 is a diagram showing a functional configuration of a processing system according to the fifth embodiment.

- FIG. 15 is a flowchart showing the processing procedure of the learning phase of the processing device according to the fifth embodiment.

- FIG. 16 is a flowchart showing the processing procedure of the deformation estimation phase of the processing device according to the fifth embodiment.

- FIG. 17 is a diagram showing a functional configuration of a processing system according to the sixth embodiment.

- FIG. 18 is a flowchart showing the processing procedure of the learning phase of the processing device according to the sixth embodiment.

- FIG. 19 is a flowchart showing a processing procedure of a deformation estimation phase of the processing device according to the sixth embodiment.

- When medical images obtained by imaging the breast of the subject as a target region in two different body positions are acquired, the processing apparatus obtains a normalization conversion (normalization conversion information) that converts each medical image into a reference shape, and performs deformable alignment between the images through the obtained information.

- This normalization conversion is a coordinate conversion that maps the breasts of the subject, imaged in different deformed states due to the difference in body position, into a space in which they substantially match anatomically.

- the following are known biomechanically as characteristics related to the deformation of the breast between the prone position and the supine position.

- It is possible to substantially absorb the change in position caused by the deformation generated between the prone position and the supine position, and to convert both into an anatomically common space.

- By performing deformation position alignment between images through this conversion it becomes possible to position the original image with higher accuracy than when directly deforming and aligning the original image.

- The contour of the breast of the subject is extracted from each of the acquired medical images, and a normalization conversion that coordinate-converts the contour into a rectangular shape, which is the reference shape, is calculated based on the reference point on the contour.

- FIG. 1 is a diagram showing a configuration of a processing system according to the present embodiment.

- the processing apparatus 100 includes an image acquisition unit 1000, an anatomical feature extraction unit 1020, a normalization unit 1040, an image deformation unit 1060, and an observation image generation unit 1080.

- the processing device 100 is connected to the data server 120 and the monitor 160.

- the MRI image capturing apparatus 110 is an apparatus that acquires an image obtained by nuclear magnetic resonance, that is, an MRI image, of information related to a three-dimensional region inside a subject that is a human body.

- the MRI image capturing apparatus 110 is connected to the data server 120 and transmits the acquired MRI image to the data server 120.

- The data server 120 is a device that holds the MRI images captured by the MRI image capturing apparatus 110 and transfers the held MRI images to the processing apparatus 100 in response to a command from the processing apparatus 100.

- the image acquisition unit 1000 captures the MRI image of the subject (target object) captured by the MRI image capturing apparatus 110 into the processing apparatus 100 via the data server 120.

- the anatomical feature extraction unit 1020 performs image processing on the MRI image captured by the image acquisition unit 1000 and extracts the anatomical features of the subject.

- the normalization unit 1040 calculates a conversion for converting (normalizing) the shape of the subject into a reference shape based on the anatomical feature of the subject extracted by the anatomical feature extraction unit 1020. Details regarding normalization will be described later.

- the image deformation unit 1060 performs alignment between the prone position and the supine position based on the conversion calculated by the normalization unit 1040, deforms the prone position MRI image, and generates a deformed image that matches the supine position MRI image.

- the observation image generation unit 1080 generates an observation image to be presented to the user from each of the MRI image captured by the image acquisition unit 1000 and the deformation image generated by the image deformation unit 1060. Then, the observation image is output to the monitor 160.

- the monitor 160 displays the observation image generated by the observation image generation unit 1080.

- FIG. 2 is a diagram showing an apparatus configuration of the processing system according to the present embodiment.

- the processing system of this embodiment includes a processing device 100, an MRI image capturing device 110, a data server 120, a monitor 160, a mouse 170, and a keyboard 180.

- the processing device 100 can be realized by, for example, a personal computer (PC).

- the processing device 100 includes a central processing unit (CPU) 211, a main memory 212, a magnetic disk 213, and a display memory 214.

- the CPU 211 mainly controls the operation of each component of the processing apparatus 100.

- the main memory 212 stores a control program executed by the CPU 211 and provides a work area when the CPU 211 executes the program.

- The magnetic disk 213 stores an operating system (OS), device drivers for peripheral devices, various application software including programs for performing the processing described later, and the like.

- the display memory 214 temporarily stores display data for the monitor 160.

- the monitor 160 is, for example, a CRT monitor or a liquid crystal monitor, and displays an image based on data from the display memory 214.

- the mouse 170 and the keyboard 180 are used for a pointing input by a user and an input of characters and commands, respectively.

- the above components are connected to each other via a common bus 218 so as to communicate with each other.

- FIG. 3 is a flowchart of processing executed by the processing apparatus 100 in the present embodiment.

- This processing is realized by the CPU 211 executing a program, stored in the main memory 212, that implements the function of each unit.

- the results of each process performed by the processing apparatus 100 described below are recorded by being stored in the main memory 212.

- each processing step shown in FIG. 3 will be described in detail following the procedure.

- Step S300 Acquire Prone MRI Image

- The image acquisition unit 1000 executes a process of importing into the processing apparatus 100, via the data server 120, an MRI image (prone position MRI image) in which the MRI image capturing apparatus 110 has imaged the subject's breast in the prone position.

- The prone position MRI image is three-dimensional volume data and is assumed to have a three-dimensional orthogonal coordinate system in which the direction from the foot side to the head side of the subject is the Z axis, the direction from the ventral side to the dorsal side is the Y axis, and the left direction of the subject is the X axis (such coordinate conversion is performed in advance).

- This coordinate system is referred to as the prone position MRI image coordinate system.

- The luminance value of the prone position MRI image is expressed as a scalar function $I_p(x)$ whose argument is the three-dimensional position $x$ in the prone position MRI image coordinate system.

- Step S310 Extract anatomical features from prone position MRI images

- The anatomical feature extraction unit 1020 processes the prone position MRI image acquired in step S300 and thereby executes a process of extracting the anatomical features of the subject in the prone position.

- The anatomical features are the nipple position, the body surface shape, the pectoralis major surface shape, and a reference position on the pectoralis major surface of the subject.

- FIG. 4 is a diagram for explaining anatomical features on the prone position MRI image.

- the actual prone position MRI image is a three-dimensional image.

- the prone position MRI image 400 includes an air region 403, a breast region 402, and an internal region 405.

- the body surface 401 is a set of positions at the boundary between the air region 403 and the breast region 402, and is a three-dimensional curved surface.

- the pectoralis major surface 404 is a set of boundaries between the breast region 402 and the internal region 405, and is a three-dimensional curved surface.

- the anatomical feature extraction unit 1020 detects the body surface 401 by performing image processing on the prone position MRI image 400 using a known method such as threshold processing or edge detection.

- the detection of the body surface 401 is not required to detect the entire body surface of the subject depicted in the prone position MRI image, and only the body surface related to the breast region and its surrounding region may be detected.

- For example, the center position of the breast region in the prone position MRI image is acquired by user input using the mouse 170 or the keyboard 180, a predetermined range around that center position is set as the processing target, and the body surface 401 is detected within it by the above method.
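The threshold-based detection of the body surface described above can be sketched as follows. This is a minimal illustration, not the method of the embodiment: the function name `detect_body_surface`, the `volume[z][y][x]` nested-list layout, the threshold value, and the use of 6-connected neighbours are all assumptions introduced here.

```python
def detect_body_surface(volume, threshold):
    """Return (x, y, z) tissue voxels that touch at least one air voxel.

    volume[z][y][x] holds intensities; voxels below `threshold` are
    treated as air (an assumption made for this sketch).
    """
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])

    def is_tissue(x, y, z):
        return volume[z][y][x] >= threshold

    # 6-connected neighbourhood (hypothetical choice).
    neighbours = [(1, 0, 0), (-1, 0, 0), (0, 1, 0),
                  (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    surface = []
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                if not is_tissue(x, y, z):
                    continue
                for dx, dy, dz in neighbours:
                    u, v, w = x + dx, y + dy, z + dz
                    inside = 0 <= u < nx and 0 <= v < ny and 0 <= w < nz
                    if inside and not is_tissue(u, v, w):
                        surface.append((x, y, z))
                        break
    return surface
```

Restricting the loops to a box around a user-supplied breast center would correspond to limiting the processing target to a predetermined range.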

- The body surface shape, which is the set of positions on the boundary between the air region 403 and the breast region 402, is expressed as $s_{p,surface,i}$ ($1 \le i \le N_{p,surface}$) in this embodiment.

- $N_{p,surface}$ is the number of positions (points) constituting the body surface shape.

- The pectoralis major surface shape is also detected by image processing. In the present embodiment, it is expressed as $s_{p,pectral,i}$ ($1 \le i \le N_{p,pectral}$).

- $N_{p,pectral}$ is the number of positions (points) constituting the pectoralis major surface shape.

- the body surface shape and the pectoralis major surface shape are each accompanied by connection information between points constituting these.

- the body surface shape and the pectoralis major surface shape have information on a surface created by the point group in addition to information on a plurality of points (point group) representing the position.

- the anatomical feature extraction unit 1020 detects the nipple position.

- the nipple position can be detected by further processing the body surface shape detected by the above method. For example, the local curvature of the body surface shape can be calculated, and the position where the curvature is maximum can be detected as the nipple position.

- Alternatively, the position having the smallest Y-axis coordinate value (the most ventral position) in the MRI image coordinate system can be selected from all the positions constituting the body surface shape and set as the nipple position. It is also possible to detect the nipple position by performing image processing on the prone position MRI image.
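The second option above (selecting the most ventral surface point) reduces to a minimum search over the Y coordinate. The sketch below assumes the surface points are `(x, y, z)` tuples in the MRI image coordinate system; `detect_nipple` is a hypothetical name.

```python
def detect_nipple(surface_points):
    """Pick the surface point with the smallest Y value (most ventral)."""
    return min(surface_points, key=lambda p: p[1])


surface = [(0.0, 5.0, 0.0), (1.0, 2.0, 3.0), (2.0, 4.0, 1.0)]
nipple = detect_nipple(surface)
```

The curvature-based alternative would need the connection information between points and is not shown here.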

- The detected nipple position is expressed as a three-dimensional coordinate value $x_{p,surface}$.

- The anatomical feature extraction unit 1020 detects a reference position on the pectoralis major surface. This process is executed, for example, by selecting the position closest to the nipple position among all positions constituting the pectoralis major surface shape.

- The detected three-dimensional coordinate value of the reference position on the pectoralis major surface is expressed as $x_{p,pectral}$.

- The anatomical feature extraction unit 1020 then executes a coordinate transformation of the prone position MRI image coordinate system so that the nipple position $x_{p,surface}$ detected as described above becomes the origin. Specifically, it translates $s_{p,surface,i}$, $s_{p,pectral,i}$, $x_{p,surface}$, and $x_{p,pectral}$ acquired in the above processing by $-x_{p,surface}$. With the above processing, the anatomical features are extracted in step S310.
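The reference-position selection and the nipple-centered translation described above can be sketched as follows. The helper names `closest_point` and `translate` are hypothetical, and Euclidean distance is assumed for "closest", which the text does not specify.

```python
import math


def closest_point(points, target):
    """Return the point nearest to `target` (Euclidean distance assumed)."""
    return min(points, key=lambda p: math.dist(p, target))


def translate(points, offset):
    """Subtract `offset` from every point, moving `offset` to the origin."""
    return [tuple(c - o for c, o in zip(p, offset)) for p in points]


nipple = (1.0, 2.0, 3.0)
pectoral = [(1.0, 8.0, 3.0), (4.0, 9.0, 3.0)]
reference = closest_point(pectoral, nipple)   # reference position on the muscle surface
pectoral_centered = translate(pectoral, nipple)
```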

- the processing apparatus 100 may display the prone position MRI image on the monitor 160 so that the user can input information on anatomical features to the processing apparatus 100 using the mouse 170 or the keyboard 180. Further, the anatomical features extracted by the image processing may be corrected / changed by the user using the mouse 170 or the keyboard 180. Further, the processing apparatus 100 may extract a part of the anatomical features by image processing and acquire the other by input by the user. At that time, a part of the anatomical features extracted by the image processing may be displayed on the monitor 160.

- For example, the processing apparatus 100 extracts the body surface shape and the pectoralis major surface shape by image processing and displays the results on the monitor 160. The user may then input the nipple position and the reference position on the pectoralis major surface to the processing apparatus 100 using the mouse 170 or the keyboard 180 while referring to the displayed body surface shape or pectoralis major surface shape.

- Step S320 Calculate transformation to the prone position normalized coordinate system

- Based on the anatomical features in the prone position extracted in step S310, the normalization unit 1040 derives a normalization conversion that converts the shape of the subject in the prone position into the reference shape. Specifically, the normalization unit 1040 executes a process of calculating a coordinate conversion function between the prone position MRI image coordinate system and the prone position normalized coordinate system, as information representing the conversion from the former to the latter. This conversion places each of the body surface and the pectoralis major surface in the MRI image coordinate system on a predetermined plane in the prone position normalized coordinate system.

- From the viewpoint of topology, this conversion preserves any structure in the breast region as far as possible before and after the conversion.

- The specific processing procedure executed in step S320 to calculate the coordinate conversion function will be described in detail with reference to the flowchart of FIG. 5.

- Step S3200 Calculate the geodesic distance of the body surface

- Based on the anatomical features extracted in step S310, the normalization unit 1040 executes a process of calculating, at each position constituting the body surface shape of the subject in the prone position, the geodesic distance with the nipple position as the reference. That is, among the positions constituting the body surface shape, the geodesic distance at the nipple position is set to 0, and the geodesic distance from the nipple is calculated at every other position. Any known method may be used to calculate the geodesic distance.

- For example, the Dijkstra method can be used to calculate the geodesic distance.

- The above processing yields the geodesic distance $d_{p,surface,i}$ ($1 \le i \le N_{p,surface}$) of each position constituting the body surface shape.

- The subscript $i$ is the same as that of the body surface shape $s_{p,surface,i}$ ($1 \le i \le N_{p,surface}$); that is, $d_{p,surface,i}$ is the geodesic distance at the position $s_{p,surface,i}$ of the $i$-th point.
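As an illustration of the Dijkstra-based option mentioned above, the sketch below computes shortest path lengths along mesh edges from a source vertex such as the nipple. The data layout (a list of `(x, y, z)` points plus a list of index pairs as connection information) and the name `geodesic_distances` are assumptions; path lengths along edges only approximate the true geodesic distance on the surface.

```python
import heapq
import math


def geodesic_distances(points, edges, source):
    """Dijkstra shortest-path lengths along mesh edges from `source`."""
    adjacency = {i: [] for i in range(len(points))}
    for a, b in edges:
        w = math.dist(points[a], points[b])   # edge length as weight
        adjacency[a].append((b, w))
        adjacency[b].append((a, w))

    dist = [math.inf] * len(points)
    dist[source] = 0.0                         # distance at the reference point is 0
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue                           # stale queue entry
        for v, w in adjacency[u]:
            nd = d + w
            if nd < dist[v]:
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist


points = [(0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (3.0, 4.0, 0.0)]
edges = [(0, 1), (1, 2)]
d = geodesic_distances(points, edges, source=0)
```

In this toy example the distance to the last vertex follows the two edges rather than the straight chord, which is the behaviour that distinguishes a geodesic distance from a Euclidean one.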

- In step S3210, based on the anatomical features extracted in step S310, the normalization unit 1040 executes a process of calculating, at each position constituting the body surface shape of the subject in the prone position, the azimuth with the nipple position as the reference.

- The azimuth can be, for example, the azimuth on the XZ plane in the MRI image coordinate system.

- In this case, the azimuth $a_{p,surface,i}$ [rad] can be calculated by the calculation shown in Equation 1, using the X coordinate value $x_i$ and the Z coordinate value $z_i$ of each position.

- The subscript $i$ is the same as that of the body surface shape $s_{p,surface,i}$ ($1 \le i \le N_{p,surface}$); that is, $a_{p,surface,i}$ is the azimuth at the position $s_{p,surface,i}$ of the $i$-th point.
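Equation 1 is not reproduced in this text, so the following is only one plausible reading of "azimuth on the XZ plane": the angle of the point's (X, Z) coordinates measured from the X axis, with the nipple already at the origin. The use of `atan2`, the angle convention, and the name `azimuth_xz` are assumptions.

```python
import math


def azimuth_xz(point):
    """Angle [rad] of a nipple-centered point projected onto the XZ plane.

    Assumed convention: measured from the +X axis toward the +Z axis.
    """
    x, _, z = point
    return math.atan2(z, x)


a_left = azimuth_xz((1.0, 5.0, 0.0))    # on the +X axis
a_head = azimuth_xz((0.0, -2.0, 1.0))   # on the +Z axis
```

`atan2` is used here rather than a plain arctangent of a ratio so that all four quadrants are distinguished and points with `x == 0` are handled.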

- The method of calculating the azimuth is not restricted to the above; for example, it can be calculated as follows. A vector connecting the nipple position and the reference position on the pectoralis major surface is taken as the Y axis, and the body axis direction of the subject (the direction from the foot side to the head side) is taken as the Z axis. If the Z axis is not orthogonal to the Y axis, however, it must be corrected. Equation 1 may then be calculated after setting the direction of the cross product of the Y axis and the Z axis as the X axis.

- This has the effect that the azimuth can be calculated with coordinate axes based on the posture of the subject.

- Step S3220 Calculate the geodesic distance of the pectoralis major surface

- Based on the anatomical features extracted in step S310, the normalization unit 1040 executes a process of calculating, at each position $s_{p,pectral,i}$ constituting the pectoralis major surface shape of the subject in the prone position, the geodesic distance $d_{p,pectral,i}$ ($1 \le i \le N_{p,pectral}$) with the reference position $x_{p,pectral}$ on the pectoralis major surface as the reference. This processing step is executed by applying to the pectoralis major surface the same processing as step S3200 for the body surface.

- Step S3230 Calculate the orientation of the pectoralis major plane

- Based on the anatomical features extracted in step S310, the normalization unit 1040 executes a process of calculating, at each position $s_{p,pectral,i}$ constituting the pectoralis major surface shape of the subject in the prone position, the azimuth $a_{p,pectral,i}$ ($1 \le i \le N_{p,pectral}$) with the reference position $x_{p,pectral}$ on the pectoralis major surface as the reference.

- This processing step is executed by applying to the pectoralis major surface the same processing as step S3210 for the body surface.

- Step S3240 Transform the body surface into a normalized coordinate system

- In this processing step, the normalization unit 1040 obtains, based on the geodesic distances and orientations on the body surface calculated in steps S3200 and S3210, a transformation that maps the body surface shape of the subject in the prone position onto a predetermined plane in the prone position normalized coordinate system. Specifically, for each position $s_{p,surface,i}$ constituting the body surface shape in the prone position MRI image coordinate system, the normalization unit 1040 calculates the corresponding position $s'_{p,surface,i}$ in the prone position normalized coordinate system.

- FIG. 6A is a schematic diagram of the breast in the prone position MRI image 400.

- FIG. 6B is a schematic diagram of the breast in the prone position normalization space expressed in the prone position normalized coordinate system.

- FIG. 6A and FIG. 6B are drawn as two-dimensional images for convenience of illustration on paper, but the actual processing takes place in three-dimensional space.

- That is, the body surface 401 appears as a curve in the figure but is a curved surface in the actual processing.

- Similarly, the normalized body surface 411 appears as a straight line in the figure but is a plane in the actual processing. In this processing step, as illustrated in these figures, the normalization unit 1040 transforms the body surface 401, whose contour is a curved surface, into the normalized body surface 411, which is the upper face of a rectangular shape.

- The position of the normalized nipple 413 is defined in advance as a predetermined position.

- In this embodiment, the position of the normalized nipple 413 is defined as the origin of the prone position normalized coordinate system.

- In this embodiment, the body surface 401 is a set of $N_{p,surface}$ points denoted $s_{p,surface,i}$.

- For each of these points, the geodesic distance $d_{p,surface,i}$ and the orientation $a_{p,surface,i}$ have been calculated by the processing of steps S3200 and S3210.

- The normalization unit 1040 calculates the corresponding position in the prone position normalized coordinate system from these results. Specifically, the normalization unit 1040 calculates the coordinate values by Equations 2 to 4.

- The positions on the normalized body surface in the prone position normalized coordinate system calculated by the above processing are denoted $s'_{p,surface,i}$ ($1 \le i \le N_{p,surface}$).

- Step S3250 Transform the pectoralis muscle surface into a normalized coordinate system

- In this processing step, the normalization unit 1040 obtains, based on the geodesic distances and orientations on the pectoral muscle surface calculated in steps S3220 and S3230, a transformation that maps the shape of the pectoralis major muscle surface of the subject in the prone position onto a predetermined plane in the prone position normalized coordinate system. Specifically, for each position $s_{p,pectral,i}$ constituting the pectoralis major surface shape in the prone position MRI image coordinate system, the normalization unit 1040 calculates the corresponding position $s'_{p,pectral,i}$ in the prone position normalized coordinate system.

- The normalization unit 1040 processes the pectoralis major muscle surface in the same manner as step S3240 processes the body surface. That is, the normalization unit 1040 transforms the pectoralis major muscle surface 404, whose contour is a curved surface as shown in FIG. 6A, into the normalized pectoralis major muscle surface 412, which is the lower face of the rectangular shape.

- The position of the reference point 414 of the normalized pectoral muscle surface is defined in advance as a predetermined position.

- In this embodiment, the reference point 414 of the normalized pectoral muscle surface is defined as the coordinate value (0, 100, 0) of the prone position normalized coordinate system.

- Specifically, the normalization unit 1040 evaluates Equations 5 to 7 for all points $s_{p,pectral,i}$ on the pectoralis major muscle surface 404. That is, the normalization unit 1040 transforms all points onto the same XZ plane as the reference point 414 of the normalized pectoralis major muscle surface. In doing so, for every point, the normalization unit 1040 makes the distance and orientation relative to the reference point 414 match the geodesic distance $d_{p,pectral,i}$ and the orientation $a_{p,pectral,i}$ measured from the reference point of the pectoral muscle surface in the prone position MRI image coordinate system.
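
- The placement of surface points on a normalized plane can be sketched as below. Equations 2 to 7 are not reproduced in this text, so the sketch rests on stated assumptions: the orientation is taken to be an angle in radians measured in the XZ plane from the X axis, and a point is placed at that angle and at its geodesic distance from the reference point. The function name and the `plane_y` parameter are hypothetical; `plane_y = 0` corresponds to the body surface plane through the normalized nipple (the origin) and `plane_y = 100` to the plane through the reference point (0, 100, 0).

```python
import math


def to_normalized_plane(geodesic_distance, azimuth, plane_y):
    """Map (distance, azimuth) on a surface to a point on the plane y = plane_y.

    Assumption of this sketch: azimuth is in radians, measured in the XZ
    plane from the X axis, so the point keeps its distance and direction
    relative to the reference point (0, plane_y, 0).
    """
    x = geodesic_distance * math.cos(azimuth)
    z = geodesic_distance * math.sin(azimuth)
    return (x, plane_y, z)
```

- With this mapping the reference point itself (distance 0) lands at (0, `plane_y`, 0), and every other point keeps its geodesic distance and orientation relative to it, which is the property the step requires.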

- Step S3260 Calculate Normalization Deformation

- In this processing step, the normalization unit 1040 calculates a coordinate transformation function (deformation field) between the two coordinate systems as information representing the transformation from the prone position MRI image coordinate system to the prone position normalized coordinate system. That is, the normalization unit 1040 spatially interpolates the set of discrete coordinate transformations of the body surface and the pectoral muscle surface obtained in steps S3240 and S3250, and calculates a dense transformation from the prone position MRI image coordinate system to the prone position normalized coordinate system.

- Specifically, this processing can be realized by a known interpolation method using radial basis functions, B-splines, or the like.
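
- A minimal sketch of such an interpolation with radial basis functions is given below. It is an illustration under assumptions, not the implementation of the embodiment: a Gaussian kernel with a hypothetical width `sigma` is used, the displacements (rather than the positions) are interpolated, and the small linear system is solved by plain Gaussian elimination to keep the example self-contained.

```python
import math


def _solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def fit_rbf(src, dst, sigma=50.0):
    """Return a dense mapping phi(x) with phi(src[i]) == dst[i].

    src, dst: lists of distinct (x, y, z) points, e.g. surface points in
    the MRI image coordinate system and in the normalized coordinate system.
    """
    def kernel(p, q):
        return math.exp(-(math.dist(p, q) / sigma) ** 2)

    K = [[kernel(p, q) for q in src] for p in src]
    # One weight vector per coordinate axis, fitted to the displacements.
    weights = [_solve(K, [d[ax] - s[ax] for s, d in zip(src, dst)])
               for ax in range(3)]

    def phi(x):
        k = [kernel(x, q) for q in src]
        return tuple(x[ax] + sum(w * kv for w, kv in zip(weights[ax], k))
                     for ax in range(3))

    return phi
```

- Interpolating displacements makes the mapping fall back to the identity far from the control points; other kernels (or B-splines, as the text notes) are equally possible.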

- The transformation function from the prone position MRI image coordinate system to the prone position normalized coordinate system calculated in this processing step is denoted $\phi_p(x)$ in this embodiment.

- $\phi_p(x)$ is a function that takes a position coordinate value in the prone position MRI image coordinate system as its argument and returns the corresponding position coordinate value in the prone position normalized coordinate system.

- In the same way as for $\phi_p(x)$, the normalization unit 1040 also calculates the inverse function, which takes a position coordinate value in the prone position normalized coordinate system as its argument and returns the corresponding position in the prone position MRI image coordinate system.

- This function is denoted $\phi_p^{-1}(x)$.

- $\phi_p^{-1}(x)$ is defined over a predetermined rectangular region in the prone position normalized coordinate system.

- The rectangular region is, for example, a rectangular region that contains all of $s'_{p,surface,i}$ and $s'_{p,pectral,i}$.

- (Equation 8) $s'_{p,surface,i} \approx \phi_p(s_{p,surface,i})$; (Equation 9) $s'_{p,pectral,i} \approx \phi_p(s_{p,pectral,i})$; (Equation 10) $s_{p,surface,i} \approx \phi_p^{-1}(s'_{p,surface,i})$; (Equation 11) $s_{p,pectral,i} \approx \phi_p^{-1}(s'_{p,pectral,i})$

- Step S340 Acquisition of Supine Position MRI Image

- In this processing step, the image acquisition unit 1000 loads an MRI image of the subject's breast captured in the supine position (the supine position MRI image) from the data server 120 into the processing apparatus 100. Because this process follows the same procedure as step S300 for the prone position MRI image, a detailed description is omitted.

- The acquired supine position MRI image is three-dimensional volume data in the supine position MRI image coordinate system.

- Step S350 Extract anatomical features from the supine position MRI image

- In this processing step, the anatomical feature extraction unit 1020 extracts the anatomical features of the subject in the supine position by processing the supine position MRI image acquired in step S340. Because this process applies to the supine position MRI image the same processing that step S310 applies to the prone position MRI image, a detailed description is omitted.

- The body surface shape in the supine position extracted in this processing step is denoted $s_{s,surface,i}$ ($1 \le i \le N_{s,surface}$), the pectoral muscle surface shape $s_{s,pectral,i}$ ($1 \le i \le N_{s,pectral}$), the nipple position $x_{s,surface}$, and the pectoral muscle surface reference point $x_{s,pectral}$.

- Step S360 Calculation of conversion to the supine position normalized coordinate system

- In this processing step, the normalization unit 1040 derives, based on the anatomical features in the supine position extracted in step S350, a normalization transformation that maps the shape of the subject in the supine position to the reference shape.

- Specifically, the normalization unit 1040 calculates a coordinate transformation function between the two coordinate systems as information representing the transformation from the supine position MRI image coordinate system to the supine position normalized coordinate system. Because this process applies to the supine anatomical features the same procedure that step S320 applies to the prone anatomical features, a detailed description is omitted.

- The transformation function from the supine position MRI image coordinate system to the supine position normalized coordinate system obtained in this processing step is denoted $\phi_s(x)$.

- The transformation function from the supine position normalized coordinate system to the supine position MRI image coordinate system is denoted $\phi_s^{-1}(x)$.

- Step S380 Deformation of MRI Image

- In this processing step, the image deformation unit 1060 generates a deformed image in which the prone position MRI image is deformed into the supine position, based on the processing results of steps S320 and S360. Specifically, for every voxel (each element of the volume data) constituting the prone position MRI image, the image deformation unit 1060 transforms the voxel position $x_p$ by Equation 12 to obtain the transformed position $x_d$.

- (Equation 12) $x_d = \phi_s^{-1}[\phi_{ps}\{\phi_p(x_p)\}]$

- The image deformation unit 1060 then generates volume data having the luminance value $I_p(x_p)$ at the transformed position $x_d$.

- The function $\phi_{ps}(x)$ is an arbitrary transformation function between the prone position normalized coordinate system and the supine position normalized coordinate system; here it is assumed to be the identity function shown in Equation 13.

- The domain of the transformation function $\phi_{ps}(x)$ is the same rectangular region as that of $\phi_p^{-1}(x)$.

- (Equation 13) $x = \phi_{ps}(x)$

- The volume data generated by the above processing is denoted as the deformed MRI image $I_d(x)$.

- (Equation 14) $I_d(x) = I_p[\phi_p^{-1}\{\phi_{ps}^{-1}(\phi_s(x))\}]$

- The calculation of Equation 12 executed in this processing step means the following: the position $x_p$ in the prone position MRI image coordinate system is transformed into the prone position normalized coordinate system, the prone position normalized coordinate system and the supine position normalized coordinate system are treated as identical, and the resulting coordinate value is then transformed into the supine position MRI image coordinate system.

- In other words, it is assumed that the difference in shape between the prone position and the supine position is cancelled by the respective normalizations (normalization maps anatomically identical points to approximately the same coordinates in the normalized coordinate systems). Under that assumption, the transformation between the prone position MRI image coordinate system and the supine position MRI image coordinate system is obtained (deformable registration is performed).
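
- The chain of transformations described above can be sketched as a plain function composition. The names `compose_prone_to_supine`, `identity`, and `deform_samples` are hypothetical, and the transforms passed in are stand-ins for the normalization functions obtained in steps S320 and S360.

```python
def identity(x):
    """Identity mapping between the two normalized coordinate systems."""
    return x


def compose_prone_to_supine(phi_p, phi_ps, phi_s_inv):
    """Return the mapping x_p -> x_d = phi_s_inv(phi_ps(phi_p(x_p)))."""
    def transform(x_p):
        return phi_s_inv(phi_ps(phi_p(x_p)))
    return transform


def deform_samples(samples, transform):
    """Carry each (position, luminance) sample to its transformed position."""
    return [(transform(pos), value) for pos, value in samples]


# Toy stand-ins for the two normalization transforms (assumptions only).
prone_to_norm = lambda x: (x[0] - 1.0, x[1], x[2])
norm_to_supine = lambda x: (2.0 * x[0], x[1], x[2])

to_supine = compose_prone_to_supine(prone_to_norm, identity, norm_to_supine)
deformed = deform_samples([((3.0, 0.0, 0.0), 120)], to_supine)
```

- In practice a dense image is usually resampled in the opposite direction, evaluating the inverse chain at every voxel of the output grid, which avoids holes in the deformed volume.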

- $\phi_{ps}(x)$ may also be a deformation function that increases the inter-image similarity between the deformed MRI image and the supine position MRI image.

- For example, $\phi_{ps}(x)$ can be expressed using FFD (Free Form Deformation), one of the typical methods of nonlinear coordinate transformation. In this case, the FFD deformation parameters are optimized so as to maximize the inter-image similarity shown in Equation 15.

- Here, $I_s(x)$ is the supine position MRI image.

- $\Omega$ is the breast region in the supine position MRI image coordinate system.

- The FFD deformation parameters are optimized by a well-known nonlinear optimization method such as the steepest descent method.
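
- As an illustration of the steepest descent method mentioned above, the sketch below minimizes a generic cost function using a finite-difference gradient. The function name, step size, and iteration count are assumptions of this example; for registration, the cost would be the negative of the inter-image similarity and the parameters would be the FFD control-point displacements, neither of which is modeled here.

```python
def steepest_descent(cost, params, step=0.1, iters=200, eps=1e-6):
    """Minimize cost(params) by repeatedly stepping against the gradient.

    The gradient is estimated by central finite differences, so `cost`
    only needs to be callable on a list of floats.
    """
    p = list(params)
    for _ in range(iters):
        grad = []
        for i in range(len(p)):
            hi = p[:]
            hi[i] += eps
            lo = p[:]
            lo[i] -= eps
            grad.append((cost(hi) - cost(lo)) / (2.0 * eps))
        p = [pi - step * g for pi, g in zip(p, grad)]
    return p


# Toy cost with a known minimum at (3, -1), standing in for -similarity.
toy_cost = lambda q: (q[0] - 3.0) ** 2 + (q[1] + 1.0) ** 2
best = steepest_descent(toy_cost, [0.0, 0.0])
```

- A fixed step size is used only for brevity; a line search or an adaptive step is the more usual choice for real image data.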

- The inter-image similarity is not limited to the calculation shown in Equation 15; any known method of calculating the similarity between images, such as one using cross-correlation or mutual information, may be used.
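
- One of the alternative similarity measures named above, normalized cross-correlation, can be sketched as follows. The function name is hypothetical, and the two inputs are assumed to be the luminance values of the two images sampled at the same positions inside the breast region.

```python
import math


def normalized_cross_correlation(a, b):
    """Normalized cross-correlation of two equally long luminance lists.

    Returns a value in [-1, 1]; 1 means the two lists are related by a
    positive linear intensity mapping.
    """
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a)
                    * sum((y - mean_b) ** 2 for y in b))
    if den == 0.0:
        raise ValueError("constant image: correlation is undefined")
    return num / den
```

- Unlike a sum of squared differences, this measure is insensitive to a global gain and offset between the two images, which can matter when the two acquisitions differ in intensity scaling.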

- With this method, a deformation can be generated that yields high similarity between the luminance values of the prone position MRI image and the supine position MRI image. As a result, the registration between the prone position MRI image and the supine position MRI image can be performed with higher accuracy.

- Step S390 Display of Deformed Image

- In this processing step, the observation image generation unit 1080 generates an observation image in which the deformed image $I_d(x)$ generated in step S380 and the supine position MRI image $I_s(x)$ are arranged side by side. In doing so, the observation image generation unit 1080 can cut each of the deformed image $I_d(x)$ and the supine position MRI image $I_s(x)$ along an arbitrary plane in accordance with a user operation and arrange the resulting cross-sectional images side by side to form the observation image.

- Alternatively, the observation image generation unit 1080 may form the observation image by arranging volume renderings of the deformed image $I_d(x)$ and the supine position MRI image $I_s(x)$ side by side. The observation image generation unit 1080 may also form the observation image by superimposing or fusing cross-sectional images generated from the deformed image $I_d(x)$ and the supine position MRI image $I_s(x)$. The observation image generation unit 1080 then displays the observation image generated by the above processing on the monitor 160.

- The processing of the processing apparatus 100 according to the first embodiment is performed by the method described above.

- With this method, normalization is performed in consideration of the biomechanical characteristics of breast deformation, so that the changes in position caused by the deformation are largely absorbed and the breast is mapped into an anatomically common space. A normalization transformation can therefore be performed that maps the breast of the subject imaged in the prone position and in the supine position into spaces that approximately coincide anatomically.

- The above description took as an example the case where the normalization unit 1040 generates a normalization deformation that maps the breast region 402 illustrated in FIG. 6A to a rectangular shape.

- However, the shape is not limited to this case.

- For example, the breast region in the normalized coordinate system may have a shape other than a rectangular shape.

- For example, it may be a shape enclosed by an arbitrary geometric surface such as a quadric surface.

- The processing apparatus 100 may also hold a plurality of pieces of shape information in advance and let the user select among them.

- In this case, the processing apparatus 100 presents the prone position MRI image and the supine position MRI image to the user, for example by displaying them on the monitor 160, so that the user can select appropriate shape information while observing the images.

- With this method, the normalization deformation method can be selected adaptively for breasts whose shapes vary from subject to subject, so that registration can be performed with higher accuracy.

- The above description covered the case where, in step S380, the image deformation unit 1060 generates a deformed MRI image by deforming the prone position MRI image.

- However, the image to be generated is not limited to this example.

- The following describes the case where the image deformation unit 1060 generates a prone position normalized image by transforming the prone position MRI image into the prone position normalized coordinate system and a supine position normalized image by transforming the supine position MRI image into the supine position normalized coordinate system, and these images are displayed side by side.

- The processing of step S380 and step S390 in the present embodiment is described below.

- In step S380, the image deformation unit 1060 generates the prone position normalized image $I_{pd}$ by transforming the prone position MRI image $I_p$ into the prone position normalized coordinate system based on the transformation function $\phi_p^{-1}(x)$.

- FIGS. 7A to 7D are diagrams showing specific examples of the images generated by this processing.

- The prone position MRI image 400 is a schematic example of $I_p$.

- The image deformation unit 1060 deforms the prone position MRI image 400 based on the transformation function $\phi_p^{-1}(x)$ to generate the prone position normalized image 410, that is, $I_{pd}$, as an image in the prone position normalized coordinate system.

- Also in step S380, the image deformation unit 1060 performs the same processing on the supine position MRI image $I_s$: it deforms the supine position MRI image 420 of FIG. 7C based on $\phi_s^{-1}(x)$ to generate the supine position normalized image 430 of FIG. 7D, that is, $I_{sd}$.

- By the above processing, the prone position normalized image $I_{pd}$ and the supine position normalized image $I_{sd}$, each of which is three-dimensional volume data, are generated.

- Here, the value of the Y coordinate roughly represents the ratio of the distances between the body surface and the pectoral muscle surface.

- The slice images $I_{slice,pd}$ and $I_{slice,sd}$ are therefore cut out along curved cross sections on which the ratio of the distances between the body surface and the pectoral muscle surface is constant in the prone position MRI image coordinate system and the supine position MRI image coordinate system. This makes it easy to compare the two images from a medical and anatomical viewpoint. For example, a single cross-sectional image allows the course of superficial blood vessels near the body surface of the breast and the spread of the mammary gland within the breast region to be compared and observed over a wide area.
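
- The idea of a curved cross section can be sketched as sampling the MRI image along a plane of constant Y in the normalized coordinate system. This is an illustration under assumptions: the function name, the callable `image_at` (returning a luminance value at an MRI position), and the toy inverse transform are hypothetical, and interpolation between voxels is not modeled.

```python
def curved_slice(image_at, phi_inv, y_value, xs, zs):
    """Sample a slice of constant normalized Y from an MRI image.

    image_at: callable giving the luminance at an MRI-coordinate position.
    phi_inv:  callable mapping a normalized position to the MRI position.
    Returns rows indexed by zs and columns indexed by xs.
    """
    return [[image_at(phi_inv((x, y_value, z))) for x in xs] for z in zs]


# Toy example: the surface of constant normalized Y is a parabola in MRI space.
toy_phi_inv = lambda q: (q[0], q[1] + 0.01 * q[0] ** 2, q[2])
toy_image = lambda p: p[1]  # luminance equals the MRI y coordinate
slice_rows = curved_slice(toy_image, toy_phi_inv, 50.0, [0.0, 10.0], [0.0])
```

- A flat slice in the normalized image thus corresponds to a curved surface in the original MRI image, which is why structures lying at a similar depth between the body surface and the pectoral muscle surface appear together in one image.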

- The process of generating and displaying a curved cross section of the MRI image as described above does not necessarily have to be performed on both the prone position MRI image and the supine position MRI image.

- For example, the processing apparatus 100 may receive either the prone position MRI image or the supine position MRI image as input, execute steps S300 to S320 of the first embodiment, and generate and display the curved cross section described above. This method enables advanced image observation from a medical and anatomical viewpoint, such as observation of the course of superficial blood vessels and the spread of the mammary gland.

- The processing apparatus 200 applies normalization processing similar to that of the first embodiment to many cases (learning cases) and constructs a model of the deformation (statistical deformation model) by statistically processing the normalized deformation of each case.

- Here, normalization between different cases will be described.

- At least for normal human bodies, the anatomical structure is almost the same from a topological point of view, regardless of individual differences. Individual differences can largely be absorbed by scale conversion (similarity transformation).

- scale conversion similarity conversion

- The nipple, body surface, pectoral muscle surface, midline, head-tail direction (body axis), and the like are characteristic geometric structures that are anatomically common to all individuals.

- In addition to scale conversion between individuals, the processing apparatus 200 therefore considers a space normalized by these characteristic geometric structures and transforms the breasts of different individuals into that space. In this way, differences between individuals can be largely absorbed and the breasts mapped into an anatomically common space.

- The processing apparatus 200 estimates the deformation by fitting the statistical deformation model to an unknown case (target case) different from the learning cases. In this way, deformable registration between the prone position and supine position MRI images of the target case is performed with high accuracy.

- FIG. 8 is a diagram showing a configuration of a processing system according to the present embodiment.

- The processing apparatus 200 in this embodiment includes an image acquisition unit 1000, an anatomical feature extraction unit 1020, a normalization unit 1040, a learning case deformation generation unit 1220, a scale calculation unit 1230, a statistical deformation model generation unit 1240, a target case deformation generation unit 1420, an image deformation unit 1060, and an observation image generation unit 1080.

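- The learning case deformation generation unit 1220 generates a deformation between the prone position and the supine position for each of the learning cases.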

- The scale calculation unit 1230 calculates a scale for each of the learning cases.

- The statistical deformation model generation unit 1240 generates a statistical deformation model based on the deformation and the scale of each learning case.

- The target case deformation generation unit 1420 generates a deformation between the prone position and the supine position of the target case.

- The processing of the processing apparatus 200 consists of learning phase processing and deformation estimation phase processing.

- The learning phase processing is executed first, followed by the deformation estimation phase processing.

- In the learning phase, the deformations between the prone position and supine position MRI images of many cases are learned and a statistical deformation model is generated.

- In the deformation estimation phase, deformable registration between the prone position and the supine position of the target case is executed using the statistical deformation model calculated in the learning phase.

- In this embodiment, the case where the processing apparatus 200 executes both the learning phase processing and the deformation estimation phase processing is described as an example.

- However, the learning phase processing and the deformation estimation phase processing may be executed by different processing apparatuses.

- The processing apparatus 200 according to the present embodiment is also not limited to executing both the learning phase processing and the deformation estimation phase processing; it may, for example, execute only the learning phase processing.

- Providing the statistical deformation model itself, obtained as a result of the learning phase processing, is also included in the present embodiment.

- FIG. 9 is a flowchart for explaining the procedure of the learning phase process performed by the processing apparatus 200 according to this embodiment.

- The learning phase processing of the present embodiment is described in detail below.

- Step S500 Acquisition of Learning Case Images

- In this processing step, the image acquisition unit 1000 acquires the prone position MRI images and supine position MRI images of $N_{samples}$ learning cases. This process can be executed by applying the same processing as steps S300 and S340 of the first embodiment to each of the $N_{samples}$ learning cases. A detailed description is omitted.

- Step S510 Extracting Anatomical Features of Learning Case

- In this processing step, the anatomical feature extraction unit 1020 extracts anatomical features by processing each of the prone position MRI images and supine position MRI images of the learning cases acquired in step S500. This process can be executed by applying the same processing as steps S310 and S350 of the first embodiment to each of the $N_{samples}$ learning cases. A detailed description is omitted.

- Step S520 Calculate a deformation function to the normalized coordinate system of the learning case

- In this processing step, the normalization unit 1040 derives, for each of the learning cases and based on the anatomical features extracted in step S510, a normalization transformation that maps the shape of the subject to the reference shape.

- Specifically, the normalization unit 1040 calculates, for each prone position MRI image, a deformation function from the prone position MRI image coordinate system to the prone position normalized coordinate system.

- The normalization unit 1040 likewise calculates, for each supine position MRI image, a deformation function from the supine position MRI image coordinate system to the supine position normalized coordinate system.

- The deformation function from the prone position MRI image coordinate system to the prone position normalized coordinate system of each learning case calculated by the above processing is denoted $\phi_{p,j}(x)$.

- The deformation function from the supine position MRI image coordinate system to the supine position normalized coordinate system is denoted $\phi_{s,j}(x)$.

- Here, $j$ is the case number of the learning case, with $1 \le j \le N_{samples}$. That is, a deformation function is obtained for each of the $N_{samples}$ learning cases.

- Step S540 Calculate a conversion function from the prone position normalized coordinate system of the learning case to the supine position normalized coordinate system.

- In this processing step, the learning case deformation generation unit 1220 calculates, for each of the learning cases, a transformation function from the prone position normalized coordinate system to the supine position normalized coordinate system. Specifically, the learning case deformation generation unit 1220 first acquires corresponding positions between the prone position MRI image and the supine position MRI image.

- In this embodiment, it is assumed that the corresponding positions are input by the user.

- The input corresponding positions in the prone position MRI image coordinate system and the supine position MRI image coordinate system are denoted $x_{p,corres,k}$ and $x_{s,corres,k}$, respectively.

- Here, $k$ is the index of the corresponding points, with $1 \le k \le N_{corres}$, where $N_{corres}$ is the number of corresponding positions.

- A corresponding position is, for example, a branch point of a blood vessel in the MRI images or a part of the mammary gland having a characteristic structure, that is, a pair of positions that the user can associate visually.

- The learning case deformation generation unit 1220 transforms each of the acquired $x_{p,corres,k}$ and $x_{s,corres,k}$ using the transformations to the normalized coordinate systems obtained in step S520, yielding $x'_{p,corres,k}$ and $x'_{s,corres,k}$.

- Based on the calculated $x'_{p,corres,k}$ and $x'_{s,corres,k}$, the learning case deformation generation unit 1220 calculates the transformation function $\phi_{ps,j}(x)$ from the prone position normalized coordinate system to the supine position normalized coordinate system.

- Specifically, the learning case deformation generation unit 1220 calculates the transformation function $\phi_{ps,j}(x)$ so that the relationship of Equation 18 is approximated with minimum error.

- The transformation function $\phi_{ps,j}(x)$ is a continuous function defined in the prone position normalized coordinate system and can be expressed using, for example, FFD (Free Form Deformation) or RBF (Radial Basis Function).

- In this way, the learning case deformation generation unit 1220 can obtain a transformation function for the positional relationship between the prone position and the supine position that takes into account both the condition that the body surfaces and the pectoral muscle surfaces coincide and the information on corresponding positions inside the breast input by the user.

- The learning case deformation generation unit 1220 performs the processing described above for each of the $N_{samples}$ learning cases and calculates, for each case, the transformation function $\phi_{ps,j}(x)$ from the prone position normalized coordinate system to the supine position normalized coordinate system.

- The above description covered the case where the learning case deformation generation unit 1220 acquires corresponding positions in both the prone position MRI image coordinate system and the supine position MRI image coordinate system and calculates the deformation function $\phi_{ps,j}(x)$ based on them, but the calculation method is not limited to this example.

- For example, the learning case deformation generation unit 1220 may calculate the deformation function $\phi_{ps,j}(x)$ based on the inter-image similarity between the prone position MRI image and the supine position MRI image. With this method, the deformation can be obtained on the basis of image similarity.

- Step S550 Calculation of Learning Case Scale

- In this processing step, the scale calculation unit 1230 calculates a case scale for each of the learning cases.

- Here, the scale of a case is a numerical value representing the size of the breast region, which differs from case to case.

- The scale is calculated, for example, by measuring the distance between the nipple position of the subject in the prone position and the body surface position on the midline closest to the nipple position.

- The user may measure this value directly on the subject in the prone position with a measuring instrument and input it into the processing apparatus 200.

- Alternatively, the processing apparatus 200 may be configured to measure the above distance on the prone position MRI image.

- In this case, the processing apparatus 200 may calculate the measurement value by automatically processing the prone position MRI image.

- Alternatively, the processing apparatus 200 may present the prone position MRI image to the user on the monitor 160 or the like and obtain the measurement value through the user's operation of the mouse 170 or the keyboard 180.

- This can be achieved, for example, by the processing apparatus 200 displaying the prone position MRI image on the monitor 160, having the user specify the nipple position of the subject depicted in the image and the body surface position on the midline closest to the nipple position, and calculating the distance between them.

- The above describes a method of calculating the scale of a case using the distance (Euclidean distance) between the nipple position and the body surface position on the midline closest to the nipple position, but the calculation is not limited to this method.

- For example, the scale may be calculated using the geodesic distance between the two points. This makes it possible to calculate a scale value that takes into account the differences in breast shape between cases.

- The calculation of the scale is also not limited to the distance or geodesic distance between the two points; it may be based on the volume of the breast region, the distance to the outer edge of the breast, the chest circumference of the subject, and the like. Furthermore, the scale need not be calculated by a single method.

- For example, the scale may be calculated based on values obtained by a plurality of methods.

- In this case, a multi-dimensional scale value obtained by arranging the values from the plurality of methods into a vector may be used, or a scalar scale value may be calculated as an average or a linear combination of those values.

- The scale calculated by the method described above is denoted $v_j$ ($1 \le j \le N_{samples}$).

- In this embodiment, the scale value is a scalar: the ratio of the distance (Euclidean distance) between the nipple position in the prone position and the body surface position on the midline closest to the nipple position to a predetermined reference value.

- The predetermined reference value is, for example, the distance between the nipple position of a standard breast and the body surface position on the midline closest to that nipple position.
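
- The scale calculation of this embodiment, together with the linear-combination variant mentioned above, can be sketched as follows. The function names and the numeric values in the example are hypothetical; in particular, the reference distance is whatever standard-breast value the system has been configured with.

```python
import math


def case_scale(nipple, midline_point, reference_distance):
    """Ratio of the nipple-to-midline Euclidean distance to a reference value."""
    if reference_distance <= 0:
        raise ValueError("reference distance must be positive")
    return math.dist(nipple, midline_point) / reference_distance


def combined_scale(values, weights):
    """Scalar scale as a linear combination of several scale measurements."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    return sum(v * w for v, w in zip(values, weights))


# Example: a 50 mm nipple-to-midline distance against a 100 mm reference.
v_j = case_scale((0.0, 0.0, 0.0), (30.0, 40.0, 0.0), 100.0)
```

- Swapping `math.dist` for a geodesic distance along the body surface gives the shape-aware variant described in the text.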

- Step S580 Generation of Statistical Deformation Model

- the statistical deformation model generation unit 1240 is based on ⁇ ps, j (x), v j related to N samples objects calculated by the processing from step S500 to step S570.

- FIG. 10 is a flowchart for explaining the process of step S580 in more detail. Hereinafter, description will be given along the flowchart of FIG.

- Step S5800 Scaling of $\phi_{ps,j}(x)$

- The statistical deformation model generation unit 1240 scales the transformation function $\phi_{ps,j}(x)$ based on the scale $v_j$ of each of the $N_{samples}$ cases. Specifically, the scaled transformation function $\phi'_{ps,j}(x)$ is calculated as shown in Equation 19.

- The domain of $\phi'_{ps,j}(x)$ is the domain of $\phi_{ps,j}(x)$ reduced by the scale value $v_j$ of the case along the X and Z coordinates.

- The statistical deformation model generation unit 1240 performs the above processing on the transformation functions $\phi_{ps,j}(x)$ ($1 \le j \le N_{samples}$) of all $N_{samples}$ cases, and calculates the scaled transformation function $\phi'_{ps,j}(x)$ ($1 \le j \le N_{samples}$) for each case.

- Step S5820 Vectorization of $\phi'_{ps,j}(x)$

- The statistical deformation model generation unit 1240 discretizes the scaled transformation function $\phi'_{ps,j}(x)$ calculated in step S5800. This discretization process is executed according to the following procedure.

- First, the statistical deformation model generation unit 1240 obtains a common domain of the transformation functions $\phi'_{ps,j}(x)$ of the $N_{samples}$ cases.

- Each of the $N_{samples}$ transformation functions $\phi'_{ps,j}(x)$ has a different domain depending on the shape of the body surface and the pectoral muscle surface of each case, the scale value, and so on.

- The region in which $\phi'_{ps,j}(x)$ is defined for all $N_{samples}$ cases is taken as the common domain. Therefore, all $N_{samples}$ transformation functions $\phi'_{ps,j}(x)$ have values throughout this domain.
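The common domain can be pictured as a voxel-wise intersection. The sketch below assumes, purely for illustration, that each case's domain is available as a boolean mask on one shared grid; the mask representation and the function name `common_domain` are assumptions, not part of the described device.

```python
import numpy as np

def common_domain(masks):
    """Voxel-wise intersection of boolean domain masks on a shared grid."""
    return np.logical_and.reduce(np.asarray(masks, dtype=bool), axis=0)

# Three hypothetical 1 x 5 domain masks (True = the function is defined there).
masks = [
    np.array([[True, True, True, True, False]]),
    np.array([[False, True, True, True, True]]),
    np.array([[True, True, True, False, True]]),
]
domain = common_domain(masks)
```

Only the positions that are True in every mask remain True in `domain`, so every case has a value wherever the later sampling takes place.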

- Next, the statistical deformation model generation unit 1240 samples the value of the transformation function $\phi'_{ps,j}(x)$ over the common domain and generates a discretized vector in which the sampling results are arranged vertically.

- The discretized vector is a vector in which values sampled in raster-scan order at predetermined intervals within the common domain are arranged in order. Since the transformation function $\phi'_{ps,j}(x)$ returns a three-dimensional value with x, y, and z components, the statistical deformation model generation unit 1240 generates a discretized vector for each coordinate axis.

- The discretized vectors for the x, y, and z coordinate axes are denoted $p_{x,j}$, $p_{y,j}$, and $p_{z,j}$.

- Each discretized vector is a real vector whose dimension equals the number of samples taken by the raster scan.

- The statistical deformation model generation unit 1240 applies the processing described above to all $N_{samples}$ transformation functions $\phi'_{ps,j}(x)$. As a result, $N_{samples}$ sets of discretized vectors $p_{x,j}$, $p_{y,j}$, and $p_{z,j}$ are obtained. Note that a discretized vector obtained by the raster-scan sampling can be converted back into a transformation function in real space by an arbitrary interpolation function or the like. In the present embodiment, $\phi'_{ps,j}(x)$ is approximated by an interpolation function $f_{interp}(p_{x,j}, p_{y,j}, p_{z,j}, x)$ that takes the discretized vectors $p_{x,j}$, $p_{y,j}$, $p_{z,j}$ and the real-space position $x$ as arguments.
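The discretization and its inverse can be sketched as follows. This example assumes a box-shaped common domain sampled on a regular grid, and it uses nearest-neighbour lookup as the interpolation function; both are simplifying assumptions, since the text allows an arbitrary domain shape and any interpolation function. The extra `grid_axes` argument and the example transformation are hypothetical.

```python
import numpy as np

def discretize(phi, grid_axes):
    """Sample phi on a regular grid in raster-scan order.

    Returns one vector per output coordinate (p_x, p_y, p_z).
    """
    gx, gy, gz = np.meshgrid(*grid_axes, indexing="ij")
    points = np.stack([gx.ravel(), gy.ravel(), gz.ravel()], axis=1)
    values = np.array([phi(p) for p in points])   # shape (n_points, 3)
    return values[:, 0], values[:, 1], values[:, 2]

def f_interp(p_x, p_y, p_z, x, grid_axes):
    """Nearest-neighbour reconstruction of phi at position x (an assumption;
    the text allows any interpolation function)."""
    idx = tuple(int(np.argmin(np.abs(axis - c))) for axis, c in zip(grid_axes, x))
    shape = tuple(len(axis) for axis in grid_axes)
    flat = np.ravel_multi_index(idx, shape)       # same ordering as ravel()
    return np.array([p_x[flat], p_y[flat], p_z[flat]])

# Hypothetical transformation: a simple shift in y.
phi = lambda x: np.array([x[0], x[1] + 2.0, x[2]])
axes = (np.arange(0.0, 3.0), np.arange(0.0, 4.0), np.arange(0.0, 2.0))
p_x, p_y, p_z = discretize(phi, axes)
approx = f_interp(p_x, p_y, p_z, np.array([1.1, 2.0, 0.9]), axes)
```

A smoother choice such as trilinear interpolation would slot into `f_interp` without changing how the vectors are built.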

- Step S5840 Statistical Deformation Model Generation by Principal Component Analysis

- The statistical deformation model generation unit 1240 generates a statistical deformation model by performing principal component analysis on the discretized vectors $p_{x,j}$, $p_{y,j}$, $p_{z,j}$ ($1 \le j \le N_{samples}$) calculated in step S5820. Since the principal component analysis can be executed by a known method, a detailed description is omitted here.

- By the principal component analysis, the statistical deformation model generation unit 1240 calculates the mean vectors $e_{ave,x}$, $e_{ave,y}$, $e_{ave,z}$ and the eigenvectors $e_{x,k}$, $e_{y,k}$, $e_{z,k}$ ($1 \le k \le N_{mode}$) for the respective discretized vectors.

- $N_{mode}$ is the total number of eigenvectors calculated by the principal component analysis, and can be set, for example, by applying a predetermined threshold to the cumulative contribution rate calculated by the principal component analysis.

- The mean vectors and eigenvectors calculated by the above processing are called a statistical deformation model.

- In Equation 20, $E_x$ is a matrix in which the eigenvectors $e_{x,k}$ are arranged horizontally.

- $b$ is a vector in which the coefficients $b_k$ are arranged vertically; in the present embodiment, it is called the coefficient vector.
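The principal component analysis step can be sketched with NumPy as below. This is one standard way to do it (SVD of the centered data), not necessarily the method used in the embodiment. The matrix `P` stands for whichever stacking of the discretized vectors is used, since this passage does not specify how the three axes are combined. The reconstruction `mean + E @ b` is the usual PCA form and is only assumed here to correspond to Equation 20. The threshold value and the synthetic data are hypothetical.

```python
import numpy as np

def build_statistical_model(P, contribution_threshold=0.95):
    """PCA of discretized vectors.

    P has shape (n_samples, n_dims); each row is one case's vector.
    Returns the mean vector, the eigenvector matrix E (columns e_k),
    and the variance (eigenvalue) of each retained mode.
    """
    mean = P.mean(axis=0)
    centered = P - mean
    # SVD of the centered data gives the principal axes as rows of vt.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    variances = s ** 2 / (P.shape[0] - 1)
    cumulative = np.cumsum(variances) / variances.sum()
    # Smallest number of modes whose cumulative contribution reaches the threshold.
    n_mode = int(np.searchsorted(cumulative, contribution_threshold) + 1)
    return mean, vt[:n_mode].T, variances[:n_mode]

def reconstruct(mean, E, b):
    """Vector represented by coefficient vector b: mean + E b."""
    return mean + E @ b

rng = np.random.default_rng(0)
# Hypothetical data: 20 cases, 6 dimensions, variation along a single direction.
direction = np.array([1.0, 2.0, 0.0, 0.0, -1.0, 0.5])
P = 3.0 + rng.normal(size=(20, 1)) * direction
mean, E, variances = build_statistical_model(P)
p_mean = reconstruct(mean, E, np.zeros(E.shape[1]))
```

Because the synthetic data vary along one direction only, a single mode already reaches the threshold, and a zero coefficient vector reproduces the mean vector.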

- The learning phase processing of the present embodiment is executed by the processing from step S500 to step S580 described above. As a result of this processing, a statistical deformation model is generated.

- FIG. 11 is a flowchart for explaining the processing procedure of the deformation estimation phase performed by the processing device 200 according to this embodiment.

- The processing of the deformation estimation phase of the present embodiment is described in detail below, following the procedure shown in this flowchart.

- Step S600 Statistical Deformation Model Reading

- The processing device 200 reads the statistical deformation model generated by the learning phase processing into the main memory 212 of the processing device 200.

- Step S602 Image acquisition of target case

- The image acquisition unit 1000 acquires MRI images of the target case in the prone position and the supine position. This processing can be executed in the same way as steps S300 and S340 of the first embodiment, so a detailed description is omitted.

- Step S604 Extracting Anatomical Features of Target Case

- The anatomical feature extracting unit 1020 processes the prone position and supine position MRI images of the target case acquired in step S602 and extracts the anatomical features of the target case. This processing can be executed in the same way as steps S310 and S350 of the first embodiment, so a detailed description is omitted.

- Step S610 Calculate transformation of the target case into a normalized coordinate system

- Based on the anatomical features of the target case extracted in step S604, the normalization unit 1040 calculates the transformation from the prone position MRI image coordinate system of the target case to the prone position normalized coordinate system, and the transformation from the supine position MRI image coordinate system to the supine position normalized coordinate system. This processing is executed by applying to the target case the same processing as step S520 of the learning phase, so a detailed description is omitted.

- The transformation from the prone position MRI image coordinate system to the prone position normalized coordinate system calculated by this processing is denoted $\phi_{p,target}(x)$, and the transformation from the supine position MRI image coordinate system to the supine position normalized coordinate system is denoted $\phi_{s,target}(x)$.

- Step S630 Scale calculation of target case

- The scale calculation unit 1230 calculates the scale of the target case. This processing is executed by applying to the target case the same processing as step S550 of the learning phase, so a detailed description is omitted.

- The scale of the target case calculated by this processing is denoted $v_{target}$.

- Step S640 Optimization of coefficients of statistical deformation model

- The target case deformation generation unit 1420 calculates the transformation between the prone position MRI image coordinate system and the supine position MRI image coordinate system of the target case. That is, the target case deformation generation unit 1420 executes deformation alignment processing between the prone position MRI image and the supine position MRI image. This processing is executed based on the statistical deformation model acquired in the learning phase and the prone position and supine position MRI images of the target case. Specifically, the coefficient vector $b$ that maximizes the evaluation function $G(b)$ shown in Equation 21 is calculated.

- $G(b) = G_{simil}\{D(I_p, b), I_s\}$

- Here, $I_p$ is the prone position MRI image of the target case, and $I_s$ is the supine position MRI image of the target case.

- The function $G_{simil}(I_1, I_2)$ evaluates the degree of similarity between the two images given as arguments. It can be realized by a known inter-image similarity evaluation method such as SSD, SAD, cross-correlation, or mutual information.
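For illustration, three of the similarity measures named above can be written in a few lines of NumPy. SSD and SAD are dissimilarities, so a sign flip is needed when the evaluation function is to be maximized. The wrapper `g_simil` and the choice of negated SSD are assumptions made for this sketch only.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences (lower means more similar)."""
    return float(np.sum((a - b) ** 2))

def sad(a, b):
    """Sum of absolute differences (lower means more similar)."""
    return float(np.sum(np.abs(a - b)))

def ncc(a, b):
    """Normalized cross-correlation in [-1, 1] (higher means more similar).

    Undefined for constant images, where the denominator is zero.
    """
    a0, b0 = a - a.mean(), b - b.mean()
    return float(np.sum(a0 * b0) / np.sqrt(np.sum(a0 ** 2) * np.sum(b0 ** 2)))

def g_simil(a, b):
    """Similarity to be maximized; here the negated SSD (one possible choice)."""
    return -ssd(a, b)

# Two hypothetical 2 x 2 images differing in one pixel.
img1 = np.array([[0.0, 1.0], [2.0, 3.0]])
img2 = np.array([[0.0, 1.0], [2.0, 5.0]])
```

Mutual information is omitted here because it additionally requires a joint intensity histogram and a choice of bin count.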

- The function $D(I, b)$ deforms the image $I$ based on the coefficient vector $b$ of the statistical deformation model. More specifically, the function $D(I, b)$ performs the following processing. First, based on the coefficient vector $b$, the discretized vectors $p_x$, $p_y$, and $p_z$ are calculated as shown in Equation 22. From these discretized vectors, the transformation function $\phi_{target}(x)$ is obtained as follows.

- $\phi_{target}(x) = \phi_s^{-1}[f_{interp}\{p_x, p_y, p_z, \phi_p(x)\}]$

- Next, the transformation function $\phi'_{target}(x)$, obtained by scaling the transformation function $\phi_{target}(x)$ with the scale $v_{target}$ of the target case calculated in step S630, is calculated by Equation 24. (Equation 24)

- $\phi'_{target}(x') = \phi_{target}(x) \times v_{target}$, where $x' = (x \times v_{target},\ y,\ z \times v_{target})^T$ and $x = (x, y, z)^T$.

- That is, $\phi'_{target}(x)$ is the function obtained by scaling the domain of $\phi_{target}(x)$ by the scale value $v_{target}$ of the target case along the X and Z coordinates and further scaling the function value by $v_{target}$.
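The scaling in Equation 24 can be sketched as a function wrapper. The code follows Equation 24 as it is read here: multiply the X and Z coordinates of the argument by the scale, and multiply the function value by the scale. The names `scale_transform` and `phi_scaled`, the example transformation, and the scale value are hypothetical.

```python
import numpy as np

def scale_transform(phi_target, v_target):
    """Return phi'_target with phi'_target(x') = phi_target(x) * v_target,
    where x' = (x * v_target, y, z * v_target)."""
    def phi_scaled(x_prime):
        x_prime = np.asarray(x_prime, dtype=float)
        # Undo the domain scaling on the X and Z coordinates to recover x.
        x = np.array([x_prime[0] / v_target, x_prime[1], x_prime[2] / v_target])
        return phi_target(x) * v_target
    return phi_scaled

# Hypothetical transformation and scale, for illustration only.
phi_target = lambda x: x + np.array([0.0, 1.0, 0.0])
phi_scaled = scale_transform(phi_target, v_target=2.0)
result = phi_scaled([4.0, 3.0, 6.0])
```

Evaluating the scaled function at $x'$ therefore means looking up the unscaled function at the corresponding $x$ and multiplying the result by the scale.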

- The function $D(I, b)$ in Equation 21 deforms the image $I$ based on the transformation function $\phi'_{target}(x)$. That is, $D(I_p, b)$ in Equation 21 is calculated as shown in Equation 25.

- In summary, the evaluation function $G(b)$ shown in Equation 21 evaluates, for a given coefficient vector $b$, the similarity between the image obtained by deforming the prone position MRI image and the supine position MRI image.

- The target case deformation generation unit 1420 uses a nonlinear optimization method such as the steepest descent method, the quasi-Newton method, or the conjugate gradient method to calculate the coefficient vector $b$ that maximizes the value of the evaluation function shown in Equation 21.

- The coefficient vector obtained by this processing is denoted $b_{opt}$.
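The optimization of the coefficient vector can be illustrated with a steepest-ascent loop using a finite-difference gradient. This is a toy sketch: the real $G(b)$ involves image deformation and a similarity measure, whereas the stand-in function below, the step size, and the iteration count are all hypothetical.

```python
import numpy as np

def numerical_gradient(G, b, eps=1e-6):
    """Central-difference gradient of the scalar function G at b."""
    grad = np.zeros_like(b)
    for k in range(b.size):
        step = np.zeros_like(b)
        step[k] = eps
        grad[k] = (G(b + step) - G(b - step)) / (2.0 * eps)
    return grad

def maximize(G, b0, learning_rate=0.1, n_iter=200):
    """Steepest ascent on G starting from b0 (fixed step size)."""
    b = np.array(b0, dtype=float)
    for _ in range(n_iter):
        b = b + learning_rate * numerical_gradient(G, b)
    return b

# Hypothetical stand-in for G(b): a concave function with its maximum at (1, -2).
G = lambda b: -np.sum((b - np.array([1.0, -2.0])) ** 2)
b_opt = maximize(G, b0=np.zeros(2))
```

Starting from the zero vector corresponds to starting from the average deformation of the statistical deformation model, which is a natural initial guess.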

- The case in which the coefficient vector is calculated based on the inter-image similarity between the deformed MRI image, obtained by deforming the prone position MRI image, and the supine position MRI image has been described above as an example. However, the present embodiment is not limited to this example.

- For example, the processing device 200 may acquire information on corresponding positions between the prone position MRI image and the supine position MRI image, and the target case deformation generation unit 1420 may calculate a coefficient vector that approximates that correspondence. For example, when the processing device 200 acquires the corresponding positions from user input, the deformation can be estimated so that the positions the user wants to match between the two images are matched.

- Alternatively, a new evaluation function may be formed by adding, to the evaluation function for inter-image similarity shown in Equation 21, an evaluation function for the error of the corresponding positions between the prone position MRI image and the supine position MRI image of the target case. This allows more accurate deformation estimation based on both the inter-image similarity and the corresponding positions input by the user.

- The corresponding position information described above does not necessarily have to be acquired by user input. For example, it may be acquired automatically from the prone position and supine position MRI images by a feature point detection and feature point association method such as nSIFT. This allows the deformation estimation to be executed more efficiently.
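One way to combine the two criteria is to subtract a weighted landmark error from the image similarity, so that the combined value is still maximized. The sketch below is an assumption about the form of such a combined function. The weight, the landmark coordinates, the similarity value, and the function names are hypothetical.

```python
import numpy as np

def landmark_error(transform, prone_points, supine_points):
    """Mean distance between transformed prone landmarks and supine landmarks."""
    mapped = np.array([transform(p) for p in prone_points])
    return float(np.mean(np.linalg.norm(mapped - supine_points, axis=1)))

def combined_evaluation(similarity, error, weight=1.0):
    """Higher is better: image similarity minus a weighted landmark error."""
    return similarity - weight * error

# Hypothetical landmarks and transformation, for illustration only.
prone_points = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
supine_points = np.array([[0.0, 3.0, 4.0], [1.0, 3.0, 4.0]])
identity = lambda p: p
err = landmark_error(identity, prone_points, supine_points)
score = combined_evaluation(similarity=10.0, error=err, weight=0.5)
```

The weight controls how strongly the corresponding positions constrain the result relative to the image similarity; its value would have to be tuned for the data at hand.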

- The coefficient vector calculated in this processing step does not necessarily have to be obtained by maximizing the evaluation function.

- For example, the coefficient vector may be a zero vector.

- In that case, the deformation calculated in this processing step is the average deformation calculated in step S580 of the learning phase of the present embodiment. Even so, the deformation can be estimated more accurately than in the first embodiment described above, in which an identity function is used as $\phi_{ps}(x)$.

- Step S650 Deformation of MRI Image

- The image deformation unit 1060 generates a deformed MRI image by deforming the prone position MRI image based on the transformation calculated in step S640. Specifically, the image deformation unit 1060 uses the image deformation function $D(I, b)$ shown in Equation 24 to deform the prone position MRI image $I_p$ of the target case based on the coefficient vector $b_{opt}$, thereby calculating the deformed MRI image $I_d$.

- Step S660 Display of Deformed Image

- The observation image generating unit 1080 generates an observation image in which the deformed MRI image $I_d$ generated in step S650 and the supine position MRI image $I_s$ of the target case are arranged. Since the specific processing of this step is the same as that of step S390 in the first embodiment, a detailed description is omitted.

- The deformation estimation phase processing of this embodiment is executed by the processing from step S600 to step S660 described above.