WO2010058546A1 - Playback device, playback method, and program for stereoscopic playback

- Publication number

- WO2010058546A1 (PCT/JP2009/006115)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- plane

- video

- offset

- image

- data


Classifications

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N13/00—Stereoscopic video systems; Multi-view video systems; Details thereof

- H04N13/10—Processing, recording or transmission of stereoscopic or multi-view image signals

- H04N13/106—Processing image signals

- H04N13/128—Adjusting depth or disparity

- H04N13/139—Format conversion, e.g. of frame-rate or size

- H04N13/156—Mixing image signals

- H04N13/161—Encoding, multiplexing or demultiplexing different image signal components

- H04N13/172—Processing image signals comprising non-image signal components, e.g. headers or format information

- H04N13/183—On-screen display [OSD] information, e.g. subtitles or menus

Definitions

- the present invention belongs to the technical field of stereoscopic reproduction.

- Stereoscopic playback technology refers to a technology that artificially creates a stereoscopic image by introducing a mechanism to show different pictures for the left and right eyes and using the parallax between the eyes.

- Various methods exist for realizing stereoscopic displays that allow the user to view stereoscopic images.

- one of the commonly used methods is a method using shutter glasses.

- the viewer's left eye and right eye fields of view are alternately covered with glasses at high speed, and the display image on the display is updated at high speed for the left and right eyes in synchronization with the operation of the glasses.

- As a result, the left-eye image displayed on the display can be seen only by the left eye through the shutter glasses, while the right-eye image can be seen only by the right eye.

- the first method is a method in which video streams for left eye and right eye are prepared, corresponding left eye subtitles and right eye subtitles are prepared separately, and each is superimposed and displayed.

- the second method is a method of bringing a stereoscopic effect to a video from one video stream and corresponding depth information, and superimposing a caption object thereon, as in Patent Document 2.

- the depth of the portion where the subtitle overlaps with the video is displayed as zero parallax, that is, the depth is eliminated, so that the user does not feel the difference in depth between the subtitle and the video.

- the third method is a method in which one caption object is prepared for the left-eye video stream and the right-eye video stream prepared in advance, and a stereoscopic effect with a plane shift is superimposed from the depth information.

- The second method eliminates the need to create left and right graphics streams for subtitle display and can reduce the burden of authoring, but it loses the sense of depth where the subtitles overlap the stream, so the result does not look good.

- With the plane shift method, it is not necessary to create both left and right graphics streams for subtitles/GUI, and there is no loss of depth in the part where subtitles/GUI overlap the moving image, which makes it the most ideal of the three. However, the plane shift has a negative effect when a scaling function for enlarging or reducing the displayed screen is executed.

- the character is reduced by scaling, but the character position remains the same as before the scaling, and the depth of the subtitle character is maintained.

- the depth of the video is reduced, but the subtitles remain as they are, and the difference between the three-dimensionality of the video and the subtitles / GUI becomes severe during scaling.

- the above-described problems do not occur if scaling is prohibited in a state in which captions are synthesized.

- When a menu call operation is performed during playback of a full-screen moving image, current playback devices display a full-screen menu and display the video, scaled down, on top of it. Such processing widens the field of view of the menu without disturbing the viewing of the video.

- GUI processing with video scaling broadens the menu's field of view and preserves user convenience. Omitting GUI processing with scaling, even for the sake of stereoscopic viewing, would mean retreating from the convenience of current optical disc playback devices and would not be a useful idea for the industry.

- An object of the present invention is to provide a playback device capable of reducing the sense of reality and observing consumer protection while realizing GUI processing with video scaling.

- a playback apparatus is a playback apparatus that realizes stereoscopic playback,

- a video decoder for decoding a video stream to obtain a video frame, a plane memory for storing graphics data composed of a plurality of pixel data having a predetermined number of vertical pixels × horizontal pixels,

- an offset holding unit that holds an offset indicating a reference of how much the pixel coordinates should be moved in each of the right direction and the left direction

- a shift engine that moves the respective coordinates of the pixel data constituting the graphics data in the plane memory in the horizontal direction by the number of pixels corresponding to the offset

- a synthesis unit that synthesizes the graphics data whose pixel coordinates have been moved with the video frame, wherein, when the scale of the video frame is changed, the amount by which the shift engine moves the coordinates of the pixel data is based on a value obtained by multiplying the offset by the scaling factor.
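The relationship between the offset, the scaling factor, and the horizontal shift described in the claim above can be illustrated with a minimal Python sketch. It is not the patent's implementation: the function names, the transparent fill value, the list-of-rows plane representation, and truncation toward zero when the product is not an integer are all assumptions made for illustration. The direction convention (left view shifted right, right view shifted left for a positive offset) follows the figure description in this document.

```python
from fractions import Fraction

TRANSPARENT = 0  # assumed value for pixels exposed by the shift


def scaled_offset(plane_offset: int, scaling_factor: Fraction) -> int:
    """Multiply the offset by the scaling factor.

    Truncating toward zero is an assumption; the text only says the shift
    is "based on" the product.
    """
    return int(plane_offset * scaling_factor)


def shift_row(row, shift):
    """Move one row of pixels horizontally; positive shift moves right."""
    width = len(row)
    if shift == 0:
        return list(row)
    if abs(shift) >= width:
        return [TRANSPARENT] * width
    if shift > 0:
        # Pixels pushed past the right edge are cropped.
        return [TRANSPARENT] * shift + list(row[:width - shift])
    # Negative shift: pixels pushed past the left edge are cropped.
    return list(row[-shift:]) + [TRANSPARENT] * (-shift)


def shift_plane(plane, plane_offset, scaling_factor, view):
    """Shift a graphics plane for the given view ("left" or "right")."""
    shift = scaled_offset(plane_offset, scaling_factor)
    if view == "right":
        shift = -shift  # the right view moves in the opposite direction
    return [shift_row(row, shift) for row in plane]
```

With an offset of 2 and a scaling factor of 1/2, each view would be shifted by one pixel instead of two, so the apparent depth of the graphics shrinks along with the video.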

- The stereoscopic video playback device adjusts the subtitle shift amount when scaling video with subtitles. When performing GUI processing with scaling, this preserves the screen composition of video and subtitles and prevents the difference in stereoscopic effect between them from becoming severe. As a result, eye fatigue can be reduced and a more natural display achieved, so that consumer protection can be ensured.

- the shift amount of the video plane may be adjusted when scaling video with captions. By shifting the video plane, it is possible to prevent the difference in stereoscopic effect from becoming severe, to reduce eye fatigue, and to perform more natural display.

- the subtitle shift amount may be adjusted little by little in frame units when scaling video with subtitles. As a result, it becomes possible to prevent the difference in stereoscopic effect from becoming intense, to reduce eye fatigue, and to perform more natural display.

- Subtitle display may be disabled for a certain period of time when scaling video with subtitles, and enabled again once that period has passed.

- In this case, subtitles are displayed after the user's eyes have adjusted to the difference in stereoscopic effect, which prevents the difference from becoming severe, reduces eye fatigue, and gives a more natural display.

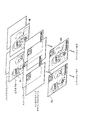

- FIG. 2 is a diagram illustrating an example of an internal configuration of a BD-ROM 100.

- FIG. 3 is a diagram illustrating an example of the internal configuration of a BD-J object. FIG. 4 is a diagram illustrating an example of the internal configuration of the playback apparatus.

- FIG. 11 is a diagram illustrating an example of a stereoscopic video image that appears when the video output is viewed with the liquid crystal glasses 500 when the stereo mode is ON for all video planes but the stereo mode is OFF for all other planes.

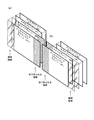

- FIG. 4 is a diagram illustrating an example of a Shifted Left graphics plane shifted in the right direction and a Shifted Left graphics plane shifted in the left direction. It is a diagram for explaining the principle that when the sign of the plane offset is positive (the left-view graphics image is shifted to the right and the right-view graphics image is shifted to the left), the image appears to be in front of the display screen.

- FIG. 4 is a diagram illustrating an example of a stereoscopic image viewed by a user when scaling is performed on a moving image. It shows how the plane offset in the plane shift should be determined when the moving image is scaled.

- FIG. 2 is a diagram illustrating an example of an internal configuration of a plane shift engine 20 of the playback device 200 according to the first embodiment.

- FIG. 4 is a diagram illustrating an example of three scaling factors of 1/1, 1/2, and 1/4 and a combined image of graphics including subtitles and GUI when each scaling factor is applied.

- FIG. 3 is a diagram illustrating an example of three scaling factors 1/1, 1/2, and 1/4 and a composite image of subtitle graphics when each scaling factor is applied.

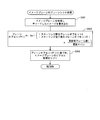

- (A) is a figure for demonstrating an example of the specific process of step S706a

- (b) is a figure for demonstrating an example of the specific process of step S808a.

- . 2 is a diagram illustrating an example of an internal configuration of an image plane 8.

- FIG. 2 is a diagram illustrating an example of an internal configuration of an interactive graphics plane 10.

- FIG. It is a figure which shows an example of the pixel data of a foreground area

- 7 is a diagram illustrating an example of a plane shift processing procedure in the image plane 8.

- FIG. 4 is a diagram illustrating an example of a plane shift processing procedure in the interactive graphics plane 10.

- FIG. 5 is a diagram illustrating an example of stored contents in a display mode storage unit 29.

- FIG. It is a flowchart which shows an example of the process sequence of the display mode setting at the time of title switching. It is a flowchart which shows an example of the process sequence of the display mode setting in a title.

- 14 is a flowchart illustrating an example of a main procedure of playlist reproduction in the BD-J mode. It is a flowchart which shows an example of the reproduction

- FIG. 1 It is a flowchart which shows an example of the processing procedure of 3D display of a 3DAV stream. It is a flowchart which shows an example of the procedure of the process for left eyes at the time of 3D display mode. It is a flowchart which shows an example of the process sequence of the process for right eyes.

- (A) is a figure for demonstrating an example of the specific process of step S702 and step S804a

- (b) is a figure for demonstrating an example of the specific process of step S804b.

- (A) is a figure for demonstrating an example of the specific process of step S704a

- (b) is a figure for demonstrating an example of the specific process of step S806a.

- step S804b is a diagram for describing an example of specific processing of step S804b in the second embodiment. It is a block diagram which shows an example of an internal structure of the plane shift engine 20 of the reproducing

- (A) is a figure for demonstrating an example of the specific process of step S702 in Embodiment 2

- (b) is a figure for demonstrating an example of the specific process of step S804a. It is a figure which shows an example of the condition which intended moving the coordinate of the moving image and graphics which were scaled by the predetermined number of pixels. It is a block diagram which shows an example of an internal structure of the plane shift engine 20 of the reproducing

- FIG. 1 is a diagram showing an example of a usage behavior of a recording medium and a playback device.

- The BD-ROM 100, which is an example of a recording medium, and the playback device 200 constitute a home theater system together with a remote controller 300, a television 400, and liquid crystal glasses 500, and are used by a user.

- BD-ROM 100 supplies, for example, movie works to the home theater system.

- the playback device 200 is connected to the television 400 and plays back the BD-ROM 100.

- the reproduced video reproduced in this way includes 2D video and 3D video.

- the 2D video is an image expressed by pixels at the display position of the display screen located on the XY plane, for example, by regarding the plane including the display screen of the display device as the XY plane, and is also called a planar view image.

- A 3D video is an image that adds, as an axis, a straight line perpendicular to the plane taken as the XY plane described above (in this embodiment, this perpendicular line is defined as the Z axis), so that the image on the display screen looks three-dimensional to the human eye, or appears in front of or behind the display screen.

- For a 3D video, data (stream data) corresponding to a left-view video to be viewed with the left eye and a right-view video to be viewed with the right eye is recorded, for example, on a recording medium that can be read via a virtual file system (for example, the BD-ROM 100 or the local storage 1c shown in FIG. 4, described later; here the BD-ROM 100 is used as the example for simplicity). If the left-view video and the right-view video differ in appearance by about the parallax between both eyes, then by repeating the operation of showing the left-view video only to the left eye and the right-view video only to the right eye, the video can be shown to the user as a stereoscopic image with depth.

- If only one of the two views is used for playback, however, it can be seen only as a planar video. For example, if the left-view video is shown to both the left and right eyes, it can be seen only as a planar video.

- The remote controller 300 is a device that accepts operations on a hierarchical GUI from the user. To accept such operations, the remote controller 300 is provided with a menu key for calling a menu constituting the GUI, arrow keys for moving the focus among the GUI components constituting the menu, a determination key for performing a confirmation operation on a GUI component constituting the menu, a return key for returning a hierarchical menu to a higher level, and numerical keys.

- the television 400 provides a user with an interactive operation environment by displaying a playback image of a movie work or displaying a menu or the like.

- the display screen of the television 400 in this figure shows a display example in which the GUI is full screened by scaling the video.

- the right half of the screen of the television 400 displays the director's comment cm1 described by the director of the movie work.

- The lower half of the screen of the television 400 contains the button member bn1 that accepts next skip and previous skip, a button member bn2 that accepts a menu call, a button member bn3 that accepts a return operation, a button member bn4 that accepts a network connection, and an indicator ir1 for displaying the current title number and the current chapter number.

- These button members can be operated by the remote controller 300.

- the liquid crystal glasses 500 are composed of a liquid crystal shutter and a control unit, and realize stereoscopic viewing using parallax in both eyes of the user.

- the liquid crystal shutter of the liquid crystal glasses 500 is a shutter using a liquid crystal lens having a property that light transmittance is changed by changing an applied voltage.

- The control unit of the liquid crystal glasses 500 receives a synchronization signal, sent from the playback device, for switching between output of the right-view image and the left-view image, and switches between the first state and the second state according to this synchronization signal.

- the first state is a state in which the applied voltage is adjusted so that the liquid crystal lens corresponding to the right view does not transmit light, and the applied voltage is adjusted so that the liquid crystal lens corresponding to the left view transmits light.

- a left-view image is provided for viewing on the left eye, and a left-view image is not provided for viewing on the right eye.

- the second state is a state in which the applied voltage is adjusted so that the liquid crystal lens corresponding to the right view transmits light, and the applied voltage is adjusted so that the liquid crystal lens corresponding to the left view does not transmit light.

- the right-view image is viewed for the right eye, and the right-view image is not viewed for the left eye.
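The two shutter states described above can be summarized as a small Python sketch. The enum, function names, and the string values used to represent the synchronization signal are hypothetical; the text does not specify how the signal is encoded. Only the mapping between states and which lens transmits light is taken from the description.

```python
from enum import Enum


class ShutterState(Enum):
    FIRST = "first"    # left lens transmits light, right lens blocks it
    SECOND = "second"  # right lens transmits light, left lens blocks it


def lens_transmission(state: ShutterState) -> dict:
    """Return which lens transmits light in the given state."""
    if state is ShutterState.FIRST:
        return {"left": True, "right": False}
    return {"left": False, "right": True}


def state_for_output(view: str) -> ShutterState:
    """Pick the shutter state matching the image currently being output.

    `view` stands in for the synchronization signal and is assumed to be
    either "left" or "right".
    """
    if view == "left":
        return ShutterState.FIRST
    if view == "right":
        return ShutterState.SECOND
    raise ValueError(f"unknown view: {view!r}")
```

Feeding the alternating output sequence left, right, left, right through `state_for_output` would make the glasses alternate between the first and second states in step with the display.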

- The right-view image and the left-view image differ slightly in appearance because of the difference in shooting position.

- If this difference in appearance is made to match the difference between the images seen by a person's left and right eyes (that is, the degree of parallax), the image seen by the human eye can be recognized as a three-dimensional image. Therefore, if the liquid crystal glasses 500 synchronize the switching between the first state and the second state with the output switching timing of the right-view image and the left-view image, the user has the illusion that a planar display looks three-dimensional. Next, the time interval for displaying the right-view video and the left-view video will be described.

- This time interval need only be short enough that the switching display described above gives a person the illusion of seeing three-dimensionally.

- FIG. 2 is a diagram illustrating an example of the internal configuration of the BD-ROM 100.

- BD-ROM 100 which is an example of a recording medium, is shown in the fourth row of the figure, and tracks on the BD-ROM 100 are shown in the third row.

- the track in this figure is drawn by extending the track formed in a spiral shape from the inner periphery to the outer periphery of the BD-ROM 100 in the horizontal direction.

- This track includes a lead-in area, a volume area, and a lead-out area.

- BCA (Burst Cutting Area)

- the volume area in this figure has a layer model of a file system layer and an application layer, and application data such as video data is recorded in the file system layer with the file system information at the top.

- The file system is UDF, ISO9660, or the like. Logical data recorded on the disc can be read out using a directory and file structure in the same way as on a normal PC, and file names and directory names of up to 255 characters can be read out.

- the disk root certificate file (app.discroot.cert) exists under the CERTIFICATE directory.

- app.discroot.cert is a digital certificate used in the verification process (hereinafter referred to as signature verification) performed when executing a Java (registered trademark) application, which carries out dynamic scenario control using a Java (registered trademark) virtual machine.

- the BDMV directory is a directory in which data such as AV contents and management information handled by the BD-ROM 100 is recorded.

- Under the BDMV directory there are a PLAYLIST directory, a CLIPINF directory, a STREAM directory, a BDJO directory, a JAR directory, a META directory, and so on.

- The STREAM directory stores the files that form the main body of the transport stream; a file with the extension m2ts (00001.m2ts) exists there.

- the CLIPINF directory contains a file (00001.clpi) with the extension clpi.

- the BDJO directory contains a file (XXXXX.bdjo) with the extension bdjo.

- the JAR directory contains a file (YYYYY.jar) with the extension jar.

- the XML file (ZZZZZ.xml) exists in the META directory.
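The directory-to-extension pairing listed above can be captured in a small lookup table. The following Python sketch is illustrative only: the `BDMV_LAYOUT` name and the `is_expected_location` helper are hypothetical, and the table contains only the pairings stated in the text (it deliberately omits directories whose file extension is not given here).

```python
from pathlib import PurePosixPath

# Directory under BDMV -> file extension stated in the description above.
BDMV_LAYOUT = {
    "STREAM": "m2ts",
    "CLIPINF": "clpi",
    "BDJO": "bdjo",
    "JAR": "jar",
    "META": "xml",
}


def is_expected_location(path: str) -> bool:
    """Check that a file sits in the BDMV subdirectory matching its extension."""
    p = PurePosixPath(path)
    parts = p.parts
    # Expect .../BDMV/<directory>/<file>
    if len(parts) < 3 or parts[-3] != "BDMV":
        return False
    directory = parts[-2]
    extension = p.suffix.lstrip(".").lower()
    return BDMV_LAYOUT.get(directory) == extension
```

For example, `is_expected_location("BDMV/STREAM/00001.m2ts")` would be true, while a `.clpi` file placed under `STREAM` would be rejected.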

- a file with the extension m2ts is a digital AV stream in the MPEG-TS (TransportStream) format, and is obtained by multiplexing a video stream, one or more audio streams, and a graphics stream.

- the video stream indicates the moving image portion of the movie

- the audio stream indicates the audio portion of the movie.

- a transport stream including only a 2D stream is referred to as a “2D stream”, and a transport stream including a 3D stream is referred to as a “3D stream”.

- both left-eye data and right-eye data can be put in m2ts, and m2ts can be prepared separately for the left eye and the right eye.

- A codec in which the left-view video stream and the right-view video stream cross-reference each other (for example, MPEG-4 AVC MVC) is used.

- A video stream compression-encoded with such a codec is referred to as an MVC video stream.

- a file with the extension “mpls” is a file storing PlayList (PL) information.

- the playlist information is information that defines a playlist with reference to an AV clip.

- the BD-ROM 100 has a dimension identification flag for identifying whether the stream to be played is for 2D or 3D.

- the dimension identification flag is embedded in the playlist (PL) information.

- Playlist information includes MainPath information, Subpath information, and PlayListMark information.

- MainPath information is information that defines a logical playback section by defining one or more combinations of a time point that becomes In_Time and a time point that becomes Out_Time on the playback time axis of the AV stream.

- The stream number table (STN_table) defines which of the multiplexed elementary streams are permitted for playback and which are not.

- the PlayListMark information includes designation of a time point to be a chapter among a part of the AV stream designated by the combination of In_Time information and Out_Time information.

- Subpath information is composed of one or more pieces of SubPlayItem information.

- SubPlayItem information includes designation of an elementary stream to be played back in synchronization with the AV stream, and a set of In_Time information and Out_Time information on the playback time axis of that elementary stream.
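The structure of the playlist information described above can be pictured as a few nested records. The Python sketch below is a simplified model, not the on-disc format: the class names `PlaybackSection` and `PlaylistInfo`, the field names, and the use of plain integers as time values are assumptions made for illustration. `SubPlayItem`, In_Time, Out_Time, and the dimension identification flag come from the text.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PlaybackSection:
    """One In_Time/Out_Time pair on the AV stream's playback time axis."""
    in_time: int
    out_time: int


@dataclass
class SubPlayItem:
    """An elementary stream played in sync with the AV stream."""
    elementary_stream: str
    in_time: int
    out_time: int


@dataclass
class PlaylistInfo:
    is_3d: bool                                # dimension identification flag
    main_path: List[PlaybackSection]           # MainPath information
    sub_paths: List[List[SubPlayItem]] = field(default_factory=list)
    marks: List[int] = field(default_factory=list)  # PlayListMark time points

    def duration(self) -> int:
        """Total length of all logical playback sections."""
        return sum(s.out_time - s.in_time for s in self.main_path)

    def marks_in_sections(self) -> List[int]:
        """Chapter marks that fall inside some In_Time/Out_Time section."""
        return [
            m for m in self.marks
            if any(s.in_time <= m <= s.out_time for s in self.main_path)
        ]
```

A playlist with sections (0, 100) and (200, 260) would have a duration of 160 in this model, and a mark at 150 would be filtered out because it lies outside both sections.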

- AV playback can be started when a Java (TM) application for playback control instructs the Java (TM) virtual machine to generate a JMF player instance that plays back this playlist information.

- A JMF (Java Media Framework) player instance is actual data generated on the heap memory of the virtual machine based on a JMF player class.

- a 2D playlist includes only a 2D playback stream

- a 3D playlist includes a 3D stereoscopic stream in addition to a 2D stream.

- A file with the extension “clpi” is Clip information corresponding one-to-one to an AV clip. Being management information, the Clip information holds information such as the stream encoding format, frame rate, bit rate, and resolution of the AV clip, together with an EP_map indicating GOP head positions.

- the above Clip information and playlist information are classified as “static scenarios”.

- a file with the extension BDJO is a file storing a BD-J object.

- the BD-J object is information that defines a title by associating an AV clip string defined by playlist information with an application.

- the BD-J object indicates “application management table” and “reference value for playlist information”. “Reference value for play list information” indicates play list information to be reproduced simultaneously with the start of the title.

- the application management table is a list of information designating applications having this title as a life cycle.

- a character string indicating the name of the application and an icon locator indicating the location of the icon associated with the application are stored for each application.

- the icon locator indicates an icon included in the Java (registered trademark) archive file by an address.

- the Java (registered trademark) application is actually the Java (registered trademark) archive file (YYYYY.jar) stored in the JAR directory under the BDMV directory in FIG.

- the application is, for example, a Java (registered trademark) application, and includes one or more xlet programs loaded in the heap area (also called work memory) of the virtual machine.

- Application signaling is performed according to the application management table in the BD-J object, and the life cycle is managed, so it is called a BD-J application.

- The BD-J application is intended to improve interactivity. To let the BD-J application operate, the platform of the playback device defines APIs through which a scaling instruction can be issued, taking the scaling size (hereinafter referred to as the scaling factor) as input information.

- the timing of issuing the scaling instruction is arbitrary, and it may be issued during playback of the video stream, or at other timings.

- the metafile (ZZZZZ.xml) stored in the META directory stores various information related to video works on the disc.

- Information stored in the metafile includes the disc name and image of the disc, information on who created the disc, the title name associated with each title, and the like.

- The metafile is not an essential file, and some BD-ROMs do not store it. This completes the description of the BD-ROM 100.

- FIG. 3 is a diagram illustrating an example of an internal configuration of a BD-J object.

- the BD-J object is composed of an “application management table”, a “GUI management table”, and a “playlist management table”.

- a lead line bj1 shows a close-up of the internal configuration of the application management table.

- the application management table includes an “application identifier” that identifies an application to be operated when a title corresponding to the BD-J object becomes the current title, and a “control code”.

- Depending on its value, the control code indicates either that the application is to be loaded into the heap memory and then started automatically, or that the application is to be loaded into the heap memory and then started only after waiting for a call from another application.
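
- As a rough illustration of the signaling described above, the following sketch models an application management table entry as an application identifier plus a control code, and selects the applications that start when a title becomes current. The names `ControlCode`, `AUTO_START`, `WAIT_FOR_CALL`, `AmtEntry`, and `apps_to_start` are hypothetical and are not taken from this description or from any BD-J API.

```python
from dataclasses import dataclass
from enum import Enum


class ControlCode(Enum):
    """Hypothetical labels for the two behaviours of the control code."""
    AUTO_START = "auto_start"        # load into heap memory, then start automatically
    WAIT_FOR_CALL = "wait_for_call"  # load into heap memory, then wait for a call


@dataclass(frozen=True)
class AmtEntry:
    application_id: str
    control_code: ControlCode


def apps_to_start(table):
    """Return identifiers of applications that start automatically when the
    title owning this table becomes the current title."""
    return [entry.application_id for entry in table
            if entry.control_code is ControlCode.AUTO_START]


table = [
    AmtEntry("app-menu", ControlCode.AUTO_START),
    AmtEntry("app-helper", ControlCode.WAIT_FOR_CALL),
]
started = apps_to_start(table)
```

- In this sketch only `app-menu` is selected; `app-helper` stays loaded until some other application calls it.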

- The GUI management table is a management table used when a running application presents a GUI. It contains the resolution for GUI display, the font data used for the GUI, and a mask flag that specifies whether GUI menu calls and title calls made by the user are to be masked.

- a lead line bj2 shows a close-up of the internal configuration of the GUI management table. As shown by the lead line bj2, the GUI management table can be set to one of HD3D_1920×1080, HD3D_1280×720, HD_1920×1080, HD_1280×720, QHD960×540, SD, SD_50HZ_720×576, and SD_60HZ_720×480.

- Playlist management table includes designation of a playlist to be automatically played when the title corresponding to the BD-J object becomes the current title.

- a lead line bj4 shows a close-up of the internal structure of the automatic playback playlist. As shown by the lead line bj4, the automatic playback playlist can be specified as a 3D playlist 1920×1080, 3D playlist 1280×720, 2D playlist 1920×1080, 2D playlist 1280×720, 2D playlist 720×576, or 2D playlist 720×480.

- FIG. 4 is a diagram showing an example of the internal configuration of the playback apparatus.

- The playback apparatus includes a BD drive 1a, network interface 1b, local storage 1c, read buffers 2a and 2b, virtual file system 3, demultiplexer 4, video decoders 5a and 5b, video plane 6, image decoders 7a and 7b, image memories 7c and 7d, image plane 8, audio decoder 9, interactive graphics plane 10, background plane 11, register set 12, static scenario memory 13, playback control engine 14, scaling engine 15, composition unit 16, HDMI transmission / reception unit 17, display function flag holding unit 18, left-right processing storage unit 19, plane shift engine 20, offset setting unit 21, BD-J platform 22, rendering engine 22a, dynamic scenario memory 23, mode management module 24, HDMV module 25, UO detection module 26, still image memory 27a, still image decoder 27b, display mode setting initial display setting unit 28, and display mode storage unit 29.

- The BD-ROM 100 stores data having the file structure shown in FIG. 2. From a virtual BD-ROM (virtual package) described later, a left-eye video stream, a right-eye video stream, a caption stream, and a graphics stream can be read out via the virtual file system 3.

- As for the subtitle stream and the graphics stream, separate streams for the left eye and the right eye may be recorded on the BD-ROM 100, or a single subtitle stream and a single graphics stream may be shared between left and right.

- The subtitles and graphics seen through the liquid crystal glasses 500 are two-dimensional images, but they can be made to appear at a position in front of the display screen or behind it.

- Each of the left-eye video stream and the right-eye video stream recorded on the BD-ROM 100 is input to the playback device 200 and played back.

- the data for reproducing such a video is recorded in the BD-ROM 100 in advance as a video stream.

- The right-eye video stream, the left-eye video stream, the subtitle stream, and the graphics stream are embedded in one stream file in advance. This is to suppress as much as possible the amount of computation required of a device with limited memory and graphics resources (for example, a CE device).

- The BD drive 1a includes an optical head (not shown) having, for example, a semiconductor laser (not shown), a collimator lens (not shown), a beam splitter (not shown), an objective lens (not shown), a condenser lens (not shown), and a photodetector (not shown).

- the light beam emitted from the semiconductor laser passes through the collimator lens, the beam splitter, and the objective lens, and is condensed on the information surface of the optical disk.

- the condensed light beam is reflected / diffracted on the optical disk, and is collected on the photodetector through the objective lens, the beam splitter, and the condenser lens.

- A signal generated according to the amount of light collected by the photodetector corresponds to the data read from the BD-ROM.

- the network interface 1b is for communicating with the outside of the playback device, and can access a server accessible via the Internet or a server connected via a local network. For example, it can be used to download additional BD-ROM content published on the Internet, or data communication can be performed with a server on the Internet specified by the content, enabling content playback using the network function.

- The BD-ROM additional content is content that is not on the original BD-ROM 100 loaded in the BD drive 1a, and includes, for example, additional sub audio, subtitles, bonus video, and applications.

- the network interface 1b can be controlled from the BD-J platform, and additional content published on the Internet can be downloaded to the local storage 1c.

- the local storage 1c includes built-in media and removable media, and is used for storing downloaded additional content and data used by applications.

- the storage area for additional content is divided for each BD-ROM, and the area that an application can use to hold data is divided for each application.

- Merge management information, which contains merge rules describing how the downloaded additional content is merged with the data on the BD-ROM loaded in the BD drive 1a, is also stored in the built-in media and removable media.

- Built-in media is a writable recording medium, such as a hard disk drive or memory, built into the playback device.

- the removable media is, for example, a portable recording medium, and preferably a portable semiconductor memory card such as an SD card.

- The playback device has a slot (not shown) for attaching removable media and an interface (for example, a memory card I/F) for reading the removable media installed in the slot. When a semiconductor memory is installed in the slot, the removable media and the playback device are electrically connected, and the data recorded in the semiconductor memory can be converted into an electrical signal and read out using the interface (for example, the memory card I/F).

- The read buffer 2a is a buffer for temporarily storing the source packets that make up the extents of the left-view stream read from the BD drive 1a, adjusting the transfer speed, and transferring the packets to the demultiplexer 4.

- The read buffer 2b is a buffer for temporarily storing the source packets that make up the extents of the right-view stream read from the BD drive 1a, adjusting the transfer speed, and transferring the packets to the demultiplexer 4.

- The virtual file system 3 builds a virtual BD-ROM (virtual package) by merging the additional content stored in the local storage with the content on the loaded BD-ROM, based on, for example, the merge management information downloaded to the local storage 1c together with the additional content.

- the virtual file system 3 for building a virtual package has an application data association module for generating and updating application association information.

- the application data association information is information that associates local storage information with an application based on information on the BD-ROM disc and attribute information set by the application.

- the virtual package and the original BD-ROM can be referenced without distinction from the command interpreter, which is the main operating entity in HDMV mode, and the BD-J platform, which is the main operating entity in the BD-J mode.

- the playback device performs playback control using both data on the BD-ROM and data on the local storage.
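
- The merging performed by the virtual file system can be pictured with the minimal sketch below. It assumes, purely for illustration, that the merge management information is a mapping from a local storage path to a path in the virtual package, and that additional content may either add a new file or replace a file at the same virtual path. The function name, the dictionary layout, and the file names are hypothetical.

```python
def build_virtual_package(disc_files, local_files, merge_rules):
    """Merge disc content with downloaded additional content.

    disc_files:  {virtual path: data} for the loaded BD-ROM
    local_files: {local storage path: data} for downloaded additional content
    merge_rules: {local storage path: virtual path}; a stand-in for the merge
                 management information (this format is an assumption)
    """
    package = dict(disc_files)  # start from a copy of the original disc layout
    for local_path, virtual_path in merge_rules.items():
        if local_path in local_files:
            # Additional content is added at, or replaces, the virtual path.
            package[virtual_path] = local_files[local_path]
    return package


disc = {"BDMV/STREAM/00001.m2ts": "main feature"}
local = {"dl/sub_fr.m2ts": "additional subtitles"}
rules = {"dl/sub_fr.m2ts": "BDMV/STREAM/00002.m2ts"}
package = build_virtual_package(disc, local, rules)
```

- Because the result is a single mapping, a caller can look up a path without knowing whether the data came from the disc or from the local storage, which mirrors the statement that the virtual package and the original BD-ROM can be referenced without distinction.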

- The demultiplexer 4 is composed of, for example, a source packet depacketizer and a PID filter, and executes packet filtering based on the packet identifiers of the stream to be reproduced. The stream is one contained in the constructed virtual package, that is, the data on the loaded BD-ROM and the data on the local storage corresponding to the loaded BD-ROM.

- In packet filtering, the video stream corresponding to the display method flag is extracted from the left-view video stream and the right-view video stream based on the flag in the left / right processing storage unit 19, and is transferred to the video decoder 5a and the video decoder 5b.

- The demultiplexer 4 sorts the left-eye video frames and the right-eye video frames using the stream header information.

- When a stream separated from the reproduction target stream is a subtitle stream, the demultiplexer 4 writes the separated subtitle stream to the image memory. For example, when a 3D subtitle stream (a left-view subtitle stream and a right-view subtitle stream) is included in the stream, the left-view subtitle stream is written to the image memory 7c and the right-view subtitle stream is written to the image memory 7d.

- When the subtitle stream is a 2D subtitle stream (a subtitle stream used for flat display), the 2D subtitle stream is written to the image memory 7c.
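
- The routing of separated subtitle streams into the two image memories can be sketched as follows. The dictionary keys `left_view`, `right_view`, and `2d`, and the function name, are hypothetical labels introduced only for this example.

```python
def route_subtitle_streams(streams):
    """Map separated subtitle streams to the image memories.

    streams: dict holding some of the hypothetical keys
             "left_view", "right_view", "2d".
    Returns {"7c": ..., "7d": ...}, with None for a memory that is not used.
    """
    memories = {"7c": None, "7d": None}
    if "left_view" in streams and "right_view" in streams:
        # 3D subtitle stream: one view per image memory.
        memories["7c"] = streams["left_view"]
        memories["7d"] = streams["right_view"]
    elif "2d" in streams:
        # 2D subtitle stream: only image memory 7c is used.
        memories["7c"] = streams["2d"]
    return memories


routed_3d = route_subtitle_streams({"left_view": b"L", "right_view": b"R"})
routed_2d = route_subtitle_streams({"2d": b"flat"})
```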

- The video decoder 5a decodes the TS packets output from the demultiplexer 4 and writes uncompressed pictures to the left-view video plane 6 (indicated by the code (L) in the video plane 6 in FIG. 4).

- The video decoder 5b decodes the TS packets of the right-view video stream output from the demultiplexer 4 and writes uncompressed pictures to the right-view video plane 6 (indicated by the code (R) in the video plane 6 in FIG. 4).

- The video plane 6 is a plane memory that can store picture data corresponding to a resolution of, for example, 1920×2160 (1280×1440). It has a left-eye plane with a resolution of 1920×1080 (1280×720) (denoted by the symbol (L) in the video plane 6 in FIG. 4) and a right-eye plane with a resolution of 1920×1080 (1280×720) (denoted by the symbol (R) in the video plane 6 in FIG. 4).

- the image decoders 7a and 7b decode TS packets that are output from the demultiplexer 4 and are written in the image memories 7c and 7d, and write uncompressed graphics subtitles to the graphics plane 8a.

- The “subtitle stream” decoded by the image decoders 7a and 7b is data representing subtitles compressed by run-length encoding. It is defined by pixel codes, each indicating a Y value, a Cr value, a Cb value, and an α value, and by the run lengths of those pixel codes.
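
- To make the idea of pixel codes and run lengths concrete, the sketch below expands a deliberately simplified run-length representation, a list of (pixel code, run length) pairs, into pixels through a palette that maps each pixel code to Y, Cr, Cb, and α values. This is not the actual on-disc bitstream syntax; the pair format, the palette contents, and the function name are assumptions made for illustration.

```python
def decode_run_length(runs, palette):
    """Expand (pixel_code, run_length) pairs into a flat list of
    (Y, Cr, Cb, alpha) tuples using a pixel-code palette."""
    pixels = []
    for pixel_code, run_length in runs:
        pixels.extend([palette[pixel_code]] * run_length)
    return pixels


# Hypothetical two-entry palette: pixel code -> (Y, Cr, Cb, alpha)
palette = {
    0: (16, 128, 128, 0),     # fully transparent
    1: (235, 128, 128, 255),  # opaque white
}
line = decode_run_length([(0, 3), (1, 2), (0, 1)], palette)
```

- Here three pairs describe a six-pixel line, which is why long transparent or single-colour stretches of a subtitle bitmap compress well.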

- The image plane 8 is a graphics plane that can store graphics data (for example, caption data) obtained by decoding a caption stream at a resolution of, for example, 1920×1080 (1280×720). It has a left-eye plane (indicated by the symbol (L) in the image plane 8 shown in FIG. 4) with a storage area capable of storing data at a resolution of 1920×1080 (1280×720), and a right-eye plane (indicated by reference numeral (R) in the image plane 8 shown in FIG. 4) with a storage area capable of storing data at a resolution of 1920×1080 (1280×720).

- the audio decoder 9 decodes the audio frame output from the demultiplexer 4 and outputs uncompressed audio data.

- The interactive graphics plane 10 is a graphics plane having a storage area in which graphics data drawn by the BD-J application using the rendering engine 22a can be stored at a resolution of, for example, 1920×1080 (1280×720). It has a left-eye plane (indicated by reference numeral (L) in the interactive graphics plane 10 of FIG. 4) and a right-eye plane (indicated by reference numeral (R) in the interactive graphics plane 10 of FIG. 4), each with a storage area capable of storing data at a resolution of 1920×1080 (1280×720).

- “Graphics data” stored in the interactive graphics plane 10 is graphics in which each pixel is defined by an R value, a G value, a B value, and an α value. Graphics written to the interactive graphics plane 10 are images and widgets used mainly for configuring a GUI. Although the data representing a pixel differs, both image data and graphics data are covered by the term graphics data.

- There are two types of graphics planes that are the subject of the present application: the image plane 8 and the interactive graphics plane 10. The term “graphics plane” used alone refers to either or both of the image plane 8 and the interactive graphics plane 10.

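- The background plane 11 is a plane memory that can store still image data serving as a background image at a resolution of, for example, 1920×1080 (1280×720). Specifically, it has a left-eye plane (indicated by the symbol (L) in the background plane 11 shown in FIG. 4) and a right-eye plane (indicated by the symbol (R) in the background plane 11 shown in FIG. 4), each with a resolution of 1920×1080 (1280×720).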

- The register set 12 is a collection of registers: a playback state register that stores the playback state of the playlist, a playback setting register that stores configuration information indicating the configuration of the playback device, and a general-purpose register that can store arbitrary information used by the content.

- the reproduction state of the playlist indicates a state such as which AV data is used in various AV data information described in the playlist and which position (time) of the playlist is being reproduced.

- The playback control engine 14 stores this playback state in the PSR set 12. In addition, in accordance with an instruction from the command interpreter, which is the operating entity of the HDMV mode, or from the Java platform, which is the operating entity of the BD-J mode, a value specified by an application can be stored, and a stored value can be passed to the application.

- the static scenario memory 13 is a memory for storing current playlist information and current clip information.

- Current playlist information refers to information that is currently processed among multiple playlist information that can be accessed from a BD-ROM, a built-in media drive, or a removable media drive.

- Current clip information refers to information that is currently processed among a plurality of clip information that can be accessed from a BD-ROM, a built-in media drive, or a removable media drive.

- The playback control engine 14 executes an AV playback function and a playlist playback function in response to a function call from the command interpreter, which is the operating entity of the HDMV mode, or from the Java platform, which is the operating entity of the BD-J mode.

- The AV playback function is a group of functions inherited from DVD players and CD players. It covers processing such as playback start, playback stop, pause, release of pause, release of the still image function, fast forward with the playback speed specified as an immediate value, rewind with the playback speed specified as an immediate value, audio switching, sub-video switching, and angle switching.

- the playlist playback function refers to performing playback start and playback stop in accordance with current playlist information and current clip information constituting the current playlist in the AV playback function.

- The playlist and AV stream subject to playback processing by the playback control engine 14 are those of the automatic playback playlist (AutoStartPlaylist) described in the current scenario on the BD-ROM.

- AV stream playback may be triggered by a user operation (for example, a playback button) or automatically triggered by some event in the terminal.

- The scaling engine 15 can perform reduction, enlargement, and equal-magnification control of the video on the image plane 8 and the video plane 6. If a value is set in the plane shift engine 20 when image data or picture data is decoded, the scaling engine 15 regards scaling as having occurred, and scaling is performed through the scaling engine 15 before the decoded video data is stored in the video plane and before the decoded graphics are stored in the image plane.

- The scaling factor is, for example, a magnification of the number of horizontal pixels and/or a magnification of the number of vertical pixels. For example, when a scaling factor of “1/2” is specified for graphics data whose basic resolution is 1920×1080 pixels, the resolution of the graphics data is reduced to (1920×0.5)×(1080×0.5) pixels, that is, 960×540 pixels.
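
- The arithmetic in this example can be reproduced with the short sketch below, which applies a single scaling factor to both the horizontal and the vertical pixel count. Using `Fraction` keeps factors such as 1/2 exact. The function name is hypothetical, and truncating a non-integer result toward zero is an assumption, since the description does not say how fractional pixel counts are handled.

```python
from fractions import Fraction


def scaled_resolution(width, height, factor):
    """Apply one scaling factor to the horizontal and vertical pixel counts."""
    factor = Fraction(factor)
    if factor <= 0:
        raise ValueError("scaling factor must be positive")
    # int() truncates toward zero; this rounding rule is an assumption.
    return int(width * factor), int(height * factor)


reduced = scaled_resolution(1920, 1080, Fraction(1, 2))
enlarged = scaled_resolution(1280, 720, 2)
```

- A factor below 1 reduces the graphics data, as in the 960×540 case, while a factor above 1 enlarges it.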

- The scaling factor can be set not only to a value of 1 or less, such as 1/2, but also to a value of 1 or more, in which case enlargement processing is performed.

- (Synthesis unit 16)

- the combining unit 16 combines the stored contents of the interactive graphics plane 10, the image plane 8, the video plane 6, and the background plane 11.

- The interactive graphics plane 10, the image plane 8, the video plane 6, and the background plane 11 form separate layers, and the stored data is always combined (superimposed) in the order of the background plane 11, the video plane 6, the image plane 8, and the interactive graphics plane 10 from the bottom. Assuming playback of content that displays subtitles as image plane 8 data and a POP-UP menu (graphics) and GUI graphics data as interactive graphics plane 10 data, the synthesizing unit always superimposes the image plane 8 data (captions) on the video plane 6 data (video), and superimposes the interactive graphics plane 10 data on the image plane 8.
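
- The fixed bottom-to-top layer order can be illustrated with the sketch below. It blends each pixel position with ordinary alpha blending, which is an assumption: the description states only that the planes are superimposed in this order and does not give the blending formula. Planes are modelled as flat lists of (r, g, b, alpha) tuples with alpha between 0.0 and 1.0, and all names are hypothetical.

```python
def composite_pixel(layers):
    """Blend one pixel position across layers given bottom-to-top.
    Each layer pixel is (r, g, b, alpha) with alpha in [0.0, 1.0]."""
    r, g, b = 0.0, 0.0, 0.0
    for lr, lg, lb, la in layers:
        r = lr * la + r * (1.0 - la)
        g = lg * la + g * (1.0 - la)
        b = lb * la + b * (1.0 - la)
    return (round(r), round(g), round(b))


def composite_planes(background, video, image, interactive):
    """Superimpose four equally sized planes in the fixed order
    background -> video -> image (subtitles) -> interactive graphics."""
    return [composite_pixel(px)
            for px in zip(background, video, image, interactive)]


background = [(0, 0, 255, 1.0), (0, 0, 255, 1.0)]
video = [(100, 100, 100, 1.0), (100, 100, 100, 1.0)]
image = [(255, 255, 255, 1.0), (0, 0, 0, 0.0)]     # subtitle on pixel 0 only
interactive = [(0, 0, 0, 0.0), (200, 0, 0, 0.5)]   # translucent menu on pixel 1
frame = composite_planes(background, video, image, interactive)
```

- In the first pixel the opaque subtitle hides the video beneath it; in the second pixel the half-transparent menu is mixed with the video, because the interactive graphics layer is applied last.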

- The HDMI transmission / reception unit 17 includes, for example, an interface conforming to the HDMI standard (HDMI: High Definition Multimedia Interface), and performs transmission and reception in conformance with the HDMI standard with the device that is HDMI-connected to the playback apparatus (in this example, the television 400).

- The picture data stored in the video plane and the uncompressed audio data decoded by the audio decoder 9 are transmitted to the television 400 via the HDMI transmission / reception unit 17.

- The television 400 holds, for example, information on whether it supports stereoscopic display, information on the resolutions at which planar display is possible, and information on the resolutions at which stereoscopic display is possible. When there is a request from the playback device via the HDMI transmission / reception unit 17, the television 400 returns the requested information (for example, information on whether stereoscopic display is supported, information on the resolutions at which planar display is possible, and information on the resolutions at which stereoscopic display is possible) to the playback device.

- In this way, information on whether the television 400 supports stereoscopic display can be acquired from the television 400 via the HDMI transmission / reception unit 17.

- the display function flag storage unit 18 stores a 3D display function flag indicating whether or not the playback apparatus can display 3D.

- the left-right process storage unit 19 stores whether the current output process is a left-view output or a right-view output.

- the flag in the left / right processing storage unit 19 indicates whether the output to the display device (the television in the example of FIG. 1) connected to the playback apparatus shown in FIG. 1 is a left view output or a right view output. While the left view is output, the flag in the left / right processing storage unit 19 is set to a flag indicating the left view output. During the right view output, the flag in the left / right processing storage unit 19 is set to a flag indicating the right view output.

- The plane shift engine 20 also has an area for storing a plane offset. After determining from the left-right processing storage unit 19 whether the current processing target is a left-eye image or a right-eye image, the plane shift engine 20 uses the stored plane offset to calculate the shift amount along the horizontal axis of the image plane (an amount indicating how far the image displayed on the display screen is shifted from the reference position in the horizontal direction of the display screen) and performs the shift. By adjusting the shift amount of the displayed subtitles (graphics), the planar subtitles (graphics) seen through the liquid crystal glasses 500 can be made to appear in front of or behind the position of the display screen. The shift amount is an amount for adjusting how far in front of or behind the position of the display screen they appear to be located.
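
- A minimal sketch of such a horizontal plane shift is given below. It treats the plane offset directly as a pixel count and assumes a sign convention in which a positive offset moves the left-eye image to the right and the right-eye image to the left; both points are assumptions made for illustration, as are the names and the use of 0 as a transparent fill value.

```python
TRANSPARENT = 0  # hypothetical pixel value used to fill vacated positions


def shift_row(row, shift):
    """Shift one row of pixels horizontally. A positive shift moves pixels
    right and a negative shift moves them left; pixels pushed off the edge
    are dropped and vacated positions become transparent."""
    width = len(row)
    if shift == 0:
        return list(row)
    if abs(shift) >= width:
        return [TRANSPARENT] * width
    if shift > 0:
        return [TRANSPARENT] * shift + list(row[:width - shift])
    return list(row[-shift:]) + [TRANSPARENT] * (-shift)


def plane_shift(plane, plane_offset, is_left_view):
    """Shift every row of a plane; the two views move in opposite directions.
    Assumed convention: a positive offset moves the left view right and the
    right view left."""
    shift = plane_offset if is_left_view else -plane_offset
    return [shift_row(row, shift) for row in plane]


plane = [[1, 2, 3, 4, 5]]
left_output = plane_shift(plane, 2, is_left_view=True)
right_output = plane_shift(plane, 2, is_left_view=False)
```

- The same plane content thus reaches the two eyes at horizontally displaced positions, and the size and sign of the displacement determine how far in front of or behind the screen the subtitles appear.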

- the depth is changed by changing the shift width of the horizontal axis of subtitles / graphics.

- A visual effect is obtained whereby the farther apart the left-eye caption and the right-eye caption are displaced in one direction, the nearer to the front they appear, and the farther apart they are displaced in the opposite direction, the deeper they appear.

- However, the displacement of the image plane may become too large, so that the image looks double instead of stereoscopic.

- In that case, the resolution and size information of the display is combined with the value described in the plane offset, and adjustment is performed so that subtitles and graphics are not displayed too far in front.

- the plane shift engine 20 stores the value set using the setup function.

- When there is an offset update request, the offset setting unit 21 sets the offset to be updated in an offset value storage unit 41 of the plane shift engine 20 described later.

- Specifically, the setting is made by operations such as the following: (a) reading and setting the image plane setting offset value and the interactive graphics plane setting offset value stored in the display mode storage unit 29 described later; (b) having the demultiplexer 4 acquire the offset values of the image plane and the interactive graphics plane held in the header area of the stream input to the demultiplexer 4, and setting the offset values obtained from the demultiplexer 4; (c) reading and setting the image plane offset value and the interactive graphics plane offset value sent from the UO detection module 26; and (d) reading and setting the image plane offset value and the interactive graphics plane offset value included in the current playlist information.

- The plane offset is an integer that represents depth in the range −63 to 63 (63 is the foremost and −63 is the farthest), and is converted into pixel coordinates indicating the final shift width.

- The BD-J platform 22 is the Java platform that is the operating entity of the BD-J mode. It fully implements Java 2 Micro Edition (J2ME) Personal Basis Profile (PBP 1.0) and the Globally Executable MHP specification (GEM 1.0.2) for package media targets. It starts the BD-J application by reading the bytecode from the class files in the JAR archive file and storing it in the heap memory. The bytecode constituting the BD-J application and the bytecode constituting the system application are then converted into native code and executed by the MPU.

- When scaling is requested from the BD-J application, the BD-J platform 22 stores the scaling factor given as an argument in the scaling factor storage unit 42 of the plane shift engine 20 shown in FIG. 21 described later.

- (Rendering engine 22a)

- the rendering engine 22a includes basic software such as Java2D and OPEN-GL, and writes graphics and character strings to the interactive graphics plane 10 in accordance with instructions from the BD-J platform 22 in the BD-J mode.

- In the HDMV mode, the rendering engine 22a renders graphics data extracted from a graphics stream other than the stream corresponding to captions (the caption stream), for example graphics data corresponding to an input button, and writes it to the interactive graphics plane 10.

- The dynamic scenario memory 23 is a memory that stores the current dynamic scenario and is used for processing by the HDMV module, which is the operating entity of the HDMV mode, and the Java platform, which is the operating entity of the BD-J mode.

- the current dynamic scenario refers to an index.bdmv, BD-J object, or movie object that is currently being executed among BD-ROM, built-in media, and removable media.

- the mode management module 24 holds Index.bdmv read from the BD-ROM 100 or the local storage 1c (in the example of FIG. 4, a built-in media drive or a removable media drive), and performs mode management and branch control.

- the mode management by the mode management module 24 is a module assignment that determines which of the BD-J platform 22 and the HDMV module 25 is to execute a dynamic scenario.

- the HDMV module 25 is a DVD virtual player that is an operation subject in the HDMV mode, and an execution subject in the HDMV mode.

- This module has a command interpreter and executes HDMV mode control by decoding and executing navigation commands constituting the movie object. Since navigation commands are described in a syntax similar to DVD-Video, DVD-Video-like playback control can be realized by executing such navigation commands.

- the UO detection module 26 receives a user operation for the GUI.

- User operations accepted through the GUI include title selection (which of the titles recorded on the BD-ROM is selected), subtitle selection, and audio selection.

- A level of depth perception for the stereoscopic image may also be received. For example, the sense of depth may be chosen from three levels such as far, normal, and near, or it may be accepted as a numerical input such as how many centimeters or millimeters of depth are desired.

- When the UO detection module 26 receives a command for changing the scaling of the image plane through operation of a button on the remote controller or on the device, a module in the device issues the scaling command directly.

- the still image memory 27a stores still image data serving as a background image extracted from the BD-ROM or the constructed virtual package.

- The still image decoder 27b decodes the still image data read into the still image memory 27a and writes uncompressed background image data to the background plane 11.

- the display mode setting initial display setting unit 28 sets the display mode and resolution based on the BD-J object in the current title provided to the BD-J platform unit.

- the display mode storage unit 29 stores whether the display mode is 2D or 3D, and whether the stereo mode is ON or OFF.

- When the 3D display function flag of the playback device is set to indicate that 3D display is possible, the display mode, which is a terminal setting stored in the display mode storage unit 29, can be switched to either 2D or 3D.

- Hereinafter, a state where the display mode is indicated as “3D” is referred to as the “3D display mode”, and a state where the display mode is indicated as “2D” is referred to as the “2D display mode”.

- each plane takes either a stereo mode ON state or a stereo mode OFF state.

- the difference between ON and OFF of the stereo mode is also the difference in the method of combining the planes.

- Stereo mode ON is a 3D display mode in which the playback device performs composition using two videos with different views, one for each view (for example, a left-view video and a right-view video whose viewing angles differ by the degree of parallax).

- Stereo mode OFF is a 3D display mode in which the playback device performs composition using a single video for both the left eye and the right eye (for example, either the left-view video or the right-view video; an example using the left-view video is described here). In other words, when viewed with both eyes, the image has no stereoscopic effect (it is a planar image).

- However, planar graphics data (caption data) stored in and displayed from the graphics plane can be made to appear in front of or behind the display screen by a horizontal shift according to the plane offset.

- The “3D display mode” has two modes, “stereo mode ON” and “stereo mode OFF”.

- With “stereo mode ON” in the “3D display mode”, left-view data and right-view data (for example, an image seen from the left eye and an image seen from the right eye at different angles) are stored in the left-view plane and the right-view plane, respectively, and the stored images are displayed in accordance with synchronization signals.

- With “stereo mode OFF” in the “3D display mode”, only one of the left-view data and the right-view data (for example, the left-view data in this embodiment) is used. By storing it in both the left-view plane and the right-view plane and adjusting the plane offset, a planar image can be displayed so that it appears in front of or behind the display screen.
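
- The difference between the two stereo modes comes down to which view is stored in each plane. The sketch below states this selection as a function; the function name and the string placeholders for the view data are hypothetical.

```python
def plane_contents(stereo_mode_on, left_view, right_view):
    """Return (left-view plane content, right-view plane content).

    Stereo mode ON:  each plane receives its own view.
    Stereo mode OFF: both planes receive the same view (the left view here),
                     so any depth comes only from the plane offset shift.
    """
    if stereo_mode_on:
        return left_view, right_view
    return left_view, left_view


stereo_on = plane_contents(True, "left-view data", "right-view data")
stereo_off = plane_contents(False, "left-view data", "right-view data")
```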

- In this embodiment, “stereo mode ON” and “stereo mode OFF” can be set for each plane (that is, for each of the video plane 6, graphics plane 8, interactive graphics plane 10, and background plane 11).

- the “2D display mode” is a normal display, that is, an image corresponding to the position of the display screen is displayed.

- In the 2D display mode, the decoders and planes to be used by default are determined in advance, and a composite image is displayed using them.

- For example, the following are composited: the 2D video data written by the video decoder 5a to the left-eye video plane (indicated by the symbol (L) in the video plane 6 shown in FIG. 4); the 2D graphics data (caption data) written by the image decoder 7a to the left-eye plane (denoted by the symbol (L) in the image plane 8 shown in FIG. 4); the 2D interactive graphics written by the BD-J application using the rendering engine 22a to the left-eye plane (the one with the symbol (L) in the interactive graphics plane 10 shown in FIG. 4); and the 2D still image data written by the still image decoder 27b to the left-eye plane (the one with the symbol (L) in the background plane 11 shown in FIG. 4).

- Composition is performed in the order of 2D still image data, 2D video data, 2D graphics data (caption data), and 2D interactive graphics, from the bottom.

- the display mode setting initial display setting unit 28 sets the display mode and resolution based on the BD-J object in the current title provided to the BD-J platform unit.

- In the 3D display mode with stereo mode ON, the video decoder 5a decodes the left-view video stream and writes it to the left-eye plane (indicated by the code (L) in the video plane 6 shown in FIG. 5), and the video decoder 5b decodes the right-view video stream and writes it to the right-eye plane (indicated by the code (R) in the video plane 6 shown in FIG. 5).

- In the 3D display mode with stereo mode OFF, the video decoder 5a decodes, for example, the left-view video stream and writes it to both the left-eye plane (indicated by the code (L) in the video plane 6 shown in FIG. 5) and the right-eye plane (denoted by the symbol (R) in the video plane 6 shown in FIG. 5).

- In the 2D display mode, the demultiplexer 4 sends the 2D video stream to the video decoder 5a, and the video decoder 5a writes the decoded 2D video data to the left-eye video plane (indicated by the code (L) in the video plane 6).

- In the 3D display mode with stereo mode ON, the image decoder 7a decodes the left-view subtitle stream stored in the image memory 7c and writes it to the left-eye plane (indicated by the symbol (L) in the image plane 8 shown in FIG. 5), and the image decoder 7b decodes the right-view subtitle stream stored in the image memory 7d and writes it to the right-eye plane (indicated by the reference (R) in the image plane 8 shown in FIG. 5).

- In the 3D display mode with stereo mode OFF, the image decoder 7a decodes the left-view subtitle stream stored in the image memory 7c and writes it to both the left-eye plane (denoted by the symbol (L) in the image plane 8 shown in FIG. 5) and the right-eye plane (denoted by the symbol (R) in the image plane 8 shown in FIG. 5).

- In this example the left-view subtitle stream is decoded and written to the left-eye plane and the right-eye plane. However, if the recording medium holds a single subtitle stream shared between left and right, the shared subtitle stream may be read and written to the left-eye image plane and the right-eye image plane.

- The demultiplexer 4 is configured to store the 2D subtitle stream in the image memory 7c, and the image decoder 7a decodes the 2D subtitle stream stored in the image memory 7c.

- The decoded 2D subtitle stream is written to the left-eye plane (denoted (L) in the image plane 8 shown in FIG. 5).

- The left-eye interactive graphics and the right-eye interactive graphics drawn by this drawing program are rendered from different viewing angles so that together they appear three-dimensional.

- The BD-J application uses the rendering engine 22a to write the left-view interactive graphics to the left-eye plane (denoted (L) in the interactive graphics plane 10 of FIG. 4).

- The right-view interactive graphics are written to the right-eye plane (denoted (R) in the interactive graphics plane 10 shown in FIG. 4).

- The BD-J application uses the rendering engine 22a to write the left-view interactive graphics to both the plane denoted (L) and the plane denoted (R) of the interactive graphics plane 10.

- The BD-J application is configured to use the rendering engine 22a to write the 2D interactive graphics to the interactive graphics plane 10 (more specifically, to the plane denoted (L) of the interactive graphics plane 10).

- The still image decoder 27b decodes the left-view still image data and the right-view still image data stored in the still image memory 27a.

- The left-view still image data is written to the left-eye plane (denoted (L) in the background plane 11 shown in FIG. 4), and the right-view still image data is written to the right-eye plane (denoted (R) in the background plane 11 shown in FIG. 4).

- When the background image display mode is, for example, the 3D display mode and the stereo mode is off, only the left-view still image data of the 3D background image (left-view still image data and right-view still image data) stored in the still image memory 27a is used.

- The still image decoder 27b decodes the left-view still image data and writes it to both the left-eye plane (denoted (L) in the background plane 11 shown in FIG. 4) and the right-eye plane (FIG. 4).

- When the background image display mode is, for example, the 2D display mode, the still image decoder 27b decodes the 2D still image data stored in the still image memory 27a and writes it to the left-eye plane (denoted (L) in the background plane 11 shown in FIG. 4).

- FIG. 5 is a diagram showing switching between the 2D display mode and the 3D display mode.

- the output model in the 2D display mode is shown on the left side of the figure.

- In this model, there is one each of the video plane 6, the image plane 8 (“Subtitle” in the figure), the interactive graphics plane 10, the background plane 11, and the output.

- The left view and the right view share these planes, so the same output is seen by both eyes.

- the output model in 3D display mode is displayed on the right side of this figure.

- The video plane 6, the image plane 8 (“Subtitle” in the figure), and the interactive graphics plane 10 are each divided into a left-view part and a right-view part.

- Each part stores the picture data or graphics data to be reproduced.

- Because the left-view and right-view outputs exist separately, different images can be presented to the left eye and the right eye, and the resulting parallax produces a 3D effect in which a three-dimensional object on the screen appears to pop out toward the viewer.

- FIG. 6 is a diagram illustrating an example of the synthesis process in the 3D display mode, both when the stereo mode of every plane is on and when the stereo mode of every plane is off.

- FIG. 6 shows an example in which all planes use the same stereo mode setting. However, the stereo mode can be switched ON or OFF for each plane individually.

- the left side shows the plane configuration when all stereo modes of each plane are on in the 3D display mode

- the right side shows the plane configuration when all stereo modes of each plane are off in the 3D display mode.

- the first row shows the background plane 11 and the output before synthesis.

- the second row shows the video stream, the video plane 6, and the output before synthesis.

- the third row shows the image plane 8 and the output before synthesis.

- the fourth row shows the interactive graphics plane 10 and the output before synthesis.

- In the background plane, the left-view background data is written to the left-eye background plane, shown as the area marked (L).

- The right-view background data is written to the right-eye background plane, shown as the area marked (R), and each is used when composing the left-eye and right-eye outputs, respectively.

- When the stereo mode is OFF, the application writes the left-view background data to both the (L) and (R) areas of the background plane, so the right-view background data does not affect the display.

- When the stereo mode is ON, the left-eye picture data in the video stream is stored in the left-view video plane, and the right-eye picture data in the video stream is stored in the right-view video plane. When the stereo mode of the video plane is OFF, the left-eye picture data is stored in both the left-view video plane and the right-view video plane.
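
The stereo mode rule for the video plane can be sketched as a small selection function. The function name `fill_video_planes` and its parameters are hypothetical and used only for illustration; the pictures are treated as opaque values.

```python
def fill_video_planes(left_picture, right_picture, stereo_mode_on):
    """Return the contents of (left-view plane, right-view plane).

    Stereo mode ON : each plane receives the picture for its own eye.
    Stereo mode OFF: the left-eye picture is stored in both planes.
    """
    if stereo_mode_on:
        return left_picture, right_picture
    return left_picture, left_picture


# Hypothetical picture labels, for illustration only.
on_planes = fill_video_planes("L-picture", "R-picture", stereo_mode_on=True)
off_planes = fill_video_planes("L-picture", "R-picture", stereo_mode_on=False)
print(on_planes)   # ('L-picture', 'R-picture')
print(off_planes)  # ('L-picture', 'L-picture')
```

With the stereo mode OFF, both eyes receive the same picture, so the video itself carries no parallax.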

- In the image plane 8, the left-view image data is written to the left-eye image plane, shown as the area marked (L).

- The right-view image data is written to the right-eye image plane, shown as the area marked (R), and each is used for the left-eye and right-eye composition, respectively.

- The subtitle graphics corresponding to the right-view image data do not affect the display.

- When the stereo mode is OFF, the contents of the image plane 8 are shifted to the right or left (“Shifted Left” in the figure).

- In the interactive graphics plane 10, the left-view interactive graphics are written to the left-eye interactive graphics plane, shown as the area marked (L).

- The right-view interactive graphics are written to the right-eye interactive graphics plane, shown as the area marked (R), and each is used for the left-eye and right-eye composition, respectively.

- When the stereo mode of the interactive graphics plane 10 is OFF, the right-view interactive graphics written by the application do not affect the display.

- When the stereo mode is OFF, the contents of the interactive graphics plane 10 are shifted to the right or left (“Shifted Left” in the figure).

- FIG. 7 shows how the background plane 11, the video plane, the image plane 8, and the interactive graphics plane 10 are superimposed when the display mode is 3D and all the planes are in the stereo mode ON.

- It can be seen that, for the left view, the left-view background plane u4, the left-view video u3 read from the video stream, the left-view graphics u2 of the image plane 8, and the left-view graphics u1 of the interactive graphics plane 10 are composited in that order.

- It can likewise be seen that, for the right view, the right-view background plane u8, the right-view video u7 read from the video stream, the right-view graphics u6 of the image plane 8, and the right-view graphics u5 of the interactive graphics plane 10 are composited in that order.

- FIG. 8 shows how the background plane 11, the video plane, the image plane 8, and the interactive graphics plane 10 are overlaid when the display mode is 3D and all the planes are in the stereo mode OFF.

- It can be seen that, for the left view, the following are composited in order: the left-view background plane r4; the left-view video r2 read from the video stream; the Shifted Left graphics r3, obtained by shifting the left-view graphics of the image plane 8 in a certain horizontal direction (right in the figure); and the Shifted Left graphics r1, obtained by shifting the left-view graphics of the interactive graphics plane 10 in a certain horizontal direction (right in the figure).

- FIG. 9 shows the synthesis result for each plane.

- 6L and 6R are examples of video planes.

- the difference in the orientation of the woman's face indicates that the left-view stream and the right-view stream were taken from different angles.

- the deviation of the face direction and position of the person in FIG. 9 is schematic and does not represent the exact face direction or position for realizing stereoscopic reproduction.

- The text “I love you” in the image plane 8 is the image obtained after the image decoder decodes the subtitle data.

- the GUI component that accepts the forward / backward skip operation in the interactive graphics plane 10 is a graphics image drawn on the interactive graphics plane 10 by the BD-J application.

- 6LL is the left-view output after synthesis.

- 6RR is the right-view output after synthesis.

- In the left-view video 6LL, it can be seen that the subtitle “I love you” is composited shifted to the right.

- In the right-view video 6RR, the subtitle “I love you” is composited shifted to the left.

- FIG. 10 shows an example of viewing, on a 3D display, the video output produced when all planes are in stereo mode ON.

- The right-view and left-view video is filtered through, for example, the liquid crystal glasses 500 so that different images reach the left and right eyes.

- The point to note here is that not only is the video of the video stream made three-dimensional by superimposing the left and right images, but the subtitle “I love you” and the GUI component that accepts forward/backward skip operations also differ between the left and right eyes. In this way, if both left-eye content and right-eye content are prepared in advance, turning the stereo mode ON naturally preserves depth for the video, the subtitles, and the GUI components alike.

- FIG. 11 shows an example of a stereoscopic video image that appears when the video output is viewed with the liquid crystal glasses 500 when the video plane is in the stereo mode ON but the other planes are all in the stereo mode OFF.

- For discs that do not provide subtitles or GUIs for both the left and right eyes (for example, legacy content created on the assumption of 2D, or discs that have to omit them for lack of disc capacity), the stereo mode of the graphics planes must be turned OFF.

- Because the video plane is in stereo mode ON, it can be seen that the images reproduced from the left-view stream and the right-view stream show the same subject captured from different angles.

- The image plane 8 and the interactive graphics plane 10, on the other hand, are in stereo mode OFF: the same subtitle stream and the same GUI image, shifted to the right and to the left, are composited onto the video plane. Thus, even when subtitles and a GUI exist for only one eye, they can be displayed in front of the stereoscopic video, which reduces eye strain on the viewer.

- The direction in which the plane shift engine 20 shifts depends on whether the graphics plane is to produce a stereoscopic effect of lying behind the display screen or of lying in front of it.

- The shift amount should be calculated from a depth value indicating how far in front of or behind the display screen the subtitles written in the image plane 8 or the graphics image written in the interactive graphics plane 10 are to appear. It can also be derived from any parameter that can serve as binocular parallax in stereoscopic reproduction.

- The “plane offset” is a parameter for moving the pixel data in the graphics plane to the left or right by the shift amount described above.

- The shift amount is a scalar quantity.

- The plane offset, by contrast, is a vector with a polarity (positive or negative) and a magnitude. It indicates in which horizontal direction (for example, right or left) and by how much the coordinates of the pixel data are moved from the normal state (that is, the state in which the content appears to lie on the display screen). In the following description, the plane shift is assumed to be executed according to this plane offset. A plane offset may be the shift amount with a positive or negative sign attached, or a value that yields the shift amount after a calculation by some function.

- The plane offset of the graphics plane indicates the number of pixels by which the pixel data coordinates stored in the right-view graphics plane and the pixel data coordinates stored in the left-view graphics plane are shifted.

- When the plane offset is “0”, the graphics plane is not shifted; that is, it is displayed in the normal state (the state in which it appears to lie on the display screen).
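
The relationship between the signed plane offset and the scalar shift amount can be sketched as follows. The sign convention is an assumption made only for this sketch: a positive plane offset is taken to mean that the left-view plane is shifted right and the right-view plane is shifted left, which matches the direction seen in 6LL and 6RR of FIG. 9. The function name `per_view_shift` is hypothetical.

```python
def per_view_shift(plane_offset):
    """Split a signed plane offset into a scalar amount and per-view shifts.

    Assumed convention (not stated in the text): positive dx moves pixels
    to the right, and a positive plane offset shifts the left view right
    and the right view left by the same number of pixels.
    """
    shift_amount = abs(plane_offset)  # scalar: magnitude only
    left_view_dx = plane_offset       # signed horizontal movement, left view
    right_view_dx = -plane_offset     # opposite direction for the right view
    return shift_amount, left_view_dx, right_view_dx


print(per_view_shift(3))   # (3, 3, -3)
print(per_view_shift(-2))  # (2, -2, 2)
print(per_view_shift(0))   # (0, 0, 0): normal state, no shift
```

A plane offset of 0 leaves both views unshifted, which corresponds to the normal state in which the graphics appear to lie on the display screen.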

- FIG. 12 shows that when the display mode is 3D and the stereo mode of each plane is OFF, the plane offset of the background image stored in the background plane is “0”, and the plane offset of the video stored in the video plane is “0”.

- the plane offset of the image plane is” 0

- After the right shift, the graphics plane has a transparent area added on its left side, and its right end is cut off.
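
The effect of the shift on one row of the graphics plane can be sketched as below. The function name `shift_row` and the `TRANSPARENT` marker are hypothetical. Handling a leftward shift as the mirror image of the rightward shift is an assumption of this sketch, since the text here describes only the right shift.

```python
TRANSPARENT = None  # hypothetical marker for a transparent pixel


def shift_row(row, dx):
    """Shift one row of pixels horizontally by dx, keeping its width.

    dx > 0: shift right, pad the left with transparent pixels and cut off
            the right end (the case described in the text).
    dx < 0: shift left, handled as the mirror image (assumption).
    """
    width = len(row)
    if dx == 0:
        return list(row)
    if abs(dx) >= width:
        return [TRANSPARENT] * width  # everything is shifted out of the plane
    if dx > 0:
        return [TRANSPARENT] * dx + list(row[:width - dx])
    return list(row[-dx:]) + [TRANSPARENT] * (-dx)


row = ["A", "B", "C", "D", "E"]
print(shift_row(row, 2))   # [None, None, 'A', 'B', 'C']
print(shift_row(row, -2))  # ['C', 'D', 'E', None, None]
```

The row keeps its original width in every case, so the shifted plane can be composited with the other planes without resizing.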
