WO2023074279A1 - Bird's-eye view data generation device, bird's-eye view data generation program, bird's-eye view data generation method, and robot - Google Patents

- Publication number

- WO2023074279A1 (PCT/JP2022/037139)

- Authority

- WO

- WIPO (PCT)

- Legal status

- Ceased

Classifications

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/20—Analysis of motion

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05D—SYSTEMS FOR CONTROLLING OR REGULATING NON-ELECTRIC VARIABLES

- G05D1/00—Control of position, course, altitude or attitude of land, water, air or space vehicles, e.g. using automatic pilots

- G05D1/20—Control system inputs

- G05D1/24—Arrangements for determining position or orientation

- G05D1/243—Means capturing signals occurring naturally from the environment, e.g. ambient optical, acoustic, gravitational or magnetic signals

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05D—SYSTEMS FOR CONTROLLING OR REGULATING NON-ELECTRIC VARIABLES

- G05D1/00—Control of position, course, altitude or attitude of land, water, air or space vehicles, e.g. using automatic pilots

- G05D1/60—Intended control result

- G05D1/617—Safety or protection, e.g. defining protection zones around obstacles or avoiding hazards

- G05D1/622—Obstacle avoidance

- G05D1/633—Dynamic obstacles

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05D—SYSTEMS FOR CONTROLLING OR REGULATING NON-ELECTRIC VARIABLES

- G05D1/00—Control of position, course, altitude or attitude of land, water, air or space vehicles, e.g. using automatic pilots

- G05D1/60—Intended control result

- G05D1/646—Following a predefined trajectory, e.g. a line marked on the floor or a flight path

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05D—SYSTEMS FOR CONTROLLING OR REGULATING NON-ELECTRIC VARIABLES

- G05D1/00—Control of position, course, altitude or attitude of land, water, air or space vehicles, e.g. using automatic pilots

- G05D1/60—Intended control result

- G05D1/656—Interaction with payloads or external entities

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/20—Analysis of motion

- G06T7/246—Analysis of motion using feature-based methods, e.g. the tracking of corners or segments

- G06T7/251—Analysis of motion using feature-based methods, e.g. the tracking of corners or segments involving models

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/50—Depth or shape recovery

- G06T7/55—Depth or shape recovery from multiple images

- G06T7/579—Depth or shape recovery from multiple images from motion

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/70—Determining position or orientation of objects or cameras

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V40/00—Recognition of biometric, human-related or animal-related patterns in image or video data

- G06V40/10—Human or animal bodies, e.g. vehicle occupants or pedestrians; Body parts, e.g. hands

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V40/00—Recognition of biometric, human-related or animal-related patterns in image or video data

- G06V40/20—Movements or behaviour, e.g. gesture recognition

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N7/00—Television systems

- H04N7/18—Closed-circuit television [CCTV] systems, i.e. systems in which the video signal is not broadcast

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05D—SYSTEMS FOR CONTROLLING OR REGULATING NON-ELECTRIC VARIABLES

- G05D2107/00—Specific environments of the controlled vehicles

- G05D2107/10—Outdoor regulated spaces

- G05D2107/17—Spaces with priority for humans, e.g. populated areas, pedestrian ways, parks or beaches

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05D—SYSTEMS FOR CONTROLLING OR REGULATING NON-ELECTRIC VARIABLES

- G05D2109/00—Types of controlled vehicles

- G05D2109/10—Land vehicles

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05D—SYSTEMS FOR CONTROLLING OR REGULATING NON-ELECTRIC VARIABLES

- G05D2111/00—Details of signals used for control of position, course, altitude or attitude of land, water, air or space vehicles

- G05D2111/10—Optical signals

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/30—Subject of image; Context of image processing

- G06T2207/30196—Human being; Person

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/30—Subject of image; Context of image processing

- G06T2207/30241—Trajectory

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/30—Subject of image; Context of image processing

- G06T2207/30248—Vehicle exterior or interior

- G06T2207/30252—Vehicle exterior; Vicinity of vehicle

Definitions

- the present disclosure relates to a bird's-eye view data generation device, a bird's-eye view data generation program, a bird's-eye view data generation method, and a robot.

- GNSS: Global Navigation Satellite System

- The technique of JP-A-2021-77287 cannot be applied when a bird's-eye view image is not available.

- An object of the present disclosure is to provide a bird's-eye view data generation device, a bird's-eye view data generation program, a bird's-eye view data generation method, and a robot. Each of these can generate bird's-eye view data from two-dimensional observation information. The data represent the movement trajectory on the ground of an observation moving body and the movement trajectory on the ground of each moving body.

- A first aspect of the disclosure is a bird's-eye view data generation device that includes an acquisition unit and a generation unit.
  - The acquisition unit acquires time-series data of two-dimensional observation information. This information represents at least one moving body observed from the viewpoint of an observation moving body equipped with an observation device in a dynamic environment.
  - The generation unit generates bird's-eye view data from the time-series data of the two-dimensional observation information. The data represent the movement trajectory on the ground of the observation moving body and the movement trajectory on the ground of each moving body, as obtained when the observation moving body is observed from a bird's-eye viewpoint.
  - To generate the data, the generation unit uses two inputs. The first is the relative position of the moving body from the observation moving body in real space. It is obtained using prior information about the size of the moving body in real space and the size and position of the moving body on the two-dimensional observation information. The second is a predetermined motion model representing the movement of the moving body.

- The prior information may relate to a size distribution of the moving body in real space.

- The generation unit may generate a distribution of relative positions of the moving body from the observation moving body.

- The generation unit may then use a motion model representing the distribution of the movement of the moving body. With it, the generation unit may generate bird's-eye view data from the time-series data of the two-dimensional observation information. These data represent movement trajectories that express the position distribution on the ground, at each time, of the observation moving body and of each moving body.

- the motion model may be a model representing uniform motion of the moving body, or a model representing motion according to interaction between the moving bodies.

- The bird's-eye view data generation device may further include a tracking unit. The tracking unit tracks each moving body in the time-series data of the two-dimensional observation information. At each time, it acquires the position and size of each moving body on the two-dimensional observation information. The generation unit may then generate the bird's-eye view data from the position and size of each moving body at each time, as acquired by the tracking unit.

- The generation unit may generate the bird's-eye view data so as to maximize a posterior distribution at each time. This is the posterior distribution of the positions on the ground of the observation moving body and each moving body, given their positions on the ground at the previous time. It is expressed using the relative position of each moving body from the observation moving body and the motion model.

- The generation unit may generate the bird's-eye view data by alternately repeating two estimation steps:
  - (A) It fixes the position on the ground of each moving body. It then estimates the position on the ground of the observation moving body and the observation direction of the observation device so as to optimize an energy cost function representing the posterior distribution.
  - (B) It fixes the position on the ground of the observation moving body and the observation direction of the observation device. It then estimates the position on the ground of each moving body so as to optimize the same energy cost function.

- When static landmarks are detected in the two-dimensional observation information, the generation unit may generate the bird's-eye view data using the static landmarks represented by the two-dimensional observation information.

- A second aspect of the disclosure is a bird's-eye view data generation program. It causes a computer to execute processing that includes an acquisition step and a generation step.
  - The acquisition step acquires time-series data of two-dimensional observation information. This information represents at least one moving body observed from the viewpoint of an observation moving body equipped with an observation device in a dynamic environment.
  - The generation step generates bird's-eye view data from the time-series data of the two-dimensional observation information. The data represent the movement trajectory on the ground of the observation moving body and the movement trajectory on the ground of each moving body, as obtained when the observation moving body is observed from a bird's-eye viewpoint.
  - The generation step uses two inputs. The first is the relative position of the moving body from the observation moving body in real space. It is obtained using prior information about the size of the moving body in real space and the size and position of the moving body on the two-dimensional observation information. The second is a predetermined motion model representing the movement of the moving body.

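- A third aspect of the disclosure is a bird's-eye view data generation method, in which a computer executes processing that includes an acquisition step and a generation step.
  - The acquisition step acquires time-series data of two-dimensional observation information. This information represents at least one moving body observed from the viewpoint of an observation moving body equipped with an observation device in a dynamic environment.
  - The generation step generates the bird's-eye view data. It uses the relative position of the moving body from the observation moving body in real space, obtained using prior information about the size of the moving body in real space and the size and position of the moving body on the two-dimensional observation information. It also uses a predetermined motion model representing the movement of the moving body.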

- A fourth aspect of the disclosure is a robot that includes an acquisition unit, a generation unit, an autonomous traveling unit, and a control unit.
  - The acquisition unit acquires time-series data of two-dimensional observation information. This information represents at least one moving body observed from the viewpoint of the robot, which is equipped with an observation device, in a dynamic environment.
  - The generation unit generates bird's-eye view data from the time-series data of the two-dimensional observation information. The data represent the movement trajectory of the robot on the ground and the movement trajectory of each moving body on the ground, as obtained when the robot is observed from a bird's-eye viewpoint. The generation unit uses the relative position of the moving body from the robot in real space, obtained using prior information about the size of the moving body in real space and the size and position of the moving body on the two-dimensional observation information. It also uses a predetermined motion model representing the movement of the moving body.
  - The autonomous traveling unit causes the robot to travel autonomously.
  - The control unit uses the bird's-eye view data to control the autonomous traveling unit so that the robot moves to a destination.

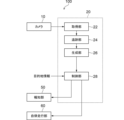

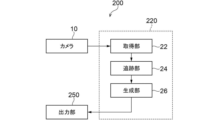

- FIG. 1 is a diagram showing a schematic configuration of a robot according to a first embodiment

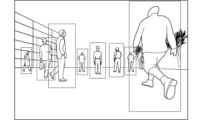

- FIG. 2 is a diagram showing an example of an image.

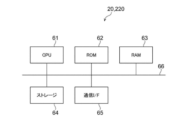

- A block diagram showing the hardware configuration of the bird's-eye view data generation device according to the first and second embodiments

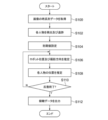

- FIG. 6 is a flowchart showing the flow of the bird's-eye view data generation process performed by the bird's-eye view data generation device according to the first and second embodiments

- Further figures show:
  - an example of time-series data of images;
  - an example of bird's-eye view data;
  - an example of a distribution representing the position of each person;
  - an example of the result of detecting persons in an image.

- FIG. 1 is a diagram showing a schematic configuration of a robot 100 according to the first embodiment of the present disclosure.

- the robot 100 includes a camera 10 , a bird's-eye view data generation device 20 , a notification section 50 and an autonomous traveling section 60 .

- the bird's-eye view data generation device 20 includes an acquisition unit 22 , a tracking unit 24 , a generation unit 26 and a control unit 28 .

- the robot 100 is an example of an observation moving body.

- the camera 10 is an example of an observation device.

- the camera 10 captures images of the surroundings of the robot 100 at predetermined intervals while the robot 100 moves from the start point to the destination. It outputs the captured images to the acquisition unit 22 of the bird's-eye view data generation device 20.

- An image is an example of two-dimensional observation information.

- an image representing at least one person observed from the viewpoint of the robot 100 in a dynamic environment is captured by the camera 10 (see FIG. 2).

- As the camera 10, a perspective projection RGB camera, a fisheye camera, or a 360-degree camera may be used.

- the acquisition unit 22 acquires time-series data of images captured by the camera 10 .

- the tracking unit 24 tracks each person from the time-series data of the acquired image, and acquires the position and size of each person on the image at each time.

- For example, a bounding box representing each person is detected and tracked. At each time, the center position (the center of the bounding box) and the height (the height of the bounding box) of the person on the image are acquired.
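The tracking unit's output can be pictured as one time series of (center, height) measurements per person. The sketch below makes several assumptions. The detector and tracker are not shown, and boxes are assumed to arrive as `(x1, y1, x2, y2)` pixel corners with a track ID. The names `Observation`, `bbox_to_observation`, and `group_by_track` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Per-frame measurement of one tracked person (hypothetical container)."""
    track_id: int
    time: int
    center_u: float   # horizontal image coordinate of the box center [px]
    center_v: float   # vertical image coordinate of the box center [px]
    height_px: float  # bounding-box height [px]


def bbox_to_observation(track_id: int, time: int,
                        box: tuple[float, float, float, float]) -> Observation:
    """Convert an assumed (x1, y1, x2, y2) box into center position and height."""
    x1, y1, x2, y2 = box
    if x2 <= x1 or y2 <= y1:
        raise ValueError("box must have positive width and height")
    return Observation(track_id, time,
                       center_u=(x1 + x2) / 2.0,
                       center_v=(y1 + y2) / 2.0,
                       height_px=y2 - y1)


def group_by_track(observations):
    """Group observations into per-person time series, sorted by time."""
    tracks = {}
    for obs in observations:
        tracks.setdefault(obs.track_id, []).append(obs)
    for series in tracks.values():
        series.sort(key=lambda o: o.time)
    return tracks
```

Only the box height is kept as the size measure, matching the text. The box width is discarded because a person's real-world width varies more with pose than their height does.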

- The generation unit 26 generates bird's-eye view data from the time-series data of the images. The data represent the movement trajectory of the robot 100 on the ground and the movement trajectory of each person on the ground, as obtained when the robot 100 is observed from a bird's-eye viewpoint. To do so, the generation unit 26 uses two inputs. The first is the distribution of the relative position of each person from the robot 100 in real space. It is obtained using prior information about the size of a person in real space and the size and position of each person on the image. The second is a predetermined motion model representing the distribution of a person's motion.

- the prior information about the size of the person in the real space relates to the distribution of the size of the person in the real space

- The motion model is either a model representing uniform motion of a person or a model representing motion according to the interaction between people.
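Both kinds of motion model can be sketched on 2-D ground positions.
- Uniform motion is modeled here as constant velocity.
- The interaction model is a swapped-in illustration. It is a repulsion in the spirit of the Helbing and Molnár social force model, not the model used in this disclosure.

The parameters `strength`, `range_m`, and `sigma` and all function names are hypothetical. The Gaussian energy shows how a prediction turns into a "distribution of motion".

```python
import numpy as np


def predict_uniform(x_prev: np.ndarray, x_prev2: np.ndarray) -> np.ndarray:
    """Uniform (constant-velocity) motion for K persons.

    x_prev, x_prev2: (K, 2) ground positions at t-1 and t-2.
    Returns the (K, 2) predicted positions at t.
    """
    return x_prev + (x_prev - x_prev2)


def predict_interaction(x_prev: np.ndarray, x_prev2: np.ndarray,
                        strength: float = 0.5, range_m: float = 1.0) -> np.ndarray:
    """Constant velocity plus a social-force-style repulsion between persons.

    strength [m] and range_m [m] are hypothetical tuning parameters.
    """
    pred = predict_uniform(x_prev, x_prev2)
    diff = x_prev[:, None, :] - x_prev[None, :, :]      # (K, K, 2): x_k - x_j
    dist = np.linalg.norm(diff, axis=-1)                # (K, K) pairwise distances
    np.fill_diagonal(dist, np.inf)                      # no self-interaction
    unit = diff / np.maximum(dist, 1e-9)[..., None]     # direction pushing k away from j
    push = strength * np.exp(-dist / range_m)           # repulsion decays with distance
    return pred + (push[..., None] * unit).sum(axis=1)


def motion_energy(x: np.ndarray, x_pred: np.ndarray, sigma: float = 0.3) -> float:
    """Negative log of an isotropic Gaussian around the prediction (up to a constant)."""
    return float(np.sum((x - x_pred) ** 2) / (2.0 * sigma ** 2))
```

In this sketch, pairwise repulsion forces cancel in sum. The interaction term therefore spreads people apart without moving the group's centroid.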

- the generation unit 26 generates bird's-eye view data so as to maximize the posterior distribution represented using the relative position of the person from the robot 100 and the motion model for each time.

- This posterior distribution is the distribution of the positions on the ground of the robot 100 and each person at the current time. It is conditioned on two things: their positions on the ground at the previous time, and the position and size of each person on the image at the current time.

- $X^{0:K}_{t}$ represents the positions on the ground of the robot 100 and each person at time $t$.

- $X^{1:K}_{t}$ represents the position on the ground of each person at time $t$.

- $Z^{1:K}_{t}$ represents the relative position of each person from the robot 100 at time $t$.

- As an example, the generation unit 26 alternately repeats two estimation steps:
  - (A) It fixes the position of each person on the ground. It then estimates the position of the robot 100 on the ground and the observation direction of the camera 10 so as to minimize the energy cost function representing the above posterior distribution.
  - (B) It fixes the position of the robot 100 on the ground and the observation direction of the camera 10. It then estimates the position of each person on the ground so as to minimize the same energy cost function.

  In this way, the generation unit 26 generates the bird's-eye view data.

- Minimizing the energy cost function when estimating the position of the robot 100 on the ground and the observation direction of the camera 10 is represented by the following equation (1). Also, minimizing the energy cost function when estimating the position of each person on the ground is represented by the following equation (2).

- $\Delta x^{0}_{t}$ represents the change, from time $t-1$ to time $t$, in the position of the robot 100 on the ground and in the observation direction of the camera 10.

- $X^{0:K}_{t-\tau:t-1}$ represents the positions of the robot 100 and each person on the ground at each time from time $t-\tau$ to time $t-1$.

- the energy cost function ⁇ c is represented by the following formula.

- the energy cost function is derived by taking the negative logarithm of the posterior distribution.
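The formula itself is not reproduced in this text. A hedged generic form can be written from "the negative logarithm of the posterior distribution". It rests on four assumptions:
- the observations $Z^{1:K}_t$ depend only on the current positions;
- the persons' observations are conditionally independent;
- the observation noise is Gaussian with covariance $\Sigma_k$;
- $x^0_t = (p_t, \theta_t)$ packs the robot position $p_t$ and the camera direction $\theta_t$.

The window length $\tau$ and the function $g$ are illustrative notation, and the term order may differ from the original formula.

```latex
\begin{aligned}
E\!\left(X^{0:K}_{t}\right)
  &= -\log p\!\left(X^{0:K}_{t} \mid X^{0:K}_{t-\tau:t-1},\, Z^{1:K}_{t}\right) \\
  &= -\sum_{k=1}^{K} \log p\!\left(z^{k}_{t} \mid x^{0}_{t}, x^{k}_{t}\right)
     - \log p\!\left(X^{0:K}_{t} \mid X^{0:K}_{t-\tau:t-1}\right)
     + \log p\!\left(Z^{1:K}_{t} \mid X^{0:K}_{t-\tau:t-1}\right), \\
-\log p\!\left(z^{k}_{t} \mid x^{0}_{t}, x^{k}_{t}\right)
  &= \tfrac{1}{2}\bigl(z^{k}_{t} - g(x^{0}_{t}, x^{k}_{t})\bigr)^{\top}\Sigma_{k}^{-1}
     \bigl(z^{k}_{t} - g(x^{0}_{t}, x^{k}_{t})\bigr) + \text{const}, \qquad
  g(x^{0}_{t}, x^{k}_{t}) = R(\theta_t)^{\top}\bigl(x^{k}_{t} - p_t\bigr).
\end{aligned}
```

The second line follows from Bayes' rule. Its last term does not depend on $X^{0:K}_t$, so it can be dropped during minimization. Steps (A) and (B) then minimize the remaining terms over $x^0_t$ with $X^{1:K}_t$ fixed, and over $X^{1:K}_t$ with $x^0_t$ fixed, respectively.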

- the first term of the above equation is expressed by the following equation.

- $x^{0}_{t}$ represents the position of the robot 100 on the ground and the observation direction of the camera 10 at time $t$.

- $x^{k}_{t}$ represents the position of person $k$ on the ground at time $t$.

- The relative position $z^{k}_{t}$ of the $k$-th person with respect to the camera 10 at time $t$ follows a Gaussian distribution, as shown in the following formula.

- $h^{k}$ is the height of person $k$ in real space.

- $\mu_h$ represents the mean of the height distribution.

- $\sigma_h^2$ represents the variance of the height distribution.
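A Gaussian over $z^k_t$ arises naturally under a pinhole camera model. The sketch below assumes three things: the person stands upright, the camera has focal length `focal_px`, and its principal-point column is `cu`. Under these assumptions, both relative coordinates are linear in the real height $h^k$. A Gaussian prior on $h^k$ therefore gives an exactly Gaussian (rank-1) distribution along the viewing ray. The default 1.7 m mean, 0.1 m standard deviation, and `min_var` floor are illustrative values, not taken from the source.

```python
import numpy as np


def relative_position_gaussian(center_u: float, height_px: float,
                               focal_px: float, cu: float,
                               mu_h: float = 1.7, sigma_h: float = 0.1,
                               min_var: float = 1e-4):
    """Gaussian over a person's position relative to the camera, (forward, left) [m].

    Assumes an upright person and a pinhole camera, so that
    forward = focal_px * h / height_px and left = -(center_u - cu) * h / height_px,
    i.e. both coordinates are linear in the real height h ~ N(mu_h, sigma_h^2).
    """
    if height_px <= 0:
        raise ValueError("height_px must be positive")
    a = np.array([focal_px / height_px, -(center_u - cu) / height_px])
    mean = mu_h * a                          # position at the mean height
    cov = sigma_h ** 2 * np.outer(a, a)      # rank-1: uncertainty lies along the viewing ray
    cov += min_var * np.eye(2)               # hypothetical floor that keeps cov invertible
    return mean, cov
```

For example, a 250 px tall person centered on the optical axis of a camera with `focal_px=500` maps to a mean of about 3.4 m straight ahead. Most of the uncertainty lies in depth rather than sideways.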

- When the motion model represents motion according to the interaction between people, the first term of the above formula is expressed by the following formula.

- the generation unit 26 generates bird's-eye view data as shown in FIG. 4, for example.

- FIG. 4 shows an example in which the line connecting black circles indicates the movement trajectory of the robot 100 on the ground, and the dashed lines indicate the movement trajectories of the persons on the ground.

- the control unit 28 uses the bird's-eye view data to control the autonomous traveling unit 60 so that the robot 100 moves to the destination.

- the control unit 28 designates the moving direction and speed of the robot 100 and controls the autonomous traveling unit 60 to move in the designated moving direction and speed.

- When the control unit 28 determines, using the bird's-eye view data, that an intervention action is necessary, it controls the notification unit 50 to output a message such as "Please clear the road" or to sound a warning.

- the bird's-eye view data generation device 20 includes a CPU (Central Processing Unit) 61, a ROM (Read Only Memory) 62, a RAM (Random Access Memory) 63, a storage 64, and a communication interface (I/F) 65.

- the storage 64 stores a bird's-eye view data generation program.

- a CPU 61 is a central processing unit that executes various programs and controls each configuration. That is, the CPU 61 reads a program from the storage 64 and executes the program using the RAM 63 as a work area. The CPU 61 performs control of the above components and various arithmetic processing according to programs recorded in the storage 64 .

- the ROM 62 stores various programs and various data.

- the RAM 63 temporarily stores programs or data as a work area.

- The storage 64 is composed of an HDD (Hard Disk Drive) or an SSD (Solid State Drive). It stores various programs, including an operating system, and various data.

- the communication interface 65 is an interface for communicating with other devices, and uses standards such as Ethernet (registered trademark), FDDI, or Wi-Fi (registered trademark), for example.

- the camera 10 shoots the surroundings of the robot 100 at predetermined intervals.

- The bird's-eye view data generation device 20 periodically generates bird's-eye view data through the bird's-eye view data generation process shown in FIG. 6.

- FIG. 6 is a flowchart showing the flow of bird's-eye view data generation processing by the bird's-eye view data generation device 20.

- the bird's-eye view data generation process is performed by the CPU 61 reading out the bird's-eye view data generation program from the storage 64, developing it in the RAM 63, and executing it.

- In step S100, the CPU 61, as the acquisition unit 22, acquires the time-series data of the images captured by the camera 10.

- In step S102, the CPU 61, as the tracking unit 24, tracks each person in the acquired time-series data of images. It acquires the position and size of each person on the image at each time.

- In step S104, the CPU 61, as the generation unit 26, sets initial values at each time of the acquired time-series data of images. These values are the position of the robot 100 on the ground, the observation direction of the camera 10, and the position of each person on the ground.

- In step S106, the CPU 61, as the generation unit 26, fixes the position of each person on the ground according to equation (1) above. It then estimates the position of the robot 100 on the ground and the observation direction of the camera 10 so as to minimize the energy cost function representing the posterior distribution.

- In step S108, the CPU 61, as the generation unit 26, fixes the position of the robot 100 on the ground and the observation direction of the camera 10 according to equation (2) above. It then estimates the position of each person on the ground so as to minimize the energy cost function representing the posterior distribution.

- In step S110, the CPU 61, as the generation unit 26, determines whether a predetermined repetition end condition is satisfied. For example, the end condition may be that the number of iterations has reached an upper limit or that the value of the energy cost function has converged.

- If the repetition end condition is satisfied, the CPU 61 proceeds to step S112. If it is not satisfied, the CPU 61 returns to step S106.

- In step S112, the CPU 61, as the generation unit 26, generates bird's-eye view data from the finally obtained estimates at each time. The data represent the position of the robot 100 on the ground, the observation direction of the camera 10, and the position of each person on the ground. The CPU 61 outputs the data to the control unit 28 and ends the bird's-eye view data generation process.
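Steps S104 to S112 can be illustrated by a toy, single-time-step, 2-D version of the alternating minimization. The sketch makes several simplifying assumptions:
- Observation and motion terms are isotropic Gaussians with weights `w_obs` and `w_mot`.
- The predicted person positions `x_pred` come from some motion model.
- The robot's own motion prior is omitted for brevity.
- The robot step needs at least two distinct persons to fix the rotation.

The robot step (A) is solved in closed form by 2-D rigid (Procrustes) alignment. The person step (B) is a closed-form weighted average. All function names are hypothetical.

```python
import numpy as np


def rot(theta: float) -> np.ndarray:
    """2-D rotation matrix for observation direction theta [rad]."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])


def update_persons(p, theta, z, x_pred, w_obs=1.0, w_mot=1.0):
    """Step (B): robot pose fixed; exact minimizer of
    w_obs*||x_k - p - R z_k||^2 + w_mot*||x_k - x_pred_k||^2 for every person k."""
    observed = p + z @ rot(theta).T            # (K, 2) persons placed by the observation
    return (w_obs * observed + w_mot * x_pred) / (w_obs + w_mot)


def update_robot(x, z):
    """Step (A): persons fixed; minimize sum_k ||x_k - p - R(theta) z_k||^2 over p, theta."""
    xb, zb = x.mean(axis=0), z.mean(axis=0)
    xc, zc = x - xb, z - zb
    c = np.sum(xc * zc)                                     # sum of dot products
    s = np.sum(zc[:, 0] * xc[:, 1] - zc[:, 1] * xc[:, 0])   # sum of 2-D cross products
    theta = float(np.arctan2(s, c))
    return xb - rot(theta) @ zb, theta


def energy(p, theta, x, z, x_pred, w_obs=1.0, w_mot=1.0):
    """Energy (negative log posterior up to constants) used as the stopping signal."""
    r_obs = x - (p + z @ rot(theta).T)
    r_mot = x - x_pred
    return w_obs * np.sum(r_obs ** 2) + w_mot * np.sum(r_mot ** 2)


def alternate(p0, theta0, z, x_pred, max_iter=100, tol=1e-12):
    """S104: initialize; S106/S108: alternate; S110: stop on convergence or max_iter."""
    p, theta = np.asarray(p0, dtype=float), float(theta0)
    x = update_persons(p, theta, z, x_pred)
    prev = energy(p, theta, x, z, x_pred)
    for _ in range(max_iter):
        p, theta = update_robot(x, z)               # (A) robot pose with persons fixed
        x = update_persons(p, theta, z, x_pred)     # (B) persons with robot pose fixed
        e = energy(p, theta, x, z, x_pred)
        if abs(prev - e) < tol:
            break
        prev = e
    return p, theta, x                              # S112: final estimates
```

Each step exactly minimizes the shared energy over its own block of variables, so the energy never increases between iterations. With noise-free synthetic data, the estimates converge toward the true robot pose and person positions.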

- The control unit 28 designates the moving direction and speed of the robot 100 so that the robot 100 moves to the destination. It then controls the autonomous traveling unit 60 so that the robot 100 moves in the designated direction at the designated speed.

- When the control unit 28 determines, using the bird's-eye view data, that an intervention action is necessary, it controls the notification unit 50 to output a message such as "Please clear the road" or to sound a warning.

- time-series data of images representing at least one person observed from the viewpoint of the robot 100 equipped with the camera 10 in a dynamic environment is acquired.

- Bird's-eye view data are generated from the time-series data of the images. The data represent the movement trajectory of the robot 100 on the ground and the movement trajectory of each person on the ground. Two inputs are used. The first is the relative position of each person from the robot 100 in real space, obtained using prior information about the size of a person in real space and the size and position of each person on the image. The second is a predetermined motion model representing the movement of a person.

- Thus, the bird's-eye view data can be generated from images observed from the viewpoint of the robot 100, equipped with the camera 10, in a dynamic environment.

- FIG. 7 is a diagram showing a schematic configuration of the information processing terminal 200 according to the second embodiment of the present disclosure.

- the information processing terminal 200 includes a camera 10 , a bird's-eye view data generation device 220 and an output section 250 .

- the bird's-eye view data generation device 220 includes an acquisition unit 22 , a tracking unit 24 and a generation unit 26 .

- the user is an example of an observation moving object

- the camera 10 is an example of an observation device.

- the information processing terminal 200 is held directly by the user or mounted on an object held by the user (e.g., a suitcase).

- the camera 10 captures images of the user's surroundings at predetermined intervals, and outputs the captured images to the acquisition unit 22 of the bird's-eye view data generation device 220 .

- The generation unit 26 generates bird's-eye view data from the time-series data of the images and outputs the data to the output unit 250. The data represent the movement trajectory of the user on the ground and the movement trajectory of each person on the ground, as obtained when the user is observed from a bird's-eye viewpoint.

- The generation unit 26 generates the bird's-eye view data using two inputs. The first is the relative position of each person from the user in real space, obtained using prior information about the size of a person in real space and the size and position of each person on the image. The second is a predetermined motion model representing the movement of a person.

- the output unit 250 presents the generated bird's-eye view data to the user, and transmits the bird's-eye view data to a server (not shown) via the Internet.

- the bird's-eye view data generation device 220 has the same hardware configuration as the bird's-eye view data generation device 20 of the first embodiment.

- time-series data of images representing at least one person observed from the viewpoint of the user holding the information processing terminal 200 having the camera 10 in a dynamic environment is acquired.

- Bird's-eye view data are generated from the time-series data of the images. The data represent the movement trajectory of the user on the ground and the movement trajectory of each person on the ground. Two inputs are used. The first is the relative position of each person from the user in real space, obtained using prior information about the size of a person in real space and the size and position of each person on the image. The second is a predetermined motion model representing the movement of a person.

- Thus, the bird's-eye view data can be generated from images observed from the viewpoint of the user holding the information processing terminal 200, which has the camera 10, in a dynamic environment.

- the present disclosure can also be applied to self-driving vehicles.

- the observation moving object is an automatic driving vehicle

- the observation device is a camera, laser radar, millimeter wave radar

- the moving objects are other vehicles, motorcycles, pedestrians, and the like.

- time-series data of images taken in a crowded environment as shown in FIG. 8 was used as input.

- FIG. 8 shows an example in which time-series data composed of images 11, 12, and 13 at times t, t+1, and t+2 are input.

- bird's-eye view data as shown in FIG. 9 was generated by the bird's-eye view data generation device 20 .

- FIG. 9 shows an example in which bird's-eye view data represents the positions of the robot 100 and the person on the ground at times t, t+1, and t+2.

- the robot 100 and the information processing terminal 200 are provided with the bird's-eye view data generation devices 20 and 220, but the functions of the bird's-eye view data generation devices 20 and 220 may be provided in an external server.

- the robot 100 and the information processing terminal 200 transmit time-series data of images captured by the camera 10 to the external server.

- the external server generates bird's-eye view data from the time-series data of the transmitted images, and transmits the data to the robot 100 and the information processing terminal 200 .

- the positions of the robot 100 and the user at each time and the positions of each person may be expressed as a probability distribution as shown in FIG.

- In this case, the generation unit 26 generates two kinds of movement trajectory from the time-series data of the images. The first represents the position distribution on the ground of the robot 100 (or the user) at each time. The second represents the position distribution on the ground of each person at each time.
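One simple way to represent such a position distribution is with Monte Carlo samples (particles). The distribution need not be a closed-form Gaussian. The sketch reuses the assumed pinhole relation between image height and depth. It propagates two kinds of uncertainty into the world frame: the height prior, and optional noise on the robot pose (`pos_std`, `theta_std`). All parameter values and the function name are hypothetical.

```python
import numpy as np


def sample_person_positions(center_u, height_px, focal_px, cu,
                            robot_xy, robot_theta, pos_std=0.0, theta_std=0.0,
                            mu_h=1.7, sigma_h=0.1, n=10_000, seed=0):
    """Monte Carlo samples (n, 2) of one person's ground position in the world frame.

    Assumes an upright person and a pinhole camera; height uncertainty and
    optional robot pose noise are both propagated to the samples.
    """
    if height_px <= 0:
        raise ValueError("height_px must be positive")
    rng = np.random.default_rng(seed)
    h = rng.normal(mu_h, sigma_h, size=n)                   # sampled real heights [m]
    forward = focal_px * h / height_px                      # depth along the optical axis
    left = -(center_u - cu) * h / height_px                 # lateral offset, left positive
    theta = robot_theta + rng.normal(0.0, theta_std, size=n)            # camera direction
    xy = np.asarray(robot_xy, dtype=float) + rng.normal(0.0, pos_std, size=(n, 2))
    c, s = np.cos(theta), np.sin(theta)
    world_x = xy[:, 0] + c * forward - s * left             # rotate into world frame
    world_y = xy[:, 1] + s * forward + c * left
    return np.stack([world_x, world_y], axis=1)
```

The sample mean and `np.cov(samples.T)` summarize the distribution when a Gaussian is adequate. The raw samples also preserve non-Gaussian shapes that appear once pose noise bends the cloud.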

- the generation unit 26 may generate bird's-eye view data using the static landmarks represented by the image.

- For example, the method described in "CubeSLAM: Monocular 3D Object SLAM" (https://arxiv.org/abs/1806.00557, June 2018) may be used.

- When static landmarks are detected in the image captured by the camera 10, the bird's-eye view data may be generated using the static landmarks represented by the image.

- When no static landmarks are detected, the bird's-eye view data may be generated by the method described in the above embodiment.

- the bird's-eye view data generated using the static landmarks represented by the images may be integrated with the bird's-eye view data generated by the method described in the above embodiment.

- A pre-trained DNN (Deep Neural Network) model may be used as the motion model.

- For example, the motion model may be a DNN model that takes the relative positions of the surrounding persons as input and outputs the position of the target person at the next time step.

- The above embodiments describe an example in which the tracking unit 24 detects and tracks a bounding box representing each person on the image. At each time, the tracking unit 24 acquires the center position (the center of the bounding box) and height (the height of the bounding box) of the person on the image. The present disclosure is not limited to this example.

- For example, the tracking unit 24 may detect and track a human skeleton representing each person. At each time, it may acquire the center position (the center of the skeleton) and height (the height of the skeleton) of the person on the image.

- Alternatively, the tracking unit 24 may detect and track a line indicating the height of each person on the image. At each time, it may acquire the center position (the center of the line) and height (the height of the line) of the person on the image.
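The skeleton and line variants can feed the same (center, height) measurement used elsewhere. In this sketch, keypoints are assumed to arrive as `(u, v, confidence)` rows from some pose estimator, and only confident keypoints are kept. The skeleton's center is taken as the center of the keypoints' extent. The threshold `min_conf` and both function names are hypothetical.

```python
import numpy as np


def skeleton_center_height(keypoints, min_conf: float = 0.3):
    """Center and height of a person from 2-D skeleton keypoints.

    keypoints: (J, 3) array-like of (u, v, confidence); the layout is an assumption.
    Returns ((center_u, center_v), height_px), or None if fewer than two
    keypoints are confident enough.
    """
    kp = np.asarray(keypoints, dtype=float)
    visible = kp[kp[:, 2] >= min_conf]          # drop low-confidence joints
    if len(visible) < 2:
        return None
    u, v = visible[:, 0], visible[:, 1]
    center = (float((u.min() + u.max()) / 2.0), float((v.min() + v.max()) / 2.0))
    return center, float(v.max() - v.min())


def line_center_height(top, bottom):
    """Center and height of a person from a head-to-foot line on the image."""
    (u1, v1), (u2, v2) = top, bottom
    return ((u1 + u2) / 2.0, (v1 + v2) / 2.0), abs(v2 - v1)
```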

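- The above embodiments describe the case where the two-dimensional observation information is an image, but the present disclosure is not limited to this case.

- For example, when the observation device is an event camera, data having a pixel value corresponding to the movement of each pixel may be used as the two-dimensional observation information.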

- The above embodiments describe an example in which the moving body represented by the bird's-eye view data is a person, but the moving body is not limited to a person.

- For example, the moving body represented by the bird's-eye view data may be a personal mobility device, a bicycle, or a vehicle.

- the bird's-eye view data generation process, which the CPU executes by reading the software (program) in the above embodiment, may instead be executed by various processors other than the CPU.

- examples of such processors include a PLD (Programmable Logic Device) whose circuit configuration can be changed after manufacturing, such as an FPGA (Field-Programmable Gate Array), and a dedicated electric circuit such as an ASIC (Application Specific Integrated Circuit), which is a processor having a circuit configuration designed exclusively to execute specific processing.

- the bird's-eye view data generation process may be executed by one of these various processors, or by a combination of two or more processors of the same or different types (for example, a combination of multiple FPGAs, or a combination of a CPU and an FPGA). More specifically, the hardware structure of these various processors is an electric circuit in which circuit elements such as semiconductor elements are combined.

- the case where the bird's-eye view data generation program is stored in advance in the storage 64 has been described, but the present invention is not limited to this.

- the program may be provided in a form recorded on a recording medium such as CD-ROM (Compact Disc Read Only Memory), DVD-ROM (Digital Versatile Disc Read Only Memory), and USB (Universal Serial Bus) memory.

- the program may be downloaded from an external device via a network.

- the bird's-eye view data generation process includes: acquiring time-series data of two-dimensional observation information representing at least one moving object, observed in a dynamic environment from the viewpoint of an observation moving object equipped with an observation device; and the relative position, in real space, of the moving object with respect to the observation moving object, which is obtained using prior information on the real-space size of the moving object and the size and position of the moving object on the two-dimensional observation information.
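The relative-position step above can be illustrated with the pinhole camera model and similar triangles. This is a minimal sketch, not the patent's exact procedure. It assumes:

- the camera's optical axis is roughly horizontal;
- the moving object is upright and fully visible;
- the camera sits at the observation moving object's origin;
- calibrated intrinsics `fx`, `fy`, `cx` are available.

`Intrinsics`, `relative_position`, and `to_ground_frame` are hypothetical names, and the prior height is an illustrative value.

```python
import math
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera intrinsics in pixels (hypothetical calibration values)."""
    fx: float
    fy: float
    cx: float
    cy: float


def relative_position(u_center: float, pixel_height: float,
                      real_height_m: float, cam: Intrinsics):
    """Estimate (lateral X, forward Z) in metres relative to the observer's camera.

    Similar triangles: an object of real height H spanning h pixels lies at
    depth Z = fy * H / h; its lateral offset is X = (u - cx) * Z / fx.
    """
    if pixel_height <= 0:
        raise ValueError("pixel_height must be positive")
    z = cam.fy * real_height_m / pixel_height
    x = (u_center - cam.cx) * z / cam.fx
    return x, z


def to_ground_frame(x_cam: float, z_cam: float,
                    obs_x: float, obs_y: float, obs_yaw: float):
    """Place the relative position on the ground plane given the observer's pose."""
    forward, left = z_cam, -x_cam  # camera x points right, z points forward
    c, s = math.cos(obs_yaw), math.sin(obs_yaw)
    return obs_x + c * forward - s * left, obs_y + s * forward + c * left
```

As a worked example, take f = 500 px and an assumed prior person height of 1.7 m. A person spanning 170 px lies about 500 × 1.7 / 170 = 5.0 m ahead. If the person's center is 100 px right of `cx`, they are 100 × 5.0 / 500 = 1.0 m to the right.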

Landscapes

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Multimedia (AREA)

- Remote Sensing (AREA)

- Radar, Positioning & Navigation (AREA)

- Aviation & Aerospace Engineering (AREA)

- Automation & Control Theory (AREA)

- Human Computer Interaction (AREA)

- Health & Medical Sciences (AREA)

- General Health & Medical Sciences (AREA)

- Psychiatry (AREA)

- Social Psychology (AREA)

- Signal Processing (AREA)

- Control Of Position, Course, Altitude, Or Attitude Of Moving Bodies (AREA)

- Image Analysis (AREA)

Priority Applications (3)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| US18/696,154 US20240404078A1 (en) | 2021-10-29 | 2022-10-04 | Bird's-eye view data generation device, bird's-eye view data generation program, bird's-eye view data generation method, and robot |

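| CN202280065202.7A CN118056398A (zh) | 2021-10-29 | 2022-10-04 | Bird's-eye view data generation device, bird's-eye view data generation program, bird's-eye view data generation method, and robot |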

| EP22886611.7A EP4425912A4 (en) | 2021-10-29 | 2022-10-04 | Preview Data Generation Device, Preview Data Generation Program, Preview Data Generation Method, and Robot |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| JP2021-177665 | 2021-10-29 | ||

| JP2021177665A JP7438510B2 (ja) | 2021-10-29 | 2021-10-29 | Bird's-eye view data generation device, bird's-eye view data generation program, bird's-eye view data generation method, and robot |

Publications (1)

| Publication Number | Publication Date |

|---|---|

| WO2023074279A1 (ja) | 2023-05-04 |

Family

ID=86157838

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| PCT/JP2022/037139 Ceased WO2023074279A1 (ja) | 2021-10-29 | 2022-10-04 | Bird's-eye view data generation device, bird's-eye view data generation program, bird's-eye view data generation method, and robot |

Country Status (5)

| Country | Link |

|---|---|

| US (1) | US20240404078A1 |

| EP (1) | EP4425912A4 |

| JP (2) | JP7438510B2 |

| CN (1) | CN118056398A |

| WO (1) | WO2023074279A1 |

Families Citing this family (6)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP7683208B2 (ja) * | 2020-12-16 | 2025-05-27 | 株式会社三洋物産 | Gaming machine |

| JP7683207B2 (ja) * | 2020-12-16 | 2025-05-27 | 株式会社三洋物産 | Gaming machine |

| JP7367715B2 (ja) * | 2021-03-02 | 2023-10-24 | 株式会社三洋物産 | Gaming machine |

| JP7509095B2 (ja) * | 2021-07-13 | 2024-07-02 | 株式会社三洋物産 | Gaming machine |

| JP7509094B2 (ja) * | 2021-07-13 | 2024-07-02 | 株式会社三洋物産 | Gaming machine |

| JP7509093B2 (ja) * | 2021-07-13 | 2024-07-02 | 株式会社三洋物産 | Gaming machine |

Citations (4)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

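| JP2019213039A (ja) * | 2018-06-04 | 2019-12-12 | 国立大学法人 東京大学 | Bird's-eye view video presentation system |

| JP2021077287A (ja) | 2019-11-13 | 2021-05-20 | オムロン株式会社 | Self-position estimation model learning method, self-position estimation model learning device, self-position estimation model learning program, self-position estimation method, self-position estimation device, self-position estimation program, and robot |

| WO2021095464A1 (ja) * | 2019-11-13 | 2021-05-20 | オムロン株式会社 | Robot control model learning method, robot control model learning device, robot control model learning program, robot control method, robot control device, robot control program, and robot |

| JP2021177665A (ja) | 2016-11-25 | 2021-11-11 | 国立大学法人東北大学 | Elastic wave device |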

Family Cites Families (3)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP2012064131A (ja) * | 2010-09-17 | 2012-03-29 | Tokyo Institute Of Technology | Map generation device, map generation method, method for moving a mobile body, and robot device |

| JP6481520B2 (ja) * | 2015-06-05 | 2019-03-13 | トヨタ自動車株式会社 | Vehicle collision avoidance assistance device |

| CN112242069B (zh) * | 2019-07-17 | 2021-10-01 | 华为技术有限公司 | Method and device for determining vehicle speed |

2021

- 2021-10-29 JP JP2021177665A patent/JP7438510B2/ja active Active

2022

- 2022-10-04 CN CN202280065202.7A patent/CN118056398A/zh active Pending

- 2022-10-04 US US18/696,154 patent/US20240404078A1/en active Pending

- 2022-10-04 EP EP22886611.7A patent/EP4425912A4/en active Pending

- 2022-10-04 WO PCT/JP2022/037139 patent/WO2023074279A1/ja not_active Ceased

2024

- 2024-02-02 JP JP2024015105A patent/JP2024060619A/ja active Pending

Patent Citations (5)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

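| JP2021177665A (ja) | 2016-11-25 | 2021-11-11 | 国立大学法人東北大学 | Elastic wave device |

| JP2019213039A (ja) * | 2018-06-04 | 2019-12-12 | 国立大学法人 東京大学 | Bird's-eye view video presentation system |

| JP2021077287A (ja) | 2019-11-13 | 2021-05-20 | オムロン株式会社 | Self-position estimation model learning method, self-position estimation model learning device, self-position estimation model learning program, self-position estimation method, self-position estimation device, self-position estimation program, and robot |

| WO2021095463A1 (ja) * | 2019-11-13 | 2021-05-20 | オムロン株式会社 | Self-position estimation model learning method, self-position estimation model learning device, self-position estimation model learning program, self-position estimation method, self-position estimation device, self-position estimation program, and robot |

| WO2021095464A1 (ja) * | 2019-11-13 | 2021-05-20 | オムロン株式会社 | Robot control model learning method, robot control model learning device, robot control model learning program, robot control method, robot control device, robot control program, and robot |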

Non-Patent Citations (5)

| Title |

|---|

| CUBESLAM: MONOCULAR 3D OBJECT SLAM, June 2018 (2018-06-01), Retrieved from the Internet <URL:https://arxiv.org/abs/1806.00557> |

| MONOCULAR 3D OBJECT SLAM, June 2018 (2018-06-01), Retrieved from the Internet <URL:https://arxiv.org/abs/1806.00557> |

| MONOLOCO: MONOCULAR 3D PEDESTRIAN LOCALIZATION AND UNCERTAINTY ESTIMATION, June 2019 (2019-06-01), Retrieved from the Internet <URL:https://arxiv.org/abs/1906.06059> |

| See also references of EP4425912A4 |

| THE CURRENT STATE OF AND AN OUTLOOK ON FIELD ROBOTICS, Retrieved from the Internet <URL:https://committees.jsce.or.jp/opcet_sip/system/files/0130_01.pdf> |

Also Published As

| Publication number | Publication date |

|---|---|

| JP2023066840A (ja) | 2023-05-16 |

| CN118056398A (zh) | 2024-05-17 |

| JP7438510B2 (ja) | 2024-02-27 |

| EP4425912A4 (en) | 2025-10-29 |

| JP2024060619A (ja) | 2024-05-02 |

| EP4425912A1 (en) | 2024-09-04 |

| US20240404078A1 (en) | 2024-12-05 |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| | 121 | EP: the EPO has been informed by WIPO that EP was designated in this application | Ref document number: 22886611; Country of ref document: EP; Kind code of ref document: A1 |

| | WWE | WIPO information: entry into national phase | Ref document number: 202280065202.7; Country of ref document: CN |

| | WWE | WIPO information: entry into national phase | Ref document number: 2022886611; Country of ref document: EP |

| | NENP | Non-entry into the national phase | Ref country code: DE |

| | ENP | Entry into the national phase | Ref document number: 2022886611; Country of ref document: EP; Effective date: 20240529 |