WO2021085429A1 - Remote-controlled device, remote control system, and remote control device - Google Patents

Remote-controlled device, remote control system, and remote control device

- Publication number

- WO2021085429A1 (PCT/JP2020/040293)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- remote control

- task

- remote

- control device

- subtask

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Classifications

-

- G—PHYSICS

- G08—SIGNALLING

- G08C—TRANSMISSION SYSTEMS FOR MEASURED VALUES, CONTROL OR SIMILAR SIGNALS

- G08C17/00—Arrangements for transmitting signals characterised by the use of a wireless electrical link

- G08C17/02—Arrangements for transmitting signals characterised by the use of a wireless electrical link using a radio link

-

- B—PERFORMING OPERATIONS; TRANSPORTING

- B25—HAND TOOLS; PORTABLE POWER-DRIVEN TOOLS; MANIPULATORS

- B25J—MANIPULATORS; CHAMBERS PROVIDED WITH MANIPULATION DEVICES

- B25J9/00—Programme-controlled manipulators

- B25J9/16—Programme controls

- B25J9/1679—Programme controls characterised by the tasks executed

- B25J9/1689—Teleoperation

-

- B—PERFORMING OPERATIONS; TRANSPORTING

- B25—HAND TOOLS; PORTABLE POWER-DRIVEN TOOLS; MANIPULATORS

- B25J—MANIPULATORS; CHAMBERS PROVIDED WITH MANIPULATION DEVICES

- B25J9/00—Programme-controlled manipulators

- B25J9/16—Programme controls

- B25J9/1602—Programme controls characterised by the control system, structure, architecture

- B25J9/161—Hardware, e.g. neural networks, fuzzy logic, interfaces, processor

-

- B—PERFORMING OPERATIONS; TRANSPORTING

- B25—HAND TOOLS; PORTABLE POWER-DRIVEN TOOLS; MANIPULATORS

- B25J—MANIPULATORS; CHAMBERS PROVIDED WITH MANIPULATION DEVICES

- B25J9/00—Programme-controlled manipulators

- B25J9/16—Programme controls

- B25J9/1628—Programme controls characterised by the control loop

- B25J9/163—Programme controls characterised by the control loop learning, adaptive, model based, rule based expert control

-

- B—PERFORMING OPERATIONS; TRANSPORTING

- B25—HAND TOOLS; PORTABLE POWER-DRIVEN TOOLS; MANIPULATORS

- B25J—MANIPULATORS; CHAMBERS PROVIDED WITH MANIPULATION DEVICES

- B25J9/00—Programme-controlled manipulators

- B25J9/16—Programme controls

- B25J9/1656—Programme controls characterised by programming, planning systems for manipulators

- B25J9/1664—Programme controls characterised by programming, planning systems for manipulators characterised by motion, path, trajectory planning

-

- B—PERFORMING OPERATIONS; TRANSPORTING

- B25—HAND TOOLS; PORTABLE POWER-DRIVEN TOOLS; MANIPULATORS

- B25J—MANIPULATORS; CHAMBERS PROVIDED WITH MANIPULATION DEVICES

- B25J9/00—Programme-controlled manipulators

- B25J9/16—Programme controls

- B25J9/1694—Programme controls characterised by use of sensors other than normal servo-feedback from position, speed or acceleration sensors, perception control, multi-sensor controlled systems, sensor fusion

- B25J9/1697—Vision controlled systems

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS

- G05B19/00—Programme-control systems

- G05B19/02—Programme-control systems electric

- G05B19/18—Numerical control [NC], i.e. automatically operating machines, in particular machine tools, e.g. in a manufacturing environment, so as to execute positioning, movement or co-ordinated operations by means of programme data in numerical form

- G05B19/4155—Numerical control [NC], i.e. automatically operating machines, in particular machine tools, e.g. in a manufacturing environment, so as to execute positioning, movement or co-ordinated operations by means of programme data in numerical form characterised by programme execution, i.e. part programme or machine function execution, e.g. selection of a programme

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05D—SYSTEMS FOR CONTROLLING OR REGULATING NON-ELECTRIC VARIABLES

- G05D1/00—Control of position, course, altitude or attitude of land, water, air or space vehicles, e.g. using automatic pilots

- G05D1/0011—Control of position, course, altitude or attitude of land, water, air or space vehicles, e.g. using automatic pilots associated with a remote control arrangement

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05D—SYSTEMS FOR CONTROLLING OR REGULATING NON-ELECTRIC VARIABLES

- G05D1/00—Control of position, course, altitude or attitude of land, water, air or space vehicles, e.g. using automatic pilots

- G05D1/02—Control of position or course in two dimensions

- G05D1/021—Control of position or course in two dimensions specially adapted to land vehicles

- G05D1/0212—Control of position or course in two dimensions specially adapted to land vehicles with means for defining a desired trajectory

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N20/00—Machine learning

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

- G06N3/0464—Convolutional networks [CNN, ConvNet]

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/08—Learning methods

- G06N3/09—Supervised learning

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/08—Learning methods

- G06N3/092—Reinforcement learning

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04Q—SELECTING

- H04Q9/00—Arrangements in telecontrol or telemetry systems for selectively calling a substation from a main station, in which substation desired apparatus is selected for applying a control signal thereto or for obtaining measured values therefrom

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS

- G05B2219/00—Program-control systems

- G05B2219/30—Nc systems

- G05B2219/39—Robotics, robotics to robotics hand

- G05B2219/39091—Avoid collision with moving obstacles

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS

- G05B2219/00—Program-control systems

- G05B2219/30—Nc systems

- G05B2219/39—Robotics, robotics to robotics hand

- G05B2219/39543—Recognize object and plan hand shapes in grasping movements

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS

- G05B2219/00—Program-control systems

- G05B2219/30—Nc systems

- G05B2219/40—Robotics, robotics mapping to robotics vision

- G05B2219/40089—Tele-programming, transmit task as a program, plus extra info needed by robot

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS

- G05B2219/00—Program-control systems

- G05B2219/30—Nc systems

- G05B2219/40—Robotics, robotics mapping to robotics vision

- G05B2219/40411—Robot assists human in non-industrial environment like home or office

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS

- G05B2219/00—Program-control systems

- G05B2219/30—Nc systems

- G05B2219/40—Robotics, robotics mapping to robotics vision

- G05B2219/40419—Task, motion planning of objects in contact, task level programming, not robot level

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS

- G05B2219/00—Program-control systems

- G05B2219/30—Nc systems

- G05B2219/40—Robotics, robotics mapping to robotics vision

- G05B2219/40499—Reinforcement learning algorithm

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS

- G05B2219/00—Program-control systems

- G05B2219/30—Nc systems

- G05B2219/50—Machine tool, machine tool null till machine tool work handling

- G05B2219/50391—Robot

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

- G06N3/02—Neural networks

- G06N3/04—Architecture, e.g. interconnection topology

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04Q—SELECTING

- H04Q2209/00—Arrangements in telecontrol or telemetry systems

- H04Q2209/50—Arrangements in telecontrol or telemetry systems using a mobile data collecting device, e.g. walk by or drive by

Definitions

- This disclosure relates to a remote-controlled device, a remote control system, and a remote control device.

- As a remote-controlled robot, a device that transmits gestures to a robot communicating remotely has been developed, but it is not designed to operate autonomously.

- Among robots that operate autonomously, robots that ask an operator for assistance when lost are known. They are well suited to tasks that cannot be performed fully autonomously, but it is difficult for multiple operators to control the robot.

- The present disclosure provides a remote control system that supports operations that the remote-controlled device executes autonomously.

- The remote-controlled device comprises one or more memories and one or more processors. When an event relating to a task performed by the remote-controlled object occurs, the one or more processors transmit information on a subtask of the task, receive a command regarding the subtask, and execute the task based on the command.

- FIG. 1 is a schematic diagram showing an outline of the remote control system 1 according to the embodiment.

- The remote control system 1 of the present embodiment includes at least a remote control device 10 and a remote-controlled device 20, and the remote control device 10 supports the operation of the remote-controlled device 20. At least one of the remote control device 10 and the remote-controlled device 20 may be used as a remote control support device.

- The remote control device 10 (control device) is given a command or the like by the operator 2 through various user interfaces, for example. This command is transmitted from the remote control device 10 to the remote-controlled device 20 via a communication interface or the like.

- The remote control device 10 is, for example, a computer, a remote controller, or a mobile terminal (smartphone, tablet, etc.). The remote control device 10 may be provided with a display, a speaker, or the like as an output user interface to the operator 2, and may be provided with a mouse, a keyboard, various buttons, a microphone, or the like as an input user interface from the operator 2.

- The remote-controlled device 20 (controlled device) is a device that is controlled by the remote control device 10.

- The remote-controlled device 20 produces its output on the end user side.

- the remote-controlled device 20 includes, for example, a remote-controlled object such as a robot or a drone.

- The remote-controlled object, such as a robot, is a device that operates autonomously or semi-autonomously. Examples include an industrial robot, a cleaning robot, an android, and a pet robot, as well as a monitoring device, a management device for an unmanned store or the like, and an automatic transfer device. It may also include a virtual operation target in a virtual space.

- In the following, the remote-controlled device 20 is described by taking a robot as an example, but this can be read as an arbitrary remote-controlled object as described above.

- The remote-controlled device 20 is not limited to this.

- an interface such as a gripper or an end effector that physically executes an operation on the end user side may not be included in the remote control system 1.

- the other part of the remote-controlled device 20 included in the remote-controlled system 1 may be configured to control the interface of the remote-controlled device 20 not included in the remote-controlled system 1.

- The operator 2 may be a human being, but may also be anything that can give instructions that appropriately resolve a problem arising in the remote-controlled device 20, such as a problematic subtask (for example, object recognition or designation of a gripping location). For example, the operator 2 may be a trained model or the like with higher performance than the remote-controlled device 20. This allows, for example, the trained model provided in the remote-controlled device 20 to be made more lightweight. It also makes it possible to lower the required sensor performance (camera resolution, etc.).

- The remote control system 1 performs remote control as follows, for example. First, the remote-controlled device 20 operates autonomously or semi-autonomously based on a predetermined task or a task determined from the surrounding environment.

- When the remote-controlled device 20 detects, during autonomous operation, a state in which task execution is difficult (hereinafter referred to as an event), it stops executing the task, for example.

- When an event occurs, for example, an operation for ensuring safety, an operation for avoiding a failure, an operation that gives priority to other executable tasks, or the like may be executed.

- When it becomes difficult to execute the task, an event may be detected and notified to the remote control device 10 only after the same operation has been attempted a plurality of times and has failed.
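
The retry-before-notify behavior described above could be sketched roughly as follows. This is only an illustration under stated assumptions: the `Event` structure, the `run_with_retries` helper, and the default of three attempts are hypothetical and do not come from the disclosure.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Event:
    """A state in which task execution is difficult (hypothetical structure)."""
    operation: str
    attempts: int


def run_with_retries(name: str, operation: Callable[[], bool],
                     max_attempts: int = 3) -> Optional[Event]:
    """Try an operation up to max_attempts times.

    Returns None on success, or an Event that would be sent to the
    remote control device 10 once every attempt has failed.
    """
    for _ in range(max_attempts):
        if operation():
            return None
    return Event(operation=name, attempts=max_attempts)


# A grip that fails twice and then succeeds raises no event.
outcomes = iter([False, False, True])
recovered = run_with_retries("grip", lambda: next(outcomes))

# A grip that always fails yields an event after three attempts.
event = run_with_retries("grip", lambda: False)
```

Deferring the notification this way keeps transient failures from interrupting the operator, at the cost of a longer delay before help is requested.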

- The remote-controlled device 20 analyzes the task in which the event has occurred, divides the task into a plurality of divided tasks, and extracts the divided tasks related to the event. In the present specification, dividing a task includes extracting a part of the task, and generating a task includes extracting the task.

- A task may be a set of subtasks, each of which is a unit of work smaller than the task.

- The remote-controlled device 20 may analyze the task in which the event has occurred and extract the subtask related to the event from the plurality of subtasks constituting the task, without dividing the task.

- the divided task may be read as a subtask.

- task division is not an essential configuration and may be arbitrary.

- Each module, function, class, method, or the like, or any combination thereof, may be a subtask.

- The task may be divided at any granularity, such as that of the modules above.

- The subtask may be analyzed and used as a divided task.

- A score, such as the accuracy of each divided task, may be calculated for each of a plurality of divided tasks. Based on these scores, the remote-controlled device 20 may extract the divided task that caused the event.
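
One way to picture the score-based extraction described above is the minimal sketch below. The subtask names, the score range of 0 to 1, and the 0.5 threshold are illustrative assumptions, not values given in the disclosure.

```python
from typing import Dict, Optional


def extract_problem_subtask(scores: Dict[str, float],
                            threshold: float = 0.5) -> Optional[str]:
    """Return the divided task with the lowest score if it is below threshold.

    `scores` maps a divided-task name to a score in [0, 1], such as a
    recognition confidence. Returns None when no score falls below the
    threshold, i.e. no divided task can be singled out as the cause.
    """
    if not scores:
        return None
    name = min(scores, key=lambda k: scores[k])
    return name if scores[name] < threshold else None


# Hypothetical scores for three divided tasks of a pick-and-place task.
scores = {"move_base": 0.93, "recognize_target": 0.31, "plan_grasp": 0.78}
culprit = extract_problem_subtask(scores)
```

Here `culprit` is `"recognize_target"`, the only divided task whose score falls below the threshold.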

- The remote-controlled device 20 transmits information on the extracted divided task to the remote control device 10. When it is difficult to divide the task, for example, the event information may be transmitted to the remote control device 10.

- The remote control device 10 outputs the event or the divided task to the operator 2 via the output user interface and transitions to a standby state, waiting for a command.

- When the operator 2 inputs a command to the remote control device 10 via the input user interface, the remote control device 10 outputs the command to the remote-controlled device 20, which resumes the stopped task based on the command.

- The command includes at least one of a direct instruction, which directly controls the physical operation of the interface of the remote-controlled device 20, and an indirect instruction, on the basis of which the remote-controlled device 20 itself decides how to control the interface.

- The direct instruction directly instructs the physical movement of the interface of the remote-controlled device 20.

- For example, when the remote-controlled device 20 is provided with an interface for performing a grip operation, a direct instruction is one in which the operator uses cursor keys or a controller to move the end effector of the remote-controlled device 20 to a position where the target can be gripped, and then instructs it to execute the grip operation.

- The indirect instruction indirectly instructs the operation of the remote-controlled device 20.

- For example, when the remote-controlled device 20 includes an interface for performing the grip operation, an indirect instruction is one that specifies the gripping position of the target; it does not directly instruct the physical movement of the interface of the remote-controlled device 20.

- In this case, the remote-controlled device 20 automatically moves the end effector based on the designated gripping position, without operation by the operator 2, and automatically executes the operation of gripping the target.
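
The distinction between the two kinds of command might be modeled as two message types, as in the sketch below. The class names, fields, and the `describe` helper are hypothetical and serve only to illustrate the difference; the disclosure does not specify a message format.

```python
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class DirectInstruction:
    """The operator drives the interface itself, e.g. one controller input."""
    axis: str      # e.g. "x", "y", "z", or "gripper" (assumed naming)
    amount: float  # signed displacement or grip opening


@dataclass(frozen=True)
class IndirectInstruction:
    """The operator supplies a hint; the device plans the motion on its own."""
    subtask: str                               # e.g. "designate_grip_position"
    grip_position: Tuple[float, float, float]  # target position to grip


Command = Union[DirectInstruction, IndirectInstruction]


def describe(command: Command) -> str:
    """Summarize how the remote-controlled device would treat a command."""
    if isinstance(command, DirectInstruction):
        return f"move {command.axis} by {command.amount}"
    return f"plan motion for {command.subtask} at {command.grip_position}"


direct = describe(DirectInstruction(axis="x", amount=0.05))
indirect = describe(IndirectInstruction("designate_grip_position", (0.4, 0.1, 0.02)))
```

A direct instruction carries the motion itself, whereas an indirect instruction carries only the information that the device needs to generate the motion.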

- As a concrete example for the remote-controlled device 20, consider a task of placing a target that has fallen on the floor onto a desk.

- Suppose that a robot equipped with an arm capable of grasping the target attempts this task, an event occurs, and the task is then carried out by the operator 2 through the remote control device 10.

- For direct instructions, for example, the operator 2 first moves the robot, while watching the camera image, to a position where the target can be picked up, using a controller or the like. For this movement, for example, the operator 2 decides in which direction and how far the robot should advance, and accordingly operates by choosing which of the eight direction buttons on the controller to press and for how long. When the operator 2 determines that the robot has reached a position where the target can be picked up, the operator 2 then moves the arm to a position where the target can be grasped. Like the movement of the robot, this movement is also executed directly by the operator 2 using, for example, a controller provided with buttons for designating a direction.

- When the operator 2 determines that the arm has moved to a position where the target can be gripped, the operator 2 executes an operation of gripping the target with the end effector provided on the arm.

- Next, the operator 2 moves the robot to the position of the desk by the same operation as above, moves the arm in the same manner to the position where the target is to be placed, and releases the grip of the end effector to place the target on the desk.

- Gripping and releasing are likewise executed directly by the operator 2 using, for example, a controller or the like.

- In this way, a direct instruction means that the operator 2 directly instructs the very operation performed by the remote-controlled device 20, using a pad, a controller, or the like.

- In the above example, the movement of the robot, the movement of the arm, the gripping of the target, and the release of the target, which are the subtasks constituting the task, are all directly instructed.

- Alternatively, only some of the subtasks may be performed by direct instructions.

- For indirect instructions, for example, the operator 2 specifies the position of the target in the image acquired by the camera. Following this designation, the robot autonomously moves to a position where the arm can reach the designated target, controls the arm, and grips the target. Subsequently, the operator 2 specifies the position where the target is to be placed. Following this instruction, the robot autonomously moves to a position from which the target can be placed at the specified position, and places the target there.

- In this way, an indirect instruction does not directly specify the operation; it is an instruction on the basis of which the remote-controlled device 20 can operate semi-autonomously.

- In the above example, the movement of the robot, the movement of the arm, the gripping of the target, and the release of the target, which are the subtasks constituting the task, are all performed by indirect instructions.

- Alternatively, only some of the subtasks may be performed by indirect instructions. More examples of indirect instructions are given later.

- In this way, the remote control system 1 is a system that performs semi-automatic operation: triggered by the occurrence of an event while the remote-controlled device 20 is operating, it receives an operation instruction from the operator 2 via the remote control device 10.

- This embodiment shows one aspect of the remote control system 1 described above.

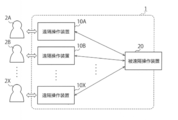

- FIG. 2 shows an example of a block diagram of the remote control system 1 according to the embodiment.

- the remote control device 10 of the present embodiment includes a communication unit 100, an output unit 102, and an input unit 104.

- In addition, at least one of an information processing unit that processes input / output data and a storage unit that stores necessary data and the like may be provided.

- The remote-controlled device 20 of the present embodiment includes a communication unit 200, a storage unit 202, an information processing unit 204, a motion generation unit 206, an operation unit 208, a sensor 210, a detection unit 212, and an analysis unit 214.

- The remote control device 10 receives, via the communication unit 100, an event generated by the remote-controlled device 20, or a subtask (divided task) generated by dividing the task based on the event.

- The output unit 102 outputs the received event or divided task to the operator.

- The output unit 102 includes, for example, a display as an output user interface, and causes the display to show the received event or divided task.

- The output user interface provided in the output unit 102 is not limited to a display. For example, the operator may be informed of the state of the remote-controlled device 20 by outputting sound from a speaker or by lighting a light emitting element such as an LED (Light Emitting Diode).

- The input unit 104 receives input from the operator. For example, the operator gives a direct instruction from the input unit 104 based on the event output to the output unit 102. As another example, the operator gives an indirect instruction from the input unit 104 based on the divided task output to the output unit 102.

- The communication unit 100 transmits the command to the remote-controlled device 20 via the communication interface.

- The remote-controlled device 20 is a device that operates autonomously or semi-autonomously.

- The communication unit 200 receives at least the information transmitted from the remote control device 10.

- The storage unit 202 stores data required for the operation of the remote-controlled device 20, programs required for information processing, data transmitted and received by the communication unit 200, and the like.

- The information processing unit 204 executes the information processing required by each component provided in the remote-controlled device 20.

- The information processing unit 204 may include a trained machine learning model, for example, a neural network model.

- The neural network may include, for example, an MLP (Multi-Layer Perceptron) or a CNN (Convolutional Neural Network), or may be based on a recurrent neural network. It is not limited to these and may be any appropriate neural network model.

- The motion generation unit 206 generates the motion required to execute the task during autonomous operation. When the communication unit 200 receives an indirect instruction from the remote control device 10, the motion generation unit 206 generates a motion related to the event or the divided task based on the indirect instruction. The motion generation unit 206 also generates or acquires a control signal for performing the generated motion. In either case, the motion generation unit 206 outputs the control signal to the operation unit 208.

- The operation unit 208 includes the user interface on the end user side of the remote-controlled device 20.

- For example, when the remote-controlled device 20 is a robot, the operation unit 208 is a physical mechanism with which the robot operates, such as the robot's arm, gripper, or moving device.

- The operation unit 208 receives the control signal generated by the motion generation unit 206, as shown by the solid line, or receives via the communication unit 200 a control signal for executing a direct instruction input to the remote control device 10, as shown by the broken line, and performs the actual operation in the end user environment.

- The sensor 210 senses the environment around the remote-controlled device 20.

- the sensor 210 may include, for example, a camera, a contact sensor, a weight sensor, a microphone, a temperature sensor, a humidity sensor, and the like.

- the camera may be an RGB-D camera, an infrared camera, a laser sensor, or the like, in addition to a normal RGB camera.

- the detection unit 212 detects the event.

- the detection unit 212 detects an event based on the information from the motion generation unit 206 or the sensor 210.

- An event is, for example, when the remote-controlled device 20 includes a grip portion, information that gripping has failed, or that the recognition confidence in the image acquired by the sensor 210 is insufficient and gripping is difficult.

- The analysis unit 214 analyzes the running task based on the event and, if it determines that the task can be divided, divides the running task and generates a divided task related to the event.

- Information regarding the divided task is output to the communication unit 200, and the communication unit 200 transmits the information to the remote control device 10. The determination of whether the task can be divided, the generation of the divided task related to the event, and the extraction of the subtask may be performed by any method. For example, they may be rule-based or performed by a trained model.
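
A rule-based version of this analysis could look something like the sketch below. The rule table, the event names, and the `analyze` function are assumptions made for illustration; the disclosure leaves the method open.

```python
from typing import Dict, List, Tuple

# Hypothetical rule table: event kind -> subtasks that may have caused it.
RULES: Dict[str, List[str]] = {
    "low_recognition_accuracy": ["recognize_target"],
    "grip_failed": ["recognize_target", "designate_grip_position"],
}


def analyze(event_kind: str, task_subtasks: List[str]) -> Tuple[str, List[str]]:
    """Decide what to send to the remote control device 10.

    Returns ("divided_task", subtasks) when the rules identify subtasks of
    the running task related to the event, and ("event", []) when the task
    cannot be divided for this event.
    """
    related = [s for s in RULES.get(event_kind, []) if s in task_subtasks]
    if related:
        return "divided_task", related
    return "event", []


running = ["move_base", "recognize_target", "grip", "place"]
kind, payload = analyze("grip_failed", running)
fallback = analyze("motor_overheat", running)
```

With these example rules, a grip failure is narrowed down to the recognition subtask, while an event with no matching rule is forwarded as a plain event so that the operator can respond with a direct instruction.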

- The remote control system 1 may include, for example, the communication units 100 and 200, the output unit 102, the input unit 104, the storage unit 202, the information processing unit 204, the motion generation unit 206, the detection unit 212, and the analysis unit 214. Other configurations are possible; for example, the operation unit 208 and the sensor 210 may be included in the remote control system 1. Further, each component of the remote-controlled device 20 may be provided in another device, such as the remote control device 10 or a server, as long as processing can be performed appropriately.

- The remote-controlled device 20 may be composed of one device or two or more devices.

- For example, the sensor 210 may be a camera or the like provided to monitor the space on the end user side.

- The storage unit 202 and the information processing unit 204 may be provided in the end-user-side environment as a computer separate from the robot or the like, and may control the robot or the like by transmitting wireless or wired signals to it.

- each device may be provided with a communication unit for communicating with each other.

- FIG. 3 is a flowchart showing an operation example of the remote control system 1 according to the present embodiment. As described above, the configurations of the remote control device 10 and the remote-controlled device 20 can be rearranged as appropriate, but this flowchart shows operation based on the configuration of FIG. 2.

- First, the remote-controlled device 20 executes a set task by autonomous operation (S200). For example, in the initial state, the remote-controlled device 20 may sense the environment with its sensor and execute the task on its own, or may receive a command from the remote control device 10 and execute the task.

- While no event is detected, the remote-controlled device 20 continues executing the task (S200).

- When an event is detected, the remote-controlled device 20 stops the operation of the operation unit 208 (in the present embodiment, the execution of the task), and the analysis unit 214 analyzes the event that occurred (S202).

- The analysis unit 214 divides the task, thereby generating and acquiring the divided task.

- The remote-controlled device 20 transmits the divided task or the event to the remote control device 10 via the communication unit 200.

- The remote-controlled device 20 transmits the divided task when the task could be divided in S202, and transmits the event when it was determined that task division is impossible, difficult, or unnecessary (S203).

- When the remote control device 10 receives, via the communication unit 100, the divided task or event transmitted by the remote-controlled device 20, it outputs the received divided task or event to the operator via the output unit 102 (S104).

- The remote control device 10 transitions to a standby state for receiving a command from the operator via the input unit 104 (S105).

- The input standby state need not begin only after this output; the remote control device 10 may be in the input standby state in its normal state.

- Upon receiving from the operator an indirect instruction for the output divided task or a direct instruction for the output event, the remote control device 10 transmits the indirect or direct instruction to the remote-controlled device 20 via the communication unit 100 (S106).

- When the command received by the communication unit 200 is an indirect instruction, the motion generation unit 206 generates a motion based on the received indirect instruction (S207). A control signal based on the generated motion is then transmitted to the operation unit 208, and the operation unit 208 is controlled so as to execute the task (S208).

- When the command received by the communication unit 200 is a direct instruction, the remote-controlled device 20 outputs the direct instruction to the operation unit 208, and the operation unit 208 is controlled so as to execute the task (S208). If the direct instruction is not supplied as a direct control signal, the information processing unit 204 may convert it into a signal that controls the operation of the operation unit 208 and output that control signal to the operation unit 208.

- The remote-controlled device 20 repeats the steps from S200 when execution of the task is not complete, or when it is complete but a new task exists. When execution of the task is complete and operation is finished, the remote-controlled device 20 ends the process.
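
The flow of FIG. 3 can be summarized as a small simulation. The sketch below is a simplification that follows only the indirect-instruction path; the `run_task` function, its callback parameters, and the subtask names are hypothetical, while the step labels in the comments are the ones used in the flowchart description.

```python
from typing import Callable, List, Optional


def run_task(subtasks: List[str],
             execute: Callable[[str, Optional[str]], bool],
             ask_operator: Callable[[str], str]) -> List[str]:
    """Run subtasks in order, asking the operator only when one fails.

    execute(subtask, hint) returns True on success; ask_operator(subtask)
    returns an indirect instruction (a hint) for the failed subtask.
    Returns a log of the flowchart steps that were visited.
    """
    log: List[str] = []
    for subtask in subtasks:
        log.append(f"S200 {subtask}")       # autonomous execution
        if execute(subtask, None):
            continue
        log.append(f"S202 {subtask}")       # stop and analyze the event
        log.append(f"S203 {subtask}")       # send the divided task
        hint = ask_operator(subtask)        # S104 to S106 on the operator side
        log.append(f"S207 {subtask}")       # generate motion from the hint
        if not execute(subtask, hint):      # S208: execute with the hint
            raise RuntimeError(f"{subtask} failed even with operator support")
        log.append(f"S208 {subtask}")
    return log


def execute(subtask: str, hint: Optional[str]) -> bool:
    # In this toy model, recognition succeeds only once a hint is supplied.
    return subtask != "recognize_target" or hint is not None


log = run_task(["move_base", "recognize_target", "grip"], execute,
               lambda subtask: "the target is the red cup")
```

In this run only the recognition subtask passes through S202 to S208; the other subtasks complete at S200 without involving the operator.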

- As described above, according to the present embodiment, the remote-controlled device 20 can be supported in executing the task by indirect instructions based on divided tasks obtained by dividing the task.

- The results of remote control by direct instruction may differ greatly depending on the skill level of the operator, and some tasks may take a long time to master.

- With indirect instructions, the influence of skill level can be reduced, and an appropriate result can be obtained regardless of the operator.

- An event is detected, for example, in the following cases.

- One case is when the target cannot be gripped, or a gripped target is dropped. This may be sensed by a weight sensor provided in the end effector.

- The event that the target cannot be gripped or has been dropped may also be detected by sensing with a tactile sensor or the like, by sensing with a camera, or by detecting the movement of the gripping portion of the end effector.

- Another case is when the information processing unit 204 performs recognition processing on the image captured by the camera and determines the gripping position or the like based on the recognition result, but the accuracy or confidence of the recognition result is low (for example, a recognition result of less than 50% is obtained).

- In this case, an event indicating low recognition accuracy is detected based on the output from the sensor 210.

- The detection unit 212 may monitor the recognition result of the information processing unit 204 and generate an event when there is a target whose recognition accuracy is lower than a predetermined threshold value.

- As another example, when the distance to the target to be gripped cannot be determined automatically from the image acquired by the sensor 210, an event indicating that the distance cannot be determined may be detected.

- Task analysis and division are executed, for example, as follows. First, when the remote-controlled device 20 fails to grip the target, the gripping failure may be detected as an event, the event may be notified to the remote control device 10 without analyzing the task, and a direct instruction from the operator may be accepted.

- By dividing the task, the task of executing the recognition is acquired as a divided task.

- The acquired divided task may be notified to the remote control device 10, and an indirect instruction from the operator regarding the divided task, capable of resolving the event, may be received.

- The indirect instruction from the operator includes, for example, providing the recognition rate of a target that is difficult to recognize, or providing a recognition result different from the recognition made by the remote-controlled device 20.

- Based on these indirect instructions received from the remote control device 10, the remote-controlled device 20 generates a motion with the motion generation unit 206 and executes the gripping motion.

- FIG. 4 shows an image acquired by a camera serving as the sensor 210 of the remote-controlled device 20 in one embodiment. Specifically, it is an image in which objects such as boxes, stationery, and toys are scattered on the floor. The task to be performed is to tidy up the stationery in the image.

- FIG. 5 is a diagram showing the recognition rates obtained when the remote-controlled device 20 recognizes each object in the above image.

- The recognition rate is, for example, a numerical value between 0 and 1, where a higher value indicates higher recognition accuracy.

- The pen stand in the foreground is recognized as stationery with a recognition rate of 0.62, which is relatively high, so the task can be executed for it.

- The pen in the back is recognized as stationery with a recognition rate of 0.25.

- If the remote-controlled device 20 is set to execute the task only under a certain condition, for example when the recognition rate exceeds a threshold of 0.5, it is difficult for the remote-controlled device 20 to execute the task for the pen.

- The analysis unit 214 analyzes the task and extracts the part related to this recognition as a divided task, or subtask.

- The remote-controlled device 20 transmits the divided task related to this recognition to the remote control device 10 as the divided task determined to be the cause of the problem.

- The operator inputs, via the input unit 104, an indirect instruction that the pen in the image is stationery.

- The remote control device 10 transmits the input indirect instruction to the remote-controlled device 20.

- The remote-controlled device 20 resumes execution of the task, with the motion generation unit 206 generating motion based on the recognition result that the pen is a target.
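The pen example can be sketched in a few lines, using the recognition rates and the 0.5 threshold quoted above. The function names and the dictionary layout are hypothetical, and treating the operator's label as a recognition rate of 1.0 is an assumption made only for this illustration.

```python
THRESHOLD = 0.5  # example threshold taken from the text


def find_uncertain(recognitions, threshold=THRESHOLD):
    """Return the objects whose recognition rate does not exceed the threshold."""
    return [name for name, (_label, rate) in recognitions.items() if rate <= threshold]


def apply_indirect_instruction(recognitions, name, label):
    """Return a copy in which the operator's label replaces the device's own result."""
    updated = dict(recognitions)
    # Assumption: an operator-supplied label is treated as fully trusted.
    updated[name] = (label, 1.0)
    return updated


# Recognition results corresponding to FIG. 5 (object names are illustrative).
recognitions = {
    "pen_stand": ("stationery", 0.62),
    "pen": ("stationery", 0.25),
}

uncertain = find_uncertain(recognitions)
resolved = apply_indirect_instruction(recognitions, "pen", "stationery")
```

After the indirect instruction is applied, no object remains below the threshold, which corresponds to the point at which the task can be resumed.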

- In this way, when a task is stopped because of the object recognition result, the action of recognizing the object, or the action executed based on that recognition, is extracted from the task, and the stopped task is resumed by an indirect instruction.

- By attempting task analysis instead of immediately notifying the remote control device 10 of the event, a divided task can be acquired, and an indirect instruction for it can also be requested from the operator.

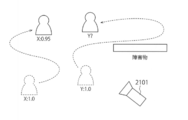

- FIG. 6 is a diagram showing an example of executing an indirect instruction when gripping fails.

- This gripping failure can be detected, for example, by the feedback sensor of the robot hand that performs the gripping.

- The detection unit 212 detects that the grip has failed based on the detection result of the sensor 210 that detects the state of the operation unit 208.

- The remote-controlled device 20 interrupts the task and divides the task of tidying up the stationery into two divided tasks: object recognition, and grip planning by the motion generation unit 206. It then estimates which divided task caused the failure. For example, when the target is recognized as stationery with a rate as high as 0.62, the analysis unit 214 determines that the failure occurred in the motion generation subtask of the motion generation unit 206. Based on this result, the analysis unit 214 notifies the remote control device 10 of the grip planning subtask and prompts the operator to give an indirect instruction about the grip plan.

- The operator specifies, as an indirect instruction, the gripping position indicated by the hatching in FIG. 6.

- The indirect instruction regarding the gripping position is transmitted to the remote-controlled device 20. Based on the transmitted information, the motion generation unit 206 generates a gripping motion in which the robot arm grips the target at the designated gripping position, and the task is resumed.
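The estimation of which divided task caused the gripping failure can be illustrated as follows. This is only a sketch: the text states that a high recognition rate (0.62) leads the analysis unit 214 to attribute the failure to motion generation, whereas attributing the failure to recognition when the rate is low is an assumption added here. The function name and subtask labels are hypothetical.

```python
def attribute_failure(recognition_rate, threshold=0.5):
    """Guess which divided task caused a gripping failure.

    A confident recognition suggests the grip plan was at fault.
    The low-confidence branch is an assumption for illustration.
    """
    if recognition_rate > threshold:
        return "grip_planning"
    return "object_recognition"


def build_request(recognition_rate):
    """Build the notification asking the operator for an indirect instruction."""
    subtask = attribute_failure(recognition_rate)
    return {"divided_task": subtask, "requested": "indirect_instruction"}


request = build_request(0.62)
```

For the pen stand in FIG. 6, the resulting request names the grip planning subtask, matching the case in which the operator is asked to indicate a gripping position.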

- FIG. 7 is a diagram, obtained by a sensor, of the state of the end user space in which the remote-controlled device 20 exists.

- The remote-controlled device 20 includes a robot and a sensor 210 (camera), provided separately from the robot, that acquires the state of the end user space as an image.

- The movement of the robot is determined, for example, not only from the robot's own viewpoint using sensors provided in the robot such as LiDAR, an odometer, and a torque sensor, but also from images acquired by the sensor 210 (camera) provided on the ceiling of the end user space.

- When the information processing unit 204, having acquired information from the sensor 210, estimates the movable range of the robot, the robot may become almost unable to move if that estimate is wrong. For example, in the state shown in FIG. 7, most of the central space would properly be regarded as the movable range of the robot. Depending on the estimation, however, a different result may be obtained. The following describes an example in which the detected event is that the movable range is restricted and the robot cannot get close enough to the target to be gripped.

- FIG. 8 is an example in which the information processing unit 204 incorrectly estimates the movable range.

- As a result of the estimation by the information processing unit 204 from the image, the remote-controlled device 20 recognizes only the shaded area as the movable range.

- The estimation by the information processing unit 204 is not limited to images; estimation based on the outputs of various sensors may be executed.

- In the remote-controlled device 20, the detection unit 212 detects that the robot cannot move and that it is therefore difficult to execute various tasks. When such a detection is made, execution of the task is stopped, and the analysis unit 214 analyzes and divides the task.

- The divided task is transmitted to the remote control device 10.

- The divided task in this case is a divided task related to area recognition.

- The remote control device 10 may be controlled so that privacy-related information is not output, for example based on an area determined by image recognition or an area designated using a marker or the like.

- The privacy-related information may be, for example, a password, a passbook number, or another identifier associated with the user, or areas such as a toilet or a dressing room.

- Not only images but also sound may be blocked. For example, sounds of daily life may be withheld from the output of the remote control device 10.

- An area that must not be seen may be determined, and the output data may be controlled so that this area cannot be seen.

- FIG. 9 is a diagram showing an example of indirect instructions.

- The analysis unit 214 may have the estimation result corrected and the motion generated by asking the operator to indicate the movable range.

- The operator specifies a movable range on the image, for example as indicated by the broken line, and an indirect instruction including information on the movable range may be transmitted to the remote-controlled device 20.

- FIG. 10 is a diagram showing another example of indirect instruction.

- Assuming that there is an error in the recognition, the analysis unit 214 may have the estimation result corrected and the motion generated by asking the operator to indicate a movement position, a movement route, or the like.

- The operator may specify a movement route on the image, as shown by the arrow, and transmit an indirect instruction including information on the movement route to the remote-controlled device 20.

- Both the input of the movable range as shown in FIG. 9 and the input of the movement route described above may be accepted. That is, the choice of how to resolve the event, in this example whether to specify the movable range or the movement route, may be left to the operator.

- The operator may decide to resolve the event by a direct instruction instead of an indirect instruction. In this case, the operator may transmit a signal that directly controls the remote-controlled device 20 via a controller or the like serving as the remote control device 10.

- FIG. 11 is an example of a display output for selecting the divided task for which the operator gives an indirect instruction, or for selecting a direct instruction.

- The display serving as the output unit 102 of the remote control device 10 may let the operator select the operation that is to be the target of the indirect instruction.

- The operator, for example, selects the button 1020 and specifies the movable range.

- A button 1023 for transmitting the indirect instruction may be displayed separately, and the indirect instruction may be transmitted to the remote-controlled device 20 by, for example, pressing the button 1023 after the movable range is designated.

- Alternatively, the operator selects the button 1021 and specifies the movement route.

- The indirect instruction may instead be transmitted when the button 1020, 1021, or the like is pressed after the movable range, the movement route, or the like has been designated.

- The operator may be able to switch to direct instruction by, for example, pressing the button 1022 for selecting direct instruction.

- In that case, the operator transmits the direct instruction to the remote-controlled device 20 via the remote control device 10.

- After switching to direct instruction, a button for switching back to indirect instruction may be displayed in a selectable state.

- The task may also be divided so that the interactive part is completed locally on the user side.

- As an example, suppose the task performed by the robot is pressing a button.

- When the operation is performed over a network with a large communication delay, the camera image is delayed, and it is difficult for the operator to press the button while checking this image. For example, if the button is pressed while watching the image, the control may lag because of the delay, causing problems such as pressing the button too far. The operation also becomes slow.

- The analysis unit 214 divides the task into divided tasks that cause few problems even if a delay occurs, for example moving the robot arm to a position from which the button is easy to press or recognizing the place where the button is to be pressed, and divided tasks that can cause problems if a delay occurs, for example pushing the button with the tip of the robot arm.

- The operator may then give direct or indirect instructions only for the divided tasks that cause few problems even if a delay occurs.

- For example, the operator may specify the position where the button is to be pressed by an indirect instruction. Based on this indirect instruction, the remote-controlled device 20 can move the robot arm and execute the task of pressing the button. This reduces the difficulty of operation caused by network delay.
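The division by delay sensitivity can be sketched as a simple partition. The `Subtask` type, the `delay_sensitive` flag, and the subtask names are hypothetical; how the analysis unit 214 actually judges delay sensitivity is not specified in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subtask:
    name: str
    delay_sensitive: bool  # assumption: known in advance for each subtask


def split_by_delay(subtasks):
    """Separate subtasks the operator may instruct from those kept local."""
    for_operator = [s.name for s in subtasks if not s.delay_sensitive]
    kept_local = [s.name for s in subtasks if s.delay_sensitive]
    return for_operator, kept_local


# The button-pressing task from the text, divided into three subtasks.
button_task = [
    Subtask("move_arm_near_button", delay_sensitive=False),
    Subtask("recognize_press_position", delay_sensitive=False),
    Subtask("push_button_with_arm_tip", delay_sensitive=True),
]

for_operator, kept_local = split_by_delay(button_task)
```

Only the first list would be offered to the operator over the delayed network; the final push is executed on the device side, where feedback is not affected by the communication delay.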

- In the above, a task of automatically performing a gripping operation with a robot that can move and grip is shown as an example, but the remote-controlled device 20 and the task are not limited to this.

- For example, the remote control system 1 can be used as a monitoring robot in an unmanned store or the like.

- FIG. 12 is a diagram showing an example applied to store monitoring or unmanned sales according to one embodiment.

- In this example, the entire store (environment), including the sensors and the cash register provided in the store, is regarded as the remote-controlled device 20.

- A first camera 2100 mounted on the ceiling of the store captures the state of a shelf on which products are displayed.

- The information processing unit 204 calculates the recognition level of each product in the image taken by the first camera 2100, for example as shown by the dotted arrows in the figure. The detection unit 212 detects the occurrence of an event in the result processed by the information processing unit 204. When the detection unit 212 detects an event, the event is output to the analysis unit 214.

- The analysis unit 214 checks the recognition levels of the products displayed on the shelf.

- The recognition levels of product A are 0.8, 0.85, 0.9, and 0.8, which are relatively high, so it can be determined that product A is recognized without any problem.

- The recognition level of the product at the upper right, however, is as low as 0.3.

- If the threshold for the recognition level is 0.5, this value falls below the threshold, and it is determined that the product cannot be recognized properly.

- In this case, the remote-controlled device 20 may have the analysis unit 214 analyze and divide the task, and transmit the divided task related to the recognition level to the remote control device 10.

- The operator can give an indirect instruction about the problematic part based on the information output from the output unit 102, for example the image acquired by the first camera 2100.

- The remote-controlled device 20 may resume motion generation based on this indirect instruction.

- For example, the operator confirms that the product is product B and transmits this to the remote-controlled device 20 as an indirect instruction.

- The remote-controlled device 20 continues shooting after recognizing that the product is product B. In this case, only the task related to recognition may be stopped, while the shooting task continues without stopping. In this way, instead of stopping all tasks, only the recognition-related task that appears to have a problem may be divided out and transmitted to the remote control device 10, as in this embodiment.

- The remote control system 1 may be used as a system for performing segmentation of products in stores and the like.

- The imaging target is not limited to products; an image of a person purchasing a product may also be acquired.

- FIG. 13 is a diagram showing an example applied to human tracking according to an embodiment.

- The second camera 2101 photographs, for example, the end user's space, and the remote-controlled device 20 executes a task of tracking a person in the image captured by the second camera 2101.

- The information processing unit 204 tracks a person based on the information acquired by the second camera 2101.

- The information processing unit 204 notifies the analysis unit 214 when the tracking accuracy has dropped.

- The analysis unit 214 analyzes and divides the task, and transmits the divided task related to recognition for tracking to the remote control device 10.

- The remote control device 10 transmits to the remote-controlled device 20 an indirect instruction, input by the operator, indicating that the person is person Y. Upon receiving this indirect instruction, the remote-controlled device 20 resumes or continues tracking person Y.

- The tasks shown in FIGS. 12 and 13 may operate at the same time. For example, when person Y enters a blind spot and then picks up product B intending to purchase it, the remote control system 1 receives, from the remote control device 10, an indirect instruction indicating that the person is person Y.

- The remote-controlled device 20 may then execute the sales transaction. The sales transaction task may be stopped until the indirect instruction is received.

- Similarly, when it is unclear whether the product picked up by person Y is product B, the remote control device 10 may transmit an indirect instruction about the product, and the remote-controlled device 20 may then execute the task appropriately.

- Both an indirect instruction for the divided task related to the person and an indirect instruction for the divided task related to the product may be transmitted from the remote control device 10.

- The remote-controlled device 20 may execute the task in accordance with these indirect instructions.

- The first camera 2100 and the second camera 2101 may be cameras that capture different areas.

- Each camera may perform a separate task.

- The first camera 2100 may perform tasks such as recognizing products on the shelves as described above.

- The second camera 2101 may perform tasks such as tracking a person as described above.

- A task of automatically handling the customer's purchase of a product may be executed using the images captured by the cameras 2100 and 2101.

- The remote control system 1 may be provided with a plurality of sensors or operation units, and may operate using information from them.

- Remote operation may be applied to an event or task that arises from the use of information from a plurality of sensors or operation units, which generally increases the difficulty of processing.

- The remote control system 1 may execute a plurality of tasks in parallel as separate tasks, and may further execute, in parallel, tasks based on the execution of those tasks.

- As described above, the remote control system 1 can be applied to various situations. The above examples are only some examples; the analysis and division of events and tasks are not limited to these and can be applied in various forms.

- FIG. 14 is a block diagram of the remote control system 1 according to the present embodiment.

- The remote control system 1 according to the present embodiment includes a training unit 216 in addition to the configuration of the remote control system 1 according to the above-described embodiment.

- The flow of data when a command that is an indirect instruction is received from the remote control device 10 is shown by dotted lines.

- Information regarding the received indirect instruction is stored in the storage unit 202.

- The information regarding the indirect instruction is, in the above examples, information for correcting the recognition result, information for correcting the movable range, and the like.

- The storage unit 202 stores such information, for example, in association with the processing result of the information processing unit 204, or in association with at least part of the information detected by the sensor.

- Based on the information regarding indirect instructions stored in the storage unit 202, the training unit 216 trains a trained model, for example the neural network that the information processing unit 204 uses for recognition. This training is carried out, for example, by reinforcement learning.

- The parameters of the trained model may instead be trained by an ordinary learning method rather than reinforcement learning. In this way, the training unit 216 uses the information regarding indirect instructions as teacher data to improve recognition accuracy.

- The training unit 216 may retrain each time an indirect instruction is received, or may train upon detecting a state in which sufficient computing resources are available, such as when no task is being executed. Training may also be performed periodically using cron or the like, for example at a predetermined time every day. As another example, retraining may be performed when the number of items of indirect-instruction information stored in the storage unit 202 exceeds a predetermined number.
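The four retraining triggers listed above can be combined in a small policy check. This is a sketch under stated assumptions: `RetrainPolicy`, `should_retrain`, and all parameter names are hypothetical, and the daily schedule is checked in code rather than with cron so that the example is self-contained.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import Optional


@dataclass
class RetrainPolicy:
    on_every_instruction: bool = False  # retrain whenever an instruction arrives
    when_idle: bool = False             # retrain when no task is running
    daily_at: Optional[time] = None     # retrain once a day at this time
    min_stored: Optional[int] = None    # retrain when stored items exceed this


def should_retrain(policy, *, new_instruction, task_running, now, last_trained, stored_count):
    """Return True if any enabled trigger in the policy fires."""
    if policy.on_every_instruction and new_instruction:
        return True
    if policy.when_idle and not task_running:
        return True
    if policy.daily_at is not None:
        scheduled = datetime.combine(now.date(), policy.daily_at)
        # Fire once after today's scheduled time has passed.
        if last_trained < scheduled <= now:
            return True
    if policy.min_stored is not None and stored_count > policy.min_stored:
        return True
    return False


# Usage: a count-based policy with a hypothetical limit of 100 stored items.
count_policy = RetrainPolicy(min_stored=100)
now = datetime(2024, 1, 2, 3, 0)
last = datetime(2024, 1, 1, 3, 0)
fires = should_retrain(count_policy, new_instruction=False, task_running=True,
                       now=now, last_trained=last, stored_count=101)
```

Keeping the triggers as independent flags mirrors the text, which presents them as alternatives that may be used separately or together.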

- FIG. 15 is a flowchart showing the flow of processing according to the present embodiment. Description of processing that is the same as in FIG. 3 is omitted.

- After receiving the operator's input, the remote control device 10 transmits the indirect or direct instruction to the remote-controlled device 20 (S106).

- When the received command is an indirect instruction, the remote-controlled device 20 generates an operation for executing the task (S207). The operation unit 208 then executes the generated operation, or the operation based on the direct instruction (S208).

- The remote-controlled device 20 stores information related to the instruction in the storage unit 202 (S209).

- The training unit 216 trains the trained model used for recognition or the like at a predetermined timing, for example when an indirect instruction is received (S210).

- Training by the training unit 216 may be independent of the task being executed by the remote control system 1, so training may run in parallel with operation.

- The parameters updated by training are applied to the trained model at a time that does not affect task execution.

- As described above, when it is difficult for the remote-controlled device 20 to execute a task autonomously, the remote control system 1 can receive an indirect instruction from the operator and resume task execution, as in the above-described embodiment. Furthermore, by storing the received indirect or direct instruction data as teacher data and training with it, the recognition accuracy and the like of the remote-controlled device 20 can be improved. As a result, for example, the same event can be prevented from occurring again, and the probability of similar events can be reduced. By executing machine-learning-based training in the remote-controlled device 20 in this way, tasks can be executed autonomously with greater accuracy and smoothness.

- In the above, the training unit 216 is provided in the remote-controlled device 20, but it may be provided in another device, for example when the remote-controlled device 20 is on a network with sufficient communication capacity and speed.

- When a plurality of remote-controlled devices 20 exist and can communicate with each other, information on a direct instruction, an indirect instruction, a task, or an event given to one remote-controlled device 20 may be used to train or update the trained model provided in another remote-controlled device 20. Further, the storage unit 202 may store the information obtained from the plurality of remote-controlled devices 20, and the training unit 216 may train the trained model using that information.

- FIG. 16 is a diagram showing another implementation example of the remote control device and the remote-controlled device in the remote control system 1.

- The remote control system 1 may include, for example, a plurality of remote control devices 10A, 10B, ..., 10X that can be connected to one remote-controlled device 20.

- The remote control devices 10A, 10B, ..., 10X are operated by the operators 2A, 2B, ..., 2X, respectively.

- The remote-controlled device 20 transmits a divided task or event to, for example, the remote control device of an operator who can process it appropriately. For example, notifications may be distributed so that requests for instructions are not concentrated on one remote control device 10. This prevents the load from being concentrated on one operator.

- A divided task can also be transmitted to the remote control device 10 of an operator 2 who is good at processing that divided task. This improves the accuracy of task execution and the accuracy of training by the training unit 216.

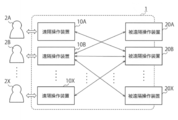

- FIG. 17 is a diagram showing another implementation example of the remote control device and the remote-controlled device in the remote control system 1.

- The remote control system 1 may be configured so that a plurality of remote-controlled devices 20A, 20B, ..., 20X can be connected to one remote control device 10.

- Each remote-controlled device 20 transmits a divided task or event to the one remote control device 10. For example, when the tasks executed by the remote-controlled devices 20 are minor, one operator 2 may in this way be able to send indirect or direct instruction commands for the tasks of the plurality of remote-controlled devices 20A, 20B, ..., 20N.

- FIG. 18 is a diagram showing another implementation example of the remote control device and the remote-controlled device in the remote control system 1.

- The remote control system 1 may include a plurality of remote control devices 10A, 10B, ..., 10X and a plurality of remote-controlled devices 20A, 20B, ..., 20N that can be connected to them.

- In this case, a switch or a communication control unit that determines which remote control device 10 and which remote-controlled device 20 are connected may be provided in the remote control system 1.

- For example, a signal for checking availability may be transmitted to the remote control device 10 in advance, and the divided task or the like may be transmitted after an ACK signal in response to that signal is received from the remote control device 10.

- The remote-controlled device 20 may retransmit the divided task to another remote control device 10.

- The remote-controlled device 20 may broadcast a divided task or the like, or transmit it simultaneously to some or all of the connected remote control devices 10, and an operator who can handle it at a receiving remote control device 10 may claim the divided task or the like and give an instruction.

- An operator may register in advance, in the remote control device 10, the processing that he or she is good at or can handle.

- The remote-controlled device 20 may check this registration information before or after execution of the task and transmit the divided task to an appropriate remote control device 10.
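The availability check and skill registration described above can be combined into a simple routing sketch. The `OperatorDevice` type, its `ack` method, and the skill labels are hypothetical, and falling back to an unregistered but available device when no skilled operator answers is an assumption of this sketch rather than something the text specifies.

```python
from dataclasses import dataclass, field


@dataclass
class OperatorDevice:
    name: str
    skills: set = field(default_factory=set)  # registered in advance by the operator
    available: bool = True

    def ack(self):
        """Stand-in for replying to an availability check with an ACK."""
        return self.available


def route_divided_task(kind, devices):
    """Pick a remote control device for a divided task of the given kind."""
    skilled = [d for d in devices if kind in d.skills]
    others = [d for d in devices if kind not in d.skills]
    # Prefer operators registered for this kind of task, then anyone available.
    for device in skilled + others:
        if device.ack():
            return device.name
    return None  # no operator acknowledged; the caller may retry later


devices = [
    OperatorDevice("10A", {"grip_planning"}),
    OperatorDevice("10B", {"object_recognition"}, available=False),
    OperatorDevice("10C", {"object_recognition"}),
]

chosen = route_divided_task("object_recognition", devices)
```

In this usage, device 10B is registered for object recognition but does not acknowledge, so the divided task goes to 10C, which corresponds to retransmitting to another remote control device 10.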

- A storage unit may be provided outside the plurality of remote-controlled devices 20, and information on divided tasks or indirect instructions may be stored in it.

- This storage unit may store information about only one of the plurality of remote-controlled devices 20, about several of the remote-controlled devices 20 in the remote control system 1, or about all of the remote-controlled devices 20 in the remote control system 1.

- In this case, the model may be trained outside the remote-controlled device 20.

- The model that undergoes this training may be a trained model provided in a remote-controlled device 20 in the remote control system 1, a trained model provided outside the remote control system 1, or an untrained model.

- The data transmission and reception described above are examples and are not limiting; any configuration may be used as long as the remote control device 10 and the remote-controlled device 20 are appropriately connected.

- As described above, the remote control system 1 may include an appropriate number of remote control devices 10 and remote-controlled devices 20, in which case more appropriate processing can be performed more smoothly.

- In the above description the term "remote operation" is used, but it may be read as "remote control". As described above, in the present disclosure, the remote control may be performed directly, or a device for remote control may be controlled.

- In the above-described embodiments, each device may be configured by hardware, or by information processing of software (a program) executed by a CPU (Central Processing Unit), a GPU (Graphics Processing Unit), or the like.

- When configured by software information processing, the software that realizes at least part of the functions of each device in the above-described embodiment may be stored in a non-transitory storage medium (non-transitory computer-readable medium) such as a flexible disk, a CD-ROM (Compact Disc-Read Only Memory), or a USB (Universal Serial Bus) memory, and loaded into a computer to execute the software information processing.

- The software may also be downloaded via a communication network.

- Further, the information processing may be executed by hardware by implementing the software in a circuit such as an ASIC (Application Specific Integrated Circuit) or an FPGA (Field Programmable Gate Array).

- The type of storage medium that stores the software is not limited.

- The storage medium is not limited to a removable one such as a magnetic disk or an optical disk, and may be a fixed storage medium such as a hard disk or a memory. Further, the storage medium may be provided inside the computer or outside the computer.

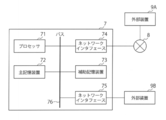

- FIG. 19 is a block diagram showing an example of the hardware configuration of each device (remote control device 10 or remote controlled device 20) in the above-described embodiment.

- Each device includes a processor 71, a main storage device 72, an auxiliary storage device 73, a network interface 74, and a device interface 75, and may be realized as a computer 7 in which these are connected via a bus 76.

- The computer 7 in FIG. 19 includes one of each component, but may include a plurality of the same component. Further, although one computer 7 is shown in FIG. 19, the software may be installed on a plurality of computers, and each of the plurality of computers may execute the same or a different part of the processing of the software. In this case, it may be a form of distributed computing in which each computer communicates via the network interface 74 or the like to execute processing. That is, each device (remote control device 10 or remote controlled device 20) in the above-described embodiment may be configured as a system that realizes its functions by one or a plurality of computers executing instructions stored in one or a plurality of storage devices. Further, the information transmitted from a terminal may be processed by one or a plurality of computers provided on the cloud, and the processing result may be transmitted to the terminal.

- Various operations of each device in the above-described embodiment may be executed in parallel using one or more processors or using a plurality of computers connected via a network. Further, various operations may be distributed to a plurality of arithmetic cores in the processor and executed in parallel. In addition, some or all of the processes, means, etc. of the present disclosure may be executed by at least one of a processor and a storage device provided on the cloud and capable of communicating with the computer 7 via a network. As described above, each device in the above-described embodiment may be in the form of parallel computing by one or a plurality of computers.
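The parallel execution described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the disclosed implementation: the operation `square_sum`, the helper `run_in_parallel`, and the chunking scheme are hypothetical, and worker threads stand in for the processors, arithmetic cores, or networked computers named in the text.

```python
from concurrent.futures import ThreadPoolExecutor


def square_sum(chunk):
    """Hypothetical operation: sum of squares over one chunk of data."""
    return sum(x * x for x in chunk)


def run_in_parallel(data, workers=2):
    """Split data into chunks and hand each chunk to a separate worker."""
    size = max(1, len(data) // workers)
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    # Each worker stands in for one processor, core, or computer (assumption).
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(square_sum, chunks))
    # Combine the partial results into the final result.
    return sum(partials)


result = run_in_parallel(list(range(10)))
```

In Python, `ProcessPoolExecutor` from the same module could be swapped in to spread the work over separate processes; the split, map, and combine structure stays the same.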

- The processor 71 may be an electronic circuit (processing circuit, processing circuitry, CPU, GPU, FPGA, ASIC, etc.) including a control device and an arithmetic unit of a computer. Further, the processor 71 may be a semiconductor device or the like including a dedicated processing circuit. The processor 71 is not limited to an electronic circuit using electronic logic elements, and may be realized by an optical circuit using optical logic elements. Further, the processor 71 may include an arithmetic function based on quantum computing.

- The processor 71 can perform arithmetic processing based on data and software (programs) input from each device or the like of the internal configuration of the computer 7, and output the arithmetic result or a control signal to each device or the like.

- The processor 71 may control each component constituting the computer 7 by executing an OS (Operating System) of the computer 7, an application, or the like.

- Each device (remote control device 10 and/or remote controlled device 20) in the above-described embodiment may be realized by one or a plurality of processors 71.

- The processor 71 may refer to one or more electronic circuits arranged on one chip, or may refer to one or more electronic circuits arranged on two or more chips or devices. When a plurality of electronic circuits are used, the electronic circuits may communicate by wire or wirelessly.

- The main storage device 72 is a storage device that stores instructions executed by the processor 71, various data, and the like, and the information stored in the main storage device 72 is read out by the processor 71.

- The auxiliary storage device 73 is a storage device other than the main storage device 72. Note that these storage devices mean arbitrary electronic components capable of storing electronic information, and may be semiconductor memories. The semiconductor memory may be either a volatile memory or a non-volatile memory.

- The storage device for storing various data in each device (remote control device 10 or remote controlled device 20) in the above-described embodiment may be realized by the main storage device 72 or the auxiliary storage device 73, or may be realized by an internal memory built into the processor 71.

- The storage unit 202 in the above-described embodiment may be mounted on the main storage device 72 or the auxiliary storage device 73.

- A plurality of processors may be connected (coupled) to one storage device (memory), or a single processor may be connected.

- A plurality of storage devices (memories) may be connected (coupled) to one processor.

- When each device (remote control device 10 or remote controlled device 20) in the above-described embodiment is composed of at least one storage device (memory) and a plurality of processors connected (coupled) to the at least one storage device (memory), it may include a configuration in which at least one of the plurality of processors is connected (coupled) to the at least one storage device (memory).

- This configuration may be realized by storage devices (memories) and processors included in a plurality of computers.

- A configuration in which the storage device (memory) is integrated with the processor (for example, a cache memory including an L1 cache and an L2 cache) may be included.

- The network interface 74 is an interface for connecting to the communication network 8 wirelessly or by wire. As the network interface 74, one conforming to an existing communication standard may be used. The network interface 74 may exchange information with the external device 9A connected via the communication network 8.

- The external device 9A includes, for example, a camera, a motion capture device, an output destination device, an external sensor, an input source device, and the like.

- An external storage device (for example, network storage or the like) may be provided.

- The external device 9A may be a device having some functions of the components of each device (remote control device 10 or remote controlled device 20) in the above-described embodiment.

- The computer 7 may receive a part or all of the processing result via the communication network 8, as with a cloud service, or may transmit it to the outside of the computer 7.

- The device interface 75 is an interface such as USB that directly connects to the external device 9B.

- The external device 9B may be an external storage medium or a storage device (memory).

- The storage unit 202 in the above-described embodiment may be realized by the external device 9B.

- The external device 9B may be an output device.

- The output device may be, for example, a display device for displaying an image, a device for outputting audio, or the like.

- Examples include output destination devices such as an LCD (Liquid Crystal Display), a CRT (Cathode Ray Tube), a PDP (Plasma Display Panel), an organic EL (Electro Luminescence) panel, a speaker, a personal computer, a tablet terminal, or a smartphone.

- The external device 9B may be an input device.

- The input device includes a device such as a keyboard, a mouse, a touch panel, or a microphone, and gives the information input by these devices to the computer 7.

- The expression (including similar expressions) of "at least one (one) of a, b and c" or "at least one (one) of a, b or c" is used.

- Expressions such as "with data as input / based on data / according to data" (including similar expressions) include, unless otherwise specified, the case where the various data themselves are used as input and the case where various data that have undergone some processing (for example, noise-added data, normalized data, intermediate representations of various data, etc.) are used as input.

- Some result can be obtained "based on / according to the data".
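The two cases of "data as input" can be sketched as follows. This is only an illustration: the functions `normalize`, `add_noise`, and `estimate_mean`, the sample values, and the noise scale are all hypothetical and do not appear in the source. The same downstream process receives the data itself, normalized data, or noise-added data.

```python
import random


def normalize(values):
    """Scale values linearly to the range [0, 1]; constant input maps to zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def add_noise(values, scale, rng):
    """Add uniform noise in [-scale, scale] to each value."""
    return [v + rng.uniform(-scale, scale) for v in values]


def estimate_mean(inputs):
    """Hypothetical downstream process that takes data as input."""
    return sum(inputs) / len(inputs)


raw = [2.0, 4.0, 6.0]   # illustrative data, not from the source
rng = random.Random(0)  # fixed seed so the sketch is repeatable

from_raw = estimate_mean(raw)                         # the data itself as input
from_normalized = estimate_mean(normalize(raw))       # normalized data as input
from_noisy = estimate_mean(add_noise(raw, 0.1, rng))  # noise-added data as input
```

In all three calls the result is obtained "with data as input" in the sense defined above; only the preprocessing applied before the call differs.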

- The terms "connected" and "coupled", as used in this specification (including claims), are intended as non-limiting terms that include any of direct connection / coupling, indirect connection / coupling, electrical connection / coupling, communicative connection / coupling, operative connection / coupling, physical connection / coupling, and the like. The terms should be interpreted as appropriate according to the context in which they are used, but any form of connection / coupling that is not intentionally or naturally excluded should be interpreted as included in the terms, without limitation.

- The expression "A is configured to B (A configured to B)" means that the physical structure of the element A has a configuration capable of executing the operation B.

- It may also include that a permanent or temporary setting (setting / configuration) of the element A is set (configured / set) so as to actually execute the operation B.

- For example, when the element A is a general-purpose processor, it suffices that the processor has a hardware configuration capable of executing the operation B and is configured to actually execute the operation B by setting a permanent or temporary program (instructions).

- When the element A is a dedicated processor, a dedicated arithmetic circuit, or the like, it suffices that the circuit structure of the processor is implemented so as to actually execute the operation B, regardless of whether or not control instructions and data are actually attached.

- The term "maximize" refers to finding a global maximum value, finding an approximate value of a global maximum value, finding a local maximum value, and finding an approximate value of a local maximum value, and should be interpreted as appropriate according to the context in which the term is used. It also includes probabilistically or heuristically finding approximate values of these maximum values.

- The term "minimize" refers to finding a global minimum value, finding an approximate value of a global minimum value, finding a local minimum value, and finding an approximate value of a local minimum value, and should be interpreted as appropriate according to the context in which the term is used. It also includes probabilistically or heuristically finding approximate values of these minimum values.

- The term "optimize" refers to finding a global optimal value, finding an approximate value of a global optimal value, finding a local optimal value, and finding an approximate value of a local optimal value, and should be interpreted as appropriate according to the context in which the term is used. It also includes probabilistically or heuristically finding approximate values of these optimal values.
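The distinction between local, global, and approximate minima can be illustrated with a small sketch. Everything here is an assumption made for illustration: the objective `f`, the step size, the search range, and the helper names are hypothetical and are not taken from the source. Plain gradient descent finds an approximation of whichever local minimum is nearest its starting point, while random restarts are one heuristic, probabilistic way to approximate the global minimum.

```python
import random


def f(x):
    """Hypothetical objective with two local minima (not from the source)."""
    return x**4 - 3 * x**2 + x


def grad_f(x):
    """Derivative of f."""
    return 4 * x**3 - 6 * x + 1


def gradient_descent(x0, lr=0.01, steps=2000):
    """Approximate the local minimum reached from the start point x0."""
    x = x0
    for _ in range(steps):
        x -= lr * grad_f(x)
    return x


def random_restart_minimize(n_starts=20, seed=0):
    """Heuristic search: run gradient descent from random starts, keep the best."""
    rng = random.Random(seed)
    candidates = [gradient_descent(rng.uniform(-2.0, 2.0)) for _ in range(n_starts)]
    return min(candidates, key=f)


local_x = gradient_descent(2.0)     # approximation of a local minimum
best_x = random_restart_minimize()  # heuristic approximation of the global minimum
```

For this particular `f`, descent from 2.0 settles at roughly x = 1.13, a local minimum, whereas the restart search settles at roughly x = -1.30, where `f` is lower. Both outcomes count as "minimizing" in the broad sense defined above.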

- Remote control system, 10, 10A, 10B, 10N: Remote control device, 100: Communication unit, 102: Output unit, 104: Input unit, 20, 20A, 20B, 20N: Remote controlled device, 200: Communication unit, 202: Storage unit, 204: Information processing unit, 206: Motion generator, 208: Moving part, 210: Sensor, 212: Detector, 214: Analysis unit, 216: Training unit

Landscapes

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Software Systems (AREA)

- Automation & Control Theory (AREA)

- Mathematical Physics (AREA)

- Evolutionary Computation (AREA)

- Artificial Intelligence (AREA)

- Mechanical Engineering (AREA)

- Robotics (AREA)

- General Engineering & Computer Science (AREA)

- Computing Systems (AREA)

- Data Mining & Analysis (AREA)

- Remote Sensing (AREA)

- Radar, Positioning & Navigation (AREA)

- Aviation & Aerospace Engineering (AREA)

- Health & Medical Sciences (AREA)

- Life Sciences & Earth Sciences (AREA)

- Biomedical Technology (AREA)

- Biophysics (AREA)

- Computational Linguistics (AREA)

- General Health & Medical Sciences (AREA)

- Molecular Biology (AREA)

- Computer Networks & Wireless Communication (AREA)

- Medical Informatics (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Human Computer Interaction (AREA)

- Manufacturing & Machinery (AREA)

- Fuzzy Systems (AREA)

- Manipulator (AREA)

- Selective Calling Equipment (AREA)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| US17/733,949 US20220250247A1 (en) | 2019-11-01 | 2022-04-29 | Remote controlled device, remote control system and remote control device |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| JP2019-200241 | 2019-11-01 | | |
| JP2019200241A JP7550511B2 (ja) | 2019-11-01 | 2019-11-01 | Autonomous mobile robot, device, remote controlled device, and program |

Related Child Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| US17/733,949 Continuation US20220250247A1 (en) | 2019-11-01 | 2022-04-29 | Remote controlled device, remote control system and remote control device |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| WO2021085429A1 (ja) | 2021-05-06 |

Family

ID=75712178

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| PCT/JP2020/040293 Ceased WO2021085429A1 (ja) | 2019-11-01 | 2020-10-27 | Remote controlled device, remote control system, and remote control device |

Country Status (3)

| Country | Link |
|---|---|
| US (1) | US20220250247A1 (en) |
| JP (1) | JP7550511B2 (en) |
| WO (1) | WO2021085429A1 (en) |

Families Citing this family (6)

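| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| JP2022063707A (ja) * | 2020-10-12 | 2022-04-22 | Tokyo Robotics Inc. | Robot system, control method and program therefor, and system |
| JPWO2023080230A1 (en) * | 2021-11-08 | 2023-05-11 | | |
| CN115167248A (zh) * | 2022-08-12 | 2022-10-11 | Zhengzhou Yipu Network Technology Co., Ltd. | Control method for an unmanned supermarket control system |
| JP7652157B2 (ja) * | 2022-09-02 | 2025-03-27 | Toyota Motor Corporation | Remote operation system, remote operation method, and control program |
| CN120958841A (zh) * | 2023-03-10 | 2025-11-14 | Sony Group Corporation | Information processing device, remote operation control method, remote operation control system, and autonomous mobile body |
| WO2025177835A1 (ja) * | 2024-02-20 | 2025-08-28 | Sony Group Corporation | Information processing device, information processing method, and program |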

Citations (2)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP2003296855A (ja) * | 2002-03-29 | 2003-10-17 | Toshiba Corp | Monitoring device |