WO2020115930A1 - Procedure detection device and procedure detection program - Google Patents


- Publication number

- WO2020115930A1 (international application PCT/JP2019/023375)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- procedure

- image

- processing unit

- hand

- estimator


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased


Classifications

-

- G—PHYSICS

- G05—CONTROLLING; REGULATING

- G05B—CONTROL OR REGULATING SYSTEMS IN GENERAL; FUNCTIONAL ELEMENTS OF SUCH SYSTEMS; MONITORING OR TESTING ARRANGEMENTS FOR SUCH SYSTEMS OR ELEMENTS

- G05B19/00—Programme-control systems

- G05B19/02—Programme-control systems electric

- G05B19/418—Total factory control, i.e. centrally controlling a plurality of machines, e.g. direct or distributed numerical control [DNC], flexible manufacturing systems [FMS], integrated manufacturing systems [IMS] or computer integrated manufacturing [CIM]

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/20—Analysis of motion

-

- Y—GENERAL TAGGING OF NEW TECHNOLOGICAL DEVELOPMENTS; GENERAL TAGGING OF CROSS-SECTIONAL TECHNOLOGIES SPANNING OVER SEVERAL SECTIONS OF THE IPC; TECHNICAL SUBJECTS COVERED BY FORMER USPC CROSS-REFERENCE ART COLLECTIONS [XRACs] AND DIGESTS

- Y02—TECHNOLOGIES OR APPLICATIONS FOR MITIGATION OR ADAPTATION AGAINST CLIMATE CHANGE

- Y02P—CLIMATE CHANGE MITIGATION TECHNOLOGIES IN THE PRODUCTION OR PROCESSING OF GOODS

- Y02P90/00—Enabling technologies with a potential contribution to greenhouse gas [GHG] emissions mitigation

- Y02P90/02—Total factory control, e.g. smart factories, flexible manufacturing systems [FMS] or integrated manufacturing systems [IMS]

Definitions

- The present invention relates to a procedure detection device and a procedure detection program that apply image recognition technology to determine, from a person's movements, whether a task has been performed according to the prescribed procedure, and that measure the execution time of each procedure.

- Patent Document 1 provides a system and method for monitoring the use of hand-washing agents in order to determine compliance with hand-hygiene guidelines: “The hand-washing agent is provided together with a detectable volatile substance, such as an odorant. After washing, the subject holds his or her hands to a detector (a badge or the like) that includes a sensor for the volatile substance, a notifier that reports detection of the volatile substance, and an indicator showing hand-hygiene use and hand-hygiene compliance.”

- Existing methods of verifying hand washing include the following. The first method is verification by checklist.

- The second method is visual verification with a hand-wash checker.

- The third method is visual verification using a hand-stamp culture medium.

- The fourth method is a rapid cleanliness test using an ATP (adenosine triphosphate) swab test.

- The fifth method is to determine the bacterial count with a simple swab test.

- The sixth method is to infer how thoroughly hand washing is being carried out from the amount of hand-washing agent used.

- The invention of Patent Document 1 relates to the sixth method.

- The first to fifth methods have the drawback of adding verification steps after hand washing.

- In particular, the entries on a checklist are left to the person's own subjective judgment, so hand washing may be recorded as properly done even when it was inadequate.

- The sixth method can grasp the state of hand washing only at a macroscopic level, and it is difficult for each individual to confirm that every step has been performed without omission. It is therefore an object of the present invention to determine, as objective data, whether hand washing has been reliably performed.

- The procedure detection device of the present invention includes an estimator that learns images of a series of procedures performed by a person and estimates, from images captured by an imaging device, which of the series of procedures is being performed; and a processing unit that determines the procedure for each image from the procedures estimated for the images by the estimator and calculates the time associated with each determined procedure.

- The procedure detection program of the present invention causes a computer to execute: a step of learning images of a series of procedures performed by a person; a step of estimating, from images captured by an imaging device, which of the series of procedures is being performed; a step of determining the procedure for each image from the procedures estimated for the images; and a step of calculating the time for each determined procedure.

- Other means will be described in the modes for carrying out the invention.

- The hands of a person (performer) washing his or her hands are photographed by a camera installed above the hand-washing area to create model images, and these model images are learned by deep learning for each procedure so that each procedure can be recognized. Then, the hands of a person newly washing his or her hands are photographed, and the time for which that person performs each procedure is calculated.

- FIG. 1A is a configuration diagram of a hand-washing detection system 1A according to the first embodiment.

- The hand-washing detection system 1A includes an imaging device 3 and a touch panel display 4 installed in a hand-washing area 6, a recognition device 2, and a display unit 25.

- The hand-washing detection system 1A detects each hand-washing procedure performed by each person (worker), determines whether hand washing has been reliably carried out, and records the result as objective data.

- The imaging device 3 is a camera that repeatedly captures still images at a predetermined frame period.

- The image acquisition rate of the imaging device 3 is, for example, 30 frames/second.

- The imaging device 3 is installed, for example, above the hand-washing area 6; it photographs each person's hands during hand washing and outputs the captured images to the recognition device 2.

- The recognition device 2 (procedure detection device) is a computer that includes a CPU (Central Processing Unit), a ROM (Read Only Memory), and a RAM (Random Access Memory), which are not shown.

- The recognition device 2 learns model images of hands being washed, for each procedure, by deep learning; it then determines the procedure from new images of hands being washed and calculates the time spent on each determined procedure.

- The result determined by the recognition device 2 is displayed on the display unit 25.

- The recognition device 2 stores, in the storage unit 28, a model video (moving image) of hand washing for each procedure. The model video can thus be presented to the worker so that each procedure is performed correctly.

- The display unit 25 is, for example, a liquid crystal panel, and displays characters, figures, images, and the like.

- The display unit 25 displays the time related to each procedure determined by the recognition device 2.

- The recognition device 2 and the display unit 25 may be connected directly to the imaging device 3 in the hand-washing area 6, or they may be located at a remote site and communicatively connected via a cloud or the like.

- The touch panel display 4 is a transparent touch panel laminated on a liquid crystal panel. It is used to input which procedure a model image corresponds to when the recognition device 2 learns model images of hands being washed.

- The touch panel display 4 also accepts a selection between the normal operation mode and the learning mode, and outputs the selection to the additional learning unit 29 described later.

- The normal operation mode is a mode in which the trained estimator 22 estimates which procedure an image captured by the imaging device 3 shows.

- The learning mode is a mode in which the estimator 22 learns images captured by the imaging device 3; it is used to improve recognition through additional learning or to learn a new motion.

- Registering additional images with the touch panel display 4 is not essential. Therefore, as shown in FIG. 1B, the touch panel display 4 and the additional learning unit 29 may be omitted. This is because additional learning carries a risk of overfitting, and the additional learning unit 29 is unnecessary when hands are washed according to the standard procedure.

- The touch panel display 4 may optionally be attached to the hand-washing detection system 1B shown in FIG. 1B. If the hand-washing detection system is implemented as a cloud service, its functions can be improved by, for example, downloading improved learning results to the processing unit 23. Furthermore, the touch panel operation function is not essential; the display unit 25 may simply show the screens of FIG. 18 and FIG. 19 described later.

- The recognition device 2 further includes a difference processing unit 21, an estimator 22, a processing unit 23, a person detection unit 24, a storage unit 28, and an additional learning unit 29.

- The difference processing unit 21 detects the difference between the image of a given frame and the image of the immediately preceding frame. The detection result is output to the processing unit 23.

- The estimator 22 is, for example, a convolutional neural network that, after learning by deep learning the relationship between images (teacher data) and procedures, estimates which procedure a new image captured by the imaging device 3 corresponds to. For example, when the estimator 22 learns one procedure recorded for 10 seconds (at 30 frames/second), the teacher data consists of 300 still images, each paired with that procedure. When the estimator 22 learns N procedures for 10 seconds each, the teacher data consists of N × 300 still images paired with their procedures.
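The teacher-data sizes above follow from the example frame rate: 10 s × 30 frames/s = 300 labelled still images per procedure, and N times that for N procedures. A minimal Python sketch of the arithmetic; `teacher_image_count` and `FRAME_RATE_FPS` are hypothetical names, and 30 frames/s is only the example rate given for the imaging device 3.

```python
FRAME_RATE_FPS = 30  # example acquisition rate of imaging device 3 (hypothetical constant name)


def teacher_image_count(seconds_per_procedure: float, num_procedures: int,
                        fps: int = FRAME_RATE_FPS) -> int:
    """Number of (still image, procedure label) pairs recorded as teacher data."""
    frames_per_procedure = int(round(seconds_per_procedure * fps))
    return frames_per_procedure * num_procedures


print(teacher_image_count(10, 1))   # 300 images for one 10-second procedure
print(teacher_image_count(10, 11))  # 3300 images for procedures #1 to #11
```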

- The estimator 22 includes an estimation unit that uses a convolutional neural network to estimate, from images of a series of procedures performed by a person, which of those procedures is being performed.

- A model obtained by learning this teacher data by deep learning is built in the estimator 22.

- The estimation result of the estimator 22 is output to the processing unit 23.

- The additional learning unit 29 operates the estimator 22 in the learning mode to perform additional learning. Specifically, while the motion to be learned is being filmed, the performer keeps pressing, on the touch panel, the label number (an existing one or a newly registered one corresponding to the hand-washing procedure).

- Teacher data is created for as long as the performer keeps pressing, and creation stops when the performer releases it.

- Here, teacher data means model images for the procedure of the designated label number.

- The estimator 22 learns the created teacher data as a model of the procedure with the designated label number. As a result, an estimation model tagged with date and time information is formed in the estimator 22. The user can select or delete each estimation model formed in the estimator 22.
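The press-and-hold labelling described above can be sketched as a small recorder that pairs each captured frame with the label number currently held. This is a hypothetical illustration: the class and method names are assumptions, frames are treated as opaque objects, and the training of the estimator itself is not shown.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Any, List, Optional, Tuple


@dataclass
class TeacherDataRecorder:
    """Collects (frame, label number) pairs only while a label button is held."""
    samples: List[Tuple[Any, int]] = field(default_factory=list)
    _held_label: Optional[int] = field(default=None, init=False)

    def press(self, label: int) -> None:
        self._held_label = label      # performer starts holding a label number

    def release(self) -> None:
        self._held_label = None       # creation of teacher data stops

    def on_frame(self, frame: Any) -> None:
        # Frames captured while no label is held are not used as teacher data.
        if self._held_label is not None:
            self.samples.append((frame, self._held_label))

    def snapshot(self) -> dict:
        """Package the collected data with a creation timestamp for a new model."""
        return {"created": datetime.now(), "samples": list(self.samples)}
```

Attaching the timestamp at packaging time mirrors the date-and-time tag the text mentions for each estimation model, which is what lets the user later pick or delete individual models.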

- The person detection unit 24 detects, from the still images captured by the imaging device 3, whether a new person has come to the hand-washing area 6.

- The detection result of the person detection unit 24 is output to the processing unit 23.

- The person detection unit 24 may recognize individual persons distinguishably, or may simply recognize whether a person is present; either is acceptable.

- When the person detection unit 24 detects a new person, the processing unit 23 starts the procedure confirmation process.

- The processing unit 23 determines which procedure is being performed from the estimation results of the estimator 22.

- From the detection result of the difference processing unit 21, the processing unit 23 further detects whether the hands are still, so that the procedure is not being performed, or moving, so that it is being performed. The processing unit 23 thereby measures how long each determined procedure has been performed.

- The storage unit 28 is a non-volatile storage device such as a hard disk or flash memory.

- The storage unit 28 stores the procedures determined by the processing unit 23 and their execution times, as well as a program (not shown) for executing each process of the recognition device 2.

- The storage unit 28 may further store a model image of each procedure, so that the model can be shown to the worker as an image while he or she performs each procedure.

- FIG. 2 is a configuration diagram of a hand-washing detection system 1C according to a modified example.

- The hand-washing detection system 1C includes a recognition device 2A in place of the recognition device of the hand-washing detection system 1A shown in FIG. 1A, and further includes a finger vein authentication device 5.

- The recognition device 2A does not include the person detection unit 24.

- The finger vein authentication device 5 detects the person who is washing his or her hands by detecting a finger, and identifies the person from finger vein information.

- The authentication result obtained by the finger vein authentication device 5 is output to the processing unit 23.

- The processing unit 23 starts the procedure confirmation process.

- The processing unit 23 determines which procedure is being performed from the estimation results of the estimator 22.

- From the detection result of the difference processing unit 21, the processing unit 23 further detects whether the hands are still, so that the procedure is not being performed, or moving, so that it is being performed. The processing unit 23 thereby detects which procedure has been performed and for how long.

- FIG. 3 is a diagram showing an example hand-washing procedure and the time required for each step. Images of each procedure are shown in FIGS. 4 to 14, described later.

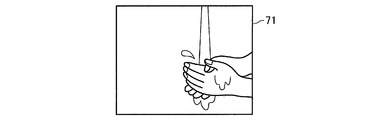

- Procedure #1 is wetting the hands with running water, shown in procedure image 71.

- Procedure image 71 is similar to procedure image 79 of procedure #9, described later, in which the hands are thoroughly rinsed with running water.

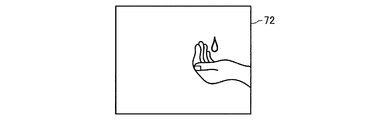

- Procedure #2 is applying an appropriate amount of liquid soap to the hands, shown in procedure image 72.

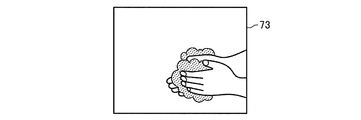

- Procedure #3 is rubbing the palms together, shown in procedure image 73 of FIG. 6.

- Procedure #4 is rubbing the back of each hand with the palm of the other hand, shown in procedure image 74.

- When the recognition device 2 is trained, this may be divided into a procedure of rubbing the back of the right hand with the left palm and a procedure of rubbing the back of the left hand with the right palm.

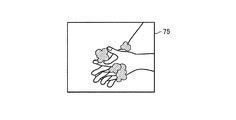

- Procedure #5 is rubbing between the fingers, shown in procedure image 75.

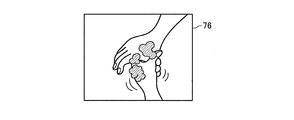

- Procedure #6 is rubbing each thumb with the other hand, shown in procedure image 76.

- When the recognition device 2 is trained, this may be divided into a procedure of rubbing the right thumb with the left hand and a procedure of rubbing the left thumb with the right hand.

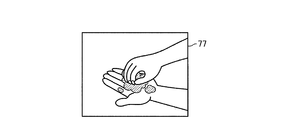

- Procedure #7 is rubbing each palm with the fingertips of the other hand, shown in procedure image 77.

- When the recognition device 2 is trained, this may be divided into a procedure of rubbing the left palm with the right fingertips and a procedure of rubbing the right palm with the left fingertips.

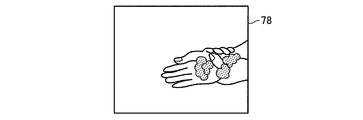

- Procedure #8 is rubbing each wrist, shown in procedure image 78. When the recognition device 2 is trained, this may be divided into a procedure of rubbing the right wrist and a procedure of rubbing the left wrist.

- Procedure #9 is rinsing thoroughly with running water, shown in procedure image 79.

- Procedure image 79 is similar to procedure image 71 of procedure #1.

- Procedure #10 is wiping off the water thoroughly, shown in procedure image 710.

- Procedure #11 is rubbing in disinfectant, shown in procedure image 711.

- Following these procedures raises the cleaning rate of hand washing and keeps cleaning performance consistent, which makes it possible to remove the pathogenic microorganisms that cause food poisoning.

- The estimator 22 learns using the procedure numbers above as labels, so each procedure can be detected even if the procedures are not performed in numerical order. In addition, post-processing by the processing unit 23 can detect whether the hands were washed in the prescribed order; in that case, detection that follows the procedure-number order is judged correct. However, the rules for hand washing (how to wash) are expected to differ for each customer who installs the hand-washing detection system 1B. In that case, the estimator 22 may be trained on the washing method specified by those rules. For example, rather than simply following procedures #1 to #11, procedures #2 to #9 may be repeated twice to remove norovirus effectively.
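The order check performed in post-processing is not specified in detail. One simple possibility, sketched here under that assumption, collapses the per-frame procedures into a sequence of steps and compares it with a customer-specific rule such as the norovirus variant. The names `collapse`, `follows_prescribed_order`, `STANDARD`, and `NOROVIRUS` are hypothetical, and `None` stands in for frames judged “unknown”.

```python
from itertools import groupby
from typing import List, Optional, Sequence

STANDARD = list(range(1, 12))                         # procedures #1 to #11
NOROVIRUS = [1] + list(range(2, 10)) * 2 + [10, 11]   # #2 to #9 performed twice


def collapse(frame_procedures: Sequence[Optional[int]]) -> List[int]:
    """Turn per-frame procedures into the sequence of steps, dropping unknown (None) frames."""
    known = [p for p in frame_procedures if p is not None]
    # groupby merges runs of identical consecutive labels into one step.
    return [proc for proc, _ in groupby(known)]


def follows_prescribed_order(frame_procedures: Sequence[Optional[int]],
                             prescribed: Sequence[int]) -> bool:
    """True if the observed steps match the prescribed sequence exactly."""
    return collapse(frame_procedures) == list(prescribed)
```

Because the rule is just a list, swapping `STANDARD` for `NOROVIRUS` (or any other customer rule) changes the check without touching the estimator.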

- To add a procedure, the person (performer) taps the additional procedure number displayed on the touch panel display 4 and performs the hand-washing motion as a model, for example for 10 seconds, so that the estimator 22 learns it. This requires at least a few performers. The recognition rate may drop when detecting hand washing by a large, unspecified population; additional learning is advisable in that case as well.

- Recognition is regarded as normal when, over a series of hand-washing operations, the difference in execution time for each procedure number is less than 10%.
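The text does not say what the 10% difference is measured against. Assuming it compares the detected execution time of each procedure with a reference duration (for example, one timed manually from the same recording), the criterion could be checked as in this sketch; `recognition_is_normal` and its parameters are hypothetical.

```python
from typing import Dict


def recognition_is_normal(detected: Dict[int, float], reference: Dict[int, float],
                          tolerance: float = 0.10) -> bool:
    """True if every procedure's detected time is within `tolerance` of its reference time.

    Assumption: `reference` maps procedure number -> reference duration in seconds (> 0).
    A procedure missing from `detected` counts as 0 seconds.
    """
    for proc, ref in reference.items():
        det = detected.get(proc, 0.0)
        if abs(det - ref) >= tolerance * ref:   # difference must be strictly below 10%
            return False
    return True
```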

- The learning model that runs on the estimator 22 is stored, for example, in the storage unit 28 and can be selected freely on a selection screen displayed on the touch panel display 4.

- In step S10, the person detection unit 24 of the hand-washing detection system 1B determines whether a new person has been detected. If no new person is detected (No), the process returns to step S10; if a new person is detected (Yes), the process proceeds to step S11. Here, the new person is the worker who performs hand washing.

- The processing unit 23 initializes the current confirmed procedure to the state before hand washing starts (S11).

- The imaging device 3 acquires a captured image (S12), and augmentation processing is performed (S13).

- Augmentation is a process of padding out the image data by applying artificial operations to the original image, such as shifting, rotating, enlarging, or reducing it.
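The text lists shifting, rotating, enlarging, and reducing as augmentation operations but gives no parameters or library. A minimal NumPy sketch, assuming nearest-neighbour resampling about the image centre, with output pixels that map from outside the frame set to zero; `augment` and its arguments are hypothetical.

```python
import numpy as np


def augment(image: np.ndarray, shift=(0, 0), angle_deg: float = 0.0,
            scale: float = 1.0) -> np.ndarray:
    """Scale, rotate (about the centre), then shift an H x W (x C) image.

    Uses inverse mapping: for every output pixel, find the source pixel and copy it.
    """
    h, w = image.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    # Undo the shift and move the origin to the image centre.
    y = ys - shift[0] - cy
    x = xs - shift[1] - cx
    t = np.deg2rad(angle_deg)
    cos_t, sin_t = np.cos(t), np.sin(t)
    # Undo the rotation and the scaling, then move the origin back.
    src_x = (cos_t * x + sin_t * y) / scale + cx
    src_y = (-sin_t * x + cos_t * y) / scale + cy
    src_xi = np.rint(src_x).astype(int)
    src_yi = np.rint(src_y).astype(int)
    valid = (src_xi >= 0) & (src_xi < w) & (src_yi >= 0) & (src_yi < h)
    out = np.zeros_like(image)
    out[valid] = image[src_yi[valid], src_xi[valid]]
    return out
```

Inverse mapping guarantees that every output pixel receives a value (or zero), which avoids the holes that forward-mapping each source pixel would leave when enlarging or rotating.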

- The trained estimator 22 estimates the procedure corresponding to this image (S14). Note that the processing unit 23 may instead initialize the current procedure to the pre-washing state when the person detection unit 24 no longer detects a person; the timing is not limited.

- Using a moving majority vote, the processing unit 23 decides which procedure the image n frames in the past shows, by majority vote over the images of the n frames before and after it (S15).

- The value n is set appropriately according to the estimation accuracy of the estimator 22.

- Because the processing unit 23 does not execute step S15 until n frames have been captured after the person is detected, no procedure is determined during that period.

- The processing unit 23 evaluates the result of the majority vote over the n frames before and after the target frame (S16). If several procedures tie for the majority (Yes), the processing unit 23 determines whether they include the same procedure as the previously determined procedure (S18).

- The previously determined procedure is the procedure determined for the frame immediately before the target frame; when the target frame is the first frame, it is the procedure set in step S11. If this condition is satisfied (Yes), the processing unit 23 determines the previously determined procedure as the procedure of the target frame (S19), and the process proceeds to step S23 of FIG. 16.

- In step S18, if the tied results do not include the previously determined procedure (No), the processing unit 23 further determines whether they include the next scheduled procedure after the previously determined procedure (S20).

- The next scheduled procedure is the procedure one step after the previously determined procedure. If this condition is satisfied (Yes), the processing unit 23 determines the next scheduled procedure as the procedure of the target frame (S21), and the process proceeds to step S23 of FIG. 16.

- In step S20, if the tied results do not include the next scheduled procedure (No), the procedure of the target frame is set to “unknown” (S22), and the process proceeds to step S23 of FIG. 16.

- In step S16, if the majority vote does not produce a tie (No), that is, if a single procedure wins the majority vote, the processing unit 23 determines that procedure as the procedure of the target frame (S17), and the process proceeds to step S23 of FIG. 16.

- In this way, the processing unit 23 can correct procedures that the estimator 22 estimated erroneously.
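Steps S15 to S22 can be sketched as a moving majority vote with the tie-breaking rules above. This is a minimal illustration under several assumptions: procedures are integers, the state set in S11 is encoded as `0`, “unknown” is `None`, the next scheduled procedure is simply `previous + 1`, and after an “unknown” frame the last known procedure is kept as the reference. `decide_frame` and `moving_majority` are hypothetical names.

```python
from collections import Counter
from typing import List, Optional, Sequence

UNKNOWN: Optional[int] = None  # hypothetical encoding of the "unknown" result (S22)
BEFORE_WASHING = 0             # hypothetical encoding of the state set in S11


def decide_frame(window: Sequence[int], previous: int) -> Optional[int]:
    """Decide the target frame's procedure from the estimates in its window."""
    counts = Counter(window)
    top = max(counts.values())
    leaders = [proc for proc, c in counts.items() if c == top]
    if len(leaders) == 1:        # S16 No -> S17: a single procedure wins
        return leaders[0]
    if previous in leaders:      # S18 Yes -> S19: keep the previous procedure
        return previous
    if previous + 1 in leaders:  # S20 Yes -> S21: advance to the next scheduled one
        return previous + 1
    return UNKNOWN               # S20 No -> S22


def moving_majority(estimates: Sequence[int], n: int) -> List[Optional[int]]:
    """Apply decide_frame to every frame that has n frames on each side."""
    previous = BEFORE_WASHING
    decided: List[Optional[int]] = []
    for i in range(n, len(estimates) - n):
        result = decide_frame(estimates[i - n:i + n + 1], previous)
        decided.append(result)
        if result is not UNKNOWN:  # assumption: an "unknown" frame keeps the old reference
            previous = result
    return decided
```

For example, `moving_majority([1, 1, 2, 1, 1], n=1)` yields `[1, 1, 1]`, removing the isolated misestimate. The `previous + 1` rule fits the standard #1 to #11 order; a repeated sequence such as #9 followed by #2 would need an explicit “next step” table instead.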

- In step S23 of FIG. 16, the processing unit 23 determines whether the target frame has been determined as procedure #1 or procedure #9 by the moving majority vote.

- This check is needed because procedure #1 and procedure #9 produce almost identical captured images, which makes them difficult to distinguish using only the estimation by the estimator 22 and the moving majority vote of the processing unit 23.

- If so (Yes), the processing unit 23 determines whether the previously determined procedure is in the middle of hand washing (S24).

- “In the middle of hand washing” means any of procedures #2 to #9.

- In step S24, if the previously determined procedure is in the middle of hand washing (Yes), the processing unit 23 re-determines the target frame as procedure #9 (S25); if it is not (No), the processing unit 23 re-determines it as procedure #1 (S26).

- After this re-determination, the process proceeds to step S27. If the processing unit 23 determines in step S23 that the target frame is neither procedure #1 nor procedure #9 (No), the process proceeds directly to step S27. Through steps S23 to S26, the processing unit 23 can re-determine visually similar procedures based on their relationship to the previously determined procedure. Furthermore, even when the user specifies that the same motion is to be performed several times as different procedures, the processing unit 23 can distinguish and determine these procedures.
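The re-determination in steps S23 to S26 reduces to a small rule. A minimal sketch, assuming integer procedure numbers and `None` for an undetermined previous procedure; `resolve_rinse` and `MID_WASH` are hypothetical names.

```python
from typing import Optional

MID_WASH = range(2, 10)  # procedures #2 to #9 count as "in the middle of hand washing"


def resolve_rinse(decided: Optional[int], previous: Optional[int]) -> Optional[int]:
    """Re-determine frames labelled #1 or #9 from the previously determined procedure."""
    if decided not in (1, 9):        # S23 No: leave other procedures unchanged
        return decided
    if previous in MID_WASH:         # S24 Yes -> S25: water after soaping is the rinse
        return 9
    return 1                         # S24 No -> S26: water before soaping is wetting
```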

- In step S27, the difference processing unit 21 performs image difference processing.

- Image difference processing calculates the difference between the target image and the image immediately preceding it.

- The processing unit 23 determines whether the difference between the target image and the immediately preceding image is equal to or greater than a threshold (S28). If the difference is at or above the threshold, the hands can be judged to be moving; if it is below the threshold, the hands can be judged to be still. In other words, the difference processing unit 21 detects cases where hand washing is not actually being performed because the hands are still, even though the procedure of the target frame has been determined.

- If the image difference is equal to or greater than the threshold (Yes), the processing unit 23 adds one frame's time to the execution time of the procedure (S29) and proceeds to step S30. If the image difference is below the threshold (No), the processing unit 23 proceeds directly to step S30.
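The patent does not define the difference measure or the threshold value. Assuming the mean absolute pixel difference between consecutive frames, steps S27 to S29 might look like the sketch below, where each frame judged as moving adds one frame period (1/30 s at the example rate) to its procedure's execution time. `mean_abs_diff`, `accumulate_times`, and `FRAME_PERIOD_S` are hypothetical names.

```python
from typing import Dict, Hashable, List, Sequence

import numpy as np

FRAME_PERIOD_S = 1 / 30  # one frame at the example rate of 30 frames/second


def mean_abs_diff(frame: np.ndarray, prev_frame: np.ndarray) -> float:
    """Mean absolute pixel difference; int16 avoids uint8 wrap-around (S27)."""
    return float(np.mean(np.abs(frame.astype(np.int16) - prev_frame.astype(np.int16))))


def accumulate_times(frames: List[np.ndarray], procedures: Sequence[Hashable],
                     threshold: float) -> Dict[Hashable, float]:
    """Sum execution time per determined procedure, counting only moving-hand frames.

    procedures[i] is the procedure determined for frames[i]; the first frame has no
    predecessor, so it is skipped.
    """
    totals: Dict[Hashable, float] = {}
    for prev, cur, proc in zip(frames, frames[1:], procedures[1:]):
        if mean_abs_diff(cur, prev) >= threshold:                 # S28 Yes: hands moving
            totals[proc] = totals.get(proc, 0.0) + FRAME_PERIOD_S  # S29
        # S28 No: hands still, nothing is added for this frame
    return totals
```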

- The processing unit 23 records each procedure and its execution time in the storage unit 28 (S30). The processing unit 23 then displays the execution status of the hand-washing procedure on the display unit 25 (S31), and the process returns to step S12.

- The execution status of the hand-washing procedure displayed on the display unit 25 is illustrated in FIG. 17, described later.

- FIG. 17 is a diagram illustrating an example of the procedure detection result.

- Screen 8 displays the hand-washing procedure execution status 81 on the left and a rectangular detection area 82 at the lower center.

- The detection area 82 is the target image processed by the estimator 22 and the difference processing unit 21.

- “0 Not Ready” in the execution status 81 indicates the time during which the hands are not being washed.

- This is, for example, the time during which the hands are still.

- “1 Water:” indicates the time of procedure #1, wetting the hands with running water, and the bar graph to its right indicates the execution time.

- “2 Soap:” indicates the time of procedure #2, applying an appropriate amount of liquid soap to the hands, and the bar graph to its right indicates the execution time.

- “Palm:” indicates the time of procedure #3, rubbing the palms together, and the bar graph to its right indicates the execution time.

- “4 Back(R):” indicates the time of the procedure of rubbing the back of the right hand with the palm of the left hand, and the bar graph to its right indicates the execution time.

- “5 Back(L):” indicates the time of the procedure of rubbing the back of the left hand with the palm of the right hand, and the bar graph to its right indicates the execution time.

- “9 Fingers(R):” indicates the time of the procedure of rubbing the palm of the left hand with the fingertips of the right hand, and the bar graph to its right indicates the execution time.

- “A Fingers(L):” indicates the time of the procedure of rubbing the palm of the right hand with the fingertips of the left hand, and the bar graph to its right indicates the execution time.

- “B Wrist(R):” indicates the time of the procedure of rubbing the right wrist, and the bar graph to its right indicates the execution time.

- “C Wrist(L):” indicates the time of the procedure of rubbing the left wrist, and the bar graph to its right indicates the execution time.

- “Z Unknown:” indicates time during hand washing that could not be classified into any of the above procedures, and the bar graph to its right indicates the execution time.

- By having an administrator monitor the execution status 81 on screen 8, it is possible to confirm whether each employee washed his or her hands according to the procedure. Furthermore, by installing a display unit 25 in each hand-washing area 6 and displaying screen 8, employees themselves can check whether they are washing their hands according to the procedure.

- FIG. 18 is a diagram showing screen 9 at the start of hand washing in the modified example.

- Screen 9 is displayed on the touch panel display 4.

- An execution result list 91 is displayed on the right,

- guidance 92 for the next procedure is displayed below it,

- and a live camera image 93 and a model video 94 are displayed on the left.

- The screen shows a case in which the worker “Hitachi Hanako” has placed a finger on the finger vein authentication device 5 shown in FIG. 2 for authentication.

- The model video 94 replays the model video of the next procedure to be performed, “wet with running water.” This lets the worker see how to perform the current procedure.

- FIG. 19 is a diagram showing screen 9 upon completion of hand washing in the modified example.

- The execution result list 91 is displayed on the right, and the live camera image 93 on the left. “Completed” is shown for every entry in the execution result list 91, indicating that each procedure has been performed for the prescribed time.

- “Hand wash completed” is displayed superimposed on the screen.

- With the hand-washing detection systems 1A, 1B, and 1C of the first embodiment, it can be determined whether hand washing has been reliably performed, and the result can be stored in the storage unit 28 as objective data. In cooperation with the finger vein authentication device 5 of the modified example (see FIG. 2), the system can also be provided as a device for ensuring that hand washing is performed.

- FIG. 20 is a block diagram of the hand-washing detection system 1D in the second embodiment. As shown in FIG. 20, the hand-washing detection system 1D includes a recognition device 2B in place of the recognition device of the hand-washing detection system 1A shown in FIG. 1A.

- The recognition device 2B includes a skeleton information estimation unit 26 and a motion difference processing unit 27 instead of the difference processing unit 21.

- The skeleton information estimation unit 26 estimates the two- or three-dimensional coordinates of each joint of the hands, which allows the recognition device 2B to track finger movements accurately.

- The two- or three-dimensional joint coordinates estimated by the skeleton information estimation unit 26 are output to the estimator 22 and the motion difference processing unit 27.

- Examples of the skeleton information estimation unit 26 include OpenPose (registered trademark), VISION POSE (registered trademark), and VNect.

- The estimator 22 is a learning and estimation means based on machine learning, including neural networks; after learning the relationship between joint coordinates (teacher data) and procedures, it estimates which procedure a given set of joint coordinates corresponds to.

- The estimation result of the estimator 22 is output to the processing unit 23.

- The motion difference processing unit 27 calculates the difference between joint coordinates in successive frames and thereby the amount of finger motion.

- The amount of finger motion calculated by the motion difference processing unit 27 is output to the processing unit 23.
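The measure of finger motion is not specified. One plausible choice, assumed here, is the sum of per-joint Euclidean displacements between consecutive frames; `motion_amount` is a hypothetical helper that works for both 2D and 3D coordinates.

```python
import numpy as np


def motion_amount(joints_prev, joints_cur) -> float:
    """Sum of per-joint Euclidean displacement between two frames.

    joints_prev, joints_cur: array-likes of shape (num_joints, 2) or (num_joints, 3),
    with joints listed in the same order in both frames.
    """
    d = np.asarray(joints_cur, dtype=float) - np.asarray(joints_prev, dtype=float)
    return float(np.linalg.norm(d, axis=1).sum())
```

Comparing this value with a threshold plays the same role as the image-difference threshold of the first embodiment, but it is insensitive to background changes that do not move any joint.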

- The processing unit 23 starts a series of procedure estimations when the person detection unit 24 detects a new person.

- The processing unit 23 determines which procedure is being performed from the series of estimation results of the estimator 22.

- From the detection result of the motion difference processing unit 27, the processing unit 23 further detects whether the hands have stopped, so that the procedure is not being performed, or are moving, so that it is being performed. The processing unit 23 thereby detects which procedure has been performed and for how long.

- FIG. 21, FIG. 22, and FIG. 23 are flowcharts (No. 1) of the procedure detection process.

- In step S40, the person detection unit 24 of the hand-washing detection system 1D determines whether a new person has been detected. If not (No), the process returns to step S40; if so (Yes), the process proceeds to step S41.

- Here, the new person is the worker who is about to wash their hands.

- The processing unit 23 initializes the current procedure to the state before hand washing starts (S41).

- The imaging device 3 acquires a captured image (S42).

- The skeleton information estimation unit 26 estimates the two-dimensional or three-dimensional coordinates of each joint of the hand (S43).

- The skeleton information estimation unit 26 takes either the midpoint between the left and right wrists or the center of gravity of each hand as the origin, and converts each skeleton coordinate into a vector from that origin (S44). It then normalizes the vectors by rescaling them so that hands appearing large or small in the image are brought to an appropriate common size (S45), and the process proceeds to step S46.
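Steps S44 and S45 can be sketched as follows, assuming joints are 2D or 3D tuples, the indices of the two wrists are known, and the vectors are scaled by the longest joint vector. The document does not say which scale factor is used, so that choice, like the function name `normalize_skeleton`, is an assumption.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def normalize_skeleton(joints: Sequence[Point], left_wrist: int, right_wrist: int) -> List[Point]:
    """Sketch of S44-S45: origin at the wrist midpoint, then scale so the
    longest joint vector has length 1 (an assumed normalization rule)."""
    lw, rw = joints[left_wrist], joints[right_wrist]
    origin = tuple((a + b) / 2.0 for a, b in zip(lw, rw))
    # S44: express every joint as a vector from the origin.
    vectors = [tuple(c - o for c, o in zip(j, origin)) for j in joints]
    # S45: divide by a common factor so large and small hands match in size.
    scale = max(math.hypot(*v) for v in vectors)
    if scale == 0.0:
        return vectors  # degenerate input: every joint sits on the origin
    return [tuple(c / scale for c in v) for v in vectors]
```

Because every vector is divided by the same factor, the output does not change when the whole hand appears twice as large in the image.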

- The trained estimator 22 estimates, from the two-dimensional or three-dimensional coordinates of each joint of the hand, the procedure to which the finger coordinates correspond.

- Using a moving majority process, the processing unit 23 determines the procedure of the target frame, located n frames in the past, by a majority vote over the estimates for the n frames before and after it (S47).

- The value n is set as appropriate according to the estimation accuracy of the estimator 22.

- After a person is detected, the processing unit 23 does not perform step S47 until n frames have been captured.
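A hedged sketch of the moving majority vote in step S47. It assumes the target frame sits in the middle of a window of 2n + 1 per-frame estimates, so it waits for 2n + 1 frames rather than the n frames stated above; the function name `moving_majority` is hypothetical. Returning every top-scoring procedure lets the caller detect the ties that step S48 examines.

```python
from collections import Counter
from typing import List, Optional, Sequence


def moving_majority(estimates: Sequence[int], n: int) -> Optional[List[int]]:
    """Vote over the n frames before and after the target frame, which lies
    n frames behind the newest estimate. Returns all procedures sharing the
    top vote count (more than one means a tie), or None if too few frames."""
    if len(estimates) < 2 * n + 1:
        return None  # not enough frames yet, so S47 is skipped
    window = estimates[-(2 * n + 1):]  # the target frame is window[n]
    counts = Counter(window)
    top = max(counts.values())
    return sorted(p for p, c in counts.items() if c == top)
```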

- The processing unit 23 then examines the result of the majority vote over the n frames before and after the target frame (S48). If the vote is tied, that is, several procedures received the same number of votes (Yes), the processing unit 23 determines whether the tied results include the previously determined procedure (S50).

- The previously determined procedure is the procedure determined for the frame immediately before the target frame; when the target frame is the first frame, it is the procedure set in step S41. If the tied results include it (Yes), the processing unit 23 adopts the previously determined procedure as the procedure of the target frame (S51), and the process proceeds to step S55 of FIG. 23.

- If, in step S50, the tied results do not include the previously determined procedure (No), the processing unit 23 further determines whether they include the next scheduled procedure (S52).

- The next scheduled procedure is the procedure one step after the previously determined procedure. If the tied results include it (Yes), the processing unit 23 adopts the next scheduled procedure as the procedure of the target frame (S53), and the process proceeds to step S55 of FIG. 23.

- If, in step S52, the tied results do not include the next scheduled procedure either (No), the processing unit 23 sets the procedure to “unknown” (S54), and the process proceeds to step S55 of FIG. 23.

- If, in step S48, the vote is not tied (No), that is, a single procedure wins the majority vote, the processing unit 23 adopts that procedure for the target frame (S49), and the process proceeds to step S55 of FIG. 23.

- In this way, the processing unit 23 can correct procedures that the estimator 22 has estimated incorrectly.
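The tie-breaking logic of steps S48 to S54 can be sketched as below. Procedures are assumed to be numbered consecutively, so the next scheduled procedure is taken to be the previous one plus 1, and `0` is assumed to stand for the initial state set in step S41; `resolve_vote` and the `UNKNOWN` marker are illustrative names, not taken from the document.

```python
from typing import List, Union

Procedure = Union[int, str]
UNKNOWN: Procedure = "unknown"  # hypothetical marker for step S54


def resolve_vote(candidates: List[int], previous: Procedure) -> Procedure:
    """Pick the target frame's procedure from the top-voted candidates,
    given the previously determined procedure."""
    if len(candidates) == 1:                 # S48 No: a single winner
        return candidates[0]                 # S49
    if previous in candidates:               # S50 Yes: keep the current step
        return previous                      # S51
    if isinstance(previous, int) and previous + 1 in candidates:  # S52 Yes
        return previous + 1                  # S53: advance to the next step
    return UNKNOWN                           # S54
```

Preferring the current step, then the next one, encodes the assumption that a worker either continues the procedure in progress or moves on to the following one, never jumps ahead.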

- In step S55 of FIG. 23, the processing unit 23 determines whether the moving majority process has determined the target frame to be procedure #1 or procedure #9.

- This check is needed because procedures #1 and #9 have nearly identical finger coordinates, which makes them difficult to distinguish using only the estimation by the estimator 22 and the moving majority process of the processing unit 23.

- If so (Yes), the processing unit 23 determines whether the previously determined procedure is in the middle of hand washing (S56).

- “In the middle of hand washing” means any of procedures #2 to #9.

- If, in step S56, the previously determined procedure is in the middle of hand washing (Yes), the processing unit 23 re-determines the target frame as procedure #9 (S57); if not (No), it sets the target frame to procedure #1 (S58). In either case, the process then proceeds to step S59. If it is determined in step S55 that the target frame is neither procedure #1 nor procedure #9 (No), the process proceeds directly to step S59. Through steps S55 to S58, the processing unit 23 can re-determine visually similar procedures based on their relationship to the previously determined procedure. Furthermore, even when the user specifies that the same motion is to be performed several times as different procedures, the processing unit 23 can distinguish between these procedures.
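Steps S55 to S58 reduce to a small rule, sketched here under the assumption that procedures are integers (with a string marker for “unknown”) and that “in the middle of hand washing” means a previously determined procedure from #2 to #9, as defined above. The function name `disambiguate_first_last` is hypothetical.

```python
from typing import Union

Procedure = Union[int, str]


def disambiguate_first_last(procedure: Procedure, previous: Procedure) -> Procedure:
    """Re-determine procedure #1 vs #9, whose finger coordinates look alike,
    from the previously determined procedure."""
    if procedure not in (1, 9):              # S55 No: nothing to re-determine
        return procedure
    if isinstance(previous, int) and 2 <= previous <= 9:  # S56 Yes: mid-wash
        return 9                             # S57
    return 1                                 # S58: washing has not started
```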

- In step S59, the motion difference processing unit 27 calculates the difference in the two-dimensional or three-dimensional coordinates of the fingers.

- This difference is taken between the finger coordinates in the previous frame and those in the current frame, and corresponds to the amount of finger movement.
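The document defines the motion amount only as the coordinate difference between consecutive frames. This sketch of step S59 assumes the per-joint differences are combined by summing their Euclidean distances (a mean or maximum would be equally plausible); the function name `motion_amount` is hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, ...]


def motion_amount(prev_joints: Sequence[Point], curr_joints: Sequence[Point]) -> float:
    """Total joint displacement between the previous and current frames."""
    if len(prev_joints) != len(curr_joints):
        raise ValueError("both frames must contain the same joints")
    # Sum of per-joint Euclidean distances (an assumed aggregation).
    return sum(math.dist(p, c) for p, c in zip(prev_joints, curr_joints))
```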

- The processing unit 23 determines whether the motion amount calculated by the motion difference processing unit 27 is equal to or greater than a threshold (S60). If it is, the hand can be judged to be moving; if it is below the threshold, the hand can be judged to be stationary.

- If the motion amount is equal to or greater than the threshold (Yes), the processing unit 23 adds the duration of one frame to the execution time of the procedure (S61) and proceeds to step S62. If the motion amount is below the threshold (No), the processing unit 23 proceeds directly to step S62.
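Steps S60 to S62 can be sketched as a loop that credits one frame's duration to the current procedure only while the motion amount meets the threshold. The 30 frames/sec default mirrors the frame rate the document mentions; the threshold value and the name `accumulate_execution_time` are assumptions, and the returned dictionary merely stands in for the storage unit 28.

```python
from typing import Dict, Hashable, Iterable, Tuple

FRAME_RATE = 30.0  # frames/sec; the document's example rate, not a requirement


def accumulate_execution_time(
    frames: Iterable[Tuple[Hashable, float]],
    threshold: float,
    frame_rate: float = FRAME_RATE,
) -> Dict[Hashable, float]:
    """For each (procedure, motion_amount) pair, add one frame's duration
    to that procedure only while the hand is judged to be moving."""
    times: Dict[Hashable, float] = {}
    frame_time = 1.0 / frame_rate
    for procedure, amount in frames:
        if amount >= threshold:  # S60 Yes: hand is moving
            times[procedure] = times.get(procedure, 0.0) + frame_time  # S61
        # S60 No: hand is stationary, so no time is credited
    return times  # S62: per-procedure execution time to record
```

Excluding stationary frames is what lets the system report how long each procedure was actually performed rather than how long the worker stood at the sink.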

- The processing unit 23 records each procedure and its execution time in the storage unit 28 (S62). It also displays the execution status of the hand-washing procedure on the display unit 25 (S63), and the process returns to step S42.

- The execution status of the hand-washing procedure displayed on the display unit 25 is illustrated in FIG. 17, described above.

- Rather than applying machine learning directly to the images captured by the imaging device 3, the hand-washing detection system 1D of the second embodiment uses deep learning on the coordinates of each skeletal joint of the person estimated from those images. This makes it possible to estimate the motion of parts concealed by one of the hands.

- The present invention is not limited to the embodiments described above and includes various modifications.

- The above embodiments have been described in detail to explain the present invention clearly, and the invention is not necessarily limited to embodiments that include all of the described configurations.

- Part of the configuration of one embodiment can be replaced with the configuration of another embodiment, and the configuration of another embodiment can be added to the configuration of one embodiment.

- Some or all of the configurations, functions, processing units, processing means, and the like described above may be implemented in hardware, for example as integrated circuits.

- Each of the above configurations and functions may also be implemented in software, with a processor interpreting and executing a program that implements each function.

- Information such as programs, tables, and files that implement each function can be stored in a storage device such as a memory, hard disk, or SSD (Solid State Drive), or on a recording medium such as a flash memory card or DVD (Digital Versatile Disk).

- Control lines and information lines are shown where considered necessary for the explanation; not all control and information lines in a product are necessarily shown. In practice, almost all components may be considered to be interconnected. Examples of modifications of the present invention include the following (a) to (d).

- (a) The trigger for authenticating each person and starting hand-washing detection is not limited to detecting a person in a captured image or to authentication with a finger vein authentication device. For example, it may be detection of a contactless IC card or biometric authentication other than finger vein authentication, without limitation.

- (b) The series of procedures recognized by the present invention is not limited to hand washing; it may be any procedure, such as care, rehabilitation, dance choreography, a sequence of yoga poses, or a sports procedure (form), without limitation.

- (c) The frame rate of the imaging device is not limited to 30 frames/sec.

- (d) The visually similar procedures identified by the recognition device need not be set manually; the estimator may determine the similarity between two procedures and set them itself. The two visually similar procedures can then be re-determined based on the previously determined procedure.
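The modification above does not say how the estimator would measure the similarity between two procedures. One hedged possibility, sketched below, compares per-procedure mean feature vectors (for example, class centroids) using cosine similarity and flags pairs above a threshold. The 0.95 threshold and the function names are assumptions, not taken from the document.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def similar_procedure_pairs(
    centroids: Dict[int, Sequence[float]], threshold: float = 0.95
) -> List[Tuple[int, int]]:
    """List procedure pairs whose mean feature vectors are nearly parallel,
    i.e. candidates for re-determination via the previous procedure."""
    return [
        (p, q)
        for p, q in combinations(sorted(centroids), 2)
        if cosine(centroids[p], centroids[q]) >= threshold
    ]
```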

Landscapes

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Theoretical Computer Science (AREA)

- General Engineering & Computer Science (AREA)

- Manufacturing & Machinery (AREA)

- Quality & Reliability (AREA)

- Automation & Control Theory (AREA)

- Multimedia (AREA)

- Image Analysis (AREA)

- General Factory Administration (AREA)

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| JP2018-229307 | 2018-12-06 | | |
| JP2018229307A JP2020091739A (ja) | 2018-12-06 | 2018-12-06 | Procedure detection device and procedure detection program |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| WO2020115930A1 (ja) | 2020-06-11 |

Family ID: 70974522

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| PCT/JP2019/023375, WO2020115930A1 (ja), Ceased | Procedure detection device and procedure detection program | 2018-12-06 | 2019-06-12 |

Country Status (2)

| Country | Link |
|---|---|
| JP (1) | JP2020091739A |
| WO (1) | WO2020115930A1 |

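Families Citing this family (5)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| JP7476599B2 (ja) * | 2020-03-24 | 2024-05-01 | 大日本印刷株式会社 | Information processing system and information processing method |
| JP7166005B2 (ja) * | 2020-05-26 | 2022-11-07 | 株式会社ニューギン | Gaming machine |
| JP7681826B2 (ja) * | 2020-12-22 | 2025-05-23 | 株式会社プロフィールド | Inspection device, learning device, inspection method, learner production method, and program |
| JP7583273B2 (ja) * | 2021-03-31 | 2024-11-14 | 富士通株式会社 | Hand-washing recognition device, hand-washing recognition method, hand-washing recognition program, and hand-washing recognition system |
| JP7583275B2 (ja) * | 2021-03-31 | 2024-11-14 | 富士通株式会社 | Hand-washing recognition device, hand-washing recognition method, hand-washing recognition program, and hand-washing recognition system |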

Citations (1)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| JP2015133694A (ja) * | 2013-12-13 | 2015-07-23 | Panasonic Intellectual Property Corporation of America | Terminal device, terminal device control method, and recording medium storing a computer program |

Family Cites Families (6)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| JP5438601B2 (ja) * | 2010-06-15 | 2014-03-12 | 日本放送協会 | Person motion determination device and program therefor |
| JP2012053813A (ja) * | 2010-09-03 | 2012-03-15 | Dainippon Printing Co Ltd | Person attribute estimation device, person attribute estimation method, and program |
| JP2013017631A (ja) * | 2011-07-11 | 2013-01-31 | Sumitomo Electric Ind Ltd | Hand-washing monitor and hand-washing monitoring method |
| JP5884554B2 (ja) * | 2012-03-01 | 2016-03-15 | 住友電気工業株式会社 | Hand-washing monitor, hand-washing monitoring method, and hand-washing monitoring program |
| JP2016177755A (ja) * | 2015-03-23 | 2016-10-06 | 日本電気株式会社 | Order terminal device, order system, customer information generation method, and program |
| JP6305448B2 (ja) * | 2016-01-29 | 2018-04-04 | アース環境サービス株式会社 | Hand-washing monitoring system |

- 2018-12-06: JP JP2018229307A, published as JP2020091739A (ja); status: active, Pending
- 2019-06-12: WO PCT/JP2019/023375, published as WO2020115930A1 (ja); status: not active, Ceased


Also Published As

| Publication number | Publication date |
|---|---|
| JP2020091739A (ja) | 2020-06-11 |

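Similar Documents

| Publication | Title |
|---|---|
| WO2020115930A1 (ja) | Procedure detection device and procedure detection program |
| US20220189211A1 (en) | Physical activity quantification and monitoring |
| US8824802B2 (en) | Method and system for gesture recognition |
| JP7476599B2 (ja) | Information processing system and information processing method |
| JP7380567B2 (ja) | Information processing device, information processing method, and information processing program |
| JP6757010B1 (ja) | Motion evaluation device, motion evaluation method, and motion evaluation system |
| CN115552465B (zh) | Hand-washing recognition system and hand-washing recognition method |
| JP2021174488A (ja) | Hand-washing evaluation device and hand-washing evaluation program |
| CN114140722A (zh) | Pull-up exercise evaluation method, device, server, and storage medium |
| Mayo et al. | How hard are computer vision datasets? calibrating dataset difficulty to viewing time |
| JP2021077230A (ja) | Motion recognition device, motion recognition method, motion recognition program, and motion recognition system |
| EA201990408A1 (ru) | Method and system for detecting alarm events during interaction with a self-service device |
| Czarnuch et al. | Development and evaluation of a hand tracker using depth images captured from an overhead perspective |
| JP7726373B2 (ja) | Motion evaluation device, motion evaluation method, and program |
| JP7447998B2 (ja) | Hand-washing recognition system and hand-washing recognition method |
| CN115116136A (zh) | Abnormal behavior detection method, device, and medium |
| JP7490347B2 (ja) | Tidying-up support device, tidying-up support program, and tidying-up support method |
| JP7687062B2 (ja) | Learning model generation method, information processing method, computer program, and information processing device |
| JP7492850B2 (ja) | Hygiene management system |
| JP2021092918A (ja) | Image processing device, image processing method, computer program, and storage medium |
| JP2020201755A (ja) | Concentration measurement device, concentration measurement method, and program |
| CN118201554A (zh) | Cognitive function evaluation system and training method |
| CN118176530A (zh) | Action recognition method, action recognition device, and action recognition program |
| JP7463792B2 (ja) | Information processing system, information processing device, and information processing method |
| Bakchy et al. | Limbs and muscle movement detection using gait analysis |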

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | 121 | EP: the EPO has been informed by WIPO that EP was designated in this application | Ref document number: 19893140; Country of ref document: EP; Kind code of ref document: A1 |
| | NENP | Non-entry into the national phase | Ref country code: DE |
| | 122 | EP: PCT application non-entry in European phase | Ref document number: 19893140; Country of ref document: EP; Kind code of ref document: A1 |