WO2016174823A1 - Ophthalmologic imaging apparatus, operation method thereof, and computer program - Google Patents

Ophthalmologic imaging apparatus, operation method thereof, and computer program

- Publication number

- WO2016174823A1 (PCT/JP2016/001849, JP2016001849W)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- image

- images

- acquisition

- confocal

- imaging apparatus

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Classifications

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B3/00—Apparatus for testing the eyes; Instruments for examining the eyes

- A61B3/10—Objective types, i.e. instruments for examining the eyes independent of the patients' perceptions or reactions

- A61B3/1025—Objective types, i.e. instruments for examining the eyes independent of the patients' perceptions or reactions for confocal scanning

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B3/00—Apparatus for testing the eyes; Instruments for examining the eyes

- A61B3/10—Objective types, i.e. instruments for examining the eyes independent of the patients' perceptions or reactions

- A61B3/102—Objective types, i.e. instruments for examining the eyes independent of the patients' perceptions or reactions for optical coherence tomography [OCT]

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B3/00—Apparatus for testing the eyes; Instruments for examining the eyes

- A61B3/10—Objective types, i.e. instruments for examining the eyes independent of the patients' perceptions or reactions

- A61B3/12—Objective types, i.e. instruments for examining the eyes independent of the patients' perceptions or reactions for looking at the eye fundus, e.g. ophthalmoscopes

- A61B3/1225—Objective types, i.e. instruments for examining the eyes independent of the patients' perceptions or reactions for looking at the eye fundus, e.g. ophthalmoscopes using coherent radiation

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B2576/00—Medical imaging apparatus involving image processing or analysis

- A61B2576/02—Medical imaging apparatus involving image processing or analysis specially adapted for a particular organ or body part

Definitions

- the present invention relates to an ophthalmologic imaging apparatus used in ophthalmological diagnosis and treatment, an operation method thereof, and a computer program.

- the scanning laser ophthalmoscope (SLO), an ophthalmological apparatus that employs the principle of confocal laser scanning microscopy, performs raster scanning of a laser, which is measurement light, over the fundus, and acquires a high-resolution planar image at high speed from the intensity of the returning light. Detecting only light that has passed through an aperture (pinhole) enables an image to be formed using only returning light from a particular depth position, so images with higher contrast than those of fundus cameras and the like can be acquired.

- An apparatus which images such a planar image will hereinafter be referred to as an SLO apparatus, and the planar image as an SLO image.

- the adaptive optic SLO apparatus has an adaptive optic system that measures aberration of the eye being examined in real time using a wavefront sensor, and corrects aberration occurring in the eye being examined with regard to the measurement light and the returning light thereof using a wavefront correction device. This enables SLO images with high horizontal resolution (high-magnification image) to be acquired.

- Such a high horizontal resolution SLO image can be acquired as a moving image.

- hemodynamics for example, retinal blood vessels are extracted from each frame, and the moving speed of blood cells through capillaries and so forth is measured.

- photoreceptors P are detected, and the density distribution and array of the photoreceptors P are calculated.

- Fig. 6B illustrates an example of a high horizontal resolution SLO image. The photoreceptors P, a low-luminance region Q corresponding to the position of capillaries, and a high-luminance region W corresponding to the position of a white blood cell, can be observed.

- the focus position is set near the outer layer of the retina (B5 in Fig. 6A) to take an SLO image such as in Fig. 6B.

- Acquiring an adaptive optics SLO image with the focus position set in the inner layers of the retina enables the retinal blood vessel walls, which are fine structures relating to retinal blood vessels, to be directly observed.

- Confocal images taken of the inner layers of the retina have intense noise signals due to the influence of light reflecting from the nerve fiber layer, and there have been cases where observing blood vessel walls and detecting wall boundaries has been difficult. Accordingly, techniques have recently come into use that observe non-confocal images obtained by acquiring scattered light, by changing the diameter, shape, and position of a pinhole on the near side of the light receiving portion (NPL 1). Non-confocal images have a great depth of focus, so objects that have unevenness in the depth direction, such as blood vessels, can be easily observed, and noise is also reduced since reflected light from the nerve fiber layer is not readily directly received.

- NPL 2 non-confocal images

- Dc5 in Fig. 6K black defect area in confocal images

- Dn5 in Fig. 6L high-luminance granular objects exist in non-confocal images

- NPL 1 discloses technology for acquiring non-confocal images of retinal blood vessels using an adaptive optics SLO apparatus

- NPL 2 discloses technology for acquiring both confocal images and non-confocal images at the same time using an adaptive optics SLO apparatus. Further, technology for effectively taking tomographic images of the eye is disclosed in PTL 1.

- Embodiments of an information processing apparatus according to the present invention and an operation method thereof have the following configurations, for example.

- An ophthalmologic imaging apparatus that acquires a plurality of types of images of an eye including a confocal image and a non-confocal image of the eye, includes a deciding unit configured to decide conditions relating to acquisition of the plurality of types of images, for each type of the plurality of types of images, and a control unit configured to control the ophthalmologic imaging apparatus to acquire at least one of the plurality of types of images, in accordance with the decided conditions.

- An operation method of an ophthalmologic imaging apparatus that acquires a plurality of types of images of an eye including a confocal image and a non-confocal image of the eye includes a step of deciding conditions relating to acquisition of the plurality of types of images, for each type of the plurality of types of images, and a step of controlling the ophthalmologic imaging apparatus to acquire at least one of the plurality of types of images, in accordance with the decided conditions.

- Fig. 1 is a block diagram illustrating a functional configuration example of an information processing apparatus according to a first embodiment of the present invention.

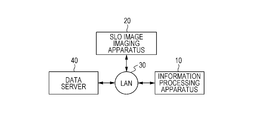

- Fig. 2A is a block diagram illustrating a configuration example of a system including the information processing apparatus according to an embodiment of the present invention.

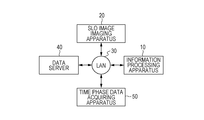

- Fig. 2B is a block diagram illustrating a configuration example of a system including the information processing apparatus according to an embodiment.

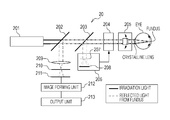

- Fig. 3A is a diagram for describing the overall configuration of an SLO image imaging apparatus according to an embodiment of the present invention.

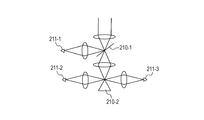

- Fig. 3B is a diagram for describing part of the configuration of the SLO image imaging apparatus in Fig. 3A.

- Fig. 3C is a diagram for describing part of the configuration of the SLO image imaging apparatus in Fig. 3A.

- Fig. 3D is a diagram for describing part of the configuration of the SLO image imaging apparatus in Fig. 3A.

- Fig. 3E is a diagram for describing part of the configuration of the SLO image imaging apparatus in Fig. 3A.

- Fig. 3F is a diagram for describing part of the configuration of the SLO image imaging apparatus in Fig. 3A.

- Fig. 3G is a diagram for describing part of the configuration of the SLO image imaging apparatus in Fig. 3A.

- Fig. 3H is a diagram for describing part of the configuration of the SLO image imaging apparatus in Fig. 3A.

- Fig. 4 is a block diagram illustrating a hardware configuration example of a computer which has hardware equivalent to a storage unit and image processing unit and holds other units as software which is executed.

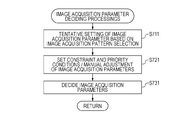

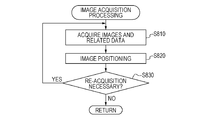

- Fig. 5A is a flowchart of processing which the information processing apparatus according to an embodiment of the present invention executes.

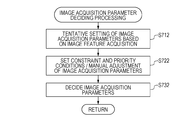

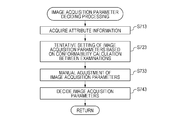

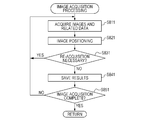

- Fig. 5B is a flowchart of processing which the information processing apparatus according to an embodiment of the present invention executes.

- Fig. 6A is a diagram for describing image processing according to an embodiment of the present invention.

- Fig. 6B is a diagram for describing image processing according to an embodiment of the present invention.

- Fig. 6C is a diagram for describing image processing according to an embodiment of the present invention.

- Fig. 6D is a diagram for describing image processing according to an embodiment of the present invention.

- Fig. 6E is a diagram for describing image processing according to an embodiment of the present invention.

- Fig. 6F is a diagram for describing image processing according to an embodiment of the present invention.

- Fig. 6G is a diagram for describing image processing according to an embodiment of the present invention.

- Fig. 6H is a diagram for describing image processing according to an embodiment of the present invention.

- Fig. 6I is a diagram for describing image processing according to an embodiment of the present invention.

- Fig. 6J is a diagram for describing image processing according to an embodiment of the present invention.

- Fig. 6K is a diagram for describing image processing according to an embodiment of the present invention.

- Fig. 6L is a diagram for describing image processing according to an embodiment of the present invention.

- Fig. 7A is a flowchart illustrating the details of processing executed in S510 in the first embodiment.

- Fig. 7B is a flowchart illustrating the details of processing executed in S511 in a second embodiment.

- Fig. 7C is a flowchart illustrating the details of processing executed in S511 in a third embodiment.

- Fig. 7D is a flowchart illustrating the details of processing executed in S511 in a fourth embodiment.

- Fig. 8A is a flowchart illustrating the details of processing executed in S520 in the first embodiment.

- Fig. 8B is a flowchart illustrating the details of processing executed in S521 in the second through fourth embodiments.

- Fig. 9 is a block diagram illustrating a functional configuration example of an information processing apparatus according to the second embodiment of the present invention.

- Fig. 10 is a block diagram illustrating a functional configuration example of an information processing apparatus according to the third embodiment of the present invention.

- Fig. 11 is a block diagram illustrating a functional configuration example of an information processing apparatus according to the fourth embodiment of the present invention.

- SLO apparatuses that acquire confocal images and non-confocal images generally acquire all types of images with the same imaging parameters. In doing so, there is a possibility that the amount of acquired data is larger than the data capacity that the central processing unit (CPU) can process in unit time. Accordingly, there is demand to efficiently use limited CPU resources to perform examination efficiently.

- CPU central processing unit

- one aspect of an embodiment is an ophthalmologic imaging apparatus that acquires multiple types of images of an eye including at least one type of non-confocal image of the eye.

- An example of the ophthalmologic imaging apparatus is an SLO imaging apparatus 20 communicably connected with an information processing apparatus 10, illustrated in Figs. 2A and 2B.

- the SLO imaging apparatus 20 in Figs. 2A and 2B may have the information processing apparatus 10 integrally built in.

- a control unit that controls the ophthalmologic imaging apparatus to acquire at least one of the plurality of types of images, by conditions relating to acquisition of the plurality of types of images.

- An example of the control unit is a data acquiring unit 110.

- the control unit does not have to be provided at the information processing apparatus 10, and may be configured as a central processing unit (CPU) or the like provided to the ophthalmologic imaging apparatus. Accordingly, multiple types of images of the eye including at least one type of non-confocal image can be efficiently acquired.

- Another aspect of an embodiment is an ophthalmologic imaging apparatus that acquires multiple types of images of an eye including a confocal image and at least one type of non-confocal image of the eye.

- Another aspect of an embodiment includes a deciding unit that decides conditions relating to acquisition of the multiple types of images for each image type (an example is a deciding unit 131 in Fig. 1).

- Another aspect of an embodiment includes a control unit that controls the ophthalmologic imaging apparatus to acquire at least one of the plurality of types of images, by conditions that have been decided. Accordingly, multiple types of images of the eye including a confocal image and at least one type of non-confocal image of the eye can be efficiently acquired.

- Another aspect of an embodiment preferably performs image acquisition efficiently after deciding whether or not to acquire an image, and the method of acquisition, in accordance with attributes of the imaged images and whether the images include anatomical features or lesion portions. That is to say, an apparatus that acquires multiple types of images with different light receiving methods gives priority to acquiring the images that are more important for observation and measurement, so that a great number of images can be acquired more efficiently.

- the technology described in NPL 1 discloses technology relating to an adaptive optics SLO apparatus that acquires multi-channel non-confocal images, but does not disclose a method to efficiently generate or measure a great number of types of non-confocal images.

- Although NPL 2 acquires confocal images and non-confocal images at the same time, there is no disclosure of a method of efficiently generating or measuring confocal images and non-confocal images. Further, the technology in PTL 1 discloses deciding image acquisition parameters of tomographic images of the eye, based on lesion candidate regions acquired from a wide-angle image. However, an efficient acquisition method of multiple images including one or more types of non-confocal images is not disclosed.

- An ophthalmologic imaging apparatus is configured to acquire confocal images and non-confocal images using image acquisition parameters decided for each image type beforehand, so that when an operator specifies an imaging position, images that are more crucial for observation and measurement have a greater data amount.

- images are acquired as follows.

- confocal images Dcj and two types of non-confocal images Dnrk and Dnlk are the types of images taken at the parafovea, and the frame rate of the non-confocal images is lower than that of the confocal images.

- the type of images taken farther than 1.5 mm from the fovea is just confocal images Dcj, and the resolution is lower than at the parafovea.

- Fig. 2A is a configuration diagram of a system including the information processing apparatus 10 according to the present embodiment.

- the information processing apparatus 10 is connected to an SLO image imaging apparatus 20, which is an example of an ophthalmic imaging apparatus, and a data server 40, via a local area network (LAN) 30 including optical fiber, Universal Serial Bus (USB), IEEE 1394, or the like, as illustrated in Fig. 2A.

- LAN local area network

- USB Universal Serial Bus

- the configuration of connection to these devices may be via an external network such as the Internet, or may be a configuration where the information processing apparatus 10 is directly connected to the SLO image imaging apparatus 20.

- the information processing apparatus 10 may be integrally built into an ophthalmic imaging apparatus.

- the SLO image imaging apparatus 20 is an apparatus to image confocal images Dc and non-confocal images Dn, which are wide-angle images Dl and high-magnification images Dh of the eye.

- the SLO image imaging apparatus 20 transmits wide-angle images Dl, confocal images Dc, non-confocal images Dn, and information of fixation target positions Fl and Fcn used for imaging thereof, to the information processing apparatus 10 and the data server 40. In a case where these images are acquired at different imaging positions, this is expressed as Dli, Dcj, Dnk.

- this is expressed like Dc1m, Dc2o, ... (Dn1m, Dn2o, ...) in order from the highest-magnification image, with Dc1m (Dn1m) denoting high-magnification confocal (non-confocal) images, and Dc2o, ... (Dn2o, ...) denoting mid-magnification confocal images (non-confocal images).

- the data server 40 holds the wide-angle images Dl, confocal images Dc, and non-confocal images Dn, of the examinee eye, imaging conditions data such as fixation target positions Fl and Fcn used for the imaging thereof, image features of the eye, and so forth.

- image features relating to the photoreceptors P, capillaries Q, and retinal blood vessel walls are handled as image features of the eye.

- the wide-angle images Dl, confocal images Dc, and non-confocal images Dn output from the SLO image imaging apparatus 20, fixation target positions Fl and Fcn used for the imaging thereof, and image features of the eye output from the information processing apparatus 10, are saved in the data server 40.

- the wide-angle images Dl, confocal images Dc, and non-confocal images Dn, and image features of the eye are transmitted to the information processing apparatus 10 in response to requests from the information processing apparatus 10.

- Fig. 1 is a block diagram illustrating the functional configuration of the information processing apparatus 10.

- the information processing apparatus 10 includes a data acquiring unit 110, a storage unit 120, an image processing unit 130, and an instruction acquiring unit 140.

- the data acquiring unit 110 includes a confocal data acquiring unit 111, a non-confocal data acquiring unit 112, and an attribute data acquiring unit 113.

- the image processing unit 130 includes a deciding unit 131, a positioning unit 132, a computing unit 133, a display control unit 134, and a saving unit 135.

- the deciding unit 131 further has a determining unit 1311 and an imaging parameter deciding unit 1312.

- the SLO imaging apparatus 20 includes a superluminescent diode (SLD) 201, a Shack-Hartmann wavefront sensor 206, an adaptive optics system 204, beam splitters 202 and 203, an X-Y scanning mirror 205, a focus lens 209, a diaphragm 210, a photosensor 211, an image forming unit 212, and an output unit 213.

- SLD superluminescent diode

- the Shack-Hartmann wavefront sensor 206 is a device to measure aberration of the eye, in which a lens array 207 is connected to a charge-coupled device (CCD) 208.

- CCD charge-coupled device

- the adaptive optics system 204 drives an aberration correction device (deformable mirror or spatial light phase modulator) to correct the aberration, based on the wave aberration measured by the Shack-Hartmann wavefront sensor 206.

- the light subjected to aberration-correction passes through the focus lens 209 and diaphragm 210, and is received at the photosensor 211.

- the diaphragm 210 and photosensor 211 are examples of the aperture and photoreceptor according to the present invention.

- the aperture preferably is provided upstream of the photoreceptor and near to the photoreceptor.

- the scanning position on the fundus can be controlled by moving the X-Y scanning mirror 205, thereby acquiring data according to an imaging region and time (frame rate × frame count) that the operator has instructed.

- the data is transmitted to the image forming unit 212, where image distortion due to variation in scanning rate is corrected and luminance value correction is performed, thereby forming image data (moving image or still image).

- the output unit 213 outputs the image data formed by the image forming unit 212.

- the configuration of the diaphragm 210 and photosensor 211 portion in Fig. 3A is optional, just as long as the SLO imaging apparatus 20 is configured to be able to acquire confocal images Dc and non-confocal images Dn.

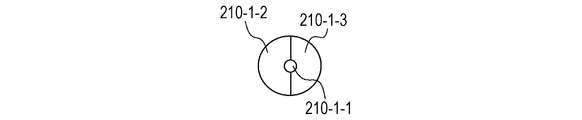

- the present embodiment is configured using a light-shielding member 210-1 (Figs. 3B and 3E) and photosensors 211-1, 211-2, and 211-3 (Fig. 3B). Regarding the returning light in Fig. 3B, part of the light that has entered the light-shielding member 210-1 disposed at the image forming plane is reflected and enters the photosensor 211-1. Now, the light-shielding member 210-1 will be described with reference to Fig. 3E.

- the light-shielding member 210-1 is formed of transmitting regions 210-1-2 and 210-1-3, a light-shielded region (omitted from illustration), and a reflecting region 210-1-1, so that the center is positioned on the center of the optical axis of the returning light.

- the light-shielding member 210-1 has an elliptic shape pattern so that when disposed obliquely as to the optical axis of the returning light, the shape appears to be circular when seen from the optical axis direction.

- the returning light divided at the light-shielding member 210-1 is input to the photosensor 211-1.

- the returning light that has passed through the transmitting regions 210-1-2 and 210-1-3 of the light-shielding member 210-1 is split by a prism 210-2 disposed at the image forming plane, and is input to photosensors 211-2 and 211-3, as illustrated in Fig. 3B. Voltage signals obtained at the photosensors are converted into digital values at an AD board within the image forming unit 212, thereby forming a two-dimensional image.

- the image based on light entering the photosensor 211-1 is a confocal image where focus has been made on a particular narrow range. Images based on light entering the photosensors 211-2 and 211-3 are non-confocal images where focus has been made on a broad range.

- the light-shielding member 210-1 is an example of an optical member that divides returning light from the eye which has been irradiated by light from the light source, into returning light passing through a confocal region and returning light passing through a non-confocal region.

- the transmitting regions 210-1-2 and 210-1-3 are examples of a non-confocal region, and non-confocal images are acquired based on the returning light passing through the non-confocal regions.

- the reflecting region 210-1-1 is an example of a confocal region, and confocal images are acquired based on the returning light passing through the confocal region.

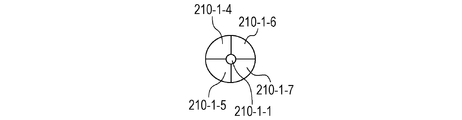

- the method for dividing non-confocal signals is not restricted to this, and a configuration may be made where non-confocal signals are divided into four and received, such as illustrated in Fig. 3F, for example.

- the reception method of confocal signals and non-confocal signals is not restricted to this.

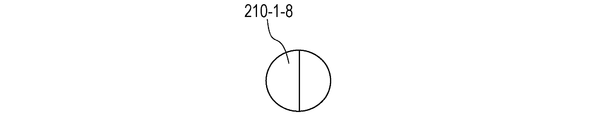

- a mechanism by which the diameter and position of the diaphragm 210 (aperture) can be changed is preferably provided. In that case, at least one of the diameter of the aperture and its position in the optical axis direction is adjustable, so that light is received as confocal signals as illustrated in Fig. 3C and as non-confocal signals as illustrated in Fig. 3D.

- the diameter and movement amount of the aperture may be optionally adjusted.

- Fig. 3C shows that the diameter of the aperture can be adjusted to around 1 Airy disc diameter (ADD).

- Fig. 3D shows that the diameter of the aperture can be adjusted to around 10 ADD with a movement amount of around 6 ADD.

- a configuration may be made where multiple non-confocal signals are received at the same time, as in Figs. 3G and 3H.

- the notation "non-confocal image Dn" refers to both the R channel image Dnr and L channel image Dnl.

- the SLO imaging apparatus 20 can also operate as a normal SLO apparatus, by increasing the scan angle of the scanning optical system in the configuration in Fig. 3A, and instructing so that the adaptive optics system 204 does not perform aberration correction, so as to acquire wide-angle images. Images which are lower magnification than the high-magnification images Dc and Dn, and have the lowest magnification of images acquired by the data acquiring unit 110 will be referred to as wide-angle images Dl (Dlc, Dln). Accordingly, a wide-angle image Dl may be an SLO image where adaptive optics has been applied, and cases of a simple SLO image are also included.

- Dlc and Dln images which have a relatively lower magnification than high-magnification images are collectively referred to as low-magnification images. Accordingly, wide-angle images and mid-magnification images are included in low-magnification images.

- 301 denotes a central processing unit (CPU), 302 memory (random access memory (RAM)), 303 control memory (read-only memory (ROM)), 304 an external storage device, 305 a monitor, 306 a keyboard, 307 a mouse, and 308 an interface.

- Control programs for realizing the image processing functions according to the present embodiment, and data used at the time of the control programs being executed, are stored in the external storage device 304.

- the control programs and data are loaded to the RAM 302 via a bus 309 as appropriate under control of the CPU 301, executed by the CPU 301, and function as the units described below.

- the functions of the blocks making up the information processing apparatus 10 will be correlated with specific execution procedures of the information processing apparatus 10 illustrated in the flowchart in Fig. 5A.

- Step 510 Deciding Image Acquisition Parameters

- the image processing unit 130 acquires a preset value list 121 of imaging conditions from the storage unit 120.

- the operator specifies information relating to the acquisition position and focus position of images, via the instruction acquiring unit 140.

- the preset value list 121 for the imaging conditions includes, as preset values for imaging conditions at each acquisition position and focus position, whether or not to acquire (i.e., acquisition necessity) for each type of image (confocal / R channel / L channel), and in a case of acquiring:
  - Number of gradients
  - Field angle
  - Resolution
  - Frame rate
  - Number of seconds for acquisition

- the determining unit 1311 determines whether or not to acquire each image type at the imaging position and focus position specified by the operator, based on the preset values.

- the imaging parameter deciding unit 1312 decides acquisition parameters for the image at the acquisition position and focus position the determining unit 1311 has determined to be necessary, using the preset values.

- the operator changes, as necessary, the individual image acquisition parameters that have been decided, either for this acquisition alone or by changing the preset values. If there are changed image acquisition parameters or new preset values, the determining unit 1311 and imaging parameter deciding unit 1312 overwrite the parameters with the changed values or the new preset values, and decide the final image acquisition parameters. Specifics of image acquisition parameter deciding processing will be described in detail with reference to S710 through S730.
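The preset-based decision in this step can be illustrated with a minimal Python sketch. It is not the implementation of the described apparatus: the zone names, the `PRESETS` table, every numeric value, and the function `decide_parameters` are hypothetical, and the focus position is left out for brevity. The sketch only shows the pattern of (1) skipping image types whose preset marks acquisition as unnecessary, (2) taking the remaining parameters from the preset list, and (3) overwriting them with operator changes.

```python
from dataclasses import dataclass, replace
from typing import Dict, Optional


@dataclass(frozen=True)
class AcquisitionParams:
    """One preset entry; field names mirror the preset list items."""
    num_gradients: int      # "Number of gradients" (value is a placeholder)
    field_angle_deg: float  # field angle
    resolution_px: int      # pixels per side (square frame assumed)
    frame_rate_hz: float    # frames per second
    seconds: float          # number of seconds for acquisition


# Hypothetical preset list: zone -> image type -> parameters.
# None means "acquisition not necessary" for that image type.
PRESETS: Dict[str, Dict[str, Optional[AcquisitionParams]]] = {
    "parafovea": {
        "confocal": AcquisitionParams(16, 1.5, 800, 32.0, 2.0),
        "R": AcquisitionParams(16, 1.5, 800, 16.0, 2.0),
        "L": AcquisitionParams(16, 1.5, 800, 16.0, 2.0),
    },
    "periphery": {
        "confocal": AcquisitionParams(16, 1.5, 400, 32.0, 2.0),
        "R": None,
        "L": None,
    },
}


def decide_parameters(zone, overrides=None):
    """Return the final parameters for each image type to be acquired."""
    overrides = overrides or {}
    decided = {}
    for image_type, preset in PRESETS[zone].items():
        if preset is None:
            # Determination step: this image type is not acquired here.
            continue
        # Parameter decision step: start from the preset, then apply any
        # operator change given for this image type.
        decided[image_type] = replace(preset, **overrides.get(image_type, {}))
    return decided


parafovea = decide_parameters("parafovea")
periphery = decide_parameters("periphery")
shortened = decide_parameters("parafovea", {"R": {"seconds": 1.0}})
```

With these placeholder presets, the parafovea yields three image types with a lower frame rate for the R and L channels, while the periphery yields only a lower-resolution confocal image.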

- the present invention is not restricted to this.

- a case where images are acquired based on acquisition necessity and acquisition parameters that are stipulated uniformly for each image type, regardless of imaging position and focus position, is also included in the present invention.

- the operator changing individual image acquisition parameters and changing preset values is not indispensable to the present invention, and a case where the image acquisition parameters and preset values are not changed is also included in the present invention.

- Step 520 Image Acquisition

- the data acquiring unit 110 acquires images based on the image acquisition parameters decided in S510. In the present embodiment, not all types of images are acquired with the same image acquisition parameters. Images which are relatively low in importance for observation and measurement are set to have a lower number of frames or lower resolution, so that images are acquired efficiently.

- the positioning unit 132 performs inter-frame positioning and tiling processing on the acquired images. Further, the image processing unit 130 determines the necessity to re-acquire images based on luminance value, image quality, etc., of acquired images. Specifics of image acquiring processing will be described in detail in S810 through S830.
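To make the effect of lowering the number of frames concrete, the following Python sketch estimates the uncompressed data amount of a moving image. The formula assumes square frames and whole bytes per pixel, and all numbers (400 pixels, 16 bits, 32 and 16 frames per second, 2 seconds) are illustrative assumptions rather than values from the embodiment.

```python
def data_amount_bytes(resolution_px, bits_per_pixel, frame_rate_hz, seconds):
    """Uncompressed size in bytes of one square moving image (assumed model)."""
    frames = int(frame_rate_hz * seconds)
    bytes_per_pixel = (bits_per_pixel + 7) // 8  # round up to whole bytes
    return resolution_px * resolution_px * bytes_per_pixel * frames


# Same parameters for the confocal, R-channel, and L-channel images.
uniform_total = 3 * data_amount_bytes(400, 16, 32.0, 2.0)

# Confocal image at full frame rate; R and L channels at half frame rate.
prioritized_total = (
    data_amount_bytes(400, 16, 32.0, 2.0)
    + 2 * data_amount_bytes(400, 16, 16.0, 2.0)
)

print(uniform_total, prioritized_total)
```

Under these assumptions, halving the frame rate of the two non-confocal channels reduces the total from 61,440,000 bytes to 40,960,000 bytes, which is the kind of saving the per-type parameters are meant to provide.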

- Step 530 Display

- the computing unit 133 performs computation among inter-frame-positioned images, and generates an image.

- a Split Detector image is generated using an R-channel image Dnrk and an L-channel image Dnlk.

- a Split Detector image is a type of a differential image using non-confocal images generated by computing ((pixel value of L-channel image - pixel value of R-channel image) / (pixel value of R-channel image + pixel value of L-channel image)).
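The Split Detector computation above can be sketched per pixel in plain Python. The function name `split_detector` is hypothetical, images are represented as nested lists for self-containment, and returning 0.0 where both channels are zero is an assumption made here, since the text does not say how a zero denominator is handled.

```python
def split_detector(left, right):
    """Compute (L - R) / (R + L) for each pixel of two equally sized images."""
    result = []
    for row_l, row_r in zip(left, right):
        out_row = []
        for l_val, r_val in zip(row_l, row_r):
            total = r_val + l_val
            # Assumption: output 0.0 when the denominator is zero.
            out_row.append((l_val - r_val) / total if total else 0.0)
        result.append(out_row)
    return result


l_channel = [[30, 10], [0, 5]]
r_channel = [[10, 10], [0, 15]]
dns = split_detector(l_channel, r_channel)
```

Pixels where the L channel is brighter come out positive and pixels where the R channel is brighter come out negative, with values bounded between -1 and 1 for non-negative inputs.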

- the display control unit 134 displays the acquired image on the monitor 305.

- a composited image of confocal image Dcj and Split Detector images Dnsk is subjected to composited display on the monitor 305 using positioning parameters decided in S820.

- the type of image to be displayed is switched using a graphical user interface (GUI) that has been prepared for this purpose.

- although radio buttons are used for switching in the present embodiment, any GUI arrangement may be used for the switching.

- the types of images to be switched are the two types of confocal image Dc and Split Detector image Dns.

- Step 540 Saving Results

- the saving unit 135 correlates the examination date and information identifying the examinee eye with the images acquired in S520, the image generated in S530, and the positioning parameters, and transmits this to the data server 40.

- Step 550 Determining Whether or not Image Acquisition is Complete

- the instruction acquiring unit 140 externally acquires an instruction regarding whether or not to end acquisition processing of the wide-angle images Dl, high-magnification confocal images Dcj, and high-magnification non-confocal images Dnk, by the information processing apparatus 10. In a case of having obtained an instruction to end image acquisition processing, the processing ends. In a case of having obtained an instruction to continue image acquisition, the flow returns to S510, and the next image acquisition parameter is decided. This instruction is input by an operator by way of the keyboard 306 or mouse 307, for example.

- Step 710 Acquiring Preset Values of Image Acquisition Parameters

- the image processing unit 130 acquires the preset value list 121 of imaging conditions from the storage unit 120.

- the determining unit 1311 determines the acquisition necessity for each image type, with regard to the imaging position and focus position that the operator has specified, based on the acquired preset values.

- the imaging parameter deciding unit 1312 decides the acquisition parameters for an image of the acquisition position and focus position regarding which necessity of acquisition has been determined, using the preset values listed in the preset value list 121.

- acquisition necessity, number of gradations, field angle, resolution, frame rate, number of seconds for acquisition

- number of frames is fewer in the Split Detector image generated using non-confocal images.

- the preset value list 121 may also include preset values for imaging positions and focus positions.

- the density of cone cells is low at an acquisition position farther than a radius of 1.5 mm from the fovea, so cones can be observed even with a lower resolution.

- acquisition necessity, number of gradations, field angle, resolution, frame rate, number of seconds for acquisition

- the focus position is set to the inner layer of the retina.

- there are intense noise signals due to light reflecting from the nerve fiber layer near the retinal blood vessels, so confocal images that have a small focal depth are not used for observing fine structures like retinal blood vessel walls, and non-confocal images that have a large focal depth are used for observation instead.

- Preset values can be specified for wide-angle images in the same way.

- positioning of wide-angle images and high-magnification images is performed such that positioning parameters decided between the non-confocal wide-angle images Dln and confocal high-magnification images Dc are also used for positioning of the confocal wide-angle images Dlc and non-confocal high-magnification images Dnk.

- Step 720 Changing Preset values of Image Acquisition Parameters

- the operator changes the image acquisition parameters acquired in S710 as necessary, either changing the acquisition parameters decided for the image in this acquisition alone, or changing the preset values themselves.

- Any user interface may be used for changing the image acquisition parameters.

- the mouse 307 is used to change the image acquisition parameter values displayed as a slider on the monitor 305. This is not restrictive, and the changed values of the image acquisition parameters may be input from the keyboard 306 to an edit box of a combo box, for example, or the mouse 307 may be used to select changed values from a list box of a combo box. In the present embodiment, none of the image acquisition parameters are changed, and the preset values obtained in S710 are used as they are.

- Step 730 Deciding Image Acquisition Parameters

- the determining unit 1311 and imaging parameter deciding unit 1312 overwrite with the changed value or the new preset value, and decide the final image acquisition parameters.

- a focus position of the neuroepithelial layer at a position 0.5 mm toward the nasal side from the fovea and 0.5 mm on the superior side has been specified as the acquisition position of high-magnification images, and no change was made in S720, so the determining unit 1311 determines that the wide-angle image Dlc, confocal image Dc, and non-confocal images Dnr and Dnl need to be acquired.

- Step 810 Acquiring Images and Related Data

- the data acquiring unit 110 requests the SLO imaging apparatus 20 to acquire wide-angle image Dlc and high-magnification images (confocal image Dc and non-confocal images Dnr and Dnl) based on the image acquisition parameters decided in S730. Acquisition of attribute data and fixation target positions Fl and Fcn corresponding to these images is also requested.

- the operator specifies a focus position of the neuroepithelial layer at a position 0.5 mm toward the nasal side from the fovea and 0.5 mm on the superior side for high-magnification images.

- the operator also specifies a focus position of the neuroepithelial layer at the center of the fovea.

- the data acquiring unit 110 also functions as a control unit that controls the ophthalmologic imaging apparatus based on conditions relating to image acquisition.

- the control unit does not have to be provided to the information processing apparatus 10, and may be configured as a CPU or the like provided in the ophthalmologic imaging apparatus.

- acquisition necessity, number of gradations, field angle, resolution, frame rate, number of seconds for acquisition

- the SLO imaging apparatus 20 acquires and transmits the wide-angle image Dlc, confocal image Dc, non-confocal images Dnr and Dnl, corresponding attribute data, and fixation target positions Fl and Fcn in accordance with the acquisition request.

- the data acquiring unit 110 receives the wide-angle image Dlc, confocal image Dc, non-confocal images Dnr and Dnl, corresponding attribute data, and fixation target positions Fl and Fcn from the SLO imaging apparatus 20 via the LAN 30, and stores in the storage unit 120.

- Step 820 Positioning Images

- the positioning unit 132 performs positioning of the images acquired in S810. First, the positioning unit 132 performs inter-frame positioning of wide-angle image Dlc and confocal image Dc, and applies inter-frame positioning parameter values decided for the confocal image Dc to the non-confocal images Dnr and Dnl as well. Specific frame positioning methods include the following.

- i) The positioning unit 132 sets a reference frame to serve as a reference for positioning. In the present embodiment, the frame with the smallest frame No. is the reference frame. Note that the frame setting method is not restricted to this, and any setting method may be used.

- ii) The positioning unit 132 performs general inter-frame correlation (rough positioning). The present embodiment performs rough positioning using a correlation coefficient as an inter-image similarity evaluation function, and Affine transform as a coordinate conversion technique.

- iii) The positioning unit 132 performs fine positioning based on the data of the correspondence relation in the general position among the frames.

- moving images that have been subjected to the rough positioning obtained in ii) are then subjected to inter-frame fine positioning, using free form deformation (FFD), which is a type of a non-rigid positioning technique.

- the fine positioning technique is not restricted to this, and any positioning technique may be used.

- the positioning unit 132 positions wide-angle image Dlc and high-magnification image Dcj, and finds the relative position of Dcj on Dlc.

- the positioning unit 132 acquires the fixation target position Fcn used at the time of imaging the high-magnification confocal images Dcj from the storage unit 120, to use as the initial point for searching for positioning parameters for the positioning of the wide-angle image Dlc and confocal image Dcj.

- the positioning of the wide-angle image Dlc and high-magnification confocal image Dcj is performed while changing combinations of the parameter values.

- the combination of positioning parameter values where the similarity between the wide-angle image Dlc and high-magnification confocal image Dcj is highest is decided to be the relative position of the confocal image Dcj as to the wide-angle image Dlc.
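The search described above, starting from the fixation target position and keeping the parameter combination with the highest similarity, can be sketched as follows. This is a simplified illustration, not the apparatus's actual method: it assumes the positioning parameters are a pure translation (row, column), that the high-magnification image has already been resampled to the wide-angle pixel scale, and that the correlation coefficient is the similarity measure. The names `correlation`, `locate`, `start`, and `radius` are hypothetical.

```python
from math import sqrt


def correlation(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # flat window: treat as uncorrelated
    return cov / sqrt(var_a * var_b)


def locate(wide, patch, start, radius):
    """Try every offset within `radius` of `start` and keep the best one.

    `wide` and `patch` are 2-D lists; `start` is the (row, col) initial
    point, e.g. derived from the fixation target position.
    Returns ((row, col), similarity) of the best-matching placement.
    """
    ph, pw = len(patch), len(patch[0])
    flat_patch = [v for row in patch for v in row]
    best_score, best_pos = -2.0, None
    for r in range(start[0] - radius, start[0] + radius + 1):
        for c in range(start[1] - radius, start[1] + radius + 1):
            # Skip placements that fall outside the wide-angle image.
            if r < 0 or c < 0 or r + ph > len(wide) or c + pw > len(wide[0]):
                continue
            window = [wide[r + i][c + j] for i in range(ph) for j in range(pw)]
            score = correlation(window, flat_patch)
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score
```

Starting the search at the fixation target position keeps the search window small; a fuller version would also vary rotation and scale.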

- the positioning technique is not restricted to this, and any positioning technique may be used.

- positioning is performed from images with lower magnification in order. For example, in a case where a high-magnification confocal image Dc1m and a mid-magnification confocal image Dc2o have been acquired, first, the wide-angle image Dlc and the mid-magnification image Dc2o are positioned, and next, the mid-magnification image Dc2o and the high-magnification image Dc1m are positioned.

- image tiling parameter values decided regarding the wide-angle confocal image Dlc and confocal image Dcj are applied to tiling of the wide-angle confocal image Dlc and non-confocal high-magnification images (Dnrk or Dnlk) as well.

- the relative positions of the high-magnification non-confocal images Dnrk and Dnlk, on the wide-angle confocal image Dlc are each decided.

- Step 830 Determining Whether or not Re-acquisition is Necessary

- the image processing unit 130 determines the necessity of re-acquisition of an image. First, if any of the frames of the moving image that has been acquired falls under any of the following conditions, that frame is determined to be an exemption frame.

- a) Translation movement amount of the frame as to the reference frame is equal to threshold Ttr or more

- b) Average luminance value of the frame is smaller than threshold Ti

- c) S/N ratio of the frame is smaller than threshold Tsn

- the image processing unit 130 determines that this image needs to be re-acquired. In a case where the image processing unit 130 determines that re-acquiring of the image is necessary, the image processing unit 130 requests the data acquiring unit 110 to re-acquire the image, and the flow advances to S810.
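The frame-level check in conditions a) through c) of S830 can be sketched as below. This is only an illustration: the per-frame dictionary keys (`translation`, `mean_luminance`, `snr`) and the function name are hypothetical, and the rule that turns the flagged frames into a re-acquisition decision is not reproduced here because the description above does not state it.

```python
def find_exemption_frames(frames, t_tr, t_i, t_sn):
    """Return indices of frames that meet any of conditions a) to c).

    `frames` is a list of dicts with hypothetical keys:
      'translation'     translation amount relative to the reference frame
      'mean_luminance'  average luminance value of the frame
      'snr'             S/N ratio of the frame
    """
    flagged = []
    for index, frame in enumerate(frames):
        if (frame["translation"] >= t_tr          # a) moved too far
                or frame["mean_luminance"] < t_i  # b) too dark
                or frame["snr"] < t_sn):          # c) too noisy
            flagged.append(index)
    return flagged
```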

- the information processing apparatus 10 acquires confocal images and non-confocal images using image acquisition parameters decided beforehand for each image type, such that images that are more crucial for observation and measurement have a greater data amount. Accordingly, multiple types of images of the eye that are crucial for observation and analysis can be efficiently acquired.

- although conditions relating to acquisition of the multiple types of images are decided beforehand for each type of the multiple types of images in the present embodiment, a configuration may be made where the conditions relating to acquisition for each type of the multiple types of images can be changed by user specification (e.g., selecting conditions relating to acquisition). Also, different conditions relating to acquisition are preferably decided for each type of the multiple types of images. For example, conditions may be decided where confocal images are given priority over non-confocal images, with the non-confocal images not being acquired. Conversely, conditions may be decided where non-confocal images are given priority over confocal images, with the confocal images not being acquired. Also, conditions of not acquiring images may be decided.

- the decision of conditions relating to acquisition preferably includes decision of whether or not to acquire images.

- the conditions relating to acquisition are preferably decided in accordance with the imaging position in the multiple types of images (e.g., photoreceptors and blood vessels).

- the conditions relating to acquisition are preferably decided so that the relationship in magnitude of the number of frames acquired for confocal images (example of data amount) and the number of frames acquired for non-confocal images differs depending on the imaging position.

- confocal images are more suitable than non-confocal images, so a greater number of frames of confocal images is preferably acquired.

- the information processing apparatus performs the following processing on images taken by an SLO apparatus that acquires confocal images and non-confocal images at the same time.

- the operator decides acquisition conditions for the images by selecting from basic patterns registered beforehand regarding multiple types of high-magnification image acquisition parameters at multiple locations. Specifically, in a case where there are provided image acquisition patterns indicated by Pr1 and Pr2 in Fig. 6I using an SLO apparatus such as illustrated in Figs. 3A and 3B that acquires confocal images Dcj and non-confocal images Dnk at the same time, the operator decides the image acquisition parameters by selecting Pr1.

- Fig. 9 illustrates a functional block diagram of the information processing apparatus 10 according to the present embodiment.

- This configuration differs from that in the first embodiment in that the storage unit 120 does not have the preset value list 121 of the imaging conditions, and instead the display control unit 134 has an image acquisition pattern presenting unit 1341.

- the image processing flow according to the present embodiment is as illustrated in Fig. 5B. The processing of the present embodiment is described below.

- Step 511 Deciding Image Acquisition Parameters (1)

- the display control unit 134 displays information relating to registered image acquisition patterns on the monitor 305.

- the image processing unit 130 acquires preset values of image acquisition parameters corresponding to an image acquisition pattern that the operator has selected via the instruction acquiring unit 140. In a case where the operator has specified constraint conditions (upper limit of total imaging time, etc.) or priority conditions (angle or imaging position to be given priority, etc.), the determining unit 1311 and imaging parameter deciding unit 1312 change the images that are the object of acquisition, and the image acquisition parameters, to satisfy the constraint conditions and priority conditions.

- the image processing unit 130 also acquires individual image acquisition parameter values which the operator has specified as necessary, via the instruction acquiring unit 140.

- the determining unit 1311 determines final images that are the object of acquisition, based on preset values, constraint conditions, priority conditions, and individually specified parameter values, and the imaging parameter deciding unit 1312 decides the final image acquisition parameters. Specifics of the image acquisition parameter deciding processing will be described in detail in S711, S721, and S731. Image acquisition parameter deciding processing for wide-angle images is the same as in the first embodiment, so detailed description will be omitted.

- Step 521 Acquiring Images (1)

- the data acquiring unit 110 acquires images based on the image acquisition parameters decided in S511. Specifics of the image acquisition processing will be described in detail in S811, S821, S831, S841, and S851. Image acquisition parameter deciding processing for wide-angle images is the same as in the first embodiment, so detailed description will be omitted.

- Step 531 Display (1)

- the computing unit 133 performs computation among inter-frame-positioned images, and generates an image.

- the display control unit 134 displays the image acquired in S521 and the image generated in this step on the monitor 305.

- Step 541 Determining Whether or not to End

- the instruction acquiring unit 140 obtains an external instruction regarding whether or not to end the processing of the information processing apparatus 10 relating to wide-angle images Dl, high-magnification confocal images Dcj, and high-magnification non-confocal images Dnk.

- the instruction is input by the operator through the keyboard 306 or mouse 307. In a case of having obtained an instruction to end processing, the processing ends. On the other hand, in a case where an instruction is obtained to continue processing, the flow returns to S511, and processing is performed on the next examinee eye (or re-processing on the same examinee eye).

- Step 711 Selecting Image Acquisition Pattern

- the display control unit 134 displays information relating to registered image acquisition patterns on the monitor 305.

- the image processing unit 130 obtains preset values of image acquisition parameters corresponding to the image acquisition pattern that the user has selected via the instruction acquiring unit 140.

- the image acquisition pattern Pr1 is set such that, for both confocal images and non-confocal images, the closer the acquisition position is to the fovea, the finer the images and the more frames that are acquired, and with regard to image type, confocal images are acquired with a higher number of gradations, so that multiple types of images crucial for observation can be effectively acquired.

- the following image acquisition parameters are set as preset values regarding images (Dc5, Dnr5, and Dnl5) since the acquisition position is the fovea, and these are decided as tentative image acquisition parameters.

- the following image acquisition parameters are set as preset values and these are decided as tentative image acquisition parameters.

- the determining unit 1311 and imaging parameter deciding unit 1312 change the images that are the object of acquisition, and the image acquisition parameters, to satisfy the constraint conditions and priority conditions.

- the image processing unit 130 also acquires individual image acquisition parameter values which the operator has specified as necessary, via the instruction acquiring unit 140.

- the determining unit 1311 determines not to acquire some images with low priority, and the imaging parameter deciding unit 1312 decides the image acquisition parameters to satisfy the constraint conditions.
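One way to picture how a constraint such as an upper limit on total imaging time could lead to low-priority images being skipped is the greedy sketch below. It is an assumption-laden illustration rather than the method of the embodiment: the request fields (`name`, `seconds`, `priority`), the numeric values in the usage note, and the greedy drop rule are all hypothetical.

```python
def apply_time_limit(requests, max_total_seconds):
    """Drop the lowest-priority requests until the total time fits.

    `requests` is a list of dicts with hypothetical keys 'name',
    'seconds' (acquisition time) and 'priority' (higher = more important).
    Returns (names_to_acquire, names_dropped).
    """
    # Highest priority first, so the lowest priority sits at the end.
    kept = sorted(requests, key=lambda req: req["priority"], reverse=True)
    total = sum(req["seconds"] for req in kept)
    dropped = []
    while kept and total > max_total_seconds:
        victim = kept.pop()
        total -= victim["seconds"]
        dropped.append(victim["name"])
    return [req["name"] for req in kept], dropped
```

For example, with hypothetical requests for Dc5 (3 s, priority 3), Dnr5 (2 s, priority 2), and Dc1 (2 s, priority 1) and a 5 s limit, this sketch keeps Dc5 and Dnr5 and drops Dc1. A real implementation could instead shorten acquisition time or lower resolution before dropping an image entirely.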

- the subfoveal images Dc5, Dnr5, and Dnl5 are acquired first, after which the parafoveal images Dc1 (and Dnr1 and Dnl1) through Dc4 (and Dnr4 and Dnl4), and Dc6 (and Dnr6 and Dnl6) through Dc9 (and Dnr9 and Dnl9) are acquired.

- Step 731 Deciding Image Acquisition Parameters

- the determining unit 1311 determines images that are the object of acquisition based on the following information, and the imaging parameter deciding unit 1312 decides the image acquisition parameters.

- Preset values of image acquisition parameters corresponding to the image acquisition pattern selected by the operator in S711

- Constraint conditions and priority conditions the operator instructed as necessary in S721

- Individual image acquisition parameter values the operator has changed as necessary in S721

- Step 811 Acquiring Images and Related Data (1)

- the data acquiring unit 110 acquires images based on the image acquisition parameters decided in S511. Detailed procedures of image acquisition are the same as in S810 in the first embodiment, so detailed description will be omitted.

- Step 821 Positioning Images (1)

- the positioning unit 132 performs positioning of images acquired in S811. Detailed procedures of inter-frame positioning and image tiling are the same as in S820 in the first embodiment, so detailed description will be omitted.

- Step 831 Determining Whether or not Re-acquisition is Necessary (1)

- the image processing unit 130 determines whether or not image re-acquisition is necessary according to the following procedures, and in a case where determination is made that re-acquisition is necessary, requests the data acquiring unit 110 for image re-acquisition, and the flow advances to S811. In a case where determination is made that re-acquisition is unnecessary, the flow advances to S841. Detailed procedures of image re-acquisition necessity determination are the same as in S830 in the first embodiment, so detailed description will be omitted.

- Step 841 Saving Results (1)

- the saving unit 135 transmits the examination date, information identifying the examinee eye, and the images acquired in S521, to the data server 40 in a correlated manner.

- Step 851 Determining Whether or not to End Image Acquisition

- the image processing unit 130 references the list of image acquisition parameters decided in S511, and in a case where there is an image not yet acquired in the list, the flow returns to S811 and image acquisition processing is continued. On the other hand, in a case where there are no images not yet acquired in the list, the image acquisition processing ends, and the flow advances to S531.

- the image acquisition patterns are not restricted to that described in the present embodiment, and cases of using the following image acquisition patterns are also included in the present invention.

- Multi-configuration type - Image acquisition patterns where multiple basic patterns are provided

- multi-magnification type - Image acquisition patterns defined as combinations of basic patterns (compound type) - Image acquisition patterns defined uniquely by user and registered (user-defined type)

- preregistered basic patterns are displayed on wide-angle images as the multiple types of high-magnification image acquisition parameters in multiple positions, with the user making a selection therefrom

- the present invention is not restricted to this.

- an arrangement may be made where a list is displayed of preregistered basic pattern names, as the multiple types of high-magnification image acquisition parameters, with the user selecting a desired basic pattern name therefrom.

- a list display may be made of the image acquisition parameters stipulated by the selected basic pattern name, or information relating to image acquisition parameters including image acquisition position may be spatially laid out for a visual display.

- the color may be changed for each image type, to display information relating to image acquisition parameter values and image acquisition positions.

- the information processing apparatus 10 performs the following processing on images taken by an SLO apparatus that acquires confocal images and non-confocal images at the same time.

- the operator decides acquisition conditions for the images by selecting from basic patterns registered beforehand regarding multiple types of high-magnification image acquisition parameters at multiple locations. Accordingly, multiple types of images of the eye that are crucial for observation and analysis can be efficiently acquired.

- Third Embodiment: Deciding Conditions relating to Acquisition of Images by Feature Regions in Images

- the information processing apparatus performs the following processing regarding confocal images Dcj of retinal blood vessels at generally the same imaging positions, and non-confocal images Dnk (Dnr or Dnl) taken of the retinal blood vessels with the aperture opened wide, from the right side or left side of the way the retinal blood vessels run. That is to say, the configuration has been made so that the more parts crucial for observation and measurement are included in an image, based on image features or lesion candidate regions acquired in the images, the more types of images, or the finer images, are acquired. Specifically, confocal images Dcj and non-confocal images Dnk of retinal blood vessels such as illustrated in Fig. 6J are acquired. A case will be described where the more arteriovenous crossing portions, which are areas of predilection for retinal vein occlusion, are included in an image, the more types of images, or the finer images, are acquired.

- Fig. 2B illustrates the configuration of apparatuses connected to the information processing apparatus 10 according to the present embodiment.

- the present embodiment differs from the first embodiment in that the information processing apparatus 10 is connected to a time phase data acquisition apparatus 50, in addition to the SLO imaging apparatus 20 and data server 40.

- the time phase data acquisition apparatus 50 is an apparatus that acquires biosignal data (time phase data) that autonomously and cyclically changes, such as a sphygmograph or electrocardiograph, for example.

- the time phase data acquisition apparatus 50 acquires time phase data Sj at the same time as acquiring images Dnk, in accordance with operations performed by an unshown operator.

- the acquired time phase data Sj is sent to the information processing apparatus 10 and data server 40.

- the time phase data acquisition apparatus 50 may be directly connected to the SLO imaging apparatus 20.

- the light reception method of the SLO imaging apparatus 20 according to the present embodiment is configured such that the diameter and position of the aperture (pinhole) can be changed, so as to receive confocal signals as illustrated in Fig. 3C, or the diameter of the aperture can be enlarged and the aperture moved so as to receive non-confocal signals as illustrated in Fig. 3D.

- in addition to the wide-angle images Dlc and Dln and high-magnification images Dcj, Dnrk, and Dnlk of the examinee eye, and acquisition conditions such as the fixation target positions Fl and Fcn used at the time of acquisition, the data server 40 also holds image features of the eye. Any image features of the eye may be used, but the present embodiment handles retinal blood vessels and capillaries Q, and photoreceptor damage regions.

- the time phase data Sj output from the time phase data acquisition apparatus 50 and image features output from the information processing apparatus 10 are saved in the server.

- the time phase data Sj and image features of the eye are transmitted to the information processing apparatus 10 upon request by the information processing apparatus 10.

- Fig. 10 illustrates a functional block diagram of the information processing apparatus 10 according to the present embodiment.

- This configuration differs from the first embodiment with regard to the point that the data acquiring unit 110 has a time phase data acquiring unit 114, the deciding unit 131 has an image feature acquiring unit 1313, and the display control unit 134 does not include the image acquisition parameters presenting unit 1341.

- the image processing flow according to the present embodiment is the same as that illustrated in Fig. 5B. Other than S511, S521, and S531, the flow is the same as in the second embodiment, so just the processing of S511, S521, and S531 will be described in the present embodiment.

- the data acquiring unit 110 acquires images for image feature acquisition.

- wide-angle images Dlc are acquired as images for feature acquisition.

- the method of acquisition of wide-angle images is the same as in the first embodiment, so description will be omitted.

- the image feature acquiring unit 1313 acquires retinal blood vessel regions and arteriovenous crossing portions as image features from the wide-angle images Dlc.

- the image processing unit 130 tentatively determines the preset values of image acquisition parameters based on image features acquired by the image feature acquiring unit 1313.

- the determining unit 1311 and imaging parameter deciding unit 1312 change the images that are the object of acquisition, and the image acquisition parameters, to satisfy the constraint conditions and priority conditions.

- the image processing unit 130 also acquires individual image acquisition parameter values which the operator has specified as necessary, via the instruction acquiring unit 140.

- the determining unit 1311 determines final images that are the object of acquisition, based on preset values, constraint conditions, priority conditions, and individually specified parameter values, and the imaging parameter deciding unit 1312 decides the final image acquisition parameters.

- Step 521 Acquiring Images (2)

- the data acquiring unit 110 acquires high-magnification images and time phase data based on the image acquisition parameters decided in S511. Confocal images Dcj and non-confocal images Dnk are acquired following a retinal artery arcade A in the present embodiment, as illustrated in Fig. 6J.

- the time phase data acquiring unit 114 requests the time phase data acquisition apparatus 50 for time phase data Sj relating to biological signals.

- a sphygmograph serves as the time phase data acquisition apparatus, and is used to acquire pulse wave data Sj from the earlobe of the subject. This pulse wave data Sj is expressed as a cyclic point sequence, with acquisition time on one axis and the pulse wave signal values measured by the sphygmograph on the other axis.

- the time phase data acquisition apparatus 50 acquires and transmits the time phase data Sj corresponding to the acquisition request.

- the time phase data acquiring unit 114 receives this pulse wave data Sj from the time phase data acquisition apparatus 50 via the LAN 30.

- the time phase data acquiring unit 114 stores the received time phase data Sj in the storage unit 120.

- there are two conceivable timings relating to acquisition of the time phase data Sj by the time phase data acquisition apparatus 50; one is a case where the confocal data acquiring unit 111 or non-confocal data acquiring unit 112 starts image acquisition in conjunction with a particular phase of the time phase data Sj, and the other is a case where acquisition of pulse wave data Pi and image acquisition are simultaneously started immediately after an image acquisition request.

- acquisition of time phase data Pi and image acquisition are simultaneously started immediately after an image acquisition request.

- the time phase data Pi of each image is acquired by the time phase data acquiring unit 114, the extreme value in each time phase data Pi is detected, and the cardiac cycle and relative cardiac cycle are calculated.

- the relative cardiac cycle is a relative value represented by a floating-point number between 0 and 1 where the cardiac cycle is 1.
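The calculation of the cardiac cycle and relative cardiac cycle from the time phase data can be sketched as follows. This is a minimal illustration under stated assumptions: the extreme values are taken to be local maxima (pulse wave peaks), one cardiac cycle is taken to be the interval between consecutive peaks, and frames outside any complete cycle are given `None`. The function names are hypothetical.

```python
def local_maxima(times, values):
    """Return the times of local maxima (a simple extreme-value detector)."""
    return [
        times[i]
        for i in range(1, len(values) - 1)
        if values[i - 1] < values[i] >= values[i + 1]
    ]


def relative_cardiac_cycle(frame_times, peak_times):
    """Map each frame time to a phase in [0, 1) within its cardiac cycle.

    A cycle is the interval between two consecutive peaks, normalized so
    that its length is 1. Frames before the first peak or at/after the
    last peak get None, because no complete cycle contains them.
    """
    phases = []
    for t in frame_times:
        phase = None
        for start, end in zip(peak_times, peak_times[1:]):
            if start <= t < end:
                phase = (t - start) / (end - start)
                break
        phases.append(phase)
    return phases
```

The cardiac cycle itself is the difference between consecutive entries of the peak list; real pulse wave data would usually need smoothing before peak detection.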

- the data acquiring unit 110 requests the SLO imaging apparatus 20 for acquisition of confocal images Dcj, non-confocal images Dnrk and Dnlk, and corresponding fixation target position data.

- the SLO imaging apparatus 20 acquires and transmits the confocal images Dcj, non-confocal images Dnrk and Dnlk, and corresponding fixation target position Fcn.

- the data acquiring unit 110 receives the confocal images Dcj, non-confocal images Dnrk and Dnlk, and corresponding fixation target position Fcn, from the SLO imaging apparatus 20 via the LAN 30, and stores these in the storage unit 120.

- Figs. 6C and 6D illustrate an example of a confocal image Dc and non-confocal image Dnr in a case of having photographed a retinal blood vessel.

- the confocal image Dc has strong reflection from the nerve fiber layer, so the background noise makes positioning difficult.

- the non-confocal image Dnr (Dnl) of the R channel (L channel) has higher contrast at the blood vessel wall at the right side (left side).

- the R channel image Dnr is also called a knife edge image, and may be used alone to observe retinal blood vessels including blood vessel walls.

- Step 531 Display (2)

- the display control unit 134 displays images acquired in S511 and S521 on the monitor 305.

- an image obtained by compositing the R channel image and L channel image is displayed on the monitor 305 using the positioning parameter values decided in S821.

- the type of images to be display is switched using a graphical user interface (GUI) that has been prepared for this purpose.

- although radio buttons are used for switching in the present embodiment, any GUI arrangement may be used for the switching.

- the types of images to be switched are the two types of R channel images Dnr and L channel images Dnl.

- the computing unit 133 may perform computation among inter-frame positioned images, and generate an image.

- Step 712 Tentative Setting of Image Acquisition Parameters by Image Features

- the data acquiring unit 110 acquires images for image feature acquisition.

- wide-angle images Dlc are acquired as images for image feature acquisition.

- the image feature acquiring unit 1313 detects the retinal blood vessel regions and arteriovenous crossing portions as image features from the wide-angle images Dlc.

- the images from which image features are acquired are not restricted to wide-angle images, and cases of acquiring image features from acquired high-magnification images (Dcj or Dnk), for example, are also included in the present invention.

- Retinal blood vessels have linear shapes, so the present embodiment employs a retinal blood vessel region detection method where a filter that enhances linear structures is used to extract the retinal blood vessels.

- a wide-angle image Dln is smoothed by a Gaussian function of a size σ equivalent to the radius of the arcade blood vessel, and thereupon a tube enhancement filter based on a Hessian matrix is applied, and binarization is performed at a threshold value Ta, thus extracting the arcade blood vessels.

- the detection method of arteriovenous crossing portions is not restricted to this, and any known retinal blood vessel extraction technique may be used.

- a crossing portion is determined when there are four or more blood vessel regions at the perimeter of the filter, and there is a blood vessel region at the center portion of the filter.
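
The crossing test above can be sketched on a binarized vessel mask. This is a minimal illustration, assuming a square filter window whose perimeter is walked once and whose separate runs of vessel pixels are counted as blood vessel regions; the function names and the window half-size `h` are hypothetical, and the caller is assumed to keep the window inside the image.

```python
def perimeter_coords(r, c, h):
    """Clockwise (row, col) coordinates of the square ring at distance h from (r, c)."""
    top = [(r - h, c + d) for d in range(-h, h)]
    right = [(r + d, c + h) for d in range(-h, h)]
    bottom = [(r + h, c - d) for d in range(-h, h)]
    left = [(r - d, c - h) for d in range(-h, h)]
    return top + right + bottom + left

def count_perimeter_regions(mask, r, c, h):
    """Count separate runs of vessel pixels met while walking the ring once."""
    ring = [mask[y][x] for y, x in perimeter_coords(r, c, h)]
    # A run starts wherever a vessel pixel follows a background pixel;
    # ring[i - 1] wraps around for i == 0, so the walk is circular.
    return sum(1 for i in range(len(ring)) if ring[i] and not ring[i - 1])

def is_crossing(mask, r, c, h, min_regions=4):
    """True when the center is vessel and at least min_regions runs touch the perimeter."""
    return bool(mask[r][c]) and count_perimeter_regions(mask, r, c, h) >= min_regions
```

Two vessels crossing at the window center produce four perimeter runs, whereas a single vessel passing through produces only two.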

- Retinal arteries contain more hemoglobin than retinal veins and thus are higher in luminance, so the lowest luminance value within each of the crossing blood vessel regions is calculated from the detected crossing portions, and in a case where the absolute value of the difference among the lowest luminance values is equal to or larger than a threshold value T1, this is determined to be an arteriovenous crossing.

- Retinal veins tend to be lower with regard to luminance distribution of the intravascular space region (the region where blood flows), so in the present invention, if the lowest luminance value in the intravascular space region in confocal images and non-confocal images is smaller than Tv, this is identified as a retinal vein, and if Tv or larger, as a retinal artery.
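
The two luminance rules above can be sketched as simple threshold checks. This is an illustration only: the T1 criterion is read here as the absolute difference between the lowest luminance values of the two crossing vessels, which is an interpretation of the text, and the function names and any threshold values passed in are hypothetical.

```python
def is_arteriovenous_crossing(min_lum_a, min_lum_b, t1):
    """A crossing is arteriovenous when the two vessels' lowest luminances differ by T1 or more."""
    return abs(min_lum_a - min_lum_b) >= t1

def classify_vessel(lumen_pixels, tv):
    """Label a vessel from the lowest luminance in its intravascular space region."""
    return "vein" if min(lumen_pixels) < tv else "artery"
```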

- the image feature acquiring unit 1313 acquires anatomical features such as arteriovenous crossing portions

- a lesion candidate region such as the photoreceptor defect portion Dc5 in Fig. 6K may be acquired as an image feature.

- a lesion candidate region will also be referred to as a lesion region that is an example of a state of observation of an object, such as photoreceptors or the like.

- detection is made in the present embodiment according to the following procedures.

- Fourier transform is performed on the confocal images Dcj, a low-pass filter is applied to cut out high-frequency signal values, following which inverse Fourier transform is performed, and each region having a value smaller than a threshold T2 is detected as a photoreceptor defect region.
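
The Fourier-domain procedure above can be sketched for a small image. This is a minimal illustration, assuming a circular cutoff in wrapped frequency indices and a naive discrete Fourier transform suitable only for tiny grids; the function names, the `cutoff` parameter, and any value chosen for T2 are hypothetical.

```python
import cmath

def dft2(img, inverse=False):
    """Naive 2D discrete Fourier transform of a small grid (O(N^4), for illustration)."""
    rows, cols = len(img), len(img[0])
    sign = 1 if inverse else -1
    out = [[0j] * cols for _ in range(rows)]
    for u in range(rows):
        for v in range(cols):
            acc = 0j
            for y in range(rows):
                for x in range(cols):
                    angle = 2 * cmath.pi * (u * y / rows + v * x / cols)
                    acc += img[y][x] * cmath.exp(sign * 1j * angle)
            out[u][v] = acc / (rows * cols) if inverse else acc
    return out

def low_pass(img, cutoff):
    """Zero every frequency component beyond `cutoff`, then transform back."""
    rows, cols = len(img), len(img[0])
    spec = dft2(img)
    for u in range(rows):
        for v in range(cols):
            fu = min(u, rows - u)  # wrapped (signed-magnitude) frequency index
            fv = min(v, cols - v)
            if fu * fu + fv * fv > cutoff * cutoff:
                spec[u][v] = 0j
    return [[z.real for z in row] for row in dft2(spec, inverse=True)]

def defect_mask(img, cutoff, t2):
    """True where the low-pass filtered image falls below the threshold T2."""
    return [[value < t2 for value in row] for row in low_pass(img, cutoff)]
```

The low-pass step removes the fine periodic photoreceptor mosaic, so that only broad dark areas fall below T2 rather than the gaps between individual cells.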

- the determining unit 1311 and imaging parameter deciding unit 1312 tentatively decide preset values of the image acquisition parameters based on image features acquired by the image feature acquiring unit 1313. For example, in a case of observing whether or not there is a major retinal blood vessel lesion such as blockage of retinal veins, an image of a retinal blood vessel region including retinal blood vessel walls is acquired. It is understood that arteriovenous crossing portions within three diameters worth of the optic disc Od from the center thereof are areas of predilection for blockage of retinal veins, and accordingly this is a portion crucial for observation.

- the confocal image Dc here is not for observation and measurement, but is an image used for tiling as to the wide-angle image Dc, so the number of frames does not have to be very large.

- the determining unit 1311 and imaging parameter deciding unit 1312 change the images that are the object of acquisitions, and the image acquisition parameters, to satisfy the constraint conditions and priority conditions.

- the image processing unit 130 also acquires individual image acquisition parameter values which the operator has specified as necessary, via the instruction acquiring unit 140.

- the operator sets the upper limit of total imaging time to 60 seconds. The following are also set as priority conditions: i) hold image acquisition parameters at positions including arteriovenous crossing portions within three diameters worth of the optic disc from the center thereof; ii) the order of priority regarding availability of acquisition of image types is R channel image, L channel image, confocal image, in that order from high priority to low. Further, i) has higher priority than ii). While the acquisition time of images was a total of 138 seconds for 26 locations in S712, instruction is given here to set the upper limit value for the total imaging time to 60 seconds, while holding image types and image acquisition parameters as much as possible at positions including arteriovenous crossing portions within three diameters worth of the optic disc from the center thereof.

- the determining unit 1311 and the parameter deciding unit 1312 change the images to be acquired and the image acquisition parameters so that the priority conditions and the upper limit value are satisfied. In other words, determination is made by the determining unit 1311 that a confocal image Dc, R channel image Dnr, and L channel image Dnl need to be acquired at positions including arteriovenous crossing portions within three diameters worth of the optic disc from the center thereof, but only the R channel image Dnr needs to be acquired at positions not including arteriovenous crossing portions within three diameters worth of the optic disc from the center thereof.
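
One way to picture how the constraint and priority conditions interact is the following sketch. It assumes, purely for illustration, that every image takes the same fixed time and that image types are dropped group-wide, lowest priority first, starting with positions that do not include an arteriovenous crossing; the function name, the uniform per-image time, and the position labels are hypothetical and are not taken from the source.

```python
TYPE_PRIORITY = ["R", "L", "confocal"]  # high to low, per priority condition ii)

def plan_acquisition(positions, seconds_per_image, time_limit):
    """positions: list of (name, near_crossing). Returns {name: [image types to acquire]}."""
    plan = {name: list(TYPE_PRIORITY) for name, _ in positions}

    def total_time():
        return seconds_per_image * sum(len(types) for types in plan.values())

    # Condition i) outranks ii): thin out non-crossing positions before crossing ones.
    for group_is_crossing in (False, True):
        group = [name for name, near in positions if near == group_is_crossing]
        for image_type in reversed(TYPE_PRIORITY):  # drop lowest priority first
            if total_time() <= time_limit:
                return plan
            for name in group:
                plan[name].remove(image_type)
    return plan
```

With 26 positions of which 2 include a crossing, a hypothetical 2 seconds per image, and a 60 second limit, this sketch keeps all three image types at the crossing positions and only the R channel image elsewhere, which matches the outcome described above.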

- the proposed technique can efficiently acquire various types of images of the eye that are crucial for observation.

- Step 732 Deciding Image Acquisition Parameters

- the determining unit 1311 determines images that are the object of acquisition based on the following information, and the imaging parameter deciding unit 1312 decides the image acquisition parameters.
  - Preset values of image acquisition parameters corresponding to the image acquisition pattern selected by the operator in S712
  - Constraint conditions and priority conditions the operator instructed as necessary in S722
  - Individual image acquisition parameter values the operator has changed as necessary in S722

- the determining unit 1311 determines that a confocal image Dc, R channel image Dnr, and L channel image Dnl are to be acquired at positions including arteriovenous crossing portions within three diameters worth of the optic disc from the center thereof at the image acquisition pattern in Fig. 6J, but only the R channel image Dnr is to be acquired at positions not including arteriovenous crossing portions.

- the imaging parameter deciding unit 1312 decides, for positions not including arteriovenous crossing portions within three diameters worth of the optic disc from the center thereof (the total of 24 positions indicated by dotted frames and single-line frames in Fig. 6J)

- Step 811 Acquiring Images and Related Data (2)

- the data acquiring unit 110 acquires images based on the image acquisition parameters decided in S511. Detailed procedures of image acquisition are the same as in S521 and in S810 in the first embodiment, so detailed description will be omitted.

- Step 821 Positioning Images (2)

- the positioning unit 132 performs positioning of images acquired in S811. Detailed procedures of inter-frame positioning and image tiling are the same as in S820 in the first embodiment, so detailed description will be omitted. Note however, in the present embodiment, the positioning unit 132 performs inter-frame positioning for each of the image types (Dcj, Dnr, and Dnl). Composited images are generated for the image types (Dcj, Dnr, and Dnl). Composited images are generated according to the following procedures to avoid change in the shape of the blood vessels among frames due to influence of the heartbeat when observing retinal blood vessel walls. That is to say, instead of averaging all frames, just frames correlated with pulse wave signals belonging to a particular phase interval are selected and composited.

- the phase intervals of pulse waves are divided into five intervals, and the frames belonging to the interval including the phase where the pulse wave signal value is minimal are selected and composited.

- the frame selection method is not restricted to this, and any selection method that yields the effect of eliminating the influence of the heartbeat can be used.
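
The phase-based frame selection described above can be sketched as follows. This is a minimal illustration, assuming each frame already carries a relative phase in the range 0 to 1 and a pulse wave signal value, and that compositing is a pixel-wise mean of equally sized frames; the function names are hypothetical.

```python
def select_frames_by_phase(phases, pulse_values, n_intervals=5):
    """Indices of frames whose phase lies in the interval containing the pulse wave minimum."""
    def interval(phase):
        # Clamp so that a phase of exactly 1.0 falls in the last interval.
        return min(int(phase * n_intervals), n_intervals - 1)

    min_index = min(range(len(pulse_values)), key=pulse_values.__getitem__)
    target = interval(phases[min_index])
    return [i for i, p in enumerate(phases) if interval(p) == target]

def composite(frames, indices):
    """Pixel-wise mean of the selected frames (frames are equally sized 2D lists)."""
    n = len(indices)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(frames[i][y][x] for i in indices) / n for x in range(cols)]
            for y in range(rows)]
```

Only the frames falling in the selected phase interval contribute to the composite, so the vessel shape is averaged at a consistent point of the heartbeat.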

- the positioning unit 132 performs tiling of the image types (Dcj, Dnr, and Dnl) as to the wide-angle image Dlc. Further, the positioning unit 132 performs inter-image positioning among the various types of composited images at each acquisition position. Thus, the relative position among image types at generally the same acquisition position is calculated.

- Step 831 Determining Whether or not Re-acquisition is Necessary (2)

- the saving unit 135 transmits the examination date, information identifying the examinee eye, images acquired in S521, pulse wave data, and the positioning parameter values to the data server in a correlated manner.

- the image acquisition parameters may be decided based on lesion candidate regions, such as photoreceptor defect regions, acquired by the image feature acquiring unit 1313 from confocal images as image features. It is generally understood that photoreceptors become defective from the outer segments, next become defective at the inner segments, and finally reach necrosis.

- NPL 2 describes that confocal images Dcj enable photoreceptor outer segment defects to be observed, while non-confocal images Dnk enable photoreceptor inner segment defects to be observed. Accordingly, it can be understood that photoreceptor defect regions in confocal images and Split Detector images at the same imaging position are important for observation and analysis of the level of the photoreceptor lesion.

- the image feature acquiring unit 1313 detects photoreceptor defect regions in each mid-magnification image Dc2o. In a case where no photoreceptor defect region is detected, the flow advances to confocal image acquisition of the mid-magnification image at the next acquisition position. In a case where a photoreceptor defect region has been detected, the determining unit 1311 determines that there is a need to acquire a high-magnification confocal image Dc1m at the same acquisition position, and corresponding high-magnification non-confocal images Dnr and Dnl.

- the data acquiring unit 110 acquires the high-magnification images Dc1m, Dnr, and Dnl with the decided image acquisition parameters.
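
The defect-triggered flow above can be sketched as a simple loop over acquisition positions. This is an illustration only: `has_defect` stands in for the defect detection performed on each mid-magnification image, and the function name and position labels are hypothetical.

```python
HIGH_MAG_TYPES = ("Dc1m", "Dnr", "Dnl")  # confocal plus R and L channel non-confocal images

def plan_high_magnification(positions, has_defect):
    """Return (position, image types) pairs for positions where a defect region was detected."""
    requests = []
    for position in positions:
        # No defect: advance to the mid-magnification image at the next position.
        if not has_defect(position):
            continue
        requests.append((position, HIGH_MAG_TYPES))
    return requests
```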

- the image acquisition parameters may be decided for the high-magnification images based on photoreceptor defect regions acquired from wide-angle images Dlc instead of mid-magnification images Dc2o.

- the information processing apparatus 10 performs the following processing on a confocal image Dcj acquired at generally the same position and on non-confocal images Dnk with the diameter of the aperture enlarged and moved to the right and left.

- the information processing apparatus is configured such that, in a case where multiple types of images have been acquired on different examination dates, the more examination dates on which an image has been acquired, the more that image is an object of acquisition in the present examination, and acquisition is performed with generally the same parameters.

- the configuration of apparatuses connected to the information processing apparatus 10 according to the present embodiment is as illustrated in Fig. 2A, and differs from the third embodiment in that no time phase data acquisition apparatus 50 is connected.

- the functional block configuration of the information processing apparatus 10 according to the present embodiment differs from that in the third embodiment with regard to the points that the data acquiring unit 110 does not have the time phase data acquiring unit 114 and image feature acquiring unit 1313, and the positioning unit 131 includes a conformability calculating unit 1314.

- the confocal signal and non-confocal signal reception method at the SLO imaging apparatus 20 according to the present embodiment is the same as in the first and second embodiments, in that the configuration is made to receive confocal signals and two channels of non-confocal signals, as illustrated in Fig. 3E.

- Step 511 Deciding Image Acquisition Parameters (3)

- the determining unit 1311 and image processing method deciding unit 1312 decide the images to be generated and the image generating method, based on the conformability.

- the determining unit 1311 and imaging parameter deciding unit 1312 change the images that are the object of acquisitions, and the image acquisition parameters, to satisfy the constraint conditions and priority conditions.

- the image processing unit 130 also acquires individual image acquisition parameter values which the operator has specified as necessary, via the instruction acquiring unit 140.