JP2011123773A - Device having touch sensor, tactile feeling presentation method, and tactile feeling presentation program - Google Patents


- Publication number

- JP2011123773A (application number JP2009282259A)

- Authority

- JP

- Japan

- Prior art keywords

- tactile sensation

- touch sensor

- storage unit

- data

- storage area


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Granted


Landscapes

- Position Input By Displaying (AREA)

- User Interface Of Digital Computer (AREA)

Abstract

[Problem] To provide an apparatus that, during a file-moving operation, allows a user to recognize how much free capacity the destination folder will have after the file is moved.

[Solution] An apparatus 101 having a touch sensor according to the present invention includes: a storage unit 104 having a plurality of storage areas; a touch sensor 103; a tactile sensation providing unit 105 that presents a tactile sensation to a touch target touching the touch sensor 103; and a control unit 106 that, when data stored in a predetermined storage area is to be stored in a storage area different from the predetermined storage area, controls the tactile sensation providing unit 105 to present to the touch target a tactile sensation determined based on the size of the data and the free capacity or used capacity of the different storage area.

[Selected drawing] FIG. 1

Description

The present invention relates to a device having a touch sensor, a tactile sensation presentation method, and a tactile sensation presentation program.

In devices such as personal computers (PCs) and workstations, file systems with a graphical user interface (GUI) are widely used. With recent increases in CPU speed and decreases in memory cost, GUI-based file systems are increasingly used in portable devices as well, such as mobile phones, PDAs (Personal Digital Assistants), and portable game machines. In such a file system, files and folders are displayed on the screen as icons (objects), and a file or folder can be moved by moving its icon with a drag-and-drop operation.

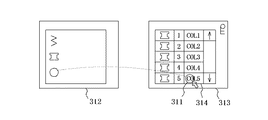

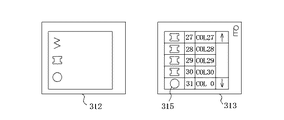

Various inventions relating to drag and drop have been made (see, for example, Patent Document 1). The invention of Patent Document 1 is described with reference to FIGS. 13 to 15. FIG. 13 shows the user dragging an icon 311 from a source window 312 (the window of the source folder) to a target window 313 (the window of the destination folder). A drag is normally performed by placing the cursor over the icon to be moved, pressing a mouse button, and moving the cursor while holding the button down. As shown in FIG. 14, when the icon 311 reaches the inner boundary 314 of the target window 313, the target window 313 scrolls. By scrolling, the user brings the desired drop position 315 into view in the target window 313 and completes the drop by releasing the mouse button at the desired position 315. As a result, the icon 311 is displayed in the target window 313, as shown in FIG. 15.

When a file is moved by drag and drop, the free capacity of the destination folder decreases by the size of the file being moved (the moved file). Therefore, if the free capacity of the destination folder is smaller than the size of the moved file, the user cannot move the file. Even if the file can be moved, little free capacity left in the destination folder afterward may affect the operation of applications (such as the operating system) stored in that folder. Such capacity shortages are especially noticeable in portable devices without a large internal memory and in small external memories connected through an external interface.

To prevent these problems, the user must check the size of the moved file and the capacity of the destination folder before moving the file, or check the capacity of the destination folder after moving it. Checking the file size and the destination's free capacity is a step separate from the move itself and is troublesome for the user.

Accordingly, the present invention has been made in view of the above problems, and its object is to provide an apparatus that, during a file-moving operation, allows a user to recognize the free capacity the destination folder will have after the file is moved.

To solve the problems described above, an apparatus having a touch sensor according to a first aspect comprises:

a storage unit having a plurality of storage areas;

a touch sensor;

a tactile sensation providing unit that presents a tactile sensation to a touch target touching the touch sensor; and

a control unit that, when data stored in a predetermined storage area is to be stored in a storage area different from the predetermined storage area, controls the tactile sensation providing unit to present to the touch target a tactile sensation determined based on the size of the data and the free capacity or used capacity of the different storage area.

Preferably, the apparatus further includes:

a display unit,

wherein, when an icon of the data displayed on the display unit is being moved by input to the touch sensor, the control unit calculates a motion vector of the data and identifies the different storage area based on the motion vector.

An apparatus having a touch sensor according to a second aspect comprises:

a first storage unit;

a second storage unit different from the first storage unit;

a touch sensor;

a tactile sensation providing unit that presents a tactile sensation to a touch target touching the touch sensor; and

a control unit that, when data stored in the first storage unit is to be stored in the second storage unit, controls the tactile sensation providing unit to present to the touch target a tactile sensation determined based on the size of the data and the free capacity or used capacity of the second storage unit.

Preferably, the apparatus further includes:

a display unit,

wherein, when an icon of the data displayed on the display unit is being moved by input to the touch sensor, the control unit calculates a motion vector of the data and identifies the second storage unit based on the motion vector.

Although the solution of the present invention has been described above as an apparatus, the present invention can also be realized as a method, a program, and a storage medium storing the program that substantially correspond to the apparatus, and it should be understood that these are also included in the scope of the present invention.

For example, a tactile sensation presentation method that realizes the first aspect of the present invention as a method includes:

a step of identifying data stored in a predetermined storage area of a storage unit and a storage area different from the predetermined storage area; and

a step of presenting, to a touch target touching a touch sensor, a tactile sensation determined based on the size of the identified data and the free capacity or used capacity of the identified different storage area.

Preferably, the tactile sensation presentation method further includes:

a step of, when an icon of the data displayed on the display unit is being moved by input to the touch sensor, calculating a motion vector of the data and identifying the different storage area based on the motion vector.

A tactile sensation presentation method that realizes the second aspect of the present invention as a method includes:

a step of identifying data stored in a first storage unit and a second storage unit different from the first storage unit; and

a step of presenting, to a touch target touching a touch sensor, a tactile sensation determined based on the size of the identified data and the free capacity or used capacity of the identified second storage unit.

Preferably, the tactile sensation presentation method further includes:

a step of, when an icon of the data displayed on the display unit is being moved by input to the touch sensor, calculating a motion vector of the data and identifying the second storage unit based on the motion vector.

A tactile sensation presentation program that realizes the first aspect of the present invention as a program causes a computer mounted in a device having a touch sensor to function as:

means for identifying data stored in a predetermined storage area of a storage unit and a storage area different from the predetermined storage area; and

means for performing control so that a tactile sensation determined based on the size of the identified data and the free capacity or used capacity of the identified different storage area is presented to a touch target touching the touch sensor.

Preferably, the tactile sensation presentation program further causes the computer to function as:

means for, when an icon of the data displayed on the display unit is being moved by input to the touch sensor, calculating a motion vector of the data and identifying the different storage area based on the motion vector.

A tactile sensation presentation program that realizes the second aspect of the present invention as a program causes a computer mounted in a device having a touch sensor to function as:

means for identifying data stored in a first storage unit and a second storage unit different from the first storage unit; and

means for performing control so that a tactile sensation determined based on the size of the identified data and the free capacity or used capacity of the identified second storage unit is presented to a touch target touching the touch sensor.

Preferably, the tactile sensation presentation program further causes the computer to function as:

means for, when an icon of the data displayed on the display unit is being moved by input to the touch sensor, calculating a motion vector of the data and identifying the second storage unit based on the motion vector.

With the device having a touch sensor according to the present invention configured as described above, a tactile sensation determined based on the size of the data (file) being moved and the free capacity or used capacity of the destination storage area or storage unit (hereinafter abbreviated as the free capacity of the destination storage area, etc.) is presented to the touch target. The user can therefore recognize by touch the free capacity that the destination storage area, etc. will have after the data is moved. In other words, the user learns the post-move free capacity without checking the size of the data and the free capacity of the destination separately from the file-moving operation. Because the post-move free capacity is known, the user can also tell whether the data will fit in (can be stored in) the destination storage area, etc.

Embodiments of the present invention are described below with reference to the drawings.

(First embodiment)

FIG. 1 is a functional block diagram showing the schematic configuration of a device having a touch sensor according to the first embodiment of the present invention. Examples of the device 101 having a touch sensor according to the present invention (hereinafter simply the device 101) include a PDA, a PC, a mobile phone, a portable game machine, a portable music player, and a portable TV. The device 101 includes a display unit 102, a touch sensor 103, a storage unit 104, a tactile sensation providing unit 105, and a control unit 106.

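The display unit 102 displays icons, windows, and the like representing files, folders, and so on, and is configured using, for example, an LCD (Liquid Crystal Display) or an organic EL display. The data recited in the claims includes files, folders, and the like; for convenience, the following description deals with moving a file.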

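The touch sensor 103 detects contact by the user's finger or the like as input to the device 101. Examples include the touch sensor of a touch panel, a touch pad of the kind often mounted on notebook personal computers (laptops), a mouse button, a pointing stick, and a trackball. For convenience, the touch sensor 103 is assumed below to be the touch sensor of a touch panel. The touch sensor 103 of the touch panel detects input from a touch target such as the user's finger or a stylus pen (hereinafter simply the user's finger) and uses a known method such as the resistive film, capacitive, or optical method. The user's finger does not have to physically press the touch sensor 103 for input to be detected. For example, an optical touch sensor 103 detects the position at which infrared light over the sensor is blocked by the user's finger, so no pressing is needed.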

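The storage unit 104 stores various input information and data such as files, and also functions as work memory and the like; examples include a hard disk drive (HDD), an SD memory card, USB memory, and SmartMedia. The storage unit 104 can have a plurality of storage areas, for example, a plurality of drives created by partitioning (such as a C drive and a D drive) or a plurality of capacity-limited folders within the same drive. The storage unit 104 is not limited to a hard disk drive built into the device 101 and may be an external memory such as an SD memory card, USB memory, or SmartMedia. Furthermore, the storage unit 104 is not limited to a single piece of hardware and may refer to several; for example, a hard disk drive and an SD memory card may together serve as the storage unit 104. In that case, to distinguish them, the storage units are labeled 104a, 104b, and 104c and called the first storage unit, the second storage unit, and the third storage unit, respectively.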

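The tactile sensation providing unit 105 vibrates the touch sensor 103 to present a tactile sensation to the user's finger (the touch target) pressing the touch sensor 103, and is configured using a vibration element such as a piezoelectric element. Various tactile sensations can be presented by setting the frequency, period (wavelength), amplitude, and waveform as appropriate.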

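The control unit 106 controls and manages the entire device 101, including each of its functional blocks. The control unit 106 can be implemented as software running on any suitable processor such as a CPU (central processing unit), or by a dedicated processor specialized for each process (for example, a DSP (digital signal processor)). A display controller for the display unit 102 (for example, an LCD controller), a touch sensor controller for the touch sensor 103, and a driver for the tactile sensation providing unit 105 may also be provided independently of the control unit 106.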

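The control unit 106 is described in more detail. When a file is moved from a predetermined storage area of the storage unit 104a to a different storage area of the storage unit 104a, or from the first storage unit 104a to a second storage unit 104b different from the first storage unit 104a, the control unit 106 determines a tactile sensation based on the size of the file being moved (the moved file) and the free capacity or used capacity of the destination storage area or second storage unit 104b (hereinafter abbreviated as the destination storage area, etc.). In other words, the tactile sensation is determined by the free or used capacity that the destination storage area, etc. will have after the move. The free capacity and the used capacity sum to the total capacity in which the storage unit or storage area can store data; they are inseparable in that any increase in used capacity reduces the free capacity by the same amount. For convenience, only the free capacity is discussed below. The control unit 106 determines a different tactile sensation for each of the following cases: the post-move free capacity of the destination storage area, etc. would be negative (that is, the moved file cannot be stored there); the post-move free capacity of the destination folder would be tight; and the post-move free capacity of the destination folder would be ample. The capacity used as the criterion for tight versus ample can be set arbitrarily. The control unit 106 then controls the tactile sensation providing unit 105 to present the determined tactile sensation to the user's finger (the touch target). From the sensation transmitted to the finger, the user can recognize whether the file fits in the destination storage area, etc., and, if so, how much free capacity will remain.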
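Because free capacity plus used capacity equals the total capacity, the post-move free capacity can be derived from either quantity. The following is a minimal Python sketch, assuming capacities and sizes are plain byte counts; the function name `free_after_move` is hypothetical and not taken from the patent.

```python
from typing import Optional


def free_after_move(file_size: int, total_capacity: int,
                    free: Optional[int] = None,
                    used: Optional[int] = None) -> int:
    """Free capacity the destination would have after storing the file.

    Either the current free or the current used capacity may be given,
    because free + used == total_capacity. A negative result means the
    file would not fit in the destination.
    """
    if free is None:
        if used is None:
            raise ValueError("give either the free or the used capacity")
        free = total_capacity - used
    return free - file_size
```

For example, `free_after_move(300, 1000, used=600)` returns 100, while `free_after_move(500, 1000, free=400)` returns -100, the case in which the file cannot be stored.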

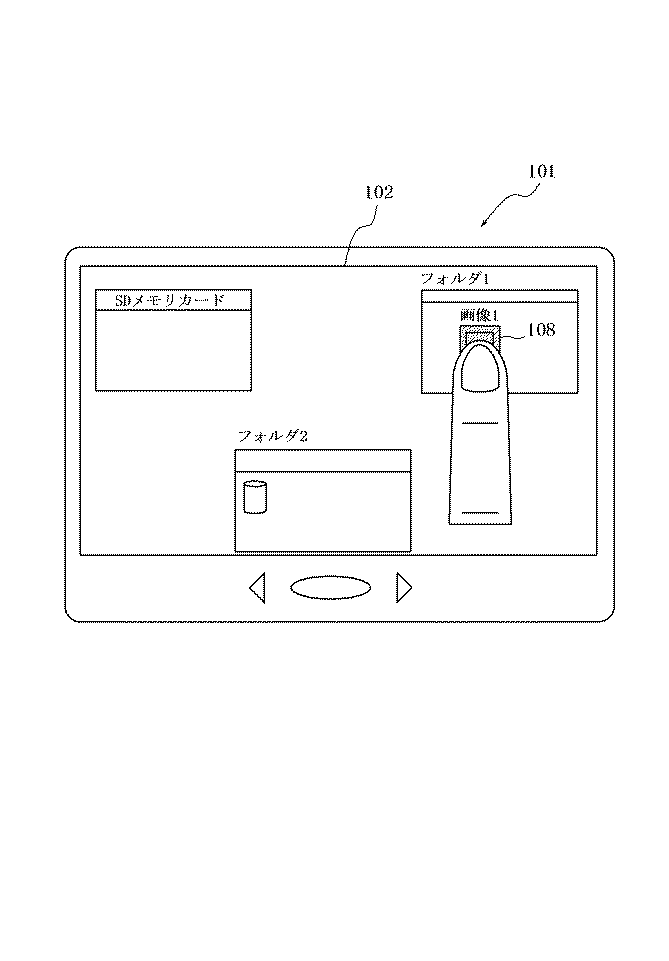

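When the device 101 of FIG. 1 is a PDA with a touch panel, the display unit 102 shows, for example, the screen of FIG. 2. Although not shown here, the storage unit 104 of the device 101 of FIG. 1 is assumed to include a first storage unit 104a and a second storage unit 104b. The first storage unit 104a is, for example, an internal HDD, and the second storage unit 104b is, for example, an SD memory card connected through an external interface. The external memory connected through the external interface is not limited to an SD memory card and may be, for example, USB memory or SmartMedia. The display unit 102 shows windows for folder 1 and folder 2, which are storage areas of the first storage unit 104a inside the device 101, and a window for the second storage unit 104b (SD memory card). Folder 1 stores an image file (data) named "Image 1".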

The case of moving an image file in folder 1 (a predetermined storage area of the first storage unit 104a) to folder 2 (a storage area of the storage unit 104a different from folder 1) or to the SD memory card (the second storage unit 104b) and storing it there is described with reference to the flowchart of FIG. 3.

First, as shown in FIG. 4, the user touches the icon of the file to be moved (the moved file) with a finger, and the touch sensor 103 detects this input (step S101). At this time, the control unit 106 can change how the icon of the file corresponding to the detected input is displayed, as shown in FIG. 4, so that the user can confirm that the intended icon was touched. The change may be any change that lets the user recognize that the icon was touched, such as inverting the icon's colors or changing its display density. Alternatively, the display around the icon corresponding to the detected input can be changed to let the user know the input was registered.

To move the image file (moved file) from folder 1 (the source folder) to folder 2 (the destination folder), a different storage area, the user moves the image file icon 108 toward folder 2 without lifting the finger from it, that is, while continuing the input, as shown in FIG. 5. This is a so-called drag operation. Because the touch sensor 103 keeps detecting the finger's input, the movement of the finger is known, and the control unit 106 displays the image file icon 108 on the display unit 102 at the position corresponding to the finger.

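From the detection result of the touch sensor 103, the control unit 106 determines whether the user's finger is located over the window of folder 2 (the destination window) (step S102). When the file icon has been dragged as shown in FIG. 6, the control unit 106 determines that the finger is over the window of folder 2 (Yes in step S102).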
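Step S102 amounts to a hit test of the touch position against the destination windows. A minimal sketch, assuming axis-aligned rectangular windows in screen coordinates and that later list entries are drawn on top; the `Window` type and the `window_under_finger` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Window:
    name: str       # e.g. "folder 2" or "SD memory card"
    x: float        # left edge
    y: float        # top edge
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        """True if the point lies inside this window's rectangle."""
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


def window_under_finger(windows: Sequence[Window],
                        px: float, py: float) -> Optional[Window]:
    """Return the topmost window containing the touch point, if any."""
    for window in reversed(windows):  # later entries assumed drawn on top
        if window.contains(px, py):
            return window
    return None
```

A `None` result corresponds to "No" in step S102: the finger is not over any destination window yet.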

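From this drag operation, the control unit 106 determines that the user wants to move the image file icon 108 to folder 2. The control unit 106 then determines the tactile sensation to present to the user's finger touching the touch sensor 103 based on the size of the image file and the free capacity of folder 2 (step S103), and controls the tactile sensation providing unit 105 to present the determined sensation to that finger (step S104).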

Specifically, the tactile sensation is determined either by calculation or from a vibration pattern table such as Table 1 or Table 2. Determining it by calculation means, for example, determining the frequency, amplitude, and so on by combining the ratio of the moved file's size to the destination folder's pre-move free capacity with constants through addition, subtraction, multiplication, and division. One example is Equation 1.

If the frequency of the presented tactile sensation is determined as in Equation 1, the less free capacity the destination folder will have after the move, the higher the frequency of the sensation presented to the user's finger.
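Equation 1 itself is not reproduced in this text, so its exact form is unknown. The sketch below is only a hypothetical stand-in with the stated qualitative behavior: it maps the ratio of the moved file's size to the destination's pre-move free capacity linearly onto a frequency. The constants `base_hz` and `gain_hz` and the function name are assumptions for illustration.

```python
def tactile_frequency_hz(file_size: int, free_before: int,
                         base_hz: float = 150.0,
                         gain_hz: float = 200.0) -> float:
    """Hypothetical linear mapping (not the patent's Equation 1).

    For a fixed destination, a larger file gives a larger ratio and leaves
    less free capacity after the move, so the returned frequency is higher.
    """
    if free_before <= 0:
        raise ValueError("free_before must be positive")
    ratio = file_size / free_before
    return base_hz + gain_hz * ratio
```

With the default constants, a 100-byte file moved into a destination with 1000 bytes free yields about 170 Hz.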

The case of determining the tactile sensation from a vibration pattern table is described in detail below. Table 1 shows the correspondence between the destination folder's free capacity after the move and the presented sensation, and provides three vibration patterns, 1 to 3. Vibration pattern 1 is presented when folder 2's free capacity would become negative if the image file were stored there, that is, when the image file is larger than folder 2's free capacity and cannot be moved into folder 2. Vibration pattern 2 lets the user recognize that the destination folder will have little room left after the move; in Table 1, it is presented when folder 2 would have less than 1 MB (megabyte) free after the image file is moved. Vibration pattern 3 lets the user recognize that the destination folder will still have ample room; in Table 1, it is presented when folder 2 would have 1 MB or more free after the move. The 1 MB threshold for switching patterns is only an example and can be set to any value.

To distinguish vibration patterns 1 to 3, the amplitude can, for example, be made smaller from pattern 1 to pattern 3. The patterns can also be distinguished by changing the period (wavelength) or the waveform. The user can tell the destination folder's post-move free capacity from the difference between vibration patterns.
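The Table 1 rule can be sketched as a three-way classification of the post-move free capacity. This is a minimal illustration, assuming 1 MB means 2**20 bytes and using made-up relative amplitudes that decrease from pattern 1 to pattern 3 as the text suggests; the actual drive parameters are not given in the source, and all names are hypothetical.

```python
from enum import Enum

MB = 1024 * 1024  # assumption: 1 MB taken as 2**20 bytes


class Pattern(Enum):
    CANNOT_FIT = 1   # vibration pattern 1: post-move free capacity < 0
    LITTLE_ROOM = 2  # vibration pattern 2: 0 <= remaining < threshold
    AMPLE_ROOM = 3   # vibration pattern 3: remaining >= threshold


# Hypothetical relative amplitudes, decreasing from pattern 1 to pattern 3.
AMPLITUDE = {
    Pattern.CANNOT_FIT: 1.0,
    Pattern.LITTLE_ROOM: 0.6,
    Pattern.AMPLE_ROOM: 0.3,
}


def classify(file_size: int, free_before: int, threshold: int = MB) -> Pattern:
    """Pick the Table 1 pattern from the destination's post-move free capacity."""
    remaining = free_before - file_size
    if remaining < 0:
        return Pattern.CANNOT_FIT
    if remaining < threshold:
        return Pattern.LITTLE_ROOM
    return Pattern.AMPLE_ROOM
```

The amplitude to drive would then be looked up as `AMPLITUDE[classify(size, free)]`; the `threshold` parameter reflects the text's note that the 1 MB boundary is arbitrary.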

Furthermore, as shown in Table 2, the vibration pattern can also be varied according to the size of the moved file. In Table 2, vibration patterns 1a, 2a, and 3a correspond to moved files smaller than 128 kB (kilobytes), patterns 1b, 2b, and 3b to files of at least 128 kB but less than 256 kB, and patterns 1c, 2c, and 3c to files of 256 kB or more. The 128 kB and 256 kB thresholds are only examples and can be set to any values. The user can thus recognize from the vibration pattern not only the destination folder's post-move free capacity but also the size of the moved file.
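Table 2 adds a size band to the Table 1 row, giving labels such as "2b". A minimal sketch, assuming 1 kB means 1024 bytes; the function names are hypothetical.

```python
KB = 1024  # assumption: 1 kB taken as 1024 bytes


def size_band(file_size: int) -> str:
    """Table 2 size band: 'a' (< 128 kB), 'b' (128 to < 256 kB), 'c' (>= 256 kB)."""
    if file_size < 128 * KB:
        return "a"
    if file_size < 256 * KB:
        return "b"
    return "c"


def pattern_label(capacity_pattern: int, file_size: int) -> str:
    """Combine a Table 1 pattern number (1 to 3) with the size band, e.g. '2b'."""
    if capacity_pattern not in (1, 2, 3):
        raise ValueError("capacity_pattern must be 1, 2 or 3")
    return f"{capacity_pattern}{size_band(file_size)}"
```

Keeping the two lookups separate mirrors the table's structure: the capacity row and the size column vary independently.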

If, based on the tactile sensation presented to the finger, the user decides to store the image file in folder 2, the user proceeds to the drop operation. That is, the user ends the drag (stops without moving the finger further) and, with the finger over the window of folder 2 as shown in FIG. 6, proceeds to the drop operation (No in step S105). To implement the drop operation on the device 101, for example, the device 101 further includes a load detection unit (not shown) that detects the pressing load on the touch sensor, and when the pressing load detected by the load detection unit meets a preset load criterion, the control unit 106 causes the image file to be stored in folder 2. The load criterion defines the pressing load required to store the moved file in the destination storage area or storage unit. The load detection unit is configured using an element that responds linearly to load, such as a strain gauge sensor or a piezoelectric element. When both the load detection unit and the tactile sensation providing unit 105 use piezoelectric elements, they can share the same element, because a piezoelectric element generates electric power when pressure is applied and deforms when electric power is applied.

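In a configuration where the device 101 includes the load detection unit, the user performs the drop by pressing the image file icon 108, from the dragging state (with input on the icon 108 continuing), with a pressing load that meets the load criterion. The control unit 106 then stores the image file in folder 2 (step S106), completing the file move.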
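The drop logic can be sketched as follows, assuming the load detection unit delivers a stream of load samples in newtons and that "meeting" the load criterion means reaching or exceeding it. The criterion value, the function names, and the dictionary of free capacities are hypothetical; the commit shown is the "cut and paste" case, and it refuses a move that would exceed the destination's free capacity.

```python
from typing import Dict, Iterable, Optional


def first_drop_index(load_samples_n: Iterable[float],
                     load_criterion_n: float) -> Optional[int]:
    """Index of the first load sample meeting the criterion, or None."""
    for index, load in enumerate(load_samples_n):
        if load >= load_criterion_n:
            return index
    return None


def commit_move(free: Dict[str, int], source: str,
                destination: str, size: int) -> None:
    """Cut-and-paste move: update both areas' free capacity in place."""
    if free[destination] < size:
        raise ValueError(f"{destination} does not have enough free capacity")
    free[destination] -= size
    free[source] += size
```

A light resting touch during the drag stays below the criterion, so only a deliberate press triggers the store.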

If, in step S104, the user's finger is presented with a tactile sensation indicating that the image file cannot be moved to folder 2 (vibration pattern 1, 1a, 1b, or 1c), the user changes the destination of the moved file. The user may also change the destination when the finger is presented with a sensation indicating that folder 2 will have little room left after the move (vibration pattern 2, 2a, 2b, or 2c), because filling a storage area nearly to capacity may, for example, affect the operation of applications (such as the operating system) stored in it. Since the image file icon 108 is still being dragged in step S104, the user can change the destination by continuing the drag from folder 2 to another storage area of the storage unit 104a or to another storage unit 104b (for example, the SD memory card); there is no need to start the drag again from folder 1. When the touch sensor 103 detects that the finger is moving again (Yes in step S105), steps S102 to S104 are repeated each time the finger moves over a new storage area or storage unit.

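Suppose the user drags the image file icon 108 over the SD memory card's window (Yes in step S102), and the control unit 106 controls the tactile sensation providing unit 105 to present a sensation indicating that the SD memory card has ample free capacity (vibration pattern 3, 3a, 3b, or 3c) (steps S103 and S104). The user then performs the drop by pressing the image file icon 108 over the SD memory card's window; when the pressing load meets the load criterion (step S106), the control unit 106 stores the image file on the SD memory card (step S107). The move of the image file from folder 1 to the SD memory card is thus complete, as shown in FIG. 7. Although the image file has been deleted from folder 1 in FIG. 7, the file move according to this embodiment is not limited to a "cut and paste" operation; it may also be a "copy and paste" operation in which the moved file (image file) is not deleted from the source after the move.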
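The loop of FIG. 3 (re-evaluating steps S102 to S104 whenever the finger reaches a new destination, then storing on a sufficient press) can be simulated as below. This is a sketch under several assumptions: events are pre-recorded `("move", window_name_or_None)` and `("press", load_in_newtons)` tuples, the Table 1 rule with a 1 MB (2**20 byte) threshold selects the pattern, and the 1.5 N load criterion is a made-up value. All names are hypothetical.

```python
from typing import Dict, List, Optional, Sequence, Tuple

MB = 1024 * 1024  # assumption: 1 MB taken as 2**20 bytes


def pattern_for(size: int, free: int, threshold: int = MB) -> int:
    """Table 1 pattern number (1, 2 or 3) for the post-move free capacity."""
    remaining = free - size
    if remaining < 0:
        return 1
    return 2 if remaining < threshold else 3


def run_drag(events: Sequence[Tuple[str, object]], size: int,
             free: Dict[str, int], load_criterion_n: float = 1.5
             ) -> Tuple[List[Tuple[str, int]], Optional[str]]:
    """Replay drag events; return (patterns presented, drop destination or None)."""
    presented: List[Tuple[str, int]] = []
    current: Optional[str] = None
    for kind, value in events:
        if kind == "move":
            # S105 Yes -> S102: the finger moved; react only to a new window.
            target = value if isinstance(value, str) else None
            if target != current:
                current = target
                if current in free:
                    # S103-S104: decide and "present" the pattern here.
                    presented.append((current, pattern_for(size, free[current])))
        elif kind == "press" and current in free:
            # S106: a press meeting the load criterion completes the drop.
            if isinstance(value, (int, float)) and value >= load_criterion_n:
                return presented, current  # S107: caller stores the file here
    return presented, None
```

For a 300 kB file, dragging over a folder 2 with 512 kB free and then over an SD card with 8 MB free presents pattern 2 and then pattern 3, and a 2 N press over the SD card selects it as the destination.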

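As described above, in this embodiment, when data (an image file) stored in a predetermined storage area (folder 1) of the storage unit 104a is to be stored in a different storage area (folder 2) of the storage unit 104a, the control unit 106 determines a tactile sensation based on the size of the data and the free capacity (or used capacity) of the different storage area, and controls the tactile sensation providing unit 105 to present it to the touch target (the user's finger). The presented sensation is thus determined by the free capacity the destination storage area will have after the move. From the sensation presented to the finger, the user can therefore recognize, through the moving operation alone, whether the data fits in the destination storage area and, if so, how much free capacity will remain.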

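Also in this embodiment, when data (an image file) stored in the first storage unit 104a (HDD) is to be stored in a second storage unit 104b (SD memory card) different from the first storage unit 104a, the control unit 106 determines a tactile sensation based on the size of the data and the free capacity (or used capacity) of the second storage unit 104b, and controls the tactile sensation providing unit 105 to present it to the touch target (the user's finger). The device 101 of this embodiment therefore supports not only moving data from one area to another within a single storage unit (piece of hardware) but also moving data between storage units. When moving data from the internal storage unit 104a to the external storage unit 104b, the user can recognize from the presented sensation, through the moving operation alone, whether the data fits in the second storage unit 104b and, if so, how much free capacity will remain.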

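When the device 101 of this embodiment includes the load detection unit that enables the drop operation, the control unit 106 can control the tactile sensation providing unit 105 to present to the touch target not merely a vibration but the crisp, hard sensation felt when pressing a mechanical key (a realistic click feel). To present a realistic click feel, for example, the device is set in step S104 to present the sensation only once the pressing load exceeds a certain value (for example, 1 N (newton)). Until the load exceeds that value, the user's sense of pressure is stimulated; once it does, the tactile sensation providing unit 105 vibrates the touch surface to stimulate the user's sense of touch. Stimulating both senses in this way presents a crisp, hard sensation. Although the touch sensor 103 itself does not physically displace like a mechanical key when its touch surface is pressed, presenting such a sensation gives the user the same realistic click feel as operating a mechanical key. The user can therefore operate the touch sensor 103, which inherently gives no feedback to pressing, without any sense of incongruity. A crisp, hard sensation can be produced, for example, by presenting one cycle of a 200 Hz to 500 Hz sine wave or one cycle of a square wave. A soft, squishy sensation (described as "buru" or "buni") can be produced by presenting two or three cycles of a 200 Hz to 500 Hz sine wave. A sensation perceived as vibration (described as "bururu") can be produced by presenting four or more cycles of a sine wave.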
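The waveform rules above (one sine cycle for a hard click, two or three for a soft feel, four or more for a perceptible vibration, presented once the load exceeds about 1 N) can be sketched as follows. The 8 kHz sample rate and all function names are assumptions for illustration; driving an actual piezoelectric element would also require amplitude scaling and driver code not shown here.

```python
import math
from typing import List


def sine_burst(freq_hz: float, cycles: int,
               sample_rate_hz: int = 8000) -> List[float]:
    """Samples of `cycles` full periods of a unit-amplitude sine wave."""
    if cycles < 1:
        raise ValueError("cycles must be at least 1")
    n_samples = round(sample_rate_hz * cycles / freq_hz)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate_hz)
            for i in range(n_samples)]


def feel_for_cycles(cycles: int) -> str:
    """Perceived feel for a sine burst with the given number of cycles."""
    if cycles < 1:
        raise ValueError("cycles must be at least 1")
    if cycles == 1:
        return "hard click"
    if cycles <= 3:
        return "soft"
    return "vibration"


def should_present_click(load_n: float, threshold_n: float = 1.0) -> bool:
    """Present the click only once the pressing load exceeds the threshold."""
    return load_n > threshold_n
```

At 8 kHz, `sine_burst(200, 1)` produces 40 samples (one 5 ms period), the hard-click case; `sine_burst(250, 3)` produces 96 samples for a soft feel.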

(Second embodiment)

The first embodiment described presenting a tactile sensation once the drag operation has placed the user's finger over the window of the destination storage area or storage unit. The second embodiment describes presenting a tactile sensation partway through the drag operation, that is, while the user's finger is not yet over the destination window. FIG. 8 is a functional block diagram showing the schematic configuration of a device according to the second embodiment of the present invention. Examples of the device 201 having a touch sensor according to the present invention (hereinafter simply the device 201) include a PDA, a PC, a mobile phone, a portable game machine, a portable music player, and a portable TV. The device 201 includes a display unit 202, a touch sensor 203, a storage unit 204, a tactile sensation providing unit 205, and a control unit 206. The functional units 202 to 205 other than the control unit 206 have the same functions as the functional units 102 to 105 in FIG. 1, so their description is omitted.

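When a file (data) is moved from a predetermined storage area of the storage unit 204a to a different storage area of the storage unit 204a, or from the first storage unit 204a to a second storage unit 204b different from the first storage unit 204a, the control unit 206 obtains a motion vector of the data from the input detected by the touch sensor 203. The motion vector is calculated from the trajectory of the data's icon as it moves by a drag operation or the like, and represents how the icon is moving. From the obtained motion vector, the control unit 206 identifies the destination storage area or storage unit (storage area, etc.). That is, the control unit 206 predicts that the storage area, etc. lying in the direction of the motion vector is the file's destination, determines a tactile sensation based on the predicted destination's free capacity and the size of the moved file, and controls the tactile sensation providing unit 205 to present this sensation to the user's finger. The user can therefore recognize the free capacity of the predicted destination from a sensation presented before the file has been dragged all the way to it.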

As in the first embodiment, assume that the device 201 of FIG. 8 is a PDA with a touch panel. The display unit 202 then shows, for example, the screen of FIG. 9. In FIG. 9, the image file icon 208 is a data icon like the image file icon 108 in FIG. 2, and folder 1, folder 2, and the SD memory card are common to FIG. 9 and FIG. 2, so FIG. 9 is not described further.

The case of moving an image file in folder 1 (a predetermined storage area of the first storage unit 204a) to folder 2 (a storage area of the storage unit 204a different from folder 1) or to the SD memory card (the second storage unit 204b) and storing it there is described with reference to the flowchart of FIG. 10. In FIG. 10, steps S201 and S204 to S207 are the same as steps S101 and S104 to S107 in FIG. 3. In the descriptions of those steps, references to the functional units of the first embodiment (display unit 102, touch sensor 103, storage unit 104, tactile sensation providing unit 105) should be read as the corresponding functional units of the second embodiment (display unit 202, touch sensor 203, storage unit 204, tactile sensation providing unit 205).

When the user touches the icon of the file to be moved (the moved file) with a finger, the touch sensor 203 detects this input, as in step S101 (step S201).

To move the image file (moved file) to folder 2 (destination folder), a storage area different from folder 1 (source folder), the user drags the image file icon 208 toward folder 2 as shown in FIG. 11.

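During the drag operation, the touch sensor 203 detects the movement (trajectory) of the image file icon 208, and the control unit 206 calculates the motion vector of the image file icon 208 from the detected trajectory (step S211). The motion vector can be obtained, for example, by connecting two points: the current position of the image file icon 208 and a past position of the icon (for example, its position before the drag began). Alternatively, it can be obtained by least squares from several positions the icon has passed through during the drag operation. Any approximation may be used to obtain the motion vector, taking into account the processing capability of the device 201 and the required accuracy.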
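
The two options for step S211 can be sketched as follows. This is a minimal illustration, not the patent's implementation: icon positions are assumed to be (x, y) display coordinates sampled at a fixed rate, and the function names are hypothetical. For the least-squares option, the sketch fits x and y separately against the sample index instead of fitting y against x; that is one reasonable choice, because it preserves the direction of travel and still works for purely vertical drags.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def motion_vector_two_point(past: Point, current: Point) -> Point:
    """Vector from an earlier icon position (e.g. where the drag began) to the current one."""
    return (current[0] - past[0], current[1] - past[1])


def motion_vector_least_squares(track: List[Point]) -> Point:
    """Least-squares fit of x(t) and y(t) as straight lines over the sample index t.

    Returns the fitted per-sample velocity (dx/dt, dy/dt). Assumes the samples
    are evenly spaced in time (hypothetical sampling model).
    """
    n = len(track)
    if n < 2:
        raise ValueError("need at least two icon positions")
    ts = range(n)
    t_mean = (n - 1) / 2
    x_mean = sum(p[0] for p in track) / n
    y_mean = sum(p[1] for p in track) / n
    s_tt = sum((t - t_mean) ** 2 for t in ts)
    s_tx = sum((t - t_mean) * (p[0] - x_mean) for t, p in zip(ts, track))
    s_ty = sum((t - t_mean) * (p[1] - y_mean) for t, p in zip(ts, track))
    # Slope of each fitted line is the covariance divided by the variance of t.
    return (s_tx / s_tt, s_ty / s_tt)
```

The two-point version is the cheapest; the least-squares version smooths out jitter in the finger position at the cost of keeping a short history of samples, which matches the trade-off between processing capability and accuracy mentioned above.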

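Next, the control unit 206 determines from the calculated motion vector whether there is a storage area or storage unit predicted to be the destination of the image file (step S212); that is, it determines whether a window for a storage area or storage unit lies on the calculated motion vector. If one does (Yes in step S212), the control unit 206 predicts that the storage area or storage unit associated with that window is the user's intended destination. In FIG. 11, folder 2 lies on the calculated motion vector (Yes in step S212), so folder 2 is predicted to be the user's intended destination folder.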
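
Step S212 asks whether a window lies on the motion vector. One way to sketch that test, assuming axis-aligned rectangular windows and a ray cast from the icon's current position along the motion vector, is the standard slab intersection test below. The `Window` type, the `exclude` parameter (used to skip the source folder the icon starts inside), and picking the nearest hit when several windows lie on the ray are illustrative assumptions, not details given in the patent.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class Window:
    """Hypothetical axis-aligned window on screen (y grows downward, so top < bottom)."""
    name: str
    left: float
    top: float
    right: float
    bottom: float


def ray_hits_window(origin: Point, direction: Point, w: Window) -> Optional[float]:
    """Slab test: smallest t >= 0 with origin + t * direction inside w, else None."""
    t_min, t_max = 0.0, math.inf
    for o, d, lo, hi in ((origin[0], direction[0], w.left, w.right),
                         (origin[1], direction[1], w.top, w.bottom)):
        if d == 0:
            if not lo <= o <= hi:
                return None  # ray is parallel to this slab and outside it
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_min, t_max = max(t_min, t1), min(t_max, t2)
        if t_min > t_max:
            return None  # the entry/exit intervals of the two slabs do not overlap
    return t_min


def predict_destination(icon_pos: Point, motion: Point, windows: List[Window],
                        exclude: Optional[str] = None) -> Optional[Window]:
    """Step S212 sketch: nearest window on the ray along the motion vector, or None."""
    if motion[0] == 0 and motion[1] == 0:
        return None  # no movement yet, so nothing to predict
    hits = []
    for w in windows:
        if w.name == exclude:
            continue  # skip the source folder the icon starts in
        t = ray_hits_window(icon_pos, motion, w)
        if t is not None:
            hits.append((t, w))
    return min(hits, key=lambda h: h[0])[1] if hits else None
```

For example, with folder 1 at (0, 0)-(100, 100), folder 2 to its right and the SD memory card window below it, a rightward drag starting at (50, 50) predicts folder 2, while a downward drag predicts the SD memory card. Without `exclude`, the source folder itself would be hit at t = 0.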

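The control unit 206 then determines the tactile sensation to be presented to the user's finger touching the touch sensor 203, based on the size of the image file and the free or used capacity of the predicted folder 2 (step S213). As in the first embodiment, this tactile sensation is determined by calculation or from a vibration pattern table such as Table 1 or 2. The control unit 206 controls the tactile sensation providing unit 205 to present the determined tactile sensation to the user's finger touching the touch sensor 203 (step S204). In other words, before the image file icon 208 reaches the window of destination folder 2, the control unit 206 predicts that folder 2 is the user's intended destination and presents to the user's finger a tactile sensation corresponding to the free capacity that the destination folder will have after the file is moved.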
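
Step S213 determines the tactile sensation either by calculation or from a vibration pattern table (Tables 1 and 2 belong to the first embodiment and are not reproduced in this section). The sketch below shows the table-driven approach with loudly hypothetical values: the ratio thresholds and pattern labels are invented for illustration and are not taken from the patent. It works from free capacity; used capacity gives the same result, since free capacity equals total capacity minus used capacity.

```python
from typing import List, Tuple

# Hypothetical vibration pattern table: (minimum share of capacity left free after
# the move, pattern). Rows are checked top to bottom. Illustrative values only.
PATTERN_TABLE: List[Tuple[float, str]] = [
    (0.50, "weak short pulse"),    # plenty of room left after the move
    (0.20, "medium pulse"),        # fits, but the destination is filling up
    (0.00, "strong long pulse"),   # fits, but the destination is nearly full afterwards
]
CANNOT_MOVE = "double strong pulse"  # hypothetical "file does not fit" pattern


def decide_tactile(file_size: int, free_bytes: int, capacity_bytes: int) -> str:
    """Pick a pattern from the file size and the destination's free capacity."""
    if capacity_bytes <= 0:
        raise ValueError("capacity must be positive")
    remaining = free_bytes - file_size  # free space the destination would have after the move
    if remaining < 0:
        return CANNOT_MOVE
    ratio = remaining / capacity_bytes
    for min_ratio, pattern in PATTERN_TABLE:
        if ratio >= min_ratio:
            return pattern
    return CANNOT_MOVE  # unreachable while the last row's threshold is 0.0
```

Keeping the thresholds in a table rather than in code makes it easy to tune the feel of each pattern without touching the decision logic.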

In step S204, if the tactile sensation presented to the user's finger indicates that the image file cannot be moved to folder 2, the user changes the destination of the moved file. To do so, the user can continue dragging from the state shown in FIG. 11 to another storage area of the storage unit 204a or to the other storage unit 204b (for example, the SD memory card). That is, the user does not need to restart the drag from folder 1.

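FIG. 12 shows the case in which the user finally moves the image file icon 208 to the SD memory card. Because the destination of the image file icon 208 was changed to the SD memory card before the icon reached the window of destination folder 2, the movement path of the image file icon 208 is shorter than in FIG. 7 of the first embodiment.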

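As described above, in this embodiment, when data (an image file) stored in a predetermined storage area (folder 1) of the storage unit 204a is to be stored in a different storage area (folder 2) of the storage unit 204a, the control unit 206 identifies the destination storage area from the motion vector of the data icon. Specifically, when the data icon is being moved by a drag operation, the control unit 206 calculates a motion vector from the icon's trajectory and identifies the storage area lying in the direction of the motion vector as the destination. The control unit 206 thus predicts that folder 2 is the user's intended destination before the image file icon 208 reaches the window of destination folder 2, and can therefore present to the user's finger, before the icon is dragged onto folder 2, a tactile sensation corresponding to the free capacity of folder 2 after the move. This lets the user recognize, partway through dragging the image file icon 208, whether the image file will fit in folder 2 and, if so, how much free capacity folder 2 will have left. In particular, if the image file will not fit in folder 2, the user can change the destination during the drag, so files can be moved efficiently.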

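Also, in this embodiment, when data (an image file) stored in the first storage unit 204a (the HDD holding folder 1) is to be stored in a second storage unit 204b (SD memory card) different from the first storage unit, the destination second storage unit 204b is identified from the motion vector of the data icon. That is, the device 201 of this embodiment can identify the destination from the motion vector of the data icon not only when data is moved from one area to another within a single storage unit (piece of hardware), but also when data is moved between multiple storage units. As a result, partway through dragging the image file icon 208 (before the icon reaches the window of the destination SD memory card), the user can recognize whether the image file will fit on the SD memory card and, if so, how much free capacity the card will have left.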

Although the present invention has been described with reference to the drawings and examples, it should be noted that those skilled in the art could easily make various variations and modifications based on the present disclosure. Such variations and modifications are therefore included within the scope of the present invention.

For example, the functions included in each member, means, and step can be rearranged as long as no logical inconsistency arises, and multiple means or steps can be combined into one or divided.

The first embodiment above described a drag operation in which the user touches the icon of the file to be moved with a finger and, while maintaining contact, moves the finger to the destination folder. Note, however, that the operation is not limited to dragging, as long as the file to be moved and the destination folder can be identified. For example, the user touches the icon of the file to be moved, and the touch sensor detects the input. The control unit treats this detected input as the user's selection of the file to be moved and stores which file was selected in the internal memory of the device according to the present invention. The user therefore no longer has to keep the file selected by maintaining contact throughout a drag. With this arrangement, the user lifts the finger from the touch sensor and then touches the window of the destination folder. When the touch sensor detects this input, the control unit can identify the destination folder. Having identified both the file to be moved and the destination folder, the control unit can determine the tactile sensation based on the free capacity of the destination folder after the file is moved.
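
The drag-free variant just described amounts to a small two-tap state machine: the first tap stores the selected file, and a later tap on a folder window identifies the destination. A minimal sketch follows, assuming hypothetical dictionaries that map icon and folder identifiers to file sizes and free bytes; the class and method names are illustrative. It returns the free space the destination would have after the move, which could then feed a tactile decision like the one in step S213.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class TapMoveController:
    """Hypothetical two-tap flow: tap a file icon, lift the finger, then tap a folder window."""
    file_sizes: Dict[str, int]      # file icon id -> file size in bytes
    free_space: Dict[str, int]      # folder window id -> free bytes
    selected: Optional[str] = None  # stands in for the internal memory holding the chosen file

    def on_tap(self, target: str) -> Optional[Tuple[str, int]]:
        """Return (destination, free bytes left after the move) once both are known."""
        if target in self.file_sizes:
            self.selected = target  # first tap: remember the file to be moved
            return None
        if target in self.free_space and self.selected is not None:
            file_id, self.selected = self.selected, None
            remaining = self.free_space[target] - self.file_sizes[file_id]
            return target, remaining  # a negative value means the file does not fit
        return None  # tap elsewhere, or a folder tapped with no file selected
```

Clearing the selection after the destination tap means a stray later tap on a folder does nothing until a new file is chosen.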

Likewise, the second embodiment above described calculating the motion vector of a file icon from the icon's trajectory during a drag operation, but the method is not limited to dragging, as long as the trajectory of the file icon is known. For example, the user makes a first input with a finger on the icon of the file to be moved, and the touch sensor detects it; the control unit treats this detected input as the user's selection of the file to be moved. The user then makes a second input at a position other than a window for a storage area or storage unit, and the touch sensor detects it. By taking the line connecting the position of the file icon and the on-screen position of the second input as the trajectory of the file icon, the motion vector of the file icon can be calculated without a drag operation.
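
For the two-tap trajectory, the vector simply runs from the file icon to the second input. The sketch below pairs that with a swapped-in angular (cone) test for "lies on the motion vector": a window is a candidate if the direction from the icon to the window's center is within a tolerance of the vector, and the nearest candidate wins. The 15-degree default, the dictionary of window centers, and the function names are assumptions for illustration only; an exact ray/rectangle intersection would be an equally valid choice.

```python
import math
from typing import Dict, Optional, Tuple

Point = Tuple[float, float]


def vector_from_taps(icon_pos: Point, second_tap: Point) -> Point:
    """Treat the segment from the file icon to the second tap as the icon's trajectory."""
    return (second_tap[0] - icon_pos[0], second_tap[1] - icon_pos[1])


def predict_by_angle(icon_pos: Point, vec: Point, centers: Dict[str, Point],
                     max_angle_deg: float = 15.0) -> Optional[str]:
    """Nearest window whose center lies within max_angle_deg of vec, else None."""
    vlen = math.hypot(*vec)
    if vlen == 0:
        return None  # second tap on the icon itself gives no direction
    best = None
    for name, (cx, cy) in centers.items():
        dx, dy = cx - icon_pos[0], cy - icon_pos[1]
        dist = math.hypot(dx, dy)
        if dist == 0:
            continue
        cos_a = (vec[0] * dx + vec[1] * dy) / (vlen * dist)
        # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
        if angle <= max_angle_deg and (best is None or dist < best[0]):
            best = (dist, name)
    return best[1] if best else None
```

A cone test is cheap and tolerant of an imprecise second tap; the tolerance trades off how forgiving the prediction is against the risk of picking a neighbouring window.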

For data movement between multiple storage units, the first and second embodiments above described moving data from a storage unit inside the device to an external storage unit. When data is moved from an external storage unit to an internal storage unit, the control unit can likewise vary the presented tactile sensation so that the user recognizes whether the data will fit in the destination folder and, if so, how much free capacity the destination folder will have after the file is moved.

The first and second embodiments above described the case in which the storage area of the external storage unit is not divided into multiple partitions, folders, or the like. The device of the present invention can be applied in the same way when the external storage unit has multiple storage areas. For data movement between multiple storage areas of the external storage unit, or between a storage area of the external storage unit and an internal storage unit or storage area, the control unit can likewise vary the presented tactile sensation so that the user recognizes whether the data will fit in the destination folder and, if so, how much free capacity the destination folder will have after the file is moved.

101, 201 Device having a touch sensor

102, 202 Display unit

103, 203 Touch sensor

104, 204 Storage unit

105, 205 Tactile sensation providing unit

106, 206 Control unit

108, 208 Image file icon

311 Icon

312 Source window

313 Target window

314 Internal boundary

315 Desired position

Claims (12)

1. A device having a touch sensor, comprising:

a storage unit having a plurality of storage areas;

a touch sensor;

a tactile sensation providing unit for presenting a tactile sensation to a touch target touching the touch sensor; and

a control unit that, when data stored in a predetermined storage area is to be stored in a storage area different from the predetermined storage area, controls the tactile sensation providing unit to present to the touch target a tactile sensation determined based on the size of the data and the free capacity or used capacity of the different storage area.

2. The device having a touch sensor according to claim 1, further comprising:

a display unit,

wherein, when an icon of the data displayed on the display unit is moved by an input to the touch sensor, the control unit calculates a motion vector of the data and identifies the different storage area based on the motion vector.

3. A device having a touch sensor, comprising:

a first storage unit;

a second storage unit different from the first storage unit;

a touch sensor;

a tactile sensation providing unit for presenting a tactile sensation to a touch target touching the touch sensor; and

a control unit that, when data stored in the first storage unit is to be stored in the second storage unit, controls the tactile sensation providing unit to present to the touch target a tactile sensation determined based on the size of the data and the free capacity or used capacity of the second storage unit.

4. The device having a touch sensor according to claim 3, further comprising:

a display unit,

wherein, when an icon of the data displayed on the display unit is moved by an input to the touch sensor, the control unit calculates a motion vector of the data and identifies the second storage unit based on the motion vector.

5. A tactile sensation presentation method, comprising:

a step of identifying data stored in a predetermined storage area of a storage unit and identifying a storage area different from the predetermined storage area; and

a step of presenting, to a touch target touching a touch sensor, a tactile sensation determined based on the size of the identified data and the free capacity or used capacity of the identified different storage area.

6. The tactile sensation presentation method according to claim 5, further comprising:

a step of, when an icon of the data displayed on the display unit is moved by an input to the touch sensor, calculating a motion vector of the data and identifying the different storage area based on the motion vector.

7. A tactile sensation presentation method, comprising:

a step of identifying data stored in a first storage unit and identifying a second storage unit different from the first storage unit; and

a step of presenting, to a touch target touching a touch sensor, a tactile sensation determined based on the size of the identified data and the free capacity or used capacity of the identified second storage unit.

8. The tactile sensation presentation method according to claim 7, further comprising:

a step of, when an icon of the data displayed on the display unit is moved by an input to the touch sensor, calculating a motion vector of the data and identifying the second storage unit based on the motion vector.

9. A tactile sensation presentation program for causing a computer mounted on a device having a touch sensor to function as:

means for identifying data stored in a predetermined storage area of a storage unit and identifying a storage area different from the predetermined storage area; and

means for performing control so that a tactile sensation determined based on the size of the identified data and the free capacity or used capacity of the identified different storage area is presented to a touch target touching the touch sensor.

10. The tactile sensation presentation program according to claim 9, further causing the computer to function as:

means for, when an icon of the data displayed on the display unit is moved by an input to the touch sensor, calculating a motion vector of the data and identifying the different storage area based on the motion vector.

11. A tactile sensation presentation program for causing a computer mounted on a device having a touch sensor to function as:

means for identifying data stored in a first storage unit and identifying a second storage unit different from the first storage unit; and

means for performing control so that a tactile sensation determined based on the size of the identified data and the free capacity or used capacity of the identified second storage unit is presented to a touch target touching the touch sensor.

12. The tactile sensation presentation program according to claim 11, further causing the computer to function as:

means for, when an icon of the data displayed on the display unit is moved by an input to the touch sensor, calculating a motion vector of the data and identifying the second storage unit based on the motion vector.

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| JP2009282259A JP5490508B2 (en) | 2009-12-11 | 2009-12-11 | Device having touch sensor, tactile sensation presentation method, and tactile sensation presentation program |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| JP2009282259A JP5490508B2 (en) | 2009-12-11 | 2009-12-11 | Device having touch sensor, tactile sensation presentation method, and tactile sensation presentation program |

Publications (2)

| Publication Number | Publication Date |

|---|---|

| JP2011123773A true JP2011123773A (en) | 2011-06-23 |

| JP5490508B2 JP5490508B2 (en) | 2014-05-14 |

Family

ID=44287585

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| JP2009282259A Active JP5490508B2 (en) | 2009-12-11 | 2009-12-11 | Device having touch sensor, tactile sensation presentation method, and tactile sensation presentation program |

Country Status (1)

| Country | Link |

|---|---|

| JP (1) | JP5490508B2 (en) |

Cited By (46)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP2014102829A (en) * | 2012-11-20 | 2014-06-05 | Immersion Corp | System and method for feedforward and feedback with haptic effects |

| JP2014519128A (en) * | 2011-10-27 | 2014-08-07 | 騰訊科技(深圳)有限公司 | Method and apparatus for uploading and downloading files |

| CN104423922A (en) * | 2013-08-26 | 2015-03-18 | 夏普株式会社 | Image display apparatus and data transfer method |

| JP2015521316A (en) * | 2012-05-09 | 2015-07-27 | アップル インコーポレイテッド | Device, method, and graphical user interface for providing tactile feedback of actions performed within a user interface |

| CN104866179A (en) * | 2015-05-29 | 2015-08-26 | 小米科技有限责任公司 | Method and apparatus for managing terminal application program |

| CN104994419A (en) * | 2015-06-29 | 2015-10-21 | 天脉聚源(北京)科技有限公司 | Method and device for realizing synchronous display of plurality of virtual players |

| US9602729B2 (en) | 2015-06-07 | 2017-03-21 | Apple Inc. | Devices and methods for capturing and interacting with enhanced digital images |

| US9612741B2 (en) | 2012-05-09 | 2017-04-04 | Apple Inc. | Device, method, and graphical user interface for displaying additional information in response to a user contact |

| US9619076B2 (en) | 2012-05-09 | 2017-04-11 | Apple Inc. | Device, method, and graphical user interface for transitioning between display states in response to a gesture |

| US9632664B2 (en) | 2015-03-08 | 2017-04-25 | Apple Inc. | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US9639184B2 (en) | 2015-03-19 | 2017-05-02 | Apple Inc. | Touch input cursor manipulation |

| US9645732B2 (en) | 2015-03-08 | 2017-05-09 | Apple Inc. | Devices, methods, and graphical user interfaces for displaying and using menus |

| US9674426B2 (en) | 2015-06-07 | 2017-06-06 | Apple Inc. | Devices and methods for capturing and interacting with enhanced digital images |

| US9753639B2 (en) | 2012-05-09 | 2017-09-05 | Apple Inc. | Device, method, and graphical user interface for displaying content associated with a corresponding affordance |

| US9778771B2 (en) | 2012-12-29 | 2017-10-03 | Apple Inc. | Device, method, and graphical user interface for transitioning between touch input to display output relationships |

| US9785305B2 (en) | 2015-03-19 | 2017-10-10 | Apple Inc. | Touch input cursor manipulation |

| US9830048B2 (en) | 2015-06-07 | 2017-11-28 | Apple Inc. | Devices and methods for processing touch inputs with instructions in a web page |

| US9880735B2 (en) | 2015-08-10 | 2018-01-30 | Apple Inc. | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US9886184B2 (en) | 2012-05-09 | 2018-02-06 | Apple Inc. | Device, method, and graphical user interface for providing feedback for changing activation states of a user interface object |

| US9891811B2 (en) | 2015-06-07 | 2018-02-13 | Apple Inc. | Devices and methods for navigating between user interfaces |

| JP2018067048A (en) * | 2016-10-17 | 2018-04-26 | コニカミノルタ株式会社 | Display device and display device control program |

| US9959025B2 (en) | 2012-12-29 | 2018-05-01 | Apple Inc. | Device, method, and graphical user interface for navigating user interface hierarchies |

| US9990107B2 (en) | 2015-03-08 | 2018-06-05 | Apple Inc. | Devices, methods, and graphical user interfaces for displaying and using menus |

| US9990121B2 (en) | 2012-05-09 | 2018-06-05 | Apple Inc. | Device, method, and graphical user interface for moving a user interface object based on an intensity of a press input |

| US9996231B2 (en) | 2012-05-09 | 2018-06-12 | Apple Inc. | Device, method, and graphical user interface for manipulating framed graphical objects |

| US10037138B2 (en) | 2012-12-29 | 2018-07-31 | Apple Inc. | Device, method, and graphical user interface for switching between user interfaces |

| US10042542B2 (en) | 2012-05-09 | 2018-08-07 | Apple Inc. | Device, method, and graphical user interface for moving and dropping a user interface object |

| US10048757B2 (en) | 2015-03-08 | 2018-08-14 | Apple Inc. | Devices and methods for controlling media presentation |

| US10067653B2 (en) | 2015-04-01 | 2018-09-04 | Apple Inc. | Devices and methods for processing touch inputs based on their intensities |

| US10073615B2 (en) | 2012-05-09 | 2018-09-11 | Apple Inc. | Device, method, and graphical user interface for displaying user interface objects corresponding to an application |

| US10078442B2 (en) | 2012-12-29 | 2018-09-18 | Apple Inc. | Device, method, and graphical user interface for determining whether to scroll or select content based on an intensity theshold |

| US10095396B2 (en) | 2015-03-08 | 2018-10-09 | Apple Inc. | Devices, methods, and graphical user interfaces for interacting with a control object while dragging another object |

| US10095391B2 (en) | 2012-05-09 | 2018-10-09 | Apple Inc. | Device, method, and graphical user interface for selecting user interface objects |

| US10126930B2 (en) | 2012-05-09 | 2018-11-13 | Apple Inc. | Device, method, and graphical user interface for scrolling nested regions |

| US10162452B2 (en) | 2015-08-10 | 2018-12-25 | Apple Inc. | Devices and methods for processing touch inputs based on their intensities |

| US10175864B2 (en) | 2012-05-09 | 2019-01-08 | Apple Inc. | Device, method, and graphical user interface for selecting object within a group of objects in accordance with contact intensity |

| US10200598B2 (en) | 2015-06-07 | 2019-02-05 | Apple Inc. | Devices and methods for capturing and interacting with enhanced digital images |

| US10235035B2 (en) | 2015-08-10 | 2019-03-19 | Apple Inc. | Devices, methods, and graphical user interfaces for content navigation and manipulation |

| US10248308B2 (en) | 2015-08-10 | 2019-04-02 | Apple Inc. | Devices, methods, and graphical user interfaces for manipulating user interfaces with physical gestures |

| US10275087B1 (en) | 2011-08-05 | 2019-04-30 | P4tents1, LLC | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US10346030B2 (en) | 2015-06-07 | 2019-07-09 | Apple Inc. | Devices and methods for navigating between user interfaces |

| JP2019133410A (en) * | 2018-01-31 | 2019-08-08 | 富士ゼロックス株式会社 | Information processing device and information processing program |

| US10416800B2 (en) | 2015-08-10 | 2019-09-17 | Apple Inc. | Devices, methods, and graphical user interfaces for adjusting user interface objects |

| US10437333B2 (en) | 2012-12-29 | 2019-10-08 | Apple Inc. | Device, method, and graphical user interface for forgoing generation of tactile output for a multi-contact gesture |

| US10496260B2 (en) | 2012-05-09 | 2019-12-03 | Apple Inc. | Device, method, and graphical user interface for pressure-based alteration of controls in a user interface |

| US10620781B2 (en) | 2012-12-29 | 2020-04-14 | Apple Inc. | Device, method, and graphical user interface for moving a cursor according to a change in an appearance of a control icon with simulated three-dimensional characteristics |

Citations (10)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| JP2005267058A (en) * | 2004-03-17 | 2005-09-29 | Seiko Epson Corp | Touch panel device and terminal device |

| JP2005286476A (en) * | 2004-03-29 | 2005-10-13 | Nec Saitama Ltd | Electronic equipment having memory free space notification function, and notification method thereof |

| JP2005332063A (en) * | 2004-05-18 | 2005-12-02 | Sony Corp | Input device with tactile function, information input method, and electronic device |

| JP2006011914A (en) * | 2004-06-28 | 2006-01-12 | Fuji Photo Film Co Ltd | Image display controller and image display control program |

| JP2006018582A (en) * | 2004-07-01 | 2006-01-19 | Ricoh Co Ltd | Operation display device |

| JP2006024039A (en) * | 2004-07-08 | 2006-01-26 | Sony Corp | Information processing apparatus and program used therefor |

| JP2006252550A (en) * | 2005-02-14 | 2006-09-21 | Seiko Epson Corp | File operation restriction system, file operation restriction program, file operation restriction method, electronic apparatus, and printing apparatus |

| JP2007179095A (en) * | 2005-12-26 | 2007-07-12 | Fujifilm Corp | Display control method, information processing apparatus, and display control program |

| JP2008033739A (en) * | 2006-07-31 | 2008-02-14 | Sony Corp | Touch screen interaction method and apparatus based on force feedback and pressure measurement |

| US20090295739A1 (en) * | 2008-05-27 | 2009-12-03 | Wes Albert Nagara | Haptic tactile precision selection |

- 2009-12-11: JP2009282259A filed in Japan (granted as JP5490508B2; status: active)

Cited By (133)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US10275087B1 (en) | 2011-08-05 | 2019-04-30 | P4tents1, LLC | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US10664097B1 (en) | 2011-08-05 | 2020-05-26 | P4tents1, LLC | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US10656752B1 (en) | 2011-08-05 | 2020-05-19 | P4tents1, LLC | Gesture-equipped touch screen system, method, and computer program product |

| US10649571B1 (en) | 2011-08-05 | 2020-05-12 | P4tents1, LLC | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US10540039B1 (en) | 2011-08-05 | 2020-01-21 | P4tents1, LLC | Devices and methods for navigating between user interface |

| US10386960B1 (en) | 2011-08-05 | 2019-08-20 | P4tents1, LLC | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US10365758B1 (en) | 2011-08-05 | 2019-07-30 | P4tents1, LLC | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US10345961B1 (en) | 2011-08-05 | 2019-07-09 | P4tents1, LLC | Devices and methods for navigating between user interfaces |

| US10338736B1 (en) | 2011-08-05 | 2019-07-02 | P4tents1, LLC | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| JP2014519128A (en) * | 2011-10-27 | 2014-08-07 | 騰訊科技(深圳)有限公司 | Method and apparatus for uploading and downloading files |

| US9996231B2 (en) | 2012-05-09 | 2018-06-12 | Apple Inc. | Device, method, and graphical user interface for manipulating framed graphical objects |

| US10073615B2 (en) | 2012-05-09 | 2018-09-11 | Apple Inc. | Device, method, and graphical user interface for displaying user interface objects corresponding to an application |

| US12340075B2 (en) | 2012-05-09 | 2025-06-24 | Apple Inc. | Device, method, and graphical user interface for selecting user interface objects |

| US12067229B2 (en) | 2012-05-09 | 2024-08-20 | Apple Inc. | Device, method, and graphical user interface for providing feedback for changing activation states of a user interface object |

| US12045451B2 (en) | 2012-05-09 | 2024-07-23 | Apple Inc. | Device, method, and graphical user interface for moving a user interface object based on an intensity of a press input |

| US11947724B2 (en) | 2012-05-09 | 2024-04-02 | Apple Inc. | Device, method, and graphical user interface for providing tactile feedback for operations performed in a user interface |

| US9753639B2 (en) | 2012-05-09 | 2017-09-05 | Apple Inc. | Device, method, and graphical user interface for displaying content associated with a corresponding affordance |

| US11354033B2 (en) | 2012-05-09 | 2022-06-07 | Apple Inc. | Device, method, and graphical user interface for managing icons in a user interface region |

| US11314407B2 (en) | 2012-05-09 | 2022-04-26 | Apple Inc. | Device, method, and graphical user interface for providing feedback for changing activation states of a user interface object |

| US9823839B2 (en) | 2012-05-09 | 2017-11-21 | Apple Inc. | Device, method, and graphical user interface for displaying additional information in response to a user contact |

| US11221675B2 (en) | 2012-05-09 | 2022-01-11 | Apple Inc. | Device, method, and graphical user interface for providing tactile feedback for operations performed in a user interface |

| US10168826B2 (en) | 2012-05-09 | 2019-01-01 | Apple Inc. | Device, method, and graphical user interface for transitioning between display states in response to a gesture |

| US11068153B2 (en) | 2012-05-09 | 2021-07-20 | Apple Inc. | Device, method, and graphical user interface for displaying user interface objects corresponding to an application |

| US11023116B2 (en) | 2012-05-09 | 2021-06-01 | Apple Inc. | Device, method, and graphical user interface for moving a user interface object based on an intensity of a press input |

| US11010027B2 (en) | 2012-05-09 | 2021-05-18 | Apple Inc. | Device, method, and graphical user interface for manipulating framed graphical objects |

| US9886184B2 (en) | 2012-05-09 | 2018-02-06 | Apple Inc. | Device, method, and graphical user interface for providing feedback for changing activation states of a user interface object |

| US10996788B2 (en) | 2012-05-09 | 2021-05-04 | Apple Inc. | Device, method, and graphical user interface for transitioning between display states in response to a gesture |

| US10969945B2 (en) | 2012-05-09 | 2021-04-06 | Apple Inc. | Device, method, and graphical user interface for selecting user interface objects |

| US10942570B2 (en) | 2012-05-09 | 2021-03-09 | Apple Inc. | Device, method, and graphical user interface for providing tactile feedback for operations performed in a user interface |

| US10908808B2 (en) | 2012-05-09 | 2021-02-02 | Apple Inc. | Device, method, and graphical user interface for displaying additional information in response to a user contact |

| US10884591B2 (en) | 2012-05-09 | 2021-01-05 | Apple Inc. | Device, method, and graphical user interface for selecting object within a group of objects |

| US10782871B2 (en) | 2012-05-09 | 2020-09-22 | Apple Inc. | Device, method, and graphical user interface for providing feedback for changing activation states of a user interface object |

| US9990121B2 (en) | 2012-05-09 | 2018-06-05 | Apple Inc. | Device, method, and graphical user interface for moving a user interface object based on an intensity of a press input |

| US10775994B2 (en) | 2012-05-09 | 2020-09-15 | Apple Inc. | Device, method, and graphical user interface for moving and dropping a user interface object |

| US10191627B2 (en) | 2012-05-09 | 2019-01-29 | Apple Inc. | Device, method, and graphical user interface for manipulating framed graphical objects |

| US10175864B2 (en) | 2012-05-09 | 2019-01-08 | Apple Inc. | Device, method, and graphical user interface for selecting object within a group of objects in accordance with contact intensity |

| US10775999B2 (en) | 2012-05-09 | 2020-09-15 | Apple Inc. | Device, method, and graphical user interface for displaying user interface objects corresponding to an application |

| US10042542B2 (en) | 2012-05-09 | 2018-08-07 | Apple Inc. | Device, method, and graphical user interface for moving and dropping a user interface object |

| JP2015521316A (en) * | 2012-05-09 | 2015-07-27 | Apple Inc. | Device, method, and graphical user interface for providing tactile feedback of actions performed within a user interface |

| US10592041B2 (en) | 2012-05-09 | 2020-03-17 | Apple Inc. | Device, method, and graphical user interface for transitioning between display states in response to a gesture |

| US9612741B2 (en) | 2012-05-09 | 2017-04-04 | Apple Inc. | Device, method, and graphical user interface for displaying additional information in response to a user contact |

| US10175757B2 (en) | 2012-05-09 | 2019-01-08 | Apple Inc. | Device, method, and graphical user interface for providing tactile feedback for touch-based operations performed and reversed in a user interface |

| US9619076B2 (en) | 2012-05-09 | 2017-04-11 | Apple Inc. | Device, method, and graphical user interface for transitioning between display states in response to a gesture |

| US10496260B2 (en) | 2012-05-09 | 2019-12-03 | Apple Inc. | Device, method, and graphical user interface for pressure-based alteration of controls in a user interface |

| US10095391B2 (en) | 2012-05-09 | 2018-10-09 | Apple Inc. | Device, method, and graphical user interface for selecting user interface objects |

| US10481690B2 (en) | 2012-05-09 | 2019-11-19 | Apple Inc. | Device, method, and graphical user interface for providing tactile feedback for media adjustment operations performed in a user interface |

| US10114546B2 (en) | 2012-05-09 | 2018-10-30 | Apple Inc. | Device, method, and graphical user interface for displaying user interface objects corresponding to an application |

| US10126930B2 (en) | 2012-05-09 | 2018-11-13 | Apple Inc. | Device, method, and graphical user interface for scrolling nested regions |

| JP2018110000A (en) * | 2012-11-20 | 2018-07-12 | Immersion Corporation | System and method for feedforward and feedback with haptic effects |

| JP2014102829A (en) * | 2012-11-20 | 2014-06-05 | Immersion Corp | System and method for feedforward and feedback with haptic effects |

| US9836150B2 (en) | 2012-11-20 | 2017-12-05 | Immersion Corporation | System and method for feedforward and feedback with haptic effects |

| US10078442B2 (en) | 2012-12-29 | 2018-09-18 | Apple Inc. | Device, method, and graphical user interface for determining whether to scroll or select content based on an intensity theshold |

| US12050761B2 (en) | 2012-12-29 | 2024-07-30 | Apple Inc. | Device, method, and graphical user interface for transitioning from low power mode |

| US9996233B2 (en) | 2012-12-29 | 2018-06-12 | Apple Inc. | Device, method, and graphical user interface for navigating user interface hierarchies |

| US10037138B2 (en) | 2012-12-29 | 2018-07-31 | Apple Inc. | Device, method, and graphical user interface for switching between user interfaces |

| US10185491B2 (en) | 2012-12-29 | 2019-01-22 | Apple Inc. | Device, method, and graphical user interface for determining whether to scroll or enlarge content |

| US9965074B2 (en) | 2012-12-29 | 2018-05-08 | Apple Inc. | Device, method, and graphical user interface for transitioning between touch input to display output relationships |

| US12135871B2 (en) | 2012-12-29 | 2024-11-05 | Apple Inc. | Device, method, and graphical user interface for switching between user interfaces |

| US9857897B2 (en) | 2012-12-29 | 2018-01-02 | Apple Inc. | Device and method for assigning respective portions of an aggregate intensity to a plurality of contacts |

| US10175879B2 (en) | 2012-12-29 | 2019-01-08 | Apple Inc. | Device, method, and graphical user interface for zooming a user interface while performing a drag operation |

| US9959025B2 (en) | 2012-12-29 | 2018-05-01 | Apple Inc. | Device, method, and graphical user interface for navigating user interface hierarchies |

| US9778771B2 (en) | 2012-12-29 | 2017-10-03 | Apple Inc. | Device, method, and graphical user interface for transitioning between touch input to display output relationships |

| US10620781B2 (en) | 2012-12-29 | 2020-04-14 | Apple Inc. | Device, method, and graphical user interface for moving a cursor according to a change in an appearance of a control icon with simulated three-dimensional characteristics |

| US10915243B2 (en) | 2012-12-29 | 2021-02-09 | Apple Inc. | Device, method, and graphical user interface for adjusting content selection |

| US10101887B2 (en) | 2012-12-29 | 2018-10-16 | Apple Inc. | Device, method, and graphical user interface for navigating user interface hierarchies |

| US10437333B2 (en) | 2012-12-29 | 2019-10-08 | Apple Inc. | Device, method, and graphical user interface for forgoing generation of tactile output for a multi-contact gesture |

| CN104423922A (en) * | 2013-08-26 | 2015-03-18 | Sharp Corporation | Image display apparatus and data transfer method |

| US10387029B2 (en) | 2015-03-08 | 2019-08-20 | Apple Inc. | Devices, methods, and graphical user interfaces for displaying and using menus |

| US10067645B2 (en) | 2015-03-08 | 2018-09-04 | Apple Inc. | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US9645709B2 (en) | 2015-03-08 | 2017-05-09 | Apple Inc. | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US9645732B2 (en) | 2015-03-08 | 2017-05-09 | Apple Inc. | Devices, methods, and graphical user interfaces for displaying and using menus |

| US9990107B2 (en) | 2015-03-08 | 2018-06-05 | Apple Inc. | Devices, methods, and graphical user interfaces for displaying and using menus |

| US12436662B2 (en) | 2015-03-08 | 2025-10-07 | Apple Inc. | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US11112957B2 (en) | 2015-03-08 | 2021-09-07 | Apple Inc. | Devices, methods, and graphical user interfaces for interacting with a control object while dragging another object |

| US10860177B2 (en) | 2015-03-08 | 2020-12-08 | Apple Inc. | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US10402073B2 (en) | 2015-03-08 | 2019-09-03 | Apple Inc. | Devices, methods, and graphical user interfaces for interacting with a control object while dragging another object |

| US9632664B2 (en) | 2015-03-08 | 2017-04-25 | Apple Inc. | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US10048757B2 (en) | 2015-03-08 | 2018-08-14 | Apple Inc. | Devices and methods for controlling media presentation |

| US10180772B2 (en) | 2015-03-08 | 2019-01-15 | Apple Inc. | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US10268342B2 (en) | 2015-03-08 | 2019-04-23 | Apple Inc. | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US10095396B2 (en) | 2015-03-08 | 2018-10-09 | Apple Inc. | Devices, methods, and graphical user interfaces for interacting with a control object while dragging another object |

| US10268341B2 (en) | 2015-03-08 | 2019-04-23 | Apple Inc. | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US10338772B2 (en) | 2015-03-08 | 2019-07-02 | Apple Inc. | Devices, methods, and graphical user interfaces for manipulating user interface objects with visual and/or haptic feedback |

| US11977726B2 (en) | 2015-03-08 | 2024-05-07 | Apple Inc. | Devices, methods, and graphical user interfaces for interacting with a control object while dragging another object |

| US10613634B2 (en) | 2015-03-08 | 2020-04-07 | Apple Inc. | Devices and methods for controlling media presentation |

| US10599331B2 (en) | 2015-03-19 | 2020-03-24 | Apple Inc. | Touch input cursor manipulation |

| US11550471B2 (en) | 2015-03-19 | 2023-01-10 | Apple Inc. | Touch input cursor manipulation |

| US11054990B2 (en) | 2015-03-19 | 2021-07-06 | Apple Inc. | Touch input cursor manipulation |

| US9639184B2 (en) | 2015-03-19 | 2017-05-02 | Apple Inc. | Touch input cursor manipulation |

| US9785305B2 (en) | 2015-03-19 | 2017-10-10 | Apple Inc. | Touch input cursor manipulation |

| US10222980B2 (en) | 2015-03-19 | 2019-03-05 | Apple Inc. | Touch input cursor manipulation |

| US10067653B2 (en) | 2015-04-01 | 2018-09-04 | Apple Inc. | Devices and methods for processing touch inputs based on their intensities |

| US10152208B2 (en) | 2015-04-01 | 2018-12-11 | Apple Inc. | Devices and methods for processing touch inputs based on their intensities |

| JP2017523484A (en) * | 2015-05-29 | 2017-08-17 | Xiaomi Inc. | Method and apparatus for managing terminal applications |

| CN104866179A (en) * | 2015-05-29 | 2015-08-26 | Xiaomi Inc. | Method and apparatus for managing terminal application program |

| CN104866179B (en) * | 2015-05-29 | 2020-03-17 | Xiaomi Inc. | Terminal application program management method and device |

| US10705718B2 (en) | 2015-06-07 | 2020-07-07 | Apple Inc. | Devices and methods for navigating between user interfaces |

| US11681429B2 (en) | 2015-06-07 | 2023-06-20 | Apple Inc. | Devices and methods for capturing and interacting with enhanced digital images |

| US12346550B2 (en) | 2015-06-07 | 2025-07-01 | Apple Inc. | Devices and methods for capturing and interacting with enhanced digital images |

| US10200598B2 (en) | 2015-06-07 | 2019-02-05 | Apple Inc. | Devices and methods for capturing and interacting with enhanced digital images |

| US9674426B2 (en) | 2015-06-07 | 2017-06-06 | Apple Inc. | Devices and methods for capturing and interacting with enhanced digital images |

| US11240424B2 (en) | 2015-06-07 | 2022-02-01 | Apple Inc. | Devices and methods for capturing and interacting with enhanced digital images |

| US9706127B2 (en) | 2015-06-07 | 2017-07-11 | Apple Inc. | Devices and methods for capturing and interacting with enhanced digital images |

| US11835985B2 (en) | 2015-06-07 | 2023-12-05 | Apple Inc. | Devices and methods for capturing and interacting with enhanced digital images |

| US9916080B2 (en) | 2015-06-07 | 2018-03-13 | Apple Inc. | Devices and methods for navigating between user interfaces |

| US9891811B2 (en) | 2015-06-07 | 2018-02-13 | Apple Inc. | Devices and methods for navigating between user interfaces |

| US10841484B2 (en) | 2015-06-07 | 2020-11-17 | Apple Inc. | Devices and methods for capturing and interacting with enhanced digital images |