WO2022250031A1 - Information processing device, information processing method, and computer program - Google Patents

Information processing device, information processing method, and computer program

- Publication number

- WO2022250031A1 (application PCT/JP2022/021185)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- learning model

- information

- calculation

- organ

- unit

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Classifications

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V10/00—Arrangements for image or video recognition or understanding

- G06V10/70—Arrangements for image or video recognition or understanding using pattern recognition or machine learning

- G06V10/87—Arrangements for image or video recognition or understanding using pattern recognition or machine learning using selection of the recognition techniques, e.g. of a classifier in a multiple classifier system

-

- A—HUMAN NECESSITIES

- A61—MEDICAL OR VETERINARY SCIENCE; HYGIENE

- A61B—DIAGNOSIS; SURGERY; IDENTIFICATION

- A61B1/00—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor

- A61B1/04—Instruments for performing medical examinations of the interior of cavities or tubes of the body by visual or photographical inspection, e.g. endoscopes; Illuminating arrangements therefor combined with photographic or television appliances

- A61B1/045—Control thereof

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T7/00—Image analysis

- G06T7/0002—Inspection of images, e.g. flaw detection

- G06T7/0012—Biomedical image inspection

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V10/00—Arrangements for image or video recognition or understanding

- G06V10/70—Arrangements for image or video recognition or understanding using pattern recognition or machine learning

- G06V10/77—Processing image or video features in feature spaces; using data integration or data reduction, e.g. principal component analysis [PCA] or independent component analysis [ICA] or self-organising maps [SOM]; Blind source separation

- G06V10/776—Validation; Performance evaluation

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V10/00—Arrangements for image or video recognition or understanding

- G06V10/70—Arrangements for image or video recognition or understanding using pattern recognition or machine learning

- G06V10/77—Processing image or video features in feature spaces; using data integration or data reduction, e.g. principal component analysis [PCA] or independent component analysis [ICA] or self-organising maps [SOM]; Blind source separation

- G06V10/80—Fusion, i.e. combining data from various sources at the sensor level, preprocessing level, feature extraction level or classification level

- G06V10/809—Fusion, i.e. combining data from various sources at the sensor level, preprocessing level, feature extraction level or classification level of classification results, e.g. where the classifiers operate on the same input data

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V10/00—Arrangements for image or video recognition or understanding

- G06V10/70—Arrangements for image or video recognition or understanding using pattern recognition or machine learning

- G06V10/82—Arrangements for image or video recognition or understanding using pattern recognition or machine learning using neural networks

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V10/00—Arrangements for image or video recognition or understanding

- G06V10/98—Detection or correction of errors, e.g. by rescanning the pattern or by human intervention; Evaluation of the quality of the acquired patterns

-

- G—PHYSICS

- G16—INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR SPECIFIC APPLICATION FIELDS

- G16H—HEALTHCARE INFORMATICS, i.e. INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR THE HANDLING OR PROCESSING OF MEDICAL OR HEALTHCARE DATA

- G16H30/00—ICT specially adapted for the handling or processing of medical images

- G16H30/40—ICT specially adapted for the handling or processing of medical images for processing medical images, e.g. editing

-

- G—PHYSICS

- G16—INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR SPECIFIC APPLICATION FIELDS

- G16H—HEALTHCARE INFORMATICS, i.e. INFORMATION AND COMMUNICATION TECHNOLOGY [ICT] SPECIALLY ADAPTED FOR THE HANDLING OR PROCESSING OF MEDICAL OR HEALTHCARE DATA

- G16H50/00—ICT specially adapted for medical diagnosis, medical simulation or medical data mining; ICT specially adapted for detecting, monitoring or modelling epidemics or pandemics

- G16H50/20—ICT specially adapted for medical diagnosis, medical simulation or medical data mining; ICT specially adapted for detecting, monitoring or modelling epidemics or pandemics for computer-aided diagnosis, e.g. based on medical expert systems

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/10—Image acquisition modality

- G06T2207/10068—Endoscopic image

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/20—Special algorithmic details

- G06T2207/20081—Training; Learning

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T2207/00—Indexing scheme for image analysis or image enhancement

- G06T2207/20—Special algorithmic details

- G06T2207/20084—Artificial neural networks [ANN]

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V2201/00—Indexing scheme relating to image or video recognition or understanding

- G06V2201/03—Recognition of patterns in medical or anatomical images

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V2201/00—Indexing scheme relating to image or video recognition or understanding

- G06V2201/03—Recognition of patterns in medical or anatomical images

- G06V2201/031—Recognition of patterns in medical or anatomical images of internal organs

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V2201/00—Indexing scheme relating to image or video recognition or understanding

- G06V2201/03—Recognition of patterns in medical or anatomical images

- G06V2201/034—Recognition of patterns in medical or anatomical images of medical instruments

Definitions

- The present invention relates to an information processing device, an information processing method, and a computer program.

- Pathological diagnosis requires specialized knowledge and experience, so part of the body tissue is excised and diagnosed outside the body using a microscope.

- An object of the present invention is to provide an information processing device, an information processing method, and a computer program for deriving an integrated recognition result from the computation results of multiple types of learning models.

- An information processing device includes: a first calculation unit that executes calculation by a first learning model according to an input of an operative field image; a second calculation unit that executes calculation by a second learning model according to an input of the operative field image; a derivation unit that derives an integrated recognition result for the operative field image based on the calculation result output from the first calculation unit and the calculation result output from the second calculation unit; and an output unit that outputs information based on the derived recognition result.

- In an information processing method, a computer executes processing of: executing calculation by a first learning model according to an input of an operative field image; executing calculation by a second learning model according to an input of the operative field image; deriving an integrated recognition result for the operative field image based on the calculation result of the first learning model and the calculation result of the second learning model; and outputting information based on the derived recognition result.

- A computer program causes a computer to execute processing of: executing calculation by a first learning model according to an input of an operative field image; executing calculation by a second learning model according to an input of the operative field image; deriving an integrated recognition result for the operative field image based on the calculation result of the first learning model and the calculation result of the second learning model; and outputting information based on the derived recognition result.

- An integrated recognition result can be derived from the computation results of multiple types of learning models.

- FIG. 1 is a schematic diagram illustrating the schematic configuration of a surgery support system according to Embodiment 1.

- FIG. 2 is a block diagram illustrating the internal configuration of the information processing device.

- FIG. 3 is a schematic diagram showing an example of an operative field image.

- A schematic diagram showing a configuration example of the first learning model.

- A schematic diagram showing a configuration example of the second learning model.

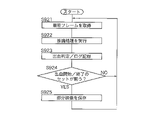

- A flowchart showing the procedure of processing executed by the information processing device according to Embodiment 1.

- A schematic diagram showing a display example of a recognition result according to Embodiment 1.

- A schematic diagram showing a display example of warning information according to Embodiment 1.

- A schematic diagram showing a display example of a recognition result according to certainty.

- A schematic diagram showing a configuration example of the third learning model.

- A flowchart showing the procedure of processing executed by the information processing device according to Embodiment 2.

- A schematic diagram showing a display example of a recognition result according to Embodiment 2.

- A schematic diagram showing a configuration example of the fourth learning model.

- A flowchart showing the procedure of processing executed by the information processing device according to Embodiment 3.

- A flowchart showing the procedure for deriving dimension information.

- A schematic diagram showing a configuration example of the fifth learning model.

- A flowchart showing the procedure of processing executed by the information processing device according to Embodiment 4.

- A flowchart showing the processing procedure in Modification 4-1.

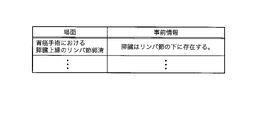

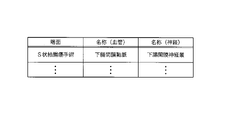

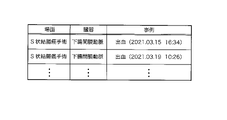

- A conceptual diagram showing an example of a prior information table.

- A flowchart showing the processing procedure in Modification 4-2.

- A flowchart showing the processing procedure in Modification 4-3.

- A flowchart showing the processing procedure in Modification 4-4.

- A conceptual diagram showing an example of a unique name table.

- An explanatory diagram illustrating the estimation method in Embodiment 7.

- A flowchart showing the estimation procedure in Embodiment 7.

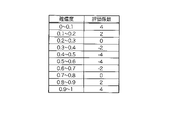

- An explanatory diagram illustrating a method of analyzing calculation results.

- A diagram showing an example of an evaluation coefficient table.

- A diagram showing an example of score calculation results.

- A flowchart showing the procedure of processing executed by the information processing device according to Embodiment 8.

- A sequence diagram showing an example of processing executed by the information processing device according to Embodiment 9.

- A flowchart showing the procedure of processing executed by the first calculation unit.

- A flowchart showing the procedure of processing executed by the second calculation unit.

- FIG. 1 is a schematic diagram for explaining a schematic configuration of a surgery support system according to Embodiment 1.

- In laparoscopic surgery, instead of performing open surgery, a plurality of opening instruments called trocars 10 are attached to the patient's abdominal wall, and instruments such as a laparoscope 11, an energy treatment instrument 12, and forceps 13 are inserted into the patient's body through the openings provided in the trocars 10.

- the operator uses the energy treatment tool 12 to perform a treatment such as excision of the affected area while viewing an image of the inside of the patient's body (operative field image) captured by the laparoscope 11 in real time.

- Surgical instruments such as the laparoscope 11, the energy treatment instrument 12, and the forceps 13 are held by an operator, a robot, or the like.

- The operator is a medical worker involved in laparoscopic surgery, and includes a surgeon, an assistant, a nurse, a doctor who monitors the surgery, and the like.

- The laparoscope 11 includes an insertion section 11A to be inserted into the patient's body, an imaging device 11B built into the distal end portion of the insertion section 11A, an operation section 11C provided at the rear end portion of the insertion section 11A, and a universal cord 11D for connecting to a camera control unit (CCU) 110 and a light source device 120.

- the insertion section 11A of the laparoscope 11 is made of a rigid tube.

- A bending portion is provided at the distal end portion of the rigid tube.

- The bending mechanism in the bending portion is a well-known mechanism incorporated in a general laparoscope, and is configured to bend in four directions, for example, up, down, left, and right, by pulling an operation wire linked to the operation of the operation section 11C.

- the laparoscope 11 is not limited to the flexible scope having a bending portion as described above, and may be a rigid scope having no bending portion, or an imaging device having no bending portion or rigid tube.

- the imaging device 11B includes a solid-state imaging device such as CMOS (Complementary Metal Oxide Semiconductor), a driver circuit including a timing generator (TG), an analog signal processing circuit (AFE), and the like.

- The driver circuit of the imaging device 11B takes in the RGB color signals output from the solid-state imaging device in synchronization with the clock signal output from the TG, and performs necessary processing such as noise removal, amplification, and AD conversion in the AFE to generate image data in digital form.

- The driver circuit of the imaging device 11B transmits the generated image data to the CCU 110 via the universal cord 11D.

- the operation unit 11C includes an angle lever, a remote switch, and the like operated by the operator.

- the angle lever is an operation tool that receives an operation for bending the bending portion.

- a bending operation knob, a joystick, or the like may be provided instead of the angle lever.

- the remote switch includes, for example, a changeover switch for switching between moving image display and still image display of the observation image, a zoom switch for enlarging or reducing the observation image, and the like.

- the remote switch may be assigned a specific predetermined function, or may be assigned a function set by the operator.

- the operation unit 11C may incorporate a vibrator configured by a linear resonance actuator, a piezo actuator, or the like.

- The CCU 110 may vibrate the operation unit 11C by activating the vibrator built into the operation unit 11C, thereby notifying the operator that an event has occurred.

- Inside the insertion portion 11A, the operation portion 11C, and the universal cord 11D of the laparoscope 11, there are arranged transmission cables for transmitting control signals output from the CCU 110 to the imaging device 11B and image data output from the imaging device 11B, as well as a light guide or the like that guides the illumination light emitted from the light source device 120 to the distal end portion of the insertion portion 11A.

- Illumination light emitted from the light source device 120 is guided to the distal end portion of the insertion section 11A through the light guide, and is irradiated onto the surgical field through an illumination lens provided at the distal end portion of the insertion section 11A.

- Although the light source device 120 is described as an independent device in the present embodiment, it may be built into the CCU 110.

- The CCU 110 includes a control circuit that controls the operation of the imaging device 11B provided in the laparoscope 11, an image processing circuit that processes image data from the imaging device 11B input through the universal cord 11D, and the like.

- The control circuit includes a CPU (Central Processing Unit), a ROM (Read Only Memory), a RAM (Random Access Memory), and the like, and outputs a control signal to the imaging device 11B to control imaging start, imaging stop, zooming, and the like.

- The image processing circuit is equipped with a DSP (Digital Signal Processor), image memory, and the like, and performs appropriate processing such as color separation, color interpolation, gain correction, white balance adjustment, and gamma correction on image data input through the universal cord 11D.
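
The later stages of this chain can be illustrated on a single pixel. The following is a minimal sketch only, assuming linear RGB values in the range 0 to 1; the function names, the reference-white approach to white balance, and the default gamma of 2.2 are illustrative assumptions, not details from the application. Color separation and color interpolation are omitted.

```python
from typing import Tuple

# Hypothetical representation: one linear RGB pixel, each channel in [0.0, 1.0].
Pixel = Tuple[float, float, float]


def clamp(x: float) -> float:
    """Keep a channel value inside [0.0, 1.0]."""
    return max(0.0, min(1.0, x))


def apply_gain(p: Pixel, gain: float) -> Pixel:
    """Gain correction: scale every channel by the same factor."""
    return tuple(clamp(c * gain) for c in p)


def white_balance(p: Pixel, reference_white: Pixel) -> Pixel:
    """White balance: divide by a measured white so that it maps to (1, 1, 1).

    Each channel of reference_white must be greater than zero.
    """
    return tuple(clamp(c / w) for c, w in zip(p, reference_white))


def gamma_correct(p: Pixel, gamma: float = 2.2) -> Pixel:
    """Gamma correction: encode linear values with exponent 1/gamma."""
    return tuple(c ** (1.0 / gamma) for c in p)


def process_pixel(p: Pixel, gain: float, reference_white: Pixel,
                  gamma: float = 2.2) -> Pixel:
    """Apply gain, white balance, and gamma in the order listed in the text."""
    return gamma_correct(white_balance(apply_gain(p, gain), reference_white), gamma)
```

A real image processing circuit would apply such steps to whole frames in hardware; the per-pixel form here only shows the order of operations.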

- the CCU 110 generates moving image frame images from the processed image data, and sequentially outputs the generated frame images to the information processing apparatus 200, which will be described later.

- the frame rate of frame images is, for example, 30 FPS (Frames Per Second).

- The CCU 110 may generate video data conforming to a predetermined standard such as NTSC (National Television System Committee), PAL (Phase Alternating Line), or DICOM (Digital Imaging and Communications in Medicine). By outputting the generated video data to the display device 130, the CCU 110 can display the operative field image (video) on the display screen of the display device 130 in real time.

- the display device 130 is a monitor including a liquid crystal panel, an organic EL (Electro-Luminescence) panel, or the like. Further, CCU 110 may output the generated video data to recording device 140 and cause recording device 140 to record the video data.

- The recording device 140 includes a storage device such as an HDD (Hard Disk Drive) that records video data output from the CCU 110 together with an identifier identifying each surgery, the date and time of surgery, the location of the surgery, the name of the patient, the name of the operator, and the like.

- the information processing device 200 acquires the image data of the operative field image from the CCU 110, and inputs the acquired image data of the operative field image to each of the plurality of learning models, thereby executing calculations by each learning model.

- the information processing apparatus 200 derives an integrated recognition result for the surgical field image from the computation results of the plurality of learning models, and outputs information based on the derived recognition result.
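
The passage above does not state how the two computation results are combined. As a minimal sketch of one possible rule, assume each learning model returns a per-pixel probability map of the same size, and that a pixel claimed by both models is flagged rather than assigned to either. The labels, the `integrate` function, and the 0.5 threshold are all hypothetical.

```python
from typing import List

# Hypothetical output format: per-pixel probabilities in [0, 1], row by row.
ProbabilityMap = List[List[float]]

FIRST, SECOND, AMBIGUOUS, NONE = "first", "second", "ambiguous", "none"


def integrate(p1: ProbabilityMap, p2: ProbabilityMap,
              threshold: float = 0.5) -> List[List[str]]:
    """Combine two per-pixel probability maps into one label map.

    A pixel is labelled FIRST or SECOND when only one model exceeds the
    threshold, AMBIGUOUS when both do, and NONE when neither does.
    """
    result = []
    for row1, row2 in zip(p1, p2):
        out_row = []
        for a, b in zip(row1, row2):
            hit1, hit2 = a >= threshold, b >= threshold
            if hit1 and hit2:
                out_row.append(AMBIGUOUS)
            elif hit1:
                out_row.append(FIRST)
            elif hit2:
                out_row.append(SECOND)
            else:
                out_row.append(NONE)
        result.append(out_row)
    return result


labels = integrate([[0.9, 0.2], [0.7, 0.1]],
                   [[0.1, 0.8], [0.6, 0.3]])
```

Flagging overlapping pixels instead of silently picking one model is a design choice for this sketch; it leaves room for a downstream step to warn the operator or compare certainties.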

- FIG. 2 is a block diagram for explaining the internal configuration of the information processing device 200.

- The information processing apparatus 200 is a dedicated or general-purpose computer including a control unit 201, a storage unit 202, an operation unit 203, an input unit 204, a first calculation unit 205, a second calculation unit 206, an output unit 207, a communication unit 208, and the like.

- the information processing apparatus 200 may be a computer installed in the operating room, or may be a computer installed outside the operating room.

- the information processing apparatus 200 may be a server installed in a hospital where laparoscopic surgery is performed, or may be a server installed outside the hospital.

- the information processing apparatus 200 is not limited to a single computer, and may be a computer system including a plurality of computers and peripheral devices.

- the information processing device 200 may be a virtual machine that is virtually constructed by software.

- the control unit 201 includes, for example, a CPU, ROM, and RAM.

- the ROM included in the control unit 201 stores a control program and the like for controlling the operation of each hardware unit included in the information processing apparatus 200 .

- The CPU in the control unit 201 executes the control program stored in the ROM and various computer programs stored in the storage unit 202, which will be described later, and controls the operation of each hardware unit, thereby causing the entire apparatus to function as the information processing device according to the present application.

- the RAM provided in the control unit 201 temporarily stores data and the like that are used during execution of calculations.

- Although the control unit 201 is configured to include a CPU, a ROM, and a RAM in the present embodiment, its configuration is not limited to this, and it may include, for example, an FPGA (Field Programmable Gate Array), a quantum processor, volatile or non-volatile memory, and the like. Further, the control unit 201 may have functions such as a clock that outputs date and time information, a timer that measures the elapsed time from when a measurement start instruction is given until a measurement end instruction is given, and a counter that counts a number.

- the storage unit 202 includes a storage device such as a hard disk and flash memory.

- the storage unit 202 stores a computer program executed by the control unit 201, various data acquired from the outside, various data generated inside the apparatus, and the like.

- The computer programs stored in the storage unit 202 include a recognition processing program PG1 that causes the control unit 201 to execute processing for recognizing a recognition target included in the operative field image, and a display processing program PG2 that causes the control unit 201 to execute processing for displaying information based on the recognition result on the display device 130.

- the recognition processing program PG1 and the display processing program PG2 do not need to be independent computer programs, and may be implemented as one computer program.

- These programs are provided, for example, on a non-transitory recording medium M on which the computer programs are readably recorded.

- the recording medium M is a portable memory such as a CD-ROM, a USB memory, and an SD (Secure Digital) card.

- the control unit 201 uses a reading device (not shown) to read a desired computer program from the recording medium M, and stores the read computer program in the storage unit 202 .

- the computer program may be provided by communication.

- the control unit 201 may download a desired computer program through the communication unit 208 and store the downloaded computer program in the storage unit 202 .

- the storage unit 202 has a first learning model 310 and a second learning model 320 .

- The first learning model 310 is a trained learning model that outputs information about the first organ included in the operative field image in response to the input of the operative field image.

- The first organ includes specific organs such as the esophagus, stomach, large intestine, pancreas, spleen, ureter, lung, prostate, uterus, gallbladder, liver, and vas deferens, and non-specific organs such as connective tissue, fat, nerves, blood vessels, muscles, and membranous structures.

- the first organ is not limited to a specific organ, and may be any structure existing in the body.

- The second learning model 320 is a trained learning model that outputs information about the second organ included in the operative field image in response to the input of the operative field image.

- the second organ includes the specific organ or non-specific organ described above.

- the second organ is not limited to a specific organ, and may be any structure existing within the body. In the following description, when there is no need to distinguish between a specific organ and a non-specific organ, they are simply referred to as an organ.

- As the second organ, an organ is selected that has features similar to those of the first organ and may therefore be misidentified as the first organ in recognition processing.

- For example, when the recognition target of the first learning model 310 is loose connective tissue, nerve tissue (or a membranous structure) is selected as the recognition target of the second learning model 320.

- The combination of the first organ and the second organ is not limited to (a) loose connective tissue and nerve tissue (or membranous structures), and may be (b) fat and pancreas, (c) fat to be excised and fat to be preserved, (d) two of hemorrhages, scars, and blood vessels, (e) two of ureters, arteries, and membranous structures, (f) stomach and intestine, or (g) liver and spleen.

- the first learning model 310 is trained to output information about the loose connective tissue included in the operative field image in accordance with the input of the operative field image.

- The first learning model 310 is generated by training with an appropriate learning algorithm, using as training data pairs of an operative field image obtained by imaging the operative field and correct data indicating the first organ portion (the loose connective tissue portion in Embodiment 1) in that image. The correct data indicating the first organ portion is generated by manual annotation by a specialist such as a doctor. The same applies to the second learning model 320.

- The first and second learning models 310 and 320 may be generated inside the information processing device 200, or may be generated by an external server. In the latter case, the information processing apparatus 200 downloads the first and second learning models 310 and 320 generated by the external server through communication and stores them in the storage unit 202.

- The storage unit 202 stores, as definition information of the first and second learning models 310 and 320, information on the layers included in the models, information on the nodes constituting each layer, and parameters determined by training, such as weight coefficients between nodes and biases.
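
One way to picture such definition information is as a small record per layer plus a record per model. The sketch below is illustrative only: the class names, field names, and the dense-layer weight layout are assumptions, and the application does not specify a storage format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LayerDefinition:
    """Hypothetical record for one layer: its nodes and trained parameters."""
    name: str
    num_nodes: int
    weights: List[List[float]]  # weights[i][j]: from input node j to node i
    biases: List[float]         # one bias per node in this layer

    def __post_init__(self) -> None:
        # Reject definitions whose parameter shapes disagree with num_nodes.
        if len(self.weights) != self.num_nodes or len(self.biases) != self.num_nodes:
            raise ValueError(f"layer {self.name!r}: shape does not match num_nodes")


@dataclass
class ModelDefinition:
    """Hypothetical record for one learning model stored in the storage unit."""
    model_id: str
    target: str  # what the model is trained to recognize
    layers: List[LayerDefinition] = field(default_factory=list)

    def parameter_count(self) -> int:
        """Total number of weight coefficients and biases across all layers."""
        return sum(
            sum(len(row) for row in layer.weights) + len(layer.biases)
            for layer in self.layers
        )


layer = LayerDefinition("fc1", 2, [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]], [0.0, 0.1])
model = ModelDefinition("310", "loose connective tissue", [layer])
```

Here `model.parameter_count()` counts six weights and two biases. Keeping the structure (layers, nodes) separate from the trained values mirrors the split the text makes between layer and node information and the parameters determined by training.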

- the operation unit 203 includes operation devices such as a keyboard, mouse, touch panel, non-contact panel, stylus pen, and voice input using a microphone.

- the operation unit 203 receives an operation by an operator or the like, and outputs information regarding the received operation to the control unit 201 .

- the control unit 201 executes appropriate processing according to operation information input from the operation unit 203 .

- Although the information processing apparatus 200 includes the operation unit 203 in the present embodiment, it may instead be configured to receive operations through various externally connected devices such as the CCU 110.

- the input unit 204 has a connection interface for connecting input devices.

- the input device connected to input unit 204 is CCU 110 .

- the input unit 204 receives image data of an operating field image captured by the laparoscope 11 and processed by the CCU 110 .

- the input unit 204 outputs input image data to the control unit 201 .

- the control unit 201 may cause the storage unit 202 to store the image data acquired from the input unit 204 .

- Image data of the operative field image may be obtained from an image processing device (not shown) that is detachably attached to the device.

- the information processing device 200 may also acquire image data of the operative field image recorded in the recording device 140 .

- the first computing unit 205 includes a processor, memory, and the like.

- An example of a processor is GPU (Graphics Processing Unit) and an example of memory is VRAM (Video RAM).

- the first calculation unit 205 executes the calculation by the first learning model 310 with the built-in processor and outputs the calculation result to the control unit 201 .

- The first calculation unit 205 draws an image to be displayed on the display device 130 in its built-in memory in accordance with an instruction from the control unit 201, and outputs the image to the display device 130 through the output unit 207, thereby displaying the desired image on the display device 130.

- The second computing unit 206, like the first computing unit 205, includes a processor, memory, and the like.

- the second calculation unit 206 may be equivalent to the first calculation unit 205 or may have a lower calculation capability than the first calculation unit 205 .

- the second calculation unit 206 executes the calculation by the second learning model 320 with the built-in processor and outputs the calculation result to the control unit 201 .

- The second calculation unit 206 draws an image to be displayed on the display device 130 in its built-in memory in accordance with an instruction from the control unit 201, and outputs the image to the display device 130 through the output unit 207, thereby displaying the desired image on the display device 130.
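
The division of work between the two calculation units can be sketched as dispatching the same frame to two models and collecting both results. This is a minimal illustration that assumes the two units run concurrently; the `run_both` helper and the stand-in models are hypothetical, and Python threads here only mimic the dispatch pattern, not GPU execution.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

# Hypothetical frame format: a 2D grid of brightness values in [0, 1].
Frame = List[List[float]]
Model = Callable[[Frame], Frame]


def run_both(frame: Frame, first_model: Model,
             second_model: Model) -> Tuple[Frame, Frame]:
    """Submit the same frame to both models and wait for both results."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        first_future = pool.submit(first_model, frame)
        second_future = pool.submit(second_model, frame)
        return first_future.result(), second_future.result()


def bright_regions(frame: Frame) -> Frame:
    """Stand-in for the first learning model: marks values above 0.5."""
    return [[1.0 if v > 0.5 else 0.0 for v in row] for row in frame]


def dark_regions(frame: Frame) -> Frame:
    """Stand-in for the second learning model: marks values at or below 0.5."""
    return [[1.0 if v <= 0.5 else 0.0 for v in row] for row in frame]


first_result, second_result = run_both([[0.2, 0.9]], bright_regions, dark_regions)
```

Both results are returned to the caller, which plays the role of the control unit 201 receiving the two calculation results before any integration step.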

- the output unit 207 includes a connection interface for connecting output devices.

- the output device connected to the output unit 207 is the display device 130 .

- When the control unit 201 generates information to be notified to the operator or the like, such as an integrated recognition result derived from the calculation results of the learning models 310 and 320, the control unit 201 outputs the generated information from the output unit 207 to the display device 130, thereby displaying the information on the display device 130.

- the output unit 207 may output information to be notified to the operator or the like by voice or sound.

- the communication unit 208 has a communication interface for transmitting and receiving various data.

- the communication interface provided in the communication unit 208 is a communication interface conforming to a wired or wireless communication standard used in Ethernet (registered trademark) or WiFi (registered trademark).

- FIG. 3 is a schematic diagram showing an example of an operating field image.

- the operative field image in the present embodiment is an image obtained by imaging the inside of the patient's abdominal cavity with the laparoscope 11 .

- the operative field image does not need to be a raw image output by the imaging device 11B of the laparoscope 11, and may be an image (frame image) processed by the CCU 110 or the like.

- the operative field imaged by the laparoscope 11 includes tissues constituting specific organs, blood vessels, nerves, etc., connective tissues existing between tissues, tissues including lesions such as tumors, and tissues such as membranes and layers covering the tissues. While grasping the relationship between these anatomical structures, the operator uses surgical tools such as the energy treatment tool 12 and the forceps 13 to dissect the tissue including the lesion.

- the surgical field image shown as an example in FIG. 3 shows a scene in which the loose connective tissue 502 is about to be peeled.

- the loose connective tissue 502 is a fibrous connective tissue that fills between tissues and organs, and has a relatively small amount of fibers (elastic fibers) constituting the tissue.

- the loose connective tissue 502 is peeled off as necessary when expanding the organ 501 or excising a lesion.

- the loose connective tissue 502 runs in the vertical direction (the direction of the white arrow in the figure), and the nerve tissue 503 runs in the horizontal direction (the direction of the black arrow in the figure) so as to intersect the loose connective tissue 502.

- Loose connective tissue is a tissue that is detached as needed, but if nerves are damaged during the process of traction or detachment, functional impairment may occur after surgery. For example, damage to the hypogastric nerve in colon surgery can cause dysuria. Damage to the recurrent laryngeal nerve during esophagectomy or pulmonary resection can also cause dysphagia. For this reason, it would be useful for the operator if information on the results of recognition of loose connective tissue and nerve tissue could be provided to the operator.

- the information processing apparatus 200 executes calculation by the first learning model 310 and calculation by the second learning model 320 for the same operative field image, derives an integrated recognition result for the operative field image from the two calculation results, and outputs information based on the derived recognition result, thereby providing information on loose connective tissue and nerve tissue to the operator.

- FIG. 4 is a schematic diagram showing a configuration example of the first learning model 310.

- the first learning model 310 is a learning model for performing image segmentation, and is constructed by a neural network with convolutional layers such as SegNet, for example.

- the learning model 310 is not limited to SegNet, and may be constructed using any neural network that can perform image segmentation, such as FCN (Fully Convolutional Network), U-Net (U-Shaped Network), or PSPNet (Pyramid Scene Parsing Network).

- the learning model 310 may be constructed using a neural network for object detection such as YOLO (You Only Look Once) or SSD (Single Shot Multi-Box Detector) instead of the neural network for image segmentation.

- the calculation by the first learning model 310 is executed in the first calculation unit 205.

- the first computation unit 205 performs computation according to the definition information of the first learning model 310 including learned parameters.

- the first learning model 310 comprises an encoder 311, a decoder 312, and a softmax layer 313, for example.

- the encoder 311 is configured by alternately arranging convolution layers and pooling layers.

- the convolution layers are multi-layered into 2 to 3 layers. In the example of FIG. 4, the convolutional layers are shown without hatching, and the pooling layers are shown with hatching.

- the convolution layer performs a convolution operation between the input data and a filter of a predetermined size (for example, 3×3, 5×5, etc.). That is, the input value input to the position corresponding to each element of the filter is multiplied by the weighting factor preset in the filter for each element, and the linear sum of the multiplied values for each element is calculated.

- the output in the convolutional layer is obtained by adding the set bias to the calculated linear sum.

- the result of the convolution operation may be transformed by an activation function, for example ReLU (Rectified Linear Unit).

- the output of the convolutional layer represents a feature map that extracts the features of the input data.
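
As a minimal sketch of the convolution step described above (a weighted linear sum over the filter window, plus a bias, optionally followed by ReLU), the following pure-Python example may help. The names `conv2d_valid` and `relu`, the stride of 1, the absence of padding, and the sample values are assumptions made for illustration and are not taken from the embodiment.

```python
def relu(x):
    """Rectified Linear Unit: pass positive values, clamp the rest to 0."""
    return x if x > 0.0 else 0.0


def conv2d_valid(image, kernel, bias=0.0, activation=None):
    """Slide `kernel` over `image` with stride 1 and no padding (assumed)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Linear sum of the input values multiplied by the filter weights.
            s = sum(image[i + di][j + dj] * kernel[di][dj]
                    for di in range(kh) for dj in range(kw))
            s += bias  # the layer output adds the configured bias
            row.append(activation(s) if activation else s)
        feature_map.append(row)
    return feature_map


# Illustrative 4x4 input and 3x3 filter (made-up values).
image = [
    [1, 2, 3, 0],
    [0, 1, 2, 3],
    [3, 0, 1, 2],
    [2, 3, 0, 1],
]
kernel = [
    [1, 0, -1],
    [1, 0, -1],
    [1, 0, -1],
]
fmap = conv2d_valid(image, kernel, bias=1.0, activation=relu)
```

With a 3×3 filter and no padding, the 4×4 input yields a 2×2 feature map. Real segmentation networks usually pad the input so that the spatial size is preserved by the convolution and reduced only by pooling.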

- the pooling layer calculates the local statistics of the feature map output from the convolution layer, which is the upper layer connected to the input side. Specifically, a window of a predetermined size (for example, 2×2, 3×3) corresponding to the position of the upper layer is set, and local statistics are calculated from the input values within the window. For example, the maximum value can be used as the statistic.

- the size of the feature map output from the pooling layer is reduced (downsampled) according to the size of the window.
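
The pooling step can be sketched in a few lines. This example uses the maximum value as the local statistic, as mentioned above; the function name `max_pool2d` and the choice of non-overlapping windows (stride equal to the window size) are assumptions for illustration.

```python
def max_pool2d(feature_map, window=2):
    """Down-sample by taking the maximum of each non-overlapping window."""
    h, w = len(feature_map), len(feature_map[0])
    pooled = []
    for i in range(0, h - window + 1, window):
        row = []
        for j in range(0, w - window + 1, window):
            # Local statistic: the maximum input value inside the window.
            row.append(max(feature_map[i + di][j + dj]
                           for di in range(window) for dj in range(window)))
        pooled.append(row)
    return pooled


# Illustrative 4x4 feature map (made-up values).
fmap_in = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [3, 2, 1, 1],
]
pooled = max_pool2d(fmap_in, window=2)
```

A 2×2 window halves each spatial dimension, which matches the 224 → 112 → 56 progression described for the encoder.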

- in the example of FIG. 4, the encoder 311 successively repeats the operations in the convolution layers and the pooling layers, so that the input image of 224 pixels × 224 pixels is sequentially down-sampled to feature maps of 112×112, 56×56, 28×28, ..., 1×1.

- the output of the encoder 311 (1×1 feature map in the example of FIG. 4) is input to the decoder 312.

- the decoder 312 is composed of alternating deconvolution layers and depooling layers.

- the deconvolution layers are multi-layered in 2 to 3 layers. In the example of FIG. 4, the deconvolution layers are shown without hatching, and the depooling layers are shown with hatching.

- the input feature map is deconvolved.

- the deconvolution operation is an operation to restore the feature map before the convolution operation under the presumption that the input feature map is the result of the convolution operation using a specific filter.

- when the specific filter is represented by a matrix, the product of the transpose of this matrix and the input feature map is calculated to generate the output feature map.
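
The relationship between a convolution and its transposed ("deconvolution") counterpart can be illustrated in one dimension. The sketch below writes a small convolution as a matrix `C` and then multiplies by its transpose; the helper names, the 1-D setting, and the sample values are assumptions for illustration only.

```python
def conv_matrix_1d(kernel, input_len):
    """Matrix C such that C @ x equals a stride-1, unpadded 1-D convolution."""
    out_len = input_len - len(kernel) + 1
    return [[kernel[c - r] if 0 <= c - r < len(kernel) else 0
             for c in range(input_len)]
            for r in range(out_len)]


def transpose(m):
    return [list(col) for col in zip(*m)]


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


kernel = [1, 2]
C = conv_matrix_1d(kernel, input_len=3)   # shape 2x3
x = [1, 0, 1]
y = matvec(C, x)                          # forward convolution: length 3 -> 2
up = matvec(transpose(C), y)              # transposed product: length 2 -> 3
```

Note that the transposed product restores the size of the feature map, not necessarily its original values; in a trained decoder the filter weights are learned so that the up-sampled map is useful for the final per-pixel classification.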

- the operation result of the deconvolution layer may be transformed by an activation function such as ReLU described above.

- the inverse pooling layers included in the decoder 312 are individually associated one-to-one with the pooling layers included in the encoder 311, and the associated pairs have substantially the same size.

- the inverse pooling layer again enlarges (upsamples) the size of the feature map downsampled in the pooling layer of the encoder 311 .

- the example of FIG. 4 shows that the feature map is sequentially up-sampled to 1×1, 7×7, 14×14, ..., 224×224.

- the output of the decoder 312 (224×224 feature map in the example of FIG. 4) is input to the softmax layer 313.

- the softmax layer 313 outputs the probability of the label identifying the site at each position (pixel) by applying the softmax function to the input values from the deconvolution layer connected to the input side.

- the first learning model 310 may output, from the softmax layer 313, the probability indicating whether or not each pixel corresponds to loose connective tissue with respect to the input of the surgical field image.

- a calculation result by the first learning model 310 is output to the control unit 201 .

- An image (recognition image) showing the recognition result of the loose connective tissue portion is obtained by extracting pixels whose label probability output from the softmax layer 313 is equal to or greater than a threshold value (for example, 60% or greater).
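
The softmax and thresholding steps can be sketched as follows. The example assumes two labels per pixel (index 0 for background, index 1 for loose connective tissue) and uses the 60% threshold given as an example above; the function names and the sample scores are hypothetical.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in scores]
    total = sum(exps)
    return [e / total for e in exps]


def recognition_mask(score_map, target_label, threshold=0.6):
    """True where the target label's probability meets the threshold."""
    return [[softmax(pixel)[target_label] >= threshold for pixel in row]
            for row in score_map]


# 2x2 image, each pixel holding [background, loose connective tissue] scores.
score_map = [
    [[2.0, 0.0], [0.0, 2.0]],
    [[0.0, 0.1], [0.0, 3.0]],
]
mask = recognition_mask(score_map, target_label=1)
```

The pixel with scores `[0.0, 0.1]` has a probability of roughly 0.52 for the target label, so it stays below the 60% threshold and is not extracted.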

- the first calculation unit 205 may draw the recognition image of the loose connective tissue portion in the built-in memory (VRAM) and output it to the display device 130 through the output unit 207, so that the recognition result of the first learning model 310 is displayed on the display device 130.

- the recognition image is an image of the same size as the operative field image, and is generated as an image in which pixels recognized as loose connective tissue are assigned specific colors.

- the color assigned to the pixels of the loose connective tissue is preferably a color that does not exist inside the human body so that it can be distinguished from organs, blood vessels, and the like.

- the color that does not exist inside the human body is, for example, a cool (bluish) color such as blue or light blue.

- information indicating the degree of transparency is added to each pixel constituting the recognition image, and a non-transparent value is set for pixels recognized as loose connective tissue, and a transparent value is set for other pixels.
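
A recognition image with per-pixel transparency can be sketched as an RGBA image that is blended over the operative field image. The names below, the fully opaque alpha value of 255, and the specific blue color are assumptions for illustration; the embodiment only states that recognized pixels receive a non-transparent value and all other pixels a transparent value.

```python
BLUE = (0, 0, 255)  # assumed display color for loose connective tissue


def make_recognition_image(mask, color=BLUE, opaque_alpha=255):
    """Build an RGBA image of the same size as `mask`."""
    return [[(*color, opaque_alpha) if hit else (0, 0, 0, 0) for hit in row]
            for row in mask]


def blend_pixel(base_rgb, overlay_rgba):
    """Alpha-blend one overlay pixel onto one base pixel."""
    r, g, b, a = overlay_rgba
    w = a / 255
    return tuple(round(o * w + c * (1 - w))
                 for o, c in zip((r, g, b), base_rgb))


def superimpose(field_image, recognition_image):
    """Overlay the recognition image on the operative field image."""
    return [[blend_pixel(p, o) for p, o in zip(frow, rrow)]
            for frow, rrow in zip(field_image, recognition_image)]


mask = [[True, False]]
field = [[(200, 100, 100), (200, 100, 100)]]
shown = superimpose(field, make_recognition_image(mask))
```

Pixels outside the recognized region have alpha 0, so the underlying operative field image shows through unchanged.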

- an image of 224 pixels × 224 pixels is used as the input image to the first learning model 310, but the size of the input image is not limited to this and can be set appropriately according to the size of the operative field image obtained from the laparoscope 11 and the like. Also, the input image to the first learning model 310 does not have to be the entire operative field image obtained from the laparoscope 11, and may be a partial image generated by cutting out the region of interest of the operative field image. Since a region of interest that includes a treatment target is often located near the center of the operative field image, a partial image cut out from the vicinity of the center of the operative field image may be used, for example. By reducing the size of the image input to the first learning model 310, it is possible to improve the recognition accuracy while increasing the processing speed.
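
Cutting out a region of interest near the center of the operative field image can be sketched as a simple center crop. The function name `center_crop` and the sample image are hypothetical; how the region of interest is actually chosen is not specified here.

```python
def center_crop(image, crop_h, crop_w):
    """Return the central crop_h x crop_w part of a row-major image."""
    h, w = len(image), len(image[0])
    if crop_h > h or crop_w > w:
        raise ValueError("crop size exceeds image size")
    top = (h - crop_h) // 2
    left = (w - crop_w) // 2
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


# 4x4 image whose pixel values encode their own position (r * 4 + c).
frame = [[r * 4 + c for c in range(4)] for r in range(4)]
roi = center_crop(frame, 2, 2)
```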

- FIG. 5 is a schematic diagram showing a configuration example of the second learning model 320.

- the second learning model 320 includes an encoder 321, a decoder 322, and a softmax layer 323, and is configured to output information about neural tissue portions contained in the surgical field image in response to the input of the surgical field image.

- the configurations of the encoder 321, the decoder 322, and the softmax layer 323 included in the second learning model 320 are the same as those of the first learning model 310, so detailed description thereof will be omitted.

- the calculation by the second learning model 320 is executed in the second calculation unit 206.

- the second computation unit 206 performs computation according to the definition information of the second learning model 320 including learned parameters.

- the second learning model 320 according to Embodiment 1 may output, from the softmax layer 323, the probability indicating whether or not each pixel corresponds to nerve tissue with respect to the input of the surgical field image.

- a calculation result by the second learning model 320 is output to the control unit 201 .

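- an image (recognition image) showing the recognition result of the nerve tissue portion is obtained by extracting pixels whose label probability output from the softmax layer 323 is equal to or greater than a threshold value (for example, 60% or greater).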

- the second calculation unit 206 may draw the recognition image of the nerve tissue portion in the built-in memory (VRAM) and output it to the display device 130 through the output unit 207, so that the recognition result of the second learning model 320 is displayed on the display device 130.

- the structure of the recognition image showing nerve tissue is similar to that of loose connective tissue, but the color assigned to the pixels of nerve tissue is a color that distinguishes it from loose connective tissue (for example, a greenish or yellowish color).

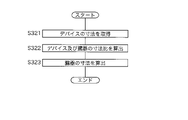

- FIG. 6 is a flow chart showing the procedure of processing executed by the information processing apparatus 200 according to the first embodiment.

- the control unit 201 of the information processing apparatus 200 reads out the recognition processing program PG1 and the display processing program PG2 from the storage unit 202 and executes them, thereby performing processing according to the following procedure.

- the operative field image obtained by imaging the operative field with the imaging device 11B of the laparoscope 11 is output to the CCU 110 through the universal cord 11D as needed.

- the control unit 201 of the information processing apparatus 200 acquires, from the input unit 204, the surgical field image in frame units output from the CCU 110 (step S101).

- the control unit 201 executes the following processing each time a surgical field image is acquired in units of frames.

- the control unit 201 sends the surgical field image in units of frames acquired through the input unit 204 to the first calculation unit 205 and the second calculation unit 206, and gives the first calculation unit 205 and the second calculation unit 206 an instruction to start calculation (step S102).

- the first calculation unit 205 executes calculation by the first learning model 310 (step S103). That is, the first calculation unit 205 executes computation by the encoder 311, which generates a feature map from the input surgical field image and sequentially down-samples it, the decoder 312, which sequentially up-samples the feature map input from the encoder 311, and the softmax layer 313, which identifies each pixel of the feature map finally obtained from the decoder 312.

- the first calculation unit 205 outputs the calculation result of the learning model 310 to the control unit 201 (step S104).

- the second calculation unit 206 executes calculation by the second learning model 320 (step S105). That is, the second calculation unit 206 executes computation by the encoder 321, which generates a feature map from the input surgical field image and sequentially down-samples it, the decoder 322, which sequentially up-samples the feature map input from the encoder 321, and the softmax layer 323, which identifies each pixel of the feature map finally obtained from the decoder 322.

- the second calculation unit 206 outputs the calculation result of the learning model 320 to the control unit 201 (step S106).

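- for convenience, the flowchart shows a procedure in which the calculation by the second calculation unit 206 is executed after the calculation by the first calculation unit 205, but the calculation by the first calculation unit 205 is preferably performed concurrently with the calculation by the second calculation unit 206.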
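
Running the two calculations concurrently for each frame can be sketched in software as below. This is only an analogy: the embodiment uses two hardware calculation units, whereas the sketch uses a thread pool, and the functions `run_first_model` and `run_second_model` are hypothetical stand-ins that do not perform real inference.

```python
from concurrent.futures import ThreadPoolExecutor


def run_first_model(frame):
    """Hypothetical stand-in for the calculation by learning model 310."""
    return {"model": 310, "pixels": len(frame)}


def run_second_model(frame):
    """Hypothetical stand-in for the calculation by learning model 320."""
    return {"model": 320, "pixels": len(frame)}


def process_frame(frame):
    """Submit both calculations for the same frame and wait for both."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        first = pool.submit(run_first_model, frame)
        second = pool.submit(run_second_model, frame)
        # Both results are needed before an integrated result is derived.
        return first.result(), second.result()


result_310, result_320 = process_frame([0] * 4)
```

In CPython, threads do not run CPU-bound Python code in parallel, so a real implementation would more likely dispatch the two models to separate processors or accelerators, as the separate calculation units suggest.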

- the control unit 201 derives an integrated recognition result for the operative field image based on the computation result of the first learning model 310 and the computation result of the second learning model 320. Specifically, the control unit 201 executes the following processes.

- the control unit 201 refers to the calculation result of the first learning model 310 and executes the process of recognizing the loose connective tissue (step S107).

- the control unit 201 can recognize the loose connective tissue included in the surgical field image by extracting pixels whose label probability output from the softmax layer 313 of the first learning model 310 is equal to or higher than a threshold value (for example, 60% or higher).

- the control unit 201 refers to the calculation result of the second learning model 320 and executes the neural tissue recognition process (step S108).

- the control unit 201 can recognize the nerve tissue included in the operative field image by extracting pixels whose label probability output from the softmax layer 323 of the second learning model 320 is equal to or higher than a threshold value (for example, 60% or higher).

- the control unit 201 determines whether or not the recognition result of the loose connective tissue overlaps with the recognition result of the neural tissue (step S109). In this step, it is checked whether a specific structure contained in the operative field image is recognized as loose connective tissue on the one hand and nerve tissue on the other hand. Specifically, when one pixel in the surgical field image is recognized as loose connective tissue on one side and nerve tissue on the other side, the control unit 201 determines that the recognition results overlap. Alternatively, a region in the operating field image recognized as loose connective tissue and a region in the operating field image recognized as nerve tissue may be compared to determine overlap of recognition results.

- for example, if the area ratio of the overlap between the two is equal to or greater than a predetermined ratio (for example, 40% or more), it may be determined that the recognition results overlap, and if the area ratio is less than the predetermined ratio, it may be determined that the recognition results do not overlap.
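
The region-based overlap determination of step S109 can be sketched as follows, using the 40% ratio given as an example. The text does not say which area the overlap is divided by; this sketch assumes the smaller of the two recognized regions, and the function names are hypothetical.

```python
def area(mask):
    """Number of recognized pixels in a binary mask."""
    return sum(1 for row in mask for v in row if v)


def overlap_ratio(mask_a, mask_b):
    """Overlap area divided by the smaller region's area (assumed choice)."""
    both = sum(1 for row_a, row_b in zip(mask_a, mask_b)
               for a, b in zip(row_a, row_b) if a and b)
    smaller = min(area(mask_a), area(mask_b))
    return both / smaller if smaller else 0.0


def results_overlap(mask_a, mask_b, min_ratio=0.4):
    """True when the recognition results are judged to overlap."""
    return overlap_ratio(mask_a, mask_b) >= min_ratio


connective = [[1, 1, 0],
              [1, 1, 0]]
nerve_far = [[0, 1, 1],
             [0, 0, 1]]
nerve_near = [[0, 1, 1],
              [0, 1, 1]]
warn_far = results_overlap(connective, nerve_far)    # 1 of 3 pixels shared
warn_near = results_overlap(connective, nerve_near)  # 2 of 4 pixels shared
```

When `results_overlap` returns `True`, the flow would proceed to the warning output; otherwise both recognition results are displayed.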

- When it is determined that the recognition results do not overlap (S109: NO), the control unit 201 outputs the loose connective tissue recognition result and the nerve tissue recognition result (step S110). Specifically, the control unit 201 instructs the first calculation unit 205 to superimpose the recognition image of the loose connective tissue on the surgical field image, and instructs the second calculation unit 206 to superimpose the recognition image of the nerve tissue on the surgical field image. The first calculation unit 205 and the second calculation unit 206 draw the recognition images of the loose connective tissue and the nerve tissue in the built-in VRAM according to the instructions from the control unit 201 and output them to the display device 130 through the output unit 207, so that the recognition images of loose connective tissue and nerve tissue are superimposed on the surgical field image and displayed.

- FIG. 7 is a schematic diagram showing a display example of recognition results in the first embodiment.

- for convenience of drawing, the portion recognized as the loose connective tissue 502 is shown as a hatched region, and the portion recognized as the nerve tissue 503 is shown as another hatched region.

- pixels recognized as loose connective tissue 502 are displayed in, for example, a bluish color, and pixels recognized as nerve tissue 503 are displayed in, for example, a greenish color.

- the operator can distinguish between the loose connective tissue 502 and the nerve tissue 503, and can recognize, for example, the presence of the nerve tissue 503 that should not be damaged.

- the energy treatment device 12 can be used to ablate the loose connective tissue 502 .

- both the loose connective tissue recognized based on the calculation result of the first calculation unit 205 and the nerve tissue recognized based on the calculation result of the second calculation unit 206 are displayed, but a configuration in which only one of them is displayed may be used.

- the tissue to be displayed may be selected by the operator, or may be switched by the operator's operation.

- In step S109 of the flowchart shown in FIG. 6, when it is determined that the recognition results overlap (S109: YES), the control unit 201 outputs warning information indicating that a similar structure has been recognized (step S111).

- FIG. 8 is a schematic diagram showing a display example of warning information according to the first embodiment.

- FIG. 8 shows a display example of warning information when the structure 504 included in the operative field image is recognized as loose connective tissue based on the calculation result of the first calculation unit 205, and as nerve tissue based on the calculation result of the second calculation unit 206.

- the first learning model 310 used by the first calculation unit 205 is trained to output information on loose connective tissue in accordance with the input of the operative field image, and the second learning model 320 used by the second calculation unit 206 is trained to output information on nerve tissue in accordance with the input of the operative field image. If recognition processing is performed independently using the two learning models 310 and 320, the recognition results may overlap.

- the control unit 201 can prompt the operator for confirmation by displaying warning information as shown in FIG. 8.

- the control unit 201 is configured to display text information indicating a warning superimposed on the surgical field image.

- text information indicating the warning may be displayed on another display device (not shown).

- the control unit 201 may display a graphic indicating a warning, or issue a warning by outputting voice or sound instead of displaying text information indicating a warning.

- when the recognition results overlap, the control unit 201 of the information processing apparatus 200 may select the recognition result with the higher degree of certainty and output information based on the selected recognition result.

- the certainty factor of the recognition result by the first learning model 310 is calculated based on the probability output from the softmax layer 313 .

- the control unit 201 may calculate the degree of certainty by averaging probability values for each pixel recognized as loose connective tissue. The same applies to the certainty factor of the recognition result by the second learning model 320. For example, if the structure 504 shown in FIG. 8 is recognized as loose connective tissue by the first learning model 310 with a higher certainty than that with which the second learning model 320 recognizes the same structure 504 as nerve tissue, the control unit 201 may present to the operator the recognition result that the structure 504 is loose connective tissue.
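
Computing a certainty factor by averaging per-pixel probabilities, and then selecting the recognition result with the higher certainty, can be sketched as follows. The function names, the tie-breaking rule in favor of the first model, and the probability values are assumptions for illustration.

```python
def certainty(prob_map, mask):
    """Mean probability over the pixels recognized as the target tissue."""
    values = [p for prob_row, mask_row in zip(prob_map, mask)
              for p, hit in zip(prob_row, mask_row) if hit]
    return sum(values) / len(values) if values else 0.0


def select_result(prob_a, mask_a, prob_b, mask_b,
                  labels=("loose connective tissue", "nerve tissue")):
    """Return (label, certainty) of the more certain recognition result."""
    c_a = certainty(prob_a, mask_a)
    c_b = certainty(prob_b, mask_b)
    # Ties go to the first model; this rule is an assumption of the sketch.
    return (labels[0], c_a) if c_a >= c_b else (labels[1], c_b)


# Illustrative per-pixel probabilities for the same structure.
prob_310 = [[0.875, 0.625]]
mask_310 = [[True, True]]
prob_320 = [[0.625, 0.5]]
mask_320 = [[True, False]]
chosen = select_result(prob_310, mask_310, prob_320, mask_320)
```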

- FIG. 9 is a schematic diagram showing a display example of a recognition result according to certainty. For example, as a result of recognizing the structure 505 included in the operative field image by the first learning model 310, it is recognized as a loose connective tissue with a certainty of 95%, and the same structure 505 is recognized by the second learning model 320.

- the control unit 201 colors this structure 505 in, for example, a blue-based color (black in the drawing) and presents it to the operator.

- the control unit 201 colors this structure 506 with, for example, a greenish color (white on the drawing) and presents it to the operator.

- the control unit 201 colors this structure 507 with, for example, an intermediate color between blue and green colors (gray on the drawing), and displays it to the operator.

- a configuration that changes saturation, transparency, etc. may be employed.

- (Modification 1-4) When a structure recognized as loose connective tissue and a structure recognized as nerve tissue exist in the operative field image, the control unit 201 of the information processing apparatus 200 may derive information such as the appropriate positional relationship between the two structures, distances to feature points, distances to other structures, and areas of other structures.

- in Embodiment 1, an integrated recognition result of the organs included in the surgical field can be acquired based on the calculation result of the first learning model 310 and the calculation result of the second learning model 320.

- the information processing apparatus 200 issues a warning or stops outputting information when recognition results for similar structures overlap, and can thereby avoid presenting possibly erroneous recognition results to the operator.

- the information processing apparatus 200 includes a first learning model 310 for recognizing organs and a third learning model 330 for recognizing events.

- the organ recognized by the first learning model 310 is not limited to loose connective tissue, and may be a preset organ.

- Events recognized by the third learning model 330 are events such as bleeding, injury, and pulsation. Since other configurations of the information processing apparatus 200 are the same as those of the first embodiment, description thereof will be omitted.

- FIG. 10 is a schematic diagram showing a configuration example of the third learning model 330.

- the third learning model 330 includes an encoder 331, a decoder 332, and a softmax layer 333, and is configured to output information about events occurring in the operative field image in response to the input of the operative field image.

- Information related to events output by the third learning model 330 is information related to events such as bleeding, damage (burns caused by the energy treatment device 12), and pulsation.

- the third learning model 330 is not limited to a learning model for image segmentation or object detection, and may be a learning model based on CNN (Convolutional Neural Networks), RNN (Recurrent Neural Networks), LSTM (Long Short Term Memory), GAN (Generative Adversarial Network), or the like.

- the calculation by the third learning model 330 is executed in the second calculation unit 206.

- the second calculation unit 206 performs calculation according to the definition information of the third learning model 330 including learned parameters.

- the third learning model 330 may output from the softmax layer 333 the probability indicating whether or not an event has occurred with respect to the input of the surgical field image.

- a calculation result by the third learning model 330 is output to the control unit 201 .

- the control unit 201 determines that an event has occurred in the surgical field image when the probability of the label output from the softmax layer 333 is greater than or equal to a threshold value (for example, 60% or greater).

- the control unit 201 may determine whether an event has occurred in units of pixels of the surgical field image, or may determine whether an event has occurred in units of the surgical field image.

- FIG. 11 is a flow chart showing the procedure of processing executed by the information processing apparatus 200 according to the second embodiment.

- the information processing apparatus 200 executes steps S201 to S206 in the same manner as in the first embodiment each time a surgical field image is acquired.

- the control unit 201 of the information processing apparatus 200 acquires the calculation result of the first learning model 310 and the calculation result of the third learning model 330, and derives an integrated recognition result for the surgical field image based on these calculation results. Specifically, the control unit 201 executes the following processes.

- the control unit 201 refers to the calculation result of the first learning model 310 and executes organ recognition processing (step S207).

- the control unit 201 can recognize the organ included in the operative field image by extracting pixels whose label probability output from the softmax layer 313 of the first learning model 310 is equal to or greater than a threshold value (for example, 60% or greater).

- the control unit 201 refers to the calculation result of the third learning model 330 and executes event recognition processing (step S208).

- the control unit 201 can determine whether an event has occurred for each pixel by extracting pixels whose label probability output from the softmax layer 333 of the third learning model 330 is equal to or greater than a threshold (for example, 60% or greater).

- the control unit 201 determines whether an event has occurred in the organ recognized in step S207 (step S209).

- the control unit 201 compares the pixels recognized as the organ in step S207 with the pixels recognized as the occurrence of the event in step S208, and if they match, determines that the event occurred in the recognized organ.
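
The pixel comparison of step S209 and the text output of step S210 can be sketched as below. The sketch treats any pixel shared by the organ mask and the event mask as a match, which is an assumption; the function names, the message format, and the sample masks are hypothetical.

```python
def event_pixels_in_organ(organ_mask, event_mask):
    """Coordinates recognized both as the organ and as the event."""
    return [(r, c)
            for r, (organ_row, event_row) in enumerate(zip(organ_mask, event_mask))
            for c, (o, e) in enumerate(zip(organ_row, event_row))
            if o and e]


def describe_event(organ_name, event_name, organ_mask, event_mask):
    """Text to present when the event occurred in the organ, else None."""
    hits = event_pixels_in_organ(organ_mask, event_mask)
    if not hits:
        return None  # S209: NO, nothing to associate
    return f"{event_name} detected in {organ_name} ({len(hits)} pixel(s))"


organ = [[1, 1, 0],
         [0, 1, 0]]
bleeding = [[0, 1, 1],
            [0, 0, 0]]
message = describe_event("stomach", "bleeding", organ, bleeding)
no_event = describe_event("stomach", "bleeding", organ, [[0, 0, 0], [0, 0, 0]])
```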

- When it is determined that no event has occurred in the recognized organ (S209: NO), the control unit 201 terminates the processing according to this flowchart. Note that the control unit 201 may individually display the recognition result of the organ without associating it with the event, or may display the recognition result of the event individually without associating it with the organ.

- When it is determined that an event has occurred in the recognized organ (S209: YES), the control unit 201 outputs information on the organ in which the event occurred (step S210). For example, the control unit 201 may display the name of the organ in which the event occurred as text information superimposed on the operative field image. Instead of superimposed display on the operative field image, the name of the organ in which the event occurred may be displayed outside the operative field image, or may be output by sound or voice.

- FIG. 12 is a schematic diagram showing a display example of recognition results in the second embodiment.

- the display example of FIG. 12 shows an example in which character information to the effect that bleeding has occurred from the surface of the stomach is displayed superimposed on the surgical field image.

- the control unit 201 may display information about an organ damaged by the energy treatment device 12 or the like, and may display information about an organ in which pulsation is occurring, without being limited to the bleeding organ.

- the organ may be displayed blinking in synchronization with the pulsation. Synchronization does not necessarily have to perfectly match the pulsation, and may be a periodic display close to the pulsation.

- When the control unit 201 recognizes bleeding from a specific organ (for example, an important blood vessel), it may acquire, as needed, data on the patient's vital signs (pulse, blood pressure, respiration, body temperature) constantly detected by a sensor (not shown) and display the acquired data on the display device 130. Further, when the control unit 201 recognizes bleeding from a specific organ (for example, an important blood vessel), the control unit 201 may notify an external device through the communication unit 208.

- the notification destination external device may be a terminal carried by an anesthesiologist, or may be an in-hospital server or the like that supervises and manages events in the hospital.

- When the control unit 201 recognizes bleeding from an organ, it may change the threshold used in organ recognition or stop organ recognition. Furthermore, because organ recognition may become difficult once bleeding occurs, the control unit 201 may switch to a learning model (not shown) improved for bleeding and continue organ recognition using that learning model.

- the control unit 201 may automatically estimate the amount of bleeding or the bleeding rate and suggest blood transfusion.

- the control unit 201 can estimate the bleeding amount by calculating the bleeding area on the image, and can estimate the bleeding rate by calculating the time change of the bleeding area.
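
Estimating the bleeding amount from the bleeding area, and the bleeding rate from its change over time, can be sketched as follows. Areas are counted in pixels, and the frame interval and sample areas are made-up values; converting pixels to a physical volume would need calibration that is not described here.

```python
def bleeding_area(mask):
    """Bleeding area on the image, counted in pixels."""
    return sum(1 for row in mask for v in row if v)


def bleeding_rate(areas, frame_interval_s):
    """Average change in bleeding area per second across the frames."""
    if len(areas) < 2:
        return 0.0
    elapsed = (len(areas) - 1) * frame_interval_s
    return (areas[-1] - areas[0]) / elapsed


frame_mask = [[0, 1, 1],
              [0, 1, 0]]
current_area = bleeding_area(frame_mask)

# Bleeding areas from three consecutive frames taken 0.5 s apart (assumed).
areas = [10, 14, 22]
rate = bleeding_rate(areas, frame_interval_s=0.5)
```

A positive rate indicates a growing bleeding area; a threshold on this value could be one possible trigger for a transfusion suggestion, although no such threshold is specified.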

- in Embodiment 2, an integrated recognition result obtained by combining the first learning model 310 for organ recognition and the third learning model 330 for event recognition can be presented to the operator.

- Embodiment 3 will describe a configuration for deriving an integrated recognition result by combining organ recognition and device recognition.

- the information processing apparatus 200 includes a first learning model 310 for recognizing organs and a fourth learning model 340 for recognizing devices.

- the organ recognized by the first learning model 310 is not limited to loose connective tissue, and may be a preset organ.

- Devices recognized by the fourth learning model 340 are surgical tools used during surgery, such as the energy treatment tool 12 and the forceps 13 . Since other configurations of the information processing apparatus 200 are the same as those of the first embodiment, description thereof will be omitted.

- FIG. 13 is a schematic diagram showing a configuration example of the fourth learning model 340.

- the fourth learning model 340 includes an encoder 341, a decoder 342, and a softmax layer 343, and is configured to output information about devices included in the operative field image in response to the input of the operative field image.

- the device-related information output by the fourth learning model 340 is information related to surgical tools used during surgery, such as the energy treatment tool 12 and the forceps 13 .

- the fourth learning model 340 is not limited to a learning model for image segmentation or object detection, and may be a learning model based on CNN, RNN, LSTM, GAN, or the like.

- a plurality of fourth learning models 340 may be prepared according to the type of device.

- the calculation by the fourth learning model 340 is executed in the second calculation unit 206.

- the second calculation unit 206 performs calculation according to the definition information of the fourth learning model 340 including learned parameters.

- the fourth learning model 340 may output from the softmax layer 343 probabilities indicating whether or not each pixel corresponds to a specific device with respect to the input of the surgical field image.

- a calculation result by the fourth learning model 340 is output to the control unit 201 .

- the control unit 201 determines that a specific device included in the operative field image has been recognized.

- FIG. 14 is a flow chart showing the procedure of processing executed by the information processing apparatus 200 according to the third embodiment.

- the information processing apparatus 200 executes steps S301 to S306 in the same manner as in the first embodiment each time a surgical field image is acquired.

- the control unit 201 of the information processing apparatus 200 acquires the calculation result of the first learning model 310 and the calculation result of the fourth learning model 340, and derives an integrated recognition result for the surgical field image based on these calculation results. Specifically, the control unit 201 executes the following processes. It is assumed that the storage unit 202 stores information on the organs and devices recognized in the most recent (for example, one frame previous) surgical field image.

- the control unit 201 refers to the calculation result of the first learning model 310 and executes organ recognition processing (step S307).

- the control unit 201 can recognize the organ included in the operative field image by extracting pixels whose label probability output from the softmax layer 313 of the first learning model 310 is equal to or greater than a threshold value (for example, 60% or greater).

- the control unit 201 refers to the calculation result of the fourth learning model 340 and executes device recognition processing (step S308).

- the control unit 201 can recognize the devices included in the operative field image by extracting pixels whose label probability output from the softmax layer 343 of the fourth learning model 340 is equal to or greater than a threshold value (for example, 60% or greater).
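
The thresholding used in steps S307 and S308 can be sketched as below. This is a minimal sketch under stated assumptions: the softmax output is taken to be a two-dimensional map of per-pixel probabilities for one label, and the names `extract_pixels` and `prob_map` are hypothetical. The default of 0.6 mirrors the "60% or greater" example in the text.

```python
from typing import List, Sequence, Tuple


def extract_pixels(
    prob_map: Sequence[Sequence[float]], threshold: float = 0.6
) -> List[Tuple[int, int]]:
    """Return the (row, column) coordinates of every pixel whose label
    probability is equal to or greater than the threshold."""
    return [
        (y, x)
        for y, row in enumerate(prob_map)
        for x, p in enumerate(row)
        if p >= threshold
    ]
```

The same function would apply to both the organ map and the device map, since each step only differs in which model produced the probabilities.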

- the control unit 201 determines whether the device is moving over the organ recognized in step S307 (step S309).

- the control unit 201 reads from the storage unit 202 the information on the organ and device recognized from the most recent operative field image, and compares it with the information on the newly recognized organ and device. By detecting a change in relative position, the control unit 201 can determine whether the device is moving over the organ.
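
One way to picture the comparison in step S309 is the sketch below. It is an assumption-laden illustration rather than the procedure of the embodiment: the device position is summarized by the centroid of its pixels, "on the organ" is taken to mean that the device mask and the organ mask share at least one pixel (possible here because the two masks come from separate models), and `min_shift` is a hypothetical tuning parameter in pixels.

```python
from math import hypot
from typing import Optional, Set, Tuple

Pixel = Tuple[int, int]  # (row, column)


def centroid(pixels: Set[Pixel]) -> Optional[Tuple[float, float]]:
    """Mean (row, column) position of a pixel set, or None if it is empty."""
    if not pixels:
        return None
    n = len(pixels)
    return (sum(y for y, _ in pixels) / n, sum(x for _, x in pixels) / n)


def device_moving_on_organ(
    prev_device: Set[Pixel],
    curr_device: Set[Pixel],
    curr_organ: Set[Pixel],
    min_shift: float = 2.0,
) -> bool:
    """True when the device overlaps the organ and its centroid has moved
    by at least min_shift pixels since the previous frame."""
    prev_c, curr_c = centroid(prev_device), centroid(curr_device)
    if prev_c is None or curr_c is None:
        return False  # device missing in one of the frames
    on_organ = bool(curr_device & curr_organ)
    shift = hypot(curr_c[0] - prev_c[0], curr_c[1] - prev_c[1])
    return on_organ and shift >= min_shift
```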

- When determining that the device is not moving on the organ (S309: NO), the control unit 201 terminates the processing according to this flowchart. Note that the control unit 201 may display the recognition result of the organ regardless of the device, or may display the recognition result of the device regardless of the organ.

- If it is determined that the device is moving on the organ (S309: YES), the control unit 201 generates display data in which the display mode of the organ is changed, and outputs the data to the display device 130 through the output unit 207 (step S310).

- the control unit 201 may change the display mode by changing the display color, saturation, and transparency of the organ, or may change the display mode by blinking the organ.

- the display device 130 displays the organ whose display mode has been changed.

- While the device is moving on the organ, the information processing apparatus 200 may misrecognize the organ. Displaying the organ in a changed display mode therefore prompts the operator to make a judgment.

- the control unit 201 may instruct the first calculation unit 205 to change the display mode, and the display mode may be changed by the processing of the first calculation unit 205 .

- the organ recognition process may be stopped.

- the control unit 201 may continuously perform the device recognition process and restart the organ recognition process at the timing when it is determined that the device has stopped.

- the control unit 201 of the information processing apparatus 200 may stop outputting the recognition result of the organ.

- the calculations by the first learning model 310 and the fourth learning model 340, the organ recognition processing based on the calculation results of the first learning model 310, and the device recognition processing based on the calculation results of the fourth learning model 340 continue to be executed, while the display of the recognition image indicating the recognition result of the organ is stopped.

- the control unit 201 may restart the output process when it determines that the device has stopped.

- the control unit 201 of the information processing apparatus 200 may derive information on the organ being processed by the device based on the organ recognition processing and the device recognition processing.

- the control unit 201 can derive information on the organ being processed by the device by comparing the pixels recognized as the organ in step S307 and the pixels recognized as the device in step S308.
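
The pixel comparison between steps S307 and S308 can be illustrated as follows. This is a sketch under assumptions the text does not state: several organs are recognized at once and held as named pixel sets, and the organ being processed is taken to be the one with the largest overlap with the device pixels. The names `organ_under_device` and `organ_masks` are hypothetical.

```python
from typing import Dict, Optional, Set, Tuple

Pixel = Tuple[int, int]  # (row, column)


def organ_under_device(
    organ_masks: Dict[str, Set[Pixel]], device: Set[Pixel]
) -> Optional[str]:
    """Return the name of the organ that shares the most pixels with the
    device, or None when the device overlaps no recognized organ."""
    best_name: Optional[str] = None
    best_overlap = 0
    for name, pixels in organ_masks.items():
        overlap = len(pixels & device)
        if overlap > best_overlap:
            best_name, best_overlap = name, overlap
    return best_name
```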

- the control unit 201 may output the information of the organ being processed by the device and display it on the display device 130, for example.

- the control unit 201 of the information processing apparatus 200 may acquire dimension information of the recognized device and derive dimension information of the recognized organ based on the acquired dimension information of the device.

- FIG. 15 is a flowchart showing the procedure for deriving dimension information.

- the control unit 201 acquires dimension information of the recognized device (step S321).

- the device dimension information may be stored in advance in the storage unit 202 of the information processing apparatus 200, or may be stored in an external device. In the former case, the control unit 201 may acquire dimension information by reading desired information from the storage unit 202, and in the latter case, the control unit 201 may acquire dimension information by accessing an external device. Note that the dimension information does not have to be the dimensions of the entire device, and may be the dimensions of a portion of the device (for example, the cutting edge portion).

- the control unit 201 calculates the ratio between the on-image size of the device portion indicated by the acquired dimension information and the on-image size of the recognized organ (step S322).

- the control unit 201 calculates the dimensions of the organ based on the device dimension information acquired in step S321 and the dimension ratio calculated in step S322 (step S323).
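
Steps S321 to S323 amount to a proportional scaling, sketched below. The sketch assumes that the device portion and the organ lie at roughly the same distance from the camera, so that one scale factor applies to both; the text does not state this, and the function and parameter names are hypothetical.

```python
def dimension_ratio(device_px: float, organ_px: float) -> float:
    """Step S322: ratio of the organ's on-image size to the on-image size
    of the device portion whose real dimension is known."""
    if device_px <= 0:
        raise ValueError("device_px must be positive")
    return organ_px / device_px


def organ_dimension(device_mm: float, device_px: float, organ_px: float) -> float:
    """Step S323: scale the known device dimension (from step S321) by the
    on-image ratio to estimate the organ dimension."""
    return device_mm * dimension_ratio(device_px, organ_px)
```

For example, a 10 mm cutting edge that spans 50 pixels next to an organ that spans 200 pixels gives an estimate of 40 mm.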

- the control unit 201 may output the calculated dimension information of the organ and display it on the display device 130, for example.

- the control unit 201 of the information processing apparatus 200 may derive information on the organ damaged by the device based on the organ recognition processing and the device recognition processing. For example, when the control unit 201 recognizes a device on an organ and determines that a part of the organ has changed color, it determines that damage to the organ caused by the device has been detected. A device on an organ is recognized by a procedure similar to that shown in the flow chart of FIG. Discoloration of an organ is recognized by changes in pixel values over time. When the control unit 201 detects organ damage caused by the device, the control unit 201 outputs information to that effect and causes the display device 130 to display the information, for example.
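
The discoloration check can be pictured with the sketch below. It is an illustration only: the text says merely that discoloration is recognized from changes in pixel values over time, so the per-channel comparison, the `min_delta` and `min_fraction` thresholds, and all function names here are assumptions.

```python
from typing import Sequence, Set, Tuple

Pixel = Tuple[int, int]          # (row, column)
RGB = Tuple[int, int, int]
Frame = Sequence[Sequence[RGB]]  # frame[row][column] -> (R, G, B)


def discolored_fraction(
    prev_frame: Frame, curr_frame: Frame, organ: Set[Pixel], min_delta: int = 40
) -> float:
    """Fraction of organ pixels whose value changed by at least min_delta
    in any colour channel between two frames."""
    if not organ:
        return 0.0
    changed = 0
    for y, x in organ:
        before, after = prev_frame[y][x], curr_frame[y][x]
        if max(abs(a - b) for a, b in zip(before, after)) >= min_delta:
            changed += 1
    return changed / len(organ)


def damage_suspected(
    device_on_organ: bool, fraction: float, min_fraction: float = 0.1
) -> bool:
    """Flag possible damage only when a device is on the organ and a large
    enough part of the organ has changed colour."""
    return device_on_organ and fraction >= min_fraction
```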

- the control unit 201 of the information processing apparatus 200 may derive information indicating whether or not the device used for the organ is appropriate based on the organ recognition processing and the device recognition processing.

- the storage unit 202 of the information processing apparatus 200 has a definition table that defines the relationship between the types of organs and devices that can be used (or devices that should not be used) for each organ. This definition table defines, for example, that sharp forceps should not be used for the intestinal tract.

- the control unit 201 recognizes the organ and device from the operative field image, and refers to the definition table described above to determine whether the device used for the organ is appropriate.

- If the device is determined to be inappropriate (for example, sharp forceps are being used for the intestinal tract), the control unit 201 outputs information indicating that an incorrect device is being used, and causes the display device 130 to display it, for example. The control unit 201 may also issue a warning by voice or warning sound.
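
The lookup against the definition table can be sketched as a simple mapping. The table layout is an assumption: here it lists, per organ, the devices that should not be used, and its only entry is the sharp-forceps example given in the text. The names `PROHIBITED_DEVICES` and `is_device_appropriate` are hypothetical.

```python
from typing import Dict, Optional, Set

# Assumed layout: organ type -> devices that should not be used on it.
PROHIBITED_DEVICES: Dict[str, Set[str]] = {
    "intestinal tract": {"sharp forceps"},
}


def is_device_appropriate(
    organ: str, device: str, table: Optional[Dict[str, Set[str]]] = None
) -> bool:
    """Return False when the table marks the device as not to be used on
    the organ; organs missing from the table impose no restriction."""
    if table is None:
        table = PROHIBITED_DEVICES
    return device not in table.get(organ, set())
```

A table of permitted devices per organ would work the same way with the membership test inverted.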

- the control unit 201 of the information processing apparatus 200 may output the operation support information of the device and display it on the display device 130, for example.

- the device operation support information is a device usage manual, and may be stored in the storage unit 202 of the information processing apparatus 200 or an external device.

- an integrated recognition result obtained by combining the learning model 310 for organ recognition and the learning model 340 for device recognition can be presented to the operator.

- Embodiment 4 will describe a configuration for deriving an integrated recognition result by combining organ recognition and scene recognition.

- the information processing apparatus 200 includes a first learning model 310 for recognizing organs and a fifth learning model 350 for recognizing scenes.

- the organ recognized by the first learning model 310 is not limited to loose connective tissue, and may be a preset organ.

- a model may be prepared for each type of organ so as to correspond to various organs.

- a scene recognized by the fifth learning model 350 is, for example, a characteristic scene of surgery. Since other configurations of the information processing apparatus 200 are the same as those of the first embodiment, description thereof will be omitted.

- FIG. 16 is a schematic diagram showing a configuration example of the fifth learning model 350.

- the fifth learning model 350 includes an input layer 351, an intermediate layer 352, and an output layer 353, and is configured to output information about the scene indicated by the surgical field image in response to the surgical field image input.

- the information about the scene output by the fifth learning model 350 includes, for example, the probability that the scene contains specific organs such as blood vessels, important nerves, the ureter, or the spleen, and the probability that the scene shows a characteristic procedure such as blood vessel dissection or lymph node dissection.

- a fifth learning model 350 is constructed, for example, by CNN.

- the fifth learning model 350 may be a learning model built by RNN, LSTM, GAN, etc., and may be a learning model for image segmentation or object detection.

- the calculation by the fifth learning model 350 is executed in the second calculation unit 206.

- the second computation unit 206 performs computation according to the definition information of the fifth learning model 350 including learned parameters.

- the fifth learning model 350 outputs, from each node forming the output layer 353, the probability of corresponding to a specific scene with respect to the input of the surgical field image.

- a calculation result by the fifth learning model 350 is output to the control unit 201 .

- the control unit 201 performs scene recognition by selecting the scene with the highest probability among the probabilities of the scenes output from the output layer 353 .
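
Selecting the most probable scene is an argmax over the output-layer probabilities, sketched below. The scene labels and the dictionary representation are assumptions made for illustration; the text only states that each node of the output layer 353 yields the probability of one scene.

```python
from typing import Dict


def recognize_scene(scene_probs: Dict[str, float]) -> str:
    """Return the scene label with the highest probability."""
    if not scene_probs:
        raise ValueError("scene_probs must not be empty")
    return max(scene_probs, key=lambda name: scene_probs[name])
```

For instance, given probabilities of 0.7 for "blood vessel dissection", 0.2 for "lymph node dissection", and 0.1 for "other", the function returns "blood vessel dissection".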

- FIG. 17 is a flow chart showing the procedure of processing executed by the information processing apparatus 200 according to the fourth embodiment.

- the control unit 201 of the information processing apparatus 200 acquires, from the input unit 204, the surgical field image in units of frames output from the CCU 110 (step S401).

- the control unit 201 executes the following processing each time a surgical field image is acquired in units of frames.

- the control unit 201 sends the surgical field image in units of frames acquired through the input unit 204 to the first calculation unit 205 and the second calculation unit 206, and gives a calculation start instruction to the second calculation unit 206 (step S402).