WO2022201835A1 - Imaging element and imaging device - Google Patents

Imaging element and imaging device

- Publication number

- WO2022201835A1 (PCT/JP2022/002785)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- pixel

- photoelectric conversion

- semiconductor substrate

- imaging device

- charges

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Classifications

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N25/00—Circuitry of solid-state image sensors [SSIS]; Control thereof

- H04N25/70—SSIS architectures; Circuits associated therewith

- H04N25/76—Addressed sensors, e.g. MOS or CMOS sensors

- H04N25/77—Pixel circuitry, e.g. memories, A/D converters, pixel amplifiers, shared circuits or shared components

- H04N25/771—Pixel circuitry, e.g. memories, A/D converters, pixel amplifiers, shared circuits or shared components comprising storage means other than floating diffusion

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N25/00—Circuitry of solid-state image sensors [SSIS]; Control thereof

- H04N25/10—Circuitry of solid-state image sensors [SSIS]; Control thereof for transforming different wavelengths into image signals

- H04N25/11—Arrangement of colour filter arrays [CFA]; Filter mosaics

- H04N25/13—Arrangement of colour filter arrays [CFA]; Filter mosaics characterised by the spectral characteristics of the filter elements

- H04N25/134—Arrangement of colour filter arrays [CFA]; Filter mosaics characterised by the spectral characteristics of the filter elements based on three different wavelength filter elements

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N25/00—Circuitry of solid-state image sensors [SSIS]; Control thereof

- H04N25/40—Extracting pixel data from image sensors by controlling scanning circuits, e.g. by modifying the number of pixels sampled or to be sampled

- H04N25/46—Extracting pixel data from image sensors by controlling scanning circuits, e.g. by modifying the number of pixels sampled or to be sampled by combining or binning pixels

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N25/00—Circuitry of solid-state image sensors [SSIS]; Control thereof

- H04N25/50—Control of the SSIS exposure

- H04N25/57—Control of the dynamic range

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N25/00—Circuitry of solid-state image sensors [SSIS]; Control thereof

- H04N25/60—Noise processing, e.g. detecting, correcting, reducing or removing noise

- H04N25/62—Detection or reduction of noise due to excess charges produced by the exposure, e.g. smear, blooming, ghost image, crosstalk or leakage between pixels

- H04N25/621—Detection or reduction of noise due to excess charges produced by the exposure, e.g. smear, blooming, ghost image, crosstalk or leakage between pixels for the control of blooming

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N25/00—Circuitry of solid-state image sensors [SSIS]; Control thereof

- H04N25/70—SSIS architectures; Circuits associated therewith

- H04N25/703—SSIS architectures incorporating pixels for producing signals other than image signals

- H04N25/704—Pixels specially adapted for focusing, e.g. phase difference pixel sets

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N25/00—Circuitry of solid-state image sensors [SSIS]; Control thereof

- H04N25/70—SSIS architectures; Circuits associated therewith

- H04N25/79—Arrangements of circuitry being divided between different or multiple substrates, chips or circuit boards, e.g. stacked image sensors

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10F—INORGANIC SEMICONDUCTOR DEVICES SENSITIVE TO INFRARED RADIATION, LIGHT, ELECTROMAGNETIC RADIATION OF SHORTER WAVELENGTH OR CORPUSCULAR RADIATION

- H10F39/00—Integrated devices, or assemblies of multiple devices, comprising at least one element covered by group H10F30/00, e.g. radiation detectors comprising photodiode arrays

- H10F39/011—Manufacture or treatment of image sensors covered by group H10F39/12

- H10F39/014—Manufacture or treatment of image sensors covered by group H10F39/12 of CMOS image sensors

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10F—INORGANIC SEMICONDUCTOR DEVICES SENSITIVE TO INFRARED RADIATION, LIGHT, ELECTROMAGNETIC RADIATION OF SHORTER WAVELENGTH OR CORPUSCULAR RADIATION

- H10F39/00—Integrated devices, or assemblies of multiple devices, comprising at least one element covered by group H10F30/00, e.g. radiation detectors comprising photodiode arrays

- H10F39/10—Integrated devices

- H10F39/12—Image sensors

- H10F39/18—Complementary metal-oxide-semiconductor [CMOS] image sensors; Photodiode array image sensors

- H10F39/186—Complementary metal-oxide-semiconductor [CMOS] image sensors; Photodiode array image sensors having arrangements for blooming suppression

- H10F39/1865—Overflow drain structures

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10F—INORGANIC SEMICONDUCTOR DEVICES SENSITIVE TO INFRARED RADIATION, LIGHT, ELECTROMAGNETIC RADIATION OF SHORTER WAVELENGTH OR CORPUSCULAR RADIATION

- H10F39/00—Integrated devices, or assemblies of multiple devices, comprising at least one element covered by group H10F30/00, e.g. radiation detectors comprising photodiode arrays

- H10F39/80—Constructional details of image sensors

- H10F39/802—Geometry or disposition of elements in pixels, e.g. address-lines or gate electrodes

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10F—INORGANIC SEMICONDUCTOR DEVICES SENSITIVE TO INFRARED RADIATION, LIGHT, ELECTROMAGNETIC RADIATION OF SHORTER WAVELENGTH OR CORPUSCULAR RADIATION

- H10F39/00—Integrated devices, or assemblies of multiple devices, comprising at least one element covered by group H10F30/00, e.g. radiation detectors comprising photodiode arrays

- H10F39/80—Constructional details of image sensors

- H10F39/803—Pixels having integrated switching, control, storage or amplification elements

- H10F39/8037—Pixels having integrated switching, control, storage or amplification elements the integrated elements comprising a transistor

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10F—INORGANIC SEMICONDUCTOR DEVICES SENSITIVE TO INFRARED RADIATION, LIGHT, ELECTROMAGNETIC RADIATION OF SHORTER WAVELENGTH OR CORPUSCULAR RADIATION

- H10F39/00—Integrated devices, or assemblies of multiple devices, comprising at least one element covered by group H10F30/00, e.g. radiation detectors comprising photodiode arrays

- H10F39/80—Constructional details of image sensors

- H10F39/807—Pixel isolation structures

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10F—INORGANIC SEMICONDUCTOR DEVICES SENSITIVE TO INFRARED RADIATION, LIGHT, ELECTROMAGNETIC RADIATION OF SHORTER WAVELENGTH OR CORPUSCULAR RADIATION

- H10F39/00—Integrated devices, or assemblies of multiple devices, comprising at least one element covered by group H10F30/00, e.g. radiation detectors comprising photodiode arrays

- H10F39/80—Constructional details of image sensors

- H10F39/811—Interconnections

-

- H—ELECTRICITY

- H10—SEMICONDUCTOR DEVICES; ELECTRIC SOLID-STATE DEVICES NOT OTHERWISE PROVIDED FOR

- H10F—INORGANIC SEMICONDUCTOR DEVICES SENSITIVE TO INFRARED RADIATION, LIGHT, ELECTROMAGNETIC RADIATION OF SHORTER WAVELENGTH OR CORPUSCULAR RADIATION

- H10F39/00—Integrated devices, or assemblies of multiple devices, comprising at least one element covered by group H10F30/00, e.g. radiation detectors comprising photodiode arrays

- H10F39/80—Constructional details of image sensors

- H10F39/813—Electronic components shared by multiple pixels, e.g. one amplifier shared by two pixels

Definitions

- The present disclosure relates to an imaging element and an imaging device.

- An imaging device is used in which pixels each having two photoelectric conversion units are arranged in a two-dimensional matrix.

- This imaging device can pupil-divide incident light from a subject and generate both a phase difference signal for detecting an image plane phase difference and an image signal based on the incident light from the subject.

- When a phase difference signal is generated, two image signals based on the charges generated by photoelectric conversion in the respective two photoelectric conversion units are output as the phase difference signal.

- When an image signal is generated, the charges generated by photoelectric conversion in the two photoelectric conversion units are summed in the pixel, and the image signal generated from the summed charges is output.

- The two photoelectric conversion units can be separated, for example, by a semiconductor region having a relatively high impurity concentration. A semiconductor region having a relatively high impurity concentration is also arranged between the pixels, so that the pixels are separated from each other.

- The conventional technology described above has the problem that the saturation charge amount of each photoelectric conversion unit is reduced when the phase difference signal is generated. Because the potential barrier of the semiconductor region between the two photoelectric conversion units is lowered, the saturation charge amount of each photoelectric conversion unit is lowered. As a result, the detectable phase difference range narrows when the phase difference signal is detected, which reduces convenience.

- The present disclosure therefore proposes an imaging element and an imaging device that prevent the saturation charge amount of the photoelectric conversion units from decreasing when the phase difference signal is generated.

- The present disclosure has been made to solve the above-described problems. One aspect thereof is an imaging element including: a pixel having a plurality of photoelectric conversion units that are formed on a semiconductor substrate and perform photoelectric conversion of incident light from a subject to generate charges; an intra-pixel separation section arranged in the pixel to separate the plurality of photoelectric conversion units; an overflow path arranged in the intra-pixel separation section to mutually transfer charges overflowing between the plurality of photoelectric conversion units; an overflow gate arranged in the pixel to adjust the potential of the overflow path; a pixel separation section arranged at the boundary of the pixel; a charge holding unit that holds the generated charges; a plurality of charge transfer units, one arranged for each of the plurality of photoelectric conversion units, that transfer the generated charges to the charge holding unit to be held; and an image signal generation unit that generates an image signal based on the held charges.

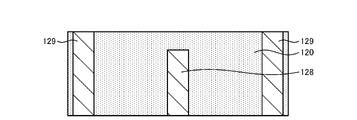

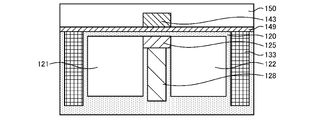

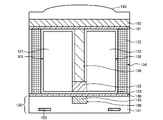

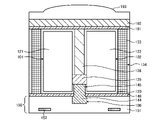

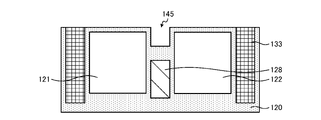

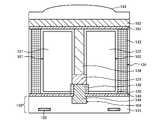

- FIG. 2 is a diagram showing a configuration example of a pixel according to an embodiment of the present disclosure

- FIG. 3 is a diagram showing a configuration example of a pixel according to the first embodiment of the present disclosure

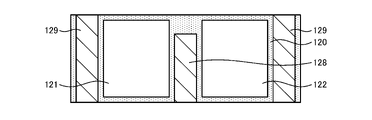

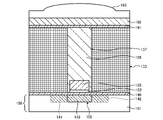

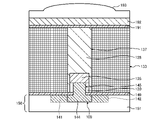

- FIG. 2 is a cross-sectional view showing a configuration example of a pixel according to the first embodiment of the present disclosure

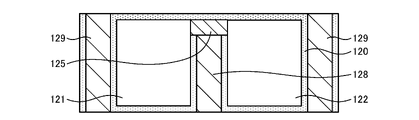

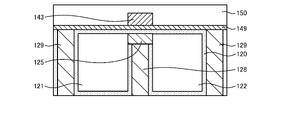

- FIG. 2 is a cross-sectional view showing a configuration example of a pixel according to the first embodiment of the present disclosure

- FIG. 4 is a diagram illustrating an example of generation of an image signal and a phase difference signal according to an embodiment of the present disclosure

- FIG. 4 is a diagram illustrating the effect of an overflow gate according to an embodiment of the present disclosure

- FIG. 4 is a diagram illustrating the effect of an overflow gate according to an embodiment of the present disclosure

- FIG. 4 is a diagram illustrating the effect of an overflow gate according to an embodiment of the present disclosure

- FIG. 4 is a diagram showing an example of a method for manufacturing an imaging device according to the first embodiment of the present disclosure

- FIG. 4 is a diagram showing an example of a method for manufacturing an imaging device according to the first embodiment of the present disclosure

- FIG. 4 is a diagram showing an example of a method for manufacturing an imaging device according to the first embodiment of the present disclosure

- FIG. 4 is a diagram showing an example of a method for manufacturing an imaging device according to the first embodiment of the present disclosure

- FIG. 4 is a diagram showing an example of a method for manufacturing an imaging device according to the first embodiment of the present disclosure

- FIG. 4 is a diagram showing an example of a method for manufacturing an imaging device according to the first embodiment of the present disclosure

- FIG. 4 is a diagram showing an example of gate voltage measurement of an overflow gate according to the first embodiment of the present disclosure

- FIG. 7 is a diagram showing a configuration example of a pixel according to the second embodiment of the present disclosure

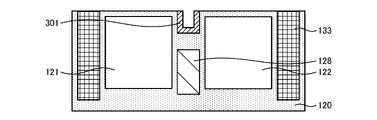

- FIG. 7 is a cross-sectional view showing a configuration example of a pixel according to the second embodiment of the present disclosure

- FIG. 7 is a cross-sectional view showing a configuration example of a pixel according to the second embodiment of the present disclosure

- FIG. 10 is a diagram illustrating an example of a method for manufacturing an imaging device according to the second embodiment of the present disclosure

- FIG. 10 is a diagram illustrating an example of a method for manufacturing an imaging device according to the second embodiment of the present disclosure

- FIG. 10 is a diagram illustrating an example of a method for manufacturing an imaging device according to the second embodiment of the present disclosure

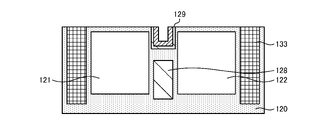

- FIG. 10 is a diagram illustrating a configuration example of a pixel according to a third embodiment of the present disclosure

- FIG. 7 is a cross-sectional view showing a configuration example of a pixel according to a third embodiment of the present disclosure

- FIG. 7 is a cross-sectional view showing a configuration example of a pixel according to a third embodiment of the present disclosure

- FIG. 10 is a diagram showing an example of a method for manufacturing an imaging device according to the third embodiment of the present disclosure

- FIG. 10 is a diagram showing an example of a method for manufacturing an imaging device according to the third embodiment of the present disclosure

- FIG. 10 is a diagram showing an example of a method for manufacturing an imaging device according to the third embodiment of the present disclosure

- FIG. 10 is a diagram showing an example of a method for manufacturing an imaging device according to the third embodiment of the present disclosure

- FIG. 10 is

- FIG. 10 is a diagram showing an example of a method for manufacturing an imaging device according to the third embodiment of the present disclosure

- FIG. 10 is a diagram showing an example of a method for manufacturing an imaging device according to the third embodiment of the present disclosure

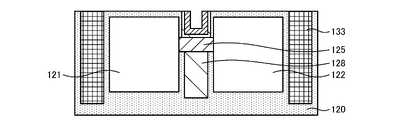

- FIG. 11 is a diagram showing a configuration example of a pixel according to a fourth embodiment of the present disclosure

- FIG. 11 is a cross-sectional view showing a configuration example of a pixel according to a fourth embodiment of the present disclosure

- FIG. 11 is a cross-sectional view showing a configuration example of a pixel according to a fourth embodiment of the present disclosure

- FIG. 11 is a diagram showing another configuration example of a pixel according to the fourth embodiment of the present disclosure

- FIG. 11 is a cross-sectional view showing another configuration example of a pixel according to the fourth embodiment of the present disclosure;

- FIG. 11 is a cross-sectional view showing another configuration example of a pixel according to the fourth embodiment of the present disclosure;

- FIG. 11 is a diagram illustrating a configuration example of a pixel according to a fifth embodiment of the present disclosure;

- FIG. 11 is a diagram illustrating a configuration example of a pixel according to a sixth embodiment of the present disclosure;

- FIG. 11 is a cross-sectional view showing a configuration example of a pixel according to a sixth embodiment of the present disclosure;

- FIG. 11 is a cross-sectional view showing a configuration example of a pixel according to a sixth embodiment of the present disclosure;

- FIG. 11 is a cross-sectional view showing a configuration example of a pixel according to a sixth embodiment of the present disclosure;

- FIG. 11 is a cross-sectional view showing a configuration example of a pixel according

- FIG. 12 is a diagram showing an example of a method for manufacturing an imaging device according to the sixth embodiment of the present disclosure

- FIG. 12 is a diagram showing an example of a method for manufacturing an imaging device according to the sixth embodiment of the present disclosure

- FIG. 12 is a diagram showing an example of a method for manufacturing an imaging device according to the sixth embodiment of the present disclosure

- FIG. 12 is a diagram showing an example of a method for manufacturing an imaging device according to the sixth embodiment of the present disclosure

- FIG. 12 is a diagram showing an example of a method for manufacturing an imaging device according to the sixth embodiment of the present disclosure

- FIG. 13 is a diagram illustrating a configuration example of a pixel according to a seventh embodiment of the present disclosure

- FIG. 21 is a cross-sectional view showing a configuration example of a pixel according to a seventh embodiment of the present disclosure

- FIG. 21 is a cross-sectional view showing a configuration example of a pixel according to a seventh embodiment of the present disclosure

- FIG. 21 is a diagram illustrating a configuration example of an imaging element according to an eighth embodiment of the present disclosure

- FIG. 21 is a diagram illustrating a configuration example of an imaging element according to an eighth embodiment of the present disclosure

- FIG. 21 is a diagram illustrating a configuration example of an imaging element according to an eighth embodiment of the present disclosure

- FIG. 1 is a block diagram showing a configuration example of an imaging device mounted on an electronic device;

- FIG. 1 is a block diagram showing a configuration example of an imaging device mounted on an electronic device;

- FIG. 1 is a block diagram showing a configuration example of an imaging device mounted on an electronic device;

- FIG. 1 is a block diagram showing an example of a schematic configuration of a vehicle control system

- FIG. 4 is an explanatory diagram showing an example of installation positions of an outside information detection unit and an imaging unit

- FIG. 1 is a diagram showing an example of a schematic configuration of an endoscopic surgery system

- FIG. 3 is a block diagram showing an example of functional configurations of a camera head and a CCU;

- FIG. 1 is a diagram showing a configuration example of an imaging device according to an embodiment of the present disclosure.

- The imaging device 1 of this example has a pixel region (so-called imaging region) 3, in which pixels 100 each including a plurality of photoelectric conversion elements are regularly arranged two-dimensionally on a semiconductor substrate 11 such as a silicon substrate, and a peripheral circuit section.

- the pixel 100 has, for example, a photodiode serving as a photoelectric conversion element and a plurality of pixel transistors (so-called MOS transistors).

- a plurality of pixel transistors can be composed of, for example, three transistors, a transfer transistor, a reset transistor, and an amplification transistor.

- Pixel 100 can also be a shared pixel structure.

- This shared pixel structure is composed of a plurality of photodiodes, a plurality of transfer transistors, one shared floating diffusion region, and one each of the other shared pixel transistors.

- the peripheral circuit section includes a vertical drive circuit 4, a column signal processing circuit 5, a horizontal drive circuit 6, an output circuit 7, a control circuit 8, and the like.

- The control circuit 8 receives an input clock and data instructing the operation mode and the like, and outputs data such as internal information of the imaging device. That is, based on the vertical synchronizing signal, the horizontal synchronizing signal, and the master clock, the control circuit 8 generates the clock signals and control signals that serve as references for the operation of the vertical drive circuit 4, the column signal processing circuit 5, the horizontal drive circuit 6, and the like. These signals are input to the vertical drive circuit 4, the column signal processing circuit 5, the horizontal drive circuit 6, and the like.

- The vertical drive circuit 4 is composed of, for example, a shift register. It selects a pixel drive wiring 13, supplies a pulse for driving the pixels to the selected pixel drive wiring, and drives the pixels row by row. That is, the vertical drive circuit 4 selectively scans the pixels 100 in the pixel region 3 in the vertical direction, one row at a time. A pixel signal based on the signal charge generated in each pixel is supplied to the column signal processing circuit 5.

- The column signal processing circuit 5 is arranged, for example, for each column of the pixels 100, and performs signal processing such as noise removal on the signals output from one row of pixels 100, column by column. That is, the column signal processing circuit 5 performs signal processing such as CDS (Correlated Double Sampling) for removing fixed pattern noise unique to the pixels 100, signal amplification, and AD conversion.

- A horizontal selection switch (not shown) is connected between the horizontal signal line 10 and the output stage of the column signal processing circuit 5. Note that the column signal processing circuit 5 is an example of the processing circuit described in the claims.

- The horizontal drive circuit 6 is composed of, for example, a shift register. By sequentially outputting horizontal scanning pulses, it selects each of the column signal processing circuits 5 in turn and causes each of them to output its pixel signal to the horizontal signal line 10.

- the output circuit 7 performs signal processing on the signals sequentially supplied from each of the column signal processing circuits 5 through the horizontal signal line 10 and outputs the processed signals. For example, only buffering may be performed, or black level adjustment, column variation correction, and various digital signal processing may be performed.

- the input/output terminal 12 exchanges signals with the outside.
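The CDS processing mentioned for the column signal processing circuit 5 can be illustrated with a minimal sketch. It assumes that each column delivers two samples per row, a reset level and a signal level, and that the pixel output falls as charge accumulates. The function name and all sample values are hypothetical and are not taken from the disclosure.

```python
def correlated_double_sampling(reset_levels, signal_levels):
    """Return the per-column difference between reset and signal samples.

    Subtracting the two samples cancels any offset common to both,
    which is how CDS suppresses pixel-specific fixed pattern noise.
    """
    if len(reset_levels) != len(signal_levels):
        raise ValueError("each column needs one reset and one signal sample")
    return [reset - signal for reset, signal in zip(reset_levels, signal_levels)]


# Hypothetical samples for one row of three columns (arbitrary units).
# Every column sees the same light level; only the column offsets differ.
offsets = [5, -3, 12]
reset_row = [1000 + off for off in offsets]
signal_row = [700 + off for off in offsets]
cds_row = correlated_double_sampling(reset_row, signal_row)
```

In this sketch the three columns yield the same value after subtraction even though their raw samples differ, because the offset appears in both samples of a column.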

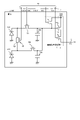

- FIG. 2 is a diagram illustrating a configuration example of a pixel according to an embodiment of the present disclosure

- the figure is a circuit diagram showing a configuration example of the pixel 100 according to the embodiment of the present disclosure.

- The pixel 100 includes photoelectric conversion units 101 and 102, charge holding units 103 and 104, charge transfer units 105 and 106, a reset transistor 111, an amplification transistor 112, a selection transistor 113, an overflow path 108, and an overflow gate 109.

- The circuit formed by the reset transistor 111, the amplification transistor 112, and the selection transistor 113 constitutes the image signal generation unit 110.

- The charge transfer units 105 and 106, the reset transistor 111, the amplification transistor 112, and the selection transistor 113 can be configured by n-channel MOS transistors.

- The pixel drive wiring 13 and the vertical signal line 9 are wired to the pixel 100.

- The pixel drive wiring 13 shown in the figure includes a signal line GATE, a signal line TRG1, a signal line TRG2, a signal line RST, and a signal line SEL.

- The vertical signal line 9 includes a signal line VO.

- A power supply line Vdd is also wired to the pixel 100.

- The power supply line Vdd is a wiring that supplies power to the pixel 100.

- The anode of the photoelectric conversion unit 101 is grounded, and its cathode is connected to the source of the charge transfer unit 105.

- The anode of the photoelectric conversion unit 102 is grounded, and its cathode is connected to the source of the charge transfer unit 106.

- The drain of the charge transfer unit 105 is connected to the source of the reset transistor 111, the gate of the amplification transistor 112, the drain of the charge transfer unit 106, one end of the charge holding unit 103, and one end of the charge holding unit 104.

- The other end of the charge holding unit 103 and the other end of the charge holding unit 104 are grounded.

- The drain of the reset transistor 111 is connected to the power supply line Vdd.

- The amplification transistor 112 has its drain connected to the power supply line Vdd and its source connected to the drain of the selection transistor 113.

- The source of the selection transistor 113 is connected to the signal line VO.

- The overflow path 108 is connected between the cathode of the photoelectric conversion unit 101 and the cathode of the photoelectric conversion unit 102.

- The signal line GATE, the signal line TRG1, and the signal line TRG2 are connected to the overflow gate 109, the gate of the charge transfer unit 105, and the gate of the charge transfer unit 106, respectively.

- The signal line RST and the signal line SEL are connected to the gate of the reset transistor 111 and the gate of the selection transistor 113, respectively.
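The connections listed above can be written down as a small netlist, which makes it easy to check what shares a node. The sketch below is only an illustration: the element keys (`PD101`, `TG105`, `CH103`, and so on), the terminal names, and the internal node names `N1`, `N2`, `N3`, and `FD` are hypothetical labels, while the connectivity itself follows the description.

```python
# Each element maps its terminals to the node (net) they are wired to.
PIXEL_100 = {
    "PD101": {"anode": "GND", "cathode": "N1"},
    "PD102": {"anode": "GND", "cathode": "N2"},
    "TG105": {"gate": "TRG1", "source": "N1", "drain": "FD"},
    "TG106": {"gate": "TRG2", "source": "N2", "drain": "FD"},
    "CH103": {"a": "FD", "b": "GND"},
    "CH104": {"a": "FD", "b": "GND"},
    "RST111": {"gate": "RST", "source": "FD", "drain": "VDD"},
    "AMP112": {"gate": "FD", "source": "N3", "drain": "VDD"},
    "SEL113": {"gate": "SEL", "source": "VO", "drain": "N3"},
    "OFP108": {"a": "N1", "b": "N2"},  # overflow path between the two cathodes
    "OFG109": {"gate": "GATE"},        # overflow gate driven by signal line GATE
}


def terminals_on(net, netlist=PIXEL_100):
    """List every 'element.terminal' attached to the given net, sorted by name."""
    return sorted(
        f"{element}.{terminal}"
        for element, terminals in netlist.items()
        for terminal, node in terminals.items()
        if node == net
    )


fd_terminals = terminals_on("FD")
vdd_terminals = terminals_on("VDD")
```

Querying the `FD` node returns the drains of both charge transfer units, one end of each charge holding unit, the source of the reset transistor, and the gate of the amplification transistor, which matches the shared node described in the text.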

- the photoelectric conversion units 101 and 102 perform photoelectric conversion of incident light.

- the photoelectric conversion units 101 and 102 can be composed of photodiodes formed on a semiconductor substrate 120, which will be described later.

- the photoelectric conversion units 101 and 102 perform photoelectric conversion of incident light during an exposure period and hold charges generated by the photoelectric conversion.

- the charge holding units 103 and 104 hold charges generated by the photoelectric conversion units 101 and 102, respectively.

- the charge holding units 103 and 104 in the figure represent an example in which they are connected in parallel.

- The charge holding units 103 and 104 can be configured by floating diffusion regions (FDs), which are semiconductor regions formed in the semiconductor substrate 120.

- the charge transfer units 105 and 106, the reset transistor 111, the amplification transistor 112 and the selection transistor 113 in the figure can be configured by n-channel MOS transistors.

- In an n-channel MOS transistor, the path between drain and source can be made conductive by applying to the gate a voltage exceeding the threshold of the gate-source voltage Vgs.

- a voltage exceeding the threshold of the gate-source voltage Vgs is hereinafter referred to as an on-voltage.

- a control signal including this on-voltage is transmitted by a signal line TRG1 or the like.

- the charge transfer units 105 and 106 transfer charges.

- The charge transfer unit 105 transfers charges generated by photoelectric conversion in the photoelectric conversion unit 101 to the charge holding units 103 and 104.

- The charge transfer unit 106 transfers charges generated by photoelectric conversion in the photoelectric conversion unit 102 to the charge holding units 103 and 104.

- the charge transfer units 105 and 106 transfer charges by establishing conduction between the photoelectric conversion units 101 and 102 and the charge holding units 103 and 104, respectively.

- Control signals for the charge transfer units 105 and 106 are transmitted by signal lines TRG1 and TRG2, respectively.

- The image signal generation unit 110 generates image signals based on the charges held in the charge holding units 103 and 104.

- The image signal generation unit 110 is composed of the reset transistor 111, the amplification transistor 112, and the selection transistor 113.

- The reset transistor 111 resets the charge holding units 103 and 104. This reset can be performed by establishing conduction between the charge holding units 103 and 104 and the power supply line Vdd to discharge the charges in the charge holding units 103 and 104. A control signal for the reset transistor 111 is transmitted through the signal line RST.

- The amplification transistor 112 amplifies the voltage of the charge holding units 103 and 104.

- The gate of the amplification transistor 112 is connected to the charge holding units 103 and 104. Therefore, an image signal having a voltage corresponding to the charges held in the charge holding units 103 and 104 is generated at the source of the amplification transistor 112.

- By turning on the selection transistor 113, the image signal can be output to the signal line VO.

- A control signal for the selection transistor 113 is transmitted by the signal line SEL.
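Because the charge holding units 103 and 104 are shown connected in parallel, their capacitances add, and the voltage change seen at the gate of the amplification transistor 112 follows from V = Q / C. The sketch below works this out under stated assumptions: the charges are taken to be electrons, the capacitance values are hypothetical, and the gain of the amplification transistor is not modelled.

```python
ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs per electron


def fd_voltage_change(num_electrons, c_103, c_104):
    """Magnitude of the voltage change on the shared charge holding node.

    c_103 and c_104 are the capacitances (in farads) of the two charge
    holding units; in parallel they simply add.
    """
    total_capacitance = c_103 + c_104
    return num_electrons * ELEMENTARY_CHARGE / total_capacitance


# Hypothetical example: 1000 electrons on two 1 fF charge holding units.
delta_v = fd_voltage_change(1000, 1.0e-15, 1.0e-15)
```

With these illustrative numbers the change is roughly 80 mV; halving the total capacitance would double it.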

- the overflow path 108 transfers charges overflowing between the photoelectric conversion units 101 and 102 to each other.

- This overflow path 108 can be configured by a semiconductor region arranged between the photoelectric conversion units 101 and 102.

- The overflow gate 109 is an electrode arranged adjacent to the overflow path 108. This overflow gate 109 adjusts the potential barrier of the overflow path 108.
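A toy model can show why the height of this potential barrier matters. The sketch below is an assumption-laden illustration, not the disclosed device behavior: `barrier_capacity` is a hypothetical parameter standing for the amount of charge one photoelectric conversion unit can hold before charge spills across the overflow path 108, the relation between the voltage on the overflow gate 109 and this value is not modelled, and charge that neither unit can hold is simply discarded.

```python
def accumulate(generated_101, generated_102, barrier_capacity):
    """Return the charges held by units 101 and 102 after mutual overflow.

    Each unit keeps at most `barrier_capacity`; the excess is offered to
    the other unit, which accepts it only up to its own remaining headroom.
    """
    held_101 = min(generated_101, barrier_capacity)
    held_102 = min(generated_102, barrier_capacity)
    spill_101 = generated_101 - held_101
    spill_102 = generated_102 - held_102
    held_102 += min(spill_101, barrier_capacity - held_102)
    held_101 += min(spill_102, barrier_capacity - held_101)
    return held_101, held_102


# Hypothetical charge counts: unit 101 is brightly lit, unit 102 is not.
high_barrier = accumulate(1200, 300, barrier_capacity=1000)
low_barrier = accumulate(1200, 300, barrier_capacity=600)
```

In this model a lower barrier clips the brightly lit unit at a smaller value, which corresponds to the reduced saturation charge amount that the disclosure identifies as the problem when the phase difference signal is generated.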

- When a phase difference signal is generated, the charge transfer units 105 and 106 individually transfer the charges generated by the photoelectric conversion units 101 and 102 to the charge holding units 103 and 104. This charge transfer is called individual transfer.

- The image signal generation unit 110 generates phase difference signals based on the charges individually transferred to the charge holding units 103 and 104.

- The mode for generating the phase difference signal is hereinafter referred to as the phase difference signal mode.

- When an image signal is generated, the charge transfer units 105 and 106 transfer the charges generated by the photoelectric conversion units 101 and 102 to the charge holding units 103 and 104 together.

- The charge holding units 103 and 104 then hold the charges generated by the photoelectric conversion units 101 and 102 collectively and simultaneously. This charge transfer is referred to as collective transfer.

- The image signal generation unit 110 generates an image signal based on the collectively held charges. The mode for generating this image signal is called the image signal mode.
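The difference between individual transfer and collective transfer can be sketched as a small behavioral model. Everything below is illustrative: the class and function names are hypothetical, charge is counted in arbitrary integer units, and the exact order of reset, transfer, and readout pulses is an assumption rather than the disclosed timing.

```python
class PixelModel:
    """Two photoelectric conversion units sharing one charge holding node."""

    def __init__(self, charge_101, charge_102):
        # Charge waiting in each unit, keyed by the line that transfers it.
        self.stored = {"TRG1": charge_101, "TRG2": charge_102}
        self.held = 0  # charge on the charge holding units 103 and 104

    def reset(self):
        """Model of the RST pulse: discharge the charge holding units."""
        self.held = 0

    def transfer(self, *lines):
        """Model of TRG pulses: move stored charge to the holding units."""
        for line in lines:
            self.held += self.stored[line]
            self.stored[line] = 0

    def read(self):
        """Model of the SEL pulse: output a value following the held charge."""
        return self.held


def phase_difference_mode(pixel):
    """Individual transfer: read each unit separately."""
    signals = []
    for line in ("TRG1", "TRG2"):
        pixel.reset()
        pixel.transfer(line)
        signals.append(pixel.read())
    return tuple(signals)


def image_mode(pixel):
    """Collective transfer: both units are summed on the holding node."""
    pixel.reset()
    pixel.transfer("TRG1", "TRG2")
    return pixel.read()


phase_pair = phase_difference_mode(PixelModel(400, 250))
image_value = image_mode(PixelModel(400, 250))
```

The phase difference signal mode returns one value per photoelectric conversion unit, while the image signal mode returns their sum, mirroring the two transfer schemes described above.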

- FIG. 3 is a diagram illustrating a configuration example of a pixel according to the first embodiment of the present disclosure

- the figure is a plan view showing a configuration example of the pixel 100 according to the first embodiment of the present disclosure.

- This drawing shows the configuration of the pixel 100 on the semiconductor substrate 120 .

- outline rectangles represent semiconductor regions formed in the semiconductor substrate 120 and gate electrodes arranged on the surface side of the semiconductor substrate 120 .

- Semiconductor regions 121 and 122 forming photoelectric conversion units 101 and 102 are arranged in the pixel 100.

- charge transfer units 105 and 106 are arranged adjacent to the semiconductor regions 121 and 122 .

- gate electrodes 141 and 142 of charge transfer portions 105 and 106 are shown.

- Semiconductor regions 123 and 124 forming the charge holding portions 103 and 104 are arranged adjacent to the charge transfer portions 105 and 106 .

- An intra-pixel separation unit 128 is arranged between the photoelectric conversion units 101 and 102 .

- the intra-pixel separation section 128 separates the photoelectric conversion sections 101 and 102 .

- a pixel separation unit 129 is arranged at the boundary of the pixels 100 .

- the pixel separating portion 129 is configured in a shape surrounding the pixel 100 and separates the adjacent pixels 100 from each other.

- the image signal generation unit 110 is also shown in the figure.

- the figure shows an example in which the image signal generator 110 is arranged in a region other than the pixels 100 .

- the pixels in the figure are connected to the charge holding portions 103 and 104 by signal lines 119 .

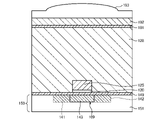

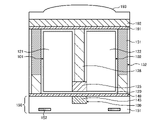

- FIGS. 4A and 4B are cross-sectional views showing configuration examples of pixels according to the first embodiment of the present disclosure.

- FIG. 4A corresponds to a cross-sectional view of the pixel 100 along the line a-a' in FIG. 3.

- FIG. 4B corresponds to a cross-sectional view of the pixel 100 along the line b-b' in FIG. 3.

- a pixel 100 in the figure includes a semiconductor substrate 120 , insulating films 149 and 191 , a wiring region 150 , a color filter 192 and an on-chip lens 193 .

- the semiconductor substrate 120 is a semiconductor substrate on which the diffusion layers of the elements of the pixel 100 are arranged.

- the semiconductor substrate 120 can be made of silicon (Si), for example.

- Elements such as the photoelectric conversion unit 101 can be arranged in a well region formed in the semiconductor substrate 120 .

- the semiconductor substrate 120 in the figure is configured as a p-type well region. By arranging an n-type or p-type semiconductor region in this well region, a diffusion layer of the device can be formed. Photoelectric conversion units 101 and 102 and an overflow path 108 are shown in the figure.

- the photoelectric conversion unit 101 is composed of an n-type semiconductor region 121 .

- a photodiode with a pn junction at the interface between the n-type semiconductor region 121 and the surrounding p-type well region corresponds to the photoelectric conversion unit 101 .

- the photoelectric conversion unit 102 is composed of an n-type semiconductor region 122 .

- Charges generated by photoelectric conversion of the photoelectric conversion units 101 and 102 during the exposure period are accumulated in the n-type semiconductor regions 121 and 122 .

- the accumulated charges are transferred to and held in the charge holding units 103 and 104 by the charge transfer units 105 and 106 after the exposure period has elapsed.

- An intra-pixel separation unit 128 is arranged between the photoelectric conversion units 101 and 102 .

- the in-pixel isolation portion 128 is composed of a semiconductor region having a relatively high p-type impurity concentration, and electrically isolates the n-type semiconductor regions 121 and 122 forming the photoelectric conversion portions 101 and 102 .

- a pixel separating portion 129 is arranged at the boundary of the pixels 100 on the semiconductor substrate 120 .

- the pixel isolation portion 129 is composed of a semiconductor region having a relatively high p-type impurity concentration, and electrically isolates adjacent pixels 100 from each other.

- the insulating film 149 is a film that insulates the surface side of the semiconductor substrate 120 .

- This insulating film 149 can be made of silicon oxide (SiO 2 ) or silicon nitride (SiN).

- Gate electrodes 141 , 142 and 143 are arranged on the surface side of the semiconductor substrate 120 . Gate electrodes 141 and 142 form the gates of charge transfer sections 105 and 106, respectively. Gate electrode 143 forms overflow gate 109 . This overflow gate 109 adjusts the potential barrier of the overflow path 108 . By applying a voltage to the overflow gate 109, the height of the potential barrier of the overflow path 108 can be adjusted.

- the gate electrodes 141, 142 and 143 can be made of polycrystalline silicon.

- the insulating film 149 under the gate electrodes 141, 142 and 143 forms a gate insulating film.

- the wiring region 150 is arranged on the surface side of the semiconductor substrate 120 and is a region where the wiring of the pixels 100 is arranged.

- the wiring region 150 includes wiring 152 and an insulating layer 151 .

- the wiring 152 transmits signals and the like of the elements of the pixel 100 .

- This wiring 152 can be made of a conductor such as copper (Cu) or tungsten (W).

- the insulating layer 151 insulates the wiring 152 and the like.

- This insulating layer 151 can be made of, for example, SiO 2 .

- the insulating film 191 insulates the back side of the semiconductor substrate 120 .

- This insulating film 191 can be made of, for example, SiO 2 .

- the color filter 192 is an optical filter that transmits light of a predetermined wavelength among incident light.

- a color filter that transmits red light, green light and blue light can be used.

- the on-chip lens 193 is a lens that collects incident light. This on-chip lens 193 is configured in a hemispherical shape and converges incident light onto the photoelectric conversion units 101 and 102 .

- the amount of charge that can be stored in the n-type semiconductor regions 121 and 122 is limited. This storable charge amount is called a saturated charge amount Qs. This Qs changes depending on the size of the n-type semiconductor region 121 and the like, and the charges generated exceeding Qs overflow from the n-type semiconductor region 121 and the like. If the overflowed charges flow into the photoelectric conversion units 101 of other pixels 100 and the like to generate image signals, errors will occur in the image signals. Therefore, the charge that overflows from the photoelectric conversion unit 101 or the like is discharged to the charge holding unit 103 or the like of the pixel 100 itself.

- the charges discharged to the charge holding unit 103 and the like during the exposure period are discharged by resetting after the exposure period has elapsed.

- photoelectric conversion units 101 and 102 are arranged in the pixel 100 in the figure.

- Charge overflow also occurs when the charge in either of the photoelectric conversion units 101 and 102 exceeds Qs.

- This overflowing charge flows into the charge holding portion 103 and the like. Since this charge is discharged by resetting after the exposure period has elapsed, an error occurs in the image signal in the image signal mode, in which the image signal is generated by summing the charges generated by the photoelectric conversion units 101 and 102. This is because charges overflowing from one of the photoelectric conversion units 101 and 102 are not reflected in the combined image signal, and the linearity of the image signal voltage with respect to the amount of light incident on the pixel 100 is degraded.

- an overflow path 108 is arranged between the photoelectric conversion units 101 and 102 .

- This overflow path 108 is composed of an n-type semiconductor region 125 .

- the overflow path 108 is configured with a potential barrier lower than the potential barrier of the charge transfer section 105 and the like, and can transfer charges overflowing from the photoelectric conversion sections 101 and 102 to each other. For example, when the charge of the photoelectric conversion unit 101 reaches Qs while the charge of the photoelectric conversion unit 102 has not reached Qs, charge moves from the n-type semiconductor region 121 of the photoelectric conversion unit 101 through the overflow path 108 to the n-type semiconductor region 122 of the photoelectric conversion unit 102.

- By arranging the overflow path 108, it is possible to reduce the error of the image signal when the charges of the photoelectric conversion units 101 and 102 are added.

- the potential barrier of the overflow path 108 is lowered, so the amount of charge that can be accumulated in each of the photoelectric conversion units 101 and 102 is lower than Qs.

- the maximum value of the signal level of the phase difference signal is lowered. For this reason, the detectable phase difference range of the object is narrowed. Therefore, overflow gate 109 is placed adjacent to overflow path 108 . By applying a voltage to the overflow gate 109, it is possible to adjust the potential of the overflow path 108 and adjust the potential barrier.
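The role of the overflow path in the summed image signal can be illustrated with a minimal sketch. It is a simplified charge-bucket model, not the device physics: each photoelectric conversion unit is assumed to hold at most `capacity` charge units, and the function name `expose` and its parameters are hypothetical names introduced only for this illustration.

```python
def expose(gen1, gen2, capacity, overflow_path=True):
    """Toy model of one exposure of a two-photodiode pixel.

    gen1, gen2: charge generated in photoelectric conversion units 101 and 102.
    capacity:   assumed per-unit storable charge (a stand-in for Qs).
    Returns (q1, q2, lost), where `lost` is charge that spilled to the
    charge holding units and is discarded by the reset.
    """
    q1 = min(gen1, capacity)
    q2 = min(gen2, capacity)
    spill1 = gen1 - q1
    spill2 = gen2 - q2
    if overflow_path:
        # Charge spilling from one unit fills the remaining room in the other.
        moved_to_2 = min(spill1, capacity - q2)
        moved_to_1 = min(spill2, capacity - q1)
        q2 += moved_to_2
        q1 += moved_to_1
        lost = (spill1 - moved_to_2) + (spill2 - moved_to_1)
    else:
        # Without an overflow path every spilled charge is lost.
        lost = spill1 + spill2
    return q1, q2, lost


with_path = expose(150, 40, capacity=100, overflow_path=True)
without_path = expose(150, 40, capacity=100, overflow_path=False)
```

In this model the summed charge `q1 + q2` tracks the generated charge as long as the two units together have room, which corresponds to the improved linearity described above. The model does not capture the reduction of the per-unit capacity caused by lowering the barrier.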

- FIG. 5 is a diagram illustrating an example of generation of an image signal and a phase difference signal according to an embodiment of the present disclosure. The figure is a timing chart showing an example of generation of an image signal and a phase difference signal in the pixel 100.

- "RST", "SEL", "TRG1", "TRG2" and "GATE" in the figure represent signals of the signal line RST, signal line SEL, signal line TRG1, signal line TRG2 and signal line GATE, respectively. These represent the waveforms of the binarized control signals, and the portion of the value "1" represents the region in which the ON signal is transmitted. The dashed line represents the level of 0 V. "FD" represents the potential of the charge holding portions 103 and 104. "VO" represents the output of the signal line VO. The first half of the figure represents the procedure for the phase difference signal mode, and the latter half represents the procedure for the image signal mode.

- the value "0" is applied to the signal line RST, signal line SEL, signal line TRG1, and signal line TRG2.

- a negative gate voltage is applied to the signal line GATE.

- an ON signal is applied to the signal lines RST, TRG1 and TRG2.

- the reset transistor 111 and the charge transfer units 105 and 106 become conductive, and the photoelectric conversion units 101 and 102 and the charge holding units 103 and 104 are reset.

- a positive gate voltage is applied to the signal line GATE. Thereby, the potential barrier of the overflow path 108 can be lowered.

- an ON signal is applied to the signal line SEL.

- the application of the ON signal to the signal line SEL continues until the phase difference signals of all the pixels 100 in the pixel region 3 are output.

- an ON signal is applied to the signal line TRG1.

- the charge transfer unit 105 becomes conductive, and the charges accumulated in the photoelectric conversion unit 101 are transferred to the charge holding units 103 and 104 . This lowers the potential of the FD.

- the application of the ON signal to the signal line TRG1 is stopped.

- the image signal b corresponding to the charge of the photoelectric conversion unit 101 is output from the signal line VO.

- the CDS described above is performed by the image signal a and the image signal b to generate a phase difference signal.
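As a minimal sketch of the correlated double sampling step, the following assumes that image signal a is the level sampled after reset and image signal b is the level sampled after transfer (the transfer lowers the FD potential, so b is below a). The function names and the millivolt values are hypothetical and serve only as an illustration.

```python
def cds(reset_level, signal_level):
    """Correlated double sampling: reset level minus post-transfer level.

    Assumes the transfer of charge lowers the FD potential, so the
    difference grows with the amount of transferred charge.
    """
    return reset_level - signal_level


def phase_difference_pair(reset1, signal1, reset2, signal2):
    """Apply CDS separately to the readouts of units 101 and 102."""
    return cds(reset1, signal1), cds(reset2, signal2)


# Illustrative levels in millivolts (not taken from the disclosure).
pair = phase_difference_pair(1000, 600, 1000, 850)
```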

- an ON signal is applied to the signal line RST, and the charge holding units 103 and 104 are reset.

- an ON signal is applied to the signal line TRG2.

- the charge transfer unit 106 becomes conductive, and the charges accumulated in the photoelectric conversion unit 102 are transferred to the charge holding units 103 and 104 . This lowers the potential of the FD.

- phase difference signals can be generated in the phase difference signal mode.

- image signal mode will be explained.

- an ON signal is applied to the signal lines RST, TRG1 and TRG2.

- the reset transistor 111 and the charge transfer units 105 and 106 become conductive, and the photoelectric conversion units 101 and 102 and the charge holding units 103 and 104 are reset.

- a positive gate voltage is applied to the signal line GATE. Thereby, the potential barrier of the overflow path 108 can be lowered.

- a negative gate voltage is applied to the signal line GATE.

- an ON signal is applied to the signal line SEL.

- the application of the ON signal to the signal line SEL continues until the image signals of all the pixels 100 in the pixel region 3 are output.

- an ON signal is applied to the signal lines TRG1 and TRG2.

- the charge transfer units 105 and 106 become conductive, and charges accumulated in the photoelectric conversion units 101 and 102 are transferred to the charge holding units 103 and 104 . This lowers the potential of the FD.

- the gate voltage of the signal line GATE in T3-T4 in the phase difference signal mode and the gate voltage of the signal line GATE in T16-T17 in the image signal mode can be set to different values. Specifically, the gate voltage in T3-T4 can be set to the lower of the two voltages. This makes it possible to make the potential barrier of the overflow path 108 higher in the phase difference signal mode than in the image signal mode.
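The mode-dependent gate voltage can be sketched as a small lookup. The voltage values, the linear barrier model, and all names below (`Mode`, `EXPOSURE_GATE_VOLTAGE`, `barrier_height`, `exposure_barrier`) are assumptions made for illustration; the disclosure only states that the two modes can use different gate voltages and that the barrier is higher in the phase difference signal mode.

```python
from enum import Enum


class Mode(Enum):
    PHASE_DIFFERENCE = "phase_difference"
    IMAGE = "image"


# Hypothetical exposure-period gate voltages in volts (illustrative only).
EXPOSURE_GATE_VOLTAGE = {
    Mode.PHASE_DIFFERENCE: 0.5,  # lower voltage, higher barrier
    Mode.IMAGE: 1.0,             # higher voltage, lower barrier
}


def barrier_height(gate_voltage, base_height=1.0, coupling=0.4):
    """Toy linear model: a higher gate voltage lowers the overflow-path barrier."""
    return base_height - coupling * gate_voltage


def exposure_barrier(mode):
    """Barrier height of the overflow path during exposure in the given mode."""
    return barrier_height(EXPOSURE_GATE_VOLTAGE[mode])
```

With these assumed values the barrier in the phase difference signal mode is higher than in the image signal mode, so each photoelectric conversion unit retains more of its own charge before spilling.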

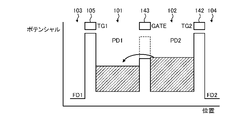

- FIG. 6A is a diagram showing potential barriers of the photoelectric conversion units 101 and 102, the charge transfer units 105 and 106, the charge retention units 103 and 104, and the overflow path 108 of the pixel 100.

- The horizontal axis represents the position in the pixel 100, and the vertical axis represents the potential.

- "FD1", "TG1", "PD1", "GATE", "PD2", "TG2" and "FD2" represent the positions described in FIG.

- a high potential barrier is formed in the charge transfer portions 105 and 106 during the exposure period. As a result, charges are accumulated in the photoelectric conversion units 101 and 102 .

- the hatched rectangles in the figure represent charges that are accumulated.

- a high gate voltage is applied to the overflow gate 109, and the potential barrier of the overflow path 108 becomes relatively low. Therefore, as shown in FIG. 6A, charges overflowing from the photoelectric conversion unit 102 are transferred to the photoelectric conversion unit 101 through the overflow path 108 .

- a dotted line potential barrier of the overflow path 108 represents a potential barrier when no gate voltage is applied to the overflow gate 109 .

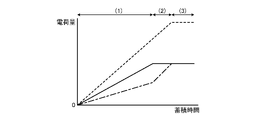

- FIG. 6B is a diagram showing the relationship between charge accumulation time and charge amount in the image signal mode.

- the horizontal axis represents the accumulation time.

- the vertical axis represents the charge amount.

- the solid-line graph in FIG. 6B represents the change in the charge amount of the photoelectric conversion unit 102 in FIG. 6A.

- the dashed-dotted line graph in FIG. 6B represents the change in the charge amount of the photoelectric conversion unit 101 in FIG. 6A.

- the dotted line graph in FIG. 6B represents the charge amount obtained by adding the charges of the photoelectric conversion units 101 and 102.
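The shape of these three curves can be reproduced with a short time-stepped sketch. It assumes constant generation rates, a common per-unit `capacity`, and loss-free transfer through the overflow path; the function name `charge_curves` and all numeric values are hypothetical.

```python
def charge_curves(rate1, rate2, capacity, steps):
    """Return rows (t, q1, q2, q1 + q2) for units 101 (q1) and 102 (q2).

    Each step adds the generated charge, then moves any excess above
    `capacity` to the other unit through the overflow path. Excess that
    finds no room in either unit is dropped.
    """
    q1 = q2 = 0
    rows = []
    for t in range(1, steps + 1):
        q1 += rate1
        q2 += rate2
        if q2 > capacity:  # unit 102 overflows into unit 101
            q1 += q2 - capacity
            q2 = capacity
        if q1 > capacity:  # unit 101 overflows into unit 102 if it has room
            excess = q1 - capacity
            q1 = capacity
            q2 += min(excess, capacity - q2)
        rows.append((t, q1, q2, q1 + q2))
    return rows


rows = charge_curves(rate1=10, rate2=30, capacity=100, steps=6)
```

In this run unit 102 saturates first, after which the slope of unit 101 increases because it also receives the overflowing charge. The sum stays linear in time until both units are full.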

- FIG. 6C shows the potential barrier in the phase difference signal mode.

- the charges of the photoelectric conversion units 101 and 102 are individually transferred to the charge holding units 103 and 104 .

- This figure shows an example of transferring the charge of the photoelectric conversion unit 101 .

- the voltage applied to the gate electrode may modulate the potential of the overflow path 108 and lower the potential barrier of the overflow path 108 .

- By applying a negative gate voltage to the overflow gate 109, the potential barrier of the overflow path 108 can be prevented from lowering, and the charge transfer from the photoelectric conversion unit 102 to the photoelectric conversion unit 101 can be prevented.

- Such gate voltage adjustment is performed by the vertical drive circuit 3 .

- FIGS. 7A-7D are diagrams illustrating an example of a method for manufacturing an imaging device according to the first embodiment of the present disclosure.

- FIGS. 7A-7D are diagrams showing an example of the manufacturing process of the pixel 100 portion of the imaging device 1.

- a well region is formed in the semiconductor substrate 120, and an intra-pixel isolation portion 128 and a pixel isolation portion 129 are formed (FIG. 7A). This can be done by ion implantation of impurities. In this ion implantation, an acceptor impurity is implanted so that the isolation portions are p-type, the same conductivity type as the well region.

- the photoelectric conversion section 101 and the like are formed on the semiconductor substrate 120 .

- n-type semiconductor regions 121 and 122 and the like are formed in a semiconductor substrate 120 . This can be done by ion implantation of impurities (FIG. 7B).

- a semiconductor region 125 forming the overflow path 108 is formed in the semiconductor substrate 120 . This can be done by ion implantation of impurities (FIG. 7C).

- an insulating film 149 is formed on the surface side of the semiconductor substrate 120, and a gate electrode 143 forming the overflow gate 109 is arranged.

- a wiring region 150 is formed on the surface side of the semiconductor substrate 120 (FIG. 7D).

- the back side of the semiconductor substrate 120 is ground to be thinned.

- the pixel 100 can be manufactured by arranging the insulating film 191 , the color filter 192 and the on-chip lens 193 on the back side of the semiconductor substrate 120 .

- FIG. 8 is a diagram showing an example of gate voltage measurement of the overflow gate according to the first embodiment of the present disclosure.

- FIG. 8 is a flow chart showing an example of measurement of the gate voltage of the overflow gate 109 in the manufacturing process of the imaging device 1.

- The amount of electric charge is measured for each of the photoelectric conversion units 101 and 102 of the pixel 100 of the manufactured image sensor 1 (step S101). This can be done by measuring the Qs of the photoelectric conversion units 101 and 102.

- the inter-pixel potential is measured (step S102). This can be done by measuring the potential between pixels 100 .

- the gate voltage is detected (step S103).

- The detected gate voltage is stored (step S104). This can be done by holding the detected gate voltage in a holding unit such as a register arranged in the imaging device 1.

- a desired characteristic can be obtained by applying the gate voltage read from the register to the overflow gate 109 when the imaging device 1 is used.
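The measurement flow of steps S101 to S104 can be sketched as follows. The disclosure does not state how the gate voltage is derived from the measurements, so the rule is passed in as a caller-supplied function; the names `calibrate_gate_voltage`, `measure_qs`, `measure_inter_pixel_potential`, `detect_gate_voltage` and the register key are all hypothetical.

```python
def calibrate_gate_voltage(measure_qs, measure_inter_pixel_potential,
                           detect_gate_voltage, register):
    """Run the S101-S104 flow and store the result in `register` (a dict).

    The three callables stand in for measurement and decision steps that
    the disclosure does not specify in detail.
    """
    qs1, qs2 = measure_qs()                          # S101: Qs of units 101 and 102
    inter_pixel = measure_inter_pixel_potential()    # S102: potential between pixels
    gate_voltage = detect_gate_voltage(qs1, qs2, inter_pixel)  # S103
    register["overflow_gate_voltage"] = gate_voltage           # S104
    return gate_voltage


# Usage with placeholder measurements and a placeholder decision rule.
register = {}
calibrate_gate_voltage(
    measure_qs=lambda: (5000, 4800),
    measure_inter_pixel_potential=lambda: 1.2,
    detect_gate_voltage=lambda qs1, qs2, v: 0.5 if abs(qs1 - qs2) < 500 else 0.8,
    register=register,
)
```

At run time the stored value would be read back from the register and applied to the overflow gate 109.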

- the pixel 100 of the present disclosure arranges the overflow gate 109 adjacent to the overflow path 108 arranged between the plurality of photoelectric conversion units 101 and 102, and adjusts the voltage applied to the overflow gate 109.

- This makes it possible to adjust the potential barrier of the overflow path 108, improve the linearity of the image signal, and widen the phase difference detectable range when generating the phase difference signal. Convenience can be improved.

- In the imaging device 1 of the first embodiment described above, the pixel separation section 129 formed of a semiconductor region is arranged at the boundary between the pixels 100.

- the imaging device 1 of the second embodiment of the present disclosure differs from the above-described first embodiment in that a pixel separating section made of an insulating material is arranged.

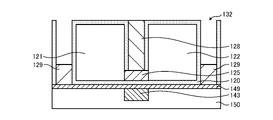

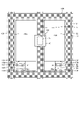

- FIG. 9 is a diagram showing a configuration example of the pixel 100 according to the second embodiment of the present disclosure. This figure, like FIG. 3, is a plan view showing a configuration example of the imaging element 1. A pixel 100 in the figure differs from the pixel 100 in FIG. 3 in that a pixel separation section 131 is provided instead of the pixel separation section 129.

- the pixel separation section 131 is a pixel separation section configured by embedding an insulating member in the semiconductor substrate 120 .

- the pixel separating portion 131 can be formed by embedding an insulating film such as SiO 2 in a groove portion 132 formed in the semiconductor substrate 120 .

- FIGS. 10A and 10B are cross-sectional views showing configuration examples of pixels according to the second embodiment of the present disclosure. Similar to FIGS. 4A and 4B, FIGS. 10A and 10B are cross-sectional views showing configuration examples of the pixel 100.

- Pixel 100 of FIGS. 10A and 10B differs from pixel 100 of FIGS. 4A and 4B in that pixel separator 131 is arranged instead of pixel separator 129.

- the pixel separation section 131 can be configured by arranging an insulating film in a groove section 132 formed from the rear surface side of the semiconductor substrate 120 at the boundary of the pixels 100 .

- FIGS. 11A and 11B are diagrams illustrating an example of a method for manufacturing an imaging device according to the second embodiment of the present disclosure.

- FIGS. 11A and 11B are diagrams showing an example of the manufacturing process of the pixel 100 portion of the image sensor 1.

- First, the steps of FIGS. 7A-7D are performed.

- the imaging device 1 is turned upside down, and a groove portion 132 is formed from the back side of the semiconductor substrate 120 (FIG. 11A). This can be done, for example, by dry etching.

- an insulating member such as SiO 2 is placed in the groove 132 . This can be done, for example, by CVD (FIG. 11B). Thereby, the pixel separating portion 131 can be formed.

- Since the configuration of the imaging device 1 other than this is the same as the configuration of the imaging device 1 in the first embodiment of the present disclosure, the description is omitted.

- the image pickup device 1 of the second embodiment of the present disclosure can improve insulation characteristics between the pixels 100 by arranging the pixel separation section 131 configured by an insulating member.

- In the second embodiment described above, the pixel separation section 131, in which an insulating material is embedded in the groove section 132 formed from the rear surface side of the semiconductor substrate 120, is arranged at the boundary between the pixels 100.

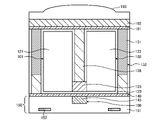

- the imaging device 1 of the third embodiment of the present disclosure differs from the above-described second embodiment in that it has a pixel separation section configured by embedding an insulator in a groove formed from the surface of the semiconductor substrate 120.

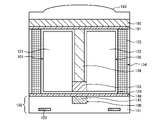

- FIG. 12 is a diagram showing a configuration example of the pixel 100 according to the third embodiment of the present disclosure.

- This figure, like FIG. 9, is a plan view showing a configuration example of the imaging device 1.

- a pixel 100 in the figure differs from the pixel 100 in FIG. 9 in that a pixel separation section 133 is provided instead of the pixel separation section 131 .

- the pixel separation section 133 is a pixel separation section configured by embedding an insulating member in the semiconductor substrate 120 .

- the pixel separating portion 133 can be formed by embedding an insulating film such as SiO 2 in a groove portion 134 formed in the semiconductor substrate 120 .

- FIGS. 13A and 13B are cross-sectional views showing configuration examples of pixels according to the third embodiment of the present disclosure.

- FIGS. 13A and 13B are cross-sectional views showing configuration examples of the pixel 100, similar to FIGS. 10A and 10B.

- Pixel 100 in FIGS. 13A and 13B differs from pixel 100 in FIGS. 10A and 10B in that pixel separator 133 is arranged instead of pixel separator 131 .

- the pixel separating portion 133 is formed in a shape penetrating the semiconductor substrate 120 .

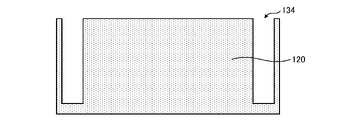

- the groove portion 134 is a groove portion formed from the surface of the semiconductor substrate 120 at the boundary of the pixel 100 .

- a pixel separating portion 133 can be formed by arranging an insulating film in the groove portion 134 .

- FIGS. 14A-14D are diagrams illustrating an example of a method for manufacturing an imaging device according to the third embodiment of the present disclosure.

- FIGS. 14A-14D are diagrams showing an example of the manufacturing process of the pixel 100 portion of the image sensor 1.

- a pixel separating portion 133 is formed by embedding an insulating film such as SiO 2 in the groove portion 134 (FIG. 14B).

- the intra-pixel isolation section 128, the semiconductor regions 121 and 122, the semiconductor region 125, the insulating film 149, the gate electrode 143 and the wiring region 150 are formed (FIG. 14C).

- the back side of the semiconductor substrate 120 is ground to thin it (FIG. 14D). Thereby, the pixel isolation part 133 having a shape penetrating the semiconductor substrate 120 can be formed.

- the configuration of the imaging device 1 other than this is the same as the configuration of the imaging device 1 according to the second embodiment of the present disclosure, so the description is omitted.

- the imaging device 1 of the third embodiment of the present disclosure includes the pixel separation section 133 having a shape penetrating through the semiconductor substrate 120 .

- the insulating properties between the pixels 100 on the surface side of the semiconductor substrate 120 can be further improved.

- In the imaging device 1 of the second embodiment described above, the pixel separating section 131 is arranged at the boundary between the pixels 100.

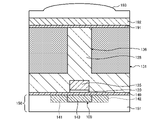

- the imaging device 1 of the fourth embodiment of the present disclosure differs from the above-described second embodiment in that an isolation portion made of an insulating material is further arranged between the photoelectric conversion portions 101 and 102 of the pixel 100.

- FIG. 15 is a diagram showing a configuration example of the pixel 100 according to the fourth embodiment of the present disclosure. This figure, like FIG. 9, is a plan view showing a configuration example of the imaging device 1. A pixel 100 in the figure differs from the pixel 100 in FIG. 9 in that an intra-pixel separation section 135 is further provided.

- the in-pixel separation section 135 is a separation section arranged between the photoelectric conversion sections 101 and 102 and is a separation section formed by embedding an insulating member in a groove formed in the semiconductor substrate 120 .

- the groove portion 134 in the figure is also formed between the photoelectric conversion portions 101 and 102 in addition to the boundary of the pixel 100 .

- the intra-pixel separation portion 135 can be formed by embedding an insulating film similar to the pixel separation portion 131 in the groove portion 134 between the photoelectric conversion portions 101 and 102 .

- FIGS. 16A and 16B are cross-sectional views showing configuration examples of pixels according to the fourth embodiment of the present disclosure.

- FIGS. 16A and 16B are cross-sectional views showing configuration examples of the pixel 100, similar to FIGS. 10A and 10B.

- the pixel 100 of FIGS. 16A and 16B differs from the pixel 100 of FIGS. 10A and 10B in that an intra-pixel separator 135 is further provided.

- an intra-pixel isolation portion 128 is arranged in the semiconductor substrate 120 below the overflow path 108 .

- the intra-pixel separating section 128 is an example of the second intra-pixel separating section described in the claims.

- FIG. 17 is a diagram showing another configuration example of the pixel 100 according to the fourth embodiment of the present disclosure. Similar to FIG. 16, this figure is a plan view showing a configuration example of the imaging element 1. A pixel 100 in the figure differs from the pixel 100 in FIG. 16 in that a pixel separation section 133 and an intra-pixel separation section 137 are provided instead of the pixel separation section 131 and the intra-pixel separation section 135.

- the in-pixel separation portion 137 is a separation portion having a shape penetrating through the semiconductor substrate 120 in the same manner as the pixel separation portion 133 .

- FIGS. 18A and 18B are cross-sectional views showing other configuration examples of pixels according to the fourth embodiment of the present disclosure.

- FIGS. 18A and 18B are cross-sectional views showing configuration examples of the pixel 100, similar to FIGS. 10A and 10B.

- Pixel 100 in FIGS. 18A and 18B differs from pixel 100 in FIGS. 10A and 10B in that pixel separator 133 and intra-pixel separator 137 are provided instead of pixel separator 131 and intra-pixel separator 135 .

- the in-pixel isolation portion 137 is formed in a shape penetrating the semiconductor substrate 120 .

- an intra-pixel isolation portion 128 is arranged in the semiconductor substrate 120 below the overflow path 108 .

- the configuration of the imaging device 1 other than this is the same as the configuration of the imaging device 1 according to the second embodiment of the present disclosure, so the description is omitted.

- the imaging device 1 includes the intra-pixel separation sections 135 and 137 made of insulating members. Thereby, the insulation characteristics between the photoelectric conversion units 101 and 102 can be improved.

- the overflow path 108 and the overflow gate 109 are arranged in the center of the pixel 100 .

- the image sensor 1 of the fifth embodiment of the present disclosure differs from the above-described first embodiment in that the overflow path 108 and the overflow gate 109 are arranged near the edge of the pixel 100 .

- FIG. 19 is a diagram showing a configuration example of the pixel 100 according to the fifth embodiment of the present disclosure. This figure, like FIG. 3, is a plan view showing a configuration example of the imaging element 1. Pixel 100 in the figure differs from pixel 100 in FIG. 3 in that overflow path 108 and overflow gate 109 are located near the edge of pixel 100.

- An overflow path 108 and an overflow gate 109 in the figure are arranged near the edge of the pixel 100 and separated from the charge transfer sections 105 and 106 . That is, the overflow path 108, the overflow gate 109, and the charge transfer units 105 and 106 are arranged near the boundaries of the pixels 100 facing each other. Since the overflow path 108 is arranged apart from the charge transfer sections 105 and 106, the potential modulation of the overflow path 108 described in FIG. 6C can be reduced.

- Since the configuration of the imaging device 1 other than this is the same as the configuration of the imaging device 1 in the first embodiment of the present disclosure, the description is omitted.

- Since the overflow path 108 is arranged apart from the charge transfer sections 105 and 106, the influence of the gate voltage of the charge transfer sections 105 and 106 on the overflow path 108 can be reduced.

- the overflow gate 109 is arranged on the surface side of the semiconductor substrate 120 .

- the imaging device 1 of the sixth embodiment of the present disclosure differs from the above-described fourth embodiment in that overflow gates embedded in the semiconductor substrate 120 are arranged.

- FIG. 20 is a diagram showing a configuration example of the pixel 100 according to the sixth embodiment of the present disclosure. Similar to FIG. 17, this figure is a plan view showing a configuration example of the imaging element 1. The pixel 100 in this figure differs from the pixel 100 in FIG. 17 in that it includes an overflow gate 109 composed of a gate electrode 144.

- the overflow gate 109 in the same figure has a gate electrode 144 .

- the gate electrode 144 is a gate electrode at least partially embedded in the semiconductor substrate 120 .

- FIGS. 21A and 21B are cross-sectional views showing configuration examples of the pixel 100 according to the sixth embodiment of the present disclosure, similar to FIGS. 18A and 18B.

- Pixel 100 of FIGS. 21A and 21B differs from pixel 100 of FIGS. 18A and 18B in that overflow gate 109 with gate electrode 144 is arranged instead of gate electrode 143 .

- the gate electrode 144 is configured such that the lower portion thereof is embedded in the semiconductor substrate 120 .

- the overflow path 108 in the same figure can be arranged in a layer below the gate electrode 144 .

- Overflow path 108 can be isolated from charge transfer sections 105 and 106 .

- FIGS. 22A-22E are diagrams illustrating an example of a method for manufacturing an imaging device according to the sixth embodiment of the present disclosure. They show an example of the manufacturing process of the pixel 100 portion of the imaging device 1.

- an in-pixel isolation portion 128 is formed on the semiconductor substrate 120 .

- semiconductor regions 121 and 122 are formed in the semiconductor substrate 120 .

- an opening 145 is formed on the surface side of the semiconductor substrate 120 . This can be done by dry etching (Fig. 22A).

- a film 301 such as SiO2 is placed in the opening 145 (FIG. 22B).

- This film 301 is a so-called sacrificial film.

- ion implantation is performed through the film 301 to form a p-type semiconductor region 129 in the semiconductor substrate 120 on the bottom and side surfaces of the opening 145 (FIG. 22C).

- the semiconductor region 125 of the overflow path 108 is formed (FIG. 22D). This can be done by ion implantation.

- the film 301 is peeled off.

- a gate oxide film is then placed in the opening 145 .

- a gate electrode member is placed in the opening 145 to form the gate electrode 144 (FIG. 22E).

- the gate electrode 144 can be formed through the above steps.
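The order of the steps above can be captured in a small data structure. The following is a minimal sketch only: the step keys and the `position` and `film_constraints_hold` helpers are hypothetical names introduced for illustration, and the ordering check encodes just what the text states (the sacrificial film 301 is placed before the implantation performed through it, and is removed before the gate oxide film is placed).

```python
# Hypothetical summary of the steps described for FIGS. 22A-22E.
# Each entry: (illustrative key, description, figure cited in the text or None).
STEPS = [
    ("isolation", "form in-pixel isolation portion 128 on semiconductor substrate 120", None),
    ("regions", "form semiconductor regions 121 and 122", None),
    ("opening", "form opening 145 on the surface side by dry etching", "22A"),
    ("sacrificial_film", "place film 301 (e.g. SiO2) in opening 145", "22B"),
    ("p_implant", "ion-implant through film 301 to form p-type region 129", "22C"),
    ("path_implant", "ion-implant semiconductor region 125 of overflow path 108", "22D"),
    ("strip_film", "remove film 301", None),
    ("gate_oxide", "place gate oxide film in opening 145", None),
    ("gate_electrode", "place gate electrode member to form gate electrode 144", "22E"),
]


def position(key):
    """Return the index of a step in STEPS by its illustrative key."""
    return [k for k, _, _ in STEPS].index(key)


def film_constraints_hold():
    """Check the ordering implied by the text for the sacrificial film 301."""
    return (
        position("opening")
        < position("sacrificial_film")
        < position("p_implant")
        < position("strip_film")
        < position("gate_oxide")
        < position("gate_electrode")
    )


if __name__ == "__main__":
    for number, (_, description, figure) in enumerate(STEPS, start=1):
        suffix = f" (FIG. {figure})" if figure else ""
        print(f"{number}. {description}{suffix}")
    print("ordering consistent:", film_constraints_hold())
```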

- the semiconductor region 129 formed in FIG. 22C is arranged to prevent the gate electrode 144 from modulating the potential in areas other than the overflow path 108 and thereby forming an unintended electron path. Arranging this semiconductor region 129 also suppresses the generation of dark current due to defect levels on the wall surface of the opening 145.

- the configuration of the imaging device 1 other than this is the same as the configuration of the imaging device 1 according to the fourth embodiment of the present disclosure, so the description is omitted.

- the imaging device 1 of the sixth embodiment of the present disclosure includes the overflow gate 109 having the gate electrode 144 at least partially embedded in the semiconductor substrate 120 . Thereby, the influence of the charge transfer units 105 and 106 on the overflow path 108 can be reduced.

- In the imaging device 1 of the sixth embodiment described above, the gate electrode 144 embedded in the semiconductor substrate 120 is arranged.

- the imaging element 1 of the seventh embodiment of the present disclosure differs from the sixth embodiment described above in that a buried insulating film is arranged around the gate electrode 144 .

- FIG. 23 is a diagram showing a configuration example of the pixel 100 according to the seventh embodiment of the present disclosure. This figure is a plan view showing a configuration example of the imaging element 1. The pixel 100 shown in the figure differs from the pixel 100 shown in FIG. 20 in that a buried insulating film is arranged around the gate electrode 144.

- FIGS. 24A and 24B are cross-sectional views showing configuration examples of the pixel 100 according to the seventh embodiment of the present disclosure, similar to FIGS. 21A and 21B.

- the pixel 100 of FIGS. 24A and 24B differs from the pixel 100 of FIGS. 21A and 21B in that a buried insulating film 138 is arranged around the gate electrode 144.

- As shown in the figure, the embedded insulating film 138 is arranged around the gate electrode 144 embedded in the semiconductor substrate 120.

- Such a buried insulating film 138 is called STI (Shallow Trench Isolation).

- This STI can be formed by forming an opening in the semiconductor substrate 120 and embedding an insulating film.

- the embedded insulating film 138 can be arranged around the gate electrode 144. Since the side surface of the gate electrode 144 is insulated by the buried insulating film 138, the potential barrier outside the overflow path 108 can be prevented from being modulated by the gate electrode 144. This improves the controllability of the potential barrier of the overflow path 108.

- a semiconductor region 129 or the like can be arranged as described in FIG. 22C.

- the embedded insulating film 138 is an example of the separating portion described in the claims.

- the configuration of the imaging device 1 other than this is the same as the configuration of the imaging device 1 according to the sixth embodiment of the present disclosure, so the description is omitted.

- the imaging device 1 of the seventh embodiment of the present disclosure has the buried insulating film 138 arranged around the gate electrode 144, which can prevent the potential barrier outside the overflow path 108 from being modulated by the gate electrode 144. This improves the controllability of the potential barrier of the overflow path 108.

- the imaging device 1 of the above-described first embodiment was configured with the semiconductor substrate 120 .

- the imaging element 1 of the eighth embodiment of the present disclosure differs from the first embodiment described above in that it is configured by stacking a plurality of semiconductor substrates.

- FIG. 25 is a diagram illustrating a configuration example of an imaging element according to the eighth embodiment of the present disclosure. This figure is a cross-sectional view showing a configuration example of the imaging device 1.

- the imaging device 1 in this figure differs from the imaging device 1 in FIG. 1 in that semiconductor substrates 220 and 320 are laminated on the semiconductor substrate 120.

- On the semiconductor substrate 120, the photoelectric conversion units 101 and 102, the charge transfer units 105 and 106 (not shown), and the charge holding units 103 and 104 (not shown) of the pixel 100 are arranged. The overflow path 108 and the overflow gate 109 of the pixel 100 are also arranged on the semiconductor substrate 120. Note that pixels 100a and 100b are shown on the semiconductor substrate 120 in the figure.

- a wiring 152 is shown in the wiring area 150 in the figure.

- the wiring 152 is commonly connected to the gate electrodes 143 of the pixels 100a and 100b.

- the wiring 152 can be made of, for example, impurity-implanted polycrystalline silicon.

- the semiconductor substrate 220 is a semiconductor substrate that has a wiring region 250 and is laminated on the semiconductor substrate 120 .

- the image signal generator 110 is arranged on the semiconductor substrate 220 .

- the amplification transistor 112 is described as an example.

- the amplifying transistor 112 is composed of semiconductor regions 221 and 222 formed in a semiconductor substrate 220 and a gate electrode 242 .

- the wiring region 250 includes an insulating layer 251 , wiring 252 , via plugs 253 and connection pads 259 .

- the connection pads 259 are electrically connected to connection pads 359 in the wiring region 350 of the semiconductor substrate 320, which will be described later.

- a through via 154 for connecting the semiconductor substrate 120 and the semiconductor substrate 220 is arranged.

- a through via 154 in the figure connects the wirings 152 and 252 .

- the through via 154 can be made of columnar tungsten (W) or the like.

- the semiconductor substrate 320 is a semiconductor substrate having a wiring region 350 and laminated on the semiconductor substrate 220 .

- the vertical driving circuit 4 and the like are arranged on the semiconductor substrate 320 .

- an insulating layer 351, wiring 352, via plugs 354 and connection pads 359 are arranged.

- the gate voltage of the gate electrode 143 of the overflow gate 109 is supplied from the vertical driving circuit 4 via the wiring 152, the through via 154, the wiring 252, the via plug 253, the connection pads 259 and 359, and the via plug 354.

- the number of through vias 154 can be reduced.
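The supply path of the overflow-gate voltage described above can be written out as an ordered list. This is a minimal sketch, not part of the disclosure: the list order (from the vertical driving circuit 4 down to the gate electrode 143) is one reading of the text, which names the elements starting from the gate side, and `elements_between` is a hypothetical helper.

```python
# Assumed ordering of the gate-voltage supply path, read from the driver side
# (semiconductor substrate 320) down to the pixel side (semiconductor substrate 120).
SUPPLY_PATH = [
    "vertical driving circuit 4",
    "via plug 354",
    "connection pad 359",
    "connection pad 259",
    "via plug 253",
    "wiring 252",
    "through via 154",
    "wiring 152",
    "gate electrode 143",
]


def elements_between(path, start, end):
    """Return the elements strictly between two named elements of the path."""
    i, j = path.index(start), path.index(end)
    return path[i + 1:j]


if __name__ == "__main__":
    print(" -> ".join(SUPPLY_PATH))
    # The only element linking wiring 252 and wiring 152 is the through via 154.
    print(elements_between(SUPPLY_PATH, "wiring 252", "wiring 152"))
```

Because the wiring 152 is shared by the gate electrodes 143 of pixels 100a and 100b, both pixels reach the driver through the same through via 154 in this reading.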

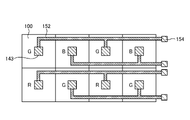

- FIGS. 26A and 26B are diagrams showing configuration examples of an imaging device according to the eighth embodiment of the present disclosure.

- FIGS. 26A and 26B are plan views showing an arrangement example of the wiring 152 on the semiconductor substrate 120.

- Four pixels 100 are shown in FIGS. 26A and 26B. The labels “R”, “G” and “B” on these pixels 100 indicate the types of color filters 192.

- FIG. 26A shows an example in which the gate electrodes 143 of the pixels 100 in which the same type of color filters 192 are arranged are commonly connected to the same wiring 152.

- A gate voltage is applied to the gate electrode 143 at a common timing in the pixels 100 in which the same type of color filters 192 are arranged. Therefore, the gate voltage of these pixels 100 is supplied from the common wiring 152.

- FIG. 26B shows an example in which the wiring 152 is commonly connected to the gate electrodes 143 of eight pixels 100 .

- the number of through vias 154 can be further reduced.
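The effect of sharing the wiring 152 on the number of through vias 154 can be illustrated with simple arithmetic. The sketch below assumes exactly one through via 154 per shared wiring 152, which the text implies but does not state as a rule; the function name `through_via_count` and the 16-pixel example are hypothetical.

```python
def through_via_count(num_pixels: int, pixels_per_wiring: int) -> int:
    """Through vias 154 needed when each wiring 152 serves `pixels_per_wiring`
    gate electrodes 143, assuming one through via per wiring (an assumption)."""
    if num_pixels <= 0 or pixels_per_wiring <= 0:
        raise ValueError("both arguments must be positive")
    # Ceiling division: a partially filled group still needs its own via.
    return -(-num_pixels // pixels_per_wiring)


if __name__ == "__main__":
    pixels = 16  # hypothetical number of pixels 100
    for share in (1, 2, 8):
        print(f"{share} pixel(s) per wiring 152 -> "
              f"{through_via_count(pixels, share)} through vias 154")
```

With these assumptions, sharing between two pixels (as for pixels 100a and 100b in FIG. 25) halves the count, and sharing among eight pixels (as in FIG. 26B) reduces it to one eighth.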

- the imaging device 1 as described above can be applied to various electronic devices, such as imaging systems including digital still cameras and digital video cameras, mobile phones with imaging functions, and other devices with imaging functions.

- FIG. 27 is a block diagram showing a configuration example of an imaging device mounted on an electronic device.

- an imaging device 701 includes an optical system 702, an imaging element 703, and a DSP (Digital Signal Processor) 704, to which components such as a recording device 709 and a power supply system 710 are connected, and can capture still images and moving images.


- the optical system 702 is configured with one or more lenses, guides image light (incident light) from the subject to the imaging element 703, and forms an image on the light receiving surface (sensor section) of the imaging element 703.

- As the imaging element 703, the imaging device 1 having any of the configuration examples described above is applied. Electrons are accumulated in the imaging element 703 for a certain period of time according to the image formed on the light receiving surface via the optical system 702. A signal corresponding to the electrons accumulated in the imaging element 703 is input to the DSP 704.

- the DSP 704 performs various signal processing on the signal from the image sensor 703 to obtain an image, and temporarily stores the image data in the memory 708 .

- the image data stored in the memory 708 is recorded in the recording device 709 or supplied to the display device 705 to display the image.

- An operation system 706 receives various operations by a user and supplies an operation signal to each block of the imaging apparatus 701 , and a power supply system 710 supplies electric power necessary for driving each block of the imaging apparatus 701 .
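The signal flow through the blocks of the imaging device 701 can be sketched as a short pipeline. This is an illustration under stated assumptions, not the disclosed implementation: the class and method names are hypothetical, the optical system is modeled as a pass-through, the accumulated charge is assumed to be a non-negative integer per pixel, and the DSP processing is reduced to clipping to 8 bits.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ImagingDevice701:
    """Hypothetical model of the data flow among blocks 702 to 709."""
    memory_708: List[List[int]] = field(default_factory=list)
    recording_709: List[List[int]] = field(default_factory=list)
    display_705: List[List[int]] = field(default_factory=list)

    def optical_system_702(self, scene):
        # Stand-in for the lenses: the scene reaches the sensor unchanged.
        return list(scene)

    def imaging_element_703(self, image_light):
        # Accumulated electrons, assumed non-negative integers per pixel.
        return [max(0, int(v)) for v in image_light]

    def dsp_704(self, signal):
        # Placeholder signal processing (clip to 8 bits), then temporary
        # storage of the image data in memory 708.
        image = [min(255, s) for s in signal]
        self.memory_708.append(image)
        return image

    def capture(self, scene, record=True):
        # Scene -> optics -> sensor -> DSP -> memory, then record or display.
        self.dsp_704(self.imaging_element_703(self.optical_system_702(scene)))
        image = self.memory_708[-1]
        (self.recording_709 if record else self.display_705).append(image)
        return image


if __name__ == "__main__":
    device = ImagingDevice701()
    print(device.capture([10, 300, -5]))        # recorded to 709
    print(device.capture([1, 2], record=False))  # shown on 705
```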

- the technology (the present technology) according to the present disclosure can be applied to various products.

- the technology according to the present disclosure may be realized as a device mounted on any type of moving body, such as automobiles, electric vehicles, hybrid electric vehicles, motorcycles, bicycles, personal mobility devices, airplanes, drones, ships, and robots.

- FIG. 28 is a block diagram showing a schematic configuration example of a vehicle control system, which is an example of a mobile control system to which the technology according to the present disclosure can be applied.

- a vehicle control system 12000 includes a plurality of electronic control units connected via a communication network 12001.

- the vehicle control system 12000 includes a drive system control unit 12010, a body system control unit 12020, an exterior information detection unit 12030, an interior information detection unit 12040, and an integrated control unit 12050.

- a microcomputer 12051, an audio/image output unit 12052, and an in-vehicle network I/F (Interface) 12053 are illustrated.

- the drive system control unit 12010 controls the operation of devices related to the drive system of the vehicle according to various programs.

- the drive system control unit 12010 functions as a control device for a driving force generator that generates the driving force of the vehicle, such as an internal combustion engine or a driving motor, a driving force transmission mechanism that transmits the driving force to the wheels, a steering mechanism that adjusts the steering angle of the vehicle, and a braking device that generates the braking force of the vehicle.