WO2019077908A1 - Gesture Input Device - Google Patents

Gesture Input Device

- Publication number

- WO2019077908A1 (PCT/JP2018/033391)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- gesture

- unit

- display

- display unit

- determination

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Classifications

-

- B—PERFORMING OPERATIONS; TRANSPORTING

- B60—VEHICLES IN GENERAL

- B60R—VEHICLES, VEHICLE FITTINGS, OR VEHICLE PARTS, NOT OTHERWISE PROVIDED FOR

- B60R16/00—Electric or fluid circuits specially adapted for vehicles and not otherwise provided for; Arrangement of elements of electric or fluid circuits specially adapted for vehicles and not otherwise provided for

- B60R16/02—Electric or fluid circuits specially adapted for vehicles and not otherwise provided for; Arrangement of elements of electric or fluid circuits specially adapted for vehicles and not otherwise provided for electric constitutive elements

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F3/00—Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements

- G06F3/01—Input arrangements or combined input and output arrangements for interaction between user and computer

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F3/00—Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements

- G06F3/16—Sound input; Sound output

Definitions

- The present disclosure relates to a gesture input device that accepts gesture input from an operator.

- The gesture input device (operation device) of Patent Document 1 includes an imaging unit that captures the operator's hand, a plurality of image display units that individually display the operation screens of various in-vehicle devices, a line-of-sight detection unit that detects the driver's line of sight, and a gaze area determination unit that determines which image display unit the detected line of sight is directed at. When the gaze area determination unit detects that the driver is gazing at one of the image display units, the operation screen of that image display unit is displayed with the shape of the operating hand superimposed on it. That is, the image display unit (in-vehicle device) to be operated is selected according to the driver's line of sight.

- In Patent Document 1, the driver is judged to be gazing at an image display unit only when the line of sight stays at one place (one of the image display units) for a predetermined time or longer. As a result, basic safety checks during driving (such as checking ahead) may be neglected.

- An object of the present disclosure is to provide a gesture input device capable of selecting an operation target without interfering with safety confirmation related to driving.

- The device includes a gesture detection unit that detects the operator's gesture operations, and a determination unit. When the determination unit determines that the gesture operation detected by the gesture detection unit is a hand-pointing gesture in which the operator points with the hand, it selects and confirms the pointed-at object as the operation target.

- Because the operation target is selected and confirmed by the operator's hand-pointing gesture, the operator does not need to gaze at the operation target to select and confirm it, as in the prior art. Therefore, the operation target can be selected and confirmed without interfering with driving-related safety checks.

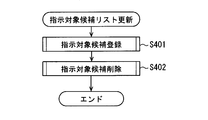

- FIG. 21 is a sub-flowchart outlining how the pointing-target candidate list in FIG. 20 is updated.

- The gesture input device 100A according to the first embodiment is described with reference to FIGS. 1 to 4B.

- The gesture input device 100A according to the present embodiment is mounted in a vehicle and performs input operations on various vehicle devices based on the movement (gesture operation) of a specific part of the body of the driver (operator).

- The vehicle is provided with a plurality of vehicle devices, such as an air conditioner 111 that air-conditions the vehicle interior, a head-up display device 112 (hereinafter, HUD device 112) that displays various vehicle information as a virtual image on the windshield, and a car navigation device 113 that displays the vehicle's current position, guidance to a destination, and the like.

- The air conditioner 111, the HUD device 112, the car navigation device 113, and the like correspond to operation targets.

- The vehicle devices are not limited to these and also include a room lamp device, an audio device, a rear-seat sunshade device, a power seat device, a glove box opening/closing device, and the like.

- The air conditioner 111 has an air conditioning display unit 111a that displays the air conditioning operating state, switches for setting air conditioning conditions, and the like.

- The air conditioning display unit 111a is, for example, a liquid crystal display or an organic EL display.

- The air conditioning display unit 111a is disposed, for example, at the center of the vehicle in the width direction, on the operator-side surface of the instrument panel.

- The air conditioning display unit 111a has, for example, a touch panel, so the various switches can be operated by touching them with the driver's finger.

- The air conditioning display unit 111a corresponds to the display unit, or to a given one of the display units.

- The HUD device 112 includes a head-up display unit 112a that displays various vehicle information (for example, vehicle speed).

- The head-up display unit 112a is formed on the windshield at a position facing the driver.

- The head-up display unit 112a corresponds to the display unit, or to another display unit.

- The car navigation device 113 has a navigation display unit 113a that displays navigation information such as the vehicle's position on a map and guidance to a destination.

- The navigation display unit 113a is, for example, a liquid crystal display or an organic EL display.

- The navigation display unit 113a also serves as the air conditioning display unit 111a described above; the display control unit 140 (described later) switches the screen so that air conditioning information and navigation information are displayed alternately.

- Navigation switches for setting destination guidance are displayed on the navigation display unit 113a and, as with the air conditioning display unit 111a, they can be operated by touching them with the driver's finger.

- The navigation display unit 113a corresponds to the display unit.

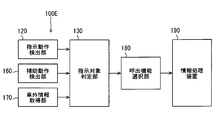

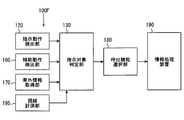

- As shown in FIG. 1, the gesture input device 100A includes a pointing motion detection unit 120, a pointing target determination unit 130, a display control unit 140, and the like.

- The pointing motion detection unit 120 is a gesture detection unit that detects the movement of a specific part of the driver's body.

- The pointing motion detection unit 120 captures the specific part of the driver's body as an image and detects its movement from changes in the image over time.

- The specific part of the driver's body may be, for example, a finger, the palm, or an arm of the driver (a hand-pointing gesture).

- Here, a finger (a finger-pointing gesture) is mainly used as the specific part of the driver's body.

- As the pointing motion detection unit 120, a sensor or camera that forms a two-dimensional or three-dimensional image can be used.

- Examples of the sensor include a near-infrared sensor using near-infrared light and a far-infrared sensor using far-infrared light.

- Examples of the camera include a stereo camera that can capture images from multiple directions simultaneously and record depth information, and a ToF camera that captures an object stereoscopically using the ToF (Time of Flight) method.

- In this embodiment, a camera is used as the pointing motion detection unit 120.
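The ToF method is only named above. As a rough illustration of the principle, a direct (pulsed) ToF sensor converts the round-trip time Δt of emitted light into distance as d = c·Δt/2. The sketch below assumes that direct variant (many ToF cameras instead infer Δt from a phase shift), and the function name is illustrative, not part of the disclosure.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to an object from the round-trip time of a light pulse (direct ToF)."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The light travels to the object and back, so the one-way distance is half the path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0
```

For example, a 4 ns round trip corresponds to roughly 0.6 m.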

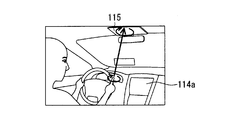

- The pointing motion detection unit 120 is attached, for example, to the vehicle ceiling and detects the finger the driver points while holding the steering wheel (the finger's shape, its movement, which vehicle device it points at, and so on).

- Various finger-movement patterns (gestures) can be defined in advance. Here, a pointing gesture in which one finger points at the vehicle device to be operated is set for selection and confirmation, and a flick gesture in which the finger is flicked quickly is set for the subsequent operation.

- The finger gesture signal detected by the pointing motion detection unit 120 is output to the pointing target determination unit 130, described later.

- The pointing target determination unit 130 is a determination unit that determines, from the gesture signal obtained by the pointing motion detection unit 120, which vehicle device the driver has chosen as the operation target. That is, when the pointing target determination unit 130 determines that the gesture operation detected by the pointing motion detection unit 120 is a pointing gesture made with the driver's finger, it selects and confirms the pointed-at object as the operation target.
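The disclosure does not say how unit 130 decides which device a finger points at. One plausible approach, sketched here purely as an assumption, casts a ray from the fingertip along the finger direction and picks the registered device whose center lies at the smallest angle to that ray, rejecting anything beyond a tolerance. The device coordinates, the 10° tolerance, and all names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Target:
    name: str
    center: Vec3  # assumed: known device position in cabin coordinates (metres)


def angle_deg(u: Vec3, v: Vec3) -> float:
    """Angle between two 3-D vectors in degrees (180 if either has zero length)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    if nu == 0.0 or nv == 0.0:
        return 180.0
    # Clamp to [-1, 1] to guard acos against floating-point overshoot.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / (nu * nv)))))


def pointed_target(fingertip: Vec3, direction: Vec3, targets: Sequence[Target],
                   max_angle_deg: float = 10.0) -> Optional[Target]:
    """Return the target closest in angle to the pointing ray, or None if none is within tolerance."""
    best: Optional[Target] = None
    best_angle = max_angle_deg
    for t in targets:
        # Vector from the fingertip to the device center.
        to_target = tuple(c - f for c, f in zip(t.center, fingertip))
        a = angle_deg(direction, to_target)
        if a <= best_angle:
            best, best_angle = t, a
    return best
```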

- The display control unit 140 is a control unit that controls the display state of the display units 111a to 113a according to the result determined by the pointing target determination unit 130. The display control performed by the display control unit 140 is described in detail later.

- The gesture input device 100A according to the present embodiment is configured as described above. Its operation and effects are described below with reference to FIGS. 2 to 4B.

- In step S100, the pointing motion detection unit 120 detects a pointing gesture by the driver. That is, the pointing motion detection unit 120 captures the movement of the driver's finger as images and outputs the image data (gesture signal) to the pointing target determination unit 130.

- In step S110, the pointing target determination unit 130 determines from the image data whether the finger movement is a pointing gesture directed at the display unit of one of the vehicle devices (the state in FIG. 3A).

- That display unit is, for example, the air conditioning display unit 111a.

- In step S120, the pointing target determination unit 130 determines whether the pointing gesture has continued toward that display unit for a predetermined time or longer.

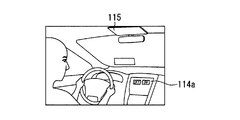

- If the determination in step S120 is negative, the process returns to step S100 and steps S110 to S120 are repeated. If the determination in step S120 is affirmative, in step S130 the pointing target determination unit 130 selects and confirms the pointed-at display unit (for example, the air conditioning display unit 111a) as the operation target.
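Steps S100 to S130 amount to a dwell timer: a target is confirmed only after the same target has been pointed at continuously for a predetermined time. A minimal sketch, assuming the detector reports one pointed target (or None) per frame together with a timestamp; the class name and the 1 s default are hypothetical.

```python
from typing import Hashable, Optional


class DwellSelector:
    """Confirms a target once it has been pointed at continuously for hold_s seconds."""

    def __init__(self, hold_s: float = 1.0) -> None:
        self.hold_s = hold_s  # hypothetical value of the "predetermined time" in S120
        self._target: Optional[Hashable] = None
        self._since = 0.0

    def update(self, pointed: Optional[Hashable], now_s: float) -> Optional[Hashable]:
        """Feed one S100/S110 result; return the target confirmed in S130, else None."""
        if pointed is None:
            # No pointing gesture: reset the timer and keep looping from S100.
            self._target = None
            return None
        if pointed != self._target:
            # A new target (or the first frame): start timing from now.
            self._target = pointed
            self._since = now_s
            return None
        # Same target as before: confirm once the hold time has elapsed (S120 affirmative).
        if now_s - self._since >= self.hold_s:
            return pointed
        return None
```

Resetting on any change of target means that sweeping a finger across several displays never confirms any of them.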


- In step S140, the pointing target determination unit 130 determines whether a switching operation is made. That is, it determines whether there is another pointing gesture (hand-pointing gesture) toward a display unit (for example, the head-up display unit 112a) different from the air conditioning display unit 111a pointed at earlier.


- In step S150, it is determined whether the other gesture detected in step S140 is a predefined flick operation. If the determination in step S150 is negative, in step S160 the pointing target determination unit 130 determines whether a pointing gesture has continued toward a display unit other than the current target for a certain time or longer (the state in FIG. 3B).

- If the determination in step S160 is affirmative, in step S170 the display control unit 140 causes the content shown on the air conditioning display unit 111a pointed at first to be displayed on the head-up display unit 112a pointed at next. That is, the air conditioning information on the air conditioning display unit 111a is selected and confirmed by the first finger pointing and is then displayed on the head-up display unit 112a pointed at next.

- After step S170, the process proceeds to step S190. If the determination in step S160 is negative, step S170 is skipped and the process proceeds to step S190, described later.

- If the determination in step S150 is affirmative, in step S180 the display control unit 140 switches the air conditioning information shown on the air conditioning display unit 111a to the display content of another vehicle device, for example navigation information (FIG. 4B). That is, the air conditioning information on the air conditioning display unit 111a is selected and confirmed by the first finger pointing (FIG. 4A) and is then switched to navigation information by another gesture (a flick operation).

- In step S190, it is checked whether the switching operation has ended; if it has, the process returns to step S100. If it has not ended, the process returns to step S150.
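The branch structure of steps S140 to S180 can be sketched as a single handler applied to the confirmed source display. This is an illustrative model only: display units are plain string keys, the flick is assumed to cycle through a fixed list of content types, and `held` stands in for the continuation check of step S160.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DisplayState:
    """Hypothetical model of what each display unit is currently showing."""
    content: Dict[str, str]
    # Assumed order in which a flick cycles through content (illustrative only).
    flick_cycle: List[str] = field(default_factory=lambda: ["air_conditioning", "navigation"])


def handle_switch(state: DisplayState, source: str, gesture: str,
                  other: Optional[str] = None, held: bool = False) -> str:
    """Apply one pass of S140-S180 to the confirmed source display; return the branch taken."""
    if gesture == "flick":
        # S150 affirmative -> S180: replace the source's content with the next item in the cycle.
        cycle = state.flick_cycle
        current = state.content[source]
        nxt = cycle[(cycle.index(current) + 1) % len(cycle)] if current in cycle else cycle[0]
        state.content[source] = nxt
        return "S180"
    if gesture == "point" and other is not None and other != source and held:
        # S160 affirmative -> S170: show the source's content on the newly pointed display.
        state.content[other] = state.content[source]
        return "S170"
    # S160 negative: S170 is skipped and control moves on to S190.
    return "skip"
```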

- With this configuration, the operation target is selected and confirmed by the driver's pointing gesture, so the driver does not need to gaze at the operation target as in the related art. Therefore, the operation target can be selected and confirmed without interfering with driving-related safety checks.

- Because a finger-pointing gesture is adopted as the hand-pointing gesture, the pointed-at object is selected and confirmed as the operation target directly, which makes the gesture operation intuitive and easy to understand.

- With the pointing gesture, the driver can point at a vehicle device while holding the steering wheel, for example, so steering is not hindered.

- The gesture for moving the display content from the original display unit (air conditioning display unit 111a) to the next display unit (head-up display unit 112a) described above may instead be, for example, a gesture of carrying the content toward the next display unit, a gesture of sweeping it from the original display unit to the next display unit, or a gesture using the whole hand (or arm) instead of a finger (a hand-pointing gesture).

- The gesture for changing the display content described with FIG. 4B may, instead of the flick gesture, be a gesture of pointing at the display unit and drawing circles, a gesture of tapping a finger on the steering wheel, or a gesture using the hand (whole arm) instead of a finger.

- The pointing target determination unit 130 may also recognize a predetermined default gesture, in which case the display control unit 140 shows a predetermined default display (the original air conditioning display) for a predetermined time.

- The default gesture may be, for example, a gesture of pressing down with the hand. This lets the driver check the original display content of the original display unit (air conditioning display unit 111a).

- A gesture input device 100B according to the second embodiment is shown in FIGS. 5 to 7B.

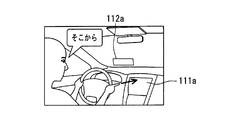

- In the second embodiment, a voice detection unit 150 that detects the driver's voice and outputs it to the pointing target determination unit 130 is added to the configuration of the first embodiment.

- A microphone is used as the voice detection unit 150; it picks up the driver's voice and outputs the voice signal to the pointing target determination unit 130.

- The pointing target determination unit 130 and the display control unit 140 use the voice from the voice detection unit 150 as an additional condition on the gesture operation (determination result).

- That is, a voice condition is added both for confirming the selection and for changing the display.

- For example, the pointing target determination unit 130 selects and confirms the display unit to be operated (air conditioning display unit 111a) based on the pointing gesture from the pointing motion detection unit 120 and the driver's utterance "from there" picked up by the voice detection unit 150. Further, as shown in FIG. 6B, based on a pointing gesture toward another display unit (head-up display unit 112a) from the pointing motion detection unit 120 and the driver's utterance "to there" from the voice detection unit 150, the pointing target determination unit 130 instructs the display control unit 140 to change the display so that the display content of the original display unit (air conditioning display unit 111a) is moved to the other display unit (head-up display unit 112a).

- When switching the display content, as shown in FIG. 7A, the pointing target determination unit 130 selects and confirms the display unit to be operated (air conditioning display unit 111a) based on the pointing gesture from the pointing motion detection unit 120 and the driver's utterance "it" from the voice detection unit 150. Further, as shown in FIG. 7B, based on the continued pointing gesture from the pointing motion detection unit 120 and the driver's utterance "Navi" from the voice detection unit 150, it instructs the display control unit 140 to change the display so that the display content of the original display unit (air conditioning display unit 111a) is switched to other display content.
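The second embodiment fuses each utterance with whatever the driver is pointing at when it is spoken. The sketch below assumes a speech recognizer already delivers text and uses English glosses of the example phrases ("from there", "it", "to there", "Navi"); the phrase sets, command tuples, and class name are hypothetical.

```python
from typing import Dict, Optional, Tuple

# Hypothetical phrase tables (English glosses of the utterances in the text).
SELECT_PHRASES = {"from there", "it"}
MOVE_PHRASE = "to there"
CONTENT_PHRASES: Dict[str, str] = {"navi": "navigation"}


class VoiceGestureFusion:
    def __init__(self) -> None:
        self.selected: Optional[str] = None  # display unit confirmed by voice plus pointing

    def on_utterance(self, text: str, pointed: Optional[str]) -> Optional[Tuple[str, ...]]:
        """Combine an utterance with the display unit pointed at while it was spoken.

        Returns a display-control command tuple, or None if nothing should happen.
        """
        phrase = text.strip().lower()
        if pointed is None:
            return None  # voice alone never triggers an action
        if phrase in SELECT_PHRASES:
            self.selected = pointed
            return ("select", pointed)
        if self.selected is None:
            return None  # nothing has been selected yet
        if phrase == MOVE_PHRASE and pointed != self.selected:
            return ("move", self.selected, pointed)
        if phrase in CONTENT_PHRASES and pointed == self.selected:
            return ("switch", self.selected, CONTENT_PHRASES[phrase])
        return None
```

Requiring both signals for every command is what filters out unintended gestures and stray speech.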

- In this way, the driver's voice is also used to select and confirm the operation target and to change the display form on the display unit. As a result, gesture input operations can be performed reliably without unintended gestures being accepted as input.

- A gesture input device 100C according to the third embodiment is shown in FIGS. 8 to 10C.

- In the third embodiment, after a vehicle device (operation target) is selected and confirmed with the finger gesture of the first embodiment, an operation screen for that vehicle device is displayed on a display unit.

- When an operation gesture is performed on the operation screen, the input operation is accepted.

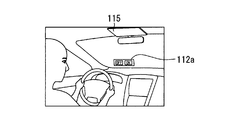

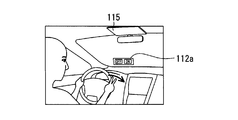

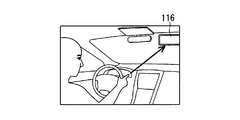

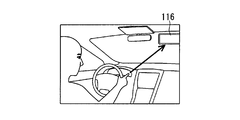

- Here, the room lamp device 115 is described as an example of an operation target, among the plurality of vehicle devices, on which an input operation is performed. As shown in FIGS. 10A, 10B, and 10C, the room lamp device 115 is provided, for example, at the front of the vehicle ceiling near the rearview mirror, and is switched on when necessary to illuminate the vehicle interior.

- Steps S100 to S120 are the same as those described with FIG. 2 of the first embodiment. That is, in step S100 a pointing gesture by the driver is detected, and in step S110 it is determined whether the pointing gesture is directed at a given operation target (here, the room lamp device 115) (the state in FIG. 10A). Then, in step S120, it is determined whether the pointing gesture has continued toward the room lamp device 115 for a predetermined time or longer.

- In step S230, the pointing target determination unit 130 causes the display control unit 140 to display an operation screen for the pointed-at room lamp device 115 on, for example, the head-up display unit 112a as the display unit.

- For example, a switch image for choosing whether to turn the room lamp device 115 on or off is displayed on the operation screen (the state in FIG. 10B).

- In step S240, the pointing target determination unit 130 determines whether a new flick operation has been made with multiple fingers, for example two fingers.

- The multi-finger flick corresponds to an operation gesture different from the hand-pointing gesture. If the determination in step S240 is negative, step S240 is repeated; if it is affirmative, the process proceeds to step S250.

- In step S250, the pointing target determination unit 130 determines whether the direction of the multi-finger flick is the ON direction shown on the head-up display unit 112a (rightward in FIG. 10C) or the OFF direction (leftward in FIG. 10C).

- If the flick direction is determined in step S250 to be ON, the room lamp device 115 is turned on in step S260 (an input operation for turning it on is accepted).

- If the flick direction is determined in step S250 to be OFF, the room lamp device 115 is turned off in step S270 (an input operation for turning it off is accepted).

- If the flick direction is neither ON nor OFF, the pointing target determination unit 130 skips steps S260 and S270 and proceeds to step S280.

- In step S280, it is checked whether the operation has ended; if it has, the process returns to step S100. If it has not ended, the process returns to step S240.
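Step S250 classifies the direction of the multi-finger flick. Assuming the tracker reports the fingertip displacement over the flick with x to the right and y upward, a direction test might look like the following; the minimum length and the ±30° tolerance are hypothetical values, not taken from the disclosure.

```python
import math
from typing import Optional


def classify_flick(dx: float, dy: float, min_len: float = 0.05,
                   tolerance_deg: float = 30.0) -> Optional[str]:
    """Map a flick displacement to "ON" (rightward), "OFF" (leftward), or None (S280)."""
    if math.hypot(dx, dy) < min_len:
        return None  # too short to count as a flick
    angle = math.degrees(math.atan2(dy, dx))  # 0 deg = right, +/-180 deg = left
    if abs(angle) <= tolerance_deg:
        return "ON"   # S260: turn the room lamp on
    if abs(angle) >= 180.0 - tolerance_deg:
        return "OFF"  # S270: turn the room lamp off
    return None       # neither direction: skip S260/S270
```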

- In this way, the operation screen of the vehicle device (room lamp device 115) selected and confirmed by finger pointing is displayed on the display unit (head-up display unit 112a).

- An input operation on the vehicle device is then accepted when an operation gesture different from the initial pointing gesture is performed on the operation screen. Therefore, even for vehicle devices that are hard to reach, input operations can easily be performed at hand.

- A gesture input device 100D according to the fourth embodiment is shown in FIGS. 11, 12A, 12B, 12C, and 12D.

- In the fourth embodiment, after a vehicle device (operation target) is selected and confirmed with the finger gesture of the first embodiment, an operation screen for that vehicle device is displayed.

- An input operation on the operation screen is then accepted.

- As in the third embodiment, the room lamp device 115 is described as the example target of the input operation among the plurality of vehicle devices.

- The display unit that shows the operation screen is, for example, a center display unit 114a disposed at the center of the vehicle in the width direction, on the operator-side surface of the instrument panel.

- The center display unit 114a displays the operating states of various vehicle devices, various setting switches, and so on, and serves as a display unit whose display can be switched for each vehicle device.

- The center display unit 114a has a touch panel, so the displayed switches can be operated by touching them with the driver's finger.

- The center display unit 114a is located where the driver can easily touch it. Input settings can also be made on the center display unit 114a by the driver's speech.

- Here, the gesture is one of drawing a circle while pointing at the room lamp device 115.

- A screen for operating the room lamp device 115 is then displayed on the center display unit 114a.

- For example, a switch image for choosing whether to turn the room lamp device 115 on or off is displayed on the operation screen (the state in FIG. 12B).

- In this way, the operation screen of the vehicle device (room lamp device 115) selected and confirmed by finger pointing is displayed on the display unit at hand (center display unit 114a).

- The input operation is accepted either by a direct touch on the operation screen or by speech. Therefore, as in the third embodiment, input operations can easily be performed at hand even for vehicle devices that are hard to reach.

- In the fifth embodiment (gesture input device 100E), the operation targets are a plurality of information display units 116 that are located outside the vehicle and provide specific information to the driver.

- The information display unit 116 pointed at by the driver's pointing gesture is selected and confirmed.

- The information display units 116 are, for example, guide signs along the road, landmarks (buildings), and the like.

- A guide sign shows, for example, place names or facility names (service areas, parking lots, etc.) and tells the driver in which direction they lie or how many kilometers ahead they are.

- The gesture input device 100E includes a pointing motion detection unit 120, an assist motion detection unit 160, an external information acquisition unit 170, a pointing target determination unit 130, a calling function selection unit 180, and an information processing device 190.

- The pointing motion detection unit 120 is the same as in the first embodiment and detects the driver's pointing gesture.

- The pointing gesture signal detected by the pointing motion detection unit 120 is output to the pointing target determination unit 130.

- As the assist motion detection unit 160, a camera similar to the pointing motion detection unit 120 is used to detect the driver's assist gesture.

- The assist gesture corresponds to another gesture.

- As assist gestures, for example, nodding, winking, and tapping a finger on the steering wheel are set in advance.

- The assist motion detection unit 160 is attached, for example, to the vehicle ceiling; it detects the driver's assist gesture and outputs the assist gesture signal to the pointing target determination unit 130.

- The assist motion detection unit 160 may also double as the pointing motion detection unit 120.

- The external information acquisition unit 170 captures the view ahead of the vehicle and thereby acquires the plurality of information display units 116 present ahead. For example, a camera similar to the pointing motion detection unit 120 is used.

- The external information acquisition unit 170 is attached, for example, at the front of the vehicle ceiling next to the rearview mirror, facing forward out of the vehicle.

- The information display units 116 (information display unit signals) acquired by the external information acquisition unit 170 are output to the pointing target determination unit 130.

- The pointing target determination unit 130 associates the pointing gesture detected by the pointing motion detection unit 120 with the plurality of information display units 116 acquired by the external information acquisition unit 170, and selects and confirms, as the operation target, the information display unit 116 pointed at by the pointing gesture.
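For the fifth embodiment, the disclosure states only that unit 130 associates the pointing gesture with the information display units captured by unit 170. Assuming an earlier calibration step (not described in the source) has already projected the pointing direction to a pixel in the front-camera image, and that signs arrive as bounding boxes, the association could be a nearest-box lookup such as this; all names and the 40-pixel radius are illustrative.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in front-camera pixels


@dataclass(frozen=True)
class SignDetection:
    label: str
    box: Box


def box_distance(point: Tuple[float, float], box: Box) -> float:
    """Pixel distance from a point to a box (0 if the point lies inside it)."""
    x, y = point
    x0, y0, x1, y1 = box
    dx = max(x0 - x, 0.0, x - x1)
    dy = max(y0 - y, 0.0, y - y1)
    return math.hypot(dx, dy)


def match_pointing(point: Tuple[float, float], detections: Sequence[SignDetection],
                   max_dist_px: float = 40.0) -> Optional[SignDetection]:
    """Return the detection nearest the pointed pixel, or None if none is close enough."""
    scored = [(box_distance(point, d.box), i, d) for i, d in enumerate(detections)]
    in_range = [c for c in scored if c[0] <= max_dist_px]
    if not in_range:
        return None
    # Ties go to the earlier detection.
    return min(in_range, key=lambda c: (c[0], c[1]))[2]
```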

- The selected and confirmed information display unit 116 (information display unit signal) is output to the calling function selection unit 180, described later.

- The pointing target determination unit 130 determines whether an assist gesture has occurred from the assist gesture signal detected by the assist motion detection unit 160.

- Based on the information display unit 116 selected and confirmed by the pointing target determination unit 130, the calling function selection unit 180 selects, from a predetermined table, a menu to offer the driver, such as a detailed display or destination guidance.

- It then issues an execution instruction to the information processing device 190.
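The "predetermined table" of the calling function selection unit 180 can be pictured as a lookup from the kind of confirmed information display unit to the menu items offered and the device that executes each one. The categories, item names, and routing below are hypothetical placeholders.

```python
from typing import Dict, List, Tuple

# Hypothetical table: category of the confirmed information display unit -> menu items.
CALL_TABLE: Dict[str, List[str]] = {
    "guide_sign": ["detail_display", "destination_guidance"],
    "landmark": ["detail_display"],
}

# Hypothetical routing of each menu item to an information processing device 190.
DEVICE_FOR_ITEM: Dict[str, str] = {
    "detail_display": "hud_112",
    "destination_guidance": "car_navigation_113",
}


def select_call_functions(category: str) -> List[Tuple[str, str]]:
    """Return (device, menu item) execution instructions for a confirmed display unit."""
    return [(DEVICE_FOR_ITEM[item], item) for item in CALL_TABLE.get(category, [])]
```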

- The information processing device 190 is, for example, the HUD device 112 (display device), the car navigation device 113, or the like.

- The gesture input device 100E according to the present embodiment is configured as described above. Its operation and effects are described below with reference to FIGS. 14 to 16.

- First, the pointing motion detection unit 120 detects a pointing gesture by the driver. That is, the pointing motion detection unit 120 captures the movement of the driver's finger as images and outputs the image data (gesture signal) to the pointing target determination unit 130.

- In step S310, the external information acquisition unit 170 captures the scene ahead of the vehicle to acquire the plurality of information display units 116 and outputs the acquired information display unit signals to the pointing target determination unit 130.

- In step S320, the pointing target determination unit 130 associates the pointing gesture detected by the pointing motion detection unit 120 with the plurality of information display units 116 acquired by the external information acquisition unit 170. Then, from among the information display units 116, the one pointed at by the pointing gesture is detected (the state in FIG. 15A).

- In step S330, the pointing target determination unit 130 determines whether the pointing gesture in step S320 has continued toward that information display unit 116 for a predetermined time or longer.

- If the determination in step S330 is affirmative, in step S340 the pointing target determination unit 130 sets that information display unit 116 as a candidate pointing target (operation target).

- Next, in step S350, it is determined whether a predetermined time has elapsed with no pointing gesture. If so, the process proceeds to step S390; if not, it proceeds to step S360. That is, nod detection in the next step S360 is enabled only while no pointing gesture is being made and the predetermined time has not yet elapsed.

- In step S360, the pointing target determination unit 130 detects the driver's nodding gesture through the assist motion detection unit 160 (the state in FIG. 15B). Then, in step S370, it is determined whether the driver has indicated a target; if so, in step S380 the pointing target determination unit 130 selects and confirms the candidate set in step S340 as the operation target and outputs it to the calling function selection unit 180. If the determination in step S370 is negative, this control ends.
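Steps S340 to S380 turn a dwelled-on sign into a candidate and confirm it only if a nod follows before a time limit. A minimal sketch, assuming timestamps in seconds and a 3 s window; the S390 branch is not described in this excerpt, so it is left out, and all names are hypothetical.

```python
from typing import Optional


class NodConfirmation:
    """Sketch of S340-S380: a dwelled-on sign becomes a candidate; a later nod confirms it."""

    def __init__(self, window_s: float = 3.0) -> None:
        self.window_s = window_s  # assumed value of the "predetermined time" checked in S350
        self.candidate: Optional[str] = None
        self._pointing_ended_s = 0.0

    def set_candidate(self, sign_id: str, pointing_ended_s: float) -> None:
        """S340: register the sign that was pointed at long enough in S330."""
        self.candidate = sign_id
        self._pointing_ended_s = pointing_ended_s

    def on_nod(self, now_s: float) -> Optional[str]:
        """S350-S380: return the confirmed sign, or None if there is nothing to confirm."""
        if self.candidate is None:
            return None  # S370 negative: no pointed target
        if now_s - self._pointing_ended_s > self.window_s:
            self.candidate = None  # S350 affirmative: time is up (S390 not modeled)
            return None
        confirmed, self.candidate = self.candidate, None  # S380: hand off to unit 180
        return confirmed
```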

- The calling function selection unit 180 then, for example, displays detailed information about the facility or building on the head-up display unit 112a of the HUD device 112, as shown in FIG.

- Alternatively, the calling function selection unit 180 instructs the head-up display unit 112a or the car navigation device 113 to provide destination guidance.


- Because the external information acquisition unit 170 is provided, not only in-vehicle devices but also information display units 116 outside the vehicle can be operated by using the pointing gesture together with the auxiliary gesture.

- Since the operation target is selected and confirmed based on the pointing gesture, the driver does not need to look closely at the operation target as in the prior art, so safety checks related to driving are not hindered.

- Since the information display unit 116 is selected and confirmed only when the auxiliary gesture (the nodding gesture) is added to the pointing gesture, the selection reliably reflects the driver's intention.

- In the fifth embodiment, the external information acquisition unit 170 is provided to acquire the information display units 116 outside the vehicle.

- Alternatively, the information display units 116 may be acquired using road-to-vehicle communication, GPS, or the like.

- Although the nodding gesture is used as the auxiliary gesture in the fifth embodiment, the invention is not limited to this; the auxiliary gesture may instead be, for example, a wink as shown in FIG. 17B or a tapping gesture on the steering wheel as shown in FIG. 18B.

- In that case, the control content of step S360 described with reference to FIG. 14 is changed from "nod" to "wink", "tap operation", or the like.

- A gesture input device 100F according to a sixth embodiment is shown in FIGS. 19 to 24B.

- In the sixth embodiment, a line-of-sight measurement unit 195 is added to the configuration of the fifth embodiment, and the information display units 116 that the driver has visually recognized are compiled into a registration list. When a pointing gesture is directed at any of the information display units 116 in the registration list, that unit is selected and confirmed as the operation target.

- The line-of-sight measurement unit 195 is mounted, for example, on the vehicle ceiling, and measures the driver's line-of-sight direction from the position of the driver's face and the position of the pupils relative to the face orientation.
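One way to picture the line-of-sight measurement and the later association with information display units 116 (step S4013) is to combine face orientation with pupil angles and pick the nearest unit by angle. This is a simplified sketch: adding eye-in-head angles to the face angles, describing each unit by a yaw/pitch bearing from the driver's eyes, and the 5-degree acceptance cone are all assumptions, not values from the source.

```python
import math
from typing import Dict, Optional, Tuple

Vec = Tuple[float, float, float]


def direction(yaw_deg: float, pitch_deg: float) -> Vec:
    """Unit vector for a yaw/pitch pair (x forward, y left, z up)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (math.cos(pitch) * math.cos(yaw),
            math.cos(pitch) * math.sin(yaw),
            math.sin(pitch))


def gaze_direction(face_yaw: float, face_pitch: float,
                   eye_yaw: float, eye_pitch: float) -> Vec:
    """Assumption: pupil angles relative to the face simply add to the face orientation."""
    return direction(face_yaw + eye_yaw, face_pitch + eye_pitch)


def angle_deg(a: Vec, b: Vec) -> float:
    """Angle between two unit vectors, clamped against rounding error."""
    dot = sum(x * y for x, y in zip(a, b))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


def looked_at(gaze: Vec, units: Dict[str, Tuple[float, float]],
              max_deg: float = 5.0) -> Optional[str]:
    """Return the display unit closest to the gaze ray within max_deg, else None."""
    best, best_angle = None, max_deg
    for unit_id, (yaw, pitch) in units.items():
        a = angle_deg(gaze, direction(yaw, pitch))
        if a <= best_angle:
            best, best_angle = unit_id, a
    return best
```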

- The line-of-sight measurement unit 195 outputs a signal representing the driver's measured line-of-sight direction to the instruction target determination unit 130.

- The line-of-sight measurement unit 195 may also serve as the pointing operation detection unit 120.

- The configuration of the gesture input device 100F according to the present embodiment is as described above; its operation and effects are described below with reference to FIGS. 20 to 24B.

- In step S400, the instruction target determination unit 130 updates the instruction target candidate list.

- The instruction target candidate list is a list of the information display units 116 that the driver has visually recognized, as objects that may later be pointed at.

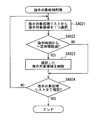

- As shown in the subflow of FIG., the instruction target candidate list is updated by registering instruction target candidates in step S401 and deleting instruction target candidates in step S402.

- The registration of instruction target candidates in step S401 is executed according to the subflow of FIG. In step S4011, the line-of-sight measurement unit 195 measures the driver's changing line-of-sight direction. Next, in step S4012, the external information acquisition unit 170 acquires external information (a plurality of information display units 116). Next, in step S4013, the instruction target determination unit 130 associates the line-of-sight direction with the information display units 116 and detects the information display units 116 that the driver has visually recognized as visual targets (state in FIG. 24A).

- In step S4014, the instruction target determination unit 130 determines whether a given information display unit 116 has been visually recognized for a predetermined time or longer. If negative, the present control ends. If affirmative, in step S4015 the instruction target determination unit 130 determines whether the visually recognized information display unit 116 is already registered in the instruction target candidate list (registration list). If affirmative, the process proceeds to step S4016; if negative, it proceeds to step S4017.

- In step S4016, since the visual target is already registered in the candidate list, the instruction target determination unit 130 leaves the list contents unchanged and updates only the time at which the target was visually recognized. In other words, the candidate stays in the list.

- In step S4017, since the visual target is not yet registered in the candidate list, the instruction target determination unit 130 registers it as an instruction target candidate. That is, the newly recognized information display unit 116 is added to the candidate list.
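Steps S4014 to S4017 amount to a dwell-time filter feeding a keyed list. In the hypothetical `CandidateRegistry` below, a dictionary keyed by unit id stores the time of the last qualifying view; the dwell threshold `dwell_s` is an assumed value for the "predetermined time".

```python
from typing import Dict, Optional


class CandidateRegistry:
    """Hypothetical sketch of registration into the candidate list (S4013 to S4017)."""

    def __init__(self, dwell_s: float = 0.5) -> None:
        self.dwell_s = dwell_s                  # assumed "predetermined time" for S4014
        self.last_seen: Dict[str, float] = {}   # unit id -> time of last qualifying view
        self._viewing: Optional[str] = None     # unit currently looked at
        self._since = 0.0                       # when the current view started

    def observe(self, t: float, viewed: Optional[str]) -> None:
        """Feed one sample: the unit matched to the gaze in S4013, or None."""
        if viewed != self._viewing:
            self._viewing, self._since = viewed, t
        if viewed is None or t - self._since < self.dwell_s:
            return  # S4014 negative: not viewed long enough yet
        # S4015: already listed? Assigning covers both branches:
        # S4016 refreshes only the stored time, S4017 adds a new entry.
        self.last_seen[viewed] = t
```

Because a dictionary assignment either inserts a new key or overwrites an existing one, steps S4016 and S4017 collapse into a single line.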

- The deletion of instruction target candidates in step S402 is executed according to the subflow of FIG. In step S4021, the instruction target determination unit 130 selects one instruction target candidate from the candidate list.

- In step S4022, the instruction target determination unit 130 determines whether a predetermined time has elapsed since the selected candidate was last visually recognized. If negative, the process proceeds to step S4024; if affirmative, the instruction target determination unit 130 deletes the selected candidate in step S4023. That is, candidates that have not been looked at again within the predetermined time are deleted.

- In step S4024, it is determined whether steps S4021 to S4023 have been performed for every candidate in the list. If negative, steps S4021 to S4024 are repeated; if affirmative, this control ends.
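The deletion subflow (steps S4021 to S4024) can be sketched as a pass over the same kind of unit-id-to-time mapping, dropping entries whose last view is older than an assumed timeout `timeout_s`. The function name and the mapping layout are hypothetical.

```python
from typing import Dict


def prune_candidates(last_seen: Dict[str, float], now: float,
                     timeout_s: float) -> Dict[str, float]:
    """Return the candidates still considered current at time `now`."""
    kept: Dict[str, float] = {}
    for unit_id, seen_at in last_seen.items():  # S4021/S4024: visit every candidate
        if now - seen_at >= timeout_s:            # S4022: predetermined time elapsed
            continue                              # S4023: delete by not keeping it
        kept[unit_id] = seen_at
    return kept
```

Returning a new dictionary, rather than deleting while iterating, avoids mutating the mapping during the loop.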

- Next, in step S410, the pointing operation detection unit 120 detects a pointing gesture by the driver, and in step S420, the external information acquisition unit 170 acquires a plurality of information display units 116 by photographing the scene ahead of the vehicle.

- In step S430, the instruction target determination unit 130 associates the pointing gesture detected by the pointing operation detection unit 120 with the plurality of information display units 116 acquired by the external information acquisition unit 170, and detects, among them, the information display unit 116 at which the pointing gesture is directed (state in FIG. 24B).

- In step S440, the instruction target determination unit 130 determines whether the pointing gesture detected in step S430 has been held toward that information display unit 116 for a predetermined time or longer. If negative, this control ends.

- If the determination in step S440 is affirmative, in step S450 the instruction target determination unit 130 determines whether that information display unit 116 is registered in the instruction target candidate list. If negative, this control ends.

- If the determination in step S450 is affirmative, in step S460 the pointed-at information display unit 116 is selected and confirmed as the operation target and output to the call function selection unit 180.
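Steps S410 to S460 add one check to the fifth-embodiment pointing logic: the held pointing target must already be in the candidate list. A minimal sketch, assuming pointing samples arrive as hypothetical `(time, unit id or None)` pairs and that `hold_s` stands in for the predetermined time of step S440:

```python
from typing import Iterable, Optional, Set, Tuple


def select_registered(samples: Iterable[Tuple[float, Optional[str]]],
                      candidates: Set[str], hold_s: float = 1.0) -> Optional[str]:
    """Confirm a pointed-at unit only if it is held long enough and was looked at before."""
    target: Optional[str] = None
    since = 0.0
    for t, pointed in samples:        # S410-S430: unit matched to the pointing gesture
        if pointed != target:         # pointing moved or stopped: restart the timer
            target, since = pointed, t
        if target is None or t - since < hold_s:
            continue                  # S440 not (yet) satisfied
        # S450: registered candidates only; S460 confirms the operation target
        return target if target in candidates else None
    return None
```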

- For example, the call function selection unit 180 causes the head-up display unit 112a of the HUD device 112 to display detailed information on the selected facility or building.

- If the selected and confirmed information display unit 116 is, for example, the name of a place toward which the driver is heading, the call function selection unit 180 instructs the head-up display unit 112a or the car navigation apparatus 113 to provide destination guidance.

- In the sixth embodiment, visually recognized targets (information display units 116) are placed in the candidate list (registration list), and when a pointing gesture is performed on any of the visual targets in the list, the pointed-at information display unit 116 is selected and confirmed as the operation target. Because selection presupposes that the driver has actually looked at the information display unit 116, an information display unit 116 the driver did not intend is not selected.

- The instruction target candidate list of the present embodiment may also be adopted in the fifth embodiment and its modification.

- A vibration generating unit that vibrates the steering wheel may be provided so that the steering wheel vibrates after the instruction target is selected and confirmed.

- A sound generating unit that produces sound effects and voice may be provided so that, after the instruction target is selected and confirmed, a sound effect (a beep) or a voice message ("selected and confirmed") is presented to the driver.


- An end gesture for ending an input operation, for example a gesture of stopping pointing and gripping the steering wheel, or a predetermined hand gesture, may be set in advance; when the instruction target determination unit 130 recognizes this end gesture, one input operation may be ended.

- Although a sensor or a camera is used as the pointing operation detection unit 120, the pointing gesture may instead be detected using, for example, a glove equipped with a sensor capable of detecting finger bending.

- The operator is not limited to the driver and may be a passenger.

- When the front passenger performs the various gestures described above, the pointing operation detection unit 120 also recognizes those gestures, so the front passenger can operate the various vehicle devices as well.

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- General Engineering & Computer Science (AREA)

- Human Computer Interaction (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Mechanical Engineering (AREA)

- Health & Medical Sciences (AREA)

- Audiology, Speech & Language Pathology (AREA)

- General Health & Medical Sciences (AREA)

- User Interface Of Digital Computer (AREA)

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| JP2017203204A JP6819539B2 (ja) | 2017-10-20 | 2017-10-20 | Gesture input device (ジェスチャ入力装置) |
| JP2017-203204 | 2017-10-20 | | |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| WO2019077908A1 (ja) | 2019-04-25 |

Family ID: 66174023

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| PCT/JP2018/033391 Ceased WO2019077908A1 (ja) | Gesture input device (ジェスチャ入力装置) | 2017-10-20 | 2018-09-10 |

Country Status (2)

| Country | Link |
|---|---|
| JP (1) | JP6819539B2 |
| WO (1) | WO2019077908A1 |

Families Citing this family (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| JP7495795B2 (ja) * | 2020-02-28 | 2024-06-05 | 株式会社Subaru | Vehicle occupant monitoring device (車両の乗員監視装置) |
| JP7157188B2 (ja) * | 2020-10-28 | 2022-10-19 | 株式会社日本総合研究所 | Information processing method, information processing system, and program (情報処理方法、情報処理システム及びプログラム) |

Citations (4)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| JP2001216069A (ja) * | 2000-02-01 | 2001-08-10 | Toshiba Corp | Operation input device and direction detection method (操作入力装置および方向検出方法) |
| JP2005178473A (ja) * | 2003-12-17 | 2005-07-07 | Denso Corp | Interface for in-vehicle equipment (車載機器用インターフェース) |
| JP2007080060A (ja) * | 2005-09-15 | 2007-03-29 | Matsushita Electric Ind Co Ltd | Object identification device (対象物特定装置) |
| JP2016091192A (ja) * | 2014-10-31 | 2016-05-23 | パイオニア株式会社 | Virtual image display device, control method, program, and storage medium (虚像表示装置、制御方法、プログラム、及び記憶媒体) |

- 2017-10-20: JP2017203204A (JP6819539B2), JP, not active (Expired - Fee Related)
- 2018-09-10: PCT/JP2018/033391 (WO2019077908A1), WO, not active (Ceased)


Also Published As

| Publication number | Publication date |
|---|---|
| JP2019079097A (ja) | 2019-05-23 |
| JP6819539B2 (ja) | 2021-01-27 |

Similar Documents

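| Publication | Publication Date | Title |
|---|---|---|
| US10466800B2 (en) | | Vehicle information processing device |
| US9649938B2 (en) | | Method for synchronizing display devices in a motor vehicle |
| JP2023060258A (ja) | | Display device, control method, program, and storage medium (表示装置、制御方法、プログラム及び記憶媒体) |
| US20190004614A1 (en) | | In-Vehicle Device |
| US10346118B2 (en) | | On-vehicle operation device |
| JP2019175449A (ja) | | Information processing device, information processing system, mobile body, information processing method, and program (情報処理装置、情報処理システム、移動体、情報処理方法、及びプログラム) |
| WO2016084360A1 (ja) | | Vehicle display control device (車両用表示制御装置) |
| WO2019016936A1 (ja) | | Operation support device and operation support method (操作支援装置および操作支援方法) |
| CN105523041A (zh) | | Lane departure warning system and method for controlling the same (车道偏离警告系统和用于控制该系统的方法) |
| CN108153411A (zh) | | Method and device for interacting with a graphical user interface (用于与图形用户界面交互的方法和装置) |
| KR20170010066A (ko) | | Method and device for providing an in-vehicle user interface (차량 내 사용자 인터페이스 제공 방법 및 그 장치) |
| JP2011102732A (ja) | | Navigation system (ナビゲーションシステム) |
| WO2018061603A1 (ja) | | Gesture operation system, gesture operation method, and program (ジェスチャ操作システム、ジェスチャ操作方法およびプログラム) |
| WO2019077908A1 (ja) | | Gesture input device (ジェスチャ入力装置) |
| JP2014021748A (ja) | | Operation input device and in-vehicle equipment using the same (操作入力装置及びそれを用いた車載機器) |
| JP2011255812A (ja) | | Parking assistance device (駐車支援装置) |
| WO2018116565A1 (ja) | | Vehicle information display device and vehicle information display program (車両用情報表示装置及び車両用情報表示プログラム) |
| JP2008185452A (ja) | | Navigation device (ナビゲーション装置) |
| TWM564749U (zh) | | Vehicle multi-screen control system (車輛多螢幕控制系統) |
| JP2009126366A (ja) | | Vehicle display device (車両用表示装置) |
| JP2020181279A (ja) | | Vehicle menu display control device, in-vehicle equipment operation system, and GUI program (車両用メニュー表示制御装置、車載機器操作システム、及びguiプログラム) |
| WO2016031152A1 (ja) | | Vehicle input interface (車両用入力インターフェイス) |
| CN103857551A (zh) | | Method for providing an operating device in a vehicle, and operating device (用于提供在车辆中的操作装置的方法和操作装置) |
| JP2013250942A (ja) | | Input system (入力システム) |
| JP2018063521A (ja) | | Display control system and display control program (表示制御システムおよび表示制御プログラム) |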

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | 121 | Ep: the epo has been informed by wipo that ep was designated in this application | Ref document number: 18868275; Country of ref document: EP; Kind code of ref document: A1 |
| | NENP | Non-entry into the national phase | Ref country code: DE |
| | 122 | Ep: pct application non-entry in european phase | Ref document number: 18868275; Country of ref document: EP; Kind code of ref document: A1 |