WO2018203501A1 - Object control system and object control method - Google Patents

- Publication number

- WO2018203501A1 (application PCT/JP2018/016759)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- user

- internal state

- emotion

- robot

- management unit

Classifications

-

- B—PERFORMING OPERATIONS; TRANSPORTING

- B25—HAND TOOLS; PORTABLE POWER-DRIVEN TOOLS; MANIPULATORS

- B25J—MANIPULATORS; CHAMBERS PROVIDED WITH MANIPULATION DEVICES

- B25J11/00—Manipulators not otherwise provided for

- B25J11/0005—Manipulators having means for high-level communication with users, e.g. speech generator, face recognition means

- B25J11/001—Manipulators having means for high-level communication with users, e.g. speech generator, face recognition means with emotions simulating means

-

- B—PERFORMING OPERATIONS; TRANSPORTING

- B25—HAND TOOLS; PORTABLE POWER-DRIVEN TOOLS; MANIPULATORS

- B25J—MANIPULATORS; CHAMBERS PROVIDED WITH MANIPULATION DEVICES

- B25J11/00—Manipulators not otherwise provided for

- B25J11/0005—Manipulators having means for high-level communication with users, e.g. speech generator, face recognition means

- B25J11/0015—Face robots, animated artificial faces for imitating human expressions

-

- B—PERFORMING OPERATIONS; TRANSPORTING

- B25—HAND TOOLS; PORTABLE POWER-DRIVEN TOOLS; MANIPULATORS

- B25J—MANIPULATORS; CHAMBERS PROVIDED WITH MANIPULATION DEVICES

- B25J11/00—Manipulators not otherwise provided for

- B25J11/003—Manipulators for entertainment

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06N—COMPUTING ARRANGEMENTS BASED ON SPECIFIC COMPUTATIONAL MODELS

- G06N3/00—Computing arrangements based on biological models

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10L—SPEECH ANALYSIS OR SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING; SPEECH OR AUDIO CODING OR DECODING

- G10L25/00—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00

- G10L25/48—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 specially adapted for particular use

- G10L25/51—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 specially adapted for particular use for comparison or discrimination

- G10L25/63—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 specially adapted for particular use for comparison or discrimination for estimating an emotional state

Definitions

- the present invention relates to a technique for controlling the behavior of an object that is an actual object or a virtual object.

- the present inventor has focused on the possibility of using a robot as a partner with whom the user shares a co-viewing experience. For example, if the robot watches the game beside the user during game play and is happy or sad together with the user, the user's affinity for the robot is expected to increase and the user's motivation to play the game is expected to improve. Further, not only for games but also for movies, TV programs, and the like, it is expected that the user can enjoy the content more by watching with the robot than when watching alone.

- the present invention has been made in view of these problems, and an object of the present invention is to provide a technique by which a user can obtain a co-view experience with an object such as a robot.

- an aspect of the present invention is an object control system that controls an object, including: an emotion estimation unit that estimates a user's emotion; a user internal state storage unit that stores an internal state of the user including the user's emotion; an object internal state storage unit that stores an internal state of the object including the object's emotion; an internal state management unit that manages the internal state of the object and the internal state of the user based on the estimated user emotion; a behavior management unit that determines the behavior of the object based on the internal state of the object; and an output processing unit that causes the object to perform the behavior determined by the behavior management unit.

- the method includes estimating a user's emotion, managing a user's internal state including the user's emotion, managing an object's internal state including the object's emotion, updating the internal state of the object and the internal state of the user based on the estimated user emotion, determining the behavior of the object based on the internal state of the object, and causing the object to perform the determined behavior.

- any combination of the above components, and any conversion of the expression of the present invention between a method, an apparatus, a system, a computer program, a recording medium on which the computer program is readably recorded, a data structure, and the like, are also effective as embodiments of the present invention.

- the object control system provides a mechanism for realizing a co-view experience with an actual object or a virtual object.

- the actual object may be a human-type or pet-type robot, and it is preferable that at least sound can be output, and a motor is provided at the joint part to move an arm, leg, neck, or the like.

- the user places the robot beside them and watches the content together, and the robot communicates with the user by outputting, based on the estimated emotion of the user, a reaction that sympathizes with the user or a reaction that repels the user.

- the virtual object may be a character such as a person or a pet composed of a 3D model, and exists in a virtual space generated by a computer.

- in a virtual space constructed when the user wears a head mounted display (HMD), the content is played in front of the user, and when the user turns sideways, the user can see the virtual character watching the content together.

- the virtual character also communicates with the user by outputting a reaction that sympathizes with the user, or by outputting a reaction that repels the user.

- FIG. 1 shows an example of the appearance of an actual object.

- This object is a humanoid robot 20 and includes a speaker that outputs sound, a microphone that inputs sound of the outside world, a motor that moves each joint, and a drive mechanism that includes a link that connects the motors.

- the robot 20 preferably has an interactive function of having a conversation with the user, and more preferably has an autonomous movement function.

- FIG. 2 is a diagram for explaining the outline of the object control system 1.

- FIG. 2 shows a situation where the user sits on the sofa and plays a game, and the robot 20 sits on the same sofa and watches the user's game play.

- the robot 20 preferably has an autonomous movement function advanced enough to sit on the sofa by itself. If the robot 20 does not have such a function, the user may carry the robot 20 to the sofa and seat it beside them.

- the robot 20 may participate in the game as a virtual player, and for example, may virtually operate the opponent team of the baseball game that the user is playing.

- the information processing apparatus 10 receives operation information input to the input device 12 by the user and executes an application such as a game.

- the information processing apparatus 10 may be capable of reproducing content media such as a DVD or streaming content from a content server by connecting to a network.

- the camera 14 is a stereo camera, captures a user sitting in front of the display device 11, which is a television, at a predetermined cycle, and supplies the captured image to the information processing apparatus 10.

- the object control system 1 controls the behavior of the robot 20 that is a real object by estimating the emotion from the reaction of the user.

- the behavior of the robot 20, which cheers on the user's game play, is basically controlled so that the robot 20 rejoices and grieves together with the user, expressing sympathy and giving the user a co-viewing experience.

- the object control system 1 of the embodiment manages the internal states of the robot 20 and the user. For example, even if the user is pleased, if the favorability of the robot 20 toward the user is low, the object control system 1 controls the behavior so that the robot 20 is not pleased together with the user (does not sympathize).

- the behavior may be controlled so as to be sad if the user is happy.

- the robot 20 basically expresses emotions by outputting the utterance content indicating emotions as voice, but may express emotions by moving the body.

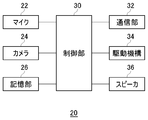

- FIG. 3 shows the input / output system of the robot 20.

- the control unit 30 is a main processor that processes and outputs various data such as voice data and sensor data and commands.

- the microphone 22 collects ambient sounds and converts them into audio signals, and the camera 24 captures the surroundings to obtain a captured image.

- the storage unit 26 temporarily stores data, commands, and the like that are processed by the control unit 30.

- the communication unit 32 transmits data output from the control unit 30 to the external information processing apparatus 10 by wireless communication via the antenna. In addition, the communication unit 32 receives the audio data and the drive data from the information processing apparatus 10 via the antenna through wireless communication and outputs them to the control unit 30.

- the drive mechanism 34 includes motors incorporated in the joint parts, which are the movable parts of the robot 20, and a link mechanism that connects the motors; the arm, leg, neck, and the like of the robot 20 are moved by driving the motors.

- FIG. 4 shows the configuration of an object control system that controls objects.

- the object control system 1 includes an emotion estimation unit 100, an internal state management unit 110, a behavior management unit 120, an internal state storage unit 130, and an output processing unit 140.

- the internal state storage unit 130 includes an object internal state storage unit 132 and a user internal state storage unit 134.

- the emotion estimation unit 100 includes a first emotion estimation unit 102, a second emotion estimation unit 104, and a third emotion estimation unit 106, and performs a process of estimating emotions in three systems.

- the object control system 1 also includes a camera 14 that captures the user, a biological sensor 15 that detects the user's biometric information, a motion sensor 16 that detects the user's movement, a microphone 17 that obtains an audio signal around the user, and an event detection unit 40 that detects the occurrence of an event.

- each element described as a functional block for performing various processes can be configured, in terms of hardware, by a circuit block, a memory, and other LSIs, and is realized, in terms of software, by a program loaded into the memory. Therefore, it is understood by those skilled in the art that these functional blocks can be realized in various forms by hardware only, software only, or a combination thereof, and they are not limited to any one of these.

- each of the emotion estimation unit 100, the internal state management unit 110, the behavior management unit 120, the internal state storage unit 130, and the output processing unit 140 may be provided in the information processing apparatus 10, and the information processing apparatus 10 may control the behavior of the robot 20.

- alternatively, each of the emotion estimation unit 100, the internal state management unit 110, the behavior management unit 120, the internal state storage unit 130, and the output processing unit 140 may be provided in the robot 20, and the robot 20 may autonomously control its own behavior.

- the robot 20 is configured as an autonomous robot and can act alone.

- a part of the emotion estimation unit 100, the internal state management unit 110, the behavior management unit 120, the internal state storage unit 130, and the output processing unit 140 may be provided in the information processing apparatus 10, and the rest may be provided in the robot 20.

- the information processing apparatus 10 and the robot 20 work together to control the behavior of the robot 20.

- the object control system 1 shown in FIG. 4 may be realized in various modes.

- the audio signal acquired by the microphone 22 of the robot 20 and the captured image captured by the camera 24 may be transmitted to the information processing apparatus 10 for use in processing in the information processing apparatus 10.

- the audio signal acquired by the microphone 17 connected to the information processing apparatus 10 and the captured image captured by the camera 14 may be transmitted to the robot 20 for use in processing in the robot 20.

- an audio signal or a photographed image may be acquired by a microphone or camera other than those illustrated, and provided to the information processing apparatus 10 or the robot 20.

- the camera 14 representatively captures the state of the user

- the microphone 17 collects the user's utterance and converts it into an audio signal.

- the emotion estimation unit 100 estimates a user's emotion based on outputs from various sensors and data from an external server.

- the emotion estimation unit 100 estimates a user's emotion by deriving an evaluation value for each of emotion indexes such as joy, anger, affection, and surprise.

- the user's emotion is expressed by a simple model: for each emotion index, the emotion estimation unit 100 estimates one of three evaluation values, namely a good emotion (“positive”), a bad emotion (“negative”), or an emotion that is neither good nor bad (“neutral”).

- each of “positive” and “negative” may be subdivided into a plurality of stages so that a fine evaluation value can be derived.
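
The emotion model described above can be pictured as a small mapping from emotion index to evaluation value. The following is only a minimal sketch: the names `Evaluation`, `EMOTION_INDEXES`, and `new_internal_state`, and the choice of "neutral" as the initial value, are assumptions made for illustration and do not appear in the source.

```python
from enum import Enum


class Evaluation(Enum):
    """The three evaluation values of the simple emotion model."""
    POSITIVE = "positive"
    NEGATIVE = "negative"
    NEUTRAL = "neutral"


# Emotion indexes named in the text; the tuple itself is an illustrative assumption.
EMOTION_INDEXES = ("joy", "anger", "affection", "surprise")


def new_internal_state():
    """Create one evaluation value per emotion index, starting at neutral (assumed default)."""
    return {index: Evaluation.NEUTRAL for index in EMOTION_INDEXES}


state = new_internal_state()
state["joy"] = Evaluation.POSITIVE  # e.g. after a pleased reaction is estimated
```

A finer-grained variant, as the text suggests, could replace the enum with a signed integer scale for each index.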

- the first emotion estimation unit 102 is a non-verbal behavior understanding unit that estimates the user's emotion from paralinguistic or non-linguistic information based on the outputs of various sensors, that is, the camera 14 (an image sensor), the biological sensor 15, the motion sensor 16, and the microphone 17. In contrast, the second emotion estimation unit 104 is characterized by estimating the user's emotion based on the utterance content.

- the first emotion estimation unit 102 extracts changes in facial expression, line-of-sight direction, hand gesture, and the like of the user's face from the captured image of the camera 14, and estimates the user's emotion.

- the biological sensor 15 is attached to the user and detects biological information such as the user's heart rate and sweating state, and the first emotion estimation unit 102 derives the user's psychological state from the biological information and estimates the user's emotion.

- the motion sensor 16 is attached to the user and detects the user's movement, and the first emotion estimation unit 102 estimates the user's emotion from the user's movement.

- the role of the motion sensor 16 can be substituted by analyzing the captured image of the camera 14.

- the first emotion estimation unit 102 estimates the user's emotion from the voice signal of the microphone 17 using the feature amount of the paralinguistic information.

- Paralinguistic information includes information such as speech speed, volume, voice inflection, intonation, and wording.

- the first emotion estimation unit 102 collects the user's emotions estimated from the outputs of the various sensors as one evaluation value for each evaluation index, and supplies the evaluation value to the internal state management unit 110.

- the second emotion estimation unit 104 estimates the user's emotion based on the user's utterance content. Specifically, it performs natural language understanding that analyzes the user's utterance content from the output of the microphone 17, and estimates the user's emotion from the result. A known algorithm may be used as the natural language understanding method. If the user says “All right! I hit a home run” while playing the baseball game, the second emotion estimation unit 104 estimates the user's “joy” index as “positive”, whereas if the user says “I gave up a home run”, the second emotion estimation unit 104 may estimate the user's “joy” index as “negative”. The second emotion estimation unit 104 supplies the estimated evaluation value of the user's emotion to the internal state management unit 110.

- the event detection unit 40 detects the occurrence of an event in the object control system 1 and notifies the third emotion estimation unit 106 of the content of the event that has occurred.

- the event detection unit 40 may be notified of the event by executing the game program on the emulator so that the emulator can detect an event such as a home run.

- the event detection unit 40 may detect a home run event by referring to access to effect data reproduced during a home run, or may detect a home run event from an effect actually displayed on the display device 11.

- the third emotion estimation unit 106 estimates the user's emotion from the notified event content, and supplies the evaluation value to the internal state management unit 110.

- the event detection unit 40 may be provided with event occurrence timing from an external server that accumulates big data. For example, when the information processing apparatus 10 plays movie content, the event detection unit 40 acquires in advance, from the external server, a correspondence table between the time information of scenes of interest in the movie content and the user emotion estimated for each such scene, and provides the table to the third emotion estimation unit 106. When the time of a scene of interest arrives, the event detection unit 40 notifies the third emotion estimation unit 106 of the time information, and the third emotion estimation unit 106 refers to the correspondence table, acquires the emotion associated with that time information, and thereby estimates the user's emotion in the scene of interest. As described above, the third emotion estimation unit 106 may estimate the user's emotion based on information from the external server.
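
The time-based lookup described above might be sketched as follows. The table contents, the use of seconds as the time unit, the interval representation, and the names `SCENE_TABLE` and `emotion_at` are all hypothetical; the source does not specify the table format.

```python
import bisect

# Hypothetical correspondence table obtained in advance from the external server:
# (scene start in seconds, scene end in seconds, (emotion index, evaluation value)).
# Rows are assumed to be sorted by start time and non-overlapping.
SCENE_TABLE = [
    (120.0, 150.0, ("surprise", "positive")),
    (600.0, 640.0, ("joy", "negative")),
]
_STARTS = [row[0] for row in SCENE_TABLE]


def emotion_at(playback_seconds):
    """Return the emotion associated with the scene of interest at this time, or None."""
    i = bisect.bisect_right(_STARTS, playback_seconds) - 1
    if i < 0:
        return None  # before the first scene of interest
    start, end, emotion = SCENE_TABLE[i]
    return emotion if playback_seconds <= end else None
```

For example, `emotion_at(130.0)` falls inside the first scene, while `emotion_at(200.0)` lies between scenes and yields no estimate.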

- the object internal state storage unit 132 stores the internal state of the robot 20, which is the object.

- the user internal state storage unit 134 stores the internal state of the user.

- the internal state of the robot 20 is defined by at least the emotions of the robot 20 and the favorability of the robot 20 toward the user.

- the internal state of the user is defined by the emotions of the user and the favorability of the user toward the robot 20.

- the likability is generated based on the evaluation values of the latest emotions up to the present, and is evaluated based on the long-term relationship between the user and the robot 20.

- the internal state of the robot 20 is derived from the user's behavior with respect to the robot 20.

- the internal state management unit 110 manages the internal state of the robot 20 and the internal state of the user based on the user's emotion estimated by the emotion estimation unit 100.

- the internal state of the user will be described first, and then the internal state of the robot 20 will be described.

- the user's “emotion” is set by the emotion estimated by the emotion estimation unit 100.

- the internal state management unit 110 updates the evaluation value in the user internal state storage unit 134 based on the emotion estimated by the emotion estimation unit 100.

- when a “positive” evaluation value is supplied for an emotion index, the internal state management unit 110 updates the evaluation value of that emotion index to “positive”, and when a “negative” evaluation value is supplied, it updates the evaluation value of that emotion index to “negative”.

- here, “update” means a process of overwriting the original evaluation value in the internal state storage unit 130. Even if the original evaluation value is “positive” and the overwriting value is also “positive”, so that the value does not change, the evaluation value is regarded as updated.

- the emotion estimation unit 100 estimates the user's emotions in the three systems of the first emotion estimation unit 102, the second emotion estimation unit 104, and the third emotion estimation unit 106.

- the evaluation values may contradict each other.

- the evaluation value update processing regarding the “joy” index when the user hits a home run in a baseball game will be described.

- the third emotion estimation unit 106 holds an evaluation value table for estimating the emotion as “positive” when the user hits a home run and as “negative” when a home run is hit against the user.

- when the third emotion estimation unit 106 receives, from the event detection unit 40, an event notification that the user has hit a home run, it refers to the evaluation value table and estimates the evaluation value of the “joy” index as “positive”.
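
A minimal sketch of such an evaluation value table is a dictionary keyed by event. The event names and the function `estimate_from_event` below are hypothetical labels chosen for illustration; the source does not define how events are identified.

```python
# Hypothetical evaluation value table: event name -> (emotion index, evaluation value).
EVENT_TABLE = {
    "user_hits_home_run": ("joy", "positive"),
    "home_run_hit_against_user": ("joy", "negative"),
}


def estimate_from_event(event_name):
    """Look up the fixed evaluation value defined for an event; None if the event is unknown."""
    return EVENT_TABLE.get(event_name)
```

Because the table is fixed per event, this estimate cannot reflect how the user actually reacted, which is why the other two estimation systems are also consulted.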

- when the user shows joy at the home run, both the first emotion estimation unit 102 and the second emotion estimation unit 104 estimate the evaluation value of the “joy” index as “positive”. Therefore, the evaluation values of the “joy” index supplied from the three systems of the emotion estimation unit 100 to the internal state management unit 110 are all “positive”, and the internal state management unit 110 updates the evaluation value of the “joy” index in the user internal state storage unit 134 to “positive”.

- the user may not always exhibit joyful behavior. For example, if the batter needs only a triple to complete a cycle hit (one batter hitting at least one single, one double, one triple, and one home run in a single game) and the user was aiming for the triple, the user may not be happy even after hitting a home run. Besides winning and losing, many games have mechanisms for awarding special plays such as a cycle hit, and a user who is aiming for such an award may be happier with a triple than with a home run. Therefore, when the user who hit the home run says “A home run, huh?”, both the first emotion estimation unit 102 and the second emotion estimation unit 104 estimate the evaluation value of the “joy” index as “negative”.

- in this case, the internal state management unit 110 is supplied with a “negative” evaluation value from the first emotion estimation unit 102 and the second emotion estimation unit 104, and a “positive” evaluation value from the third emotion estimation unit 106.

- the internal state management unit 110 may employ an evaluation value that matches in more than half of the evaluation values supplied from the three systems. That is, the internal state management unit 110 employs an evaluation value that matches more than half of the evaluation values supplied independently from a plurality of systems according to the majority rule, and therefore, in this case, the evaluation of the “joy” index. The value may be updated to “negative”.
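
The majority rule above can be illustrated with a short function. This is a sketch under assumptions: evaluation values are plain strings, a system that supplied nothing is represented by `None`, and returning `None` when no value reaches a majority is an illustrative choice the source does not address.

```python
from collections import Counter


def majority_vote(values):
    """Return the evaluation value shared by more than half of the supplied values, else None."""
    supplied = [v for v in values if v is not None]
    if not supplied:
        return None
    value, count = Counter(supplied).most_common(1)[0]
    return value if count > len(supplied) / 2 else None


# The home run example from the text: first and second systems say "negative",
# the third (event-based) system says "positive".
adopted = majority_vote(["negative", "negative", "positive"])
```

Here `adopted` is "negative", matching the outcome described for the user who wanted a triple.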

- the internal state management unit 110 may set a priority order for the evaluation values supplied from the three systems, and may determine an evaluation value to be adopted according to the priority order. Hereinafter, ranking of each evaluation value will be described.

- the evaluation value estimated by the third emotion estimation unit 106 is a fixed evaluation value defined for the event, and does not reflect the actual state of the user. Therefore, the evaluation value estimated by the third emotion estimation unit 106 may be given the lowest priority.

- the second emotion estimation unit 104 performs emotion estimation based only on the user's utterance content, so the amount of information available for estimation is small. In addition, even if the utterance content itself is positive, the user may be speaking self-deprecatingly. For example, the user was aiming for a triple, hit a home run instead, and announces the home run with drooping shoulders. In this case, the second emotion estimation unit 104 estimates a “positive” evaluation value, but the first emotion estimation unit 102 estimates a “negative” evaluation value from the user's attitude and paralinguistic information.

- the internal state management unit 110 may therefore set the reliability of the evaluation value obtained by the first emotion estimation unit 102 higher than the reliability of the evaluation value obtained by the second emotion estimation unit 104. Accordingly, the internal state management unit 110 may set the priority order as the first emotion estimation unit 102, then the second emotion estimation unit 104, then the third emotion estimation unit 106.

- the internal state management unit 110 can update the user's internal state if the evaluation value is obtained in at least one of the systems by setting the priority order.

- following the priority order, the internal state management unit 110 updates the user's internal state with the highest-priority evaluation value that is available. For example, if, when the user hits a home run, neither the first emotion estimation unit 102 nor the second emotion estimation unit 104 can estimate whether the user's emotion is positive or negative (the user does not move or speak at all), the internal state management unit 110 may adopt the evaluation value estimated by the third emotion estimation unit 106.
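
The priority-order alternative can be sketched as a simple fallback chain. The function name `pick_by_priority` and the use of `None` for "no estimate available" are assumptions for illustration.

```python
def pick_by_priority(first, second, third):
    """Adopt the first available evaluation value in priority order.

    Priority follows the text: first emotion estimation unit (non-verbal cues),
    then second (utterance content), then third (event-defined value).
    None means that system could not produce an estimate.
    """
    for value in (first, second, third):
        if value is not None:
            return value
    return None
```

With this scheme a single available estimate is enough to update the user's internal state, for instance `pick_by_priority(None, None, "positive")` when the user neither moves nor speaks.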

- the “favorability” of the user toward the robot 20 is derived from a plurality of emotion evaluation values estimated from what the user has said and done to the robot 20 so far. Assuming that the name of the robot 20 is “Hikoemon”, when the robot 20 speaks to the user, if the user responds “You're right, Hikoemon”, the emotion estimation unit 100 estimates the evaluation value of the user's “love” index to be “positive”, whereas if the user responds “Hikoemon, be quiet, you're noisy”, the emotion estimation unit 100 estimates the evaluation value of the user's “love” index to be “negative”.

- similarly, if the user readily charges the robot 20, the emotion estimation unit 100 estimates that the evaluation value of the user's “love” index is “positive”, whereas if the user is reluctant to charge it, the emotion estimation unit 100 estimates that the evaluation value is “negative”. When the user strokes the head of the robot 20, the emotion estimation unit 100 estimates that the evaluation value of the user's “love” index is “positive”, and when the user kicks the robot 20, the emotion estimation unit 100 estimates that the evaluation value of the “love” index is “negative”.

- when the internal state management unit 110 receives the evaluation value of the “love” index from the emotion estimation unit 100, it reflects that value in the evaluation value of the user's favorability toward the robot 20.

- the internal state management unit 110 stocks a plurality of evaluation values of the “love” index up to the present, and estimates the user's favorability toward the robot 20 based on a number of the most recent evaluation values (for example, 21).

- whichever of the positive and negative evaluation values is more numerous may be set as the favorability evaluation value. That is, if 11 or more of the latest 21 evaluation values are positive, the favorability evaluation value is set to “positive”, and if 11 or more are negative, the favorability evaluation value may be set to “negative”.
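
A sliding window over the most recent “love” evaluation values captures this rule. The class name `Favorability`, its methods, and the handling of ties and neutral values (returning `None`) are assumptions for this sketch; only the window size of 21 and the more-numerous rule come from the text.

```python
from collections import deque


class Favorability:
    """Favorability derived from the most recent 'love' index evaluation values."""

    def __init__(self, window=21):
        # deque with maxlen silently discards the oldest value once full.
        self.history = deque(maxlen=window)

    def add(self, value):
        """Stock one evaluation value ("positive" or "negative")."""
        self.history.append(value)

    def current(self):
        """Return whichever of positive/negative is more numerous in the window."""
        positives = sum(1 for v in self.history if v == "positive")
        negatives = sum(1 for v in self.history if v == "negative")
        if positives > negatives:
            return "positive"
        if negatives > positives:
            return "negative"
        return None  # tie or empty window: behavior not specified in the source


fav = Favorability(window=21)
for _ in range(11):
    fav.add("positive")
for _ in range(10):
    fav.add("negative")
before = fav.current()   # 11 positive vs 10 negative
fav.add("negative")      # oldest positive drops out of the window
after = fav.current()    # 10 positive vs 11 negative
```

A smaller `window` makes the result respond more quickly to recent behavior, since each new value carries more weight.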

- the above is an explanation of the internal state of the user, and then the internal state of the robot 20 is described.

- the “favorability” of the robot 20 toward the user is derived from a plurality of emotion evaluation values estimated from what the user has said and done to the robot 20 so far.

- the internal state management unit 110 receives the evaluation value of the “love” index from the emotion estimation unit 100 and reflects it in the evaluation value of the favorability of the robot 20 toward the user.

- the favorability of the robot 20 toward the user tends to be linked to the user's favorability toward the robot 20 described above.

- the internal state management unit 110 stocks a plurality of evaluation values of the “love” index up to the present, and determines the favorability of the robot 20 toward the user based on a number of the most recent evaluation values (for example, 11).

- fewer evaluation values are referred to than when estimating the user's favorability (11 rather than 21) in order to make the favorability of the robot 20 toward the user easier to change.

- the favorability of the robot 20 toward the user is an important parameter when determining the emotion of the robot 20. It should be noted that favorability may be evaluated not only as “positive” or “negative” but also in multiple levels for each of “positive” and “negative”.

- if the favorability of the robot 20 toward the user is positive, the robot 20 sympathizes with the user and acts so as to realize a co-viewing experience. On the other hand, if the favorability toward the user is negative, the robot 20 does not sympathize with the user, but rather acts so as to repel the user.

- the behavior management unit 120 determines the behavior including the voice output of the robot 20 based on the internal state of the robot 20 and the internal state of the user.

- the behavior of the robot 20 when the user hits a home run in a baseball game will be described.

- the emotion estimation unit 100 estimates the evaluation value of the “joy” index as “positive”, and the internal state management unit 110 updates the evaluation value of the user ’s “joy” index to “positive”. Further, the internal state management unit 110 determines the evaluation value of the “joy” index of the robot 20 with reference to the evaluation value of the “joy” index of the user and the evaluation value of the favorableness of the robot 20 to the user.

- when the favorability of the robot 20 toward the user is positive, the internal state management unit 110 manages the emotion of the robot 20 so as to sympathize with the emotion of the user. Therefore, if the evaluation value of the “joy” index of the user is updated to positive, the internal state management unit 110 also updates the evaluation value of the “joy” index of the robot 20 to positive.

- the internal state management unit 110 provides the behavior management unit 120 with trigger information indicating that it is time to determine the behavior of the robot 20.

- when the behavior management unit 120 receives the trigger information, it determines the behavior of the robot 20, including voice output, based on the internal state of the robot 20 updated in the object internal state storage unit 132, specifically the updated evaluation value of the emotion. For example, the behavior management unit 120 generates the utterance content “Wow, you're amazing. You hit a home run.” and also determines a motion for the robot 20. The utterance content and the motion are notified to the output processing unit 140, and the output processing unit 140 causes the robot 20 to perform the behavior determined by the behavior management unit 120.

- the output processing unit 140 generates voice data that controls the output timing of the robot 20 such as the speed of speech and the timing of speech and movement, and drive data that drives the robot 20, and outputs them to the robot 20.

- the control unit 30 supplies the voice data to the speaker 36 and outputs the voice, and drives the motor of the drive mechanism 34 based on the drive data.

- the robot 20 performs utterances and motions that sympathize with the user who hit the home run. The user sees the robot 20 rejoicing together with them, so the user's sense of familiarity with the robot 20 increases and so does the motivation to play the game.

- the internal state management unit 110 updates the evaluation value of the “joy” index of the robot 20 to negative.

- the internal state management unit 110 provides trigger information to the behavior management unit 120.

- the behavior management unit 120 determines the behavior of the robot 20, including voice output, based on the evaluation value of the “joy” index of the robot 20 updated in the object internal state storage unit 132. For example, the behavior management unit 120 generates the utterance content “Oh no. You gave up a home run.” It also determines a dejected motion as the motion of the robot 20. The utterance content and motion are notified to the output processing unit 140, and the output processing unit 140 causes the robot 20 to perform the behavior determined by the behavior management unit 120.

- the internal state management unit 110 manages the emotion of the robot 20 so as not to sympathize with the emotion of the user.

- the internal state management unit 110 rather manages the emotion of the robot 20 so as to repel the user, so that the user can realize that it is better to handle the robot 20 more carefully.

- the internal state management unit 110 updates the evaluation value of the “joy” index of the robot 20 to negative. If the robot 20's favorability toward the user is negative, the internal state management unit 110 updates the evaluation value of the robot 20's emotion to the opposite of the evaluation value of the user's emotion. Therefore, if the user's emotion evaluation value is positive, the emotion evaluation value of the robot 20 may be updated to negative, and if the user's emotion evaluation value is negative, the emotion evaluation value of the robot 20 may be updated to positive.

- the internal state management unit 110 provides the behavior management unit 120 with trigger information indicating that it is time to determine the behavior of the robot 20.

- the behavior management unit 120 determines the behavior including the voice output of the robot 20 based on the internal state of the robot 20 updated in the object internal state storage unit 132, specifically, the negative evaluation value of the “joy” index.

- the behavior management unit 120 may determine, as the behavior of the robot 20, generating no utterance content (that is, ignoring the user) and turning away from the user (looking to the side). This behavior is notified to the output processing unit 140, and the output processing unit 140 causes the robot 20 to perform the behavior determined by the behavior management unit 120.

- the user notices that the robot 20 rejoiced together with them before but does not this time.

- the user looks back on their attitude so far and recognizes that the robot 20 does not rejoice together with them because they have been treating the robot 20 coldly.

- because the robot 20 reacts with repulsion, the user is given an opportunity to be mindful of how they treat the robot 20 from now on.

- the object control system 1 manages the relationship between the user and the robot 20 in the same manner as the human relationship in the actual human society.

- good relationships are created by interacting with each other with compassion, but if one loses compassion, the other will not be compassionate.

- this compassion is expressed by the emotion of the “love” index

- the human relationship built through this compassion is expressed by the “favorability” index. Therefore, in the object control system 1, the evaluation value of the user's favorability and the evaluation value of the robot 20's favorability tend to move together.

- even after the user's favorability toward the robot 20 has dropped, when the user again treats the robot 20 considerately, the evaluation value of the robot 20's favorability toward the user improves from negative to positive, and the user can once again obtain a co-viewing experience.

- the robot 20 can become a kind of friend as the user's sense of familiarity with the robot 20 increases. If the user leads an irregular life, the robot 20 may suggest improving the user's daily rhythm, for example by saying “Let's go to sleep soon,” and in the future the user may take this as advice from a friend. To make this possible, building a mechanism by which the user's sense of familiarity with the robot 20 increases through the co-viewing experience also expands the future possibilities of the robot 20.

- the virtual object may be a character such as a person or a pet composed of a 3D model, and exists in a virtual space generated by a computer.

- in a virtual space constructed when the user wears a head mounted display (HMD), the content is played in front of the user, and when the user turns sideways, the user can see the virtual character watching the content together.

- HMD head mounted display

- the virtual character also outputs a reaction that sympathizes with the user, or conversely, outputs a reaction that repels the user, thereby communicating with the user.

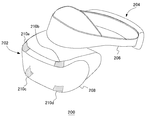

- FIG. 5 shows an example of the external shape of the HMD 200.

- the HMD 200 includes an output mechanism unit 202 and a wearing mechanism unit 204.

- the wearing mechanism unit 204 includes a wearing band 206 that goes around the head when worn by the user and fixes the HMD 200 to the head.

- the output mechanism unit 202 includes a housing 208 shaped to cover the left and right eyes when the user wears the HMD 200, and includes a display panel that faces the eyes when worn.

- the display panel may be a liquid crystal panel or an organic EL panel.

- the housing 208 is further provided with a pair of left and right optical lenses that are positioned between the display panel and the user's eyes and expand the viewing angle of the user.

- the HMD 200 may further include a speaker or an earphone at a position corresponding to the user's ear, and may be configured to connect an external headphone.

- a camera that captures the user's face is provided inside the housing 208 and is used to detect the facial expression of the user.

- the outer surface of the housing 208 is provided with light emitting markers 210a, 210b, 210c, and 210d that are tracking LEDs.

- the light emitting markers 210 are photographed by the camera 14, and the information processing apparatus 10 analyzes the positions of the markers in the captured image.

- the HMD 200 is equipped with posture sensors (an acceleration sensor and a gyro sensor).

- the HMD 200 is connected to the information processing apparatus 10 by a known wireless communication protocol, and transmits sensor data detected by the posture sensors to the information processing apparatus 10.

- the information processing apparatus 10 identifies the direction in which the HMD 200 is facing based on the imaged positions of the light emitting markers 210 and the sensor data of the posture sensors.

- the information processing apparatus 10 transmits content data such as a movie to the HMD 200.

- the information processing apparatus 10 sets a reference direction in which the HMD 200 is directed, sets a virtual display in the reference direction, and displays content reproduction data on the virtual display.

- the information processing apparatus 10 places a virtual object facing the virtual display at, for example, a position rotated by 90 degrees around the viewpoint position with respect to the reference direction. As a result, when the user turns the line of sight 90 degrees horizontally, the user can see the virtual object beside them watching the content together.

- the user wearing the HMD 200 can obtain a co-view experience with a virtual object.

- the virtual object action determination process is as described for the robot 20.

- various positive or negative emotion expressions can be expressed.

- the user's sense of familiarity with a real object or a virtual object increases through the co-viewing experience, which can enrich the user's life.

- the behavior management unit 120 generates the utterance content “XX player is amazing. You hit a home run.” as an expression of sympathy when the user hits a home run.

- the behavior management unit 120 may actively have the object output speech in which the target is replaced with a demonstrative pronoun. Specifically, the expression “XX player” may be replaced with a demonstrative pronoun such as “this guy”, generating utterance content such as “This guy hit a home run.” Using demonstrative pronouns can further enhance the user's sense of co-viewing with the robot 20.

- the present invention can be used in the technical field of controlling the behavior of an object.

Abstract

A feeling deduction unit 100 deduces a user's feeling. An internal state management unit 110 manages the internal state of an object and the internal state of the user on the basis of the user's deduced feeling. An action management unit 120 determines the action of the object on the basis of the internal state of the object. An output processing unit 140 causes the object to carry out the action determined by the action management unit 120.

Description

The present invention relates to a technique for controlling the behavior of an object, which may be a real object or a virtual object.

It is said that, for building a good relationship with another person, a “co-viewing experience” in which both look at the same thing is more effective than a “face-to-face relationship”. It is known that looking at the same thing in the same place and empathizing with each other narrows the distance between people and increases the sense of familiarity.

The present inventor focused on the possibility of using a robot as the user's co-viewing partner. For example, if the robot watches the game beside the user during game play and rejoices or grieves together with the user, the user's sense of familiarity with the robot is expected to increase, and so is the user's motivation to play the game. Beyond games, the user is also expected to enjoy content such as movies and television programs more by watching with the robot than by watching alone.

The present invention has been made in view of these issues, and its object is to provide a technique by which a user can obtain a co-viewing experience with an object such as a robot.

To solve the above problem, one aspect of the present invention is an object control system that controls an object, comprising: an emotion estimation unit that estimates a user's emotion; a user internal state storage unit that stores the user's internal state, including the user's emotion; an object internal state storage unit that stores the object's internal state, including the object's emotion; an internal state management unit that manages the internal state of the object and the internal state of the user based on the estimated emotion of the user; a behavior management unit that determines the behavior of the object based on the internal state of the object; and an output processing unit that causes the object to perform the behavior determined by the behavior management unit.

Another aspect of the present invention is an object control method. The method includes: estimating a user's emotion; managing the user's internal state, including the user's emotion; managing the object's internal state, including the object's emotion; updating the internal state of the object and the internal state of the user based on the estimated emotion of the user; determining the behavior of the object based on the internal state of the object; and causing the object to perform the determined behavior.

Note that any combination of the above components, and any conversion of the expression of the present invention between a method, an apparatus, a system, a computer program, a recording medium on which a computer program is readably recorded, a data structure, and the like, are also effective as aspects of the present invention.

The object control system of the embodiment provides a mechanism for realizing a co-viewing experience with a real object or a virtual object. The real object may be a humanoid or pet-type robot; it is preferably capable of at least outputting sound, and preferably has motors at its joints so that it can move its arms, legs, neck, and so on. The user places the robot beside them and views content together with it, and the robot communicates with the user by outputting, based on the estimated emotion of the user, a reaction that sympathizes with the user or, conversely, a reaction that repels the user.

The virtual object may be a character such as a person or a pet composed of a 3D model, and exists in a virtual space generated by a computer. The embodiment proposes a mechanism in which, in a virtual space constructed when the user wears a head mounted display (HMD), content is played in front of the user, and when the user turns sideways, the user can see a virtual character viewing the content together. Like the robot, the virtual character communicates with the user by outputting a reaction that sympathizes with the user or, conversely, a reaction that repels the user.
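The HMD items earlier in this document state that the virtual display is set in a reference direction and that the virtual object is placed, for example, at a position rotated by 90 degrees around the viewpoint, facing the virtual display. The following is a minimal sketch of that geometry in the horizontal plane. The function names, the distances, and the yaw convention (measured from the +z axis toward the +x axis) are assumptions made for illustration and do not come from the document.

```python
import math


def place_on_circle(viewpoint, yaw_deg, distance):
    """Return the point at `distance` from `viewpoint` in direction `yaw_deg`.

    Assumed convention: positions are (x, z) in the horizontal plane and
    yaw is measured from the +z axis toward the +x axis.
    """
    x, z = viewpoint
    yaw = math.radians(yaw_deg)
    return (x + distance * math.sin(yaw), z + distance * math.cos(yaw))


def layout_scene(viewpoint, reference_yaw_deg, display_distance=2.0,
                 object_distance=1.0, object_offset_deg=90.0):
    """Place a virtual display and a virtual object around the viewpoint.

    The display sits in the reference direction; the object sits at a
    position rotated by `object_offset_deg` around the viewpoint and is
    turned toward the display. The distances are hypothetical defaults.
    """
    display = place_on_circle(viewpoint, reference_yaw_deg, display_distance)
    obj = place_on_circle(viewpoint, reference_yaw_deg + object_offset_deg,
                          object_distance)
    # Yaw the object must face so that it looks at the display.
    facing = math.degrees(math.atan2(display[0] - obj[0], display[1] - obj[1]))
    return {"display": display, "object": obj, "object_facing_yaw_deg": facing}
```

With the viewpoint at the origin and a reference yaw of 0, the display lands straight ahead on the +z axis and the object lands on the +x axis, so a user who turns 90 degrees sees the object beside them, angled toward the display.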

FIG. 1 shows an example of the appearance of a real object. This object is a humanoid robot 20 and includes a speaker that outputs sound, a microphone that picks up external sound, and a drive mechanism composed of motors that move the joints and links that connect the motors. The robot 20 preferably has a dialogue function for conversing with the user, and preferably also has an autonomous movement function.

FIG. 2 is a diagram for explaining the outline of the object control system 1. FIG. 2 shows the user sitting on a sofa and playing a game while the robot 20 sits on the same sofa and watches the user's game play. Ideally, the robot 20 has an advanced autonomous movement function that lets it sit on the sofa by itself; if it does not, the user carries the robot 20 to the sofa and seats it beside them. The robot 20 may participate in the game as a virtual player and may, for example, virtually operate the opposing team in the baseball game that the user is playing.

The information processing apparatus 10 receives operation information that the user inputs to the input device 12 and executes an application such as a game. The information processing apparatus 10 may also be able to play content media such as DVDs, or connect to a network and stream content from a content server. The camera 14 is a stereo camera that photographs the user sitting in front of the display device 11, which is a television, at a predetermined cycle and supplies the captured images to the information processing apparatus 10.

The object control system 1 controls the behavior of the robot 20, which is a real object, by estimating the user's emotion from the user's reactions. The robot 20, which cheers on the user's game play, is basically controlled so that it rejoices together with the user, expresses sympathy for the user, and gives the user a co-viewing experience. The object control system 1 of the embodiment also manages the internal states of the robot 20 and the user; for example, even if the user is pleased, if the robot 20's favorability toward the user is low, the behavior is controlled so that the robot 20 does not rejoice together (does not sympathize). When the robot 20 virtually participates in the game as the user's opponent, its behavior may conversely be controlled so that it is sad when the user is pleased. The robot 20 basically expresses emotion by voicing utterance content that indicates the emotion, but it may also express emotion by moving its body.
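The sympathize-or-repel behavior can be sketched as a small function over the three-valued model used in the embodiment. Elsewhere this document states that the robot's emotion follows the user's when the robot sympathizes and is set to the opposite value when the robot's favorability toward the user is negative. The names `Valence` and `robot_emotion` are hypothetical, and treating neutral favorability like positive favorability is an assumption, since the document does not specify that case.

```python
from enum import IntEnum


class Valence(IntEnum):
    """Three-valued evaluation used for emotion indexes and favorability."""
    NEGATIVE = -1
    NEUTRAL = 0
    POSITIVE = 1


def robot_emotion(user_emotion: Valence, robot_favorability: Valence) -> Valence:
    """Return the robot's evaluation value for one emotion index.

    Negative favorability: the robot takes the opposite of the user's value.
    Otherwise (assumption for the neutral case): the robot mirrors the user.
    """
    if robot_favorability == Valence.NEGATIVE:
        return Valence(-user_emotion)
    return user_emotion
```

A neutral user emotion stays neutral under both branches, because its opposite is itself.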

FIG. 3 shows the input/output system of the robot 20. The control unit 30 is a main processor that processes and outputs various data, such as audio data and sensor data, and commands. The microphone 22 collects ambient sound and converts it into an audio signal, and the camera 24 photographs the surroundings to acquire captured images. The storage unit 26 temporarily stores data, commands, and the like processed by the control unit 30. The communication unit 32 transmits data output from the control unit 30 to the external information processing apparatus 10 by wireless communication via an antenna. The communication unit 32 also receives audio data and drive data from the information processing apparatus 10 by wireless communication via the antenna and outputs them to the control unit 30.

When the control unit 30 receives audio data, it supplies the data to the speaker 36 for audio output; when it receives drive data, it rotates the motors of the drive mechanism 34. The drive mechanism 34 includes motors incorporated in the joints, which are the movable parts of the robot 20, and a link mechanism that connects the motors; driving the motors moves the arms, legs, neck, and so on of the robot 20.

FIG. 4 shows the configuration of the object control system that controls an object. The object control system 1 includes an emotion estimation unit 100, an internal state management unit 110, a behavior management unit 120, an internal state storage unit 130, and an output processing unit 140. The internal state storage unit 130 includes an object internal state storage unit 132 and a user internal state storage unit 134. The emotion estimation unit 100 includes a first emotion estimation unit 102, a second emotion estimation unit 104, and a third emotion estimation unit 106, and performs emotion estimation through three channels.
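As a rough illustration of how the units in FIG. 4 hand data to one another, the sketch below wires four callables in the order the document describes: estimate the user's emotion, update the internal state, determine the behavior, and have the object perform it. The class name, the field names, and the use of plain dictionaries are assumptions; the document does not prescribe any software interface.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ObjectControlPipeline:
    """Hypothetical wiring of the functional blocks shown in FIG. 4."""
    estimate_emotion: Callable[[Any], dict]   # stands in for emotion estimation unit 100
    update_state: Callable[[dict], dict]      # stands in for internal state management unit 110
    decide_behavior: Callable[[dict], dict]   # stands in for behavior management unit 120
    perform: Callable[[dict], None]           # stands in for output processing unit 140

    def step(self, observation: Any) -> dict:
        """Run one pass from an observation to a performed behavior."""
        user_emotion = self.estimate_emotion(observation)
        object_state = self.update_state(user_emotion)
        behavior = self.decide_behavior(object_state)
        self.perform(behavior)
        return behavior
```

Keeping the stages as independent callables mirrors the document's point that the blocks may live on the information processing apparatus 10, on the robot 20, or be split between the two.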

The object control system 1 also includes the camera 14 that photographs the user, a biological sensor 15 that detects the user's biological information, a motion sensor 16 that detects the user's movement, a microphone 17 that acquires audio signals around the user, and an event detection unit 40 that detects the occurrence of events.

In FIG. 4, each element described as a functional block that performs various processes can be implemented in hardware by circuit blocks, memories, and other LSIs, and in software by a program loaded into memory, for example. Those skilled in the art will therefore understand that these functional blocks can be realized in various forms by hardware alone, software alone, or a combination of the two, and they are not limited to any one of these.

In FIG. 4, the emotion estimation unit 100, the internal state management unit 110, the behavior management unit 120, the internal state storage unit 130, and the output processing unit 140 may all be provided in the information processing apparatus 10, so that the information processing apparatus 10 controls the behavior of the robot 20. Alternatively, these units may all be provided in the robot 20, so that the robot 20 controls its own behavior autonomously. In this case, the robot 20 is configured as an autonomous robot and can act on its own. As a further alternative, some of these units may be provided in the information processing apparatus 10 and the rest in the robot 20. In this case, the information processing apparatus 10 and the robot 20 cooperate to control the behavior of the robot 20.

As described above, the object control system 1 shown in FIG. 4 may be realized in various forms. When the above units are provided in the information processing apparatus 10, the audio signal acquired by the microphone 22 of the robot 20 and the images captured by the camera 24 may be transmitted to the information processing apparatus 10 for use in its processing. When the above units are provided in the robot 20, the audio signal acquired by the microphone 17 connected to the information processing apparatus 10 and the images captured by the camera 14 may be transmitted to the robot 20 for use in its processing. Audio signals and captured images may also be acquired by microphones and cameras other than those illustrated and provided to the information processing apparatus 10 or the robot 20. In the following, for convenience of explanation, the camera 14 is taken as the representative that photographs the user, and the microphone 17 as the representative that collects the user's utterances and converts them into audio signals.

The emotion estimation unit 100 estimates the user's emotion based on the outputs of various sensors and on data from an external server. The emotion estimation unit 100 estimates the user's emotion by deriving an evaluation value for each emotion index, such as joy, anger, affection, and surprise. In the embodiment, the user's emotion is expressed with a simple model, and the emotion estimation unit 100 estimates the user's emotion as one of three evaluation values: a good emotion (positive), a bad emotion (negative), or an emotion that is neither good nor bad (neutral). In practice, “positive” and “negative” may each be subdivided into multiple levels so that finer-grained evaluation values can be derived.

The first emotion estimation unit 102 estimates the user's emotion by performing nonverbal behavior understanding based on paralinguistic and nonverbal information, using the outputs of various sensors, namely the camera 14 (an image sensor), the biological sensor 15, the motion sensor 16, and the microphone 17. In contrast to the second emotion estimation unit 104, the first emotion estimation unit 102 estimates the user's emotion without relying on the utterance content.

The first emotion estimation unit 102 extracts changes in the user's facial expression, line-of-sight direction, hand gestures, and the like from the images captured by the camera 14, and estimates the user's emotion. The biological sensor 15 is attached to the user and detects biological information such as the user's heart rate and perspiration; the first emotion estimation unit 102 derives the user's psychological state from the biological information and estimates the user's emotion. The motion sensor 16 is attached to the user and detects the user's movement, and the first emotion estimation unit 102 estimates the user's emotion from that movement. The role of the motion sensor 16 can also be filled by analyzing the images captured by the camera 14. The first emotion estimation unit 102 also estimates the user's emotion from the audio signal of the microphone 17 using feature quantities of paralinguistic information. Paralinguistic information includes information such as speech rate, volume, voice inflection, intonation, and wording. The first emotion estimation unit 102 combines the user emotions estimated from each of the sensor outputs into a single evaluation value for each evaluation index and supplies it to the internal state management unit 110.
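The document says that the per-sensor estimates are combined into one evaluation value per index but does not say how. The sketch below assumes one simple possibility, taking the sign of the summed votes, purely for illustration. The function name, the sensor labels, and the combination rule itself are all assumptions.

```python
from collections import defaultdict


def combine_estimates(per_sensor):
    """Merge per-sensor estimates into one value per emotion index.

    `per_sensor` maps a sensor label to a dict of {index: value}, where
    value is -1 (negative), 0 (neutral), or 1 (positive).
    Assumed rule: the sign of the summed votes, so ties become neutral.
    """
    totals = defaultdict(int)
    for estimates in per_sensor.values():
        for index, value in estimates.items():
            totals[index] += value
    # (total > 0) - (total < 0) yields -1, 0, or 1.
    return {index: (total > 0) - (total < 0) for index, total in totals.items()}
```

For example, if the camera and the biological sensor both suggest positive “joy” while the microphone suggests negative, this rule reports positive “joy”. A real system might instead weight sensors by reliability.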

The second emotion estimation unit 104 estimates the user's emotion based on the content of the user's utterances; specifically, it performs natural language understanding that analyzes the user's utterance content from the output of the microphone 17 and estimates the user's emotion. A known algorithm may be used for natural language understanding. If the user says “All right! I hit a home run.” while playing a baseball game, the second emotion estimation unit 104 may estimate the user's “joy” index as “positive”, whereas if the user says “I gave up a home run.”, the second emotion estimation unit 104 may estimate the user's “joy” index as “negative”. The second emotion estimation unit 104 supplies the estimated evaluation value of the user's emotion to the internal state management unit 110.

The event detection unit 40 detects the occurrence of an event in the object control system 1 and notifies the third emotion estimation unit 106 of the content of the event. As for game events, when the game program is executed on an emulator, the emulator can detect events such as a home run, so the event detection unit 40 may be notified of events by the emulator. The event detection unit 40 may also detect a home run event by monitoring access to the effect data played at the time of a home run, or from the effect actually displayed on the screen of the display device 11. The third emotion estimation unit 106 estimates the user's emotion from the notified event content and supplies the evaluation value to the internal state management unit 110.

The event detection unit 40 may also be provided with event occurrence timing by an external server that accumulates big data. For example, when the information processing apparatus 10 plays movie content, the event detection unit 40 acquires in advance from the external server a correspondence table between the time information of notable scenes in the movie content and the user emotion estimated for each of those scenes, and provides the table to the third emotion estimation unit 106. When the time of a notable scene arrives, the event detection unit 40 notifies the third emotion estimation unit 106 of the time information, and the third emotion estimation unit 106 refers to the correspondence table, obtains the user emotion associated with the time information, and estimates the user's emotion in the notable scene. In this way, the third emotion estimation unit 106 may estimate the user's emotion based on information from the external server.
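The correspondence table between scene times and expected user emotion can be sketched as a sorted list of time ranges with a binary-search lookup. The row layout, the class name, and the sample values are hypothetical, and the sketch assumes that scenes do not overlap. The document does not describe the table's actual format.

```python
import bisect


class SceneEmotionTable:
    """Hypothetical table mapping playback time to an expected emotion.

    Each row is (start_s, end_s, index, value) with non-overlapping
    [start_s, end_s) ranges; value is -1, 0, or 1.
    """

    def __init__(self, rows):
        self.rows = sorted(rows)
        self.starts = [row[0] for row in self.rows]

    def lookup(self, time_s):
        """Return (index, value) for the scene containing time_s, else None."""
        i = bisect.bisect_right(self.starts, time_s) - 1
        if i >= 0 and time_s < self.rows[i][1]:
            return self.rows[i][2], self.rows[i][3]
        return None
```

In this sketch, a caller playing the role of the third emotion estimation unit 106 would call `lookup` with the notified time and pass the returned value on as its estimate.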

In the internal state storage unit 130, the object internal state storage unit 132 stores the internal state of the robot 20, which is the object, and the user internal state storage unit 134 stores the internal state of the user. The internal state of the robot 20 is defined at least by the robot 20's emotion and favorability toward the user, and the internal state of the user is defined by the user's emotion and the user's favorability toward the robot 20. Favorability is generated from the evaluation values of the most recent several emotions up to the present, and reflects the long-term relationship between the user and the robot 20. The internal state of the robot 20 is derived from the user's words and actions toward the robot 20.

The internal state management unit 110 manages the internal state of the robot 20 and the internal state of the user based on the user's emotion estimated by the emotion estimation unit 100. The internal state of the user is described first, followed by the internal state of the robot 20.

The user's “emotion” is set according to the emotion estimated by the emotion estimation unit 100. The internal state management unit 110 updates the evaluation value in the user internal state storage unit 134 based on the emotion estimated by the emotion estimation unit 100.

When the emotion estimation unit 100 supplies a “positive” evaluation value for one of the user's emotion indices, the internal state management unit 110 updates the evaluation value of that index to “positive”; when a “negative” evaluation value is supplied, it updates the evaluation value of that index to “negative”. Here, “update” means the process of overwriting the original evaluation value in the internal state storage unit 130. Even when the original evaluation value is “positive” and the overwriting value is also “positive”, so that the value does not change, the process is still called an update.

Because the emotion estimation unit 100 estimates the user's emotion through three systems, namely the first emotion estimation unit 102, the second emotion estimation unit 104, and the third emotion estimation unit 106, the evaluation values estimated by the individual systems may contradict one another. The following describes the evaluation value update process for the “joy” index when the user hits a home run in a baseball game.

For the home run event, the third emotion estimation unit 106 holds an evaluation value table for estimating the emotion as “positive” when the user hits a home run and as “negative” when the user gives up a home run. When the third emotion estimation unit 106 receives from the event detection unit 40 an event notification indicating that the user has hit a home run, it refers to the evaluation value table and estimates the evaluation value of the “joy” index to be “positive”.
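As a rough illustration, the evaluation value table can be pictured as a simple mapping from event content to an emotion index and an evaluation value. The event keys below and the use of the “joy” index for the home-run-allowed case are assumptions for illustration; the text only states the positive/negative outcome for the two cases.

```python
from typing import Dict, Optional, Tuple

# Hypothetical evaluation value table: event content -> (emotion index, evaluation value).
EVALUATION_TABLE: Dict[str, Tuple[str, str]] = {
    "user_hit_home_run": ("joy", "positive"),
    # Assumption: the same "joy" index is used when the user gives up a home run.
    "user_gave_up_home_run": ("joy", "negative"),
}


def estimate_from_event(event: str) -> Optional[Tuple[str, str]]:
    """Look up the fixed evaluation value defined for a notified event."""
    # Events without a table entry yield no estimate.
    return EVALUATION_TABLE.get(event)
```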

Ordinarily, a user who hits a home run expresses joy, for example by moving around excitedly or shouting “Yes! I hit a home run!” In this case, both the first emotion estimation unit 102 and the second emotion estimation unit 104 estimate the evaluation value of the “joy” index to be “positive”. The evaluation values of the “joy” index supplied to the internal state management unit 110 from the three systems of the emotion estimation unit 100 are therefore all “positive”, and the internal state management unit 110 updates the evaluation value of the “joy” index in the user internal state storage unit 134 to “positive”.

However, if the user was aiming for something other than a home run, the user does not necessarily show joy. For example, if the user is aiming for a triple in a given at-bat in order to hit for the cycle (one batter hitting at least one single, double, triple, and home run in a single game), the user is not pleased even by hitting a home run. Besides winning and losing, games often provide a mechanism for awarding special plays such as hitting for the cycle, and for a user who is aiming for such an award, a triple can be more welcome than a home run. If the user who hit the home run then says dejectedly, “Oh no. A home run?”, both the first emotion estimation unit 102 and the second emotion estimation unit 104 estimate the evaluation value of the “joy” index to be “negative”.

As a result, the internal state management unit 110 is supplied with a “negative” evaluation value from the first emotion estimation unit 102 and the second emotion estimation unit 104, and with a “positive” evaluation value from the third emotion estimation unit 106. The internal state management unit 110 may adopt, from among the evaluation values supplied from the three systems, the evaluation value on which half or more of them agree. In other words, the internal state management unit 110 applies a majority rule to the evaluation values supplied independently from the plural systems, and in this case it may therefore update the evaluation value of the “joy” index to “negative”.
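The majority rule can be sketched in Python as follows. This is only an illustrative sketch: the function name `majority_value`, the use of `None` for a system that supplied no value, and the handling of ties (returning `None`) are assumptions not stated in the text.

```python
from collections import Counter
from typing import Optional, Sequence


def majority_value(values: Sequence[Optional[str]]) -> Optional[str]:
    """Return the evaluation value on which at least half of the supplied values agree."""
    # Assumption: a system that could not estimate anything supplies None and is ignored.
    supplied = [v for v in values if v is not None]
    if not supplied:
        return None
    top = Counter(supplied).most_common(2)
    # Assumption: when two values are equally frequent, no value is adopted.
    if len(top) == 2 and top[0][1] == top[1][1]:
        return None
    value, count = top[0]
    return value if count * 2 >= len(supplied) else None
```

For the home run example, the inputs would be the values from the first, second, and third emotion estimation units in any order, since the majority rule does not depend on which system supplied which value.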

The internal state management unit 110 may also set a priority order for the evaluation values supplied from the three systems and determine the evaluation value to adopt according to that order. The ranking of the evaluation values is described below.

First, the evaluation value estimated by the third emotion estimation unit 106 is a fixed value defined for the event and does not reflect the actual state of the user. The evaluation value estimated by the third emotion estimation unit 106 may therefore be given the lowest priority.

Next, comparing the first emotion estimation unit 102 with the second emotion estimation unit 104, the second emotion estimation unit 104 estimates emotion based only on the content of the user's utterance, and thus has less information available for estimation. In addition, even when the utterance content itself is positive, the user may be speaking self-deprecatingly. An example is a user who was aiming for a triple and says “I hit a home run.” dejectedly, with slumped shoulders. In this case, the second emotion estimation unit 104 estimates a “positive” evaluation value, whereas the first emotion estimation unit 102 estimates a “negative” evaluation value from the user's attitude and paralinguistic information. Because users may thus utter words that run contrary to their feelings, the internal state management unit 110 may set the reliability of the evaluation value from the first emotion estimation unit 102 higher than that of the evaluation value from the second emotion estimation unit 104. Accordingly, the internal state management unit 110 may set the priority order as the evaluation value from the first emotion estimation unit 102, then the second emotion estimation unit 104, then the third emotion estimation unit 106.

By setting a priority order, the internal state management unit 110 can update the user's internal state as long as an evaluation value is obtained from at least one of the systems. When the user's emotion estimated by the first emotion estimation unit 102 does not match the user's emotion estimated by the second emotion estimation unit 104, the internal state management unit 110 updates the user's internal state based on the emotion estimated by the first emotion estimation unit 102, in accordance with the priority order. Also, when, for example, the user hits a home run but neither the first emotion estimation unit 102 nor the second emotion estimation unit 104 can estimate a positive or negative emotion (the user neither moves nor speaks at all), the internal state management unit 110 may adopt the evaluation value estimated by the third emotion estimation unit 106.
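The priority-order selection can be sketched as a simple fallback chain. The function and parameter names are hypothetical, and representing "no estimate available" as `None` is an assumption for illustration.

```python
from typing import Optional


def select_by_priority(
    first: Optional[str],   # value from the first emotion estimation unit 102
    second: Optional[str],  # value from the second emotion estimation unit 104
    third: Optional[str],   # value from the third emotion estimation unit 106
) -> Optional[str]:
    """Adopt the evaluation value of the highest-priority system that produced one."""
    for value in (first, second, third):
        # Assumption: None means that system could not estimate positive or negative.
        if value is not None:
            return value
    return None
```

With this ordering, a mismatch between the first and second systems resolves in favor of the first, and the event-based value from the third system is used only when the other two produce nothing.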

The user's “favorability” toward the robot 20 is derived from a plurality of emotion evaluation values estimated from the user's words and actions toward the robot 20 up to the present. Suppose the robot 20 is named “Hikoemon”. When the robot 20 speaks to the user, if the user responds “You're right, Hikoemon.”, the emotion estimation unit 100 estimates the evaluation value of the user's “love” index to be “positive”; if the user instead responds “Hikoemon, you're noisy, so be quiet.”, the emotion estimation unit 100 estimates the evaluation value of the user's “love” index to be “negative”. Likewise, when the robot 20 tells the user “Please charge me soon.”, the emotion estimation unit 100 estimates the evaluation value of the user's “love” index to be “positive” if the user charges the robot promptly, and “negative” if the user is slow to charge it. When the user strokes the head of the robot 20, the emotion estimation unit 100 estimates the evaluation value of the user's “love” index to be “positive”, and when the user kicks the robot 20, the emotion estimation unit 100 estimates it to be “negative”.

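On receiving an evaluation value of the “love” index from the emotion estimation unit 100, the internal state management unit 110 reflects it in the evaluation value of the user's favorability. The internal state management unit 110 stocks the plural evaluation values of the “love” index obtained up to the present and estimates the user's favorability toward the robot 20 based on, for example, the most recent several (for example, 21) evaluation values. Here, among the evaluation values excluding neutral ones, whichever of the positive and negative evaluation values is more numerous may be set as the favorability evaluation value. That is, if 11 or more of the most recent 21 evaluation values are positive, the favorability evaluation value may be set to “positive”, and if 11 or more are negative, it may be set to “negative”.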
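The favorability calculation over the most recent evaluation values can be sketched as a sliding window. The class name `FavorabilityTracker`, the string labels, and the behavior on a tie (returning `None`) are assumptions for illustration; the text only specifies that neutral values are excluded and that the more numerous of the positive and negative values may be adopted, using 21 values as an example window.

```python
from collections import Counter, deque
from typing import Deque, Optional


class FavorabilityTracker:
    """Keeps the most recent "love" index values and derives a favorability value."""

    def __init__(self, window: int = 21) -> None:
        # A bounded deque discards the oldest value once the window is full.
        self._recent: Deque[str] = deque(maxlen=window)

    def add(self, value: str) -> None:
        self._recent.append(value)

    def favorability(self) -> Optional[str]:
        # Neutral values are excluded before comparing the counts.
        counts = Counter(v for v in self._recent if v != "neutral")
        positive, negative = counts["positive"], counts["negative"]
        if positive > negative:
            return "positive"
        if negative > positive:
            return "negative"
        # Assumption: with equal counts (or no values) nothing is decided.
        return None
```

Because the window holds an odd number of values, a tie can occur only when neutral values are present or the window is not yet full.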