WO2013157602A1 - Performance evaluation device, karaoke device, and server device - Google Patents

Performance evaluation device, karaoke device, and server device Download PDFInfo

- Publication number

- WO2013157602A1 WO2013157602A1 PCT/JP2013/061488 JP2013061488W WO2013157602A1 WO 2013157602 A1 WO2013157602 A1 WO 2013157602A1 JP 2013061488 W JP2013061488 W JP 2013061488W WO 2013157602 A1 WO2013157602 A1 WO 2013157602A1

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- performance

- facial expression

- data

- pitch

- music

- Prior art date

Links

- 238000011156 evaluation Methods 0.000 title claims abstract description 112

- 230000008921 facial expression Effects 0.000 claims description 176

- 230000014509 gene expression Effects 0.000 claims description 64

- 230000005236 sound signal Effects 0.000 claims description 23

- 238000013500 data storage Methods 0.000 claims 2

- 230000001747 exhibiting effect Effects 0.000 claims 1

- 238000000034 method Methods 0.000 description 40

- 230000008569 process Effects 0.000 description 23

- 239000008186 active pharmaceutical agent Substances 0.000 description 21

- 230000001755 vocal effect Effects 0.000 description 19

- 238000012854 evaluation process Methods 0.000 description 18

- 235000014196 Magnolia kobus Nutrition 0.000 description 10

- 240000005378 Magnolia kobus Species 0.000 description 10

- 241000238876 Acari Species 0.000 description 9

- 238000004891 communication Methods 0.000 description 7

- 238000010586 diagram Methods 0.000 description 7

- 230000005540 biological transmission Effects 0.000 description 5

- 230000035945 sensitivity Effects 0.000 description 5

- 238000001514 detection method Methods 0.000 description 4

- 230000003111 delayed effect Effects 0.000 description 2

- 230000000694 effects Effects 0.000 description 2

- 238000005516 engineering process Methods 0.000 description 2

- 230000001815 facial effect Effects 0.000 description 2

- 230000004044 response Effects 0.000 description 2

- 241001070941 Castanea Species 0.000 description 1

- 235000014036 Castanea Nutrition 0.000 description 1

- 238000009825 accumulation Methods 0.000 description 1

- 230000008859 change Effects 0.000 description 1

- 230000001934 delay Effects 0.000 description 1

- 235000013601 eggs Nutrition 0.000 description 1

- 230000002123 temporal effect Effects 0.000 description 1

- 230000007704 transition Effects 0.000 description 1

Images

Classifications

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10K—SOUND-PRODUCING DEVICES; METHODS OR DEVICES FOR PROTECTING AGAINST, OR FOR DAMPING, NOISE OR OTHER ACOUSTIC WAVES IN GENERAL; ACOUSTICS NOT OTHERWISE PROVIDED FOR

- G10K15/00—Acoustics not otherwise provided for

- G10K15/04—Sound-producing devices

-

- G—PHYSICS

- G09—EDUCATION; CRYPTOGRAPHY; DISPLAY; ADVERTISING; SEALS

- G09B—EDUCATIONAL OR DEMONSTRATION APPLIANCES; APPLIANCES FOR TEACHING, OR COMMUNICATING WITH, THE BLIND, DEAF OR MUTE; MODELS; PLANETARIA; GLOBES; MAPS; DIAGRAMS

- G09B15/00—Teaching music

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H1/00—Details of electrophonic musical instruments

- G10H1/36—Accompaniment arrangements

- G10H1/361—Recording/reproducing of accompaniment for use with an external source, e.g. karaoke systems

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10L—SPEECH ANALYSIS TECHNIQUES OR SPEECH SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING TECHNIQUES; SPEECH OR AUDIO CODING OR DECODING

- G10L25/00—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00

- G10L25/48—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 specially adapted for particular use

- G10L25/51—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00 specially adapted for particular use for comparison or discrimination

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10L—SPEECH ANALYSIS TECHNIQUES OR SPEECH SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING TECHNIQUES; SPEECH OR AUDIO CODING OR DECODING

- G10L25/00—Speech or voice analysis techniques not restricted to a single one of groups G10L15/00 - G10L21/00

- G10L25/90—Pitch determination of speech signals

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H2210/00—Aspects or methods of musical processing having intrinsic musical character, i.e. involving musical theory or musical parameters or relying on musical knowledge, as applied in electrophonic musical tools or instruments

- G10H2210/031—Musical analysis, i.e. isolation, extraction or identification of musical elements or musical parameters from a raw acoustic signal or from an encoded audio signal

- G10H2210/091—Musical analysis, i.e. isolation, extraction or identification of musical elements or musical parameters from a raw acoustic signal or from an encoded audio signal for performance evaluation, i.e. judging, grading or scoring the musical qualities or faithfulness of a performance, e.g. with respect to pitch, tempo or other timings of a reference performance

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H2220/00—Input/output interfacing specifically adapted for electrophonic musical tools or instruments

- G10H2220/155—User input interfaces for electrophonic musical instruments

- G10H2220/441—Image sensing, i.e. capturing images or optical patterns for musical purposes or musical control purposes

- G10H2220/455—Camera input, e.g. analyzing pictures from a video camera and using the analysis results as control data

Definitions

- This invention relates to a technique for evaluating the skill of music performance.

- karaoke apparatus for singing having a scoring function for scoring the skill of a singer's singing performance

- karaoke apparatus For example, various techniques relating to a karaoke apparatus for singing having a scoring function for scoring the skill of a singer's singing performance (hereinafter simply referred to as “karaoke apparatus” unless otherwise specified) have been proposed.

- Patent Document 1 As a document disclosing this kind of technology.

- the karaoke device disclosed in this document calculates the difference between the pitch extracted from the user's singing sound and the pitch extracted from the data prepared in advance as the guide melody for each note of the singing song, and based on this difference Calculate the basic score.

- this karaoke apparatus calculates the bonus point according to the frequency

- This karaoke device presents the total score of the basic score and bonus points to the user as the final evaluation result.

- Patent Documents 2 to 6 disclose documents that disclose a technique for detecting a singing using a technique such as vibrato or shackle from a waveform indicating a singing sound.

- Japanese Unexamined Patent Publication No. 2005-107334 Japanese Unexamined Patent Publication No. 2005-107330 Japanese Laid-Open Patent Publication No. 2005-107087 Japanese Unexamined Patent Publication No. 2008-268370 Japanese Unexamined Patent Publication No. 2005-107336 Japanese Unexamined Patent Publication No. 2008-225115

- the present invention has been made in view of such a problem, and an object of the present invention is to make it possible to present an evaluation result closer to human sensitivity in the evaluation of music performance such as karaoke singing.

- the present invention relates to the expression performance to be performed during the performance of the music and the timing at which the expression performance should be performed in the music with reference to the pronunciation start time of the note or note group included in the music.

- Facial expression performance reference data acquisition means for acquiring facial expression performance reference data to indicate

- pitch volume data generation means for generating pitch volume data indicating the pitch and volume of the performance sound from the performance sound of the music by the performer

- the pitch volume At least one characteristic of pitch and volume indicated by the pitch volume data generated by the data generation means should be performed by the facial expression performance reference data within a predetermined time range indicated by the facial expression performance reference data in the music piece. Characteristics of facial expression performance If shown, it provides a performance evaluation apparatus and a playing evaluating means to improve the evaluation of the performance of the music by the player.

- the present invention also provides the performance evaluation apparatus, accompaniment data acquisition means for acquiring accompaniment data for instructing accompaniment of music, and sound signal output means for outputting a sound signal indicating a musical sound of accompaniment according to the instruction of the accompaniment data

- the pitch volume data generation means includes the pitch and volume of the performance sound of the music performed by the performer according to the accompaniment emitted from a speaker according to the sound signal output from the sound signal output means

- a karaoke apparatus for generating pitch sound volume data indicating the above is provided.

- the present invention relates to each of the performance sounds of music by an arbitrary number of arbitrary performers, and one facial expression performance appears at one timing based on the pronunciation start time of notes or note groups included in the music

- Each of a note or a group of notes included in the music based on an expression performance appearance data acquisition means for acquiring expression performance appearance data indicating that, and an arbitrary number of expression performance appearance data acquired by the expression performance appearance data acquisition means Is performed during the performance of the musical piece according to the specified information, specifying which facial expression performance appears at which timing with reference to the sound generation start time of the note or group of notes.

- the expression of a note or a group of notes included in the music indicates the power expression performance and the timing at which the expression performance should be performed in the music

- a server apparatus comprising facial expression performance reference data generating means for generating facial expression performance reference data indicating the start time as reference, and transmitting means for transmitting facial expression performance reference data generated by the facial expression performance reference data generating means to the performance evaluation apparatus I will provide a.

- the present invention is a singing evaluation system, wherein a facial expression performance to be performed during the performance of a musical piece and a timing at which the facial expression performance is to be performed in the musical piece are determined as a pronunciation start time of a note or a note group included in the musical piece.

- Facial expression performance reference data acquisition means for acquiring first facial expression performance reference data shown as a reference; pitch volume data generation means for generating pitch volume data indicating the pitch and volume of the performance sound from the performance sound of the music by the performer; And at least one of the pitch and volume characteristics indicated by the pitch volume data generated by the pitch volume data generation means is within the predetermined time range indicated by the first facial expression performance reference data in the music. Should be done with performance reference data

- the performance evaluation means for improving the performance of the music performed by the performer and each of the performance sounds of the music performed by an arbitrary number of the performers.

- Expression performance appearance data acquisition means for acquiring expression performance appearance data indicating that one expression performance has appeared at one timing based on a pronunciation start time of a note or a group of notes included in the music, and the expression performance appearance data Based on the arbitrary number of facial expression performance appearance data acquired by the acquisition means, any timing with respect to each note or group of notes included in the musical piece by the arbitrary player based on the pronunciation start time of the notes or group of notes And which facial expression performance appears at what frequency, and according to the identified information, the arbitrary performance The facial expression performance to be performed during the performance of the musical piece by and the timing at which the facial expression performance should be performed in the musical piece by the arbitrary player, based on the pronunciation start time of the note or the note group included in the musical piece by the arbitrary player.

- a singing evaluation system comprising expression performance reference data generating means for generating second expression performance reference data to be shown.

- the present invention also provides facial expression performance reference data that indicates the facial expression performance to be performed during the performance of a musical piece and the timing at which the facial expression performance is to be performed in the musical piece, with reference to the pronunciation start time of the note or note group included in the musical piece. And generating pitch volume data indicating the pitch and volume of the performance sound from the performance sound of the music by the performer, and at least one of the characteristics of the pitch and volume indicated by the pitch volume data is A performance evaluation method for improving the performance of the music performed by the performer when the characteristics of the facial expression performance that should be performed by the expression performance reference data within a predetermined time range indicated by the expression performance reference data I will provide a.

- the present invention is a computer-executable program, in which a facial expression performance to be performed during the performance of a musical piece and a timing at which the facial expression performance should be performed in the musical piece are pronounced in a note or a group of notes included in the musical piece

- Expression performance reference data acquisition processing for acquiring expression performance reference data indicating the start time as a reference

- pitch volume data generation processing for generating pitch volume data indicating the pitch and volume of the performance sound from the performance sound of the music by the performer

- at least one of the pitch and volume characteristics indicated by the pitch volume data generated by the pitch volume data generation means is within the predetermined time range indicated by the expression performance reference data in the music piece. Should be done by When showing the characteristics of the expression performance that is, to provide a program for executing a performance evaluation process for improving the evaluation of the performance of the music by the player to the computer.

- a performance evaluation device that gives a high evaluation to the performer is realized.

- the evaluation is performed with little deviation from human sensitivity.

- FIG. 1 is a diagram showing a configuration of a singing evaluation system 1 according to an embodiment of the present invention.

- a karaoke device 10-m 1, 2,... M: M is the total number of karaoke devices

- M is the total number of karaoke devices

- One or a plurality of karaoke apparatuses 10-m are installed in each karaoke store.

- the server device 30 is installed in the system management center.

- the karaoke apparatus 10-m and the server apparatus 30 are connected to the network 90, and can transmit and receive various data to and from each other.

- the karaoke device 10-m is a device that performs singing effects through sound emission of accompaniment music that supports the user's singing and display of lyrics, and evaluation of the skill of the user's singing.

- the karaoke apparatus 10-m evaluates the skill of the singing skill by evaluating the pitch and volume of the user's singing sound and the following five types of facial expression singing.

- the score that is the evaluation result of the two evaluations is presented to the user together with the comment message.

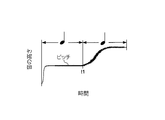

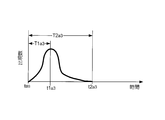

- a1. Tame This is a facial expression song that intentionally delays the singing of a specific sound in the song. As shown in FIG. 2, when this singing is performed, the time at which the pitch of the sound changes from the sound before the singing sound to that of the sound corresponds to both sounds in the score (exemplary singing).

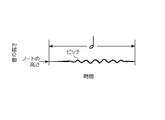

- Vibrato This is a facial expression song that vibrates finely while maintaining the apparent pitch of a specific sound in the song. As shown in FIG. 3, when this singing is performed, the pitch of the singing sound periodically changes across the height of the note corresponding to the sound in the score.

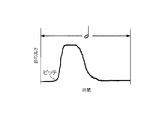

- Kobushi This is a facial expression song that changes the tone of a specific sound in the song so that it sings during pronunciation. As shown in FIG. 4, when this singing is performed, the pitch of the singing sound rises temporarily in the middle of the note corresponding to the sound in the score. d1.

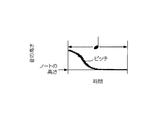

- Shakuri This is a singing technique in which a specific sound in a song is pronounced with a voice lower than the original pitch and then brought close to the original pitch.

- the pitch of the singing sound at the sounding start time is lower than the height of the note corresponding to the sound in the score. Then, the pitch of this singing sound rises slowly after the start of sounding and reaches almost the same height as the note. e1.

- Fall This is a singing technique in which a specific sound in a song is pronounced with a voice higher than its original height and then brought close to its original height.

- FIG. 6 when this singing is performed, the pitch of the singing sound at the sounding start time is higher than the height of the note corresponding to the sound in the score. The pitch of the singing sound gradually falls after the start of sounding and reaches almost the same height as the note.

- the karaoke apparatus 10-m includes a sound source 11, a speaker 12, a microphone 13, a display unit 14, a communication interface 15, a vocal adapter 16, a CPU 17, a RAM 18, a ROM 19, a hard disk 20, and a sequencer 21.

- Sound source 11 outputs a sound signal S A in accordance with the various messages of MIDI (Musical Instrument Digital Interface).

- the speaker 12 emits a given signal as sound.

- the microphone 13 collects sound and outputs a sound collection signal S M.

- the display unit 14 displays an image corresponding to the image signal S I.

- the communication interface 15 transmits / receives data to / from devices connected to the network 90.

- the CPU 17 executes a program stored in the ROM 19 or the hard disk 20 while using the RAM 18 as a work area. Details of the operation of the CPU 17 will be described later.

- the ROM 19 stores IPL (Initial Program Loader) and the like.

- the song data MD-n of each song is data in which the accompaniment content of the song, the lyrics of the song, and the exemplary song content of the song are recorded in SMF (Standard MIDI File) format.

- the music data MD-n has a header HD, an accompaniment track TR AC , a lyrics track TR LY , and a model song reference track TR NR .

- the header HD information such as a song number, a song title, a genre, a performance time, and a time base (the number of ticks corresponding to the time of one quarter note) is described.

- each note NT (i) in the score of the accompaniment part of singing songs indicates the order counted from the beginning of the notebook NT of the score of the relevant part (1)

- the event EV (i) ON to be turned on indicating the order counted from the beginning of the notebook NT of the score of the relevant part (1)

- the delta time DT indicating the execution time difference (number of ticks) of the succeeding events are described in chronological order.

- the lyrics track TR LY includes each data D LY indicating the lyrics of the song and the display time of each lyrics (more specifically, the time difference between the display time of each lyrics and the display time of each previous lyrics) Delta time DT indicating (number of ticks)) is described in chronological order.

- the model singing reference track TR NR includes an event EV (i) ON for instructing the sound of each note NT (i) in the singing part of the score of the song, and an event EV (i) OFF for instructing to mute the sound.

- a delta time DT indicating a difference in execution time (number of ticks) between successive events is described in chronological order.

- the reference database DBRK stores five types of facial expression singing reference data DD a1 , DD a2 , DD a3 , DD a4 , DD a5 .

- the facial expression singing reference data DD a1 is obtained when the singing is performed at each time t on the time axis with the pronunciation start time of the note NT (i) included in the singing song as the reference point t BS and at those times t. Is a data showing each pair of evaluation points VSR (t).

- the facial expression singing reference data DD a2 is obtained when the vibrato singing is performed at each time t on the time axis with the pronunciation start time of the note NT (i) included in the singing song as a reference point t BS and those times t. Is a data showing each pair of evaluation points VSR (t).

- the facial expression singing reference data DD a3 is obtained when each time t on the time axis with the pronunciation start time of the note NT (i) included in the singing song as a reference point t BS and the time t at which the singing is performed by Kobushi. Is a data showing each pair of evaluation points VSR (t).

- the facial expression singing reference data DD a4 is obtained when the singing is performed at each time t on the time axis with the pronunciation start time of the note NT (i) included in the singing song as the reference point t BS and at the time t. Is a data showing each pair of evaluation points VSR (t).

- the facial expression singing reference data DD a5 is obtained when the singing by the fall is performed at each time t on the time axis with the sound generation start time of the note NT (i) included in the singing song as the reference point t BS and at those times t Is a data showing each pair of evaluation points VSR (t).

- the five types of facial expression song reference data DD a1 , DD a2 , DD a3 , DD a4 , DD a5 are referred to as facial expression song reference data DD.

- the song evaluation program VPG has the following three functions. a2. Standard Evaluation Function This is because each note NT (determined by each event EV (i) ON and EV (i) OFF in the exemplary singing reference track TR NR indicated by the output signals S L and S P of the vocal adapter 16 This is a function for comparing the model pitch PCH REF and the model volume LV REF of i) and evaluating the skill of singing based on the result of this comparison. b2.

- Expression singing evaluation function which, each time the characteristic waveform expression singing appear in the pitch waveform indicated by the output signal S P output vocal adapter 16, the reference point pronunciation start time of notebook NT is the subject expression singing (i) The appearance time of the feature waveform of the facial expression song on the time axis as t BS is obtained, and the evaluation point VSR (t) corresponding to this appearance time is set as each evaluation point VSR of the corresponding facial expression song reference data DD in the reference database DBRK ( This is a function of selecting from t) and evaluating the skill of singing based on this evaluation point VSR (t). c2.

- Evaluation result presentation function This is a function for calculating a score from the evaluation result of the evaluation by a2 and the evaluation result of the evaluation by b2, and displaying the score on the display unit 14 together with the comment message.

- the sequencer 21 When the song data MD-n of the corresponding song is transferred from the hard disk 20 to the RAM 18 in response to the singing start operation of the song by a remote controller (not shown), the sequencer 21 performs an event EV in the song data MD-n. (I) ON , EV (i) OFF , and data DLY are supplied to each part of the apparatus. Specifically, when the music piece data MD-n is stored in the RAM 18, the sequencer 21 stores the time base described in the header HD of the music piece data MD-n and the tempo designated by the remote controller (not shown). The time length of one tick is determined based on the above, and the following three processes are performed while counting ticks as the time length elapses.

- the sequencer 21 reads out the event EV (i) ON following thereafter each time the count number of ticks matches the delta time DT in accompaniment track TR AC (or EV (i) OFF) Instrument 11 is supplied.

- the event EV (i) ON is supplied from the sequencer 21

- the sound source 11 supplies the sound signal S A specified by the event EV (i) ON to the speaker 12, and the event EV (i) OFF is supplied from the sequencer 21. Then, the supply of the sound signal S A to the speaker 12 is stopped.

- the sequencer 21 reads the subsequent data DLY and supplies it to the display unit 14 every time the tick count matches the delta time DT in the lyrics track TRLY .

- the display unit 14 converts the data D LY into a lyrics telop image, and displays the image on a display (not shown).

- the accompaniment sound is emitted from the speaker 12 and the lyrics are displayed on the display.

- the user sings the lyrics displayed on the display toward the microphone 13 while listening to the accompaniment sound emitted from the speaker 12. While the user is singing into the microphone 13, the microphone 13 outputs a collected sound signal S M of the user's singing sound, vocal adapter 16 signal S P and showing the pitch and volume of the signal S M S L is output.

- the sequencer 21 counts the number of ticks read event EV (i) ON following thereafter every time matches the delta time DT within model singing Reference track TR NR (or EV (i) OFF) To the CPU 17.

- the CPU 17 evaluates the skill of the user's singing using the events EV (i) ON and EV (i) OFF supplied from the sequencer 21 and the output signals S P and S L of the vocal adapter 16. Details will be described later.

- the server device 30 is a device that plays a role of supporting the provision of services at a karaoke store.

- the server device 30 includes a communication interface 35, a CPU 37, a RAM 38, a ROM 39, and a hard disk 40.

- the communication interface 35 transmits / receives data to / from devices connected to the network 90.

- the CPU 37 executes various programs stored in the ROM 39 and the hard disk 40 while using the RAM 38 as a work area. Details of the operation of the CPU 37 will be described later.

- the ROM 39 stores IPL and the like.

- the hard disk 40 stores a song sample database DBS, a reference database DBRS, and a song analysis program APG.

- singing sample database DBS singing sample data DS groups each corresponding to one singing song are individually stored.

- the singing sample data DS is data in which a pitch waveform and a volume waveform of a singing sound when a person who has a singing ability exceeding a certain level sings a singing song is recorded.

- the reference database DBRS stores the latest facial expression singing reference data DD to be stored in the reference database DBRK of each karaoke apparatus 10-m.

- the song analysis program APG has the following three functions. a3. Accumulation function This is a function for acquiring the song sample data DS for each song from the karaoke apparatus 10-m one by one, and accumulating the acquired song sample data DS in the song sample database DBS. b3. Rewriting function This is to search the characteristic waveform of the facial expression song from the waveform indicated by the song sample data DS for each of the song sample data DS stored in the song sample database DBS, and to be the target of the facial expression song from the search result.

- FIG. 7 is a flowchart showing the operation of this embodiment.

- the CPU 17 of the karaoke apparatus 10-m supplies a control signal S O to the sequencer 21 when the singing start operation of the song is performed (S100: Yes), and processes the sequencer 21 (the above-described first process).

- S100: Yes the control signal

- S120 the standard song evaluation process

- S140 a facial expression song evaluation process

- Standard song evaluation process (S130) In this processing, the CPU 17 determines the time from when the event EV (i) ON is supplied from the sequencer 21 to when the next event EV (i) OFF is supplied to the sound corresponding to the i-th note NT (i). Let the pronunciation time T NT (i).

- the difference PCH DEF of a model pitch PCH REF output signal S P output vocal adapter 16 converts the note number of the pitch and event EV (i) ON shown during the sounding time T NT (i), and in between determining a difference LV DEF of a model volume LV REF obtained by converting the volume and event EV (i) velocity oN indicated by the signal S P, notebook NT if this difference PCH DEF and differences LV DEF is within a predetermined range (i) It is determined that the singing is successful.

- the CPU 17 performs this note determination from the start to the end of the singing by the user, and divides the number of all notes TN (i) at the end of the singing by the number of the notes NT (i) determined to be acceptable.

- a value obtained by multiplying the obtained value by 100 is defined as a basic score SR BASE .

- CPU 17 determines, in a pitch waveform indicated by the output signal S P output vocal adapter 16, Tame, vibrato, fist, jerking, whether any of the expression singing features waveform fall appeared .

- Patent Document 2 details of the method for determining the feature waveform of the patent are disclosed in Patent Document 2

- Patent Document 3 details of the method for determining the characteristic waveform of the vibrato are described in Patent Document 3

- Patent Document 4 details of the method of determining the feature waveform of Kobushi are described in Patent Document 4

- Patent Document 6 for details of the fall feature waveform determination method.

- the CPU 17 performs this characteristic waveform determination from the start to the end of the singing by the user, and sets a value obtained by multiplying the number of appearances of the facial expression song at the end of the singing by a predetermined coefficient as the addition point SR ADD .

- the total of the basic score SR BASE and the addition point SR ADD is set as the standard score SR NOR .

- Expression song evaluation process (S140) In this process, the CPU 17 sets the time from the output of the sound source event EV (i) ON to the output of the next event EV (i) OFF as the sound generation time T NT (i) corresponding to the i-th note NT (i). ). Then, CPU 17, when the characteristic waveform expression singing in pitch waveform indicated by the output signal S P output vocal adapter 16 between the sounding time T NT (i) are noticed, within sounding time T NT (i) Find the appearance time of the facial expression song and the type of facial expression song that appeared. The CPU 17 generates facial expression song appearance data indicating the type and appearance time of the facial expression song specified as described above.

- the CPU 17 selects the facial expression song indicated in the generated facial expression song appearance data and the evaluation point VSR (t) corresponding to the appearance time from the series of evaluation points VSR (t) indicated by the facial expression song reference data DD. To do.

- the CPU selects such evaluation points VSR (t) from the start to the end of singing by the user, and the average value of the evaluation points VSR (t) at the end of the singing is used as the facial expression score SR EX.

- CPU17 will perform an evaluation result presentation process, after the song of the song by a user is complete

- the CPU 17 selects a higher score from the standard score SR NOR scored by the standard song evaluation process and the facial score SR EX scored by the facial expression song evaluation process. Then, CPU17 is, if you choose the standard score SR NOR, and this score SR NOR, to display a comment messages in accordance with the score SR NOR for example, such as "It is cool and refined song" on the display unit 14.

- CPU17 is, if you choose a facial expression score SR EX, this and score SR EX, for example, to display a comment message corresponding to the facial expression score such as "I have full of kindness" SR EX on the display unit 14.

- the CPU 17 performs a sample transmission process (S160).

- Sample transmission process CPU 17 is vocal signal S P and S L adapter 16 has output a singing sample data DS of the singing music piece, steps and the singing sample data DS between the start and end of singing singing voice

- a message MS1 including the basic score SR BASE (singing evaluation data) obtained in S130 is transmitted to the server device 30.

- the CPU 37 of the server device 30 obtains the message MS1 from the karaoke device 10-m (S200: Yes), the singing sample data DS and the basic score SR BASE are extracted from this message MS1, and this basic score SR BASE is obtained from the advanced player. It is compared with a reference score SR TH (for example, 80 points) that separates those who are not (S220). When the basic score SR BASE is higher than the reference score SR TH (S220: Yes), the CPU 37 accumulates the song sample data DS extracted from the message MS1 in the song sample database DBS (S230).

- a reference score SR TH for example, 80 points

- the CPU 37 performs a rewriting process (S240).

- the CPU 37 performs the following five processes.

- the CPU 37 searches for the characteristic waveform of the ticks from within the pitch waveform indicated by each singing sample data DS stored in the singing sample database DBS, and the facial expression singing appearance data indicating the search results (the appearance of the ticks). generating a note NT data indicating each time t on the time axis of the reproduction starting time of (i) a reference point t BS).

- CPU 37 based on the expression singing occurrence data generated relates Tame, expression singing at each time t and their time t on the time axis to the reproduction starting time of the notebook NT (i) as a reference point t BS "tame"

- Statistical data showing the relationship with the number of occurrences Num of the synthesizer, and the evaluation point VSR (t) corresponding to each time t in the facial expression singing reference data DD a1 is rewritten based on the contents of this statistical data.

- FIG. 8 is a diagram illustrating an example of statistical data on the eggs.

- the statistics of this example the number of occurrences Num expression singing between the reference point t BS time T1 a1 only before time t1 a1 and the reference point t BS than the time T4 a1 time t4 after only a1 is distributed Yes.

- the maximum peak of the number of appearances Num appears at the time t2 a1 immediately after the reference point t BS , and the second peak of the number of appearances Num at the time t3 a1 later than the time t2 a1. Appears.

- the evaluation point VSR (t2 a1 ) at the time t2 a1 is the highest, and the evaluation point VSR (t3 a1 ) at the time t3 a1 is the second. Get higher.

- the CPU 37 searches for the characteristic waveform of vibrato from within the pitch waveform indicated by each singing sample data DS stored in the singing sample database DBS, and facial expression singing appearance data (vibrato has appeared) indicating the search result. generating a note NT data indicating each time t on the time axis of the reproduction starting time of (i) a reference point t BS). Subsequently, based on the expression song appearance data generated for the vibrato, the CPU 37 uses the time t on the time axis where the pronunciation start time of the note NT (i) is the reference point t BS and the number Num of appearances of the expression song at those times t. Is generated, and the evaluation score VSR (t) corresponding to each time t in the facial expression song reference data DD a2 is rewritten based on the contents of the statistical data.

- FIG. 9 is a diagram illustrating an example of statistical data on vibrato.

- appearance number Num expression singing between the reference point t BS and the reference point t BS than the time T2 a2 time after only t2 a2 are distributed.

- to be the reference point t BS time T1 a2 only after the time t1 a2 is the maximum peak number of occurrences Num has appeared. Therefore, in the facial expression song reference data DD a2 after rewriting by the statistical data of this example, the evaluation point VSR (t1 a2 ) at the time t1 a2 is the highest.

- the CPU 37 searches for the characteristic waveform of Kobushi from within the pitch waveform indicated by each singing sample data DS stored in the singing sample database DBS, and the facial expression singing appearance data (Kobushi appears) indicating the search result. generating a note NT data indicating each time t on the time axis of the reproduction starting time of (i) a reference point t BS). Subsequently, based on the facial expression song appearance data generated for Kobushi, the CPU 37 uses each time t on the time axis with the pronunciation start time of the note NT (i) as the reference point t BS and the number of facial expression songs at those times t. Statistical data indicating the relationship with Num is generated, and the evaluation point VSR (t) corresponding to each time t in the facial expression song reference data DD a3 is rewritten based on the contents of the statistical data.

- FIG. 10 is a diagram illustrating an example of statistical data regarding Kobushi.

- appearance number Num expression singing between the reference point t BS and the reference point t BS than the time T2 a3 time after only t2 a3 are distributed.

- to be the reference point t BS time T1 a3 only after time t1 a3 maximum peak number of occurrences Num has appeared. Therefore, in the facial expression song reference data DD a3 after rewriting by the statistical data of this example, the evaluation point VSR (t1 a3 ) at the time t1 a3 is the highest.

- the CPU 37 searches for the characteristic waveform of the crisp from the pitch waveform indicated by each singing sample data DS stored in the singing sample database DBS, and the facial expression singing appearance data indicating the search result (the appearance of the crisp appears). generating a note NT data indicating each time t on the time axis of the reproduction starting time of (i) a reference point t BS). Subsequently, based on the expression song appearance data generated for the shackle, the CPU 37 uses each time t on the time axis with the pronunciation start time of the note NT (i) as a reference point t BS and the number of appearances of the expression song at those times t. Statistical data indicating the relationship with Num is generated, and the evaluation point VSR (t) corresponding to each time t in the facial expression song reference data DD a4 is rewritten based on the contents of the statistical data.

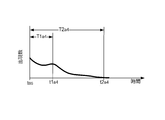

- FIG. 11 is a diagram illustrating an example of the statistical data regarding shackle.

- appearance number Num expression singing between the reference point t BS and the reference point t BS than the time T2 a4 time after only t2 a4 are distributed.

- the statistics in this example the reference point t BS have appeared up to the peak number of occurrences Num

- the time t1 a4 than the reference point t BS delayed by time T1 a4 is a second peak number of occurrences Num Appears.

- the evaluation point VSR (t BS ) at the time t BS is the highest, and the evaluation point VSR (t 1 a4 ) at the time t1 a4 is the second. Get higher.

- the CPU 37 searches for the characteristic waveform of the fall from within the pitch waveform indicated by each singing sample data DS stored in the singing sample database DBS, and the facial expression singing appearance data (fall has appeared) indicating this search result. generating a note NT data indicating each time t on the time axis of the reproduction starting time of (i) a reference point t BS).

- CPU 37 based on the expression singing occurrence data generated relates fall, the number of occurrences of facial expression singing at each time t and their time t on the time axis to the reproduction starting time of the notebook NT (i) as a reference point t BS Statistical data indicating the relationship with Num is generated, and the evaluation point VSR (t) corresponding to each time in the facial expression song reference data DD a5 is rewritten based on the contents of the statistical data.

- FIG. 12 is a diagram illustrating an example of statistical data regarding a fall.

- the statistics of this example the number of occurrences of facial expression singing between the reference point t BS than the time T1 a5 only after the time t1 a5 and time t BS from the time T2 a5 only after the time t2 a5 of Num is distributed .

- the maximum peak of the number of occurrences Num appears at time t2 a5 . Therefore, in the facial expression song reference data DD a5 after rewriting by the statistical data of this example, the evaluation point VSR (t2 a5 ) at time t2 a5 is the highest.

- the CPU 17 of the karaoke apparatus 10-m performs inquiry processing every time a predetermined inquiry time arrives (S110: Yes) (S170). In this inquiry process, the CPU 17 transmits a message MS2 for requesting transmission of the latest data to the server device 30 (S170).

- the CPU 37 of the server device 30 receives the message MS2 from the karaoke device 10-m (S210: Yes), the facial expression singing reference in which the contents are rewritten between the previous message MS2 reception time and the current message MS2 reception time.

- the data DD is transmitted to the karaoke apparatus 10-m that is the transmission source of the message M2 (S250).

- the CPU 17 of the karaoke apparatus 10-m overwrites the facial expression song reference data DD on the reference database DBRK and updates the content (S180).

- each time the characteristic waveform of the facial expression song appears in the waveform of the output signal of the vocal adapter 16 the pronunciation of the note NT (i) that is the target of the facial expression song is started.

- the appearance time of the feature waveform of the facial expression song on the time axis with the time as the reference point is obtained, and the evaluation point VSR (t) corresponding to this appearance time is selected from the evaluation points VSR (t) in the song reference data DD.

- the skill of singing is evaluated based on the selected evaluation point VSR (t). Therefore, according to this embodiment, even if the user performs facial expression singing, good evaluation cannot be obtained unless the timing is appropriate. Therefore, according to this embodiment, it is possible to present an evaluation result closer to that based on human sensitivity.

- the facial expression song characteristic waveform is searched from the waveform indicated by the data DD, and the facial expression song is obtained from the search result.

- Statistical data indicating the relationship between each time on the time axis with the pronunciation start time of the note NT (i) as a reference point being the reference point and the number of facial expression singings appearing at those times, and singing reference data DD

- the evaluation score VSR (t) corresponding to each time at is rewritten based on the contents of the statistical data. Therefore, according to this embodiment, the change of the tendency of how to sing advanced users who are singing a song can be reflected in the evaluation result.

- the present invention may have other embodiments.

- it is as follows.

- facial expressions other than these five types may be detected.

- a song with inflection may be detected.

- the CPU 17 performs the standard singing evaluation process using both the output signals S P and S L of the vocal adapter 16 and indicates the pitch among the output signals S P and S L of the vocal adapter 16. was facial expression singing evaluation process by using only the signal S P. However, CPU 17 may perform a standard singing evaluation process using only one signal S P and S L. Further, CPU 17 may perform facial expression singing evaluation process using both signals S P and S L.

- the skill of the song was evaluated based on the appearance time of the characteristic waveform of the facial expression song.

- the evaluation may be performed in consideration of elements other than the appearance time of the feature waveform of the facial expression song (for example, the length and depth of each of the choke, vibrato, kobushi, shakuri, and fall).

- a configuration is adopted in which a facial expression song that appears in the song sound corresponding to each of the notes included in the song song is adopted, but a series of plural songs included in the song song are included.

- the structure which detects the facial expression song which appears in the song sound according to the note (note group) may be employ

- a facial expression song such as crescendo decrescendo is a facial expression song performed in a series of notes, and it is desirable that detection and evaluation of those facial expressions be performed in units of notes. Therefore, it is desirable that the facial expression song reference data DD relating to such facial expression song is also configured in units of notes.

- the singing sample data DS (pitch) including the signals SP and S L output from the vocal adapter 16 to the server device 30 from the start to the end of the singing of the singing song.

- the sound volume data is transmitted, and the server apparatus 30 employs a configuration in which each facial expression song is detected from the singing sample data DS and the timing of the appearance is specified.

- the server device 30 from the karaoke device 10 transmits a sound signal S M indicating the picked-up sound (sound waveform data indicating the singing sound) by microphones 13, the sound signal S M in the server apparatus 30 processing for generating a signal S p and the signal S L (processing vocal adapter 16 in the above embodiment does) configuration may be employed that originate.

- the karaoke device 10 transmits to the server device 30 data (facial singing appearance data) indicating the type of facial expression singing specified in the facial expression singing evaluation processing (S140) performed in accordance with the singing evaluation program VPG and the timing of its appearance.

- the server device 30 may employ a configuration in which the facial expression song reference data DD is updated based on the facial expression song appearance data transmitted from the karaoke device 10 without performing facial expression song detection processing.

- the server device 30 generates statistical data and rewrites the facial expression song reference data DD based on the statistical data.

- each of the karaoke apparatuses 10-m generates a sound signal S M indicating a singing sound generated by the own apparatus in the past, or directly from another karaoke apparatus 10-m or via the server apparatus 30, and their sound signals S

- the signal S p and the signal S L generated from M , or data (expression song appearance data) indicating the type and expression timing of the expression song specified using these signals are stored in the hard disk 20, and the CPU 17 They may be read and used to perform processing similar to the processing performed by the server device 30 in S240, that is, generation of statistical data and rewriting of facial expression song reference data DD based thereon.

- the standard score SR NOR is calculated by summing the addition point SR ADD calculated based on the number of appearances of the expression song in the standard song evaluation process (S130) with the basic score SR BASE.

- the appearance of the facial expression singing is not taken into account, and a configuration for calculating only the basic score SR BASE may be adopted.

- the higher score of the standard score SR NOR scored by the standard song evaluation process and the expression score SR EX scored by the expression song evaluation process is displayed to the singer.

- the evaluation result for the singer may be presented in other manners such as displaying both and displaying their total score.

- a singer whose basic score SR BASE is higher than the standard score SR TH is regarded as an advanced person, and the facial expression singing reference is made using only the singing sample data DS relating to the advanced person.

- a configuration for updating the data DD is employed.

- the method of selecting the singing sample data DS used for updating the facial expression singing reference data DD is not limited to this.

- the basic score instead of the SR BASE, may be used standard scoring SR NOR that the sum of the summing junction SR ADD basic score SR BASE as the basis for advanced estimation.

- an upper threshold is provided in addition to a lower threshold (reference score SR TH ).

- the singing sample data DS of a singer with a higher basic score SR BASE (or other score) may not be used for updating the facial expression song reference data DD.

- the singing sample data DS of the singer with a high basic score SR BASE is given a high weight and the facial expression singing reference data DD is updated. You may make it use for.

- a performance evaluation device that evaluates a music performance

- a performance evaluation device that is provided in a karaoke device for singing and evaluates a singing performance is shown.

- the present invention is not limited to the evaluation of singing performances, and can be applied to the evaluation of musical performances using various musical instruments. That is, the term “singing” used in the above embodiment is replaced with the more general term “performance”. Note that in a performance evaluation device that evaluates instrumental music performance, for example, choking on a guitar, and the like, evaluation regarding facial expression performance corresponding to each instrument is performed.

- the karaoke apparatus for musical instrument performance uses, for example, data indicating the score and each section of the score (for example, the song data MD instead of the lyrics track TR LY (for example, The delta time indicating the display time of 2 bars or 4 bars) is configured to include a score track which is data described in chronological order, and the sequencer 21 and the display unit 14 follow the score track to progress the music. Accordingly, an image signal indicating a musical score corresponding to the accompaniment location is output to the display.

- the image signal output processing by the sequencer 21 and the display unit 14 may not be performed.

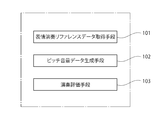

- the performance evaluation apparatus is such that, as illustrated in FIG. Facial expression performance reference data acquisition means 101 for acquiring facial expression performance reference data that indicates the timing to be performed in reference to the pronunciation start time of a note or note group included in the music, and the performance from the performance sound of the music by the performer Pitch volume data generation means 102 for generating pitch volume data indicating the pitch and volume of sound, and at least one of the characteristics of pitch and volume indicated by the pitch volume data generated by the pitch volume data generation means 102 is Within a predetermined time range indicated by the expression performance reference data in the music

- a performance evaluation means 103 for improving the performance of the music performed by the performer when the expression performance characteristics are supposed to be performed by the expression performance reference data. Expressed, the presence or absence of other elements and the specific mode of other elements are arbitrary.

- the performance evaluation device according to the present invention is not limited to a dedicated device.

- a configuration that realizes the performance evaluation device according to the present invention by causing various devices such as a personal computer, a portable information terminal (for example, a mobile phone or a smart phone), and a game device to perform processing according to a program is adopted.

- this program can be distributed by being stored in a recording medium such as a CD-ROM, or can be distributed by using an electric communication line such as the Internet.

- This application is based on Japanese Patent Application No. 2012-094853 filed on Apr. 18, 2012, the contents of which are incorporated herein by reference.

- SYMBOLS 1 Singing evaluation system, 10 ... Karaoke apparatus, 11 ... Sound source, 12 ... Speaker, 13 ... Microphone, 14 ... Display part, 15 ... Communication interface, 16 ... Vocal adapter, 17 ... CPU, 18 ... RAM, 19 ... ROM, DESCRIPTION OF SYMBOLS 20 ... Hard disk, 21 ... Sequencer, 30 ... Server apparatus, 35 ... Communication interface, 37 ... CPU, 38 ... RAM, 39 ... ROM, 40 ... Hard disk, 90 ... Network

Landscapes

- Engineering & Computer Science (AREA)

- Multimedia (AREA)

- Physics & Mathematics (AREA)

- Acoustics & Sound (AREA)

- Audiology, Speech & Language Pathology (AREA)

- Human Computer Interaction (AREA)

- Health & Medical Sciences (AREA)

- Signal Processing (AREA)

- Computational Linguistics (AREA)

- Business, Economics & Management (AREA)

- Educational Administration (AREA)

- Educational Technology (AREA)

- General Physics & Mathematics (AREA)

- Theoretical Computer Science (AREA)

- Auxiliary Devices For Music (AREA)

- Reverberation, Karaoke And Other Acoustics (AREA)

- Electrophonic Musical Instruments (AREA)

Abstract

This performance evaluation device is provided with: an expressiveness reference data acquisition means that acquires expressiveness reference data that shows expressiveness that should be achieved during the performance of a piece of music and timing at which the expressiveness should be achieved for that piece of music with utterance start timing for notes or note groups included in the piece of music as a standard; a pitch and volume data generating means that generates pitch and volume data showing the pitch and volume of the performance sound from the performance sound of the piece of music by a performer; and a performance evaluation means that improves evaluation of the performance of the piece of music by the performer when at least one of the characteristics of pitch and volume shown by the pitch and volume data generated by the pitch and volume data generating means exhibits expressiveness characteristics that should be achieved according to the expressiveness reference data within a prescribed time range shown by the expressiveness reference data for the piece of music.

Description

この発明は、楽曲演奏の巧拙を評価する技術に関する。

This invention relates to a technique for evaluating the skill of music performance.

例えば、歌唱者の歌唱演奏の巧拙を採点する採点機能を備えた歌唱用のカラオケ装置(以下、特に断らない限り、単に「カラオケ装置」という)に関わる技術が各種提案されている。この種の技術を開示した文献として、特許文献1がある。同文献に開示されたカラオケ装置は、利用者の歌唱音から抽出したピッチとガイドメロディとしてあらかじめ準備されたデータから抽出したピッチとの差分を歌唱曲のノート毎に算出し、この差分に基づいて基本得点を算出する。また、このカラオケ装置は、ビブラートやしゃくりなどの技法を駆使した歌唱が行われた場合にはその歌唱が行われた回数に応じたボーナスポイントを算出する。このカラオケ装置は、基本得点とボーナスポイントの合計点を最終的な評価結果として利用者に提示する。この技術によると、ビブラートやしゃくりなどといった難度の高い技法を駆使した歌唱を評価結果に反映させることができる。

For example, various techniques relating to a karaoke apparatus for singing having a scoring function for scoring the skill of a singer's singing performance (hereinafter simply referred to as “karaoke apparatus” unless otherwise specified) have been proposed. There is Patent Document 1 as a document disclosing this kind of technology. The karaoke device disclosed in this document calculates the difference between the pitch extracted from the user's singing sound and the pitch extracted from the data prepared in advance as the guide melody for each note of the singing song, and based on this difference Calculate the basic score. Moreover, this karaoke apparatus calculates the bonus point according to the frequency | count that the singing was performed, when the singing using techniques, such as a vibrato and a shawl, was performed. This karaoke device presents the total score of the basic score and bonus points to the user as the final evaluation result. According to this technology, singing that makes full use of highly difficult techniques such as vibrato and shackle can be reflected in the evaluation results.

また、歌唱音を示す波形から、ビブラートやしゃくりなどの技法を用いた歌唱が行われたことを検出する技術を開示した文献として、例えば特許文献2乃至6がある。

Further, for example, Patent Documents 2 to 6 disclose documents that disclose a technique for detecting a singing using a technique such as vibrato or shackle from a waveform indicating a singing sound.

しかしながら、特許文献1の技術の場合、本来であればビブラートやしゃくりなどの技法を駆使した歌唱を行うことが好ましくない歌唱箇所についてそのような歌唱が行われた場合であっても、ボーナスポイントが加算されてしまう。このため、評価結果として提示される得点が人間の感性によるものと乖離してしまうという問題があった。

However, in the case of the technique of Patent Document 1, even if such singing is performed for a singing place where it is not preferable to perform singing using techniques such as vibrato and shackle, It will be added. For this reason, there is a problem that the score presented as the evaluation result deviates from that due to human sensitivity.

本発明は、このような課題に鑑みてなされたものであり、カラオケ歌唱等の楽曲演奏の評価において、人間の感性によるものにより近い評価結果を提示できるようにすることを目的とする。

The present invention has been made in view of such a problem, and an object of the present invention is to make it possible to present an evaluation result closer to human sensitivity in the evaluation of music performance such as karaoke singing.

上記課題を解決するため、本発明は、楽曲の演奏中に行われるべき表情演奏と当該表情演奏が前記楽曲において行われるべきタイミングを前記楽曲に含まれるノートまたはノート群の発音開始時刻を基準として示す表情演奏リファレンスデータを取得する表情演奏リファレンスデータ取得手段と、演奏者による前記楽曲の演奏音から当該演奏音のピッチおよび音量を示すピッチ音量データを生成するピッチ音量データ生成手段と、前記ピッチ音量データ生成手段により生成された前記ピッチ音量データにより示されるピッチおよび音量の少なくとも一方の特性が、前記楽曲における前記表情演奏リファレンスデータにより示される所定時間範囲内において前記表情演奏リファレンスデータにより行われるべきであるとされる表情演奏の特性を示す場合、前記演奏者による前記楽曲の演奏に対する評価を向上させる演奏評価手段とを備える演奏評価装置を提供する。

In order to solve the above-mentioned problems, the present invention relates to the expression performance to be performed during the performance of the music and the timing at which the expression performance should be performed in the music with reference to the pronunciation start time of the note or note group included in the music. Facial expression performance reference data acquisition means for acquiring facial expression performance reference data to indicate, pitch volume data generation means for generating pitch volume data indicating the pitch and volume of the performance sound from the performance sound of the music by the performer, and the pitch volume At least one characteristic of pitch and volume indicated by the pitch volume data generated by the data generation means should be performed by the facial expression performance reference data within a predetermined time range indicated by the facial expression performance reference data in the music piece. Characteristics of facial expression performance If shown, it provides a performance evaluation apparatus and a playing evaluating means to improve the evaluation of the performance of the music by the player.

また、本発明は、上記の演奏評価装置と、楽曲の伴奏を指示する伴奏データを取得する伴奏データ取得手段と、前記伴奏データの指示に従い伴奏の楽音を示す音信号を出力する音信号出力手段とを備え、前記ピッチ音量データ生成手段は、前記音信号出力手段から出力された音信号に従いスピーカから放音された伴奏に応じて前記演奏者により行われた前記楽曲の演奏音のピッチおよび音量を示すピッチ音量データを生成するカラオケ装置を提供する。

The present invention also provides the performance evaluation apparatus, accompaniment data acquisition means for acquiring accompaniment data for instructing accompaniment of music, and sound signal output means for outputting a sound signal indicating a musical sound of accompaniment according to the instruction of the accompaniment data The pitch volume data generation means includes the pitch and volume of the performance sound of the music performed by the performer according to the accompaniment emitted from a speaker according to the sound signal output from the sound signal output means A karaoke apparatus for generating pitch sound volume data indicating the above is provided.

また、本発明は、任意数の任意の演奏者による楽曲の演奏音の各々に関し、前記楽曲に含まれるノートまたはノート群の発音開始時刻を基準とする一のタイミングにおいて一の表情演奏が出現したことを示す表情演奏出現データを取得する表情演奏出現データ取得手段と、前記表情演奏出現データ取得手段により取得された任意数の表情演奏出現データに基づき、前記楽曲に含まれるノートまたはノート群の各々に関し、当該ノートまたはノート群の発音開始時刻を基準とするいずれのタイミングでいずれの表情演奏がいずれの頻度で出現しているかを特定し、当該特定した情報に従い、前記楽曲の演奏中に行われるべき表情演奏と当該表情演奏が前記楽曲において行われるべきタイミングを前記楽曲に含まれるノートまたはノート群の発音開始時刻を基準として示す表情演奏リファレンスデータを生成する表情演奏リファレンスデータ生成手段と、前記表情演奏リファレンスデータ生成手段により生成された表情演奏リファレンスデータを演奏評価装置に送信する送信手段とを備えるサーバ装置を提供する。

また、本発明は、歌唱評価システムであって、楽曲の演奏中に行われるべき表情演奏と当該表情演奏が前記楽曲において行われるべきタイミングを前記楽曲に含まれるノートまたはノート群の発音開始時刻を基準として示す第一表情演奏リファレンスデータを取得する表情演奏リファレンスデータ取得手段と、 演奏者による前記楽曲の演奏音から当該演奏音のピッチおよび音量を示すピッチ音量データを生成するピッチ音量データ生成手段と、前記ピッチ音量データ生成手段により生成された前記ピッチ音量データにより示されるピッチおよび音量の少なくとも一方の特性が、前記楽曲における前記第一表情演奏リファレンスデータにより示される所定時間範囲内において前記第一表情演奏リファレンスデータにより行われるべきであるとされる表情演奏の特性を示す場合、前記演奏者による前記楽曲の演奏に対する評価を向上させる演奏評価手段と、任意数の任意の演奏者による楽曲の演奏音の各々に関し、前記任意の演奏者による前記楽曲に含まれるノートまたはノート群の発音開始時刻を基準とする一のタイミングにおいて一の表情演奏が出現したことを示す表情演奏出現データを取得する表情演奏出現データ取得手段と、前記表情演奏出現データ取得手段により取得された任意数の表情演奏出現データに基づき、前記任意の演奏者による楽曲に含まれるノートまたはノート群の各々に関し、当該ノートまたはノート群の発音開始時刻を基準とするいずれのタイミングでいずれの表情演奏がいずれの頻度で出現しているかを特定し、当該特定した情報に従い、前記任意の演奏者による楽曲の演奏中に行われるべき表情演奏と当該表情演奏が前記任意の演奏者による楽曲において行われるべきタイミングを前記任意の演奏者による楽曲に含まれるノートまたはノート群の発音開始時刻を基準として示す第二表情演奏リファレンスデータを生成する表情演奏リファレンスデータ生成手段と、を備える歌唱評価システムを提供する。

また、本発明は、楽曲の演奏中に行われるべき表情演奏と当該表情演奏が前記楽曲において行われるべきタイミングを前記楽曲に含まれるノートまたはノート群の発音開始時刻を基準として示す表情演奏リファレンスデータを取得し、演奏者による前記楽曲の演奏音から当該演奏音のピッチおよび音量を示すピッチ音量データを生成し、前記ピッチ音量データにより示されるピッチおよび音量の少なくとも一方の特性が、前記楽曲における前記表情演奏リファレンスデータにより示される所定時間範囲内において前記表情演奏リファレンスデータにより行われるべきであるとされる表情演奏の特性を示す場合、前記演奏者による前記楽曲の演奏に対する評価を向上させる演奏評価方法を提供する。

また、本発明は、コンピュータが実行可能なプログラムであって、楽曲の演奏中に行われるべき表情演奏と当該表情演奏が前記楽曲において行われるべきタイミングを前記楽曲に含まれるノートまたはノート群の発音開始時刻を基準として示す表情演奏リファレンスデータを取得する表情演奏リファレンスデータ取得処理と、演奏者による前記楽曲の演奏音から当該演奏音のピッチおよび音量を示すピッチ音量データを生成するピッチ音量データ生成処理と、前記ピッチ音量データ生成手段により生成された前記ピッチ音量データにより示されるピッチおよび音量の少なくとも一方の特性が、前記楽曲における前記表情演奏リファレンスデータにより示される所定時間範囲内において前記表情演奏リファレンスデータにより行われるべきであるとされる表情演奏の特性を示す場合、前記演奏者による前記楽曲の演奏に対する評価を向上させる演奏評価処理とを前記コンピュータに実行させるプログラムを提供する。 Further, the present invention relates to each of the performance sounds of music by an arbitrary number of arbitrary performers, and one facial expression performance appears at one timing based on the pronunciation start time of notes or note groups included in the music Each of a note or a group of notes included in the music based on an expression performance appearance data acquisition means for acquiring expression performance appearance data indicating that, and an arbitrary number of expression performance appearance data acquired by the expression performance appearance data acquisition means Is performed during the performance of the musical piece according to the specified information, specifying which facial expression performance appears at which timing with reference to the sound generation start time of the note or group of notes. The expression of a note or a group of notes included in the music indicates the power expression performance and the timing at which the expression performance should be performed in the music A server apparatus comprising facial expression performance reference data generating means for generating facial expression performance reference data indicating the start time as reference, and transmitting means for transmitting facial expression performance reference data generated by the facial expression performance reference data generating means to the performance evaluation apparatus I will provide a.

Further, the present invention is a singing evaluation system, wherein a facial expression performance to be performed during the performance of a musical piece and a timing at which the facial expression performance is to be performed in the musical piece are determined as a pronunciation start time of a note or a note group included in the musical piece. Facial expression performance reference data acquisition means for acquiring first facial expression performance reference data shown as a reference; pitch volume data generation means for generating pitch volume data indicating the pitch and volume of the performance sound from the performance sound of the music by the performer; And at least one of the pitch and volume characteristics indicated by the pitch volume data generated by the pitch volume data generation means is within the predetermined time range indicated by the first facial expression performance reference data in the music. Should be done with performance reference data The performance evaluation means for improving the performance of the music performed by the performer and each of the performance sounds of the music performed by an arbitrary number of the performers. Expression performance appearance data acquisition means for acquiring expression performance appearance data indicating that one expression performance has appeared at one timing based on a pronunciation start time of a note or a group of notes included in the music, and the expression performance appearance data Based on the arbitrary number of facial expression performance appearance data acquired by the acquisition means, any timing with respect to each note or group of notes included in the musical piece by the arbitrary player based on the pronunciation start time of the notes or group of notes And which facial expression performance appears at what frequency, and according to the identified information, the arbitrary performance The facial expression performance to be performed during the performance of the musical piece by and the timing at which the facial expression performance should be performed in the musical piece by the arbitrary player, based on the pronunciation start time of the note or the note group included in the musical piece by the arbitrary player There is provided a singing evaluation system comprising expression performance reference data generating means for generating second expression performance reference data to be shown.

The present invention also provides facial expression performance reference data that indicates the facial expression performance to be performed during the performance of a musical piece and the timing at which the facial expression performance is to be performed in the musical piece, with reference to the pronunciation start time of the note or note group included in the musical piece. And generating pitch volume data indicating the pitch and volume of the performance sound from the performance sound of the music by the performer, and at least one of the characteristics of the pitch and volume indicated by the pitch volume data is A performance evaluation method for improving the performance of the music performed by the performer when the characteristics of the facial expression performance that should be performed by the expression performance reference data within a predetermined time range indicated by the expression performance reference data I will provide a.

In addition, the present invention is a computer-executable program, in which a facial expression performance to be performed during the performance of a musical piece and a timing at which the facial expression performance should be performed in the musical piece are pronounced in a note or a group of notes included in the musical piece Expression performance reference data acquisition processing for acquiring expression performance reference data indicating the start time as a reference, and pitch volume data generation processing for generating pitch volume data indicating the pitch and volume of the performance sound from the performance sound of the music by the performer And at least one of the pitch and volume characteristics indicated by the pitch volume data generated by the pitch volume data generation means is within the predetermined time range indicated by the expression performance reference data in the music piece. Should be done by When showing the characteristics of the expression performance that is, to provide a program for executing a performance evaluation process for improving the evaluation of the performance of the music by the player to the computer.

また、本発明は、歌唱評価システムであって、楽曲の演奏中に行われるべき表情演奏と当該表情演奏が前記楽曲において行われるべきタイミングを前記楽曲に含まれるノートまたはノート群の発音開始時刻を基準として示す第一表情演奏リファレンスデータを取得する表情演奏リファレンスデータ取得手段と、 演奏者による前記楽曲の演奏音から当該演奏音のピッチおよび音量を示すピッチ音量データを生成するピッチ音量データ生成手段と、前記ピッチ音量データ生成手段により生成された前記ピッチ音量データにより示されるピッチおよび音量の少なくとも一方の特性が、前記楽曲における前記第一表情演奏リファレンスデータにより示される所定時間範囲内において前記第一表情演奏リファレンスデータにより行われるべきであるとされる表情演奏の特性を示す場合、前記演奏者による前記楽曲の演奏に対する評価を向上させる演奏評価手段と、任意数の任意の演奏者による楽曲の演奏音の各々に関し、前記任意の演奏者による前記楽曲に含まれるノートまたはノート群の発音開始時刻を基準とする一のタイミングにおいて一の表情演奏が出現したことを示す表情演奏出現データを取得する表情演奏出現データ取得手段と、前記表情演奏出現データ取得手段により取得された任意数の表情演奏出現データに基づき、前記任意の演奏者による楽曲に含まれるノートまたはノート群の各々に関し、当該ノートまたはノート群の発音開始時刻を基準とするいずれのタイミングでいずれの表情演奏がいずれの頻度で出現しているかを特定し、当該特定した情報に従い、前記任意の演奏者による楽曲の演奏中に行われるべき表情演奏と当該表情演奏が前記任意の演奏者による楽曲において行われるべきタイミングを前記任意の演奏者による楽曲に含まれるノートまたはノート群の発音開始時刻を基準として示す第二表情演奏リファレンスデータを生成する表情演奏リファレンスデータ生成手段と、を備える歌唱評価システムを提供する。

また、本発明は、楽曲の演奏中に行われるべき表情演奏と当該表情演奏が前記楽曲において行われるべきタイミングを前記楽曲に含まれるノートまたはノート群の発音開始時刻を基準として示す表情演奏リファレンスデータを取得し、演奏者による前記楽曲の演奏音から当該演奏音のピッチおよび音量を示すピッチ音量データを生成し、前記ピッチ音量データにより示されるピッチおよび音量の少なくとも一方の特性が、前記楽曲における前記表情演奏リファレンスデータにより示される所定時間範囲内において前記表情演奏リファレンスデータにより行われるべきであるとされる表情演奏の特性を示す場合、前記演奏者による前記楽曲の演奏に対する評価を向上させる演奏評価方法を提供する。

また、本発明は、コンピュータが実行可能なプログラムであって、楽曲の演奏中に行われるべき表情演奏と当該表情演奏が前記楽曲において行われるべきタイミングを前記楽曲に含まれるノートまたはノート群の発音開始時刻を基準として示す表情演奏リファレンスデータを取得する表情演奏リファレンスデータ取得処理と、演奏者による前記楽曲の演奏音から当該演奏音のピッチおよび音量を示すピッチ音量データを生成するピッチ音量データ生成処理と、前記ピッチ音量データ生成手段により生成された前記ピッチ音量データにより示されるピッチおよび音量の少なくとも一方の特性が、前記楽曲における前記表情演奏リファレンスデータにより示される所定時間範囲内において前記表情演奏リファレンスデータにより行われるべきであるとされる表情演奏の特性を示す場合、前記演奏者による前記楽曲の演奏に対する評価を向上させる演奏評価処理とを前記コンピュータに実行させるプログラムを提供する。 Further, the present invention relates to each of the performance sounds of music by an arbitrary number of arbitrary performers, and one facial expression performance appears at one timing based on the pronunciation start time of notes or note groups included in the music Each of a note or a group of notes included in the music based on an expression performance appearance data acquisition means for acquiring expression performance appearance data indicating that, and an arbitrary number of expression performance appearance data acquired by the expression performance appearance data acquisition means Is performed during the performance of the musical piece according to the specified information, specifying which facial expression performance appears at which timing with reference to the sound generation start time of the note or group of notes. The expression of a note or a group of notes included in the music indicates the power expression performance and the timing at which the expression performance should be performed in the music A server apparatus comprising facial expression performance reference data generating means for generating facial expression performance reference data indicating the start time as reference, and transmitting means for transmitting facial expression performance reference data generated by the facial expression performance reference data generating means to the performance evaluation apparatus I will provide a.

Further, the present invention is a singing evaluation system, wherein a facial expression performance to be performed during the performance of a musical piece and a timing at which the facial expression performance is to be performed in the musical piece are determined as a pronunciation start time of a note or a note group included in the musical piece. Facial expression performance reference data acquisition means for acquiring first facial expression performance reference data shown as a reference; pitch volume data generation means for generating pitch volume data indicating the pitch and volume of the performance sound from the performance sound of the music by the performer; And at least one of the pitch and volume characteristics indicated by the pitch volume data generated by the pitch volume data generation means is within the predetermined time range indicated by the first facial expression performance reference data in the music. Should be done with performance reference data The performance evaluation means for improving the performance of the music performed by the performer and each of the performance sounds of the music performed by an arbitrary number of the performers. Expression performance appearance data acquisition means for acquiring expression performance appearance data indicating that one expression performance has appeared at one timing based on a pronunciation start time of a note or a group of notes included in the music, and the expression performance appearance data Based on the arbitrary number of facial expression performance appearance data acquired by the acquisition means, any timing with respect to each note or group of notes included in the musical piece by the arbitrary player based on the pronunciation start time of the notes or group of notes And which facial expression performance appears at what frequency, and according to the identified information, the arbitrary performance The facial expression performance to be performed during the performance of the musical piece by and the timing at which the facial expression performance should be performed in the musical piece by the arbitrary player, based on the pronunciation start time of the note or the note group included in the musical piece by the arbitrary player There is provided a singing evaluation system comprising expression performance reference data generating means for generating second expression performance reference data to be shown.

The present invention also provides facial expression performance reference data that indicates the facial expression performance to be performed during the performance of a musical piece and the timing at which the facial expression performance is to be performed in the musical piece, with reference to the pronunciation start time of the note or note group included in the musical piece. And generating pitch volume data indicating the pitch and volume of the performance sound from the performance sound of the music by the performer, and at least one of the characteristics of the pitch and volume indicated by the pitch volume data is A performance evaluation method for improving the performance of the music performed by the performer when the characteristics of the facial expression performance that should be performed by the expression performance reference data within a predetermined time range indicated by the expression performance reference data I will provide a.

In addition, the present invention is a computer-executable program, in which a facial expression performance to be performed during the performance of a musical piece and a timing at which the facial expression performance should be performed in the musical piece are pronounced in a note or a group of notes included in the musical piece Expression performance reference data acquisition processing for acquiring expression performance reference data indicating the start time as a reference, and pitch volume data generation processing for generating pitch volume data indicating the pitch and volume of the performance sound from the performance sound of the music by the performer And at least one of the pitch and volume characteristics indicated by the pitch volume data generated by the pitch volume data generation means is within the predetermined time range indicated by the expression performance reference data in the music piece. Should be done by When showing the characteristics of the expression performance that is, to provide a program for executing a performance evaluation process for improving the evaluation of the performance of the music by the player to the computer.

本発明によれば、個々の楽曲の演奏において、望ましいタイミングで望ましい表情演奏が行われると、演奏者に対し高い評価を与える演奏評価装置が実現される。その結果、演奏者により表情演奏が行われた場合、人間の感性との乖離の少ない評価がなされる。

According to the present invention, when a desired facial expression performance is performed at a desired timing in the performance of each piece of music, a performance evaluation device that gives a high evaluation to the performer is realized. As a result, when an expression performance is performed by the performer, the evaluation is performed with little deviation from human sensitivity.