WO2012147596A1 - Image data transmission device, image data transmission method, image data reception device, and image data reception method - Google Patents

- Publication number

- WO2012147596A1 (application PCT/JP2012/060516)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- image data

- stream

- elementary

- information

- elementary stream

Classifications

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N13/00—Stereoscopic video systems; Multi-view video systems; Details thereof

- H04N13/10—Processing, recording or transmission of stereoscopic or multi-view image signals

- H04N13/194—Transmission of image signals

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/20—Servers specifically adapted for the distribution of content, e.g. VOD servers; Operations thereof

- H04N21/23—Processing of content or additional data; Elementary server operations; Server middleware

- H04N21/235—Processing of additional data, e.g. scrambling of additional data or processing content descriptors

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N13/00—Stereoscopic video systems; Multi-view video systems; Details thereof

- H04N13/10—Processing, recording or transmission of stereoscopic or multi-view image signals

- H04N13/106—Processing image signals

- H04N13/128—Adjusting depth or disparity

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N13/00—Stereoscopic video systems; Multi-view video systems; Details thereof

- H04N13/10—Processing, recording or transmission of stereoscopic or multi-view image signals

- H04N13/106—Processing image signals

- H04N13/161—Encoding, multiplexing or demultiplexing different image signal components

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N13/00—Stereoscopic video systems; Multi-view video systems; Details thereof

- H04N13/10—Processing, recording or transmission of stereoscopic or multi-view image signals

- H04N13/106—Processing image signals

- H04N13/172—Processing image signals comprising non-image signal components, e.g. headers or format information

- H04N13/178—Metadata, e.g. disparity information

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N19/00—Methods or arrangements for coding, decoding, compressing or decompressing digital video signals

- H04N19/46—Embedding additional information in the video signal during the compression process

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N19/00—Methods or arrangements for coding, decoding, compressing or decompressing digital video signals

- H04N19/50—Methods or arrangements for coding, decoding, compressing or decompressing digital video signals using predictive coding

- H04N19/597—Methods or arrangements for coding, decoding, compressing or decompressing digital video signals using predictive coding specially adapted for multi-view video sequence encoding

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N19/00—Methods or arrangements for coding, decoding, compressing or decompressing digital video signals

- H04N19/70—Methods or arrangements for coding, decoding, compressing or decompressing digital video signals characterised by syntax aspects related to video coding, e.g. related to compression standards

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/20—Servers specifically adapted for the distribution of content, e.g. VOD servers; Operations thereof

- H04N21/23—Processing of content or additional data; Elementary server operations; Server middleware

- H04N21/236—Assembling of a multiplex stream, e.g. transport stream, by combining a video stream with other content or additional data, e.g. inserting a URL [Uniform Resource Locator] into a video stream, multiplexing software data into a video stream; Remultiplexing of multiplex streams; Insertion of stuffing bits into the multiplex stream, e.g. to obtain a constant bit-rate; Assembling of a packetised elementary stream

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/20—Servers specifically adapted for the distribution of content, e.g. VOD servers; Operations thereof

- H04N21/23—Processing of content or additional data; Elementary server operations; Server middleware

- H04N21/236—Assembling of a multiplex stream, e.g. transport stream, by combining a video stream with other content or additional data, e.g. inserting a URL [Uniform Resource Locator] into a video stream, multiplexing software data into a video stream; Remultiplexing of multiplex streams; Insertion of stuffing bits into the multiplex stream, e.g. to obtain a constant bit-rate; Assembling of a packetised elementary stream

- H04N21/2362—Generation or processing of Service Information [SI]

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/40—Client devices specifically adapted for the reception of or interaction with content, e.g. set-top-box [STB]; Operations thereof

- H04N21/43—Processing of content or additional data, e.g. demultiplexing additional data from a digital video stream; Elementary client operations, e.g. monitoring of home network or synchronising decoder's clock; Client middleware

- H04N21/435—Processing of additional data, e.g. decrypting of additional data, reconstructing software from modules extracted from the transport stream

Definitions

- the present technology relates to an image data transmission device, an image data transmission method, an image data reception device, and an image data reception method, and more particularly to an image data transmission device that transmits stereoscopic image data, scalable encoded image data, and the like.

- H.264 has been used as a video encoding method.

- H.264/AVC: Advanced Video Coding

- H.264/MVC: Multi-view Video Coding

- in MVC, a mechanism for collectively encoding multi-view image data is employed.

- multi-view image data is encoded as image data of one base view and image data of one or more non-base views.

- H.264/SVC: Scalable Video Coding

- the transport stream (Transport Stream)

- PSI: Program Specific Information

- the PMT may not always be dynamically updated depending on the transmission side equipment.

- the following problem can arise when the delivery content is switched from a stereoscopic (3D) image to a two-dimensional (2D) image. That is, the receiver may assume that an elementary stream whose stream type (Stream_Type) is “0x20” is still being delivered together with the elementary stream whose stream type (Stream_Type) is “0x1B”, and continue to wait for that data.

- FIG. 27 shows a configuration example of a video elementary stream and a PMT (Program Map Table) in the transport stream.

- the period of the access units (AU: Access Unit) “001” to “009” of the video elementary streams ES1 and ES2 is a period in which two video elementary streams exist. This period is, for example, the main period of a 3D program, and these two streams constitute a stream of stereoscopic (3D) image data.

- the subsequent period of access units “010” to “014” of the video elementary stream ES1 is a period in which only one video elementary stream exists. This period is, for example, a commercial (CM) period inserted between the main periods of the 3D program, and this one stream constitutes a stream of two-dimensional (2D) image data.

- the subsequent period of access units “015” to “016” of the video elementary streams ES1 and ES2 is a period in which two video elementary streams exist. This period is, for example, the main period of a 3D program, and these two streams constitute a stream of stereoscopic (3D) image data.

- the period (for example, 100 msec) for updating the registration of the video elementary stream in the PMT cannot follow the video frame period (for example, 33.3 msec).

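- the configuration of the elementary streams in the transport stream and the configuration of the PMT are therefore asynchronous, so the PMT cannot be relied upon to reflect the current stream configuration.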
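
The timing mismatch can be illustrated with a small simulation. This is only a sketch under stated assumptions: it uses the example figures from the text (a PMT update period of about 100 msec against a frame period of about 33.3 msec, that is, one PMT update every three frames) and the access-unit layout described for FIG. 27. The function names and the `first_refresh_au` parameter are hypothetical and do not come from the patent.

```python
FRAMES_PER_PMT_UPDATE = 3  # about 100 msec PMT period / 33.3 msec frame period


def actual_stream_count(au):
    """Video elementary streams present at access unit `au` (FIG. 27 layout)."""
    return 1 if 10 <= au <= 14 else 2  # AUs 010-014 are the 2D (CM) period


def stale_access_units(first_refresh_au, last_au=16):
    """List the access units at which the PMT disagrees with the real streams.

    Assumption: the PMT is correct at the start and is refreshed only every
    FRAMES_PER_PMT_UPDATE access units, beginning at `first_refresh_au`.
    """
    stale = []
    advertised = actual_stream_count(1)
    for au in range(1, last_au + 1):
        is_refresh = (
            au >= first_refresh_au
            and (au - first_refresh_au) % FRAMES_PER_PMT_UPDATE == 0
        )
        if is_refresh:
            advertised = actual_stream_count(au)
        if advertised != actual_stream_count(au):
            stale.append(au)
    return stale


print(stale_access_units(first_refresh_au=2))  # [10, 15, 16]
print(stale_access_units(first_refresh_au=1))  # [15]
```

Whichever phase the PMT updates happen to have, at least one access unit near a 2D/3D switch is described incorrectly, which is the situation the text identifies as a problem.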

- a conventional AVC (in this case, a high profile) stream may be considered.

- even when stereoscopic (3D) image data is used, it may be recommended that the base-view video elementary stream remain the same as the conventional AVC (2D) video elementary stream.

- the stream of stereoscopic image data includes an AVC (2D) video elementary stream and a non-base-view video elementary stream (Non-Base-view sub-bitstream).

- TS (Transport Stream)

- a stream of stereoscopic image data is composed of some of the video elementary streams. For example, consider a case where the following video stream is multiplexed on one transport stream.

- the video elementary stream of “PID0” is a conventional two-dimensional (2D) image data stream itself.

- This video elementary stream constitutes a stream of stereoscopic (3D) image data together with the non-base-view video elementary stream of “PID2” (Non-Base view sub-bitstream).

- AVC 3D Frame Compatible indicates stereoscopic (3D) image data in a frame-compatible format such as the side-by-side method or the top-and-bottom method.

- the encoding method of the base-view image data and the encoding method of the non-base-view image data are both MPEG4-AVC.

- the encoding method of the base-view image data and the encoding method of the non-base-view image data are both another encoding method, such as MPEG2 video.

- the encoding method of the base-view image data and the encoding method of the non-base-view image data are not the same and differ from each other.

- the purpose of the present technology is to enable a receiver corresponding to MVC or SVC, for example, to accurately respond to dynamic changes in distribution contents and perform correct stream reception.

- the concept of the present technology resides in an image data transmission device including: an encoding unit that generates a first elementary stream including first image data and a predetermined number of second elementary streams each including second image data and/or metadata related to the first image data; and a transmission unit that transmits a transport stream having the packets obtained by packetizing each elementary stream generated by the encoding unit; wherein the encoding unit inserts stream association information indicating the association of each elementary stream into at least the first elementary stream.

- the encoding unit generates a first elementary stream including the first image data and a predetermined number of second elementary streams each including second image data and/or metadata related to the first image data.

- the transmission unit transmits a transport stream including each packet obtained by packetizing each elementary stream generated by the encoding unit.

- arbitrary encoding methods may be combined as the encoding method of the first image data included in the first elementary stream and the encoding method of the second image data included in the predetermined number of second elementary streams.

- the encoding method is only MPEG4-AVC

- the encoding method is only MPEG2 video

- a combination of these encoding methods can be considered. Note that the encoding method is not limited to these MPEG4-AVC and MPEG2 video.

- the first image data is base view image data constituting stereoscopic (3D) image data

- the second image data is image data of a view other than the base view (a non-base view) constituting the stereoscopic (3D) image data.

- the first image data is image data of one of the left eye and the right eye for obtaining a stereoscopic image

- the second image data is image data of the other of the left eye and the right eye for obtaining the stereoscopic image.

- the metadata is disparity information (disparity vector, depth data, etc.) corresponding to stereoscopic image data.

- the first image data is the lowest layer encoded image data constituting the scalable encoded image data

- the second image data is encoded image data of a layer other than the lowest layer constituting the scalable encoded image data.

- the stream association information may include position information indicating, for multi-view stereoscopic display, which view position the view based on the image data in the elementary stream carrying this stream association information should be displayed at.

- the stream association information indicating the association of each elementary stream is inserted into at least the first elementary stream by the encoding unit.

- the stream association information indicates an association of each elementary stream by using an identifier for identifying each elementary stream.

- a descriptor indicating the correspondence between the identifier of each elementary stream and the packet identifier or component tag of each elementary stream is inserted into the transport stream.

- this descriptor is inserted under the program map table. Note that this correspondence may be defined in advance. As a result, the registration status of each elementary stream in the transport stream layer and the stream association information are linked.
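
The link between the elementary-stream identifiers used in the stream association information and the packet identifiers at the transport stream layer can be pictured as a lookup table. The sketch below is illustrative only: the dictionary layout, the function name, and the example identifier and PID values are assumptions, not the descriptor syntax defined in the patent.

```python
def resolve_associated_pids(es_id_to_pid, associated_es_ids):
    """Translate ES identifiers from the association info into packet IDs.

    `es_id_to_pid` stands in for the correspondence carried by the descriptor
    under the program map table (hypothetical in-memory form).
    """
    missing = [es_id for es_id in associated_es_ids if es_id not in es_id_to_pid]
    if missing:
        raise KeyError(f"no PID registered for ES_ID(s): {missing}")
    return [es_id_to_pid[es_id] for es_id in associated_es_ids]


# Hypothetical table: ES_ID 0 is the first elementary stream, 1 and 2 are others.
es_id_table = {0: 0x0100, 1: 0x0101, 2: 0x0102}
print([hex(pid) for pid in resolve_associated_pids(es_id_table, [1, 2])])
```

Raising an error for an unregistered identifier is a design choice of this sketch; a receiver could equally ignore the unknown stream and fall back to the first elementary stream alone.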

- the receiving side can easily determine whether or not the transport stream includes the second elementary stream related to the first elementary stream based on the stream association information.

- the receiving side can accurately respond to a change in the configuration of the elementary streams, that is, a dynamic change in the delivery content, based on this stream association information, and can perform correct stream reception.
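
As a rough illustration of this receiver-side behaviour, the sketch below decides, access unit by access unit, which streams to wait for, using only the association information carried in the first elementary stream. The dictionary layout, the mode labels, and the ES identifier `1` are hypothetical; the actual `stream_association()` syntax is defined elsewhere in the patent.

```python
def reception_plan(association_by_au):
    """Build a per-access-unit plan from stream association information.

    `association_by_au` maps an access-unit number to the list of ES
    identifiers of the associated second elementary streams (assumed layout).
    An empty list means that only the first elementary stream is present.
    """
    plan = {}
    for au, associated_ids in sorted(association_by_au.items()):
        plan[au] = {
            "mode": "first_plus_second" if associated_ids else "first_only",
            "wait_for": list(associated_ids),
        }
    return plan


# Association info around the 3D -> 2D -> 3D switches described for FIG. 27.
info = {9: [1], 10: [], 14: [], 15: [1]}
for au, entry in reception_plan(info).items():
    print(au, entry["mode"], entry["wait_for"])
```

Because the information travels with each picture or GOP, the plan changes at exactly the access unit where the stream configuration changes, without depending on when the PMT is next updated.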

- the stream association information may include prelude information informing that the change occurs before the change of the association of each elementary stream actually occurs.

- the encoding unit may insert the stream association information into the elementary stream in units of pictures or GOPs.

- the configuration of elementary streams for example, the change in the number of views of stereoscopic image data or the change in the number of layers of scalable encoded image data can be managed in units of pictures or GOPs.

- the transmission unit may insert into the transport stream a descriptor indicating whether stream association information is inserted in the elementary stream, or whether the stream association information inserted in the elementary stream has changed. This descriptor prompts the receiving side to refer to the stream association information inserted in the elementary stream, so that a stable reception operation can be performed on the receiving side.

- the stream association information may further include control information of output resolutions of the first image data and the second image data.

- the receiving side can adjust the output resolution of the first image data and the second image data to a predetermined resolution based on the control information.

- the stream association information may further include control information that specifies whether display is mandatory for each of a predetermined number of second image data.

- an image data reception device including: a receiving unit that receives a transport stream having the packets obtained by packetizing a first elementary stream including first image data and a predetermined number of second elementary streams each including second image data and/or metadata related to the first image data, in which stream association information indicating the association of each elementary stream is inserted in at least the first elementary stream; and a data acquisition unit that, based on the stream association information, acquires the first image data from the first elementary stream received by the receiving unit and acquires the second image data and/or metadata related to the first image data from the predetermined number of second elementary streams received by the receiving unit.

- the transport stream is received by the receiving unit.

- the transport stream has the packets obtained by packetizing a first elementary stream including first image data and a predetermined number of second elementary streams each including second image data and/or metadata related to the first image data.

- Stream association information indicating the association of each elementary stream is inserted into at least the first elementary stream.

- the image data and / or metadata is acquired from the transport stream received by the receiving unit by the data acquiring unit.

- first image data is acquired from the first elementary stream

- second image data and/or metadata is acquired from the predetermined number of second elementary streams.

- stream association information indicating the association of each elementary stream is inserted into at least the first elementary stream. Therefore, based on this stream association information, it can be easily determined whether or not the transport stream includes a second elementary stream related to the first elementary stream. Also, since the stream association information is inserted into the elementary stream itself, it is possible to accurately respond to a change in the configuration of the elementary streams, that is, a dynamic change in the distribution content, based on this stream association information, and correct stream reception can be performed.

- the present technology may further include, for example, a resolution adjustment unit that adjusts and outputs the resolutions of the first image data and the second image data acquired by the data acquisition unit

- the stream association information includes control information on the output resolutions of the first image data and the second image data

- the resolution adjustment unit may adjust the resolutions of the first image data and the second image data based on this output resolution control information. In this case, even if the resolution of the first image data differs from that of the predetermined number of second image data, the resolution adjustment unit can align their output resolutions.
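
A minimal sketch of such a resolution adjustment follows. It assumes that the control information has already been reduced to a target width and height, and it uses nearest-neighbour sampling purely for brevity; the text does not prescribe a scaling method, and the function names are hypothetical.

```python
def scale_nearest(image, out_width, out_height):
    """Resize a 2D list of pixels using nearest-neighbour sampling."""
    in_height, in_width = len(image), len(image[0])
    return [
        [image[y * in_height // out_height][x * in_width // out_width]
         for x in range(out_width)]
        for y in range(out_height)
    ]


def adjust_output_resolution(first_image, second_images, out_width, out_height):
    """Bring the first image and every second image to one output resolution."""
    first = scale_nearest(first_image, out_width, out_height)
    seconds = [scale_nearest(img, out_width, out_height) for img in second_images]
    return first, seconds


# A 4x4 first image and a 2x2 second image, both output at 4x4.
first = [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
second = [[5, 6], [7, 8]]
adjusted_first, adjusted_seconds = adjust_output_resolution(first, [second], 4, 4)
print(adjusted_seconds[0])
```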

- the present technology may further include, for example, an image display mode selection unit that selects an image display mode based on the first image data and the second image data acquired by the data acquisition unit. The stream association information includes control information that specifies, for each of the predetermined number of second image data, whether display is mandatory, and the image display mode selection unit may be restricted in its selection of the image display mode based on this control information.

- the metadata acquired by the data acquisition unit is disparity information corresponding to the stereoscopic image data, and the device may further include a post-processing unit that uses this disparity information to perform interpolation processing on the first image data and the second image data acquired by the data acquisition unit to obtain display image data of a predetermined number of views.

- on the reception side, it is possible to accurately cope with a configuration change of the elementary streams, that is, a dynamic change of the delivery content, and stream reception can be performed satisfactorily.

- FIG. 1 is a block diagram showing a configuration example of the image transmission / reception system as a first embodiment of this invention. FIG. 2 is a diagram showing an example in which a program is composed of two video elementary streams. FIG. 3 is a block diagram showing a configuration example of the transmission data generation unit of the broadcasting station constituting the image transmission / reception system. FIG. 4 is a diagram showing a configuration example of a general transport stream including a video elementary stream, a graphics elementary stream, an audio elementary stream, and the like. A further figure shows a configuration example of a transport stream in which stream association information is inserted in an elementary stream and an ES_ID descriptor and an ES_association descriptor are inserted in the transport stream.

- FIG. 10 is a diagram illustrating a structure example (Syntax) of “user_data ()”.

- FIG. 10 is a diagram illustrating a structure example (Syntax) of stream association information “stream_association ()”. A further figure shows the content (Semantics) of each piece of information in the structure example (Syntax) of the stream association information “stream_association ()”. A further figure shows an example in which the transport stream has the stream of the base view (base

- the transport stream TS includes an MVC base-view stream ES1, an MVC non-base-view stream ES2, and a metadata stream ES3.

- modes for carrying out the present technology (hereinafter referred to as “embodiments”) will be described. The description will be given in the following order: 1. Embodiment; 2. Modified example.

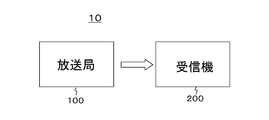

- FIG. 1 shows a configuration example of an image transmission / reception system 10 as an embodiment.

- the image transmission / reception system 10 includes a broadcasting station 100 and a receiver 200.

- the broadcast station 100 transmits a transport stream on a broadcast wave.

- the transport stream includes image data and metadata constituting a program.

- the transport stream has the packets obtained by packetizing a first elementary stream including the first image data and a predetermined number of second elementary streams each including second image data and/or metadata related to the first image data.

- PES: Packetized Elementary Stream

- the predetermined number includes 0.

- when the predetermined number is 0, the transport stream has only the packets obtained by packetizing the first elementary stream including the first image data.

- when only the first elementary stream including the first image data exists, the first image data constitutes two-dimensional (2D) image data.

- when the first elementary stream and one or more second elementary streams exist, the first image data and the predetermined number of second image data together constitute stereoscopic (3D) image data.

- the first image data is base view image data

- the predetermined number of second image data constitutes non-base view image data.

- the stereoscopic image data includes image data of a single non-base view. That is, the predetermined number is 1.

- the image data of the base view is image data of one of the left eye and the right eye

- the image data of the non-base view is image data of the other of the left eye and the right eye.

- FIG. 2 shows an example in which a program is composed of a plurality of video elementary streams, for example, two video elementary streams.

- Stream association information indicating the association of each elementary stream is inserted in at least the first elementary stream.

- This stream association information is inserted in units of pictures or in units of GOPs (Groups of Pictures), which are display access units including predicted images. Details of this stream association information will be described later.

- the receiver 200 receives a transport stream sent from the broadcasting station 100 on a broadcast wave. As described above, this transport stream has each packet obtained by packetizing the first elementary stream and a predetermined number of second elementary streams.

- the first elementary stream includes first image data.

- the predetermined number of second elementary streams includes a predetermined number of second image data and / or metadata related to the first image data.

- Stream association information indicating the association of each elementary stream is inserted in at least the first elementary stream. Based on the stream association information, the receiver 200 acquires the first image data from the first elementary stream, and acquires the second image data and/or metadata from the predetermined number of second elementary streams.

- FIG. 3 shows a configuration example of the transmission data generation unit 110 that generates the above-described transport stream in the broadcast station 100.

- the transmission data generation unit 110 includes a data extraction unit (archive unit) 111, a video encoder 112, a parallax information encoder 113, and an audio encoder 114.

- the transmission data generation unit 110 includes a graphics generation unit 115, a graphics encoder 116, and a multiplexer 117.

- a data recording medium 111a is detachably attached to the data extraction unit 111, for example.

- audio data corresponding to the image data is recorded along with the image data of the program to be transmitted.

- the image data is switched to stereoscopic (3D) image data or two-dimensional (2D) image data according to the program.

- the image data is switched to stereoscopic image data or two-dimensional image data in the program according to the contents of the main story and commercials.

- the stereoscopic image data includes base view image data and a predetermined number of non-base view image data.

- parallax information is also recorded on the data recording medium 111a corresponding to the stereoscopic image data.

- This disparity information is a disparity vector indicating the disparity between the base view and each non-base view, or depth data.

- the depth data can be handled as a disparity vector by a predetermined conversion.
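
The text says only that depth data can be converted to a disparity vector by "a predetermined conversion" and does not state the formula. One common choice for a rectified, parallel camera pair is disparity = focal length × baseline / depth; the sketch below uses that relation purely as an assumption, with hypothetical parameter names.

```python
def depth_to_disparity(depth_m, focal_length_px, baseline_m):
    """Convert a depth value to a horizontal disparity in pixels.

    Assumes a rectified, parallel stereo camera model (not specified by the
    source): disparity = focal_length * baseline / depth.
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_length_px * baseline_m / depth_m


# Example: 1000 px focal length, 6.25 cm baseline, object 2.5 m away.
print(depth_to_disparity(2.5, 1000.0, 0.0625))
```

Under this model nearer objects (smaller depth) produce larger disparities, which matches the intuition that they appear to stand out further from the screen.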

- the disparity information is, for example, disparity information for each pixel or disparity information for each divided region obtained by dividing a view (image) into a predetermined number of regions.

- this parallax information is used on the receiving side to adjust the position of the same superimposition information (graphics information or the like) to be superimposed on the images of the base view and each non-base view to give parallax.

- the disparity information is used on the reception side to obtain display image data of a predetermined number of views by performing interpolation processing (post processing) on the image data of the base view and each non-base view.
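
The interpolation (post processing) step is not detailed in this section. As one simple possibility, an intermediate view can be synthesized by shifting each base-view pixel by a fraction of its disparity and filling any gaps from the nearest pixel to the left. The sketch below does this for a single image row; the function name, the `alpha` parameter, and the hole-filling rule are all assumptions.

```python
def interpolate_row(base_row, disparity_row, alpha):
    """Synthesize one row of an intermediate view by forward warping.

    `alpha` is the position of the new view between the base view (0.0) and
    the non-base view (1.0). Each pixel moves by round(alpha * disparity).
    Holes are filled with the nearest filled pixel to the left (assumption).
    """
    if not base_row:
        return []
    width = len(base_row)
    out = [None] * width
    for x, (pixel, disparity) in enumerate(zip(base_row, disparity_row)):
        target = x + round(alpha * disparity)
        if 0 <= target < width:
            out[target] = pixel  # later pixels overwrite earlier ones
    last = base_row[0]
    for x in range(width):
        if out[x] is None:
            out[x] = last
        else:
            last = out[x]
    return out


print(interpolate_row([10, 20, 30, 40], [0, 0, 2, 2], alpha=0.5))  # [10, 20, 20, 30]
```

A practical implementation would also blend in pixels from the non-base view and handle occlusions; this sketch only shows how per-pixel disparity drives the position of pixels in the new view.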

- the data recording medium 111a is a disk-shaped recording medium, a semiconductor memory, or the like.

- the data extraction unit 111 extracts and outputs image data, audio data, parallax information, and the like from the data recording medium 111a.

- the video encoder 112 performs encoding such as MPEG4-AVC (MVC) or MPEG2 video on the image data output from the data extraction unit 111 to obtain encoded video data. Further, the video encoder 112 generates a video elementary stream by a stream formatter (not shown) provided in the subsequent stage.

- when the image data is two-dimensional (2D) image data, the video encoder 112 generates a video elementary stream including the 2D image data (first image data). When the image data is stereoscopic image data, the video encoder 112 generates a video elementary stream including the base view image data (first image data) and video elementary streams including the predetermined number of non-base view image data (second image data).

- the video encoder 112 inserts stream association information into a video elementary stream (first elementary stream) including at least the first image data.

- This stream association information is information indicating the association of each elementary stream.

- the second elementary stream includes the above-described second image data and / or metadata.

- the video encoder 112 inserts this stream association information in units of pictures or units of GOP that are display access units including a predicted image.

- the audio encoder 114 performs encoding such as MPEG2 Audio AAC on the audio data output from the data extraction unit 111 to generate an audio elementary stream.

- the disparity information encoder 113 performs predetermined encoding on the disparity information output from the data extraction unit 111, and generates an elementary stream of disparity information. When the disparity information is per-pixel disparity information as described above, it can be handled like pixel data. The disparity information encoder 113 can then generate the disparity information elementary stream by encoding the disparity information with the same encoding method as that used for the image data. Alternatively, the video encoder 112 could encode the disparity information output from the data extraction unit 111, in which case the disparity information encoder 113 is unnecessary.

- the graphics generation unit 115 generates data (graphics data) of graphics information (including subtitle information) to be superimposed on an image.

- the graphics encoder 116 generates a graphics elementary stream including the graphics data generated by the graphics generating unit 115.

- the graphics information constitutes superimposition information.

- the graphics information is, for example, a logo.

- the subtitle information is, for example, a caption.

- This graphics data is bitmap data.

- the graphics data is added with idling offset information indicating the superimposed position on the image.

- the idling offset information indicates, for example, offset values in the vertical direction and the horizontal direction from the upper left origin of the image to the upper left pixel of the superimposed position of the graphics information.
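
The following sketch combines the idling offset with the parallax adjustment described earlier: the same bitmap is placed at the offset position in the base view and at a horizontally shifted position in the non-base view. Applying the whole disparity to the non-base view, and the sign of the shift, are assumptions made for illustration; the function names are hypothetical.

```python
def overlay_positions(offset_x, offset_y, disparity_px):
    """Top-left overlay positions for the base view and one non-base view."""
    base = (offset_x, offset_y)
    non_base = (offset_x + disparity_px, offset_y)  # horizontal shift only
    return base, non_base


def superimpose(view, bitmap, x, y):
    """Return a copy of `view` with `bitmap` pasted at (x, y), clipped to fit."""
    out = [row[:] for row in view]
    for r, bitmap_row in enumerate(bitmap):
        for c, pixel in enumerate(bitmap_row):
            yy, xx = y + r, x + c
            if 0 <= yy < len(out) and 0 <= xx < len(out[0]):
                out[yy][xx] = pixel
    return out


blank = [[0, 0, 0, 0], [0, 0, 0, 0]]
logo = [[9]]
base_pos, non_base_pos = overlay_positions(offset_x=1, offset_y=0, disparity_px=2)
print(superimpose(blank, logo, *base_pos))      # [[0, 9, 0, 0], [0, 0, 0, 0]]
print(superimpose(blank, logo, *non_base_pos))  # [[0, 0, 0, 9], [0, 0, 0, 0]]
```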

- the transmission of caption data as bitmap data is standardized and operated as “DVB_Subtitling” in DVB, which is a European digital broadcasting standard.

- the multiplexer 117 packetizes and multiplexes the elementary streams generated by the video encoder 112, the disparity information encoder 113, the audio encoder 114, and the graphics encoder 116, and generates a transport stream TS.
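
To make the packetize-and-multiplex step concrete, here is a simplified sketch that splits each stream's bytes into 188-byte MPEG-2 transport stream packets and interleaves them round-robin. It treats the input as opaque, already PES-packetized bytes, omits PSI tables, PCR, and rate control, and uses example PID values that are not from the patent; the function names are hypothetical.

```python
from itertools import zip_longest

TS_PACKET_SIZE = 188
SYNC_BYTE = 0x47


def packetize(pid, payload, start_counter=0):
    """Split `payload` into 188-byte TS packets carrying the given PID."""
    if not 0 <= pid <= 0x1FFF:
        raise ValueError("PID must fit in 13 bits")
    packets = []
    counter = start_counter & 0x0F
    max_payload = TS_PACKET_SIZE - 4  # 4-byte TS header
    for offset in range(0, len(payload), max_payload):
        chunk = payload[offset:offset + max_payload]
        pusi = 1 if offset == 0 else 0  # payload_unit_start_indicator
        stuffing = max_payload - len(chunk)
        afc = 0b11 if stuffing else 0b01  # adaptation_field_control
        header = bytes([
            SYNC_BYTE,
            (pusi << 6) | (pid >> 8),
            pid & 0xFF,
            (afc << 4) | counter,
        ])
        adaptation = b""
        if stuffing:
            # Pad a short final packet with an adaptation field of stuffing bytes.
            af_length = stuffing - 1
            adaptation = bytes([af_length])
            if af_length:
                adaptation += b"\x00" + b"\xff" * (af_length - 1)
        packets.append(header + adaptation + chunk)
        counter = (counter + 1) & 0x0F  # 4-bit continuity counter
    return packets


def multiplex(streams):
    """Interleave the packets of several streams round-robin (simplification)."""
    per_stream = [packetize(pid, data) for pid, data in streams.items()]
    return [pkt for group in zip_longest(*per_stream) for pkt in group
            if pkt is not None]


ts = multiplex({0x100: bytes(300), 0x101: bytes(10)})
print(len(ts), [hex(((p[1] & 0x1F) << 8) | p[2]) for p in ts])
```

A real multiplexer schedules packets according to each stream's bit rate and decoder buffer model rather than strict round-robin; the interleaving here only illustrates that packets of different elementary streams share one transport stream and are told apart by PID.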

- Image data (stereoscopic image data or two-dimensional image data) output from the data extraction unit 111 is supplied to the video encoder 112.

- in the video encoder 112, the image data is encoded using, for example, MPEG4-AVC (MVC) or MPEG2 video, and a video elementary stream including the encoded video data is generated and supplied to the multiplexer 117.

- when the image data is two-dimensional (2D) image data, a video elementary stream including the 2D image data (first image data) is generated.

- when the image data is stereoscopic (3D) image data, a video elementary stream including the base view image data (first image data) and video elementary streams including the predetermined number of non-base view image data (second image data) are generated.

- in this video encoder 112, stream association information is inserted into at least the video elementary stream including the first image data (the first elementary stream), in units of pictures or in units of GOPs, which are display access units including predicted images. As a result, information indicating the presence or absence of second elementary streams including the second image data is conveyed to the receiving side through the first elementary stream including the first image data.

- the disparity information corresponding to the stereoscopic image data is also output from the data extraction unit 111.

- This disparity information is supplied to the disparity information encoder 113.

- the disparity information encoder 113 performs predetermined encoding on the disparity information, and generates a disparity information elementary stream including the encoded data. This disparity information elementary stream is supplied to the multiplexer 117.

- audio data corresponding to the image data is also output from the data extraction unit 111.

- This audio data is supplied to the audio encoder 114.

- the audio encoder 114 performs encoding such as MPEG-2 Audio AAC on the audio data, and generates an audio elementary stream including the encoded audio data. This audio elementary stream is supplied to the multiplexer 117.

- in response to the image data output from the data extraction unit 111, the graphics generation unit 115 generates data (graphics data) of graphics information (including subtitle information) to be superimposed on the image (view).

- This graphics data is supplied to the graphics encoder 116.

- the graphics encoder 116 performs predetermined encoding on the graphics data and generates a graphics elementary stream including the encoded data. This graphics elementary stream is supplied to the multiplexer 117.

- the elementary streams supplied from the encoders are packetized and multiplexed to generate a transport stream TS.

- the transport stream TS includes a base-view video elementary stream and a predetermined number of non-base-view video elementary streams during a period in which stereoscopic (3D) image data is output from the data extraction unit 111.

- a transport stream TS has a video elementary stream including the two-dimensional image data during a period in which the two-dimensional (2D) image data is output from the data extraction unit 111.

- FIG. 4 shows a configuration example of a general transport stream including a video elementary stream, an audio elementary stream, and the like.

- the transport stream includes PES packets obtained by packetizing each elementary stream.

- two video elementary stream PES packets “Video PES1” and “Video PES2” are included.

- a PES packet “Graphics PES” of the graphics elementary stream and a PES packet “DisparityData PES” of the private elementary stream are included.

- the PES packet “AudioPES” of the audio elementary stream is included.

- the transport stream includes a PMT (Program Map Table) as PSI (Program Specific Information).

- This PSI is information describing to which program each elementary stream included in the transport stream belongs.

- the transport stream includes an EIT (Event Information Table) as SI (Service Information) for managing each event.

- the PMT has a program descriptor (ProgramDescriptor) that describes information related to the entire program.

- the PMT includes an elementary loop having information related to each elementary stream. In this configuration example, there are a video elementary loop, a graphics elementary loop, a private elementary loop, and an audio elementary loop.

- in each elementary loop, information such as a packet identifier (PID) and a stream type (Stream_Type) is arranged for each stream, and, although not shown, a descriptor that describes information related to the elementary stream is also arranged.
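
As a small illustration of how the packet identifier (PID) is carried, the following sketch reads the 13-bit PID from the header of a 188-byte MPEG-2 transport stream packet. The function name `ts_packet_pid` and the sample packet are illustrative and do not come from this document.

```python
TS_PACKET_SIZE = 188   # fixed size of an MPEG-2 transport stream packet
SYNC_BYTE = 0x47       # first byte of every transport stream packet


def ts_packet_pid(packet: bytes) -> int:
    """Return the 13-bit PID stored in bytes 1 and 2 of a TS packet header."""
    if len(packet) != TS_PACKET_SIZE:
        raise ValueError("a transport stream packet must be 188 bytes long")
    if packet[0] != SYNC_BYTE:
        raise ValueError("missing sync byte 0x47")
    # The low 5 bits of byte 1 are the high bits of the PID; byte 2 is the rest.
    return ((packet[1] & 0x1F) << 8) | packet[2]


# Illustrative packet: header bytes followed by zero padding.
sample_packet = bytes([0x47, 0x41, 0x00, 0x10]) + bytes(184)
print(hex(ts_packet_pid(sample_packet)))
```

A demultiplexer would use this value to route each packet to the elementary stream registered under the same PID in the PMT.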

- the video encoder 112 inserts stream association information into a video elementary stream (first elementary stream) including at least first image data in units of pictures or GOPs.

- This stream association information is information indicating the association of each elementary stream.

- This stream association information indicates an association of each elementary stream by using an identifier for identifying each elementary stream.

- the multiplexer 117 inserts into the transport stream a descriptor indicating the correspondence between the identifier of each elementary stream and the packet identifier or component tag of each elementary stream, that is, an ES_ID descriptor.

- the multiplexer 117 inserts this ES_ID descriptor, for example, under the PMT.

- the multiplexer 117 inserts a descriptor indicating the presence of stream association information, that is, an ES_association descriptor, into the transport stream.

- This descriptor indicates whether or not the above-described stream association information is inserted into the elementary stream, or whether or not there is a change in the above-described stream association information inserted into the elementary stream.

- the multiplexer 117 inserts this ES_association descriptor under the PMT or the EIT.

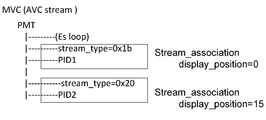

- FIG. 5 shows a configuration example of the transport stream in the case where the stream association information is inserted into the first elementary stream and further the ES_ID descriptor and the ES_association descriptor are inserted into the transport stream.

- the transport stream includes the PES packet “Video PES1” of the video elementary stream (Stream_Type video 1) of the base view.

- the transport stream includes a PES packet “Video PES2” of a non-base-view video elementary stream (Stream_Type video 2).

- illustration of other PES packets is omitted for simplification of the drawing.

- an ES_ID descriptor indicating the correspondence between the identifier ES_ID of each elementary stream and the packet identifier PID or component tag of each elementary stream is inserted in the video elementary loop.

- FIG. 6 shows a structural example (Syntax) of the ES_ID descriptor.

- “Descriptor_tag” is 8-bit data indicating a descriptor type, and here indicates an ES_ID descriptor.

- “Descriptor_length” is 8-bit data indicating the length (size) of the descriptor. This data indicates the number of bytes after “descriptor_length” as the length of the descriptor.

- “Stream_count_for_association” is 4-bit data indicating the number of streams. The for loop is repeated once for each stream.

- a 4-bit field of “stream_Association_ID” indicates an identifier (ES_ID) of each elementary stream.

- the 13-bit field of “Associated_stream_Elementary_PID” indicates the packet identifier PID of each elementary stream.

- when a component tag is used instead of the packet identifier, “Component_tag” is arranged in place of “Associated_stream_Elementary_PID”.
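
The field list above can be sketched as a small parser. The text gives only the field widths, so the byte alignment used here is an assumption: 4 reserved bits after “stream_count_for_association”, and each loop entry packed into 3 bytes as a 4-bit identifier, 7 reserved bits, and a 13-bit PID. The descriptor tag value `0xF0` and the function name are likewise hypothetical.

```python
from typing import Dict


def parse_es_id_descriptor(data: bytes) -> Dict[int, int]:
    """Return {ES_ID: PID} from an ES_ID descriptor.

    ASSUMED layout (reserved bits are not specified in the text):
      byte 0      descriptor_tag
      byte 1      descriptor_length (bytes that follow)
      byte 2      stream_count_for_association (4 bits) + 4 reserved bits
      per entry   stream_Association_ID (4 bits) + 4 reserved bits,
                  then 3 reserved bits + Associated_stream_Elementary_PID (13 bits)
    """
    if len(data) < 3:
        raise ValueError("descriptor too short")
    length = data[1]
    body = data[2:2 + length]
    if length < 1 or len(body) != length:
        raise ValueError("descriptor_length does not match the data")
    count = body[0] >> 4
    if len(body) < 1 + 3 * count:
        raise ValueError("descriptor truncated inside the stream loop")
    mapping: Dict[int, int] = {}
    for i in range(count):
        entry = body[1 + 3 * i: 4 + 3 * i]
        es_id = entry[0] >> 4
        pid = ((entry[1] & 0x1F) << 8) | entry[2]
        mapping[es_id] = pid
    return mapping


# Hypothetical descriptor: tag 0xF0, two streams (ES_ID 0 and ES_ID 1).
sample_descriptor = bytes([0xF0, 0x07, 0x20,
                           0x00, 0xE1, 0x00,
                           0x10, 0xE1, 0x01])
print(parse_es_id_descriptor(sample_descriptor))
```

The resulting mapping is what lets a receiver translate the identifiers used in the stream association information into the PIDs registered in the transport stream layer.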

- FIG. 7A shows a structural example (Syntax) of the ES_association descriptor.

- “Descriptor_tag” is 8-bit data indicating a descriptor type, and here indicates that it is an ES_association descriptor.

- “Descriptor_length” is 8-bit data indicating the length (size) of the descriptor. This data indicates the number of bytes after “descriptor_length” as the length of the descriptor.

- the 1-bit field of “existence_of_stream_association_info” is a flag indicating whether stream association information exists in the elementary stream, as shown in FIG. 7B. “1” indicates that stream association information exists in the elementary stream, and “0” indicates that stream association information does not exist in the elementary stream. Alternatively, “1” indicates that there is a change in the stream association information inserted in the elementary stream, and “0” indicates that there is no change in the stream association information inserted in the elementary stream.
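
A receiver-side check of this flag might look like the sketch below. It assumes, since the text does not state the bit position, that “existence_of_stream_association_info” is the most significant bit of the first byte after “descriptor_length”; the tag value `0xF1` and the function name are hypothetical.

```python
def existence_of_stream_association_info(descriptor: bytes) -> bool:
    """Read the 1-bit flag from an ES_association descriptor.

    ASSUMPTION: the flag is the most significant bit of the first payload
    byte; the remaining 7 bits are treated as reserved.
    """
    if len(descriptor) < 3 or descriptor[1] < 1:
        raise ValueError("descriptor carries no payload byte")
    return bool(descriptor[2] & 0x80)


# Hypothetical descriptors with the flag set and cleared.
print(existence_of_stream_association_info(bytes([0xF1, 0x01, 0x80])))
print(existence_of_stream_association_info(bytes([0xF1, 0x01, 0x00])))
```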

- FIG. 8 shows an example of the relationship between the stream association information inserted in the elementary stream and the ES_association descriptor inserted in the transport stream. Since the stream association information exists in the elementary stream, the ES_association descriptor is inserted and transmitted under the PMT, for example, of the transport stream so as to prompt the reference on the receiving side.

- the decoder on the receiving side refers to the ES_association descriptor and can detect in advance that the association configuration of each elementary stream changes from the next GOP, so that a stable reception operation is possible.

- the ES_association descriptor is placed under the EIT.

- stream association information indicating the relationship between the base view elementary stream and the non-base view video elementary stream is inserted into the base view elementary stream.

- This stream association information is inserted in picture units or GOP units using the user data area.

- the stream association information is inserted in the “SEIs” portion of the access unit as “Stream Association Information SEI message”.

- FIG. 9A shows the top access unit of a GOP (Group Of Pictures).

- FIG. 9B shows an access unit other than the top of the GOP.

- “Stream Association Information SEI message” is inserted only in the first access unit of the GOP.

- FIG. 10A shows a structural example (Syntax) of “Stream Association Information SEI message”. “uuid_iso_iec_11578” has a UUID value specified in “ISO/IEC 11578:1996 Annex A”. “userdata_for_stream_association ()” is inserted in the field of “user_data_payload_byte”.

- FIG. 10B shows a structural example (Syntax) of “userdata_for_stream_association ()”, in which “stream_association ()” is inserted as stream association information. “Stream_association_id” is an identifier of stream association information indicated by unsigned 16 bits.

- FIG. 11 illustrates a structure example (Syntax) of “user_data ()”.

- a 32-bit field of “user_data_start_code” is a start code of user data (user_data), and is a fixed value of “0x000001B2”.

- a 16-bit field following this start code is an identifier for identifying the contents of user data.

- it is “Stream_Association_identifier”, and it is possible to identify that the user data is stream association information.

- “stream_association ()” as stream association information is inserted.
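
The lookup described above can be sketched as a scan for the user data start code followed by a check of the 16-bit identifier. The start code `0x000001B2` is taken from the text; the identifier value `0x5341` is a placeholder, because the text does not give the actual value of “Stream_Association_identifier”.

```python
from typing import Optional

USER_DATA_START_CODE = bytes.fromhex("000001B2")   # value given in the text
STREAM_ASSOCIATION_IDENTIFIER = 0x5341              # HYPOTHETICAL placeholder value


def find_stream_association_payload(es: bytes) -> Optional[int]:
    """Return the offset of stream_association() inside the byte string, or None."""
    pos = es.find(USER_DATA_START_CODE)
    while pos != -1:
        ident_at = pos + len(USER_DATA_START_CODE)
        if ident_at + 2 <= len(es):
            ident = int.from_bytes(es[ident_at:ident_at + 2], "big")
            if ident == STREAM_ASSOCIATION_IDENTIFIER:
                return ident_at + 2   # first byte after the identifier
        pos = es.find(USER_DATA_START_CODE, pos + 1)
    return None


# Illustrative bytes: another start code, one filler byte, then user_data().
sample_es = (b"\x00\x00\x01\xb3" + b"\xaa"
             + USER_DATA_START_CODE + b"\x53\x41" + b"\x05")
print(find_stream_association_payload(sample_es))
```

User data carrying a different identifier is skipped, so other uses of the user data area are left untouched.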

- FIG. 12 shows a structure example (Syntax) of the stream association information “stream_association ()”.

- FIG. 13 shows the contents (Semantics) of each piece of information in the structural example shown in FIG.

- the 8-bit field of “stream_association_length” indicates the entire byte size after this field.

- a 4-bit field of “stream_count_for_association” indicates the number of elementary streams to be associated, and takes a value of 0 to 15.

- the 4-bit field of “self_ES_id” indicates the association identifier of the elementary stream (this elementary stream) itself in which this stream association information is arranged. For example, the identifier of the basic elementary stream (first elementary stream including the first image data) is set to “0”.

- the 1-bit field of “indication_of_selected_stream_display” is a flag indicating whether or not there is a display-required elementary stream that displays the decoder output in addition to this elementary stream. “1” indicates that there is an elementary stream that must be displayed in addition to this elementary stream. “0” indicates that there is no display-required elementary stream other than this elementary stream. In the case of “1”, the non-base view elementary stream set by “display_mandatory_flag” described later is required to be displayed together with the base view video elementary stream.

- the 1-bit field of “indication_of_other_resolution_master” is a flag indicating whether or not another elementary stream is a display reference for resolution or sampling frequency, not this elementary stream. “1” indicates that another elementary stream is a display reference. “0” indicates that this elementary stream is a display reference.

- the 1-bit field of “terminating_current_association_flag” indicates whether the configuration of the elementary stream is changed from the next access unit (AU: Access Unit). “1” indicates that the configuration of the elementary stream is changed from the next access unit. “0” indicates that the next access unit also has the same elementary stream configuration as the current access unit. This flag information constitutes prelude information.

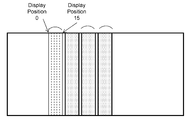

- the 4-bit field of “display_position” indicates at which view position in multi-viewing the view based on the image data included in the elementary stream is to be displayed at the time of stereoscopic (3D) display. It takes a value of 0 to 15.

- FIG. 15 shows a view display example at the time of stereoscopic (3D) display on the receiving side in that case. That is, the view based on the image data included in the base view stream is displayed as a view at the display position “0”. Further, the view based on the image data included in the non-base view stream is displayed as a view at the display position “15”.

- the 4-bit field of “associated_ES_id” indicates an identifier (association identifier) of the elementary stream associated with this elementary stream, and takes a value of 0 to 15.

- a 1-bit field of “display_mandatory_flag” indicates whether or not the elementary stream of “associated_ES_id” is mandatory to be displayed. “1” indicates that the corresponding elementary stream must be displayed. “0” indicates that the corresponding elementary stream is not necessarily displayed.

- the 1-bit field of “resolution_master_flag” indicates whether the elementary stream of “associated_ES_id” is a display standard for resolution or sampling frequency. “1” indicates that the corresponding elementary stream is a display standard. “0” indicates that the corresponding elementary stream is not a display standard.
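
To make the semantics of these fields concrete, the following sketch models the stream association information as plain data and derives which elementary stream serves as the display reference for resolution. The class and method names are illustrative, the bit-level layout of FIG. 12 is deliberately not reproduced, and “display_position” is omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AssociatedStream:
    associated_es_id: int          # 4-bit association identifier, 0 to 15
    display_mandatory_flag: bool
    resolution_master_flag: bool


@dataclass
class StreamAssociation:
    self_es_id: int                # identifier of the stream carrying this info
    indication_of_selected_stream_display: bool
    indication_of_other_resolution_master: bool
    terminating_current_association_flag: bool
    associated: List[AssociatedStream] = field(default_factory=list)

    def __post_init__(self) -> None:
        ids = [self.self_es_id] + [a.associated_es_id for a in self.associated]
        for es_id in ids:
            if not 0 <= es_id <= 15:
                raise ValueError("association identifiers are 4-bit values")

    def resolution_master_es_id(self) -> int:
        """Return the ES_id whose resolution is the display reference."""
        if not self.indication_of_other_resolution_master:
            return self.self_es_id
        for stream in self.associated:
            if stream.resolution_master_flag:
                return stream.associated_es_id
        raise ValueError("another master is indicated but none is flagged")


# Hypothetical example: base stream 0 with two associated streams.
info = StreamAssociation(
    self_es_id=0,
    indication_of_selected_stream_display=True,
    indication_of_other_resolution_master=True,
    terminating_current_association_flag=False,
    associated=[AssociatedStream(1, True, False), AssociatedStream(2, False, True)],
)
print(info.resolution_master_es_id())
```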

- FIG. 16 shows an insertion example of “indication_of_selected_stream_display”.

- in this example, the base view elementary stream includes left eye (L) image data, and there are two non-base view elementary streams, which include right eye (R) image data and center (C) image data, respectively.

- “indication_of_selected_stream_display” is abbreviated as “indication_display”

- “display_mandatory_flag” is abbreviated as “mandatory_flag”.

- the images whose display is mandatory in the receiver are the left eye (L), right eye (R), and center (C) images.

- FIG. 17 shows a configuration example of the receiver 200.

- the receiver 200 includes a CPU 201, a flash ROM 202, a DRAM 203, an internal bus 204, a remote control receiving unit 205, and a remote control transmitter 206.

- the receiver 200 also includes an antenna terminal 211, a digital tuner 212, a transport stream buffer (TS buffer) 213, and a demultiplexer 214.

- the receiver 200 also includes a video decoder 215, view buffers 216, 216-1 to 216-N, scalers 224, 224-1 to 224-N, and video superimposing units 217 and 217-1 to 217-N.

- the receiver 200 includes a graphics decoder 218, a graphics generation unit 219, a disparity information decoder 220, graphics buffers 221, 221-1 to 221-N, an audio decoder 222, and a channel processing unit 223.

- the CPU 201 controls the operation of each unit of receiver 200.

- the flash ROM 202 stores control software and data.

- the DRAM 203 constitutes a work area for the CPU 201.

- the CPU 201 loads the software and data read from the flash ROM 202 into the DRAM 203 and starts the software to control each unit of the receiver 200.

- the remote control receiving unit 205 receives a remote control signal (remote control code) transmitted from the remote control transmitter 206 and supplies it to the CPU 201.

- the CPU 201 controls each unit of the receiver 200 based on this remote control code.

- the CPU 201, flash ROM 202 and DRAM 203 are connected to the internal bus 204.

- the antenna terminal 211 is a terminal for inputting a television broadcast signal received by a receiving antenna (not shown).

- the digital tuner 212 processes the television broadcast signal input to the antenna terminal 211 and outputs a predetermined transport stream (bit stream data) TS corresponding to the user's selected channel.

- the transport stream buffer (TS buffer) 213 temporarily accumulates the transport stream TS output from the digital tuner 212.

- this transport stream TS has each packet obtained by packetizing elementary streams such as video, disparity information, graphics, and audio.

- the transport stream TS has a first elementary stream including the first image data.

- the transport stream TS has a predetermined number of second elementary streams each including a predetermined number of second image data and/or metadata related to the first image data.

- when only the first elementary stream including the first image data exists, the first image data constitutes two-dimensional (2D) image data.

- when the first elementary stream and the predetermined number of second elementary streams exist, the first image data and the predetermined number of second image data constitute stereoscopic (3D) image data.

- the first image data constitutes base view image data

- the predetermined number of second image data constitutes non-base view image data.

- stream association information (see FIG. 12) is inserted into the first elementary stream using the user data area, in units of pictures or in units of GOPs.

- an ES_ID descriptor (see FIG. 6) is inserted in the transport stream TS, for example, under the PMT.

- This ES_ID descriptor indicates the correspondence between the identifier of each elementary stream and the packet identifier or component tag of each elementary stream.

- the stream association information indicates an association of each elementary stream using an identifier for identifying each elementary stream. Therefore, the ES_ID descriptor links the registration state of each elementary stream in the transport stream layer with the stream association information.

- an ES_association descriptor (see FIG. 7A) is inserted into the transport stream TS, for example, under the PMT or EIT.

- This ES_association descriptor indicates whether or not stream association information is inserted in the elementary stream, or whether or not there is a change in the stream association information inserted in the elementary stream. Therefore, the ES_association descriptor prompts reference to stream association information.

- the demultiplexer 214 extracts video, disparity information, graphics, and audio elementary streams from the transport stream TS temporarily stored in the TS buffer 213.

- the disparity information elementary stream is extracted only when the transport stream TS includes a video elementary stream of stereoscopic (3D) image data.

- the demultiplexer 214 extracts the ES_ID descriptor and the ES_association descriptor included in the transport stream TS, and supplies them to the CPU 201.

- the CPU 201 recognizes the correspondence between the identifier of each elementary stream and the packet identifier or component tag of each elementary stream using the ES_ID descriptor. Further, the CPU 201 uses the ES_association descriptor to recognize whether or not stream association information is inserted into a video elementary stream, for example, the video elementary stream including the first image data, or whether or not that information has changed.

- the video decoder 215 performs processing reverse to that of the video encoder 112 of the transmission data generation unit 110 described above. That is, the video decoder 215 performs a decoding process on the encoded image data included in each video elementary stream extracted by the demultiplexer 214 to obtain decoded image data.

- the video decoder 215 obtains the first image data as two-dimensional (2D) image data.

- the video decoder 215 obtains stereoscopic (3D) image data. That is, the first image data is obtained as base view image data, and a predetermined number of second image data is obtained as non-base view image data.

- the video decoder 215 extracts stream association information from a video elementary stream, for example, a first elementary stream including first image data, and supplies the stream association information to the CPU 201.

- the video decoder 215 performs this extraction process under the control of the CPU 201.

- the CPU 201 can recognize whether the stream association information exists or whether there is a change in the information using the ES_association descriptor. Therefore, the video decoder 215 can be made to perform the extraction process only as necessary.

- the CPU 201 can recognize the presence of a predetermined number of second elementary streams related to the first elementary stream including the first image data based on the stream association information extracted by the video decoder 215. Based on this recognition, the CPU 201 controls the demultiplexer 214 so that a predetermined number of second elementary streams related to the first elementary stream are extracted together with the first elementary stream.
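
The control step above combines two pieces of information: the association identifiers found in the stream association information, and the identifier-to-PID correspondence given by the ES_ID descriptor. A minimal sketch of that combination follows; the function name and the sample values are illustrative.

```python
from typing import Dict, Iterable, List


def pids_to_extract(es_id_to_pid: Dict[int, int],
                    self_es_id: int,
                    associated_es_ids: Iterable[int]) -> List[int]:
    """Return the PIDs the demultiplexer should extract, first stream first."""
    wanted = [self_es_id, *associated_es_ids]
    missing = [es_id for es_id in wanted if es_id not in es_id_to_pid]
    if missing:
        raise KeyError(f"no PID registered for ES_ID(s) {missing}")
    return [es_id_to_pid[es_id] for es_id in wanted]


# Illustrative values: ES_ID descriptor contents and one association.
mapping = {0: 0x100, 1: 0x101, 2: 0x102}
print([hex(pid) for pid in pids_to_extract(mapping, 0, [1, 2])])
```

Raising an error for an unregistered identifier is a design choice of this sketch; a real receiver might instead fall back to decoding only the first elementary stream.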

- the view buffer (video buffer) 216 temporarily stores the first image data acquired by the video decoder 215 under the control of the CPU 201.

- the first image data is two-dimensional image data or base view image data constituting stereoscopic image data.

- the view buffers (video buffers) 216-1 to 216-N temporarily store, under the control of the CPU 201, the N pieces of non-base view image data constituting the stereoscopic image data acquired by the video decoder 215, respectively.

- the CPU 201 performs read control of the view buffers 216 and 216-1 to 216-N.

- the CPU 201 can know in advance whether or not the configuration of the elementary stream is changed from the next access unit (picture) based on the “terminating_current_association_flag” flag included in the stream association information. Therefore, it is possible to change the read control of the view buffers 216 and 216-1 to 216-N dynamically and promptly.

- under the control of the CPU 201, the scalers 224 and 224-1 to 224-N adjust the output resolution of the image data of each view output from the view buffers 216 and 216-1 to 216-N to a predetermined resolution.

- the scalers 224, 224-1 to 224-N constitute a resolution adjustment unit. Image data of each view whose resolution is adjusted is sent to the video superimposing units 217 and 217-1 to 217-N.

- the CPU 201 acquires the resolution information of the image data of each view from the video decoder 215.

- the CPU 201 executes the filter settings of the scalers 224 and 224-1 to 224-N based on the resolution information of each view so that the output resolution of the image data of each view becomes the target resolution.

- when the resolution of the input image data is different from the target resolution, the scalers 224 and 224-1 to 224-N perform resolution conversion by interpolation processing and obtain output image data of the target resolution.

- the CPU 201 sets the target resolution based on the “indication_of_other_resolution_master” and “resolution_master_flag” flags included in the stream association information. That is, the resolution of the image data included in the elementary stream that is set as the resolution reference by these flags is set as the target resolution.
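
The scaler control described above can be sketched as a planning step: take the resolution of the elementary stream marked as the resolution reference as the target, then list the views whose resolution differs and therefore need conversion. The interpolation itself is not shown, and the function name and sample resolutions are illustrative.

```python
from typing import Dict, List, Tuple

Resolution = Tuple[int, int]   # (width, height) in pixels


def plan_scaling(resolutions: Dict[int, Resolution],
                 master_es_id: int) -> Tuple[Resolution, List[int]]:
    """Return the target resolution and the ES_ids that need conversion."""
    target = resolutions[master_es_id]
    needs_conversion = sorted(es_id for es_id, res in resolutions.items()
                              if res != target)
    return target, needs_conversion


# Illustrative case: the base view (ES_id 0) is the resolution reference.
views = {0: (1920, 1080), 1: (1280, 720), 2: (1920, 1080)}
print(plan_scaling(views, master_es_id=0))
```

Views already at the target resolution pass through unchanged, which matches the statement that conversion is performed only when the input resolution differs from the target.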

- the graphics decoder 218 performs processing reverse to that of the graphics encoder 116 of the transmission data generation unit 110 described above. That is, the graphics decoder 218 obtains decoded graphics data (including subtitle data) by performing a decoding process on the encoded graphics data included in the graphics elementary stream extracted by the demultiplexer 214.

- the disparity information decoder 220 performs processing opposite to that of the disparity information encoder 113 of the transmission data generation unit 110 described above. That is, the disparity information decoder 220 performs decoding processing on the encoded disparity information included in the disparity information elementary stream extracted by the demultiplexer 214 to obtain decoded disparity information.

- This disparity information is a disparity vector indicating the disparity between the base view and each non-base view, or depth data.

- the depth data can be handled as a disparity vector by a predetermined conversion.

- the disparity information is, for example, disparity information for each pixel (pixel) or disparity information for each divided region obtained by dividing a view (image) into a predetermined number.

- the graphics generation unit 219 generates graphics information data to be superimposed on an image based on the graphics data obtained by the graphics decoder 218 under the control of the CPU 201.

- when only two-dimensional image data (first image data) is output from the video decoder 215, the graphics generation unit 219 generates graphics information data to be superimposed on the two-dimensional image data.

- when image data of each view constituting stereoscopic (3D) image data is output from the video decoder 215, the graphics generation unit 219 generates data of graphics information to be superimposed on the image data of each view.

- the graphics buffer 221 stores graphics information data to be superimposed on the first image data generated by the graphics generation unit 219 under the control of the CPU 201.

- the first image data is two-dimensional image data or base view image data constituting stereoscopic image data.

- the graphics buffers 221-1 to 221-N store graphics information data to be superimposed on the N non-base view image data generated by the graphics generation unit 219.

- the video superimposing unit (display buffer) 217 outputs first image data on which graphics information is superimposed under the control of the CPU 201.

- the first image data is two-dimensional image data SV or base view image data BN constituting stereoscopic image data.

- the video superimposing unit 217 superimposes the graphics information data accumulated in the graphics buffer 221 on the first image data whose resolution is adjusted by the scaler 224.

- the video superimposing units (display buffers) 217-1 to 217-N output N pieces of non-base view image data NB-1 to NB-N on which graphics information is superimposed under the control of the CPU 201.

- the video superimposing units 217-1 to 217-N superimpose the graphics information data accumulated in the graphics buffers 221-1 to 221-N on the non-base view image data whose resolution is adjusted by the scalers 224-1 to 224-N, respectively.

- when the image data of each view constituting the stereoscopic (3D) image data is output from the video decoder 215, the video superimposing units 217 and 217-1 to 217-N basically output the image data of each view as described above. However, the CPU 201 controls the output of non-base view image data in accordance with the user's selection operation.

- the CPU 201 performs control so that non-base view image data whose display is mandatory is always output regardless of the user's selection operation.

- the CPU 201 can recognize the non-base view image data that is required to be displayed based on the “indication_of_selected_stream_display” and “display_mandatory_flag” flags included in the stream association information.
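
The output control described above can be sketched as follows: the base view is always output, a non-base view is output when its display is mandatory, and any other non-base view is output only if the user selected it. The function name and the tuple representation of each associated stream are illustrative.

```python
from typing import Iterable, List, Set, Tuple


def views_to_output(base_es_id: int,
                    indication_of_selected_stream_display: bool,
                    associated: Iterable[Tuple[int, bool]],
                    user_selected: Set[int]) -> List[int]:
    """Return the ES_ids to output; `associated` holds (ES_id, display_mandatory_flag)."""
    output = [base_es_id]
    for es_id, display_mandatory_flag in associated:
        mandatory = indication_of_selected_stream_display and display_mandatory_flag
        if mandatory or es_id in user_selected:
            output.append(es_id)
    return output


# Illustrative case: stream 1 is mandatory, stream 2 is optional.
streams = [(1, True), (2, False)]
print(views_to_output(0, True, streams, user_selected=set()))
print(views_to_output(0, True, streams, user_selected={2}))
```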

- the audio decoder 222 performs processing opposite to that of the audio encoder 114 of the transmission data generation unit 110 described above. That is, the audio decoder 222 performs decoding processing on the encoded audio data included in the audio elementary stream extracted by the demultiplexer 214 to obtain decoded audio data.

- the channel processing unit 223 generates and outputs audio data SA of each channel for realizing, for example, 5.1ch surround with respect to the audio data obtained by the audio decoder 222.

- a television broadcast signal input to the antenna terminal 211 is supplied to the digital tuner 212.

- the television broadcast signal is processed, and a predetermined transport stream TS corresponding to the user's selected channel is output.

- This transport stream TS is temporarily stored in the TS buffer 213.

- the demultiplexer 214 extracts elementary streams of video, disparity information, graphics, and audio from the transport stream TS temporarily stored in the TS buffer 213.

- the disparity information elementary stream is extracted only when the transport stream TS includes a video elementary stream of stereoscopic (3D) image data.

- the ES_ID descriptor and the ES_association descriptor included in the transport stream TS are extracted and supplied to the CPU 201.

- the CPU 201 recognizes the correspondence between the identifier of each elementary stream and the packet identifier or component tag of each elementary stream using the ES_ID descriptor.

- the CPU 201 uses the ES_association descriptor to recognize whether or not stream association information is inserted into a video elementary stream, for example, the video elementary stream including the first image data, or whether or not that information has changed.

- the video decoder 215 performs a decoding process on the encoded image data included in each video elementary stream extracted by the demultiplexer 214 to obtain decoded image data.

- the video decoder 215 obtains the first image data as two-dimensional (2D) image data.

- the video decoder 215 obtains stereoscopic (3D) image data. That is, the first image data is obtained as base view image data, and a predetermined number of second image data is obtained as non-base view image data.

- the video decoder 215 extracts stream association information from a video elementary stream, for example, a first elementary stream including first image data, and supplies the stream association information to the CPU 201.

- this extraction process is performed under the control of the CPU 201.

- the CPU 201 can recognize whether the stream association information exists or whether there is a change in the information using the ES_association descriptor. Therefore, the video decoder 215 can be made to perform the extraction process only as necessary.

- the CPU 201 recognizes the presence of a predetermined number of second elementary streams related to the first elementary stream including the first image data based on the stream association information extracted by the video decoder 215. Based on this recognition, the CPU 201 controls the demultiplexer 214 so that a predetermined number of second elementary streams related to the first elementary stream are extracted together with the first elementary stream.

- the first image data acquired by the video decoder 215 is temporarily accumulated under the control of the CPU 201.

- the first image data is two-dimensional image data or base view image data constituting stereoscopic image data.

- the N pieces of non-base view image data constituting the stereoscopic image data acquired by the video decoder 215 are temporarily stored in the view buffers 216-1 to 216-N, respectively, under the control of the CPU 201.

- the CPU 201 controls the reading of the view buffers 216 and 216-1 to 216-N.

- the CPU 201 recognizes in advance whether or not the configuration of the elementary stream is changed from the next access unit (picture) based on the “terminating_current_association_flag” flag included in the stream association information. Therefore, the read control of the view buffers 216 and 216-1 to 216-N can be changed dynamically.

- under the control of the CPU 201, the scalers 224 and 224-1 to 224-N adjust the output resolution of the image data of each view output from the view buffers 216 and 216-1 to 216-N to a predetermined resolution. The image data of each view whose resolution is adjusted is then sent to the video superimposing units 217 and 217-1 to 217-N. In this case, the CPU 201 acquires resolution information of the image data of each view from the video decoder 215.

- the CPU 201 performs filter settings of the scalers 224 and 224-1 to 224-N based on the resolution information of each view so that the output resolution of the image data of each view becomes the target resolution. Therefore, in the scalers 224, 224-1 to 224-N, when the resolution of the input image data is different from the target resolution, resolution conversion is performed by interpolation processing, and output image data of the target resolution is obtained.

- the CPU 201 sets the target resolution based on the “indication_of_other_resolution_master” and “resolution_master_flag” flags included in the stream association information. In this case, the resolution of the image data included in the elementary stream that is set as the resolution reference by these flags is set as the target resolution.
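
The target-resolution selection described above can be sketched as follows. This is a minimal illustration, not the receiver's actual logic: the function names, the dictionary layout, and the rule that the first elementary stream is the reference whenever “indication_of_other_resolution_master” is 0 are all assumptions made for the example.

```python
from typing import Dict, Tuple

Resolution = Tuple[int, int]  # (width, height)


def target_resolution(
    first_es_id: int,
    resolutions: Dict[int, Resolution],
    indication_of_other_resolution_master: bool,
    resolution_master_flags: Dict[int, bool],
) -> Resolution:
    """Pick the resolution of the stream marked as the resolution reference.

    Assumption: if no other stream is indicated as master, the first
    elementary stream is the reference.
    """
    if indication_of_other_resolution_master:
        for es_id, is_master in resolution_master_flags.items():
            if is_master:
                return resolutions[es_id]
    return resolutions[first_es_id]


def needs_scaling(input_res: Resolution, target_res: Resolution) -> bool:
    """A scaler only has to interpolate when the input differs from the target."""
    return input_res != target_res


resolutions = {0: (1920, 1080), 1: (1280, 720)}
target_from_first = target_resolution(0, resolutions, False, {1: False})
target_from_second = target_resolution(0, resolutions, True, {1: True})
```

With these hypothetical inputs, the 1280x720 view would be passed through the scaler only in the first case, where the 1920x1080 stream is the reference.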

- decoding processing is performed on the encoded graphics data included in the graphics elementary stream extracted by the demultiplexer 214, and decoded graphics data (including subtitle data) is obtained.

- in the disparity information decoder 220, decoding processing is performed on the encoded disparity information included in the disparity information elementary stream extracted by the demultiplexer 214, and decoded disparity information is obtained.

- This disparity information is a disparity vector indicating the disparity between the base view and each non-base view, or depth data.

- the depth data can be handled as a disparity vector by a predetermined conversion.
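
The text does not specify the "predetermined conversion". As an illustration only, the sketch below uses the common pinhole stereo relation, disparity = focal length x baseline / depth, which is an assumption and not necessarily the conversion intended here. The function and parameter names are hypothetical.

```python
def depth_to_disparity(depth_m: float, focal_length_px: float, baseline_m: float) -> float:
    """Convert a depth value to a horizontal disparity in pixels.

    Assumes a rectified pinhole stereo model; larger depth gives
    smaller disparity.
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_length_px * baseline_m / depth_m


near = depth_to_disparity(1.0, focal_length_px=1000.0, baseline_m=0.05)
far = depth_to_disparity(10.0, focal_length_px=1000.0, baseline_m=0.05)
```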

- the graphics generation unit 219 generates graphics information data to be superimposed on the image based on the graphics data obtained by the graphics decoder 218.

- when only two-dimensional image data (first image data) is output from the video decoder 215, the graphics generation unit 219 generates graphics information data to be superimposed on the two-dimensional image data. When image data of each view constituting stereoscopic (3D) image data is output from the video decoder 215, the graphics generation unit 219 generates graphics information data to be superimposed on the image data of each view.

- 3D stereoscopic

- in the graphics buffer 221, the graphics information data to be superimposed on the first image data, generated by the graphics generation unit 219, is accumulated.

- graphics information data to be superimposed on N non-base view image data generated by the graphics generating unit 219 is accumulated.

- the graphics information data accumulated in the graphics buffer 221 is superimposed on the first image data whose resolution is adjusted by the scaler 224.

- the video superimposing unit 217 outputs first image data on which graphics information is superimposed.

- the first image data is two-dimensional image data SV or base view image data BN constituting stereoscopic image data.

- the graphics information data accumulated in the graphics buffers 221-1 to 221-N is superimposed on the non-base view image data whose resolution is adjusted by the scalers 224-1 to 224-N, respectively.

- the video superimposing units 217-1 to 217-N output N non-base view image data NB-1 to NB-N on which graphics information is superimposed.

- the audio decoder 222 performs a decoding process on the encoded audio data included in the audio elementary stream extracted by the demultiplexer 214 to obtain decoded audio data.

- the audio data obtained by the audio decoder 222 is processed, and for example, audio data SA of each channel for realizing 5.1ch surround is generated and output.

- the stream association information indicating the association of each elementary stream is inserted into an elementary stream included in the transport stream TS transmitted from the broadcasting station 100 to the receiver 200 (see FIG. 12).

- the stream association information indicates the relationship between a first elementary stream including first image data (two-dimensional image data or base view image data) and a predetermined number of second elementary streams including second image data and/or metadata. Therefore, the receiver 200 can accurately cope with a dynamic change in the configuration of the elementary streams, that is, a dynamic change in the distribution contents, based on the stream association information, and can perform correct stream reception.
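
How a receiver might follow a changing stream configuration can be sketched as below. The class and field names are hypothetical and only model the relationship between the first elementary stream and its associated second elementary streams; they are not the syntax of FIG. 12.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class StreamAssociation:
    """Hypothetical parsed form of the stream association information."""
    first_es_id: int  # ES_id of the first elementary stream
    second_es_ids: List[int] = field(default_factory=list)  # associated streams


def streams_to_extract(assoc: StreamAssociation) -> List[int]:
    """ES_ids the demultiplexer should extract for this access unit."""
    return [assoc.first_es_id] + list(assoc.second_es_ids)


# Illustrative sequence: ES2 (ES_id 1) accompanies ES1 (ES_id 0) only intermittently.
per_access_unit = [
    StreamAssociation(0, [1]),
    StreamAssociation(0, []),
    StreamAssociation(0, [1]),
]
extraction_plan = [streams_to_extract(a) for a in per_access_unit]
```

Because the association travels with the first elementary stream, the extraction plan is recomputed from each received unit rather than fixed once at tuning time.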

- An example in which ES2 is intermittently included is shown. In this case, stream association information is inserted into the stream ES1. Note that the identifier ES_id of the stream ES1 is 0, and the identifier ES_id of the stream ES2 is 1.

- the first image data is, for example, left eye image data

- the second image data is, for example, right eye image data

- the stream association information inserted into the elementary stream includes control information for designating whether display is mandatory for each of a predetermined number of second image data. That is, the stream association information includes “indication_of_selected_stream_display” and “display_mandatory_flag” (see FIG. 12). Therefore, the receiver 200 can know from this control information which of the predetermined number of second image data must be displayed, and can restrict the user's selection of the image display mode.

- An example in which the base view stream ES2 is continuously included is illustrated. In this case, stream association information is inserted into the stream ES1. Note that the identifier ES_id of the stream ES1 is 0, and the identifier ES_id of the stream ES2 is 1.

- both streams ES1 and ES2 are extracted and decoded, and base view image data such as left eye image data and non-base view image data such as right eye image data are displayed as display image data.

- the three-dimensional (3D) display is performed.

- both streams ES1 and ES2 are extracted and decoded.

- because display of the stream ES2 is not mandatory, two-dimensional (2D) display is permitted in addition to stereoscopic (3D) display by a user's selection operation.

- base view image data such as left eye image data and non-base view image data such as right eye image data are output as display image data.

- base view image data is output as display image data.
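
The restriction on the user's display-mode selection can be sketched as follows. This is a simplified illustration: it models only “display_mandatory_flag” per second stream, ignores “indication_of_selected_stream_display”, and the function names and the "2D"/"3D" labels are assumptions.

```python
from typing import Dict, List


def permitted_display_modes(display_mandatory_flags: Dict[int, bool]) -> List[str]:
    """Return the display modes the user may choose from.

    3D is always offered when second streams exist; 2D is offered only
    if no second stream is marked as display-mandatory.
    """
    modes = ["3D"]
    if not any(display_mandatory_flags.values()):
        modes.append("2D")
    return modes


def resolve_mode(user_choice: str, display_mandatory_flags: Dict[int, bool]) -> str:
    """Fall back to the first permitted mode if the user's choice is not allowed."""
    modes = permitted_display_modes(display_mandatory_flags)
    return user_choice if user_choice in modes else modes[0]


mandatory_case = resolve_mode("2D", {1: True})   # ES2 must be displayed
optional_case = resolve_mode("2D", {1: False})   # ES2 display is optional
```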

- the stream association information inserted into the elementary stream includes pre-notification information for informing that the change occurs before the change of the association of each elementary stream actually occurs.

- the stream association information includes “terminating_current_association_flag” (see FIG. 12). Therefore, the receiver 200 can dynamically change the read control from the decoder buffer on the basis of this pre-notification information.
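
The use of the pre-notification can be sketched as below, taking a flag value of 1 on one access unit to mean that the stream configuration changes from the next access unit. The function name and the list-of-flags input are hypothetical.

```python
from typing import List


def reconfiguration_points(terminating_flags: List[int]) -> List[int]:
    """Return the indices of access units at which a new configuration starts.

    A flag of 1 on unit i announces a change that takes effect at unit i + 1,
    so the receiver can prepare its buffer read control one unit in advance.
    """
    return [i + 1 for i, flag in enumerate(terminating_flags) if flag == 1]


flags_per_access_unit = [0, 0, 1, 0, 0, 1, 0]
change_starts = reconfiguration_points(flags_per_access_unit)
```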

- stream association information is inserted into the stream ES1. Note that the identifier ES_id of the stream ES1 is 0, and the identifier ES_id of the stream ES2 is 1.

- “terminating_current_association_flag = 1” is set, indicating that the configuration of the elementary stream is changed from the next access unit.

- “terminating_current_association_flag” is abbreviated as “Terminating_flg”.

- both the streams ES1 and ES2 are extracted and decoded, and base-view image data, for example, left-eye image data, and non-base-view image data, for example, right-eye image data, are output as display image data.

- stereoscopic (3D) display is performed.

- stream association information is inserted into the elementary stream in units of pictures or units of GOP. Therefore, the receiver 200 can manage the configuration of elementary streams, for example, changes in the number of views of stereoscopic image data, in units of pictures or units of GOPs.

- the stream association information inserted into the elementary stream includes control information of the output resolution of the first image data and the second image data. That is, this stream association information includes “indication_of_other_resolution_master” and “resolution_master_flag”. Therefore, the receiver 200 can adjust the output resolution of the first image data and the second image data to the display reference resolution based on this control information.

- stream association information is inserted into the stream ES1

- stream association information is also inserted into the streams ES2 and ES3.

- the identifier ES_id of the stream ES1 is 0, the identifier ES_id of the stream ES2 is 1, and the identifier ES_id of the stream ES3 is 2.

- stream association information is inserted into the stream ES1

- stream association information is also inserted into the streams ES2 and ES3. It is assumed that the identifier ES_id of the stream ES1 is 0, the identifier ES_id of the stream ES2 is 1, and the identifier ES_id of the stream ES3 is 2.

- MPEG4-AVC and MPEG2 video encoding are used as the image data encoding method.

- the encoding applied to the image data is not limited to these.

- the base view and the predetermined number of non-base view streams are associated mainly by the stream association information.

- with this stream association information, it is also conceivable to associate, for example, metadata related to the image data of the base view.

- as the metadata, for example, disparity information (disparity vector or depth data) can be considered.

- stream association information is not inserted in the streams ES2 and ES3.

- FIG. 26 illustrates a configuration example of a receiver 200A including a post processing unit.

- the receiver 200A includes a CPU 201, a flash ROM 202, a DRAM 203, an internal bus 204, a remote control receiving unit 205, and a remote control transmitter 206.

- the receiver 200 also includes an antenna terminal 211, a digital tuner 212, a transport stream buffer (TS buffer) 213, and a demultiplexer 214.

- TS buffer transport stream buffer

- the receiver 200A also includes a video decoder 215, view buffers 216, 216-1 to 216-N, video superimposing units 217, 217-1 to 217-N, a metadata buffer 225, and a post processing unit 226.

- the receiver 200 includes a graphics decoder 218, a graphics generation unit 219, a disparity information decoder 220, graphics buffers 221, 221-1 to 221-N, an audio decoder 222, and a channel processing unit 223.

- the metadata buffer 225 temporarily stores disparity information for each pixel acquired by the video decoder 215.

- the disparity information is disparity information for each pixel

- the disparity information can be handled like pixel data.

- the video decoder 215 obtains disparity information for each pixel

- on the transmission side, the disparity information is encoded by the same encoding method as the image data, and a disparity information stream is generated.

- the post processing unit 226 uses the disparity information for each pixel accumulated in the metadata buffer 225 to perform interpolation processing (post-processing) on the image data of each view output from the view buffers 216 and 216-1 to 216-N, obtaining display image data Display View 1 through Display View P for a predetermined number of views.
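
The interpolation method is not detailed in the text. The sketch below shows one simple possibility, assumed purely for illustration: each pixel of a single image row is shifted by a fraction of its disparity to synthesize an intermediate view, and unfilled positions are padded with the nearest value to the left. The function name and the `alpha` parameter (position of the virtual view between 0 and 1) are hypothetical.

```python
from typing import List, Optional


def synthesize_row(row: List[int], disparity: List[int], alpha: float) -> List[int]:
    """Forward-warp one image row to a virtual view position.

    Each pixel moves horizontally by round(alpha * disparity); holes left
    by the warp are filled from the nearest pixel on the left.
    """
    width = len(row)
    out: List[Optional[int]] = [None] * width
    for x, (value, d) in enumerate(zip(row, disparity)):
        target = x + round(alpha * d)
        if 0 <= target < width:
            out[target] = value
    filled: List[int] = []
    last = row[0]  # fallback for holes at the left edge
    for value in out:
        if value is not None:
            last = value
        filled.append(last)
    return filled


unchanged = synthesize_row([10, 20, 30, 40], [0, 0, 0, 0], alpha=0.5)
shifted = synthesize_row([10, 20, 30, 40], [2, 2, 2, 2], alpha=0.5)
```

Calling the function with several values of `alpha` would yield several display views from one decoded view and its per-pixel disparity, which is the role the text assigns to the post-processing stage.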

- the other parts of the receiver 200A shown in FIG. 26 are omitted from the detailed description, but are configured in the same manner as those of the receiver 200 shown in FIG.

- the SVC stream includes a video elementary stream of encoded image data of the lowest layer that constitutes scalable encoded image data. Further, the SVC stream includes video elementary streams of a predetermined number of higher-layer encoded image data other than the lowest layer constituting the scalable encoded image data.

- the transport stream TS is distributed on the broadcast wave.

- the present invention is similarly applicable when the transport stream TS is distributed through a network such as the Internet.

- the above-described association data configuration can be applied to Internet distribution in a container file format other than the transport stream TS.

- this technique can also take the following structures.

- an encoding unit that generates a first elementary stream including first image data and a predetermined number of second elementary streams each including a predetermined number of second image data and/or metadata related to the first image data;

- a transmission unit for transmitting a transport stream having each packet obtained by packetizing each elementary stream generated by the encoding unit;

- an image data transmission apparatus in which the encoding unit inserts stream association information indicating the association of each elementary stream into at least the first elementary stream.

- the stream association information includes pre-notification information for notifying that the change occurs before the change of the association of each elementary stream actually occurs.

- the image data transmitting apparatus according to (1) or (2), wherein the encoding unit inserts the stream association information into the elementary stream in units of pictures or GOPs.