EP3729832B1 - Processing of a monophonic signal in a 3D audio decoder rendering binaural content - Google Patents

- Publication number

- EP3729832B1 (application EP18833274.6A)

- Authority

- EP

- European Patent Office

- Prior art keywords

- signal

- rendering

- processing

- channel

- channels

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Active

Classifications

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10L—SPEECH ANALYSIS TECHNIQUES OR SPEECH SYNTHESIS; SPEECH RECOGNITION; SPEECH OR VOICE PROCESSING TECHNIQUES; SPEECH OR AUDIO CODING OR DECODING

- G10L19/00—Speech or audio signals analysis-synthesis techniques for redundancy reduction, e.g. in vocoders; Coding or decoding of speech or audio signals, using source filter models or psychoacoustic analysis

- G10L19/008—Multichannel audio signal coding or decoding using interchannel correlation to reduce redundancy, e.g. joint-stereo, intensity-coding or matrixing

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04S—STEREOPHONIC SYSTEMS

- H04S7/00—Indicating arrangements; Control arrangements, e.g. balance control

- H04S7/30—Control circuits for electronic adaptation of the sound field

- H04S7/302—Electronic adaptation of stereophonic sound system to listener position or orientation

- H04S7/303—Tracking of listener position or orientation

- H04S7/304—For headphones

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04S—STEREOPHONIC SYSTEMS

- H04S2400/00—Details of stereophonic systems covered by H04S but not provided for in its groups

- H04S2400/01—Multi-channel, i.e. more than two input channels, sound reproduction with two speakers wherein the multi-channel information is substantially preserved

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04S—STEREOPHONIC SYSTEMS

- H04S2400/00—Details of stereophonic systems covered by H04S but not provided for in its groups

- H04S2400/11—Positioning of individual sound objects, e.g. moving airplane, within a sound field

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04S—STEREOPHONIC SYSTEMS

- H04S2420/00—Techniques used stereophonic systems covered by H04S but not provided for in its groups

- H04S2420/01—Enhancing the perception of the sound image or of the spatial distribution using head related transfer functions [HRTF's] or equivalents thereof, e.g. interaural time difference [ITD] or interaural level difference [ILD]

Definitions

- The present invention relates to the processing of an audio signal in a 3D audio decoding system of a standardized codec type such as MPEG-H 3D Audio.

- The invention relates more particularly to the processing of a monophonic signal intended to be reproduced on a headset that also receives binaural audio signals.

- The term binaural refers to the reproduction, over headphones or earphones, of a sound signal that nevertheless carries spatialization effects.

- Binaural processing of audio signals, hereafter called binauralization or binauralization processing, uses HRTF filters (“Head Related Transfer Function”) in the frequency domain, or HRIR and BRIR filters (“Head Related Impulse Response”, “Binaural Room Impulse Response”) in the time domain, which reproduce the acoustic transfer functions between the sound sources and the listener's ears. These filters simulate the auditory localization cues that let a listener locate sound sources as in a real listening situation.

- The right-ear signal is obtained by filtering a monophonic signal with the transfer function (HRTF) of the right ear, and the left-ear signal by filtering the same monophonic signal with the transfer function of the left ear.
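As a rough illustration of the filtering described above, the sketch below binauralizes one mono source by time-domain convolution with a left/right HRIR pair. It is a minimal pure-Python sketch, not the MPEG-H 3D Audio binaural renderer; the function names and the toy impulse responses in the usage line are hypothetical, whereas real HRIRs are measured per direction and are typically hundreds of taps long.

```python
from typing import List, Tuple


def convolve(signal: List[float], ir: List[float]) -> List[float]:
    """Full linear convolution of a signal with a finite impulse response."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(ir):
            out[n + k] += x * h
    return out


def binauralize(mono: List[float],
                hrir_left: List[float],
                hrir_right: List[float]) -> Tuple[List[float], List[float]]:
    """Filter one mono source with the left-ear and right-ear HRIRs of its direction."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy HRIR pair (hypothetical): source on the right, so the left (contralateral)
# ear receives a delayed, attenuated copy.
left, right = binauralize([1.0, 2.0], hrir_left=[0.0, 0.0, 0.5], hrir_right=[1.0, 0.0, 0.0])
print(left, right)  # [0.0, 0.0, 0.5, 1.0] [1.0, 2.0, 0.0, 0.0]
```

The quadratic loop keeps the example dependency-free; a practical renderer would use FFT-based convolution.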

- HRTF: Head Related Transfer Function

- NGA: “Next Generation Audio”, a family of codecs

- Examples of such codecs are MPEG-H 3D Audio, described in ISO/IEC 23008-3, “High efficiency coding and media delivery in heterogeneous environments - Part 3: 3D audio”, published on 07/25/2014, and AC4, described in ETSI TS 103 190, “Digital Audio Compression Standard”, published in April 2014.

- In these codecs, the signals received at the decoder are first decoded and then undergo binauralization processing as described above before being reproduced on headphones.

- These codecs therefore allow reproduction over several virtual loudspeakers, by listening to a binauralized signal on headphones, and also reproduction of spatialized sound over several real loudspeakers.

- When a head-tracking function for the listener's head is associated with the binauralization processing, we speak of dynamic rendering, as opposed to static rendering. This processing takes the movement of the listener's head into account and modifies the sound reproduced at each ear so that the sound scene stays stable. In other words, the listener perceives the sound sources at the same location in physical space whether or not they move their head.
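Dynamic rendering can be pictured as re-expressing each source direction in head coordinates before the HRTFs are chosen. The sketch below assumes yaw-only head tracking and a hypothetical angle convention (degrees, 0 straight ahead, positive to the left); it only shows this compensation step, not how a particular decoder implements head tracking.

```python
def relative_azimuth(source_azimuth_deg: float, head_yaw_deg: float) -> float:
    """Azimuth of a world-fixed source as seen from the listener's rotated head.

    Assumed convention: angles in degrees, 0 = straight ahead, positive to the
    left; the result is wrapped to the interval (-180, 180].
    """
    rel = (source_azimuth_deg - head_yaw_deg) % 360.0
    return rel - 360.0 if rel > 180.0 else rel


# A source fixed 30 degrees to the left of the room's front direction:
for yaw in (0.0, 30.0, 90.0):
    print(yaw, relative_azimuth(30.0, yaw))
# 0.0 30.0
# 30.0 0.0
# 90.0 -60.0
```

The binauralizer would then select the HRTF pair for the returned angle, so a world-fixed source moves in head coordinates opposite to the head rotation and therefore appears stationary.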

- A content producer may want a sound signal to be reproduced independently of the sound scene, that is, to be perceived as a sound separate from the sound scene, as with an “OFF” voice.

- This type of reproduction can be used, for example, to give explanations about a sound scene that is reproduced alongside it.

- The content producer may want the sound to be reproduced in only one ear, to obtain a deliberate “earpiece” effect, that is, the sound is heard in one ear only.

- The producer may also want this sound to stay permanently in that ear even if the listener moves their head, which is the case in the previous example.

- The content producer may also want this sound to be reproduced at a precise position in the sound space relative to one of the listener's ears (and not just inside a single ear), even if the listener moves their head.

- When such a monophonic signal is decoded and fed to the rendering system of an MPEG-H 3D Audio or AC4 type codec, it is binauralized.

- The sound is then distributed over both ears (even though it is quieter in the contralateral ear), and if the listener moves their head, they no longer perceive the sound the same way at that ear: head-tracking processing, if implemented, keeps the position of the sound source the same as in the original sound scene, so depending on the position of the head, the sound appears louder in one ear or the other.

- A “Dichotic” identifier is associated with content that must not be binauralized.

- An information bit indicates that a signal is already virtualized; this bit allows post-processing to be deactivated.

- Content identified in this way is already formatted for headphones, i.e. binaural, and therefore has two channels.

- The present invention improves the situation.

- The invention provides a method for processing a monophonic audio signal in a 3D audio decoder, comprising a step of binauralization processing of the decoded signals intended to be spatially reproduced on an audio headset.

- The method is such that, upon detection, in a data stream representing the monophonic signal, of an indication of non-binauralization processing associated with restitution spatial position information, the restitution spatial position information being binary data indicating a single channel of the restitution audio headset, the decoded monophonic signal is directed to a mixing module comprising a stereophonic rendering engine. This engine takes the position information into account to construct two restitution channels, which are processed by a direct mixing step that sums these two channels with a binauralized signal resulting from the binauralization processing, for reproduction on the audio headset.

- Stereophonic signals are characterized by the fact that each sound source is present in both output channels (left and right), with a difference in intensity (ILD, “Interaural Level Difference”) and sometimes in time (ITD, “Interaural Time Difference”) between the channels.

- ILD: Interaural Level Difference

- ITD: Interaural Time Difference
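The difference between a stereophonic and a binaural signal can be made concrete with a small sketch that builds a stereo pair from a mono source using only an ILD and an integer-sample ITD, with no HRTF filtering. The function name and sign convention are hypothetical; a real panner might, for example, use fractional delays or split the time difference over both channels.

```python
from typing import List, Tuple


def stereo_from_mono(mono: List[float], ild_db: float,
                     itd_samples: int = 0) -> Tuple[List[float], List[float]]:
    """Place a mono source in a stereo pair with a level and an optional time difference.

    Assumed convention: ild_db >= 0 puts the source on the left, ild_db < 0 on the
    right. The far channel is attenuated by |ild_db| dB and delayed by itd_samples;
    both outputs are padded to the same length.
    """
    if itd_samples < 0:
        raise ValueError("itd_samples must be non-negative")
    far_gain = 10.0 ** (-abs(ild_db) / 20.0)
    near = list(mono) + [0.0] * itd_samples
    far = [0.0] * itd_samples + [far_gain * x for x in mono]
    return (near, far) if ild_db >= 0 else (far, near)


left, right = stereo_from_mono([1.0, 1.0], ild_db=20.0, itd_samples=1)
# left == [1.0, 1.0, 0.0]; right is about [0.0, 0.1, 0.1] (20 dB quieter, one sample later)
```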

- Binaural signals differ from stereophonic signals in that a filter reproducing the acoustic path from the source to the listener's ear is applied to each source.

- The sources are thus perceived outside the head, at a location on a sphere that depends on the filter used.

- Stereophonic and binaural signals are alike in that both consist of two channels, left and right; they differ in the content of these two channels.

- The reproduced mono (monophonic) signal is then superimposed on the other reproduced signals, which form a 3D sound scene.

- The bitrate needed to signal this type of content is optimized, since it is enough to code a position indication in the sound scene in addition to the non-binauralization indication to tell the decoder which processing to apply, unlike a method that would require encoding, transmitting and then decoding a stereophonic signal that takes this spatial position into account.

- The restitution spatial position information is binary data indicating a single channel of the restitution audio headset.

- This information requires only one coding bit, which further limits the required bitrate.

- The summation performed in this way is simple to implement and produces the desired “earpiece” effect, with the mono signal superimposed on the reproduced sound scene.
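To see why the position costs only one extra bit, here is a hypothetical signaling layout; it is not the MPEG-H 3D Audio or AC4 bitstream syntax. A one-bit non-binauralization flag is followed by a one-bit ear indicator that is written only when the flag is set. The function names and the bit order are assumptions made for illustration.

```python
from typing import List, Optional, Tuple


def write_mono_signaling(non_binaural: bool, right_ear: bool = False) -> List[int]:
    """Hypothetical syntax: flag bit, then an ear bit (0 = left, 1 = right) only if the flag is set."""
    bits = [int(non_binaural)]
    if non_binaural:
        bits.append(int(right_ear))
    return bits


def read_mono_signaling(bits: List[int]) -> Tuple[bool, Optional[bool], int]:
    """Parse the hypothetical syntax; returns (flag, right_ear or None, bits consumed)."""
    non_binaural = bool(bits[0])
    if not non_binaural:
        return False, None, 1
    return True, bool(bits[1]), 2


print(write_mono_signaling(True, right_ear=True))  # [1, 1]
print(read_mono_signaling([0]))                     # (False, None, 1)
```

Under this assumed layout, a signal that is binauralized normally costs one bit, and a signal pinned to one ear costs two.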

- The monophonic signal may be a channel-type signal, directed to the stereophonic rendering engine together with the restitution spatial position information.

- The monophonic signal then does not undergo a binauralization step and is not treated like the channel-type signals usually processed by prior-art methods.

- It is processed by a stereophonic rendering engine distinct from the one that exists for channel-type signals.

- This rendering engine duplicates the monophonic signal onto the two channels, applying to each channel a factor derived from the restitution spatial position information.
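The stereophonic rendering engine described above amounts to a per-channel gain applied to two copies of the mono signal. A minimal sketch, assuming the two factors have already been derived from the position information; the function name is hypothetical.

```python
from typing import List, Tuple


def stereo_render(mono: List[float], gain_left: float,
                  gain_right: float) -> Tuple[List[float], List[float]]:
    """Duplicate a mono signal onto two channels, scaling each copy by its own factor."""
    return [gain_left * x for x in mono], [gain_right * x for x in mono]


left, right = stereo_render([0.5, -1.0], gain_left=1.0, gain_right=0.0)
# left == [0.5, -1.0]; right holds only zeros, so the sound is in the left ear only
```

Because no filtering is involved, the cost is one multiplication per sample and channel, which is negligible next to HRTF convolution.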

- This stereophonic rendering engine can also be integrated either into the channel rendering engine, with processing that differs according to the detection made for the signal at its input, or into the direct mixing module that sums the channels from this stereophonic rendering engine with the binauralized signal from the binauralization processing module.

- Alternatively, the monophonic signal may be an object-type signal associated with a set of rendering parameters that includes the non-binauralization indication and the restitution position information; the signal is directed, together with the restitution spatial position information, to the stereophonic rendering engine.

- The monophonic signal then does not undergo a binauralization step and is not processed like the object-type signals usually processed by prior-art methods.

- It is processed by a stereophonic rendering engine distinct from the one that exists for object-type signals.

- The non-binauralization indication and the restitution position information are included in the rendering parameters (metadata) associated with the object-type signal.

- This rendering engine can also be integrated into the object rendering engine, or into the direct mixing module that sums the channels from this stereophonic rendering engine with the binauralized signal from the binauralization processing module.
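One way to picture this is an object metadata record that carries, alongside the usual spatialization parameters, the non-binauralization indication (Di.) and the restitution position (Pos.), plus a routing predicate that chooses between the object renderer and the stereophonic rendering engine. The field names and the representation of the position as an optional ear label are hypothetical; the standardized metadata syntax is not reproduced here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ObjectMetadata:
    """Hypothetical per-object rendering parameters."""
    azimuth_deg: float = 0.0
    elevation_deg: float = 0.0
    gain: float = 1.0
    priority: int = 0
    non_binaural: bool = False          # stands in for the "Di." indication
    position: Optional[str] = None      # stands in for "Pos.", e.g. "left" or "right"


def route_object(meta: ObjectMetadata) -> str:
    """Send the object to the stereophonic engine only if Di. and Pos. are both present."""
    if meta.non_binaural and meta.position is not None:
        return "stereo_renderer"
    return "object_renderer"


print(route_object(ObjectMetadata(azimuth_deg=30.0)))                    # object_renderer
print(route_object(ObjectMetadata(non_binaural=True, position="left")))  # stereo_renderer
```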

- This device has the same advantages as the method described above, which it implements.

- This signal can be channel type or object type.

- When the monophonic signal is a channel-type signal, the stereophonic rendering engine may be integrated into a channel rendering engine that also constructs restitution channels for multichannel signals.

- When the monophonic signal is an object-type signal, the stereophonic rendering engine may be integrated into an object rendering engine that also constructs restitution channels for monophonic signals associated with sets of rendering parameters.

- The present invention also relates to an audio decoder comprising a processing device as described, and to a computer program comprising code instructions for implementing the steps of the processing method as described when these instructions are executed by a processor.

- Finally, the invention relates to a processor-readable storage medium, integrated or not into the processing device and possibly removable, storing a computer program comprising instructions for executing the processing method described above.

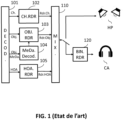

- In FIG. 1, block 101 is a core decoding module that decodes multichannel audio signals of “channel” type (Ch.), monophonic audio signals of “object” type (Obj.) associated with spatialization parameters (“Metadata”, Obj.MeDa.), and audio signals in Higher Order Ambisonics (HOA) format.

- Ch.: multichannel audio signals

- Obj.: monophonic audio signals of “object” type

- Obj.MeDa.: metadata associated with the object signals

- HOA: Higher Order Ambisonics

- A channel-type signal is decoded and then processed by a channel rendering engine 102 (“Channel renderer”, also called “Format Converter” in MPEG-H 3D Audio) to adapt it to the audio reproduction system.

- The channel rendering engine knows the characteristics of the reproduction system and therefore supplies one signal per restitution channel (Rdr.Ch.) to feed either real loudspeakers or virtual loudspeakers (which are then binauralized for reproduction on headphones).

- These restitution channels are mixed by the mixing module 110 with the other restitution channels coming from the object rendering engine 103 and the HOA rendering engine 105, described below.

- Object-type signals are monophonic signals associated with data (“Metadata”) such as spatialization parameters (azimuth and elevation angles) that position the monophonic signal in the spatialized sound scene, priority parameters, or sound volume settings.

- The object signals and their associated parameters are decoded by the decoding module 101 and processed by an object rendering engine 103 (“Object Renderer”), which knows the characteristics of the reproduction system and adapts these monophonic signals to them.

- The restitution channels created in this way (Rdr.Obj.) are mixed by the mixing module 110 with the other restitution channels coming from the channel and HOA rendering engines.

- Ambisonic signals (HOA, “Higher Order Ambisonics”) are decoded, and the decoded ambisonic components are fed to an ambisonic rendering engine 105 (“HOA renderer”) that adapts them to the sound reproduction system.

- The rendering channels (Rdr.HOA) created by this HOA rendering engine are mixed in module 110 with the rendering channels created by the other rendering engines 102 and 103.

- The signals at the output of the mixing module 110 can be reproduced by real loudspeakers (HP) located in a listening room.

- In that case, the output signals of the mixing module can feed these real loudspeakers directly, one channel per loudspeaker.

- If the output signals of the mixing module are to be reproduced on audio headphones (CA), they are first processed by a binauralization processing module 120, using binauralization techniques such as those described in the document cited above for the MPEG-H 3D Audio standard.
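The FIG. 1 chain after the renderers can be summarized as a channel-by-channel sum in mixing module 110, followed either by a direct loudspeaker feed or by binauralization. In the sketch below the binauralizer is a placeholder callable, every renderer is assumed to output the same number of equally long restitution channels, and the function names are hypothetical.

```python
from typing import Callable, List, Sequence

Channels = List[List[float]]  # one list of samples per restitution channel


def mix_renderer_outputs(outputs: Sequence[Channels]) -> Channels:
    """Mixing module: sum the restitution channels of all renderers, channel by channel."""
    n_channels, n_samples = len(outputs[0]), len(outputs[0][0])
    mixed = [[0.0] * n_samples for _ in range(n_channels)]
    for rendered in outputs:
        for c, channel in enumerate(rendered):
            for i, x in enumerate(channel):
                mixed[c][i] += x
    return mixed


def decoder_output(outputs: Sequence[Channels], headphones: bool,
                   binauralizer: Callable[[Channels], Channels]) -> Channels:
    """Feed loudspeakers directly, or binauralize the mix for headphone playback."""
    mixed = mix_renderer_outputs(outputs)
    return binauralizer(mixed) if headphones else mixed


channel_out = [[1.0, 2.0], [0.0, 1.0]]   # e.g. from the channel renderer
object_out = [[0.5, 0.5], [0.5, 0.5]]    # e.g. from the object renderer
print(decoder_output([channel_out, object_out], headphones=False, binauralizer=lambda m: m))
# [[1.5, 2.5], [0.5, 1.5]]
```

In this prior-art chain every signal, including a mono object meant for one ear only, reaches the binauralizer when headphones are used.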

- In FIG. 2, step E200 detects whether the data stream (SMo) representing the monophonic signal (for example the bitstream at the input of the audio decoder) contains an indication of non-binauralization processing associated with restitution spatial position information. If it does not (N in step E200), the signal must be binauralized: it undergoes binauralization processing in step E210 before being reproduced on an audio headset in step E240. This binauralized signal can be mixed with other stereophonic signals from step E220, described below.

- If the indication is detected, the decoded monophonic signal is directed to a stereophonic rendering engine and processed in step E220.

- The restitution spatial position information can be, for example, an azimuth angle giving the position of the sound relative to one ear, right or left, or an indication of the level difference between the left and right channels, such as ILD information, that distributes the energy of the monaural signal between the left and right channels.

- The spatial position information can also consist of an indication of a single restitution channel, corresponding to the right or the left ear. In this case, the information is binary and requires very little bitrate (a single bit).

- In step E220, the position information is used to construct two restitution channels for the two earpieces of the audio headset. These two restitution channels are processed directly by a direct mixing step E230, which sums these two stereophonic channels with the two channels of the binauralized signal resulting from the binauralization processing E210.

- Each of the stereophonic restitution channels is then summed with the corresponding channel of the binauralized signal.

- When the restitution spatial position information is binary data indicating a single channel of the restitution audio headset, the two restitution channels constructed in step E220 by the stereophonic rendering engine consist of one channel containing the monophonic signal and another channel that is zero, and which may therefore be omitted.
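The decision flow of FIG. 2 (steps E200 to E240), in the binary-position case, can be sketched as follows. The binauralizer is a placeholder callable, the position is represented by the hypothetical strings "left"/"right", and all signals are assumed to have the same length; this illustrates the routing and the direct mixing, not an implementation taken from the patent.

```python
from typing import Callable, List, Optional, Tuple

Stereo = Tuple[List[float], List[float]]


def ear_to_gains(ear: str) -> Tuple[float, float]:
    """Binary position information: the signal goes to one ear, the other channel is zero."""
    if ear == "left":
        return 1.0, 0.0
    if ear == "right":
        return 0.0, 1.0
    raise ValueError(f"unknown ear: {ear!r}")


def direct_mix(a: Stereo, b: Stereo) -> Stereo:
    """Direct mixing: add left to left and right to right."""
    return ([x + y for x, y in zip(a[0], b[0])],
            [x + y for x, y in zip(a[1], b[1])])


def process_mono(mono: List[float], non_binaural: bool, ear: Optional[str],
                 binauralize: Callable[[List[float]], Stereo],
                 binaural_scene: Stereo) -> Stereo:
    """E200 test, then E220 (stereo rendering) or E210 (binauralization), then summation."""
    if non_binaural and ear is not None:            # E200: indication detected
        g_left, g_right = ear_to_gains(ear)
        rendered = ([g_left * x for x in mono],     # E220: stereophonic rendering
                    [g_right * x for x in mono])
    else:                                           # E200: N
        rendered = binauralize(mono)                # E210: binauralization
    # E230 for the E220 branch; for the N branch this simply adds the binauralized
    # mono to the rest of the binaural scene. The result is what E240 plays back.
    return direct_mix(rendered, binaural_scene)


scene = ([0.1, 0.1], [0.2, 0.2])                    # binauralized sound scene
out = process_mono([1.0, 2.0], True, "right", lambda m: (m, m), scene)
# out[0] == [0.1, 0.1]; out[1] is about [1.2, 2.2]
```

Since the flagged signal never enters the binauralizer, head tracking applied there cannot move it, which gives the head-locked "earpiece" behavior.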

- The listener wearing the headset thus hears, on the one hand, a spatialized sound scene from the binauralized signal, which, with dynamic rendering, stays at the same physical location even if they move their head, and, on the other hand, a sound positioned inside the head, between one ear and the center of the head, which is superimposed on the sound scene independently: if the listener moves their head, this sound is still heard at the same position relative to that ear.

- This sound is therefore perceived as superimposed on the other, binauralized sounds of the sound scene, and acts, for example, as an “OFF” voice over this sound scene.

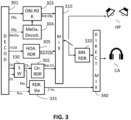

- FIG. 3 illustrates a first embodiment of a decoder comprising a processing device that implements the processing method described with reference to FIG. 2.

- In this embodiment, the monophonic signal processed by the method is a channel-type signal (Ch.).

- Object-type (Obj.) and HOA-type (HOA) signals are processed by the respective blocks 303, 304 and 305 in the same way as by blocks 103, 104 and 105 described with reference to FIG. 1.

- The mixing block 310 performs mixing as described for block 110 of FIG. 1.

- Block 330, which receives the channel-type signals, treats a monophonic signal carrying an indication of non-binauralization (Di.) associated with restitution spatial position information (Pos.) differently from a signal that does not carry this information, in particular a multichannel signal. Signals without this information are processed by block 302 in the same way as by block 102 described with reference to FIG. 1.

- For the monophonic signal carrying this information, block 330 acts as a router or switch and directs the decoded monophonic signal (Mo.) to a stereophonic rendering engine 331.

- This stereophonic rendering engine also receives the restitution spatial position information (Pos.) from the decoding module. From this information, it constructs two restitution channels (2 Vo.), corresponding to the left and right channels of the audio headset, so that these channels are reproduced on the audio headset CA.

- In this example, the restitution spatial position information is an interaural level difference between the left and right channels. From it, a factor is defined for each restitution channel so that the spatial restitution position is respected.
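One possible way to turn the transmitted ILD into the two factors is sketched below. The text only says that a factor is defined for each channel; the constant-power normalization (g_L² + g_R² = 1), the sign convention (positive ILD means the left channel is louder) and the function name are assumptions.

```python
import math
from typing import Tuple


def ild_to_gains(ild_db: float) -> Tuple[float, float]:
    """Left/right factors whose level ratio is ild_db and whose total power is 1.

    Assumed: ild_db = 20*log10(g_left / g_right), and g_left**2 + g_right**2 == 1.
    """
    ratio = 10.0 ** (ild_db / 20.0)          # g_left / g_right
    g_right = 1.0 / math.sqrt(1.0 + ratio * ratio)
    g_left = ratio * g_right
    return g_left, g_right


g_left, g_right = ild_to_gains(6.0)
print(round(20.0 * math.log10(g_left / g_right), 6))  # 6.0
```

Keeping the total power constant means that changing the ILD moves the sound between the ears without changing its overall loudness.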

- These restitution channels are added to the channels of a binauralized signal from the binauralization module 320, which performs binauralization processing in the same way as block 120 of FIG. 1.

- This summation is performed by the direct mixing module 340, which adds the left channel from the stereophonic rendering engine 331 to the left channel of the binauralized signal from the binauralization processing module 320, and the right channel from the stereophonic rendering engine 331 to the right channel of that binauralized signal, before reproduction on the headset CA.

- The monophonic signal therefore does not pass through the binauralization processing module 320: it is sent directly to the stereophonic rendering engine 331 and then mixed directly with a binauralized signal.

- As a result, this signal does not undergo head-tracking processing either.

- The reproduced sound therefore stays at a restitution position relative to one of the listener's ears, even if the listener moves their head.

- In a variant, the stereophonic rendering engine 331 can be integrated into the channel rendering engine 302.

- This channel rendering engine then performs both the adaptation of conventional channel-type signals, as described with reference to FIG. 1, and the construction of the two restitution channels of the rendering engine 331 explained above, using the received restitution spatial position information (Pos.). Only these two restitution channels are then redirected to the direct mixing module 340 before reproduction on the audio headset CA.

- In another variant, the stereophonic rendering engine 331 is integrated into the direct mixing module 340.

- The routing module 330 then directs the decoded monophonic signal (for which the non-binauralization indication and restitution spatial position information have been detected) to the direct mixing module 340.

- The decoded restitution spatial position information (Pos.) is also sent to the direct mixing module 340.

- This direct mixing module, which then includes the stereophonic rendering engine, constructs the two restitution channels from the restitution spatial position information and mixes them with the restitution channels of a binauralized signal from the binauralization processing module 320.

- FIG. 4 illustrates a second embodiment of a decoder comprising a processing device that implements the processing method described with reference to FIG. 2.

- In this embodiment, the monophonic signal processed by the method is an object-type signal (Obj.).

- Channel-type (Ch.) and HOA-type (HOA) signals are processed by the respective blocks 402 and 405 in the same way as by blocks 102 and 105 described with reference to FIG. 1.

- The mixing block 410 performs mixing as described for block 110 of FIG. 1.

- Block 430, which receives the object-type signals (Obj.), treats a monophonic signal for which an indication of non-binauralization (Di.) associated with restitution spatial position information (Pos.) has been detected differently from another monophonic signal for which this information has not been detected.

- For the former, block 430 acts as a router or switch and directs the decoded monophonic signal (Mo.) to a stereophonic rendering engine 431.

- The non-binauralization indication (Di.) and the restitution spatial position information (Pos.) are decoded by block 404, which decodes the metadata or parameters associated with the object-type signals.

- The non-binauralization indication (Di.) is sent to the routing block 430, and the restitution spatial position information is sent to the stereophonic rendering engine 431.

- This stereophonic rendering engine, having received the restitution spatial position information (Pos.), constructs two restitution channels corresponding to the left and right channels of the audio headset, so that these channels are reproduced on the audio headset CA.

- In this example, the restitution spatial position information is an azimuth angle defining the angle between the desired restitution position and the center of the listener's head.

- This information makes it possible to define a factor to be applied to each of the restitution channels to respect this spatial restitution position.

- The gain factors for the left and right channels can be calculated as presented in the document “Virtual Sound Source Positioning Using Vector Base Amplitude Panning” by Ville Pulkki, J. Audio Eng. Soc., Vol. 45, No. 6, June 1997, using the tangent law:

$$\frac{\tan\theta}{\tan\theta_0} = \frac{g_1 - g_2}{g_1 + g_2}$$

- $g_1$ and $g_2$ correspond to the factors for the left and right channel signals.

- $\theta$ is the angle between the frontal direction and the object (called azimuth).

- $\theta_0$ is the angle between the frontal direction and the position of the virtual loudspeaker (corresponding to the half-angle between the loudspeakers), set for example at 45°.
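Solving the tangent law tan θ / tan θ0 = (g1 − g2)/(g1 + g2) from Pulkki's paper for the two gains, where θ is the object azimuth and θ0 the virtual-loudspeaker half-angle, gives g1/g2 = (tan θ0 + tan θ)/(tan θ0 − tan θ). The sketch below computes them under assumptions not stated in the text: positive azimuths point to the left (towards the channel of g1), the gains are normalized to constant power (g1² + g2² = 1) as is usual in VBAP, and azimuths outside ±θ0 are clamped, since the law only covers the arc between the two virtual loudspeakers. The function name is hypothetical.

```python
import math
from typing import Tuple


def tangent_law_gains(azimuth_deg: float, half_angle_deg: float = 45.0) -> Tuple[float, float]:
    """Left/right gains (g1, g2) satisfying tan(theta)/tan(theta0) = (g1 - g2)/(g1 + g2).

    Assumed: positive azimuth = towards the left channel (g1); constant-power
    normalization g1**2 + g2**2 == 1; azimuth clamped to [-theta0, theta0].
    """
    theta0 = math.radians(half_angle_deg)
    theta = math.radians(max(-half_angle_deg, min(half_angle_deg, azimuth_deg)))
    t = math.tan(theta) / math.tan(theta0)   # lies in [-1, 1] after clamping
    g1, g2 = 1.0 + t, 1.0 - t                # (g1 - g2)/(g1 + g2) == t
    norm = math.hypot(g1, g2)                # never zero, since g1 + g2 == 2
    return g1 / norm, g2 / norm


for az in (0.0, 20.0, 45.0, -45.0):
    g1, g2 = tangent_law_gains(az)
    print(az, round(g1, 3), round(g2, 3))
```

At 0° both gains equal 1/√2, and at ±θ0 all the energy goes to a single channel, which reproduces the one-ear case.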

- These restitution channels are added to the channels of a binauralized signal from the binauralization module 420, which performs binauralization processing in the same way as block 120 of FIG. 1.

- This summation is performed by the direct mixing module 440, which adds the left channel from the stereophonic rendering engine 431 to the left channel of the binauralized signal from the binauralization processing module 420, and the right channel from the stereophonic rendering engine 431 to the right channel of that binauralized signal, before reproduction on the headset CA.

- The monophonic signal therefore does not pass through the binauralization processing module 420: it is sent directly to the stereophonic rendering engine 431 and then mixed directly with a binauralized signal.

- As a result, this signal does not undergo head-tracking processing either.

- The reproduced sound therefore stays at a restitution position relative to one of the listener's ears, even if the listener moves their head.

- In a variant, the stereophonic rendering engine 431 can be integrated into the object rendering engine 403.

- This object rendering engine then performs both the adaptation of conventional object-type signals, as described with reference to FIG. 1, and the construction of the two restitution channels of the rendering engine 431 explained above, using the restitution spatial position information (Pos.) received from the parameter decoding module 404. Only these two restitution channels (2Vo.) are then redirected to the direct mixing module 440 before reproduction on the audio headset CA.

- In another variant, the stereophonic rendering engine 431 is integrated into the direct mixing module 440.

- The routing module 430 then directs the decoded monophonic signal (Mo.) (for which the non-binauralization indication and spatial restitution position information have been detected) to the direct mixing module 440.

- The decoded spatial restitution position information (Pos.) is also sent to the direct mixing module 440 by the parameter decoding module 404.

- This direct mixing module, which then includes the stereophonic rendering engine, constructs the two restitution channels from the restitution spatial position information and mixes them with the restitution channels of a binauralized signal from the binauralization processing module 420.

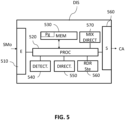

- FIG. 5 illustrates an example of a hardware embodiment of a processing device able to implement the processing method according to the invention.

- The device DIS comprises a storage space 530, for example a memory MEM, and a processing unit 520 comprising a processor PROC, controlled by a computer program Pg stored in the memory 530 and implementing the processing method according to the invention.

- The computer program Pg comprises code instructions for implementing the steps of the processing method within the meaning of the invention when these instructions are executed by the processor PROC. In particular, upon detection, in a data stream representing the monophonic signal, of an indication of non-binauralization processing associated with restitution spatial position information, it performs a step of directing the decoded monophonic signal to a stereophonic rendering engine that takes the position information into account to construct two restitution channels, which are processed directly by a direct mixing step summing these two channels with a binauralized signal resulting from the binauralization processing, for reproduction on the audio headset.

- The code instructions of the program Pg are, for example, loaded into a RAM memory (not shown) before being executed by the processor PROC of the processing unit 520.

- The program instructions can be stored on a storage medium such as flash memory, a hard disk, or any other non-transitory storage medium.

- The device DIS comprises a reception module 510 able to receive a data stream SMo representing, in particular, a monophonic signal; a detection module 540 able to detect, in this data stream, an indication of non-binauralization processing associated with restitution spatial position information; and a direction module 550 that, when the detection module 540 detects this indication, directs the decoded monophonic signal to a stereophonic rendering engine 560, which is able to take the position information into account to construct two restitution channels.

- The device DIS also comprises a direct mixing module 570 able to process the two restitution channels directly by summing them with the two channels of a binauralized signal from a binauralization processing module.

- The resulting restitution channels are sent to an audio headset CA via an output module 560 for reproduction.

- The term module can refer to a software component as well as to a hardware component or a set of hardware and software components, a software component itself corresponding to one or more computer programs or subprograms or, more generally, to any element of a program able to implement a function or a set of functions as described for the modules concerned.

- A hardware component corresponds to any element of a hardware assembly able to implement a function or a set of functions for the module concerned (integrated circuit, smart card, memory card, etc.).

- The device can be integrated into an audio decoder as described in FIG. 3 or 4, and, for example, into multimedia equipment such as a living-room decoder (set-top box) or an audio or video content player. It can also be integrated into communication equipment such as mobile phones or communication gateways.

Landscapes

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- Acoustics & Sound (AREA)

- Signal Processing (AREA)

- Mathematical Physics (AREA)

- Computational Linguistics (AREA)

- Health & Medical Sciences (AREA)

- Audiology, Speech & Language Pathology (AREA)

- Human Computer Interaction (AREA)

- Multimedia (AREA)

- Stereophonic System (AREA)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| EP22197901.6A EP4135350A1 (fr) | 2017-12-19 | 2018-12-07 | Processing of a monophonic signal in a 3D audio decoder rendering binaural content |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| FR1762478A FR3075443A1 (fr) | 2017-12-19 | 2017-12-19 | Processing of a monophonic signal in a 3D audio decoder rendering binaural content |
| PCT/FR2018/053161 WO2019122580A1 (fr) | 2017-12-19 | 2018-12-07 | Processing of a monophonic signal in a 3D audio decoder rendering binaural content |

Related Child Applications (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| EP22197901.6A Division EP4135350A1 (fr) | 2017-12-19 | 2018-12-07 | Processing of a monophonic signal in a 3D audio decoder rendering binaural content |
| EP22197901.6A Division-Into EP4135350A1 (fr) | 2017-12-19 | 2018-12-07 | Processing of a monophonic signal in a 3D audio decoder rendering binaural content |

Publications (3)

| Publication Number | Publication Date |
|---|---|
| EP3729832A1 (fr) | 2020-10-28 |
| EP3729832C0 (fr) | 2024-06-26 |
| EP3729832B1 (fr) | 2024-06-26 |

Family

ID=62222744

Family Applications (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| EP18833274.6A Active EP3729832B1 (fr) | 2017-12-19 | 2018-12-07 | Processing of a monophonic signal in a 3D audio decoder rendering binaural content |
| EP22197901.6A Pending EP4135350A1 (fr) | 2017-12-19 | 2018-12-07 | Processing of a monophonic signal in a 3D audio decoder rendering binaural content |

Family Applications After (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| EP22197901.6A Pending EP4135350A1 (fr) | 2017-12-19 | 2018-12-07 | Processing of a monophonic signal in a 3D audio decoder rendering binaural content |

Country Status (10)

| Country | Link |
|---|---|
| US (1) | US11176951B2 |
| EP (2) | EP3729832B1 |
| JP (2) | JP7279049B2 |
| KR (1) | KR102555789B1 |
| CN (1) | CN111492674B |
| BR (1) | BR112020012071A2 |
| ES (1) | ES2986617T3 |
| FR (1) | FR3075443A1 |
| PL (1) | PL3729832T3 |
| WO (1) | WO2019122580A1 |

Families Citing this family (5)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| FR3075443A1 (fr) | 2017-12-19 | 2019-06-21 | Orange | Processing of a monophonic signal in a 3D audio decoder rendering binaural content |
| US11895479B2 (en) | 2019-08-19 | 2024-02-06 | Dolby Laboratories Licensing Corporation | Steering of binauralization of audio |
| JP7661742B2 (ja) * | 2021-03-29 | 2025-04-15 | Yamaha Corporation | Audio mixer and method of processing acoustic signals |
| TW202348047A (zh) * | 2022-03-31 | 2023-12-01 | Dolby International AB | Method and system for immersive 3DoF/6DoF audio rendering |
| WO2024212118A1 (zh) * | 2023-04-11 | 2024-10-17 | Beijing Xiaomi Mobile Software Co., Ltd. | Audio bitstream signal processing method and apparatus, electronic device, and storage medium |

Family Cites Families (13)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| JPH09327100A (ja) * | 1996-06-06 | 1997-12-16 | Matsushita Electric Ind Co Ltd | Headphone playback device |
| US20090299756A1 (en) * | 2004-03-01 | 2009-12-03 | Dolby Laboratories Licensing Corporation | Ratio of speech to non-speech audio such as for elderly or hearing-impaired listeners |
| US7634092B2 (en) * | 2004-10-14 | 2009-12-15 | Dolby Laboratories Licensing Corporation | Head related transfer functions for panned stereo audio content |
| KR100754220B1 (ko) * | 2006-03-07 | 2007-09-03 | Samsung Electronics Co., Ltd. | Binaural decoder for MPEG Surround and decoding method thereof |
| CN101690269A (zh) * | 2007-06-26 | 2010-03-31 | Koninklijke Philips Electronics N.V. | Binaural object-oriented audio decoder |
| PT2146344T (pt) * | 2008-07-17 | 2016-10-13 | Fraunhofer Ges Forschung | Audio encoding/decoding scheme with a switchable bypass |
| TWI475896B (zh) * | 2008-09-25 | 2015-03-01 | Dolby Lab Licensing Corp | Stereo filters for monophonic compatibility and loudspeaker compatibility |
| EP2209328B1 (en) * | 2009-01-20 | 2013-10-23 | Lg Electronics Inc. | An apparatus for processing an audio signal and method thereof |
| KR20120006060A (ko) * | 2009-04-21 | 2012-01-17 | Koninklijke Philips Electronics N.V. | Audio signal synthesis |
| JP5678048B2 (ja) * | 2009-06-24 | 2015-02-25 | Fraunhofer-Gesellschaft zur Förderung der angewandten Forschung e.V. | Audio signal decoder using cascaded audio object processing stages, method for decoding an audio signal, and computer program |
| CN117376809A (zh) * | 2013-10-31 | 2024-01-09 | Dolby Laboratories Licensing Corporation | Binaural rendering for headphones using metadata processing |
| CN106162500B (zh) * | 2015-04-08 | 2020-06-16 | Dolby Laboratories Licensing Corporation | Rendering of audio content |
| FR3075443A1 (fr) | 2017-12-19 | 2019-06-21 | Orange | Processing of a monophonic signal in a 3D audio decoder rendering binaural content |

2017

- 2017-12-19 FR FR1762478A: FR3075443A1 (fr), not active (ceased)

2018

- 2018-12-07 WO PCT/FR2018/053161: WO2019122580A1 (fr), not active (ceased)

- 2018-12-07 EP EP18833274.6A: EP3729832B1 (fr), active

- 2018-12-07 ES ES18833274T: ES2986617T3 (es), active

- 2018-12-07 BR BR112020012071-5A: BR112020012071A2 (pt), status unknown

- 2018-12-07 JP JP2020533148A: JP7279049B2 (ja), active

- 2018-12-07 PL PL18833274.6T: PL3729832T3 (pl), status unknown

- 2018-12-07 EP EP22197901.6A: EP4135350A1 (fr), active (pending)

- 2018-12-07 KR KR1020207018299A: KR102555789B1 (ko), active

- 2018-12-07 CN CN201880081437.9A: CN111492674B (zh), active

- 2018-12-07 US US16/955,398: US11176951B2 (en), active

2023

- 2023-05-09 JP JP2023077357A: JP7639053B2 (ja), active

Also Published As

| Publication number | Publication date |
|---|---|
| US20210012782A1 (en) | 2021-01-14 |
| BR112020012071A2 (pt) | 2020-11-24 |
| JP2023099599A (ja) | 2023-07-13 |
| EP3729832C0 (fr) | 2024-06-26 |
| ES2986617T3 (es) | 2024-11-12 |
| JP2021508195A (ja) | 2021-02-25 |
| FR3075443A1 (fr) | 2019-06-21 |
| CN111492674A (zh) | 2020-08-04 |
| KR20200100664A (ko) | 2020-08-26 |
| PL3729832T3 (pl) | 2024-11-04 |
| CN111492674B (zh) | 2022-03-15 |
| WO2019122580A1 (fr) | 2019-06-27 |
| EP4135350A1 (fr) | 2023-02-15 |
| JP7279049B2 (ja) | 2023-05-22 |
| KR102555789B1 (ko) | 2023-07-13 |
| RU2020121890A (ru) | 2022-01-04 |
| JP7639053B2 (ja) | 2025-03-04 |
| EP3729832A1 (fr) | 2020-10-28 |
| US11176951B2 (en) | 2021-11-16 |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| EP3729832B1 (fr) | | Processing of a monophonic signal in a 3D audio decoder rendering binaural content |
| EP2042001B1 (fr) | | Binaural spatialization of compression-encoded sound data |
| US20190174246A1 (en) | | Spatial audio processing emphasizing sound sources close to a focal distance |
| WO2011045506A1 (fr) | | Processing of sound data encoded in a sub-band domain |
| FR3007564A3 (fr) | | Audio decoder with program information metadata |
| US12035127B2 (en) | | Spatial audio capture, transmission and reproduction |
| EP3741139A1 (en) | | Associated spatial audio playback |
| US12432516B2 (en) | | Method and system for spatially rendering three-dimensional (3D) scenes |
| CN114631332B (zh) | | Signaling of audio effect metadata in a bitstream |
| FR3011373A1 (fr) | | Portable terminal for personalized high-fidelity listening |
| EP3603076B1 (fr) | | Method for selecting at least one image portion to be downloaded in advance in order to render an audiovisual stream |
| RU2779295C2 (ru) | | Processing of a monophonic signal in a 3D audio decoder providing binaural content |
| FR3040253B1 (fr) | | Method for measuring PHRTF filters of a listener, booth for implementing the method, and methods for obtaining the rendering of a personalized multichannel soundtrack |
| KR20050061808A (ko) | | Apparatus for generating virtual three-dimensional sound and method thereof |
| FR2922404A1 (fr) | | Method for creating an audio environment with n loudspeakers |
| EP3108670B1 (fr) | | Method and device for rendering a multichannel audio signal in a listening zone |
| WO2006075079A1 (fr) | | Method for encoding audio tracks of multimedia content intended for broadcast on mobile terminals |
| WO2009081002A1 (fr) | | Processing of a 3D audio stream as a function of a presence level of spatial components |

Legal Events

| Code | Title | Description |
|---|---|---|
| STAA | Information on the status of an EP patent application or granted EP patent | STATUS: UNKNOWN |
| STAA | Information on the status of an EP patent application or granted EP patent | STATUS: THE INTERNATIONAL PUBLICATION HAS BEEN MADE |
| PUAI | Public reference made under Article 153(3) EPC to a published international application that has entered the European phase | ORIGINAL CODE: 0009012 |
| STAA | Information on the status of an EP patent application or granted EP patent | STATUS: REQUEST FOR EXAMINATION WAS MADE |
| 17P | Request for examination filed | Effective date: 2020-07-03 |
| AK | Designated contracting states | Kind code of ref document: A1. Designated state(s): AL AT BE BG CH CY CZ DE DK EE ES FI FR GB GR HR HU IE IS IT LI LT LU LV MC MK MT NL NO PL PT RO RS SE SI SK SM TR |
| AX | Request for extension of the European patent | Extension state: BA ME |
| DAV | Request for validation of the European patent (deleted) | |
| DAX | Request for extension of the European patent (deleted) | |
| RAP3 | Party data changed (applicant data changed or rights of an application transferred) | Owner name: ORANGE |
| STAA | Information on the status of an EP patent application or granted EP patent | STATUS: EXAMINATION IS IN PROGRESS |
| 17Q | First examination report despatched | Effective date: 2022-08-24 |
| GRAP | Despatch of communication of intention to grant a patent | ORIGINAL CODE: EPIDOSNIGR1 |
| STAA | Information on the status of an EP patent application or granted EP patent | STATUS: GRANT OF PATENT IS INTENDED |
| INTG | Intention to grant announced | Effective date: 2024-03-11 |
| GRAS | Grant fee paid | ORIGINAL CODE: EPIDOSNIGR3 |
| GRAA | (expected) grant | ORIGINAL CODE: 0009210 |
| STAA | Information on the status of an EP patent application or granted EP patent | STATUS: THE PATENT HAS BEEN GRANTED |
| AK | Designated contracting states | Kind code of ref document: B1. Designated state(s): AL AT BE BG CH CY CZ DE DK EE ES FI FR GB GR HR HU IE IS IT LI LT LU LV MC MK MT NL NO PL PT RO RS SE SI SK SM TR |
| REG | Reference to a national code | Ref country code: GB. Ref legal event code: FG4D. Free format text: NOT ENGLISH |
| REG | Reference to a national code | Ref country code: CH. Ref legal event code: EP |
| REG | Reference to a national code | Ref country code: DE. Ref legal event code: R096. Ref document number: 602018071082. Country of ref document: DE |
| U01 | Request for unitary effect filed | Effective date: 2024-07-24 |
| U07 | Unitary effect registered | Designated state(s): AT BE BG DE DK EE FI FR IT LT LU LV MT NL PT RO SE SI. Effective date: 2024-09-02 |
| PG25 | Lapsed in a contracting state [announced via postgrant information from national office to EPO] | Ref country code: HR. LAPSE BECAUSE OF FAILURE TO SUBMIT A TRANSLATION OF THE DESCRIPTION OR TO PAY THE FEE WITHIN THE PRESCRIBED TIME-LIMIT. Effective date: 2024-06-26 |
| PG25 | Lapsed in a contracting state [announced via postgrant information from national office to EPO] | Ref country code: NO. LAPSE BECAUSE OF FAILURE TO SUBMIT A TRANSLATION OF THE DESCRIPTION OR TO PAY THE FEE WITHIN THE PRESCRIBED TIME-LIMIT. Effective date: 2024-09-26; Ref country code: HR. LAPSE BECAUSE OF FAILURE TO SUBMIT A TRANSLATION OF THE DESCRIPTION OR TO PAY THE FEE WITHIN THE PRESCRIBED TIME-LIMIT. Effective date: 2024-06-26; Ref country code: RS. LAPSE BECAUSE OF FAILURE TO SUBMIT A TRANSLATION OF THE DESCRIPTION OR TO PAY THE FEE WITHIN THE PRESCRIBED TIME-LIMIT. Effective date: 2024-09-26 |
| REG | Reference to a national code | Ref country code: ES. Ref legal event code: FG2A. Ref document number: 2986617. Country of ref document: ES. Kind code of ref document: T3. Effective date: 2024-11-12 |
| REG | Reference to a national code | Ref country code: GR. Ref legal event code: EP. Ref document number: 20240402209. Country of ref document: GR. Effective date: 2024-11-11 |
| U20 | Renewal fee for the European patent with unitary effect paid | Year of fee payment: 7. Effective date: 2024-11-21 |
| PGFP | Annual fee paid to national office [announced via postgrant information from national office to EPO] | Ref country code: MC. Payment date: 2024-11-26. Year of fee payment: 7 |
| PGFP | Annual fee paid to national office [announced via postgrant information from national office to EPO] | Ref country code: PL. Payment date: 2024-11-26. Year of fee payment: 7; Ref country code: GR. Payment date: 2024-11-22. Year of fee payment: 7 |
| PGFP | Annual fee paid to national office [announced via postgrant information from national office to EPO] | Ref country code: GB. Payment date: 2024-11-22. Year of fee payment: 7 |
| PG25 | Lapsed in a contracting state [announced via postgrant information from national office to EPO] | Ref country code: IS. LAPSE BECAUSE OF FAILURE TO SUBMIT A TRANSLATION OF THE DESCRIPTION OR TO PAY THE FEE WITHIN THE PRESCRIBED TIME-LIMIT. Effective date: 2024-10-26 |
| PGFP | Annual fee paid to national office [announced via postgrant information from national office to EPO] | Ref country code: CZ. Payment date: 2024-11-26. Year of fee payment: 7; Ref country code: IE. Payment date: 2024-11-22. Year of fee payment: 7 |
| PG25 | Lapsed in a contracting state [announced via postgrant information from national office to EPO] | Ref country code: SK. LAPSE BECAUSE OF FAILURE TO SUBMIT A TRANSLATION OF THE DESCRIPTION OR TO PAY THE FEE WITHIN THE PRESCRIBED TIME-LIMIT. Effective date: 2024-06-26 |
| PG25 | Lapsed in a contracting state [announced via postgrant information from national office to EPO] | Ref country code: SM. LAPSE BECAUSE OF FAILURE TO SUBMIT A TRANSLATION OF THE DESCRIPTION OR TO PAY THE FEE WITHIN THE PRESCRIBED TIME-LIMIT. Effective date: 2024-06-26 |
| PG25 | Lapsed in a contracting state [announced via postgrant information from national office to EPO] | Ref country code: SM. LAPSE BECAUSE OF FAILURE TO SUBMIT A TRANSLATION OF THE DESCRIPTION OR TO PAY THE FEE WITHIN THE PRESCRIBED TIME-LIMIT. Effective date: 2024-06-26; Ref country code: SK. LAPSE BECAUSE OF FAILURE TO SUBMIT A TRANSLATION OF THE DESCRIPTION OR TO PAY THE FEE WITHIN THE PRESCRIBED TIME-LIMIT. Effective date: 2024-06-26; Ref country code: IS. LAPSE BECAUSE OF FAILURE TO SUBMIT A TRANSLATION OF THE DESCRIPTION OR TO PAY THE FEE WITHIN THE PRESCRIBED TIME-LIMIT. Effective date: 2024-10-26 |
| PGFP | Annual fee paid to national office [announced via postgrant information from national office to EPO] | Ref country code: TR. Payment date: 2024-11-27. Year of fee payment: 7 |
| PGFP | Annual fee paid to national office [announced via postgrant information from national office to EPO] | Ref country code: ES. Payment date: 2025-01-02. Year of fee payment: 7 |
| PGFP | Annual fee paid to national office [announced via postgrant information from national office to EPO] | Ref country code: CH. Payment date: 2025-01-01. Year of fee payment: 7 |
| PLBE | No opposition filed within time limit | ORIGINAL CODE: 0009261 |
| STAA | Information on the status of an EP patent application or granted EP patent | STATUS: NO OPPOSITION FILED WITHIN TIME LIMIT |
| 26N | No opposition filed | Effective date: 2025-03-27 |