CN110141239B - Movement intention recognition and device method for lower limb exoskeleton - Google Patents


- Publication number

- CN110141239B (application number CN201910460094.3A)

- Authority

- CN

- China


- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Active


Classifications

- A61B5/1038—Measuring plantar pressure during gait

- A61B5/1118—Determining activity level

- A61B5/389—Electromyography [EMG]

- A61B5/6807—Footwear

- A61B5/7203—Signal processing specially adapted for physiological signals or for diagnostic purposes for noise prevention, reduction or removal

- A61B5/7235—Details of waveform analysis

- A61B5/725—Details of waveform analysis using specific filters therefor, e.g. Kalman or adaptive filters

- A61B5/7253—Details of waveform analysis characterised by using transforms

- A61B5/7267—Classification of physiological signals or data, e.g. using neural networks, statistical classifiers, expert systems or fuzzy systems involving training the classification device

- B25J9/0006—Exoskeletons, i.e. resembling a human figure

Landscapes

- Health & Medical Sciences (AREA)

- Life Sciences & Earth Sciences (AREA)

- Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- Veterinary Medicine (AREA)

- Pathology (AREA)

- Biomedical Technology (AREA)

- Heart & Thoracic Surgery (AREA)

- Medical Informatics (AREA)

- Molecular Biology (AREA)

- Surgery (AREA)

- Animal Behavior & Ethology (AREA)

- General Health & Medical Sciences (AREA)

- Public Health (AREA)

- Biophysics (AREA)

- Artificial Intelligence (AREA)

- Physiology (AREA)

- Signal Processing (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Psychiatry (AREA)

- Dentistry (AREA)

- Oral & Maxillofacial Surgery (AREA)

- Robotics (AREA)

- Mechanical Engineering (AREA)

- Evolutionary Computation (AREA)

- Fuzzy Systems (AREA)

- Mathematical Physics (AREA)

- Measurement Of The Respiration, Hearing Ability, Form, And Blood Characteristics Of Living Organisms (AREA)

Abstract

A motion intention recognition device and method for a lower limb exoskeleton, belonging to the technical field of lower limb exoskeleton robot perception. The movement intention recognition device comprises a lower limb electromyogram signal acquisition module, a sole pressure acquisition module, a lower limb inertia information measurement module, a data acquisition and transmission module and a central controller. The method uses one-versus-one SVM multi-classification of plantar pressure signals to retrieve a gait trajectory from an offline database, uses a CNN online joint angle estimation model to predict the joint angle trajectory from electromyographic signals in real time, and fuses the two trajectories with a variable gain that depends on the degree of muscle fatigue, providing an accurate expected motion trajectory for driving the lower limb joints of the exoskeleton robot. The invention combines the lead time of electromyographic signals with the stability of plantar pressure signals, greatly improves the accuracy and real-time performance of movement intention recognition, and provides a strong guarantee of the safety and assistance efficiency of the exoskeleton robot.

Description

Technical Field

The invention belongs to the technical field of lower limb exoskeleton robot perception, and particularly relates to a device and a method for recognizing a motion intention of a lower limb exoskeleton robot.

Background

With the rapid development of modern science and technology, the level of weaponry has become an increasingly important guarantee of victory in modern warfare, and the role of individual-soldier combat exoskeletons in military operations is steadily growing. Meanwhile, population aging is becoming increasingly serious in modern society; impaired mobility of the legs and feet is one of the major problems in the lives of the elderly, so lightweight walking-assistance exoskeletons are of great significance for improving their quality of life.

As a typical human-robot cooperative robot, the exoskeleton robot must recognize human movement intention accurately and quickly, which has long been a major difficulty in research. At present, three main types of motion intention recognition methods are applied to lower limb exoskeleton robots: 1. Gait phase recognition based on plantar pressure, which can only divide the human gait cycle into phases; the phase can then serve as a switching signal for a compliance control algorithm, but different road conditions and different movements cannot be recognized. 2. Movement intention recognition based on surface electromyographic signals (sEMG): sEMG precedes the execution of an action by a certain lead time, but the signal contains too much movement information, the prior art focuses mainly on the degree of muscle activation, and the recognition models are complex. 3. Human motion intention recognition based on force sensors, which measure the interaction force between the human body and the exoskeleton and infer the motion intention from its magnitude; the recognition performance is good, but multidimensional force sensors are expensive and bulky, so they must be fixed on the exoskeleton robot, which increases cost.

Disclosure of Invention

To address these shortcomings of the prior art, the invention provides a method and a device for recognizing the movement intention of a lower limb exoskeleton.

The technical scheme of the invention is as follows:

A movement intention recognition device for a lower limb exoskeleton comprises a lower limb electromyographic signal acquisition module, a sole pressure acquisition module, a lower limb inertia information measurement module, a data acquisition and transmission module and a central controller;

the sole pressure acquisition module is fixed on the sensing shoe of the exoskeleton and comprises sole pressure sensors and a sole pressure transmitting module; the sole pressure sensors are three thin-film pressure sensors fixed on the sensing shoe at positions corresponding to the front end of the wearer's big toe, the end of the little toe and the heel; the sole pressure transmitting module uses a full-bridge circuit to detect the resistance change of the pressure sensors and a second-order low-pass filter circuit to amplify and filter the voltage signal, and its output is connected to the data acquisition and transmission module;

the lower limb electromyographic signal acquisition module mainly comprises Ag/AgCl electrodes, a preamplifier circuit, a power-line notch circuit, low-pass and high-pass filter circuits, a secondary amplification circuit and a voltage level-shifting circuit, and acquires the surface electromyographic signals of six muscle groups involved in thigh and shank joint movement;

the lower limb inertial information measuring module comprises two inertial sensors, mounted on the wearer's shank and thigh, for acquiring the acceleration, angular velocity and angle of the wearer's lower limb; the outputs of the inertial sensors are connected to the data acquisition and transmission module;

the data acquisition and transmission module comprises a microcontroller and a bus communication module and is fixed at the ankle of the exoskeleton robot; it acquires and preprocesses the myoelectric signals, plantar pressure signals and human-machine interaction force signals, and transmits the preprocessed signals to the industrial personal computer module over the CAN bus;

the central controller receives the data sent by the data acquisition and transmission module over the CAN bus, processes the sensor signals in real time, finds the best match among predefined human gaits and road conditions, and recognizes the movement intention of the wearer in real time.

A movement intention identification method for a lower extremity exoskeleton, comprising the steps of:

step 1: the wearer puts on the movement intention recognition device for the lower limb exoskeleton and performs different gait actions under different road conditions, while the sole pressure acquisition module and the lower limb electromyogram signal acquisition module synchronously acquire the wearer's sole pressure signals and surface electromyogram signals;

step 2: the collected plantar pressure signals and surface electromyogram signals undergo data preprocessing and feature extraction, and the extracted features are fed into a classifier as the basis for distinguishing different actions under different road conditions, to train human gait recognition;

step 2.1: the acquired plantar pressure signals are denoised with a Butterworth low-pass filter, and the time-domain root mean square feature and the power spectral density feature are extracted from the denoised signals;
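A minimal sketch of the preprocessing in step 2.1, assuming a second-order Butterworth low-pass designed with the bilinear transform and root mean square values taken over non-overlapping windows. The text gives neither the filter order, the cutoff frequency, the sampling rate nor the window length, so those are hypothetical choices, and the function names are illustrative only.

```python
import math

import numpy as np


def butter2_lowpass(fc: float, fs: float):
    """Second-order Butterworth low-pass coefficients via the bilinear transform
    (with frequency pre-warping). Returns (b, a) with a[0] == 1."""
    k = math.tan(math.pi * fc / fs)          # pre-warped analog cutoff
    q = math.sqrt(2.0)                       # Butterworth: 1/Q = sqrt(2)
    norm = 1.0 / (1.0 + q * k + k * k)
    b0 = k * k * norm
    b = np.array([b0, 2.0 * b0, b0])
    a = np.array([1.0, 2.0 * (k * k - 1.0) * norm, (1.0 - q * k + k * k) * norm])
    return b, a


def iir_filter(b, a, x):
    """Causal direct-form I filter: y[n] = sum_i b[i] x[n-i] - sum_j a[j] y[n-j]."""
    y = np.zeros(len(x))
    for n in range(len(x)):
        acc = 0.0
        for i in range(len(b)):
            if n - i >= 0:
                acc += b[i] * x[n - i]
        for j in range(1, len(a)):
            if n - j >= 0:
                acc -= a[j] * y[n - j]
        y[n] = acc
    return y


def windowed_rms(x, win: int):
    """Root mean square of each non-overlapping window of `win` samples."""
    x = np.asarray(x, dtype=float)
    n_win = len(x) // win
    frames = x[: n_win * win].reshape(n_win, win)
    return np.sqrt(np.mean(frames ** 2, axis=1))
```

For instance, `b, a = butter2_lowpass(5.0, 100.0)` followed by `windowed_rms(iir_filter(b, a, signal), 20)` would give one RMS feature per 20-sample window of a hypothetical `signal`; for offline analysis a forward-backward (zero-phase) pass could be used instead of the causal filter shown.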

step 2.2: the extracted time-domain root mean square and power spectral density features are input into a classifier; a Support Vector Machine (SVM) is used as the classifier with one-versus-one SVM multi-classification; the input of the classifier is the feature vectors of the plantar pressure signals and surface electromyographic signals for different dynamic actions under different road conditions, and the output is the classification labels of these actions under the different road conditions;

step 2.3: selecting a corresponding hip-knee joint angle curve from an off-line gait database as an expected input angle of the identification device according to the classification label;
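Step 2.3 amounts to a table lookup keyed by the classifier label. The sketch below assumes, hypothetically, that each database entry stores hip and knee curves sampled on a normalized gait-cycle phase grid and that the current gait phase is available (the real-time stage described later mentions obtaining both the gait motion and the gait phase). The labels and angle values are placeholders, not data from the patent.

```python
import numpy as np

# Hypothetical offline gait database: for each classifier label, hip and knee
# angle curves (degrees) sampled at evenly spaced points of one normalized gait
# cycle. The numbers are placeholders, not measured data.
PHASE_GRID = np.linspace(0.0, 1.0, 5)
GAIT_DB = {
    "walk_level": {"hip": np.array([20.0, 5.0, -10.0, 10.0, 20.0]),
                   "knee": np.array([5.0, 15.0, 10.0, 60.0, 5.0])},
    "stairs_up": {"hip": np.array([50.0, 30.0, 5.0, 40.0, 50.0]),
                  "knee": np.array([60.0, 30.0, 10.0, 90.0, 60.0])},
}


def expected_angles(label: str, phase: float):
    """Return the (hip, knee) expected angles for a gait label at a gait phase."""
    curves = GAIT_DB[label]          # raises KeyError for labels not in the database
    p = phase % 1.0                  # wrap the phase into a single gait cycle
    hip = float(np.interp(p, PHASE_GRID, curves["hip"]))
    knee = float(np.interp(p, PHASE_GRID, curves["knee"]))
    return hip, knee
```

Linear interpolation between stored samples is only one option; a denser database or spline interpolation would serve equally well.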

step 3: the surface electromyographic signals of six muscles of the wearer's lower limb are collected by the lower limb electromyographic signal acquisition module and, after data processing and feature extraction, the feature quantities are fed into a CNN (convolutional neural network) online movement intention recognition model to predict the joint posture angles in real time;

step 3.1: denoising is performed with an IIR notch filter and wavelet packet transform, further improving on the denoising already achieved by the hardware filters;
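A sketch of the two software denoising stages in step 3.1. The text does not specify the notch design, the wavelet, the decomposition depth or the thresholding rule, so this example assumes a second-order pole-zero IIR notch at the 50 Hz mains frequency (pole radius 0.95), a full Haar wavelet packet tree, and soft thresholding of every terminal node except the lowest-frequency one. All names and parameters are hypothetical.

```python
import numpy as np


def notch_coeffs(f0: float, fs: float, r: float = 0.95):
    """Second-order IIR notch: zeros on the unit circle at +/-f0, poles at radius r.
    The numerator is scaled so that the DC gain is exactly 1."""
    w0 = 2.0 * np.pi * f0 / fs
    c = np.cos(w0)
    a = np.array([1.0, -2.0 * r * c, r * r])
    b = np.array([1.0, -2.0 * c, 1.0])
    b *= a.sum() / b.sum()                       # H(z=1) = sum(b) / sum(a) = 1
    return b, a


def iir_filter(b, a, x):
    """Causal direct-form I filter with a[0] == 1."""
    y = np.zeros(len(x))
    for n in range(len(x)):
        acc = sum(b[i] * x[n - i] for i in range(len(b)) if n - i >= 0)
        acc -= sum(a[j] * y[n - j] for j in range(1, len(a)) if n - j >= 0)
        y[n] = acc
    return y


def haar_split(x):
    """One orthonormal Haar analysis step: (approximation, detail)."""
    even, odd = x[0::2], x[1::2]
    return (even + odd) / np.sqrt(2.0), (even - odd) / np.sqrt(2.0)


def haar_merge(approx, detail):
    """Inverse of haar_split."""
    out = np.empty(2 * len(approx))
    out[0::2] = (approx + detail) / np.sqrt(2.0)
    out[1::2] = (approx - detail) / np.sqrt(2.0)
    return out


def wp_denoise(x, level: int, thr: float):
    """Full Haar wavelet-packet tree down to `level`; soft-threshold every terminal
    node except the all-lowpass one, then reconstruct."""
    def rec(node, depth, lowpass_only):
        if depth == level:
            if lowpass_only:
                return node
            return np.sign(node) * np.maximum(np.abs(node) - thr, 0.0)
        approx, detail = haar_split(node)
        return haar_merge(rec(approx, depth + 1, lowpass_only),
                          rec(detail, depth + 1, False))

    x = np.asarray(x, dtype=float)
    if len(x) % (2 ** level):
        raise ValueError("signal length must be a multiple of 2**level")
    return rec(x, 0, True)
```

With a zero threshold the packet stage reconstructs its input exactly, which is a convenient sanity check before tuning the threshold on real sEMG recordings.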

step 3.2: building a Convolutional Neural Network (CNN) online joint angle estimation model based on an electromyographic signal, inputting the extracted features into the model, and predicting the joint motion angle in real time;

step 4: the fatigue degree of each muscle group is calculated from the surface electromyographic signals, and the joint angles are fused according to the current physiological state of the human body;

step 4.1: the myoelectric signals of the semitendinosus and rectus femoris acquired by the lower limb myoelectric signal acquisition module are preprocessed and fed into a time-varying AR model, whose AR characteristic parameters reflect the current fatigue degree of the muscles;

step 4.2: according to the current fatigue degree of the muscles, the gait trajectory obtained from plantar pressure and the joint angles predicted from the surface electromyogram signal are fused by a centralized fusion method whose weights are updated according to the physiological state of the human body, giving the final expected angle of each joint of the exoskeleton robot.

In step 2.1, the root mean square feature is calculated as:

$$F_{\mathrm{RMS}}=\sqrt{\frac{1}{T}\int_{0}^{T}F^{2}(t)\,dt}$$

where t is time, T is the sampling period, and F(t) is the signal value at time t;

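the power spectral density feature is defined as:

$$P(\omega)=\lim_{T\to\infty}\frac{1}{T}\,\mathbb{E}\left[\left|X_{T}(\omega)\right|^{2}\right]$$

where ω is the frequency and X_T(ω) is the continuous Fourier transform of the signal truncated to length T;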

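The power spectral density is estimated with the Burg maximum entropy spectrum method: the model order m is increased step by step from 1, and at each order the AR coefficients are updated with the Levinson recursion under the Burg constraint (the reflection coefficient minimizes the sum of the forward and backward prediction error powers); the model parameters are finally determined and the power spectral density is computed. The formulas are as follows:

$$f_{0}(n)=g_{0}(n)=x(n),\qquad P_{0}=\frac{1}{N}\sum_{n=0}^{N-1}\left|x(n)\right|^{2}$$

$$f_{m}(n)=f_{m-1}(n)+K_{m}\,g_{m-1}(n-1),\qquad g_{m}(n)=K_{m}^{*}\,f_{m-1}(n)+g_{m-1}(n-1)$$

$$K_{m}=\frac{-2\sum_{n=m}^{N-1}f_{m-1}(n)\,g_{m-1}^{*}(n-1)}{\sum_{n=m}^{N-1}\left[\left|f_{m-1}(n)\right|^{2}+\left|g_{m-1}(n-1)\right|^{2}\right]}$$

$$a_{m}(k)=a_{m-1}(k)+K_{m}\,a_{m-1}^{*}(m-k),\quad k=1,\dots,m-1;\qquad a_{m}(m)=K_{m}$$

prediction error power: $P_{m}=(1-|K_{m}|^{2})P_{m-1}$;

$$\hat{P}(\omega)=\frac{P_{p}}{\left|1+\sum_{k=1}^{p}a_{p}(k)\,e^{-j\omega k}\right|^{2}}$$

where n is the sampling time point; x(n) is the signal value at sampling point n; N is the number of samples; f_m(n) and g_m(n) are the forward and backward prediction errors, i.e. the two outputs of the m-th order lattice filter at sampling point n; K_m is the reflection coefficient; a_m(k) are the AR coefficients of order m; p is the final model order.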
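The Burg recursion for the maximum entropy spectrum can be sketched in NumPy as follows. This is a minimal real-valued implementation assuming a zero-mean input; the PSD is returned only up to a constant scale factor (the 1/fs normalization and the one-sided/two-sided convention are omitted), and the function names are illustrative.

```python
import numpy as np


def burg_ar(x, order: int):
    """Burg estimate of AR coefficients a = [1, a_1, ..., a_p] for the model
    x(n) + sum_k a_k x(n-k) = e(n), plus the final prediction error power P_p."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    f = x.copy()                     # forward errors,  f_0(n) = x(n)
    g = x.copy()                     # backward errors, g_0(n) = x(n)
    a = np.array([1.0])
    p = np.dot(x, x) / n             # P_0: signal power
    for m in range(1, order + 1):
        fm = f[m:]                   # f_{m-1}(n),   n = m .. N-1
        gm = g[m - 1:-1]             # g_{m-1}(n-1), n = m .. N-1
        k = -2.0 * np.dot(fm, gm) / (np.dot(fm, fm) + np.dot(gm, gm))
        a = np.append(a, 0.0)
        a = a + k * a[::-1]          # Levinson: a_m(i) = a_{m-1}(i) + K_m a_{m-1}(m-i)
        p *= 1.0 - k * k             # P_m = (1 - K_m^2) P_{m-1}
        f_new = fm + k * gm          # f_m(n) = f_{m-1}(n) + K_m g_{m-1}(n-1)
        g_new = k * fm + gm          # g_m(n) = K_m f_{m-1}(n) + g_{m-1}(n-1)
        f[m:] = f_new
        g[m:] = g_new
    return a, p


def burg_psd(a, p, freqs, fs):
    """Maximum entropy PSD P_p / |A(e^{jw})|^2 at `freqs` (Hz), up to scale."""
    w = 2.0 * np.pi * np.asarray(freqs, dtype=float) / fs
    k = np.arange(len(a))
    resp = np.exp(-1j * np.outer(w, k)) @ a      # A(e^{jw}) = sum_k a_k e^{-jwk}
    return p / np.abs(resp) ** 2
```

Because the Burg reflection coefficients always satisfy |K_m| ≤ 1, the fitted model is guaranteed to be stable, which is one reason the method suits short analysis windows.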

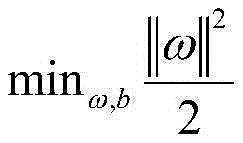

In step 2.2, the separating hyperplane of the SVM classifier is $\omega^{T}x+b=0$, where ω is the weight vector and b is the bias (offset);

let

$$\begin{cases}\omega^{T}x_{i}+b\ge +1, & y_{i}=+1\\ \omega^{T}x_{i}+b\le -1, & y_{i}=-1\end{cases}$$

the training samples closest to the hyperplane, for which the equality holds, are called support vectors;

the sum of the distances from two support vectors of different classes to the hyperplane is $r=\frac{2}{\lVert\omega\rVert}$. Finding the maximum-margin separating hyperplane means finding ω and b that maximize r, i.e.

$$\max_{\omega,b}\ \frac{2}{\lVert\omega\rVert}\quad \text{s.t.}\ y_{i}(\omega^{T}x_{i}+b)\ge 1,\ i=1,2,\dots,m$$

or, equivalently,

$$\min_{\omega,b}\ \frac{1}{2}\lVert\omega\rVert^{2}\quad \text{s.t.}\ y_{i}(\omega^{T}x_{i}+b)\ge 1,\ i=1,2,\dots,m$$

Solving this problem gives the model corresponding to the maximum-margin separating hyperplane:

$$f(x)=\omega^{T}x+b$$

One-versus-one SVM multi-classification:

an SVM sub-classifier is built for every pair of different classes, so for k classes there are k(k-1)/2 classifiers in total; when the classifier for classes i and j is constructed, the training samples of class i are labeled +1 and those of class j are labeled -1;

during testing, the test sample x is evaluated by every classifier using the decision function

$$f_{ij}(x)=\operatorname{sgn}\left(\omega_{ij}^{T}\phi(x)+b_{ij}\right)=\operatorname{sgn}\left(\sum_{l}\alpha_{l}\,y_{l}\,K(x,x_{l})+b_{ij}\right)$$

If x is assigned to class i, the vote of class i is increased by 1; if x is assigned to class j, the vote of class j is increased by 1. The votes of all classes are accumulated, and the class with the highest score is taken as the class of the test sample. In the formula, sgn(·) is the sign function; ω_ij and b_ij are the weight vector and bias of the classifier separating classes i and j; φ(x) is the feature-space mapping; the sum runs over the training samples x_l of classes i and j, with labels y_l and Lagrange multipliers α_l; K(x, x_l) is the kernel function.
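The one-versus-one voting scheme can be illustrated with linear sub-classifiers. This sketch does not reproduce the patent's kernel SVM or its training settings: each pairwise soft-margin linear SVM is trained with the Pegasos stochastic sub-gradient method, a swapped-in solver chosen only to keep the example dependent on NumPy alone, and the bias is folded into the weight vector. The regularization constant, epoch count and function names are assumptions.

```python
import itertools

import numpy as np


def train_linear_svm(x, y, lam=0.01, epochs=50, seed=0):
    """Soft-margin linear SVM trained with Pegasos sub-gradient steps.
    Labels y must be +1/-1. A constant 1 is appended to each sample so the last
    weight acts as the bias b (it is therefore also regularized)."""
    rng = np.random.default_rng(seed)
    xa = np.hstack([x, np.ones((len(x), 1))])
    w = np.zeros(xa.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(xa)):
            t += 1
            eta = 1.0 / (lam * t)
            if y[i] * (w @ xa[i]) < 1.0:          # margin violated: hinge sub-gradient
                w = (1.0 - eta * lam) * w + eta * y[i] * xa[i]
            else:
                w = (1.0 - eta * lam) * w
    return w[:-1], w[-1]                          # (omega, b)


def train_ovo(x, labels, **kw):
    """One sub-classifier per class pair (i, j): class i -> +1, class j -> -1."""
    classes = sorted(set(labels))
    labels = np.asarray(labels)
    models = {}
    for ci, cj in itertools.combinations(classes, 2):
        mask = (labels == ci) | (labels == cj)
        y = np.where(labels[mask] == ci, 1.0, -1.0)
        models[(ci, cj)] = train_linear_svm(x[mask], y, **kw)
    return classes, models


def predict_ovo(classes, models, sample):
    """Majority vote over the k(k-1)/2 pairwise decisions sgn(omega^T x + b)."""
    votes = {c: 0 for c in classes}
    for (ci, cj), (w, b) in models.items():
        votes[ci if w @ sample + b >= 0.0 else cj] += 1
    return max(classes, key=lambda c: votes[c])   # ties go to the earliest class
```

A kernel SVM would replace `w @ sample + b` with the kernel expansion in the decision function above; the voting logic is unchanged.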

Step 3.2 is specifically as follows: a sliding window is applied to the collected sEMG time series, and the data in each window are fed into the neural network for recognition after feature extraction; the delay requirement of human motion intention recognition is met by setting the step length by which the time window slides to 5 ms; after the model is trained offline, the sEMG sequence is input online to recognize the human motion intention, i.e., to predict the joint motion angles;
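The sliding-window segmentation in step 3.2 can be sketched as below, reading the 5 ms figure as the step between successive windows. The sampling rate and window length (1 kHz and 100 ms in the usage note) are hypothetical, as is the channel count of six, which simply mirrors the six muscles mentioned in step 3.

```python
import numpy as np


def sliding_windows(x, fs: float, win_ms: float, step_ms: float):
    """Split a (samples, channels) sEMG array into overlapping windows.
    Returns an array of shape (n_windows, win_len, channels)."""
    x = np.asarray(x, dtype=float)
    if x.ndim == 1:
        x = x[:, None]                               # treat 1-D input as one channel
    win = int(round(win_ms * fs / 1000.0))
    step = int(round(step_ms * fs / 1000.0))
    if win < 1 or step < 1 or len(x) < win:
        raise ValueError("window/step too short or signal shorter than one window")
    starts = range(0, len(x) - win + 1, step)
    return np.stack([x[s:s + win] for s in starts])
```

With `fs=1000`, `win_ms=100` and `step_ms=5`, a 1000-sample, 6-channel recording yields 181 windows of shape (100, 6); online, a new window becomes available every 5 ms.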

the convolutional neural network (CNN) consists of an input layer, convolutional layers, activation functions, pooling layers and a fully connected layer; the input data are scanned by different convolution kernels, each of which responds to a different numerical feature, and the convolution operation produces a number of feature maps recording how strongly each feature occurs; because convolution kernels are shared, the CNN handles high-dimensional data without difficulty; features do not need to be selected manually, since they are obtained once the weights are trained.
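To make the layer sequence concrete, here is a forward pass of a small two-stage convolution-pooling network, in the spirit of the double convolution-pooling structure of FIG. 16, mapping one sEMG window to joint angle estimates. Only inference is shown; offline training is omitted, the weights are random, and the kernel counts, kernel lengths, pooling size, window length and the two outputs (hip and knee) are hypothetical choices.

```python
import numpy as np


def conv1d(x, kernels, bias):
    """Valid 1-D convolution (cross-correlation, as in most CNN libraries).
    x: (length, in_ch); kernels: (n_k, k_len, in_ch); returns (length-k_len+1, n_k)."""
    n_k, k_len, _ = kernels.shape
    out_len = x.shape[0] - k_len + 1
    out = np.empty((out_len, n_k))
    for t in range(out_len):
        patch = x[t:t + k_len]                            # (k_len, in_ch)
        out[t] = np.tensordot(kernels, patch, axes=([1, 2], [0, 1])) + bias
    return out


def relu(x):
    return np.maximum(x, 0.0)


def max_pool(x, size):
    """Non-overlapping max pooling along time; drops an incomplete tail."""
    n = x.shape[0] // size
    return x[: n * size].reshape(n, size, x.shape[1]).max(axis=1)


def init_params(rng, in_ch=6, win=100, n_out=2):
    """Random weights for the hypothetical layer sizes used in this sketch."""
    flat = (((win - 4) // 2 - 4) // 2) * 16                # length after both stages x 16
    return {"k1": rng.normal(0.0, 0.1, (8, 5, in_ch)), "b1": np.zeros(8),
            "k2": rng.normal(0.0, 0.1, (16, 5, 8)), "b2": np.zeros(16),
            "w_fc": rng.normal(0.0, 0.1, (n_out, flat)), "b_fc": np.zeros(n_out)}


def cnn_forward(window, params):
    """Two conv-ReLU-pool stages, then a fully connected layer (one output per joint)."""
    h = max_pool(relu(conv1d(window, params["k1"], params["b1"])), 2)
    h = max_pool(relu(conv1d(h, params["k2"], params["b2"])), 2)
    return params["w_fc"] @ h.ravel() + params["b_fc"]
```

Every kernel slides over the whole window with the same weights, which is the weight sharing the text refers to; in practice such a network would be built and trained with a deep learning framework rather than hand-written NumPy.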

In step 4.1, the muscle fatigue degree is evaluated with time-varying AR model parameters: the characteristic parameters of the electromyographic signals are extracted by the time-varying parameter model method with order p = 4 and Legendre basis functions, and a p × N time-varying AR characteristic parameter matrix (N being the number of samples) is computed by recursive least squares; the fatigue state of the muscle is then determined from these characteristic parameters, which provides a basis for evaluating muscle fatigue.
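A sketch of the time-varying AR estimation in step 4.1: each coefficient a_k(n) is expanded on Legendre polynomials and the expansion coefficients are estimated by recursive least squares, giving the p × N coefficient matrix. Order p = 4 follows the text; the basis degree (2), the forgetting factor, the initialization constant and the function names are assumptions, and the subsequent fatigue decision rule is not shown because the text does not specify it.

```python
import numpy as np


def legendre_basis(n_samples: int, degree: int):
    """Legendre polynomials L_0..L_degree on n_samples points in [-1, 1], built with
    Bonnet's recursion (j+1) L_{j+1} = (2j+1) t L_j - j L_{j-1}."""
    t = np.linspace(-1.0, 1.0, n_samples)
    basis = [np.ones_like(t), t]
    for j in range(1, degree):
        basis.append(((2 * j + 1) * t * basis[j] - j * basis[j - 1]) / (j + 1))
    return np.array(basis[: degree + 1])             # (degree + 1, N)


def tvar_rls(x, p=4, degree=2, lam=1.0, delta=1e3):
    """Time-varying AR(p): x(n) = sum_k a_k(n) x(n-k) + e(n), with
    a_k(n) = sum_j c_kj L_j(n). The constants c_kj are estimated by recursive
    least squares; returns the p x N matrix of a_k(n)."""
    x = np.asarray(x, dtype=float)
    n_samples = len(x)
    basis = legendre_basis(n_samples, degree)
    q = degree + 1
    theta = np.zeros(p * q)                          # stacked c_kj, row-major in (k, j)
    P = delta * np.eye(p * q)                        # inverse correlation matrix
    for n in range(p, n_samples):
        lags = x[n - p:n][::-1]                      # x(n-1), ..., x(n-p)
        phi = np.outer(lags, basis[:, n]).ravel()    # regressors x(n-k) L_j(n)
        gain = P @ phi / (lam + phi @ P @ phi)
        theta = theta + gain * (x[n] - phi @ theta)
        P = (P - np.outer(gain, phi @ P)) / lam
    return theta.reshape(p, q) @ basis               # (p, N): a_k(n)
```

Tracking how these coefficients drift over a sustained contraction is what would then feed the fatigue assessment.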

In step 4.2, the fusion uses a centralized fusion method whose weights are updated according to the physiological state of the human body. During fusion, the root mean square value of the trajectory data points is calculated, and the weight associated with the physiological state is α; the intermediate trajectory is expressed as $\alpha_1 P_1 + \alpha_2 P_2$, and the weight α is updated according to the physiological state. When the physiological state is normal, i.e., the wearer's autonomous movement ability is high, α is small, the sEMG-based real-time prediction dominates, and the expected trajectory is close to the sEMG-based real-time predicted angle trajectory; when the human body is fatigued and the wearer's autonomous movement ability is weak, α is large, and the expected trajectory is close to the historical database trajectory selected from plantar pressure.
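The weighted fusion in step 4.2 can be sketched as a convex combination of the two trajectories. The text does not say how α is computed from the fatigue degree or how α1 and α2 relate; this example assumes α1 = α and α2 = 1 - α, and a hypothetical linear mapping from a normalized fatigue index in [0, 1] to α in [0.2, 0.8].

```python
import numpy as np


def fusion_weight(fatigue: float, alpha_min=0.2, alpha_max=0.8):
    """Hypothetical mapping from a normalized fatigue index in [0, 1] to the weight
    alpha on the plantar-pressure (database) trajectory: low fatigue -> small alpha."""
    f = min(max(fatigue, 0.0), 1.0)                   # clip the index to [0, 1]
    return alpha_min + (alpha_max - alpha_min) * f


def fuse_trajectories(theta_db, theta_emg, fatigue: float):
    """alpha_1 * P_1 + alpha_2 * P_2 with P_1 the database trajectory, P_2 the
    sEMG-predicted trajectory, alpha_1 = alpha and alpha_2 = 1 - alpha (assumed)."""
    alpha = fusion_weight(fatigue)
    return alpha * np.asarray(theta_db, float) + (1.0 - alpha) * np.asarray(theta_emg, float)
```

Keeping α strictly between 0 and 1 means neither source is ever discarded entirely, so the expected trajectory degrades gracefully if one estimate is momentarily poor.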

The beneficial effects of the invention are as follows:

the invention uses plantar pressure sensors and surface electromyographic sensors as the devices for recognizing the motion intention for the exoskeleton robot; one-versus-one SVM multi-classification of the plantar pressure signals retrieves a gait trajectory from the offline database, a CNN online joint angle estimation model predicts the joint angle trajectory from the electromyographic signals in real time, and the two trajectories are fused with a variable gain according to the muscle fatigue degree, providing an accurate expected motion trajectory for driving the lower limb joints of the exoskeleton robot. The invention combines the lead time of electromyographic signals with the stability of plantar pressure signals, greatly improves the accuracy and real-time performance of movement intention recognition, and provides a strong guarantee of the safety and assistance efficiency of the exoskeleton robot. The invention has a wide range of applications, mainly including individual combat exoskeletons, medical rehabilitation exoskeletons and walking-assistance exoskeletons.

Drawings

FIG. 1 is a schematic diagram of the overall architecture of a lower extremity exoskeleton system;

FIG. 2 is a schematic view of a lower extremity exoskeleton sensing module in a mounted position;

FIG. 3 is a schematic block diagram of a surface electromyographic signal acquisition circuit;

FIG. 4 shows the installation positions of the thin-film pressure sensors;

FIG. 5 shows the characteristic curves of the thin-film pressure sensor, where the curve represents force versus resistance and the straight line represents force versus conductance;

FIG. 6 is a lower limb inertia measurement module;

FIG. 7 is a schematic diagram of the STM32F103C8T6 microcontroller;

FIG. 8 is a CAN bus communication block diagram of the exoskeleton system;

FIG. 9 is an exoskeleton system movement intent recognition flow diagram;

FIG. 10 shows plantar pressure signals for different actions: (a) running; (b) walking; (c) going upstairs; (d) going downstairs;

FIG. 11 is a distribution plot of the root mean square feature values;

FIG. 12 is a power spectral density profile;

FIG. 13 is a one-to-one SVM multi-classification model testing process;

FIG. 14 is a graph of joint angles for different dynamic motions; wherein (a) running; (b) walking; (c) going upstairs; (d) going down stairs; in the figure, the upper curve represents the knee joint angle and the lower curve represents the hip joint angle;

FIG. 15 shows the positions of the muscle groups from which sEMG signals are acquired;

FIG. 16 is a diagram of a double layer convolution-pooling structure CNN;

FIG. 17 is a diagram of the effect of denoised electromyographic signals;

FIG. 18 is a time-varying AR model process;

FIG. 19 is a process diagram of a desired trajectory fusion algorithm.

In the figure: 1, a lower limb electromyogram signal acquisition module; 2, a plantar pressure acquisition module; 3, a lower limb inertia information measuring module; 4, a data acquisition and transmission module; 5 a central controller.

Detailed Description

The following detailed description of embodiments of the present invention is provided in connection with the accompanying drawings and examples. The following examples are intended to illustrate the invention but are not intended to limit the scope of the invention.

The lower limb assistance exoskeleton system comprises an exoskeleton bionic mechanism system, a sensing system, a control system, a power supply system and a driving system, as shown in FIG. 1. The bionic mechanism system comprises a bionic lower limb structure, a bionic upper limb structure and a bionic back support; the sensing system covers the acquisition of plantar pressure signals, leg electromyographic signals and joint posture signals, and the recognition of movement intention; the control system comprises a motion planning algorithm, a balance control algorithm and a servo control algorithm; the power supply system comprises a power management system and an energy interface; the driving system comprises servo motors and servo drivers.

This embodiment provides a movement intention recognition system for a lower limb assistance exoskeleton, which serves as the input layer of the lower limb exoskeleton system. It acquires human lower limb movement data through plantar thin-film pressure sensors and lower limb myoelectric sensors; the data acquisition and transmission module transmits the plantar pressure signals and lower limb myoelectric signals to the main controller over the CAN (controller area network) bus; the main controller completes gait recognition and joint angle prediction and sends the expected angles to the driving system over the CAN bus, and the driving system drives the exoskeleton body and the wearer to complete the corresponding actions, thereby providing assistance.

This embodiment provides a method and a device for recognizing the movement intention of a lower limb assistance exoskeleton. The sensing system comprises several sensors and modules; the lower limb exoskeleton sensing modules are mounted as shown in FIG. 2 and comprise a lower limb electromyogram signal acquisition module 1, a sole pressure acquisition module 2, a lower limb inertia information measurement module 3, a data acquisition and transmission module 4 and a central controller 5.

The design of the lower limb electromyographic signal acquisition module must take into account that surface electromyographic signals are weak and have a low signal-to-noise ratio, so the hardware acquisition circuit comprises a preamplifier circuit, a power-line notch circuit, second-order high-pass and low-pass filter circuits, and a secondary amplification and voltage level-shifting circuit. The preamplifier uses an instrumentation amplifier with a high common-mode rejection ratio, high input impedance and high precision, which suppresses common-mode interference while amplifying the signal. The surface electromyographic signal is a low-frequency signal whose spectrum is concentrated mainly between 10 Hz and 500 Hz, so high-pass and low-pass filters are built to remove interference outside this range; in addition, a power-line notch circuit based on the UAF42 chip eliminates power-frequency electromagnetic interference picked up from the environment and the skin surface. The overall gain for the surface electromyographic signal needs to be about 1000, so a variable-gain secondary amplification circuit is added, and the circuit finally outputs a clean, amplified surface electromyographic signal. The functional block diagram of the surface electromyographic signal acquisition circuit is shown in FIG. 3.

The plantar pressure measuring module is divided into a sole pressure acquisition module and a sole pressure transmitting module. The sole pressure acquisition module uses three thin-film pressure sensors fixed on the sensing shoe at positions corresponding to the front end of the wearer's big toe, the end of the little toe and the heel; the installation positions are shown in FIG. 4. The sensor is the IMS-C20 thin-film pressure sensor produced by I-Motion group and behaves as a piezoresistor in the circuit. With no external load the sensor is in a high-resistance state, and its resistance decreases when external pressure is applied. Pressure is inversely proportional to resistance but proportional to conductance, so conductance is used for calibration; the relationship between resistance, conductance and pressure, i.e., the characteristic curve of the thin-film pressure sensor, is shown in FIG. 5. The sole pressure transmitting module uses a full-bridge circuit to detect the resistance change of the pressure sensor, and a second-order low-pass filter circuit amplifies and filters the voltage signal.
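Because force is proportional to conductance, calibration can reduce to a straight-line fit on G = 1/R. The sketch below assumes the bridge output has already been converted to sensor resistance and that a linear force-conductance model with an offset term is adequate; the calibration data in the usage note are synthetic, not measured IMS-C20 values, and the function names are illustrative.

```python
import numpy as np


def fit_conductance_calibration(loads_n, resistances_ohm):
    """Least-squares fit F = k * G + c, where G = 1/R is the sensor conductance."""
    g = 1.0 / np.asarray(resistances_ohm, dtype=float)
    k, c = np.polyfit(g, np.asarray(loads_n, dtype=float), 1)   # slope, intercept
    return k, c


def force_from_resistance(r_ohm, k, c):
    """Convert measured resistance(s) to force with the fitted linear model."""
    return k / np.asarray(r_ohm, dtype=float) + c
```

For example, fitting loads of 20, 40, 100 and 200 N measured at 10, 5, 2 and 1 kΩ gives k ≈ 2 × 10^5 N·Ω and c ≈ 0, so a reading of 4 kΩ maps to about 50 N. An unloaded, very high resistance maps to a force near c, consistent with the high-resistance no-load state described above.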

The lower limb inertia measurement module comprises two inertial sensors fixed at the thigh and the shank with slotted straps. The inertial sensor is the MPU6050 high-precision inertial navigation module; its measurement data are read by an integrated on-board processor and output through a serial port, while the original I2C interface is retained for advanced users who want to access the raw measurement data. The module integrates an attitude solver which, combined with a dynamic Kalman filtering algorithm, accurately outputs the current attitude of the module in dynamic environments, with an attitude measurement accuracy of 0.01°. The module has a built-in voltage regulator, is compatible with 3.3 V/5 V embedded systems, and provides three-axis attitude angles, three-axis angular velocity and three-axis acceleration. The lower limb inertia measurement module is shown in FIG. 6.
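The module's internal attitude solver is not described beyond the mention of a dynamic Kalman filter. For readers unfamiliar with the idea, the sketch below is a generic single-axis Kalman filter that fuses a gyroscope rate with an accelerometer-derived tilt angle while estimating the gyro bias; it is not the module's firmware, and the noise parameters and class name are hypothetical.

```python
import numpy as np


class TiltKalman:
    """Single-axis Kalman filter with state [angle, gyro_bias]: the gyro rate drives
    the prediction and the accelerometer-derived angle is the measurement."""

    def __init__(self, q_angle=1e-3, q_bias=3e-3, r_meas=3e-2):
        self.x = np.zeros(2)                  # [angle (deg), bias (deg/s)]
        self.P = np.eye(2)                    # state covariance
        self.Q = np.diag([q_angle, q_bias])   # process noise (scaled by dt below)
        self.R = r_meas                       # measurement noise variance

    def update(self, gyro_rate, accel_angle, dt):
        F = np.array([[1.0, -dt], [0.0, 1.0]])
        B = np.array([dt, 0.0])
        # Predict: integrate the bias-corrected gyro rate.
        self.x = F @ self.x + B * gyro_rate
        self.P = F @ self.P @ F.T + self.Q * dt
        # Correct with the accelerometer angle (measurement matrix H = [1, 0]).
        innovation = accel_angle - self.x[0]
        s = self.P[0, 0] + self.R
        k = self.P[:, 0] / s
        self.x = self.x + k * innovation
        self.P = self.P - np.outer(k, self.P[0, :])
        return self.x[0]
```

The gyro supplies smooth short-term motion while the accelerometer anchors the long-term angle; the bias state removes the slow gyro drift that would otherwise accumulate.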

The data acquisition and transmission module comprises a data acquisition module, i.e., microcontroller 1, and a signal transmission module, i.e., CAN bus communication module 2. The microcontroller is an STM32F103C8T6, whose schematic diagram is shown in FIG. 7. The STM32F103C8T6 is a 32-bit microcontroller of the STM32 series based on the ARM Cortex-M3 core, with 64 KB of program memory and a maximum clock frequency of 72 MHz; it provides a USB 2.0 interface, 2 UARTs, 2 I2C interfaces, 1 SPI interface, 4 16-bit timers and 16 ADC input channels with a conversion time of 1 µs and 12-bit resolution. Data are transmitted over a CAN bus, a serial communication network that effectively supports distributed and real-time control. Compared with buses such as RS-485 and RS-232, a distributed control system based on the CAN bus offers strong real-time performance, a short development cycle and long transmission distance. The CAN bus communication block diagram of the exoskeleton system in this example is shown in FIG. 8.
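As an illustration of what the microcontroller forwards over the bus, the sketch converts 12-bit ADC codes to volts and packs up to four samples into the 8-byte data field of a classic CAN frame. The 3.3 V reference, little-endian layout and four-samples-per-frame format are assumptions; the text does not define the frame layout, and the function names are illustrative.

```python
import struct

ADC_BITS = 12        # ADC resolution stated in the text
V_REF = 3.3          # assumed ADC reference voltage


def adc_to_volts(code: int) -> float:
    """Convert a raw 12-bit ADC code to volts, assuming a 3.3 V reference."""
    if not 0 <= code < (1 << ADC_BITS):
        raise ValueError("code out of 12-bit range")
    return code * V_REF / ((1 << ADC_BITS) - 1)


def pack_can_payload(codes):
    """Pack up to four 12-bit samples as little-endian uint16 into the 8-byte
    data field of a classic CAN frame (hypothetical frame layout)."""
    if len(codes) > 4:
        raise ValueError("a classic CAN frame carries at most 8 data bytes")
    for c in codes:
        if not 0 <= c < (1 << ADC_BITS):
            raise ValueError("code out of 12-bit range")
    return struct.pack("<" + "H" * len(codes), *codes)


def unpack_can_payload(data: bytes):
    """Inverse of pack_can_payload."""
    return list(struct.unpack("<" + "H" * (len(data) // 2), data))
```

Storing each 12-bit code in a 16-bit slot wastes 4 bits per sample but keeps packing and unpacking trivial; a denser bit-packed layout could fit five samples per frame at the cost of more code on both ends.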

The central controller recognizes and fuses the collected multi-source sensing signals as follows. Signals are collected by the plantar pressure signal acquisition module and the surface electromyogram (sEMG) signal acquisition module, preprocessed by filtering and denoising, and the corresponding time- and frequency-domain features are extracted. In the offline training stage, a gait classifier model and an angle estimation model are first built, and different gait motions and angle curves are analyzed to establish the final recognition model. In the real-time detection stage, the electromyographic signals and plantar pressure signals collected in real time are fed into the angle estimation model and the gait classification model respectively, giving the current gait motion and gait phase, and the corresponding gait angle curve is retrieved from a gait database. The joint posture angle estimated from the electromyographic signals is then fused with this gait angle curve. Finally, the fused result is sent to the servo driver over the CAN bus, and the motor drives the exoskeleton frame according to the controller's commands, providing real-time assistance.

A motion intention recognition method for the lower extremity exoskeleton system, shown in fig. 9, comprises the following steps:

Step 1: The wearer puts on the exoskeleton system and performs different dynamic actions under different road conditions, while the plantar pressure acquisition device and the lower limb sEMG acquisition device synchronously acquire the wearer's plantar pressure signals and surface electromyogram signals. The plantar pressure signals for the different dynamic actions are shown in figure 10; the three groups of signals differ clearly in both the time domain and the frequency domain.

Step 2: The collected plantar pressure information and lower limb inertia information undergo data preprocessing, feature extraction and related steps. The extracted features are input into a classifier as the basis for classifying different actions under different road conditions, and the classifier is trained for human gait recognition.

Step 2.1: The acquired plantar pressure signals are denoised with a Butterworth low-pass filter. Because the useful content of the plantar pressure signal lies mainly below 15 Hz, the cutoff frequency of the low-pass filter is set to 15 Hz. Time-domain root mean square (RMS) features and power spectral density (PSD) features are then extracted from the denoised signal.
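A minimal pure-Python sketch of the 15 Hz Butterworth low-pass step follows. The source states neither the filter order nor the sampling rate, so a second-order section designed by the bilinear transform with frequency pre-warping, and a 200 Hz sampling rate, are assumptions; the function names are hypothetical.

```python
import math

def butter2_lowpass(fc, fs):
    """Second-order Butterworth low-pass coefficients via the bilinear transform.

    The analog cutoff is pre-warped so the -3 dB point lands exactly at fc.
    Returns (b, a) with a[0] == 1.
    """
    k = math.tan(math.pi * fc / fs)
    norm = 1.0 / (1.0 + math.sqrt(2.0) * k + k * k)
    b0 = k * k * norm
    b = (b0, 2.0 * b0, b0)
    a = (1.0, 2.0 * (k * k - 1.0) * norm, (1.0 - math.sqrt(2.0) * k + k * k) * norm)
    return b, a

def lowpass_plantar(x, fc=15.0, fs=200.0):
    """Causal direct-form I filtering of one plantar pressure channel."""
    b, a = butter2_lowpass(fc, fs)
    y = []
    x1 = x2 = y1 = y2 = 0.0  # previous two inputs and outputs
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y
```

The filter runs causally, which suits the real-time stage; for offline training data a forward-backward (zero-phase) pass would avoid phase lag, again a design choice rather than something the source specifies.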

2.1.1 Root mean square feature extraction

The root mean square is the most common time-domain feature and represents the average level of the plantar pressure signal over a given time. The sole has three main load-bearing points, whose RMS values reflect the basic gait condition. The RMS is calculated as:

$$F_{\mathrm{RMS}}=\sqrt{\frac{1}{T}\int_{0}^{T}F^{2}(t)\,dt}$$

where t is time, T is the sampling period (window length), and F(t) is the signal value at time t.

The average plantar pressure over a given time differs between gait motions. The distribution of the RMS features of the three plantar pressure channels is shown in fig. 11.
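The RMS feature can be computed per analysis window in its discrete form, the square root of the mean of the squared samples in the window. A minimal sketch, assuming one list of samples per plantar channel (big toe, little toe, heel) covering the same window; the function names are illustrative:

```python
import math

def window_rms(samples):
    """Discrete RMS of one window: sqrt(mean(F[n]^2))."""
    if not samples:
        raise ValueError("empty window")
    return math.sqrt(sum(v * v for v in samples) / len(samples))

def plantar_rms_features(channels):
    """One RMS value per plantar channel for the same time window."""
    return [window_rms(ch) for ch in channels]
```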

2.1.2 Power spectral density feature extraction

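The power spectral density is the signal power per unit frequency band and describes how the signal power is distributed over frequency. For a finite signal, assuming the signal is stationary and the random process is ergodic, the power spectrum can be estimated; it is defined as:

$$P(\omega)=\lim_{T\to\infty}\frac{1}{T}\,\mathrm{E}\left[\left|X_T(\omega)\right|^{2}\right]$$

where ω is frequency and X_T(ω) is the continuous Fourier transform of the signal over an interval of length T.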

Maximum entropy spectral estimation is a high-resolution spectral analysis method, and the Burg algorithm is one of the earliest maximum entropy spectral estimation algorithms. In Burg power spectrum estimation, the sum of the forward and backward prediction error powers is minimized; as the order m increases from 1, the AR coefficients are estimated under this constraint using the Levinson recursion, and finally the model parameters are determined and the power spectrum is computed. The prediction error power is updated as:

$$P_m=\left(1-|K_m|^{2}\right)P_{m-1}$$

This yields the PSD features of the plantar pressure signal, shown in fig. 12.
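A minimal NumPy sketch of Burg estimation: at each order the reflection coefficient K_m minimizes the summed forward and backward prediction error power, the AR coefficients are updated by the Levinson recursion, and the error power follows P_m = (1 - |K_m|^2) P_{m-1}. The PSD is then evaluated as P_m / (fs |A(f)|^2). The function names, the sign convention x(n) + sum_k a_k x(n-k) = e(n) and the frequency grid are assumptions.

```python
import numpy as np

def burg_ar(x, order):
    """Burg estimate of AR coefficients a (a[0] = 1) and final error power P_m.

    Model convention: x(n) + a[1] x(n-1) + ... + a[m] x(n-m) = e(n).
    """
    x = np.asarray(x, dtype=float)
    f = x.copy()                     # forward prediction errors f_m(n)
    g = x.copy()                     # backward prediction errors g_m(n)
    a = np.array([1.0])
    power = np.dot(x, x) / len(x)    # P_0
    for m in range(1, order + 1):
        ff = f[m:]                   # f_{m-1}(n),   n = m..N-1
        gg = g[m - 1:-1]             # g_{m-1}(n-1), n = m..N-1
        # reflection coefficient minimizing forward + backward error power
        k = -2.0 * np.dot(ff, gg) / (np.dot(ff, ff) + np.dot(gg, gg))
        f_new = ff + k * gg          # computed before overwriting the views
        g_new = gg + k * ff
        f[m:] = f_new
        g[m:] = g_new
        a_ext = np.concatenate([a, [0.0]])
        a = a_ext + k * a_ext[::-1]  # Levinson update of the AR coefficients
        power *= 1.0 - k * k         # P_m = (1 - |K_m|^2) P_{m-1}
    return a, power

def burg_psd(x, order, fs=1.0, nfreq=256):
    """AR power spectral density on a grid from 0 to fs/2."""
    a, power = burg_ar(x, order)
    freqs = np.linspace(0.0, fs / 2.0, nfreq)
    lags = np.arange(len(a))
    # A(f) = sum_k a_k exp(-j 2 pi f k / fs)
    resp = np.exp(-2j * np.pi * np.outer(freqs, lags) / fs) @ a
    return freqs, power / (fs * np.abs(resp) ** 2)
```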

Step 2.2: The extracted features are input into a classifier. A support vector machine (SVM) is used as the classifier: the feature vectors of the plantar pressure signals and lower limb inertia signals for different dynamic actions under different road conditions are input into the model, which outputs the classification labels of the different actions under different road conditions.

SVM classifier model:

The SVM (support vector machine) is a binary classification model; with modification it can also be used for multi-class problems. Support vector machines fall into two broad categories, linear and nonlinear (kernel). The main idea is to find a hyperplane in the feature space that separates the data samples and maximizes the distance from the hyperplane to the nearest samples of each class.

The separating hyperplane can be expressed as $\omega^{T}x+b=0$, where ω is the weight vector and b is the bias.

Let $\omega^{T}x_i+b\geq +1$ for $y_i=+1$ and $\omega^{T}x_i+b\leq -1$ for $y_i=-1$. The training samples closest to the hyperplane satisfy these inequalities with equality; they are called support vectors.

The sum of the distances from two support vectors of different classes to the hyperplane (the margin) is $r=\frac{2}{\lVert\omega\rVert}$. Finding the maximum-margin separating hyperplane means finding ω and b that maximize r:

$$\max_{\omega,b}\ \frac{2}{\lVert\omega\rVert}\quad \text{s.t.}\quad y_i(\omega^{T}x_i+b)\geq 1,\ i=1,2,\dots,m$$

Equivalently,

$$\min_{\omega,b}\ \frac{1}{2}\lVert\omega\rVert^{2}\quad \text{s.t.}\quad y_i(\omega^{T}x_i+b)\geq 1,\ i=1,2,\dots,m$$

Solving this problem yields the model corresponding to the maximum-margin separating hyperplane:

$$f(x)=\omega^{T}x+b$$
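The hard-margin problem is a quadratic program, usually solved in its dual form. As a simpler stand-in that is not the source's solver, the sketch below trains a linear soft-margin SVM by full-batch subgradient descent on the regularized hinge loss (λ/2)‖ω‖² + mean(max(0, 1 − y_i(ω^T x_i + b))); for separable data and small λ, the minimizer of this loss approaches the maximum-margin hyperplane. The hyperparameter values and function names are illustrative.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Full-batch subgradient descent on the L2-regularized hinge loss.

    X: list of feature vectors, y: labels in {+1, -1}. Returns (w, b).
    """
    n, d = len(X), len(X[0])
    w = [0.0] * d
    b = 0.0
    for _ in range(epochs):
        gw = [lam * wj for wj in w]  # gradient of (lam/2)*||w||^2
        gb = 0.0                     # bias is not regularized
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1.0:         # inside the margin or misclassified
                for j in range(d):
                    gw[j] -= yi * xi[j] / n
                gb -= yi / n
        w = [wj - lr * g for wj, g in zip(w, gw)]
        b -= lr * gb
    return w, b

def decision(w, b, x):
    """f(x) = w^T x + b; its sign gives the predicted class."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b
```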

One-versus-one SVM multi-classification:

An SVM sub-classifier is built for each pair of classes, so K classes require K(K-1)/2 classifiers. When constructing the classifier for classes i and j, the training samples of class i are labeled +1 and those of class j are labeled -1.

At test time, the most widely used approach is the voting strategy proposed by Friedman: the test sample x is evaluated by every classifier. If the decision function $f_{ij}(x)=\operatorname{sgn}\left(\omega_{ij}^{T}x+b_{ij}\right)$ of the i-j sub-classifier assigns x to class i, class i receives one vote; if it assigns x to class j, class j receives one vote. The votes of all classes are accumulated, and the class with the highest score is taken as the class of the test sample.

An extension of the one-versus-one approach is the directed acyclic graph (DAG) classification method. Its training process is similar to one-versus-one; its testing process is shown in fig. 13.

The advantage of this approach is that when a new class is added, not all SVMs need to be retrained; only the classifiers associated with the new class need to be trained and added. Because each sub-classifier involves only two classes, training a single model is relatively fast.
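The one-versus-one voting rule can be sketched in a few lines of Python. Each pairwise decision function is passed in as a callable whose positive output votes for the first class of the pair. The distance-based decision functions and gait labels in the test are hypothetical stand-ins for trained SVM sub-classifiers, and breaking ties by class order is an assumption.

```python
from itertools import combinations

def ovo_predict(x, classes, pairwise):
    """One-versus-one voting.

    pairwise[(i, j)] is the decision function of the (i, j) sub-classifier:
    a positive value votes for class i, otherwise for class j.
    Returns (predicted class, vote counts).
    """
    votes = {c: 0 for c in classes}
    for i, j in combinations(classes, 2):  # K*(K-1)/2 sub-classifiers
        if pairwise[(i, j)](x) > 0:
            votes[i] += 1
        else:
            votes[j] += 1
    # max() keeps the earliest class in `classes` when scores tie
    best = max(classes, key=lambda c: votes[c])
    return best, votes
```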

Step 2.3: According to the classification label, the corresponding hip and knee joint angle curve is selected from the offline gait database as the desired input angle of the system. The joint angle curves for the different dynamic motions are shown in fig. 14.
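Step 2.3 amounts to a table lookup keyed by the classification label. A minimal sketch, assuming the database stores each curve sampled over a normalized gait phase in [0, 1] and that the current gait phase is known, interpolates the hip and knee targets linearly. The label, the database structure and the angle values are placeholders, not data from the source.

```python
from bisect import bisect_right

# Hypothetical offline gait database: label -> (phase grid, hip angles, knee angles), degrees.
GAIT_DB = {
    "level_walking": ([0.0, 0.5, 1.0], [20.0, -10.0, 20.0], [5.0, 60.0, 5.0]),
}

def interp(xs, ys, x):
    """Piecewise-linear interpolation on an ascending grid, clamped at the ends."""
    if x <= xs[0]:
        return ys[0]
    if x >= xs[-1]:
        return ys[-1]
    k = bisect_right(xs, x)  # xs[k-1] <= x < xs[k]
    t = (x - xs[k - 1]) / (xs[k] - xs[k - 1])
    return ys[k - 1] + t * (ys[k] - ys[k - 1])

def desired_angles(label, phase, db=GAIT_DB):
    """Desired (hip, knee) angles for a gait label at a normalized gait phase."""
    phases, hip, knee = db[label]
    return interp(phases, hip, phase), interp(phases, knee, phase)
```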

Step 3: Surface electromyogram signals of six lower limb muscles of the wearer are acquired by electromyography sensors (the muscle positions are shown in figure 15). After data processing and feature extraction, the features are fed into the recognition model to predict the joint posture angles in real time.

Step 3.1: Denoising is performed with an IIR notch filter and wavelet packet transform, which further improves the denoising effect. The denoised electromyographic signal is shown in FIG. 17.
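The IIR notch stage can be sketched as a single second-order section with zeros on the unit circle at the interference frequency and poles just inside it. The 50 Hz mains frequency, the 1000 Hz sampling rate and the pole radius r = 0.98 are assumptions, the function names are hypothetical, and the wavelet packet stage is not shown.

```python
import math

def notch_coeffs(f0, fs, r=0.98):
    """Second-order IIR notch at f0 Hz: zeros at exp(+-j w0), poles at r*exp(+-j w0).

    The numerator is scaled so the gain at DC is exactly 1.
    """
    w0 = 2.0 * math.pi * f0 / fs
    c = math.cos(w0)
    b = [1.0, -2.0 * c, 1.0]
    a = [1.0, -2.0 * r * c, r * r]
    g = sum(a) / sum(b)  # DC gain of b/a is sum(b)/sum(a); rescale it to 1
    return [g * bi for bi in b], a

def iir_filter(b, a, x):
    """Direct-form I difference equation for one biquad (a[0] == 1)."""
    y = []
    x1 = x2 = y1 = y2 = 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y
```

A pole radius closer to 1 gives a narrower notch but a longer start-up transient.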

Step 3.2: A CNN (convolutional neural network) online joint angle estimation model based on the electromyographic signals is built; the extracted features are input into the model to predict the joint motion angle in real time.

The angle acquired by the inertial sensors is taken as the output target and the sEMG feature vector as the input; a model relating the two is established, and a CNN (convolutional neural network) online movement intention recognition model is introduced. Specifically, the electromyographic data are preprocessed, a sample feature set is generated through feature extraction and feature selection, and the joint angle computed from the gyroscope and accelerometer attached to the skin serves as the training label. For feature extraction and selection, a subject-independent recognition scheme is adopted: time-domain, frequency-domain, time-frequency-domain and nonlinear features are extracted, and each feature scheme is evaluated against inter-subject differences so that features common to different subjects are selected to build the offline training samples, reducing the influence of inter-subject differences on the model. In addition, the trained model is tuned by cross-validated parameter optimization to improve its generalization while maintaining adequate accuracy.

Two-layer convolution-pooling CNN:

The CNN scans the input data with different convolution kernels, each able to detect a different numerical pattern; for example, some kernels respond to rising trends in the data and others to falling trends. The convolution operation produces several feature maps that record how strongly each feature occurs. With reference to deep learning models for related data and other open-source computer vision projects, and taking the input format into account, the two-layer convolution-pooling CNN shown in FIG. 16 is designed.
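The two-layer convolution-pooling structure can be illustrated by a forward pass written directly in NumPy. The input shape (six muscle channels by 32 time steps), kernel size, channel counts, ReLU activations, max pooling and the single linear output for one joint angle are all assumptions, since the layer parameters of fig. 16 are not given in the text; training (for example by backpropagation) is omitted.

```python
import numpy as np

def conv1d(x, w, b):
    """Valid 1-D cross-correlation. x: (C_in, L), w: (C_out, C_in, K), b: (C_out,)."""
    c_out, _, k = w.shape
    n_out = x.shape[1] - k + 1
    out = np.empty((c_out, n_out))
    for t in range(n_out):
        # contract over input channels and kernel taps
        out[:, t] = np.tensordot(w, x[:, t:t + k], axes=([1, 2], [0, 1])) + b
    return out

def relu(x):
    return np.maximum(x, 0.0)

def max_pool1d(x, size):
    """Non-overlapping max pooling along time; a trailing remainder is dropped."""
    n = x.shape[1] // size
    return x[:, :n * size].reshape(x.shape[0], n, size).max(axis=2)

def init_params(rng, n_muscles=6, win=32, c1=8, c2=16, k=3):
    """Random weights for the hypothetical layer sizes."""
    l1 = (win - k + 1) // 2  # length after conv1 + pool
    l2 = (l1 - k + 1) // 2   # length after conv2 + pool
    return {
        "w1": rng.normal(0.0, 0.1, (c1, n_muscles, k)), "b1": np.zeros(c1),
        "w2": rng.normal(0.0, 0.1, (c2, c1, k)), "b2": np.zeros(c2),
        "wd": rng.normal(0.0, 0.1, c2 * l2), "bd": 0.0,
    }

def cnn_forward(x, p):
    """Two conv-ReLU-pool stages followed by a linear layer giving one joint angle."""
    h = max_pool1d(relu(conv1d(x, p["w1"], p["b1"])), 2)
    h = max_pool1d(relu(conv1d(h, p["w2"], p["b2"])), 2)
    return float(p["wd"] @ h.ravel() + p["bd"])
```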

In a specific implementation, a sliding window is applied to the acquired sEMG time series, and after feature extraction the data in each window are input into the neural network for recognition. The delay requirement of human motion intention recognition is met by setting the step length of the sliding time window to 5 ms. After offline training of the model, the sEMG sequence is input online to recognize the human motion intention, i.e. to predict the joint motion angle. Through repeated tests and fine tuning of the model parameters, the online movement intention recognition (joint angle prediction) achieves prediction ahead of the movement, with an accuracy above 98%.
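The windowing step can be sketched as follows. The 5 ms step comes from the text; the 1000 Hz sampling rate, the 100 ms window length and the two features (mean absolute value and RMS, common sEMG time-domain features) are assumptions chosen only for illustration.

```python
import math

def sliding_windows(x, win, step):
    """Yield successive windows of `win` samples, advancing by `step` samples."""
    for start in range(0, len(x) - win + 1, step):
        yield x[start:start + win]

def mav(w):
    """Mean absolute value of one window."""
    return sum(abs(v) for v in w) / len(w)

def rms(w):
    """Root mean square of one window."""
    return math.sqrt(sum(v * v for v in w) / len(w))

def window_features(emg, fs=1000.0, win_ms=100.0, step_ms=5.0):
    """(MAV, RMS) for every window of one sEMG channel."""
    win = int(round(win_ms * fs / 1000.0))
    step = int(round(step_ms * fs / 1000.0))
    return [(mav(w), rms(w)) for w in sliding_windows(emg, win, step)]
```

A shorter step lowers the latency between a new sample and an updated prediction, at the cost of more windows to process per second.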

Step 4: The fatigue level of each muscle group is computed from the electromyographic signals, and the joint angle estimates are fused according to the current physiological state of the human body.

Step 4.1: The acquired electromyographic signals are preprocessed and fed into a time-varying AR model, whose AR parameters reflect the current fatigue level of the muscles.

To evaluate muscle fatigue with time-varying AR model parameters, the characteristic parameters of the electromyographic signal are extracted with a time-varying parameter model, using model order p = 4 and a Legendre basis. The fatigue state of the muscle is then determined by evaluating these parameters, which provides a basis for assessing muscle fatigue.
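A minimal NumPy sketch of the time-varying AR estimate: each coefficient a_i(n) is expanded on Legendre polynomials over the normalized record time, a_i(n) = sum_k c_ik L_k(t_n), and the constant weights c_ik are estimated by recursive least squares, giving a p × N coefficient matrix. The order p = 4 follows the text; the number of basis functions, the initialization constant delta, the sign convention x(n) = sum_i a_i(n) x(n-i) + e(n) and the function names are assumptions, and the mapping from parameters to a fatigue level is not shown.

```python
import numpy as np

def legendre_basis(n_samples, n_basis):
    """Rows are Legendre polynomials L_0..L_{n_basis-1} sampled on t in [-1, 1]."""
    t = np.linspace(-1.0, 1.0, n_samples)
    B = np.empty((n_basis, n_samples))
    B[0] = 1.0
    if n_basis > 1:
        B[1] = t
    for k in range(1, n_basis - 1):
        # Bonnet recursion: (k+1) L_{k+1} = (2k+1) t L_k - k L_{k-1}
        B[k + 1] = ((2 * k + 1) * t * B[k] - k * B[k - 1]) / (k + 1)
    return B

def tvar_legendre(x, p=4, n_basis=3, delta=1e3):
    """Time-varying AR(p) with Legendre-expanded coefficients, fitted by RLS.

    Returns a (p, N) matrix A with A[i, n] = a_{i+1}(n).
    """
    x = np.asarray(x, dtype=float)
    n_total = len(x)
    B = legendre_basis(n_total, n_basis)
    dim = p * n_basis
    theta = np.zeros(dim)        # stacked weights c[i, k]
    P = delta * np.eye(dim)      # RLS inverse-correlation matrix
    for n in range(p, n_total):
        past = x[n - p:n][::-1]  # x(n-1), ..., x(n-p)
        phi = np.outer(past, B[:, n]).ravel()
        Pphi = P @ phi
        gain = Pphi / (1.0 + phi @ Pphi)
        theta = theta + gain * (x[n] - phi @ theta)
        P = P - np.outer(gain, Pphi)
    C = theta.reshape(p, n_basis)
    return C @ B
```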

Step 4.2: According to the muscle fatigue level, a centralized fusion method with weights updated from the human physiological state is used to fuse the gait trajectory obtained from plantar pressure with the joint angle predicted from the surface electromyographic signal, yielding the final desired angle of each joint of the exoskeleton robot.

Centralized fusion algorithm based on physiological-state weight updating:

The surface electromyogram signal is easily disturbed by the external environment, so the trajectory predicted from it in real time is not accurate enough, while the trajectory obtained from plantar pressure has insufficient real-time performance. The two sources therefore need to be fused effectively to obtain a more accurate desired trajectory for the lower limb joints.

The fusion uses a centralized method with weights updated from the human physiological state; the fusion process is shown in figure 19. The idea is to update the weights of the two trajectories according to the physiological state so as to obtain a complete desired joint trajectory. During fusion, the root mean square value of the trajectory data points is computed, and the weight related to the human physiological state is denoted α, where $\alpha_1 P_1+\alpha_2 P_2$ represents the intermediate trajectory.

The weight α is updated according to the physiological state. When the physiological state is normal, i.e. the wearer's voluntary movement ability is high, α is small: the real-time sEMG-based prediction dominates and the desired trajectory stays close to the sEMG-predicted angle trajectory. When the wearer is fatigued and voluntary movement ability is weak, α is large and the desired trajectory stays close to the historical database trajectory selected from plantar pressure.
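The weighting rule can be sketched as a convex combination of the two trajectories. Taking α₂ = α for the database trajectory and α₁ = 1 − α for the sEMG prediction is an assumption, since the text does not state how α₁ and α₂ relate to α, and the linear mapping from a normalized fatigue index to α (with bounds 0.2 and 0.8) is a hypothetical placeholder.

```python
def alpha_from_fatigue(fatigue, a_min=0.2, a_max=0.8):
    """Map a normalized fatigue index (0 = fresh, 1 = fatigued) to the weight alpha.

    The linear map and its bounds are hypothetical placeholders.
    """
    f = min(max(fatigue, 0.0), 1.0)
    return a_min + (a_max - a_min) * f

def fuse(theta_emg, theta_db, alpha):
    """Point-wise fusion: small alpha favors the sEMG prediction,
    large alpha favors the database trajectory."""
    return [(1.0 - alpha) * pe + alpha * pd for pe, pd in zip(theta_emg, theta_db)]
```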


Claims (9)

1. A method of locomotion intent recognition using a locomotion intent recognition apparatus for a lower extremity exoskeleton, comprising:

the movement intention recognition device comprises a lower limb electromyogram signal acquisition module, a sole pressure acquisition module, a lower limb inertia information measurement module, a data acquisition and transmission module and a central controller;

the sole pressure acquisition module is fixed on the exoskeleton sensing shoe and comprises plantar pressure sensors and a plantar pressure transmitter module; the plantar pressure sensors are three thin-film pressure sensors fixed on the sensing shoe at positions corresponding to the front of the wearer's big toe, the end of the little toe and the heel; the plantar pressure transmitter module uses a full-bridge circuit to detect the resistance change of the pressure sensors, a second-order low-pass filter circuit amplifies and filters the voltage signal, and the output of the transmitter module is connected to the data acquisition and transmission module;

the lower limb electromyographic signal acquisition module mainly comprises Ag/AgCl electrodes, a preamplifier circuit, a power-line notch circuit, low-pass and high-pass filter circuits, a second-stage amplifier circuit and a voltage level-shifting circuit, and acquires surface electromyographic signals of six muscle groups related to thigh and shank joint movement;

the lower limb inertial information measuring module comprises two inertial sensors and is used for acquiring acceleration information, angular velocity information and angle information of the lower limb of a wearer, and the inertial sensors are arranged at the position of the shank and the position of the thigh of the wearer; the output end of the inertial sensor is connected with the data acquisition and transmission module;

the data acquisition and transmission module comprises a microcontroller and a bus communication module, is fixed at the ankle of the exoskeleton robot, acquires and preprocesses the myoelectric, plantar pressure and human-machine interaction force signals, and transmits the preprocessed signals to the industrial PC module over the CAN bus;

the central controller receives the data sent by the data acquisition and processing module over the CAN bus, processes the sensor signals in real time, optimally matches them against predefined human gaits and road conditions, and recognizes the wearer's movement intention in real time;

the method for recognizing the movement intention comprises the following steps:

step 1: a wearer wears the movement intention recognition device for the lower limb exoskeleton, different gait actions are completed under different road conditions through the sole pressure acquisition module and the lower limb electromyogram signal acquisition module, and a sole pressure signal and a surface electromyogram signal of the wearer are synchronously acquired;

step 2: the collected plantar pressure signals and surface electromyogram signals are subjected to data preprocessing and feature extraction, and the extracted features are input into a classifier as the basis of different action classifications under different road conditions to perform human gait recognition training;

step 2.1: denoising the acquired plantar pressure signals with a Butterworth low-pass filtering algorithm, and extracting time-domain root mean square features and power spectral density features from the denoised signals;

step 2.2: inputting the extracted time-domain root mean square features and power spectral density features into a classifier, a support vector machine (SVM) being used as the classifier with one-versus-one SVM multi-classification, the input of the classifier being the feature vectors of the plantar pressure signals and surface electromyographic signals for different dynamic actions under different road conditions, and the output of the classifier being the classification labels of the different actions under different road conditions;

step 2.3: selecting a corresponding hip-knee joint angle curve from an off-line gait database as an expected input angle of the identification device according to the classification label;

step 3: collecting surface electromyographic signals of six lower limb muscles of the wearer with the lower limb electromyographic signal acquisition module, taking the angle collected by the inertial sensors as the output target and the sEMG feature vector as the input, establishing a correlation model between the two, and introducing a CNN online movement intention recognition model to predict the joint posture angles in real time;

step 3.1: denoising by adopting an IIR trap and wavelet packet transformation, and further improving the denoising effect on the basis of hardware filtering denoising;

step 3.2: building a convolutional neural network CNN online movement intention recognition model based on an electromyographic signal, inputting the extracted features into the model, and predicting a joint movement angle in real time;

step 4: calculating the fatigue level of each muscle group from the surface electromyographic signals, and fusing the joint angle estimates according to the current physiological state of the human body;

step 4.1: preprocessing myoelectric signals of semitendinosus and rectus femoris parts acquired by a lower limb myoelectric signal acquisition module, and introducing the preprocessed myoelectric signals into a time-varying AR model, wherein AR characteristic parameters can reflect the current fatigue degree of muscles;

step 4.2: according to the current fatigue level of the muscles, fusing the gait trajectory obtained from plantar pressure with the joint angle predicted from the surface electromyographic signal, using a centralized fusion method with weights updated according to the human physiological state, to obtain the final desired angle of each joint of the exoskeleton robot.

2. The method according to claim 1, wherein in step 2.1, the root mean square feature extraction calculation formula is as follows:

$$F_{\mathrm{RMS}}=\sqrt{\frac{1}{T}\int_{0}^{T}F^{2}(t)\,dt}$$

wherein t is time, T is the sampling period, and F(t) is the signal value at time t;

the power spectral density feature is defined as:

$$P(\omega)=\lim_{T\to\infty}\frac{1}{T}\,\mathrm{E}\left[\left|X_T(\omega)\right|^{2}\right]$$

wherein ω is frequency and X_T(ω) is the continuous Fourier transform;

as the order m increases from 1, the AR coefficients are estimated with the Levinson recursion under the constraint defined for the power spectral density feature extraction, and finally the model parameters are determined and the power spectral density value is solved, with the following formula:

prediction error power: $P_m=\left(1-|K_m|^{2}\right)P_{m-1}$;

wherein n is the sampling time point; x(n) is the signal sequence at sampling point n; $f_m(n)$ and $g_m(n)$ are the output values of the m-order filter at sampling point n, i.e. the forward and backward prediction error values; and $K_m$ is the reflection coefficient.

3. The method according to claim 1 or 2, characterized in that in step 2.2 the separating hyperplane of the SVM classifier is $\omega^{T}x+b=0$, wherein ω is the weight vector and b is the bias;

let $\omega^{T}x_i+b\geq +1$ for $y_i=+1$ and $\omega^{T}x_i+b\leq -1$ for $y_i=-1$; the training sample points closest to the hyperplane satisfy these inequalities with equality and are called support vectors;

the sum of the distances from two support vectors of different classes to the hyperplane is $r=\frac{2}{\lVert\omega\rVert}$; finding the maximum-margin separating hyperplane means finding ω and b that maximize r, i.e.

$$\max_{\omega,b}\ \frac{2}{\lVert\omega\rVert}\quad \text{s.t.}\quad y_i(\omega^{T}x_i+b)\geq 1,\ i=1,2,\dots,m$$

or, equivalently,

$$\min_{\omega,b}\ \frac{1}{2}\lVert\omega\rVert^{2}\quad \text{s.t.}\quad y_i(\omega^{T}x_i+b)\geq 1,\ i=1,2,\dots,m$$

solving this problem gives the model corresponding to the maximum-margin separating hyperplane:

$$f(x)=\omega^{T}x+b$$

one-versus-one SVM multi-classification:

two different classes are selected to form each SVM sub-classifier, so that for k classes there are k(k-1)/2 classifiers in total; when the classifier for classes i and j is constructed, the training samples of class i are labeled +1 and those of class j are labeled -1 for training;

during testing, the test data x is evaluated by every classifier; if the decision function

$$f_{ij}(x)=\operatorname{sgn}\left(\omega_{ij}^{T}\phi(x)+b_{ij}\right)=\operatorname{sgn}\left(\sum_{l}\alpha_{l}\,y_{l}\,K(x,x_{l})+b_{ij}\right)$$

assigns x to class i, class i receives one vote, and if it assigns x to class j, class j receives one vote; the votes of all classes are accumulated and the class with the highest score is selected as the class of the test data; in the formula, sgn() is the sign function, $\omega_{ij}$ and $b_{ij}$ are the weight vector and bias for classifying classes i and j, φ(x) is the space transformation function, $\alpha_l$ is a Lagrange multiplier, the sum runs over the training samples of classes i and j, and $K(x,x_l)$ is the kernel function.

4. The method according to claim 1 or 2, characterized in that step 3.2 specifically comprises: applying a sliding window to the collected sEMG time series; extracting features from the data in the sliding window and inputting them into the neural network for recognition; meeting the delay requirement of human motion intention recognition by setting the step length of the sliding time window to 5 ms; and, after offline training of the model, inputting the sEMG sequence online to recognize the human motion intention, i.e. to predict the joint motion angle;

the convolutional neural network CNN consists of an input layer, convolutional layers, activation functions, pooling layers and a fully connected layer; the input data are scanned with different types of convolution kernels, each able to identify different numerical features, and the convolution operation generates several feature maps recording how strongly each feature occurs; the convolutional neural network shares convolution kernels and handles high-dimensional data without difficulty; the features need to be selected manually, and the weights need to be trained.

5. The method according to claim 3, characterized in that step 3.2 specifically comprises: applying a sliding window to the collected sEMG time series; extracting features from the data in the sliding window and inputting them into the neural network for recognition; meeting the delay requirement of human motion intention recognition by setting the step length of the sliding time window to 5 ms; and, after offline training of the model, inputting the sEMG sequence online to recognize the human motion intention, i.e. to predict the joint motion angle;

the convolutional neural network CNN consists of an input layer, convolutional layers, activation functions, pooling layers and a fully connected layer; the input data are scanned with different types of convolution kernels, each able to identify different numerical features, and the convolution operation generates several feature maps recording how strongly each feature occurs; the convolutional neural network shares convolution kernels and handles high-dimensional data without difficulty; the features need to be selected manually, and the weights need to be trained.

6. The method according to claim 1, 2 or 5, wherein in step 4.1 time-varying AR model parameters are used to evaluate the degree of muscle fatigue: the characteristic parameters of the electromyographic signal are extracted with a time-varying parameter model method with order p = 4 and a Legendre basis, a p × N time-varying AR characteristic parameter matrix is computed by recursive least squares, and the fatigue state of the muscle is determined by evaluating the characteristic parameters, providing a basis for assessing the muscle fatigue state.

7. The method according to claim 3, wherein in step 4.1 time-varying AR model parameters are used to evaluate the degree of muscle fatigue: the characteristic parameters of the electromyographic signal are extracted with a time-varying parameter model method with order p = 4 and a Legendre basis, a p × N time-varying AR characteristic parameter matrix is computed by recursive least squares, and the fatigue state of the muscle is determined by evaluating the characteristic parameters, providing a basis for assessing the muscle fatigue state.

8. The method according to claim 4, wherein in step 4.1 time-varying AR model parameters are used to evaluate the degree of muscle fatigue: the characteristic parameters of the electromyographic signal are extracted with a time-varying parameter model method with order p = 4 and a Legendre basis, a p × N time-varying AR characteristic parameter matrix is computed by recursive least squares, and the fatigue state of the muscle is determined by evaluating the characteristic parameters, providing a basis for assessing the muscle fatigue state.

9. The method according to claim 1, 2, 5, 7 or 8, wherein in step 4.2 the fusion method is a centralized fusion method with weights updated according to the human physiological state; during fusion, the root mean square value of the trajectory data points is computed, and the weight related to the human physiological state is α, where $\alpha_1 P_1+\alpha_2 P_2$ denotes the intermediate trajectory; the weight α is updated according to the physiological state: when the physiological state is normal, i.e. the wearer's voluntary movement ability is high, α is small, the real-time sEMG-based prediction dominates, and the desired trajectory is close to the sEMG-predicted angle trajectory; when the wearer is fatigued and voluntary movement ability is weak, α is large, and the desired trajectory is close to the historical database trajectory obtained from plantar pressure.

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201910460094.3A (CN110141239B) | 2019-05-30 | 2019-05-30 | Movement intention recognition and device method for lower limb exoskeleton |


Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN110141239A | 2019-08-20 |
| CN110141239B | 2020-08-04 |

Family ID: 67592188

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201910460094.3A (Active, CN110141239B) | 2019-05-30 | 2019-05-30 | Movement intention recognition and device method for lower limb exoskeleton |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN110141239B |

Cited By (1)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| IT202000022933A1 * | 2020-09-29 | 2022-03-29 | Bionit Labs S R L | INTEGRATED SYSTEM FOR THE DETECTION AND PROCESSING OF ELECTROMYOGRAPHIC SIGNALS |

Families Citing this family (44)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN110537921A * | 2019-08-28 | 2019-12-06 | 华南理工大学 | Portable gait multi-sensing data acquisition system |
| CN110710970B * | 2019-09-17 | 2021-01-29 | 北京海益同展信息科技有限公司 | Method and device for recognizing limb actions, computer equipment and storage medium |
| CN110757433A * | 2019-10-14 | 2020-02-07 | 电子科技大学 | Guyed knee joint power assisting device |
| CN110900638B * | 2019-10-31 | 2022-10-14 | 东北大学 | Upper limb wearable transfer robot motion recognition system based on multi-signal fusion |
| CN111062247B * | 2019-11-07 | 2023-05-26 | 郑州大学 | Human motion intention prediction method for exoskeleton control |
| CN110977961A * | 2019-11-07 | 2020-04-10 | 郑州大学 | Motion information acquisition system of self-adaptive power-assisted exoskeleton robot |
| CN111079927B * | 2019-12-12 | 2022-07-08 | 福州大学 | Patella pain detection system based on extreme learning machine |
| CN111291865B * | 2020-01-21 | 2021-12-10 | 北京工商大学 | Gait recognition method based on convolutional neural network and isolated forest |
| CN111267071A * | 2020-02-14 | 2020-06-12 | 上海航天控制技术研究所 | Multi-joint combined control system and method for exoskeleton robot |
| CN111345783B * | 2020-03-26 | 2021-02-23 | 山东大学 | Vestibular dysfunction detection system based on inertial sensor |
| CN111506189B * | 2020-03-31 | 2023-05-09 | 哈尔滨工业大学 | Motion mode prediction and switching control method for complex motion of human body |
| CN113143298B * | 2020-03-31 | 2023-06-02 | 重庆牛迪创新科技有限公司 | Limb skeletal muscle stress state detection device and method and stress state identification equipment |
| CN111469117B * | 2020-04-14 | 2022-06-03 | 武汉理工大学 | Human motion mode detection method of rigid-flexible coupling active exoskeleton |
| CN111582108B * | 2020-04-28 | 2022-09-20 | 河北工业大学 | Gait recognition and intention perception method |
| CN111658246B * | 2020-05-19 | 2021-11-30 | 中国科学院计算技术研究所 | Intelligent joint prosthesis regulating and controlling method and system based on symmetry |
| CN111631923A * | 2020-06-02 | 2020-09-08 | 中国科学技术大学先进技术研究院 | Neural network control system of exoskeleton robot based on intention recognition |
| CN111803250A * | 2020-07-03 | 2020-10-23 | 北京联合大学 | Knee joint angle prediction method and system based on electromyographic signals and angle signals |
| CN111832528B * | 2020-07-24 | 2024-02-09 | 北京深醒科技有限公司 | Detection and anomaly analysis method for behavior analysis |
| CN111898205B * | 2020-07-29 | 2022-09-13 | 吉林大学 | RBF neural network-based human-machine performance perception evaluation prediction method and system |
| CN112085169B * | 2020-09-11 | 2022-05-20 | 西安交通大学 | Autonomous learning and evolution method for limb exoskeleton auxiliary rehabilitation brain-myoelectricity fusion sensing |
| CN112107397B * | 2020-10-19 | 2021-08-24 | 中国科学技术大学 | Myoelectric signal driven lower limb artificial limb continuous control system |
| CN112515657A * | 2020-12-02 | 2021-03-19 | 吉林大学 | Plantar pressure analysis method based on lower limb exoskeleton neural network control |
| CN112641603A * | 2020-12-17 | 2021-04-13 | 迈宝智能科技(苏州)有限公司 | Exoskeleton device and exoskeleton motion control method |

| CN112949676B (en) * | 2020-12-29 | 2022-07-08 | 武汉理工大学 | Self-adaptive motion mode identification method of flexible lower limb assistance exoskeleton robot |

| CN112618284B (en) * | 2020-12-30 | 2023-10-24 | 安徽三联机器人科技有限公司 | Footwear for assisting lower limbs |

| CN112617866A (en) * | 2020-12-31 | 2021-04-09 | 深圳美格尔生物医疗集团有限公司 | EMG (electromyographic) signal digital acquisition circuit and system |

| CN112884015A (en) * | 2021-01-26 | 2021-06-01 | 山西三友和智慧信息技术股份有限公司 | Fault prediction method for log information of water supply network partition metering system |

| CN112807647A (en) * | 2021-01-28 | 2021-05-18 | 重庆工程职业技术学院 | Simulated actual combat training system |

| CN113001546B (en) * | 2021-03-08 | 2021-10-29 | 常州刘国钧高等职业技术学校 | Method and system for improving motion speed safety of industrial robot |

| CN113081703A (en) * | 2021-03-10 | 2021-07-09 | 上海理工大学 | Method and device for distinguishing direction intention of user of walking aid |

| CN113043248B (en) * | 2021-03-16 | 2022-03-11 | 东北大学 | Transportation and assembly whole-body exoskeleton system based on multi-source sensor and control method |

| CN113043249B (en) * | 2021-03-16 | 2022-08-12 | 东北大学 | Whole-body wearable exoskeleton robot for high-precision assembly |

| CN113456065B (en) * | 2021-08-10 | 2022-08-26 | 长春理工大学 | Limb action recognition method, device and system and readable storage medium |

| CN113885696A (en) * | 2021-08-20 | 2022-01-04 | 深圳先进技术研究院 | Exoskeleton device interaction control method and interaction control device thereof |

| CN113693604B (en) * | 2021-08-30 | 2022-04-08 | 北京中医药大学东直门医院 | Method and device for evaluating muscle tension grade based on fatigue state of wearer |

| CN114028775B (en) * | 2021-12-08 | 2022-10-14 | 福州大学 | Ankle joint movement intention identification method and system based on sole pressure |

| CN114343617A (en) * | 2021-12-10 | 2022-04-15 | 中国科学院深圳先进技术研究院 | Patient gait real-time prediction method based on edge cloud cooperation |

| CN114366559A (en) * | 2021-12-31 | 2022-04-19 | 华南理工大学 | Multi-mode sensing system for lower limb rehabilitation robot |

| CN114392137B (en) * | 2022-01-13 | 2023-05-23 | 上海理工大学 | Wearable flexible lower limb assistance exoskeleton control system |

| CN114680875A (en) * | 2022-03-11 | 2022-07-01 | 中国科学院深圳先进技术研究院 | Human motion monitoring method and device based on multi-source information fusion |

| CN114800445A (en) * | 2022-04-02 | 2022-07-29 | 中国科学技术大学先进技术研究院 | Amphibious exoskeleton robot system for underwater rescue |

| CN115192050A (en) * | 2022-07-22 | 2022-10-18 | 吉林大学 | Lower limb exoskeleton gait prediction method based on surface electromyography and feedback neural network |

| CN116439693A (en) * | 2023-05-18 | 2023-07-18 | 四川大学华西医院 | Gait detection method and system based on FMG |

| CN117064380B (en) * | 2023-10-17 | 2023-12-19 | 四川大学华西医院 | Anti-fall early warning system and method for myoelectricity detection on lower limb surface and related products |

Citations (9)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| WO1995031933A1 (en) * | 1994-05-20 | 1995-11-30 | T & T Medilogic | Measuring arrangement for analysing the human gait |

| WO1996041599A1 (en) * | 1995-06-13 | 1996-12-27 | Otto Bock Orthopädische Industrie Besitz- Und Verwaltungskommanditgesellschaft | Process for controlling the knee brake of a knee prosthesis and thigh prosthesis |

| CN101587546A (en) * | 2009-06-12 | 2009-11-25 | 浙江大学 | Lower limb action pattern identification method based on support vector multi-class classification |

| CN104027218A (en) * | 2014-06-05 | 2014-09-10 | 电子科技大学 | Rehabilitation robot control system and method |

| CN105455996A (en) * | 2015-11-25 | 2016-04-06 | 燕山大学 | Wireless multi-source signal feedback control rehabilitation training device |

| CN105561567A (en) * | 2015-12-29 | 2016-05-11 | 中国科学技术大学 | Step counting and motion state evaluation device |

| CN106821680A (en) * | 2017-02-27 | 2017-06-13 | 浙江工业大学 | Upper limb rehabilitation exoskeleton control method based on lower limb gait |

| CN107397649A (en) * | 2017-08-10 | 2017-11-28 | 燕山大学 | Upper limb exoskeleton rehabilitation robot control method based on a radial basis function neural network |

| CN108785997A (en) * | 2018-05-30 | 2018-11-13 | 燕山大学 | Lower limb rehabilitation robot shared control method based on variable admittance |

2019

- 2019-05-30: Application CN201910460094.3A filed in CN (published as CN110141239B; status: Active)

Non-Patent Citations (2)

| Title |

|---|

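| Design of a Control System Platform for a Lower Limb Exoskeleton Robot and Analysis of Control Algorithms; Li Chuan; Wanfang Database; 2014-12-31; full text * |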

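| Research on Gait Recognition and Gait Planning of a Lower Limb Exoskeleton Controlled by Electromyographic Signals; Liu Aiyong; Wanfang Database; 2014-12-31; full text * |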

Cited By (2)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| IT202000022933A1 (en) * | 2020-09-29 | 2022-03-29 | Bionit Labs S R L | INTEGRATED SYSTEM FOR THE DETECTION AND PROCESSING OF ELECTROMYOGRAPHIC SIGNALS |

| WO2022069976A1 (en) * | 2020-09-29 | 2022-04-07 | Bionit Labs S.r.l. | Integrated system for detection and processing electromyographic signals |

Also Published As

| Publication number | Publication date |

|---|---|

| CN110141239A (en) | 2019-08-20 |

Similar Documents

| Publication | Title | Publication Date |

|---|---|---|

| CN110141239B (en) | Movement intention recognition and device method for lower limb exoskeleton | |

| CN112754468B (en) | Human body lower limb movement detection and identification method based on multi-source signals | |

| CN110537922B (en) | Human body walking process lower limb movement identification method and system based on deep learning | |

| CN107753026B (en) | Intelligent shoe self-adaptive monitoring method for spinal leg health | |

| Chen et al. | A novel gait pattern recognition method based on LSTM-CNN for lower limb exoskeleton | |

| CN110653817A (en) | Exoskeleton robot power-assisted control system and method based on neural network | |

| CN108319928B (en) | Deep learning method and system based on multi-target particle swarm optimization algorithm | |

| KR102124095B1 (en) | System and Method for Analyzing Foot Pressure Change and Gait Pattern | |

| KR20160031246A (en) | Method and apparatus for gait task recognition | |

| Wang et al. | sEMG-based consecutive estimation of human lower limb movement by using multi-branch neural network | |

| CN112949676B (en) | Self-adaptive motion mode identification method of flexible lower limb assistance exoskeleton robot | |

| CN110977961A (en) | Motion information acquisition system of self-adaptive power-assisted exoskeleton robot | |

| US11772259B1 (en) | Enhanced activated exoskeleton system | |

| CN113043248B (en) | Transportation and assembly whole-body exoskeleton system based on multi-source sensor and control method | |

| Prado et al. | Prediction of gait cycle percentage using instrumented shoes with artificial neural networks | |

| Khodabandelou et al. | A fuzzy convolutional attention-based GRU network for human activity recognition | |

| Peng et al. | Human walking pattern recognition based on KPCA and SVM with ground reflex pressure signal | |

| Schmid et al. | SVM versus MAP on accelerometer data to distinguish among locomotor activities executed at different speeds | |

| Song et al. | Adaptive neural fuzzy reasoning method for recognizing human movement gait phase | |

| CN111531537A (en) | Mechanical arm control method based on multiple sensors | |

| Ye et al. | An adaptive method for gait event detection of gait rehabilitation robots | |

| Zhen et al. | Hybrid deep-learning framework based on Gaussian fusion of multiple spatiotemporal networks for walking gait phase recognition | |

| Hu et al. | A novel fusion strategy for locomotion activity recognition based on multimodal signals | |

| Chen et al. | Gait recognition for lower limb exoskeletons based on interactive information fusion | |

| KR101829356B1 (en) | An EMG Signal-Based Gait Phase Recognition Method Using a GPES library and ISMF | |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| | PB01 | Publication | |

| | SE01 | Entry into force of request for substantive examination | |

| | GR01 | Patent grant | |