WO2024085084A1 - Avatar control device - Google Patents

Avatar control device

- Publication number

- WO2024085084A1 (PCT/JP2023/037207)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- avatar

- virtual space

- movement

- user

- motion

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased

Classifications

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F3/00—Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements

- G06F3/01—Input arrangements or combined input and output arrangements for interaction between user and computer

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T13/00—Animation

- G06T13/20—3D [Three Dimensional] animation

- G06T13/40—3D [Three Dimensional] animation of characters, e.g. humans, animals or virtual beings

-

- G—PHYSICS

- G06—COMPUTING OR CALCULATING; COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T19/00—Manipulating 3D models or images for computer graphics

Definitions

- This disclosure relates to an avatar control device.

- Patent Document 1 discloses a technology that uses computer graphics to display an avatar, a virtual representation of a person, in a three-dimensional virtual space, and that moves the avatar in the virtual space in accordance with the person's movements.

- An object of the present disclosure is to provide an avatar control device that can suppress unnatural movements of an avatar.

- An avatar control device includes a motion detection unit that detects motion of a user based on an image obtained by imaging the user, a motion determination unit that determines one motion pattern from among a plurality of preset motion patterns for an avatar corresponding to the user based on the motion detected by the motion detection unit, and an avatar generation unit that generates an image of the avatar moving in accordance with the motion pattern determined by the motion determination unit.

- According to the present disclosure, unnatural avatar movements can be suppressed.

- FIG. 1 is a diagram illustrating an example of a configuration of a virtual space service system according to an embodiment of the present disclosure.

- FIG. 2 is a block diagram showing an example of an electrical configuration of a main device.

- FIG. 3 is a block diagram showing an example of an electrical configuration of a virtual space server.

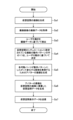

- FIG. 4 is a flowchart showing an example of an operation of the virtual space server.

- FIG. 2 is an explanatory diagram of a user's movements and the motion patterns reflected in an avatar.

- Embodiment. FIG. 1 is a diagram showing an example of the configuration of a virtual space service system 1 according to this embodiment.

- the virtual space service system 1 is a system that enables a user U to experience a virtual space VS.

- the virtual space VS is a space that includes virtual objects.

- one aspect of the virtual object is an avatar A that represents a virtual body of the user U.

- the virtual space service system 1 of this embodiment enhances the user U's sense of immersion in the virtual space VS by moving the avatar A in the virtual space VS in response to the user U's movement.

- the virtual space service system 1 includes a user device 10 and a virtual space server 20.

- the user device 10 and the virtual space server 20 each access the network NW and communicate with each other via the network NW. Note that there may be multiple users U and multiple user devices 10.

- The user device 10 is a device that allows the user U to experience the virtual space VS.

- The user device 10 displays the virtual space VS provided by the virtual space server 20, thereby allowing the user U to experience the virtual space VS visually.

- The user device 10 also transmits imaging data B1, acquired by imaging the user U, to the virtual space server 20.

- The imaging data B1 is data used to detect the movements of the user U.

- The virtual space server 20 detects the movements of the user U based on the imaging data B1. This makes it possible to reflect the movements of the user U in the movements of the avatar A.

- The user device 10 includes a main device 12, a display device 14, and an imaging device 16.

- the main device 12 includes a function for accessing the network NW.

- the main device 12 is a device that communicates with the virtual space server 20 through the network NW.

- the specific configuration and functions of the main device 12 will be described later.

- the display device 14 is a device that displays the virtual space VS based on a video signal C1 output from the main device 12.

- the imaging device 16 is a device that captures an image of the user U.

- the imaging device 16 is, for example, a camera.

- the imaging device 16 outputs an imaging signal C2 of an image acquired by imaging to the main device 12.

- FIG. 2 is a block diagram showing an example of the electrical configuration of the main device 12.

- the main device 12 includes a first processing device 100, a first storage device 120, a first communication device 140, and an input/output interface device 160.

- the first processing device 100, the first storage device 120, the first communication device 140, and the input/output interface device 160 are connected to a bus so as to be able to transmit and receive data to and from each other.

- the first processing device 100 includes one or more central processing units (CPUs).

- the CPUs include interfaces with peripheral devices, an arithmetic unit, registers, etc.

- the one or more CPUs are an example of one or more processors.

- A device including a processor and a device including a CPU are each an example of a computer.

- circuits such as a DSP (Digital Signal Processor), an ASIC (Application Specific Integrated Circuit), a PLD (Programmable Logic Device), and an FPGA (Field Programmable Gate Array) may be used in place of or in conjunction with the CPU.

- the first storage device 120 is a recording medium readable by the first processing device 100.

- the first storage device 120 includes a non-volatile memory and a volatile memory.

- The non-volatile memory is, for example, a ROM (Read Only Memory), an EPROM (Erasable Programmable Read Only Memory), or an EEPROM (Electrically Erasable Programmable Read Only Memory).

- the volatile memory is, for example, a RAM (Random Access Memory).

- the first storage device 120 stores a first program PR1 for controlling the main device 12.

- the first communication device 140 is hardware that functions as a transmitting/receiving device that accesses the network NW and communicates with other devices via this network NW.

- The first communication device 140 is also called, for example, a network device, a network controller, a network card, or a communication module.

- the first communication device 140 has a connector for wired connection, and may also have an interface circuit corresponding to the connector.

- the first communication device 140 may also have a wireless communication interface.

- the input/output interface device 160 is a device that includes an interface circuit for transmitting and receiving signals between the display device 14 and the imaging device 16.

- the input/output interface device 160 may include an input/output interface device for the display device 14 and an input/output interface device for the imaging device 16. Any standard may be used for transmitting and receiving signals between the input/output interface device 160 and the display device 14 and the imaging device 16.

- The main device 12 of this embodiment functions as a first reception control unit 101, a display control unit 102, an imaging signal acquisition control unit 103, and a first transmission control unit 104 by the first processing device 100 executing the first program PR1.

- the first reception control unit 101 receives data transmitted from the virtual space server 20 by controlling the first communication device 140.

- One of the pieces of data transmitted from the virtual space server 20 is virtual space display data B2.

- the virtual space display data B2 is data for displaying the virtual space VS on the display device 14.

- the virtual space display data B2 is data for an image of the virtual space VS including avatar A.

- the display control unit 102 generates a video signal C1 for displaying the virtual space VS on the display device 14 based on the virtual space display data B2.

- the display control unit 102 outputs this video signal C1 to the display device 14.

- the display device 14 displays an image based on the video signal C1, whereby the virtual space VS is displayed on the display device 14.

- the imaging signal acquisition control unit 103 acquires an imaging signal C2 from the imaging device 16 via the input/output interface device 160.

- This imaging signal C2 is a signal of an image acquired by the imaging device 16 capturing an image of the user U.

- The imaging device 16 generates captured images at a predetermined frame rate, such as 60 fps or 120 fps, and the imaging signal acquisition control unit 103 sequentially acquires the imaging signal C2 of each captured image.

- the first transmission control unit 104 sequentially transmits the imaging signals C2 acquired by the imaging signal acquisition control unit 103 to the virtual space server 20 by controlling the first communication device 140. After the imaging signals C2 are converted into moving image data by compression encoding, the moving image data may be transmitted by the first transmission control unit 104 as the imaging signals C2. Any standard may be used for the compression encoding.

- the compression encoding process may be performed by any hardware such as the first processing device 100.

- All or some of the main device 12, the display device 14, and the imaging device 16 may be integrated into one device.

- Examples of the main device 12 include a portable computer, a stationary computer, and a wearable device.

- Examples of the portable computer include a notebook personal computer, a tablet computer, and a smartphone.

- An example of the wearable device is a head-mounted display.

- FIG. 3 is a block diagram showing an example of the electrical configuration of the virtual space server 20.

- the virtual space server 20 includes a second processing device 200, a second storage device 220, and a second communication device 240.

- the second processing device 200, the second storage device 220, and the second communication device 240 are connected to a bus so as to be able to transmit and receive data to and from each other.

- the second processing device 200 includes one or more CPUs.

- the CPU includes an interface with peripheral devices, an arithmetic unit, and registers, etc.

- the one or more CPUs are an example of one or more processors.

- A device including a processor and a device including a CPU are each an example of a computer.

- circuits such as a DSP, ASIC, PLD, and FPGA may be used in place of or in conjunction with the CPU.

- the second storage device 220 is a recording medium readable by the second processing device 200.

- the second storage device 220 includes a non-volatile memory and a volatile memory.

- The non-volatile memory is, for example, a ROM, an EPROM, or an EEPROM.

- the volatile memory is, for example, a RAM.

- the second storage device 220 of this embodiment stores a second program PR2, virtual space data E1, avatar data E2, and movement pattern data E3.

- the second program PR2 is a program for controlling the virtual space server 20.

- the virtual space data E1 is data for generating an image of the virtual space VS.

- the virtual space data E1 includes data for generating a three-dimensional virtual space VS and generating an image of the virtual space VS viewed in an arbitrary direction from an arbitrary viewpoint position in the virtual space VS. Furthermore, in the present disclosure, the virtual space data E1 includes data for virtually generating a situation F1 described later.

- Avatar data E2 is data for generating an image of avatar A corresponding to user U. More specifically, avatar A includes five movable parts P, each of which can move independently, as shown in FIG. 1. The five movable parts P have a one-to-one correspondence with the five main parts of the human body: the head, torso, arms, and legs. The movements of avatar A are expressed by the movements of these movable parts P. Each movable part P may include multiple movable parts P that are further subdivided based on the joints contained in the parts of the human body. Furthermore, the appearance of avatar A does not need to match the appearance of user U.

- the movement pattern data E3 is data that defines multiple movement patterns F2 that avatar A can take for each situation F1 in the virtual space VS in which avatar A is placed. Details of this movement pattern data E3 will be explained later together with the operation of the virtual space server 20.

- the second communication device 240 is hardware that functions as a transmitting/receiving device that accesses the network NW and communicates with other devices via this network NW.

- The second communication device 240 is also called, for example, a network device, a network controller, a network card, or a communication module.

- the second communication device 240 may include a connector for wired connection and an interface circuit corresponding to the connector.

- the second communication device 240 may also include a wireless communication interface.

- the virtual space server 20 of this embodiment functions as a second reception control unit 201, a virtual space control unit 202, an avatar control unit 203, and a second transmission control unit 204 by the second processing device 200 executing the second program PR2.

- the second reception control unit 201 receives data transmitted from the user device 10 by controlling the second communication device 240.

- One of the pieces of data transmitted from the user device 10 is the imaging data B1 described above.

- the virtual space control unit 202 generates an image of the virtual space VS in which avatar A is placed. Specifically, the virtual space control unit 202 reads out virtual space data E1 of the virtual space VS corresponding to the situation F1 from the second storage device 220, and generates an image of the virtual space VS based on this virtual space data E1. In addition, the virtual space control unit 202 changes the image of the virtual space VS in accordance with the operation of the user U, the movement of the user U, or the line of sight of the user U, thereby enhancing the user U's sense of immersion in the virtual space VS.

- the avatar control unit 203 is an example of a functional unit that causes the second processing device 200 to function as the avatar control device of the present disclosure.

- the avatar control unit 203 includes an avatar generation unit 2031 that reads out avatar data E2 of the avatar A corresponding to the user U from the second storage device 220 and generates an image of the avatar A based on the avatar data E2.

- the image of the avatar A is superimposed on the image of the virtual space VS by, for example, the avatar control unit 203 and the virtual space control unit 202, thereby obtaining an image of the virtual space VS including the avatar A.

- The second transmission control unit 204 controls the second communication device 240 to transmit image data of the virtual space VS including avatar A to the user device 10 as virtual space display data B2.

- the avatar control unit 203 includes, in addition to the above-mentioned avatar generation unit 2031, an imaging data acquisition unit 2032, a movement detection unit 2033, and a movement determination unit 2034 in order to reflect the movement of the user U in the movement of the avatar A.

- the imaging data acquisition unit 2032 acquires the imaging data B1 received under the control of the second reception control unit 201.

- the motion detection unit 2033 detects the motion of the user U based on the imaging data B1. Specifically, the motion detection unit 2033 detects the motion of each part of the body of the user U captured in the imaging data B1.

- A method for detecting the motion of the human body based on the imaging data B1 may be, for example, bone detection, which detects the motion of the human skeleton.

- the movement determination unit 2034 determines a movement pattern F2 to be performed by the avatar A based on the movement of the user U detected by the movement detection unit 2033 and the situation F1 in the virtual space VS by referring to the movement pattern data E3. Then, the avatar generation unit 2031 generates a plurality of images of the avatar A to represent the manner in which the relative positions of the plurality of movable parts P change in accordance with the movement pattern F2. When the plurality of images of the avatar A are each still images, the avatar generation unit 2031 may generate an animated video in which the relative positions of the plurality of movable parts P change in accordance with the movement pattern F2, based on the plurality of images of the avatar A.

- FIG. 4 is a flowchart showing an example of the operation of the virtual space server 20.

- the virtual space control unit 202 generates an image of the virtual space VS corresponding to the situation F1 based on the virtual space data E1 (step Sa1).

- situation F1 refers to the situation in which avatar A is placed.

- Situation F1 is classified by the activity performed by avatar A in virtual space VS, the location where avatar A is located, or a combination of the activity and the location.

- Activities include, for example, classes, meetings, church services, parties, concerts, festivals, and sports.

- Locations include, for example, classrooms, conference rooms, chapels, venues, parks, sports fields, or one's own room at home. Note that multiple situations F1 may be further classified by the presence or absence of avatar A of another user U in virtual space VS, the date and time, the weather, and the like.

- any one of these situations F1 is determined by selection by the user U or the virtual space server 20.

- the situation F1 may be dynamically determined based on the location where the avatar A is located. In this case, the situation F1 for each location is pre-registered in the movement pattern data E3.
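- As a minimal sketch of how a situation F1 might be represented and resolved, the following assumes a situation is an (activity, location) pair and that a situation is pre-registered per location. The names `Situation`, `SITUATION_BY_LOCATION`, and `situation_at`, and the specific location-to-activity pairings, are hypothetical and not taken from the disclosure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Situation:
    """A situation F1, classified by activity, location, or both."""
    activity: Optional[str] = None
    location: Optional[str] = None


# Hypothetical pre-registration of one situation per location.
SITUATION_BY_LOCATION = {
    "classroom": Situation(activity="class", location="classroom"),
    "chapel": Situation(activity="prayer", location="chapel"),
}


def situation_at(location, selected=None):
    """Prefer an explicit selection; otherwise use the situation registered for the location."""
    if selected is not None:
        return selected
    return SITUATION_BY_LOCATION.get(location)
```

An explicit selection by the user or the server takes priority here; the location lookup is only the dynamic fallback, and it returns `None` for an unregistered location.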

- the avatar control unit 203 executes control to display the avatar A, which moves in accordance with the movements of the user U, in the virtual space VS. That is, in the avatar control unit 203, the imaging data acquisition unit 2032 acquires imaging data B1 received under the control of the second reception control unit 201 (step Sa2).

- The motion detection unit 2033 detects the motion of the user U based on the imaging data B1 (step Sa3). More specifically, the motion detection unit 2033 detects the motion of each part of the user U's body captured in the imaging data B1. In step Sa3, if the amount of displacement of a part over a preset time period is equal to or less than a predetermined threshold, the motion detection unit 2033 excludes the motion of that part from the detection result. This process prevents minute motions of the user U, in other words noise-like motions, from being reflected in the motion of the avatar A.

- The threshold for the amount of displacement over the preset time period may be chosen as appropriate and may be set according to, for example, the size of the range of motion of the corresponding part. In addition, as long as noise-like motions can be removed from the detection result of the motion detection unit 2033, any physical quantity indicating the motion of a part, such as the frequency of displacement of the part, may be used in addition to the amount of displacement.
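- The noise exclusion in step Sa3 can be sketched as follows. This is an illustration only: the `PartMotion` type, the part names, and the threshold values are assumptions, with larger thresholds given to parts assumed to have a larger range of motion.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PartMotion:
    part: str            # body part name, e.g. "arm"
    displacement: float  # displacement over the preset time period (arbitrary units)


# Hypothetical per-part thresholds; not values from the disclosure.
THRESHOLDS = {"head": 0.02, "torso": 0.03, "arm": 0.05, "leg": 0.05}
DEFAULT_THRESHOLD = 0.03


def filter_noise(motions, thresholds=THRESHOLDS):
    """Keep only parts whose displacement exceeds their threshold.

    A displacement equal to or less than the threshold is treated as noise
    and excluded from the detection result.
    """
    return [
        m for m in motions
        if m.displacement > thresholds.get(m.part, DEFAULT_THRESHOLD)
    ]
```

For example, given displacements of 0.02 for the head, 0.30 for an arm, and 0.01 for a leg, only the arm motion would remain in the detection result.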

- the movement determination unit 2034 refers to the movement pattern data E3 to determine one movement pattern F2 from among the multiple movement patterns F2 set in the situation F1 in the virtual space VS based on the detection result of the movement of the user U (step Sa4).

- The motion pattern F2 is information that presets, for each human body movement, the motion of each of the movable parts P of the avatar A.

- A plurality of motion patterns F2 that are at least not unnatural in the situation F1 are preset for each situation F1.

- For example, when the situation F1 is "class", motions such as "raise hand", "stand", "sit", and "read" are set as the motion patterns F2.

- When the situation F1 is "prayer", motions such as "stand", "sit", "read", and "pray" are set as the motion patterns F2.

- The motion of "raise hand" is unnatural during prayer, so it is not set as a motion pattern F2 for that situation.

- The pattern "still", which indicates that the avatar A does not move, is registered for all situations F1.
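- The per-situation registration described above could be held in a simple lookup table. The sketch below uses only the two example situations from the text; the names `MOVEMENT_PATTERNS` and `patterns_for` are hypothetical, and the actual layout of the movement pattern data E3 is not specified in the disclosure.

```python
STILL = "still"

# Example situations and patterns taken from the description; layout is assumed.
MOVEMENT_PATTERNS = {
    "class": {"raise hand", "stand", "sit", "read"},
    "prayer": {"stand", "sit", "read", "pray"},
}


def patterns_for(situation):
    """Return the patterns registered for a situation, always including 'still'."""
    return set(MOVEMENT_PATTERNS.get(situation, set())) | {STILL}
```

Because "still" is added for every lookup, even a situation with no registered patterns yields a usable result.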

- The movement determination unit 2034 of the present embodiment determines one movement pattern F2 by identifying a movement pattern F2 similar to the movement of the user U from among the multiple movement patterns F2. In this case, when a movement pattern F2 similar to the movement of the user U is not registered for the situation F1, the movement determination unit 2034 determines "still" as the movement pattern F2.

- the method for determining the similarity is arbitrary. For example, the similarity is determined based on the consistency between the change in the relative positional relationship between each part of the human body and the change in the relative positional relationship between each movable part P of the avatar A.
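- Since the similarity method is left open, the following is only one possible sketch. It assumes each motion is described by a 2D change in relative position per part and uses cosine similarity with a cutoff of 0.8; the templates, part names, cutoff, and function names are all hypothetical.

```python
import math

PARTS = ("head", "torso", "arm", "leg")

# Hypothetical templates: change in relative position (dx, dy) per movable part.
TEMPLATES = {
    "raise hand": {"arm": (0.0, 1.0)},
    "stand": {"torso": (0.0, 0.5), "leg": (0.0, 0.5)},
    "sit": {"torso": (0.0, -0.5), "leg": (0.0, -0.5)},
}


def _flatten(motion):
    """Turn a {part: (dx, dy)} mapping into one fixed-order vector."""
    vec = []
    for part in PARTS:
        vec.extend(motion.get(part, (0.0, 0.0)))
    return vec


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def determine_pattern(user_motion, allowed, min_similarity=0.8):
    """Pick the most similar allowed pattern, or 'still' if none is similar enough."""
    best, best_score = "still", min_similarity
    u = _flatten(user_motion)
    for name in sorted(allowed):
        template = TEMPLATES.get(name)
        if template is None:
            continue  # no template registered for this pattern
        score = cosine(u, _flatten(template))
        if score >= best_score:
            best, best_score = name, score
    return best
```

With this sketch, an upward arm motion resolves to "raise hand" when that pattern is allowed, and to "still" when it is not registered for the situation.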

- The avatar generation unit 2031 generates multiple images of avatar A based on the movement pattern F2 determined by the movement determination unit 2034 to represent the manner in which the relative positions of the multiple movable parts P change in accordance with the movement pattern F2 (step Sa5).

- The multiple images of avatar A may be a moving image including those images. Furthermore, if the movement pattern F2 is "still", an image of avatar A in which the relative positions of the movable parts P are fixed is generated in step Sa5.

- the avatar control unit 203 and the virtual space control unit 202 generate virtual space display data B2 showing an image obtained by superimposing the image of avatar A on the image of the virtual space VS (step Sa6).

- the second communication device 240 transmits the virtual space display data B2 to the user device 10 (step Sa7).
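- The flow of steps Sa1 to Sa7 can be summarized in a short sketch. Every callable passed in below is a hypothetical stand-in for the corresponding unit of the virtual space server 20; the function name, the dictionary layout, and the stub behavior are assumptions for illustration.

```python
def handle_request(situation, imaging_data, *, render_space, detect_motion,
                   determine_pattern, render_avatar, send):
    """One pass through steps Sa1 to Sa7 using injected stand-in functions."""
    space_image = render_space(situation)            # Sa1: image of VS for situation F1
    frames = list(imaging_data)                      # Sa2: acquire imaging data B1
    motion = detect_motion(frames)                   # Sa3: detect the user's motion
    pattern = determine_pattern(motion, situation)   # Sa4: choose one movement pattern F2
    avatar_images = render_avatar(pattern)           # Sa5: avatar images following F2
    display_data = {                                 # Sa6: combine avatar and space images
        "space": space_image,
        "avatar": avatar_images,
        "pattern": pattern,
    }
    send(display_data)                               # Sa7: transmit display data B2
    return display_data


# Usage with trivial stubs in place of real rendering and detection.
sent = []
result = handle_request(
    "class",
    ["frame0", "frame1"],
    render_space=lambda s: f"space:{s}",
    detect_motion=lambda frames: "arm_up" if frames else None,
    determine_pattern=lambda m, s: "raise hand" if (m, s) == ("arm_up", "class") else "still",
    render_avatar=lambda p: [f"avatar:{p}"],
    send=sent.append,
)
```

Passing the units in as functions keeps the sketch independent of any particular detection or rendering method.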

- the virtual space server 20 of this embodiment includes the second processing device 200, which functions as the avatar control unit 203.

- the avatar control unit 203 includes a motion detection unit 2033, a motion determination unit 2034, and an avatar generation unit 2031.

- the motion detection unit 2033 detects the motion of the user U based on a captured image acquired by capturing an image of the user U.

- the motion determination unit 2034 determines one motion pattern F2 from a plurality of preset motion patterns F2 for the avatar A corresponding to the user U based on the motion detected by the motion detection unit 2033.

- the avatar generation unit 2031 generates an image of the avatar A that moves according to the motion pattern F2 determined by the motion determination unit 2034.

- the avatar A moves according to one of a plurality of preset movement patterns F2. Therefore, unnatural movements can be suppressed compared to a case where the movement of the user U is sequentially reflected in the movement of the avatar A.

- a plurality of movement patterns F2 are set in advance for each of a plurality of situations F1 in the virtual space VS. Then, the movement determination unit 2034 determines one movement pattern F2 from the plurality of movement patterns F2 corresponding to the situation F1 in the virtual space VS in which the avatar A is placed. This configuration prevents avatar A from performing actions that a third party would find unnatural because they are inappropriate for the situation F1 of the virtual space VS in which avatar A is placed.

- the situation F1 is classified by the activity that avatar A performs in the virtual space VS, the location where avatar A is located, or a combination of the activity and the location.

- an appropriate movement pattern F2 that avatar A can take for a situation F1 in the virtual space VS can be set more accurately and in detail based on activity, location, or a combination of activity and location.

- the movement detection unit 2033 excludes the movement of the user U for which the physical amount indicating the movement is equal to or less than a threshold value from the detection result of the movement of the user U.

- Minute movements of the user U, in other words noise-like movements, are prevented from being reflected in the movement of the avatar A.

- The multiple movement patterns F2 include a pattern "still", which corresponds to avatar A not moving. According to this configuration, even if the multiple movement patterns F2 do not include a movement pattern F2 that is similar to the movement of the user U, the movement determination unit 2034 can reliably determine one movement pattern F2 that does not appear unnatural by selecting the "still" pattern.

- the avatar A includes a plurality of movable parts P that can move independently of one another, and the plurality of movable parts P correspond to a plurality of parts that constitute a human body, respectively.

- the plurality of motion patterns F2 indicate changes in the relative positions of the plurality of movable parts P. According to this configuration, the corresponding movable parts P in the avatar A move in response to the movements of parts of the user U's body, thereby further enhancing the user U's sense of immersion.

- the avatar control unit 203 may generate an image of avatar A moving in accordance with the movement of user U detected by the movement detection unit 2033.

- the movement detection unit 2033 may prevent the noisy movement of user U from being reflected in the movement of avatar A by excluding the movement of user U for which the physical quantity indicating the movement is equal to or less than a threshold value from the detection results of the user U, as described above.

- prohibited movement patterns which are patterns of movements of user U that are prohibited from being reflected in the movements of avatar A

- the movement determination unit 2034 may determine that the movement pattern F2 is "still," which is a pattern corresponding to avatar A not moving.

- Each functional block may be realized using one device that is physically or logically coupled, or may be realized using two or more devices that are physically or logically separated and connected directly or indirectly (for example, by wire or wirelessly).

- the functional block may be realized by combining the one device or the multiple devices with software.

- Functions include, but are not limited to, judgment, determination, calculation, processing, derivation, investigation, search, confirmation, reception, transmission, output, access, resolution, selection, establishment, comparison, assumption, expectation, consideration, broadcasting, notifying, communicating, forwarding, configuring, reconfiguring, allocation, mapping, and assignment.

- A functional block (component) that performs transmission is called a transmitting unit or a transmitter.

- the implementation method of each is not particularly limited.

- notification of information is not limited to the aspects/embodiments described in this disclosure and may be performed using other methods.

- Notification of information may be performed by physical layer signaling (e.g., DCI (Downlink Control Information), UCI (Uplink Control Information)), higher layer signaling (e.g., RRC (Radio Resource Control) signaling, MAC (Medium Access Control) signaling, broadcast information (MIB (Master Information Block), SIB (System Information Block))), other signals, or combinations thereof.

- RRC signaling may be referred to as an RRC message, such as an RRC Connection Setup message or an RRC Connection Reconfiguration message.

- LTE (Long Term Evolution)

- LTE-A (Long Term Evolution-Advanced)

- SUPER 3G

- IMT-Advanced

- 4G (4th generation mobile communication system)

- 5G (5th generation mobile communication system)

- 6G (6th generation mobile communication system)

- xG (xth generation mobile communication system, where x is, for example, an integer or a decimal)

- FRA Frequency

- the present invention may be applicable to at least one of systems utilizing IEEE 802.11 (Wi-Fi®), IEEE 802.16 (WiMAX®), IEEE 802.20, UWB (Ultra-Wide Band), Bluetooth®, or other suitable systems, and next-generation systems that are extended, modified, created, or defined based thereon. Additionally, multiple systems may be combined (for example, a combination of at least one of LTE and LTE-A with 5G, etc.).

- Information, etc. can be output from a higher layer (or a lower layer) to a lower layer (or a higher layer). Information, etc. may be input and output via multiple network nodes.

- Input and output information, etc. may be stored in a specific location (e.g., memory) or may be managed using a management table. Input and output information, etc. may be overwritten, updated, or added to. Output information, etc. may be deleted. Input information, etc. may be sent to another device.

- the determination may be made based on a value represented by one bit (0 or 1), a Boolean value (true or false), or a comparison of numerical values (e.g., a comparison with a predetermined value).

- Software shall be construed broadly to mean instructions, instruction sets, code, code segments, program code, programs, subprograms, software modules, applications, software applications, software packages, routines, subroutines, objects, executable files, threads of execution, procedures, functions, and the like, whether referred to as software, firmware, middleware, microcode, hardware description language, or otherwise.

- Software, instructions, information, etc. may also be transmitted or received over a transmission medium.

- For example, if the software is transmitted from a website, server, or other remote source using wired technologies (such as coaxial cable, fiber optic cable, twisted pair, or Digital Subscriber Line (DSL)) and/or wireless technologies (such as infrared or microwave), then these wired and/or wireless technologies are included within the definition of transmission medium.

- Information, signals, etc. described in this disclosure may be represented using any of a variety of different technologies.

- Data, instructions, commands, information, signals, bits, symbols, chips, etc. that may be referred to throughout the above description may be represented by voltages, currents, electromagnetic waves, magnetic fields or magnetic particles, optical fields or photons, or any combination thereof.

- The channel and the symbol may be a signal (signaling).

- The signal may be a message.

- A component carrier (CC) may be called a carrier frequency, a cell, a frequency carrier, etc.

- The information, parameters, etc. described in this disclosure may be represented using absolute values, may be represented using relative values from a predetermined value, or may be represented using other corresponding information.
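To illustrate the difference between an absolute representation and a representation relative to a predetermined value, here is a minimal Python sketch. The names `RelativeValue` and `to_relative` and the numeric values in the usage lines are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RelativeValue:
    """A parameter stored as an offset from a predetermined base value."""

    base: float    # predetermined reference value (assumed known to both sides)
    offset: float  # difference between the absolute value and the base

    def to_absolute(self) -> float:
        """Recover the absolute value from the base and the offset."""
        return self.base + self.offset


def to_relative(absolute: float, base: float) -> RelativeValue:
    """Convert an absolute value into a value relative to the given base."""
    return RelativeValue(base=base, offset=absolute - base)


# Usage: an absolute value of 12.5 expressed relative to a base of 10.0.
relative = to_relative(12.5, 10.0)
restored = relative.to_absolute()
```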

- Radio resources may be indicated by an index.

- The names used for the above-mentioned parameters are not limiting in any respect.

- The formulas, etc. using these parameters may differ from those explicitly disclosed in this disclosure.

- The various channels (e.g., PUCCH, PDCCH, etc.) and information elements may be identified by any suitable names, and therefore the various names assigned to these various channels and information elements are not limiting in any respect.

- base station (BS)

- radio base station, fixed station

- NodeB

- eNodeB

- gNodeB

- A base station may also be referred to by terms such as a macro cell, small cell, femto cell, and pico cell.

- A base station may accommodate one or more (e.g., three) cells.

- The entire coverage area of the base station can be divided into multiple smaller areas, and communication services can be provided in each smaller area by a base station subsystem (e.g., a small indoor base station (RRH: Remote Radio Head)).

- The term “cell” or “sector” refers to part or all of the coverage area of at least one of the base station and the base station subsystem that provides communication services in this coverage.

- The base station transmitting information to a terminal may be interpreted as the base station instructing the terminal to control and operate based on the information.

- A mobile station may also be referred to by those skilled in the art as a subscriber station, mobile unit, subscriber unit, wireless unit, remote unit, mobile device, wireless device, wireless communication device, remote device, mobile subscriber station, access terminal, mobile terminal, wireless terminal, remote terminal, handset, user agent, mobile client, client, or some other suitable term.

- At least one of the base station and the mobile station may be called a transmitting device, a receiving device, a communication device, etc.

- At least one of the base station and the mobile station may be a device mounted on a moving object, the moving object itself, etc.

- The moving object is any movable object, and its moving speed is arbitrary. This also includes the case where the moving object is stopped.

- The moving object includes, but is not limited to, for example, a vehicle, a transport vehicle, an automobile, a motorcycle, a bicycle, a connected car, an excavator, a bulldozer, a wheel loader, a dump truck, a forklift, a train, a bus, a handcart, a rickshaw, a ship and other watercraft, an airplane, a rocket, an artificial satellite, a drone (registered trademark), a multicopter, a quadcopter, a balloon, and objects mounted thereon.

- The moving object may also be a moving object that travels autonomously based on an operation command.

- The base station may be a vehicle (e.g., a car, an airplane, etc.), an unmanned moving body (e.g., a drone, an autonomous vehicle, etc.), or a robot (manned or unmanned).

- At least one of the base station and the mobile station may include a device that does not necessarily move during communication operation.

- At least one of the base station and the mobile station may be an IoT (Internet of Things) device such as a sensor.

- The base station in the present disclosure may be read as a user terminal.

- Each aspect/embodiment of the present disclosure may be applied to a configuration in which communication between a base station and a user terminal is replaced with communication between multiple user terminals (which may be called, for example, D2D (Device-to-Device), V2X (Vehicle-to-Everything), etc.).

- The user terminal may be configured to have the functions of the above-mentioned base station.

- Terms such as “uplink” and “downlink” may be read as terms corresponding to terminal-to-terminal communication (e.g., “side”).

- The uplink channel, downlink channel, etc. may be replaced with a side channel.

- The user terminal in this disclosure may be replaced with a base station.

- The base station may be configured to have the functions of the user terminal described above.

- The terms “judgment” and “decision” as used in this disclosure may encompass a wide variety of actions. “Judgment” and “decision” may include, for example, regarding judging, calculating, computing, processing, deriving, investigating, looking up, searching, or inquiring (e.g., searching in a table, database, or other data structure), or ascertaining, as having been “judged” or “decided”. In addition, “judgment” and “decision” may include regarding receiving (e.g., receiving information), transmitting (e.g., sending information), input, output, and accessing (e.g., accessing data in memory) as having been “judged” or “decided”.

- “Judgment” and “decision” may also include regarding resolving, selecting, choosing, establishing, comparing, and the like as having been “judged” or “decided”. In other words, “judgment” and “decision” may include regarding some action as having been “judged” or “decided”. In addition, “judgment” may be interpreted as “assuming”, “expecting”, “considering”, and the like.

- The terms “connected” and “coupled”, or any variation thereof, refer to any direct or indirect connection or coupling between two or more elements, and may include the presence of one or more intermediate elements between two elements that are “connected” or “coupled” to each other.

- The coupling or connection between elements may be physical, logical, or a combination thereof.

- “Connected” may be read as “access”.

- Two elements may be considered to be “connected” or “coupled” to each other using at least one of one or more wires, cables, and printed electrical connections, as well as electromagnetic energy having wavelengths in the radio frequency range, microwave range, and optical (both visible and invisible) range, as some non-limiting and non-exhaustive examples.

- The reference signal may be abbreviated as RS (Reference Signal) or may be called a pilot depending on the applicable standard.

- 1...virtual space service system, 10...user device, 12...main device, 14...display device, 16...imaging device, 20...virtual space server, 200...second processing device (avatar control device), 203...avatar control unit, 2031...avatar generation unit, 2032...imaging data acquisition unit, 2033...movement detection unit, 2034...movement determination unit, A...avatar, B1...imaging data, E3...movement pattern data, F1...situation, F2...movement pattern, P...movable parts, U...user, VS...virtual space.

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- General Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Human Computer Interaction (AREA)

- Computer Graphics (AREA)

- Computer Hardware Design (AREA)

- Software Systems (AREA)

- Processing Or Creating Images (AREA)

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| JP2024551776A (JPWO2024085084A1) | 2022-10-21 | 2023-10-13 | |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| JP2022-168969 | 2022-10-21 | | |
| JP2022168969 | 2022-10-21 | | |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| WO2024085084A1 (ja) | 2024-04-25 |

Family

ID=90737603

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| PCT/JP2023/037207 (WO2024085084A1, ja; Ceased) | 2022-10-21 | 2023-10-13 | アバター制御装置 (Avatar control device) |

Country Status (2)

| Country | Link |
|---|---|
| JP (1) | JPWO2024085084A1 |
| WO (1) | WO2024085084A1 |

Citations (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| WO2020203999A1 (ja) * | 2019-04-01 | 2020-10-08 | Sumitomo Electric Industries, Ltd. | Communication assistance system, communication assistance method, and image control program |
| JP2021108030A (ja) * | 2019-12-27 | 2021-07-29 | GREE, Inc. | Computer program, server device, and method |

2023

- 2023-10-13: JP application JP2024551776A, published as JPWO2024085084A1 (ja); status: active, Pending

- 2023-10-13: WO application PCT/JP2023/037207, published as WO2024085084A1 (ja); status: not active, Ceased

Also Published As

| Publication number | Publication date |
|---|---|
| JPWO2024085084A1 | 2024-04-25 |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| CN113170335B (zh) | | Beam configuration method, beam configuration device, and storage medium |
| US11770724B2 (en) | | Mobile terminal for displaying whether QoS is satisfied in wireless communication system |
| US20210058838A1 (en) | | Method and apparatus for performing conditional cell change in wireless communication system |
| KR20210109567A (ko) | | Resource reservation method and apparatus |
| CN115486183B (zh) | | Communication method for PUSCH, communication device for PUSCH, and storage medium |
| JPWO2016199494A1 (ja) | | Control device, base station, terminal device, and control method |
| CN113170343B (zh) | | Beam failure detection method, beam failure detection device, and storage medium |
| CN110557847B (zh) | | Communication method, device, and storage medium |
| JP2020524967A (ja) | | Cross-carrier scheduling method and device |
| CN113170472A (zh) | | Transmission configuration indication state configuration method, device, and storage medium |
| US11901962B2 (en) | | Apparatus for radio carrier analyzation |
| JP2019176428A (ja) | | Base station and user equipment |
| WO2024085084A1 (ja) | | Avatar control device |
| CN117641501A (zh) | | Network indication and connection method, device, and terminal |
| JP2024079939A (ja) | | Image generation device |
| EP4075918B1 (en) | | Communication method and device |
| CN112351497B (zh) | | Communication method and device |
| CN119585634A (zh) | | Charging and tracking of passive radio devices |
| US20250308172A1 (en) | | Virtual object data selection apparatus |
| WO2024075512A1 (ja) | | Avatar image generation device |
| JP7757529B2 (ja) | | Display control device |
| US20240163647A1 (en) | | Call integration with computer-generated reality |
| US20250337472A1 (en) | | Apparatus and method for selecting an odd number of antenna ports for sounding reference signal resource transmission |
| WO2024250562A1 (en) | | Method, apparatus, and system for update of map or mapping configuration |
| EP4611451A1 (en) | | Method and apparatus for determining beam failure detection reference signal resources, and storage medium |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | 121 | Ep: the epo has been informed by wipo that ep was designated in this application | Ref document number: 23879727; Country of ref document: EP; Kind code of ref document: A1 |
| | WWE | Wipo information: entry into national phase | Ref document number: 2024551776; Country of ref document: JP |
| | NENP | Non-entry into the national phase | Ref country code: DE |
| | 122 | Ep: pct application non-entry in european phase | Ref document number: 23879727; Country of ref document: EP; Kind code of ref document: A1 |