WO2018058773A1 - Video data processing method and apparatus - Google Patents

Video data processing method and apparatus Download PDFInfo

- Publication number

- WO2018058773A1 WO2018058773A1 PCT/CN2016/107111 CN2016107111W WO2018058773A1 WO 2018058773 A1 WO2018058773 A1 WO 2018058773A1 CN 2016107111 W CN2016107111 W CN 2016107111W WO 2018058773 A1 WO2018058773 A1 WO 2018058773A1

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- information

- spatial

- code stream

- representation

- view

- Prior art date

Links

Images

Classifications

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/20—Servers specifically adapted for the distribution of content, e.g. VOD servers; Operations thereof

- H04N21/23—Processing of content or additional data; Elementary server operations; Server middleware

- H04N21/234—Processing of video elementary streams, e.g. splicing of video streams, manipulating MPEG-4 scene graphs

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/60—Network structure or processes for video distribution between server and client or between remote clients; Control signalling between clients, server and network components; Transmission of management data between server and client, e.g. sending from server to client commands for recording incoming content stream; Communication details between server and client

- H04N21/65—Transmission of management data between client and server

- H04N21/658—Transmission by the client directed to the server

- H04N21/6587—Control parameters, e.g. trick play commands, viewpoint selection

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/80—Generation or processing of content or additional data by content creator independently of the distribution process; Content per se

- H04N21/81—Monomedia components thereof

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/80—Generation or processing of content or additional data by content creator independently of the distribution process; Content per se

- H04N21/81—Monomedia components thereof

- H04N21/816—Monomedia components thereof involving special video data, e.g 3D video

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/80—Generation or processing of content or additional data by content creator independently of the distribution process; Content per se

- H04N21/83—Generation or processing of protective or descriptive data associated with content; Content structuring

- H04N21/84—Generation or processing of descriptive data, e.g. content descriptors

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/80—Generation or processing of content or additional data by content creator independently of the distribution process; Content per se

- H04N21/83—Generation or processing of protective or descriptive data associated with content; Content structuring

- H04N21/845—Structuring of content, e.g. decomposing content into time segments

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/80—Generation or processing of content or additional data by content creator independently of the distribution process; Content per se

- H04N21/83—Generation or processing of protective or descriptive data associated with content; Content structuring

- H04N21/845—Structuring of content, e.g. decomposing content into time segments

- H04N21/8456—Structuring of content, e.g. decomposing content into time segments by decomposing the content in the time domain, e.g. in time segments

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/80—Generation or processing of content or additional data by content creator independently of the distribution process; Content per se

- H04N21/85—Assembly of content; Generation of multimedia applications

- H04N21/858—Linking data to content, e.g. by linking an URL to a video object, by creating a hotspot

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04N—PICTORIAL COMMUNICATION, e.g. TELEVISION

- H04N21/00—Selective content distribution, e.g. interactive television or video on demand [VOD]

- H04N21/80—Generation or processing of content or additional data by content creator independently of the distribution process; Content per se

- H04N21/85—Assembly of content; Generation of multimedia applications

- H04N21/858—Linking data to content, e.g. by linking an URL to a video object, by creating a hotspot

- H04N21/8586—Linking data to content, e.g. by linking an URL to a video object, by creating a hotspot by using a URL

Definitions

- the present invention relates to the field of streaming media data processing, and in particular, to a method and an apparatus for processing video data.

- VR virtual reality

- FOV field of view

- the VR video is divided into multiple code streams corresponding to multiple fixed space objects, and each fixed space object is correspondingly based on a hypertext transfer protocol (HTTP).

- HTTP hypertext transfer protocol

- DASH Dynamic adaptive streaming over HTTP

- the terminal selects one or more fixed space objects in the video that include the space object according to the new space object after the user switches, and each fixed space object includes a part of the switched space object.

- the terminal acquires the code stream of the panoramic space object, and decodes the code stream of the one or more fixed space objects, and then presents the video content corresponding to the space object according to the new space object.

- the terminal needs to store the code stream of the panoramic space object into the local storage space, and then select the corresponding code stream according to the new space object for presentation, and the code stream corresponding to the non-presented space object is excess video data, not only It occupies the local storage space, and also causes a waste of network transmission bandwidth of video data transmission, and has poor applicability.

- the DASH technical specification is mainly composed of two major parts: the media presentation description (English: Media Presentation) Description, MPD) and media file format (English: file format).

- the server prepares multiple versions of the code stream for the same video content.

- Each version of the code stream is called a representation in the DASH standard (English: representation).

- Representation is a collection and encapsulation of one or more codestreams in a transport format, one representation containing one or more segments.

- Different versions of the code stream may have different coding parameters such as code rate and resolution, and each code stream is divided into a plurality of small files, and each small file is called segmentation (or segmentation, English: segment).

- segmentation or segmentation, English: segment

- rep1 is a high-definition video with a code rate of 4mbps (megabits per second)

- rep2 is a standard-definition video with a code rate of 2mbps

- rep3 is a standard-definition video with a code rate of 1mbps.

- the segment marked as shaded in Figure 3 is the segmentation data requested by the client.

- the first three segments requested by the client are the segments of the media representation rep3, the fourth segment is switched to rep2, and the fourth segment is requested. Segment, then switch to rep1, request the fifth segment and the sixth segment, and so on.

- Each represented segment can be stored in a file end to end, or it can be stored as a small file.

- the segment may be packaged in accordance with the standard ISO/IEC 14496-12 (ISO BMFF (Base Media File Format)) or may be encapsulated in accordance with ISO/IEC 13818-1 (MPEG-2 TS).

- the media presentation description is called MPD

- the MPD can be an xml file.

- the information in the file is described in a hierarchical manner. As shown in FIG. 2, the information of the upper level is completely inherited by the next level. Some media metadata is described in this file, which allows the client to understand the media content information in the server and can use this information to construct the request. Find the http-URL of the segment.

- media presentation is a collection of structured data for presenting media content

- media presentation description English: media presentation description

- a standardized description of media presentation files for providing streaming media services Period English: period

- representation English: representation

- a structured data set (encoded individual media types, such as audio, video, etc.) is a collection and encapsulation of one or more code streams in a transport format, one representation comprising one or more segments

- Set (English: AdaptationSet), which represents a set of multiple interchangeable coded versions of the same media content component, an adaptive set containing one or more representations

- a subset English: subset

- the information is a media unit referenced by the HTTP uniform resource locator in the media presentation description, and the segmentation information describes segmentation

- the related technical concept of the MPEG-DASH technology of the present invention can refer to the relevant provisions in ISO/IEC23009-1:2014 Information technology--Dynamic adaptive streaming over HTTP(DASH)--Part 1:Media presentation description and segment formats, You can refer to the relevant provisions in the historical standard version, such as ISO/IEC 23009-1:2013 or ISO/IEC 23009-1:2012.

- Virtual reality technology is a computer simulation system that can create and experience virtual worlds. It uses computer to generate a simulation environment. It is a multi-source information fusion interactive 3D dynamic vision and system simulation of entity behavior. The user is immersed in the environment.

- VR mainly includes Simulate aspects such as environment, perception, natural skills and sensing equipment.

- the simulation environment is a computer-generated, real-time, dynamic, three-dimensional, realistic image. Perception means that the ideal VR should have the perception that everyone has.

- there are also perceptions such as hearing, touch, force, and motion, and even smell and taste, also known as multi-perception.

- Natural skills refer to the rotation of the person's head, eyes, gestures, or other human behaviors.

- a sensing device is a three-dimensional interactive device.

- VR video or 360 degree video, or Omnidirectional video

- only the video image representation and associated audio presentation corresponding to the orientation portion of the user's head are presented.

- VR video is that the entire video content will be presented to the user; VR video is only a subset of the entire video is presented to the user (English: in VR typically only a Subset of the entire video region represented by the video pictures).

- a Spatial Object is defined as a spatial part of a content component (ega region of interest, or a tile ) and represented by either an Adaptation Set or a Sub-Representation.”

- spatial object is defined as a part of a content component, such as an existing region of interest (ROI) and tiles; spatial relationships can be described in Adaptation Set and Sub-Representation.

- ROI region of interest

- the existing DASH standard defines some descriptor elements in the MPD. Each descriptor element has two attributes, schemeIdURI and value. Where schemeIdURI describes what the current descriptor is, value Is the parameter value of the descriptor.

- SupplementalProperty and EssentialProperty SupplementalProperty and EssentialProperty (supplemental feature descriptors and basic property descriptors).

- the spatial location information may further include a spatial location corresponding to the thick wire frame in the figure and a spatial location corresponding to the thin wire frame, and the spatial object corresponding to the thick wire frame completely covers the spatial object of the thin wire frame, and the thin wire frame Space object covers part of the thick line space object;

- Method 1 using the upper and lower angular ranges of the viewing angle center position and the viewing angle to describe the spatial position in the thick line frame; using the upper and lower angular ranges of the viewing angle center position and the viewing angle to describe the spatial position in the thin line frame;

- All the spatial location information syntax in the present invention can exist independently in a box, such as a strp box. If there is an identifier in the upper level of the strp box, and the identifier strp box exists, the space position in the thick line box is described in the strp box. Information and thin wireframe space location information.

- the spatial position of the thick line frame is described by the upper and lower angular ranges of the central position of the viewing angle and the viewing angle;

- the spatial position of the thin line frame is described by the offset of the central position of the viewing angle and the upper and lower angular extent of the viewing angle;

- the spatial position of the thin line frame is described by the upper and lower angle ranges of the center position of the angle of view and the angle of view; the spatial position of the thick line frame is described by the offset of the center position of the angle of view and the range of the upper and lower angles of the angle of view.

- the spatial position information is described by the width and height of the center position and the angle of view, and the above-mentioned center position may also be replaced with the upper left starting position;

- the angular position of the spatial position described above may be replaced by a spatial position at coordinates x, y, and z of the three coordinate axes.

- Figure 6 is a schematic diagram of the spatial relationship of spatial objects.

- the image AS can be set as a content component, and AS1, AS2, AS3, and AS4 are four spatial objects included in the AS, and each spatial object is associated with a space.

- the spatial relationship of each spatial object is described in the MPD, for example, each spatial object. The relationship between the associated spaces.

- the spatial object of the view code stream can completely contain the spatial object described by the spatial information, and may also have some deviation

- the server may divide a space within a 360-degree view range to obtain a plurality of spatial objects, each spatial object corresponding to a sub-view of the user,

- the splicing of multiple sub-views forms a complete human eye viewing angle.

- the dynamic change of the viewing angle of the human eye can usually be 120 degrees * 120 degrees.

- the space object 1 corresponding to the frame 1 and the space object 1 corresponding to the frame 2 described in FIG. The server may prepare a set of video code streams for each spatial object.

- the server may obtain encoding configuration parameters of each code stream in the video, and generate a code stream corresponding to each spatial object of the video according to the encoding configuration parameters of the code stream.

- the client may request the video stream segment corresponding to a certain angle of view for a certain period of time to be output to the spatial object corresponding to the perspective when the video is output.

- the client outputs the video stream segment corresponding to all the angles of view within the 360-degree viewing angle range in the same period of time, and the complete video image in the time period can be outputted in the entire 360-degree space.

- the server may first map the spherical surface into a plane, and divide the space on the plane. Specifically, the server may map the spherical surface into a latitude and longitude plan by using a latitude and longitude mapping manner.

- FIG. 8 is a schematic diagram of a spatial object according to an embodiment of the present invention. The server can map the spherical surface into a latitude and longitude plan, and divide the latitude and longitude plan into a plurality of spatial objects such as A to I.

- the server may also map the spherical surface into a cube, expand the plurality of faces of the cube to obtain a plan view, or map the spherical surface to other polyhedrons, and expand the plurality of faces of the polyhedron to obtain a plan view or the like.

- the server can also map the spherical surface to a plane by using more mapping methods, which can be determined according to the requirements of the actual application scenario, and is not limited herein. The following will be described in conjunction with FIG. 8 in a latitude and longitude mapping manner.

- each spatial object corresponds to one sub-view

- each set of DASH code streams corresponding to each spatial object is for each sub-view.

- Viewing stream The spatial information of the spatial objects associated with each image in a view code stream is the same, whereby the view code stream can be set as a static view code stream.

- the view code stream of each sub-view is part of the entire video stream, and the view code streams of all sub-views constitute a complete video stream.

- the DASH code stream corresponding to the corresponding spatial object may be selected for playing according to the viewing angle currently viewed by the user.

- the client can determine the DASH code stream corresponding to the target space object of the switch according to the new perspective selected by the user, and then switch the video play content to the DASH code stream corresponding to the target space object.

- the embodiment of the invention provides a method and a device for processing video data based on HTTP dynamic adaptive streaming media, which can save transmission bandwidth resources of video data, improve flexibility and applicability of video presentation, and enhance user experience of video viewing.

- the first aspect provides a method for processing video data based on HTTP dynamic adaptive streaming, which may include:

- the media presentation description includes information of at least two representations, a first representation of the at least two representations is an author view code stream, and the author view code stream includes a plurality of images, The spatial information of the spatial object associated with at least two of the plurality of images is different, the second representation of the at least two representations is a static view code stream, and the static view code stream includes a plurality of images, the plurality of The spatial information of the spatial objects associated with the images is the same;

- the segment of the first representation is acquired, otherwise, the segment of the second representation is obtained.

- the embodiment of the present invention may describe the author view code stream and the static view code stream in the media presentation description, wherein the spatial information of the spatial object associated with the image included in the author view code stream may dynamically change, and the static view code stream includes The spatial information of the spatial object associated with the image does not change.

- the embodiment may select a segment of the corresponding code stream from the author view code stream and the lens view code stream, thereby improving the flexibility of the stream stream segment selection and enhancing the video viewing.

- User experience The embodiment of the invention obtains the segmentation of the corresponding code stream from the author view code stream and the static view code stream, and does not need to acquire all segments, which can save the transmission bandwidth resource of the video data and enhance the applicability of the data processing.

- the media presentation description further includes identifier information, where the identifier information is used to identify an author view code stream of the video.

- the media presentation description includes information of an adaptation set

- the adaptation set is used to describe attributes of media data segments of the plurality of replaceable coded versions of the same media content component.

- the information of the adaptive set includes the identifier information.

- the media presentation description includes information indicating, the representation being a set and encapsulation of one or more code streams in a transmission format

- the information that is represented includes the identifier information.

- the media presentation description includes information about a descriptor, and the descriptor is used to describe spatial information of a spatial object to which the association is associated;

- the information of the descriptor includes the identifier information.

- the embodiment of the invention can add the identifier information of the author view code stream in the media program description, which can improve the recognizability of the author view code stream.

- the embodiment of the present invention may also carry the identification information of the author's view code stream in the information of the adaptive set of the media presentation description, or the information of the representation of the media presentation description, or the information of the description of the media presentation description, and the operation is flexible. High applicability.

- the server needs to add a syntax element corresponding to the author view code stream when generating the MPD, and the client may obtain the author view code stream information according to the syntax element.

- a representation for describing the author's view code stream may be added to the MPD, and the first representation is set.

- a representation existing in the MPD for describing a static view stream may be referred to as a second representation.

- the representation of several possible MPD syntax elements is as follows. It can be understood that the MPD example of the embodiment of the present invention only shows the relevant part of the existing standard that modifies the syntax element of the MPD in the existing standard, and does not show all the syntax elements of the MPD file. The technical solution of the embodiment of the present invention can be applied by the operator in combination with the relevant provisions in the DASH standard.

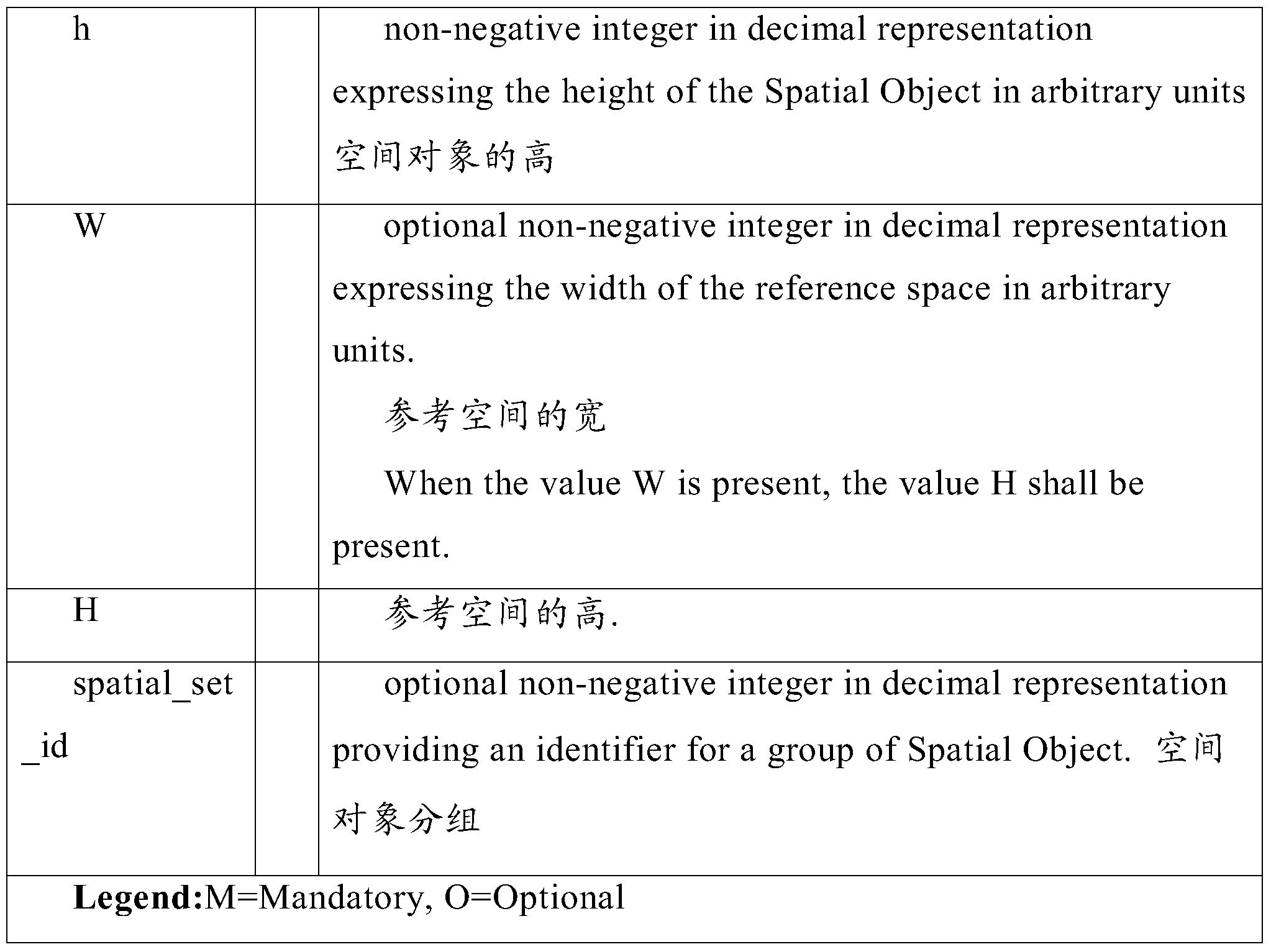

- Table 2 is an attribute information table of the newly added syntax element:

- the attribute @view_type is used to mark whether the corresponding representation is a non-author view (or static view) code stream or an author view (or dynamic view) code stream.

- the view_type value is 0, it indicates that the corresponding representation is a non-author view code stream; when the view_type value is 1, it indicates that the corresponding representation is the author view code stream.

- the client parses the MPD file locally, it can determine whether the current video stream contains the author view stream according to the attribute.

- Example 1 Described in the MPD descriptor

- the server can insert a new value at the position where the EssentialProperty of the existing MPD syntax contains the second value of the value attribute, the second value of the original value, and the second value.

- the value is followed by a value.

- Example 2 Described in the representation

- the syntax element view_type has been added to the property information of Representation.

- Example 3 Described in the attribute information of the adaptation set (adaptationSet)

- the syntax element view_type is added to the property information of the AdaptationSet (ie, the attribute information of the code stream set where the author's view stream is located).

- description information of the independent file of the spatial information may also be added, such as adding an adaptation set, and describing the information of the spatial information file in the adaptation set;

- the segment of the first representation carries spatial information of a spatial object associated with an image included in the segment of the first representation

- the method further includes:

- the spatial information of the spatial object associated with the image is a spatial relationship of the spatial component and the content component associated with the spatial object.

- the spatial information is carried in a designated box in the segment of the first representation or in a specified box in a metadata representation associated with the segment of the first representation.

- the specified box is a trun box included in a segment of the first representation, and the trun box is used to describe a set of consecutive samples of a track.

- the embodiment of the present invention may add spatial information of a spatial object associated with an image included in an author view code stream in an author view code stream (specifically, a segment in an author view code stream), for the client to view the code stream according to the author view.

- the spatial information of the spatial object associated with the image contained in the segmentation performs segmentation switching of the author view code stream, or switching between the author view code stream and the static view code stream, thereby improving the applicability of code stream switching and enhancing the client user.

- the server may further add spatial information of one or more author space objects in the author view code stream.

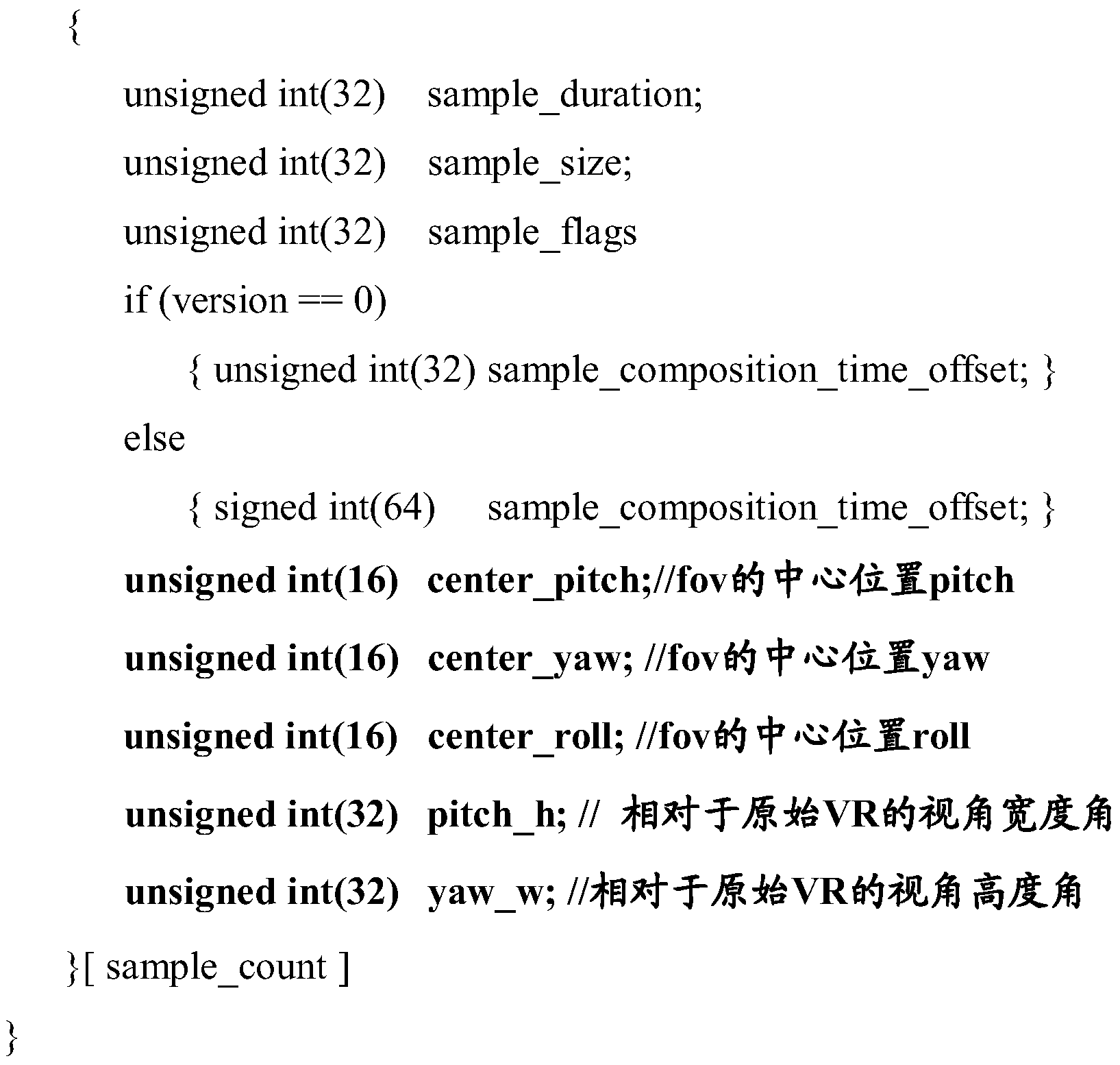

- the server may add the above spatial information to the trun box in the existing file format for describing the spatial information of the spatial object associated with each frame image of the author view code stream.

- the server may add the syntax element tr_flags to the existing trun box and set the value of tr_flags to 0x001000, and the spatial information for marking the relative position of the preset space object in the global space object is included in Trun box.

- FIG. 11 is a schematic illustration of the relative positions of author space objects in a panoramic space.

- the point O is the center of the sphere corresponding to the 360-degree VR panoramic video spherical image, and can be regarded as the position of the human eye when viewing the VR panoramic image.

- Point A is the center point of the author's perspective image

- C and F are the boundary points of the A-point of the author's perspective image along the horizontal coordinate axis of the image

- E and D are the points along the longitudinal axis of the image in the image of the author's perspective.

- Boundary point B is the projection point of point A along the spherical meridian on the equator line

- I is the starting coordinate point in the horizontal direction on the equator line.

- Center_pitch the center position of the image of the author space object is mapped to the vertical direction of the point on the panoramic spherical (ie global space) image, such as ⁇ AOB in FIG. 11;

- Center_yaw the center position of the image of the author space object is mapped to the horizontal deflection angle of the point on the panoramic spherical image, as shown in FIG. 11 ⁇ IOB;

- Center_roll the center position of the image of the author space object is mapped to the rotation angle of the point on the panoramic spherical image and the direction of the connection of the spherical center, as shown in FIG. 11 ⁇ DOB;

- Pitch_h the image of the author's spatial object in the field of view of the panoramic spherical image, expressed as the maximum angle of the field of view, as shown in Figure 11 ⁇ DOE; yaw_w: the image of the author's spatial object in the field of view of the panoramic spherical image, with the field of view

- ⁇ COF the horizontal maximum angle

- the unsigned int (16) may not be included in the trun box.

- the flag identifier and the spatial location information may not exist in the same box.

- the flag may exist in the upper level box of the box where the spatial location information is located, such as in the newly added box and its syntax description information.

- strp box is a box describing spatial information

- the box above the box is stbl (or other)

- add a flag description in stbl if the flag description contains strp box, Then the strp box can be parsed in stbl.

- the server may also add a new box and its syntax description to the video format for describing the spatial information of the author space object.

- a new box and its syntax description information are as follows (example 2):

- the information contained in the strp box is the spatial information of the newly added author space object, and the meaning of each syntax element included is the same as the meaning of each syntax element included in the above example 1.

- the "unsigned int(16)center_roll;//fov center position roll" in the box may not exist in the example, and may be determined according to actual application scenario requirements, and is not limited herein.

- the above-mentioned strp box can be included in the stbl box, and the flag in the stbl box is marked as the spatial location information strp box.

- the upper box of the specific strp box can be determined according to the actual application scenario requirements, and no limitation is imposed here.

- the strp box described above may be described in the metadata (traf) of the segmentation of the DASH, or may be in the metadata (matadata) of a track based on the ISOBMAF format package.

- the above strp box may also be included in a separate metadata stream or track associated with the author's view stream, and the sample contained in the metadata stream or track is spatial information related to the author's view stream. ;

- the spatial information in the present invention may be the yaw angle and the width of the center of the angle of view; or may be expressed as the yaw angle at the upper left of the angle of view and the yaw angle at the lower right.

- a flag is extended in a parameter set of the code stream, and the flag value is 1, indicating that the spatial position information of the current frame is included in the code stream data of each frame;

- the code stream data contains spatial position information of the current frame.

- the semantics of the attributes describing the spatial location information are consistent with the syntax semantics of the trun box, strp box.

- the spatial location information is encapsulated in SEI (Supplemental enhancement information)

- the ROI in the above grammar indicates a specific value, such as 190, which is not limited herein.

- the semantics of the ROI and the syntax of the trun box and the strp box are consistent.

- ROI_payload(payloadSize) description method one:

- the spatial information is described by the center point position yaw angle (center_pitch and center_yaw) or the center position yaw angle offset (center_pitch_offset and center_yaw_offset) and the spatial position width angle (pitch_w) and the elevation angle (pitch_h).

- the yaw angle of the upper left position of the space object and the yaw angle of the lower right position can also be used to describe.

- the second aspect provides a processing device for video data based on HTTP dynamic adaptive streaming, which may include:

- a receiving module configured to receive a media presentation description, where the media presentation description includes at least two representations, the first representation of the at least two representations is an author view code stream, and the author view code stream includes multiple The spatial information of the spatial object associated with at least two of the plurality of images is different; the second representation of the at least two representations is a static view code stream, and the static view code stream includes multiple An image, wherein spatial information of the spatial objects associated with the plurality of images is the same;

- the obtaining module is further configured to: when the obtained instruction information is a viewing author view code stream And acquiring the segment of the first representation, otherwise acquiring the segment of the second representation.

- the media presentation description further includes identifier information, where the identifier information is used to identify an author view code stream of the video.

- the media presentation description includes information of an adaptation set

- the adaptation set is used to describe attributes of media data segments of the plurality of replaceable coded versions of the same media content component.

- the information of the adaptive set includes the identifier information.

- the media presentation description includes information indicating, the representation being a set and encapsulation of one or more code streams in a transmission format

- the information that is represented includes the identifier information.

- the media presentation description includes information about a descriptor, and the descriptor is used to describe spatial information of a spatial object to which the association is associated;

- the information of the descriptor includes the identifier information.

- the segment of the first representation carries spatial information of a spatial object associated with an image included in the segment of the first representation

- the obtaining module is further configured to:

- the spatial information of the spatial object associated with the image is a spatial relationship of the spatial component and its associated content component.

- the spatial information is carried in a specified box in the segment of the first representation, or in a specified box in a metadata representation associated with the segment of the first representation.

- the specified box is a trun box included in a segment of the first representation, and the trun box is used to describe a set of consecutive samples of a track.

- the embodiment of the present invention may describe the author view code stream and the static view code stream in the media presentation description, wherein the spatial information of the spatial object associated with the image included in the author view code stream may dynamically change, and the static view code stream includes The spatial information of the spatial object associated with the image does not change.

- the embodiment of the present invention can select a segment of the corresponding code stream from the author view code stream and the lens view code stream according to the obtained instruction information, improve the flexibility of the code stream segment selection, and enhance the user experience of the video view.

- the embodiment of the invention obtains the segmentation of the corresponding code stream from the author view code stream and the static view code stream, and does not need to acquire all segments, which can save the transmission bandwidth resource of the video data and enhance the applicability of the data processing.

- the embodiment of the present invention may add spatial information of a spatial object associated with an image included in an author view code stream in an author view code stream (specifically, a segment in an author view code stream), for the client to view the code stream according to the author view.

- the spatial information of the spatial object associated with the image contained in the segmentation performs segmentation switching of the author view code stream, or switching between the author view code stream and the static view code stream, thereby improving the applicability of code stream switching and enhancing the client user.

- FIG. 1 is a schematic diagram of an example of a framework for DASH standard transmission used in system layer video streaming media transmission

- FIG. 2 is a schematic structural diagram of an MPD transmitted by a DASH standard used for system layer video streaming media transmission

- FIG. 3 is a schematic diagram of switching of a code stream segment according to an embodiment of the present invention.

- FIG. 4 is a schematic diagram of a segmentation storage manner in code stream data

- 5 is another schematic diagram of a segmentation storage manner in code stream data

- Figure 6 is a schematic diagram of the spatial relationship of spatial objects

- FIG. 7 is a schematic view of a perspective corresponding to a change in viewing angle

- Figure 8 is another schematic diagram of the spatial relationship of spatial objects

- FIG. 9 is a schematic flowchart of a method for processing video data based on HTTP dynamic adaptive streaming media according to an embodiment of the present invention.

- Figure 10 is another schematic diagram of the spatial relationship of spatial objects

- Figure 11 is a schematic illustration of the relative positions of author space objects in a panoramic space

- FIG. 12 is a schematic structural diagram of a device for processing video data based on HTTP dynamic adaptive streaming media according to an embodiment of the present invention.

- FIG. 1 is a schematic diagram of a frame example of DASH standard transmission used in system layer video streaming media transmission.

- the data transmission process of the system layer video streaming media transmission scheme includes two processes: a server side (such as an HTTP server, a media content preparation server, hereinafter referred to as a server) generates media data for video content, responds to a client request process, and a client ( The process of requesting and obtaining media data from a server, such as an HTTP streaming client.

- the media data includes a media presentation description (MPD) and a media stream.

- MPD media presentation description

- the MPD on the server includes a plurality of representations (also called presentations, English: representation), each representation describing a plurality of segments.

- the HTTP streaming request control module of the client obtains the MPD sent by the server, analyzes the MPD, determines the information of each segment of the video code stream described in the MPD, and further determines the segment to be requested, and sends the corresponding segment to the server.

- the segment's HTTP request is decoded and played through the media player.

- the media data generated by the server for the video content includes a video stream corresponding to different versions of the same video content, and an MPD of the code stream.

- the server generates a low-resolution low-rate low frame rate (such as 360p resolution, 300kbps code rate, 15fps frame rate) for the video content of the same episode, and a medium-rate medium-rate high frame rate (such as 720p).

- Resolution 1200 kbps, 25 fps frame rate, high resolution, high bit rate, high frame rate (such as 1080p resolution, 3000 kbps, 25 fps frame rate).

- FIG. 2 is a schematic structural diagram of an MPD of a system transmission scheme DASH standard.

- each of the information indicating a plurality of segments is described in time series, for example, Initialization Segment, Media Segment 1, Media Segment2, ..., Media Segment20, etc.

- the representation may include segmentation information such as a playback start time, a playback duration, and a network storage address (for example, a network storage address expressed in the form of a Uniform Resource Locator (URL)).

- URL Uniform Resource Locator

- the client In the process of the client requesting and obtaining the media data from the server, when the user selects to play the video, the client obtains the corresponding MPD according to the video content requested by the user to the server.

- the client sends a request for downloading the code stream segment corresponding to the network storage address to the server according to the network storage address of the code stream segment described in the MPD, and the server sends the code stream segment to the client according to the received request.

- the client After the client obtains the stream segment sent by the server, it can perform decoding, playback, and the like through the media player.

- the system layer video streaming media transmission scheme adopts the DASH standard, and realizes the transmission of video data by analyzing the MPD by the client, requesting the video data to the server as needed, and receiving the data sent by the server.

- FIG. 3 is a schematic diagram of switching of a code stream segment according to an embodiment of the present invention.

- Service Three different versions of code stream data can be prepared for the same video content (such as a movie), and three different versions of the code stream data are described in the MPD using three Representations.

- the above three Representations (hereinafter referred to as rep) can be assumed to be rep1, rep2, rep3, and the like.

- rep1 is a high-definition video with a code rate of 4mbps (megabits per second)

- rep2 is a standard-definition video with a code rate of 2mbps

- rep3 is a normal video with a code rate of 1mbps.

- Each rep segment contains a video stream within a time period.

- each rep describes the segments of each time segment according to the time series, and the segment lengths of the same time period are the same, thereby enabling content switching of segments on different reps.

- the segment marked as shadow in the figure is the segmentation data requested by the client, wherein the first 3 segments requested by the client are segments of rep3, and the client may request rep2 when requesting the 4th segment.

- the fourth segment in the middle can be switched to play on the fourth segment of rep2 after the end of the third segment of rep3.

- the playback end point of the third segment of Rep3 (corresponding to the time end of the playback time) is the playback start point of the fourth segment (corresponding to the time start time of playback), and also rep2 or rep1.

- the playback start point of the 4th segment is used to achieve alignment of segments on different reps. After the client requests the 4th segment of rep2, it switches to rep1, requests the 5th segment and the 6th segment of rep1, and so on. Then you can switch to rep3, request the 7th segment of rep3, then switch to rep1, request the 8th segment of rep1.

- Each rep segment can be stored in a file end to end, or it can be stored as a small file.

- the segment may be packaged in accordance with the standard ISO/IEC 14496-12 (ISO BMFF (Base Media File Format)) or may be encapsulated in accordance with ISO/IEC 13818-1 (MPEG-2 TS). It can be determined according to the requirements of the actual application scenario, and no limitation is imposed here.

- FIG. 4 is a schematic diagram of a segment storage mode in the code stream data; All the segments on the same rep are stored in one file, as shown in Figure 5.

- Figure 5 is another schematic diagram of the segmentation storage mode in the code stream data.

- each segment in the segment of repA is stored as a file separately, and each segment in the segment of repB is also stored as a file separately.

- the server may describe each segment in the form of a template or a list in the MPD of the code stream. URL and other information.

- the server may use an index segment (English: index segment, that is, sidx in FIG. 5) in the MPD of the code stream to describe related information of each segment.

- the index segment describes the byte offset of each segment in its stored file, the size of each segment, and the duration of each segment (duration, also known as the duration of each segment).

- the spatial area of the VR video is a 360-degree panoramic space (or omnidirectional space), which exceeds the normal of the human eye.

- the visual range therefore, the user will change the viewing angle (ie, the angle of view, FOV) at any time while watching the video.

- the viewing angle of the user is different, and the video images seen will also be different, so the content presented by the video needs to change as the user's perspective changes.

- FIG. 7 is a schematic diagram of a perspective corresponding to a change in viewing angle.

- Box 1 and Box 2 are two different perspectives of the user, respectively.

- the user can switch the viewing angle of the video viewing from the frame 1 to the frame 2 through the operation of the eye or the head rotation or the screen switching of the video viewing device.

- the video image viewed when the user's perspective is box 1 is a video image presented by the one or more spatial objects corresponding to the perspective at the moment.

- the user's perspective is switched to box 2.

- the video image viewed by the user should also be switched to the video image presented by the space object corresponding to box 2 at that moment.

- the server may divide the panoramic space within a 360-degree viewing angle range to obtain a plurality of spatial objects, each spatial object corresponding to a sub-view of the user.

- the splicing of multiple sub-views forms a complete human eye viewing angle. That is, the human eye angle of view (hereinafter referred to as the angle of view) may correspond to one or more spatial objects, and the spatial objects corresponding to the angle of view are all spatial objects corresponding to the content objects within the scope of the human eye.

- the viewing angle of the human eye can be dynamically changed, and the spatial object corresponding to the content object in the range of the human eye angle of 120 degrees*120 degrees and 120 degrees*120 degrees may include one or There are a plurality of, for example, the viewing angle 1 corresponding to the frame 1 described in FIG. 7 and the viewing angle 2 corresponding to the frame 2.

- the client may obtain the spatial information of the video code stream prepared by the server for each spatial object through the MPD, and then request the video code stream corresponding to one or more spatial objects in a certain period of time according to the requirement of the perspective.

- the segment outputs the corresponding spatial object according to the perspective requirements.

- the client outputs the video stream segment corresponding to all the spatial objects within the 360-degree viewing angle range in the same time period, and then displays the complete video image in the entire 360-degree panoramic space.

- the server may first map the spherical surface into a plane, and divide the spatial object on the plane. Specifically, the server may map the spherical surface into a latitude and longitude plan by using a latitude and longitude mapping manner.

- FIG. 8 is a schematic diagram of a spatial object according to an embodiment of the present invention. The server can map the spherical surface into a latitude and longitude plan, and divide the latitude and longitude plan into a plurality of spatial objects such as A to I.

- the server may also map the spherical surface into a cube, expand the plurality of faces of the cube to obtain a plan view, or map the spherical surface to other polyhedrons, and expand the plurality of faces of the polyhedron to obtain a plan view or the like.

- the server can also map the spherical surface to a plane by using more mapping methods, which can be determined according to the requirements of the actual application scenario, and is not limited herein. The following will be described in conjunction with FIG. 8 in a latitude and longitude mapping manner. As shown in FIG. 8, after the server can divide the spherical panoramic space into a plurality of spatial objects such as A to I, a set of DASH code streams can be prepared for each spatial object.

- each spatial object corresponds to a set of DASH code streams.

- the client user switches the viewing angle of the video viewing, the client can obtain the code stream corresponding to the new spatial object according to the new perspective selected by the user, and then the video content of the new spatial object code stream can be presented in the new perspective.

- FIG. 9 is a schematic flowchart diagram of a method for processing video data according to an embodiment of the present invention.

- the method provided by the embodiment of the present invention includes the following steps:

- instruction information is a viewing author view code stream, acquiring the segment of the first representation, and otherwise acquiring the segment of the second representation.

- the video image to be presented to the user at each play time during video playback can be set according to the above-mentioned main plot route, and the video sequence of each play time can be obtained by stringing the time series to obtain the above main plot route.

- Storyline The video image to be presented to the user at each of the playing times may be presented with a spatial object corresponding to each playing time, that is, a video image to be presented in the time period is presented on the spatial object.

- the angle of view corresponding to the video image to be presented at each of the playing times may be set as the author's perspective

- the spatial object that presents the video image in the perspective of the author may be set as the author space object.

- the code stream corresponding to the author view object can be set as the author view code stream.

- the author's view stream contains multiple video frames, and each video frame can be rendered as one image, that is, the author's view stream contains multiple images.

- the image presented by the author's perspective is only part of the panoramic image (or VR image or omnidirectional image) that the entire video is to present.

- the spatial information of the spatial object associated with the image presented by the author video stream may be different or the same, that is, the spatial information of the spatial object associated with at least two images of the plurality of images included in the author's perspective stream. different.

- the corresponding code stream can be prepared by the server for the author perspective of each play time.

- the code stream corresponding to the author view may be set as the author view code stream.

- the server may encode the author view code stream and transmit it to the client.

- the story scene picture corresponding to the author view code stream may be presented to the user.

- the server does not need to transmit the code stream of other perspectives other than the author's perspective (set to the non-author perspective, that is, the static view stream) to the client, which can save resources such as the transmission bandwidth of the video data.

- the author's perspective is a spatial object that presents the preset image according to the video storyline

- the author's space object at different playing moments may be different or the same, thereby knowing that the author's perspective is one.

- the author A spatial object is a dynamic spatial object with a constantly changing position, that is, the position of the author space object corresponding to each play time is different in the panoramic space.

- Each of the spatial objects shown in FIG. 8 is a spatial object divided according to a preset rule, and is a spatial object fixed in a relative position in the panoramic space.

- the author space object corresponding to any play time is not necessarily fixed as shown in FIG. 8.

- One of the spatial objects and a spatial object whose relative position is constantly changing in the global space.

- the content presented by the video obtained by the client from the server is stringed by each author's perspective. It does not contain the spatial object corresponding to the non-author perspective.

- the author view code stream only contains the content of the author space object, and the MPD obtained from the server does not.

- the spatial information of the author's spatial object containing the author's perspective the client can only decode and present the code stream of the author's perspective. If the viewing angle of the viewing is switched to the non-author perspective during the video viewing process, the client cannot present the corresponding video content to the user.

- the embodiment of the invention modifies the MPD file and the video file format (file format) of the video provided in the DASH standard, so as to realize the video content presentation in the process of switching between the author perspective and the non-author perspective in the video playback process.

- the modification of the DASH MPD file provided by the present invention can also be carried in a .m3u8 file defined by the HTTP protocol-based real-time stream (English: Http Live Streaming, HLS) or a smooth stream (English: Smooth Streaming, SS.ismc file)

- HTTP protocol-based real-time stream English: Http Live Streaming, HLS

- a smooth stream English: Smooth Streaming, SS.ismc file

- SDP session description protocol

- the modification of the file format can also be applied to the file format of ISOBMFF or MPEG2-TS, which can be determined according to the requirements of the actual application scenario.

- the embodiment of the present invention will be described by taking the above identification information in the DASH code stream as an example.

- the identifier information may be added to the media presentation description for identifying the author view code stream of the video, that is, the author view code stream.

- the identifier information may be carried in the attribute information of the code stream set in which the author view code stream is carried in the media presentation description, that is, the identifier information may be carried in the information of the adaptive set in the media presentation description, where the identifier is

- the information can also be carried in the information contained in the presentation contained in the media presentation description. Further, the foregoing identification information may also be carried in the information of the descriptor in the media presentation description.

- the client can quickly obtain the code stream of the author view code stream and the non-author view by parsing the MPD to obtain the syntax elements added in the MPD.

- the specific modification or added syntax is described in Table 2 below, and Table 2 is new.

- Table 2 The attribute information table of the grammar element:

- the attribute @view_type is used to mark whether the corresponding representation is a non-author view (or static view) code stream or an author view (or dynamic view) code stream.

- the view_type value is 0, it indicates that the corresponding representation is a non-author view code stream; when the view_type value is 1, it indicates that the corresponding representation is the author view code stream.

- the client parses the MPD file locally, it can determine whether the current video stream contains the author view stream according to the attribute.

- Example 1 Described in the MPD descriptor

- the server can insert a new value at the position where the EssentialProperty of the existing MPD syntax contains the second value of the value attribute, the second value of the original value, and the second value.

- the value is followed by a value.

- the client parses the MPD, it can obtain the second value of the value. That is, in this example, the second value of value is view_type.

- Example 2 Described in the representation

- the syntax element view_type has been added to the property information of Representation.

- Example 3 Described in the attribute information of the adaptation set (adaptationSet)

- the syntax element view_type is added to the property information of the AdaptationSet (ie, the attribute information of the code stream set where the author's view stream is located).

- the space may be added.

- Descriptive information of the information file such as adding an adaptation set, describing the information of the spatial information file in the adaptation set;

- the client can determine the author view code stream according to the identification information such as the view_type carried in the MPD by parsing the MPD. Further, the client may obtain a segment in the author view code stream when the received instruction information indicates to view the author view code stream, and present a segment of the author view code stream. If the instruction information indicates that the author's view code stream is not viewed, the segment of the static view code stream may be acquired for presentation. If the spatial information related to the author view stream is encapsulated in a separate metadata file, the client can parse the MPD and obtain the metadata of the spatial information according to the codec identifier, thereby parsing the spatial information;

- the switching instruction information received by the client may include the above-mentioned head rotation, eyes, gestures, or other human behavior action information, and may also include input information of the user, and the input information may include keyboard input information. , voice input information and touch screen input information.

- the server may also add spatial information of one or more author space objects to the author view code stream.

- each author space object corresponds to one or Multiple images, ie one or more images, may be associated with the same spatial object, or each image may be associated with one spatial object.

- the server can add the spatial information of each author space object in the author view code stream, and can also use the space information as a sample and independently encapsulate it in a track or file.

- the spatial information of an author space object is the spatial relationship between the author space object and its associated content component, that is, the spatial relationship between the author space object and the panoramic space.

- the space described by the spatial information of the author space object may specifically be a partial space in the panoramic space, such as any one of the above-mentioned FIG. 8 or the solid line frame (or any one of the dotted lines) in FIG.

- the server may add the foregoing spatial information to the trun box included in the segment of the author view code stream in the existing file format, and describe each frame image of the author view code stream.

- the spatial information of the associated spatial object may be a partial space in the panoramic space, such as any one of the above-mentioned FIG. 8 or the solid line frame (or any one of the dotted lines) in FIG.

- the server may add the foregoing spatial information to the trun box included in the segment of the author view code stream in the existing file format, and describe each frame image of the author view code stream.

- the spatial information of the associated spatial object may be a partial space in the panoramic space, such as any one of the above-mentioned FIG. 8 or the solid line frame (or any one of the dotted lines)

- the server may add the syntax element tr_flags to the existing trun box and set the value of tr_flags to 0x001000, and the spatial information for marking the relative position of the preset space object in the global space object is included in Trun box.

- the spatial information of the author space object included in the trun box described above is described by a yaw angle, or the spatial position description of the latitude and longitude map may be used, or other geometric solid figures may be used for description. limit.

- the trun box described above uses yaw angle descriptions such as center_pitch, center_yaw, center_roll, pitch_h, and yaw_w to describe the center position (center_pitch, center_yaw, center_roll), height (pitch_h), width yaw_w yaw angle of the spatial information in the sphere.

- Figure 11 is a schematic illustration of the relative positions of author space objects in a panoramic space. In FIG.

- the point O is the center of the sphere corresponding to the 360-degree VR panoramic video spherical image, and can be regarded as the position of the human eye when viewing the VR panoramic image.

- Point A is the center point of the author's perspective image

- C and F are the boundary points of the A-point of the author's perspective image along the horizontal coordinate axis of the image

- E and D are the points along the longitudinal axis of the image in the image of the author's perspective.

- Boundary point, B is the projection point of point A along the spherical meridian on the equator line

- I is the starting coordinate point in the horizontal direction on the equator line. The meaning of each element is explained as follows:

- Center_pitch the center position of the image of the author space object is mapped to the vertical direction of the point on the panoramic spherical (ie global space) image, such as ⁇ AOB in FIG. 11;

- Center_yaw the center position of the image of the author space object is mapped to the horizontal deflection angle of the point on the panoramic spherical image, as shown in FIG. 11 ⁇ IOB;

- Center_roll the center position of the image of the author space object is mapped to the rotation angle of the point on the panoramic spherical image and the direction of the connection of the spherical center, as shown in FIG. 11 ⁇ DOB;

- Pitch_h the image of the author's spatial object in the field of view of the panoramic spherical image, expressed as the maximum angle of the field of view, as shown in Figure 11 ⁇ DOE; yaw_w: the image of the author's spatial object in the field of view of the panoramic spherical image, with the field of view

- ⁇ COF the horizontal maximum angle

- the server may also add a new box and its syntax description to the video format for describing the spatial information of the author space object.

- a new box and its syntax description information are as follows (example 2):

- the information contained in the strp box is the spatial information of the newly added author space object, and the meaning of each syntax element included is the same as the meaning of each syntax element included in the above example 1.

- the "unsigned int(16)center_roll;//fov center position roll" in the box may not exist in the example, and may be determined according to actual application scenario requirements, and is not limited herein.

- the above-mentioned strp box can be included in the stbl box, and the flag in the stbl box is marked as the spatial location information strp box.

- the upper box of the specific strp box can be determined according to the actual application scenario requirements, and no limitation is imposed here.

- the strp box described above may be described in the metadata (traf) of the segmentation of the DASH, or in the metadata (matadata) of a track based on the ISOBMAF format.

- Spatial information (fov center position and width and height, or fov upper left) described in the above various embodiments

- the location and the lower right position may also be included in a separate metadata stream or trajectory associated with the author's view stream, each spatial information corresponding to a metadata trajectory or a sample in the metadata file.

- the client parses the segment of the author's view code stream, and obtains the spatial information of the author space object, and then determines the relative position of the author space object in the panoramic space, and then can be in the video playback process according to the currently viewed author view code stream.

- the space information of the author's spatial object and the trajectory of the perspective switching determine the position of the spatial object after the perspective switching, so as to realize the switching between the author's perspective and the non-author's perspective stream.

- the spatial information of the author's spatial object can also be analyzed by the analytic element. Data track or metadata file acquisition.

- the spatial information of the non-author perspective after the switching may be determined according to the spatial information of the author's perspective to obtain the non-author.

- the code stream corresponding to the spatial information of the perspective is presented.

- the client may set the center position of the author space object determined above or the specified boundary position included in the author space object as a starting point, or a position corresponding to the upper left and the lower right of the space object, for example, the above center_pitch, center_yaw, center_roll , pitch_h and

- the position indicated by one or more parameters in yaw_w is set as the starting point.

- the client may calculate the end point space object indicated by the space object switching trajectory according to the spatial object switching trajectory switched by the viewing angle, and determine the end point space object as the target space object.

- the solid line area shown in FIG. 10 is an author space object

- the dotted line area is a target space object calculated based on the author space object and the space object switching trajectory.

- the server may request the code corresponding to the target space object. flow.

- the client may send a request for acquiring a code stream of the target space object to the server according to information such as a URL of a code stream of each spatial object described in the MPD.

- the server may send the code stream corresponding to the target space object to the client.

- the client obtains the code stream of the target space object, the code stream of the target space object can be decoded and played, and the switching of the view code stream is realized.

- the client may determine the author view according to the identification information carried in the MPD.

- the corner stream can also obtain the spatial information of the author space object corresponding to the author view stream carried in the author's view stream, and then can obtain the author view stream according to the position of the author space object during the view switching process, or The target space object of the non-author perspective after the perspective switching is determined according to the author space object.

- the server may request the non-author view code stream corresponding to the target space object to be played, and the code stream of the view switching is played. The client does not need to load the panoramic video stream when the code stream is switched according to the switching of the view, which can save the transmission bandwidth of the video data and the local storage space of the client.

- the client requests the code stream corresponding to the target space object to play according to the target space object determined in the perspective switching process, and receives the bandwidth of the video data transmission and the like, and also realizes the stream switching of the view switching, thereby improving the video switching play. Applicability to enhance the user experience of video viewing.

- FIG. 12 is a schematic structural diagram of a device for processing video data based on HTTP dynamic adaptive streaming media according to an embodiment of the present invention.

- the processing device provided by the embodiment of the present invention includes:

- the receiving module 121 is configured to receive a media presentation description, where the media presentation description includes information of at least two representations, and the first representation of the at least two representations is an author view code stream, where the author view code stream is included a plurality of images, wherein spatial information of the spatial objects associated with at least two of the plurality of images is different; the second representation of the at least two representations is a static view code stream, and the static view code stream includes more An image in which the spatial information of the spatial objects associated with the plurality of images is the same.

- the media presentation describes a file that includes at least one spatial information associated with the author view codestream.

- the obtaining module 122 is configured to obtain instruction information.

- the obtaining module 122 is further configured to: acquire the segment of the first representation when the obtained instruction information is a viewing author view code stream, and otherwise acquire the segment of the second representation.

- the media presentation description further includes identification information, where the identification information is used to identify an author view code stream of the video.

- the media presentation description includes information of an adaptation set for describing attributes of media data segments of a plurality of replaceable encoded versions of the same media content component.

- the information of the adaptive set includes the identifier information.

- the media presentation description includes information indicating, the representation being a set and encapsulation of one or more code streams in a transport format;

- the information that is represented includes the identifier information.

- the media presentation includes information describing a descriptor, and the descriptor is used to describe spatial information of the spatial object to which the association is associated;

- the information of the descriptor includes the identifier information.

- the segment of the first representation carries spatial information of a spatial object associated with an image included in the segment of the first representation

- the media presentation description includes a description information of a spatial information file

- the obtaining module 122 is further configured to: acquire part or all of the data in the spatial information file;

- the obtaining module 122 is further configured to:

- the spatial information of the spatial object associated with the image is a spatial relationship of the spatial object and its associated content component.

- the spatial information is carried in a designated box in the segment of the first representation, or in a specified box in a metadata representation associated with the segment of the first representation.

- the designated box is a trun box included in a segment of the first representation, and the trun box is used to describe a set of consecutive samples of a track.

- the obtaining module 122 is further configured to:

- the processing device for the video data provided by the embodiment of the present invention may be specifically the client in the foregoing embodiment, and the implementation manners described in each step of the foregoing video data processing method may be implemented by using the built-in modules. I will not repeat them here.

- the client may determine the author view code stream according to the identifier information carried in the MPD, and may also obtain the author space pair corresponding to the author view code stream carried in the author view code stream.

- the spatial information of the image can be obtained by acquiring the author's perspective stream according to the position of the author's spatial object during the perspective switching, or determining the target space object of the non-author perspective after the perspective switching according to the author's spatial object.

- the server may request the non-author view code stream corresponding to the target space object to be played, and the code stream of the view switching is played.

- the client does not need to load the panoramic video stream when the code stream is switched according to the switching of the view, which can save the transmission bandwidth of the video data and the local storage space of the client.

- the client requests the code stream corresponding to the target space object to play according to the target space object determined in the perspective switching process, and receives the bandwidth of the video data transmission and the like, and also realizes the stream switching of the view switching, thereby improving the video switching play. Applicability to enhance the user experience of video viewing.

- the storage medium may be a magnetic disk, an optical disk, a read-only memory (ROM), or a random access memory (RAM).

Abstract

Disclosed in the embodiments of the present invention are a method and apparatus for processing hypertext transfer protocol (HTTP) dynamic adaptive streaming media-based video data, the method comprising: receiving a media presentation description, the media presentation description comprising information of at least two representations. A first representation among the at least two representations is an author view code stream, the author view code stream comprising a plurality of images, and space information of space objects associated with at least two images in the plurality of images is different. A second representation of the at least two representations is a static view code stream, the static view code stream comprising a plurality of images, and space information of space objects associated with the plurality of images is identical; obtaining instruction information; and acquiring a segment of the first representation if the instruction information is viewing the author view code stream, otherwise acquiring a segment of the second representation. Using the embodiments of the present invention has the advantages of saving transmission resources of video data, improving flexibility and applicability of video presentation, and enhancing video viewing experience of a user.

Description

本发明涉及流媒体数据处理领域,尤其涉及一种视频数据的处理方法及装置。The present invention relates to the field of streaming media data processing, and in particular, to a method and an apparatus for processing video data.

随着虚拟现实(英文:virtual reality,VR)技术的日益发展完善,360度视角等VR视频的观看应用越来越多地呈现在用户面前。在VR视频观看过程中,用户随时可能变换视角(英文:field of view,FOV),每个视角对应一个空间对象的视频码流,视角切换时呈现在用户视角内的VR视频图像也应当随着切换。With the development of virtual reality (VR) technology, VR video viewing applications such as 360-degree viewing angles are increasingly presented to users. During the VR video viewing process, the user may change the view angle (English: field of view, FOV) at any time. Each view corresponds to a video stream of a spatial object, and the VR video image presented in the user's perspective when the view is switched should also follow Switch.

现有技术在VR视频准备阶段将VR全景视频划分为多个的固定空间对象对应的多个码流,每个固定空间对象对应一组基于通过超文本传输协议(英文:hypertext transfer protocol,HTTP)动态自适应流(英文:dynamic adaptive streaming over HTTP,DASH)码流。在用户变换视角时,终端根据用户切换后的新空间对象选择视频中包含该空间对象的一个或者多个固定空间对象,每个固定空间对象包含切换后的空间对象的一部分。终端获取全景空间对象的码流,并解码上述一个或者多个固定空间对象的码流后再根据新空间对象呈现该空间对象对应的视频内容。现有技术中终端需要将全景空间对象的码流存储到本地存储空间中,再根据新空间对象选择相应的码流进行呈现,不呈现的空间对象对应的码流则为过剩的视频数据,不仅占用了本地存储空间,还造成了视频数据传输的网络传输带宽的浪费,适用性差。In the VR video preparation phase, the VR video is divided into multiple code streams corresponding to multiple fixed space objects, and each fixed space object is correspondingly based on a hypertext transfer protocol (HTTP). Dynamic adaptive streaming over HTTP (DASH) code stream. When the user changes the view angle, the terminal selects one or more fixed space objects in the video that include the space object according to the new space object after the user switches, and each fixed space object includes a part of the switched space object. The terminal acquires the code stream of the panoramic space object, and decodes the code stream of the one or more fixed space objects, and then presents the video content corresponding to the space object according to the new space object. In the prior art, the terminal needs to store the code stream of the panoramic space object into the local storage space, and then select the corresponding code stream according to the new space object for presentation, and the code stream corresponding to the non-presented space object is excess video data, not only It occupies the local storage space, and also causes a waste of network transmission bandwidth of video data transmission, and has poor applicability.

发明内容