WO2007001786A2 - A reduced complexity recursive least square lattice structure adaptive filter by means of limited recursion of the backward and forward error prediction squares - Google Patents


- Publication number

- WO2007001786A2 (international application PCT/US2006/022286)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- error prediction

- filter

- adaptive filter

- squares

- backward


Classifications

-

- H—ELECTRICITY

- H03—ELECTRONIC CIRCUITRY

- H03H—IMPEDANCE NETWORKS, e.g. RESONANT CIRCUITS; RESONATORS

- H03H21/00—Adaptive networks

- H03H21/0012—Digital adaptive filters

- H03H21/0014—Lattice filters

-

- H—ELECTRICITY

- H03—ELECTRONIC CIRCUITRY

- H03H—IMPEDANCE NETWORKS, e.g. RESONANT CIRCUITS; RESONATORS

- H03H21/00—Adaptive networks

- H03H21/0012—Digital adaptive filters

- H03H21/0043—Adaptive algorithms

- H03H2021/0049—Recursive least squares algorithm

Definitions


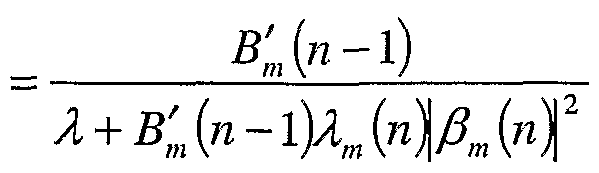


- The output of delay 42, Kf,m(n-1), is reduced by the product of the backward error prediction squares B'm-1(n-1), the backward prediction error βm-1(n-1), the forward prediction error ηm(n), and the conversion factor γm-1(n-1).

- As shown in FIGs. 5 and 6, the forward and backward error prediction squares, Fm(n) and Bm(n), are plotted against the length of the filter for 50 samples of the input signal u(n) 12 during a condition of high convergence. These plots indicate that Fm(n) and Bm(n) ramp up quickly and then flatten out for the higher order taps of the adaptive filter 10. It follows that most of the information is contained in the first few recursions of the RLSL algorithm, and that a reduced number of coefficient updates can still achieve acceptable filter performance.

- FIG. 8 illustrates the loss in performance of a 360-tap filter when the forward and backward error prediction squares, Fm(n) and Bm(n), are held constant after only 100 updates of the filter.

- This graph shows the echo return loss enhancement (ERLE), in dB, of a 360-tap filter, both with the full filter update and with the error prediction squares calculated for only the first 100 taps of the 360-tap filter.

- This graph is included as an aid in determining the proper trade-off between computational requirements and peak filter performance.

- This graph plots the measured timing requirements of the RLSL filter 10 versus the number of forward and backward error prediction squares updated for a 360-tap filter. These values reflect measurements taken on a real-time hardware implementation. As expected, the timing decreases linearly as the number of updates is reduced.

- The recursion loop is broken into two parts.

- The first part is the full set of updates shown in Equation 22 through Equation 30. These updates are performed as usual up to a predefined number of taps of the filter. The exact number of taps needed is determined by a trade-off analysis between real-time requirements and filter performance.

- The second part of the optimized RLSL algorithm is given by Equation 31 through Equation 37. In this series of recursive updates, the forward and backward error prediction squares, Fm(n) and Bm(n), are held constant at the last values calculated in the first part of the optimized algorithm.

- The forward error prediction square term (Fc) remains constant and is used in Equation 32 to update the backward reflection coefficient Kb,m(n).

- The backward error prediction square term (Bc) remains constant for the remainder of the filter updates and is used in Equation 34 to update the forward reflection coefficient Kf,m(n).

- Equation 32: $K_{b,m}(n) = K_{b,m}(n-1) - \gamma_{m-1}(n-1)\,\eta_{m-1}(n)\,\beta_m(n)\,F_c$

- Equation 34: $K_{f,m}(n) = K_{f,m}(n-1) - \gamma_{m-1}(n-1)\,\beta_{m-1}(n-1)\,\eta_m(n)\,B_c$

- Equation 35: $\xi_m(n) = \xi_{m-1}(n) - K_{m-1}(n-1)\,\beta_{m-1}(n)$

- Equation 36: $K_m(n) = K_m(n-1) + \gamma_m(n)\,\beta_m(n)\,\xi_{m+1}(n)\,B_c$

- FIG. 11 is a block diagram of a communication device 1100 employing an adaptive filter.

- The communication device 1100 includes a DSP 1102, a microphone 1104, a speaker 1106, an analog signal processor 1108 and a network connection 1110.

- The DSP 1102 may be any processing device, including a commercially available digital signal processor adapted to process audio and other information.

- The communication device 1100 includes a microphone 1104, a speaker 1106 and an analog signal processor 1108.

- The microphone 1104 converts sound waves impressed on it into electrical signals.

- The speaker 1106 converts electrical signals into audible sound waves.

- The analog signal processor 1108 serves as an interface between the DSP 1102, which operates on digital data representative of the electrical signals, and the electrical signals used by the microphone 1104 and the speaker 1106.

- The analog signal processor 1108 may be integrated with the DSP 1102.

- The network connection 1110 provides communication of data and other information between the communication device 1100 and other components. This communication may be over a wire line, over a wireless link, or a combination of the two.

- The communication device 1100 may be embodied as a cellular telephone, and the adaptive filter 1112 operates to process audio information for the user of the cellular telephone.

- In such an embodiment, the network connection 1110 is formed by the radio interface circuit that communicates with a remote base station.

- Alternatively, the communication device 1100 is embodied as a hands-free, in-vehicle audio system, and the adaptive filter 1112 serves as part of a double-talk detector of the system.

- In such an embodiment, the network connection 1110 is formed by a wire line connection over a communication bus of the vehicle.

- The DSP 1102 includes data and instructions to implement an adaptive filter 1112, a memory 1114 for storing data and instructions, and a processor 1116.

- The adaptive filter 1112 in this embodiment is an RLSL adaptive filter of the type generally described herein.

- The adaptive filter 1112 is enhanced to reduce the number of calculations required to implement the RLSL algorithm, as described herein.

- The adaptive filter 1112 may include additional enhancements and capabilities beyond those expressly described herein.

- The processor 1116 operates in response to the data and instructions implementing the adaptive filter 1112, and to other data and instructions stored in the memory 1114, to process audio and other information of the communication device 1100.

- The adaptive filter 1112 receives an input signal from a source and provides a filtered signal as an output.

- The DSP 1102 receives digital data from either the analog signal processor 1108 or the network interface 1110.

- The analog signal processor 1108 and the network interface 1110 thus form means for receiving an input signal.

- The digital data is representative of a time-varying signal and forms the input signal.

- The processor 1116 of the DSP 1102 implements the adaptive filter 1112.

- The data forming the input signal is provided to the instructions and data forming the adaptive filter 1112.

- The adaptive filter 1112 produces an output signal in the form of output data.

- The output data may be further processed by the DSP 1102 or passed to the analog signal processor 1108 or the network interface 1110 for further processing.

- The communication device 1100 may be modified and adapted to other embodiments as well. The embodiments shown and described herein are intended to be exemplary only.
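The two-part recursion described above (Equations 22 through 30 for the first stages, then Equations 31 through 37 with Fc and Bc held constant) can be sketched by adding a stage limit to a lattice loop. In the illustrative Python sketch below, the hypothetical parameter `n_full` is the number of stages whose F'm(n) and B'm(n) are recursed. Fc and Bc are assumed to be the values of the last fully updated stage (n_full - 1), and a hypothetical `divides` counter records the divisions performed. The initial value δ = 10 is also an assumption, chosen to keep the conversion factors of the frozen stages positive during start-up. This is not the implementation that was measured on hardware.

```python
import random


class LimitedRLSL:
    """Sketch of a limited-recursion RLS lattice (hypothetical names throughout).

    Stages m < n_full recurse F'_m = 1/F_m and B'_m = 1/B_m (Equations 22-23);
    stages m >= n_full copy the values of stage n_full - 1 (assumed Fc, Bc).
    """

    def __init__(self, taps, n_full, lam=0.99, delta=10.0):
        assert 1 <= n_full <= taps
        self.M, self.L, self.lam = taps, n_full, lam
        self.Fp = [1.0 / delta] * taps   # F'_m, assumed initialised to 1/delta
        self.Bp = [1.0 / delta] * taps   # B'_m, assumed initialised to 1/delta
        self.Kf, self.Kb, self.K = [0.0] * taps, [0.0] * taps, [0.0] * taps
        self.beta = [0.0] * taps         # beta_m(n-1) from the previous sample
        self.gamma = [1.0] * taps        # gamma_m(n-1) from the previous sample
        self.divides = 0                 # running count of divisions

    def _squares(self, m, eta_m, beta_m, gamma_old_m, gamma_m):
        if m < self.L:   # first part: full recursion (Equations 22 and 23)
            self.Fp[m] /= self.lam + self.Fp[m] * gamma_old_m * eta_m ** 2
            self.Bp[m] /= self.lam + self.Bp[m] * gamma_m * beta_m ** 2
            self.divides += 2
        else:            # second part: hold Fc and Bc constant, no division
            self.Fp[m], self.Bp[m] = self.Fp[self.L - 1], self.Bp[self.L - 1]

    def step(self, u, d):
        """Process one sample; return the a priori estimation error xi_M(n)."""
        M = self.M
        beta_old, gamma_old, Bp_old = self.beta, self.gamma, self.Bp[:]
        eta, beta, gamma = [0.0] * M, [0.0] * M, [1.0] * M
        eta[0] = beta[0] = u                       # stage 0 fed by u(n), gamma_0 = 1
        self._squares(0, eta[0], beta[0], gamma_old[0], gamma[0])
        for m in range(1, M):
            beta[m] = beta_old[m - 1] + self.Kb[m] * eta[m - 1]
            eta[m] = eta[m - 1] + self.Kf[m] * beta_old[m - 1]
            # F'_{m-1}(n) here equals Fc once m - 1 >= n_full
            self.Kb[m] -= gamma_old[m - 1] * eta[m - 1] * beta[m] * self.Fp[m - 1]
            # B'_{m-1}(n-1) here equals the previous sample's Bc once m - 1 >= n_full
            self.Kf[m] -= gamma_old[m - 1] * beta_old[m - 1] * eta[m] * Bp_old[m - 1]
            gamma[m] = gamma[m - 1] - gamma[m - 1] ** 2 * beta[m - 1] ** 2 * self.Bp[m - 1]
            self._squares(m, eta[m], beta[m], gamma_old[m], gamma[m])
        xi = d                                     # xi_0(n) = d(n)
        for m in range(M):
            xi_next = xi - self.K[m] * beta[m]
            self.K[m] += gamma[m] * beta[m] * xi_next * self.Bp[m]
            xi = xi_next
        self.beta, self.gamma = beta, gamma
        return xi


def run(filt, h, n_samples, seed=0):
    """Drive `filt` with white u(n) and noiseless d(n) = sum_k h[k] u(n-k)."""
    rng = random.Random(seed)
    hist, errs = [0.0] * len(h), []
    for _ in range(n_samples):
        hist = [rng.uniform(-1.0, 1.0)] + hist[:-1]
        errs.append(filt.step(hist[0], sum(a * b for a, b in zip(h, hist))))
    return errs


full, limited = LimitedRLSL(taps=6, n_full=6), LimitedRLSL(taps=6, n_full=3)
for f in (full, limited):
    e = run(f, [0.5, -0.3, 0.2], 3000)
    print(f.L, f.divides, max(abs(x) for x in e[-100:]))
```

With `taps=6`, the number of divisions per sample drops from 12 to 6 when `n_full=3`, which mirrors the linear reduction in timing described above. In this white-input, three-tap example the frozen stages carry almost no information, so the error still converges; for other inputs the frozen values can cost performance, as the FIG. 8 comparison illustrates.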

Abstract

A method for reducing a computational complexity of an m-stage adaptive filter is provided by updating recursively forward and backward error prediction square terms for a first portion of a length of the adaptive filter, and keeping the updated forward and backward error prediction square terms constant for a second portion of the length of the adaptive filter.

Description

A REDUCED COMPLEXITY RECURSIVE LEAST SQUARE LATTICE STRUCTURE ADAPTIVE FILTER BY MEANS OF LIMITED RECURSION OF THE BACKWARD AND FORWARD ERROR PREDICTION SQUARES

RELATED APPLICATIONS

[0001] This application claims the benefit of U.S. Provisional Application No. 60/692,345, filed June 20, 2005, U.S. Provisional Application No. 60/692,236, filed June 20, 2005, and U.S. Provisional Application No. 60/692,347, filed June 20, 2005, all of which are incorporated herein by reference.

FIELD OF THE INVENTION

[0002] The present invention relates in general to adaptive filters and, more particularly, to a reduced complexity recursive least square lattice structure adaptive filter.

BACKGROUND

[0003] Adaptive filters are found in a wide range of applications and come in a wide variety of configurations, each with distinctive properties. The particular configuration chosen may depend on the specific properties needed for a target application. These properties, which include, among others, rate of convergence, mis-adjustment, tracking, and computational requirements, are evaluated and weighed against each other to determine the appropriate configuration for the target application.

[0004] Of particular interest when choosing an adaptive filter configuration for use in a non-stationary signal environment are the rate of convergence, the mis-adjustment and the tracking capability. Good tracking capability is generally a function of the convergence rate and mis-adjustment properties of the corresponding algorithm. However, these properties may conflict: a higher convergence rate will typically result in a higher convergence error, or mis-adjustment, of the resulting filter.

[0005] A recursive least squares (RLS) algorithm is generally a good tool for the non-stationary signal environment due to its fast convergence rate and low level of mis-adjustment. One particular form of the RLS algorithm is the recursive least squares lattice (RLSL) algorithm. The initial RLSL algorithm on which this description builds is presented by Simon Haykin in the book "Adaptive Filter Theory", Third Edition. The RLS class of adaptive filters exhibits fast convergence rates and is relatively insensitive to variations in the eigenvalue spread. The eigenvalues of the autocorrelation matrix of the reference signal characterize its correlation properties, and the eigenvalue spread is typically defined as the ratio of the largest eigenvalue to the smallest eigenvalue. A large eigenvalue spread significantly slows down the rate of convergence for most adaptive algorithms.
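As a rough numerical illustration of the eigenvalue spread, the Python sketch below estimates it for a two-tap filter, where the 2x2 Toeplitz autocorrelation matrix has the closed-form eigenvalues r(0) + |r(1)| and r(0) - |r(1)|. The helper names `autocorr`, `eigenvalue_spread_2x2` and `ar1` are hypothetical, and the first-order autoregressive test signal is an assumption chosen only because its spread is easy to predict; longer filters would need a general symmetric eigensolver.

```python
import random


def autocorr(x, lag):
    """Biased sample autocorrelation r(lag) = (1/N) * sum_n x[n] * x[n - lag]."""
    n = len(x)
    return sum(x[i] * x[i - lag] for i in range(lag, n)) / n


def eigenvalue_spread_2x2(x):
    """Eigenvalue spread of the 2x2 autocorrelation matrix [[r0, r1], [r1, r0]].

    Its eigenvalues are r0 + |r1| and r0 - |r1|, so the spread is their ratio.
    """
    r0, r1 = autocorr(x, 0), autocorr(x, 1)
    return (r0 + abs(r1)) / (r0 - abs(r1))


def ar1(a, n, seed=0):
    """First-order autoregressive signal x(n) = a * x(n-1) + w(n), w white Gaussian."""
    rng = random.Random(seed)
    x, prev = [], 0.0
    for _ in range(n):
        prev = a * prev + rng.gauss(0.0, 1.0)
        x.append(prev)
    return x


print(round(eigenvalue_spread_2x2(ar1(0.0, 20000)), 2))  # white input: close to 1
print(round(eigenvalue_spread_2x2(ar1(0.9, 20000)), 1))  # correlated input: close to 19
```

For the white signal the spread stays close to 1, while the AR(1) signal with coefficient 0.9 gives a spread near (1 + 0.9)/(1 - 0.9) = 19, the kind of correlated input that slows most adaptive algorithms but to which the RLS class is relatively insensitive.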

[0006] However, the RLS algorithm typically requires extensive computational resources and can be prohibitive for embedded systems. Accordingly, there is a need to provide a mechanism by which the computational requirements of an RLSL structure adaptive filter are reduced.

BRIEF DESCRIPTION OF THE DRAWINGS

[0007] FIGs. 1a-1d illustrate four schematic diagrams of applications employing an adaptive filter;

[0008] FIG. 2 is a block diagram of a RLSL structure adaptive filter according to the invention;

[0009] FIG. 3 is a block diagram of a backward reflection coefficient update of the adaptive filter of FIG. 2;

[0010] FIG. 4 is a block diagram of a forward reflection coefficient update of the adaptive filter of FIG. 2;

[0011] FIG. 5 is a graph illustrating backward error prediction squares for fifty samples of an input signal;

[0012] FIG. 6 is a graph illustrating forward error prediction squares for fifty samples of the input signal;

[0013] FIG. 7 is a graph illustrating echo return loss enhancement (ERLE) using limited recursion for backward prediction;

[0014] FIG. 8 is a graph illustrating a performance of the adaptive filter of FIG. 2 versus a number of recursions of backward error prediction squares updates;

[0015] FIG. 9 is a graph illustrating measured timing requirements versus a number of forward and backward error prediction updates;

[0016] FIG. 10 is a graph illustrating measured timing requirements versus a number of forward and backward error prediction updates; and

[0017] FIG. 11 is a block diagram of a communication device employing an adaptive filter.

[0018] Illustrative and exemplary embodiments of the invention are described in further detail below with reference to and in conjunction with the figures.

DETAILED DESCRIPTION

[0019] A method for reducing a computational complexity of an m-stage adaptive filter is provided by updating recursively forward and backward error prediction square terms for a first portion of a length of the adaptive filter, and keeping the updated forward and backward error prediction square terms constant for a second portion of the length of the adaptive filter. The present invention is defined by the appended claims. This description addresses some aspects of the present embodiments and should not be used to limit the claims.

[0002] FIGs. 1a-1d illustrate four schematic diagrams of filter circuits 90 employing an adaptive filter 10. The filter circuits 90 in general and the adaptive filter 10 may be constructed in any suitable manner. In particular, the adaptive filter 10 may be formed using electrical components such as digital and analog integrated circuits. In other examples, the adaptive filter 10 is formed using a digital signal processor (DSP) operating in response to stored program code and data maintained in a memory. The DSP and memory may be integrated in a single component such as an integrated circuit, or may be maintained separately. Further, the DSP and memory may be components of another system, such as a speech processing system or a communication device.

[0003] In general, an input signal u(n) is supplied to the filter circuit 90 and to the adaptive filter 10. As shown, the adaptive filter 10 may be configured in a multitude of arrangements between a system input and a system output. It is intended that the improvements described herein may be applied to the widest variety of applications for the adaptive filter 10.

[0001] In FIG. 1a, an identification type application of the adaptive filter 10 is shown. In FIG. 1a, the filter circuit 90 includes an adaptive filter 10, a plant 14 and a summer. The plant 14 may be any suitable signal source being monitored. In this arrangement, the input signal u(n), received at an input 12 from a system input 16, is supplied to the adaptive filter 10 and to the signal processing plant 14. A filtered signal y(n) 18 produced at an output of the adaptive filter 10 is subtracted from a signal d(n) 20 supplied at an output of the plant 14 to produce an error signal e(n) 22. The error signal e(n) 22 is fed back to the adaptive filter 10. In this identification type application, signal d(n) 20 also represents an output signal of the system output 24.

[0002] In FIG. 1b, an inverse modeling type application of the adaptive filter 10 is shown. In FIG. 1b, the filter circuit 100 includes an adaptive filter 10, a plant 14, a summer and a delay process 26. In this arrangement, an input signal originating from system input 16 is transformed into the input signal u(n) 12 of the adaptive filter 10 by plant 14, and converted into signal d(n) 20 by a delay process 26. Filtered signal y(n) 18 of the adaptive filter 10 is subtracted from signal d(n) 20 to produce error signal e(n) 22, which is fed back to the adaptive filter 10.

[0003] In FIG. 1c, a prediction type application of the adaptive filter 10 is shown. In FIG. 1c, the filter circuit 100 includes an adaptive filter 10, a summer and a delay process 26. In this arrangement, adaptive filter 10 and delay process 26 are arranged in series between system input 16, now supplying a random signal input 28, and the system output 24. As shown, the random signal input 28 is subtracted as signal d(n) 20 from filtered signal y(n) 18 to produce error signal e(n) 22, which is fed back to the adaptive filter 10. In this prediction type application, error signal e(n) 22 also represents the output signal supplied by system output 24.

[0004] In FIG. 1d, an interference canceling type application of the adaptive filter 10 is shown. In FIG. 1d, the filter circuit 100 includes an adaptive filter 10 and a summer. In this arrangement, a reference signal 30 and a primary signal 32 are provided as input signal u(n) 12 and as signal d(n) 20, respectively. As shown, primary signal 32 is subtracted as signal d(n) 20 from filtered signal y(n) 18 to produce error signal e(n) 22, which is fed back to the adaptive filter 10. In this interference canceling type application, error signal e(n) 22 also represents the output signal supplied by the system output 24.
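The four arrangements of FIGs. 1a-1d differ mainly in how u(n) and d(n) are derived from the available signals. The Python sketch below makes that mapping explicit. The two-tap FIR plant, the delay D and all function names are hypothetical stand-ins for the plant 14 and delay process 26, and the error is taken as e(n) = d(n) - y(n) as in FIG. 1a (FIGs. 1c and 1d describe the subtraction in the opposite order, which only changes the sign of e(n)).

```python
def fir(h, x):
    """Causal FIR filter: y(n) = sum_k h[k] * x(n - k), with zero initial state."""
    return [sum(h[k] * x[n - k] for k in range(len(h)) if n - k >= 0)
            for n in range(len(x))]


def delay(x, D):
    """Stand-in for delay process 26: returns x(n - D), zero-filled for n < D."""
    return [0.0] * D + list(x[:len(x) - D])


def make_signals(mode, s, plant=(1.0, 0.5), D=2, reference=None, primary=None):
    """Return (u, d) for one FIG. 1 configuration (plant and D are hypothetical)."""
    if mode == "identification":   # FIG. 1a: u(n) = s(n), d(n) = plant output
        return list(s), fir(plant, s)
    if mode == "inverse":          # FIG. 1b: u(n) = plant output, d(n) = delayed s(n)
        return fir(plant, s), delay(s, D)
    if mode == "prediction":       # FIG. 1c: u(n) = delayed s(n), d(n) = s(n)
        return delay(s, D), list(s)
    if mode == "interference":     # FIG. 1d: u(n) = reference 30, d(n) = primary 32
        return list(reference), list(primary)
    raise ValueError("unknown mode: " + mode)


def error_signal(d, y):
    """Error e(n) = d(n) - y(n) that is fed back to the adaptive filter."""
    return [dn - yn for dn, yn in zip(d, y)]


s = [float(n) for n in range(6)]
u, d = make_signals("prediction", s)
print(u, d)  # u lags d by D = 2 samples
```

In every case the adaptive filter only ever sees the pair (u(n), d(n)); the application is defined entirely by how that pair is produced.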

[0005] Now referring to FIG. 2, a block diagram of an m-stage RLSL structure adaptive filter (RLSL) 100 is shown. The adaptive filter 100 includes a plurality of stages, including a first stage 120 and an m-th stage 122. Each stage (m) may be characterized by a forward prediction error ηm(n) 102, a forward prediction error ηm-1(n) 103, a forward reflection coefficient Kf,m-1(n-1) 104, a delayed backward prediction error βm-1(n-1) 105, a backward prediction error βm(n) 106, a backward reflection coefficient Kb,m-1(n-1) 107, an a priori estimation error ξm(n) 108, an a priori estimation error ξm-1(n) 109 and a joint process regression coefficient Km-1(n-1) 110. This m-stage adaptive RLSL filter 100 is shown with filter coefficient updates indicated by arrows drawn through each coefficient block. These filter coefficient updates are recursively computed for each stage (m) of a filter length of the RLSL 100 and for each sample time (n) of the input signal u(n) 12.

[0006] An RLSL algorithm for the RLSL 100 is defined below in terms of Equation 1 through Equation 8.

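Equation 1

Equation 2
$$\eta_m(n) = \eta_{m-1}(n) + K_{f,m}(n-1)\,\beta_{m-1}(n-1)$$

Equation 3
$$\beta_m(n) = \beta_{m-1}(n-1) + K_{b,m}(n-1)\,\eta_{m-1}(n)$$

Equation 5
$$K_{b,m}(n) = K_{b,m}(n-1) - \frac{\gamma_{m-1}(n-1)\,\eta_{m-1}(n)\,\beta_m(n)}{F_{m-1}(n)}$$

Equation 6
$$\gamma_m(n-1) = \gamma_{m-1}(n-1) - \frac{\gamma_{m-1}^2(n-1)\,\beta_{m-1}^2(n-1)}{B_{m-1}(n-1)}$$

Equation 7
$$\xi_m(n) = \xi_{m-1}(n) - K_{m-1}(n-1)\,\beta_{m-1}(n)$$

Equation 8
$$K_{m-1}(n) = K_{m-1}(n-1) + \frac{\gamma_{m-1}(n)\,\beta_{m-1}(n)\,\xi_m(n)}{B_{m-1}(n)}$$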

[0007] Where the terms in these equations are defined as follows:

Fm(n): Weighted sum of forward prediction error squares for stage m at time n.

Bm(n): Weighted sum of backward prediction error squares for stage m at time n.

ηm(n): Forward prediction error.

βm(n): Backward prediction error.

Kb,m(n): Backward reflection coefficient for stage m at time n.

Kf,m(n): Forward reflection coefficient for stage m at time n.

Km(n): Joint process regression coefficient for stage m at time n.

γm(n): Conversion factor for stage m at time n.

ξm(n): A priori estimation error for stage m at time n.

λ: Exponential weighting factor or gain factor.

[0008] At stage zero, the RLSL 100 is supplied by signals u(n) 12 and d(n) 20. Subsequently, for each stage m, the above-defined filter coefficient updates are recursively computed. For example, at stage m and time n, the forward prediction error ηm(n) 102 is the forward prediction error ηm-1(n) 103 of stage m-1 augmented by the product of the forward reflection coefficient Kf,m-1(n-1) 104 and the delayed backward prediction error βm-1(n-1) 105.

[0009] In a similar fashion, at stage m and time n, the backward prediction error βm(n) 106 is the delayed backward prediction error βm-1(n-1) 105 of stage m-1 augmented by the product of the backward reflection coefficient Kb,m(n-1) 107 and the forward prediction error ηm-1(n) 103.

[0010] Moreover, the a priori estimation error ξm(n) 108 for stage m at time n is the a priori estimation error ξm-1(n) 109 of stage m-1 reduced by the product of the joint process regression coefficient Km-1(n-1) 110, of stage m-1 at time n-1, and the backward prediction error βm-1(n).

[0011] The adaptive filter 100 may be implemented using any suitable component or combination of components. In one embodiment, the adaptive filter is implemented using a DSP in combination with instructions and data stored in an associated memory. The DSP and memory may be part of any suitable system for speech processing or manipulation. The DSP and memory can be a stand-alone system or embedded in another system.

[0012] This RLSL algorithm requires extensive computational resources and can be prohibitive for embedded systems. Accordingly, the computational requirements of an RLSL adaptive filter 100 are reduced by reducing the number of calculated updates of the forward error prediction squares Fm(n) and the backward error prediction squares Bm(n).

[0013] Typically, processors are efficient at adding, subtracting and multiplying, but not necessarily at dividing. Most processors use a successive approximation technique to implement a divide instruction and may require multiple clock cycles to produce a result. As such, to reduce computational requirements, both the total number of computations in the filter coefficient updates and the number of divides those updates require may need to be reduced. Thus, the RLSL algorithm filter coefficient updates are transformed to consolidate the divides. First, the time (n) and order (m) indices of the RLSL algorithm are translated to form Equation 9 through Equation 17.

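Equation 9: $F_m(n) = \lambda F_m(n-1) + \gamma_m(n-1)\,\eta_m(n)^2$

Equation 10: $B_m(n) = \lambda B_m(n-1) + \gamma_m(n)\,\beta_m(n)^2$

Equation 11: $\eta_m(n) = \eta_{m-1}(n) + K_{f,m}(n-1)\,\beta_{m-1}(n-1)$

Equation 12: $\beta_m(n) = \beta_{m-1}(n-1) + K_{b,m}(n-1)\,\eta_{m-1}(n)$

Equation 13: $K_{f,m}(n) = K_{f,m}(n-1) - \dfrac{\gamma_{m-1}(n-1)\,\beta_{m-1}(n-1)\,\eta_m(n)}{B_{m-1}(n-1)}$

Equation 14: $K_{b,m}(n) = K_{b,m}(n-1) - \dfrac{\gamma_{m-1}(n-1)\,\eta_{m-1}(n)\,\beta_m(n)}{F_{m-1}(n)}$

Equation 15: $\gamma_m(n) = \gamma_{m-1}(n) - \dfrac{\gamma_{m-1}^2(n)\,\beta_{m-1}(n)^2}{B_{m-1}(n)}$

Equation 16: $\xi_m(n) = \xi_{m-1}(n) - K_{m-1}(n-1)\,\beta_{m-1}(n)$

Equation 17: $K_m(n) = K_m(n-1) + \dfrac{\gamma_m(n)\,\beta_m(n)\,\xi_{m+1}(n)}{B_m(n)}$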
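To make the division form concrete, the following is a minimal Python/NumPy sketch of one sample of the a priori RLSL recursion in Equations 9 through 17. It assumes real-valued, prewindowed signals, a forgetting factor `lam` and an initialisation F = B = `delta`; the state layout and the names `init_state`, `rlsl_step`, `lam` and `delta` are illustrative assumptions, not taken from the source. Each stage performs four divides (Equations 13, 14, 15 and 17), and Equations 15 and 16 are index-shifted by one so that a single loop pass handles index m.

```python
import numpy as np

def init_state(M, delta=1e-2):
    """Hypothetical state for an M-stage lattice in division form (Eqs. 9-17)."""
    return {
        "F": np.full(M, delta),          # F_m, m = 0..M-1
        "B": np.full(M + 1, delta),      # B_m, m = 0..M
        "beta_prev": np.zeros(M + 1),    # beta_m(n-1)
        "gamma_prev": np.ones(M + 1),    # gamma_m(n-1)
        "kf": np.zeros(M + 1),           # K_f,m (index 1..M used)
        "kb": np.zeros(M + 1),           # K_b,m (index 1..M used)
        "K": np.zeros(M + 1),            # joint process coefficients K_m
    }

def rlsl_step(st, u, d, lam=0.99):
    """Process one input sample u with desired sample d; return xi_{M+1}(n)."""
    M = len(st["K"]) - 1
    F, B, bp, gp = st["F"], st["B"], st["beta_prev"], st["gamma_prev"]
    kf, kb, K = st["kf"], st["kb"], st["K"]
    eta, beta = np.zeros(M + 1), np.zeros(M + 1)
    gamma, xi = np.ones(M + 2), np.zeros(M + 2)
    eta[0] = beta[0] = u        # stage-0 prediction errors equal the input
    xi[0] = d                   # desired signal enters the joint process chain
    for m in range(1, M + 1):   # prediction section
        F[m-1] = lam * F[m-1] + gp[m-1] * eta[m-1] ** 2        # Eq. 9 (index m-1)
        eta[m] = eta[m-1] + kf[m] * bp[m-1]                    # Eq. 11
        beta[m] = bp[m-1] + kb[m] * eta[m-1]                   # Eq. 12
        kf[m] -= gp[m-1] * bp[m-1] * eta[m] / B[m-1]           # Eq. 13; B[m-1] still holds time n-1
        kb[m] -= gp[m-1] * eta[m-1] * beta[m] / F[m-1]         # Eq. 14
    for m in range(M + 1):      # joint process section
        B[m] = lam * B[m] + gamma[m] * beta[m] ** 2            # Eq. 10
        xi[m+1] = xi[m] - K[m] * beta[m]                       # Eq. 16 (index m+1)
        K[m] += gamma[m] * beta[m] * xi[m+1] / B[m]            # Eq. 17
        gamma[m+1] = gamma[m] - gamma[m] ** 2 * beta[m] ** 2 / B[m]   # Eq. 15 (index m+1)
    st["beta_prev"], st["gamma_prev"] = beta, gamma[:M+1]
    return xi[M+1]

# Usage: identify a hypothetical 4-tap FIR system with a 3-stage lattice.
rng = np.random.default_rng(0)
u = rng.standard_normal(3000)
d = np.convolve(u, [0.5, -0.3, 0.2, 0.1])[:len(u)]
st = init_state(3)
e = np.array([rlsl_step(st, x, y) for x, y in zip(u, d)])
```

With noise-free data and M + 1 joint process coefficients matching the system length, the a priori error `e` should fall to near machine precision once the regularisation `delta` has been forgotten.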

[0014] Next, the forward error prediction squares Fm(n) and the backward error prediction squares Bm(n) are inverted and redefined as their reciprocals, as shown in Equation 18, Equation 20 and Equation 21. Inverting Equation 9 gives:

Equation 18: $\dfrac{1}{F_m(n)} = \dfrac{1}{\lambda F_m(n-1) + \gamma_m(n-1)\,\eta_m(n)^2}$

[0015] Then redefine the forward error prediction squares Fm(n):

Equation 19: $F'_m(n) = \dfrac{1}{F_m(n)}$

[0016] Then insert into Equation 18 and simplify:

Equation 20: $F'_m(n) = \dfrac{F'_m(n-1)}{\lambda + F'_m(n-1)\,\gamma_m(n-1)\,\eta_m(n)^2}$

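[0017] By the same reasoning, with $B'_m(n) = 1/B_m(n)$, the backward error prediction square Equation 10 becomes:

Equation 21: $B'_m(n) = \dfrac{B'_m(n-1)}{\lambda + B'_m(n-1)\,\gamma_m(n)\,\beta_m(n)^2}$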
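The reciprocal recursion is exact algebra, not an approximation: dividing the numerator and denominator of Equation 18 by $F_m(n-1)$ gives Equation 20. A quick numerical check in Python, assuming random conversion factors in (0.1, 1) and Gaussian prediction errors (both chosen only for illustration; `f_direct` and `f_recip` are hypothetical helper names), confirms that carrying $F'$ directly stays the reciprocal of $F$ from Equation 9:

```python
import numpy as np

def f_direct(F_prev, lam, gamma, eta):
    """Equation 9: the forward error prediction square, updated directly."""
    return lam * F_prev + gamma * eta ** 2

def f_recip(Fp_prev, lam, gamma, eta):
    """Equation 20: the same recursion carried on F' = 1/F (a single divide)."""
    return Fp_prev / (lam + Fp_prev * gamma * eta ** 2)

rng = np.random.default_rng(1)
lam, delta = 0.99, 1e-2
F, Fp = delta, 1.0 / delta
max_rel_err = 0.0
for _ in range(1000):
    gamma, eta = rng.uniform(0.1, 1.0), rng.standard_normal()
    F, Fp = f_direct(F, lam, gamma, eta), f_recip(Fp, lam, gamma, eta)
    max_rel_err = max(max_rel_err, abs(F * Fp - 1.0))   # should stay at rounding level
print(f"largest |F * F' - 1| over 1000 updates: {max_rel_err:.1e}")
```

The same argument applies unchanged to Equation 21 for $B'_m(n)$.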

[0018] Further, the new definitions of the forward and backward error prediction squares, F'm(n) and B'm(n), are inserted back into the remaining equations, Equation 13, Equation 14, Equation 15 and Equation 17, to produce the algorithm coefficient updates shown below in Equation 22 through Equation 30.

Equation 22: $F'_m(n) = \dfrac{F'_m(n-1)}{\lambda + F'_m(n-1)\,\gamma_m(n-1)\,\eta_m(n)^2}$

Equation 23: $B'_m(n) = \dfrac{B'_m(n-1)}{\lambda + B'_m(n-1)\,\gamma_m(n)\,\beta_m(n)^2}$

Equation 24: $\beta_m(n) = \beta_{m-1}(n-1) + K_{b,m}(n-1)\,\eta_{m-1}(n)$

Equation 25: $K_{b,m}(n) = K_{b,m}(n-1) - \gamma_{m-1}(n-1)\,\eta_{m-1}(n)\,\beta_m(n)\,F'_{m-1}(n)$

Equation 26: $\eta_m(n) = \eta_{m-1}(n) + K_{f,m}(n-1)\,\beta_{m-1}(n-1)$

Equation 27: $K_{f,m}(n) = K_{f,m}(n-1) - \gamma_{m-1}(n-1)\,\beta_{m-1}(n-1)\,\eta_m(n)\,B'_{m-1}(n-1)$

Equation 28: $\xi_m(n) = \xi_{m-1}(n) - K_{m-1}(n-1)\,\beta_{m-1}(n)$

Equation 29: $K_m(n) = K_m(n-1) + \gamma_m(n)\,\beta_m(n)\,\xi_{m+1}(n)\,B'_m(n)$

Equation 30: $\gamma_m(n) = \gamma_{m-1}(n) - \gamma_{m-1}^2(n)\,\beta_{m-1}(n)^2\,B'_{m-1}(n)$
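Equations 22 through 30 can likewise be run sample by sample. The sketch below is a minimal Python/NumPy rendering under the same assumptions as before (real-valued prewindowed signals, forgetting factor `lam`, initialisation F = B = `delta`, so F' = B' = 1/`delta`); `init_state` and `rlsl_step_recip` are hypothetical names. The only divides are now in Equation 22 and Equation 23, and every other update multiplies by a stored reciprocal.

```python
import numpy as np

def init_state(M, delta=1e-2):
    """Hypothetical state; F' = 1/F and B' = 1/B start at 1/delta."""
    return {
        "Fi": np.full(M, 1.0 / delta),       # F'_m, m = 0..M-1
        "Bi": np.full(M + 1, 1.0 / delta),   # B'_m, m = 0..M
        "beta_prev": np.zeros(M + 1), "gamma_prev": np.ones(M + 1),
        "kf": np.zeros(M + 1), "kb": np.zeros(M + 1), "K": np.zeros(M + 1),
    }

def rlsl_step_recip(st, u, d, lam=0.99):
    """One sample of Eqs. 22-30; divides occur only in Eq. 22 and Eq. 23."""
    M = len(st["K"]) - 1
    Fi, Bi, bp, gp = st["Fi"], st["Bi"], st["beta_prev"], st["gamma_prev"]
    kf, kb, K = st["kf"], st["kb"], st["K"]
    eta, beta = np.zeros(M + 1), np.zeros(M + 1)
    gamma, xi = np.ones(M + 2), np.zeros(M + 2)
    eta[0] = beta[0] = u
    xi[0] = d
    for m in range(1, M + 1):
        Fi[m-1] = Fi[m-1] / (lam + Fi[m-1] * gp[m-1] * eta[m-1] ** 2)  # Eq. 22 (index m-1)
        beta[m] = bp[m-1] + kb[m] * eta[m-1]                            # Eq. 24
        kb[m] -= gp[m-1] * eta[m-1] * beta[m] * Fi[m-1]                 # Eq. 25
        eta[m] = eta[m-1] + kf[m] * bp[m-1]                             # Eq. 26
        kf[m] -= gp[m-1] * bp[m-1] * eta[m] * Bi[m-1]                   # Eq. 27; Bi[m-1] is B'(n-1)
    for m in range(M + 1):
        Bi[m] = Bi[m] / (lam + Bi[m] * gamma[m] * beta[m] ** 2)         # Eq. 23
        xi[m+1] = xi[m] - K[m] * beta[m]                                # Eq. 28 (index m+1)
        K[m] += gamma[m] * beta[m] * xi[m+1] * Bi[m]                    # Eq. 29
        gamma[m+1] = gamma[m] - gamma[m] ** 2 * beta[m] ** 2 * Bi[m]   # Eq. 30 (index m+1)
    st["beta_prev"], st["gamma_prev"] = beta, gamma[:M+1]
    return xi[M+1]

# Usage: the same hypothetical 4-tap identification problem.
rng = np.random.default_rng(0)
u = rng.standard_normal(3000)
d = np.convolve(u, [0.5, -0.3, 0.2, 0.1])[:len(u)]
st = init_state(3)
e = np.array([rlsl_step_recip(st, x, y) for x, y in zip(u, d)])
```

Because the transformation is algebraically exact, this form should track the division form to within rounding error while halving the divides per stage.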

[0019] Referring now to FIG. 3, a block diagram of the backward reflection coefficient update Kb,m(n) 30, as evaluated in Equation 25, is shown. The block diagram of FIG. 3 is representative of, for example, a DSP operation or group of operations. The backward reflection coefficient update Kb,m(n) 30 is supplied to a delay 32, and the product of the inverted forward error prediction squares F'm-1(n), the backward prediction error βm(n), the forward prediction error ηm-1(n), and the conversion factor γm-1(n-1) is subtracted from the delay output Kb,m(n-1).

[0020] Referring now to FIG. 4, a block diagram of the forward reflection coefficient update Kf,m(n) 40, as evaluated in Equation 27, is shown. Similar to FIG. 3, the block diagram of FIG. 4 is representative of, for example, a DSP operation or group of operations. The forward reflection coefficient update Kf,m(n) 40 is supplied to a delay 42, and the product of the inverted backward error prediction squares B'm-1(n-1), the backward prediction error βm-1(n-1), the forward prediction error ηm(n), and the conversion factor γm-1(n-1) is subtracted from the delay output Kf,m(n-1).

[0021] In FIG. 5 and FIG. 6, the forward and backward error prediction squares, Fm(n) and Bm(n), are plotted against the length of the filter for 50 samples of input signal u(n) 12 during a condition of high convergence. These plots indicate that the error prediction squares Fm(n) and Bm(n) ramp up quickly and then flatten out for the higher-order taps of the adaptive filter 10. One can therefore infer that most of the information is contained in the first few recursions of the RLSL algorithm, and that a reduced number of coefficient updates can still achieve acceptable filter performance.

[0022] Thus, a good mechanism for trading performance against computational requirements is to limit the number of recursions, or updates, performed on the forward and backward error prediction squares, Fm(n) and Bm(n), to a predefined portion of the filter length. The error prediction squares are then held constant for the remainder of the filter tap updates. Decreasing the number of updates may cause a small loss in filter performance, but the gain in real-time performance can justify that loss.

[0023] FIG. 8 illustrates the loss in performance of a 360-tap filter when the forward and backward error prediction squares, Fm(n) and Bm(n), are held constant after only 100 updates. The graph shows the echo return loss enhancement (ERLE), in dB, of the 360-tap filter both for the full filter update and for the case where the error prediction updates are calculated for only the first 100 taps.
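The document reports ERLE in dB without stating the formula. A minimal sketch, assuming the common definition ERLE = 10·log10(Σd² / Σe²) over a block, where d is the echo-bearing signal before cancellation and e is the residual after it; `erle_db`, `erle_curve`, the block length and the `eps` guard are hypothetical choices for illustration:

```python
import numpy as np

def erle_db(d, e, eps=1e-12):
    """ERLE in dB over one block: 10*log10(sum d^2 / sum e^2).
    eps (an assumption) avoids division by zero on silent blocks."""
    d, e = np.asarray(d, dtype=float), np.asarray(e, dtype=float)
    return 10.0 * np.log10((np.sum(d ** 2) + eps) / (np.sum(e ** 2) + eps))

def erle_curve(d, e, block=256):
    """Blockwise ERLE, e.g. to compare a full and a limited update over time."""
    n_blocks = len(d) // block
    return np.array([erle_db(d[i*block:(i+1)*block], e[i*block:(i+1)*block])
                     for i in range(n_blocks)])

# A residual 10x smaller in amplitude than the echo corresponds to 20 dB.
print(round(erle_db([1.0, -1.0], [0.1, -0.1]), 6))   # prints 20.0
```

Running the full and the limited filter on the same data and plotting both `erle_curve` outputs gives a comparison of the kind described for FIG. 8.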

[0024] To generate the plot of FIG. 9, this process of limiting the updates of the forward and backward error prediction squares, Fm(n) and Bm(n), to a predefined portion of the filter length was repeated for different limits, and the resulting ERLE of the adaptive filter 10 was plotted against the number of error prediction updates. FIG. 9 shows how filter performance degrades as the number of error prediction updates is reduced. It also indicates that updating the error prediction squares beyond about 180 filter taps (half the filter length) yields minimal further improvement.

[0025] FIG. 10 is a graph of measured timing requirements versus the number of forward and backward error prediction updates, Fm(n) and Bm(n). It is included as an aid in determining the proper trade-off between computational requirements and peak filter performance. The graph plots the measured timing requirements of the RLSL filter 10 against the number of forward and backward error prediction squares updated for a 360-tap filter. These values reflect measurements taken on a real-time hardware implementation. As expected, the timing decreases linearly as the number of updates is reduced.
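The linear trend follows from the structure of the recursion: a stage whose error prediction squares are held simply skips Equation 22 and Equation 23, which as written cost one divide, three multiplies and one addition each, while all other per-stage work is unchanged. A rough cost model in Python makes this explicit; `cycles_per_sample` and every cycle cost in it are hypothetical placeholders, not the measured values behind FIG. 10.

```python
def cycles_per_sample(n_stages, n_updated, c_div=20, c_mul=1, c_add=1, c_other=40):
    """Rough per-sample cost of the two-part RLSL recursion.

    Updated stages run Eq. 22 and Eq. 23 (each 1 divide, 3 multiplies,
    1 addition as written); held stages skip them. c_other lumps all
    remaining per-stage work. All cycle costs are illustrative placeholders.
    """
    if not 0 <= n_updated <= n_stages:
        raise ValueError("n_updated must lie in [0, n_stages]")
    c_squares = 2 * (c_div + 3 * c_mul + c_add)   # Eq. 22 + Eq. 23 for one stage
    return n_stages * c_other + n_updated * c_squares

for n_upd in (60, 120, 180, 240, 300, 360):
    print(n_upd, cycles_per_sample(360, n_upd))
```

Whatever the actual per-operation costs, each additional updated stage adds the same fixed amount, so the total is linear in the number of updates; an expensive divide only makes the slope steeper.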

[0026] In the final realization of the optimized RLSL algorithm, the recursion loop is broken into two parts. The first part is the full set of updates shown in Equation 22 through Equation 30; these updates are performed as normal up to a predefined number of taps of the filter. The exact number of taps is determined by the trade-off analysis between real-time and filter performance. The second part of the optimized RLSL algorithm is given by Equation 31 through Equation 37. In this series of recursive updates, the forward and backward error prediction squares, Fm(n) and Bm(n), are held constant at the last values calculated in the first part of the optimized algorithm.

[0027] For the remaining filter updates, the forward error prediction square term (Fc) remains constant and is used in Equation 32 to update the backward reflection coefficient Kb,m(n). Likewise, the backward error prediction square term (Bc) remains constant for the remainder of the filter updates and is used in Equation 34 to update the forward reflection coefficient Kf,m(n).

Equation 31: $\beta_m(n) = \beta_{m-1}(n-1) + K_{b,m}(n-1)\,\eta_{m-1}(n)$

Equation 32: $K_{b,m}(n) = K_{b,m}(n-1) - \gamma_{m-1}(n-1)\,\eta_{m-1}(n)\,\beta_m(n)\,F_c$

Equation 33: $\eta_m(n) = \eta_{m-1}(n) + K_{f,m}(n-1)\,\beta_{m-1}(n-1)$

Equation 34: $K_{f,m}(n) = K_{f,m}(n-1) - \gamma_{m-1}(n-1)\,\beta_{m-1}(n-1)\,\eta_m(n)\,B_c$

Equation 35: $\xi_m(n) = \xi_{m-1}(n) - K_{m-1}(n-1)\,\beta_{m-1}(n)$

Equation 36: $K_m(n) = K_m(n-1) + \gamma_m(n)\,\beta_m(n)\,\xi_{m+1}(n)\,B_c$
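The two-part loop can be sketched by adding a single switch to the reciprocal-form step. This is a minimal Python/NumPy illustration under stated assumptions, not the patented implementation: `n_update` (a hypothetical parameter) counts the leading lattice indices whose F' and B' are recursively updated; Fc and Bc are read as the most recent values at index `n_update - 1`; and because the text cites Equation 37 without stating it, the conversion factor in the held part is assumed to follow Equation 30 with Bc, clamped to [0, 1] as a safeguard added here.

```python
import numpy as np

def init_state(M, delta=1e-2):
    """Hypothetical state; F' = 1/F and B' = 1/B start at 1/delta."""
    return {
        "Fi": np.full(M, 1.0 / delta), "Bi": np.full(M + 1, 1.0 / delta),
        "beta_prev": np.zeros(M + 1), "gamma_prev": np.ones(M + 1),
        "kf": np.zeros(M + 1), "kb": np.zeros(M + 1), "K": np.zeros(M + 1),
    }

def rlsl_step_limited(st, u, d, n_update=None, lam=0.99):
    """F'_m, B'_m are updated only for m < n_update (first part); higher indices
    reuse Fc = F'[n_update-1] and Bc = B'[n_update-1] (second part).
    n_update=None updates every index, i.e. plain Eqs. 22-30."""
    M = len(st["K"]) - 1
    L = M + 1 if n_update is None else n_update
    if L < 1:
        raise ValueError("n_update must be at least 1")
    Fi, Bi, bp, gp = st["Fi"], st["Bi"], st["beta_prev"], st["gamma_prev"]
    kf, kb, K = st["kf"], st["kb"], st["K"]
    eta, beta = np.zeros(M + 1), np.zeros(M + 1)
    gamma, xi = np.ones(M + 2), np.zeros(M + 2)
    eta[0] = beta[0] = u
    xi[0] = d
    for m in range(1, M + 1):
        if m - 1 < L:                                                     # first part only
            Fi[m-1] = Fi[m-1] / (lam + Fi[m-1] * gp[m-1] * eta[m-1] ** 2)  # Eq. 22
        f_inv = Fi[min(m - 1, L - 1)]     # F'_{m-1}(n), or Fc once held
        b_inv = Bi[min(m - 1, L - 1)]     # B'_{m-1}(n-1), or Bc once held
        beta[m] = bp[m-1] + kb[m] * eta[m-1]                     # Eq. 24 / 31
        kb[m] -= gp[m-1] * eta[m-1] * beta[m] * f_inv            # Eq. 25 / 32
        eta[m] = eta[m-1] + kf[m] * bp[m-1]                      # Eq. 26 / 33
        kf[m] -= gp[m-1] * bp[m-1] * eta[m] * b_inv              # Eq. 27 / 34
    for m in range(M + 1):
        if m < L:                                                        # first part only
            Bi[m] = Bi[m] / (lam + Bi[m] * gamma[m] * beta[m] ** 2)      # Eq. 23
        b_inv = Bi[min(m, L - 1)]         # B'_m(n), or Bc once held
        xi[m+1] = xi[m] - K[m] * beta[m]                         # Eq. 28 / 35
        K[m] += gamma[m] * beta[m] * xi[m+1] * b_inv             # Eq. 29 / 36
        g = gamma[m] - gamma[m] ** 2 * beta[m] ** 2 * b_inv      # Eq. 30; Bc form when held is assumed
        gamma[m+1] = g if m < L else min(max(g, 0.0), 1.0)       # clamp: added safeguard
    st["beta_prev"], st["gamma_prev"] = beta, gamma[:M+1]
    return xi[M+1]

# Usage: a hypothetical 8-tap system, full update versus holding after index 4.
rng = np.random.default_rng(0)
M = 7
h = 0.5 * rng.standard_normal(M + 1)
u = rng.standard_normal(4000)
d = np.convolve(u, h)[:len(u)]
full, lim = init_state(M), init_state(M)
e_full = np.array([rlsl_step_limited(full, x, y) for x, y in zip(u, d)])
e_lim = np.array([rlsl_step_limited(lim, x, y, n_update=4) for x, y in zip(u, d)])
```

With `n_update=None` the function reduces to Equations 22 through 30; with a smaller value the held stages behave like gradient-style updates normalised by Fc and Bc, which is where the small performance loss described for FIG. 8 would be expected to come from.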

[0028] FIG. 11 is a block diagram of a communication device 1100 employing an adaptive filter. The communication device 1100 includes a DSP 1102, a microphone 1104, a speaker 1106, an analog signal processor 1108 and a network connection 1110. The DSP 1102 may be any processing device, including a commercially available digital signal processor adapted to process audio and other information.

[0029] The microphone 1104 converts sound waves impressed on it into electrical signals. Conversely, the speaker 1106 converts electrical signals into audible sound waves. The analog signal processor 1108 serves as an interface between the DSP, which operates on digital data representative of the electrical signals, and the electrical signals used by the microphone 1104 and the speaker 1106. In some embodiments, the analog signal processor 1108 may be integrated with the DSP 1102.

[0030] The network connection 1110 provides communication of data and other information between the communication device 1100 and other components. This communication may be over a wire line, over a wireless link, or a combination of the two. For example, the communication device 1100 may be embodied as a cellular telephone, with the adaptive filter 1112 processing audio information for the user of the cellular telephone. In such an embodiment, the network connection 1110 is formed by the radio interface circuit that communicates with a remote base station. In another embodiment, the communication device 1100 is embodied as a hands-free, in-vehicle audio system, and the adaptive filter 1112 serves as part of a double-talk detector of the system. In such an embodiment, the network connection 1110 is formed by a wire line connection over a communication bus of the vehicle.

[0031] In the embodiment of FIG. 11, the DSP 1102 includes data and instructions to implement an adaptive filter 1112, a memory 1114 for storing data and instructions, and a processor 1116. The adaptive filter 1112 in this embodiment is an RLSL adaptive filter of the type generally described herein. In particular, the adaptive filter 1112 is enhanced to reduce the number of calculations required to implement the RLSL algorithm as described herein. The adaptive filter 1112 may include additional enhancements and capabilities beyond those expressly described herein. The processor 1116 operates in response to the data and instructions implementing the adaptive filter 1112, and to other data and instructions stored in the memory 1114, to process audio and other information of the communication device 1100.

[0032] In operation, the adaptive filter 1112 receives an input signal from a source and provides a filtered signal as an output. In the illustrated embodiment, the DSP 1102 receives digital data from either the analog signal processor 1108 or the network connection 1110. The analog signal processor 1108 and the network connection 1110 thus form means for receiving an input signal. The digital data is representative of a time-varying signal and forms the input signal. As part of audio processing, the processor 1116 of DSP 1102 implements the adaptive filter 1112. The data forming the input signal is provided to the instructions and data forming the adaptive filter. The adaptive filter 1112 produces an output signal in the form of output data. The output data may be further processed by the DSP 1102 or passed to the analog signal processor 1108 or the network connection 1110 for further processing.

[0033] The communication device 1100 may be modified and adapted to other embodiments as well. The embodiments shown and described herein are intended to be exemplary only.

[0034] It is therefore intended that the foregoing detailed description be regarded as illustrative rather than limiting, and that it be understood that it is the following claims, including all equivalents, that are intended to define the spirit and scope of this invention.

Claims

1. A method for an m-stage recursive least squares lattice structure adaptive filter, the method comprising: receiving an input signal at the adaptive filter; recursively updating filter coefficients for each stage of the adaptive filter, including reducing a number of updates of forward error prediction squares and backward error prediction squares of the filter coefficients, and holding the forward error prediction squares and the backward error prediction squares constant during the remainder of the act of updating the filter coefficients; and producing a filtered signal at an output of the adaptive filter.

2. The method of claim 1 wherein updating filter coefficients comprises limiting updating of the forward error prediction squares and the backward error prediction squares to a predefined portion of filter length.

3. The method of claim 2 wherein limiting updating of the forward error prediction squares and the backward error prediction squares to a predefined portion of the filter length comprises updating the forward error prediction squares and the backward error prediction squares of approximately one-half of all filter stages.

4. The method of claim 1 wherein receiving an input signal comprises receiving digital data representative of a time-varying signal.

5. A method for reducing computational complexity of an m-stage adaptive filter, the method comprising: receiving an input signal at an input; filtering the input signal to produce a filtered signal, including updating recursively a forward error prediction square term for a first portion of a length of the adaptive filter; updating recursively a backward error prediction square term for the first portion of the length of the adaptive filter; and keeping the updated forward and backward error prediction square terms constant for a second portion of the length of the adaptive filter; and providing the filtered signal at an output.

6. The method of claim 5 wherein updating the forward error prediction square term comprises updating the forward error prediction square term for approximately one-half of the length of the adaptive filter and updating the backward error prediction square term comprises updating the backward error prediction square term for approximately one-half of the length of the adaptive filter.

7. The method of claim 5, further comprising: updating all filter coefficients up to a predefined number of taps of the adaptive filter for a first set of filter updates; and holding constant the forward error prediction square term and the backward error prediction square term while updating the rest of the filter coefficients during a second set of filter updates.

8. The method of claim 7 further comprising: during the second set of filter updates, using the constant forward error prediction square term to update a backward reflection coefficient; and using the constant backward error prediction square term to update a forward reflection coefficient.

9. An adaptive filter comprising: an interface to receive an input signal; a processor operative in conjunction with stored data and instructions to recursively update filter coefficients for each stage of the adaptive filter while producing a filtered signal, the processor configured to reduce a number of updates of the forward error prediction squares and backward error prediction squares of the filter coefficients, and hold the forward error prediction squares and the backward error prediction squares constant during the remainder of the act of updating the filter coefficients; and an interface to provide the filtered signal as an output signal.

10. The adaptive filter of claim 9 wherein the processor is operative to update recursively the forward error prediction squares for a first portion of a length of the adaptive filter; update recursively the backward error prediction squares for the first portion of the length of the adaptive filter; and keep the updated forward error prediction squares and backward error prediction squares constant for a second portion of the length of the adaptive filter.

11. The adaptive filter of claim 10 wherein the processor is operative to update all filter coefficients up to a predefined number of taps of the adaptive filter for a first set of filter updates; and hold constant the forward error prediction squares and the backward error prediction squares while updating the rest of the filter coefficients during a second set of filter updates.

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN2006800218486A CN101496001B (en) | 2005-06-20 | 2006-06-08 | A reduced complexity recursive least square lattice structure adaptive filter by means of limited recursion of the backward and forward error prediction squares |

Applications Claiming Priority (4)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| US69223605P | 2005-06-20 | 2005-06-20 | |
| US60/692,236 | 2005-06-20 | | |
| US11/399,907 | 2006-04-07 | | |
| US11/399,907 US7734466B2 (en) | 2005-06-20 | 2006-04-07 | Reduced complexity recursive least square lattice structure adaptive filter by means of limited recursion of the backward and forward error prediction squares |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| WO2007001786A2 (en) | 2007-01-04 |
| WO2007001786A3 (en) | 2009-04-16 |

Family ID: 37595657

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| PCT/US2006/022286 WO2007001786A2 (en) | A reduced complexity recursive least square lattice structure adaptive filter by means of limited recursion of the backward and forward error prediction squares | 2005-06-20 | 2006-06-08 |

Country Status (2)

| Country | Link |
|---|---|
| CN (1) | CN101496001B (en) |
| WO (1) | WO2007001786A2 (en) |

Cited By (1)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| WO2012104828A1 (en) * | 2011-02-03 | 2012-08-09 | Dsp Group Ltd. | A method and apparatus for hierarchical adaptive filtering |

Families Citing this family (2)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN105785124A (en) * | 2016-03-07 | 2016-07-20 | 国网技术学院 | Method for measuring harmonics and interharmonics of electric power system through spectrum estimation and cross correlation |
| CN110224955B (en) * | 2019-06-19 | 2020-07-03 | 北京邮电大学 | Digital equalizer structure and implementation method |

Citations (3)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US20030035468A1 (en) * | 2001-05-17 | 2003-02-20 | Corbaton Ivan Jesus Fernandez | System and method for adjusting combiner weights using an adaptive algorithm in wireless communications system |
| US20040071207A1 (en) * | 2000-11-08 | 2004-04-15 | Skidmore Ian David | Adaptive filter |
| US20040210146A1 (en) * | 1991-03-07 | 2004-10-21 | Diab Mohamed K. | Signal processing apparatus |

2006

- 2006-06-08 WO PCT/US2006/022286 patent/WO2007001786A2/en active Application Filing
- 2006-06-08 CN CN2006800218486A patent/CN101496001B/en active Active


Also Published As

| Publication number | Publication date |

|---|---|

| CN101496001B (en) | 2011-08-03 |

| WO2007001786A3 (en) | 2009-04-16 |

| CN101496001A (en) | 2009-07-29 |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| JP5284475B2 (en) | | Method for determining updated filter coefficients of an adaptive filter adapted by an LMS algorithm with pre-whitening |
| JP3216704B2 (en) | | Adaptive array device |
| KR100721034B1 (en) | | A method for enhancing the acoustic echo cancellation system using residual echo filter |
| KR100595799B1 (en) | | Signal noise reduction by spectral subtraction using spectrum dependent exponential gain function averaging |
| KR20190085924A (en) | | Beam steering |
| KR20010043837A (en) | | Signal noise reduction by spectral subtracrion using linear convolution and causal filtering |
| Petillon et al. | | The fast Newton transversal filter: An efficient scheme for acoustic echo cancellation in mobile radio |
| Van Waterschoot et al. | | Adaptive feedback cancellation for audio applications |
| US7734466B2 (en) | | Reduced complexity recursive least square lattice structure adaptive filter by means of limited recursion of the backward and forward error prediction squares |
| CN111868826A (en) | | Adaptive filtering method, device, equipment and storage medium in echo cancellation |
| WO2007001786A2 (en) | | A reduced complexity recursive least square lattice structure adaptive filter by means of limited recursion of the backward and forward error prediction squares |
| EP1943730A1 (en) | | Reduction of digital filter delay |
| US7702711B2 (en) | | Reduced complexity recursive least square lattice structure adaptive filter by means of estimating the backward and forward error prediction squares using binomial expansion |
| AU4869200A (en) | | Apparatus and method for estimating an echo path delay |
| JP2000323962A (en) | | Adaptive identification method and device, and adaptive echo canceler |
| JP4538460B2 (en) | | Echo canceller and sparse echo canceller |
| US20060288067A1 (en) | | Reduced complexity recursive least square lattice structure adaptive filter by means of approximating the forward error prediction squares using the backward error prediction squares |
| WO2007001787A2 (en) | | A reduced complexity recursive least square lattice structure adaptive filter by means of approximating the forward error prediction squares using the backward error prediction squares |
| CN109493878B (en) | | Filtering method, device, equipment and medium for echo cancellation |
| WO2021194859A1 (en) | | Echo residual suppression |
| WO2007001785A2 (en) | | Method for reducing computational complexity in an adaptive filter |
| GIRIKA et al. | | ADAPTIVE SPEECH ENHANCEMENT TECHNIQUES FOR COMPUTER BASED SPEAKER RECOGNITION. |
| JP3180739B2 (en) | | Method and apparatus for identifying unknown system by adaptive filter |
| Kim et al. | | Delayless block individual-weighting-factors sign subband adaptive filters with an improved band-dependent variable step-size |
| CN109448748B (en) | | Filtering method, device, equipment and medium for echo cancellation |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | WWE | Wipo information: entry into national phase | Ref document number: 200680021848.6; Country of ref document: CN |
| | 121 | Ep: the epo has been informed by wipo that ep was designated in this application | |
| | NENP | Non-entry into the national phase in: | Ref country code: DE |
| | 122 | Ep: pct application non-entry in european phase | Ref document number: 06772549; Country of ref document: EP; Kind code of ref document: A2 |