WO1998057504A1 - Overload prevention in a service control point (scp) - Google Patents


- Publication number

- WO1998057504A1 (PCT/GB1998/001628)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- governor

- processing system

- transaction

- rate

- call

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Ceased


Classifications

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04Q—SELECTING

- H04Q3/00—Selecting arrangements

- H04Q3/0016—Arrangements providing connection between exchanges

- H04Q3/0029—Provisions for intelligent networking

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04Q—SELECTING

- H04Q3/00—Selecting arrangements

- H04Q3/0016—Arrangements providing connection between exchanges

- H04Q3/0062—Provisions for network management

- H04Q3/0091—Congestion or overload control

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04Q—SELECTING

- H04Q2213/00—Indexing scheme relating to selecting arrangements in general and for multiplex systems

- H04Q2213/1305—Software aspects

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04Q—SELECTING

- H04Q2213/00—Indexing scheme relating to selecting arrangements in general and for multiplex systems

- H04Q2213/13166—Fault prevention

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04Q—SELECTING

- H04Q2213/00—Indexing scheme relating to selecting arrangements in general and for multiplex systems

- H04Q2213/13204—Protocols

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04Q—SELECTING

- H04Q2213/00—Indexing scheme relating to selecting arrangements in general and for multiplex systems

- H04Q2213/13345—Intelligent networks, SCP

-

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04Q—SELECTING

- H04Q2213/00—Indexing scheme relating to selecting arrangements in general and for multiplex systems

- H04Q2213/13387—Call gapping

Definitions

- the present invention relates to a transaction processing system in a communications network, and in particular to the prevention of overloading of the transaction processing system by inbound traffic.

- a transaction processing system for use in a communications network, the system including: a) a network interface for communicating signalling for inbound traffic; b) a governor programmed with a static limit value for the rate of traffic-related signalling; and c) a transaction processor which is responsive to the governor and which is arranged to release an inbound transaction when the static limit value is reached.

- the transaction processing system comprises a service platform for use in a telecommunications network.

- the service platform may be a service control platform (SCP) used in an intelligent network.

- SCP: service control platform

- the service platform might take the form of a computer-based system using CTI (computer telephony integration) technologies to provide intelligent services for a telephony network.

- CTI: computer telephony integration

- the invention is also applicable, for example, to systems which carry out real-time processing of internet (TCP/IP) packets.

- the present invention protects the service platform or other transaction processing system by providing it with a governor.

- the governor compares the rate of inbound calls to the platform with a static limit or threshold, and causes calls to be released when the limit is exceeded.

- the inventors have found that this approach is flexible and powerful in protecting the platform, and functions effectively even when it is uncertain which of the platform resources determines the point of overload.

- the use of a static limit is found to give better results than directly monitoring the available resources on the platform to determine the point of overload.

- the operation of the governor can be made substantially independent of the detailed design of the service platform, and can protect network resources which may not be sited locally within the platform.

- the transaction processing system further comprises: d) an overload controller which is connected to the governor and which is arranged to output control signals onto the network to reduce the rate of inbound transactions to the transaction processing system when the static limit value is exceeded.

- the governor is programmed with a first, lower static limit value, and a second, higher static limit value, and is arranged to signal to the overload controller when the first, lower static limit value is reached, and is arranged to release an inbound call only when the second, higher static limit value is reached.

- the combination of a rate governor and an overload controller is found to be particularly advantageous: call release events caused by the governor then serve as an input to the overload controller.

- the overload controller may then, for example, reduce the rate of inbound calls by sending a call-gapping instruction to an originating switch.

- Still further advantages can be gained in this context by operating the governor with two limit values, or equivalently by using two governors each with a different respective limit value. Then, at call rates between the lower limit value and the upper limit value, the governor causes a pseudo-rejection of an inbound call, in which the call is still admitted, but a rejection event is signalled to the overload controller.

- the use of pseudo-rejections enables more efficient handling of calls in circumstances where the rate-limiting network resource precedes the governor in the transaction processing chain.

- the governor is arranged to respond to the total rate of both initial traffic-related signals and predetermined intermediate traffic-related signals.

- the governor responds to the rate of intermediate traffic-related signals, such as those associated with the setting up of a call leg from an originating switch to a network intelligent peripheral, as well as responding to initial signals. The governor is then able to handle different transactions with widely varying processing overheads.

- the transaction processing system further comprises: e) a governor controller which is connected to the governor and which is arranged to set the static limit value automatically.

- the system includes a plurality of processing systems, each of the processing systems comprising a transaction processor and a governor which is connected to the respective transaction processor, and the governor controller is connected to the plurality of processing systems and sets independent respective values for the static limit values in the different respective governors.

- the governor controller is programmed with a target transaction rate for the system, and is arranged to set the static limit values in the different respective governors so that the total admitted transaction rate substantially matches the said target transaction rate.

- the governor controller includes a monitor input which is connected to the plurality of processing systems and which receives status data for the processing systems, and the governor controller is arranged to amend the values for the rate limits in the different respective governors when the said status data indicates that the number of functioning transaction processors has changed. This enables the governor controller to balance the limit values of the different transaction processors so as to maintain, as far as possible, a desired global transaction rate for the overall system even in the event of one of the component processing systems failing.

- the governor controller is arranged to monitor the identity of network switches which, in use, direct inbound signalling to the transaction processing system, and the governor controller is arranged, when signalling is received from a switch which normally uses another transaction processing system, automatically to increase a target call rate for the transaction processing system.

- This preferred feature of the invention extends the functionality of the governor controller, in such a way that it can respond automatically to changes in the condition of the communications network when, for example, another service platform fails. Monitoring the identity of the switches which communicate with the platform makes it possible to achieve this extended functionality without requiring additional inter-platform signalling.

- a communications network including a transaction processing system in accordance with the first aspect.

- a method of operating a transaction processing system in a communications network comprising: a) communicating between the communications network and a governor located in the transaction processing system signalling for an inbound transaction; b) in the governor, comparing the current rate of transactions processed by the transaction processing system with a static limit value; and c) causing the transaction to be released when the static limit value is reached.

- a service platform for use in a communications network, the platform including: a network interface for receiving signalling for inbound calls; a static governor programmed with a predetermined call rate threshold; and a call processor which is responsive to the static governor and is arranged to release an inbound call when the predetermined call rate threshold is reached.

- a method of operating a communications network including: a) comparing a rate of events in a network resource with a threshold rate by incrementing a leaky bucket counter in response to signalling events; and b) applying different weights to the increments of step (a) for different signalling events, the weights depending on the degree of loading of the network resource associated with the different signalling events.

- This aspect of the invention is not limited to use in preventing overload of a control platform by inbound signalling, but is generally applicable to the prevention of overloading of network resources.

- the invention also encompasses a platform for use in such a method.

- Figure 1 is a schematic of a communications network embodying the present invention;

- Figure 2 is a schematic of a service platform for use in the network of Figure 1;

- Figures 3a and 3b are diagrams illustrating rejection events in the service platform of Figure 2;

- Figure 4 is a diagram showing event flows in the platform of Figure 2;

- Figure 5 is a diagram illustrating the balancing of governor thresholds across several sites;

- Figure 6 is a diagram showing a shared memory network which is used in implementing the platform of Figure 2;

- Figure 7 shows the physical architecture used to implement the platform of Figure 2;

- Figure 8 is a flow diagram for a governor embodying the invention; and

- Figure 9 shows an alternative embodiment.

- a telecommunications network which uses an IN (Intelligent Network) architecture includes a service control point 1, which is also termed herein the Network Intelligence Platform (NIP).

- the service control point 1 is connected to trunk digital main switching units (DMSU's) 2, 3 and to digital local exchanges (DLE's) 4, 5. Both the DMSU's and the DLE's function as service switching points (SSP's). At certain points during the progress of a call, the SSP's transfer control of the call to the service control point.

- the service control point carries out functions such as number translation and provides a gateway to additional resources such as a voice messaging platform.

- the service control point 1 in this example includes an overload control server (OCS) 21, a number of transaction servers 22, and a communications server 23.

- OCS: overload control server

- the communications server 23 provides an interface to a common channel signalling network.

- the transaction servers are linked to a common back end system 24 which is responsible for management functions such as the collection of call statistics.

- SSP: service switching point; DMSU: digital main switching unit

- IDP: initial detection point

- the call is subsequently processed within one of the transaction servers 22, for example by translating an 0800 number into an equivalent destination number, and the results of the processing are returned via the signalling network to the DMSU 2 which may then, for example, route the call via the other DMSU 3 and DLE 5 to the destination.

- the transaction servers 22 include static governors 220.

- the static governors 220, which may be embodied as software modules running on the processors that implement the transaction servers, monitor the rate of inbound call-related signalling at the respective transaction server and cause a call to be released once a predetermined static call rate threshold, or limit value, has been reached. The governors also signal rejection events to the overload control server.

- ideally, the governor is located as close as possible to the signalling entry point into the service platform, so that it has the earliest possible opportunity to detect a peak in traffic before preceding systems, such as communication servers, become congested.

- the governor is located within the call processing protocol layer. For some calls, the governor operates only on the initial message for the call. Positioning the governor in the call processing layer ensures that it has sufficient knowledge of the incoming message to distinguish initial messages from other messages generated by the same call. This position also facilitates generation of a call rejection message, since that is one of the functions of the call processing protocol layer.

- a further advantage is that the governor has access to data on offered calls and the identity of originating SSP's.

- the Overload Control Server responds to call release events signalled by the governor by sending call-gapping instructions to originating SSP's. Each such instruction carries an interval timer and a duration timer. Admission of a call by the SSP results in an initial detection point (IDP) message being sent to the SCP and starts the gap interval timer. Calls offered while the interval timer is running are not admitted; when the interval ends, the next offered call is admitted. This process repeats until the duration timer expires.
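The call-gapping behaviour at the SSP can be pictured as a small state machine. The Python below is a minimal sketch under stated assumptions: time is passed in explicitly in seconds, and the class and field names (`CallGap`, `interval`, `duration`, `gap_end`) are hypothetical rather than INAP parameter names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CallGap:
    """One call-gapping instruction as applied at an originating SSP (sketch)."""
    interval: float                  # gap interval timer, seconds
    duration: float                  # duration timer, seconds
    start: float                     # time the instruction arrived from the OCS
    gap_end: Optional[float] = None  # end of the running gap interval, if any

    def offer(self, now: float) -> bool:
        """Return True if a call offered at `now` is admitted (an IDP goes to the SCP)."""
        if now >= self.start + self.duration:
            return True              # duration expired: gapping no longer applies
        if self.gap_end is not None and now < self.gap_end:
            return False             # inside the gap interval: call refused at the SSP
        self.gap_end = now + self.interval  # admit the call and start a new gap interval
        return True
```

For example, with a 1 s interval and a 10 s duration, calls offered at 0.0, 0.5, 1.2, 1.5, 2.3 and 11.0 s are admitted, refused, admitted, refused, admitted and admitted respectively.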


- the call processing layer is the INAP (Intelligent Network Application Protocol) layer specified in the ITU standard for Intelligent Networks.

- the initial message in this case is an INAP InitialDP operation.

- a ReleaseCall operation is used, and a reason for the release is specified. This results in a tone or announcement being played to the originator of the call.

- a nodal global title is contained in a calling address which is retrieved from the TCAP (Transaction Capabilities Application Part) TC-Begin message. This title is used to identify uniquely the originating SSP for each call.

- the governor in each transaction server limits the rate of admitted calls using what is termed a "leaky bucket" algorithm.

- the leaky bucket is a counter which is decremented regularly at a fixed rate, the leak rate.

- the governor adds a drip to the bucket, that is to say increments the counter, every time an initial message is received for a new call by the respective transaction server and the call is accepted.

- the bucket leak rate and the reject threshold are both set by the governor controller in the OCS.

- the operation of the algorithm is then as follows:

- the bucket starts with a fill level of 0 and receives a drip of value 1 every time that a new call is admitted.

- the effect of the algorithm is to ensure that when the offered traffic rate exceeds the governor threshold, then the admitted rate is limited to the threshold value.
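A minimal sketch of such a governor, assuming the leak is applied lazily from timestamps rather than by a periodic timer. The leak rate plays the role of the call rate limit, and `capacity` (a hypothetical name) is the reject threshold on the fill level, which also bounds how large a burst is tolerated. The names are illustrative, not taken from the patent.

```python
class LeakyBucket:
    """Leaky-bucket call rate governor (illustrative sketch)."""

    def __init__(self, leak_rate: float, capacity: float) -> None:
        self.leak_rate = leak_rate  # drips drained per second (the admitted-rate limit)
        self.capacity = capacity    # fill level at which new calls are rejected
        self.level = 0.0            # the bucket starts with a fill level of 0
        self.last = 0.0             # time of the last update, seconds

    def _leak(self, now: float) -> None:
        # Drain the bucket for the elapsed time, never going below empty.
        self.level = max(0.0, self.level - self.leak_rate * (now - self.last))
        self.last = now

    def offer(self, now: float, drip: float = 1.0) -> bool:
        """Admit the call (and add its drip) if it fits under the threshold."""
        self._leak(now)
        if self.level + drip > self.capacity:
            return False            # reject: the caller would issue ReleaseCall
        self.level += drip
        return True
```

With `leak_rate=100.0`, offering 200 calls/sec for 10 s admits roughly 1,000 calls: once the initial burst has filled the bucket, about every second call is admitted, so the admitted rate settles at the leak rate.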

- the governor triggers two types of rejection event, termed "real rejection" and "pseudo rejection".

- a separate call rate threshold is maintained for each type of rejection event, and separate respective leaky bucket counters are used.

- the real rejection event is used as described above.

- the governor generates a release call message so that calls are rejected once the predetermined call rate threshold is reached. This is appropriate if the bottleneck, that is to say the resources which would otherwise be overloaded, comes after the governor in the call processing chain. However, sometimes the bottleneck precedes the governor. This implies that once the call has got as far as the governor, there is likely to be sufficient capacity following the governor to handle the call.

- a pseudo rejection event is used.

- the initial message is passed on to the post-governor systems for further processing.

- a rejection event is signalled to the overload control function of the OCS, so that the OCS acts to reduce the incoming call rate, thereby protecting the potentially overloaded resources in the pre-governor systems.

- the pseudo rejection event threshold is set to a lower value than the real rejection event threshold. For example, the pseudo rejection event threshold might be set to 100 calls/sec and the real rejection event threshold to 120 calls/sec. Then, if the current call rate is, say, 110 calls/sec, a pseudo rejection event is triggered. If the call rate reaches, say, 120 calls/sec, then a real rejection event is triggered.

- Figure 4 illustrates the event flows in three types of situation.

- in region a, the current call rate is below both thresholds, and no rejection occurs.

- in region b, the current call rate is above the pseudo rejection event threshold and below the real rejection event threshold.

- in region c, the current call rate is above both the pseudo rejection event threshold and the real rejection event threshold.
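The three regions can be reproduced with two leaky buckets, one per rejection type, each with its own counter. In this sketch the bucket leak rates are set to the example thresholds of 100 and 120 calls/sec; the shared burst allowance `burst` and all class and method names are assumptions made for illustration.

```python
from enum import Enum


class Outcome(Enum):
    ADMIT = "admit"           # region a: below both thresholds
    PSEUDO_REJECT = "pseudo"  # region b: call admitted, rejection event signalled to the OCS
    REAL_REJECT = "real"      # region c: call released, rejection event signalled to the OCS


class Bucket:
    """Leaky-bucket counter drained at `leak_rate` drips per second."""

    def __init__(self, leak_rate: float, capacity: float) -> None:
        self.leak_rate, self.capacity = leak_rate, capacity
        self.level, self.last = 0.0, 0.0

    def leak_and_check(self, now: float, drip: float) -> bool:
        # Apply the leak for the elapsed time, then report whether the drip would overflow.
        self.level = max(0.0, self.level - self.leak_rate * (now - self.last))
        self.last = now
        return self.level + drip > self.capacity


class DualGovernor:
    """Separate buckets for pseudo and real rejection (rates in calls/sec)."""

    def __init__(self, pseudo_rate: float = 100.0, real_rate: float = 120.0,
                 burst: float = 10.0) -> None:
        self.real = Bucket(real_rate, burst)
        self.pseudo = Bucket(pseudo_rate, burst)

    def offer_initial(self, now: float) -> Outcome:
        if self.real.leak_and_check(now, 1.0):
            return Outcome.REAL_REJECT    # release the call
        self.real.level += 1.0
        if self.pseudo.leak_and_check(now, 1.0):
            return Outcome.PSEUDO_REJECT  # call proceeds; the OCS is told to throttle
        self.pseudo.level += 1.0
        return Outcome.ADMIT
```

Offered at a steady 90 calls/sec every call is admitted; at 110 calls/sec some pseudo rejections appear but no call is released; at 150 calls/sec real rejections occur.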

- the event locations shown in the diagram are, from left to right, the signalling network (CTN), the governor, the governor controller, the overload control function (OCF), a node membership change function (NMCF), and the back-end system.

- CTN: signalling network

- OCF: overload control function

- NMCF: node membership change function

- the service platform may in practice be implemented as a multiplicity of transaction servers, referenced 1 to n, each of which has its own governor.

- the use of several transaction servers both increases the capacity of the platform, and enhances its ability to withstand localised component failures.

- the governor controller is designed to maintain as far as possible the overall platform throughput in the event of one of the transaction servers failing.

- the governor controller includes a monitoring function.

- the governor controller communicates with the transaction servers using a memory channel network, and the monitoring function is implemented using a Node Member Change Function (NMCF) which is associated with the memory channel network.

- NMCF: Node Member Change Function

- the monitoring function determines how many of the transaction servers in the service platform site are active.

- the governor controller then takes a target allocated rate for the site, which target rate may have been set previously by a network management function, and divides it by the number of transaction servers available.

- the governor controller ensures that the resulting threshold values which are allocated to the transaction servers do not exceed the maximum rate which a transaction server is capable of handling.

- each governor is programmed with a maximum threshold value. The governor defaults to this maximum threshold value if it finds itself operating in isolation, for example as a result of the site governor controller failing.
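The governor controller's allocation rule, together with the governor's fallback to its own maximum, might be sketched as follows. The function names and the example figures (a 300 calls/sec site target and a 150 calls/sec per-server maximum) are hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Optional


def allocate_thresholds(target_rate: float, active: List[str],
                        server_max: float) -> Dict[str, float]:
    """Split the site's target rate evenly over the active transaction servers."""
    if not active:
        return {}
    share = min(target_rate / len(active), server_max)  # never exceed one server's capacity
    return {name: share for name in active}


def effective_threshold(assigned: Optional[float], server_max: float) -> float:
    """A governor with no value from its controller falls back to its own maximum."""
    return server_max if assigned is None else assigned
```

With three active servers each gets 100 calls/sec; if one fails, the remaining two each get 150; if only one survives, it is capped at 150 and the site target can no longer be met.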

- a service platform is split between three sites, M, N and P.

- Each site has a number of transaction servers, together with a communications server and an overload control server.

- the transaction servers include respective governors, and a governor controller is located in each overload control server, as described previously with reference to the service platform of Figure 2.

- the different sites are connected to a common back-end system.

- four of the network SSP's are shown.

- the SSP's have nodal global titles A, B, C and D.

- Each SSP connects to a single one of the service platform sites.

- the preferred connections are shown by arrows with solid lines.

- Alternative, second choice connections are shown by arrows with dashed lines. If a particular service platform site fails, all the SSP's currently using that site as their first choice switch to their respective second choice sites.

- the governor controller in each site registers all traffic offered to the site, and monitors the identity of each SSP which is directing signalling to the site. From the number of different SSP identities which are encountered in a predetermined period of time, the governor controller calculates how many SSP's are connected to it. The target threshold value for the site is then set accordingly.
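One way to picture this counting is sketched below, assuming that the site target is simply proportional to the number of distinct SSPs seen within a sliding time window. The per-SSP rate, the window length and the class name `SspTracker` are all hypothetical, since the text only says that the target is set accordingly.

```python
from typing import Dict


class SspTracker:
    """Counts distinct originating SSPs seen recently at one site (hypothetical sketch)."""

    def __init__(self, window: float, per_ssp_rate: float) -> None:
        self.window = window              # seconds an SSP counts as connected after its last message
        self.per_ssp_rate = per_ssp_rate  # assumed calls/sec budget per connected SSP
        self.last_seen: Dict[str, float] = {}  # nodal global title -> last time seen

    def record(self, global_title: str, now: float) -> None:
        self.last_seen[global_title] = now

    def connected_ssps(self, now: float) -> int:
        return sum(1 for t in self.last_seen.values() if now - t <= self.window)

    def target_rate(self, now: float) -> float:
        return self.connected_ssps(now) * self.per_ssp_rate
```

When an SSP whose first-choice site has failed starts signalling to this site, the distinct-SSP count rises and the target rises with it, without any inter-site messages.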

- a manual override mechanism may be included in the SCP's for use, for example, to stop other sites increasing their traffic levels when one SCP is taken out of use.

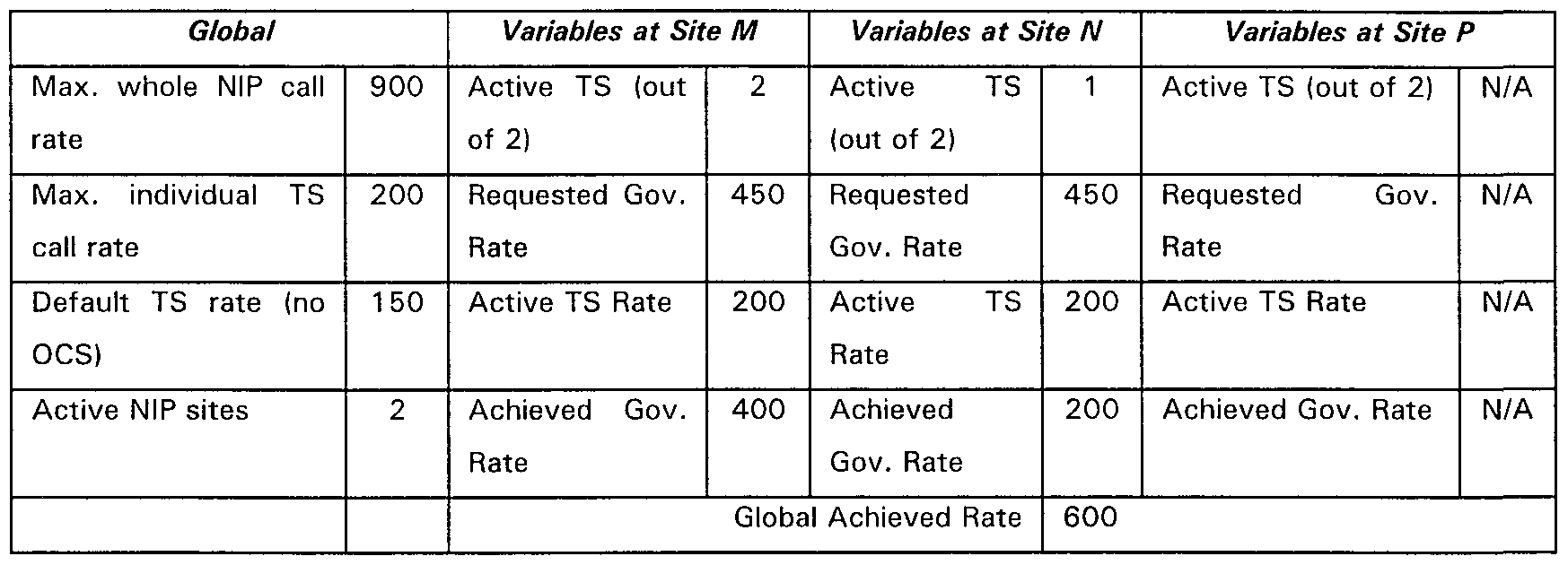

- Tables 1a and 1b below illustrate in further detail the balancing of thresholds in the network of Figure 5.

- Table 1a shows the setting for the variables when the status of the entire NIP, that is the three sites and the common back end system, is normal.

- Table 1b shows how these settings change when one site (site P) fails, and one transaction server fails at site N.

- FIG 7 shows the physical implementation of the service platform of Figure 2.

- the governors and governor controllers are implemented as software modules running on these servers. These use a shared memory communications link termed "Memory Channel" (MC).

- Memory Channel systems are available commercially from DEC.

- the memory channel is used to implement the node member change function NMCF. As noted above, this allows the governor controller to monitor how many active transaction servers there are.

- the memory channel also provides an efficient means for the transaction servers to communicate with central services on the OCS such as the governor controller and the OCF.

- Figure 6 illustrates the operation of the memory channel network.

- the Memory Channel technology provides a lock facility as part of its API (application program interface).

- the Memory Channel guarantees (within a set time) to release a lock if the node (server) holding it fails.

- the governor controller is pre-programmed with the identities of transaction servers. The governor controller regularly attempts to grab the lock belonging to each of the transaction servers. If it manages to get one of the locks it knows that a previously active transaction server has now failed. If it can no longer obtain a lock (while previously it could) it knows that a transaction server is now active.
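The lock-polling scheme can be imitated with ordinary in-process locks. In this sketch `threading.Lock` stands in for a Memory Channel lock (the real Memory Channel API is not modelled), each running transaction server is assumed to hold its own lock, and the class name `LockMonitor` is hypothetical. A lock that the controller manages to grab is released again immediately, so that a restarting server can reclaim it.

```python
import threading
from typing import Dict, Set


class LockMonitor:
    """Infers which transaction servers are alive from whether their locks are held."""

    def __init__(self, locks: Dict[str, threading.Lock]) -> None:
        self.locks = locks  # server name -> lock held by that server while it runs

    def poll(self) -> Set[str]:
        active = set()
        for name, lock in self.locks.items():
            if lock.acquire(blocking=False):
                lock.release()    # lock was free: the owning server has failed
            else:
                active.add(name)  # lock is held: the server is alive
        return active
```

Comparing successive `poll()` results tells the controller when a server has failed or come back, which is the trigger for recomputing the per-server thresholds.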

- the following information flows are communicated via the memory channel: the pseudo and real rejection thresholds; the count of an offered call and its associated SSP ID.

- the threshold values are simply data items held in the memory channel.

- Governor controller software on the OCS writes to the values while software methods on the transaction servers allow the values to be read. For each offered call, software on the transaction server accesses a common count of calls and then, while locking the count, increments the value of the count.
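A sketch of the shared offered-call count, with a `threading.Lock` standing in for the Memory Channel lock; the class and attribute names are hypothetical. The lock makes each increment atomic even when several threads, standing in for transaction servers, update the count concurrently, and the per-SSP tally carries the SSP ID that accompanies each offered call.

```python
import threading
from collections import Counter


class SharedCallCount:
    """Stand-in for the offered-call count held in shared memory (illustrative only)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.total = 0
        self.by_ssp: Counter = Counter()  # offered calls per originating SSP ID

    def offered(self, ssp_id: str) -> None:
        with self._lock:  # lock the count while incrementing it
            self.total += 1
            self.by_ssp[ssp_id] += 1
```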

- the implementation described so far assumes that each call made to the SCP results in approximately the same load for the SCP.

- This approximation gives acceptable results even where there is some variance in the loading associated with different calls.

- some calls may involve simple number translation, in which case the SCP handles an InitialDP followed by FurnishChargingInformation, SendChargingInformation and Connect signals, while other calls may combine number translation with a call statistics operation.

- In this latter case, an extra RequestReportBCSMEvent operation is required. (These events and signals, and other such events and signals referred to below, are formally defined in the ITU INAP standard, and the terminology used here is taken from that standard.)

- the SCP may be required to handle different calls which impose widely varying loads. This is the case when some of the calls involve multiple legs. Such calls involve arming detection points at the SSP as interrupted, using the RequestReportBCSMEvent operation. In this case, when the SSP triggers a detection point, e.g. for busy, control of the call remains with the SCP.

- the SCP in this case may have multiple interactions with the SSP following a single InitialDP (initial Detection Point) event.

- the service may involve the use of an Intelligent Peripheral, such as a voice messaging platform. The SCP then has to set up a temporary leg to the Intelligent Peripheral to allow voice interaction.

- the governor is programmed to add a drip to the leaky bucket counter not only when an InitialDP message is received at the SCP, but also when other operations are sent to or from the SCP, e.g. when an EventReportBCSM message is received, provided that the event report is an interrupted EDP (event detection point). Since the call has already been admitted at this stage, any resulting rejection event is of the pseudo-rejection type.

- where the service involves legs to an intelligent peripheral, the occurrence of an EstablishTemporaryConnection operation is treated as a further trigger for incrementing the leaky bucket counter.

- the trigger relates to a signal sent from the SCP to the SSP, rather than vice versa as in the other examples.

- any reject event is a pseudo-reject.

- a further feature of this alternative implementation uses a variable drip - that is to say the leaky bucket counter is incremented by different amounts depending on the nature of the event which caused the drip.

- a drip associated with an InitialDP may have a value of 2.

- a drip associated with an EventReportBCSM may have a unit value of 1.

- Determining the appropriate drip values for different events, and the threshold for the governor, may be carried out by prototyping and testing a particular platform and/or by modelling using representative service times for different types of call. In principle any or all of the intermediate signalling events occurring at the service platform may be used to trigger the adding of a drip to the leaky bucket counter. For ease of implementation, all such events may be treated as potentially adding a drip, but for selected events the weight of the drip may be set to zero.

- FIG 8 is a flow diagram for a governor which uses variable-weight drips, as described in the immediately preceding paragraphs.
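Calibrating drip weights from modelled service times might look like the sketch below. The service times are invented for illustration and chosen so that the resulting weights match the example values of 2 for an InitialDP and 1 for an EventReportBCSM; `unit_ms` and the function names are hypothetical. Any event without an entry drips zero, so every event can pass through the same code path.

```python
from typing import Dict

# Hypothetical modelled service times (ms of processing per message), not measured data.
SERVICE_MS: Dict[str, float] = {
    "InitialDP": 6.0,
    "EventReportBCSM": 3.0,
}


def drip_weights(service_ms: Dict[str, float], unit_ms: float) -> Dict[str, float]:
    """Scale each event's service time into a drip weight (unit_ms maps to weight 1)."""
    return {event: ms / unit_ms for event, ms in service_ms.items()}


WEIGHTS = drip_weights(SERVICE_MS, unit_ms=3.0)


def drip_for(event: str) -> float:
    # Every event is offered to the governor; events not in the table drip zero.
    return WEIGHTS.get(event, 0.0)
```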

- the periodic dripping from the leaky bucket counters is omitted from the illustrated event flows. This may be viewed as a concurrent external event.

- a message event occurs at the governor as it receives or transmits a signal via the network interface.

- the governor determines the event type, step s2. If the event is determined to be an InitialDP (IDP) then the value of the drip weight is set, e.g., equal to 3, step s3.

- the value of a variable r_currentlevel, which is the current level of the leaky bucket counter for real rejection events, is read, step s4, and the drip weight is added to it and written into a temporary register, temp, step s5.

- in step s6 the value of temp is compared with realj, that is, the limit value for real rejection events. If the limit value is exceeded, then in step s7 the governor sends notification of a real rejection event to the OCS, and in step s8 the governor causes the call to be released. If the limit value is not exceeded, then in step s9 (Figure 8iii) the drip is added to the bucket by setting r_currentlevel equal to the value held in the temporary register.

- the value p_currentlevel of the level of the leaky bucket counter for pseudo rejection events is read, step s10, and the drip weight is added to that value and written into the temporary register, step s11.

- in step s12 the value of temp is compared with pseudoj, that is, the limit value for pseudo-rejection events. If the limit value is exceeded, then in step s13 the governor sends notification of a pseudo rejection event to the OCS. If the limit value is not exceeded, then in step s14 the drip is added to the bucket by setting p_currentlevel equal to the value held in the temporary register.

- Intermediate events subsequent to an IDP event and the admission of a call are used to add to the level in the leaky buckets for real and pseudo rejection.

- the drip weight is set equal to, e.g., 2, step s15 (Figure 8ii).

- ETC: EstablishTemporaryConnection

- the drip weight is set equal, e.g., to 1, step s16.

- other weights may be set for other intermediate events.

- the appropriate drip values are added to both the leaky buckets.

- the governor in this example adds drips to the leaky buckets for all event flows between the SCP and SSP.

- the InitialDP is a special case as it can be rejected without dripping. As already noted, some events may be assigned a zero value for the drip.
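The FIG 8 flow can be traced in code as below. This is a sketch under stated assumptions: `real_limit` and `pseudo_limit` stand for the limit values called realj and pseudoj in the text, the periodic leak is exposed as a separate `leak` method, the `notify` callback stands in for signalling the OCS, and the event handled in step s15 is assumed to be an EventReportBCSM (the text does not name it). For intermediate events the drips are always added, and any limit crossing is reported as a pseudo rejection, since the call has already been admitted.

```python
from enum import Enum
from typing import Callable, Dict


class Result(Enum):
    OK = "ok"
    PSEUDO = "pseudo rejection notified"  # call continues
    REAL = "real rejection notified"      # call released


# Example weights from the text: IDP 3 (s3), 2 (s15, assumed EventReportBCSM), ETC 1 (s16).
DRIP: Dict[str, float] = {
    "InitialDP": 3.0,
    "EventReportBCSM": 2.0,
    "EstablishTemporaryConnection": 1.0,
}


class Fig8Governor:
    def __init__(self, real_limit: float, pseudo_limit: float,
                 notify: Callable[[str], None]) -> None:
        self.real_limit = real_limit
        self.pseudo_limit = pseudo_limit
        self.notify = notify  # stands in for signalling the OCS
        self.r_currentlevel = 0.0
        self.p_currentlevel = 0.0

    def leak(self, r_amount: float, p_amount: float) -> None:
        """Periodic leak; runs concurrently with message handling in a real system."""
        self.r_currentlevel = max(0.0, self.r_currentlevel - r_amount)
        self.p_currentlevel = max(0.0, self.p_currentlevel - p_amount)

    def on_message(self, event: str) -> Result:
        weight = DRIP.get(event, 0.0)            # s2-s3, s15-s16: choose the drip weight
        if event == "InitialDP":
            temp = self.r_currentlevel + weight  # s4-s5
            if temp > self.real_limit:           # s6
                self.notify("real")              # s7
                return Result.REAL               # s8: the caller releases the call
            self.r_currentlevel = temp           # s9
            temp = self.p_currentlevel + weight  # s10-s11
            if temp > self.pseudo_limit:         # s12
                self.notify("pseudo")            # s13: call is still admitted
                return Result.PSEUDO
            self.p_currentlevel = temp           # s14
            return Result.OK
        # Intermediate event: the call is already admitted, so drip into both buckets.
        self.r_currentlevel += weight
        self.p_currentlevel += weight
        if (self.r_currentlevel > self.real_limit
                or self.p_currentlevel > self.pseudo_limit):
            self.notify("pseudo")
            return Result.PSEUDO
        return Result.OK
```

With limits of 12 and 9, the first three InitialDPs are admitted, the fourth is admitted with a pseudo rejection, and the fifth is released.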

- FIG. 9 shows a preferred implementation of the invention using different drip weights.

- the SCP in this example includes a transaction server call processing module 91, a Transaction Capabilities Application Part (TCAP) server 92, a TCAP back end processor 93 and a communications server 94.

- a transfer module 95 links the TCAP server 92 to an Advanced Transaction Server 96 which is responsible for certain advanced transactions.

- the TCAP server includes a governor which uses a leaky bucket algorithm.

- the load on the transaction server is due to the processing of messages crossing the six interfaces labelled 1 to 6 in the figure, and hence that load can be modelled as a weighted sum of message rates across these interfaces.

- signalling network load can be modelled as a weighted sum of messages across the two interfaces labelled 1 and 4 in the figure.

- Drip weights in the governor are set to be proportional to the amount of load implied by each message.

- drip weights for different messages and different interfaces may be set as follows. Using the notation `<message>(<interface_number>)` to specify a drip size, drips are added for the following entries of the weighting matrix: IDP(1), IDP(2), INAP Control(3), IDP(5), SCI(6), FCI(6), Connect(6), SCI(4), FCI(4), Connect(4).

- the INAP Control message is the message which indicates to the TCAP Server that the message should be passed to an ATS.
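The weighted-sum load model can be sketched as follows. Because the numeric entries of the weighting matrix are not preserved in this text, the weights below are placeholders; only the (message, interface) keys follow the list above, and the names `WEIGHTS` and `fill_rate` are hypothetical.

```python
from typing import Dict, Tuple

Key = Tuple[str, int]  # (message name, interface number as labelled in the figure)

# Placeholder weights for illustration; the original values are not available.
WEIGHTS: Dict[Key, float] = {
    ("IDP", 1): 1.0, ("IDP", 2): 0.5, ("INAP Control", 3): 0.5, ("IDP", 5): 0.5,
    ("SCI", 6): 0.25, ("FCI", 6): 0.25, ("Connect", 6): 0.25,
    ("SCI", 4): 0.25, ("FCI", 4): 0.25, ("Connect", 4): 0.25,
}


def fill_rate(rates: Dict[Key, float]) -> float:
    """Modelled load (drips/sec): weighted sum of per-interface message rates."""
    return sum(WEIGHTS.get(key, 0.0) * rate for key, rate in rates.items())
```

Messages with no entry, such as a ReleaseCall here, contribute nothing to the modelled load.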

- a weighting matrix is used with two dimensions corresponding to interface location and message event identity.

- the weights in that example are determined in relation to a single assumed bottleneck location, for example at the TCAP server.

- the capability of the governor can be further extended by considering a number of different potential bottlenecks, any one of which may become the true bottleneck depending on call mix.

- a reporting engine may be the bottleneck if all calls require real-time reports on their success or failure; signalling network processing for routing may be the bottleneck if there are large numbers of short signalling messages; signalling link bandwidth may be the bottleneck if average signalling message length is large.

- the absolute values depend on the chosen (arbitrary) leak rate of the governor bucket and on the capacity of the bottleneck: for example, if the reporting engine can handle 200 call outcome reports per second, and the Governor leak rate is 1.0 per second, each Event Report should have a weight of 0.005. If a routing processor in the signalling network can handle 250 messages per second and there are 4 such processors in a load-sharing scheme, each message should have a weight of 0.001.
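The rule behind these figures can be written out explicitly; the symbols are introduced here for illustration and do not appear in the source. With governor leak rate $\lambda$ and a bottleneck $b$ that can process $C_b$ messages per second (summed over any load-sharing processors), each message that loads $b$ gets weight $w_b$. A message stream arriving at exactly $C_b$ then fills the bucket at $C_b w_b = \lambda$, matching the leak, so the governor starts rejecting just as the bottleneck saturates.

$$
w_b = \frac{\lambda}{C_b}, \qquad
w_{\text{report}} = \frac{1.0}{200} = 0.005, \qquad
w_{\text{route}} = \frac{1.0}{4 \times 250} = 0.001
$$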

- the result is a matrix of governor drip weights, with (say) columns indexed by bottleneck and rows indexed by message type. To protect against congestion at all potential bottlenecks, choose for each message the maximum weight appearing in its row, i.e. the weight corresponding to the bottleneck at which that message implies the greatest loading.

- differential weighting may be used with leaky buckets to protect network resources in other contexts, apart from their use in the governor of the present invention. For example they may be used in outbound rate control for prevention of focussed overload at destination number in a public switched telephone network (PSTN), as described in the present applicant's European patent EP-B-334612, granted 4 August 1993, priority date 21 March 1988, the contents of which are incorporated herein by reference.

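The weight arithmetic above follows a single rule. Suppose a bottleneck can absorb C messages per second and the governor bucket leaks L units per second. Each message reaching that bottleneck should then add a drip of L/C, so that a stream which just saturates the bottleneck also just balances the leak.

The Python sketch below works under that rule:
- It computes the per-bottleneck weights.
- For each message it keeps the row maximum.
- It feeds the resulting weights into a simple leaky bucket.

This is a minimal illustration under stated assumptions, not the patent's implementation. The following are all hypothetical:
- the function and class names;
- the per-message `cost` table;
- the message keys;
- the policy of refusing a new transaction whenever its drip would push the bucket past a fixed `limit`.

```python
from dataclasses import dataclass


def drip_weights(capacity, cost, leak_rate=1.0):
    """Build one governor drip weight per message key (hypothetical helper).

    capacity  -- bottleneck name -> load units per second it can absorb
    cost      -- bottleneck name -> {message key -> load units the message puts on it}
    leak_rate -- governor bucket leak rate, in bucket units per second

    Each (message, bottleneck) entry gets weight leak_rate * units / capacity;
    the message keeps the largest entry in its row, i.e. the weight for the
    bottleneck it loads most heavily.
    """
    weights = {}
    for bottleneck, per_message in cost.items():
        for message, units in per_message.items():
            w = leak_rate * units / capacity[bottleneck]
            weights[message] = max(weights.get(message, 0.0), w)
    return weights


@dataclass
class Governor:
    """Leaky-bucket governor driven by weighted drips (illustrative only)."""
    weights: dict
    leak_rate: float = 1.0   # bucket units drained per second
    limit: float = 1.0       # assumed bucket depth above which new work is refused
    level: float = 0.0       # current bucket content
    last: float = 0.0        # time of the last update, in seconds

    def _leak(self, now):
        # Drain the bucket for the time elapsed since the last update;
        # a timestamp earlier than the last one drains nothing.
        elapsed = max(0.0, now - self.last)
        self.level = max(0.0, self.level - self.leak_rate * elapsed)
        self.last = max(self.last, now)

    def record(self, message, now):
        """Add the drip for a message that is processed unconditionally."""
        self._leak(now)
        self.level += self.weights[message]

    def admit(self, message, now):
        """Accept a new transaction only if its drip fits under the limit."""
        self._leak(now)
        drip = self.weights[message]
        if self.level + drip > self.limit:
            return False
        self.level += drip
        return True


if __name__ == "__main__":
    # Figures quoted in the text: a reporting engine handling 200 reports/s and
    # four load-sharing routing processors at 250 messages/s each.
    capacity = {"reporting_engine": 200.0, "signalling_routing": 4 * 250.0}
    cost = {
        "reporting_engine": {"EventReport": 1.0},
        "signalling_routing": {"IDP(1)": 1.0, "EventReport": 1.0},
    }
    weights = drip_weights(capacity, cost, leak_rate=1.0)
    print(weights)

    governor = Governor(weights, leak_rate=1.0, limit=0.01)
    print(governor.admit("IDP(1)", now=0.0))
```

The demo uses the figures from the text: a reporting engine handling 200 reports per second, four routing processors at 250 messages per second each, and a leak rate of 1.0 per second. With these inputs, `drip_weights` assigns an Event Report a weight of 0.005 and an IDP a weight of 0.001, matching the values quoted above. The Event Report keeps 0.005 because that is the larger entry in its row.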

Landscapes

- Engineering & Computer Science (AREA)

- Computer Networks & Wireless Communication (AREA)

- Data Exchanges In Wide-Area Networks (AREA)

- Exchange Systems With Centralized Control (AREA)

- Telephonic Communication Services (AREA)


Priority Applications (4)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| JP50185999A JP2002505045A (en) | 1997-06-12 | 1998-06-04 | Overload protection at the service control point (SCP) |
| CA002293697A CA2293697A1 (en) | 1997-06-12 | 1998-06-04 | Overload prevention in a service control point (scp) |
| EP98925828A EP0988759A1 (en) | 1997-06-12 | 1998-06-04 | Overload prevention in a service control point (scp) |
| AU77802/98A AU7780298A (en) | 1997-06-12 | 1998-06-04 | Overload prevention in a service control point (scp) |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| GBGB9712307.9A GB9712307D0 (en) | 1997-06-12 | 1997-06-12 | Communications network |
| GB9712307.9 | 1997-06-12 | | |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| WO1998057504A1 (en) | 1998-12-17 |

Family

ID=10814089

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| PCT/GB1998/001628 (ceased, WO1998057504A1) | Overload prevention in a service control point (scp) | 1997-06-12 | 1998-06-04 |

Country Status (6)

| Country | Link |
|---|---|
| EP (1) | EP0988759A1 (en) |
| JP (1) | JP2002505045A (en) |
| AU (1) | AU7780298A (en) |
| CA (1) | CA2293697A1 (en) |
| GB (1) | GB9712307D0 (en) |
| WO (1) | WO1998057504A1 (en) |



1997

- 1997-06-12: GB application GBGB9712307.9A (GB9712307D0), active, pending

1998

- 1998-06-04: EP application EP98925828A (EP0988759A1), not active, withdrawn
- 1998-06-04: CA application CA002293697A (CA2293697A1), not active, abandoned
- 1998-06-04: WO application PCT/GB1998/001628 (WO1998057504A1), not active, ceased
- 1998-06-04: AU application AU77802/98A (AU7780298A), not active, abandoned
- 1998-06-04: JP application JP50185999A (JP2002505045A), active, pending

Patent Citations (5)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US5425086A (en) * | 1991-09-18 | 1995-06-13 | Fujitsu Limited | Load control system for controlling a plurality of processes and exchange system having load control system |
| US5581610A (en) * | 1994-10-19 | 1996-12-03 | Bellsouth Corporation | Method for network traffic regulation and management at a mediated access service control point in an open advanced intelligent network environment |
| WO1996015634A2 (en) * | 1994-11-11 | 1996-05-23 | Nokia Telecommunications Oy | Overload prevention in a telecommunications network node |
| EP0735786A2 (en) * | 1995-03-31 | 1996-10-02 | Siemens Aktiengesellschaft | Method for overload defence in a communication network |
| WO1997009814A1 (en) * | 1995-09-07 | 1997-03-13 | Ericsson Australia Pty. Ltd. | Controlling traffic congestion in intelligent electronic networks |

Non-Patent Citations (3)

| Title |
|---|
| KOSAL H ET AL: "A CONTROL MECHANISM TO PREVENT CORRELATED MESSAGE ARRIVALS FROM DEGRADING SIGNALING NO. 7 NETWORK PERFORMANCE", IEEE JOURNAL ON SELECTED AREAS IN COMMUNICATIONS, vol. 12, no. 3, 1 April 1994 (1994-04-01), pages 439 - 445, XP000458689 * |
| RUMSEWICZ M P: "CRITICAL CONGESTION CONTROL ISSUES IN THE EVOLUTION OF COMMON CHANNEL SIGNALING NETWORKS", FUNDAMENTAL ROLE OF TELETRAFFIC IN THE EVOLUTION OF TELECOMMUNICATIONS NETWORKS, PROCEEDINGS OF THE 14TH INTERNATIONAL TELETRAFFIC CONGRESS - ITC 14, JUAN-LES-PINS, JUNE 6 - 10, 1994, vol. 1A, 6 June 1994 (1994-06-06), LABETOULLE J; ROBERTS J W (EDS.), pages 115 - 124, XP000593405 * |
| SMITH D E: "ENSURING ROBUST CALL THROUGHPUT AND FAIRNESS FOR SCP OVERLOAD CONTROLS", IEEE / ACM TRANSACTIONS ON NETWORKING, vol. 3, no. 5, 1 October 1995 (1995-10-01), pages 538 - 548, XP000543255 * |

Cited By (13)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| WO2000014913A3 (en) * | 1998-09-04 | 2000-10-19 | Ericsson Telefon Ab L M | Distributed communcations network management and control system |
| EP1833266A3 (en) * | 1998-09-04 | 2007-10-17 | Telefonaktiebolaget LM Ericsson (publ) | Distributed communications network management and control system |
| US6370572B1 (en) | 1998-09-04 | 2002-04-09 | Telefonaktiebolaget L M Ericsson (Publ) | Performance management and control system for a distributed communications network |
| KR100364171B1 (en) * | 2000-03-31 | 2002-12-11 | 주식회사데이콤 | Apparatus for processing mass call in AIN system |
| US7203180B2 (en) | 2000-05-12 | 2007-04-10 | Nokia Corporation | Initiating service logic |
| WO2001086968A1 (en) * | 2000-05-12 | 2001-11-15 | Nokia Corporation | Initiating service logic |
| EP1775969A1 (en) * | 2005-10-17 | 2007-04-18 | Hewlett-Packard Development Company, L.P. | Communication system and method |
| US8654945B2 (en) | 2005-10-17 | 2014-02-18 | Hewlett-Packard Development Company, L.P. | Blocking a channel in a communication system |
| WO2008032175A3 (en) * | 2006-09-11 | 2008-05-22 | Ericsson Telefon Ab L M | System and method for overload control in a next generation network |
| US8446829B2 (en) | 2006-09-11 | 2013-05-21 | Telefonaktiebolaget L M Ericsson (Publ) | System and method for overload control in a next generation network |
| US9253099B2 (en) | 2006-09-11 | 2016-02-02 | Telefonaktiebolaget L M Ericsson (Publ) | System and method for overload control in a next generation network |
| EP2180659A2 (en) | 2008-10-27 | 2010-04-28 | Broadsoft, Inc. | SIP server overload detection and control |
| EP2180659A3 (en) * | 2008-10-27 | 2012-07-18 | Broadsoft, Inc. | SIP server overload detection and control |

Also Published As

| Publication number | Publication date |
|---|---|
| GB9712307D0 (en) | 1997-08-13 |
| JP2002505045A (en) | 2002-02-12 |
| AU7780298A (en) | 1998-12-30 |
| CA2293697A1 (en) | 1998-12-17 |
| EP0988759A1 (en) | 2000-03-29 |

Similar Documents

| Publication | Title |
|---|---|
| EP0954934B1 (en) | Congestion control in a communications network |
| US5570410A (en) | Dynamic resource allocation process for a service control point in an advanced intelligent network system |
| US5862334A (en) | Mediated access to an intelligent network |
| US5450482A (en) | Dynamic network automatic call distribution |
| AU691509B2 (en) | Mediation of open advanced intelligent network interface for public switched telephone network |
| CA1253241A (en) | Automatic call distributor telephone service |
| US5778057A (en) | Service control point congestion control method |
| US5838769A (en) | Method of reducing risk that calls are blocked by egress switch or trunk failures |
| US5825860A (en) | Load sharing group of service control points connected to a mediation point for traffic management control |
| Haenschke et al. | Network management and congestion in the US telecommunications network |
| Kuhn et al. | Common channel signaling networks: Past, present, future |
| US6377677B1 (en) | Telecommunications network having successively utilized different network addresses to a single destination |
| WO1998057504A1 (en) | Overload prevention in a service control point (scp) |
| Chemouil et al. | Performance issues in the design of dynamically controlled circuit-switched networks |
| Manfield et al. | Congestion controls in SS7 signaling networks |
| NZ293663A (en) | Virtual private network overflow service triggered by congestion indicator response |
| US20060187841A1 (en) | Methods, systems, and computer program products for suppressing congestion control at a signaling system 7 network node |
| EP1068716B1 (en) | Call distribution |
| EP0972414B1 (en) | Telecommunications network including an overload control arrangement |
| Angelin | On the properties of a congestion control mechanism for signaling networks based on a state machine |
| Galletti et al. | Performance simulation of congestion control mechanisms for intelligent networks |
| KR100322672B1 (en) | Method of controlling overload in load balancing AIN system |
| KR940003516B1 (en) | Method of conducting a digital call signal of full exchanging system |
| Mohanram et al. | Intelligent network traffic management |
| Jennings et al. | A strategy for the resolution of intelligent network (IN) and Signalling System No. 7 (SS7) congestion control conflicts |

Legal Events

| Code | Title | Description |
|---|---|---|
| WWE | WIPO information: entry into national phase | Ref document number: 09101956; country of ref document: US |
| AK | Designated states | Kind code of ref document: A1; designated state(s): AL AM AT AU AZ BA BB BG BR BY CA CH CN CU CZ DE DK EE ES FI GB GE GH GM GW HU ID IL IS JP KE KG KP KR KZ LC LK LR LS LT LU LV MD MG MK MN MW MX NO NZ PL PT RO RU SD SE SG SI SK SL TJ TM TR TT UA UG US UZ VN YU ZW |
| AL | Designated countries for regional patents | Kind code of ref document: A1; designated state(s): GH GM KE LS MW SD SZ UG ZW AM AZ BY KG KZ MD RU TJ TM AT BE CH CY DE DK ES FI FR GB GR IE IT LU MC NL PT SE BF BJ CF CG CI CM GA GN ML MR NE SN TD TG |
| DFPE | Request for preliminary examination filed prior to expiration of 19th month from priority date (PCT application filed before 20040101) | |
| 121 | EP: the EPO has been informed by WIPO that EP was designated in this application | |
| WWE | WIPO information: entry into national phase | Ref document number: 1998925828; country of ref document: EP |
| WWE | WIPO information: entry into national phase | Ref document number: 77802/98; country of ref document: AU |
| ENP | Entry into the national phase | Ref document number: 2293697; country of ref document: CA; ref country code: CA; kind code of ref document: A; format of ref document f/p: F |
| ENP | Entry into the national phase | Ref country code: JP; ref document number: 1999 501859; kind code of ref document: A; format of ref document f/p: F |
| WWP | WIPO information: published in national office | Ref document number: 1998925828; country of ref document: EP |
| REG | Reference to national code | Ref country code: DE; ref legal event code: 8642 |
| WWW | WIPO information: withdrawn in national office | Ref document number: 1998925828; country of ref document: EP |