WO1995021436A1 - Improved information input apparatus - Google Patents

Improved information input apparatus

- Publication number

- WO1995021436A1 (application PCT/US1995/001483)

- Authority

- WO

- WIPO (PCT)


Classifications

-

- G—PHYSICS

- G09—EDUCATION; CRYPTOGRAPHY; DISPLAY; ADVERTISING; SEALS

- G09B—EDUCATIONAL OR DEMONSTRATION APPLIANCES; APPLIANCES FOR TEACHING, OR COMMUNICATING WITH, THE BLIND, DEAF OR MUTE; MODELS; PLANETARIA; GLOBES; MAPS; DIAGRAMS

- G09B5/00—Electrically-operated educational appliances

- G09B5/06—Electrically-operated educational appliances with both visual and audible presentation of the material to be studied

- G09B5/062—Combinations of audio and printed presentations, e.g. magnetically striped cards, talking books, magnetic tapes with printed texts thereon

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F3/00—Input arrangements for transferring data to be processed into a form capable of being handled by the computer; Output arrangements for transferring data from processing unit to output unit, e.g. interface arrangements

- G06F3/01—Input arrangements or combined input and output arrangements for interaction between user and computer

- G06F3/03—Arrangements for converting the position or the displacement of a member into a coded form

- G06F3/033—Pointing devices displaced or positioned by the user, e.g. mice, trackballs, pens or joysticks; Accessories therefor

- G06F3/0354—Pointing devices displaced or positioned by the user, e.g. mice, trackballs, pens or joysticks; Accessories therefor with detection of 2D relative movements between the device, or an operating part thereof, and a plane or surface, e.g. 2D mice, trackballs, pens or pucks

- G06F3/03545—Pens or stylus

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V30/00—Character recognition; Recognising digital ink; Document-oriented image-based pattern recognition

- G06V30/10—Character recognition

- G06V30/14—Image acquisition

- G06V30/142—Image acquisition using hand-held instruments; Constructional details of the instruments

- G06V30/1423—Image acquisition using hand-held instruments; Constructional details of the instruments the instrument generating sequences of position coordinates corresponding to handwriting

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V30/00—Character recognition; Recognising digital ink; Document-oriented image-based pattern recognition

- G06V30/10—Character recognition

- G06V30/22—Character recognition characterised by the type of writing

- G06V30/228—Character recognition characterised by the type of writing of three-dimensional handwriting, e.g. writing in the air

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V30/00—Character recognition; Recognising digital ink; Document-oriented image-based pattern recognition

- G06V30/10—Character recognition

- G06V30/32—Digital ink

- G06V30/36—Matching; Classification

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06V—IMAGE OR VIDEO RECOGNITION OR UNDERSTANDING

- G06V30/00—Character recognition; Recognising digital ink; Document-oriented image-based pattern recognition

- G06V30/10—Character recognition

- G06V30/32—Digital ink

- G06V30/36—Matching; Classification

- G06V30/373—Matching; Classification using a special pattern or subpattern alphabet

Definitions

- the present invention relates to input devices for computers generally.

- U.S. Patent 4,839,836 to LaBiche et al. describes an apparatus for providing spatial orientation data signals, using an inertial platform accelerometer cluster having a plurality of accelerometers.

- U.S. Patent 5,181,181 to Glynn describes a computer apparatus input device for three-dimensional information.

- the present invention seeks to provide an improved input device.

- information input apparatus including body supported apparatus for sensing voluntary body motions and providing an output indication thereof, a symbol output interpreter operative to utilize the output indication for providing symbol outputs, and a motion output interpreter operative to utilize the output indication for providing motion control outputs.

- the output indication represents features of body motion including features which are characteristic of the individual.

- a mode selector is provided which is operative to cause a selected one of the symbol output interpreter and the motion output interpreter to function.

- the body supported apparatus is a hand held device.

- the body supported apparatus is a generally pen-shaped device.

- the generally pen-shaped device is operative to provide a visible writing function.

- the information input apparatus also includes an object whose motion is controlled by the motion control outputs.

- the object is a graphic object displayed on a display or a physical object.

- the symbol outputs represent alphanumeric symbols or a sensory quality such as an acoustic stimulus, including but not limited to music, or such as a visual stimulus, including but not limited to a color or a color image.


- the information input apparatus also includes a computer, having a location input and a symbol input, and a display operated by the computer and wherein the symbol outputs represent information to be displayed on the display and the motion outputs are supplied to the location input and are employed by the computer to govern the location of the information on the display.

- the symbol outputs include function commands.

- a method by which a manipulable device provides an output indication representing its own angular motion, including recording actual acceleration data from a plurality of accelerometers mounted in the manipulable device, generating predicted acceleration data on the basis of hypothetical angular motion information, comparing the predicted acceleration data to the actual acceleration data, computing improved hypothetical angular motion information, repeating, while the predicted acceleration data differs significantly from the actual acceleration data, the generating, comparing and computing steps, and providing an output indication of the improved hypothetical angular motion information.

- the angular motion information includes angular displacement information, angular velocity information and angular acceleration information.

- the method includes computing linear motion information from the improved hypothetical angular motion information and from the actual acceleration data.

- recording includes recording from at least four accelerometers mounted in the manipulable device, wherein the accelerometers each have a center of mass and wherein the centers of mass do not lie within a single plane.

- the method also includes receiving the output indication of the improved hypothetical angular motion information and manipulating an object in accordance therewith.

- an accelerometer array mounted in a manipulable device and including at least four accelerometers each having a center of mass, wherein the centers of mass do not lie within a single plane, and a manipulable device motion computer receiving input from the accelerometers and generating an output signal indicative of the motion of the manipulable device.

- the manipulable device motion computer is operative to: record actual acceleration data from the accelerometers, generate predicted acceleration data on the basis of hypothetical angular motion information, compare the predicted acceleration data to the actual acceleration data, compute improved hypothetical angular motion information, repeat, while the predicted acceleration data differs significantly from the actual acceleration data, the generating, comparing and computing steps, and provide an output indication of the improved hypothetical angular motion information.

- the apparatus also including an object manipulator receiving the output signal indicative of the motion of the manipulable device and manipulating an object in accordance therewith.

- an information input method including sensing voluntary body motions and providing an output indication thereof, utilizing the output indication for providing symbol outputs, and utilizing the output indication for providing motion control outputs.

- Fig. 1 is a simplified pictorial illustration of object control and handwriting recognition apparatus constructed and operative in accordance with a preferred embodiment of the present invention

- Figs. 2A and 2B are schematic drawings of preferred structures of portions of the apparatus of Fig.

- Fig. 3 is a simplified block diagram of the apparatus of Fig. 1;

- Fig. 4 is a simplified flow chart illustrating the object control process performed by the apparatus of Fig. 1;

- Fig. 5A is a simplified flow chart illustrating the process of step 440 of figure 4.

- Fig. 5B comprises mathematical equations illustrating the process of Fig. 5A;

- Fig. 6 is a simplified block diagram of the apparatus of Fig. 1;

- Fig. 7A is a simplified flow chart illustrating the teaching process performed by the apparatus of Fig. 1;

- Fig. 7B is a simplified flow chart illustrating the recognition process performed by the apparatus of Fig. 1;

- Figs. 8A and 8B are graphical illustrations useful in understanding a preferred method for a portion of the teaching and recognition processes performed by the apparatus of Fig. 1.

- Appendix A is a computer listing of a preferred software implementation of a portion of steps 720 of Fig. 7A and 800 of Fig. 7B.

- FIG. 1 is a simplified pictorial illustration of apparatus operative to perform motion control in synergistic combination with symbol interpretation such as handwriting recognition.

- a hand-held pen 10 is operative to be translated and rotated about some or all of three perpendicular axes.

- the term "six degrees of freedom" is used herein to designate translation along and rotation about three orthogonal axes.

- Pen 10 also comprises a plurality of built-in accelerometers 25, such as model ICS 3031-2 commercially available from IC Sensors, 1701 McCarthy Blvd., Milpitas, CA 95035.

- pen 10 comprises six accelerometers arranged in pairs, with each pair lying along a particular axis, with the axes being mutually orthogonal. Alternatively, the axes may not be mutually orthogonal. In any case the accelerometers need not be coplanar.

- Pen 10 also comprises a plurality of amplifiers 30, associated with the plurality of accelerometers 25.

- Fig. 2A is a schematic drawing of a preferred embodiment of amplifier 30.

- removable apparatus comprising a plurality of accelerometers 25 as described above, and also comprising associated amplifiers 30, as described above, may be retrofitted onto the pen 10.

- the removable apparatus may have the form of a cap fitting the end of the pen, a ring fitting over the pen, or any other suitable form.

- the apparatus may not include a pen, but may have any other suitable hand held form.

- the apparatus may be in the form of a ring fitting the user's finger, may be supported by the body of a user, or mounted thereupon in any suitable manner.

- Pen 10 also comprises a switch 35, which can be used to send a signal indicating whether pen 10 is being used for handwriting recognition or as a pointing and control device. Alternatively the signal may be sent by moving pen 10 in a predefined format, or by any other appropriate means. During handwriting recognition, the user may write with pen 10 on writing surface 37.

- the data from the plurality of accelerometers 25 in pen 10 is termed herein "accelerometer data".

- the accelerometer data is sent through a cable to a control circuit 40.

- the accelerometer data may be sent through any suitable wireless communication link, such as ultrasonic, infrared, or by any other suitable means.

- Control circuit 40 amplifies the acceleration signals from pen 10 and converts them to digital form, preferably using an analog to digital converter.

- Fig. 2B is a schematic drawing of a preferred embodiment of an analog to digital converter suitable for the present application.

- Control circuit 40 then sends acceleration data to a CPU 50.

- CPU 50 may be any suitable CPU such as an IBM PC compatible computer with an 80386 processor chip.

- Associated with CPU 50 are a screen 60 and a keyboard 70.

- An object 80, such as a cursor or a graphic representation of a physical object, is displayed on screen 60.

- CPU 50, based on the acceleration data, moves the cursor or graphic representation 80 with six degrees of freedom, corresponding to the movement of pen 10.

- a symbol 85, such as one or more characters or words, may also be displayed on screen 60.

- CPU 50, based on the acceleration data, displays on screen 60 the symbols corresponding to what is written on writing surface 37.

- the functionality of the apparatus of Fig. 1 when performing object control will now be briefly described.

- the user moves pen 10 in three dimensions; the motion may include six degrees of freedom.

- Pen 10 sends acceleration data describing the accelerations of pen 10 during the motion to control circuit 40.

- Control circuit 40 amplifies and digitizes the acceleration data. The data is sent by control circuit 40 to CPU 50.

- CPU 50 computes the translational displacement, velocity, and acceleration of pen 10 along three mutually perpendicular axes, which axes need have no relation to the axes of the accelerometers.

- CPU 50 also computes the angular displacement (rotation), velocity and acceleration of pen 10 around the same three mutually perpendicular axes.

- CPU 50 moves the cursor or the representation of an object 80 on screen 60 with translations and rotations corresponding to those of pen 10.

- the axes for the translation and rotation of the cursor or object correspond to the axes used to compute the translation and rotation of pen 10.

- Fig. 3 is a simplified block diagram of the apparatus of Fig. 1.

- Pen 10, when moved by the user with six degrees of freedom, transmits data describing the accelerations of pen 10 to amplification circuit 120.

- Amplification circuit 120 amplifies the acceleration data and transmits the amplified acceleration data to analog/digital converter 130.

- Analog/digital converter 130 digitizes the acceleration data and transmits the digitized data to displacement/velocity/acceleration computation apparatus 140, termed herein DVA 140.

- DVA 140 computes the angular displacement, velocity, and acceleration of pen 10 around three mutually perpendicular axes, which axes need have no relation to the axes of the accelerometers. DVA 140 also computes the translational displacement, velocity and acceleration of pen 10 along the same three mutually perpendicular axes.

- DVA 140 transmits data describing the six degrees of freedom to screen display control 150. Based on the data, screen display control 150 updates screen 60 to show the new location and orientation of the cursor or the other object depicted on screen 60.

- Fig. 4 is a simplified flow chart illustrating operation of the apparatus of Fig. 1 in accordance with a preferred embodiment of the invention.

- the method of Fig. 4 preferably includes the following steps:

- STEP 410 Read accelerometer data. Data from each of the plurality of accelerometers 25 is sampled, preferably at a rate of one thousand data points per second.

- STEP 412 Check whether session is at the beginning. At the beginning of a session, STEP 420, described below, is required.

- STEP 415 Check whether pen is in motion. The accelerometer data is analyzed to determine whether pen 10 is in motion.

- pen 10 is considered to be not in motion whenever all of the acceleration signals indicate that the only sensed accelerations are due to gravity. Signals are chosen from one member of each of three pairs of accelerometers, each pair arranged along a different axis.

- the sensitivities of the accelerometers correct for any deviations of the axes of each pair of accelerometers as they are actually mounted in pen 10 from the common axis on which they are supposed to be situated; this component of the sensitivity is called static sensitivity.

- the sensitivities also correct for deviations between the axes of the global orthogonal coordinate system and the axes of the pairs of accelerometers; this component of the sensitivity is called dynamic sensitivity. In actual practice, both static sensitivity and dynamic sensitivity may make important contributions to sensitivity.

- the static sensitivity is computed as part of step 420, described in detail below.

- the dynamic sensitivity is computed as part of step 455, described in detail below.

- let ε denote a small positive constant; for example, 0.005 g, where g denotes the acceleration of gravity at the earth's surface. Then the pen is considered not to be in motion whenever 1 - ε < …
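
The inequality above is cut off in the source, so the exact test is not recoverable. A minimal sketch of one plausible reading follows, assuming the readings are expressed in units of g and that "at rest" means the magnitude of the sensed acceleration is within a small tolerance of 1 g. The function name `is_at_rest` and the parameter `eps` are hypothetical.

```python
import math

G = 1.0  # assumption: readings are already scaled to units of g


def is_at_rest(ax, ay, az, eps=0.005):
    """Return True when the sensed acceleration magnitude is within eps of 1 g.

    This is an assumed form of the rest test, not the patent's equation.
    """
    magnitude = math.sqrt(ax * ax + ay * ay + az * az)
    return abs(magnitude / G - 1.0) < eps


print(is_at_rest(0.0, 0.0, 1.0))  # gravity only
print(is_at_rest(0.3, 0.0, 1.0))  # extra acceleration along x
```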

- STEP 420 Compute initial conditions.

- the initial conditions may comprise the initial Euler angles between the global coordinate system and the axes of the pairs of accelerometers. These Euler angles are now determined.

- the static sensitivity can be computed from the accelerometer data while the pen is at rest in three known orientations.

- the static sensitivity can be computed once as a property of the pen and stored for future use.

- STEP 430 Compute the differential signal from each pair of accelerometers. The signals from each member of each pair of accelerometers are subtracted to form a differential signal for each pair of accelerometers.
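
Step 430 amounts to a per-pair subtraction. The sketch below assumes each pair is delivered as a tuple of two scalar readings; the function name and data layout are illustrative only.

```python
def differential_signals(pairs):
    """Subtract the second reading from the first for every accelerometer pair.

    pairs: iterable of (first_reading, second_reading), one tuple per pair.
    """
    return [first - second for first, second in pairs]


# Made-up readings for three pairs, one pair per axis.
print(differential_signals([(1.0, 0.25), (0.5, 0.5), (-1.0, 1.0)]))
```

If both members of a pair share the same sensitive axis and gain, any acceleration common to the two (gravity and pure translation of a rigid pen) cancels in the difference, which is presumably why the rotational parameters of step 440 are estimated from these differential signals.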

- STEP 440 Compute rotational parameters.

- the rotational parameters define parameters of the motion about the three axes of the global coordinate system.

- the rotational parameters comprise the three Euler angles; the three angular velocities; and the three angular accelerations.

- the rotational parameters are computed in parallel using an iterative feedback loop.

- an estimated differential acceleration is computed from the current rotational parameters. If the difference between the estimated differential acceleration and the actual differential acceleration signal is less than a predetermined amount, iteration is terminated.

- STEP 450 Compute translation acceleration.

- the angular orientation and the angular acceleration are known from step 440. From the angular orientation and the sensitivity vector the acceleration due to gravity is computed.

- STEP 455 Update dynamic sensitivity.

- the dynamic sensitivity represents deviations between the axes of the global orthogonal coordinate system and the axes of the pairs of accelerometers. Since the angular orientation of pen 10 may have changed, the dynamic sensitivity may also have changed.

- the new dynamic sensitivity may be computed from the new angular orientation and the old matrix of dynamic sensitivity.

- STEP 460 Compute translational velocity and displacement.

- the translational velocity is computed by integrating the translational acceleration with respect to time.

- the displacement is computed by integrating the translational velocity with respect to time.
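
Step 460 can be illustrated with a cumulative trapezoidal rule, which is one common choice; the source does not say which integration scheme is used. The sample period follows the 1000 samples per second mentioned in step 410, and the acceleration data is made up.

```python
def integrate(samples, dt, initial=0.0):
    """Cumulative trapezoidal integral of equally spaced samples."""
    total = initial
    out = [total]
    for prev, cur in zip(samples, samples[1:]):
        total += 0.5 * (prev + cur) * dt
        out.append(total)
    return out


DT = 0.001                      # 1000 samples per second (step 410)
accel = [2.0] * 1001            # made-up data: constant 2 m/s^2 for one second
velocity = integrate(accel, DT)
displacement = integrate(velocity, DT)
print(velocity[-1], displacement[-1])
```

In practice double integration accumulates sensor bias and noise quickly, so a real implementation would likely reset the integrators whenever the pen is judged to be at rest (step 415).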

- STEP 470 Move screen object. Based on the output of previous steps which comprises translational acceleration, velocity and displacement as well as angular acceleration, velocity and orientation, the screen object is moved. The moving of the screen object may be according to any appropriate transformation of the motions of pen 10.

- Fig. 5A is a simplified flowchart illustrating the operation of step 440 of Fig. 4.

- Fig. 5A includes the following steps:

- STEP 480 Set initial parameters.

- the rotational parameters comprise the three Euler angles; the three angular velocities; and the three angular accelerations.

- the initial value for the Euler angles is computed based on the previously known value of the parameters, assuming that the acceleration has remained constant.

- STEP 482 Compute differential acceleration from model.

- the position of an accelerometer in the coordinate system of the pen is defined by vector r and the rotation of the pen in the global coordinate system is defined by a rotation matrix A(phi) .

- A(phi) may be an appropriate rotation matrix as presented in sections 14.10-5 through 14.10-7, pages 475- 48TJ of Mathematical Handbook for Engineers by Korn and Korn, 2nd Edition, published by McGraw-Hill in 1968.

- Equation 490 illustrates the computation of the acceleration of the accelerometer in the global coordinate system.

- the sensitivity vector K also changes.

- the change in the sensitivity vector K may be computed by using equation 492 of Fig. 5B.

- the estimated value for the differential signal of the accelerometer, u_est, may be computed by using equation 494 of Fig. 5B.

- the remainder of the parameters may be computed with an appropriate model.

- a model which allows the use of only the parameters specified above, rather than a larger number of parameters, is used.

- equation 496 of Fig. 5B represents an appropriate model for computing the remainder of the parameters.

- STEP 484 Is the difference between the computed and the current value less than a predetermined amount? If the difference is less than this amount, the estimated parameters are taken to be correct and iteration is terminated, with the computed parameters being reported.

- An appropriate value for the predetermined amount may vary depending on, for example, the maximum number of desired iterations.

- One possible appropriate value would be 0.0003 g, where g represents the acceleration of gravity at the earth's surface.

- STEP 486 Compute changes in estimated angles according to the gradient method. New estimated angles are computed by adding a change to the old estimated angles; the change is computed according to the gradient method.

- the gradient method is explained more fully in section 20.3-3 of Mathematical Handbook for Engineers by Korn and Korn, referred to above.

- STEP 488 Compute new parameters. New values for the remaining parameters are computed. Iteration then continues with step 482.
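
The loop of steps 480 to 488 can be sketched as a predict, compare and update cycle. Equations 490 to 496 are not reproduced in this text, so the `predict` function below is a toy stand-in (each differential signal is simply the sine of one angle) and is not the patent's model. Only the loop structure and the 0.0003 g stopping tolerance come from the description; the step size `rate` and the iteration cap are assumptions.

```python
import math


def predict(angles):
    # Toy stand-in for the model of Fig. 5B (NOT the patent's equations).
    return [math.sin(a) for a in angles]


def estimate_angles(measured, start, tol=0.0003, rate=1.0, max_iter=100):
    """Refine the angle estimates until the prediction matches the measurement."""
    angles = list(start)                                  # step 480
    for _ in range(max_iter):
        residual = [p - m for p, m in zip(predict(angles), measured)]  # step 482
        if max(abs(r) for r in residual) < tol:           # step 484
            break
        # Step 486: gradient of 0.5 * sum(residual**2), analytic for the toy model.
        grad = [r * math.cos(a) for r, a in zip(residual, angles)]
        angles = [a - rate * g for a, g in zip(angles, grad)]
        # Step 488 (updating angular velocities and accelerations) is omitted here.
    return angles


true_angles = [0.1, -0.2, 0.3]
estimate = estimate_angles(predict(true_angles), [0.0, 0.0, 0.0])
print(estimate)
```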

- Pen 10 sends acceleration data through control circuit 40 to CPU 50. Teaching and recognition then occur based on the data from pen 10.

- Fig. 6 is a simplified block diagram of the apparatus of Fig. 1 when used for handwriting recognition.

- the apparatus of Fig. 6 receives input from pen 10.

- Pen 10, when moved by the user of the handwriting recognition apparatus, transmits data describing the accelerations of pen 10 over time to acceleration teaching control 630 and/or acceleration handwriting recognition control 650.

- the data from pen 10 may be transmitted to acceleration teaching control 630.

- Transmission to acceleration teaching control 630 typically occurs for each person who is to use the system for handwriting recognition for the first time. Transmission to acceleration teaching control 630 also preferably occurs when recognition errors are detected; use of acceleration teaching control 630 when recognition errors are detected is termed herein adaptive teaching.

- Acceleration teaching control 630 operates on the data received, which data represents hand movements by the user when writing a symbol, together with manually-provided identification of the symbol codes that are associated with the data. Acceleration teaching control 630 then updates database 640, a per-person per-symbol acceleration database. Database 640 comprises prototypes of accelerations for each symbol, comprising data specific to each person for each symbol.

- the data from pen 10 may be transmitted to acceleration handwriting recognition control 650.

- Acceleration handwriting recognition control 650 operates on the data received from pen 10 to recognize the symbol represented by the movement of pen 10.

- the output of acceleration handwriting recognition control 650 comprises a list of symbol codes and their respective probabilities.

- An acceleration handwriting recognition post-processing circuit 660 chooses the correct symbol code based on the list of symbol codes and probabilities, and on post-processing information which preferably comprises a database of previous confusions and a dictionary.

- the output of acceleration handwriting recognition post-processing circuit 660 is a list of symbol codes and/or words sorted by likelihood.

- FIGS. 7A and 7B are simplified flow charts illustrating operation of the apparatus of Fig. 6 in accordance with a preferred embodiment of the invention, when performing handwriting recognition.

- Fig. 7A illustrates the teaching process;

- Fig. 7B illustrates the recognition process.

- the steps in Fig. 7A include the following:

- STEP 710 Read accelerometer data.

- the accelerometer data comprises data points representing sampling of the acceleration measured by accelerometers 25.

- the sampling rate is approximately 1600 data points per second, averaged over 8 points, producing an output of approximately 200 data points per second.
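
The reduction from roughly 1600 to roughly 200 data points per second corresponds to averaging consecutive blocks of 8 samples. A minimal sketch, assuming non-overlapping blocks and that a trailing partial block is discarded (the source does not specify either detail):

```python
def block_average(samples, block=8):
    """Average consecutive groups of `block` samples; drop a trailing partial group."""
    usable = len(samples) - len(samples) % block
    return [sum(samples[i:i + block]) / block for i in range(0, usable, block)]


one_second = [0.0] * 1600          # made-up data: one second at 1600 samples/s
print(len(block_average(one_second)))
```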

- STEP 712 Identify pen-surface contact termination.

- the data from step 710 does not include the surface contact status of pen 10.

- the surface contact status of pen 10 may be derived from the acceleration data as follows:

- the acceleration data is filtered to remove components other than noise.

- the acceleration data may be filtered by a Butterworth digital filter described in Digital Filter Design by T.W. Parks and C.S. Burrus, John Wiley & Sons, 1987, chapter 7, section 7.3.3, using the 4th order lowpass digital filter with a cut-off frequency of 0.7 to 0.9.

- the filtered acceleration data is then integrated over time.

- the slope of the integrated filtered acceleration data is then analyzed to determine the point at which the slope exceeds a threshold value.

- the point at which the slope exceeds the threshold value is taken to be the first point with status "pen down".

- the point at which the slope falls below a threshold value is taken to be the first point with status "pen up"; the threshold value may or may not be the same as the previously described threshold value.

- the threshold values described above may be determined in advance for the particular type of pen and writing surface, may be determined by a learning process for the particular person, or may be determined by other means.
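
The thresholding part of step 712 can be sketched as a two-state machine with separate "down" and "up" thresholds. The sketch assumes the slope of the integrated, filtered signal has already been computed; the filtering and integration stages are omitted, and the threshold values in the example are made up.

```python
def pen_status(slopes, down_threshold, up_threshold):
    """Label each slope sample 'down' or 'up' using two thresholds."""
    status = []
    pen_down = False
    for s in slopes:
        if not pen_down and s > down_threshold:
            pen_down = True           # first point with status "pen down"
        elif pen_down and s < up_threshold:
            pen_down = False          # first point with status "pen up"
        status.append("down" if pen_down else "up")
    return status


print(pen_status([0.0, 0.2, 0.9, 0.6, 0.3, 0.1], down_threshold=0.8, up_threshold=0.4))
```

Using two different thresholds gives hysteresis, so a slope hovering near a single threshold does not toggle the status on every sample.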

- STEP 715 Identify individual symbols and words.

- the data from the previous step is divided into data representing individual symbols.

- the status which comprises the status of "pen up" is termed herein "pen not down".

- the number of consecutive data points with status of "pen not down", which data points represent a particular duration of the status "pen not down", is taken to indicate the end of a symbol or of a word.

- the duration of status "pen not down" within a range from 200 milliseconds to 400 milliseconds is taken to indicate the end of a symbol.

- Duration of the status "pen not down" in the range from 800 milliseconds to 1200 milliseconds is typically taken to indicate the end of a word.

- Output data from step 715 comprises symbol end and word end data.
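
A sketch of the gap classification in step 715 follows. It assumes one reading of the text: that a symbol-end threshold is picked from the 200 to 400 ms range and a word-end threshold from the 800 to 1200 ms range. The particular values 300 ms and 1000 ms, and the 5 ms sample period (200 data points per second), are illustrative choices.

```python
SAMPLE_PERIOD_MS = 5  # 200 data points per second after averaging


def classify_gap(n_points, symbol_ms=300, word_ms=1000):
    """Classify a run of consecutive 'pen not down' points by its duration."""
    duration = n_points * SAMPLE_PERIOD_MS
    if duration >= word_ms:
        return "word_end"
    if duration >= symbol_ms:
        return "symbol_end"
    return None  # too short to end a symbol, e.g. a lift within a character


for run in (20, 60, 200):
    print(run, classify_gap(run))
```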

- STEP 720 Normalize accelerometer data.

- the accelerometer data is normalized in time or by other means.

- Appendix A is a computer listing in the C programming language comprising routines that are a preferred implementation of step 720.

- the routines comprise the following routines in section II, "pre-preprocessing": normal; together with various definitions used by routine normal.

- STEP 730 Filter accelerometer data.

- the normalized accelerometer data received from the previous step is filtered in order to remove noise.

- the filtering may be accomplished by iterative smoothing of adjacent points until the total change in the signal due to a smoothing operation is less than the desired accuracy of the data, or by other suitable means.
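
The iterative smoothing described for step 730 can be sketched as repeated three-point averaging that stops once a pass changes the signal by less than the desired accuracy. The three-point kernel, the fixed end points and the pass limit are assumptions; the source only specifies the stopping rule.

```python
def smooth_until_stable(signal, accuracy, max_passes=1000):
    """Repeat 3-point averaging until one pass changes the signal by < accuracy."""
    current = list(signal)
    for _ in range(max_passes):
        nxt = current[:]
        for i in range(1, len(current) - 1):      # end points are left unchanged
            nxt[i] = (current[i - 1] + current[i] + current[i + 1]) / 3.0
        change = sum(abs(a - b) for a, b in zip(nxt, current))
        current = nxt
        if change < accuracy:
            break
    return current


spike = smooth_until_stable([0.0, 0.0, 3.0, 0.0, 0.0], accuracy=0.01)
print(spike)
```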

- STEP 740 Parameterize accelerometer data.

- the data is parameterized according to criteria which are chosen to represent each symbol. If the accelerometers are not mutually orthogonal, the acceleration data may be converted into equivalent data in a mutually orthogonal coordinate system as follows:

- the parameters preferably comprise the following: number of points before normalization; normalized signal of pen status; normalized signal of Z acceleration; sine of the angle α', which angle is defined as the angle between the vector associated with the current data point (AccX_i, AccY_i, AccZ_i) and the AccXAccY plane as shown in Fig.
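
The last parameter listed above has a simple closed form: the sine of the angle between a vector and the AccX-AccY plane is the Z component divided by the vector's length. A minimal sketch, with a hypothetical function name and an arbitrary convention for the zero vector:

```python
import math


def sin_elevation(acc_x, acc_y, acc_z):
    """Sine of the angle between (acc_x, acc_y, acc_z) and the X-Y plane."""
    norm = math.sqrt(acc_x ** 2 + acc_y ** 2 + acc_z ** 2)
    if norm == 0.0:
        return 0.0  # angle is undefined for a zero vector; 0.0 is an arbitrary choice
    return acc_z / norm


print(sin_elevation(1.0, 0.0, 1.0))  # vector 45 degrees above the plane
```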

- STEP 750 Generalize parameters.

- the parameters of the symbol being learned represent a specific instance of the symbol.

- the symbol prototype stored by the system is to represent the general characteristics of the symbol as drawn by that person. Therefore, the parameters of the symbol being learned are generalized by some suitable means, such as by computation of the average of the value of each parameter from previous instances of the symbol along with the value of each parameter from the current instance of the symbol.
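
The averaging option mentioned for step 750 can be kept as a running mean, so that earlier instances need not be stored. The sketch assumes each instance is a flat list of parameter values of fixed length; the function name and the stored count are illustrative.

```python
def update_prototype(prototype, count, instance):
    """Fold one new instance into the running mean of `count` earlier instances."""
    new_count = count + 1
    updated = [p + (x - p) / new_count for p, x in zip(prototype, instance)]
    return updated, new_count


proto, n = update_prototype([0.0, 0.0], 0, [2.0, 4.0])   # first instance of a symbol
proto, n = update_prototype(proto, n, [4.0, 8.0])        # second instance
print(proto, n)
```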

- STEP 760 Update per-person per-symbol acceleration prototype database. The newly computed parameters from the previous step are stored in the per-person per-symbol acceleration prototype database.

- The steps in Fig. 7B include steps which have already been described above with reference to Fig. 7A. The remainder of the steps in Fig. 7B include the following:

- STEP 800 For each prototype in the per-person per-symbol acceleration prototype database, build a measure of comparison between the sample and the prototype, combined over parameters in the prototype. In accordance with a preferred embodiment of the present invention, all parameters are combined together to produce the measure of comparison.

- Appendix A is a computer listing in the C programming language comprising routines that are a preferred implementation of step 800. The routines comprise the following, which are found in section V, "symbols recognition": make_corr; correl_hem; obj_funct; together with various definitions used by the routines.

- STEP 810 Create a list of probable symbols sorted by likelihood. Based on the measure or measures of comparison generated in step 800, a single list of probable symbols sorted by likelihood is generated.
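
Steps 800 and 810 can be sketched as scoring every prototype against the sample and sorting by score. The routine names in Appendix A hint at a correlation-based measure, but the listing is not reproduced here, so the Pearson correlation averaged over parameters below is an assumption, as are the dictionary-based data layout and the function names.

```python
import math


def correlation(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0.0 or var_b == 0.0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def rank_symbols(sample, prototypes):
    """Return (symbol, score) pairs sorted from most to least similar.

    sample and each prototype map a parameter name to a normalized sequence.
    """
    scores = []
    for symbol, proto in prototypes.items():
        score = sum(correlation(sample[k], proto[k]) for k in proto) / len(proto)
        scores.append((symbol, score))
    return sorted(scores, key=lambda item: item[1], reverse=True)


sample = {"z": [0.0, 1.0, 2.0, 3.0]}
prototypes = {"a": {"z": [0.0, 1.0, 2.0, 3.0]}, "b": {"z": [3.0, 2.0, 1.0, 0.0]}}
ranked = rank_symbols(sample, prototypes)
print(ranked)
```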

- STEP 820 Choose the correct symbols and the correct word based on the list, the database of previous confusions and a dictionary. The symbols with greatest likelihood are the candidates from which the correct symbol is chosen.

- the database of previous confusions provides information that allows the correction of the choice of the correct symbol based on previous incorrect identifications.

- the database of previous confusions comprises, for each symbol, a list of other symbols which have been confused with the first symbol; for example, that the symbol "f" has often been confused with the symbol "b".

- the symbol or symbols that have previously been confused with the symbol in the list are added to the list.

- for example, if the symbol "f" is found in the list, then the symbol "b" is added to the list.

- the most likely word is checked against the dictionary.

- the dictionary comprises both a general dictionary used for all users of the system and a personal dictionary for each user of the system. If an entry exists in the dictionary for the most likely word, the word is chosen as the correct identification.
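
The two parts of step 820 described above, extending the candidate list with previously confused symbols and checking a word against the general and personal dictionaries, can be sketched as follows. The data layout (`struct confusion`, plain arrays of strings) and both function names are hypothetical and chosen only for illustration.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical confusion entry: `seen` has been confused with `other`. */
struct confusion {
    char seen;
    char other;
};

/* Append to `list` every symbol that has previously been confused with a
 * symbol already in the list. Returns the new length (at most `cap`). */
size_t expand_with_confusions(char *list, size_t len, size_t cap,
                              const struct confusion *db, size_t db_len)
{
    size_t i, j, orig_len = len;

    assert(list != NULL && len <= cap);
    for (i = 0; i < orig_len; i++) {
        for (j = 0; j < db_len; j++) {
            if (db[j].seen == list[i] && len < cap &&
                memchr(list, db[j].other, len) == NULL) {
                list[len++] = db[j].other;   /* not yet a candidate */
            }
        }
    }
    return len;
}

/* Return 1 if `word` is in the general or the personal dictionary. */
int in_dictionary(const char *word,
                  const char *const *general, size_t n_general,
                  const char *const *personal, size_t n_personal)
{
    size_t i;

    for (i = 0; i < n_general; i++)
        if (strcmp(word, general[i]) == 0) return 1;
    for (i = 0; i < n_personal; i++)
        if (strcmp(word, personal[i]) == 0) return 1;
    return 0;
}
```

With a database entry pairing "f" with "b", a candidate list containing "f" gains "b", matching the example in the text.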

- STEP 830 Check to see if a correction has been entered.

- The user of the system is preferably provided with a visual indication of each symbol recognized.

- The user of the system preferably is provided with a visual indication of the word recognized.

- The user may indicate manually that a given word was incorrectly recognized and may input a correction.

- STEP 840 Update database of previous confusions. Based on a manual correction entered in step 830 or an automatic correction based on the dictionary, the database of previous confusions is updated. Based on a manual correction, the personal dictionary is also updated if the corrected word is not found in the dictionary.
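
A minimal sketch of the update in step 840, assuming the database of previous confusions is kept as a table of counts indexed by the recognized symbol and the correct symbol. The `struct confusion_db` layout and the function names are hypothetical, and words of different length are skipped for simplicity.

```c
#include <assert.h>
#include <limits.h>
#include <string.h>

/* Hypothetical store: counts[a][b] is the number of times symbol `a`
 * was recognized when the correct symbol was `b`. */
struct confusion_db {
    unsigned counts[UCHAR_MAX + 1][UCHAR_MAX + 1];
};

/* Record one corrected symbol; identical symbols are not a confusion. */
void record_confusion(struct confusion_db *db, char recognized, char correct)
{
    assert(db != NULL);
    if (recognized != correct)
        db->counts[(unsigned char)recognized][(unsigned char)correct]++;
}

/* Compare a recognized word with its correction symbol by symbol.
 * Returns the number of confusions recorded, or -1 if the two words
 * differ in length (not handled in this sketch). */
int update_from_correction(struct confusion_db *db,
                           const char *recognized, const char *corrected)
{
    size_t i, n = strlen(recognized);
    int recorded = 0;

    if (strlen(corrected) != n)
        return -1;
    for (i = 0; i < n; i++) {
        if (recognized[i] != corrected[i]) {
            record_confusion(db, recognized[i], corrected[i]);
            recorded++;
        }
    }
    return recorded;
}
```

The table is about 256 KB with 4-byte counters, so it is best given static storage rather than placed on the stack.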

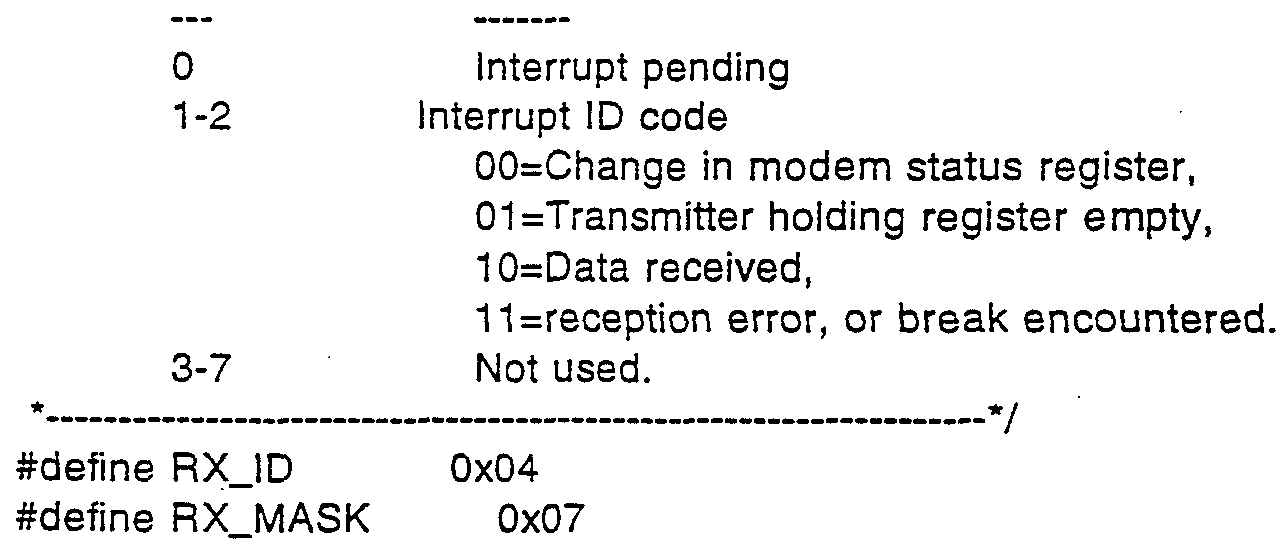

- The (IMR) tells the (PIC) to service an interrupt only if it is not masked (FALSE).
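
The masking rule can be illustrated with plain bit operations on a byte standing in for the IMR. This is a sketch only: it performs no port I/O, the helper names are hypothetical, and the use of IRQ 4 in the usage note reflects the conventional COM1 assignment on IBM PC compatibles rather than anything stated in the listing.

```c
#include <assert.h>

/* Bit n of the IMR controls IRQ n: 1 = masked (ignored),
 * 0 = not masked (serviced by the PIC). */
int irq_serviced(unsigned char imr, unsigned irq)
{
    assert(irq < 8);
    return ((imr >> irq) & 1u) == 0;
}

/* Clear the mask bit so that the PIC services this IRQ. */
unsigned char imr_unmask(unsigned char imr, unsigned irq)
{
    assert(irq < 8);
    return (unsigned char)(imr & ~(1u << irq));
}

/* Set the mask bit so that the PIC ignores this IRQ. */
unsigned char imr_mask(unsigned char imr, unsigned irq)
{
    assert(irq < 8);
    return (unsigned char)(imr | (1u << irq));
}
```

Starting from a fully masked register (0xFF), `imr_unmask(0xFF, 4)` gives 0xEF, after which only IRQ 4 is serviced.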

- The 8250 UART has 10 registers accessible through 7 port addresses. Here are their addresses relative to COM1BASE and COM2BASE. Note that the baud rate registers (DLL) and (DLH) are active only when the Divisor-Latch Access-Bit (DLAB) is on. The (DLAB) is bit 7 of the (LCR).
  - TXR Output data to the serial port.
  - RXR Input data from the serial port.
  - LCR Initialize the serial port.
  - IER Controls interrupt generation.
  - IIR Identifies interrupts.
  - MCR Send control signals to the modem.
  - LSR Monitor the status of the serial port.
  - MSR Receive status of the modem.
  - DLL Low byte of baud rate divisor.
  - DLH High byte of baud rate divisor.

- MSR Modem Input Status Register

- #define TBL_BAUD_300 1
  #define TBL_BAUD_150 0
  #define TBL_PARITY_NONE 0
  #define TBL_PARITY_ODD 1
  #define TBL_PARITY_EVEN 2
  #define TBL_STOPBITS_1 0
  #define TBL_STOPBITS_2 1
  #define TBL_DSR_MONITOR_OFF 0
  #define TBL_DSR_MONITOR_ON 1
  #define TBL_DATALENGTH_7 0
  #define TBL_DATALENGTH_8 1
  #define TBL_TRANSFER_RATE_MAX 7
  #define TBL_TRANSFER_RATE_100 6
  #define TBL_TRANSFER_RATE_67 5
  #define TBL_TRANSFER

- arr_new[ind_new] = arr_old[num_old-1];

- /* Procedure elev calculates the SIN and COS of the angle of elevation */ void elev(float x, float y, float z, float *cos_ug, float *sin_ug)

- norma = (float) sqrt(x * x + y * y + z * z); if (norma < .00001) {

- *cos_ug = (x1 * x2 + y1 * y2 + z1 * z2) / norma1 / norma2;

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Multimedia (AREA)

- Computer Vision & Pattern Recognition (AREA)

- General Engineering & Computer Science (AREA)

- Business, Economics & Management (AREA)

- Human Computer Interaction (AREA)

- Educational Administration (AREA)

- Educational Technology (AREA)

- Position Input By Displaying (AREA)

- User Interface Of Digital Computer (AREA)

- Push-Button Switches (AREA)

- Indicating And Signalling Devices For Elevators (AREA)

- Input From Keyboards Or The Like (AREA)

Priority Applications (2)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| EP95909486A EP0742939A4 (en) | 1994-02-04 | 1995-02-03 | Improved information input apparatus |

| AU17436/95A AU1743695A (en) | 1994-02-04 | 1995-02-03 | Improved information input apparatus |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| IL10856594A IL108565A0 (en) | 1994-02-04 | 1994-02-04 | Improved information input apparatus |

| IL108,565 | 1994-02-04 | | |

Publications (1)

| Publication Number | Publication Date |

|---|---|

| WO1995021436A1 (en) | 1995-08-10 |

Family

ID=11065784

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| PCT/US1995/001483 WO1995021436A1 (en) | 1994-02-04 | 1995-02-03 | Improved information input apparatus |

Country Status (6)

| Country | Link |

|---|---|

| EP (1) | EP0742939A4 (en) |

| AU (1) | AU1743695A (en) |

| CA (1) | CA2182627A1 (en) |

| IL (1) | IL108565A0 (en) |

| WO (1) | WO1995021436A1 (en) |

| ZA (1) | ZA95810B (en) |

Cited By (31)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US5875257A (en) * | 1997-03-07 | 1999-02-23 | Massachusetts Institute Of Technology | Apparatus for controlling continuous behavior through hand and arm gestures |

| WO1999046909A1 (en) * | 1998-03-12 | 1999-09-16 | Johan Ullman | Device for entering signs into a cellular telephone |

| WO2000031682A1 (en) * | 1998-11-19 | 2000-06-02 | Daniel Gens | Device for recording data corresponding to written or recorded information |

| US6081261A (en) * | 1995-11-01 | 2000-06-27 | Ricoh Corporation | Manual entry interactive paper and electronic document handling and processing system |

| US6104380A (en) * | 1997-04-14 | 2000-08-15 | Ricoh Company, Ltd. | Direct pointing apparatus for digital displays |

| US6181329B1 (en) | 1997-12-23 | 2001-01-30 | Ricoh Company, Ltd. | Method and apparatus for tracking a hand-held writing instrument with multiple sensors that are calibrated by placing the writing instrument in predetermined positions with respect to the writing surface |

| US6201903B1 (en) | 1997-09-30 | 2001-03-13 | Ricoh Company, Ltd. | Method and apparatus for pen-based faxing |

| WO2001025891A1 (en) * | 1999-10-05 | 2001-04-12 | Ecritek Corporation | Method and apparatus for digitally capturing handwritten notes |

| US6396481B1 (en) | 1999-04-19 | 2002-05-28 | Ecrio Inc. | Apparatus and method for portable handwriting capture |

| WO2002071324A1 (en) * | 2001-03-05 | 2002-09-12 | Fraunhofer-Gesellschaft zur Förderung der angewandten Forschung e.V. | Method and device for tracking an object |

| WO2004003720A1 (en) * | 2002-06-26 | 2004-01-08 | Fingersteps, Inc. | Method and apparatus for composing and performing music |

| WO2004029866A1 (en) * | 2002-09-28 | 2004-04-08 | Koninklijke Philips Electronics N.V. | Method and system for three-dimensional handwriting recognition |

| WO2004059569A1 (en) * | 2002-12-26 | 2004-07-15 | Koninklijke Philips Electronics N.V. | Method and system for three-dimentional handwriting recognition |

| EP1460577A2 (en) * | 2003-03-17 | 2004-09-22 | Samsung Electronics Co., Ltd. | Motion detection for handwriting recognition |

| EP1460524A2 (en) * | 2003-03-14 | 2004-09-22 | Samsung Electronics Co., Ltd. | Motion-based electronic device control apparatus and method |

| US6831632B2 (en) | 2001-04-09 | 2004-12-14 | I. C. + Technologies Ltd. | Apparatus and methods for hand motion tracking and handwriting recognition |

| WO2005034023A1 (en) * | 2003-09-26 | 2005-04-14 | Ostecs, Inc. | Spatial chirographic sign reader |

| WO2005059766A2 (en) * | 2003-12-16 | 2005-06-30 | Koninklijke Philips Electronics N.V. | Pocket device for wireless receiving and delivering |

| EP1728187A2 (en) * | 2003-11-14 | 2006-12-06 | Malome T. Khomo | A method of text interaction using chirographic techniques |

| US7317450B2 (en) | 2003-09-26 | 2008-01-08 | Khomo Malome T | Spatial chirographic sign reader |

| US7554027B2 (en) | 2005-12-05 | 2009-06-30 | Daniel William Moffatt | Method to playback multiple musical instrument digital interface (MIDI) and audio sound files |

| US7668375B2 (en) * | 2003-09-26 | 2010-02-23 | Khomo Malome T | Method of employing a chirographic stylus |

| US7723603B2 (en) | 2002-06-26 | 2010-05-25 | Fingersteps, Inc. | Method and apparatus for composing and performing music |

| US7786366B2 (en) | 2004-07-06 | 2010-08-31 | Daniel William Moffatt | Method and apparatus for universal adaptive music system |

| US8007282B2 (en) | 2001-07-16 | 2011-08-30 | Immersion Corporation | Medical simulation interface apparatus and method |

| US8036465B2 (en) | 2003-09-26 | 2011-10-11 | Khomo Malome T | Method of text interaction using chirographic techniques |

| US8242344B2 (en) | 2002-06-26 | 2012-08-14 | Fingersteps, Inc. | Method and apparatus for composing and performing music |

| US8503086B2 (en) | 1995-11-06 | 2013-08-06 | Impulse Technology Ltd. | System and method for tracking and assessing movement skills in multidimensional space |

| EP3109797A1 (en) * | 2015-06-26 | 2016-12-28 | Orange | Method for recognising handwriting on a physical surface |

| US10254953B2 (en) | 2013-01-21 | 2019-04-09 | Keypoint Technologies India Pvt. Ltd. | Text input method using continuous trace across two or more clusters of candidate words to select two or more words to form a sequence, wherein the candidate words are arranged based on selection probabilities |

| US10474355B2 (en) | 2013-01-21 | 2019-11-12 | Keypoint Technologies India Pvt. Ltd. | Input pattern detection over virtual keyboard for candidate word identification |

Citations (3)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US4787051A (en) * | 1986-05-16 | 1988-11-22 | Tektronix, Inc. | Inertial mouse system |

| US5027115A (en) * | 1989-09-04 | 1991-06-25 | Matsushita Electric Industrial Co., Ltd. | Pen-type computer input device |

| US5181181A (en) * | 1990-09-27 | 1993-01-19 | Triton Technologies, Inc. | Computer apparatus input device for three-dimensional information |

Family Cites Families (2)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US4513437A (en) * | 1982-06-30 | 1985-04-23 | International Business Machines Corporation | Data input pen for Signature Verification |

| US4839838A (en) * | 1987-03-30 | 1989-06-13 | Labiche Mitchell | Spatial input apparatus |

1994

- 1994-02-04 IL IL10856594A patent/IL108565A0/en unknown

1995

- 1995-02-02 ZA ZA95810A patent/ZA95810B/en unknown

- 1995-02-03 CA CA002182627A patent/CA2182627A1/en not_active Abandoned

- 1995-02-03 EP EP95909486A patent/EP0742939A4/en not_active Withdrawn

- 1995-02-03 WO PCT/US1995/001483 patent/WO1995021436A1/en not_active Application Discontinuation

- 1995-02-03 AU AU17436/95A patent/AU1743695A/en not_active Abandoned

Non-Patent Citations (1)

| Title |

|---|

| See also references of EP0742939A4 * |

Cited By (48)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US6081261A (en) * | 1995-11-01 | 2000-06-27 | Ricoh Corporation | Manual entry interactive paper and electronic document handling and processing system |

| US8503086B2 (en) | 1995-11-06 | 2013-08-06 | Impulse Technology Ltd. | System and method for tracking and assessing movement skills in multidimensional space |

| US8861091B2 (en) | 1995-11-06 | 2014-10-14 | Impulse Technology Ltd. | System and method for tracking and assessing movement skills in multidimensional space |

| US5875257A (en) * | 1997-03-07 | 1999-02-23 | Massachusetts Institute Of Technology | Apparatus for controlling continuous behavior through hand and arm gestures |

| US6104380A (en) * | 1997-04-14 | 2000-08-15 | Ricoh Company, Ltd. | Direct pointing apparatus for digital displays |

| US6201903B1 (en) | 1997-09-30 | 2001-03-13 | Ricoh Company, Ltd. | Method and apparatus for pen-based faxing |

| US6181329B1 (en) | 1997-12-23 | 2001-01-30 | Ricoh Company, Ltd. | Method and apparatus for tracking a hand-held writing instrument with multiple sensors that are calibrated by placing the writing instrument in predetermined positions with respect to the writing surface |

| US6492981B1 (en) | 1997-12-23 | 2002-12-10 | Ricoh Company, Ltd. | Calibration of a system for tracking a writing instrument with multiple sensors |

| WO1999046909A1 (en) * | 1998-03-12 | 1999-09-16 | Johan Ullman | Device for entering signs into a cellular telephone |

| WO2000031682A1 (en) * | 1998-11-19 | 2000-06-02 | Daniel Gens | Device for recording data corresponding to written or recorded information |

| US6396481B1 (en) | 1999-04-19 | 2002-05-28 | Ecrio Inc. | Apparatus and method for portable handwriting capture |

| US6504956B1 (en) | 1999-10-05 | 2003-01-07 | Ecrio Inc. | Method and apparatus for digitally capturing handwritten notes |

| WO2001025891A1 (en) * | 1999-10-05 | 2001-04-12 | Ecritek Corporation | Method and apparatus for digitally capturing handwritten notes |

| WO2002071324A1 (en) * | 2001-03-05 | 2002-09-12 | Fraunhofer-Gesellschaft zur Förderung der angewandten Forschung e.V. | Method and device for tracking an object |

| US7394460B2 (en) | 2001-04-09 | 2008-07-01 | I.C. + Technologies Ltd. | Apparatus and method for hand motion tracking and handwriting recognition |

| US8686976B2 (en) | 2001-04-09 | 2014-04-01 | I.C. + Technologies Ltd. | Apparatus and method for hand motion detection and hand motion tracking generally |

| US6831632B2 (en) | 2001-04-09 | 2004-12-14 | I. C. + Technologies Ltd. | Apparatus and methods for hand motion tracking and handwriting recognition |

| US7911457B2 (en) | 2001-04-09 | 2011-03-22 | I.C. + Technologies Ltd. | Apparatus and methods for hand motion detection and hand motion tracking generally |

| US8007282B2 (en) | 2001-07-16 | 2011-08-30 | Immersion Corporation | Medical simulation interface apparatus and method |

| WO2004003720A1 (en) * | 2002-06-26 | 2004-01-08 | Fingersteps, Inc. | Method and apparatus for composing and performing music |

| US8242344B2 (en) | 2002-06-26 | 2012-08-14 | Fingersteps, Inc. | Method and apparatus for composing and performing music |

| US7129405B2 (en) | 2002-06-26 | 2006-10-31 | Fingersteps, Inc. | Method and apparatus for composing and performing music |

| US7723603B2 (en) | 2002-06-26 | 2010-05-25 | Fingersteps, Inc. | Method and apparatus for composing and performing music |

| CN100377043C (en) * | 2002-09-28 | 2008-03-26 | 皇家飞利浦电子股份有限公司 | Three-dimensional hand-written identification process and system thereof |

| WO2004029866A1 (en) * | 2002-09-28 | 2004-04-08 | Koninklijke Philips Electronics N.V. | Method and system for three-dimensional handwriting recognition |

| US8150162B2 (en) | 2002-09-28 | 2012-04-03 | Koninklijke Philips Electronics N.V. | Method and system for three-dimensional handwriting recognition |

| WO2004059569A1 (en) * | 2002-12-26 | 2004-07-15 | Koninklijke Philips Electronics N.V. | Method and system for three-dimentional handwriting recognition |

| EP1460524A2 (en) * | 2003-03-14 | 2004-09-22 | Samsung Electronics Co., Ltd. | Motion-based electronic device control apparatus and method |

| EP1460524A3 (en) * | 2003-03-14 | 2006-07-26 | Samsung Electronics Co., Ltd. | Motion-based electronic device control apparatus and method |

| EP1460577A3 (en) * | 2003-03-17 | 2005-12-07 | Samsung Electronics Co., Ltd. | Motion detection for handwriting recognition |

| EP1460577A2 (en) * | 2003-03-17 | 2004-09-22 | Samsung Electronics Co., Ltd. | Motion detection for handwriting recognition |

| US7580572B2 (en) | 2003-03-17 | 2009-08-25 | Samsung Electronics Co., Ltd. | Spatial motion recognition system and method using a virtual handwriting plane |

| JP2007524150A (en) * | 2003-09-26 | 2007-08-23 | オステックス・インコーポレーテッド | Spatial writing reader |

| US7668375B2 (en) * | 2003-09-26 | 2010-02-23 | Khomo Malome T | Method of employing a chirographic stylus |

| WO2005034023A1 (en) * | 2003-09-26 | 2005-04-14 | Ostecs, Inc. | Spatial chirographic sign reader |

| US8036465B2 (en) | 2003-09-26 | 2011-10-11 | Khomo Malome T | Method of text interaction using chirographic techniques |

| US7317450B2 (en) | 2003-09-26 | 2008-01-08 | Khomo Malome T | Spatial chirographic sign reader |

| EP1728187A4 (en) * | 2003-11-14 | 2011-04-06 | Malome T Khomo | A method of text interaction using chirographic techniques |

| EP1728187A2 (en) * | 2003-11-14 | 2006-12-06 | Malome T. Khomo | A method of text interaction using chirographic techniques |

| WO2005059766A3 (en) * | 2003-12-16 | 2005-08-11 | Koninkl Philips Electronics Nv | Pocket device for wireless receiving and delivering |

| WO2005059766A2 (en) * | 2003-12-16 | 2005-06-30 | Koninklijke Philips Electronics N.V. | Pocket device for wireless receiving and delivering |

| US7786366B2 (en) | 2004-07-06 | 2010-08-31 | Daniel William Moffatt | Method and apparatus for universal adaptive music system |

| US7554027B2 (en) | 2005-12-05 | 2009-06-30 | Daniel William Moffatt | Method to playback multiple musical instrument digital interface (MIDI) and audio sound files |

| US10254953B2 (en) | 2013-01-21 | 2019-04-09 | Keypoint Technologies India Pvt. Ltd. | Text input method using continuous trace across two or more clusters of candidate words to select two or more words to form a sequence, wherein the candidate words are arranged based on selection probabilities |

| US10474355B2 (en) | 2013-01-21 | 2019-11-12 | Keypoint Technologies India Pvt. Ltd. | Input pattern detection over virtual keyboard for candidate word identification |

| EP3109797A1 (en) * | 2015-06-26 | 2016-12-28 | Orange | Method for recognising handwriting on a physical surface |

| FR3038100A1 (en) * | 2015-06-26 | 2016-12-30 | Orange | METHOD OF RECOGNIZING HANDWRITING ON A PHYSICAL SURFACE |

| US10126825B2 (en) | 2015-06-26 | 2018-11-13 | Orange | Method for recognizing handwriting on a physical surface |

Also Published As

| Publication number | Publication date |

|---|---|

| EP0742939A4 (en) | 2002-10-16 |

| ZA95810B (en) | 1995-11-06 |

| AU1743695A (en) | 1995-08-21 |

| EP0742939A1 (en) | 1996-11-20 |

| IL108565A0 (en) | 1994-05-30 |

| CA2182627A1 (en) | 1995-08-10 |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| WO1995021436A1 (en) | | Improved information input apparatus |

| CN100377043C (en) | | Three-dimensional hand-written identification process and system thereof |

| US6081261A (en) | | Manual entry interactive paper and electronic document handling and processing system |

| EP0218407B1 (en) | | Dynamic signature verification |

| US6212296B1 (en) | | Method and apparatus for transforming sensor signals into graphical images |

| Hsu et al. | | An inertial pen with dynamic time warping recognizer for handwriting and gesture recognition |

| EP0666543B1 (en) | | Handwriting input apparatus using more than one sensing technique |

| US20100023314A1 (en) | | ASL Glove with 3-Axis Accelerometers |

| Bui et al. | | Recognizing postures in Vietnamese sign language with MEMS accelerometers |

| US20020181744A1 (en) | | Electronic module for sensing pen motion |

| ZA200603312B (en) | | Spatial character recognition technique and chirographic text character reader |

| CN105159463B (en) | | A kind of contactless wearable intelligent ring system and its gesture identification method |

| Oh et al. | | Inertial sensor based recognition of 3-D character gestures with an ensemble classifiers |

| Ong et al. | | Sign-language recognition through gesture & movement analysis (SIGMA) |

| Kim et al. | | Recognition of sign language with an inertial sensor-based data glove |

| EP1668566B1 (en) | | Spatial chirographic sign reader |

| US20150116285A1 (en) | | Method and apparatus for electronic capture of handwriting and drawing |

| Renuka et al. | | Online hand written character recognition using digital pen for static authentication |

| JPH08507886A (en) | | Handwriting reader |

| Mohandes et al. | | Automation of the Arabic sign language recognition |

| Zhang et al. | | Towards an ubiquitous wireless digital writing instrument using MEMS motion sensing technology |

| US11157099B2 (en) | | Electronic writing device and a method for operating the same |

| Chen et al. | | A fusion recognition method based on multifeature hidden markov model for dynamic hand gesture |

| Toyozumi et al. | | Trajectory reconstruction algorithm based on sensor fusion between IMU and strain gauge for stand-alone digital pen |

| AU2021101436A4 (en) | | Wearable sign language detection system |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| | AK | Designated states | Kind code of ref document: A1; Designated state(s): AM AT AU BB BG BR BY CA CH CN CZ DE DK EE ES FI GB GE HU JP KE KG KP KR KZ LK LR LT LU LV MD MG MN MW MX NL NO NZ PL PT RO RU SD SE SI SK TJ TT UA US UZ VN |

| | AL | Designated countries for regional patents | Kind code of ref document: A1; Designated state(s): KE MW SD SZ AT BE CH DE DK ES FR GB GR IE IT LU MC NL PT SE BF BJ CF CG CI CM GA GN ML MR NE SN TD TG |

| | CFP | Corrected version of a pamphlet front page | |

| | CR1 | Correction of entry in section i | Free format text: PAT.BUL.34/95 UNDER INID (51) "IPC" REPLACE "G09B 3/02" BY "G09G 3/02" |

| | 121 | Ep: the epo has been informed by wipo that ep was designated in this application | |

| | DFPE | Request for preliminary examination filed prior to expiration of 19th month from priority date (pct application filed before 20040101) | |

| | WWE | Wipo information: entry into national phase | Ref document number: 2182627; Country of ref document: CA; Ref document number: 281104; Country of ref document: NZ |

| | WWE | Wipo information: entry into national phase | Ref document number: 1995909486; Country of ref document: EP |

| | ENP | Entry into the national phase | Ref document number: 1996 687400; Country of ref document: US; Date of ref document: 19961011; Kind code of ref document: A |

| | WWP | Wipo information: published in national office | Ref document number: 1995909486; Country of ref document: EP |

| | REG | Reference to national code | Ref country code: DE; Ref legal event code: 8642 |

| | WWW | Wipo information: withdrawn in national office | Ref document number: 1995909486; Country of ref document: EP |