US20040066376A1 - Mobility assist device

- Publication number

- US20040066376A1 (application US10/626,953)

- Authority

- US

- United States

- Prior art keywords

- display

- objects

- location

- mobile body

- signal

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Abandoned

Classifications

- G01C21/365—Guidance using head up displays or projectors, e.g. virtual vehicles or arrows projected on the windscreen or on the road itself

- B60R2300/205—Details of viewing arrangements using cameras and displays, specially adapted for use in a vehicle characterised by the type of display used using a head-up display

- B60R2300/301—Details of viewing arrangements using cameras and displays, specially adapted for use in a vehicle characterised by the type of image processing combining image information with other obstacle sensor information, e.g. using RADAR/LIDAR/SONAR sensors for estimating risk of collision

- B60R2300/302—Details of viewing arrangements using cameras and displays, specially adapted for use in a vehicle characterised by the type of image processing combining image information with GPS information or vehicle data, e.g. vehicle speed, gyro, steering angle data

- B60R2300/305—Details of viewing arrangements using cameras and displays, specially adapted for use in a vehicle characterised by the type of image processing using merged images, e.g. merging camera image with stored images merging camera image with lines or icons

- B60R2300/307—Details of viewing arrangements using cameras and displays, specially adapted for use in a vehicle characterised by the type of image processing virtually distinguishing relevant parts of a scene from the background of the scene

- B60R2300/60—Details of viewing arrangements using cameras and displays, specially adapted for use in a vehicle characterised by monitoring and displaying vehicle exterior scenes from a transformed perspective

- B60R2300/804—Details of viewing arrangements using cameras and displays, specially adapted for use in a vehicle characterised by the intended use of the viewing arrangement for lane monitoring

- B60R2300/8093—Details of viewing arrangements using cameras and displays, specially adapted for use in a vehicle characterised by the intended use of the viewing arrangement for obstacle warning

- B60T2201/08—Lane monitoring; Lane Keeping Systems

- B60T2201/086—Lane monitoring; Lane Keeping Systems using driver related features

Definitions

- The present invention deals with mobility assistance. More particularly, the present invention deals with a vision assist device in the form of a head up display (HUD) for assisting mobility of a mobile body, such as a person, a non-motorized vehicle or a motor vehicle.

- the driver's forward-looking vision simply does not provide enough information to facilitate safe control of the vehicle. This can be exacerbated, particularly on snow removal equipment, because even on a relatively calm, clear day, snow can be blown up from the front or sides of snowplow blades, substantially obstructing the visual field of the driver.

- the present invention is directed to a visual assist device which provides a conformal, augmented display to assist in movement of a mobile body.

- the mobile body is a vehicle (motorized or non-motorized) and the present invention assists the driver in either lane keeping or collision avoidance, or both.

- the system can display lane boundaries, other navigational or guidance elements or a variety of other objects in proper perspective, to assist the driver.

- the mobile body is a person (or group of people) and the present invention assists the person in either staying on a prescribed path or collision avoidance or both.

- the system can display path boundaries, other navigational or guidance elements or a variety of other objects in proper perspective, to assist the walking person.

- FIG. 1 is a block diagram of a mobility assist device in accordance with one embodiment of the present invention.

- FIG. 2 is a more detailed block diagram of another embodiment of the mobility assist device.

- FIG. 3A is a partial-pictorial and partial block diagram illustrating operation of a mobility assist device in accordance with one embodiment of the present invention.

- FIG. 3B illustrates the concept of a combiner and virtual screen.

- FIGS. 3C, 3D and 3E are pictorial illustrations of a conformal, augmented projection and display in accordance with one embodiment of the present invention.

- FIGS. 3F, 3G, 3H and 3I are pictorial illustrations of an actual conformal, augmented display in accordance with an embodiment of the present invention.

- FIGS. 4A-4C are flow diagrams illustrating general operation of the mobility assist device.

- FIG. 5A illustrates coordinate frames used in accordance with one embodiment of the present invention.

- FIGS. 5B-1 to 5K-3 illustrate the development of a coordinate transformation matrix in accordance with one embodiment of the present invention.

- FIG. 6 is a side view of a vehicle employing the ranging system in accordance with one embodiment of the present invention.

- FIG. 7 is a flow diagram illustrating a use of the present invention in performing system diagnostics and improved radar processing.

- FIG. 8 is a pictorial view of a head up virtual mirror, in accordance with one embodiment of the present invention.

- FIG. 9 is a top view of one embodiment of a system used to obtain position information corresponding to a vehicle.

- FIG. 10 is a block diagram of another embodiment of the present invention.

- FIG. 1 is a simplified block diagram of one embodiment of driver assist device 10 in accordance with the present invention.

- Driver assist device 10 includes controller 12, vehicle location system 14, geospatial database 16, ranging system 18, operator interface 20 and display 22.

- controller 12 is a microprocessor, microcontroller, digital computer, or other similar control device having associated memory and timing circuitry. It should be understood that the memory can be integrated with controller 12, or be located separately therefrom. The memory, of course, may include random access memory, read only memory, magnetic or optical disc drives, tape memory, or any other suitable computer readable medium.

- Operator interface 20 is illustratively a keyboard, a touch-sensitive screen, a point and click user input device (e.g., a mouse), a keypad, a voice activated interface, joystick, or any other type of user interface suitable for receiving user commands, and providing those commands to controller 12, as well as providing a user viewable indication of operating conditions from controller 12 to the user.

- the operator interface may also include, for example, the steering wheel and the throttle and brake pedals suitably instrumented to detect the operator's desired control inputs of heading angle and speed.

- Operator interface 20 may also include, for example, an LCD screen, LEDs, a plasma display, a CRT, audible noise generators, or any other suitable operator interface display or speaker unit.

- vehicle location system 14 determines and provides to controller 12 a vehicle location signal indicative of the location of the vehicle in which driver assist device 10 is mounted.

- vehicle location system 14 can include a global positioning system receiver (GPS receiver) such as a differential GPS receiver, an earth reference position measuring system, a dead reckoning system (such as odometry and an electronic compass), an inertial measurement unit (such as accelerometers, inclinometers, or rate gyroscopes), etc.

- vehicle location system 14 periodically provides a location signal to controller 12 which indicates the location of the vehicle on the surface of the earth.

- Geospatial database 16 contains a digital map which digitally locates road boundaries, lane boundaries, possibly some landmarks (such as road signs, water towers, or other landmarks) and any other desired items (such as road barriers, bridges, etc.) and describes a precise location and attributes of those items on the surface of the earth.

- Because the earth is approximately spherical in shape, it is convenient to determine a location on the surface of the earth when the location values are expressed in terms of an angle from a reference point.

- Longitude and latitude are the most commonly used angles to express a location on the earth's surface or in orbits around the earth.

- Latitude is a measurement on a globe of location north or south of the equator, and longitude is a measurement of the location east or west of the prime meridian at Greenwich, the specifically designated imaginary north-south line that passes through both geographic poles of the earth and Greenwich, England.

- The combination of meridians of longitude and parallels of latitude establishes a framework or grid by means of which exact positions can be determined in reference to the prime meridian and the equator.

- Many of the currently available GPS systems provide latitude and longitude values as location data.

- One standard projection method is the Lambert Conformal Conic Projection Method. This projection method is extensively used in an ellipsoidal form for large scale mapping of regions of predominantly east-west extent, including topographic quadrangles for many of the U.S. state plane coordinate system zones, maps in the International Map of the World series and the U.S. State Base maps. The method uses well known, and publicly available, conversion equations to calculate state coordinate values from GPS receiver longitude and latitude angle data.
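
- The conversion from latitude and longitude to planar map coordinates can be sketched as follows. This is a minimal illustration that uses the simpler spherical form of the Lambert conformal conic projection, not the ellipsoidal form the text refers to, so it is not suitable for survey-grade state plane coordinates. The function name, the mean earth radius, and the origin and standard parallels in the usage note are assumptions chosen for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean earth radius; spherical simplification (assumption)


def lambert_conformal_conic(lat_deg, lon_deg, lat0_deg, lon0_deg,
                            lat1_deg, lat2_deg, radius=EARTH_RADIUS_M):
    """Project (lat, lon) to planar (x east, y north) in meters.

    Spherical Lambert conformal conic with origin (lat0, lon0) and two
    distinct standard parallels lat1 and lat2.
    """
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    lat0, lon0 = math.radians(lat0_deg), math.radians(lon0_deg)
    lat1, lat2 = math.radians(lat1_deg), math.radians(lat2_deg)

    def t(phi):
        return math.tan(math.pi / 4 + phi / 2)

    # Cone constant n and scaling factor f from the two standard parallels.
    n = math.log(math.cos(lat1) / math.cos(lat2)) / math.log(t(lat2) / t(lat1))
    f = math.cos(lat1) * t(lat1) ** n / n

    rho = radius * f / t(lat) ** n     # polar radius of the point
    rho0 = radius * f / t(lat0) ** n   # polar radius of the origin
    theta = n * (lon - lon0)           # polar angle from the central meridian

    return rho * math.sin(theta), rho0 - rho * math.cos(theta)
```

- For example, with a hypothetical origin at 45° N, 94° W and standard parallels at 44° N and 46° N, `lambert_conformal_conic(45.0, -94.0, 45.0, -94.0, 44.0, 46.0)` returns the origin `(0, 0)`, and points to the east or north of the origin map to positive `x` or `y` respectively.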

- the digital map stored in the geospatial database 16 contains a series of numeric location data of, for example, the center line and lane boundaries of a road on which system 10 is to be used, as well as construction data which is given by a number of shape parameters including starting and ending points of straight paths, the center of circular sections, and starting and ending angles of circular sections. While the present system is described herein in terms of starting and ending points of circular sections, it could be described in terms of starting and ending points and any curvature between those points. For example, a straight path can be characterized as a section of zero curvature. Each of these items is indicated by a parameter marker, which indicates the type of parameter it is, and has associated location data giving the precise geographic location of that point on the map.

- each road point of the digital map in database 16 was generated at uniform 10 meter intervals.

- the road points represent only the centerline of the road, and the lane boundaries are calculated from that centerline point.

- both the center line and lane boundaries are mapped.
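
- For the case where only the centerline is stored, deriving lane boundaries from centerline points can be sketched as below. This is an illustrative sketch under stated assumptions, not the method of the specification: it handles a straight section only, works in planar map coordinates in meters, and the function names and the `half_width` parameter are hypothetical.

```python
import math


def sample_straight_centerline(start, end, spacing=10.0):
    """Return centerline points every `spacing` meters from start toward end."""
    (x0, y0), (x1, y1) = start, end
    length = math.hypot(x1 - x0, y1 - y0)
    ux, uy = (x1 - x0) / length, (y1 - y0) / length  # unit direction of travel
    count = int(length // spacing) + 1
    return [(x0 + ux * spacing * i, y0 + uy * spacing * i) for i in range(count)]


def lane_boundaries(centerline, half_width):
    """Offset each centerline point left and right of the direction of travel.

    Needs at least two centerline points to estimate the local direction.
    """
    left, right = [], []
    last_segment = len(centerline) - 2
    for i, (x, y) in enumerate(centerline):
        j = min(i, last_segment)  # the final point reuses the last segment
        dx = centerline[j + 1][0] - centerline[j][0]
        dy = centerline[j + 1][1] - centerline[j][1]
        norm = math.hypot(dx, dy)
        nx, ny = -dy / norm, dx / norm  # unit normal pointing left of travel
        left.append((x + nx * half_width, y + ny * half_width))
        right.append((x - nx * half_width, y - ny * half_width))
    return left, right
```

- A 30 meter straight section sampled at the 10 meter interval mentioned above yields four centerline points, and `lane_boundaries(points, 3.6)` returns the matching left and right boundary points offset by 3.6 meters (an assumed value).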

- geospatial database 16 also illustratively contains the exact location data indicative of the exact geographical location of street signs and other desirable landmarks.

- Database 16 can be obtained by manual mapping operations or by a number of automated methods such as, for example, placing a GPS receiver on the lane stripe paint spraying nozzle or tape laying mandrel to continuously obtain locations of lane boundaries.

- Ranging system 18 is configured to detect targets in the vicinity of the vehicle in which system 10 is implemented, and also to detect a location (such as range, range rate and azimuth angle) of the detected targets, relative to the vehicle.

- Targets are illustratively objects which must be monitored because they may collide with the mobile body either due to motion of the body or of the object.
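
- How a range, range rate and azimuth measurement might be turned into a map position and a rough collision estimate can be sketched as follows. The angle conventions (heading measured counterclockwise from the +x map axis, azimuth positive to the left of the vehicle's forward axis, negative range rate for a closing target) and the function names are assumptions for illustration; a real sensor's conventions may differ.

```python
import math


def target_to_map(range_m, azimuth_deg, vehicle_x, vehicle_y, heading_deg):
    """Convert a vehicle-relative (range, azimuth) detection to map (x, y)."""
    bearing = math.radians(heading_deg + azimuth_deg)
    return (vehicle_x + range_m * math.cos(bearing),
            vehicle_y + range_m * math.sin(bearing))


def time_to_collision(range_m, range_rate_mps):
    """Seconds until range reaches zero, or None if the target is not closing."""
    if range_rate_mps >= 0:
        return None
    return range_m / -range_rate_mps
```

- Under these conventions, a target 50 meters straight ahead of a vehicle at (100, 200) heading along +y is placed at approximately (100, 250), and a closing rate of 10 m/s gives a time to collision of 5 seconds.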

- ranging system 18 is a radar system commercially available from Eaton Vorad.

- ranging system 18 can also include a passive or active infrared system (which could also provide the amount of heat emitted from the target) or laser based ranging system, or a directional ultrasonic system, or other similar systems.

- Another embodiment of system 18 is an infrared sensor calibrated to obtain a scaling factor for range, range rate and azimuth which is used for transformation to an eye coordinate system.

- Display 22 includes a projection unit and one or more combiners which are described in greater detail later in the specification.

- the projection unit receives a video signal from controller 12 and projects video images onto one or more combiners.

- the projection unit illustratively includes a liquid crystal display (LCD) matrix and a high-intensity light source similar to a conventional video projector, except that it is small so that it fits near the driver's seat space.

- the combiner is a partially-reflective, partially transmissive beam splitter formed of optical glass or polymer for reflecting the projected light from the projection unit back to the driver.

- the combiner is positioned such that the driver looks through the combiner, when looking through the forward-looking visual field, so that the driver can see both the actual outside road scene, as well as the computer generated images projected onto the combiner.

- the computer-generated images substantially overlay the actual images.

- combiners or other similar devices can be placed about the driver to cover substantially all fields of view or be implemented in the glass of the windshield and windows. This can illustratively be implemented using a plurality of projectors or a single projector with appropriate optics to scan the projected image across the appropriate fields of view.

- FIG. 2 illustrates that controller 12 may actually be formed of first controller 24 and second controller 26 (or any number of controllers with processing distributed among them, as desired).

- first controller 24 performs the primary data processing functions with respect to sensory data acquisition, and also performs database queries in the geospatial database 16. This entails obtaining velocity and heading information from GPS receiver and correction system 28.

- First controller 24 also performs processing of the target signal from radar ranging system 18 .

- FIG. 2 also illustrates that vehicle location system 14 may illustratively include a differential GPS receiver and correction system 28 as well as an auxiliary inertial measurement unit (IMU) 30 (although other approaches would also work).

- Second controller 26 processes signals from auxiliary IMU 30, where necessary, and handles graphics computations for providing the appropriate video signal to display 22.

- differential GPS receiver and correcting system 28 is illustratively a Novatel RT-20 differential GPS (DGPS) system with 20-centimeter accuracy operating at a 5 Hz sampling rate, or a Trimble MS 750 with 2-centimeter accuracy operating at a 10 Hz sampling rate.
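
- The sampling rates above bound how far the vehicle travels between position fixes, which is one reason velocity, heading and inertial data are useful alongside the position signal. The arithmetic, and a simple constant-velocity extrapolation between fixes, can be sketched as below. The extrapolation is an illustrative assumption, not a procedure stated in this section, and the function names and heading convention (counterclockwise from the +x map axis) are hypothetical.

```python
import math


def distance_between_fixes(speed_mps, sample_rate_hz):
    """Distance traveled during one sampling interval."""
    return speed_mps / sample_rate_hz


def extrapolate_position(x, y, speed_mps, heading_deg, dt_s):
    """Dead-reckon from the last fix assuming constant speed and heading."""
    heading = math.radians(heading_deg)
    return (x + speed_mps * math.cos(heading) * dt_s,
            y + speed_mps * math.sin(heading) * dt_s)
```

- At 25 m/s (about 56 mph), a 5 Hz receiver reports a new position every 5 meters of travel and a 10 Hz receiver every 2.5 meters.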

- FIG. 2 also illustrates that system 10 can include optional vehicle orientation detection system 31 and head tracking system 32.

- Vehicle orientation detection system 31 detects the orientation (such as roll and pitch) of the vehicle in which system 10 is implemented.

- the roll angle refers to the rotational orientation of the vehicle about its longitudinal axis (which is parallel to its direction of travel).

- the roll angle can change, for example, if the vehicle is driving over a banked road, or on uneven terrain.

- the pitch angle is the angle that the vehicle makes in a vertical plane along the longitudinal direction. The pitch angle becomes significant if the vehicle is climbing up or descending down a hill. Taking into account the pitch and roll angles can make the projected image more accurate, and more closely conform to the actual image seen by the driver.
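
- Accounting for roll and pitch amounts to rotating vectors between the vehicle frame and a level frame. A minimal sketch follows, assuming a right-handed vehicle frame with x forward along the longitudinal axis, y to the left and z up, and applying roll before pitch. The axis assignment, rotation order, sign conventions and function name are all assumptions for illustration.

```python
import math


def rotate_body_to_level(vector, roll_deg, pitch_deg):
    """Rotate a vehicle-frame vector by roll (about x), then pitch (about y)."""
    x, y, z = vector
    r, p = math.radians(roll_deg), math.radians(pitch_deg)

    # Roll: right-handed rotation about the longitudinal (x) axis.
    y, z = (y * math.cos(r) - z * math.sin(r),
            y * math.sin(r) + z * math.cos(r))

    # Pitch: right-handed rotation about the lateral (y) axis. With x forward,
    # y left and z up, a positive angle here tips the forward axis downward.
    x, z = (x * math.cos(p) + z * math.sin(p),
            -x * math.sin(p) + z * math.cos(p))

    return (x, y, z)
```

- Both rotations preserve vector length, so a point's distance from the vehicle is unchanged and only its direction is corrected.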

- Optional head tracking system 32 can be provided to accommodate movements in the driver's head or eye position relative to the vehicle.

- the actual head and eye position of the driver is not monitored.

- the dimensions of the cab or operator compartment of the vehicle in which system 10 is implemented are taken and used, along with ergonomic data, such as the height and eye position of an operator, given the dimension of the operator compartment, and the image is projected on display 22 such that the displayed images will substantially overlie the actual images for an average operator.

- Specific measurements can be taken for any given operator as well, such that such a system can more closely conform to any given operator.

- Head tracking system 32 tracks the position of the operator's head, and eyes, in real time.

- FIGS. 3A-3E better illustrate the display of information on display 22.

- FIG. 3A illustrates that display 22 includes projector 40 and combiner 42.

- FIG. 3A also illustrates an operator 44 sitting in an operator compartment which includes seat 46 and which is partially defined by windshield 48.

- Projector 40 receives the video display signal from controller 12 and projects road data onto combiner 42.

- Combiner 42 is partially reflective and partially transmissive. Therefore, the operator looks forward through combiner 42 and windshield 48 to a virtual focal plane 50.

- the road data (such as lane boundaries) are projected from projector 40 in proper perspective onto combiner 42 such that the lane boundaries appear to substantially overlie those which the operator actually sees, in the correct perspective. In this way, when the operator's view of the actual lane boundaries becomes obstructed, the operator can safely maintain lane keeping because the operator can navigate by the projected lane boundaries.

- FIG. 3A also illustrates that combiner 42, in one illustrative embodiment, is hinged to an upper surface or side surface or other structural part 52 of the operator compartment. Therefore, combiner 42 can be pivoted along an arc generally indicated by arrow 54, up and out of the view of the operator, on days when no driver assistance is needed, and down to the position shown in FIG. 3A, when the operator desires to look through combiner 42.

- FIG. 3B better illustrates combiner 42, windshield 48 and virtual screen or focal plane 50.

- Combiner 42, while being partially reflective, is essentially a transparent, optically correct, coated glass or polymer lens. Light reaching the eyes of operator 44 is a combination of light passing through the lens and light reflected off of the lens from the projector. With an unobstructed forward-looking visual field, the driver actually sees two images accurately superimposed together. The image passing through the combiner 42 comes from the actual forward-looking field of view, while the reflected image is generated by the graphics processor portion of controller 12.

- the optical characteristics of combiner 42 allow the combination of elements to generate the virtual screen, or virtual focal plane 50, which is illustratively projected to appear approximately 30-80 feet ahead of combiner 42. This feature results in a virtual focus in front of the vehicle, and ensures that the driver's eyes are not required to focus back and forth between the real image and the virtual image, thus reducing eyestrain and fatigue.

- combiner 42 is formed such that the visual image size spans approximately 30° along a horizontal axis and 15° along a vertical axis with the projector located approximately 18 inches from the combiner.
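
- The geometry implied by a 30° by 15° visual image can be sketched as below: the apparent size of the virtual screen at a given distance, and a simple perspective mapping from a point in front of the eye to display pixels. This is a pinhole-style sketch under stated assumptions, not the coordinate transformation developed in the specification. The eye frame (x right, y up, z forward), the 640 by 320 pixel raster and the function names are hypothetical.

```python
import math

H_FOV_DEG = 30.0  # horizontal extent of the visual image, from the text
V_FOV_DEG = 15.0  # vertical extent of the visual image, from the text


def virtual_screen_size(distance, h_fov_deg=H_FOV_DEG, v_fov_deg=V_FOV_DEG):
    """Width and height covered by the field of view at `distance` (same units)."""
    width = 2.0 * distance * math.tan(math.radians(h_fov_deg) / 2.0)
    height = 2.0 * distance * math.tan(math.radians(v_fov_deg) / 2.0)
    return width, height


def project_to_display(point_eye, width_px=640, height_px=320):
    """Map an eye-frame point (x right, y up, z forward) to pixel (u, v).

    Returns None when the point is behind the eye or outside the field of view.
    """
    x, y, z = point_eye
    if z <= 0:
        return None
    nx = (x / z) / math.tan(math.radians(H_FOV_DEG) / 2.0)  # -1 .. 1 across
    ny = (y / z) / math.tan(math.radians(V_FOV_DEG) / 2.0)  # -1 .. 1 up
    if abs(nx) > 1.0 or abs(ny) > 1.0:
        return None
    u = (nx + 1.0) / 2.0 * (width_px - 1)
    v = (1.0 - ny) / 2.0 * (height_px - 1)  # pixel rows grow downward
    return u, v
```

- At a virtual screen distance of 50 feet, for instance, `virtual_screen_size(50.0)` gives a screen roughly 26.8 feet wide and 13.2 feet tall, and a point straight ahead of the eye maps to the center of the raster.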

- Another embodiment is a helmet-supported visor (or eyeglass device) on which images are projected, through which the driver can still see.

- Such displays might include technologies such as those available from Kaiser Electro-Optics, Inc. of Carlsbad, Calif., The MicroOptical Corporation of Westwood, Mass., Universal Display Corporation of Ewing, N.J., Microvision, Inc. of Bothell, Wash. and IODisplay System LLC of Menlo Park, Calif.

- FIGS. 3C and 3D are illustrative displays from projector 40 which are projected onto combiner 42.

- the left most line is the left side road boundary.

- the dotted line corresponds to the centerline of a two-way road, while the right most curved line, with vertical poles, corresponds to the right-hand side road boundary.

- the gray circle near the center of the image shown in FIG. 3C corresponds to a target detected and located by ranging system 18, described in greater detail later in the application.

- the gray shape need not be a circle but could be any icon or shape and could be transparent, opaque or translucent.

- the screens illustrated in FIGS. 3C and 3D can illustratively be projected in the forward-looking visual field of the driver by projecting them onto combiner 42 with the correct scale so that objects (including the painted line stripes and road boundaries) in the screen are superimposed on the actual objects in the outer scene observed by the driver.

- the black areas on the screens illustrated in FIGS. 3C and 3D appear transparent on combiner 42 under typical operating conditions. Only the brightly colored lines appear on the virtual image that is projected onto combiner 42.

- while the thickness and colors of the road boundaries illustrated in FIGS. 3C and 3D can be varied as desired, they are illustratively white lines that are approximately 1-5 pixels thick, and the center line is also white and approximately 1-5 pixels thick.

- FIG. 3E illustrates a virtual image projected onto an actual image as seen through combiner 42 by the driver.

- the outline of combiner 42 can be seen in the illustration of FIG. 3E and the area 60 which includes the projected image has been outlined in FIG. 3E for the sake of clarity, although no such outline actually appears on the display.

- the display generated is a conformal, augmented display which is highly useful in low-visibility situations.

- Geographic landmarks are projected onto combiner 42 and are aligned with the view out of the windshield.

- Fixed roadside signs i.e., traditional speed limit signs, exit information signs, etc.

- Data supporting fixed signage and other fixed items projected onto the display are retrieved from geospatial database 16 .

- FIGS. 3F-3H are pictorial illustrations of actual displays.

- FIG. 3F illustrates two vehicles in close proximity to the vehicle on which system 10 is deployed. It can be seen that the two vehicles have been detected by ranging system 18 (discussed in greater detail below) and have icons projected thereover.

- FIG. 3G illustrates a vehicle more distant than those in FIG. 3F.

- FIG. 3G also shows line boundaries which are projected over the actual boundaries.

- FIG. 3H shows even more distant vehicles and also illustrates objects around an intersection. For example, right turn lane markers are shown displayed over the actual lane boundaries.

- variable road signs such as stoplights, caution lights, railroad crossing warnings, etc.

- processor 12 determines, based on access to the geospatial database, that a variable sign is within the normal viewing distance of the vehicle.

- a radio frequency (RF) receiver for instance

- Processor 12 then proceeds to project the variable sign information to the driver on the projector.

- this can take any desirable form. For instance, a stop light with a currently red light can be projected, such that it overlies the actual stoplight and such that the red light is highly visible to the driver.

- Other suitable information and display items can be implemented as well.

- text of signs or road markers can be enlarged to assist drivers with poor night vision.

- Items outside the driver's field of view can be displayed (e.g., at the top or sides of the display) to give the driver information about objects out of view.

- Such items can be fixed or transitionary objects or in the nature of advertising such as goods or services available in the vicinity of the vehicle.

- Such information can be included in the geospatial database and selectively retrieved based on vehicle position.

- Directional signs can also be incorporated into the display to guide the driver to a destination (such as a rest area or hotel), as shown in FIG. 3I. It can be seen that the directional arrows are superimposed directly over the lane.

- database 16 can be stored locally on the vehicle or queried remotely. Also, database 16 can be periodically updated (either remotely or directly) with a wide variety of information such as detour or road construction information or any other desired information.

- Transitory obstacles also referred to herein as unexpected targets

- Transitory obstacle information indicative of such transitory targets or obstacles is derived from ranging system 18 .

- Transitory obstacles are distinguished from conventional roadside obstacles (such as road signs, etc.) by processor 12 .

- Processor 12 senses an obstacle from the signal provided by ranging system 18 .

- Processor 12 determines whether the target indicated by ranging system 18 actually corresponds to a conventional, expected roadside obstacle which has been mapped into database 16 .

- the transitory targets basically represent items which are not in a fixed location during normal operating conditions on the roadway.

- Such objects can include water towers, trees, bridges, road dividers, other landmarks, etc.

- Such indicators can also be warnings or alarms, such as not to turn the wrong way on a one-way road or an off ramp, or that the vehicle is approaching an intersection or work zone at too high a rate of speed.

- the combiner can perform other tasks as well.

- Such tasks can include the display of blocking templates which block out or reduce glare from the sun or headlights from other cars. The location of the sun can be computed from the time, and its position relative to the driver can also be computed (the same is true for cars). Therefore, an icon can simply be displayed to block the undesired glare.

- the displays can be integrated with other operator perceptible features, such as a haptic feedback, sound, seat or steering wheel vibration, etc.

- FIGS. 4A-4C illustrate the operation of system 10 in greater detail.

- FIG. 4A is a functional block diagram of a portion of system 10 illustrating software components and internal data flow throughout system 10 .

- FIG. 4B is a simplified flow diagram illustrating operation of system 10

- FIG. 4C is a simplified flow diagram illustrating target filtering in accordance with one embodiment of the present invention.

- It is first determined whether system 10 is receiving vehicle location information from its primary vehicle location system. This is indicated by block 62 in FIG. 4B.

- this signal may be temporarily lost. The signal may be lost, for instance, when the vehicle goes under a bridge, or simply goes through a pocket or area where GPS or correction signals cannot be received or are distorted. If the primary vehicle location signal is available, that signal is received as indicated by block 64. If not, system 10 accesses information from auxiliary inertial measurement unit 30.

- Auxiliary IMU 30 may, illustratively, be complemented by a dead reckoning system which utilizes the last known position provided by the GPS receiver, as well as speed and angle information, in order to determine a new position. Receiving the location signal from auxiliary IMU 30 is illustrated by block 66.
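
The dead reckoning step can be sketched as follows. This is only an illustration, assuming a flat east/north frame, a heading measured clockwise from true north, and a constant speed over the time step; the function name `dead_reckon` and its parameters are hypothetical and do not come from the specification.

```python
import math

def dead_reckon(x_east, y_north, speed_mps, heading_rad, dt_s):
    """Advance the last known position by one time step.

    heading_rad is measured clockwise from true north (an assumption),
    so the east component uses sin() and the north component uses cos().
    """
    distance = speed_mps * dt_s
    return (x_east + distance * math.sin(heading_rad),
            y_north + distance * math.cos(heading_rad))
```

For example, a vehicle heading due north at 10 m/s moves 10 m along the north axis in one second.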

- system 10 also optionally receives head or eye location information, as well as optional vehicle orientation data.

- vehicle orientation information can be obtained from a roll rate gyroscope 68 to obtain the roll angle, and a tilt sensor 70 (such as an accelerometer) to obtain the pitch angle as well as a yaw rate sensor 69 to obtain yaw angle 83 .

- obtaining the head or eye location data and the vehicle orientation data are illustrated by optional blocks 72 and 74 in FIG. 4B.

- the optional driver's eye data is illustrated by block 76 in FIG. 4A

- the vehicle location data is indicated by block 78

- the pitch and roll angles are indicated by blocks 80 and 82 , respectively.

- a coordinate transformation matrix is constructed, as described in greater detail below, from the location and heading angle of the moving vehicle, and from the optional driver's head or eye data and vehicle orientation data, where that data is sensed.

- the location data is converted into a local coordinate measurement using the transformation matrix, and is then fed into the perspective projection routines to calculate and draw the road shape and target icons in the computer's graphic memory.

- the road shape and target icons are then projected as a virtual view in the driver's visual field, as illustrated in FIG. 3B above.

- the coordinate transformation block transforms the coordinate frame of the digital map from the global coordinate frame to the local coordinate frame.

- the local coordinate frame is a moving coordinate frame that is illustratively attached to the driver's head.

- the coordinate transformation is illustratively performed by multiplying the road data points by a four-by-four homogeneous transformation matrix, although any other coordinate system transformation can be used, such as a quaternion or other approach. Because the vehicle keeps moving, the matrix must be updated in real time. Movement of the driver's eye that is included in the matrix is also measured and fed into the matrix calculation in real time. Where no head tracking system 32 is provided, the head angle and position of the driver's eyes are assumed to be constant and the driver is assumed to be looking forward from a nominal position.

- the heading angle of the vehicle is estimated from the past history of the GPS location data.
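
One simple way to estimate heading from position history is to take the direction between the two most recent fixes. The sketch below assumes fixes are already expressed as (east, north) pairs in meters and that heading is measured clockwise from true north; the name `heading_from_fixes` is hypothetical, and a practical system would likely smooth over more than two fixes.

```python
import math

def heading_from_fixes(fixes):
    """Estimate heading in radians (clockwise from true north) from the
    two most recent (east, north) position fixes."""
    (x0, y0), (x1, y1) = fixes[-2], fixes[-1]
    dx, dy = x1 - x0, y1 - y0
    if dx == 0 and dy == 0:
        return None  # vehicle has not moved; heading is undefined
    # atan2(east, north) gives the angle clockwise from north.
    return math.atan2(dx, dy) % (2 * math.pi)
```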

- a rate gyroscope can be used to determine vehicle heading as well.

- An absolute heading angle is used in computing the correct coordinate transformation matrix.

- any other suitable method to measure an absolute heading angle can be used as well, such as a magnetometer (electronic compass) or an inertial measurement unit. Further, where pitch and roll sensors are not used, these angles can be assumed to be 0.

- the ranging information from ranging system 18 is also received by controller 12 (shown in FIG. 2). This is indicated by blocks 83 in FIG. 4A and by block 86 in FIG. 4B.

- the ranging data illustratively indicates the presence and location of targets around the vehicle.

- the radar ranging system 18 developed and available from Eaton Vorad, or Delphi, Celsius Tech, or other vendors provides a signal indicative of the presence of a radar target, its range, its range rate and the azimuth angle of that target with respect to the radar apparatus.
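
A range and azimuth measurement can be converted to a position in the vehicle frame with basic trigonometry. The sketch below assumes azimuth is measured clockwise from the forward (y) axis of the vehicle and that the radar mounting offset `mount_xy` is known; both the sign convention and the names are assumptions for illustration, not details of any vendor's interface.

```python
import math

def radar_to_vehicle(range_m, azimuth_rad, mount_xy=(0.0, 0.0)):
    """Convert a radar (range, azimuth) return to (x, y) in the vehicle frame.

    x points to the right and y points forward. Azimuth is assumed to be
    measured clockwise from the forward axis.
    """
    mx, my = mount_xy
    return (mx + range_m * math.sin(azimuth_rad),
            my + range_m * math.cos(azimuth_rad))
```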

- controller 12 queries the digital road map in geospatial database 16 and extracts local road data 88 .

- the local road data provides information with respect to road boundaries as seen by the operator in the position of the vehicle, and also other potential radar targets, such as road signs, road barriers, etc.

- Accessing geospatial database 16 (which can be stored on the vehicle and receive periodic updates or can be stored remotely and accessed wirelessly) is indicated by block 90 in FIG. 4B.

- Controller 12 determines whether the targets indicated by target data 83 are expected targets. Controller 12 does this by examining the information in geospatial database 16. In other words, if the targets correspond to road signs, road barriers, bridges, or other items which would provide a radar return to ranging system 18, but which are expected because they are mapped into database 16 and do not need to be brought to the attention of the driver, that information can be filtered out such that the driver is not alerted to every single possible item on the road which would provide a radar return. Certain objects may a priori be programmed to be brought to the attention of the driver. Such items may be guard rails, bridge abutments, etc., and the filtering can be selective, as desired.

- all filtering can be turned off so all objects are brought to the driver's attention.

- the driver can change filtering based on substantially any predetermined filtering criteria, such as distance from the road or driver, location relative to the road or the driver, whether the objects are moving or stationary, or substantially any other criteria.

- criteria can be invoked by the user through the user interface, or they can be pre-programmed into controller 12 .

- the target information is determined to correspond to an unexpected target, such as a moving vehicle ahead of the vehicle on which system 10 is implemented, such as a stalled car or a pedestrian on the side of the road, or some other transitory target which has not been mapped to the geospatial database as a permanent, or expected target. It has been found that if all expected targets are brought to the operator's attention, this substantially amounts to noise such that when real targets are brought to the operator's attention, they are not as readily perceived by the operator. Therefore, filtering of targets not posing a threat to the driver is performed as is illustrated by block 92 in FIG. 4B.

- the frame transformation is performed using the transformation matrix.

- the result of the coordinate frame transformation provides the road boundary data, as well as the target data, seen from the driver's eye perspective.

- the road boundary and target data is output, as illustrated by block 94 in FIG. 4B, and as indicated by block 96 in FIG. 4A.

- the road and target shapes are generated by processor 12 for projection in the proper perspective.

- the actual image projected is clipped such that it only includes that part of the road which would be visible by the operator with an unobstructed forward-looking visual field. Clipping is described in greater detail below, and is illustrated by block 104 in FIG. 4A. The result of the entire process is the projected road and target data as illustrated by block 106 in FIG. 4A.

- FIG. 4C is a more detailed flow diagram illustrating how targets are projected or filtered from the display.

- It is first determined whether ranging system 18 is providing a target signal indicating the presence of a target. This is indicated by block 108. If so, then when controller 12 accesses geospatial database 16, controller 12 determines whether sensed targets correlate to any expected targets. This is indicated by block 110. If so, the expected targets are filtered from the sensed targets.

- ranging system 18 may provide an indication of a plurality of targets at any given time. In that case, only the expected targets are filtered from the target signal. This is indicated by block 112 . If any targets remain, other than the expected targets, the display signal is generated in which the unexpected, or transitory, targets are placed conformally on the display. This is indicated by block 114 .
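
The filtering of expected targets can be sketched as a nearest-match test against the mapped objects. This is a minimal illustration, assuming sensed and mapped positions are (x, y) pairs in the same frame and that a fixed distance tolerance decides a match; the name `filter_expected` and the 2 m default are hypothetical.

```python
import math

def filter_expected(sensed, expected, tolerance_m=2.0):
    """Return the sensed targets that match no mapped (expected) object.

    What remains are the unexpected, or transitory, targets that would
    be placed on the display.
    """
    def near(a, b):
        return math.hypot(a[0] - b[0], a[1] - b[1]) <= tolerance_m

    return [t for t in sensed if not any(near(t, e) for e in expected)]
```

A radar return close to a mapped road sign is dropped, while a return with no mapped counterpart is kept for display.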

- the display signal is also configured such that guidance markers (such as lane boundaries, lane striping or road edges) are also placed conformally on the display. This is indicated by block 116.

- the display signal is then output to the projector such that the conformal, augmented display is provided to the user. This is indicated by block 118 .

- the term “conformal” is used herein to indicate that the “virtual image” generated by the present system projects images represented by the display in a fashion such that they are substantially aligned, and in proper perspective with, the actual images which would be seen by the driver, with an unobstructed field of view.

- augmented means that the actual image perceived by the operator is supplemented by the virtual image projected onto the head up display. Therefore, even if the driver's forward-looking visual field is obstructed, the augmentation allows the operator to receive and process information, in the proper perspective, as to the actual objects which would be seen with an unobstructed view.

- In FIG. 5A, the global coordinate frame is illustrated by the axes 120. All distances and angles are measured about these axes.

- FIG. 5A also shows vehicle 124 , with the vehicle coordinate frame represented by axes 126 and the user's eye coordinate frame (also referred to as the graphic screen coordinate frame) illustrated by axes 128 .

- FIG. 5A also shows road point data 130 , which illustrates data corresponding to the center of road 132 .

- the capital letters “X”, “Y” and “Z” in this description are used as names of each axis.

- the positive Y-axis is the direction to true north

- the positive X-axis is the direction to true east in global coordinate frame 120 .

- Compass 122 is drawn to illustrate that the Y-axis of global coordinate frame 120 points due north.

- the elevation is defined by the Z-axis and is used to express elevation of the road shape and objects adjacent to, or on, the road.

- All of the road points 130 stored in the road map file in geospatial database 16 are illustratively expressed in terms of the global coordinate frame 120 .

- the vehicle coordinate frame 126 (V) is defined and used to express the vehicle configuration data, including the location and orientation of the driver's eye within the operator compartment, relative to the origin of the vehicle.

- the vehicle coordinate frame 126 is attached to the vehicle and moves as the vehicle moves.

- the origin is defined as the point on the ground under the location of the GPS receiver antenna. Everything in the vehicle is measured from the ground point under the GPS antenna. Other points, such as located on a vertical axis through the GPS receiver antenna or at any other location on the vehicle, can also be selected.

- the forward moving direction is defined as the positive y-axis.

- the direction to the right when the vehicle is moving forward is defined as the positive x-axis

- the vertical upward direction is defined as the positive z-axis which is parallel to the global coordinate frame Z-axis.

- the yaw angle, i.e. heading angle, of the vehicle is measured from true north, and has a positive value in the clockwise direction (since the positive z-axis points upward).

- the pitch angle is measured about the x-axis in coordinate frame 126 and the roll angle is measured as a rotation about the y-axis in coordinate frame 126 .

- the local L-coordinate frame 128 is defined and used to express the road data relative to the viewer's location and direction.

- the coordinate system 128 is also referred to herein as the local coordinate frame. Even though the driver's eye location and orientation may be assumed to be constant (where no head tracking system 32 is used), the global information still needs to be converted into the eye-coordinate frame 128 for calculating the perspective projection.

- the location of the eye i.e. the viewing point, is the origin of the local coordinate frame.

- the local coordinate frame 128 is defined with respect to the vehicle coordinate frame.

- the relative location of the driver's eye from the origin of the vehicle coordinate frame is measured and used in the coordinate transformation matrix described in greater detail below.

- the directional angle information from the driver's line of sight is used in constructing the projection screen. This angle information is also integrated into the coordinate transformation matrix.

- the objects in the outer world are drawn on a flat two-dimensional video projection screen which corresponds to the virtual focal plane, or virtual screen 50 perceived by human drivers.

- the virtual screen coordinate frame has only two axes.

- the positive x-axis of the screen is defined to be the same as the positive x-axis of the vehicle coordinate frame 126 for ease in coordinate conversion.

- the upward direction in the screen coordinate frame is the same as the positive z-axis and the forward-looking direction (or distance to the objects located on the visual screen) is the positive y-axis.

- the positive x-axis and the y-axis in the virtual projection screen 50 are mapped to the positive x-axis and the negative y-axis in computer memory space, because the upper left corner is deemed to be the beginning of the video memory.
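
The mapping from the virtual screen to video memory can be sketched as a shift and a vertical flip. The example assumes the virtual screen origin is at the screen center and that pixel (0, 0) is the upper left corner; the function name and parameters are hypothetical.

```python
def screen_to_pixel(s_x, s_z, screen_w, screen_h, pixels_w, pixels_h):
    """Map virtual-screen coordinates (origin at screen center, z up)
    to (column, row) pixel coordinates (origin at upper left, row down)."""
    col = (s_x + screen_w / 2) / screen_w * pixels_w
    row = (screen_h / 2 - s_z) / screen_h * pixels_h  # flip the vertical axis
    return int(round(col)), int(round(row))
```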

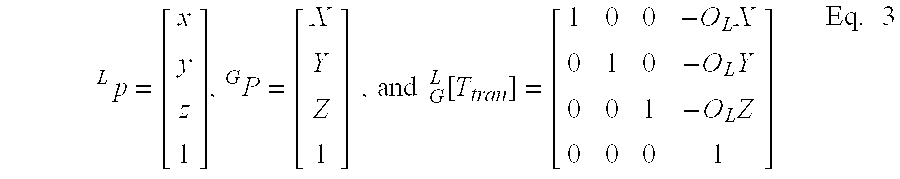

- Road data points including the left and right edges, which are expressed with respect to the global coordinate frame $\{G\}$ as $P_k$, shown in FIG. 5B-1, are converted into the local coordinate frame $\{L\}$ which is attached to the moving vehicle 124 coordinate frame $\{V\}$. Its origin ($O_V$) and direction ($\theta_V$) are changing continually as the vehicle 124 moves.

- the origin ($O_L$) of the local coordinate frame $\{L\}$, i.e. driver's eye location, and its orientation ($\theta_E$) change as the driver moves his or her head and eyeballs.

- a homogeneous transformation matrix [T] was defined and used to convert the global coordinate data into local coordinate data.

- the matrix [T] is developed illustratively, as follows.

- the parameters in FIGS. 5B-1 and 5B-2 are as follows:

- $P_k$ is the k-th road point

- $O_G$ is the origin of the global coordinate frame

- $O_V$ is the origin of the vehicle coordinate frame with respect to the global coordinate frame

- $O_E$ is the origin of the local eye-attached coordinate frame.

- Any point in 3-dimensional space can be expressed in terms of either a global coordinate frame or a local coordinate frame. Because everything seen by the driver is defined with respect to his or her location and viewing direction (i.e. the relative geometrical configuration between the viewer and the environment), all of the viewable environment should be expressed in terms of a local coordinate frame. Then, any objects or line segments can be projected onto a flat surface or video screen by means of the perspective projection. Thus, the mathematical calculation of the coordinate transformation is performed by constructing the homogeneous transformation matrix and applying the matrix to the position vectors.

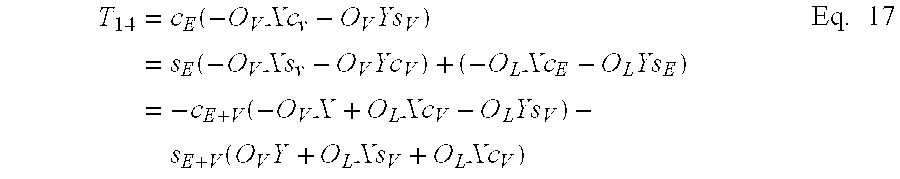

- the coordinate transformation matrix [T] is defined as a result of the multiplication of a number of matrices described in the following paragraphs.

- the letter ${}^{G}P$ is a point in terms of coordinates X, Y, Z as referenced from the global coordinate system.

- the letter ${}^{L}P$ represents the same point in terms of x, y, z in the local coordinate system.

- the transformation matrix ${}^{L}_{G}[T_{tran}]$ allows for a translational transformation from the global G coordinate system to the local L coordinate system.

- $$\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix} = \begin{bmatrix} \cos\theta & \sin\theta & 0 & 0 \\ -\sin\theta & \cos\theta & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix} \qquad \text{Eq. 5}$$

- $$[T] = \begin{bmatrix} C_E & S_E & 0 & -O_{LX} C_E - O_{LY} S_E \\ -S_E & C_E & 0 & +O_{LX} S_E - O_{LY} C_E \\ 0 & 0 & 1 & -O_{LZ} \\ 0 & 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} C_V & S_V & 0 & -O_{VX} C_V - O_{VY} S_V \\ -S_V & C_V & 0 & +O_{VX} S_V - O_{VY} C_V \\ 0 & 0 & 1 & -O_{VZ} \\ 0 & 0 & 0 & 1 \end{bmatrix} \qquad \text{Eq. 11}$$

- $$T_{13} = 0 \qquad \text{Eq. 16}$$

- $$T_{23} = 0 \qquad \text{Eq. 20}$$
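
The composition in Eq. 11 can be sketched in a few lines of plain Python. The example reads C and S as the cosine and sine of the corresponding yaw angle (an assumption here) and builds each factor as a translation by the negated origin followed by a rotation about the vertical axis, in the form of Eq. 5. The names `stage`, `matmul`, `apply_matrix` and `global_to_local` are hypothetical.

```python
import math

def stage(theta, origin):
    """One factor of Eq. 11: translate by -origin, then rotate by theta about z.

    C and S are taken to be cos(theta) and sin(theta) (an assumption).
    """
    c, s = math.cos(theta), math.sin(theta)
    ox, oy, oz = origin
    return [
        [c, s, 0.0, -ox * c - oy * s],
        [-s, c, 0.0, ox * s - oy * c],
        [0.0, 0.0, 1.0, -oz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def matmul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def apply_matrix(t, point):
    """Apply a 4x4 homogeneous matrix to a 3-D point."""
    v = (point[0], point[1], point[2], 1.0)
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))

def global_to_local(theta_v, origin_v, theta_e, origin_e):
    """Eye-frame factor times vehicle-frame factor, as in Eq. 11."""
    return matmul(stage(theta_e, origin_e), stage(theta_v, origin_v))
```

With both angles zero, a vehicle origin of (10, 20, 0) and an eye offset of (0, 0, 1.2), the global point (10, 25, 1.2) maps to (0, 5, 0) in the local frame. The product also has zero entries at row 1, column 3 and row 2, column 3, in agreement with Eq. 16 and Eq. 20.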

- the display screen is the virtual focal plane.

- the display screen is the plane located at position $S_y$, parallel to the z-x plane, where $s_x$ and $s_z$ are the horizontal and vertical dimensions of the display screen.

- When the object is projected onto the screen, it should be projected with the correct perspective so that the projected images match the outer scene.

- the head up display system should match the drawn road shapes (exactly or at least closely) to the actual road which is in front of the driver.

- the perspective projection makes closer objects appear larger and further objects appear smaller.

- the perspective projection can be calculated from triangle similarity as shown in FIGS. 5G to 5H-2. From the figures, one can find the location of the point s(x,z) for the known data p(x,y,z).
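
The similar-triangle relation can be written out directly. The sketch below assumes the eye is at the origin of the local frame, y is the forward distance, and the screen lies at distance `screen_y` from the eye, so a point at depth y is scaled by `screen_y / y`. The function name `project` is hypothetical.

```python
def project(point, screen_y):
    """Project a local-frame point p(x, y, z) onto the screen plane y = screen_y.

    By similar triangles, s_x / screen_y = x / y and s_z / screen_y = z / y.
    """
    x, y, z = point
    if y <= 0:
        raise ValueError("point must be in front of the eye (y > 0)")
    scale = screen_y / y
    return x * scale, z * scale
```

Doubling the distance y halves the projected offsets, which is why closer objects appear larger and more distant objects appear smaller.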

- the points are connected using straight lines to build up the road shapes.

- the line-connected road shape provides a better visual cue of the road geometry than plotting just a series of dots.

- the visible limit is illustrated by FIGS. 5I to 5J-3.

- the visible three-dimensional volume is defined as a rectangular cone cut at the display screen. Every object in this visible region needs to be displayed on the projection screen. Objects in the small rectangular cone defined by $O_L$ and the display screen, a three-dimensional volume between the viewer's eye and the display screen, are displayed in an enlarged size. If an object in this region is too close to the viewer, it results in an out of limit error or a divide by zero error during the calculation. However, usually there are no objects located in the “enlarging space.”

- FIGS. 5J-1 to 5J-3 and the following equations of lines were used for checking whether an object is in the visible space or not. Using these clipping techniques, if the position of a point in the local coordinate frame is defined as p(x, y, z) then this point is visible to the viewer only if:

- the point p is in front of the display screen.

- Equations in the diagram of FIGS. 5J-1 to 5J-3 are not line-equations but equations of planes in 3 dimensional space.

- the above conditions can be expressed by the following equations mathematically, which describe what we mean by “in front of”
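
A visibility test of this kind can be sketched as a set of plane inequalities. The example assumes the display screen is centered on the viewing axis at distance `screen_y`, with width `screen_w` and height `screen_h`, so the side planes of the rectangular cone pass through the eye and the screen edges. These assumptions and the name `is_visible` are illustrative only.

```python
def is_visible(point, screen_y, screen_w, screen_h):
    """Return True if a local-frame point lies in the visible volume.

    The point must be at or beyond the screen plane and inside the four
    planes through the eye (origin) and the edges of the screen.
    """
    x, y, z = point
    if y < screen_y:
        return False  # between the eye and the screen, or behind the eye
    half_w = (screen_w / 2) * (y / screen_y)  # cone half-width at depth y
    half_h = (screen_h / 2) * (y / screen_y)  # cone half-height at depth y
    return abs(x) <= half_w and abs(z) <= half_h
```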

- FIGS. 5K-1 to 5K-3 show one of many possible situations.

- FIG. 5K-1 is a top view, which is a projection of the xy plane. It will now be described how to locate point p so that only the contained segment is drawn.

- k is an arbitrary real number ($0 \le k \le 1$), and

- the projected values $s_x$ and $s_z$ can be calculated by a perspective projection in the same manner as the other parameters.
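
Locating the intersection point with a parameter k between 0 and 1 can be sketched as follows. For brevity the example clips a segment only against the screen plane, writing any point on the segment as p1 + k (p2 - p1); clipping against the side planes of the visible volume would follow the same pattern. The name `clip_to_screen_plane` is hypothetical.

```python
def clip_to_screen_plane(p1, p2, screen_y):
    """Return the part of segment p1-p2 with y >= screen_y, or None.

    The crossing point is p = p1 + k * (p2 - p1) with 0 <= k <= 1.
    """
    y1, y2 = p1[1], p2[1]
    if y1 < screen_y and y2 < screen_y:
        return None  # entirely on the viewer's side of the screen
    if y1 >= screen_y and y2 >= screen_y:
        return p1, p2  # entirely beyond the screen; nothing to clip
    k = (screen_y - y1) / (y2 - y1)
    p = tuple(a + k * (b - a) for a, b in zip(p1, p2))
    return (p, p2) if y1 < screen_y else (p1, p)
```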

- FIG. 6 illustrates a vehicle 200 with placement of ranging system 18 thereon.

- Vehicle 200 is, illustratively, a snow plow which includes an operator compartment 202 and a snow plow blade 204 .

- Ranging system 18 in the embodiment illustrated in FIG. 6, includes a first radar subsystem 206 and a second radar subsystem 208 . It can be desirable to be able to locate targets closely proximate to blade 204 . However, since radar subsystems 206 and 208 are directional, it is difficult, with one subsystem, to obtain target coverage close to blade 204 , yet still several hundred meters ahead of vehicle 200 , because of the placement of blade 204 .

- the two subsystems 206 and 208 are employed to obtain ranging system 18 .

- Radar subsystem 208 is located just above blade 204 and is directed approximately straightforwardly, in a horizontal plane. Radar subsystem 206 is located above blade 204 and is directed downwardly, such that targets can be detected closely proximate the front of blade 204 .

- the radar subsystems 206 and 208 are each illustratively an array of aligned radar detectors which is continuously scanned by a processor such that radar targets can be detected, and their range, range rate and azimuth angle from the radar subsystem 206 or 208 can be estimated as well. In this way, information regarding the location of radar targets can be provided to controller 12 such that controller 12 can display an icon or other visual element representative of the target on the head up display 22. Of course, the icon can be opaque or transparent.

- the icon representative of the target can be shaped in any desirable shape.

- bit maps can be placed on the head up display 22 which represent targets.

- targets can be small, colored or otherwise coded to indicate distance. In other words, if the targets are very close to vehicle 200 , they can be large, begin to flash, or turn red. Similarly, if the targets are a long distance from vehicle 200 , they can maintain a constant glow or halo.

- FIG. 7 is a flow diagram illustrating how ranging system 18 can be used, in combination with the remainder of the system, to verify operation of the subsystems.

- controller 12 receives a position signal. This is indicated by block 210 . This is the signal, illustratively, from the vehicle location system 14 . Controller 12 then receives a ranging signal, as indicated by block 212 in FIG. 7. This is the signal from ranging system 18 which is indicative of targets located within the ranging field of vehicle 200 .

- controller 12 queries geospatial database 16 . This is indicated by block 214 . In querying geospatial database 16 , controller 12 verifies that targets, such as street signs, road barriers, etc.

- controller 12 determines that system 10 is operating properly. This is indicated by block 216 and 218 . In view of this determination, controller 12 can provide an output to user interface 20 indicating that the system is healthy.

- controller 12 determines that something is not operating correctly, either the ranging system 18 is malfunctioning, the vehicle positioning system is malfunctioning, information retrieval from the geospatial database 16 is malfunctioning or the geospatial database 16 has been corrupted, etc.

- controller 12 illustratively provides an output to user interface (UI) 20 indicating a system problem exists. This is indicated by block 220 . Therefore, while controller 12 may not be able to detect the exact type of error which is occurring, controller 12 can detect that an error is occurring and provide an indication to the operator to have the system checked or to have further diagnostics run.
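
The consistency check of FIG. 7 can be sketched as a test that every mapped object within radar range has a matching radar return. This is an illustration only, assuming positions are (x, y) pairs in a common frame and that a fixed distance tolerance decides a match; the name `system_healthy` and the 2 m default are hypothetical.

```python
import math

def system_healthy(sensed, expected, tolerance_m=2.0):
    """Return True if every expected (mapped) target has a nearby radar return.

    A False result does not identify which subsystem failed; it only
    indicates that position, ranging and map data disagree.
    """
    def near(a, b):
        return math.hypot(a[0] - b[0], a[1] - b[1]) <= tolerance_m

    return all(any(near(e, s) for s in sensed) for e in expected)
```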

- the present invention need not be provided only for the forward-looking field of view of the operator.

- the present system 10 can be implemented as a side-looking or rear-looking virtual mirror.

- ranging system 18 includes radar detectors (or other similar devices) located on the sides or to the rear of vehicle 200 .

- the transformation matrix would be adjusted to transform the view of the operator to the side-looking or rear-looking field of view, as appropriate.

- Vehicles or objects which are sensed, but which are not part of the fixed geospatial landscape are presented iconically based on the radar or other range sensing devices in ranging system 18 .

- the fixed lane boundaries are also presented conformally to the driver.

- Fixed geospatial landmarks which may be relevant to the driver (such as the backs of road signs, special pavement markings, bridges being passed under, water towers, trees, etc.) can also be presented to the user, in the proper perspective. This gives the driver a sense of motion as well as cues to proper velocity.

- One illustration of the present invention as both a forward looking driver assist device and one which assists in a rear view is shown in FIG. 8.

- a forward-looking field of view is illustrated by block 250 while the virtual rear view mirror is illustrated by block 252 .

- the view is provided, just as the operator would see when looking in a traditional mirror.

- the mirror may illustratively be virtually gimbaled along any axis (i.e., the image is rotated from side-to-side or top-to-bottom) in software such that the driver can change the angle of the mirror, just as the driver currently can mechanically, to accommodate different driver sizes, or to obtain a different view than is currently being represented by the mirror.

- FIG. 9 gives another illustrative embodiment of a vehicle positioning system which provides vehicle position along the roadway.

- the system illustrated in FIG. 9 can, illustratively, be used as the auxiliary vehicle positioning system 30 illustrated in FIG. 2A. This can provide vehicle positioning information when, for example, the DGPS signal is lost, momentarily, for whatever reason.

- vehicle 200 includes an array of magnetic sensors 260 .

- the road lane 262 is bounded by magnetic strips 264 which, illustratively, are formed of tape having magnetized portions 266 therein. Although a wide variety of such magnetic strips could be used, one illustrative embodiment is illustrated in U.S. Pat. No. 5,853,846 to the 3M Company of St.

- magnetometers in array 260 are monitored such that the field strength sensed by each magnetometer is identified. Therefore, as the vehicle approaches strip 264 and begins to cross lane boundary 268, magnetometers 270 and 272 begin to provide a signal indicating a larger field strength.

- Scanning the array of magnetometers is illustratively accomplished using a microprocessor which scans them quickly enough to detect even fairly high frequency changes in vehicle position toward or away from the magnetic elements in the marked lane boundaries. In this way, a measure of the vehicle's position in the lane can be obtained, even if the primary vehicle system is temporarily not working.

- Although FIG. 9 shows magnetometers mounted to the front of the vehicle, they can be mounted to the rear as well. This would allow an optional calculation of the vehicle's yaw angle relative to the magnetic strips.

- FIG. 10 is a block diagram of another embodiment of the present invention. All items are the same as those illustrated in FIG. 1 and are similarly numbered, and operate substantially the same way. However, rather than providing an output to display 22 , controller 12 provides an output to neurostimulator 300 .

- Neurostimulator 300 is a stimulating device which operates in a known manner to provide stimulation signals to the cortex to elicit image formation in the brain.

- the signal provided by controller 12 includes information as to eye perspective and image size and shape, thus enhancing the ability of neurostimulator 300 to properly stimulate the cortex in a meaningful way. Of course, as the person using the system moves and turns the head, the image stimulation will change accordingly.

- the present invention provides a significant advancement in the art of mobility assist devices, particularly, with respect to moving in conditions where the outward looking field of view of the observer is partially or fully obstructed.

- the present invention provides assistance in not only lane keeping, but also in collision avoidance, since the driver can use the system to steer around displayed obstacles.

- the present invention can also be used in many environments such as snow removal, mining or any other environment where airborne matter obscures vision.

- the invention can also be used in walking or driving in low light areas or at night, or through wooded or rocky areas where vision is obscured by the terrain.

- the present invention can be used on ships or boats to, for example, guide the water-going vessel into port, through a canal, through locks and dams, or around rocks or other obstacles.

- the present invention can also be used on non-motorized, earth-based vehicles such as bicycles and wheelchairs, by skiers, or on substantially any other vehicle.

- the present invention can also be used to aid blind or vision impaired persons.

Abstract

The present invention is directed to a visual mobility assist device which provides a conformal, augmented display to assist a moving body. When the moving body is a motor vehicle, for instance (although it can be substantially any other body), the present invention assists the driver in either lane keeping or collision avoidance, or both. The system can display objects such as lane boundaries, targets, other navigational and guidance elements or objects, or a variety of other indicators, in proper perspective, to assist the driver.

Description

- The present invention deals with mobility assistance. More particularly, the present invention deals with a vision assist device in the form of a head up display (HUD) for assisting mobility of a mobile body, such as a person, non-motorized vehicle or motor vehicle.

- Driving a motor vehicle on the road, with a modicum of safety, can be accomplished if two different aspects of driving are maintained. The first is referred to as “collision avoidance” which means maintaining motion of a vehicle without colliding with other obstacles. The second aspect in maintaining safe driving conditions is referred to as “lane keeping” which means maintaining forward motion of a vehicle without erroneously departing from a given driving lane.

- Drivers accomplish collision avoidance and lane keeping by continuously controlling vehicle speed, lateral position and heading direction by adjusting the acceleration and brake pedals, as well as the steering wheel. The ability to adequately maintain both collision avoidance and lane keeping is greatly compromised when the forward-looking visual field of a driver is obstructed. In fact, many researchers have concluded that the driver's ability to perceive the forward-looking visual field is the most essential input for the task of driving.

- There are many different conditions which can obstruct (to varying degrees) the forward-looking visual field of a driver. For example, heavy snowfall, heavy rain, fog, smoke, darkness, blowing dust or sand, or any other substance or mechanism which obstructs (either partially or fully) the forward-looking visual field of a driver makes it difficult to identify obstacles and road boundaries which, in turn, compromises collision avoidance and lane keeping. Similarly, even on sunny, or otherwise clear days, blowing snow or complete coverage of the road by snow, may result in a loss of visual perception of the road. Such “white out” conditions are often encountered by snowplows working on highways, due to the nature of their task. The driver's forward-looking vision simply does not provide enough information to facilitate safe control of the vehicle. This can be exacerbated, particularly on snow removal equipment, because even on a relatively calm, clear day, snow can be blown up from the front or sides of snowplow blades, substantially obstructing the visual field of the driver.

- Similarly, driving at night in heavy snowfall causes the headlight beams of the vehicle to be reflected into the driver's forward-looking view. Snow flakes glare brightly when they are illuminated at night and make the average brightness level perceived by the driver's eye higher than normal. This higher brightness level causes the iris to adapt to the increased brightness and, as a result, the eye becomes insensitive to the darker objects behind the glaring snowflakes, which are often vital to driving. Such objects can include road boundaries, obstacles, other vehicles, signs, etc.

- Research has also been done which indicates that prolonged deprivation of visual stimulation can lead to confusion. For example, scientists believe that one third of human brain neurons are devoted to visual processing. Pilots, who are exposed to an empty visual field for longer than a certain amount of time, such as during high-altitude flight, or flight in thick fog, have a massive number of unstimulated visual neurons. This can lead to control confusion which makes it difficult for the pilot to control the vehicle. A similar condition can occur when attempting to navigate or plow a snowy road during daytime heavy snowfall in a featureless rural environment.

- Many other environments are also plagued by poor visibility conditions. For instance, in military or other environments one may be moving through terrain at night, either in a vehicle or on foot, without the assistance of lights. Further, in mining environments, or simply when driving on a dirt, sand or gravel surface, particulate matter can obstruct vision. In water-going vehicles, it can be difficult to navigate through canals, around rocks, into a port, or through locks and dams because obstacles may be obscured by fog, below the water, or by other weather conditions. Similarly, surveyors may find it difficult to survey land with dense vegetation or rock formations which obstruct vision. People in or on non-motorized vehicles (such as wheelchairs, bicycles, skis, etc.) can find themselves in these environments as well. All such environments, and many others, have visual conditions which act as a hindrance to persons working in, or moving through, those environments.

- The present invention is directed to a visual assist device which provides a conformal, augmented display to assist in movement of a mobile body. In one example, the mobile body is a vehicle (motorized or non-motorized) and the present invention assists the driver in either lane keeping or collision avoidance, or both. The system can display lane boundaries, other navigational or guidance elements or a variety of other objects in proper perspective, to assist the driver. In another example, the mobile body is a person (or group of people) and the present invention assists the person in either staying on a prescribed path or collision avoidance or both. The system can display path boundaries, other navigational or guidance elements or a variety of other objects in proper perspective, to assist the walking person.

- FIG. 1 is a block diagram of a mobility assist device in accordance with one embodiment of the present invention.

- FIG. 2 is a more detailed block diagram of another embodiment of the mobility assist device.

- FIG. 3A is a partial-pictorial and partial block diagram illustrating operation of a mobility assist device in accordance with one embodiment of the present invention.

- FIG. 3B illustrates the concept of a combiner and virtual screen.

- FIGS. 3C, 3D and 3E are pictorial illustrations of a conformal, augmented projection and display in accordance with one embodiment of the present invention.

- FIGS. 3F, 3G, 3H and 3I are pictorial illustrations of an actual conformal, augmented display in accordance with an embodiment of the present invention.

- FIGS. 4A-4C are flow diagrams illustrating general operation of the mobility assist device.

- FIG. 5A illustrates coordinate frames used in accordance with one embodiment of the present invention.

- FIGS. 5B-1 to 5K-3 illustrate the development of a coordinate transformation matrix in accordance with one embodiment of the present invention.

- FIG. 6 is a side view of a vehicle employing the ranging system in accordance with one embodiment of the present invention.

- FIG. 7 is a flow diagram illustrating a use of the present invention in performing system diagnostics and improved radar processing.

- FIG. 8 is a pictorial view of a head up virtual mirror, in accordance with one embodiment of the present invention.

- FIG. 9 is a top view of one embodiment of a system used to obtain position information corresponding to a vehicle.

- FIG. 10 is a block diagram of another embodiment of the present invention.

- The present invention can be used with substantially any mobile body, such as a human being, a motor vehicle or a non-motorized vehicle. However, the present description proceeds with respect to an illustrative embodiment in which the invention is implemented on a motor vehicle as a driver assist device. FIG. 1 is a simplified block diagram of one embodiment of driver assist device 10 in accordance with the present invention. Driver assist device 10 includes controller 12, vehicle location system 14, geospatial database 16, ranging system 18, operator interface 20 and display 22.

- In one embodiment, controller 12 is a microprocessor, microcontroller, digital computer, or other similar control device having associated memory and timing circuitry. It should be understood that the memory can be integrated with controller 12, or be located separately therefrom. The memory, of course, may include random access memory, read only memory, magnetic or optical disc drives, tape memory, or any other suitable computer readable medium.

- Operator interface 20 is illustratively a keyboard, a touch-sensitive screen, a point and click user input device (e.g. a mouse), a keypad, a voice activated interface, joystick, or any other type of user interface suitable for receiving user commands, and providing those commands to controller 12, as well as providing a user viewable indication of operating conditions from controller 12 to the user. The operator interface may also include, for example, the steering wheel and the throttle and brake pedals suitably instrumented to detect the operator's desired control inputs of heading angle and speed. Operator interface 20 may also include, for example, an LCD screen, LEDs, a plasma display, a CRT, audible noise generators, or any other suitable operator interface display or speaker unit.

- As is described in greater detail later in the specification, vehicle location system 14 determines and provides a vehicle location signal, indicative of the location of the vehicle in which driver assist device 10 is mounted, to controller 12. Thus, vehicle location system 14 can include a global positioning system receiver (GPS receiver) such as a differential GPS receiver, an earth reference position measuring system, a dead reckoning system (such as odometry and an electronic compass), an inertial measurement unit (such as accelerometers, inclinometers, or rate gyroscopes), etc. In any case, vehicle location system 14 periodically provides a location signal to controller 12 which indicates the location of the vehicle on the surface of the earth.

- Geospatial database 16 contains a digital map which digitally locates road boundaries, lane boundaries, possibly some landmarks (such as road signs, water towers, or other landmarks) and any other desired items (such as road barriers, bridges, etc.) and describes a precise location and attributes of those items on the surface of the earth.

- It should be noted that there are many possible coordinate systems that can be used to express a location on the surface of the earth, but the most common coordinate frames include longitudinal and latitudinal angle, state coordinate frame, and county coordinate frame.

- Because the earth is approximately spherical in shape, it is convenient to determine a location on the surface of the earth if the location values are expressed in terms of an angle from a reference point. Longitude and latitude are the most commonly used angles to express a location on the earth's surface or in orbits around the earth. Latitude is a measurement on a globe of location north or south of the equator, and longitude is a measurement of the location east or west of the prime meridian at Greenwich, the specifically designated imaginary north-south line that passes through both geographic poles of the earth and Greenwich, England. The combination of meridians of longitude and parallels of latitude establishes a framework or grid by means of which exact positions can be determined in reference to the prime meridian and the equator. Many of the currently available GPS systems provide latitude and longitude values as location data.
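
To make the angle-based description concrete, the following Python sketch computes the surface distance between two latitude/longitude pairs using the haversine formula, treating the earth as a perfect sphere. This is an illustrative approximation only: the function name and the choice of a mean earth radius are assumptions, and nothing here is taken from the device described in this specification.

```python
import math

# Mean earth radius in metres (spherical approximation; an assumption here).
EARTH_RADIUS_M = 6_371_008.8


def great_circle_distance_m(
    lat1_deg: float, lon1_deg: float, lat2_deg: float, lon2_deg: float
) -> float:
    """Distance along the surface of a spherical earth between two points.

    Latitudes are degrees north of the equator (negative = south);
    longitudes are degrees east of the prime meridian (negative = west).
    """
    lat1, lon1, lat2, lon2 = map(
        math.radians, (lat1_deg, lon1_deg, lat2_deg, lon2_deg)
    )
    dlat = lat2 - lat1
    dlon = lon2 - lon1

    # Haversine formula: a is the squared half-chord length between points.
    a = (
        math.sin(dlat / 2) ** 2
        + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    )
    central_angle = 2 * math.atan2(math.sqrt(a), math.sqrt(1 - a))
    return EARTH_RADIUS_M * central_angle
```

Because the real earth is slightly flattened, a spherical model of this kind is only approximate; it is shown here to illustrate how two angles are sufficient to locate a point on the surface.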