US20040008269A1 - System and method for providing multi-sensor super-resolution - Google Patents

System and method for providing multi-sensor super-resolution

- Publication number

- US20040008269A1 (application number US10/307,265)

- Authority

- US

- United States

- Prior art keywords

- image

- super

- resolution enhanced

- enhanced image

- estimate

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Abandoned

Classifications

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06T—IMAGE DATA PROCESSING OR GENERATION, IN GENERAL

- G06T3/00—Geometric image transformation in the plane of the image

- G06T3/40—Scaling the whole image or part thereof

- G06T3/4053—Super resolution, i.e. output image resolution higher than sensor resolution

Definitions

- the invention is generally related to the field of image processing of images, and more particularly to systems for enhancing the resolution of images.

- the invention is specifically directed to a system and method of generating an enhanced super-resolution image of a scene from a plurality of input images of the scene that may have been recorded with different image recording sensors.

- An image of a scene recorded by a camera is recorded at a particular resolution, which depends on the particular image recording medium or sensor that is used to record the image.

- using one of a number of so-called “super resolution enhancement” methodologies, an image of enhanced resolution can be generated from a plurality of input images of the scene.

- a number of super-resolution enhancement methodologies assume that the images are recorded by the same sensor.

- images of a scene will typically be recorded using different image recording sensors. For example, a color image of a scene is recorded by an image recording medium that actually comprises three different sensors, one sensor for each of the red, blue and green primary colors.

- the invention provides a new and improved system and method of generating a super-enhanced resolution image of a scene from a plurality of input images of a scene that may have been recorded with different image recording sensors.

- the invention provides a super-resolution enhanced image generating system for generating a super-resolution-enhanced image from an image of a scene, identified as image $g_0$, comprising a base image and at least one other image $g_i$, the system comprising an initial super-resolution enhanced image generator, an image projector module and a super-resolution enhanced image estimate update generator module.

- the initial super-resolution enhanced image generator module is configured to use the image $g_0$ to generate a super-resolution enhanced image estimate.

- the image projector module is configured to selectively use a warping, a blurring and/or a decimation operator associated with the image $g_i$ to generate a projected super-resolution enhanced image estimate.

- the super-resolution enhanced image estimate update generator module is configured to use the input image $g_i$ and the super-resolution enhanced image estimate to generate an updated super-resolution enhanced image estimate.

- FIG. 1 is a functional block diagram of a super-resolution enhanced image generating system for generating a super-enhanced resolution image of a scene from a plurality of input images of a scene that may have been recorded with different image recording sensors, constructed in accordance with the invention;

- FIG. 2 is a flow chart depicting operations performed by the super-resolution enhanced image generating system depicted in FIG. 1;

- FIG. 3 is a flow chart depicting operations performed by the super-resolution enhanced image generating system in connection with a demosaicing operation.

- FIG. 1 is a functional block diagram of a super-resolution enhanced image generating system 10 for generating a super-enhanced resolution image of a scene from a plurality of input images of a scene that may have been recorded with different image recording sensors, constructed in accordance with the invention.

- the super-resolution enhanced image generating system 10 includes an initial super-resolution enhanced image estimate generator 11, a super-resolution enhanced image estimate store 12, an image projector 13, an input image selector 14, a virtual input image generator 15, an error image generator 16, and a super-resolution enhanced image update generator 17, all under control of a control module 18.

- the super-resolution enhanced image estimate generator 11 receives a base input image “$g_0$” of a scene and generates an initial estimate for the super-resolution enhanced image to be generated, which it stores in the super-resolution enhanced image estimate store 12.

- the control module 18 enables the image projector 13 to generate a projected image of the super-resolution enhanced image estimate held in the super-resolution enhanced image estimate store 12, in this case using the imaging model for the image recording sensor that had been used to record the selected input image $g_i$.

- the projected super-resolution enhanced image estimate and the selected input image are both coupled to the virtual input image generator 15 .

- the virtual input image generator 15 receives the projected super-resolution enhanced image estimate as generated by the image projector 13 and the selected input image $g_i$, $i \ge 1$, and performs two operations.

- For each pixel in corresponding locations in the projected image estimate and the selected input image, the virtual input image generator 15 generates a normalized cross-correlation value in a neighborhood of the respective pixel, and determines whether the normalized cross-correlation value is above a selected threshold value.

- the normalized cross-correlation value is an indication of the affine similarity between the intensity of the projected image and the intensity of the selected input image, that is, the degree to which a feature, such as an edge, that appears near the particular location in the projected image is also near the same location in the selected input image.

- If the normalized cross-correlation value is above the threshold, the virtual input image generator 15 generates affine parameters using a least-squares estimate for the affine similarity in the window, and uses those affine parameters, along with the pixel value (which represents the intensity) at the location in the selected input image, to generate a pixel value for the location in the virtual input image.

- Otherwise, if the normalized cross-correlation value is not above the threshold, the virtual input image generator 15 assigns the location in the virtual input image the pixel value at that location in the projected image. The virtual input image generator 15 performs these operations for each of the pixels in the projected image as generated by the image projector 13 and the selected input image $g_i$, $i \ge 1$, thereby to generate the virtual input image.

- the error image generator 16 receives the projected image as generated by the image projector 13 and the virtual input image as generated by the virtual input image generator 15 and generates an error image that reflects the difference in pixel values as between the two images.

- the super-resolution enhanced image estimate update generator 17 receives the error image as generated by the error image generator 16 and the current super-resolution enhanced image estimate from the super-resolution enhanced image estimate store 12, and generates an updated super-resolution enhanced image estimate, which is stored in the super-resolution enhanced image estimate store 12.

- the control module 18 can terminate processing, at which point the image in the super-resolution enhanced image estimate store 12 will comprise the super-resolution enhanced image.

- the imaging model, which models the various recorded images $g_i$, usually includes one or more of a plurality of components, the components including a geometric warp, camera blur, decimation, and added noise.

- the goal is to find a high-resolution image $f$ that, when imaged into the lattice of the input images according to the imaging model, provides a good prediction for the low-resolution images $g_i$. If $\hat{f}$ is an estimate of the unknown image $f$, a prediction error image “$d_i$” for the estimate $\hat{f}$ is given by $d_i = g_i - (B_i(W_i(\hat{f})))\downarrow$ (equation (1)), where

- $W_i$ is an operator that represents geometric warping as between the “i-th” input image $g_i$ and the estimate $\hat{f}$ of the unknown image $f$

- $B_i$ is an operator that represents the blurring as between the “i-th” input image $g_i$ and the estimate $\hat{f}$ of the unknown image $f$

- $\downarrow$ represents decimation, that is, the reduction in resolution of the estimate $\hat{f}$ of the unknown image $f$ to the resolution of the “i-th” input image $g_i$.

- the blurring operator “$B_i$” represents all of the possible sources of blurring, including blurring that may be due to the camera's optical properties, blurring that may be due to the camera's image recording sensor, and blurring that may be due to motion of the camera and/or objects in the scene while the camera's shutter is open.

- the warping operator “$W_i$” represents the displacement or motion of points in the scene as between the respective “i-th” input image “$g_i$” and the estimate $\hat{f}$.

- An example of the motion that is represented by the warping function “$W_i$” is the displacement of a projection of a point in the scene from a pixel having coordinates $(x_i, y_i)$ in an image $g_i$ to a pixel having coordinates $(x_i', y_i')$ in the estimate $\hat{f}$.

- the information defining the respective input images is in the form of arrays, as are the various operators. It will also be appreciated that the arrays comprising the various operators will generally be different for each of the “i” input images $g_i$.

- super-resolution image generating systems determine the estimate $\hat{f}$ of the unknown image $f$ by minimizing the norm of the prediction error image $d_i$ (equation (1)) as among all of the input images $g_i$.
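
The imaging model and prediction error described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the warp is modelled as an integer translation `(dx, dy)`, the blur as a small convolution kernel, decimation as keeping every second pixel, and borders are handled by clamping. The function names are hypothetical and none of these specific choices comes from the patent text.

```python
def warp_translate(img, dx, dy):
    """Assumed warp model: integer translation, clamping at the borders."""
    h, w = len(img), len(img[0])
    return [[img[min(max(y - dy, 0), h - 1)][min(max(x - dx, 0), w - 1)]
             for x in range(w)] for y in range(h)]


def blur(img, kernel):
    """Convolve with a small odd-sized kernel, clamping at the borders."""
    h, w = len(img), len(img[0])
    r = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for v in range(-r, r + 1):
                for u in range(-r, r + 1):
                    yy = min(max(y - v, 0), h - 1)
                    xx = min(max(x - u, 0), w - 1)
                    acc += kernel[v + r][u + r] * img[yy][xx]
            out[y][x] = acc
    return out


def decimate(img, factor=2):
    """Reduce resolution by keeping every `factor`-th row and column."""
    return [row[::factor] for row in img[::factor]]


def project(f_hat, dx, dy, kernel, factor=2):
    """Projected image: warp, then blur, then decimate the estimate."""
    return decimate(blur(warp_translate(f_hat, dx, dy), kernel), factor)


def prediction_error(g_i, f_hat, dx, dy, kernel, factor=2):
    """Pixel-wise difference between an input image and the projected estimate."""
    p = project(f_hat, dx, dy, kernel, factor)
    return [[g - q for g, q in zip(g_row, p_row)]
            for g_row, p_row in zip(g_i, p)]
```

A real system would use sub-pixel warps and a measured blur kernel; the integer translation here only keeps the sketch short.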

- the super-resolution enhanced image generating system 10 can generate an initial estimate from the base image $g_0$ using any of a number of methodologies as will be apparent to those skilled in the art; one methodology that is useful in connection with one particular application will be described below.

- the super-resolution enhanced image generating system makes use of an observation that aligned images as recorded by different image recording sensors are often statistically related.

- a virtual input image $\hat{g}_i$ can be defined as

- $\hat{f}$ is the current estimate of the super-resolution enhanced image, and, as described above, “$B_i$,” “$W_i$” and “$\downarrow$” represent the blurring, warping and decimation operators, respectively, for the respective “i-th” input image, $i \ge 1$.

- a virtual prediction error image “$v_i$” can be generated as $v_i = \hat{g}_i - (B_i(W_i(\hat{f})))\downarrow$ (equation (3)).

- the super-resolution enhanced image generating system uses the virtual prediction error image “$v_i$” from equation (3) in generating the super-resolution enhanced image in a manner similar to that described above in connection with equation (1), but using the virtual prediction error image $v_i$ instead of the prediction error image $d_i$.

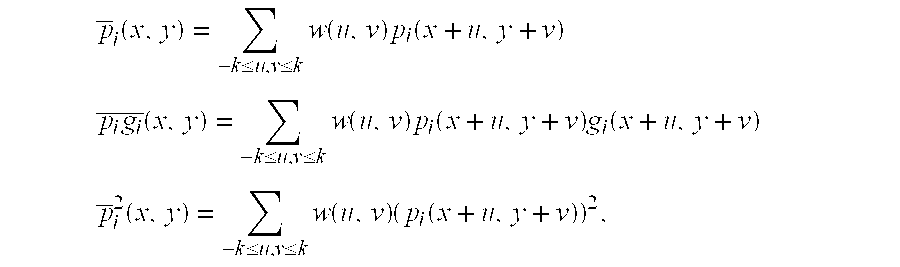

- $\overline{p_i}(x,y)$, $\overline{g_i}(x,y)$, $\overline{p_i g_i}(x,y)$, $\overline{p_i^2}(x,y)$, and $\overline{g_i^2}(x,y)$ are weighted averages around the pixel at coordinates $(x,y)$ in the respective image $p_i$ or $g_i$.

- $w(u,v)$ is a weighting kernel.

- the values selected for the weighting kernel decrease with increasing absolute values of “$u$” and “$v$,” so that less weight will be given in the weighted sums to values of pixels in the window with increasing distance from the pixel at location $(x,y)$, thereby to provide for a reduction in spatial discontinuities in the affine parameters “$a$” and “$b$” (equation (4)).

- FIG. 2 depicts a flow chart outlining operations that are performed by the super-resolution enhanced image generating system 10 as described above in connection with FIG. 1 and equations (2) through (9) in connection with generating and updating a super-resolution enhanced image estimate $\hat{f}$.

- the operations will be apparent to those skilled in the art with reference to the above discussion, and so the various steps outlined in the flow chart will not be described in detail herein.
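
As an illustration of how the weighted averages might feed the cross-correlation test and the affine fit, here is a minimal Python sketch. The formulas for `c`, `a` and `b` are the standard weighted correlation and least-squares expressions for fitting `p ≈ a*g + b`; they are an assumption, because the patent's own equations for these quantities survive only as an image in this text. Function names, the `[y][x]` indexing and the handling of flat windows are also hypothetical choices.

```python
import math


def local_stats(p, g, x, y, w):
    """Weighted averages of p, g, p*g, p^2 and g^2 around (x, y).

    w is a (2r+1) x (2r+1) weighting kernel; window positions that fall
    outside the image are skipped and the weights renormalized.
    """
    h, wd = len(p), len(p[0])
    r = len(w) // 2
    sw = sp = sg = spg = spp = sgg = 0.0
    for v in range(-r, r + 1):
        for u in range(-r, r + 1):
            yy, xx = y + v, x + u
            if 0 <= yy < h and 0 <= xx < wd:
                k = w[v + r][u + r]
                pv, gv = p[yy][xx], g[yy][xx]
                sw += k
                sp += k * pv
                sg += k * gv
                spg += k * pv * gv
                spp += k * pv * pv
                sgg += k * gv * gv
    return sp / sw, sg / sw, spg / sw, spp / sw, sgg / sw


def ncc_and_affine(p, g, x, y, w, eps=1e-12):
    """Return (c, a, b): normalized cross-correlation and affine parameters."""
    mp, mg, mpg, mpp, mgg = local_stats(p, g, x, y, w)
    cov = mpg - mp * mg
    var_p = mpp - mp * mp
    var_g = mgg - mg * mg
    if var_p <= eps or var_g <= eps:
        return 0.0, 0.0, mp  # flat window: treated as uncorrelated (assumption)
    c = cov / math.sqrt(var_p * var_g)
    a = cov / var_g          # least-squares slope for p ~ a*g + b
    b = mp - a * mg          # least-squares offset
    return c, a, b


def virtual_pixel(p, g, x, y, w, threshold):
    """Virtual input pixel: affine-mapped g if well correlated, else p."""
    c, a, b = ncc_and_affine(p, g, x, y, w)
    return a * g[y][x] + b if c > threshold else p[y][x]
```

A kernel such as `[[1, 2, 1], [2, 4, 2], [1, 2, 1]]` satisfies the stated requirement that weights fall off with distance from the center pixel.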

- the super-resolution enhanced image generating system 10 is used in connection with demosaicing images that are associated with respective color channels of a CCD (charge coupled device) array that may be used in, for example, a digital camera.

- a CCD array has a plurality of individual light sensor elements that are arranged in a plurality of rows and columns, with each light sensor element detecting the intensity of light of a particular color incident over the respective element's surface.

- Each sensor element is associated with a pixel in the image, and identifies the degree of brightness of the color associated with that pixel.

- the sensor element performs the operation of integrating the intensity of light incident over the sensor element's area over a particular period of time, as described above.

- each two-by-two block of four light sensor elements comprises light sensor elements that are to sense light of all three primary colors, namely, green, red and blue.

- two light sensor elements are provided to sense light of color green.

- one light sensor element is provided to sense light of color red.

- one light sensor element is provided to sense light of color blue.

- the two light sensor elements that are to be used to sense light of color green are along a diagonal of the two-by-two block and the other two light sensor elements are along the diagonal orthogonal thereto.

- the green light sensor elements are at the upper right and lower left of the block, the red light sensor element is at the upper left and the blue light sensor element is at the lower right. It will be appreciated that the collection of sensor elements of each color provides an image of the respective color, with the additional proviso that the two green sensor elements in the respective blocks define two separate input images.

- the super-resolution enhanced image generating system generates a super-resolution enhanced image associated with each color channel, using information from the image that is associated with the respective color channel as well as the images that are associated with the other colors as input images.

- the collection of light sensor elements of the CCD array that is associated with each of the colors is a respective “image recording sensor” for purposes of the super-resolution enhanced image generating system, so that there will be four image recording sensors and four input images, with one input image being associated with each of the image recording sensors.
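
The four input images described above can be pulled out of a raw mosaic with simple slicing. The sketch below assumes the layout given in the text (red at the upper left, green at the upper right and lower left, blue at the lower right of each two-by-two block) and a mosaic with even height and width; the function name and dictionary keys are hypothetical.

```python
def split_bayer(raw):
    """Split a mosaic into four quarter-resolution input images.

    raw is a list of rows; rows and columns are assumed even in number.
    """
    return {
        "red":    [row[0::2] for row in raw[0::2]],  # upper left of each block
        "green1": [row[1::2] for row in raw[0::2]],  # upper right
        "green2": [row[0::2] for row in raw[1::2]],  # lower left
        "blue":   [row[1::2] for row in raw[1::2]],  # lower right
    }
```

Each returned image plays the role of one "image recording sensor" output, so the two green images are treated as separate inputs.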

- the super-resolution enhanced image that is generated by the super-resolution enhanced image generating system for each of the color channels will have a resolution of m-by-n.

- the super-resolution enhanced images generated for the various color channels by the super-resolution enhanced image generating arrangement can then be used in generating a composite color image of higher resolution than the original image provided by the CCD array.

- the super-resolution enhanced image generating system actually generates four super-resolution enhanced images, one super-resolution enhanced image for each of the red and blue color channels, and two for the green color channel.

- [0048] will be provided by the collection of light sensor elements in the respective blocks that are associated with the red and blue channels. Similarly with base image and other input images associated with each of the other color channels.

- After the super-resolution enhanced image generating system receives the four input images, it first uses the input images associated with the respective color channels to generate, for each color channel, an initial estimate $\hat{f}$ of the super-resolution enhanced image $f$ for the respective color channel. Accordingly, the super-resolution enhanced image generating system uses the input image that is associated with one of the green channels as the base image from which it generates the initial estimate $\hat{f}$ of the super-resolution enhanced image $f$ that is associated with that green color channel, and similarly for the other color channels.

- the super-resolution enhanced image generating system uses a linear interpolation as between points for the respective color channel in the array to provide initial pixel values for the array positions that are not provided by the CCD array.

- in the CCD array, each two-by-two block of light sensor elements has one red light sensor element, which is provided at the upper left of the block.

- the red light sensor element in each block will provide the red channel pixel value that is associated with that pixel in the image that is associated with the red light sensor element.

- the super-resolution enhanced image generating system will generate red channel pixel values for the pixels that are associated with the other three points in the block, for which red light sensor elements are not provided, by interpolating between the pixel values provided by red light sensor elements in neighboring blocks.

- the super-resolution enhanced image generating system obtains the blue channel pixel value provided by the blue light sensor element, and generates the other blue channel pixel values for the pixels that are associated with the three points in the block for which blue light sensor elements are not provided, in a similar manner.

- the super-resolution enhanced image generating system also uses a linear interpolation for the points in the block for which green light sensor elements are not provided. However, in this case, the super-resolution enhanced image generating system will select one of the green light sensor elements in each block, and perform a linear interpolation between pixel values provided by the selected green light sensor element and one of the green light sensor elements in adjacent blocks.

- for example, suppose the super-resolution enhanced image generating system makes use of the green light sensor element at the upper right of each block.

- in generating the pixel value for the point associated with the upper left of the same block, the super-resolution enhanced image generating system makes use of the pixel values generated by the selected green light sensor elements of that block and of the block to the left.

- in generating the pixel value for the point associated with the lower right of the same block, the super-resolution enhanced image generating system makes use of the pixel values generated by the selected green light sensor elements of that block and of the adjacent block down the array.

- the super-resolution enhanced image generating system will not use the pixel value generated by the other green light sensor element in the block, in this example, the green light sensor element at the lower left corner of the block.
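
The green-channel example above can be sketched as follows. The upper-left and lower-right rules follow the text: each is the average of the selected (upper-right) green value of the block and that of the block to the left or below. Two things are assumptions not stated in the text: the lower-left point is filled with the average of the four surrounding selected green values, and blocks at the array border reuse the nearest available block. The function name is hypothetical.

```python
def initial_green_estimate(raw):
    """Initial full-resolution green estimate from one green sample per block.

    raw is a mosaic with even height and width; the green sample used for
    block (r, c) sits at raw[2*r][2*c + 1] (upper right of the block). The
    other green sample in each block (lower left) is deliberately ignored.
    """
    h, w = len(raw), len(raw[0])
    n_rows, n_cols = h // 2, w // 2

    def green(r, c):
        # Selected green of block (r, c), clamped at the borders (assumption).
        r = min(max(r, 0), n_rows - 1)
        c = min(max(c, 0), n_cols - 1)
        return float(raw[2 * r][2 * c + 1])

    est = [[0.0] * w for _ in range(h)]
    for r in range(n_rows):
        for c in range(n_cols):
            # Upper right: the measured value itself.
            est[2 * r][2 * c + 1] = green(r, c)
            # Upper left: this block and the block to the left.
            est[2 * r][2 * c] = 0.5 * (green(r, c) + green(r, c - 1))
            # Lower right: this block and the block below.
            est[2 * r + 1][2 * c + 1] = 0.5 * (green(r, c) + green(r + 1, c))
            # Lower left: average of the four surrounding samples (assumption).
            est[2 * r + 1][2 * c] = 0.25 * (
                green(r, c) + green(r, c - 1)
                + green(r + 1, c) + green(r + 1, c - 1)
            )
    return est
```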

- Generating the initial estimate $\hat{f}$ for the green channel in this manner results in a smooth initial estimate with satisfactory properties in connection with convergence to a result associated with the green color channel having desirable characteristics.

- the result associated with the green color channel may maintain some of the artifacts from the initial estimate $\hat{f}$.

- After the super-resolution enhanced image generating system has generated the initial estimate $\hat{f}$ for the respective color channels, it will project the initial estimate $\hat{f}$ that had been generated for the respective color channel back onto the input image for that color channel, thereby to generate the projected estimate image $p_0$ for the respective color channel.

- the super-resolution enhanced image generating system performs that operation by convolving the initial estimate $\hat{f}$ with the point spread function (PSF) for the CCD array that generated the input image for the respective color channel.

- the super-resolution enhanced image generating system can then, for each color channel, use the projected image for the respective color channel, and the input images associated with the other channels, to update the initial estimate $\hat{f}$ so as to provide the final super-resolution enhanced image for the respective color channel.

- the following description will be of operations that are performed by the super-resolution enhanced image generating system to generate a super-resolution enhanced image for one of the color channels. It will be appreciated that the super-resolution enhanced image generating system can generate a super-resolution enhanced image for each of the color channels in the same way. It will further be appreciated that the super-resolution enhanced image generating system can perform the operations to generate a super-resolution enhanced image for each color channel consecutively, or it can perform the individual steps for the various color channels on an overlapped basis.

- After the super-resolution enhanced image generating system has projected the initial estimate $\hat{f}$ generated for a color channel back onto the input image for that color channel, thereby to generate a projected estimate image $p_0$ for the color channel, it will select one of the other color channels whose input image $g_i$ will be used to update the initial estimate $\hat{f}$. For example, if the super-resolution enhanced image generating system is generating a super-resolution enhanced image for the green color channel, it will select one of the red or blue color channels, whose associated input image will be used in updating the initial estimate $\hat{f}$ for the green color channel.

- After the super-resolution enhanced image generating system has updated the initial estimate using the input image associated with the color channel that it first selected, it will select the other of the red or blue color channels, whose input image will be used in further updating the updated estimate $\hat{f}$, thereby to generate the final super-resolution enhanced image. For example, if the super-resolution enhanced image generating system first selects the red channel, after it has updated the initial estimate $\hat{f}$ using the input image associated with the red channel, it will select the blue channel and use its input image to further update the estimate, thereby to generate the final super-resolution enhanced image.

- After the super-resolution enhanced image generating system has selected a color channel whose input image $g_i$ will be used to update the initial estimate $\hat{f}$, it will generate and initialize an array data structure that will be used to receive values defining the virtual error image $v_i$.

- the super-resolution enhanced image generating system will initialize the array data structure to have a number of array elements organized in rows and columns, the array having the same structure as the arrays for the various input images and the super-resolution enhanced image.

- the super-resolution enhanced image generating system initializes the values of the array elements to a predetermined number, which, in one embodiment, is selected to be zero.

- After the super-resolution enhanced image generating system has generated the various sub-images $p_{ij}$ and $g_{ij}$, it will, for each pixel at location $(x,y)$ in the respective sub-image, generate the weighted averages $\overline{p_{ij}}(x,y)$, $\overline{g_{ij}}(x,y)$, $\overline{p_{ij} g_{ij}}(x,y)$, $\overline{p_{ij}^2}(x,y)$, and $\overline{g_{ij}^2}(x,y)$ in a manner described above in connection with equation (7).

- the super-resolution enhanced image generating system generates the centralized and normalized cross-correlation $c_{ij}(x,y)$ and determines whether the value of $c_{ij}(x,y)$ is greater than the threshold value $T$. If the super-resolution enhanced image generating system determines that the value of $c_{ij}(x,y)$ is greater than the threshold value $T$, it will generate values for “$a$” and “$b$” as described above in connection with equation (8) and set the value of the element at location $(2x+i, 2y+j)$ of the data structure established for the error image to

- $v(2x+i,\, 2y+j) = a\,g_{ij}(x,y) + b - p_{ij}(x,y)$ (10).

- in equation (10), “$a\,g_{ij}(x,y)+b$” corresponds to the pixel value of the pixel at location $(x,y)$ of the virtual input image $\hat{g}$ as described above in connection with equation (4), and so equation (10) corresponds to equation (3) above.

- the super-resolution enhanced image generating system makes use of equation (10) only if the value of the cross-correlation $c_{ij}$ is greater than the threshold value. For those locations for which the value of the cross-correlation is not greater than the threshold value, the value in the element of the data structure established for the error image will remain zero. This is the desired result since, as noted above, if the value of the cross-correlation $c_{ij}$ for a particular location $(x,y)$ is less than or equal to the threshold value, the pixel at that location in the virtual input image is assigned the value of the pixel of the projected image at that location, so that the difference between the two is zero.

- the super-resolution enhanced image generating system performs the operations described above for all of the pixels at locations $(x,y)$ of all of the respective sub-images $p_{ij}$ and $g_{ij}$ to populate the array provided for the error image $v$. Thereafter, the super-resolution enhanced image generating system uses the pixel values comprising the array for the error image $v$ to update the initial estimate $\hat{f}$. In that operation, the super-resolution enhanced image generating system initially performs a convolution operation to convolve the error image $v$ with the point spread function (PSF).

- the super-resolution enhanced image generating system can multiply the convolved error image with a sharpness parameter, and will add the result to the initial estimate $\hat{f}$, thereby to provide the estimate as updated for the selected color channel.
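
The population of the error array can be sketched as below. The sketch assumes the sub-images are supplied as dictionaries keyed by the offsets `(i, j)`, each indexed `[x][y]`, and that a caller-supplied function `fit(p, g, x, y)` returns the cross-correlation and affine parameters `(c, a, b)`. Both the data layout and the `fit` callable are hypothetical conveniences for the example.

```python
def virtual_error_image(p_sub, g_sub, fit, threshold):
    """Fill the virtual error array from sub-images p_ij and g_ij.

    p_sub, g_sub: dicts mapping (i, j), with i and j in {0, 1}, to equally
    sized sub-images indexed [x][y].
    fit: callable (p, g, x, y) -> (c, a, b), supplied by the caller.
    """
    first = next(iter(p_sub.values()))
    nx, ny = len(first), len(first[0])
    # The array starts at zero, so untouched locations contribute no error.
    v = [[0.0] * (2 * ny) for _ in range(2 * nx)]
    for (i, j), p in p_sub.items():
        g = g_sub[(i, j)]
        for x in range(nx):
            for y in range(ny):
                c, a, b = fit(p, g, x, y)
                if c > threshold:
                    # Virtual input pixel minus projected pixel.
                    v[2 * x + i][2 * y + j] = a * g[x][y] + b - p[x][y]
    return v
```

Leaving below-threshold entries at their initial zero is what makes a separate "copy the projected pixel" step unnecessary.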

- the value of the sharpness parameter is selected to be between “one” and “two,” although it will be appreciated that other values may be selected.
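
A minimal sketch of this update step, assuming the error image has the same dimensions as the estimate (as stated for the demosaicing case) and that pixels outside the image count as zero during the convolution. The default sharpness of 1.5 is just an illustrative value inside the stated range, and the function names are hypothetical.

```python
def convolve_zero_pad(img, kernel):
    """2-D convolution with an odd-sized kernel; outside pixels count as zero."""
    h, w = len(img), len(img[0])
    r = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for v in range(-r, r + 1):
                for u in range(-r, r + 1):
                    yy, xx = y - v, x - u
                    if 0 <= yy < h and 0 <= xx < w:
                        out[y][x] += kernel[v + r][u + r] * img[yy][xx]
    return out


def update_estimate(f_hat, error, psf, sharpness=1.5):
    """Add the PSF-convolved error image, scaled by the sharpness parameter."""
    blurred = convolve_zero_pad(error, psf)
    return [[f + sharpness * e for f, e in zip(f_row, e_row)]
            for f_row, e_row in zip(f_hat, blurred)]
```

Calling `update_estimate` once per selected color channel, feeding each result into the next call, mirrors the sequential updates described in the text.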

- After the super-resolution enhanced image generating system has generated the estimate as updated for the selected color channel, it can select the other color channel and perform the operations described above to further update the estimate and provide the final super-resolution enhanced image. As noted above, the super-resolution enhanced image generating system can also perform the same operations to generate a super-resolution enhanced image for each of the color channels.

- FIG. 3 depicts a flow chart outlining operations that are performed by the super-resolution enhanced image generating arrangement as described above in connection with performing a demosaicing operation. The operations will be apparent to those skilled in the art with reference to the above discussion, and so the various steps outlined in the flow chart will not be described in detail herein.

- the invention provides a number of advantages.

- the invention provides a system that generates a super-resolution enhanced image of a scene from a plurality of input images of the scene, which input images may have been recorded using two or more image recording sensors.

- the system may be used for a number of applications, including but not limited to the demosaicing application described herein.

- the super-resolution enhanced image generating system can be used in connection with a demosaicing operation to generate demosaiced images that were provided using image recording sensors for different colors than those described above, or that are sensitive to different parts of the electromagnetic spectrum.

- the super-resolution enhanced image generating system can be used in connection with a demosaicing operation to generate demosaiced images that were provided using image recording sensors having different patterns of light sensing elements.

- Although the projected image $p_i = (B_i(W_i(\hat{f})))\downarrow$ may be generated using the warping operator $W_i$, followed by the blurring operator $B_i$, it will be appreciated that the projected image $p_i$ may be generated using the blurring operator $B_i$ followed by the warping operator $W_i$, or by a selected one or more of the respective warping, blurring and/or decimation operators.

- Although the demosaicing operation has been described in connection with a CCD sensor array, it will be appreciated that the operation can be performed in connection with any sensor array, including but not limited to a CMOS array.

- Although the super-resolution enhanced image generating system has been described as generating a super-resolution enhanced image in the case in which the relation among the intensities of the various input images is affine, it will be appreciated that similar operations can be performed if the intensities have other relations, including but not limited to logarithmic, trigonometric, and so forth, with an appropriate adaptation of the normalized cross-correlation as a measure of fit.

- a system in accordance with the invention can be constructed in whole or in part from special purpose hardware or a general purpose computer system, or any combination thereof, any portion of which may be controlled by a suitable program.

- Any program may in whole or in part comprise part of or be stored on the system in a conventional manner, or it may in whole or in part be provided to the system over a network or other mechanism for transferring information in a conventional manner.

- the system may be operated and/or otherwise controlled by means of information provided by an operator using operator input elements (not shown) which may be connected directly to the system or which may transfer the information to the system over a network or other mechanism for transferring information in a conventional manner.

Abstract

A super-resolution enhanced image generating system is described for generating a super-resolution-enhanced image from an image of a scene, identified as image g0, comprising a base image and at least one other image gi, the system comprising an initial super-resolution enhanced image generator, an image projector module and a super-resolution enhanced image estimate update generator module. The initial super-resolution enhanced image generator module is configured to use the image g0 to generate a super-resolution enhanced image estimate. The image projector module is configured to selectively use a warping, a blurring and/or a decimation operator associated with the image gi to generate a projected super-resolution enhanced image estimate. The super-resolution enhanced image estimate update generator module is configured to use the input image gi and the super-resolution enhanced image estimate to generate an updated super-resolution enhanced image estimate.

Description

- The invention is generally related to the field of image processing of images, and more particularly to systems for enhancing the resolution of images. The invention is specifically directed to a system and method of generating an enhanced super-resolution image of a scene from a plurality of input images of the scene that may have been recorded with different image recording sensors.

- An image of a scene as recorded by a camera is recorded at a particular resolution, which depends on the particular image recording medium or sensor that is used to record the image. Using one of a number of so-called “super resolution enhancement” methodologies, an image of enhanced resolution can be generated from a plurality of input images of the scene. A number of super-resolution enhancement methodologies assume that the images are recorded by the same sensor. However, in many applications, images of a scene will typically be recorded using different image recording sensors. For example, a color image of a scene is recorded by an image recording medium that actually comprises three different sensors, one sensor for each of the red, blue and green primary colors. A problem arises in such applications, since conventional super-resolution-enhancement methodologies can only be applied to multiple images generated by a similar sensor.

- The invention provides a new and improved system and method of generating a super-enhanced resolution image of a scene from a plurality of input images of a scene that may have been recorded with different image recording sensors.

- In brief summary, the invention provides a super-resolution enhanced image generating system for generating a super-resolution-enhanced image from an image of a scene, identified as image g0, comprising a base image and at least one other image gi, the system comprising an initial super-resolution enhanced image generator, an image projector module and a super-resolution enhanced image estimate update generator module. The initial super-resolution enhanced image generator module is configured to use the image g0 to generate a super-resolution enhanced image estimate. The image projector module is configured to selectively use a warping, a blurring and/or a decimation operator associated with the image gi to generate a projected super-resolution enhanced image estimate. The super-resolution enhanced image estimate update generator module is configured to use the input image gi and the super-resolution enhanced image estimate to generate an updated super-resolution enhanced image estimate.

- This invention is pointed out with particularity in the appended claims. The above and further advantages of this invention may be better understood by referring to the following description taken in conjunction with the accompanying drawings, in which:

- FIG. 1 is a functional block diagram of a super-resolution enhanced image generating system for generating a super-enhanced resolution image of a scene from a plurality of input images of a scene that may have been recorded with different image recording sensors, constructed in accordance with the invention;

- FIG. 2 is a flow chart depicting operations performed by the super-resolution enhanced image generating system depicted in FIG. 1;

- FIG. 3 is a flow chart depicting operations performed by the super-resolution enhanced image generating system in connection with a demosaicing operation.

- FIG. 1 is a functional block diagram of a super-resolution enhanced image generating system 10 for generating a super-enhanced resolution image of a scene from a plurality of input images of a scene that may have been recorded with different image recording sensors, constructed in accordance with the invention. With reference to FIG. 1, the super-resolution enhanced image generating system 10 includes an initial super-resolution enhanced image estimate generator 11, a super-resolution enhanced image estimate store 12, an image projector 13, an input image selector 14, a virtual input image generator 15, an error image generator 16, and a super-resolution enhanced image estimate update generator 17, all under control of a control module 18. Under control of the control module 18, the super-resolution enhanced image estimate generator 11 receives a base input image “g0” of a scene and generates an initial estimate for the super-resolution enhanced image to be generated, which it stores in the super-resolution enhanced image estimate store 12.

- The input image selector 14 then selects an input image gi, i≧1, of the scene, which may have been recorded by another sensor. Thereafter, the control module 18 enables the image projector 13 to generate a projected image of the super-resolution enhanced image estimate in the super-resolution enhanced image estimate store 12, in this case using the imaging model for the image recording sensor that had been used to record the selected input image gi. The projected super-resolution enhanced image estimate and the selected input image are both coupled to the virtual input image generator 15. The virtual input image generator 15 receives the projected super-resolution enhanced image estimate as generated by the image projector 13 and the selected input image gi, i≧1, and performs two operations. First, for each pixel in corresponding locations in the projected image estimate and the selected input image, the virtual input image generator 15 generates a normalized cross-correlation value in a neighborhood of the respective pixel, and determines whether the normalized cross-correlation value is above a selected threshold value. The normalized cross-correlation value is an indication of the affine similarity between the intensity of the projected image and the intensity of the selected input image, that is, the degree to which a feature, such as an edge, that appears near the particular location in the projected image is also near the same location in the selected input image. If the normalized cross-correlation value is above the threshold, the virtual input image generator 15 generates affine parameters using a least-squares estimate for the affine similarity in the window, and uses those affine parameters, along with the pixel value (which represents the intensity) at the location in the selected input image, to generate a pixel value for the location in the virtual input image. On the other hand, if the normalized cross-correlation value is below the threshold, the virtual input image generator 15 assigns the location in the virtual input image the pixel value at that location in the projected image. The virtual input image generator 15 performs these operations for each of the pixels in the projected image as generated by the image projector 13 and the selected input image gi, i≧1, thereby to generate the virtual input image.

- The error image generator 16 receives the projected image as generated by the image projector 13 and the virtual input image as generated by the virtual input image generator 15 and generates an error image that reflects the difference in pixel values as between the two images. The super-resolution enhanced image estimate update generator 17 receives the error image as generated by the error image generator 16 and the super-resolution enhanced image estimate in the super-resolution enhanced image estimate store 12 and generates an updated super-resolution enhanced image estimate, which is stored in the super-resolution enhanced image estimate store 12.

- After the updated super-resolution enhanced image estimate has been stored in the store 12, the control module 18 can enable the various modules 13-17 to repeat the operations described above in connection with another input image. These operations can be repeated for each of the input images. After all, or a selected subset, of the input images gi, i≧1, have been selected and used as described above, the control module 18 can terminate processing, at which point the image in the super-resolution enhanced image estimate store 12 will comprise the super-resolution enhanced image. Alternatively, or in addition, the control module 18 can enable the operations to be repeated in connection with the input images gi, i≧1, or the selected subset, through one or more subsequent iterations to further enhance the resolution of the image in the super-resolution enhanced image estimate store 12.

- Details of the operations performed by the initial super-resolution enhanced image estimate generator 11, the image projector 13, the virtual input image generator 15, the error image generator 16 and the super-resolution enhanced image estimate update generator 17 will be described below.
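- The control flow described in connection with FIG. 1 can be sketched as follows. This is only an illustrative outline, not the patented implementation: the function name `super_resolve` and the four callables passed to it (`initial_estimate`, `project`, `make_virtual`, `update`) are hypothetical stand-ins for modules 11, 13, 15 and 17, and images are assumed to be lists of rows of floats.

```python
from typing import Callable, List, Sequence

Image = List[List[float]]


def super_resolve(
    g0: Image,
    others: Sequence[Image],
    initial_estimate: Callable[[Image], Image],
    project: Callable[[Image, int], Image],
    make_virtual: Callable[[Image, Image], Image],
    update: Callable[[Image, Image, int], Image],
    iterations: int = 1,
) -> Image:
    """Outline of the FIG. 1 loop; every module is injected as a callable."""
    f_hat = initial_estimate(g0)                      # generator 11, kept in store 12
    for _ in range(iterations):                       # optional further passes
        for i, g_i in enumerate(others, start=1):     # input image selector 14
            p_i = project(f_hat, i)                   # image projector 13
            g_virtual = make_virtual(p_i, g_i)        # virtual input image generator 15
            error = [                                 # error image generator 16
                [gv - pv for gv, pv in zip(g_row, p_row)]
                for g_row, p_row in zip(g_virtual, p_i)
            ]
            f_hat = update(f_hat, error, i)           # estimate update generator 17
    return f_hat
```

Passing the modules in as callables keeps the loop independent of any particular imaging model or update rule, neither of which is fixed at this point in the description.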

- Conventional super-resolution image generating systems generate a super-resolution enhanced image from a set gi, i=1,…,n, of “n” input images of a scene, all of which have been recorded by the same image recording sensor. The input images are indexed using index “i.” It will be assumed that all of the images are the result of imaging a high-resolution image f of a scene, which high-resolution image initially is unknown. The imaging model, which models the various recorded images gi, usually includes one or more of a plurality of components, the components including a geometric warp, camera blur, decimation, and added noise. The goal is to find a high-resolution image f that, when imaged into the lattice of the input images according to the imaging model, provides a good prediction for the low-resolution images gi. If f̂ is an estimate of the unknown image f, a prediction error image “di” for the estimate f̂ is given by

- $d_i = g_i - B_i(W_i(\hat{f}))\downarrow \qquad (1)$

- where “Wi” is an operator that represents geometric warping as between the “i-th” input image gi and the estimate f̂ of the unknown image f, “Bi” is an operator that represents the blurring as between the “i-th” input image gi and the estimate f̂ of the unknown image f, and “↓” represents decimation, that is, the reduction in resolution of the estimate f̂ of the unknown image f to the resolution of the “i-th” input image gi. The blurring operator “Bi” represents all of the possible sources of blurring, including blurring that may be due to the camera's optical properties, blurring that may be due to the camera's image recording sensor, and blurring that may be due to motion of the camera and/or objects in the scene while the camera's shutter is open. The warping operator “Wi” represents the displacement or motion of points in the scene as between the respective “i-th” input image “gi” and the estimate f̂. An example of the motion that is represented by the warping function “Wi” is the displacement of a projection of a point in the scene from a pixel having coordinates (xi,yi) in an image gi to a pixel having coordinates (xi′,yi′) in the estimate f̂. It will be appreciated that the information defining the respective input images is in the form of arrays, as are the various operators. It will also be appreciated that the arrays comprising the various operators will generally be different for each of the “i” input images gi. Typically, super-resolution image generating systems determine the estimate f̂ of the unknown image f by minimizing the norm of the prediction error image di (equation (1)) as among all of the input images gi.
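- As a concrete illustration of equation (1), the sketch below evaluates a prediction error image for deliberately simple operators. Everything specific here is an assumption chosen for brevity rather than something taken from the description: the warp is an integer translation, the blur is a 2-by-2 box filter, decimation is by a factor of two, borders are clamped, and the function names are hypothetical.

```python
from typing import List

Image = List[List[float]]


def warp_shift(f: Image, dx: int, dy: int) -> Image:
    """Stand-in for W_i: translate by (dx, dy) pixels, clamping at the border."""
    h, w = len(f), len(f[0])
    return [[f[min(max(y - dy, 0), h - 1)][min(max(x - dx, 0), w - 1)]
             for x in range(w)] for y in range(h)]


def blur_box(f: Image) -> Image:
    """Stand-in for B_i: average each pixel with its right/lower neighbours."""
    h, w = len(f), len(f[0])
    out = []
    for y in range(h):
        y1 = min(y + 1, h - 1)
        row = []
        for x in range(w):
            x1 = min(x + 1, w - 1)
            row.append((f[y][x] + f[y][x1] + f[y1][x] + f[y1][x1]) / 4.0)
        out.append(row)
    return out


def decimate(f: Image, step: int = 2) -> Image:
    """The decimation operator: keep every step-th row and column."""
    return [row[::step] for row in f[::step]]


def project(f_hat: Image, dx: int = 0, dy: int = 0) -> Image:
    """p_i = B_i(W_i(f_hat)) followed by decimation."""
    return decimate(blur_box(warp_shift(f_hat, dx, dy)))


def prediction_error(g_i: Image, f_hat: Image, dx: int = 0, dy: int = 0) -> Image:
    """d_i = g_i - B_i(W_i(f_hat)) decimated, as in equation (1)."""
    p_i = project(f_hat, dx, dy)
    return [[g - p for g, p in zip(g_row, p_row)] for g_row, p_row in zip(g_i, p_i)]
```

A real system would substitute a sub-pixel warp and the sensor's actual blur for these stand-ins; only the order of operations (warp, then blur, then decimate) follows equation (1).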

- As noted above, typical super-resolution image generating systems operate on input images gi, i=1,…,n, all of which were recorded by the same image recording sensor. The super-resolution enhanced image generating system in accordance with the invention generates a super-resolution enhanced image from a set of input images, which need not have been recorded using the same image recording sensor. More specifically, the super-resolution enhanced image generating system generates an estimate f̂ of a high-resolution version of a base image “g0” of a scene using that image “g0” and a set of other input images gi, i=1,…,n, of the scene, without requiring that the image recording sensors that were used to record the input images gi are similar to each other, or to the sensor that was used to record the base image “g0.” The super-resolution enhanced image generating system 10 can generate an initial estimate from the base image g0 using any of a number of methodologies as will be apparent to those skilled in the art; one methodology that is useful in connection with one particular application will be described below.

- The super-resolution enhanced image generating system makes use of an observation that aligned images as recorded by different image recording sensors are often statistically related. The super-resolution enhanced image generating system makes use of a statistical model “M” that relates a projected image pi=Bi(Wi(f̂))↓ to the respective input image “gi” as recorded by the image recording sensor. In that case, a virtual input image ĝi can be defined as

- $\hat{g}_i = M(p_i, g_i) \qquad (2)$

- For the projected image pi=Bi(Wi(f̂))↓, f̂ is the current estimate of the super-resolution enhanced image, and, as described above, “Bi,” “Wi” and “↓” represent the blurring, warping and decimation operators, respectively, for the respective “i-th” input image, i≧1.

- After the respective projected image pi and the virtual input image ĝi for a particular input image gi have been generated, a virtual prediction error image “vi” can be generated as

- $v_i = \hat{g}_i - p_i \qquad (3)$

- Thereafter, the super-resolution enhanced image generating system uses the virtual prediction error image “vi” from equation (3) in generating the super-resolution enhanced image in a manner similar to that described above in connection with equation (1), but using the virtual prediction error image vi instead of the prediction error image di.

- In one embodiment, the statistical model “M” is a local affine relation between the intensities of the input images gi and the intensities of the projected images. In particular, the super-resolution enhanced image generating system makes use of small corresponding pixel neighborhoods in the projected image pi and the associated input image gi and generates estimates for an affine transformation relating the intensity values of the image pi to the intensity values of the respective input image gi, that is pi(x, y)=a(x, y)gi(x, y)+b(x, y), where (x,y) represents the location of the respective pixel. (In the following discussion, the use of “(x,y)” may be dropped for clarity.) The super-resolution enhanced image generating system then uses the mapping to generate the respective virtual input image as

- $\hat{g}_i(x,y) = a(x,y)\,g_i(x,y) + b(x,y) \qquad (4)$,

- and the virtual prediction error image vi as described above in connection with equation (3). Under the assumption that the input image is contaminated with zero-mean white noise, which typically is the case, the optimal estimation for the affine relation between each projected image pi and the respective input image gi provides coefficients “a” and “b” such as to minimize

- $\sum_{(u,v)\in R}\bigl(a(x,y)\,g_i(x+u,y+v) + b(x,y) - p_i(x+u,y+v)\bigr)^2 \qquad (5)$

- where “u” and “v” define a small neighborhood R around each of the respective pixels (x,y).
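- For reference, a least-squares fit of this kind has a closed-form solution. The derivation below is a sketch under two assumptions that are not stated at this point in the text: the squared residuals are weighted by a kernel w(u,v) whose values sum to one over the neighborhood R (uniform weights being a special case), and an overline denotes the resulting weighted average over R.

```latex
\begin{align*}
E(a,b) &= \sum_{(u,v)\in R} w(u,v)\,\bigl(a\,g_i(x+u,y+v) + b - p_i(x+u,y+v)\bigr)^2 \\
\frac{\partial E}{\partial b} = 0 \;&\Rightarrow\; a\,\overline{g_i} + b = \overline{p_i}
  \;\Rightarrow\; b = \overline{p_i} - a\,\overline{g_i} \\
\frac{\partial E}{\partial a} = 0 \;&\Rightarrow\; a\,\overline{g_i^2} + b\,\overline{g_i} = \overline{p_i g_i} \\
\text{substituting } b \;&\Rightarrow\; a = \frac{\overline{p_i g_i} - \overline{p_i}\,\overline{g_i}}{\overline{g_i^2} - \overline{g_i}^{\,2}}
\end{align*}
```

The numerator is the weighted covariance of pi and gi in the window and the denominator is the weighted variance of gi, so the fit is well defined only where gi is not constant over the window.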

- The super-resolution enhanced image generating system generates the pixel value (intensity) for a pixel at location (x,y) in the virtual input image ĝi=M(pi,gi) (reference equation (2)) as follows. Initially, the super-resolution enhanced image generating system generates an estimate “c(x,y)” of a centralized and normalized cross-correlation in a weighted window of size “2k+1” by “2k+1,” for a selected value of “k,” around the respective pixel at location (x,y) as follows:

- $c(x,y) = \dfrac{\overline{p_i g_i}(x,y) - \overline{p_i}(x,y)\,\overline{g_i}(x,y)}{\sqrt{\bigl(\overline{p_i^2}(x,y) - \overline{p_i}(x,y)^2\bigr)\bigl(\overline{g_i^2}(x,y) - \overline{g_i}(x,y)^2\bigr)}} \qquad (6)$

- where

- $\overline{p_i}(x,y) = \sum_{u=-k}^{k}\sum_{v=-k}^{k} w(u,v)\,p_i(x+u,y+v), \qquad \overline{p_i g_i}(x,y) = \sum_{u=-k}^{k}\sum_{v=-k}^{k} w(u,v)\,p_i(x+u,y+v)\,g_i(x+u,y+v) \qquad (7)$

- and so forth, where “w(u,v)” is a weighting kernel. In one embodiment, the values selected for the weighting kernel decrease with increasing absolute values of “u” and “v,” so that less weight will be given in the weighted sums to values of pixels in the window with increasing distance from the pixel at location (x,y), thereby to provide for a reduction in spatial discontinuities in the affine parameters “a” and “b” (equation (4)).

- After the super-resolution enhanced image generating system has generated the estimate c(x,y) of the cross-correlation for a pixel at location (x,y), it compares the absolute value of the cross-correlation estimate c(x,y) to a threshold value “T.” If the super-resolution enhanced image generating system determines that the absolute value of the cross-correlation estimate c(x,y) is less than the threshold value “T,” the value of the pixel at location (x,y) in the virtual input image ĝi is set to correspond to the value of the pixel at location (x,y) in the projected image pi, that is, ĝi(x,y)=pi(x,y).

- On the other hand, if the super-resolution enhanced image generating system determines that the absolute value of the estimate c(x,y) is greater than or equal to the threshold value “T,” it generates coefficients “a” and “b” for use in generating the least-squares estimate for the affine similarity in the window around the pixel at location (x,y) as follows

- $a = \dfrac{\overline{p_i g_i} - \overline{p_i}\,\overline{g_i}}{\overline{g_i^2} - \overline{g_i}^{\,2}}, \qquad b = \overline{p_i} - a\,\overline{g_i} \qquad (8)$

- (with all weighted averages evaluated at (x,y)) and then generates the pixel value for the pixel in location (x,y) of the virtual input image as

- $\hat{g}_i(x,y) = a\,g_i(x,y) + b \qquad (9)$,

- (compare equation (4)) where “a” and “b” in equation (9) are as determined from equation (8). The super-resolution enhanced image generating system performs the operations described above in connection with equations (6) through (9) for each of the pixels that are to comprise part of the respective virtual input image ĝi. After the super-resolution enhanced image generating system has completed the respective virtual input image ĝi, it generates the value for the virtual prediction error image vi using equation (3). After the super-resolution enhanced image generating system has generated the virtual prediction error image vi, it will use the virtual prediction error image to update the estimate f̂ of the unknown image f. The super-resolution enhanced image generating system can repeat these operations for each of the input images gi, i≧1, and possibly also the base input image g0, through one or more subsequent iterations to further update the super-resolution enhanced image estimate f̂.
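- The per-pixel procedure described above (weighted window averages, thresholded cross-correlation, affine fit) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the system's actual implementation: the triangular kernel, the clamped borders, the default values of `k` and `T`, the guard for flat windows and the function names are all choices made here for the example, and the affine coefficients are taken to be the standard weighted least-squares solution.

```python
import math
from typing import Dict, List, Tuple

Image = List[List[float]]


def make_kernel(k: int) -> Dict[Tuple[int, int], float]:
    """Weights that decrease with |u| and |v| and sum to 1 (an assumed choice)."""
    raw = {(u, v): float((k + 1 - abs(u)) * (k + 1 - abs(v)))
           for u in range(-k, k + 1) for v in range(-k, k + 1)}
    total = sum(raw.values())
    return {uv: wt / total for uv, wt in raw.items()}


def virtual_input_image(p: Image, g: Image, k: int = 1, T: float = 0.9) -> Image:
    """Build g_hat from projected image p and input image g of equal size."""
    h, w = len(p), len(p[0])
    kernel = make_kernel(k)
    out = [row[:] for row in p]               # default: g_hat(x, y) = p(x, y)
    for y in range(h):
        for x in range(w):
            mp = mg = mpg = mpp = mgg = 0.0   # weighted window averages
            for (u, v), wt in kernel.items():
                yy = min(max(y + v, 0), h - 1)    # clamp at the image border
                xx = min(max(x + u, 0), w - 1)
                pv, gv = p[yy][xx], g[yy][xx]
                mp += wt * pv
                mg += wt * gv
                mpg += wt * pv * gv
                mpp += wt * pv * pv
                mgg += wt * gv * gv
            cov = mpg - mp * mg
            var_p = mpp - mp * mp
            var_g = mgg - mg * mg
            if var_p <= 1e-12 or var_g <= 1e-12:
                continue                      # flat window: keep the projected value
            c = cov / math.sqrt(var_p * var_g)    # normalized cross-correlation
            if abs(c) >= T:
                a = cov / var_g               # least-squares affine coefficients
                b = mp - a * mg
                out[y][x] = a * g[y][x] + b   # virtual pixel value
    return out
```

Where the two images are exactly affinely related the virtual image reproduces the projected image, so the virtual prediction error vanishes; where they are locally uncorrelated the projected value is kept and the error is again zero at that pixel.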

- FIG. 2 depicts a flow chart outlining operations that are performed by the super-resolution enhanced image generating system 10 as described above in connection with FIG. 1 and equations (2) through (9) in connection with generating and updating the super-resolution enhanced image estimate f̂. The operations will be apparent to those skilled in the art with reference to the above discussion, and so the various steps outlined in the flow chart will not be described in detail herein.

- In one embodiment, the super-resolution enhanced image generating system 10 is used in connection with demosaicing images that are associated with respective color channels of a CCD (charge coupled device) array that may be used in, for example, a digital camera. Typically, a CCD array has a plurality of individual light sensor elements that are arranged in a plurality of rows and columns, with each light sensor element detecting the intensity of light of a particular color incident over the respective element's surface. Each sensor element is associated with a pixel in the image, and identifies the degree of brightness of the color associated with that pixel. The sensor element performs the operation of integrating the intensity of light incident over the sensor element's area over a particular period of time, as described above.

- For a CCD array that is to be used in connection with the visual portion of the electromagnetic spectrum, each two-by-two block of four light sensor elements comprises light sensor elements that are to sense light of all three primary colors, namely, green, red and blue. In each two-by-two block, two light sensor elements are provided to sense light of color green, one light sensor element is provided to sense light of color red and one light sensor element is provided to sense light of color blue. Typically, the two light sensor elements that are to be used to sense light of color green are along a diagonal of the two-by-two block and the other two light sensor elements are along the diagonal orthogonal thereto. In a typical CCD array, the green light sensor elements are at the upper right and lower left of the block, the red light sensor element is at the upper left and the blue light sensor element is at the lower right. It will be appreciated that the collection of sensor elements of each color provides an image of the respective color, with the additional proviso that the two green sensor elements in the respective blocks define two separate input images.
In that case, if, for example, the CCD array has “m” rows-by-“n” columns, with a total resolution of m*n (where “*” represents multiplication), the image that is provided by the respective sets of green sensors in the respective blocks will have a resolution of ¼*m*n, and the image that is provided by each of the red and blue sensors will have a resolution of ¼*m*n. In the demosaicing operation, the super-resolution enhanced image generating system generates a super-resolution enhanced image associated with each color channel, using information from the image that is associated with the respective color channel as well as the images that are associated with the other colors as input images. In this connection, the collection of light sensor elements of the CCD array that is associated with each of the colors is a respective “image recording sensor” for purposes of the super-resolution enhanced image generating system, so that there will be four image recording sensors and four input images, with one input image being associated with each of the image recording sensors. The super-resolution enhanced image that is generated by the super-resolution enhanced image generating system for each of the color channels will have a resolution of m-by-n. The super-resolution enhanced images generated for the various color channels by the super-resolution enhanced image generating arrangement can then be used in generating a composite color image of higher resolution than the original image provided by the CCD array.
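- The division of the CCD output into four input images, each with a resolution of ¼*m*n, can be illustrated with a short sketch. It assumes the block layout described above (red at the upper left, green at the upper right and lower left, blue at the lower right), even values of m and n, and a mosaic stored as a list of rows; the function name and the dictionary keys are hypothetical.

```python
from typing import Dict, List

Image = List[List[float]]


def split_mosaic(mosaic: Image) -> Dict[str, Image]:
    """Split an m-by-n mosaic into four (m/2)-by-(n/2) channel images."""
    def take(row_offset: int, col_offset: int) -> Image:
        # Keep one sensor element per two-by-two block.
        return [row[col_offset::2] for row in mosaic[row_offset::2]]

    return {
        "red": take(0, 0),              # upper left of each block
        "green_upper_right": take(0, 1),
        "green_lower_left": take(1, 0),
        "blue": take(1, 1),             # lower right of each block
    }
```

Each of the four returned images then plays the role of a base image g0 in turn, with the remaining three serving as the other input images.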

- In the demosaicing operation, the super-resolution enhanced image generating system actually generates four super-resolution enhanced images, one super-resolution enhanced image for each of the red and blue color channels, and two for the green color channel. For each super-resolution enhanced image, and with reference to the discussion above in connection with equations (2) through (9), the base input image g0 is the image provided by the collection of light sensor elements associated with the respective color channel (with the two green light sensors in the respective blocks representing different color channels), and the other input images gi, i=1,…,n (in this case, n=3), will be provided by the collection of light sensor elements associated with the other three color channels, with the proviso, noted above, that the sensor elements in the respective blocks that are associated with the green color define separate channels for the demosaicing operation. For example, for the green channels, the base images g0 are the images that are provided by the green light sensor elements of the respective positions in the blocks comprising the CCD array, and the red and blue input images gi will be provided by the collection of light sensor elements in the respective blocks that are associated with the red and blue channels. The base image and the other input images are assigned similarly for each of the other color channels.

- After the super-resolution enhanced image generating system receives the four input images, it first uses the input images associated with the respective color channels to generate, for each color channel, an initial estimate f̂ of the super-resolution enhanced image f for the respective color channel. Accordingly, the super-resolution enhanced image generating system uses the input image that is associated with one of the green channels as the base image from which it generates the initial estimate f̂ of the super-resolution enhanced image f that is associated with that green color channel, and similarly for the other color channels. In one embodiment, for each of the red and blue color channels, as well as the other green color channel, the super-resolution enhanced image generating system uses a linear interpolation as between points for the respective color channel in the array to provide initial pixel values for the array positions that are not provided by the CCD array. As mentioned above, in each two-by-two block of light sensor elements of the CCD array, one red light sensor element is provided at the upper left of the block. For the initial estimate f̂ for the super-resolution enhanced image that is associated with the red channel, the red light sensor element in each block will provide the red channel pixel value that is associated with that pixel in the image that is associated with the red light sensor element. The super-resolution enhanced image generating system will generate red channel pixel values for the pixels that are associated with the other three points in the block, for which red light sensor elements are not provided, by interpolating between the pixel values provided by red light sensor elements in neighboring blocks.
The super-resolution enhanced image generating system generates, in a similar manner, the blue channel pixel values for the pixels that are associated with the three points in the block for which blue light sensor elements are not provided.
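- One way to realize the linear interpolation described for the red channel is sketched below. It is an assumption-laden example rather than the described embodiment itself: red samples are taken to sit at the even rows and even columns of the m-by-n grid, missing points are filled with the mean of the nearest red samples, positions past the last red sample simply repeat it, and the function name is hypothetical.

```python
from typing import List

Image = List[List[float]]


def interpolate_red(red: Image, m: int, n: int) -> Image:
    """Initial m-by-n estimate; red[y][x] is the sample at full-grid (2y, 2x)."""
    rows, cols = len(red), len(red[0])
    out = [[0.0] * n for _ in range(m)]
    for q in range(m):
        y0 = q // 2
        y1 = min(y0 + (q % 2), rows - 1)     # next sample row for odd q, clamped
        for r in range(n):
            x0 = r // 2
            x1 = min(x0 + (r % 2), cols - 1)  # next sample column for odd r, clamped
            # At a sensor site all four terms coincide; between two sites this is
            # their mean; at a block centre it is the mean of four sites.
            out[q][r] = (red[y0][x0] + red[y0][x1] + red[y1][x0] + red[y1][x1]) / 4.0
    return out
```

The blue channel can be handled the same way after accounting for its lower-right position in each block.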

- As noted above, two green light sensor elements are provided in each block, one at the upper right and one at the lower left. In one embodiment, the super-resolution enhanced image generating system also uses a linear interpolation for the points in the block for which green light sensor elements are not provided. However, in this case, the super-resolution enhanced image generating system will select one of the green light sensor elements in each block, and perform a linear interpolation between pixel values provided by the selected green light sensor element and one of the green light sensor elements in adjacent blocks. For example, if the super-resolution enhanced image generating system makes use of the green light sensor element at the upper right of a block, in generating the pixel value for the point associated with the upper left of the same block, the super-resolution enhanced image generating system makes use of the pixel value generated by the green light sensor elements of that block and of the block to the left. In that case, in generating the pixel value for the point associated with the lower right of the same block, the super-resolution enhanced image generating system makes use of the pixel value generated by the green light sensor elements of that block and of the adjacent block down the array. In that embodiment, the super-resolution enhanced image generating system will not use the pixel value generated by the other green light sensor element in the block, in this example, the green light sensor element at the lower left corner of the block. Generating the initial estimate f̂ for the green channel in this manner results in a smooth initial estimate with satisfactory properties in connection with convergence to a result associated with the green color channel having desirable characteristics.
On the other hand, if pixel values provided by both of the green light sensor elements in a block were to be used, the result associated with the green color channel may maintain some of the artifacts from the initial estimate f̂.

- After the super-resolution enhanced image generating system has generated the initial estimate f̂ for the respective color channels, it will project the initial estimate f̂ that had been generated for the respective color channel back onto the input image for that color channel, thereby to generate the projected estimate image p0 for the respective color channel. The super-resolution enhanced image generating system performs that operation by convolving the initial estimate f̂ with the point spread function (PSF) for the CCD array that generated the input image for the respective color channel. The super-resolution enhanced image generating system can then, for each color channel, use the projected image for the respective color channel and the input images associated with the other channels to update the initial estimate f̂ so as to provide the final super-resolution enhanced image for the respective color channel.

- For clarity, the following description will be of operations that are performed by the super-resolution enhanced image generating system to generate a super-resolution enhanced image for one of the color channels. It will be appreciated that the super-resolution enhanced image generating system can generate a super-resolution enhanced image for each of the color channels in the same way. It will further be appreciated that the super-resolution enhanced image generating system can perform the operations to generate a super-resolution enhanced image for each color channel consecutively, or it can perform the individual steps for the various color channels on an overlapped basis.

- After the super-resolution enhanced image generating system has projected the initial estimate f̂ generated for a color channel back onto the input image for that color channel, thereby to generate a projected estimate image p0 for the color channel, it will select one of the other color channels whose input image gi will be used to update the initial estimate f̂. For example, if the super-resolution enhanced image generating system is generating a super-resolution enhanced image for the green color channel, it will select one of the red or blue color channels, whose associated input image will be used in updating the initial estimate f̂ for the green color channel. After the super-resolution enhanced image generating system has updated the initial estimate using the input image associated with the color channel that it first selected, it will select the other of the red or blue color channels, whose input image will be used in further updating the updated estimate f̂, thereby to generate the final super-resolution enhanced image. For example, if the super-resolution enhanced image generating system first selects the red channel, after it has updated the initial estimate f̂ using the input image associated with the red channel, it will select the blue channel and use its input image to further update the estimate, thereby to generate the final super-resolution enhanced image.

- After the super-resolution enhanced image generating system has selected a color channel whose input image gi will be used to update the initial estimate f̂, it will generate and initialize an array data structure that will be used to receive values defining the virtual error image vi. The super-resolution enhanced image generating system will initialize the array data structure to have a number of array elements organized in rows and columns, the array having the same structure as the arrays for the various input images and the super-resolution enhanced image. The super-resolution enhanced image generating system initializes the values of the array elements to a predetermined number, which, in one embodiment, is selected to be zero.

- After the super-resolution enhanced image generating system has initialized an array data structure to be used for the virtual error image vi, it will perform a number of steps to generate an error image v for the selected color channel. In that operation, the super-resolution enhanced image generating system initially divides both the projected image p and the input image g for the selected color channel into four sub-images pij and gij (i,j=0,1) (here the subscripts are used to identify the particular sub-image). In dividing each of the images p and g into sub-images, the pixel value corresponding to the pixel at location (q,r) of the respective image p, g provides the pixel value for the pixel at location (x,y) in the respective sub-image pij, gij such that (q,r)=(2x+i,2y+j).

- After the super-resolution enhanced image generating system has generated the various sub-images $p_{ij}$ and $g_{ij}$, it will, for each pixel at location (x,y) in the respective sub-image, generate the weighted averages $\overline{p_{ij}}(x,y)$, $\overline{g_{ij}}(x,y)$, $\overline{p_{ij}g_{ij}}(x,y)$, $\overline{p_{ij}^2}(x,y)$, and $\overline{g_{ij}^2}(x,y)$ in the manner described above in connection with equation (7). Thereafter, for each pixel at location (x,y) in the respective sub-images $p_{ij}$ and $g_{ij}$, the super-resolution enhanced image generating system generates the centralized and normalized cross-correlation $c_{ij}(x,y)$ and determines whether the value of $c_{ij}(x,y)$ is greater than the threshold value T. If the super-resolution enhanced image generating system determines that the value of $c_{ij}(x,y)$ is greater than the threshold value T, it will generate values for “a” and “b” as described above in connection with equation (8) and set the value of the element at location (2x+i,2y+j) of the data structure established for the error image to

- $$v(2x+i,\,2y+j) = a\,g_{ij}(x,y) + b - p_{ij}(x,y) \tag{10}$$

- It will be appreciated that, in equation (10), “$a\,g_{ij}(x,y)+b$” corresponds to the pixel value of the pixel at location (x,y) of the virtual input image $\hat{g}$ as described above in connection with equation (4), and so equation (10) corresponds to equation (3) above.

- It will be appreciated that, since the super-resolution enhanced image generating system makes use of equation (10) only if the value of the cross-correlation $c_{ij}$ is greater than the threshold value, for those locations for which the value of the cross-correlation is not greater than the threshold value, the value in the element of the data structure established for the error image will remain zero. This is the desired result since, as noted above, if the value of the cross-correlation $c_{ij}$ for a particular location (x,y) is less than or equal to the threshold value, the pixel at that location in the virtual input image is assigned the value of the pixel of the projected image at that location.
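
- The per-pixel test and error computation described above might be sketched as follows. Equations (7) and (8) and the cross-correlation formula are not reproduced in this passage, so this sketch assumes a uniform weighting kernel over a small square window, the usual normalized cross-correlation, and the ordinary least-squares fit of $a\,g+b$ to $p$. The function names, the window radius, the threshold of 0.9, and the `eps` guard for flat neighborhoods are hypothetical choices made for illustration.

```python
import math


def local_mean(img, x, y, radius=1):
    """Uniformly weighted mean over a window around (x, y), clipped at borders."""
    window = [img[u][v]
              for u in range(max(0, x - radius), min(len(img), x + radius + 1))
              for v in range(max(0, y - radius), min(len(img[0]), y + radius + 1))]
    return sum(window) / len(window)


def error_subimage(p, g, threshold=0.9, radius=1, eps=1e-12):
    """Return a*g + b - p where the local correlation exceeds the threshold.

    All other elements keep their initial value of zero.
    """
    rows, cols = len(p), len(p[0])
    pg = [[p[x][y] * g[x][y] for y in range(cols)] for x in range(rows)]
    pp = [[p[x][y] ** 2 for y in range(cols)] for x in range(rows)]
    gg = [[g[x][y] ** 2 for y in range(cols)] for x in range(rows)]
    v = [[0.0] * cols for _ in range(rows)]
    for x in range(rows):
        for y in range(cols):
            mp = local_mean(p, x, y, radius)
            mg = local_mean(g, x, y, radius)
            cov = local_mean(pg, x, y, radius) - mp * mg
            var_p = local_mean(pp, x, y, radius) - mp * mp
            var_g = local_mean(gg, x, y, radius) - mg * mg
            if var_p <= eps or var_g <= eps:
                continue  # flat neighborhood: correlation undefined, leave zero
            c = cov / math.sqrt(var_p * var_g)
            if c > threshold:
                a = cov / var_g   # assumed least-squares slope
                b = mp - a * mg   # assumed least-squares intercept
                v[x][y] = a * g[x][y] + b - p[x][y]
    return v


def scatter(v_full, v_sub, i, j):
    """Write sub-image errors into the full error image at (2x+i, 2y+j)."""
    for x, row in enumerate(v_sub):
        for y, value in enumerate(row):
            v_full[2 * x + i][2 * y + j] = value
```

Under these assumptions, a sub-image pair related exactly by $p = a\,g + b$ yields an all-zero error, and a pair whose local correlation falls at or below the threshold also leaves the error at zero, consistent with the behavior described above.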

- The super-resolution enhanced image generating system performs the operations described above for all of the pixels at locations (x,y) of all of the respective sub-images $p_{ij}$ and $g_{ij}$ to populate the array provided for the error image $v$. Thereafter, the super-resolution enhanced image generating system uses the pixel values comprising the array for the error image $v$ to update the initial estimate $\hat{f}$. In that operation, the super-resolution enhanced image generating system initially performs a convolution operation to convolve the error image $v$ with the PSF. Thereafter, the super-resolution enhanced image generating system can multiply the convolved error image by a sharpness parameter, and will add the result to the initial estimate $\hat{f}$, thereby to provide the estimate as updated for the selected color channel. In one embodiment, the value of the sharpness parameter is selected to be between “one” and “two,” although it will be appreciated that other values may be selected.
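
- A minimal sketch of this update step is given below, assuming that the error image and the estimate have the same dimensions and that the convolution uses zero padding at the borders. The function names, the zero-padding choice, and the default sharpness value of 1.5 (picked from within the range mentioned above) are assumptions of this sketch.

```python
def convolve2d_same(img, kernel):
    """Same-size 2D convolution with zero padding (pure Python)."""
    rows, cols = len(img), len(img[0])
    k_rows, k_cols = len(kernel), len(kernel[0])
    c_row, c_col = k_rows // 2, k_cols // 2
    out = [[0.0] * cols for _ in range(rows)]
    for x in range(rows):
        for y in range(cols):
            acc = 0.0
            for u in range(k_rows):
                for w in range(k_cols):
                    q, r = x - (u - c_row), y - (w - c_col)
                    if 0 <= q < rows and 0 <= r < cols:
                        acc += kernel[u][w] * img[q][r]
            out[x][y] = acc
    return out


def update_estimate(f_hat, v, psf, sharpness=1.5):
    """Add the PSF-convolved error image, scaled by sharpness, to f_hat."""
    blurred = convolve2d_same(v, psf)
    return [[f + sharpness * e for f, e in zip(f_row, e_row)]
            for f_row, e_row in zip(f_hat, blurred)]
```

With an error image containing a single non-zero pixel, the update adds a scaled copy of the PSF centered on that pixel, which is a convenient way to check the convolution orientation.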

- After the super-resolution enhanced image generating system has generated the estimate as updated for the selected color channel, it can select the other color channel and perform the operations described above to further update the estimate and provide the final super-resolution enhanced image. As noted above, the super-resolution enhanced image generating system can also perform the same operations to generate a super-resolution enhanced image for each of the color channels.
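
- The overall sequence for one color channel, in which the estimate is updated once for each of the other channels, might be organized as in the following sketch. The function `refine_estimate` and its three callable parameters are hypothetical; re-projecting the current estimate before each update is an assumption based on the iteration recited in claim 3.

```python
def refine_estimate(f_hat, other_inputs, project, compute_error, apply_update):
    """Update f_hat once per other-channel input image, in the order given.

    project(f_hat)        -> projected estimate p
    compute_error(p, g)   -> error image v for input image g
    apply_update(f_hat, v) -> updated estimate
    """
    for g in other_inputs:
        p = project(f_hat)
        v = compute_error(p, g)
        f_hat = apply_update(f_hat, v)
    return f_hat


# Toy usage with scalars standing in for images.
result = refine_estimate(
    0.0,
    [4.0, 8.0],
    project=lambda f: f,
    compute_error=lambda p, g: g - p,
    apply_update=lambda f, v: f + 0.5 * v,
)
```

For the green channel, for example, `other_inputs` would hold the red and blue input images in whichever order they are selected.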

- FIG. 3 depicts a flow chart outlining operations that are performed by the super-resolution enhanced image generating arrangement as described above in connection with performing a demosaicing operation. The operations will be apparent to those skilled in the art with reference to the above discussion, and so the various steps outlined in the flow chart will not be described in detail herein.

- The invention provides a number of advantages. In particular, the invention provides a system that generates a super-resolution enhanced image of a scene from a plurality of input images of the scene, which input images may have been recorded using two or more image recording sensors. The system may be used for a number of applications, including but not limited to the demosaicing application described herein.

- It will be appreciated that a number of changes and modifications may be made to the super-resolution enhanced image generating system as described above. For example, the super-resolution enhanced image generating system can be used in connection with a demosaicing operation to generate demosaiced images from images that were provided using image recording sensors for colors other than those described above, or using sensors that are sensitive to different parts of the electromagnetic spectrum. In addition, the super-resolution enhanced image generating system can be used in connection with a demosaicing operation to generate demosaiced images from images that were provided using image recording sensors having different patterns of light sensing elements.

- In addition, although the projected image $p_i = B_i(W_i(\hat{f}))\downarrow$ may be generated using the warping operator $W_i$ followed by the blurring operator $B_i$, it will be appreciated that the projected image $p_i$ may be generated using the blurring operator $B_i$ followed by the warping operator $W_i$, or by a selected one or more of the respective warping, blurring and/or decimation operators.
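
- The two operator orderings can be illustrated with the following sketch. The specific operators used here, namely an integer translation with zero fill for the warp, a 3x3 box filter with zero padding for the blur, and decimation by a factor of two, are hypothetical stand-ins chosen for brevity; the description above does not limit the operators to these forms.

```python
def shift(img, dx, dy):
    """Hypothetical warp: integer translation by (dx, dy) with zero fill."""
    rows, cols = len(img), len(img[0])
    return [[img[x - dx][y - dy]
             if (0 <= x - dx < rows and 0 <= y - dy < cols) else 0.0
             for y in range(cols)]
            for x in range(rows)]


def box_blur(img):
    """Hypothetical blur: 3x3 mean filter with zero padding."""
    rows, cols = len(img), len(img[0])
    return [[sum(img[u][v]
                 for u in range(x - 1, x + 2)
                 for v in range(y - 1, y + 2)
                 if 0 <= u < rows and 0 <= v < cols) / 9.0
             for y in range(cols)]
            for x in range(rows)]


def decimate(img, factor=2):
    """Keep every factor-th pixel in each dimension."""
    return [row[::factor] for row in img[::factor]]


def project(f_hat, dx=0, dy=0, blur_first=False):
    """Project an estimate: warp then blur (default) or blur then warp, then decimate."""
    if blur_first:
        full = shift(box_blur(f_hat), dx, dy)
    else:
        full = box_blur(shift(f_hat, dx, dy))
    return decimate(full)
```

For these particular stand-in operators the two orderings agree away from the image borders, since a translation and a shift-invariant blur commute there; with more general warps the results can differ.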

- Furthermore, although a methodology has been described for generating the initial estimate $\hat{f}$ for the super-resolution enhanced image, it will be appreciated that any of a number of other methodologies may be used.

- In addition, although the demosaicing operation has been described in connection with a CCD sensor array, it will be appreciated that the operation can be performed in connection with any sensor array, including but not limited to a CMOS array.

- Furthermore, although the super-resolution enhanced image generating system has been described as generating a super-resolution enhanced image in the case in which the relation among the intensities of the various input images is affine, it will be appreciated that similar operations can be performed if the intensities have other relations, including but not limited to logarithmic, trigonometric, and so forth, with an appropriate adaptation of the normalized cross-correlation as a measure of fit.

- It will be appreciated that a system in accordance with the invention can be constructed in whole or in part from special purpose hardware or a general purpose computer system, or any combination thereof, any portion of which may be controlled by a suitable program. Any program may in whole or in part comprise part of or be stored on the system in a conventional manner, or it may in whole or in part be provided to the system over a network or other mechanism for transferring information in a conventional manner. In addition, it will be appreciated that the system may be operated and/or otherwise controlled by means of information provided by an operator using operator input elements (not shown) which may be connected directly to the system or which may transfer the information to the system over a network or other mechanism for transferring information in a conventional manner.

- The foregoing description has been limited to a specific embodiment of this invention. It will be apparent, however, that various variations and modifications may be made to the invention, with the attainment of some or all of the advantages of the invention. It is the object of the appended claims to cover these and such other variations and modifications as come within the true spirit and scope of the invention.

Claims (21)

1. A super-resolution enhanced image generating system for generating a super-resolution-enhanced image from an image of a scene, identified as image g0, comprising a base image and at least one other image gi, the system comprising:

A. an initial super-resolution enhanced image generator module configured to use the image g0 to generate a super-resolution enhanced image estimate;

B. an image projector module configured to selectively use a warping, a blurring and/or a decimation operator associated with the image gi to generate a projected super-resolution enhanced image estimate; and

C. a super-resolution enhanced image estimate update generator module configured to use the input image gi and the super-resolution enhanced image estimate to generate an updated super-resolution enhanced image estimate.

2. A super-resolution enhanced image generating system as defined in claim 1 , the system being configured to generate the super-resolution enhanced image in relation to a plurality of other images identified as images

the system further comprising an image selector module configured to select one of the images

the image projector module being configured to selectively use the warping, a blurring and/or a decimation operator associated with the image gi selected by the image selector module to generate a projected super-resolution enhanced image estimate, the super-resolution enhanced image estimate update generator module being configured to use the selected input image gi and the super-resolution enhanced image estimate to generate an updated super-resolution enhanced image.

3. A super-resolution enhanced image generating system as defined in claim 2 further comprising a control module configured to control the image selector module, image projector module, and the super-resolution enhanced image update generator to operate in successive iterations using respective ones of the images

in each iteration, the image projector module using the updated super-resolution enhanced image generated during the previous iteration as the super-resolution enhanced image estimate for the respective iteration.

4. A super-resolution enhanced image generating system as defined in claim 2 in which the super-resolution enhanced image estimate update generator is configured to generate at least one parameter in connection with a least squares estimate between the selected input image and the projected super-resolution enhanced image estimate.

5. A super-resolution enhanced image generating system as defined in claim 4 in which the super resolution enhanced image estimate update generator is configured to generate the super-resolution enhanced image update estimate, for the selected input image

in accordance with a virtual input image ĝi defined by

$$\hat{g}_i(x,y) = a\,g_i(x,y) + b$$

where “a” and “b” are parameters associated with respective pixel location (x,y) defined by

and where $\overline{p_i}(x,y)$, $\overline{g_i}(x,y)$, $\overline{p_i g_i}(x,y)$, $\overline{p_i^2}(x,y)$, and $\overline{g_i^2}(x,y)$ are weighted averages around the pixel at coordinates (x,y) in the respective projected super-resolution enhanced image estimate pi or input image gi.

6. A super-resolution enhanced image generating system as defined in claim 5 in which the super resolution enhanced image estimate update generator is configured to generate the weighted averages in accordance with

and so forth, where “w(u,v)” is a weighting kernel and “(u,v)” defines a region R around the respective pixel at location “(x,y).”

7. A super-resolution enhanced image generating system as defined in claim 5 in which the super resolution enhanced image estimate update generator is further configured to generate the super-resolution enhanced image update estimate, for the selected input image

in accordance with a cross-correlation value c(x,y) generated for a respective pixel at location (x,y) in the respective image defined by

and a selected threshold value.

8. A method of generating a super-resolution-enhanced image from an image of a scene, identified as image g0, comprising a base image and at least one other image gi, the method comprising:

A. an initial super-resolution enhanced image generation step of using the image g0 to generate a super-resolution enhanced image estimate;

B. an image projection step of using a warping, a blurring and/or a decimation operator associated with the image gi to generate a projected super-resolution enhanced image estimate; and

C. a super-resolution enhanced image estimate update generation step of using the input image gi and the super-resolution enhanced image estimate to generate an updated super-resolution enhanced image estimate.

9. A method as defined in claim 8 , the method being configured to generate the super-resolution enhanced image in relation to a plurality of other images identified as images

the method further comprising an image selection step of selecting one of the images

the image projection step including the step of selectively using the warping, a blurring and/or a decimation operator associated with the selected image gi to generate a projected super-resolution enhanced image estimate, the super-resolution enhanced image estimate update generation step including the step of using the selected input image gi and the super-resolution enhanced image estimate to generate an updated super-resolution enhanced image.

10. A method as defined in claim 9 further comprising a step of enabling the image selection step, the image projection step, and the super-resolution enhanced image update generation step to be performed in successive iterations using respective ones of the images

in each iteration, the image projection step including the step of using the updated super-resolution enhanced image generated during the previous iteration as the super-resolution enhanced image estimate for the respective iteration.

11. A super-resolution enhanced image generating method as defined in claim 10 in which the super-resolution enhanced image estimate update step includes the step of generating at least one parameter in connection with a least squares estimate between the selected input image and the projected super-resolution enhanced image estimate.

12. A super-resolution enhanced image generating method as defined in claim 11 in which the super resolution enhanced image estimate update generation step includes the step of generating the super-resolution enhanced image update estimate, for the selected input image

in accordance with a virtual input image ĝi defined by