CN115984658A - Multi-sensor fusion vehicle window identification method and system and readable storage medium


- Publication number: CN115984658A (application number CN202310066606.4A)

- Authority: CN (China)

- Legal status: Granted (as listed by Google Patents; the listed status is an assumption, not a legal conclusion)


Classifications


- Y—GENERAL TAGGING OF NEW TECHNOLOGICAL DEVELOPMENTS; GENERAL TAGGING OF CROSS-SECTIONAL TECHNOLOGIES SPANNING OVER SEVERAL SECTIONS OF THE IPC; TECHNICAL SUBJECTS COVERED BY FORMER USPC CROSS-REFERENCE ART COLLECTIONS [XRACs] AND DIGESTS

- Y02—TECHNOLOGIES OR APPLICATIONS FOR MITIGATION OR ADAPTATION AGAINST CLIMATE CHANGE

- Y02T—CLIMATE CHANGE MITIGATION TECHNOLOGIES RELATED TO TRANSPORTATION

- Y02T10/00—Road transport of goods or passengers

- Y02T10/10—Internal combustion engine [ICE] based vehicles

- Y02T10/40—Engine management systems

Landscapes

- Image Analysis (AREA)

- Image Processing (AREA)

Abstract

The invention provides a multi-sensor fusion vehicle window identification method and system and a readable storage medium. Illumination compensation is performed on rgb image data according to illumination data, and image features are extracted; temperature sensor data and a laser radar point cloud array are collected; the laser radar point cloud array is compensated according to the temperature sensor data, and point cloud features are extracted point by point; the image features and the point cloud features are fused at pixel level; and the vehicle window is identified from the fused features. A dynamic SSR (Single Scale Retinex) algorithm compensates and fuses the original rgb image with the illumination data, enhancing the image and reducing the influence of harsh illumination on vehicle window identification. Temperature sensor data are collected, and the radar point cloud array is temperature-compensated and fused on the basis of a tested laser radar distance-temperature compensation system, reducing the influence of temperature-induced point cloud inaccuracies on vehicle window identification.

Description

Technical Field

The invention relates to the field of vehicle window identification, in particular to a multi-sensor fusion vehicle window identification method and system and a readable storage medium.

Background

In prior-art vehicle window identification, rgb images captured under adverse lighting are prone to over-bright and over-dark regions, so extracting features directly from the original rgb image and then detecting the vehicle window gives poor results. At the same time, at low temperature the depth data of points far from the central area of the point cloud are often invalid or inaccurate, so volume measurements come out too small; at high temperature the noise in edge regions increases, and the point cloud sometimes appears raised in the interior and spread out at the periphery. The acquired raw data are therefore inaccurate, which degrades vehicle window identification.

These problems remain to be solved.

Disclosure of Invention

The invention aims to provide a multi-sensor fusion vehicle window identification method, a multi-sensor fusion vehicle window identification system and a readable storage medium.

In order to solve the technical problem, the invention provides a multi-sensor fusion vehicle window identification method, which comprises the following steps:

acquiring illumination data and rgb image data;

performing illumination compensation on the rgb image data according to the illumination data, and extracting image features;

collecting temperature sensor data and a laser radar point cloud array;

compensating the laser radar point cloud array according to the temperature sensor data, and extracting point cloud characteristics point by point;

performing pixel level fusion on the image features and the point cloud features;

and identifying the vehicle window according to the fused features.

Further, illumination compensation is performed on the rgb image data according to the illumination data by dynamically applying the Single Scale Retinex algorithm to the rgb image on the basis of the illumination data, with the expression:

$$\log R_i(x,y) = \log I_i(x,y) - \log L_i(x,y), \qquad L_i(x,y) = G(x,y) * I_i(x,y)$$

where I(x, y) is the original image, R(x, y) is the reflection component, L(x, y) is the illumination component, i denotes the i-th color channel, * denotes convolution, and G(x, y) is the Gaussian surround function;

wherein the scale parameter of the Gaussian surround is dynamically adjusted on the basis of the illumination variable Li by means of an adjustment factor, lx being the unit of illuminance:

after log R_i(x, y) is obtained, it is converted from the logarithmic domain to the real domain to obtain R(x, y), and linear stretching is then applied to obtain the output image, the final linear stretching formula being as follows:

In this way, the Single Scale Retinex algorithm dynamically performs illumination compensation on the rgb image to obtain the image data.

Further, the step of performing image feature extraction includes:

inputting the compensated image data into a trained ResNeXt CNN network to obtain a feature map;

and setting candidate ROIs on the feature map, sending them into an RPN (Region Proposal Network) to classify them and filter out part of the ROIs, and then performing an ROI Align operation that maps the pixels of the rgb image data to those of the feature map, to obtain the feature map of each candidate ROI.

Further, the step of compensating the laser radar point cloud array according to the temperature sensor data and extracting point cloud characteristics point by point comprises:

collecting distance data measured by the laser radar at different temperatures;

using the distance data to construct a distance-temperature change relation;

generating a relation between compensation time and temperature according to the speed-of-light distance relation;

finally, according to the current temperature of the laser radar, searching a corresponding compensation distance in the spline interpolation table, and calculating the compensation distance of each depth data in the point cloud array, thereby completing the compensation of the point cloud array;

and inputting the compensated point cloud array into a point cloud feature extraction network for feature extraction to obtain point cloud features.

Further, the step of identifying the vehicle window according to the fused features includes:

feeding the pixel-level fusion features into an FC layer to obtain a feature vector, and applying a Sigmoid operation to the feature vector for confidence judgment;

using the bounding box regression algorithm of R-CNN, processing the input feature vector to obtain an offset function, and then adjusting the original box by the offsets;

performing bilinear interpolation on the pixel-level fusion features that meet the threshold requirement to scale them to the size of the ROI Align feature map, and sending the feature map into an FCN (Fully Convolutional Network) to generate a vehicle window mask, each mask having a fixed size;

and resizing the vehicle window mask and filling in the background according to the original Proposal and the image size to obtain a mask matching the original image size, overlaying the mask and the regressed box on the original image, and displaying the confidence result of the Sigmoid branch in the corresponding region, thereby completing vehicle window identification.

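Further, the offset function is:

$$d_*(P) = \mathbf{w}_*^{\mathsf{T}}\,\phi(P), \qquad * \in \{M, N, w, h\}$$

where P denotes the original Proposal, $\phi(P)$ denotes the input feature vector, $d_*(P)$ denotes the predicted offset value, and $\mathbf{w}_*$ denotes the learned parameters;

the offset adjustment of the original box comprises translation and size scaling:

$$\hat{G}_M = P_w\, d_M(P) + P_M, \qquad \hat{G}_N = P_h\, d_N(P) + P_N$$

$$\hat{G}_w = P_w \exp\big(d_w(P)\big), \qquad \hat{G}_h = P_h \exp\big(d_h(P)\big)$$

where (M, N, w, h) denote the center-point coordinates, width and height of a box, and $\hat{G}$ is the adjusted box.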

Further, the step of performing pixel-level fusion on the image features and the point cloud features includes:

concatenating, along the channel dimension, the color feature of each pixel and the depth feature of the corresponding point cloud point to obtain a set of fused features, as follows:

where the two inputs are the extracted point cloud features and image features, respectively, and the operations involved are a concatenation operation, the ReLU activation function, a 1×1 convolution, and concatenation along the channel dimension;

feeding the fused features into an MLP and obtaining the global feature by average pooling;

and concatenating the global feature with the fused features along the channel dimension to obtain the pixel-level fusion feature, i.e., the fused feature.

The invention also provides a multi-sensor fusion vehicle window identification system, which comprises:

a first acquisition module adapted to acquire illumination data and rgb image data;

a first processing module adapted to perform illumination compensation on the rgb image data according to the illumination data and to extract image features;

a second acquisition module adapted to acquire temperature sensor data and a laser radar point cloud array;

a second processing module adapted to compensate the laser radar point cloud array according to the temperature sensor data and to extract point cloud features point by point;

a fusion module adapted to perform pixel-level fusion of the image features and the point cloud features;

and an identification module adapted to identify the vehicle window according to the fused features.

The invention also provides a computer-readable storage medium, wherein at least one instruction is stored in the computer-readable storage medium, and the instruction is executed by a processor to realize the multi-sensor fusion vehicle window identification method.

The invention also provides an electronic device, comprising a memory and a processor; at least one instruction is stored in the memory; the processor is used for realizing the multi-sensor fusion vehicle window identification method by loading and executing the at least one instruction.

The beneficial effects of the invention are as follows. The invention provides a multi-sensor fusion vehicle window identification method and system and a readable storage medium. The method comprises acquiring illumination data and rgb image data; performing illumination compensation on the rgb image data according to the illumination data and extracting image features; collecting temperature sensor data and a laser radar point cloud array; compensating the laser radar point cloud array according to the temperature sensor data and extracting point cloud features point by point; performing pixel-level fusion of the image features and the point cloud features; and identifying the vehicle window according to the fused features. A dynamic SSR algorithm compensates and fuses the original rgb image with the illumination data, enhancing the image and reducing the influence of harsh illumination on vehicle window identification; temperature sensor data are collected and the radar point cloud array is temperature-compensated and fused on the basis of a tested laser radar distance-temperature compensation system, reducing the influence of temperature-induced point cloud inaccuracies on vehicle window identification.

Drawings

The invention is further illustrated by the following examples in conjunction with the drawings.

Fig. 1 is a flowchart of a multi-sensor fusion vehicle window identification method according to an embodiment of the present invention.

Fig. 2 is a schematic block diagram of a multi-sensor fusion vehicle window identification system provided by an embodiment of the invention.

Fig. 3 is a partial functional block diagram of an electronic device provided by an embodiment of the invention.

Detailed Description

The present invention will now be described in further detail with reference to the accompanying drawings. These drawings are simplified schematic views illustrating only the basic structure of the present invention in a schematic manner, and thus show only the constitution related to the present invention.

Example 1

Referring to Figs. 1-3, an embodiment of the invention provides a multi-sensor fusion vehicle window identification method. A dynamic SSR algorithm compensates and fuses the original rgb image with the illumination data, enhancing the image and reducing the influence of harsh illumination on vehicle window identification; temperature sensor data are collected and the radar point cloud array is temperature-compensated and fused on the basis of a tested laser radar distance-temperature compensation system, reducing the influence of temperature-induced point cloud inaccuracies on vehicle window identification.

Specifically, the multi-sensor fusion vehicle window identification method comprises the following steps:

S110: Acquire illumination data and rgb image data.

Specifically, the illumination data are acquired by an illumination sensor, and the rgb image data are acquired by a 2D camera.

S120: Perform illumination compensation on the rgb image data according to the illumination data, and extract image features.

Specifically, illumination compensation is performed on the rgb image data according to the illumination data by dynamically applying the Single Scale Retinex algorithm to the rgb image on the basis of the illumination data, with the expression:

$$\log R_i(x,y) = \log I_i(x,y) - \log L_i(x,y), \qquad L_i(x,y) = G(x,y) * I_i(x,y)$$

where I(x, y) is the original image, R(x, y) is the reflection component, L(x, y) is the illumination component, i denotes the i-th color channel, * denotes convolution, and G(x, y) is the Gaussian surround function;

wherein the scale parameter of the Gaussian surround is dynamically adjusted on the basis of the illumination variable Li by means of an adjustment factor, lx being the unit of illuminance:

after log R_i(x, y) is obtained, it is converted from the logarithmic domain to the real domain to obtain R(x, y), and linear stretching is then applied to obtain the output image, the final linear stretching formula being as follows:

In this way, the Single Scale Retinex algorithm dynamically performs illumination compensation on the rgb image to obtain the image data.
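As a rough illustration of the compensation described above, the following NumPy sketch applies Single Scale Retinex per color channel with an illuminance-dependent Gaussian scale. The function `sigma_from_lux` and its constants are hypothetical stand-ins, because the patent's own expression for the scale parameter is not reproduced in this text; the min-max form of the linear stretch is likewise an assumption.

```python
import numpy as np

def sigma_from_lux(lux: float, base_sigma: float = 80.0, k: float = 0.5,
                   ref_lux: float = 500.0) -> float:
    """HYPOTHETICAL lux -> Gaussian-surround scale mapping (not the patent's formula):
    dimmer scenes (lux < ref_lux) get a larger sigma; clipped to a sane range."""
    return float(np.clip(base_sigma * (ref_lux / max(lux, 1.0)) ** k, 5.0, 250.0))

def gaussian_blur(channel: np.ndarray, sigma: float) -> np.ndarray:
    """Convolve one channel with a normalized, separable Gaussian surround G(x, y)."""
    radius = max(1, int(3 * sigma))
    t = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(t ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(channel, radius, mode="edge")
    conv = lambda v: np.convolve(v, kernel, mode="valid")
    rows = np.apply_along_axis(conv, 1, padded)   # filter along x
    return np.apply_along_axis(conv, 0, rows)     # then along y

def dynamic_ssr(image: np.ndarray, lux: float) -> np.ndarray:
    """Single Scale Retinex per color channel with an illuminance-dependent sigma,
    followed by a min-max linear stretch (an assumed stretch) to 0..255."""
    img = image.astype(np.float64) + 1.0          # +1 avoids log(0)
    sigma = sigma_from_lux(lux)
    out = np.zeros_like(img)
    for i in range(img.shape[2]):                 # i-th color channel
        L = gaussian_blur(img[..., i], sigma)     # illumination estimate G * I_i
        log_r = np.log(img[..., i]) - np.log(L)   # log R_i = log I_i - log L_i
        R = np.exp(log_r)                         # back to the real domain
        lo, hi = R.min(), R.max()
        if hi > lo:
            out[..., i] = 255.0 * (R - lo) / (hi - lo)
    return np.clip(np.round(out), 0, 255).astype(np.uint8)
```

A call such as `dynamic_ssr(frame, lux=measured_lux)` returns an 8-bit image of the same shape; the separable blur keeps the example dependency-free at the cost of speed.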

The step of performing image feature extraction includes:

inputting the compensated image data into a trained ResNeXt CNN network to obtain a feature map;

and setting candidate ROIs on the feature map, sending them into an RPN (Region Proposal Network) to classify them and filter out part of the ROIs, and then performing an ROI Align operation that maps the pixels of the rgb image data to those of the feature map, to obtain the feature map of each candidate ROI.

Specifically, to obtain a feature map of fixed size, ROI Align uses bilinear interpolation to determine the pixel value at a virtual point of the original image from the four actually existing pixels around it:

$$f(x,y) = \frac{f(x_1,y_1)(x_2-x)(y_2-y) + f(x_2,y_1)(x-x_1)(y_2-y) + f(x_1,y_2)(x_2-x)(y-y_1) + f(x_2,y_2)(x-x_1)(y-y_1)}{(x_2-x_1)(y_2-y_1)}$$

where f(·) denotes the pixel value at a coordinate point; $(x_1, y_1)$, $(x_1, y_2)$, $(x_2, y_1)$ and $(x_2, y_2)$ are the upper-left, lower-left, upper-right and lower-right neighbors of the virtual point, respectively; and (x, y) are the coordinates of the virtual point.

After ROI Align, the features of the original image are scaled by bilinear interpolation, and the resulting structure is:

where dim is the feature dimension and H, W are the scaled sizes of the ROI region of the original image.
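The four-neighbor bilinear step above is the core of ROI Align. The sketch below is a minimal single-channel NumPy version under stated assumptions: the function names, the box format (x0, y0, x1, y1) in feature-map coordinates, and `samples` (regular sampling points per bin, averaged) are illustrative choices, not the patent's implementation, and batching and channels are omitted.

```python
import numpy as np

def bilinear_sample(feature: np.ndarray, x: float, y: float) -> float:
    """Pixel value at a virtual point (x, y) from its four neighbors.
    feature is indexed as feature[y, x]; coordinates are clamped to the map."""
    h, w = feature.shape
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x1, y1 = int(np.floor(x)), int(np.floor(y))
    x2, y2 = min(x1 + 1, w - 1), min(y1 + 1, h - 1)
    dx, dy = x - x1, y - y1
    upper = (1 - dx) * feature[y1, x1] + dx * feature[y1, x2]   # upper-left, upper-right
    lower = (1 - dx) * feature[y2, x1] + dx * feature[y2, x2]   # lower-left, lower-right
    return float((1 - dy) * upper + dy * lower)

def roi_align(feature, box, out_h, out_w, samples=2):
    """Minimal ROI Align: split box (x0, y0, x1, y1) into out_h x out_w bins without
    rounding, bilinearly sample samples x samples regular points per bin, average."""
    x0, y0, x1, y1 = box
    bin_w, bin_h = (x1 - x0) / out_w, (y1 - y0) / out_h
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            vals = [bilinear_sample(feature,
                                    x0 + (j + (sj + 0.5) / samples) * bin_w,
                                    y0 + (i + (si + 0.5) / samples) * bin_h)
                    for si in range(samples) for sj in range(samples)]
            out[i, j] = np.mean(vals)
    return out
```

Because no coordinate is rounded, the pooled value of a bin varies smoothly with the box position, which is what keeps image pixels and feature-map pixels aligned.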

S130: Collect temperature sensor data and a laser radar point cloud array.

S140: Compensate the laser radar point cloud array according to the temperature sensor data, and extract point cloud features point by point.

In the present embodiment, S140 includes the following steps:

S141: Collect distance data measured by the laser radar at different temperatures.

S142: Construct a distance-temperature change relation from the distance data.

Specifically, the constructed distance-temperature change curve equation is:

The compensation time corresponding to the current temperature of the radar is then calculated, and the shutter signal is time-compensated accordingly, completing the time-domain compensation of the radar.

S143: Generate the relation between compensation time and temperature according to the speed-of-light distance relation.

Specifically, the temperature at the calibration starting point of the laser radar is substituted into the distance-temperature curve equation to obtain the corresponding initial distance;

according to the speed-of-light distance formula S = 0.5 × c × Δt, where c is the speed of light and Δt is the sum of the laser's outgoing and return flight times, the functional relation between the compensation time Δt and the temperature f is:
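The following NumPy sketch illustrates how a fitted distance-temperature curve turns into a compensation time through S = 0.5 × c × Δt. The polynomial curve form, its degree, and the function names are assumptions made for illustration, because the patent's own curve equation is not reproduced in this text.

```python
import numpy as np

C_LIGHT = 299_792_458.0  # speed of light in vacuum, m/s

def fit_distance_temperature(temps_c, distances_m, degree=2):
    """Fit s(f): distance reported for a fixed target vs. laser radar temperature.
    The low-order polynomial form is an ASSUMPTION; the patent's curve is not shown."""
    return np.poly1d(np.polyfit(temps_c, distances_m, degree))

def compensation_time(curve, temp_c, calib_temp_c):
    """From S = 0.5 * c * dt, a range drift ds corresponds to a round-trip time
    dt = 2 * ds / c, measured relative to the calibration temperature."""
    ds = curve(temp_c) - curve(calib_temp_c)
    return 2.0 * ds / C_LIGHT
```

A drift of a few millimeters corresponds to tens of picoseconds, which indicates the timing resolution the shutter-signal compensation would need.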

On the basis of the time-domain compensation, the distance measured by the laser radar at each temperature is collected to form a second data set of distance versus temperature; cubic spline interpolation is performed on this second data set to generate a spline interpolation table, establishing the relation between temperature and compensation distance.

The interpolation function is:

where f is the acquired temperature, s is the distance at the corresponding temperature point, and xq is the interpolation interval. The compensation distance corresponding to the current temperature of the laser radar is looked up in the spline interpolation table, and the compensation distance of the depth data is calculated. Specifically, the compensation distance at the calibration initial temperature is looked up first as the initial value, and the final compensation distance is the looked-up compensation distance minus this initial value.
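The table construction and lookup described above can be sketched as follows, assuming a natural cubic spline (zero second derivative at both ends) and nearest-grid-entry lookup; both boundary condition and lookup rule are assumptions. The names `build_spline_table` and `compensation_distance` are hypothetical, `xq` is used as the grid step, and `query` mirrors the table lookup operation named in the text.

```python
import numpy as np

def natural_cubic_spline(x, y):
    """Natural cubic spline through (x, y), x strictly increasing; returns s(q)."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    n, h = len(x), np.diff(x)
    A, rhs = np.zeros((n, n)), np.zeros(n)
    A[0, 0] = A[-1, -1] = 1.0                       # natural ends: s'' = 0
    for i in range(1, n - 1):
        A[i, i - 1:i + 2] = [h[i - 1], 2.0 * (h[i - 1] + h[i]), h[i]]
        rhs[i] = 6.0 * ((y[i + 1] - y[i]) / h[i] - (y[i] - y[i - 1]) / h[i - 1])
    M = np.linalg.solve(A, rhs)                     # second derivatives at the knots

    def s(q):
        q = np.asarray(q, dtype=float)
        j = np.clip(np.searchsorted(x, q) - 1, 0, n - 2)   # interval [x_j, x_j+1]
        hj, a, b = h[j], x[j + 1] - q, q - x[j]
        return ((M[j] * a**3 + M[j + 1] * b**3) / (6.0 * hj)
                + (y[j] / hj - M[j] * hj / 6.0) * a
                + (y[j + 1] / hj - M[j + 1] * hj / 6.0) * b)
    return s

def build_spline_table(temps, distances, xq=0.5):
    """Spline interpolation table: temperature grid with step xq -> distance."""
    temps = np.asarray(temps, dtype=float)
    grid = np.arange(temps[0], temps[-1] + xq / 2, xq)
    return grid, natural_cubic_spline(temps, distances)(grid)

def query(grid, table, temp):
    """Table lookup at the grid entry nearest to temp."""
    return table[np.argmin(np.abs(grid - temp))]

def compensation_distance(grid, table, temp, calib_temp):
    """Looked-up value at the current temperature minus the value at calibration."""
    return query(grid, table, temp) - query(grid, table, calib_temp)
```

Precomputing the table keeps the run-time work to one lookup per frame, since the current temperature is the same for every point.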

S144: Finally, look up the compensation distance corresponding to the current temperature of the laser radar in the spline interpolation table, and calculate the compensation distance of each depth value in the point cloud array, thereby completing the compensation of the point cloud array.

The final compensation distance vector is expressed as:

where F is the current temperature, query() denotes the table lookup operation, and the result is the compensation distance vector.

The input point cloud data are compensated as follows:

where i indexes the points of the point cloud array (the i-th point), and a, b and c are the x, y and z coordinates of that point in the three-dimensional point cloud coordinate system.
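Since the per-point compensation formula is not reproduced here, the sketch below assumes one plausible rule for illustration only: each point (a, b, c) is moved along its line of sight so that its range shrinks by the compensation distance. The function name and the sign convention are hypothetical.

```python
import numpy as np

def compensate_point_cloud(points: np.ndarray, delta_s) -> np.ndarray:
    """ASSUMED compensation rule: move each point (a, b, c) along its line of sight
    so that its range r = |(a, b, c)| becomes r - delta_s, i.e. p' = p * (r - delta_s) / r.
    points: (N, 3); delta_s: scalar or (N,) compensation distances."""
    d = np.asarray(delta_s, dtype=float).reshape(-1, 1)
    r = np.linalg.norm(points, axis=1, keepdims=True)
    safe_r = np.where(r > 0, r, 1.0)                 # avoid dividing by zero
    scale = np.where(r > 0, (r - d) / safe_r, 1.0)   # origin points stay unchanged
    return points * scale
```

Scaling along the ray keeps each point's bearing fixed, which matches the idea that temperature affects measured time of flight rather than direction.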

S145: Input the compensated point cloud array into a point cloud feature extraction network, built around PCTR (Point Cloud Transformer) units, to extract the point cloud features.

Specifically, step S145 includes the steps of:

(1) FPS (farthest point sampling) is applied to the point cloud, and local coordinates and local features are extracted using the K-nearest-neighbor method; these are fed respectively into a local feature extraction unit, a local PCTR unit and a local skip connection unit, which extract high-dimensional local features in different feature subspaces.
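The sampling and grouping front end of step (1) can be sketched in NumPy as below. This is a generic FPS plus K-nearest-neighbor grouping under stated assumptions (brute-force distances, starting index 0, hypothetical function names); the downstream feature extraction, PCTR and skip connection units are not shown.

```python
import numpy as np

def farthest_point_sampling(points: np.ndarray, m: int, start: int = 0) -> np.ndarray:
    """Greedy FPS: each new sample is the point farthest from all samples so far."""
    chosen = np.empty(m, dtype=int)
    chosen[0] = start
    dist = np.linalg.norm(points - points[start], axis=1)  # distance to nearest sample
    for k in range(1, m):
        chosen[k] = int(np.argmax(dist))
        dist = np.minimum(dist, np.linalg.norm(points - points[chosen[k]], axis=1))
    return chosen

def knn_group(points: np.ndarray, centers_idx: np.ndarray, k: int):
    """k nearest neighbors of each sampled center (the center itself included),
    returned with their coordinates relative to the center (local coordinates)."""
    centers = points[centers_idx]                                         # (m, 3)
    d = np.linalg.norm(centers[:, None, :] - points[None, :, :], axis=2)  # (m, n)
    groups = np.argsort(d, axis=1)[:, :k]                                 # (m, k)
    local_xyz = points[groups] - centers[:, None, :]                      # (m, k, 3)
    return groups, local_xyz
```

The brute-force distance matrix is O(m·n) in memory, which is fine for a sketch; a practical implementation would typically use a spatial index.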

(2) Feature joining and fusion

The three different local features are added element-wise (matrix addition), concatenated with the global feature along the feature dimension, and fused by a single nonlinear convolution layer, as follows:

where the first two terms are the outputs of the local feature extraction unit and the local skip connection unit, the next two are the outputs of the local PCTR unit and the global PCTR unit, the fusion operator is a single-layer nonlinear convolution, and the concatenation operator expands the dimension of the global feature in proportion and joins it along the feature-channel dimension.
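A minimal NumPy reading of this fusion step is sketched below, assuming (N, D) per-point local features, a (D_g,) global feature repeated to every point (the "expanded dimension"), and ReLU as the nonlinearity; the shapes, the ReLU choice and the function name are assumptions. A 1×1 convolution over points is written as a shared matrix multiply.

```python
import numpy as np

def fuse_local_global(f_local_feat, f_local_skip, f_local_pctr, f_global, weight, bias):
    """Sum the three local features (N, D), expand the global feature (D_g,) to every
    point, concatenate along the channel axis, then one 1x1 conv + ReLU.
    A 1x1 conv over points is a shared linear map: (N, D + D_g) @ (D + D_g, D_out)."""
    local = f_local_feat + f_local_skip + f_local_pctr                        # element-wise sum
    glob = np.broadcast_to(f_global, (local.shape[0], f_global.shape[-1]))    # expand dims
    x = np.concatenate([local, glob], axis=1)                                 # channel concat
    return np.maximum(x @ weight + bias, 0.0)                                 # nonlinear conv
```

Summing the three local branches keeps the channel count fixed, while concatenating the global branch lets every point see scene-level context.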

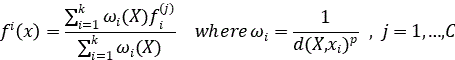

(5) In the decoding stage, per-point point cloud features are obtained through inverse-interpolation upsampling and skip connections. The inverse interpolation is computed as:

where X denotes a point in the upsampled point cloud feature set, the summed terms are points of the existing point cloud feature set, the weights denote the weighting operation, and C is the number of points.

After inverse interpolation, because the interpolated features lack local information, a local skip connection is applied to obtain the final per-point point cloud features, whose structure is expressed as:

where C is the number of points, d is the spatial coordinate dimension, and F is the feature dimension.
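The weighting used in the inverse interpolation is not recoverable from this text. The sketch below assumes the inverse-distance weighting common in PointNet++-style decoders (k nearest source points, weight 1/d^p with k = 3, p = 2 as defaults) purely as an illustration; the function name and defaults are hypothetical.

```python
import numpy as np

def inverse_distance_interpolate(dst_xyz, src_xyz, src_feat, k=3, p=2.0, eps=1e-8):
    """Propagate features from a sparse point set (src) to a denser one (dst):
    f(X) = sum_i w_i f_i / sum_i w_i, with w_i = 1 / d(X, x_i)^p over the k nearest
    src points (ASSUMED weighting; k = 3, p = 2 are common defaults, not the patent's)."""
    d = np.linalg.norm(dst_xyz[:, None, :] - src_xyz[None, :, :], axis=2)  # (n_dst, n_src)
    idx = np.argsort(d, axis=1)[:, :k]                                     # k nearest
    nd = np.take_along_axis(d, idx, axis=1)
    w = 1.0 / (nd ** p + eps)
    w = w / w.sum(axis=1, keepdims=True)                                   # normalize
    return np.einsum("nk,nkc->nc", w, src_feat[idx])                       # (n_dst, C)
```

The interpolated features could then be concatenated with the encoder features of the same resolution, which is the role the local skip connection plays in the description above.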

S150: Perform pixel-level fusion of the image features and the point cloud features.

Specifically, the color feature of each pixel and the depth feature of the corresponding point cloud point are concatenated along the channel dimension to obtain a set of fused features, as follows:

where the two inputs are the extracted point cloud features and image features, respectively, and the operations involved are a concatenation operation, the ReLU activation function, a 1×1 convolution, and concatenation along the channel dimension;

the fused features are fed into an MLP, and the global feature is obtained by average pooling;

the global feature and the fused features are concatenated along the channel dimension to obtain the pixel-level fusion feature, i.e., the dense fused feature.
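The pixel-level fusion can be sketched in NumPy as follows, assuming the pixel-to-point correspondence is already known so that row n of both inputs refers to the same location. The single-layer MLP, the parameter names and the random initialization helper are hypothetical and serve only to make the shapes concrete.

```python
import numpy as np

def conv1x1_relu(x, weight, bias):
    """A 1x1 convolution over N pixels is a shared linear map, followed by ReLU."""
    return np.maximum(x @ weight + bias, 0.0)

def pixel_level_fusion(point_feat, image_feat, params):
    """point_feat: (N, Cp) features of N points; image_feat: (N, Ci) color features of
    the pixels those points project to (the correspondence is assumed to be given)."""
    fused = np.concatenate([point_feat, image_feat], axis=1)          # channel concat
    fused = conv1x1_relu(fused, params["w_fuse"], params["b_fuse"])   # 1x1 conv + ReLU
    h = conv1x1_relu(fused, params["w_mlp"], params["b_mlp"])         # shared MLP layer
    global_feat = h.mean(axis=0, keepdims=True)                       # average pooling
    global_tiled = np.repeat(global_feat, fused.shape[0], axis=0)     # copy to every pixel
    return np.concatenate([fused, global_tiled], axis=1)              # dense fused feature

def random_params(cp, ci, c_fuse, c_glob, seed=0):
    """Hypothetical randomly initialized weights, for shape checking only."""
    rng = np.random.default_rng(seed)
    return {"w_fuse": rng.normal(size=(cp + ci, c_fuse)), "b_fuse": np.zeros(c_fuse),
            "w_mlp": rng.normal(size=(c_fuse, c_glob)), "b_mlp": np.zeros(c_glob)}
```

Each output row therefore carries its own fused local feature plus an identical copy of the scene-level global feature.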

S160: Identify the vehicle window according to the fused features.

Step S160 includes the following steps:

Using the bounding box regression algorithm of R-CNN, the input feature vector is processed to obtain an offset function, which is used to adjust the original box. Here (M, N, w, h) denote the center-point coordinates, width and height of the window box.

The offset function is:

$$d_*(P) = \mathbf{w}_*^{\mathsf{T}}\,\phi(P), \qquad * \in \{M, N, w, h\}$$

where P denotes the original Proposal, $\phi(P)$ denotes the input feature vector, $d_*(P)$ denotes the predicted offset value, and $\mathbf{w}_*$ denotes the learned parameters;

the offset adjustment of the original box comprises translation and size scaling:

$$\hat{G}_M = P_w\, d_M(P) + P_M, \qquad \hat{G}_N = P_h\, d_N(P) + P_N$$

$$\hat{G}_w = P_w \exp\big(d_w(P)\big), \qquad \hat{G}_h = P_h \exp\big(d_h(P)\big)$$

where $\hat{G}$ is the adjusted box.
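The R-CNN style box regression named in the text can be sketched as below: a linear offset function followed by translation scaled by the proposal size and log-space size scaling. Box layout (M, N, w, h) follows the text; the function names and array shapes are illustrative assumptions.

```python
import numpy as np

def predict_deltas(features, W):
    """Linear offset function d_*(P) = w_*^T phi(P); features (K, D), W (D, 4)."""
    return features @ W

def decode_boxes(proposals, deltas):
    """Apply R-CNN box-regression offsets.
    proposals: (K, 4) as (M, N, w, h) = center x, center y, width, height.
    deltas:    (K, 4) predicted (d_M, d_N, d_w, d_h)."""
    P_M, P_N, P_w, P_h = proposals.T
    d_M, d_N, d_w, d_h = deltas.T
    G_M = P_w * d_M + P_M            # translation, scaled by proposal width
    G_N = P_h * d_N + P_N            # translation, scaled by proposal height
    G_w = P_w * np.exp(d_w)          # log-space size scaling
    G_h = P_h * np.exp(d_h)
    return np.stack([G_M, G_N, G_w, G_h], axis=1)
```

Normalizing translations by proposal size and predicting sizes in log space makes the learned offsets roughly scale-invariant.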

Bilinear interpolation is performed on the pixel-level fusion features that meet the threshold requirement to scale them to the size of the ROI Align feature map, and the feature map is sent into an FCN (Fully Convolutional Network) to generate a vehicle window mask, each mask having a fixed size.

The vehicle window mask is resized and its background filled according to the original Proposal and the image size to obtain a mask matching the original image size; the mask and the regressed box are overlaid on the original image, and the confidence result of the Sigmoid branch is displayed in the corresponding region, completing vehicle window identification.
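The resize-and-paste step can be sketched as follows, assuming an integer pixel box (x0, y0, x1, y1) that lies inside the image, align-corners bilinear resizing, and a 0.5 binarization threshold; all three, and the function names, are assumptions rather than details given in the text.

```python
import numpy as np

def resize_bilinear(mask, out_h, out_w):
    """Bilinear resize of a small mask to (out_h, out_w), align-corners style."""
    in_h, in_w = mask.shape
    ys, xs = np.linspace(0, in_h - 1, out_h), np.linspace(0, in_w - 1, out_w)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, in_h - 1), np.minimum(x0 + 1, in_w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    top = mask[y0][:, x0] * (1 - wx) + mask[y0][:, x1] * wx
    bot = mask[y1][:, x0] * (1 - wx) + mask[y1][:, x1] * wx
    return top * (1 - wy) + bot * wy

def paste_mask(mask, box, img_h, img_w, threshold=0.5):
    """Resize the per-ROI mask to its box, paste it into a zero (background) canvas
    of the original image size, and binarize it."""
    x0, y0, x1, y1 = box
    canvas = np.zeros((img_h, img_w))
    canvas[y0:y1, x0:x1] = resize_bilinear(mask, y1 - y0, x1 - x0)
    return canvas >= threshold
```

Thresholding after resizing, rather than before, gives smoother window boundaries at the original resolution.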

Example 2

The embodiment provides a multi-sensor fusion vehicle window identification system. The multi-sensor fusion vehicle window identification system comprises:

A first acquisition module adapted to acquire illumination data and rgb image data. In the present embodiment, the first acquisition module is adapted to implement step S110 in Example 1.

A first processing module adapted to perform illumination compensation on the rgb image data according to the illumination data and to extract image features. In the present embodiment, the first processing module is adapted to implement step S120 in Example 1.

A second acquisition module adapted to acquire temperature sensor data and the laser radar point cloud array. In the present embodiment, the second acquisition module is adapted to implement step S130 in Example 1.

A second processing module adapted to compensate the laser radar point cloud array according to the temperature sensor data and to extract point cloud features point by point. In the present embodiment, the second processing module is adapted to implement step S140 in Example 1.

A fusion module adapted to perform pixel-level fusion of the image features and the point cloud features. In the present embodiment, the fusion module is adapted to implement step S150 in Example 1.

An identification module adapted to identify the vehicle window according to the fused features. In the present embodiment, the identification module is adapted to implement step S160 in Example 1.

Example 3

The present embodiment provides a computer-readable storage medium, wherein at least one instruction is stored in the computer-readable storage medium, and the instruction is executed by a processor, so that the multi-sensor fusion vehicle window identification method provided in embodiment 1 is implemented.

The multi-sensor fusion vehicle window identification method comprises: performing illumination compensation on the rgb image data according to the illumination data and extracting image features; collecting temperature sensor data and a laser radar point cloud array; compensating the laser radar point cloud array according to the temperature sensor data and extracting point cloud features point by point; performing pixel-level fusion of the image features and the point cloud features; and identifying the vehicle window according to the fused features. A dynamic SSR algorithm compensates and fuses the original rgb image with the illumination data, enhancing the image and reducing the influence of harsh illumination on vehicle window identification; temperature sensor data are collected and the radar point cloud array is temperature-compensated and fused on the basis of a tested laser radar distance-temperature compensation system, reducing the influence of temperature-induced point cloud inaccuracies on vehicle window identification.

Example 4

Referring to fig. 3, the present embodiment provides an electronic device, including: a memory 502 and a processor 501; the memory 502 has at least one program instruction stored therein; the processor 501 loads and executes the at least one program instruction to implement the multi-sensor fusion vehicle window identification method provided in embodiment 1.

The memory 502 and the processor 501 are connected by a bus, which may include any number of interconnected buses and bridges that link together the various circuits of the processor 501 and the memory 502. The bus may also connect various other circuits such as peripherals, voltage regulators and power management circuits, which are well known in the art and are therefore not described further herein. A bus interface provides an interface between the bus and the transceiver. The transceiver may be one element or a plurality of elements, such as a plurality of receivers and transmitters, providing a means for communicating with various other apparatus over a transmission medium. The data processed by the processor 501 are transmitted over a wireless medium through an antenna, which also receives data and passes them to the processor 501.

The processor 501 is responsible for managing the bus and for general processing, and may also provide various functions including timing, peripheral interfaces, voltage regulation, power management and other control functions. The memory 502 may be used to store data used by the processor 501 when performing operations.

In summary, the present invention provides a multi-sensor fusion vehicle window identification method and system and a readable storage medium. The method comprises acquiring illumination data and rgb image data; performing illumination compensation on the rgb image data according to the illumination data and extracting image features; collecting temperature sensor data and a laser radar point cloud array; compensating the laser radar point cloud array according to the temperature sensor data and extracting point cloud features point by point; performing pixel-level fusion of the image features and the point cloud features; and identifying the vehicle window according to the fused features. A dynamic SSR algorithm compensates and fuses the original rgb image with the illumination data, enhancing the image and reducing the influence of harsh illumination on vehicle window identification; temperature sensor data are collected and the radar point cloud array is temperature-compensated and fused on the basis of a tested laser radar distance-temperature compensation system, reducing the influence of temperature-induced point cloud inaccuracies on vehicle window identification.

The components used in the present application (where their specific structures are not illustrated) are all common standard components or components known to those skilled in the art, whose structure and principle can be learned from technical manuals or through routine experimentation. Moreover, the software programs referred to in the present application are all prior art, and the present application does not involve any improvement to the software programs.

In the description of the embodiments of the present invention, unless otherwise explicitly specified or limited, the terms "mounted" and "connected" are to be construed broadly: for example, a connection may be fixed, removable, or integral; it may be mechanical or electrical; and it may be direct, indirect through an intervening medium, or an internal communication between two elements. Those skilled in the art can understand the specific meanings of these terms in the present invention according to the specific circumstances.

In the description of the present invention, it should be noted that the terms "center", "upper", "lower", "left", "right", "vertical", "horizontal", "inner", "outer", etc., indicate orientations or positional relationships based on those shown in the drawings. They are used only for convenience and simplicity of description and do not indicate or imply that the device or element referred to must have a particular orientation or be constructed and operated in a particular orientation; they should therefore not be construed as limiting the present invention. Furthermore, the terms "first," "second," and "third" are used for descriptive purposes only and are not to be construed as indicating or implying relative importance.

In the several embodiments provided in the present application, it should be understood that the disclosed system, apparatus and method may be implemented in other ways. The above-described embodiments of the apparatus are merely illustrative, and for example, the division of the units is only one logical division, and there may be other divisions when actually implemented, and for example, a plurality of units or components may be combined or integrated into another system, or some features may be omitted, or not executed. In addition, the shown or discussed coupling or direct coupling or communication connection between each other may be through some communication interfaces, indirect coupling or communication connection between devices or units, and may be in an electrical, mechanical or other form.

The units described as separate parts may or may not be physically separate, and parts displayed as units may or may not be physical units, may be located in one place, or may be distributed on a plurality of network units. Some or all of the units can be selected according to actual needs to achieve the purpose of the solution of the embodiment.

In addition, the functional units in the embodiments of the present invention may be integrated into one processing unit, each unit may exist physically alone, or two or more units may be integrated into one unit.

In light of the foregoing description of the preferred embodiment of the present invention, many modifications and variations will be apparent to those skilled in the art without departing from the spirit and scope of the invention. The technical scope of the present invention is not limited to the content of the specification, and must be determined according to the scope of the claims.

Claims (10)

1. A multi-sensor fusion vehicle window identification method is characterized by comprising the following steps:

acquiring illumination data and RGB image data;

performing illumination compensation on the RGB image data according to the illumination data, and extracting image features;

collecting temperature sensor data and a laser radar point cloud array;

compensating the laser radar point cloud array according to the temperature sensor data, and extracting point cloud features point by point;

performing pixel level fusion on the image features and the point cloud features;

and identifying the vehicle window according to the fused features.

2. The multi-sensor fusion window identification method of claim 1,

wherein performing illumination compensation on the RGB image data according to the illumination data comprises dynamically compensating the RGB image with the Single Scale Retinex (SSR) algorithm based on the illumination data, using the following expression:

in the formula, I(x, y) is the original image, R(x, y) is the reflection component, L(x, y) is the illumination component, i denotes the i-th color channel, * denotes convolution, and G(x, y) is the Gaussian surround function;

wherein the scale parameter of the Gaussian surround is dynamically adjusted according to the illuminance variable L_i by means of an adjustment factor, with illuminance measured in lux (lx):

after the log-domain result is obtained, it is converted from the logarithmic domain back to the real domain to obtain R(x, y), which is then linearly stretched to produce the output image; the final linear stretching formula is as follows:
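The expression images for this claim were not preserved. In the standard SSR formulation, which uses the symbols defined above, the reflection component of channel i is computed in the log domain as $\log R_i(x,y) = \log I_i(x,y) - \log[G(x,y) * I_i(x,y)]$, where $G * I_i$ serves as the illumination estimate $L_i$ and $G(x,y) = K e^{-(x^2+y^2)/(2\sigma^2)}$ is normalized to unit sum. The NumPy sketch below follows that form under stated assumptions: the lux-to-scale mapping `dynamic_sigma` and all of its constants are hypothetical stand-ins for the patent's unpreserved adjustment formula, and the final step is a plain min-max stretch to [0, 255], which may differ from the patent's stretching formula.

```python
import numpy as np


def dynamic_sigma(lux: float, base_sigma: float = 80.0, ref_lux: float = 1000.0,
                  alpha: float = 0.3, lo: float = 5.0, hi: float = 250.0) -> float:
    """HYPOTHETICAL lux -> Gaussian-scale mapping: darker scenes get a wider surround."""
    s = base_sigma * (ref_lux / max(lux, 1.0)) ** alpha
    return float(np.clip(s, lo, hi))


def gaussian_blur(channel: np.ndarray, sigma: float) -> np.ndarray:
    """Separable convolution G * I with a unit-sum Gaussian and edge padding."""
    radius = max(1, int(3 * sigma))
    t = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(t ** 2) / (2.0 * sigma ** 2))
    k /= k.sum()
    padded = np.pad(channel, radius, mode="edge")
    # "valid" convolution along rows, then columns, removes the padding again.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def ssr_dynamic(image: np.ndarray, lux: float, **sigma_kwargs) -> np.ndarray:
    """Single Scale Retinex per channel with a lux-dependent scale, then min-max stretch."""
    img = image.astype(np.float64) + 1.0  # +1 keeps log() finite on black pixels
    sigma = dynamic_sigma(lux, **sigma_kwargs)
    out = np.zeros_like(img)
    for c in range(img.shape[2]):
        log_r = np.log(img[..., c]) - np.log(gaussian_blur(img[..., c], sigma))
        r = np.exp(log_r)  # back from the log domain to the real domain
        span = r.max() - r.min()
        if span > 1e-9:  # a flat channel carries no reflectance detail; leave it at 0
            out[..., c] = (r - r.min()) / span * 255.0
    return np.clip(np.round(out), 0, 255).astype(np.uint8)
```

For example, `ssr_dynamic(frame, lux=35.0)` would enhance an H x W x 3 `uint8` frame captured in dim light. The separable 1-D passes keep the sketch dependency-free; a production pipeline would more likely use an optimized Gaussian filter from an imaging library.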

3. The multi-sensor fusion window identification method of claim 2,

the step of performing image feature extraction includes:

inputting the compensated image data into a trained ResNeXt CNN network to obtain a feature map;

and setting candidate ROIs on the feature map, feeding the candidate ROIs into an RPN (Region Proposal Network) to classify them and filter out part of them, and then performing an ROI Align operation that aligns the pixels of the RGB image data with those of the feature map, to obtain the feature map of each candidate ROI.
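The ROI Align step can be illustrated with a minimal single-ROI sketch. It assumes the sampling scheme popularized by Mask R-CNN: the ROI is projected onto the feature map without rounding, each output bin is sampled at a regular grid of points by bilinear interpolation, and the samples are averaged. The function names, the `spatial_scale` (inverse feature stride), the 7 x 7 output size, and two samples per bin axis are illustrative assumptions, not values taken from the patent.

```python
import numpy as np


def bilinear(fm: np.ndarray, y: float, x: float) -> np.ndarray:
    """Bilinearly interpolate a (C, H, W) feature map at a real-valued (y, x)."""
    _, H, W = fm.shape
    if y < -1.0 or y > H or x < -1.0 or x > W:
        return np.zeros(fm.shape[0])  # sample point falls outside the map
    y, x = min(max(y, 0.0), H - 1), min(max(x, 0.0), W - 1)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, H - 1), min(x0 + 1, W - 1)
    ly, lx = y - y0, x - x0
    return ((1 - ly) * (1 - lx) * fm[:, y0, x0] + (1 - ly) * lx * fm[:, y0, x1]
            + ly * (1 - lx) * fm[:, y1, x0] + ly * lx * fm[:, y1, x1])


def roi_align(fm: np.ndarray, box, spatial_scale: float,
              out_size=(7, 7), samples: int = 2) -> np.ndarray:
    """Pool one image-space box (x1, y1, x2, y2) into a fixed-size feature map."""
    x1, y1, x2, y2 = [v * spatial_scale for v in box]  # no coordinate rounding
    ph, pw = out_size
    bin_h = max(y2 - y1, 1.0) / ph
    bin_w = max(x2 - x1, 1.0) / pw
    out = np.zeros((fm.shape[0], ph, pw))
    for i in range(ph):
        for j in range(pw):
            acc = np.zeros(fm.shape[0])
            for sy in range(samples):
                for sx in range(samples):
                    y = y1 + (i + (sy + 0.5) / samples) * bin_h
                    x = x1 + (j + (sx + 0.5) / samples) * bin_w
                    acc += bilinear(fm, y, x)
            out[:, i, j] = acc / samples ** 2  # average the bin's samples
    return out
```

Avoiding coordinate quantization is what keeps the pooled features aligned with the original RGB pixels, which matters for the later pixel-level mask.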

4. The multi-sensor fusion window identification method of claim 1,

the step of compensating the laser radar point cloud array according to the temperature sensor data and extracting point cloud features point by point comprises:

collecting distance data measured by the laser radar at different temperatures;

constructing a relation between distance and temperature change from the distance data;

deriving the relation between compensation time and temperature from the relation between distance and the speed of light;

finally, according to the current temperature of the laser radar, looking up the corresponding compensation distance in a spline interpolation table and computing the compensation distance for each depth value in the point cloud array, thereby completing the compensation of the point cloud array;

and inputting the compensated point cloud array into a point cloud feature extraction network for feature extraction to obtain point cloud features.
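The compensation steps above can be sketched as follows. This is a minimal illustration under stated assumptions: the "spline interpolation table" is modeled as a natural cubic spline fitted to calibration pairs of (temperature in degrees C, range offset in meters); the offset is assumed to mean measured range minus true range, so it is subtracted from every depth value; and time and distance are related by the round-trip time-of-flight relation t = 2d / c. The function names and any calibration numbers are hypothetical.

```python
import numpy as np

C_LIGHT = 299_792_458.0  # speed of light in m/s


def natural_cubic_spline(xs, ys):
    """Return a callable natural cubic spline through (xs, ys); xs strictly increasing."""
    xs, ys = np.asarray(xs, float), np.asarray(ys, float)
    n, h = len(xs), np.diff(xs)
    # Solve for second derivatives M with M[0] = M[-1] = 0 (natural boundary).
    A, b = np.zeros((n, n)), np.zeros(n)
    A[0, 0] = A[-1, -1] = 1.0
    for i in range(1, n - 1):
        A[i, i - 1], A[i, i], A[i, i + 1] = h[i - 1], 2.0 * (h[i - 1] + h[i]), h[i]
        b[i] = 6.0 * ((ys[i + 1] - ys[i]) / h[i] - (ys[i] - ys[i - 1]) / h[i - 1])
    M = np.linalg.solve(A, b)

    def evaluate(x: float) -> float:
        x = float(np.clip(x, xs[0], xs[-1]))  # no extrapolation beyond the table
        i = int(np.clip(np.searchsorted(xs, x) - 1, 0, n - 2))
        t0, t1 = xs[i + 1] - x, x - xs[i]
        return ((M[i] * t0 ** 3 + M[i + 1] * t1 ** 3) / (6 * h[i])
                + (ys[i] / h[i] - M[i] * h[i] / 6) * t0
                + (ys[i + 1] / h[i] - M[i + 1] * h[i] / 6) * t1)

    return evaluate


def compensation_time(delta_d_m: float) -> float:
    """Round-trip time-of-flight equivalent of a range offset: t = 2 d / c."""
    return 2.0 * delta_d_m / C_LIGHT


def compensate_depths(depths_m, temp_c: float, offset_at) -> np.ndarray:
    """Subtract the temperature-dependent range offset from every depth value."""
    return np.asarray(depths_m, float) - offset_at(temp_c)
```

In use, `offset_at = natural_cubic_spline(cal_temps, cal_offsets)` would be built once from calibration measurements, and `compensate_depths(cloud_depths, current_temp, offset_at)` applied per frame before feature extraction. Evaluating the spline on a dense temperature grid would produce an explicit lookup table, if that is closer to the intended implementation.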

5. The multi-sensor fusion window identification method of claim 1,

the step of identifying the vehicle window according to the fused features comprises:

feeding the pixel-level fusion features into a fully connected (FC) layer to obtain a feature vector, and applying a Sigmoid operation to the feature vector for confidence judgment;

using the bounding-box regression algorithm of R-CNN, processing the input features to obtain an offset function, and then applying an offset adjustment to the original box;

performing bilinear interpolation on the pixel-level fusion features that meet the threshold requirement to scale them to the size of the ROI Align feature map, and feeding the resulting feature map into an FCN (Fully Convolutional Network) to generate a window mask, the size of a single mask being as follows:

and performing a resize operation and background filling on the window mask according to the original proposal and the image size to obtain a mask matching the original image size, overlaying the mask and the regressed bounding box on the original image, and displaying the confidence result of the Sigmoid branch in the corresponding region, thereby completing vehicle window identification.
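The resize-and-fill step at the end of this claim can be sketched as pasting a small per-ROI soft mask back into a full-size canvas. The sketch assumes an m x m mask with values in [0, 1], a box in image pixel coordinates (x1, y1, x2, y2), a 0.5 binarization threshold, and nearest-neighbour resizing for brevity (Mask R-CNN implementations commonly use bilinear resizing instead). The background is filled with "not window", and any part of the box outside the image is cropped. The function name and threshold are hypothetical.

```python
import numpy as np


def paste_mask(mask: np.ndarray, box, image_hw, threshold: float = 0.5) -> np.ndarray:
    """Resize an m x m soft mask to its box and paste it into an H x W boolean canvas."""
    H, W = image_hw
    canvas = np.zeros((H, W), dtype=bool)  # background filled with "not window"
    x1, y1, x2, y2 = [int(round(v)) for v in box]
    bh, bw = y2 - y1, x2 - x1
    if bh <= 0 or bw <= 0:
        return canvas
    m = mask.shape[0]
    # Nearest-neighbour resize: map each box pixel centre back to a mask cell.
    rows = np.minimum((np.arange(bh) + 0.5) * m / bh, m - 1).astype(int)
    cols = np.minimum((np.arange(bw) + 0.5) * m / bw, m - 1).astype(int)
    resized = mask[np.ix_(rows, cols)] >= threshold
    # Paste only the part of the box that lies inside the image.
    ya, yb, xa, xb = max(y1, 0), min(y2, H), max(x1, 0), min(x2, W)
    if ya < yb and xa < xb:
        canvas[ya:yb, xa:xb] = resized[ya - y1:yb - y1, xa - x1:xb - x1]
    return canvas
```

Resizing to the full box before cropping keeps the mask geometry correct when a proposal extends past the image border.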

6. The multi-sensor fusion window identification method of claim 5,

the offset function is:

wherein P represents the original proposal, the input is the feature vector of the proposal, the output is the predicted offset value, and w represents the learned parameters;

the offset adjustment of the original box comprises translation and size scaling:
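The equation images for this claim were not preserved. Assuming the standard R-CNN bounding-box regression named in claim 5, the offset function is linear in the proposal's feature vector, $d_\star(P) = w_\star^{\top}\phi(P)$ for $\star \in \{x, y, w, h\}$. The adjustment then translates the box centre, $\hat{G}_x = P_w d_x(P) + P_x$ and $\hat{G}_y = P_h d_y(P) + P_y$, and rescales its size, $\hat{G}_w = P_w \exp(d_w(P))$ and $\hat{G}_h = P_h \exp(d_h(P))$. A minimal sketch of both steps, with hypothetical function names and boxes given as (x1, y1, x2, y2):

```python
import numpy as np


def predict_offsets(features: np.ndarray, W: np.ndarray) -> np.ndarray:
    """Linear offset function d(P) = W^T phi(P); W has shape (feature_dim, 4)."""
    return features @ W


def apply_offsets(proposal, d) -> np.ndarray:
    """Translate the box centre and rescale its width/height (R-CNN parameterisation)."""
    x1, y1, x2, y2 = proposal
    pw, ph = x2 - x1, y2 - y1
    px, py = x1 + 0.5 * pw, y1 + 0.5 * ph       # proposal centre
    dx, dy, dw, dh = d
    gx, gy = px + pw * dx, py + ph * dy          # translation, scaled by box size
    gw, gh = pw * np.exp(dw), ph * np.exp(dh)    # size scaling in log space
    return np.array([gx - 0.5 * gw, gy - 0.5 * gh, gx + 0.5 * gw, gy + 0.5 * gh])
```

Scaling the translation by the proposal size and predicting size changes in log space makes the learned offsets independent of the box's absolute scale.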

7. The multi-sensor fusion window identification method of claim 1,

the step of performing pixel level fusion on the image features and the point cloud features comprises the following steps:

concatenating, along the channel dimension, the color feature of each pixel with the depth feature of the corresponding point cloud point to obtain a set of fused features, as follows:

wherein the two inputs are the extracted point cloud features and image features, respectively, and the remaining symbols denote a concatenation operation, the ReLU activation function, a 1×1 convolution operation, and channel-wise concatenation;

feeding the fused features into an MLP and obtaining the global feature by average pooling;

and concatenating the global feature with the fused features along the channel dimension to obtain the pixel-level fusion feature, i.e., the dense fused feature:
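The fusion equations for this claim were not preserved. The sketch below shows one plausible reading, in the style of DenseFusion-type per-pixel fusion: each modality passes through a 1×1 convolution and ReLU, the results are concatenated per pixel, a shared MLP followed by average pooling yields a global feature, and that global feature is broadcast back to every pixel and concatenated on the channel axis. It assumes N pixel/point correspondences are already established, and represents a 1×1 convolution over N positions as a matrix product. The layer widths, the `params` layout, and the exact ordering of ReLU and concatenation are assumptions.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def conv1x1(x: np.ndarray, weight: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """A 1x1 convolution on (N, C_in) per-pixel features is a matrix product."""
    return x @ weight + bias


def dense_fusion(img_feat: np.ndarray, pc_feat: np.ndarray, params: dict) -> np.ndarray:
    """img_feat: (N, C_img), pc_feat: (N, C_pc) for N corresponding pixel/point pairs."""
    # Per-pixel fusion: ReLU(1x1 conv) on each modality, then channel concatenation.
    fused = np.concatenate([relu(conv1x1(pc_feat, *params["pc"])),
                            relu(conv1x1(img_feat, *params["img"]))], axis=1)
    # Shared MLP on the fused features, then average pooling over all N positions.
    hidden = relu(conv1x1(fused, *params["mlp"]))
    global_feat = hidden.mean(axis=0, keepdims=True)
    # Broadcast the global feature to every pixel and concatenate on the channels.
    return np.concatenate([fused, np.repeat(global_feat, fused.shape[0], axis=0)], axis=1)
```

Appending the same global vector to every pixel gives each location both its local color and depth evidence and a summary of the whole candidate region.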

8. A multi-sensor fusion window identification system, comprising:

a first acquisition module adapted to acquire illumination data and RGB image data;

a first processing module adapted to perform illumination compensation on the RGB image data according to the illumination data and to extract image features;

a second acquisition module adapted to collect temperature sensor data and a laser radar point cloud array;

a second processing module adapted to compensate the laser radar point cloud array according to the temperature sensor data and to extract point cloud features point by point;

a fusion module adapted to perform pixel-level fusion on the image features and the point cloud features;

and an identification module adapted to identify the vehicle window according to the fused features.

9. A computer readable storage medium having stored therein at least one instruction, wherein the instruction when executed by a processor implements the multi-sensor fusion window identification method of any of claims 1 to 7.

10. An electronic device comprising a memory and a processor; at least one instruction is stored in the memory; the processor, by loading and executing the at least one instruction, implements the multi-sensor fusion window identification method of any one of claims 1-7.

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202310066606.4A CN115984658B (en) | 2023-02-06 | 2023-02-06 | Multi-sensor fusion vehicle window recognition method, system and readable storage medium |

Applications Claiming Priority (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202310066606.4A CN115984658B (en) | 2023-02-06 | 2023-02-06 | Multi-sensor fusion vehicle window recognition method, system and readable storage medium |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN115984658A (en) | 2023-04-18 |
| CN115984658B (en) | 2023-10-20 |

Family ID: 85959698

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN202310066606.4A Active CN115984658B (en) | 2023-02-06 | 2023-02-06 | Multi-sensor fusion vehicle window recognition method, system and readable storage medium |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN115984658B (en) |

Cited By (1)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN118224993A (en) * | 2024-05-24 | 2024-06-21 | 杭州鲁尔物联科技有限公司 | Building structure deformation monitoring method and device, electronic equipment and storage medium |

Citations (7)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN111860695A (en) * | 2020-08-03 | 2020-10-30 | 上海高德威智能交通系统有限公司 | A data fusion, target detection method, device and equipment |
| CN112363145A (en) * | 2020-11-09 | 2021-02-12 | 浙江光珀智能科技有限公司 | Vehicle-mounted laser radar temperature compensation system and method |
| CN112836734A (en) * | 2021-01-27 | 2021-05-25 | 深圳市华汉伟业科技有限公司 | Heterogeneous data fusion method and device, and storage medium |
| CN113538569A (en) * | 2021-08-11 | 2021-10-22 | 广东工业大学 | Weak texture object pose estimation method and system |
| CN113850744A (en) * | 2021-08-26 | 2021-12-28 | 辽宁工程技术大学 | Image Enhancement Algorithm Based on Adaptive Retinex and Wavelet Fusion |
| CN115063329A (en) * | 2022-06-10 | 2022-09-16 | 中国人民解放军国防科技大学 | Visible light and infrared image fusion enhancement method and system in low light environment |
| CN115656984A (en) * | 2022-09-19 | 2023-01-31 | 深圳市欢创科技有限公司 | TOF point cloud processing method, point cloud optimization method, laser radar and robot |

- 2023-02-06: CN CN202310066606.4A (CN115984658B), status: Active


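Non-Patent Citations (3)

| Title |
|---|
| Jonathan Schierl et al.: "Multi-modal Data Analysis and Fusion for Robust Object Detection in 2D/3D Sensing", 2020 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), pages 1-7 * |
| 张诗 (Zhang Shi): "Research on Image and Video Enhancement Algorithms Based on Retinex Theory" (基于Retinex理论的图像与视频增强算法研究), China Master's Theses Full-text Database, Information Science and Technology, no. 02, pages 13-14 * |
| 蔡恒铨 (Cai Hengquan): "Workpiece Recognition and Pose Estimation for Unordered Grasping" (面向无序抓取的工件识别与位姿估计), China Master's Theses Full-text Database, Information Science and Technology, no. 01, pages 38-42 * |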


Also Published As

| Publication number | Publication date |
|---|---|
| CN115984658B (en) | 2023-10-20 |

Similar Documents

| Publication | Title |
|---|---|
| JP2022514974A (en) | Object detection methods, devices, electronic devices, and computer programs |
| CN107113408B (en) | Image processing device, image processing method, program and system |
| US20230146924A1 (en) | Neural network analysis of lfa test strips |
| CN112614164A (en) | Image fusion method and device, image processing equipment and binocular system |
| WO2021184254A1 (en) | Infrared thermal imaging temperature measurement method, electronic device, unmanned aerial vehicle and storage medium |
| CN111753649B (en) | Parking space detection method, device, computer equipment and storage medium |
| CN113267258B (en) | Infrared temperature measurement method, device, equipment, intelligent inspection robot and storage medium |
| US20220067514A1 (en) | Inference apparatus, method, non-transitory computer readable medium and learning apparatus |
| CN113358231A (en) | Infrared temperature measurement method, device and equipment |
| CN112562093A (en) | Object detection method, electronic medium, and computer storage medium |
| CN115984658A (en) | Multi-sensor fusion vehicle window identification method and system and readable storage medium |
| CN113642425A (en) | Multi-mode-based image detection method and device, electronic equipment and storage medium |
| CN111860042B (en) | Method and device for reading instrument |
| CN117268345B (en) | High-real-time monocular depth estimation measurement method and device and electronic equipment |
| CN113340405B (en) | Bridge vibration mode measuring method, device and system |
| CN114002704A (en) | A lidar monitoring method, device and medium for bridge and tunnel |
| CN108229271B (en) | Method and device for interpreting remote sensing image and electronic equipment |
| CN115797998A (en) | A dual-band intelligent temperature measurement device and intelligent temperature measurement method based on image fusion |
| CN119168944A (en) | Device heating detection method and system based on HSV color space image fusion |
| US20240212101A1 (en) | Image fusion method, electronic device, unmanned aerial vehicle and storage medium |
| CN113222968A (en) | Detection method, system, equipment and storage medium fusing millimeter waves and images |
| CN109495694B (en) | RGB-D-based environment sensing method and device |
| CN114414065A (en) | Object temperature detection method, object temperature detection device, computer equipment and medium |
| JP7687387B2 (en) | Communication design support device, communication design support method, and program |
| CN110196638B (en) | Mobile terminal augmented reality method and system based on target detection and space projection |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |
| | SE01 | Entry into force of request for substantive examination | |
| | GR01 | Patent grant | |
| | GR01 | Patent grant | |