Disclosure of Invention

The invention provides an eye movement data preprocessing method and system intended to solve the problem that original eye movement data contains defects and therefore cannot be used directly for eye movement data analysis.

In one aspect, the present invention provides an eye movement data preprocessing method, comprising: acquiring original eye movement data comprising left eye movement data and right eye movement data; checking whether the original eye movement data is valid; performing frequency correction on the valid left eye movement data and/or right eye movement data; identifying the missing gaps in the corrected eye movement data, judging against a preset threshold whether each missing gap is an invalid gap, and filling the missing data in the invalid gaps by constructing Fourier series; filtering and denoising the filled eye movement data; and extracting features from the filtered and denoised eye movement data to classify the eye movement points, and obtaining eye movement data with valid labels based on the classified eye movement points.

Compared with the prior art, the invention has the following beneficial effects. First, data loss, hardware data-transmission delays and similar faults are almost unavoidable when original eye movement data is acquired, so the acquired data is inaccurate to some degree. Because of the hardware of the acquisition equipment, the actual sampling frequency of the eye movement data often differs considerably from the theoretical sampling frequency; frequency correction makes the data regular, places the sampling points more accurately, and simplifies subsequent processing. Second, while the original data is being acquired and the eyeball tracked, eye movement data is lost when the user blinks or looks elsewhere, and also because of data transmission delays in the hardware, temporary faults, or the eye tracker failing to detect the eyeball. The invention identifies the missing gaps in the corrected eye movement data, compares each with a set threshold to decide whether it is an invalid gap, fills the invalid gaps by constructing Fourier series, and adds the filled samples to the corrected data, so the data used for analysis is more complete and subsequent analysis is more accurate. Judging whether a gap is invalid also excludes the effective gaps caused by events such as blinking, temporary occlusion of the eye tracker's view, or the user moving away from the tracker, which avoids unnecessary filling work and improves filling efficiency. Moreover, traditional linear interpolation produces filled data without sampling noise or random error, which is therefore unrealistic, whereas data filled with the constructed Fourier series retains random sampling error and noise and is therefore more realistic. Third, random errors during acquisition and miniature movements of the user's eyes, such as tremor and microsaccades, introduce noise into the original eye movement data, which the filtering and denoising step suppresses. Finally, the invention extracts features from the filtered and denoised data to classify the eye movement points and obtains eye movement data with valid labels from the classified points, which can be used to analyze the user's eye movement characteristics. Eye movement data processed by this preprocessing method is closer to the user's actual eye movements, so these characteristics can be analyzed more accurately.

In some embodiments of the present invention, the original eye movement data is acquired by a data acquisition device and comprises the sampling time t, the 3D coordinates of the eye position, the 3D coordinates of the eye's fixation point, and the pupil diameter R.

This scheme has the advantage that the sampling time t, the 3D eye position, the 3D fixation point coordinates, the pupil diameter R and the other original data provide the basis for the subsequent validity check, frequency correction, missing data filling, and filtering and denoising steps. In particular, they supply enough information for the validity check to judge accurately whether the acquired original eye movement data is valid, which benefits the later preprocessing steps.

In some embodiments of the invention, checking whether the original eye movement data is valid comprises the steps of: a. judging whether the Z coordinate of the 3D eye position in the original eye movement data is greater than 0; b. judging whether the Z coordinate of the 3D fixation point in the original eye movement data is less than 0; c. judging whether the pupil diameter R in the original eye movement data is greater than or equal to 0; d. if a, b and c are all satisfied, the checked data is valid eye movement data, and if at least one of a, b and c is not satisfied, the checked data is invalid eye movement data.
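The validity rule above can be sketched in Python as follows. This is a minimal sketch, not the patented implementation: the `RawSample` record and its field names are hypothetical, and the 1/0 labelling follows the embodiment in which valid data is marked 1 and invalid data 0.

```python
from dataclasses import dataclass


@dataclass
class RawSample:
    """One row of original eye movement data for one eye (hypothetical field names)."""
    t: float                                # sampling time t in seconds
    eye_pos: tuple[float, float, float]     # 3D coordinates (X, Y, Z) of the eye position
    gaze_point: tuple[float, float, float]  # 3D coordinates (X, Y, Z) of the fixation point
    pupil_d: float                          # pupil diameter R


def is_valid(s: RawSample) -> bool:
    cond_a = s.eye_pos[2] > 0        # a. Z of the eye position is greater than 0
    cond_b = s.gaze_point[2] < 0     # b. Z of the fixation point is less than 0
    cond_c = s.pupil_d >= 0          # c. pupil diameter R >= 0 (R == 0 means a closed eye)
    return cond_a and cond_b and cond_c  # d. valid only if a, b and c all hold


def validity_flags(samples: list[RawSample]) -> list[int]:
    """Mark valid samples with 1 and invalid samples with 0."""
    return [1 if is_valid(s) else 0 for s in samples]


samples = [
    RawSample(0.000, (0.0, 0.0, 600.0), (10.0, 20.0, -5.0), 3.1),
    RawSample(0.025, (0.0, 0.0, 600.0), (10.0, 20.0, 5.0), 3.0),   # fails b
    RawSample(0.050, (0.0, 0.0, 600.0), (10.0, 20.0, -5.0), 0.0),  # closed eye, still valid
    RawSample(0.075, (0.0, 0.0, 600.0), (10.0, 20.0, -5.0), -1.0), # fails c
]
print(validity_flags(samples))  # [1, 0, 1, 0]
```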

This validity check has the advantage that data is accepted only when all three conditions a, b and c hold; this strict screening makes the check more accurate and the resulting valid eye movement data more reliable.

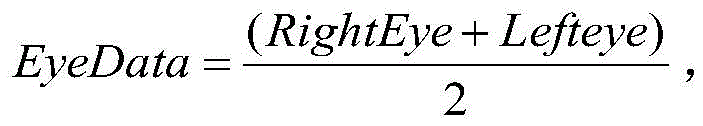

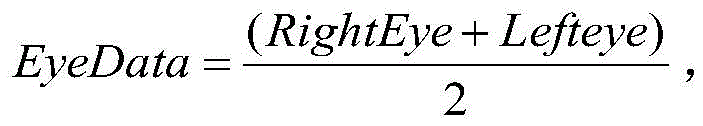

In some embodiments of the present invention, when frequency correction is performed on the valid left eye movement data and right eye movement data, binocular eye movement data is first obtained from the valid left and right eye movement data, and frequency correction is then performed on it. The binocular eye movement data is obtained by the following formula:

$$\mathrm{EyeData} = \frac{\mathrm{LeftEye} + \mathrm{RightEye}}{2}$$

where EyeData is the binocular eye movement data, RightEye is the valid eye movement data of the right eye, and LeftEye is the valid eye movement data of the left eye.

This has the advantage that the binocular eye movement data used for frequency correction is obtained by averaging the valid left and right eye movement data, so it better reflects the user's actual gaze and supports a more accurate analysis of the user's eye movement characteristics.
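A minimal sketch of the binocular averaging, assuming each eye's data is a tuple of coordinates. The function name `binocular` is hypothetical, and returning NaN when either eye is invalid is an assumption: the text does not say how a sample with only one valid eye is handled.

```python
import math


def binocular(left, right, left_valid=True, right_valid=True):
    """EyeData = (LeftEye + RightEye) / 2, computed component by component.

    Assumption: if either eye is invalid, the binocular sample is reported
    as missing (NaN) rather than falling back to the single valid eye.
    """
    if not (left_valid and right_valid):
        return tuple(math.nan for _ in left)
    return tuple((a + b) / 2 for a, b in zip(left, right))


print(binocular((1.0, 2.0, 3.0), (3.0, 4.0, 5.0)))  # (2.0, 3.0, 4.0)
```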

In some embodiments of the present invention, performing frequency correction on the valid left eye movement data and/or right eye movement data comprises the steps of: acquiring the rated sampling frequency f of the data acquisition equipment that acquires the original eye movement data, the start time StartTime and end time EndTime of the acquisition, and the time Time of each row of the original eye movement data; obtaining the number of theoretical sampling points (the index of the last theoretical sampling point) as $\mathrm{No} = \lfloor (\mathrm{EndTime} - \mathrm{StartTime}) \times f \rfloor + 1$; obtaining, for each row of the frequency-distorted data, the index of the theoretical sampling point it corresponds to as $\mathrm{Index} = \lfloor (\mathrm{Time} - \mathrm{StartTime}) \times f \rfloor + 1$, where ⌊·⌋ denotes rounding down to an integer; and assigning the data of each row of the original eye movement data to the theoretical sampling point with that index, thereby correcting the frequency. Because the actual sampling frequency of the original eye movement data acquired by the equipment often differs considerably from the theoretical sampling frequency, the original eye movement data is referred to as frequency-distorted data.
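The index mapping can be illustrated with a short Python sketch, assuming timestamps in seconds and reading ⌊·⌋ as rounding down. `frequency_correct` is a hypothetical name; letting a later row overwrite an earlier row that maps to the same index, and the small epsilon that guards against floating-point round-off, are assumptions not stated in the text. Slots left as `None` are the missing samples that the gap-filling step later deals with.

```python
import math


def frequency_correct(times, rows, f, eps=1e-9):
    """Re-map frequency-distorted rows onto the theoretical sampling grid.

    times: acquisition time of each row in seconds (ascending)
    rows:  the data rows, same length as times
    f:     rated sampling frequency in Hz
    Returns a list of length No; slot Index-1 holds the row assigned to
    theoretical sample Index, and None marks a slot that received no row.
    """
    start, end = times[0], times[-1]
    # No = floor((EndTime - StartTime) * f) + 1; eps guards against round-off
    no = math.floor((end - start) * f + eps) + 1
    grid = [None] * no
    for t, row in zip(times, rows):
        # Index = floor((Time - StartTime) * f) + 1 (1-based)
        index = math.floor((t - start) * f + eps) + 1
        grid[index - 1] = row  # assumption: a later row overwrites an earlier one
    return grid


times = [0.000, 0.026, 0.051, 0.101, 0.126]
print(frequency_correct(times, ["a", "b", "c", "d", "e"], f=40))
# ['a', 'b', 'c', None, 'd', 'e']
```

Here rows recorded at 0, 26, 51, 101 and 126 ms with f = 40 Hz map to theoretical indices 1, 2, 3, 5 and 6, leaving index 4 empty.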

This has the advantage of compensating for the hardware: the sampling frequency of eye movement data acquisition equipment is generally between 40 and 120 Hz, and because of hardware limitations the actual sampling frequency often deviates considerably from the theoretical one, so frequency correction places the samples on a regular grid at the rated frequency.

In some embodiments of the invention, filling the missing data comprises the steps of: setting a threshold of 75 ms and judging, based on the threshold, whether each missing gap is an invalid gap, where a missing gap longer than 75 ms is an effective gap and a missing gap shorter than 75 ms is an invalid gap; and filling the missing data in the invalid gaps by constructing Fourier series, which comprises the following steps. A segment of missing data to be filled in the corrected eye movement data is recorded as $x_{(1)}, x_{(2)}, \ldots, x_{(i)}$ and satisfies:

For the missing data $\{x_{(1)}, x_{(2)}, \ldots, x_{(i)}\}$, a Fourier series equation is constructed from the first k data in the sequence:

The time coordinates corresponding to the first k data are substituted into the equation to obtain filling data 1.

For the missing data $\{x_{(1)}, x_{(2)}, \ldots, x_{(i)}\}$, a Fourier series equation is constructed from the last k data:

The time coordinates corresponding to the k data are substituted into the equation to obtain filling data 2.

Filling data 1 and filling data 2 are averaged to obtain the filling data of the missing data.

This has the advantage that judging against the threshold whether a missing gap is an invalid gap excludes the effective gaps caused by events such as blinking, temporary occlusion of the eye tracker's view, or the user moving away from the tracker, which reduces unnecessary filling work and improves filling efficiency. Only the invalid gaps that need filling are selected, and they are filled by constructing Fourier series. With traditional linear interpolation, the filled data depends only on the two valid points before and after the gap and on the gap length, so it lacks sampling noise and random error and is unrealistic; data filled with the constructed Fourier series retains random sampling error and noise and is more realistic. After the subsequent filtering and denoising, eye movement data that better fits reality is obtained, which further improves the accuracy of the analysis of the user's eye movement characteristics.
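The gap classification and the two-sided Fourier fill can be sketched with NumPy. Because the exact series and fitting condition are not reproduced above, this sketch rests on stated assumptions: each fill is a least-squares fit of a truncated Fourier series with `m` harmonics to up to `k` valid samples just before the gap (filling data 1) or just after it (filling data 2), evaluated at the missing time stamps; the fundamental period is taken as twice the span of the fitting windows; and a gap of n missing samples is taken to last n / fs. All function names and the defaults `k=10`, `m=2` are hypothetical.

```python
import numpy as np


def find_gaps(x, fs, threshold_s=0.075):
    """Return (start, end, is_invalid) for each run of NaN samples in x.

    Assumption: n missing samples at rate fs form a gap of n / fs seconds.
    Gaps shorter than threshold_s are invalid gaps (to be filled); longer
    ones are effective gaps (blinks, look-aways) and are left untouched.
    """
    missing = np.isnan(x)
    gaps, i, n = [], 0, len(x)
    while i < n:
        if not missing[i]:
            i += 1
            continue
        j = i
        while j < n and missing[j]:
            j += 1
        gaps.append((i, j, (j - i) / fs < threshold_s))
        i = j
    return gaps


def _design(t, period, m):
    """Columns 1, cos(h*w*t), sin(h*w*t) for h = 1..m, with w = 2*pi/period."""
    w = 2.0 * np.pi / period
    cols = [np.ones_like(t)]
    for h in range(1, m + 1):
        cols += [np.cos(h * w * t), np.sin(h * w * t)]
    return np.column_stack(cols)


def _fourier_fill(t_fit, x_fit, t_gap, period, m):
    """Least-squares Fourier series fit on (t_fit, x_fit), evaluated at t_gap."""
    coef, *_ = np.linalg.lstsq(_design(t_fit, period, m), x_fit, rcond=None)
    return _design(t_gap, period, m) @ coef


def fill_invalid_gaps(t, x, fs, k=10, m=2, threshold_s=0.075):
    """Fill invalid gaps in x (NaN = missing); t is a NumPy array of times in s."""
    out = np.array(x, dtype=float)
    for start, end, invalid in find_gaps(out, fs, threshold_s):
        if not invalid or start == 0 or end == len(out):
            continue  # effective gaps and gaps touching the edges are not filled
        before = np.arange(max(start - k, 0), start)
        after = np.arange(end, min(end + k, len(out)))
        before = before[~np.isnan(out[before])]  # keep valid samples only
        after = after[~np.isnan(out[after])]
        if len(before) == 0 or len(after) == 0:
            continue
        period = 2.0 * (t[after[-1]] - t[before[0]])
        t_gap = t[start:end]
        fill_1 = _fourier_fill(t[before], out[before], t_gap, period, m)  # filling data 1
        fill_2 = _fourier_fill(t[after], out[after], t_gap, period, m)    # filling data 2
        out[start:end] = 0.5 * (fill_1 + fill_2)  # average of the two fills
    return out
```

Fitting to the neighbouring samples rather than drawing a straight line between two endpoints is what lets the filled values inherit some of the local fluctuation; the choice of `k`, `m` and the period strongly affects how far the fit can be trusted inside the gap.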

In some embodiments of the present invention, the filtering and denoising of the filled eye movement data comprises the following steps: performing initial noise reduction on the filled eye movement data with a bilateral convolution filtering method, and then performing wavelet noise reduction on the initially denoised eye movement data. The initial noise reduction proceeds as follows. The filled eye movement data to be denoised is recorded as $\{(X_1, Y_1), (X_2, Y_2), (X_3, Y_3), \ldots, (X_n, Y_n)\}$, and the Euclidean distances $\{L_1, L_2, \ldots, L_{n-1}\}$ between adjacent eye movement data are calculated by the following formula:

$$L_i = \sqrt{(X_{i+1} - X_i)^2 + (Y_{i+1} - Y_i)^2}, \quad i = 1, \ldots, n-1$$

Filtering is then performed with a 3-point bilateral convolution filter according to the following formulas, and the filtered eye movement data is recorded as $\{(X_{(1)}, Y_{(1)}), (X_{(2)}, Y_{(2)}), (X_{(3)}, Y_{(3)}), \ldots, (X_{(n)}, Y_{(n)})\}$:

$$X_{(i+1)} = \alpha_1 X_i + \alpha_2 X_{i+1} + \alpha_3 X_{i+2}, \quad i = 1, \ldots, n-2$$

$$X_{(1)} = \beta_1 X_1 + \beta_2 X_2, \qquad X_{(n)} = \beta_1 X_n + \beta_2 X_{n-1}$$

where $\alpha_1$, $\alpha_2$ and $\alpha_3$ are the convolution kernel coefficients, with $\alpha_2 = 0.5$, and $\beta_1$ and $\beta_2$ are weight coefficients, with $\beta_1 = 0.8$ and $\beta_2 = 0.2$; the Y coordinates are filtered in the same way. The wavelet noise reduction proceeds as follows: a wavelet transform is applied to the initially denoised eye movement data to obtain wavelet coefficients composed of the noise and the detail features of the data; then, exploiting the different properties of the wavelet coefficients of the noise and of the eye movement data at different scales, the coefficients are processed in the wavelet domain so as to shrink the wavelet coefficients of the noise, which achieves the final noise reduction.
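A sketch of the 3-point filter in plain Python. The text fixes α2 = 0.5, β1 = 0.8 and β2 = 0.2 but does not give α1 and α3; here they are assumed, hypothetically, to share the remaining weight 1 − α2 in inverse proportion to the adjacent distances L, so that the nearer neighbour counts more. This is one way to make the kernel depend on L as a bilateral filter would, not necessarily the coefficients of the embodiment.

```python
import math


def bilateral_3pt(xs, ys, alpha2=0.5, beta1=0.8, beta2=0.2):
    """3-point filter: interior points use alpha1..alpha3, endpoints use beta1, beta2.

    Assumption: alpha1 and alpha3 split the weight 1 - alpha2 in inverse
    proportion to the adjacent distances, so the nearer neighbour counts more.
    """
    n = len(xs)
    if n < 3:
        return list(xs), list(ys)
    # L[j] is the Euclidean distance between points j and j + 1 (0-based)
    L = [math.hypot(xs[j + 1] - xs[j], ys[j + 1] - ys[j]) for j in range(n - 1)]
    fx, fy = [0.0] * n, [0.0] * n
    fx[0], fy[0] = beta1 * xs[0] + beta2 * xs[1], beta1 * ys[0] + beta2 * ys[1]
    fx[-1], fy[-1] = beta1 * xs[-1] + beta2 * xs[-2], beta1 * ys[-1] + beta2 * ys[-2]
    side = 1.0 - alpha2  # alpha1 + alpha3, so the kernel sums to 1
    for j in range(1, n - 1):
        d_prev, d_next = L[j - 1], L[j]
        if d_prev + d_next == 0:
            a1 = a3 = side / 2
        else:
            a1 = side * d_next / (d_prev + d_next)  # weight of the previous point
            a3 = side * d_prev / (d_prev + d_next)  # weight of the next point
        fx[j] = a1 * xs[j - 1] + alpha2 * xs[j] + a3 * xs[j + 1]
        fy[j] = a1 * ys[j - 1] + alpha2 * ys[j] + a3 * ys[j + 1]
    return fx, fy
```

With this choice, equally spaced collinear points are left unchanged in the interior, while each endpoint is pulled slightly towards its neighbour.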

The filtering and denoising method has the advantage that it combines initial noise reduction by bilateral convolution filtering with subsequent wavelet noise reduction. Compared with the traditional moving-average method, bilateral convolution filtering reduces noise more effectively, because it incorporates the data of both the previous and the next time points. The subsequent wavelet noise reduction removes noise such as white noise and Gaussian noise. After noise reduction, the overall trend of the eye movement data matches that of the original eye movement data acquired by the data acquisition equipment, and the characteristic fluctuations of the data are preserved intact.
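The text does not name a wavelet, a decomposition depth or a thresholding rule. The sketch below substitutes one common choice: an orthonormal Haar transform written directly in NumPy, soft thresholding of the detail coefficients, and the universal threshold σ√(2 ln N), with σ estimated as the median absolute value of the finest-scale details divided by 0.6745. It illustrates the idea of shrinking noise coefficients in the wavelet domain and is not necessarily the wavelet scheme of the embodiment; `wavelet_denoise` and `levels=3` are hypothetical.

```python
import numpy as np


def _haar_step(x):
    """One level of the orthonormal Haar transform (len(x) must be even)."""
    a = (x[0::2] + x[1::2]) / np.sqrt(2.0)  # approximation coefficients
    d = (x[0::2] - x[1::2]) / np.sqrt(2.0)  # detail coefficients
    return a, d


def _haar_inverse(a, d):
    x = np.empty(2 * len(a))
    x[0::2] = (a + d) / np.sqrt(2.0)
    x[1::2] = (a - d) / np.sqrt(2.0)
    return x


def wavelet_denoise(x, levels=3):
    """Haar wavelet shrinkage with the universal soft threshold."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    pad = (-n) % (2 ** levels)               # pad so every level has an even length
    a = np.pad(x, (0, pad), mode="edge")
    details = []
    for _ in range(levels):
        a, d = _haar_step(a)
        details.append(d)
    sigma = np.median(np.abs(details[0])) / 0.6745     # noise level from finest details
    thr = sigma * np.sqrt(2.0 * np.log(n + pad))       # universal threshold
    for d in reversed(details):                         # coarsest level first
        d_shrunk = np.sign(d) * np.maximum(np.abs(d) - thr, 0.0)  # soft thresholding
        a = _haar_inverse(a, d_shrunk)
    return a[:n]
```

Haar reconstructions tend to look blocky; smoother wavelets such as the Daubechies family (available, for example, in PyWavelets) are a common alternative for signals like gaze traces.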

In some embodiments of the present invention, the eye movement points include fixation points, saccade points and smooth pursuit points, and feature extraction on the filtered and denoised eye movement data to classify the eye movement points proceeds as follows: calculating, for each unit of time, the feature vector $F = (R, \Delta\alpha, \beta, r, \Delta L^2)$ of the filtered and denoised eye movement data, where $R$ is the radius of the minimum circle covering the data within the unit time, $\Delta\alpha$ is the rate of change of the direction of adjacent movements within the unit time, $\beta$ is the weighted average of the ratios of adjacent Euclidean distances within the unit time, $r$ is the radius of curvature through three adjacent points within the unit time, and $\Delta L^2$ is the variance of the Euclidean distances within the unit time; inputting the feature vectors of eye movement data with ground-truth labels into a decision tree classification model for training to obtain an eye movement data classification model; and calculating the feature vectors of the filtered and denoised eye movement data to be classified and inputting them into the trained model to classify the points into fixation points, saccade points and smooth pursuit points. The source of the ground-truth labeled eye movement data is not limited: it may come from an existing labeled data set (for example, the GazeCom data set) or from data sets constructed by the practitioner.
It should be noted that the method for classifying the filtered and denoised eye movement data is not limited to this one; those skilled in the art may choose a method according to actual requirements, for example I-VT (velocity-threshold identification) or I-DT (dispersion-threshold identification).
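Three of the five features have unambiguous geometric definitions and are sketched below for one window of (X, Y) points: R via the standard incremental (Welzl-style) minimum enclosing circle algorithm, r as the circumradius of each three consecutive points (infinite for collinear points) averaged over the window, and ΔL² as the population variance of the adjacent distances. Δα and β are omitted because their normalisation and weights are not specified. Averaging r over the window and all function names are assumptions; the resulting vectors would then be passed to a decision tree classifier.

```python
import math
import random
import statistics


def _circle_two(a, b):
    """Smallest circle through a and b: centre at the midpoint."""
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2), math.dist(a, b) / 2


def _circle_three(a, b, c):
    """Circumcircle of a, b, c, or None if the points are (nearly) collinear."""
    (ax, ay), (bx, by), (cx, cy) = a, b, c
    d = 2 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if abs(d) < 1e-12:
        return None
    sa, sb, sc = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (sa * (by - cy) + sb * (cy - ay) + sc * (ay - by)) / d
    uy = (sa * (cx - bx) + sb * (ax - cx) + sc * (bx - ax)) / d
    return (ux, uy), math.dist((ux, uy), a)


def min_enclosing_radius(points, seed=0):
    """R: radius of the minimum covering circle (incremental Welzl-style algorithm)."""
    pts = list(points)
    random.Random(seed).shuffle(pts)

    def inside(circle, p):
        return math.dist(circle[0], p) <= circle[1] + 1e-9

    circle = (pts[0], 0.0)
    for i, p in enumerate(pts):
        if inside(circle, p):
            continue
        circle = (p, 0.0)
        for j in range(i):
            if inside(circle, pts[j]):
                continue
            circle = _circle_two(p, pts[j])
            for k in range(j):
                if inside(circle, pts[k]):
                    continue
                c3 = _circle_three(p, pts[j], pts[k])
                if c3 is None:  # collinear: the farthest pair defines the circle
                    c3 = max((_circle_two(p, pts[j]), _circle_two(p, pts[k]),
                              _circle_two(pts[j], pts[k])), key=lambda c: c[1])
                circle = c3
    return circle[1]


def curvature_radius(a, b, c):
    """r: circumradius of three consecutive points, |ab| |bc| |ac| / (2 |cross|)."""
    cross = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    if abs(cross) < 1e-12:
        return math.inf  # collinear points: straight line, infinite radius
    return math.dist(a, b) * math.dist(b, c) * math.dist(a, c) / (2 * abs(cross))


def window_features(points):
    """R, r (mean over finite values) and dL2 for one unit-time window of (X, Y)."""
    steps = [math.dist(p, q) for p, q in zip(points, points[1:])]
    radii = [curvature_radius(*points[i:i + 3]) for i in range(len(points) - 2)]
    finite = [r for r in radii if math.isfinite(r)]
    return {
        "R": min_enclosing_radius(points),
        "r": statistics.mean(finite) if finite else math.inf,
        "dL2": statistics.pvariance(steps) if steps else 0.0,
    }
```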

This has the advantage that, once the feature vectors are calculated, the feature vectors of the ground-truth labeled data are used to train the decision tree classification model, and the feature vectors of the filtered and denoised data to be classified are input into the trained model to obtain the fixation points, saccade points and smooth pursuit points; the feature extraction and classification procedure is simple and accurate.

In some embodiments of the present invention, obtaining eye movement data with valid labels based on the classified eye movement points comprises at least merging and discarding fixation points, as follows: setting the maximum sum of the fixation durations of adjacent fixation points as a first threshold; setting the maximum visual angle between the lines of sight of adjacent fixation points as a second threshold; judging, based on the first and second thresholds, whether to merge adjacent fixation points; and setting the minimum fixation duration as a third threshold and judging, based on it, whether to discard a fixation point. If the sum of the fixation durations of the earlier and the later of two adjacent fixation points is less than the first threshold, and the visual angle between the line of sight at the end of the earlier fixation and the line of sight at the start of the later fixation is not greater than the second threshold, the adjacent fixation points are merged. If the fixation duration of a fixation point is less than the third threshold, the fixation point is discarded.
In the present invention, obtaining the eye movement data with valid labels includes merging and discarding fixation points; those skilled in the art may also apply merging and discarding to smooth pursuit points according to actual requirements. That is, the eye movement data with valid labels may comprise the saccade points, the smooth pursuit points and the merged and filtered fixation points, or the saccade points, the merged and filtered fixation points and the merged and filtered smooth pursuit points.
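A sketch of the merge-and-discard rule, assuming fixations are given in time order with start and end times in seconds and 3D line-of-sight vectors at their start and end. The `Fixation` record, its field names and the threshold values used in any call are hypothetical placeholders; the text gives no numeric values for the three thresholds. A merged fixation is assumed to span from the start of the earlier fixation to the end of the later one, and merging is applied before discarding.

```python
import math
from dataclasses import dataclass


@dataclass
class Fixation:
    start: float                           # fixation start time, s
    end: float                             # fixation end time, s
    start_dir: tuple[float, float, float]  # line of sight when the fixation starts
    end_dir: tuple[float, float, float]    # line of sight when the fixation ends

    @property
    def duration(self) -> float:
        return self.end - self.start


def angle_deg(u, v):
    """Visual angle in degrees between two line-of-sight vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def merge_and_discard(fixations, first, second, third):
    """first: max summed duration (s); second: max angle (deg); third: min duration (s)."""
    merged = []
    for fx in fixations:
        if merged:
            prev = merged[-1]
            if (prev.duration + fx.duration < first
                    and angle_deg(prev.end_dir, fx.start_dir) <= second):
                merged[-1] = Fixation(prev.start, fx.end, prev.start_dir, fx.end_dir)
                continue
        merged.append(fx)
    return [f for f in merged if f.duration >= third]


fixations = [
    Fixation(0.00, 0.20, (0.0, 0.0, 1.0), (0.0, 0.0, 1.0)),
    Fixation(0.23, 0.50, (0.001, 0.0, 1.0), (0.001, 0.0, 1.0)),  # nearly the same direction
    Fixation(0.80, 0.83, (1.0, 0.0, 1.0), (1.0, 0.0, 1.0)),      # too short, discarded
]
result = merge_and_discard(fixations, first=1.0, second=1.0, third=0.06)
```

In this example the first two fixations merge into one spanning 0.00 to 0.50 s, and the 30 ms fixation is discarded.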

This scheme has the advantage that, because the original eye movement data is noisy, a very short spurious saccade may appear between two segments of the same fixation. Deciding with the first and second thresholds whether to merge adjacent fixation points keeps each fixation unique and prevents the same fixation from being reported repeatedly. The third threshold discards unnecessarily short fixation points, so the eye movement data used to analyze the user's eye movement characteristics is concise and accurate.

In another aspect, the present invention further provides an eye movement data preprocessing system, comprising: an original eye movement data acquisition unit for acquiring original eye movement data comprising left eye movement data and right eye movement data; a validity checking unit for checking whether the acquired original eye movement data is valid; a frequency correction unit for performing frequency correction on the valid left eye movement data and/or right eye movement data; a missing data filling unit for judging whether a missing gap is an invalid gap and filling the missing data in invalid gaps by constructing Fourier series; a filtering and noise reduction unit for filtering and denoising the filled eye movement data; a feature extraction unit for extracting features from the filtered and denoised eye movement data to classify the eye movement points and obtaining eye movement data with valid labels based on the classified points; and a control module for sending instructions that control the execution of each unit of the system.
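The coordinating role of the control module can be illustrated by a small skeleton in which each unit is a callable run in the order S1 to S6. `ControlModule`, the `(name, callable)` convention, the unit names and halting when a unit returns `None` are hypothetical illustrations of sending execute and stop instructions, not the structure of the claimed system.

```python
class ControlModule:
    """Hypothetical skeleton: runs the preprocessing units in order S1 to S6."""

    def __init__(self, units):
        self.units = list(units)  # (name, callable) pairs
        self.executed = []        # names of the units that were run

    def run(self, data):
        for name, unit in self.units:
            self.executed.append(name)
            data = unit(data)
            if data is None:      # a unit returning None stops the pipeline
                return None
        return data


steps = ["acquire", "validate", "correct_frequency", "fill_gaps", "denoise", "classify"]
# Each placeholder unit just records its name in the data it passes on.
module = ControlModule((name, lambda d, n=name: d + [n]) for name in steps)
result = module.run([])
print(result)  # the six unit names, in execution order
```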

Compared with the prior art, the system has the following beneficial effects: it is adapted to carry out the eye movement data preprocessing method, and its control module sends instructions that start and stop the original eye movement data acquisition unit, the validity checking unit, the frequency correction unit, the missing data filling unit, the filtering and noise reduction unit and the feature extraction unit, thereby implementing the eye movement data preprocessing method.

Detailed Description

To make the objects, technical solutions and advantages of the present invention clearer, various aspects of the invention are described in detail below with specific examples, which only illustrate the invention and do not limit its scope or spirit.

This embodiment provides an eye movement data preprocessing method. Fig. 1 is a flowchart of the method; Fig. 2 is a spatial schematic of the original eye movement data quantities during acquisition; Fig. 3 is a frequency distribution graph and Fig. 4 a frequency distribution histogram of original eye movement data collected by a 40 Hz eye tracker during frequency detection; Fig. 5 illustrates the effect of frequency correction on the sampling points; Fig. 6 is a line graph of the eye movement data after missing data filling with the method of this embodiment; Fig. 7 is a line graph of the eye movement data after filling with conventional linear interpolation; and Fig. 8 compares the eye movement data before and after processing with the method of this embodiment.

As shown in Fig. 1, the eye movement data preprocessing method of this embodiment comprises the following steps: S1, acquiring original eye movement data comprising left eye movement data and right eye movement data; S2, checking whether the original eye movement data is valid; S3, performing frequency correction on the valid left eye movement data and/or right eye movement data; S4, identifying the missing gaps in the corrected eye movement data, judging against a preset threshold whether each missing gap is an invalid gap, and filling the missing data in the invalid gaps by constructing Fourier series; S5, filtering and denoising the filled eye movement data; and S6, extracting features from the filtered and denoised eye movement data to classify the eye movement points, and obtaining eye movement data with valid labels based on the classified points.

Referring to Fig. 2, in this embodiment the original eye movement data in step S1 may be acquired by a data acquisition device, specifically as follows. A simple traffic scene is clipped from a video, and a Tobii TX60L eye tracker (the data acquisition equipment) records the original eye movement data while a driver watches the video. The screen resolution is 1920 × 1200 and the user sits 50 cm from the screen. The acquired original eye movement data comprises the sampling time t, the 3D coordinates (X, Y, Z) of the positions of the eyes (left and right), the 3D coordinates (X, Y, Z) of the fixation points of the eyes (left and right), and the pupil diameters R; the sampling frequency can be set according to actual requirements (for example, 40 Hz or 60 Hz). In practice, the user sits in front of the screen and observes the traffic scene while the eye tracker records the user's original eye movement data; the collected data is written to a designated folder, from which it is read as the input of the preprocessing. After acquisition, the original eye movement data is classified so as to identify and distinguish the left eye movement data and the right eye movement data. The 3D eye position coordinates (X, Y, Z) and the 3D fixation point coordinates (X, Y, Z) are expressed in the 3D coordinate system of the Tobii TX60L eye tracker.

In the present embodiment, checking in step S2 whether the original eye movement data is valid comprises the steps of: a. judging whether the Z coordinate of the 3D eye position in the original eye movement data is greater than 0; b. judging whether the Z coordinate of the 3D fixation point is less than 0; c. judging whether the pupil diameter R is greater than or equal to 0; d. if a, b and c are all satisfied, the checked data is valid eye movement data and its validity flag is set to 1; if at least one of a, b and c is not satisfied, the checked data is invalid eye movement data and its validity flag is set to 0. Labelling valid and invalid data in this way makes it easy to extract the valid data for subsequent operations. In this embodiment, step S2 is performed on top of the validity check of the Tobii TX60L eye tracker itself: after the eye tracker has checked the validity of the original eye movement data, step S2 checks it again, so the result is more accurate than when only the eye tracker's own check is used. For condition c, a pupil diameter R equal to 0 is treated as a closed-eye state, and failure of condition c indicates that the eye movement data is missing.

In this embodiment, when frequency correction is performed in step S3 using the valid left eye movement data and right eye movement data, binocular eye movement data is first obtained from the valid left and right eye movement data, and frequency correction is then performed on it. The binocular eye movement data is obtained by the following formula:

$$\mathrm{EyeData} = \frac{\mathrm{LeftEye} + \mathrm{RightEye}}{2}$$

where EyeData is the binocular eye movement data, RightEye is the valid eye movement data of the right eye, and LeftEye is the valid eye movement data of the left eye. That is, the binocular eye movement data is the average of the left and right eye movement data that passed the validity check. Because the original eye movement data is classified into left and right eye movement data after acquisition, the valid left and right data can conveniently be selected and averaged to obtain the binocular data used for frequency correction.

In the present embodiment, the frequency correction of the valid left eye movement data and/or right eye movement data in step S3 comprises the steps of: acquiring the rated sampling frequency f of the data acquisition equipment, the start time StartTime and end time EndTime of the acquisition, and the time Time of each row of the original eye movement data; obtaining the number of theoretical sampling points as $\mathrm{No} = \lfloor (\mathrm{EndTime} - \mathrm{StartTime}) \times f \rfloor + 1$; obtaining, for each row of the frequency-distorted data, the index of its theoretical sampling point as $\mathrm{Index} = \lfloor (\mathrm{Time} - \mathrm{StartTime}) \times f \rfloor + 1$, where ⌊·⌋ denotes rounding down to an integer; and assigning the data of each row of the original eye movement data to the theoretical sampling point with that index, thereby correcting the frequency. Figs. 3 and 4 show the frequency distribution graph and the frequency distribution histogram of original eye movement data collected by a 40 Hz eye tracker during frequency detection: with a theoretical sampling frequency of 40 Hz, the actual sampling frequency is mostly below 40 Hz, that is, the actual and theoretical sampling frequencies differ considerably. Fig. 5 shows the effect of the frequency correction of this embodiment on the sampling points: after correction the eye movement data is more regular and the sampling points are placed more accurately.
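Frequency detection of the kind plotted in Figs. 3 and 4 can be approximated by converting each interval between consecutive timestamps into an instantaneous frequency. The sketch below (hypothetical function names, timestamps assumed in seconds) reports the median instantaneous frequency and the share of intervals whose frequency falls below the nominal rate.

```python
import statistics


def instantaneous_frequencies(times):
    """1 / (interval between consecutive timestamps), in Hz."""
    return [1.0 / (b - a) for a, b in zip(times, times[1:]) if b > a]


def frequency_report(times, nominal_hz):
    freqs = instantaneous_frequencies(times)
    return {
        "median_hz": statistics.median(freqs),
        "share_below_nominal": sum(f < nominal_hz for f in freqs) / len(freqs),
    }


# Intervals of 30, 20, 30 and 30 ms against a nominal 40 Hz (25 ms) rate
report = frequency_report([0.0, 0.03, 0.05, 0.08, 0.11], nominal_hz=40)
print(report)
```

Here three of the four intervals correspond to about 33.3 Hz, so 75 % of the intervals fall below the nominal 40 Hz, the same kind of shortfall the figures describe.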

In this embodiment, filling the missing data in step S4 comprises the following steps. A threshold of 75 ms is set, and each missing gap is judged against it: a missing gap longer than 75 ms is a valid gap, and a missing gap shorter than 75 ms is an invalid gap. The missing data in invalid gaps are then filled by constructing Fourier series, as follows. A segment of missing data to be filled in the corrected eye movement data is recorded as $x_{(1)}, x_{(2)}, \ldots, x_{(i)}$ and satisfies:

From the sequence $\{x_{(1)}, x_{(2)}, \ldots, x_{(i)}\}$, take the first k data and construct their Fourier series equation:

Substitute the time coordinates corresponding to the first k data into the equation to obtain filling data 1, recorded as:

From the sequence $\{x_{(1)}, x_{(2)}, \ldots, x_{(i)}\}$, take the last k data and construct their Fourier series equation:

Substitute the time coordinates corresponding to these k data into the equation to obtain filling data 2, recorded as:

Average filling data 1 and filling data 2 to obtain the filling data for the missing data.
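The Fourier series equations themselves are not reproduced above, so the following is only a sketch under stated assumptions. A truncated Fourier series with a user-chosen `period` and number of `harmonics` is fitted by least squares to the k valid samples immediately before the gap (filling data 1) and to the k valid samples immediately after it (filling data 2); both fits are evaluated at the gap's time stamps and averaged. Reading "first k" and "last k" data as the samples bordering the gap is an interpretation, and `fill_gap`, `period` and `harmonics` are hypothetical names. Each fit needs k ≥ 2 × harmonics + 1 samples.

```python
import math


def _solve(a, b):
    """Solve the square linear system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[r]] for r, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def _basis(t, period, harmonics):
    """Row [1, cos(wt), sin(wt), ..., cos(Hwt), sin(Hwt)] with w = 2*pi/period."""
    w = 2 * math.pi / period
    row = [1.0]
    for h in range(1, harmonics + 1):
        row += [math.cos(h * w * t), math.sin(h * w * t)]
    return row


def fit_fourier(ts, xs, period, harmonics):
    """Least-squares Fourier coefficients via the normal equations."""
    rows = [_basis(t, period, harmonics) for t in ts]
    p = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    atb = [sum(r[i] * x for r, x in zip(rows, xs)) for i in range(p)]
    return _solve(ata, atb)


def eval_fourier(coef, t, period, harmonics):
    return sum(c * b for c, b in zip(coef, _basis(t, period, harmonics)))


def fill_gap(before, after, gap_times, k=10, period=1.0, harmonics=2):
    """Average of two Fourier fits: one on the k samples before the gap, one on the k after.

    before/after are lists of (t, x) valid samples on either side of an invalid gap.
    """
    head, tail = before[-k:], after[:k]
    c1 = fit_fourier([t for t, _ in head], [x for _, x in head], period, harmonics)
    c2 = fit_fourier([t for t, _ in tail], [x for _, x in tail], period, harmonics)
    return [
        0.5 * (eval_fourier(c1, t, period, harmonics) + eval_fourier(c2, t, period, harmonics))
        for t in gap_times
    ]
```

Each gaze coordinate (X and Y) would be filled separately with the same call.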

In the present embodiment, the 75 ms threshold is chosen to be shorter than a normal blink. The threshold is not limited to this value (although 75 ms is preferred) and can be set by those skilled in the art according to actual needs. Figs. 6 and 7 show line graphs of the eye movement data after filling missing data with the method of the present embodiment (Fig. 6) and with the conventional linear interpolation method (Fig. 7), respectively. The data filled by the present embodiment retain the random sampling errors and noise present in the original eye movement data acquired by the data acquisition device, so the filled data are more realistic.
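To make the threshold rule concrete, here is a hypothetical helper `find_gaps` that scans a frequency-corrected grid (missing slots as `None`), measures each run of missing slots as run length × sampling interval, and labels it against the 75 ms threshold. How a gap's length is measured, and labelling a gap of exactly 75 ms as valid, are assumptions the text does not fix.

```python
def find_gaps(grid, f, threshold_ms=75.0):
    """Return (start, end, kind) for each run of missing slots (None) in grid.

    start/end are 0-based inclusive slot indices; kind is 'invalid' for a short
    gap (to be filled) or 'valid' for a long gap such as a blink (left unfilled).
    """
    slot_ms = 1000.0 / f  # duration of one sampling slot
    gaps, i, n = [], 0, len(grid)
    while i < n:
        if grid[i] is None:
            j = i
            while j + 1 < n and grid[j + 1] is None:
                j += 1
            length_ms = (j - i + 1) * slot_ms
            gaps.append((i, j, "invalid" if length_ms < threshold_ms else "valid"))
            i = j + 1
        else:
            i += 1
    return gaps
```

At 40 Hz each slot is 25 ms, so two consecutive missing slots (50 ms) form an invalid gap to be filled, while three (75 ms) form a valid gap that is left alone.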

In this embodiment, the filtering and noise reduction of the filled eye movement data in step S5 comprises: performing primary noise reduction on the filled eye movement data with a bilateral convolution filtering method, and then performing wavelet noise reduction on the data after primary noise reduction. The primary noise reduction steps are as follows. The filled eye movement data to be denoised are recorded as $\{(X_1, Y_1), (X_2, Y_2), (X_3, Y_3), \ldots, (X_n, Y_n)\}$, and the Euclidean distances $\{L_1, L_2, \ldots, L_{n-1}\}$ between adjacent eye movement data are calculated by the following formula:

$L_i = \sqrt{(X_{i+1} - X_i)^2 + (Y_{i+1} - Y_i)^2}, \quad i = 1, 2, \ldots, n-1$

Filtering is performed with a 3-point bilateral convolution filter according to the following formulas, and the filtered eye movement data are recorded as $\{(\tilde{X}_{(1)}, \tilde{Y}_{(1)}), (\tilde{X}_{(2)}, \tilde{Y}_{(2)}), (\tilde{X}_{(3)}, \tilde{Y}_{(3)}), \ldots, (\tilde{X}_{(n)}, \tilde{Y}_{(n)})\}$:

$\tilde{X}_{(i+1)} = \alpha_1 X_i + \alpha_2 X_{i+1} + \alpha_3 X_{i+2},$

$\tilde{X}_{(1)} = \beta_1 X_1 + \beta_2 X_2, \quad \tilde{X}_{(n)} = \beta_1 X_n + \beta_2 X_{n-1};$

where $\alpha_1$, $\alpha_2$ and $\alpha_3$ are convolution kernel coefficients with $\alpha_2 = 0.5$, and $\beta_1$ and $\beta_2$ are weight coefficients with $\beta_1 = 0.8$ and $\beta_2 = 0.2$. The wavelet noise reduction comprises the following steps: performing a wavelet transform on the eye movement data after primary noise reduction to obtain wavelet coefficients composed of the noise and the detail features of the data; and, exploiting the different behaviour of the wavelet coefficients of the noise and of the primary-denoised eye movement data across scales, processing the noisy wavelet coefficients in the wavelet domain so as to shrink the coefficients belonging to noise and achieve the final noise reduction.
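The text fixes $\alpha_2$, $\beta_1$ and $\beta_2$ but does not give the rule that derives $\alpha_1$ and $\alpha_3$ from the distances $L_i$; it says only that a distance scale determines the coefficients. The sketch below therefore makes a labelled assumption: the remaining weight $1 - \alpha_2$ is split between the two neighbours in inverse proportion to their distance from the centre point, so a distant neighbour pulls less. It also applies the X formulas to Y. `bilateral_3pt` is a hypothetical name.

```python
import math


def bilateral_3pt(points, alpha2=0.5, beta1=0.8, beta2=0.2):
    """Three-point distance-weighted smoothing of a list of (x, y) points.

    Interior: out[i+1] = a1*p[i] + alpha2*p[i+1] + a3*p[i+2], where (assumption)
    a1 and a3 share 1 - alpha2 inversely to each neighbour's distance.
    Endpoints use the two-point weights beta1 and beta2 from the text.
    """
    n = len(points)
    if n < 3:
        return list(points)
    dist = [math.dist(points[i], points[i + 1]) for i in range(n - 1)]  # L_1..L_{n-1}
    out = [None] * n
    rest = 1.0 - alpha2
    for i in range(n - 2):  # 0-based: neighbours i and i+2 of centre i+1
        l_prev, l_next = dist[i], dist[i + 1]
        if l_prev + l_next == 0:
            a1 = a3 = rest / 2
        else:
            a1 = rest * l_next / (l_prev + l_next)  # closer previous point -> larger a1
            a3 = rest * l_prev / (l_prev + l_next)
        out[i + 1] = tuple(
            a1 * p + alpha2 * c + a3 * q
            for p, c, q in zip(points[i], points[i + 1], points[i + 2])
        )
    out[0] = tuple(beta1 * a + beta2 * b for a, b in zip(points[0], points[1]))
    out[-1] = tuple(beta1 * a + beta2 * b for a, b in zip(points[-1], points[-2]))
    return out
```

Evenly spaced points on a straight line pass through the interior unchanged, while an isolated spike is halved, since both neighbours receive weight 0.25.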

Random errors during data acquisition, together with tremor, microsaccades and similar small movements of the user's eyes, introduce noise into the acquired original eye movement data. In this embodiment, an improved 3-point bilateral convolution filtering method, in which a distance scale determines the noise reduction coefficients, is first applied for primary noise reduction. A wavelet noise reduction method is then applied to the primary-denoised data for secondary noise reduction, with the parameters selected as shown in Table 1 below, to remove white noise, Gaussian noise and the like. A noise reduction comparison is shown in Fig. 8, and the errors between the eye movement data processed by this embodiment and the real stimulus points are shown in Table 2 below.

TABLE 1 Wavelet denoising parameter selection

| Parameter | Value |
| --- | --- |
| Wavelet basis | db4 |
| Wavelet threshold | Bayes |
| Number of wavelet decomposition levels | 6 |
| Threshold function | Soft |

TABLE 2 Noise reduction error comparison

| Noise reduction method | Accuracy error |
| --- | --- |
| Without noise reduction | 1.6095 |
| Moving average noise reduction | 1.4044 |
| Method of the present embodiment | 1.4034 |

As can be seen from Figs. 3 to 8 and Tables 1 and 2, the filled eye movement data in this embodiment retain the random sampling errors and noise of the original eye movement data acquired by the data acquisition device. As shown in Fig. 8, both the original eye movement data and the data processed by another noise reduction method (moving average) contain many spikes, whereas the data denoised by this embodiment follow the same overall trend as the original eye movement data and retain its fluctuation characteristics intact.
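Table 1 specifies a db4 basis, six decomposition levels, the Bayes threshold and a soft threshold function. To stay dependency-free, the sketch below swaps in the simpler Haar basis and the universal threshold $\sigma\sqrt{2\ln N}$, with $\sigma$ estimated from the finest detail level. It illustrates the shared idea (transform, soft-shrink the detail coefficients, invert), not the exact parameterisation of Table 1. With PyWavelets installed, `pywt.wavedec(x, 'db4', level=6)` and `pywt.threshold(c, value, mode='soft')` would be natural building blocks for the db4 variant. All function names below are hypothetical.

```python
import math


def haar_dwt(x):
    """One level of the orthonormal Haar transform (len(x) must be even)."""
    s = 1 / math.sqrt(2)
    approx = [(x[i] + x[i + 1]) * s for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) * s for i in range(0, len(x), 2)]
    return approx, detail


def haar_idwt(approx, detail):
    """Invert one Haar level."""
    s = 1 / math.sqrt(2)
    out = []
    for a, d in zip(approx, detail):
        out += [(a + d) * s, (a - d) * s]
    return out


def soft(c, thr):
    """Soft threshold: shrink c towards zero by thr, zeroing |c| <= thr."""
    return math.copysign(max(abs(c) - thr, 0.0), c)


def wavelet_denoise(x, levels=3):
    """Haar + soft-threshold denoising; len(x) must be divisible by 2**levels."""
    details, approx = [], list(x)
    for _ in range(levels):
        approx, d = haar_dwt(approx)
        details.append(d)  # details[0] is the finest scale
    finest = sorted(abs(c) for c in details[0])
    sigma = finest[len(finest) // 2] / 0.6745  # median absolute deviation estimate
    thr = sigma * math.sqrt(2 * math.log(len(x)))  # universal threshold
    for d in reversed(details):  # rebuild from the coarsest level upwards
        approx = haar_idwt(approx, [soft(c, thr) for c in d])
    return approx
```

Because the Haar transform is orthonormal and soft thresholding only shrinks coefficients, the approximation coefficients pass through untouched and only detail energy is removed.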

In this embodiment, in step S6, feature extraction is performed on the filtered and denoised eye movement data to classify the eye movement points, and eye movement data with valid labels are obtained from the classified points; these data can be used to analyze the user's eye movement characteristics. The eye movement points comprise fixation points, saccade points and smooth tracking points. Feature extraction and classification proceed as follows: calculate the feature vector $F = (R, \Delta\alpha, \beta, r, \Delta L^2)$ of the filtered and denoised eye movement data in each unit time, where $R$ is the radius of the minimum circle covering the data points in the unit time, $\Delta\alpha$ is the rate of change of the movement direction between adjacent points in the unit time, $\beta$ is the weighted average sum of the ratios of adjacent Euclidean distances in the unit time, $r$ is the curvature radius of three adjacent points in the unit time, and $\Delta L^2$ is the variance of the Euclidean distances in the unit time; input the feature vectors of eye movement data with ground-truth labels into a decision tree classification model for training to obtain an eye movement data classification model; and calculate the feature vectors of the filtered and denoised eye movement data to be classified and input them into the trained model to classify the fixation points, saccade points and smooth tracking points. In the present embodiment, the source of the ground-truth-labelled eye movement data is not limited: it may be an existing labelled eye movement data set (for example, the GazeCom data set) or a data set constructed by the practitioner.
It should be noted that the method of classifying the filtered and denoised eye movement data in the present invention is not limited to this; those skilled in the art may choose another method according to actual requirements, for example I-VT (velocity-threshold identification) or I-DT (dispersion-threshold identification).
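As an illustration of the I-VT alternative mentioned above, a minimal sketch follows: a sample is labelled a saccade when its point-to-point speed exceeds a threshold and a fixation otherwise. Plain I-VT has no smooth tracking class, so it would not reproduce the three-way classification of this embodiment. The function name `ivt` and the threshold units (position units per second) are assumptions.

```python
import math


def ivt(points, velocity_threshold):
    """I-VT: label each (x, y, t) sample 'fixation' or 'saccade' by speed.

    Speed is measured from each sample to the next; the last sample reuses
    the label of the one before it.
    """
    labels = []
    for (x0, y0, t0), (x1, y1, t1) in zip(points, points[1:]):
        speed = math.hypot(x1 - x0, y1 - y0) / (t1 - t0)
        labels.append("saccade" if speed > velocity_threshold else "fixation")
    if labels:
        labels.append(labels[-1])
    elif points:
        labels.append("fixation")
    return labels
```

For example, with a threshold of 100 units/s, the sequence (0, 0, 0.00), (0.1, 0, 0.01), (5, 0, 0.02), (5.05, 0, 0.03) has speeds of about 10, 490 and 5 units/s, so only the second sample is labelled a saccade.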

In the present embodiment, the feature vector $F = (R, \Delta\alpha, \beta, r, \Delta L^2)$ is calculated as follows. The filtered and denoised eye movement data to be subjected to feature extraction are recorded as $G = \{g_1, g_2, \ldots, g_n\}$, where $g_i$ is the i-th eye movement track point with coordinates $(X, Y, t)$ and $i = 1, 2, \ldots, n$. Compute the Euclidean distance between adjacent points; the Euclidean distance between the i-th and (i+1)-th points is $L_i$, calculated as:

$L_i = \sqrt{(X_{i+1} - X_i)^2 + (Y_{i+1} - Y_i)^2}$

The formula for $\beta$ is as follows:

Calculate the movement direction $\alpha$ of consecutive eye movement data points; the movement direction of the i-th point is $\alpha_i$, calculated as:

Accordingly, the formula for $\Delta\alpha$ is as follows:

Calculating $r$: for the point set $G_i = \{g_1, g_2, \ldots, g_k\}$ within a given unit time i, calculate the distances a, b and c between three consecutive points; if the three points are not collinear:

Calculating $\Delta L^2$: for the point set $G_i = \{g_1, g_2, \ldots, g_k\}$ within a unit time i, compute the set of Euclidean distances between adjacent points $L_i = \{L_2, \ldots, L_k\}$, where $k \ge 3$, as follows:
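Of the five features, only the Euclidean distance is fully specified above; the formulas for $\beta$, $\alpha_i$, $\Delta\alpha$, $r$ and $\Delta L^2$ are missing. The sketch below computes three quantities under stated assumptions: the adjacent distances $L_i$; $r$ as the circumradius $abc/(4S)$ of three consecutive points, with $S$ the triangle area (returning `None` for collinear points, matching the "if the three points are not collinear" condition); and $\Delta L^2$ as the population variance of the adjacent distances, since the text does not say whether the population or the sample variance is used. The helper names are hypothetical.

```python
import math
from statistics import pvariance


def adjacent_distances(pts):
    """L_i: Euclidean distance between consecutive (x, y) points."""
    return [math.dist(p, q) for p, q in zip(pts, pts[1:])]


def circumradius(p1, p2, p3):
    """Radius of the circle through three points; None if they are (near-)collinear."""
    a, b, c = math.dist(p2, p3), math.dist(p1, p3), math.dist(p1, p2)
    # The cross product equals twice the signed triangle area S.
    cross = (p2[0] - p1[0]) * (p3[1] - p1[1]) - (p2[1] - p1[1]) * (p3[0] - p1[0])
    if abs(cross) < 1e-12:
        return None
    return a * b * c / (2 * abs(cross))  # abc / (4S)


def window_features(pts):
    """Adjacent distances, curvature radii of consecutive triples, and distance variance."""
    dists = adjacent_distances(pts)
    radii = [circumradius(*pts[i:i + 3]) for i in range(len(pts) - 2)]
    return {"L": dists, "r": radii, "var_L": pvariance(dists)}
```

For the right triangle (0, 0), (3, 0), (3, 4), the distances are 3 and 4, the curvature radius is half the hypotenuse (2.5), and the population variance of the distances is 0.25.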

Calculating $R$: for the point set $G_i = \{g_1, g_2, \ldots, g_k\}$ within a given unit time i, with $k \ge 3$, the steps are as follows. Take the two points $g_1$ and $g_2$ and form the initial circle $C_2$ with segment $g_1g_2$ as its diameter. Add the points of $G_i$ in sequence, and let the current point be $g_i$. If the point lies inside circle $C_2$, the radius of $C_2$ is taken as $R$. If not, form a temporary circle $C_i$ with $g_1g_i$ as its diameter; the inserted point $g_i$ must lie on the boundary of $C_i$. Circle $C_i$ does not necessarily contain all of the points 1 to i, so find a point $g_j$ ($j < i$) that is not in $C_i$ and form a temporary circle $C_j$ with $g_ig_j$ as its diameter; then $g_i$ and $g_j$ must lie on the boundary of $C_j$. Circle $C_j$ does not necessarily contain all of the points 1 to j, so find a point $g_k$ ($k < j < i$) that is not in $C_j$ and construct a new circle through $g_i$, $g_j$ and $g_k$. These three points lie on the boundary of the new circle, and its radius is $R$.
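The steps above describe the incremental construction of a minimum enclosing circle, in the style of Welzl's algorithm. A compact Python version follows. It returns the radius $R$, treats points within a small tolerance `eps` of the boundary as inside, and falls back to the farthest-pair circle when three points are collinear. The function names are hypothetical. Processing the points in their recorded order, as the text does, has a worst case of O(k³); shuffling them first gives expected linear time.

```python
import math


def _circle_two(p, q):
    """Circle with segment pq as its diameter."""
    centre = ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)
    return centre, math.dist(p, q) / 2


def _circle_three(p, q, s):
    """Circle through three points (circumcircle); farthest-pair circle if collinear."""
    (ax, ay), (bx, by), (cx, cy) = p, q, s
    d = 2 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if abs(d) < 1e-12:
        pairs = [(p, q), (p, s), (q, s)]
        return max((_circle_two(u, v) for u, v in pairs), key=lambda cr: cr[1])
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    return (ux, uy), math.dist((ux, uy), p)


def _inside(pt, circle, eps=1e-9):
    centre, radius = circle
    return math.dist(pt, centre) <= radius + eps


def min_cover_radius(points):
    """Radius R of the smallest circle enclosing all (x, y) points."""
    circle = (points[0], 0.0)
    for i in range(1, len(points)):
        if _inside(points[i], circle):
            continue
        circle = (points[i], 0.0)  # g_i must lie on the boundary
        for j in range(i):
            if _inside(points[j], circle):
                continue
            circle = _circle_two(points[i], points[j])  # g_i, g_j on the boundary
            for k in range(j):
                if not _inside(points[k], circle):
                    circle = _circle_three(points[i], points[j], points[k])
    return circle[1]
```

For the acute triangle (0, 0), (2, 0), (1, 2), the minimum covering circle is the circumcircle centred at (1, 0.75) with radius 1.25.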

In this embodiment, obtaining the eye movement data with valid labels from the classified eye movement points in step S6 includes at least merging and discarding fixation points, specifically as follows: set the maximum allowed sum of the fixation durations of adjacent fixation points as a first threshold; set the maximum allowed visual angle between the gaze lines of adjacent fixation points as a second threshold; decide whether to merge adjacent fixation points based on the first and second thresholds; and set the minimum fixation duration of a fixation point as a third threshold, deciding whether to discard a fixation point based on it. If the sum of the fixation durations of the preceding and following fixation points of an adjacent pair is less than the first threshold, and the visual angle between the gaze line at the end of the preceding fixation and the gaze line at the start of the following fixation is not greater than the second threshold, the adjacent fixation points are merged. If the fixation duration of a fixation point is less than the third threshold, the fixation point is discarded. The first, second and third thresholds are not limited and can be set by those skilled in the art according to actual needs; in this embodiment, the first threshold is preferably 75 ms, the second threshold is preferably 0.5°, and the third threshold is preferably 60 ms.
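A sketch of the merge-and-discard rules with the preferred thresholds (75 ms, 0.5°, 60 ms) follows. It assumes that each fixation carries start and end times in milliseconds plus 3-D gaze direction vectors at its start and end. Merging runs greedily from left to right, so a merged fixation may absorb further neighbours, and a merged fixation spans from the first start to the second end; discarding happens after merging. These representation and ordering choices, and the names `Fixation` and `merge_and_discard`, are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Fixation:
    start_ms: float
    end_ms: float
    start_dir: tuple  # gaze direction vector when the fixation starts
    end_dir: tuple    # gaze direction vector when the fixation ends

    @property
    def duration(self):
        return self.end_ms - self.start_ms


def angle_deg(u, v):
    """Visual angle between two gaze direction vectors, in degrees."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def merge_and_discard(fixes, t1=75.0, t2=0.5, t3=60.0):
    """Merge adjacent fixations (t1: max summed duration, t2: max angle), then drop short ones (t3)."""
    merged = []
    for f in fixes:
        prev = merged[-1] if merged else None
        if (prev is not None and prev.duration + f.duration < t1
                and angle_deg(prev.end_dir, f.start_dir) <= t2):
            merged[-1] = Fixation(prev.start_ms, f.end_ms, prev.start_dir, f.end_dir)
        else:
            merged.append(f)
    return [f for f in merged if f.duration >= t3]
```

For example, two 30 ms fixations in the same direction separated by 10 ms merge into one 70 ms fixation, which then survives the 60 ms minimum, while an isolated 40 ms fixation is discarded.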
In this embodiment, in addition to merging and discarding fixation points, step S6 of obtaining eye movement data with valid labels from the classified eye movement points may, according to actual requirements, also merge and discard smooth tracking points; the method for doing so is not limited and can be chosen by those skilled in the art as needed. That is, the eye movement data with valid labels may comprise the saccade points, the smooth tracking points and the merged-and-discarded fixation points, or the saccade points, the merged-and-discarded fixation points and the merged-and-discarded smooth tracking points.

The experimental environment of this embodiment is as follows: CPU, Intel(R) Core(TM) i5-6200U @ 2.30 GHz 2.40 GHz; graphics card, GTX 940M; memory, 8 GB; operating system, Windows 10 on an x64-based processor. Those skilled in the art will understand that the experimental environment of the eye movement data preprocessing method of this embodiment includes, but is not limited to, this configuration.

The present embodiment further provides an eye movement data preprocessing system, which includes: an original eye movement data acquisition unit for acquiring original eye movement data including left eye movement data and right eye movement data; a validity checking unit for checking whether the acquired original eye movement data is valid; the frequency correction unit is used for carrying out frequency correction on the effective left eye movement data and/or right eye movement data; the missing data filling unit is used for judging whether a missing gap of the missing data is an invalid gap or not and filling the missing data in the invalid gap by constructing Fourier series; the filtering and noise reducing unit is used for filtering and noise reducing the filled eye movement data; the feature extraction unit is used for carrying out feature extraction on the filtered and noise-reduced eye movement data to classify eye movement points, and acquiring the eye movement data with effective labels based on the classified eye movement points; and the control module is used for sending an instruction to control the execution of each unit of the eye movement data preprocessing system.

In this embodiment, the original eye movement data acquisition unit of the eye movement data preprocessing system may further classify the original eye movement data so as to identify and distinguish the left eye movement data from the right eye movement data. The validity checking unit may assign different marker values to the checked valid and invalid eye movement data. The system may further include a binocular eye movement data acquisition unit: when both the valid left eye movement data and the valid right eye movement data are to be used for frequency correction, this unit obtains binocular eye movement data from them, and the frequency correction unit then performs frequency correction on the binocular eye movement data. The feature extraction unit performs feature extraction on the filtered and denoised eye movement data to classify the eye movement points into fixation points, saccade points and smooth tracking points, and merges and discards at least the fixation points to obtain eye movement data with valid labels (comprising the saccade points, the smooth tracking points and the merged-and-discarded fixation points, or the saccade points, the merged-and-discarded fixation points and the merged-and-discarded smooth tracking points), which can be used to analyze the user's eye movement characteristics.

The present invention has been described in conjunction with specific embodiments which are intended to be exemplary only and are not intended to limit the scope of the invention, which is to be given the full breadth of the appended claims and any and all modifications, variations or alterations that may occur to those skilled in the art without departing from the spirit of the invention. Therefore, various equivalent changes made according to the present invention still fall within the scope covered by the present invention.