CN108171663B - Image filling system of convolutional neural network based on feature map nearest neighbor replacement - Google Patents

- Publication number: CN108171663B (CN201711416650.4A)

- Authority: CN (China)

- Prior art keywords: layer, image, convolution, deconvolution, feature map

- Legal status: Active (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

Classifications

- G06T5/77

- G: Physics

- G06: Computing; calculating or counting

- G06N: Computing arrangements based on specific computational models

- G06N3/00: Computing arrangements based on biological models

- G06N3/02: Neural networks

- G06N3/04: Architecture, e.g. interconnection topology

Abstract

An image filling system based on a convolutional neural network with feature map nearest neighbor replacement belongs to the technical field of image filling. It addresses the problem that existing image filling methods cannot quickly produce a filled image that is both semantically consistent as a whole and sharp. The system comprises a generation network and a discrimination network. The generation network encodes and then decodes the image to be filled to obtain the filled image. The decoder of the generation network comprises N deconvolution layers. For any M deconvolution layers among the first to the (N-1)-th deconvolution layers, the generation network obtains an additional feature map by feature map nearest neighbor replacement, based on the output of each such deconvolution layer and the output of the convolution layer corresponding to it, and uses the output of the deconvolution layer, the output of the corresponding convolution layer and the additional feature map as the inputs of the next deconvolution layer. The discrimination network judges whether the filled image is the real image corresponding to the image to be filled.

Description

Technical Field

The invention relates to an image filling system, and belongs to the technical field of image filling.

Background

Image filling is a fundamental problem in computer vision and image processing. It is mainly used to restore damaged images or to remove unwanted objects from images.

Existing image filling methods fall mainly into three groups: diffusion-based methods, sample-based methods and deep learning-based methods.

The basic idea of diffusion-based image filling is to diffuse the image information at the edge of the region to be filled into the interior of that region, pixel by pixel. When the region to be filled is small, structurally simple and uniform in texture, this method completes the filling task well. However, when the region to be filled is large, the filled image it produces is blurry.

The basic idea of sample-based image filling is to fill the region to be filled gradually, image block by image block, starting from the known region of the image. At each step, the image block in the known region that is most similar to the image block at the edge of the region to be filled is used for filling. Compared with diffusion-based filling, sample-based filling yields better texture and higher sharpness. However, because it gradually replaces unknown image blocks in the region to be filled with similar image blocks from the known region, it cannot produce a filled image whose overall semantics are consistent.

Deep learning-based image filling applies deep neural networks to the image filling task. An encoder-decoder network has been proposed for filling images whose central region is missing. However, that method is applicable only to 128 × 128 RGB images, and although its filled images are semantically consistent as a whole, they are not sharp. To address this, some researchers have used multi-scale iterative updating to fill large images sharply. Such methods produce filled images that are semantically consistent and sharp, but they are extremely slow: on a Titan X GPU, filling a 256 × 256 RGB image takes from tens of seconds to several minutes.

Disclosure of Invention

The invention provides an image filling system based on a convolutional neural network with feature map nearest neighbor replacement, which aims to solve the problem that existing image filling methods cannot quickly produce a filled image that is semantically consistent as a whole and sharp.

The image filling system based on a convolutional neural network with feature map nearest neighbor replacement comprises a generation network and a discrimination network;

the generation network comprises an encoder and a decoder, wherein the encoder comprises N convolution layers, the decoder comprises N deconvolution layers, and N ≥ 2;

the generation network obtains the filled image by first encoding and then decoding the image to be filled;

for any M deconvolution layers among the first to the (N-1)-th deconvolution layers, the generation network obtains an additional feature map by feature map nearest neighbor replacement, based on the output of each such deconvolution layer and the output of the convolution layer corresponding to that deconvolution layer, and uses the output of the deconvolution layer, the output of the corresponding convolution layer and the obtained additional feature map as the inputs of the next deconvolution layer;

1 ≤ M ≤ N-1;

the discrimination network judges whether the filled image is the real image corresponding to the image to be filled, thereby constraining the weight learning of the generation network.

Preferably, the encoder includes convolution layers E1 to E8, and the decoder includes deconvolution layers D1 to D8;

the image to be filled is the input of convolution layer E1;

for convolution layers E1 to E8, the output of each layer, after batch normalization followed by Leaky ReLU activation, is the input of the next layer;

the output of convolution layer E8, after batch normalization followed by Leaky ReLU activation, is the input of deconvolution layer D1;

the output of deconvolution layer D1, after ReLU activation, is the first input of deconvolution layer D2;

for deconvolution layers D2 to D8, the output of each layer, after ReLU activation followed by batch normalization, is the first input of the next layer;

the second inputs of deconvolution layers D2 to D8 are, in order, the outputs of convolution layers E7 to E1, each after batch normalization followed by Leaky ReLU activation;

the output of deconvolution layer D8, after Tanh activation, is the filled image;

convolution layer E1 applies 64 convolution kernels of size 4 × 4 with a stride of 2 to its input;

convolution layer E2 applies 128 convolution kernels of size 4 × 4 with a stride of 2 to its input;

convolution layer E3 applies 256 convolution kernels of size 4 × 4 with a stride of 2 to its input;

convolution layers E4 to E8 each apply 512 convolution kernels of size 4 × 4 with a stride of 2 to their inputs;

deconvolution layers D1 to D4 each apply 512 deconvolution kernels of size 4 × 4 with a stride of 2 to their inputs;

deconvolution layer D5 applies 256 deconvolution kernels of size 4 × 4 with a stride of 2 to its input;

deconvolution layer D6 applies 128 deconvolution kernels of size 4 × 4 with a stride of 2 to its input;

deconvolution layer D7 applies 64 deconvolution kernels of size 4 × 4 with a stride of 2 to its input;

deconvolution layer D8 applies 3 deconvolution kernels of size 4 × 4 with a stride of 2 to its input;

based on the output of deconvolution layer D5 and the output of convolution layer E3, the generation network obtains an additional feature map by feature map nearest neighbor replacement and uses it as the third input of deconvolution layer D6.
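The layer list above can be checked with a small shape trace. This is a sketch under stated assumptions: the padding of 1 is not given in the text and is assumed here so that each 4 × 4, stride-2 layer exactly halves or doubles the spatial size, and the multiple inputs of a deconvolution layer are assumed to be concatenated along the channel axis. The function names are illustrative only.

```python
def conv_out(n, k=4, s=2, p=1):
    """Spatial size after a convolution (padding p=1 is an assumption)."""
    return (n + 2 * p - k) // s + 1

def deconv_out(n, k=4, s=2, p=1):
    """Spatial size after a transposed convolution (padding p=1 is an assumption)."""
    return (n - 1) * s - 2 * p + k

ENC_CH = [64, 128, 256, 512, 512, 512, 512, 512]  # output channels of E1..E8
DEC_CH = [512, 512, 512, 512, 256, 128, 64, 3]    # output channels of D1..D8

def trace(size=256):
    """Return (channels, size) for E1..E8 and (name, in_ch, out_ch, size) for D1..D8."""
    enc, n = [], size
    for ch in ENC_CH:
        n = conv_out(n)
        enc.append((ch, n))
    rows = []
    prev_ch, n = enc[-1]
    for i, ch in enumerate(DEC_CH, start=1):
        inputs = prev_ch                  # first input: previous layer's output
        if i >= 2:
            inputs += enc[8 - i][0]       # second input: D2 <- E7, ..., D8 <- E1
        if i == 6:
            inputs += DEC_CH[4]           # third input of D6: additional feature map
        n = deconv_out(n)
        rows.append((f"D{i}", inputs, ch, n))
        prev_ch = ch
    return enc, rows

if __name__ == "__main__":
    enc, rows = trace()
    for row in rows:
        print(row)
```

Under these assumptions the outputs of E3 and D5 are both 256-channel, 32 × 32 feature maps, which is what allows the nearest neighbor replacement between them, and D6 receives 768 input channels.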

Preferably, the specific process by which the generation network obtains the additional feature map by feature map nearest neighbor replacement, based on the output of deconvolution layer D5 and the output of convolution layer E3, is as follows:

selecting a to-be-assigned feature map whose feature values are all 0, wherein this feature map has the same number of channels and the same spatial size as the output feature map of deconvolution layer D5 and the output feature map of convolution layer E3;

computing the masked region of the output feature map of deconvolution layer D5 and the unmasked region of the output feature map of convolution layer E3, and cutting the masked region and the unmasked region into a plurality of feature blocks;

each feature block is a cuboid of size C × h × w, wherein C, h and w are, respectively, the number of channels of the output feature map of deconvolution layer D5, the length of the cuboid and the width of the cuboid;

for each feature block p1 in the masked region, selecting from the feature blocks of the unmasked region the feature block p2 that is nearest to p1;

selecting a to-be-assigned region in the to-be-assigned feature map, wherein the position of the to-be-assigned region is the same as the position of feature block p1 in the output feature map of deconvolution layer D5;

assigning the feature values of feature block p2 to the to-be-assigned region.

Preferably, feature block p2 is the feature block nearest to feature block p1 in terms of cosine distance.
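The replacement procedure can be sketched as follows. This is a minimal illustration, not the patented implementation: it assumes feature blocks of size C × 1 × 1 (h = w = 1), feature maps stored as nested lists indexed `[channel][row][column]`, and a mask with 1 for masked points. The names `cosine` and `nearest_neighbor_replace` are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity of two vectors; -1.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else -1.0

def nearest_neighbor_replace(dec, enc, mask):
    """Build the additional feature map from decoder features `dec` (e.g. D5 output)
    and encoder features `enc` (e.g. E3 output), using 1x1 feature blocks."""
    channels, height, width = len(dec), len(mask), len(mask[0])
    # To-be-assigned feature map: same shape as the inputs, all zeros.
    out = [[[0.0] * width for _ in range(height)] for _ in range(channels)]
    # Positions of the unmasked region (must be non-empty).
    known = [(i, j) for i in range(height) for j in range(width) if not mask[i][j]]
    for i in range(height):
        for j in range(width):
            if not mask[i][j]:
                continue
            p1 = [dec[c][i][j] for c in range(channels)]  # block in the masked region
            # Unmasked encoder block with the highest cosine similarity to p1.
            bi, bj = max(
                known,
                key=lambda ij: cosine(p1, [enc[c][ij[0]][ij[1]] for c in range(channels)]),
            )
            for c in range(channels):
                out[c][i][j] = enc[c][bi][bj]  # copy p2 into the position of p1
    return out
```

Positions outside the masked region stay 0 in the result, matching the all-zero initialization of the to-be-assigned feature map.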

Preferably, the masked region and the unmasked region of the output feature map are computed as follows:

a mask image is provided in place of the image to be filled, wherein the mask image has the same size as the image to be filled, has 1 channel, and has feature values of 0 or 1;

0 indicates that the corresponding position in the image to be filled is a point that does not need filling;

1 indicates that the corresponding position in the image to be filled is a point to be filled;

the masked region and the unmasked region of the feature map of the mask image are computed by a convolution network comprising a first, a second and a third convolution layer;

the mask image is the input of the first convolution layer;

for the first to third convolution layers, the output of each layer is the input of the next;

each of the first to third convolution layers applies 1 convolution kernel of size 4 × 4 with a stride of 2 to its input;

the output of the third convolution layer is the feature map of the mask image, which has a size of 32 × 32 and 1 channel;

for the feature map of the mask image, a feature point whose feature value is greater than a set threshold is judged to be a mask point, and otherwise a non-mask point;

the masked region of the feature map of the mask image is the set of mask points, and the unmasked region of the feature map of the mask image is the set of non-mask points;

the masked region of the output feature map equals the masked region of the feature map of the mask image, and the unmasked region of the output feature map equals the unmasked region of the feature map of the mask image.
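The mask computation can be illustrated with a short sketch. The text does not state the kernel weights, the padding or the threshold value, so the constant weight of 1/16 (an averaging kernel), the zero padding of 1 and the threshold passed by the caller are all assumptions made for illustration.

```python
def conv4x4_s2(img, weight=1.0 / 16, pad=1):
    """One single-channel 4x4, stride-2 convolution with a constant kernel.
    The kernel weight and the zero padding are assumptions."""
    n_rows, n_cols = len(img), len(img[0])
    out_rows = (n_rows + 2 * pad - 4) // 2 + 1
    out_cols = (n_cols + 2 * pad - 4) // 2 + 1
    out = []
    for i in range(out_rows):
        row = []
        for j in range(out_cols):
            total = 0.0
            for di in range(4):
                for dj in range(4):
                    r, c = 2 * i - pad + di, 2 * j - pad + dj
                    if 0 <= r < n_rows and 0 <= c < n_cols:  # zero padding outside
                        total += img[r][c]
            row.append(total * weight)
        out.append(row)
    return out

def mask_feature_map(mask_image):
    """Pass the 0/1 mask image through three 4x4, stride-2 convolution layers."""
    fm = mask_image
    for _ in range(3):
        fm = conv4x4_s2(fm)
    return fm

def mask_points(feature_map, threshold):
    """Set of (row, column) positions whose feature value exceeds the threshold."""
    return {
        (i, j)
        for i, row in enumerate(feature_map)
        for j, value in enumerate(row)
        if value > threshold
    }
```

With a 256 × 256 mask image, `mask_feature_map` returns a 32 × 32 map, the same spatial size as the feature maps used for the replacement; the complement of `mask_points` is the unmasked region.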

Preferably, the generation network is trained under a guidance loss constraint; specifically, during training of the generation network, a feature similarity constraint between the real image and the input image is imposed at any convolution layer or deconvolution layer;

the input image is the real image after the masking operation.

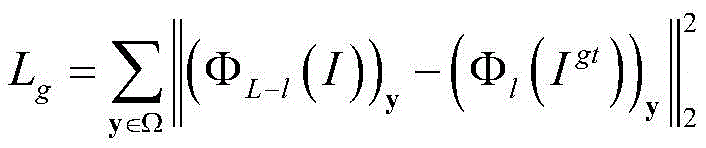

Preferably, the generation network is trained as follows:

the target image $I^{gt}$ is input into the generation network, the masked region of the feature map of the $l$-th layer is computed, and the information $(\Phi_l(I^{gt}))_y$ is obtained;

the image to be filled $I$ is input into the generation network, the masked region of the feature map of the $(L-l)$-th layer is computed, and the information $(\Phi_{L-l}(I))_y$ is obtained;

the guidance loss constraint $L_g$ is then defined:

where $\Omega$ is the masked region, $L$ is the total number of layers of the generation network, $y$ is any coordinate point within the masked region, $\Phi_{L-l}(I)$ is the feature map output by the $(L-l)$-th layer of the generation network when the input is the image to be filled, $(\Phi_{L-l}(I))_y$ is the information at $y$ in the masked region of the output feature map of the $(L-l)$-th layer, $\Phi_l(I^{gt})$ is the feature map output by the $l$-th layer of the generation network when the input is the target image, and $(\Phi_l(I^{gt}))_y$ is the information at $y$ in the masked region of the output feature map of the $l$-th layer.
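A form of the guidance loss that is consistent with the symbol definitions above is sketched here. The squared Euclidean norm is an assumption; the text only requires a feature similarity constraint between the two feature maps over the masked region.

$$
L_g = \sum_{y \in \Omega} \left\| \left(\Phi_{L-l}(I)\right)_y - \left(\Phi_l(I^{gt})\right)_y \right\|_2^2
$$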

Preferably, the discrimination network comprises convolution layers E9 to E13;

the input of convolution layer E9 is the filled image;

the output of convolution layer E9, after Leaky ReLU activation, is the input of convolution layer E10;

for convolution layers E10 to E13, the output of each layer, after batch normalization followed by Leaky ReLU activation, is the input of the next layer;

the output of convolution layer E13, after batch normalization followed by Sigmoid activation, is the output of the discrimination network;

convolution layer E9 applies 64 convolution kernels of size 4 × 4 with a stride of 2 to its input;

convolution layer E10 applies 128 convolution kernels of size 4 × 4 with a stride of 2 to its input;

convolution layer E11 applies 256 convolution kernels of size 4 × 4 with a stride of 2 to its input;

convolution layer E12 applies 512 convolution kernels of size 4 × 4 with a stride of 1 to its input;

convolution layer E13 applies 1 convolution kernel of size 4 × 4 with a stride of 1 to its input.

Preferably, the filled image is a 256 × 256 RGB image, and the output of convolution layer E13 has a spatial size of 64 × 64 and 1 channel.

Preferably, the image filling system is trained end-to-end using the Adam optimization algorithm.

The image filling system based on a convolutional neural network with feature map nearest neighbor replacement takes the image to be filled as its input and performs feature map nearest neighbor replacement on intermediate outputs of the decoding part of the network, so that a filled image that is semantically consistent as a whole and sharp is obtained with a single forward pass. Because only one forward pass is needed, the image filling system obtains the filled image faster than existing image filling methods.

Drawings

The image filling system of the convolutional neural network based on feature map nearest neighbor replacement according to the present invention will be described in more detail below based on embodiments and with reference to the accompanying drawings, in which:

FIG. 1 is a block diagram of the generation network according to an embodiment;

FIG. 2 is a block diagram of the discrimination network according to an embodiment;

FIG. 3 is an image to be filled with an arbitrarily shaped missing region;

FIG. 4 is the filled image obtained after the image to be filled with an arbitrarily shaped missing region is input into the generation network;

FIG. 5 is an image to be filled with a missing central region;

FIG. 6 is the filled image obtained after the image to be filled with a missing central region is input into the generation network.

Detailed Description

The image filling system based on the convolutional neural network with feature map nearest neighbor replacement according to the present invention will be further described with reference to the accompanying drawings.

Embodiment: the present embodiment is described in detail with reference to FIG. 1 to FIG. 6.

The image filling system based on a convolutional neural network with feature map nearest neighbor replacement described in this embodiment includes a generation network and a discrimination network;

the generation network comprises an encoder and a decoder, wherein the encoder comprises N convolution layers, the decoder comprises N deconvolution layers, and N ≥ 2;

the generation network obtains the filled image by first encoding and then decoding the image to be filled;

for any M deconvolution layers among the first to the (N-1)-th deconvolution layers, the generation network obtains an additional feature map by feature map nearest neighbor replacement, based on the output of each such deconvolution layer and the output of the convolution layer corresponding to that deconvolution layer, and uses the output of the deconvolution layer, the output of the corresponding convolution layer and the obtained additional feature map as the inputs of the next deconvolution layer;

1 ≤ M ≤ N-1;

the discrimination network judges whether the filled image is the real image corresponding to the image to be filled, thereby constraining the weight learning of the generation network.

The encoder of the present embodiment includes convolution layers E1 to E8, and the decoder includes deconvolution layers D1 to D8;

the image to be filled is the input of convolution layer E1;

for convolution layers E1 to E8, the output of each layer, after batch normalization followed by Leaky ReLU activation, is the input of the next layer;

the output of convolution layer E8, after batch normalization followed by Leaky ReLU activation, is the input of deconvolution layer D1;

the output of deconvolution layer D1, after ReLU activation, is the first input of deconvolution layer D2;

for deconvolution layers D2 to D8, the output of each layer, after ReLU activation followed by batch normalization, is the first input of the next layer;

the second inputs of deconvolution layers D2 to D8 are, in order, the outputs of convolution layers E7 to E1, each after batch normalization followed by Leaky ReLU activation;

the output of deconvolution layer D8, after Tanh activation, is the filled image;

convolution layer E1 applies 64 convolution kernels of size 4 × 4 with a stride of 2 to its input;

convolution layer E2 applies 128 convolution kernels of size 4 × 4 with a stride of 2 to its input;

convolution layer E3 applies 256 convolution kernels of size 4 × 4 with a stride of 2 to its input;

convolution layers E4 to E8 each apply 512 convolution kernels of size 4 × 4 with a stride of 2 to their inputs;

deconvolution layers D1 to D4 each apply 512 deconvolution kernels of size 4 × 4 with a stride of 2 to their inputs;

deconvolution layer D5 applies 256 deconvolution kernels of size 4 × 4 with a stride of 2 to its input;

deconvolution layer D6 applies 128 deconvolution kernels of size 4 × 4 with a stride of 2 to its input;

deconvolution layer D7 applies 64 deconvolution kernels of size 4 × 4 with a stride of 2 to its input;

deconvolution layer D8 applies 3 deconvolution kernels of size 4 × 4 with a stride of 2 to its input;

based on the output of deconvolution layer D5 and the output of convolution layer E3, the generation network obtains an additional feature map by feature map nearest neighbor replacement and uses it as the third input of deconvolution layer D6.

The specific process by which the generation network of this embodiment obtains the additional feature map by feature map nearest neighbor replacement, based on the output of deconvolution layer D5 and the output of convolution layer E3, is as follows:

selecting a to-be-assigned feature map whose feature values are all 0, wherein this feature map has the same number of channels and the same spatial size as the output feature map of deconvolution layer D5 and the output feature map of convolution layer E3;

computing the masked region of the output feature map of deconvolution layer D5 and the unmasked region of the output feature map of convolution layer E3, and cutting the masked region and the unmasked region into a plurality of feature blocks;

each feature block is a cuboid of size C × h × w, wherein C, h and w are, respectively, the number of channels of the output feature map of deconvolution layer D5, the length of the cuboid and the width of the cuboid;

for each feature block p1 in the masked region, selecting from the feature blocks of the unmasked region the feature block p2 that is nearest to p1;

selecting a to-be-assigned region in the to-be-assigned feature map, wherein the position of the to-be-assigned region is the same as the position of feature block p1 in the output feature map of deconvolution layer D5;

assigning the feature values of feature block p2 to the to-be-assigned region.
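The step of cutting a feature map into C × h × w feature blocks can be sketched as below. The text does not say how blocks are laid out, so this sketch assumes non-overlapping tiles and treats a tile as belonging to the masked region if any of its points is masked; both choices, and the name `cut_into_blocks`, are illustrative assumptions.

```python
def cut_into_blocks(feature_map, mask, h, w):
    """Cut a [C][H][W] feature map into non-overlapping C x h x w blocks.

    Returns two lists of ((top, left), block) pairs: blocks touching the masked
    region and blocks lying entirely in the unmasked region.
    """
    channels = len(feature_map)
    height, width = len(mask), len(mask[0])
    masked, unmasked = [], []
    for top in range(0, height - h + 1, h):
        for left in range(0, width - w + 1, w):
            block = [
                [row[left:left + w] for row in feature_map[c][top:top + h]]
                for c in range(channels)
            ]
            touches_mask = any(
                mask[i][j]
                for i in range(top, top + h)
                for j in range(left, left + w)
            )
            (masked if touches_mask else unmasked).append(((top, left), block))
    return masked, unmasked
```

In the procedure above, the masked blocks would be taken from the output feature map of D5 and the unmasked blocks from the output feature map of E3, using the same mask for both.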

The masked region and the unmasked region of the output feature map are computed as follows:

a mask image is provided in place of the image to be filled, wherein the mask image has the same size as the image to be filled, has 1 channel, and has feature values of 0 or 1;

0 indicates that the corresponding position in the image to be filled is a point that does not need filling;

1 indicates that the corresponding position in the image to be filled is a point to be filled;

the masked region and the unmasked region of the feature map of the mask image are computed by a convolution network comprising a first, a second and a third convolution layer;

the mask image is the input of the first convolution layer;

for the first to third convolution layers, the output of each layer is the input of the next;

each of the first to third convolution layers applies 1 convolution kernel of size 4 × 4 with a stride of 2 to its input;

the output of the third convolution layer is the feature map of the mask image, which has a size of 32 × 32 and 1 channel;

for the feature map of the mask image, a feature point whose feature value is greater than a set threshold is judged to be a mask point, and otherwise a non-mask point;

the masked region of the feature map of the mask image is the set of mask points, and the unmasked region of the feature map of the mask image is the set of non-mask points;

the masked region of the output feature map equals the masked region of the feature map of the mask image, and the unmasked region of the output feature map equals the unmasked region of the feature map of the mask image.

The generation network of this embodiment is trained under a guidance loss constraint; specifically, during training of the generation network, a feature similarity constraint between the real image and the input image is imposed at any convolution layer or deconvolution layer;

the input image is the real image after the masking operation.

The generation network of this embodiment is trained as follows:

the target image $I^{gt}$ is input into the generation network, the masked region of the feature map of the $l$-th layer is computed, and the information $(\Phi_l(I^{gt}))_y$ is obtained;

the image to be filled $I$ is input into the generation network, the masked region of the feature map of the $(L-l)$-th layer is computed, and the information $(\Phi_{L-l}(I))_y$ is obtained;

the guidance loss constraint $L_g$ is then defined:

where $\Omega$ is the masked region, $L$ is the total number of layers of the generation network, $y$ is any coordinate point within the masked region, $\Phi_{L-l}(I)$ is the feature map output by the $(L-l)$-th layer of the generation network when the input is the image to be filled, $(\Phi_{L-l}(I))_y$ is the information at $y$ in the masked region of the output feature map of the $(L-l)$-th layer, $\Phi_l(I^{gt})$ is the feature map output by the $l$-th layer of the generation network when the input is the target image, and $(\Phi_l(I^{gt}))_y$ is the information at $y$ in the masked region of the output feature map of the $l$-th layer.
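As a concrete illustration of the guidance loss, the sketch below sums a per-point distance over the masked region. It assumes the squared Euclidean distance between channel vectors (the text only calls for a feature similarity constraint), feature maps stored as nested lists indexed `[channel][row][column]`, and a mask with 1 for masked points; the function name is hypothetical.

```python
def guidance_loss(decoder_features, target_features, mask):
    """Sum over masked points y of the squared L2 distance between the decoder
    feature vector (layer L-l, input I) and the target feature vector
    (layer l, input I_gt). The squared L2 choice is an assumption."""
    channels = len(decoder_features)
    loss = 0.0
    for i, row in enumerate(mask):
        for j, is_masked in enumerate(row):
            if is_masked:
                loss += sum(
                    (decoder_features[c][i][j] - target_features[c][i][j]) ** 2
                    for c in range(channels)
                )
    return loss
```

Points outside the masked region do not contribute, so the constraint only pulls the decoder features toward the target features where content is missing.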

In addition, the output of the generation network for the image to be filled $I$ is denoted $\Phi(I; W)$, where $W$ denotes the parameters of the generation network model. The reconstruction loss is defined as:

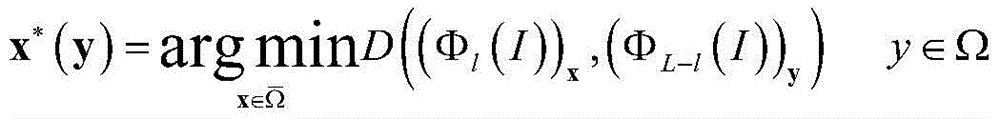

For each $(\Phi_{L-l}(I))_y$, its distance to $(\Phi_l(I))_x$ is calculated as follows:

where $x$ is any coordinate point in the unmasked region, and $(\Phi_l(I))_x$ is the information at $x$ in the unmasked region of the output feature map of the $l$-th layer.

The distance metric is formulated as follows:

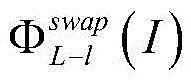

After the nearest point $x^*(y)$ is found, the information at $x^*(y)$ is assigned to the position corresponding to $y$ in the to-be-assigned feature map; the resulting feature map is the additional feature map to be input into the next deconvolution layer.

That is:
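A set of formulas consistent with the description above is sketched here. The notation $\bar{\Omega}$ for the unmasked region and $\Phi^{rep}_{L-l}(I)$ for the additional feature map is introduced only for this illustration, and cosine similarity is assumed as the distance measure, in line with the preferred selection of feature block p2.

$$
x^*(y) = \arg\max_{x \in \bar{\Omega}} \; \frac{\left\langle \left(\Phi_{L-l}(I)\right)_y , \left(\Phi_l(I)\right)_x \right\rangle}{\left\| \left(\Phi_{L-l}(I)\right)_y \right\|_2 \, \left\| \left(\Phi_l(I)\right)_x \right\|_2}
$$

$$
\left(\Phi^{rep}_{L-l}(I)\right)_y = \left(\Phi_l(I)\right)_{x^*(y)}, \qquad y \in \Omega
$$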

The discrimination network of this embodiment includes convolution layers E9 to E13;

the input of convolution layer E9 is the filled image;

the output of convolution layer E9, after Leaky ReLU activation, is the input of convolution layer E10;

for convolution layers E10 to E13, the output of each layer, after batch normalization followed by Leaky ReLU activation, is the input of the next layer;

the output of convolution layer E13, after batch normalization followed by Sigmoid activation, is the output of the discrimination network;

convolution layer E9 applies 64 convolution kernels of size 4 × 4 with a stride of 2 to its input;

convolution layer E10 applies 128 convolution kernels of size 4 × 4 with a stride of 2 to its input;

convolution layer E11 applies 256 convolution kernels of size 4 × 4 with a stride of 2 to its input;

convolution layer E12 applies 512 convolution kernels of size 4 × 4 with a stride of 1 to its input;

convolution layer E13 applies 1 convolution kernel of size 4 × 4 with a stride of 1 to its input.

The filled image is a 256 × 256 RGB image, and the output of convolution layer E13 has a spatial size of 64 × 64 and 1 channel.

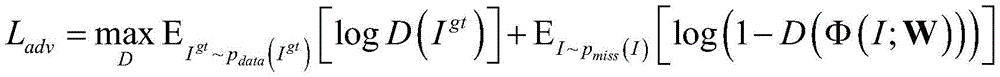

The input of the discrimination network is either the output $\Phi(I; W)$ of the generation network or the target image $I^{gt}$. The generation network and the discrimination network are trained adversarially, which gives the adversarial loss $L_{adv}$:

In the formula, $p_{data}(I^{gt})$ is the distribution of real images, $p_{miss}(I)$ is the distribution of input images, $D(\cdot)$ is the probability, output by the discrimination network, that the image input into the discrimination network comes from $p_{data}(I^{gt})$, $\log$ is the logarithm function, $I^{gt}$ is the target image and $I$ is the image to be filled.
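An adversarial loss consistent with these symbol definitions is sketched below. It assumes the standard generative adversarial objective, in which the discrimination network $D$ maximizes the expression and the generation network parameters $W$ minimize it.

$$
L_{adv} = \min_{W} \max_{D} \; \mathbb{E}_{I^{gt} \sim p_{data}(I^{gt})} \left[ \log D(I^{gt}) \right] + \mathbb{E}_{I \sim p_{miss}(I)} \left[ \log \left( 1 - D(\Phi(I; W)) \right) \right]
$$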

Thus, when the generation network is trained, the total loss is $L$:

where $\lambda_g$ and $\lambda_{adv}$ are both hyper-parameters.
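How the three loss terms might be combined is sketched below. The weighted sum $L_{rec} + \lambda_g L_g + \lambda_{adv} L_{adv}$, the symbol $L_{rec}$, the ℓ1 form of the reconstruction loss and the weight values in the usage example are all assumptions; the text states only that $\lambda_g$ and $\lambda_{adv}$ are hyper-parameters.

```python
import math

def reconstruction_loss(output_pixels, target_pixels):
    """l1 distance between flattened output and target images (norm is an assumption)."""
    return sum(abs(o - t) for o, t in zip(output_pixels, target_pixels))

def adversarial_loss_generator(d_fake):
    """Generator-side adversarial term: mean of log(1 - D(Phi(I; W))).
    `d_fake` holds discriminator probabilities for generated images, each in (0, 1)."""
    return sum(math.log(1.0 - d) for d in d_fake) / len(d_fake)

def total_loss(l_rec, l_g, l_adv, lambda_g, lambda_adv):
    """Weighted sum of reconstruction, guidance and adversarial losses (assumed form)."""
    return l_rec + lambda_g * l_g + lambda_adv * l_adv

if __name__ == "__main__":
    l_rec = reconstruction_loss([1.0, 2.0], [0.5, 4.0])
    l_adv = adversarial_loss_generator([0.5, 0.5])
    # The weights below are placeholders, not values from the text.
    print(total_loss(l_rec, 5.0, l_adv, lambda_g=0.1, lambda_adv=0.01))
```

In practice the two weights would be tuned so that neither the guidance term nor the adversarial term dominates the reconstruction term.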

Fig. 3 shows an image to be filled that has an arbitrarily shaped missing region, and Fig. 4 shows the filled image obtained after that image is input into the generation network. Comparing Fig. 3 with Fig. 4 shows that the image filling system of the convolutional neural network based on feature map nearest neighbor replacement is suitable for filling images with arbitrarily shaped missing regions and achieves a good filling effect.

Fig. 5 shows an image to be filled whose center is missing, and Fig. 6 shows the filled image obtained after that image is input into the generation network. Comparing Fig. 5 with Fig. 6 shows that the image filling system of the convolutional neural network based on feature map nearest neighbor replacement is suitable for filling images with a missing center and achieves a good filling effect.

In simulation experiments, the image filling system of the convolutional neural network based on feature map nearest neighbor replacement described in this embodiment takes about 80 ms to fill a 256 × 256 RGB image. Compared with existing image filling methods, which take tens of seconds to several minutes, the image filling system of this embodiment is significantly faster.

The image filling system of the convolutional neural network based on feature map nearest neighbor replacement described in this embodiment is trained end to end with the Adam optimization algorithm.

Although the invention herein has been described with reference to particular embodiments, it is to be understood that these embodiments are merely illustrative of the principles and applications of the present invention. It is therefore to be understood that numerous modifications may be made to the illustrative embodiments and that other arrangements may be devised without departing from the spirit and scope of the present invention as defined by the appended claims. It should be understood that features described in different dependent claims and herein may be combined in ways different from those described in the original claims. It is also to be understood that features described in connection with individual embodiments may be used in other described embodiments.

Claims (8)

1. An image filling system of a convolutional neural network based on feature map nearest neighbor replacement, characterized by comprising a generation network and a discrimination network;

the generation network comprises an encoder and a decoder, wherein the encoder comprises N convolutional layers, the decoder comprises N deconvolution layers, and N ≥ 2;

the generation network obtains the filled image by first encoding and then decoding the image to be filled;

for any M deconvolution layers among the first to the (N-1)-th deconvolution layers, the generation network obtains an additional feature map by feature map nearest neighbor replacement, based on the output of each such deconvolution layer and the output of the convolutional layer corresponding to it, and takes the output of the deconvolution layer, the output of the corresponding convolutional layer, and the additional feature map as the inputs of the next deconvolution layer;

the discrimination network is used for judging whether the filled image is the real image corresponding to the image to be filled, thereby constraining the weight learning of the generation network;

the encoder comprises convolutional layers E1 to E8, and the decoder comprises deconvolution layers D1 to D8;

the image to be filled is the input of convolutional layer E1;

for convolutional layers E1 to E8, the output of each layer is batch-normalized and activated by a Leaky ReLU function in sequence, and then used as the input of the next layer;

the output of convolutional layer E8 is batch-normalized and activated by a Leaky ReLU function in sequence, and then used as the input of deconvolution layer D1;

the output of deconvolution layer D1 is activated by a ReLU function and then used as the first input of deconvolution layer D2;

for deconvolution layers D2 to D8, the output of each layer is activated by a ReLU function and batch-normalized in sequence, and then used as the first input of the next layer;

the second inputs of deconvolution layers D2 to D8 are, in order, the outputs of convolutional layers E7 to E1 after batch normalization and Leaky ReLU activation in sequence;

the output of deconvolution layer D8 after Tanh activation is the filled image;

convolutional layer E1 performs 64 convolution operations with a 4 × 4 kernel and a stride of 2 on its input;

convolutional layer E2 performs 128 convolution operations with a 4 × 4 kernel and a stride of 2 on its input;

convolutional layer E3 performs 256 convolution operations with a 4 × 4 kernel and a stride of 2 on its input;

convolutional layers E4 to E8 each perform 512 convolution operations with a 4 × 4 kernel and a stride of 2 on their input;

deconvolution layers D1 to D4 each perform 512 deconvolution operations with a 4 × 4 kernel and a stride of 2 on their input;

deconvolution layer D5 performs 256 deconvolution operations with a 4 × 4 kernel and a stride of 2 on its input;

deconvolution layer D6 performs 128 deconvolution operations with a 4 × 4 kernel and a stride of 2 on its input;

deconvolution layer D7 performs 64 deconvolution operations with a 4 × 4 kernel and a stride of 2 on its input;

deconvolution layer D8 performs 3 deconvolution operations with a 4 × 4 kernel and a stride of 2 on its input;

the generation network obtains an additional feature map by feature map nearest neighbor replacement, based on the output of deconvolution layer D5 and the output of convolutional layer E3, and takes the additional feature map as the third input of deconvolution layer D6;

the specific process by which the generation network obtains the additional feature map by feature map nearest neighbor replacement, based on the output of deconvolution layer D5 and the output of convolutional layer E3, is as follows:

selecting a feature map to be assigned whose feature values are all 0, the feature map to be assigned having the same number of channels and the same spatial size as the output feature map of deconvolution layer D5 and the output feature map of convolutional layer E3;

computing the masked region of the output feature map of deconvolution layer D5 and the unmasked region of the output feature map of convolutional layer E3, and cutting both the masked region and the unmasked region into a plurality of feature blocks;

each feature block is a cuboid of size C × h × w, wherein C is the number of channels of the output feature map of deconvolution layer D5, and h and w are the length and the width of the cuboid, respectively;

for each feature block p1 in the masked region, selecting, from the plurality of feature blocks of the unmasked region, the feature block p2 nearest to feature block p1;

selecting a region to be assigned in the feature map to be assigned, wherein the position of the region to be assigned is the same as the position of feature block p1 in the output feature map of deconvolution layer D5;

assigning the feature values of feature block p2 to the region to be assigned.
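The layer sizes listed in this claim can be checked with a short shape trace. The following Python sketch assumes a 256 × 256 input and a padding of 1 in every layer; the padding is not stated in the claim, and the helper names (`conv_out`, `deconv_out`, `trace`) are hypothetical. Under those assumptions the outputs of E3 and D5 both come out as 256 channels at 32 × 32, which is what the nearest neighbor replacement step requires.

```python
# Layer specs taken from the claim: (name, number of kernels); all kernels are 4x4 with stride 2.
ENCODER = [("E1", 64), ("E2", 128), ("E3", 256)] + [(f"E{i}", 512) for i in range(4, 9)]
DECODER = [(f"D{i}", 512) for i in range(1, 5)] + [("D5", 256), ("D6", 128), ("D7", 64), ("D8", 3)]

KERNEL, STRIDE, PAD = 4, 2, 1  # PAD = 1 is an assumption; the claim does not give the padding


def conv_out(size):
    """Spatial size after one strided convolution."""
    return (size + 2 * PAD - KERNEL) // STRIDE + 1


def deconv_out(size):
    """Spatial size after one transposed convolution (no output padding)."""
    return (size - 1) * STRIDE - 2 * PAD + KERNEL


def trace(input_size=256):
    """Return {layer name: (channels, spatial size)} for the encoder and decoder."""
    shapes, size = {}, input_size
    for name, channels in ENCODER:
        size = conv_out(size)
        shapes[name] = (channels, size)
    for name, channels in DECODER:
        size = deconv_out(size)
        shapes[name] = (channels, size)
    return shapes


shapes = trace(256)
# Channels entering D6: output of D5 + output of E3 + the additional feature map
d6_in_channels = shapes["D5"][0] + shapes["E3"][0] + shapes["D5"][0]
print(shapes["E3"], shapes["D5"], shapes["E8"], shapes["D8"], d6_in_channels)
```

With these assumptions the encoder bottleneck (E8) is 1 × 1 and D8 returns to 256 × 256 with 3 channels.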

2. The feature map nearest neighbor replacement based image filling system of a convolutional neural network of claim 1, wherein feature block p2 is the feature block closest to feature block p1 in cosine distance.
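The replacement procedure of claims 1 and 2 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the patented implementation: feature blocks are taken as 1 × 1 (h = w = 1), feature maps are stored as `[H][W]` grids of C-dimensional lists, at least one unmasked location exists, and the function names are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest_neighbor_replace(dec_feat, enc_feat, mask):
    """Build the additional feature map.

    dec_feat: stand-in for the output feature map of D5, [H][W] grid of C-vectors.
    enc_feat: stand-in for the output feature map of E3, same layout.
    mask:     [H][W] grid, 1 for a mask point and 0 for a non-mask point.
    """
    height, width = len(mask), len(mask[0])
    channels = len(dec_feat[0][0])
    # Feature map to be assigned, initialised to 0 everywhere
    out = [[[0.0] * channels for _ in range(width)] for _ in range(height)]
    unmasked = [(i, j) for i in range(height) for j in range(width) if not mask[i][j]]
    for i in range(height):
        for j in range(width):
            if mask[i][j]:
                p1 = dec_feat[i][j]  # block from the masked region of the decoder map
                # p2: unmasked encoder block with the highest cosine similarity to p1
                bi, bj = max(unmasked, key=lambda ij: cosine(p1, enc_feat[ij[0]][ij[1]]))
                out[i][j] = list(enc_feat[bi][bj])  # assign p2 at the position of p1
    return out


enc = [[[1.0, 0.0], [9.0, 9.0], [0.0, 1.0]]]  # 1 x 3 grid, C = 2
dec = [[[0.0, 0.0], [0.1, 2.0], [0.0, 0.0]]]
mask = [[0, 1, 0]]                             # only the middle location is masked
extra = nearest_neighbor_replace(dec, enc, mask)
print(extra)
```

In the toy input, the masked decoder block `[0.1, 2.0]` points almost along the second axis, so the unmasked encoder block `[0.0, 1.0]` is selected and copied into the middle position, while the other positions stay at 0.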

3. The feature map nearest neighbor replacement based image filling system of a convolutional neural network of claim 2, wherein the masked region and the unmasked region of the output feature map are computed as follows:

providing a mask image in place of the image to be filled, wherein the mask image has the same size as the image to be filled, has 1 channel, and takes feature values of 0 or 1;

0 indicates that the corresponding position on the image to be filled is a point that is not to be filled;

1 indicates that the corresponding position on the image to be filled is a point to be filled;

computing the masked region and the unmasked region of the feature map of the mask image through a convolutional network, wherein the convolutional network comprises a first to a third convolutional layer;

the mask image is the input of the first convolutional layer;

for the first to the third convolutional layers, the output of each layer is the input of the next layer;

the first to the third convolutional layers each perform 1 convolution operation with a 4 × 4 kernel and a stride of 2 on their input;

the output of the third convolutional layer is the feature map of the mask image, which has a size of 32 × 32 and 1 channel;

for the feature map of the mask image, when a feature value of the feature map is larger than a set threshold, the corresponding feature point is judged to be a mask point, and otherwise a non-mask point;

the masked region of the feature map of the mask image is the set of mask points, and the unmasked region of the feature map of the mask image is the set of non-mask points;

the masked region of the output feature map is equal to the masked region of the feature map of the mask image, and the unmasked region of the output feature map is equal to the unmasked region of the feature map of the mask image.
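The mask computation of claim 3 can be illustrated with a pure-Python sketch. The claim fixes only the kernel size, the stride, and the number of layers; the averaging weights (1/16), the zero padding of 1, and the threshold of 0.5 used here are assumptions, and the function names are hypothetical.

```python
KERNEL, STRIDE, PAD = 4, 2, 1  # padding of 1 is an assumption; the claim gives only size and stride


def mean_conv(grid):
    """One 4x4, stride-2 convolution with a single averaging kernel (weights assumed to be 1/16)."""
    n = len(grid)
    out_n = (n + 2 * PAD - KERNEL) // STRIDE + 1
    out = [[0.0] * out_n for _ in range(out_n)]
    for i in range(out_n):
        for j in range(out_n):
            total = 0.0
            for di in range(KERNEL):
                for dj in range(KERNEL):
                    r, c = i * STRIDE + di - PAD, j * STRIDE + dj - PAD
                    if 0 <= r < n and 0 <= c < n:  # zero padding outside the image
                        total += grid[r][c]
            out[i][j] = total / (KERNEL * KERNEL)
    return out


def mask_feature_regions(mask_image, threshold=0.5):
    """Return a grid of booleans: True marks a mask point, False a non-mask point."""
    feat = mask_image
    for _ in range(3):  # first to third convolutional layers
        feat = mean_conv(feat)
    return [[value > threshold for value in row] for row in feat]


# 256 x 256 mask image with a centred 128 x 128 hole (1 = point to be filled)
mask_image = [[1 if 64 <= r < 192 and 64 <= c < 192 else 0 for c in range(256)]
              for r in range(256)]
regions = mask_feature_regions(mask_image)
print(len(regions), len(regions[0]), regions[16][16], regions[0][0])
```

Three stride-2 layers reduce 256 × 256 to 32 × 32, matching the size stated in the claim; the centre of the hole is classified as a mask point and the image corner as a non-mask point.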

4. The feature map nearest neighbor replacement-based image filling system of the convolutional neural network as claimed in claim 3, wherein the generation network is trained under a guidance loss constraint, in which, during training of the generation network, a feature similarity constraint is imposed between the real image and the input image at any convolutional layer or deconvolution layer;

the input image is the real image after the masking operation.

5. The feature map nearest neighbor replacement-based image filling system of the convolutional neural network according to claim 4, wherein the generation network is trained in the following specific manner:

the target image I_gt is input into the generation network, the masked region of the feature map of the l-th layer is computed, and the information (Φ_l(I_gt))_y is obtained;

the image to be filled I is input into the generation network, the masked region of the feature map of the (L-l)-th layer is computed, and the information (Φ_{L-l}(I))_y is obtained;

the guidance loss constraint L_g is then defined as:

where Ω is the masked region, L is the total number of layers of the generation network, y is any coordinate point within the masked region, Φ_{L-l}(I) is the feature map output by the (L-l)-th layer of the generation network when the input is the image to be filled, (Φ_{L-l}(I))_y is the information at y in the masked region of the output feature map of the (L-l)-th layer, Φ_l(I_gt) is the feature map output by the l-th layer of the generation network when the input is the target image, and (Φ_l(I_gt))_y is the information at y in the masked region of the output feature map of the l-th layer.
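The formula for the guidance loss is not reproduced in the text of this claim. A form consistent with the symbols defined above, assuming a squared Euclidean distance between the two pieces of information at each point (the choice of norm is an assumption), would be:

$$
L_{g} = \sum_{y \in \Omega} \left\| \big(\Phi_{L-l}(I)\big)_{y} - \big(\Phi_{l}(I_{gt})\big)_{y} \right\|_{2}^{2}
$$

Read this way, the constraint pulls the decoder features of the image to be filled, inside the masked region, toward the encoder features of the target image at the mirrored layer.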

6. The feature map nearest neighbor replacement based image filling system of convolutional neural network of claim 5, wherein the discrimination network comprises convolutional layers E9 to E13;

the input of convolutional layer E9 is the filled image;

the output of convolutional layer E9 is activated by a Leaky ReLU function and then used as the input of convolutional layer E10;

for convolutional layers E10 to E13, the output of each layer is batch-normalized and activated by a Leaky ReLU function in sequence, and then used as the input of the next layer;

the output of convolutional layer E13, after batch normalization and Sigmoid activation in sequence, is the output of the discrimination network;

convolutional layer E9 performs 64 convolution operations with a 4 × 4 kernel and a stride of 2 on its input;

convolutional layer E10 performs 128 convolution operations with a 4 × 4 kernel and a stride of 2 on its input;

convolutional layer E11 performs 256 convolution operations with a 4 × 4 kernel and a stride of 2 on its input;

convolutional layer E12 performs 512 convolution operations with a 4 × 4 kernel and a stride of 1 on its input;

convolutional layer E13 performs 1 convolution operation with a 4 × 4 kernel and a stride of 1 on its input.

7. The feature map nearest neighbor replacement based image filling system of a convolutional neural network of claim 6, wherein the filled image is a 256 × 256 RGB image, and the output of convolutional layer E13 has a spatial size of 64 × 64 and 1 channel.

8. The feature map nearest neighbor replacement based image filling system of a convolutional neural network as claimed in claim 7, wherein said image filling system employs Adam optimization algorithm for end-to-end training.

Priority Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201711416650.4A CN108171663B (en) | 2017-12-22 | 2017-12-22 | Image filling system of convolutional neural network based on feature map nearest neighbor replacement |

Publications (2)

| Publication Number | Publication Date |
|---|---|
| CN108171663A (en) | 2018-06-15 |
| CN108171663B (en) | 2021-05-25 |

Family

ID=62520202

Family Applications (1)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| CN201711416650.4A Active CN108171663B (en) | 2017-12-22 | 2017-12-22 | Image filling system of convolutional neural network based on feature map nearest neighbor replacement |

Country Status (1)

| Country | Link |
|---|---|
| CN (1) | CN108171663B (en) |

Families Citing this family (10)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN109087375B (en) * | 2018-06-22 | 2023-06-23 | 华东师范大学 | Deep learning-based image cavity filling method |
| CN108898647A (en) * | 2018-06-27 | 2018-11-27 | Oppo(重庆)智能科技有限公司 | Image processing method, device, mobile terminal and storage medium |
| JP7202087B2 (en) * | 2018-06-29 | 2023-01-11 | 日本放送協会 | Video processing device |
| CN109300128B (en) * | 2018-09-29 | 2022-08-26 | 聚时科技(上海)有限公司 | Transfer learning image processing method based on convolution neural network hidden structure |
| WO2020098360A1 (en) * | 2018-11-15 | 2020-05-22 | Guangdong Oppo Mobile Telecommunications Corp., Ltd. | Method, system, and computer-readable medium for processing images using cross-stage skip connections |
| DE102019201702A1 (en) * | 2019-02-11 | 2020-08-13 | Conti Temic Microelectronic Gmbh | Modular inpainting process |
| CN110490203B (en) * | 2019-07-05 | 2023-11-03 | 平安科技(深圳)有限公司 | Image segmentation method and device, electronic equipment and computer readable storage medium |
| CN111242874B (en) * | 2020-02-11 | 2023-08-29 | 北京百度网讯科技有限公司 | Image restoration method, device, electronic equipment and storage medium |
| CN111614974B (en) * | 2020-04-07 | 2021-11-30 | 上海推乐信息技术服务有限公司 | Video image restoration method and system |
| CN112184566B (en) * | 2020-08-27 | 2023-09-01 | 北京大学 | Image processing method and system for removing adhered water mist and water drops |

Citations (3)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN104025588A (en) * | 2011-10-28 | 2014-09-03 | 三星电子株式会社 | Method and device for intra prediction of video |
| CN106952239A (en) * | 2017-03-28 | 2017-07-14 | 厦门幻世网络科技有限公司 | image generating method and device |
| CN107133934A (en) * | 2017-05-18 | 2017-09-05 | 北京小米移动软件有限公司 | Image completion method and device |

Family Cites Families (1)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| US10319076B2 (en) * | 2016-06-16 | 2019-06-11 | Facebook, Inc. | Producing higher-quality samples of natural images |

Non-Patent Citations (2)

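| Title |
|---|
| Image multi-layer perceptual dehazing algorithm under a generative adversarial mapping network; Li Ce et al.; Journal of Computer-Aided Design & Computer Graphics; 2017-10-31; Vol. 29 (No. 10); full text * |
| A survey of theoretical models and applications of generative adversarial networks; Xu Yifeng; Journal of Jinhua Polytechnic; 2017-06-30; full text * |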

Also Published As

| Publication number | Publication date |

|---|---|

| CN108171663A (en) | 2018-06-15 |

Similar Documents

| Publication | Publication Date | Title |
|---|---|---|
| CN108171663B (en) | | Image filling system of convolutional neural network based on feature map nearest neighbor replacement |
| CN112052787B (en) | | Target detection method and device based on artificial intelligence and electronic equipment |
| CN106599900B (en) | | Method and device for recognizing character strings in image |
| CN108805828B (en) | | Image processing method, device, computer equipment and storage medium |
| WO2022105125A1 (en) | | Image segmentation method and apparatus, computer device, and storage medium |
| CN110807757B (en) | | Image quality evaluation method and device based on artificial intelligence and computer equipment |
| CN111723732A (en) | | Optical remote sensing image change detection method, storage medium and computing device |
| CN110675339A (en) | | Image restoration method and system based on edge restoration and content restoration |
| CN112418195B (en) | | Face key point detection method and device, electronic equipment and storage medium |
| CN112101207B (en) | | Target tracking method and device, electronic equipment and readable storage medium |
| CN112214775A (en) | | Injection type attack method and device for graph data, medium and electronic equipment |
| CN114549913B (en) | | Semantic segmentation method and device, computer equipment and storage medium |
| CN112784954A (en) | | Method and device for determining neural network |
| CN111709415B (en) | | Target detection method, device, computer equipment and storage medium |
| CN111724370A (en) | | Multi-task non-reference image quality evaluation method and system based on uncertainty and probability |
| CN114266894A (en) | | Image segmentation method and device, electronic equipment and storage medium |
| CN111179270A (en) | | Image co-segmentation method and device based on attention mechanism |
| CN111639230B (en) | | Similar video screening method, device, equipment and storage medium |
| CN116580257A (en) | | Feature fusion model training and sample retrieval method and device and computer equipment |
| CN114282059A (en) | | Video retrieval method, device, equipment and storage medium |
| US20220207861A1 (en) | | Methods, devices, and computer readable storage media for image processing |
| CN110135428A (en) | | Image segmentation processing method and device |
| CN112560034B (en) | | Malicious code sample synthesis method and device based on feedback type deep countermeasure network |
| CN113592008A (en) | | System, method, equipment and storage medium for solving small sample image classification based on graph neural network mechanism of self-encoder |
| CN111079930A (en) | | Method and device for determining quality parameters of data set and electronic equipment |

Legal Events

| Date | Code | Title | Description |
|---|---|---|---|
| | PB01 | Publication | |
| | SE01 | Entry into force of request for substantive examination | |
| | GR01 | Patent grant | |