CN106023969B - Method for applying audio effects to one or more tracks of a music compilation - Google Patents

Method for applying audio effects to one or more tracks of a music compilation Download PDFInfo

- Publication number

- CN106023969B CN106023969B CN201610208527.2A CN201610208527A CN106023969B CN 106023969 B CN106023969 B CN 106023969B CN 201610208527 A CN201610208527 A CN 201610208527A CN 106023969 B CN106023969 B CN 106023969B

- Authority

- CN

- China

- Prior art keywords

- audio

- track

- music

- note

- instrument

- Prior art date

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Expired - Fee Related

Links

Images

Classifications

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H1/00—Details of electrophonic musical instruments

- G10H1/0008—Associated control or indicating means

- G10H1/0025—Automatic or semi-automatic music composition, e.g. producing random music, applying rules from music theory or modifying a musical piece

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H1/00—Details of electrophonic musical instruments

- G10H1/0033—Recording/reproducing or transmission of music for electrophonic musical instruments

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H1/00—Details of electrophonic musical instruments

- G10H1/0091—Means for obtaining special acoustic effects

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H1/00—Details of electrophonic musical instruments

- G10H1/36—Accompaniment arrangements

- G10H1/38—Chord

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H2210/00—Aspects or methods of musical processing having intrinsic musical character, i.e. involving musical theory or musical parameters or relying on musical knowledge, as applied in electrophonic musical tools or instruments

- G10H2210/031—Musical analysis, i.e. isolation, extraction or identification of musical elements or musical parameters from a raw acoustic signal or from an encoded audio signal

- G10H2210/081—Musical analysis, i.e. isolation, extraction or identification of musical elements or musical parameters from a raw acoustic signal or from an encoded audio signal for automatic key or tonality recognition, e.g. using musical rules or a knowledge base

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H2210/00—Aspects or methods of musical processing having intrinsic musical character, i.e. involving musical theory or musical parameters or relying on musical knowledge, as applied in electrophonic musical tools or instruments

- G10H2210/325—Musical pitch modification

- G10H2210/331—Note pitch correction, i.e. modifying a note pitch or replacing it by the closest one in a given scale

- G10H2210/335—Chord correction, i.e. modifying one or several notes within a chord, e.g. to correct wrong fingering or to improve harmony

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H2210/00—Aspects or methods of musical processing having intrinsic musical character, i.e. involving musical theory or musical parameters or relying on musical knowledge, as applied in electrophonic musical tools or instruments

- G10H2210/571—Chords; Chord sequences

- G10H2210/576—Chord progression

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H2210/00—Aspects or methods of musical processing having intrinsic musical character, i.e. involving musical theory or musical parameters or relying on musical knowledge, as applied in electrophonic musical tools or instruments

- G10H2210/571—Chords; Chord sequences

- G10H2210/586—Natural chords, i.e. adjustment of individual note pitches in order to generate just intonation chords

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H2220/00—Input/output interfacing specifically adapted for electrophonic musical tools or instruments

- G10H2220/091—Graphical user interface [GUI] specifically adapted for electrophonic musical instruments, e.g. interactive musical displays, musical instrument icons or menus; Details of user interactions therewith

- G10H2220/101—Graphical user interface [GUI] specifically adapted for electrophonic musical instruments, e.g. interactive musical displays, musical instrument icons or menus; Details of user interactions therewith for graphical creation, edition or control of musical data or parameters

- G10H2220/106—Graphical user interface [GUI] specifically adapted for electrophonic musical instruments, e.g. interactive musical displays, musical instrument icons or menus; Details of user interactions therewith for graphical creation, edition or control of musical data or parameters using icons, e.g. selecting, moving or linking icons, on-screen symbols, screen regions or segments representing musical elements or parameters

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H2220/00—Input/output interfacing specifically adapted for electrophonic musical tools or instruments

- G10H2220/091—Graphical user interface [GUI] specifically adapted for electrophonic musical instruments, e.g. interactive musical displays, musical instrument icons or menus; Details of user interactions therewith

- G10H2220/101—Graphical user interface [GUI] specifically adapted for electrophonic musical instruments, e.g. interactive musical displays, musical instrument icons or menus; Details of user interactions therewith for graphical creation, edition or control of musical data or parameters

- G10H2220/126—Graphical user interface [GUI] specifically adapted for electrophonic musical instruments, e.g. interactive musical displays, musical instrument icons or menus; Details of user interactions therewith for graphical creation, edition or control of musical data or parameters for graphical editing of individual notes, parts or phrases represented as variable length segments on a 2D or 3D representation, e.g. graphical edition of musical collage, remix files or pianoroll representations of MIDI-like files

-

- G—PHYSICS

- G10—MUSICAL INSTRUMENTS; ACOUSTICS

- G10H—ELECTROPHONIC MUSICAL INSTRUMENTS; INSTRUMENTS IN WHICH THE TONES ARE GENERATED BY ELECTROMECHANICAL MEANS OR ELECTRONIC GENERATORS, OR IN WHICH THE TONES ARE SYNTHESISED FROM A DATA STORE

- G10H2220/00—Input/output interfacing specifically adapted for electrophonic musical tools or instruments

- G10H2220/135—Musical aspects of games or videogames; Musical instrument-shaped game input interfaces

Abstract

A system and process for making a more harmonious musical accompaniment for a musical compilation, the process comprising: determining a plurality of possible tone-symbols of the music compilation; authoring a musical interval distribution matrix for each of the possible tone-symbols; obtaining the product of the major pitch interval distribution matrix and each of the pitch interval distribution matrices; summing each of the major key interval products into a current major key; obtaining the product of minor key interval distribution and each of the interval distribution matrixes; summing each of the minor pitch interval products into a current minor pitch; and selecting a most likely tone-symbol from the plurality of possible tone-symbols by comparing the minor and major tone sums.

Description

The present application is a divisional application entitled "system and method for making a more harmonious musical accompaniment and for applying a chain of effects to a musical composition" from its parent application, having a filing date of 2012, 7 and 30 and an application number of 201280048059.7.

This application claims priority from: U.S. provisional patent application No. 61/182,982 filed on 1/6/2009; U.S. provisional patent application No. 61/248,238 filed on 2/10/2009; U.S. provisional patent application No. 12/791,792 filed on 3.12.2009; U.S. patent application nos. 12/791,792, 12/791,798, 12/791,803 and 12/791,807, all filed on 1/6/2010.

Technical Field

The present invention relates generally to the creation of music and, more particularly, to a system and method for making a more harmonious musical accompaniment.

Background

Music is a well-known form of human self-expression with good reputation. However, the personal appreciation of such artistic efforts can be obtained in different ways. In general, this person can enjoy music more easily by listening to the creations of other persons rather than generating music by himself or herself. The ability to listen to and recognize attractive music tracks is natural to many people, while the ability to manually compose a suitable collection of notes remains far from being reached. The ability of a person to compose new music may be constrained by the time, money and/or skill necessary to learn an instrument good enough to accurately reproduce the tune at will. For most people, their own imagination can be a source of new music, but their ability to hum or sing the same tune limits the extent to which their tunes can be formally retained and re-created for others to enjoy.

Recording the performance of a player may also be a laborious process. Multiple bends (take) of the same material are recorded and scrutinized with great care until a single bend can be assembled with all flaws eliminated. A good passage usually requires the talented artist to adjust his or her performance accordingly under the direction of another artist. In the case of amateur records, the best curved segments are the result of machine coincidence and therefore cannot be repeated. Often, amateur players make music pieces that both the good part and the bad part have. If a song can be constructed without having to analyze each part of each segment too finely, the recording process will be much simpler and more enjoyable. It is with respect to these and other considerations that the present invention has been made.

In addition, music that a person desires to compose may be complex. For example, a conceivable tune may have more than one instrument that may be played simultaneously with other instruments in a possible arrangement. This complexity further increases the time, skill and/or money required for an individual person to generate a desired sound combination. The physical configuration of most instruments also requires full physical attention from a person to manually generate the notes, further requiring additional personnel to play additional portions of the desired tune. Furthermore, additional auditing and management may then be necessary to ensure proper interaction of the various involved instruments and elements of the desired tune.

Even for those who have enjoyed the creation of their own music, those listeners may lack the expertise to implement the proper composition and type of music creation. Thus, the composed music may contain notes that are not within the same musical key or string. In most musical styles, the presence of a key-off or string-off note (often referred to as a "dissonant" note) makes the music unpleasant and harsh. Accordingly, music listeners often create music that sounds unpleasant and unprofessional due to their lack of experience and training.

For some people, the artistic inspiration is not constrained by the same time and location limitations typically associated with the generation and recording of new music. For example, when the idea of a new tune is suddenly shaped, a person may not be in a production studio with playable instruments in hand. After a brief lapse of inspiration, the person may not be able to regain the full extent of the original tune, resulting in a loss of artistic effort. Furthermore, the person may become frustrated with the time and effort applied when only inferior and incomplete versions of his or her original music affordance can be re-created.

Specialized music composition and editing software tools are generally available today. However, these tools exhibit entrance thresholds that are prohibitive for novice users. Such a complex user interface may quickly diminish the enthusiasm of any novices dared to venture on their art fantasy roads. Being limited to professional sound server suites also tie up the style of mobile creators who want to refine tunes in the move.

What is needed is a music composition system and method that can easily interact with most of the basic abilities of a user, yet can achieve music composition as complex as the imagination and expectations of the user. There is also a related need to facilitate musical compositions without dissonant notes. Further, there is a need in the art for a music authoring (authoring) system that is capable of generating a music compilation track by aggregating portions of a plurality of pieces of music based on automated selection criteria. It is also desirable to implement such a system in a manner that is not limited by the user's location when inspiration occurs, thereby enabling the capture of the first expression of a new tune.

There is a need in the art for a system and method that can create a compilation track from a plurality of segments by automatically evaluating the quality of previously recorded audio tracks and selecting the best of the previously recorded audio tracks recorded via an electronic authoring system.

It would also be desirable to implement a system and method for cloud-based music authoring whereby the processing-intensive functions are implemented by a server that is remote from the client device. However, since digital music authoring relies on huge amounts of data, such a configuration is generally limited by several factors. Processing, storing, and servicing such large amounts of data can be enormous for the provider unless the central processor is extremely powerful and therefore expensive from a cost and latency standpoint. Given the current cost for storing and sending data, the transmission of data from a presence server to a client may quickly become cost prohibitive and may also increase undesirable latency. From the client perspective, bandwidth limitations may also lead to significant latency issues, which detract from the user experience. Accordingly, there remains a need in the art for systems that address and overcome these deficiencies.

Drawings

Non-limiting and non-exhaustive embodiments are described with reference to the following figures. In the drawings, like reference numerals refer to like parts throughout the various figures unless otherwise specified.

For a better understanding of the present disclosure, reference will be made to the following detailed description which is to be read in association with the accompanying drawings, wherein:

1A, 1B, and 1C illustrate several embodiments of systems in which aspects of the invention may be implemented;

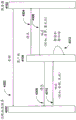

FIG. 2 is a block diagram of one embodiment of possible components of the audio converter 140 of the system of FIG. 1;

FIG. 3 illustrates an exemplary embodiment of a progression of a music compilation;

FIG. 4 is a block diagram of one embodiment of possible components of the soundtrack partitioner 204 of the system of FIG. 2;

FIG. 5 is an exemplary spectral diagram illustrating a frequency distribution of an audio input having a fundamental frequency and a plurality of harmonics;

FIG. 6 is an exemplary pitch versus time graph illustrating the pitch of a human voice that varies between a first and second pitch and is then positioned around the second pitch;

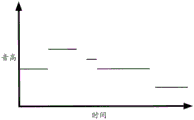

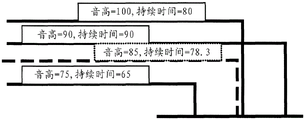

FIG. 7 is an exemplary embodiment of a morphology plotted as pitch events versus time, each pitch event having a discrete duration;

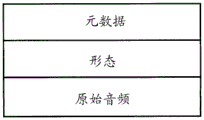

FIG. 8 is a block diagram illustrating the contents of a data file in one embodiment of the invention;

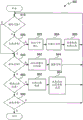

FIG. 9 is a flow diagram illustrating one embodiment of a method for generating a music track within a continuous loop of recorded accompaniment;

10, 10A and 10B together form an illustration of one possible user interface for generating a music track within a continuous loop of recorded accompaniment;

FIG. 11 is an illustration of one possible user interface for a calibration recording accompaniment;

12A, 12B, and 12C together illustrate a second possible user interface associated with the generation of a music track within a continuous loop of recorded accompaniment at three separate time periods;

13A, 13B and 13C together illustrate one possible use of a user interface for modifying a music track input into a system using the user interface of FIG. 12;

14A, 14B, and 14C together illustrate one possible user interface for creating a rhythm audio track at three separate time periods;

FIG. 15 is a block diagram of one embodiment of possible components of MTAC module 144 of the system of FIG. 1;

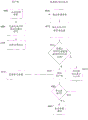

FIG. 16 is a flow chart illustrating one possible process for determining the musical key reflected by one or more notes of an audio input;

FIG. 16A illustrates a interval distribution matrix that may be used to better determine tone-symbols;

16B and 16C illustrate a minor key and a minor key interval distribution matrix, respectively, used in association with the interval distribution matrix to provide preferred tone-symbol determinations;

17, 17A and 17B together form a flow diagram illustrating one possible process for scoring portions of a music track based on chordal sequence constraints;

FIG. 18 illustrates one embodiment of a process for determining the centroid of a morphology;

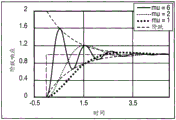

FIG. 19 illustrates a step response of a harmonic oscillator over time, with a damped response, an over-damped response, and an under-damped response;

FIG. 20 illustrates a logic flow diagram to show one embodiment for scoring portions of a musical input;

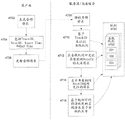

FIG. 21 illustrates a logic flow diagram for one embodiment of a process for composing a "best" track from a plurality of recording tracks;

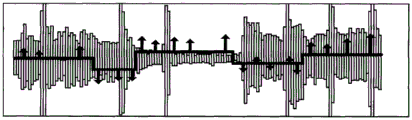

FIG. 22 illustrates one embodiment of an exemplary audio waveform and graphical representation of a score showing the difference in actual pitch from ideal pitch;

FIG. 23 illustrates one embodiment of a new audio track made up of partitions of previously recorded audio tracks;

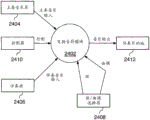

FIG. 24 illustrates a data flow diagram showing one embodiment of a process for harmonizing an accompaniment music input with a master music input;

FIG. 25 is a data flow diagram illustrating the process performed by the transform notes module of FIG. 24;

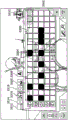

FIG. 26 illustrates an exemplary embodiment of a super keyboard;

27A-B illustrate two exemplary embodiments of a chord wheel;

FIG. 28 illustrates one exemplary embodiment of a network configuration in which the present invention may be implemented;

FIG. 29 illustrates a block diagram of a device that supports the processes discussed herein;

FIG. 30 illustrates one embodiment of a music network device;

FIG. 31 illustrates one possible embodiment of a first interface in a gaming environment;

FIG. 32 illustrates one possible embodiment of an interface for creating one or more master vocal or instrument tracks in the gaming environment of FIG. 31;

FIG. 33 illustrates one possible embodiment of an interface for creating one or more percussion instrument tracks in the gaming environment of FIG. 31;

34A-C illustrate a possible embodiment of an interface for creating one or more accompaniment tracks in the gaming environment of FIG. 31;

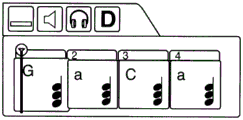

FIG. 35 illustrates one possible embodiment of a graphical interface depicting the progression of chords played as an accompaniment to a dominant music;

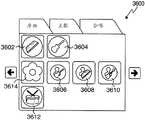

FIG. 36 illustrates one possible embodiment for selecting among different segments of a music compilation in the gaming environment of FIG. 31;

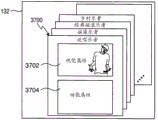

FIGS. 37A and 37B illustrate a possible embodiment of a file structure associated with a music asset that may be utilized in connection with the gaming environment of FIGS. 31-36;

FIG. 38 illustrates one embodiment of a presentation cache in accordance with the present invention;

FIG. 39 illustrates one embodiment of a logic flow diagram for one embodiment of obtaining audio for a requested note in accordance with the present invention;

FIG. 40 illustrates one embodiment of a flow diagram for implementing the cache control process of FIG. 39, in accordance with the present invention;

FIG. 41 illustrates one embodiment of an architecture for implementing a presentation cache in accordance with the present invention;

FIG. 42 illustrates a second embodiment of an architecture for implementing a presentation cache in accordance with the present invention;

FIG. 43 illustrates one embodiment of a signal diagram that illustrates communication between a client, a server, and an edge cache in accordance with the present invention;

FIG. 44 illustrates a second embodiment of a signal diagram illustrating communication between a client, a server, and an edge cache, according to an embodiment of the invention;

FIG. 45 illustrates an embodiment of a first process for optimizing an audio request processing queue according to the present invention;

FIG. 46 illustrates an embodiment of a second process for optimizing an audio request processing queue according to the present invention;

FIG. 47 illustrates an embodiment of a third process for optimizing an audio request processing queue according to the present invention;

FIG. 48 illustrates an exemplary embodiment of a live play loop according to an embodiment of the present invention;

FIG. 49 illustrates one embodiment of a series of effects that may be applied to a music compilation in accordance with the present invention;

FIG. 50 illustrates one embodiment of a series of musician character effects that can be applied to an instrument track in accordance with the present invention;

FIG. 51 illustrates one embodiment of a series of producer character effects that may be applied to musical instrument tracks in accordance with the present invention;

fig. 52 illustrates one embodiment of a series of producer character effects that may be applied to a compilation track in accordance with the present invention.

Detailed Description

The present invention now will be described more fully hereinafter with reference to the accompanying drawings, which form a part hereof, and which show, by way of illustration, specific exemplary embodiments by which the invention may be practiced. This invention may, however, be embodied in many different forms and should not be construed as limited to the embodiments set forth herein; rather, these embodiments are provided so that this disclosure will be thorough and complete, and will fully convey the scope of the invention to those skilled in the art. The present invention may be embodied as, among other things, methods or devices. Accordingly, the present invention may take the form of an entirely hardware embodiment, an entirely software embodiment or an embodiment combining software and hardware aspects. The following detailed description is, therefore, not to be taken in a limiting sense.

And (4) defining.

Throughout the specification and claims, the following terms take the meanings explicitly associated herein, unless the context clearly dictates otherwise. The phrase "in one embodiment" as used herein does not necessarily refer to the same embodiment, although it may. Moreover, the phrase "in another embodiment" as used herein does not necessarily refer to a different embodiment, although it may. Thus, as described below, various embodiments of the present invention may be readily combined without departing from the scope or spirit of the present invention.

Further, as used herein, the term "or" is an inclusive "or" operator, and is equivalent to the term "and/or," unless the context clearly dictates otherwise. The term "based on" is not exclusive and allows for being based on additional factors not described, unless the context clearly dictates otherwise. Furthermore, throughout the specification, the meaning of "a", "an" and "the" includes plural references. The meaning of "in … …" includes "in … …" and includes plural references. The meaning of "in … …" includes "in … …" and "on … …".

As used herein, the term "music input" refers to any signal input containing music and/or control information transmitted over a variety of media including, but not limited to, air, microphones, in-line mechanisms, and the like. The music input is not limited to the frequency of signal input that may be heard by the human ear, and may include other frequencies beyond those that may be heard by the human ear or in a form that is not readily heard by the human ear. Furthermore, the use of the term "musical" is not intended to convey an inherent need for tempo, rhythm, or the like. Thus, for example, a musical input may include various inputs such as taps (including single taps), taps, human inputs such as speech (e.g., do, re, mi), percussive inputs (e.g., ka, cha, da-da), etc., as well as indirect inputs via transmission through musical instruments or other amplitude and/or frequency generation mechanisms, including but not limited to microphone inputs, line-in inputs, MIDI inputs, files with signal information usable to convey musical inputs, or other inputs that enable transmitted signals to be converted to music.

As used herein, the term "musical key" is a harmonious set of musical notes. The pitch is usually major or minor. Musicians frequently talk about a piece of music as C major "key", which implies, for example, that a piece of music harmonically centers on note C and utilizes a major scale with the first note or key as C. A major scale is an octave progression made up of complete and large semitones (e.g., C D E F G a B or do re mifa so la ti). With respect to pianos, for example, the center C (sometimes referred to as "C4") has a frequency of 261.626Hz, while D4 is 293.665 Hz; e4 is 329.628 Hz; f4 is 349.228 Hz; g4 is 391.995 Hz; a4 is 440.000 Hz; and B4 is 493.883 Hz. Although the same note on other instruments will be played at the same frequency, it is also understood that some instruments play in one tone or another.

As used herein, the term "inharmonious note" is a note that is not in the correct musical tone or string, where the correct musical tone and correct string are the musical tones or strings currently being played by another musician or music source.

As used herein, the term "blue note" is a note that is not in the correct musical key or string but is allowed to play without transformation.

As used herein, the term "notes of the accompaniment music input" is notes performed by the accompanying player in association with notes performed in the corresponding theme melody.

General description of the invention.

Various embodiments are briefly described below to provide a basic understanding of some aspects of the invention. This brief description is not intended as a comprehensive overview. It is not intended to identify key or critical elements or to delineate or otherwise narrow the scope. Its sole purpose is to present some concepts in a simplified form as a prelude to the more detailed description that is presented later.

Briefly, various embodiments are directed to generating a multi-track recording by looping through a set of previously recorded audio tracks and receiving audible input for each added audio track. In one embodiment, each audio track in a multi-track recording may be generated from audible vocal input from an end user. Each new audible input may be provided after repeated playback of the current recording, or cycled one or more times. This recording sequence, separated by a loop period during which no new audio track input is received, may allow the user to listen to the current recording thoroughly, continuously, and without the time-dependent stress of additional input being immediately required. Regardless of the loop in which the additional audio track is input, playback of the loop may also allow other actions to be performed, such as modifying a previous audio track or changing parameters of the recording system.

Further, at least one of the audio tracks in the multi-track recording may include one or more instrument sounds generated based on one or more different sounds provided in the audible input. Various forms of processing may be performed on the received audible input to create an audio track, including alignment and adjustment of the timing of the audible input, frequency identification and adjustment, conversion of the audible input to a timbre associated with the instrument, addition of known auditory cues associated with the instrument, and so forth. Further, each of these processes may be performed in real-time, allowing for near instantaneous playback of the generated audio track, and enabling immediate and subsequent receipt of another audible input for processing and overlaying as an audio track onto one or more previously recorded tracks in a multi-track recording.

In one embodiment, the loop or repeated portion of the multi-track recording may include a single piece of music. The length of the bar may be determined by the tempo and time signature (time signature) associated with the track. In another embodiment, the number of bars or loop points of playback of a multi-track recording may be dynamic. That is, the repetition of a first audio track in a multi-track recording may occur at a different time than the time of a second audio track in the multi-track recording. The adjustment of the dynamic loop point may be determined automatically, for example, based on the length of audible input for a subsequent audio track.

Various embodiments are also directed to automatically making a single "best" curve segment from a series of curve segments. In one embodiment, a plurality of episodes of a performance are recorded onto a multi-track recorder during one or more accompaniments. Each curve segment is automatically partitioned into segments. The quality of each partition of each of the plurality of curved segments is scored based on a selectable criterion, and the audio track is automatically constructed from the best quality segment of each curved segment. In one embodiment, the best segment is defined by the segment having the highest score from among the plurality of segment scores.

Various embodiments are further directed to protecting musicians from playing anharmonic notes. In one embodiment, notes of the accompanying instrument are received and from the master instrument. The notes from the accompanying instruments are then modified based on the pitch, strings, and/or timing of the key. In one embodiment, a virtual instrument may be provided in which the input tones of the instrument are dynamically mapped onto the safety notes. Thus, if a player of the virtual instrument accompanies the melody, the virtual instrument may identify safe notes including notes for the current string of the melody being accompanied or notes in the tone of the melody.

A device architecture.

FIG. 1 shows one embodiment of a system 100 that may be deployed on a variety of devices 50, and for purposes of illustration, the devices 50 may be any general purpose computer (FIG. 1A), handheld computing device (FIG. 1B), and/or dedicated gaming system (FIG. 1C). The system 100 may be deployed as an application installed on the device. Alternatively, the system may operate within an http browser environment, which may optionally utilize web plug-in technology to extend the functionality of the browser to implement the functionality associated with the system 100. The device 50 may include more or fewer components than those shown in fig. 29. However, those skilled in the art will appreciate that certain components are not necessary to operate the system 100, and other components such as a processor, microphone, video display, and audio speaker are important if not necessary to practice aspects of the invention.

As shown in fig. 29, device 50 includes a processor 2902, which processor 2902 may be a CPU that communicates with a mass storage 2904 via a bus 2906. The processor 2902 may also include one or more general purpose processors, digital signal processors, other special purpose processors, and/or ASICs, alone or in combination with each other, as will be appreciated by those skilled in the art upon review of the present specification, drawings, and claims. The device 50 also includes a power supply 2908, one or more network interfaces 2910, an audio interface 2912, a display driver 2914, a user input processing program 2916, an illuminator 2918, an input/output interface 2920, an optional tactile interface 2922, and an optional Global Positioning System (GPS) receiver 2924. The device 50 may also include a camera (not shown) that enables video to be acquired and/or associated with a particular multi-track recording. Video from a camera or other source may further be provided to an online social network and/or an online music community. Device 50 may also optionally communicate with a base station (not shown) or directly with another computing device. Other computing devices, such as base stations, may include additional audio-related components, such as a specialized audio processor, generator, amplifier, speakers, XLR connectors, and/or power supply.

Continuing with fig. 29, the power source 2908 can include rechargeable or non-rechargeable batteries, or can be provided by an external power source (such as an AC adapter or powered docking cradle that can also supplement and/or recharge the batteries). Network interface 2910 includes circuitry for coupling device 50 to one or more networks and is constructed for use with one or more communication protocols and techniques, including but not limited to: global system for mobile communications (GSM), Code Division Multiple Access (CDMA), Time Division Multiple Access (TDMA), User Datagram Protocol (UDP), transmission control protocol/internet protocol (TCP/IP), SMS, General Packet Radio Service (GPRS), WAP, Ultra Wideband (UWB), IEEE 802.16 worldwide interoperability for microwave access (WiMax), SIP/RTP, or any of a variety of other wireless communication protocols. Accordingly, the network interface 2910 may include a transceiving device or a Network Interface Card (NIC) as a transceiver.

The audio interface 2912 (fig. 29) is arranged to generate and receive audio signals, such as the sound of a human voice. For example, as best shown in fig. 1A and 1B, audio interface 2912 may be coupled to a speaker 51 and/or microphone 52 to enable music output and input into system 100. The display driver 2914 (fig. 29) is arranged to generate video signals to drive various types of displays. For example, the display driver 2914 may drive the video surveillance display 75 shown in fig. 1A, the video surveillance display 75 may be a liquid crystal, gas plasma, or Light Emitting Diode (LED) based display or any other type of display usable with a computing device. As shown in fig. 1B, the display driver 2914 may alternatively drive a handheld touch sensitive screen 80, the handheld touch sensitive screen 80 will also be arranged to receive input from an object such as a stylus or a number from a human hand via the user input handler 2916 (see fig. 31). Keypad 55 may include any input device (e.g., keyboard, game controller, trackball, and/or mouse) arranged to receive input from a user. For example, keypad 55 may include one or more buttons, numeric dials, and/or keys. Keypad 55 may also include command buttons associated with selecting and sending images.

As shown in FIG. 29, the mass storage 2904 includes a RAM 2924, a ROM 2926, and other storage devices. Mass memory 2904 illustrates examples of computer-readable storage media for storing information such as computer-readable instructions, data structures, program modules or other data. The mass memory 2904 stores a basic input/output system ("BIOS") 2928 used to control low-level operation of the device 50. The mass memory also stores an operating system 2930, a messenger 2934, a browser 2936, and other applications 2938 for controlling the operation of the device 50. It will be appreciated that the component may comprise a general-purpose operating system (such as a version of MAC OS, WINDOWS, UNIX, LINUX) or a special-purpose operating system (such as, for example, Xbox 360 System software, Wii IOS, Windows MobileTM, iOS, Android, webOS, QNX, or Symbian @. operating system). The operating system may include or interface with a Java virtual machine module that enables control of hardware components and/or operating system operations via Java application programs. The operating system may also include a secure virtual container, also commonly referred to as a "sandbox," that enables applications (e.g., Flash and Unity) to be securely executed.

One or more data storage modules 132 may be stored in memory 2904 of device 50. Portions of the information stored in the data storage module 132 may also be stored on a disk drive or other storage medium associated with the device 50, as will be appreciated by those skilled in the art upon review of the present specification, drawings, and claims. These data storage modules 132 may store multi-track recordings, MIDI files, WAV files, audio data samples, and a variety of other data and/or data formats or input melody data having any of the formats discussed above. The data storage module 132 may also store information describing various capabilities of the system 100, which may be sent to other devices, e.g., as part of a header during a communication, upon request, or in response to a particular event, etc. In addition, the data storage module 132 may also be employed to store social networking information including address books, friends lists, aliases, user profile information, and the like.

Applications on device 50 may also include a messenger 132 and a browser 134. The messenger 132 may be configured to initiate and manage messaging sessions using any of a variety of messaging communications including, but not limited to, email, Short Message Service (SMS), Instant Message (IM), Multimedia Message Service (MMS), Internet Relay Chat (IRC), mrrc, RSS feeds, and the like. For example, in one embodiment, the messenger 132 may be configured as an IM messaging application, such as an AOL instant messenger, Yahoo! messenger,. NET messenger server, ICQ, or the like. In another embodiment, the messenger 132 may be a client application configured to integrate and employ multiple messaging protocols. In one embodiment, the messenger 132 may interact with the browser 134 to manage messages. Browser 134 may include virtually any application configured to receive and render graphics, text, multimedia, and the like, in virtually any web-based language. In one embodiment, the browser application is enabled to employ Handheld Device Markup Language (HDML), Wireless Markup Language (WML), WMLScript, JavaScript, standard generalized markup language (SMGL), hypertext markup language (HTML), extensible markup language (XML), and the like, to display and send messages. However, any of a variety of other web-based languages may be employed, including Python, Java, and third party web plug-ins.

Of course, while the various applications discussed above are shown as being implemented on device 50, in alternative embodiments, one or more portions of each of these applications may be implemented on one or more remote devices or servers, with the inputs and outputs of each portion being communicated between device 50 and the one or more remote devices or servers over one or more networks. Alternatively, one or more of the applications may be packaged for execution on or downloaded from a peripheral device.

An audio transducer.

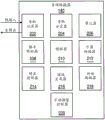

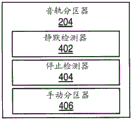

The audio converter 140 is configured to receive the audio data and convert it into a more meaningful form for use within the system 100. One embodiment of an audio transducer 140 is illustrated in fig. 2. In this embodiment, the audio converter 140 may include a variety of subsystems including a track recorder 202, a track partitioner 204, a quantizer 206, a frequency detector 208, a frequency shifter 210, an instrument converter 212, a gain controller 214, a harmonic generator 216, a special effects editor 218, and a manual adjustment controller 220. Connections to and interconnections between the various subsystems of the audio transducer 140 are not shown to avoid obscuring the invention, however, as will be understood by those skilled in the art in light of the present specification, drawings and claims, these subsystems will be electrically and/or logically connected.

The soundtrack recorder 202 enables a user to record at least one audio soundtrack from a vocal or musical instrument. In one embodiment, the user may record the track without any accompaniment. However, the track recorder 202 may also be configured to play audio automatically or upon user request, including a beat track (click track), a musical accompaniment, an initial tone against which the user may judge his/her pitch and timing, or even previously recorded audio. A "beat track" refers to a periodic clicking noise (such as that emitted by a mechanical metronome) intended to help a user maintain a consistent beat. The track recorder 202 may also enable the user to set the length of time to be recorded as a time limit (i.e., a number of minutes and seconds) or a number of music bars. When used in conjunction with MTAC module 144, as discussed below, soundtrack recorder 202 may also be configured to graphically indicate scores associated with various portions of recorded soundtracks to indicate, for example, when a user is out of tune, etc.

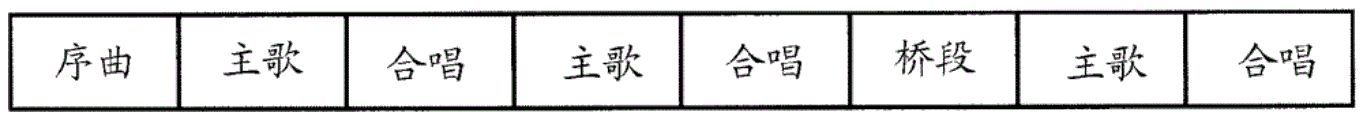

Typically, the music compilation is composed of a plurality of lyrics. For example, FIG. 3 illustrates a typical progression of popular songs, starting with a sequential song segment, followed by alternate verse and chorus segments, and a bridge segment, followed by a final verse. Of course, although not shown, other structures may be used, such as refrains, ending songs, and so forth. Thus, in one embodiment, the track recorder 202 may also be configured to enable the user to select a segment of a song for which the recorded audio track is to be used. The segments may then be arranged in any order (either automatically (based on the determination made by the type matcher module 152) or as selected by the end user) to compose a complete music compilation.

The track partitioner 204 divides the recorded audio tracks into separate partitions, which can then be addressed and potentially stored as individually addressable separate sound clips or files. The partitions are preferably chosen such that end-to-end spliced segments result in little or no audio artifacts. For example, let us assume that the audible input includes the phrase "pumpa pum". In one embodiment, the partitioning of the audible input may identify and distinguish each syllable of the audible input as a separate sound, such as "pum," pa, "and" pum. However, it should be understood that the phrase may be depicted in other ways, and that a single partition may include more than one syllable or word. Four sections (numbered "1", "2", "3" and "4") are illustrated on the display 75 in fig. 1A, 1B and 1C, each section comprising more than one syllable. As illustrated, the partition "1" has a plurality of notes that may reflect the same plurality of syllables that have been recorded by the track recorder 202 using input from the microphone 52 of a human or instrumental source.

To perform the division of the audible audio tracks into separate partitions, the audio track partitioner 204 may utilize one or more processes running on the processor 2902. In one exemplary embodiment shown in fig. 4, the audio track partitioner 204 may include a silence detector 402, a stop detector 404, and/or a manual partitioner 406, each of which may be used to partition an audio track into N partitions aligned in time. The track partitioner 204 can use the silence detector 302 to partition the track whenever silence is detected for a certain period of time. This "silence" may be defined by a volume threshold, such that when the audio volume falls below the defined threshold for a defined period of time, the location in the audio track is considered to be silent. Both the volume threshold and the time period may be configurable.

On the other hand, the stop detector 404 may be configured to use voice analysis (such as format analysis) to identify vowels and consonants in the audio track. For example, consonants (such as T, D, P, B, G, K) and nasal sounds are delimited by the blockage of airflow in their utterances. The location of a particular vowel or consonant may then be used to detect and identify points that are preferably partitioned. Similar to silence detector 402, the types of vowels and consonants utilized by stop detector 404 to identify partition points may be configurable. A manual partition 406 may also be provided to enable a user to manually delimit each partition. For example, the user may simply specify the length of time for each partition, thereby causing the audio track to be divided into a number of partitions each having equal length. The user may also be allowed to identify a specific location in the audio track where a partition is to be created. The identification may be performed graphically using a pointing device (such as a mouse or game controller) in conjunction with the type of graphical user interface shown in fig. 1A, 1B, and 1C. The identification may also be performed by pressing a button or key on a user input device, such as the keyboard 55, mouse 54, or game controller 56, during audible playback of the audio track by the track recorder 202.

Of course, while the functions of the silence detector 402, the stop detector 304, and the manual partitioner 406 have been described separately, it is contemplated that the audio track partitioner 204 may partition or divide the audio track into segments using any combination of silence detectors, stop detectors, and/or manual partitioners. Those skilled in the art will also appreciate in view of the foregoing description, drawings, and claims that other techniques for partitioning or dividing an audio track into segments may also be used.

The quantizer 206 is configured to quantize the partitions of the received audio tracks, which may utilize one or more processes running on the processor 2902. The process of quantizing (as that term is used herein) refers to the time-shifting of each previously created partition (and, therefore, the notes contained within that partition), as may be necessary to align the sound within the partition with a particular beat. Preferably, the quantizer 206 is configured to time-sequentially align the start of each partition with the previously determined beat. For example, a prosody may be provided in which each bar may include 4 beats and the alignment of the separated sounds may be made with respect to quarter-beat time increments, thereby providing 16 time points in each four-beat bar with which the partitions may be aligned. Of course, any number of increments of each bar (such as three beats for the waltz or boekard effect, two beats for the rock effect, etc.) and beat may be used, and at any time during the process, may be manually adjusted by the user or automatically adjusted based on certain criteria, such as a user selection of a particular style or type of music (e.g., blues, jazz, boekard, pop, rock, or waltz).

In one embodiment, each partition may be automatically aligned by the quantizer 206 at an available time increment at which it was most closely received at the time of recording. That is, if the sound starts between two time increments in the beat, the playback timing of the sound will be shifted chronologically forward or backward to one of the increments that is closer to its initial start time. Alternatively, each sound may be automatically shifted in time to each time increment immediately preceding the relative time at which the sound was initially recorded. In yet another embodiment, each sound may be automatically shifted in time to each time increment immediately after the relative time at which the sound was initially recorded. Alternatively or additionally, the time shift (how well) of each separate sound may also be affected based on the type selected for the multi-track recording, as discussed further below with respect to the type matcher 1252. In another embodiment, each sound may also be automatically time-aligned with a previously recorded track in a multi-track recording, thereby achieving a karaoke type effect. Further, the length of the separated sounds may be greater than one or more time increments, and the time-shifting of the quantizer 206 may be controlled to prevent the separated sounds from being time-shifted such that the separated sounds overlap within the same audio track.

To illustrate, fig. 5 depicts one example of a frequency spectrum that may result from the output of an FFT process performed on portions of a received audio track. As can be seen, the spectrum 400 includes one main peak 502 corresponding to pitch at a single fundamental frequency (F), as well as harmonics excited at 2F, 3F, 4F … … nF. There are additional harmonics in the spectrum, since when an oscillator such as a vocal cord or a violin string is excited at a single pitch, the oscillator typically vibrates at multiple frequencies.

In some instances, the identification of pitches may be complicated by additional noise. For example, as shown in fig. 5, the spectrum may include noise that appears as low amplitude spikes spread across the spectrum as a result of the audio input coming from a real-world oscillator such as a speech or musical instrument. In one embodiment, the noise may be extracted by filtering the FFT output below a certain noise threshold. In some instances, the identification of pitch may also be complicated by the presence of vibrato. Vibrato is an intentional frequency modulation that may be applied to a musical performance and is typically between 5.5Hz and 7.5 Hz. The vibrato may be filtered out of the FFT output by applying a band pass filter in the frequency domain, as in the noisy case, but filtering the vibrato may be undesirable in many situations.

In addition to the frequency domain methods discussed above, it is also contemplated that one or more time domain methods may be used to determine the pitch of one or more sounds in a partition. For example, in one embodiment, pitch may be determined by measuring the distance between zero crossings of the signal. Algorithms such as AMDF (average magnitude difference function), ASMDF (average mean square difference function), and other similar autocorrelation algorithms may also be used.

To make the determination of pitch most efficient, the pitched content can also be grouped into notes (of constant frequency) and glides (of steadily increasing or decreasing frequency). However, unlike musical instruments having frets or keys that naturally produce stable discrete pitches, human voices tend to slide into notes and rock in a continuous manner, making conversion to discrete pitches difficult. Thus, the frequency detector 208 may also preferably utilize pitch pulse detection to identify shifts or changes in pitch between the separate sounds within the partition.

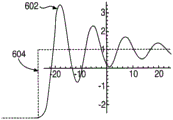

Pitch pulse detection is a method for delimiting pitch events that are focused on the trajectory of the control loop formed between the singer's voice and his perception of his voice. Generally, when a singer utters a voice, the singer hears the voice immediately thereafter. If the singer hears a pitch that is incorrect, he immediately modifies his speech to the intended pitch. The negative feedback loop can be modeled as a damped harmonic motion driven by periodic pulses. Thus, the human voice can be viewed as a single oscillator: the vocal cords. An example illustration of the pitch shifting and placement of the singer's voice 602 can be seen in fig. 6. Tension in the vocal cords controls pitch, and this change in pitch can be modeled by a response to a step function (such as step function 604 in fig. 6). Thus, the start of a new pitch event can be determined by finding the start of damped harmonic oscillations in pitch and observing the successive turning points of the pitch that converge to a stable value.

After a pitch event within a partition of an audio track has been determined, it may be converted and/or stored into a modality that is a graph of pitch events versus time. One example of a modality (without partitioning) is depicted in fig. 7. Thus, the morphology may include information identifying the onset, duration, and pitch of each sound, or any combination or subset of these values. In one embodiment, the morphology may be in the form of MIDI data, although morphology may refer to any representation of pitch versus time and is not limited to semitones or any particular prosody. For example, in the "Morphological Metrics" by Larry Polansky, incorporated herein by reference,Journal of New Music Researchother such examples of morphologies that may be used are described in volume 25, pp. 289-368, ISSN: 09929-8215.

The gain controller 214 may be configured to automatically adjust the relative volume of the audible input based on the volume of other previously recorded audio tracks and may utilize one or more processes running on the processor 2902. The harmonic generator 216 may be configured to incorporate harmonics into the audio tracks, which may utilize one or more processes running on the processor 2902. For example, different additional frequencies of the audible input signal may be determined and added to the produced audio track. Determining the additional frequency may also be based on the type from the type matcher 260 or by using other predetermined parameter settings entered by the user. For example, if the selected type is Waltz, it may be at a beat of "oom-pa-paThe additional frequency is selected in time from the major chord in the octave immediately below the dominant in harmony with the dominant music as follows: fundamental toneFundamental tone. The special effects editor 218 may be configured to add various effects to an audio track, such as echo, reverberation, and the like, preferably utilizing one or more processes running on the processor 2902.

The audio transducer 140 may also include a manual adjustment control 220 to enable a user to manually alter any settings automatically configured by the modules discussed above. For example, the manual adjustment control 220 may enable the user to alter the frequency of the audio input or portions thereof, among other options; enabling the user to alter the onset and duration of each discrete sound; increasing or decreasing the gain of the audio track; a different instrument to be applied to instrument transformer 212 is selected. The manual adjustment control 220 may be designed for use with one or more graphical user interfaces, as will be understood by those skilled in the art in light of the present specification, drawings, and claims. One particular graphical user interface will be discussed below in association with FIGS. 13A, 13B, and 13C.

Fig. 8 illustrates one embodiment of a file structure for a partition of an audio track that has been processed by the audio converter 140 or otherwise downloaded, retrieved or obtained from another source. As shown, in this embodiment, the file includes metadata associated with the file, obtained morphology data (e.g., having a MIDI format), and raw audio (e.g., having a. wav format). The metadata may include information indicative of a profile associated with a creator or supplier of the audio track partition. It may also include additional information related to the audio signature of the data, such as the pitch, tempo, and partition associated with the audio. The metadata may also include information about the possible available pitch shifts that may be applied to each note in the partition, the amount of time shift that may be applied to each note, and so on. For example, it will be appreciated that for live recorded audio, if the pitch is shifted more than a semitone, there is a possibility of distortion. Accordingly, in one embodiment, constraints may be placed on live audio to prevent the shifting of more than one semitone. Of course, different settings and different constraints may also be used. In another embodiment, the range of possible pitch shifts, time shifts, etc. may also be altered or established by the creator of the audio track partition or any individual (such as an administrator, partner, etc.) having substantial rights in the audio track partition.

Recording the live loop of accompaniment.

The record accompaniment live loop (RSLL) module 142 implements a digital audio workstation that, in conjunction with the audio transducer 140, enables the recording of audible input, the generation of separate audio tracks, and the creation of multi-track records. Thus, the RSLL module 1422 may enable any recorded audio track (spoken, singing, or otherwise) to be combined with a previously recorded track to create a multi-track recording. The RSLL module 142 is also preferably configured to loop at least one of the previously recorded multi-track recordings for repeated playback, as discussed further below. This repeated playback may be performed while a new audible input is being recorded or the RSLL module 142 is otherwise receiving instructions for the recording accompaniment that is currently ongoing. Thus, the RSLL module 142 allows the user to continue editing and synthesizing music tracks while playing and listening to previously recorded tracks. As will be appreciated from the discussion below, the continuous loop of previously recorded audio tracks also minimizes the user's perception of any latency that may be caused by the process applied to the audio track that the user is currently recording, when such a process is preferably completed.

Fig. 9 illustrates a logical flow diagram generally showing one embodiment of an overview process for creating a multi-track recording using the RSLL module 142 in conjunction with the audio transducer 140. In general, the operations of FIG. 9 generally represent recording an accompaniment. Such accompaniment may be newly created and completed each time the user employs the system 100 and, for example, the RSLL module 142. Alternatively, the previous accompaniment may be continued and certain elements thereof may also be loaded and applied, such as previously recorded multi-track recordings or other user-specified recording parameters.

In either arrangement, after a start block, the process 900 begins at decision block 910 where the user determines whether to playback a currently recorded multi-track recording. The process of playing back a current multi-track recording while enabling other actions to be performed is generally referred to herein as a "live loop". The content and duration of the portion of the multi-track recording currently being played back without explicit repetition is referred to as a "live loop". During playback, a multi-track recording may be accompanied by a tempo audio track, which typically includes a separate audio track not stored with the multi-track recording that provides a series of equally spaced reference sounds or tempos that audibly indicate the speed of the audio track and bar that the system is currently configured to record.

In an initial execution of process 900, an audio track may not have been generated. In this state, playback of the empty multi-track recording in block 910 may be simulated, and the beat track may provide the only sound played back to the user. However, in one embodiment, the user may choose to have the beat tracks muted, as discussed further below with respect to block 964. Visual cues may be provided to the user in conjunction with audio playback during recording. Even when the audio track is not recorded and the tempo track is faded, the indication of the simulated playback and the current playback position can be limited to only these visual cues, which may include, for example, a display of a change in a progress bar, pointer, or some other graphical indication (see, e.g., fig. 12A, 12B, and 12C).

The multi-track recording of the live loop played back in decision block 910 can include one or more audio tracks that have been previously recorded. The multi-track recording may include an overall length and a length for playback as a live loop. The length of the live loop may be selected to be less than the overall length of the multi-track recording, allowing a user to separately layer different pieces of the multi-track recording. The length of the live loop relative to the overall length of the multi-track recording may be manually selected by the user or alternatively automatically determined based on received audible input. In at least one embodiment, the overall length of the multi-track recording and the live loop may be the same. For example, the live loop and the multi-track recording may be a single piece of music in length.

When a multi-track recording is selected for playback at decision block 910, additional visual cues, such as visual representations of one or more tracks, can be provided in synchronization with the audio playback of the live loop that includes at least the portion of the multi-track recording for user playback. While the multi-track recording is being played, process 900 continues at decision block 920 where a determination is made by the end user whether to generate an audio track of the multi-track recording. Recording may be initiated based on receiving audible input, such as vocal audible input generated by an end user. In one embodiment, the detected magnitude of the audible input may trigger sampling and storage of the audible input signal received in the system 100. In an alternative embodiment, such audio track generation may be initiated by manual input received by the system 100. Furthermore, generating a new audio track may require both a detected audible input (such as from a microphone) and a manual indication. If a new audio track is to be generated, processing continues at block 922. If generation of an audio track has not been initiated, process 900 continues at decision block 940.

At block 922, audible input is received by the track recorder 202 of the audio transducer 140 and stored in the memory 2904 in the one or more data storage modules 132. As used herein, "audible" refers to the nature of an input to device 50, wherein the input, when provided, may be heard simultaneously, naturally, and directly by at least one user without amplification or other electronic processing. In one embodiment, the length of the recorded audible input may be determined based on an amount of time remaining within a live loop when the audible input is first received. That is, the recording of audible input may end after the length of time at the end of the live loop, regardless of whether a detectable amount of audible input is still being received. For example, if the length of the loop is one at each of four beats and the receipt of audible input is first detected or triggered at the beginning of the second beat, three beats corresponding to the second, third and fourth beats of the strip may be recorded equivalent to the audible input, and therefore these second, third and fourth beats will be looped in the multi-track recording playback that is continuously processed in block 910. In such an arrangement, any audible input received after the end of a single bar may be recorded and processed as a basis for another separate track of a multi-track recording. This additional processing of separate audio tracks may be represented as separate iterations through at least blocks 910, 920, and 922.

In at least one alternative embodiment, the length of loop playback may be dynamically adjusted based on the length of audible input received at block 922. That is, the audible input may automatically result in an extension of the length of the track of the multi-track recording currently being played back in block 910. For example, if additional audible input is received after the length of the current live loop has been played back, the longer audible input may be further recorded and maintained to be derived as a new audio track. In such an arrangement, previous tracks of the multi-track recording may be repeated within a subsequent live loop to match the length of the received audible input. In one embodiment, an integer number of repetitions of a shorter previous multitrack recording may be performed. This integral number of repetitions maintains the relationship, if any, between the bars of the previously recorded shorter multitrack recording. In this way, the loop point of multi-track recordings and live loops can be dynamically altered.

Similarly, the length of the audio track received at block 922 may be shorter than the length of the currently playing live loop (i.e., receiving audible input of only one bar during playback of four long live loops). In such an arrangement, an end of the audible input may be detected when additional audible input has not been received after a predetermined time (e.g., a selected number of seconds) after receipt and recording of the audible input of at least the threshold volume. In one embodiment, the detection of this silence may be based on the absence of input above a threshold volume for the current live cycle. Alternatively or additionally, the end of the audible input may be signaled by the receipt of a manual signal. The associated length of the shorter audible input may be determined in terms of the number of bars having the same number of beats as the multi-track recording. In one embodiment, this number of bars is selected as a factor of the length of the current live loop. In each case, once converted to a track at block 924, the audible input may be manually or automatically selected to repeat a number of times sufficient to match the length of the multi-track recording currently being played back.

In block 924, the received audible input may be converted to an audio track by the audio converter 140. As discussed above, the audio conversion process may include various operations including partitioning, quantization, frequency detection and shifting, instrument conversion, gain control, harmonic generation, adding special effects, and manual adjustment. The order of each of these audio conversion operations may be altered and, in at least one embodiment, may be configured by the end user. Further, each of these operations may be selectively applied, enabling conversion of audible input to an audio track with as much or as minimal additional processing as needed. For example, instrument conversions may not be selected, allowing one or more original sounds from the audible input to be substantially included in the generated audio track with its original timbre. In block 924, an echo cancellation process may be applied to filter out audio of other tracks played during a live loop from an actively recorded audio track. In one embodiment, this may be accomplished by: identifying an audio signal played during a live loop; determining any delay between the output audio signal and the input audio signal; filtering and delaying the output audio signal to be similar to the input audio signal; and subtracting the output audio signal from the input audio signal. One preferred echo cancellation procedure that may be used is that implemented by iztope, although other implementations may also be used. The process of block 924 may then be applied or removed, as discussed further herein with respect to block 942. After converting the audible input to the generated audio track at block 924, the process 900 continues at block 926.

At block 926, the generated audio track from block 924 may be added to the multi-track recording in real-time. This may be a multi-track already initiated or alternatively this may be a new multi-track with an audio track included as its first track. After block 926, the process 900 may begin again at decision block 910, where multiple tracks may be played back with the included most recently generated audio track. Although operations 922, 924, and 926 are shown as being performed serially in fig. 9, these steps may also be performed in parallel for each received audible input to further enable real-time recording and playback of the audible input signals. Such parallel processing may be performed, for example, for each separate sound identified from the audible input during each audible input, although alternative embodiments may include other differently sized portions of the audible input signal.

At decision block 940, a determination is made whether one or more audio tracks in the multi-track recording are to be modified. For example, input may be received indicating an end user desire to modify one or more previously recorded audio tracks. In one embodiment, the indication may be received through manual input. As mentioned above, the modification may also be performed during playback of a currently recorded multi-track recording, thereby allowing an immediate evaluation of the current state of the multi-track recording for the end user. In one embodiment, the indication may include one or more tracks of the multi-track recording to which it is desired to apply the adjustment. These tracks may also include one or more new tracks that are manually added to the multi-track recording. If an indication of a track modification is received, process 900 continues at block 942; otherwise, process 900 continues at decision block 960.

At block 942, parameters for one or more previously converted audio tracks are received and adjusted parameters may be input by the end user. The modified parameters may include any adjustments that may be done using the process of the audio converter 140, which may include, among other examples, fading or soloise the track, removing the entire track, adjusting the strike speed of instruments in the track, adjusting the volume level of the track, adjusting the tempo of playback of all tracks in the live loop, adding or removing separate sounds from selected time increments of the track, adjusting the length of the live loop, and/or the overall length of the multi-track recording. Adjusting the length of the live loop may include: altering the start and end points of the loop with respect to the overall multi-track recording, and/or may also include adding more tracks to the track currently being repeated in the live loop, adding and/or appending previously recorded strips of the multi-track recording (where at least a subset of the tracks were previously associated with the strips), or deleting strips from the multi-track recording. The addition of a new audio track may require that aspects of the new audio track be manually entered by the end user. Further at block 942, additional tracks may be searched for by using the sound searcher module 150 to facilitate reuse of previously recorded audio tracks by the end user.

At block 944, the adjusted parameters are applied to the one or more audio tracks indicated at decision block 940. The applying may include converting the adjusted parameters to a format compatible with the adjusted one or more audio tracks. For example, one or more numerical parameters may be adjusted to correspond to one or more values suitable for use in a MIDI or other protocol format. Following block 944, the process 900 may begin again at decision block 910, where at least the portion of the multi-track recording corresponding to the live loop may be played back utilizing the included one or more modified audio tracks.

At decision block 960, a determination is made whether the record settings are to be modified. For example, input may be received indicating whether a user desires to modify one or more aspects of record settings. The indication may also be received by a manual input. The indication may advance one or more parameter settings for the record settings to be adjusted. If the end user desires to modify the recording step, process 900 continues at block 962; otherwise, process 900 continues at decision block 980.

At block 962, the recording system may be calibrated. In particular, recording circuitry including at least an audio input source, an audio output source, and audio track processing components may be calibrated to determine, in conjunction with device 50, a latency of system 100, preferably measured in thousands of seconds between playback of sound through the audio output source and receipt of audible input through the audio input source. For example, if the recording circuitry includes headphones and a microphone, the latency may be determined by the RSLL142 to improve the reception and conversion of audible input, particularly the determination of the relative timing between the beats of a multi-track recording being played back and the received audible input. After calibration (if any) at block 962, the process 900 continues to block 964.

At block 964, other recording system parameter settings may be changed. For example, playback of the beat audio track may be turned on or off. In addition, default settings for a new track or new multi-track recording may be modified, such as a default tempo and default transition set that may provide audible input for block 924. The beat number of the current multi-track recording may also be changed at block 964. Other settings associated with the digital audio workstation may also be provided such that these other settings may be modified by the end user, as will be understood by those skilled in the art in light of the present specification, drawings and claims. After block 964, process 900 may return to decision block 910 where adjustments to the recording system may be applied to subsequent recordings and modifications of audio tracks of the multi-track recording.

At block 980, a determination is made whether to end recording the accompaniment. For example, an input indicating an end of the accompaniment may be received from a manual input. Alternatively, the device 50 may initiate the ending of the accompaniment if, for example, the data storage 132 is full. If an end of accompaniment indication is received, multiple track recordings may be stored and/or transmitted for additional operations. For example, multi-track records may be stored in the data store 132 for future retrieval, review and modification in a persistent or new accompaniment of the accompaniment that initially created the multi-track record. The multi-track record may also be transmitted from the device 50 to another device 50 over a network for storage in at least one remote data store associated with the user account. The transmitted multi-track recording may also be shared with the online music community through a web server, or may be shared in a game hosted by a web server.