WO2011151000A9 - Method and device for data processing - Google Patents

Method and device for data processing Download PDFInfo

- Publication number

- WO2011151000A9 WO2011151000A9 PCT/EP2011/002163 EP2011002163W WO2011151000A9 WO 2011151000 A9 WO2011151000 A9 WO 2011151000A9 EP 2011002163 W EP2011002163 W EP 2011002163W WO 2011151000 A9 WO2011151000 A9 WO 2011151000A9

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- alu

- register

- opcode

- alus

- instruction

- Prior art date

Links

- 238000012545 processing Methods 0.000 title claims abstract description 70

- 238000000034 method Methods 0.000 title claims abstract description 30

- 230000008569 process Effects 0.000 abstract description 11

- 230000015654 memory Effects 0.000 description 95

- 238000013507 mapping Methods 0.000 description 29

- 230000006870 function Effects 0.000 description 28

- 238000004422 calculation algorithm Methods 0.000 description 21

- 238000013461 design Methods 0.000 description 15

- 230000000694 effects Effects 0.000 description 15

- 239000013598 vector Substances 0.000 description 15

- 238000012546 transfer Methods 0.000 description 13

- 230000008878 coupling Effects 0.000 description 12

- 238000010168 coupling process Methods 0.000 description 12

- 238000005859 coupling reaction Methods 0.000 description 12

- 238000011156 evaluation Methods 0.000 description 12

- 230000004044 response Effects 0.000 description 12

- 230000008901 benefit Effects 0.000 description 9

- 230000001965 increasing effect Effects 0.000 description 9

- 230000006399 behavior Effects 0.000 description 8

- 239000003607 modifier Substances 0.000 description 7

- 238000011144 upstream manufacturing Methods 0.000 description 7

- 102100040023 Adhesion G-protein coupled receptor G6 Human genes 0.000 description 6

- PLQDLOBGKJCDSZ-UHFFFAOYSA-N Cypromid Chemical compound C1=C(Cl)C(Cl)=CC=C1NC(=O)C1CC1 PLQDLOBGKJCDSZ-UHFFFAOYSA-N 0.000 description 6

- 101100378639 Homo sapiens ADGRG6 gene Proteins 0.000 description 6

- 101001056707 Homo sapiens Proepiregulin Proteins 0.000 description 6

- 101100378640 Mus musculus Adgrg6 gene Proteins 0.000 description 6

- 102100025498 Proepiregulin Human genes 0.000 description 6

- 230000000644 propagated effect Effects 0.000 description 6

- 241000761456 Nops Species 0.000 description 5

- 238000004364 calculation method Methods 0.000 description 5

- 108010020615 nociceptin receptor Proteins 0.000 description 5

- 230000004913 activation Effects 0.000 description 4

- 238000004891 communication Methods 0.000 description 4

- 238000002474 experimental method Methods 0.000 description 4

- 230000010354 integration Effects 0.000 description 4

- 238000005457 optimization Methods 0.000 description 4

- 230000007480 spreading Effects 0.000 description 4

- 101100490563 Caenorhabditis elegans adr-1 gene Proteins 0.000 description 3

- 241001505100 Succisa pratensis Species 0.000 description 3

- 238000007792 addition Methods 0.000 description 3

- 238000003491 array Methods 0.000 description 3

- 238000010276 construction Methods 0.000 description 3

- 238000005516 engineering process Methods 0.000 description 3

- 238000007667 floating Methods 0.000 description 3

- 230000009191 jumping Effects 0.000 description 3

- 230000002093 peripheral effect Effects 0.000 description 3

- 230000002829 reductive effect Effects 0.000 description 3

- 230000008521 reorganization Effects 0.000 description 3

- ORVURRVCHHWGDK-UHFFFAOYSA-N 2-[[4-(3-chlorodiazirin-3-yl)benzoyl]amino]acetic acid Chemical compound C1=CC(C(=O)NCC(=O)O)=CC=C1C1(Cl)N=N1 ORVURRVCHHWGDK-UHFFFAOYSA-N 0.000 description 2

- 101100440639 Drosophila melanogaster Cont gene Proteins 0.000 description 2

- XUIMIQQOPSSXEZ-UHFFFAOYSA-N Silicon Chemical compound [Si] XUIMIQQOPSSXEZ-UHFFFAOYSA-N 0.000 description 2

- 230000009471 action Effects 0.000 description 2

- 230000003213 activating effect Effects 0.000 description 2

- 238000004458 analytical method Methods 0.000 description 2

- 239000003795 chemical substances by application Substances 0.000 description 2

- 230000009849 deactivation Effects 0.000 description 2

- 230000001419 dependent effect Effects 0.000 description 2

- 238000010586 diagram Methods 0.000 description 2

- 230000014509 gene expression Effects 0.000 description 2

- 230000002045 lasting effect Effects 0.000 description 2

- 230000000670 limiting effect Effects 0.000 description 2

- 230000007246 mechanism Effects 0.000 description 2

- 230000004048 modification Effects 0.000 description 2

- 238000012986 modification Methods 0.000 description 2

- 230000002441 reversible effect Effects 0.000 description 2

- 229910052710 silicon Inorganic materials 0.000 description 2

- 239000010703 silicon Substances 0.000 description 2

- 230000001360 synchronised effect Effects 0.000 description 2

- 230000002123 temporal effect Effects 0.000 description 2

- 238000012360 testing method Methods 0.000 description 2

- 241000282994 Cervidae Species 0.000 description 1

- 241000122205 Chamaeleonidae Species 0.000 description 1

- 241000614261 Citrus hongheensis Species 0.000 description 1

- 241001137251 Corvidae Species 0.000 description 1

- 239000006144 Dulbecco’s modified Eagle's medium Substances 0.000 description 1

- 241001522296 Erithacus rubecula Species 0.000 description 1

- 241000820057 Ithone Species 0.000 description 1

- 208000009144 Pure autonomic failure Diseases 0.000 description 1

- 230000002411 adverse Effects 0.000 description 1

- 230000003466 anti-cipated effect Effects 0.000 description 1

- 238000013528 artificial neural network Methods 0.000 description 1

- 230000005540 biological transmission Effects 0.000 description 1

- 230000015572 biosynthetic process Effects 0.000 description 1

- 230000008859 change Effects 0.000 description 1

- 230000002301 combined effect Effects 0.000 description 1

- 230000003247 decreasing effect Effects 0.000 description 1

- 230000003111 delayed effect Effects 0.000 description 1

- 238000012938 design process Methods 0.000 description 1

- 230000037213 diet Effects 0.000 description 1

- 235000005911 diet Nutrition 0.000 description 1

- 230000009977 dual effect Effects 0.000 description 1

- 238000005265 energy consumption Methods 0.000 description 1

- 230000002708 enhancing effect Effects 0.000 description 1

- 230000002779 inactivation Effects 0.000 description 1

- 150000002500 ions Chemical class 0.000 description 1

- 239000011159 matrix material Substances 0.000 description 1

- 239000003595 mist Substances 0.000 description 1

- 230000006855 networking Effects 0.000 description 1

- 235000015108 pies Nutrition 0.000 description 1

- 239000013312 porous aromatic framework Substances 0.000 description 1

- 230000000063 preceeding effect Effects 0.000 description 1

- 230000036316 preload Effects 0.000 description 1

- 230000001902 propagating effect Effects 0.000 description 1

- 230000007420 reactivation Effects 0.000 description 1

- 230000009467 reduction Effects 0.000 description 1

- 238000000926 separation method Methods 0.000 description 1

- 230000011664 signaling Effects 0.000 description 1

- 238000004088 simulation Methods 0.000 description 1

- 230000002311 subsequent effect Effects 0.000 description 1

- 238000003786 synthesis reaction Methods 0.000 description 1

- 230000007704 transition Effects 0.000 description 1

- 238000012800 visualization Methods 0.000 description 1

- 239000002699 waste material Substances 0.000 description 1

Classifications

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F9/00—Arrangements for program control, e.g. control units

- G06F9/06—Arrangements for program control, e.g. control units using stored programs, i.e. using an internal store of processing equipment to receive or retain programs

- G06F9/30—Arrangements for executing machine instructions, e.g. instruction decode

- G06F9/38—Concurrent instruction execution, e.g. pipeline or look ahead

- G06F9/3885—Concurrent instruction execution, e.g. pipeline or look ahead using a plurality of independent parallel functional units

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F8/00—Arrangements for software engineering

- G06F8/40—Transformation of program code

- G06F8/41—Compilation

- G06F8/44—Encoding

- G06F8/443—Optimisation

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F9/00—Arrangements for program control, e.g. control units

- G06F9/06—Arrangements for program control, e.g. control units using stored programs, i.e. using an internal store of processing equipment to receive or retain programs

- G06F9/30—Arrangements for executing machine instructions, e.g. instruction decode

- G06F9/38—Concurrent instruction execution, e.g. pipeline or look ahead

- G06F9/3836—Instruction issuing, e.g. dynamic instruction scheduling or out of order instruction execution

- G06F9/3853—Instruction issuing, e.g. dynamic instruction scheduling or out of order instruction execution of compound instructions

Definitions

- the present invention relates to a method of operating a low latency massive parallel data processing device in particular with respect to optimizer passes in high-language compilers.

- the disclosure comprises two major parts, namely a first part relating to mapping and scheduling particularly for a unit designated as FNC.

- HL compiler high-language compilers

- AAP ALU-Array-Processors

- GNU GCC compiler is used for the described exemplary implementation. Therefore reference is also made to the publically available "GNU Compiler Collection Internals by Richard . Stallman and the GCC Developer Community; for GCC Version 4.3.0", which is embodied in this specification for full disclosure and better understanding.

- the compiler pass AAPMAP works on the "RTL Representation" created by GCC.

- the RTL representation comprises a set of basic blocks that correspond to parts of the source code. Each basic block comprises a list of instructions. For short, GCC calls an instruction insn and a list of instructions is called insn-list. Please refer to Chapter 12 of the GCC Internals Manual for more details on the GCC RTL representation.

- the first pass of AAPMAP performs a preliminary mapping of insns to AAP opcodes. Details to AAP opcodes can be found in the description of the FNC ALU path, embodied in this

- insns can be reordered in the GCC insn-list if it improves the mapping. GCC then passes the modified insn-list to further GCC compiler passes.

- mapping of insns to AAP opcodes is recorded in a temporary data structure called codelist. After the first pass finishes, the codelist is deleted. The only lasting effect of the first pass of AAPMAP is the (potential) reordering of insns.

- AAPMAP iterates over all RTL basic blocks of a procedure generated by GCC and maps the insns of the basic blocks to ALUs of AAP opcodes in the codelist.

- the ALUs of an AAP opcode are filled from top-left to bottom-right (order: alO, arO, all, arl etc.) whenever possible, i.e. if an insn is available which can be mapped to the ALU.

- the order in which the insns are mapped i.e. scheduled

- the order of the insns cannot be changed anymore since the register

- insns may be allowed to write to the same register in one ALU row.

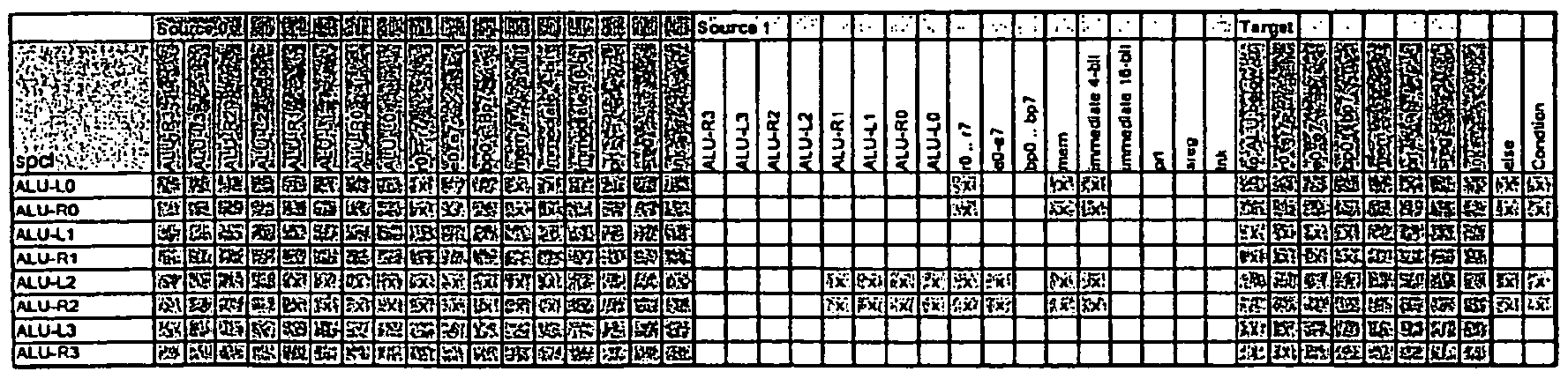

- mapping is depicted in the above shown diagram according to the structure of one embodiment of a multi-ALU array processor (AAP) .

- AAP multi-ALU array processor

- Two columns of ALUs are implemented, each column comprising 4 ALUs.

- the dataflow direction is preferably from top to bottom.

- ALUs receive operands from the register file and/or any ALUs located above. For details see e.g. Figs. 4, 5, 6, 13, 27, 28 of PCT/EP2009/007 15 and e.g. Figs. 1, 2, 4, 9, 10, 11.

- the assembler language is using a 3 address syntax:

- GCC ensures that the modified insn-list is passed on to the following compiler passes.

- maplist empty insn list

- insn first insn in insn-list of bb, ignoring NOTE and USE

- MAP_LIST (maplist) :

- opc last opcode in codelist or NULL if empty

- insn find first insn in maplist which can be mapped to opc at pos

- the function iterates the generated codelist and adds the pseudo-insns "NOP” , "

- ” define the spatial mapping of insns to ALUs in one AAP opcode.

- the pseudo-insn "NEXT” defines the temporal scheduling starting a new AAP opcode.

- the pseudo-insns cause GCC to generate the correct assembler output with the insns at the correct ALU positions in the AAP opcodes .

- the data from a load instruction is available in the next cycle from a special register called "mem".

- the read value is available from the target register only in the second cycle following the load instruction.

- the register is replaced with "mem” if possible; otherwise an additional "NEXT" pseudo-opcode is inserted creating an empty AAP opcode.

- the read access is replaced with the pseudo- register for the ALU output (a10 etc.) of the write access .

- alO all, al2 define operands to be received from ALUs in the left column, the following number defines the ALU row providing the operand.

- the syntax for defining operands being forwarded from one ALU to another within the ALU array is e.g. as such:

- An ALU may produce no output to the register file. While the results of ALUs of processors in the prior art are always written into the register file, AAP processors may transmit data solely from one ALU to another. As ALU sources are clearly defined, it is not necessary to define the target of the result producing ALU. In this case, the result producing ALU has no (register) target for the result, which is defined by Anyhow, ALUs using the respective result as operands can address that ALU with the previously described

- the producing ALU may address the receiving ALU. However, if multiple receivers exist, it becomes

- mapping is depicted in the above shown diagram according to the structure of one embodiment of a multi-ALU array processor (AAP) .

- AAP multi-ALU array processor

- Two columns of ALUs are implemented, each column comprising 4 ALUs.

- the dataflow direction is preferably from top to bottom.

- ALUs receive operands from the register file and/or any ALUs located above. For details see e.g. Figs. 4, 5, 6, 13, 27, 28 of

- the upper left field references to the upper left ALU in the respective datapath, the upper right field to the upper right ALU and the lower right field to the lower right ALU . All other fields reference to the datapath accordingly .

- the GCC insn-list is modified in the following way:

- GCC passes the modified insn-list to any following passes.

- values are often passed directly from one ALU to another ALU. Sometimes those values need to be stored into a register, as they will be used in another AAP opcode later, but often those values are never used in another place and there is no need to allocate a register for them. Typical examples for the latter are intermediate values computed while ' evaluating a complex expression.

- FNCMAP PROC is called twice for each procedure: before register allocation (from FNCMAP) and after (from rNSERTJvlAPPING INSNS).

- the boolean parameter "after ra" distinguishes the first and second call.

- maplist empty insn list

- opc last opcode in codelist orNULL ifempty

- the function iterates the generated codelist and adds the pseudo-insns "NOP”, "NEXT” and "

- the processor architecture according to the present invention can effect arbitrary jumps within the pipeline and does not need complex additional hardware such as those used for branch-prediction. Since no pipeline-stalls occur, the architecture achieves a significant higher average performance close to the theoretical maximum compared to conventional processors, in particular for algorithms comprising a large number of jumps and/or conditions.

- the execution of one instruction, in the first ALU-stage is necessary, in the second ALU-stage, the conditional execution of one instruction out of (at least) two, on the third ALU- stage the' conditional execution of one instruction out of (at least) four and on the n.th stage the conditional execution of an OpCode out of (at least) 2° is required.

- All ALUs may have and will have in the preferred embodiment reading and writing access to the common register set.

- the result of one ALU-stage is sent to the subsequent ALU-stage as operand. It should be noted that here "result" might refer to result-related data such as carry; overflow; sign flags and the like as well.

- Pipeline register stages may be used between different ALU-stages.

- it can be implemented to provide a pipeline-like register stage not downstream of every ALU-stage but only downstream of a given group of ALUs.

- the group-wise relation between ALUs and pipeline stages is preferred in a manner such that within an ALU group only exactly one conditional execution can occur.

- a register stage optionally following the multiplexer is decoupling the data transfer between ALU-stages in a pipelined— manner. It is to be noted that in a preferred embodiment there is no such register stage implemented.

- a multiplexer stage 0110 is provided selecting the operands for the first ALU-stage.

- a further multiplexer stage 0111 is selecting the results of the ALU-stages for the target registers in' 0109.

- Fig. 2 shows the. program flow control for the ALU-stage arrangement 0130 of Fig. 1.

- the instruction register 0201 holds the instruction to be executed at a given time within 0130.

- instructions are fetched by an instruction fetcher in the Usual manner, the instruction fetcher fetching the instruction to be executed from the address in the program memory defined by the program pointer PP (0210) .

- the first ALU stage 0101 is executing an instruction 0201a defined in a fixed manner by the instruction register 0201 determining .the operands for the ALU using the multiplexer stage 0110; furthermore, the function of the ALU is set in a similar manner.

- the ALU-flag generated by 0101 may be com bined (0203) with the processor flag register 0202 and ia sent to the subsequent ALU 0102 as the flag input data thereof .

- Each ALU-stage within 0103 can generate a status in response to which subsequent stages execute the corresponding jump without delay and continue with a corresponding instruction.

- one instruction 0205 of two possible instructions from 0201 is selected for ALU-stage 0102 by a multiplexer.

- the selection of the jump target is transferred by a jump vector.0204 to the subsequent ALU-stage.

- the multiplexer stage- 0105 selects the operands for the subse- quent ALU-stage 0102.

- the function of the ALU- stage 0102 is determined by the selected instruction 0205.

- ALU-stage 0101 has two possible jump targets, resulting in two possible instructions for ALU 0102.

- ALU 0102 in turn has two jump targets, this however being the case for each of the two jump targets of 0101.

- a binary tree of possible jump targets is created, each node of said tree having two branches here.

- the jump target selected is transmitted via signals 0208 to the subsequent ALU-stage 0103.

- the multiplexer stage 0106 selects the operands for the subsequent ALU-stage 0103. Also, the function of the ALU-stage 0103 is determined by the selected instruction 0207.

- the processing in the ALU-stages 0103, 0104 corresponds to the description of the other stages 0101 and 0102 respec- tively however, the instruction set from which is to be selected according to the predefined condition is 8 (for 0103)— or 16 (for ..0104) .respectively..

- the jump address memory is preferably implemented as part of the instruction word 0201.

- addresses are stored in the jump address memory 0212 in a relative manner (e. g. +/- 127), adding the selected jutrp address- ' using 0213 to the current program pointer 0210 and sending the program pointer to the next instruction to be loaded and executed.

- a relative manner e. g. +/- 127

- adding the selected jutrp address- ' using 0213 to the current program pointer 0210 and sending the program pointer to the next instruction to be loaded and executed.

- Flags of ALU-stage 0104 are combined with the flags obtained from the previous stages in the same manner as in the previous ALU-stage (compare 0209) and are written back into the flag register. This flag is the result flag of all ALU- operations within the ALU-stage arrangement 0130 and will be used as flag input to the ALU-path 0130 in the next cycle.

- the basic method of data processing allows for each ALU-etage of the multi-ALU-stage arrangement to execute and/or generate conditions and/or jumps.

- the result of the condition or the jump target respectively is transferred via flag vectors, e. g. 0206, or jum vectors,- e. g. 0208, to the respective subsequent ALU-stage", executing its operation depending on the incoming vectors, e. g. 0206 and .0208 by using flags and/or flag vectors for data processing, e. g. as operands and/or by selecting instructions to be executed by the jump vectors. This may include selecting the no-operation instruction, effectively disabling the ALU.

- each ALU can execute arbitrary jumpe which are implic- itly coded within the instruction word 0201 ⁇ without requiring and/or executing an explicit jump command.

- the program pointer is after the execution of the operations in the ALU- etage arrangement via 0213, leading to the execution of a jump to the next instruction to be loaded.

- the processor flag 0202 is consumed from the ALU-stages one after the 'other and combined and/or replaced with the result flag of the respective ALU.

- the result flag of the final result of all ALUs is returned to the processor flag register 0202 and defines the new processor status.

- the design or construction of the ALU-stage according to Fig. 2 can be become very complex and consumptious, ' given the fact that a large plurality of jumpB can be executed, increasing on the one hand the area needed while on the other hand in- creasing the complexity of the.design and simulation.

- the ALU-path may be simplified.

- an embodiment thereof is shown in Fig. 3. According to Fig. 3, the general deeign closely corresponds to that of Fig. 2 restricting however the set of possible jumps to two.

- the instructions for the first two ALUs 010L and 0102 are coded in the instruction registers 0301 in a fixed manner (fixed manner does not imply that the instruction is fixed during the hardware design process, but that it need not be altered during the execution of one program part loaded at one time into the device of Fig. 3) .

- ALU- stage 0102 can execute a jump, so that for ALU-stages 0103 and 0104 two .instructions each are stored in 0302, one of. each pair of instructions being selected at runtime depending on the jump target in response to the status of the ALU-stage 0102 using a multiplexer.

- ALU-stage 0104 can execute a jump having four possible targets stored in 0303.

- a target is selected by a multiplexer at runtime depending on the status of ALU-stage 0104 and is combined with a program pointer 0210 using an adder 0213.

- a multiplexer stage 0304, 0305, .0306 is provided between each ALU-stages that may comprise a register stage each. Preferably, no register stage is implemented so as to reduce latency.

- Side-ALUs 0131 although drawn in the figure at the side of the pipeline, need not be physically placed at the side of the ALU-stage/pipeline-arrangemen . Instead, they might be implemented on top thereof and/or beneath thereof, depending on the possibilities of the actual process used for building the processor in hardware. Side-ALUs 0131 receive their operands as necessary via a multiplexer 0110 from processor register 0109 and write back results to the processor register using multiplexer 0111. Thus, the way side-ALUs receive the necessary operands corresponds to the way the ALU-stage ' arrangement receives operands.

- side-units 0131 are referred to above and -in the following to be side-"ALUs" , in the same way that an XPP-like array can be coupled to the architecture of the invention as a side-ALU, other units may be used as "ALUs", for example and without limitation lookuptables, RAMs, ROMs, FIFOs or other kinds of memories, in par- . ticular memories that can be written in and/or read out from each and/or a plurality of the ALU-stages or ALUs in the multiple row.

- a plurality of ALU-stages can be implemented, each ALU- stage- being configured in a fixed manner for one of the possible branches.

- Fig. 4 shows a corresponding arrangement wherein the ALU- stage arrangement 0401 (corresponding to 0101 .... 0104 in the previous embodiment) is duplicated in a multiple way, thus implementing for branching zz-ALU-stages arrangements

- each ALU-stage arrangement 0401 to 0403 the operation is defined by specific, instructions of the Opcode not to be altered during the execution.

- the instructions comprise the specific ALU command and the source of each operand for each single ALU as well as the target register of any. Be it noted-- that the register set might be defined to be compatible with register and/or stack machine processor models.

- the statue signals are transferred from one ALU-stage to the next 0412. In this way, the status signale inputted into one ALU-row 0404, 0405, 0406, 0407 may select the respective active

- the status signal created within the ALU-rows corresponds, as described above, to the status of the "virtual" path, and thus the data path jumped to and ' actually run through, and is written back via 0413 to the status register 0920 of . he processor.

- the load/store processor is integrated in a Bide element, compare e. g. 0131, although in that case 0131 is preferably referred to not as a W side-ALU" but as a side-L/S- (load/store) -uni .

- This unit allows parallel and independent access to the memory.

- a plurality of side-L/S-units may be provided accessing different memories, memory parts and/or memory-hierarchies.

- L/S- units can be provided for fast access to internal lookup tables as well as for external memory accesses.

- the L/S-unit(s) need not necessarily be implemented as side-unit (s) but could be integrated into the processor as is known in the prior art.

- MCOPY additional load-store command

- the command is particularly advantageous if for example the memory is connected to a processor using a multiport interface, for example a dual port or two port interface, allowing for simultaneous read and write access to the memory.

- a new load instruction can be car- ried out directly in the next cycle following the MCOPY instruction.

- the load instruction accesses the same memory during the store access of MCOPY in parallel.

- Pig. 5 shows an overall design, of an X P processor module.

- ALU-stage arrangements 0130 are provided that can exchange data ' ith one another as necessary in the way dis- closed for the preferred embodiment ehown in Fig. 4 as indicated by the data path arrow 0501.

- side- ALUs 0131 and load/store-units 0502 are provided, where again a plurality of load/store-units may be implemented accessing memory and/or lookup tables 0503 in parallel.

- the data proc- essing unit 0130 and 0131 and load/store-unit 0502 are loaded with data (and status information) from the register 0109 via the bus system 0140.

- the RDY-handshake is signalled externally and will be reset as soon as the data has been read externally and has been prompted by the AC -handshake . Without RDY. being set the register can not be read from externally.

- 0801 denotes the main ALU-stage path

- 0802 denotes the ALU-stage path executed in case of a branching

- 0803 includes the proc- essing of the load-/store-unit, one load-/store operation being executed per four ALU-stage operations (that is during ' one ALU-stage cycle) .

- ALU-stage instructions form one OpCode per clock cycle.

- the Opcode comprises- both ALU-stages ⁇ four instructions each plus jump target) .and the load-/store-instruction.

- the f rst instructions are executed in parallel in 0801 and 0802 and the results are processed subsequently in data patb 0801.

- MCOPY 0815 copies the memory location *8tate3 to *stateprt and reads during execution cycle 0815 the data from state3.

- data ie written to *stateptr; simultaneously read access to the memory already takes place using LOAD in- 0816.

- the caller executes the LOAD 0804.

- the calling routine has to attend to not accessing the memory for writing in a first subsequent cycle due to MCOPY.

- the instruction CONT points to the address of the OpCode to be executed next. Preferably it is translated by the assembler in such a way that it does not appear as an explicit in- struction but simply adds the jump target relative to the offset of the program pointer.

- the corresponding assembler program can be programmed as lieted hereinafter: three ⁇ brackets are used for the de- Bcription of an OpCode, the first bracket containing the four instructions and the relative program pointer target of the main ALU-stage path, the second bracket including the corresponding branching ALU-stage path and the third bracket determining an OpCode for the load-/store-uni .

- bit #1 SUB low, low, range MOV range, rangelps ADD state3, Ipsstateptr, state

- SHL range range, #2 SHL low, low,#2 SUB bitsleft, bitsleft, #1

- Pig. 9 shows in detail a design of a data path according to the present invention, wherein a plurality of details as described above yet not shovm for simplicity in Fig. 1-4 is included.

- Parallel to two ALU-stri -paths two special units OlOlxyz, 0103xyz are implemented for each strip, operating instead of the ALU-path 0101..

- the special unite can include operations that are more complex and/or require more runtime, that is ocerations that are executed during ' the run- time of two or, should it be implemented in a different way and/or wished in the present embodiment, more ALU-stages.

- the special unite can include operations that are more complex and/or require more runtime, that is ocerations that are executed during ' the run- time of two or, should it be implemented in a different way and/or wished in the present embodiment, more ALU-stages.

- Special units are adapted for example for executing a count-leading-zeros DSP- instruction in one cycle: Special units may comprise memories such as RAM3, ROMs, LUTB and so forth as well aa any kind of FPGA circuitry and/or peripheral function, and/or accelerator ASIC functionality.

- a further unit which may be used as a side-unit, as an ALU-PAE or as part of an ALU-chain is disclosed in attachment 2.

- an additional multiplexer stage 0910 is provided selecting from the plurality, of registers 0109 those which are to be used in a further data processing per. clock cycle and connects them to 0140.

- the number of registers 0109 can be increased significantly without enlarging bus 0140 or increasing complexity and latency of multiplexers 0110, 0105 ... 0107.

- the status register 0920 and the control path 0414, 0412, 0413 are also shown.

- Control unit 0921 sur- veys the incoming status signal. It selects the valid data path in response to the operation and controls the code- fetcher (CONT) and the jumps (JMP) according to the state in the ALU-path.

- the delay members 0941 ... 0944 are designed such that they delay the signal for the maximum delay time of each ALU-stage. After each delay stage the signal delayed in this manner will be propagated to the stage of the corresponding multiplexer unit 0105...0107 eerving there as an ENABLE-signal to enable the propagation of the input data. If ENABLE is not set, the mul- tiplexera are passive and do not propagate input signals.

- a latch can be provided at the output of the multiplexer stage, the latch being set transparent by the EN- ABLE-signal enabling the data transition, while holding the previous content if ENABLE is not set. This is reducing the .(re) charge activity of- the gates downstream significantly.

- the comparatively low clock frequency of the circuit and/or the circuitry and/or the I/O constructed therewith allow for a further optimisation that makes it possible to reduce the multiple code memory to one.

- a plurality of code-memory accesses is carried out within one ALU-stage cycle and the plurality of instruction fetch accesses to different program pointers described are now carried out sequentially one after the other.

- Tn order to carry out n instructio fetch accesses within the ALU-stage clock cycle the code memory interface is operated with the n-times ALU-stage clock frequency.

- ALU-path is completely programmable, a disadvantage may be considered to reside in the fact that a very large instruction word has to be loaded. At the same time it is, as has been described, advantageous to carry out jumps and branches fast and without loss of clock .cycles thus having an increased hardware complexity as a result.

- the frequency of jumps can be minimized by implement ng a new configurable ALU-unit 0132 in parallel to the ALU-units 0130 and 0131 embedded in a similar way in the overall chip/processor design.

- This unit generally has ALU-stages identical to those of 0130 as far as possible; however, a basic difference resides in that the function and interconnection of the ALU-stages in the new ALU-unit 0132 is not determined by an instruction loaded in a cycle-wise manner but is configured. That means that the function and/or connection/interconnec- tion can be determined by one or more instructions word(s) •and remains the same for a plurality of clock cycles until one or more new instruction words alter the configura on. It should be noted that one or more ALU-stage paths can be implemented in 0132, thus providing several configurable path9. There also is a possibility of using both instruction loaded ALUs and configurable elements within one strip.

- PCT/DE 99/00505 PACTlOc/PCT

- PCT/DE 00/01869 PACT13/PCT

- decode dispatch: CMP cmd, 0x8001

- the instruction JMP is an explicit jump instruction requiring one additional clock cycle for fetching the new Opcode as is known in processors of the prior art.

- the JMP instruction is preferably used in branching where jumpa are carried out in the less performance relevant branches of the dispatcher.

- the routiae can be optimised by using the conditional pipe capability of the XMP:

- the device of the present invention can be used and operated in a number of ways.

- the information regarding activity of a given cell is not evaluated at the same stage but at a subsequent stage so that the cross-column propagation of status information is not and/or not only effected within one stage under consideration but s effected to at least one neighboring column downstream.

- FNC-PAEs havesomesimilarities with VLlWarchitectures, theydifferinmanypoints.

- the FNC-PAEs aredesignedto formaximum bandwidth for control-flowhandlingwheremanydecisions and branchesin an algorithm are required.

- the interfacing is based on the XPP dataflow protocol: a source transmits single- word packets which are consumed by the receiver. The receiving objectconsumes the packets only ifall required inputs are available. This simple mechanism provides a self-synchronising network. Due to the F C-PAFs sequential nature, in many cases they don't provideresults or consume inputs with every clock cycle. However, the dataflow protocols ensure that all XPP objects synchronize automatically to F C-PAE inputs and outputs. Four FNC-PAE input ports are connected to the bottom horizontal busses, four output ports transfer data packets to the top horizontal busses. As with data, also events can be received and sent using horizontal event busses.

- the ALUs support a restricted set of operations; addition, subtraction, compare, barrel shifting, and boolean functions as well asjumps. More complex operations are implemented separately as SFU functions.

- Most ALU instructions'are available for all ALUs, howeversome ofthem are restricted to specific rows ofALUs.

- the access to source operands from the AGREGa, EREGs, IO is restricted in some rows ofALUs, also the available targets may differ from column to column 1 .

- the ALUs can access several 15-bitregisters simultaneously.

- Thegeneral purpose registers DREGs (rO.. r7) can be accessed by all ALUsindependently with simultaneous read and write.

- the extended registers EREG (eO .. e7), the address generator registers bpO ..bp7 and the ports can also be accessed by the ALUs however withrestrictions on some ALUs. Simultaneouswriting within one cycle to thoseregisters is only allowed ifthe same index is used. E.g. ifone ALUwrites to el, another ALU is only allowed to write to bpJ.

- HPC high priority continue: 6 bits (signed) specify the next opcode to be fetched relative to the current program pointer PP.

- HPC is the default pointer, since it is pre-fetched in any case.

- One code specifies to use the Ink register to select the next opcode absolutely.

- LPC low priority continue: as with HPC, 6 bits (signed) specify the next opcode to be fetched in case of branches. One code specifies to use the Ink register to point to the next opcode absolutely.

- Implicit short jump 6 bits (signed) specify the next opcode to be fetched relative to the current program pointer. Jumps require always one cycle delay since the next opcode cannot be prefetched.

- the Load/Store unit and the SFU execute their commands in parallel.

- the ALU data-path and the address generator are not pipelined. Both load and store operations comprise one pipeline stage.

- SFUs may implement pipelines ofarbitrary depth (for details refer to the section 2.14).

- Program Pointer 1 pp is not incremented sequentially ifnojump occurs. Instead, a value defined by the HPC entry ofthe opcode is added to thepp.

- ALU Instructions support conditional execution, depending on the results the previous ALU operations, either from the ALU status flags ofrow above or- for the frrst ALU row - the status register, which holds the status ofthe ALUs ofrow 3 fromresults ofthe previous clock cycle.

- condition FALSE

- the instruction with the condition and all subsequent instructions in the same ALU column are deactivated.

- the status flag indicating that a column was activated/deactivated is also available for thenextopcode ⁇ LCL or ICR condition).

- a deactivated ALU column can only be reactivated by theACTcondition.

- the conditions LCL or LCR provide an efficient way to implement branching without causing delay slots, as it allows executing in the current instruction the same path as conditionally selected in the previous opcode(s).

- Implicit Program Pointer modifiers' are available with all opcodes and allow PP relativejumpe by +/- 15 opcodes or 0 if.the instruction processes a loop in its own.

- the pointer HPCorLPC (6 bit each) define the relative branch offset.

- One HPC orLPCcode is reserved for selection ofjumps via the Ink register.

- HPC points to the next instruction to be executed relative to the actual pp.

- the usage ofthe HPC pointer can be specified explicitly in one ofthe paths(i.e. ALU columns).

- the EX1T-L or EXIT- specify weather the HPC-pointer will point to the next opcode.

- HPC is setto 1. The assembler performs this perdefault.

- the 6-bit pointer IJMPO In addition to the HPC/LPC, the 6-bit pointer IJMPOpoints relatively to an alternate instruction and is used within complex dispatch algorithms.

- the IJMPO points to the next instruction to be executed relative to the actualpp.

- Theusage of theIJMPO pointer can be specified explicitly in one ofthe paths (i.e. ALU columns). This statement is evaluated only, if the respective path is activated

- the FNC-PAE can be implemented either with one or two instruction memories:

- This high performance implementation ofthe FNC-PAE comprises two instruction memories allowing parallel access.

- the instructions referenced byHPC and LPCare fetched simultaneously.

- the actual instruction to be executed is selected right before execution depending on the execution state ofthe

- the label is optional. If label Is not specified pp+i is used. If an absolute value (e.g. #3) Is specified, It Is added the value to the pp (e.g. pp+3). previous instruction.Thiseliminates thede!eyslotevenwhilebranchingwith LPCthusproviding maximumperformance.

- TheassemblerstatementJMPL. ⁇ iabel defineslongjumpstoanabsolute address.

- CALL target address is defined absolutely by either a 16 bit immediate value or by the content of a register or ALU. Note, that the return address is defined aspp + UMPO'.

- the link register supports fast access to subroutines without the penalty ofrequiring stack operations as for call and ret.

- the link register is used to store the program pointer to the next instruction which is restored for returning from the routine.

- the Ink can be set explicitly by the setlnki rsp. setlinkr opcodes, adding a 16-bit constant to pp or adding a register or ALU value to the pp.

- the LoadStore unit comprises the AGREGs, an address generator, and the Memory-in and Memory-out registers.

- the Load/Store unit generates addresses for the data memories in parallel to the execution ofthe ALU data-path.

- the Load/Store unit supports up to eight base pointers.

- One ofthe eight base pointers is dedicated as stack pointer, whenever stack operations (push, pop, call, ret) are used.

- another base pointer is dedicated as frame pointer/p.

- the bp5 and bp6 can be used as the address pointers apO and apJ with post - increment/decrement.

- All load/store accesses use one of the base pointers bpO ..bp7 to generate the memory addresses.

- Table 4 summarizes the options that define the auto-increment/decrement modes. The options are available for bp5/ap0 and bp6/apJ.

- the mode for post increment and decrement depends on the opcode. For byte load/store (sib. Idbu. Idbs, cpw) apO rsp. apl are incremented or decremented by one. For word load/store (stw. Idw. cpw) apO rsp.

- apl are incremented or decremented by two.

- the data read by a load operation in the previous cycle is available in the mew-register of the ALU datapath.

- the data is available in the target (e.g on of the registers, ALU inputs) one cycle after issuing the load operation.

- Load operations support loading of 16-bit words and signed and unsigned bytes.

- the Debugger shows memory sections which are defined as 16-bit words with the LSB on the right side of the word.

- the FNC-PAE is implemented using theHarvardprocessingmodel,thereforeatleastonedatamemory andone instructionmemoryare required. BothmemoriesareimplementedasfastSRAMs thusallowing operationwith onlyonepipelinestage.

- Theinstruction memory >s256 bitswide inordertosupporttheVLIW-likeinstructionformat.Fortypical embedded applicationstheprogrammemoryneeds tobe 16 to256entries large.Theprogrampointer/ ⁇ addressesone256-bitword oftheprogrammemorywhich holds oneopcode.

- thedata memory is 16-bitwide.

- Fortypical embedded applications the datamemoryneeds tobe2048 to 8196entries large.

- TheALUs providethebasiccalculation functions. Several restrictionsapply, since notall opcodesare useful orpossiblein all positionsandthe availablenumberofopcodebitsin theinstruction memoryis limitedto256. Furthermore, theallowedsourcesand targetsofopcodes(seeTable8)maybedifferent fom ALUrowtoALUrow.

- Table 8 ALU hardware instructions summary 2.12.2 Availability of Instructions

- the arithmetic /logical opcodes comprise nop, not, and, or, xor, add, sub, addc, subc, shru, shrs and shl.

- These instructions move a source to a target.

- the copy instruction transfers data between the ALUs or register files to and from memory.

- the copy instruction allows to define the source and target in the memory.

- the address generator uses one ofthe base pointers (bpO ..bp7) and the offset as specified in the tables.

- post-increment / decrement is possible with apO and apl .

- Push / Pop use bp7 /sp as stack pointer with post-decrement rsp pre-increment. Pop from stack loads the results directly to the registers i.e. without using the mem-out registers as with load/store operations.

- Table 14 Link register load instructions Return is possible via stack, the Ink register or the interrupt Ink register

- RDS and WRS transfer two bits ofthe status register from and to the ports.

- Multiple ALUs may attempl to write within one cycle to the same target register.

- the following list of priorities applies:

- the memory registers are use for transfer between the FNC-core and the memory. Reading from memory (!dw, ldbu, Idbs) load the result values to mem-out. The ALUs can access this register in the next cycle. Writing to the register is performed implicitly with the store instructions. The Ram is written in the next cycle.

- the Ink and intlnk register store program pointers. It is not possible to read the registers.

- the FNC-PAE supports up to 16 SFUs, while each ofthem can execute up to 7 different defined SFU instructions.

- SFUs operate in parallel to the ALU data-path. Each instruction may contain up to two SFU commands. Each SFU command disables all or ar3 in the bottom row. The results ofthe SFU operation are fed into the bottom multiplexers, instead of the results ofthe disabled al3. SFU instructions are non- conditional and are executed whether the respective ALU path is active or not.

- SFUs may access all registers as sources but no ALU outputs.

- the SFU instruction format is shown in Table 25:

- the SFU may generate a 32-bit result (e.g. multiplication). In this case the result is written simultaneously to two adjacent registers, requiring the target register to be even. The least significant 16-bit word ofthe result is written to the even register, the most significant word is written to the odd register.

- a 32-bit result e.g. multiplication

- Copro# selects one of up to 16 SFUs. SFUs 0-7 are reserved for PACT standard releases.

- CWB, CMD 7

- the SFU 0 provides signed and unsigned multiplication on 16 bit operands. The least significant word of the result is written to the specified target register. The most significant word is discarded.

- the result is available in the target register in the next clock cycle.

- SFU 1 provides a special function to read and write blocks ofbits from a port.

- Bit-block input (ibit) ibit

- the SFU reads a 16-bit word from a port and shifts the specified number ofbits to the target (left-shift). If all bits have been "consumed", a new 16-bit word is read.

- the specified number of bits ofa source is left-shifted to the SFU. As soon as overall 16 bits have been shifted, the SFU writes the word to the output port.

- the FNC-PAE uses separate memories foT Data (D EM) and Code (1 E ). Different concepts are implemented:

- DMEM is a tightly coupled memory (TCM) under explicit control by the programmer

- ⁇ IMEM is implemented as 4-way associative cache which is transparent for the programmer.

- the reference design consists of a 4-way associative cache and interface to an external GGDR3 DRAM.

- Figure 18 depicts the basic structure ofthe memory hierarchy spanning several Function PAEs, the shared D-cache and the shared Sysmem interface.

- the Instruction decoder accesses the local IRAM, which updates its content automatically according to its LRU access mechanism.

- the Load-Store unit may access the local TCM, the shared D-cache or the shared SYSMEM.

- the TCM must be updated under explicit control of the program either using the load /store Opcodes or the Block-Move Unit.

- All data busses are 256 Bit wide. Thus a 256 Bit opcode can be transferred in one cycle or up to 8 x 16 bits (16-bit aligned) can be transferred using the block-move unit.

- SYSMEM must be designed to support the highest possible bandwidth.

- ⁇ FNCI to FNCn are using round robin

- Theblockmoveunitofone oftheFNC-PAEs mayboototherFNC-PAEs or(re-)configurethe arrayof ALl)-/RAM-PAEsbyfetchingcode or configuration data from theexternal memory.

- Whileconfiguringanotherdevice,theWoclc-move unit is selectingthe targettobereconfiguredor booted, Simultaneously itisrising Iheconfigurationoutputsignal, indicating theconfiguration cycleto thetargetunit.

- TheFNC-PAB willbecormecled neartheRAM-PAEsoftheevenrowsofiheXPParray.

- the FNC- PAEs will haveports toexchangedatadirectlybetween theFNC-PABcoresorexternal components withouttheneedtogothrough the XPPamydatapaths.

- the instruction format allows the definition of up to four data segment pointers. Selection ofsegments extends the addressable memory space.

- the Function PAE is can be programmed in assembler language and - in a second project phase - in C.

- the FNC-Assembler supports all features which the hardware provides. Thus, optimised code for high performance applications can be written.

- the assembler language provides only a few elements which are easy to leam.

- the usage ofa standard C-preprocessoT allows the definition ofcommands preceded with the "#" symbol. Examples are ⁇ include and conditional assembly with #i ... #endif.

- the FNCDBG which is an integrated assembler, simulator and debugger, allows simulating and testing the programs with cycle accuracy.

- the debugger shows all ALU outputs, the register files and the memory content. It features single stepping through the program and the definition ofbreakpoints.

- the assembler uses a typical three-address code for most instructions: it is possible to define the target and two sources. Multiple ALU instructions are merged into one FNC opcode. The right ALU path is separated with '

- the example Figun19 shows the structure ofone opcode. Ifa row ofALUs is not required it can be left open (the assembler automatically inserts NOPs here).

- the example shows a typical opcode with branching to the right path with the OP1 condition.

- Constant definitions are preceded by keyword CONST. Constants expressions mustbe within parenthesis ( ).

- Table 28 Assembler naming of objects and registers Immediate valuesareprecededby The numberofallowed bitsoftheimmediatevaluedepends the ALU instruction.

- Optionallytheregistersettobe usedwhenjumpingtoa label can bespecifierwith(RSO)rsp. (RSI) beforethecolon.

- the Instruction RAM is initializedwiththekeywordFNC_IRAM(0).

- the symbol specifiesunrnitalizeddata.Lengthis thenumberofbyteaorwords,respectively.

- Word reservestwobyteswithbigendian byteordering 1 .

- Datasectionscan alsobeinitialised usingalist ofvalues.

- FNCDBG fills uninitialized Data RAM sections with default values:

- FNCDBG shows the memory content in a separate frame on the right side. Bytes or words which have been changed in the previous cycle(s) are highlighted red.

- Arithmetic and move ALU instructions can be prefixed with one of the conditions.

- ALU-instructions conditions refer to Table 9 to Table 17 Column "Condition”.

- the status flags of ALU are available for evaluation for the ALU of the same column the row below. If the condition is TRUE, the subsequent ALUs that column are enabled. Ifthe condition is false, the ALU with the condition statement and all subsequent ALUs ofthat column don't write results to the specified source 1 .

- the status of the ALUs of the bottom column (al3, ar3) are written to the status register for evaluation by the ALUs in the first row during the next opcode.

- OPI opposite column inactive

- OPA opposite column active

- LCL last column active left

- LCR last column active right

- the conditions are derived from three ALU flags:

- the disabled ALUs provide results at their outputs which can be used by other ALUs

- the FNC-PAE does not have a program counter in the classical sense, instead, a program pointer must point to the next opcode.

- the assembler allows to set the three opcode fields HPC, LPC and IJMPO which define the next opcode.

- the maximum branch distance for this type of branches is +-31.

- the assembler instructions must be defined in a separate source code line. 3.1,8.1 EXIT branch

- HPC, LPC and JMPS define ( he next opcode when exiting a column.

- HPC, LPC or JMPS can only be specified once per column.

- the relative pointer must be within the range+-15. For branchesoutside of this range, JMPL must be used.

- LPC label LPC points to the label.

- Table 30,Table 31 The following tables (Table 30,Table 31) specify which pointers the assembler enters(during design- time) andwhich pointers are usedbased on the runtime activity ofcolumns. "Default" means, diet the exitpointer was not explicitly specified in the assemblercode.

- the ELSE branch evaluates the result of a conditional ALU instruction and defines one ofthe HPC, LPC or JMPS fields to point to the next opcode as specified by the target or default (ifno.target is specified). For restrictions, which ALU- instructions ELSE allow branches/refer to Table 9 to Table 17 Column "ELSE”.

- condition is TRUE, the ALU column is enabled and the setting for the EXIT branch is used. If the condition is FALSE, the ALU column is disabled and the setting for the ELSE branch is used. If an ALU column is disabled by a previous condition, the ELSE branch is not evaluated.

- LPC label use LPC in case that the condition in the previous instruction was FALSE.

- Table 32 shows which pointer is used based on the else statement. If the condition in the line is TRUE, the specification ofthe EXIT branch is used (See Table 30, Table 31 ), If the condition is FALSE the else target (e) is used.

- ⁇ JMRL source use a register or ALU or 6-bit immediate as relative jump target to the actual program pointer. The source is added to the pp.

- the assembler uses in most cases the ALU instructions. However, some of the hardware instructions are merged (e.g. mov. movr, movai to NOV) in order to simplify programming. Besides the ALU instructions, a set ofinstructions allow to control the program flow on opcode level (e.g. definition of the HPC to point to the next opcode - see previous chapter).

- opcode level e.g. definition of the HPC to point to the next opcode - see previous chapter.

- Target the target object to which the result is written.

- Target "-" means that nothing is written to a register file, however, the ALU output is available.

- ⁇ src the source operand, can also be a 4 bit or 6 bit immediate

- ⁇ srcO the left side source operand, can also be a 4 bit or 6 bit immediate

- ⁇ srcI the right side ALU operand, can also be a 4 bit or 6 bit immediate

- ⁇ bpreg one of the base registers of the AGREG

- movai moves an immediate 16-bit value to the ALU output which can be used by the subsequent ALU stages.

Landscapes

- Engineering & Computer Science (AREA)

- Theoretical Computer Science (AREA)

- Software Systems (AREA)

- General Engineering & Computer Science (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Advance Control (AREA)

- Devices For Executing Special Programs (AREA)

Abstract

Data processing device comprising an array of data processing elements, adapted to process data in an order to be determined by instructions.

Description

Title: Method and Device for Data Processing

Description

The present invention relates to a method of operating a low latency massive parallel data processing device in particular with respect to optimizer passes in high-language compilers.

The disclosure comprises two major parts, namely a first part relating to mapping and scheduling particularly for a unit designated as FNC.

The unit and a suitable architectural embedding of said unit is disclosed in detail in the second part of the present invention, the second part having been published as WO

2006/082091 A2.

This patents describes the optimizer passes in high-language compilers (HL compiler), such as e.g. C/C++ and FORTRAN compilers, necessary to schedule and map instructions onto ALU-Array-Processors (AAP) comprising an array of ALUs in the processor datapath. Reference is made to examples of those processors, e.g. described in US 2009/0031104 Al and

PCT/EP2009/007415 both are entirely embodied in this

specification for full disclosure. Details of the referenced patents may be claimed in conjunction with inventions

described herein.

Only for the sake of full disclosure and better understanding, the GNU GCC compiler is used for the described exemplary implementation. Therefore reference is also made to the publically available "GNU Compiler Collection Internals by Richard . Stallman and the GCC Developer Community; for GCC Version 4.3.0", which is embodied in this specification for full disclosure and better understanding.

Also fully embodied is the book "Compilers Principles,

Techniques, & Tools by Aho, Lam, Sethi and Ullman" for

detailed disclosure.

OPCODE MAPPING AND SCHEDULING IN AAP-HL

This document briefly describes the opcode mapping and

scheduling algorithm exemplary implemented in the GCC port for the AAP-PAE.

The original compiler passes of GCC are changed as follows:

• A first call to function AAPMAP is added to the GCC

instruction scheduling pass, executed before register allocation .

• A second call to AAPMAP is added to the machine

reorganization pass, executed after register allocation.

For more details on GCC compiler passes please refer to

Chapter 8 of the GCC Internals Manual.

AAPMAP - FIRST PASS

The compiler pass AAPMAP works on the "RTL Representation" created by GCC. The RTL representation comprises a set of basic blocks that correspond to parts of the source code. Each basic block comprises a list of instructions. For short, GCC calls an instruction insn and a list of instructions is called insn-list. Please refer to Chapter 12 of the GCC Internals Manual for more details on the GCC RTL representation.

The first pass of AAPMAP performs a preliminary mapping of insns to AAP opcodes. Details to AAP opcodes can be found in the description of the FNC ALU path, embodied in this

specification. Reference is also made to US 2009/0031104 Al which is entirely embodied in this specification for full disclosure. Another example of a multi-ALU processor is described in PCT/EP2009/007415. The respective specification is also entirely embodied in this specification for full disclosure. Details of the referenced patents may be claimed in conjunction with inventions described herein.

Unless data dependencies are violated, insns can be reordered in the GCC insn-list if it improves the mapping. GCC then passes the modified insn-list to further GCC compiler passes.

The mapping of insns to AAP opcodes is recorded in a temporary data structure called codelist. After the first pass finishes, the codelist is deleted. The only lasting effect of the first pass of AAPMAP is the (potential) reordering of insns.

AAPMAP iterates over all RTL basic blocks of a procedure generated by GCC and maps the insns of the basic blocks to ALUs of AAP opcodes in the codelist. The ALUs of an AAP opcode are filled from top-left to bottom-right (order: alO, arO, all, arl etc.) whenever possible, i.e. if an insn is available which can be mapped to the ALU. In the first AAPMAP pass, the order in which the insns are mapped (i.e. scheduled) is only determined by the data dependencies within the basic block. Hence an insn may be moved back or forth in the insn-list. In the second pass, after GCC register allocation, the order of the insns cannot be changed anymore since the register

allocation may have introduced additional dependencies.

In one embodiment independent insns may be reordered after register allocation if a detailed analysis of the used

registers shows it is possible.

If no ALUs of an AAP opcode can be filled anymore, a new, empty opcode is added to the codelist and filled. However, if a special insn (store, call, jump or clobber, see GCC

Internals Manual, Chaper 12) occurs in the original insn-list, all previous insns must be mapped before the special insn. Insns representing assembler macros (combined operators as defined in AAP.md, jumps and inline assembler) are always mapped to a separate AAP opcode.

Note:

• The exact position of the insns in the AAP opcode is only stored in the temporary codelist data structure but not

in the insn list. Later the second pass of AAPMAP will insert pseudo-insns representing the final mapping. For details please see below.

• After register allocation, two insns writing to the same register may not be mappable in the same ALU row, according to a limitation in one embodiment of the AAP- PAE.

In one embodiment insns may be allowed to write to the same register in one ALU row.

Example for code reordering:

The following exemplary original insn-list

MOV rO, #100

ADD r2, rl, rO

MOV r3, #200

SUB r5, r4, r3

is mapped in the first pass of AAPMAP to ALUs in AAP opcode as follows :

The mapping is depicted in the above shown diagram according to the structure of one embodiment of a multi-ALU array processor (AAP) . Two columns of ALUs are implemented, each column comprising 4 ALUs. The dataflow direction is preferably from top to bottom. ALUs receive operands from the register file and/or any ALUs located above. For details see e.g. Figs. 4, 5, 6, 13, 27, 28 of PCT/EP2009/007 15 and e.g. Figs. 1, 2, 4, 9, 10, 11.

In the table above, the upper left field references to the upper left ALU in the respective datapath, the upper right field to the upper right ALU and the lower right field to the lower right ALU. All other fields reference to the datapath accordingly .

Note: MOV, ADD, and SUB are assembler instructions, obvious for one skilled in the art. r0..r7 define registers of

processors register set, # defines constants.

The assembler language is using a 3 address syntax:

<opcode> <target>, <source0>, <sourcel>

The GCC insn-list is reordered in the following way:

MOV r0, #100

MOV r3, #200

ADD r2, rl, rO

SUB r5, r4, r3

GCC ensures that the modified insn-list is passed on to the following compiler passes.

The subsequent pseudo-code of AAPMAP describes the algorithm in great detail and is without further explanation

understandable for men skilled in the art:

AAPMAP ( ) :

FOR ALL bb in PROCDURE {

MAP_BB(bb)

}

MAP_BB (bb) :

maplist = empty insn list

insn = first insn in insn-list of bb, ignoring NOTE and USE

WHILE (insn != NULL) {

WHILE (insn not store, call, jump, macro or clobber) {

add insn to maplist

insn = next insn in insn-list (or NULL if insn was last in list)

}

MAP_LIST (maplist)

if (insn != clobber) {

add insn to maplist

)

if (insn is macro or call) {

MAP_LIST (maplist ) /* macro and call are mapped separately */ insn = next insn in insn-list (or NULL if insn was last in list)

)

}

MAP_LIST (maplist) :

opc = last opcode in codelist or NULL if empty

WHILE (maplist not empty) {

FILL_OPC (maplist, opc)

if (maplist not empty and no insn could be mapped to opc) {

opc = new, empty opcode

add opc to codelist

}

)

FILL_OPC (maplist, opc):

if (first pass) ( // before register allocation: reordering allowed // loop over positions and fill with whatever insn is possible for (pos = first free ALU position in opc; pos <= last ALU pos . ; pos++) {

insn = find first insn in maplist which can be mapped to opc at pos

if (insn) (

map insn to opc at pos

remove insn from maplist

}

}

} else ( // after register allocation: reordering not allowed

do {

pos = first free ALU position in opc

insn = first insn in maplist

while (insn cannot be mapped to pos and pos <= last ALU position) pos = pos + 1

}

if (insn can be mapped to pos) {

map insn to opc at pos

remove insn from maplist

}

) until maplist empty or nothing can be mapped to opc anymore

)

AAPMAP - SECOND PASS

The second pass of AAPMAP again maps the insn-list to a new temporary data structure called codelist. Because of

dependencies introduced by the register allocation, this mapping cannot reorder insns as freely as the first pass of AAPMAP, but it can produce a better mapping since the restrictions of the registers allocated are known. The insn reordering introduced in the first pass of AAPMAP results in a more optimal mapping in the second pass.

Then, the function iterates the generated codelist and adds the pseudo-insns "NOP" , " |" and "NEXT" to the GCC insn-lists. The pseudo-insns "NOP" and "|" define the spatial mapping of insns to ALUs in one AAP opcode. The pseudo-insn "NEXT" defines the temporal scheduling starting a new AAP opcode.

The pseudo-insns cause GCC to generate the correct assembler output with the insns at the correct ALU positions in the AAP opcodes .

It also performs the following actions:

• In one embodiment of the AAP-PAE, the data from a load instruction is available in the next cycle from a special register called "mem". The read value is available from the target register only in the second cycle following the load instruction. Thus if a loaded value is used in the next opcode, the register is replaced with "mem" if possible; otherwise an additional "NEXT" pseudo-opcode is inserted creating an empty AAP opcode.

• If a register is written and read within the same AAP

opcode, the read access is replaced with the pseudo- register for the ALU output (a10 etc.) of the write access .

Example for pseudo-register replacement::

Analyzing the following exemplary original insn-list

ADD r2, rl, rO

SUB r4, r2, #2

SHL r2, r4, #1

we notice that the three insns have data dependencies via registers r2 and r . When mapping these insns onto the AAP ALU matrix by combining them into one AAP opcode, the references to r2 and r4 have to be replaced with the virtual registers alO and all, representing the output of the respective ALU. The following table shows the result of that mapping.

In this exemplary embodiment alO, all, al2 define operands to be received from ALUs in the left column, the following number defines the ALU row providing the operand. Basically the syntax for defining operands being forwarded from one ALU to another within the ALU array is e.g. as such:

a<column><row>

"a" defines an ALU as the source, <column> and <row> define the source's position with the ALU array.

In the exemplary embodiment the ALU sources are defined as shown in the following arrangement:

As the exemplary datapath comprises two columns, left (1) and right (r) are sufficient for the definition and easier to read.

An ALU may produce no output to the register file. While the results of ALUs of processors in the prior art are always written into the register file, AAP processors may transmit data solely from one ALU to another. As ALU sources are clearly defined, it is not necessary to define the target of the result producing ALU. In this case, the result producing ALU has no (register) target for the result, which is defined

by Anyhow, ALUs using the respective result as operands can address that ALU with the previously described

a<columnXrow> address.

In one embodiment, the producing ALU may address the receiving ALU. However, if multiple receivers exist, it becomes

burdensome if not impossible to implement this addressing scheme in the binary format of the opcode.

As already described, the mapping is depicted in the above shown diagram according to the structure of one embodiment of a multi-ALU array processor (AAP) . Two columns of ALUs are implemented, each column comprising 4 ALUs. The dataflow direction is preferably from top to bottom. ALUs receive operands from the register file and/or any ALUs located above. For details see e.g. Figs. 4, 5, 6, 13, 27, 28 of

PCT/EP2009/007415 and e.g. Figs. 1, 2, 4, 9, 10, 11.

In the table above, the upper left field references to the upper left ALU in the respective datapath, the upper right field to the upper right ALU and the lower right field to the lower right ALU . All other fields reference to the datapath accordingly .

The GCC insn-list is modified in the following way:

ADD -, rl, r0

SUB r4, a10, #2

SHL r2, a11, #1

NEXT

GCC passes the modified insn-list to any following passes.

PSEUDO REGISTERS FOR REPRESENTING ALU REFERENCES

When mapping multiple insns onto one AAP opcode, values are often passed directly from one ALU to another ALU. Sometimes those values need to be stored into a register, as they will be used in another AAP opcode later, but often those values are never used in another place and there is no need to allocate a register for them. Typical examples for the latter are intermediate values computed while' evaluating a complex expression.

Consequently we need to hide from GCC those values that are passed from one ALU directly to another and not used anywhere else, so they don't cause unnecessary register spilling or saving. This is accomplished by mapping them to virtual hardware registers representing the ALU connections before register allocation. The final mapping step then has to ensure

that instructions using those virtual registers are mapped to the same AAP opcode.

OPCODE MAPPING AND SCHEDULING IN FNC-GCC

This document briefly describes the opcode mapping and scheduling algorithm implemented in fnc-gcc.

The original fnc-gcc program is changed as follows:

• The pass FNCMAP is inserted directly before pass_sms, i.e. before scheduling and register allocation.

• In the machine reorganization pass (pass_machine_reorg, after register

allocation), a call to rNSERTJvlAPPINGJNSNS is added to the function fnc_reorg_pass.

• The function FNCMAP PROC is called twice for each procedure: before register allocation (from FNCMAP) and after (from rNSERTJvlAPPING INSNS). The boolean parameter "after ra" distinguishes the first and second call.

FNCMAP

FNCMAP performs a first, preliminary mapping ofinstructions (insns) to FNC opcodes by calling FNCMAP PROC(false), see below. Unless data dependencies are violated, insns can be reordered in the GCC insn-list ifit improves the mapping.

Afterthe pass, the mapping ofinsns to FNC opcodes in the codelist is deleted. The only lasting effect ofthe pass is the (potential) reordering ofinsns.

FNCMAP_PROC

FNCMAP_PROC iterates over all basic blocks ofa procedure and maps the instructions (insns) ofthe basic blocks to ALUs ofFNC opcodes in a codelist. The ALUs ofan FNC opcode are filled from top-left to bottom-right (order: alO, arO, all , arl etc.) whenever possible, i.e. ifan insn is available which can be mapped to the ALU. If

FNCMAP PROC is called before register allocation (i.e. after_ra is not set), the order in which the insns are mapped (i.e. scheduled) is only determined by the data dependencies within the basic block. Hence an insn may be moved back or forth in the insn-list. If after_ra is set, the order ofthe insns cannot be changed anymore since the register allocation may have introduced additional dependencies.

Possible optimization: Independent insns may also be reordered after register allocation ifa detailed analysis ofthe used registers shows it is possible.

Ifno ALUs ofan FNC opcode can be filled anymore, a new, empty opcode is added to the codelist and filled. However, ifa special insn (store, call,jump or clobber) occurs in the original insn-list, all previous insns must be mapped before the special insn. Macro insns (for combined operators as defined in fnc.md, jumps and inline assembler) are always mapped to a separate FNC opcode.

Note:

• The exact position ofthe insns in the FNC opcode is only stored in the codelist data structure but not in the insn list. If after_ra is set, "NOP", "NEXT" and "\" pseudo-insns will be inserted later by I SERT_MAPP1NG_JNSNS.

• The exact mapping algorithm differs slightly depending on ifafter ra is set or not, cf. function FlLL_OPC.

• After register allocation, two insns defining the same register cannot be mapped in the same ALU row.

Possible optimization: Allow insns which define the same register in one ALU row, but, replace the output which is not used in subsequent FNC opcodes by

Example for code reord

Original insn-list:

MOV r0,#100

ADD r2, rl , rO

MOV r3, #200

SUB r5, r4, r3

Mapping to ALUs in FNC opcode:

Changed insn-list:

MOV r0, #100

MOV r3, #200

ADD r2, rl, rO

SUB r5, r4, r3

The pseudo-code ofFNCMAP PROC is as follows:

FNCMAP_PROC(after_ra):

FOR ALL bb in PROCDURE {

MAP_BB(bb, after_ra)

}

MAP_BB(bb, after_ra):

maplist = empty insn list

insn = first insn in insn-list ofbb, ignoring NOTE and USE

WHILE (insn !=NULL) {

WHILE (insn not store, call,jump, macro or clobber) {

add insn to maplist

insn = next insn in insn-list (or NULL if insn was last in list)

MAP_LlST(maplist, after_ra)

if(insn != clobber) {

add insn to maplist

}

if(insn is macro or call) {

MAP_LIST(maplisf) /* macro and call are mapped separately */ insn = next insn in insn-list (or NULL ifinsn was last in list)

}

}

MAP_LIST(maplist, afterja):

opc = last opcode in codelist orNULL ifempty

WHILE (maplist not empty) {

FILL_OPC(maplist, opc, afterja)

if(maplist not empty and no insn could be mapped to opc) {

opc = new, empty opcode

add opc to codelist

}

}

FILL_OPC(maplist, opc, after_ra):

if(!afterra) { // before register allocation: reordering allowed

// loop over positions and fill with whatever insn is possible

for (pos = first free ALU position in opc; pos <= last ALU pos.; pos++) { insn = find first insn in maplist which can be mapped to opc at pos if(insn) {

map insn to opc at pos

remove insn from maplist

}

}

} else { // after register allocation: reordering not allowed

do {

pos = first free ALU position in opc

insn = first insn in maplist

while (insn cannot be mapped to pos and pos <= last ALU position) { pos = pos + 1

}

if(insn can be mapped to pos) {

map insn to opc at pos

remove insn from maplist

}

} until maplist empty or nothing can be mapped to opc anymore

INSERT_MAPPINGJNSNS

This function, called by the machine reorganization pass, first calls

FNCMAP_PROC(true). It re-maps the insn-list to a new codelist. Because of dependencies introduced by the register allocation, this mapping cannot reorder insns as freely as pass FNCMAP. But it can produce a better mapping since the removed and inserted insns can be considered and the class ofthe registers allocated is known.

Probably the reordering in FNCMAP will help producing a better mapping here.

Then, the function iterates the generated codelist and adds the pseudo-insns "NOP", "NEXT" and "|" to generate the correct assembler output with the insns at the correct ALU positions in the FNC opcode.

It also performs the following actions:

• After load instructions, ifloaded value is used in next opcode: Replace register by "mem" ifpossible, otherwise insert additional "NEXT".

• Ifa register is defined and used within the same FNC opcode, the input ofthe use is replaced by the pseudo-register for the ALU output (alO etc.).

Example for pseudo-register replacement:

Insn-list:

ADD r2, rl , rO

SUB r4, r2, #2

SHLr2, r4,#I

Mapping to ALUs in FNC opcode:

Changed insn-list:

ADD -,rl, rO

SUB r4, alO, #2

SHLr2, all, #1

NEXT

Note that the insns are output to assembler code exactly as in the original fnc-gcc. Only "NOP", "NEXT" and "|" pseudo-insns are added, and the registers referring to internal ALU results are adjusted.

PSEUDO REGISTERS TO REPRESENT ALU REFERENCES

TBD (by tomorrow morning)

Latency Massive Parallel Data Processing Device

The present invention relates to a method of data processing and in particular to an optimized architecture for a processor having an execution pipeline allowing on each stage of the pipeline the conditional execution and in particular conditional jumps without reducing the overall performance due to. stalls of the pipeline. The architecture according to the— present invention is particularly adapted to process any sequential algorithm, in particular Huffman-like algorithms, e. g. CAVLC and arithmetic codecs like CABAC having a large number of conditions and jumps. Furthermore, the present invention is particularly suited for intra-frame coding, e. g. as suggested by the video codecs H.264.