WO2016197026A1 - Full reference image quality assessment based on convolutional neural network - Google Patents

Full reference image quality assessment based on convolutional neural network

- Publication number

- WO2016197026A1 (PCT/US2016/035868)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- image

- distorted

- normalized

- reference image

- similarity

- Prior art date

Classifications

- G06T7/001: Industrial image inspection using an image reference approach

- G06F18/22: Matching criteria, e.g. proximity measures

- G06F18/24137: Distances to cluster centroïds

- G06N3/045: Combinations of networks

- G06N3/08: Learning methods

- G06N3/084: Backpropagation, e.g. using gradient descent

- G06T5/70: Denoising; Smoothing

- G06T7/0002: Inspection of images, e.g. flaw detection

- G06V10/454: Integrating the filters into a hierarchical structure, e.g. convolutional neural networks [CNN]

- G06V10/82: Arrangements for image or video recognition or understanding using pattern recognition or machine learning using neural networks

- G06V10/993: Evaluation of the quality of the acquired pattern

- G06V30/19173: Classification techniques

- G06V30/194: References adjustable by an adaptive method, e.g. learning

- H04N17/004: Diagnosis, testing or measuring for television systems or their details for digital television systems

- H04N19/154: Measured or subjectively estimated visual quality after decoding, e.g. measurement of distortion

- G06T2207/10004: Still image; Photographic image

- G06T2207/10016: Video; Image sequence

- G06T2207/20021: Dividing image into blocks, subimages or windows

- G06T2207/20081: Training; Learning

- G06T2207/20084: Artificial neural networks [ANN]

- G06T2207/30168: Image quality inspection

Definitions

- Image Quality Assessment (IQA) is a prediction of visual quality as perceived by a human viewer.

- Image quality measures can be used to assess the dependence of perceived distortion as a function of parameters such as transmission rate and also for selecting the optimal parameters of image enhancement methods.

- Although subjective tests may be carried out in laboratory settings to perform IQA, such tests are expensive and time-consuming, and cannot be used in real-time and automated systems. Therefore, the possibility of developing objective IQA metrics to measure image quality automatically and efficiently is of great interest.

- FR-IQA Full-Reference IQA

- MSE Mean Squared Error

- PSNR Peak Signal-to-Noise Ratio

- PVQMs perceptual visual quality metrics

- the bottom-up approaches attempt to model various processing stages in the visual pathway of the human visual system (HVS) by simulating relevant psychophysical and physiological properties including contrast sensitivity, luminance adaption, various masking effects and so on.

- HVS human visual system

- SSIM Structural SIMilarity

- MS-SSIM Multi-Scale SSIM

- IW-SSIM Information Weighted SSIM

- FSIM Feature SIMilarity

- GMSD Gradient Magnitude Similarity Deviation
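
For orientation, the two pixel-wise baselines named in the list above, MSE and PSNR, can be sketched in a few lines. This is a minimal illustration and not part of the patent text; the function names and the default peak value of 255 (8-bit images) are assumptions.

```python
import numpy as np

def mse(reference, distorted):
    """Mean squared error between two images of identical shape."""
    ref = np.asarray(reference, dtype=np.float64)
    dist = np.asarray(distorted, dtype=np.float64)
    if ref.shape != dist.shape:
        raise ValueError("images must have the same shape")
    return float(np.mean((ref - dist) ** 2))

def psnr(reference, distorted, peak=255.0):
    """Peak signal-to-noise ratio in dB.

    `peak` is the largest possible pixel value (255 for 8-bit data, assumed here).
    """
    err = mse(reference, distorted)
    if err == 0.0:
        return float("inf")  # identical images: no noise at all
    return float(10.0 * np.log10(peak ** 2 / err))
```

Both measures compare pixels independently, which is why perceptually motivated metrics such as SSIM and the learned metric described in this document are of interest.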

- Embodiments generally relate to providing systems and methods for assessing image quality of a distorted image relative to a reference image.

- the system comprises a convolutional neural network that accepts as an input the distorted image and the reference image, and provides as an output a metric of image quality.

- the method comprises inputting the distorted image and the reference image to a convolutional neural network configured to process the distorted image and the reference image and provide as an output a metric of image quality.

- a method for training a convolutional neural network to assess image quality of a distorted image relative to a reference image.

- the method comprises selecting an initial set of network parameters as a current set of network parameters for the convolutional neural network; for each of a plurality of pairs of images, each pair consisting of a distorted image and a corresponding reference image, processing the pair of images through the convolutional neural network to provide a computed similarity score metric Sc; and adjusting one or more of the network parameters of the current set of network parameters based on a comparison of the computed similarity score metric Sc and an expected similarity score metric Sp for the pair; wherein the expected similarity score metric Sp is provided by human perception.

- Figure 1 schematically illustrates the use of a system for assessing image quality of a distorted image relative to a reference image according to one embodiment.

- Figure 2 illustrates the architecture of a system for assessing image quality of a distorted image relative to a reference image according to one embodiment.

- Figure 3 illustrates one implementation of a layered system for assessing image quality of a distorted image relative to a reference image.

- Figure 4 is a flowchart showing steps of a method for assessing image quality of a distorted image relative to a reference image according to one embodiment.

- Figure 5 illustrates a method of training a convolutional neural network to assess the image quality of a distorted image relative to a reference image according to one embodiment.

- Figure 6 is a flowchart showing steps of a method of training a convolutional neural network to assess the image quality of a distorted image relative to a reference image according to one embodiment.

- FIG. 1 schematically illustrates the use of a system 100 for assessing image quality of a distorted image 102 relative to a reference image 104 according to one embodiment.

- System 100 includes a network of interconnected modules or layers, further described below in reference to Figure 2, that embody a trained FR-IQA model.

- Image data from each of the distorted image 102 and the reference image 104 are fed into system 100, which in turn produces output metric 106, indicative of the quality of distorted image 102 as likely to be perceived by a human viewer.

- FIG. 2 illustrates a schematic view of the architecture of a system 200 that may be used for assessing image quality of a distorted image 212 relative to a reference image 214 according to one embodiment.

- System 200 comprises a plurality of layers, 201 through 207. It may be helpful to consider system 200 as a two-stage system, where the first stage consists of layers 201 through 205, collectively providing data to the second stage, layers 206 and 207. This second stage may be thought of as a "standard" neural network, while the combination of first and second stages makes system 200 a convolutional neural network.

- Image data from distorted image 212 and reference image 214 are fed into input layer 201, which acts to normalize both sets of image data providing a normalized distorted image and a normalized reference image.

- Image data from the normalized distorted and reference images are fed into convolution layer 202, which acts to convolve each of the normalized distorted image and the normalized reference image with a plurality N1 of filters, and applies a squared activation function to each pixel of each image, to provide N1 pairs of feature maps.

- Each pair of feature maps contains one filtered normalized distorted image and one correspondingly filtered and normalized reference image.

- Image data from the N1 pairs of feature maps are fed into linear combination layer 203, which computes N2 linear combinations of the N1 feature maps corresponding to distorted image 212 and N2 linear combinations of the corresponding N1 feature maps corresponding to the reference image 214, providing N2 pairs of combined feature maps.

- Each pair of combined feature maps contains one combination of filtered normalized distorted images and one corresponding combination of filtered and normalized reference images.

- Similarity computation layer 204 acts on the data from the N2 pairs of combined feature maps received from linear combination layer 203 to compute N2 similarity maps.

- Each similarity map is computed on the basis of data from corresponding patches of pixels from one pair of combined feature maps, with each similarity map corresponding to a different one of the N2 pairs of combined feature maps.

- Data from the similarity maps are fed into pooling layer 205, which applies an average pooling for each of the N2 similarity maps to provide N2 similarity input values.

- the N2 similarity input values are fed into fully connected layer 206, which operates on the N2 similarity input values to provide M hidden node values, where M is an integer greater than N2.

- the M hidden node values are mapped to a single output node by linear regression layer 207.

- the value at the output node is a metric of image quality, indicative of the quality of distorted image 212 as likely to be perceived by a human viewer, based on the training of system 200.
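
The data flow through layers 201 to 207 described above can be sketched as a NumPy forward pass. This is an illustrative sketch under stated assumptions, not the patented implementation: the text does not give the normalization formula, the border handling, the similarity equation, or the hidden-layer non-linearity, so the per-image zero-mean/unit-variance normalization, "valid" filtering, SSIM-style ratio, and ReLU used here are all assumptions, as are every function and parameter name.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def normalize(img, eps=1e-8):
    # Layer 201 (assumed form): zero mean, unit variance per image.
    img = np.asarray(img, dtype=np.float64)
    return (img - img.mean()) / (img.std() + eps)

def conv_squared(img, filters):
    # Layer 202: apply each of the N1 filters, then square every response.
    # "Valid" borders and cross-correlation (no kernel flip) are assumptions.
    k = filters.shape[-1]
    windows = sliding_window_view(img, (k, k))       # (H-k+1, W-k+1, k, k)
    responses = np.einsum("ijkl,nkl->nij", windows, filters)
    return responses ** 2                            # (N1, H-k+1, W-k+1)

def linear_combine(maps, weights):
    # Layer 203: N2 linear combinations of the N1 feature maps.
    return np.einsum("mn,nij->mij", weights, maps)   # (N2, h, w)

def similarity(x, y, c=1e-3):
    # Layer 204 (assumed equation): pointwise SSIM-style ratio, equal to 1 where x == y.
    return (2.0 * x * y + c) / (x ** 2 + y ** 2 + c)

def init_params(n1=10, n2=10, m=800, k=7, seed=0):
    # Random placeholder weights; a trained model would supply these.
    rng = np.random.default_rng(seed)
    return {
        "filters": rng.normal(scale=0.1, size=(n1, k, k)),
        "combine": rng.normal(scale=0.1, size=(n2, n1)),
        "W_fc": rng.normal(scale=0.1, size=(m, n2)),
        "b_fc": np.zeros(m),
        "w_out": rng.normal(scale=0.1, size=m),
        "b_out": 0.0,
    }

def forward(distorted, reference, p):
    feats = []
    for img in (distorted, reference):               # same weights for both images
        maps = conv_squared(normalize(img), p["filters"])
        feats.append(linear_combine(maps, p["combine"]))
    sim = similarity(feats[0], feats[1])             # N2 similarity maps
    pooled = sim.mean(axis=(1, 2))                   # layer 205: average pooling
    hidden = np.maximum(0.0, p["W_fc"] @ pooled + p["b_fc"])  # layer 206 (ReLU assumed)
    score = float(p["w_out"] @ hidden + p["b_out"])  # layer 207: linear regression
    return score, pooled
```

With untrained weights the score itself is meaningless, but the pooled similarities already behave as expected: they equal 1 when the two inputs are identical and fall below 1 as the images diverge.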

- hyper-parameters of the neural network 200 may be selected to determine specific different architectures or implementations suited to particular image assessment applications.

- One is the patch size of pixels in the original distorted and reference images.

- Other hyper-parameters include the size of the filters (in terms of numbers of pixels) used in the convolution layer, the number N1 of filters used in the convolution layer, the activation function used in the convolution layer, the number N2 of linear combinations computed in the linear combination layer, the number M of hidden nodes in the fully connected layer, and the equation used to compute similarity in the similarity computation layer.

- FIG. 3: One specific implementation of a convolutional neural network that has been found to be suitable for carrying out image quality assessment is illustrated in Figure 3, showing a 32x32-7x7x10-1x1x10-800-1 structure. Details of each layer are explained as follows:

- filters smaller or greater than 7x7 may be used, depending on the minimum size of the low-level features of interest. Similarly, in some embodiments, fewer than or more than 10 filters may be used, depending on the complexity of the distortions involved.

- a squared activation function is applied at each pixel of each image patch in this layer.

- similarities are computed in a point-by-point way (i.e. pixel by pixel) between the combined feature maps from corresponding distorted and reference patches

- the pooling layer provides a 10-dimensional vector of pooled similarities as input to the fully connected layer. In cases where more than 10 filters are used in the convolution layer, producing more than 10 filtered feature maps, and so on, there will be a correspondingly greater dimensionality to the pooled similarity inputs.
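
To make the 32x32-7x7x10-1x1x10-800-1 structure concrete, the following sketch tabulates the array shape produced at each stage. It is a bookkeeping aid only; the helper name is hypothetical, and the use of "valid" filtering (no padding, so a 7x7 filter shrinks a 32x32 patch to 26x26) is an assumption, since the text does not state the border handling.

```python
def layer_shapes(patch=32, kernel=7, n1=10, n2=10, hidden=800):
    """Shapes per stage for one image of a distorted/reference patch pair."""
    side = patch - kernel + 1  # "valid" filtering assumed: no padding
    return {
        "input": (patch, patch),
        "convolution": (n1, side, side),
        "linear_combination": (n2, side, side),
        "similarity": (n2, side, side),
        "pooled": (n2,),
        "hidden": (hidden,),
        "output": (1,),
    }

shapes = layer_shapes()
```

Calling `layer_shapes(n1=16, n2=16)` shows the point made above: using more filters and combinations widens the pooled similarity vector accordingly.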

- b is a bias term

- the activation function may include other non-linear operations.

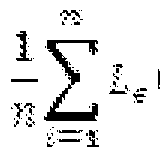

- the 800 hidden nodes are mapped to one single output node. If the weights in the linear regression layer are described by

- FIG. 4 is a flowchart showing steps of a method 400 for assessing image quality of a distorted image relative to a reference image according to one embodiment.

- a distorted image and a reference image (that may, for example, be corresponding frames from a distorted and reference video stream) are received as inputs to a trained convolutional neural network.

- the distorted and reference images are processed through the trained convolutional neural network.

- the output of the trained neural network operating on the distorted and reference images is provided as a metric of the image quality of the distorted image.

- Figure 5 illustrates a method of training a convolutional neural network model 500 to assess the image quality of a distorted image relative to a reference image according to one embodiment.

- An initial set of network parameters is chosen for the model, and a pair of corresponding distorted (502) and reference (504) images, having an expected or predicted similarity score metric S, determined by a human viewer, is provided as an input to the network.

- the similarity score metric S' provided by the network is compared at cost module 506 with the predicted score metric S, and one or more parameters of model 500 are adjusted in response to that comparison, updating the model.

- a second pair of distorted and reference images is then processed through network 500 using the adjusted parameters, a comparison is made between the second score metric produced and the score metric expected for this second pair, and further adjustments may be made in response.

- the process is repeated as desired for the available set of training image pairs, until the final model parameters are set and the model network is deemed to be trained.

- FIG. 6 is a flowchart showing steps of a method 600 of training a convolutional neural network to assess the image quality of a distorted image relative to a reference image according to one embodiment.

- a pair of distorted and reference images (the ith pair of a total of T pairs available) is received and input to the network, characterized by a previously selected set of parameters.

- the distorted and reference images are processed through the network.

- the network parameters are adjusted, using an objective function, according to a comparison between the output similarity score Sc computed by the convolutional neural network and the similarity score metric Sp predicted for that pair of images, as perceived by a human viewer.

- one such pair is processed through steps 602 and 604, and network parameters further adjusted at step 606, and a further determination made at step 608 whether all the training image pairs have been processed.

- the neural network may be considered to be trained.

- the training images may be fed into the network more than once to improve the training.

- the order in which the training images are processed may be random.
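
The control flow of method 600, including repeated passes over the training pairs in random order, can be sketched as follows. This is a schematic under stated assumptions: `train`, `forward`, and `update` are hypothetical names, and the one-parameter toy model stands in for the convolutional network purely to show the loop; it is not the network described here.

```python
import numpy as np

def train(pairs, targets, forward, update, params, epochs=10, seed=0):
    """Visit all T training pairs once per epoch, in a fresh random order."""
    rng = np.random.default_rng(seed)
    for _ in range(epochs):                       # pairs may be fed in more than once
        for i in rng.permutation(len(pairs)):     # random processing order
            distorted, reference = pairs[i]       # step 602: receive the i-th pair
            s_c = forward(distorted, reference, params)                     # step 604
            params = update(params, distorted, reference, s_c, targets[i])  # step 606
    return params                                 # step 608 satisfied: all pairs seen

# Hypothetical one-parameter stand-in for the network: score = a * mean |d - r|.
def toy_forward(distorted, reference, params):
    return params["a"] * np.mean(np.abs(distorted - reference))

def toy_update(params, distorted, reference, s_c, s_p, lr=0.5):
    feature = np.mean(np.abs(distorted - reference))
    grad = (s_c - s_p) * feature                  # gradient of 0.5 * (s_c - s_p)**2
    return {"a": params["a"] - lr * grad}
```

Passing the model as `forward` and `update` callables keeps the loop independent of the network details, so the same skeleton applies whichever objective function is chosen.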

- the objective function used to train the network, in determining exactly how the model parameters are adjusted in step 606 of method 600, for example, is the same as that used in standard ε-SVR (support vector regression).

- the network can be trained by performing back-propagation using Stochastic Gradient Descent (SGD).

- SGD Stochastic Gradient Descent

- Regularization methods may be used to avoid over-training the neural network.

- the regularization method involves adding the L2 norm of the weights in the linear regression layer in the objective function. This is a widely used method for regularization, which for example has been used in SVM (support vector machine).

- the objective function can be modified by adding this L2 term, weighted by a small positive constant.
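
The combination described above, an ε-SVR-style objective with an L2 penalty on the linear regression weights, minimized by stochastic gradient descent, can be sketched for the regression layer alone. This is a simplified illustration and not the patent's formula, which is not recoverable from this text: the squared L2 norm, the names `eps` and `lam`, and the restriction to the last layer (full back-propagation through the earlier layers is omitted) are all assumptions.

```python
import numpy as np

def objective(w, b, hidden, s_p, eps=0.1, lam=1e-3):
    """Mean epsilon-insensitive loss plus an L2 penalty on the regression weights.

    hidden: (n, M) hidden-node values; s_p: (n,) human-provided scores.
    """
    s_c = hidden @ w + b                                 # computed scores Sc
    slack = np.maximum(0.0, np.abs(s_c - s_p) - eps)     # zero inside the eps tube
    return float(slack.mean() + lam * np.dot(w, w))

def sgd_step(w, b, h, s_p, lr=0.01, eps=0.1, lam=1e-3):
    """One stochastic (sub)gradient step on a single training pair."""
    residual = float(h @ w + b) - s_p
    g = np.sign(residual) if abs(residual) > eps else 0.0  # subgradient of the loss
    w_new = w - lr * (g * h + 2.0 * lam * w)               # loss term + L2 term
    b_new = b - lr * g                                     # bias is not regularized
    return w_new, b_new
```

Errors smaller than `eps` contribute nothing to the loss, so the weights are only pushed when the computed score differs noticeably from the human score, while the penalty term keeps the weights small.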

- This constraint may be implemented by adding a sparse binary mask.

- Embodiments described herein provide various benefits.

- embodiments enable image quality to be assessed in applications where corresponding pairs of reference and distorted images are available for analysis, using systems and methods that are readily implemented in real-time and automated systems and yield results that align well with human perception across different types of distortions.

- This invention provides an "end-to-end" solution for automatic image quality assessment, accepting a pair of reference and distorted images as an input, and providing a meaningful image quality metric as an output.

- Any suitable programming language can be used to implement the routines of particular embodiments, including C, C++, Java, assembly language, etc.

- Different programming techniques can be employed such as procedural or object oriented.

- the routines can execute on a single processing device or multiple processors. Although the steps, operations, or computations may be presented in a specific order, this order may be changed in different particular embodiments. In some particular embodiments, multiple steps shown as sequential in this specification can be performed at the same time.

- Particular embodiments may be implemented in a computer-readable storage medium for use by or in connection with the instruction execution system, apparatus, system, or device.

- Particular embodiments can be implemented in the form of control logic in software or hardware or a combination of both.

- the control logic when executed by one or more processors, may be operable to perform that which is described in particular embodiments.

- Particular embodiments may be implemented by using a programmed general purpose digital computer, or by using application specific integrated circuits, programmable logic devices, or field programmable gate arrays; optical, chemical, biological, quantum or nanoengineered systems, components and mechanisms may also be used.

- the functions of particular embodiments can be achieved by any means as is known in the art. Distributed, networked systems, components, and/or circuits can be used.

- Communication, or transfer, of data may be wired, wireless, or by any other means.

- a "processor" includes any suitable hardware and/or software system, mechanism or component that processes data, signals or other information.

- a processor can include a system with a general-purpose central processing unit, multiple processing units, dedicated circuitry for achieving functionality, or other systems. Processing need not be limited to a geographic location, or have temporal limitations. For example, a processor can perform its functions in "real time," "offline," in a "batch mode," etc.

- Portions of processing can be performed at different times and at different locations, by different (or the same) processing systems.

- Examples of processing systems can include servers, clients, end user devices, routers, switches, networked storage, etc.

- a computer may be any processor in communication with a memory.

- the memory may be any suitable processor-readable storage medium, such as random-access memory (RAM), read-only memory (ROM), magnetic or optical disk, or other tangible media suitable for storing instructions for execution by the processor.

Priority Applications (4)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| EP16804582.1A EP3292512B1 (en) | 2015-06-05 | 2016-06-03 | Full reference image quality assessment based on convolutional neural network |
| JP2017563173A JP6544543B2 (en) | 2015-06-05 | 2016-06-03 | Full Reference Image Quality Evaluation Method Based on Convolutional Neural Network |
| KR1020177034859A KR101967089B1 (en) | 2015-06-05 | 2016-06-03 | Convergence Neural Network based complete reference image quality evaluation |
| CN201680032412.0A CN107636690B (en) | 2015-06-05 | 2016-06-03 | Full reference image quality assessment based on convolutional neural network |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |
|---|---|---|---|
| US14/732,518 US9741107B2 (en) | 2015-06-05 | 2015-06-05 | Full reference image quality assessment based on convolutional neural network |
| US14/732,518 | 2015-06-05 | | |

Publications (1)

| Publication Number | Publication Date |
|---|---|
| WO2016197026A1 (en) | 2016-12-08 |

Family ID: 57441857

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |
|---|---|---|---|
| PCT/US2016/035868 WO2016197026A1 (en) | Full reference image quality assessment based on convolutional neural network | 2015-06-05 | 2016-06-03 |

Country Status (6)

| Country | Link |
|---|---|
| US (1) | US9741107B2 (en) |
| EP (1) | EP3292512B1 (en) |
| JP (1) | JP6544543B2 (en) |
| KR (1) | KR101967089B1 (en) |
| CN (1) | CN107636690B (en) |
| WO (1) | WO2016197026A1 (en) |

Cited By (14)

| Publication number | Priority date | Publication date | Assignee | Title |
|---|---|---|---|---|
| CN108335289A (en) * | 2018-01-18 | 2018-07-27 | 天津大学 | A kind of full image method for evaluating objective quality with reference to fusion |
| CN109360183A (en) * | 2018-08-20 | 2019-02-19 | 中国电子进出口有限公司 | A kind of quality of human face image appraisal procedure and system based on convolutional neural networks |
| WO2019047949A1 (en) * | 2017-09-08 | 2019-03-14 | 众安信息技术服务有限公司 | Image quality evaluation method and image quality evaluation system |
| CN110033446A (en) * | 2019-04-10 | 2019-07-19 | 西安电子科技大学 | Enhancing image quality evaluating method based on twin network |
| WO2019157235A1 (en) * | 2018-02-07 | 2019-08-15 | Netflix, Inc. | Techniques for predicting perceptual video quality based on complementary perceptual quality models |
| WO2019182759A1 (en) * | 2018-03-20 | 2019-09-26 | Uber Technologies, Inc. | Image quality scorer machine |
| CN110751649A (en) * | 2019-10-29 | 2020-02-04 | 腾讯科技(深圳)有限公司 | Video quality evaluation method and device, electronic equipment and storage medium |
| CN111105357A (en) * | 2018-10-25 | 2020-05-05 | 杭州海康威视数字技术股份有限公司 | Distortion removing method and device for distorted image and electronic equipment |
| CN111127587A (en) * | 2019-12-16 | 2020-05-08 | 杭州电子科技大学 | Non-reference image quality map generation method based on countermeasure generation network |
| EP3540637A4 (en) * | 2017-03-08 | 2020-09-02 | Tencent Technology (Shenzhen) Company Limited | Neural network model training method, device and storage medium for image processing |
| US10887602B2 (en) | 2018-02-07 | 2021-01-05 | Netflix, Inc. | Techniques for modeling temporal distortions when predicting perceptual video quality |
| JP2021507345A (en) * | 2017-12-14 | 2021-02-22 | International Business Machines Corporation | Fusion of sparse kernels to approximate the complete kernel of convolutional neural networks |
| WO2022217496A1 (en) * | 2021-04-14 | 2022-10-20 | 中国科学院深圳先进技术研究院 | Image data quality evaluation method and apparatus, terminal device, and readable storage medium |
| CN118096770A (en) * | 2024-04-29 | 2024-05-28 | 江西财经大学 | Distortion-resistant and reference-free panoramic image quality evaluation method and system independent of view port |

Families Citing this family (67)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US9734567B2 (en) * | 2015-06-24 | 2017-08-15 | Samsung Electronics Co., Ltd. | Label-free non-reference image quality assessment via deep neural network |

| US10410330B2 (en) * | 2015-11-12 | 2019-09-10 | University Of Virginia Patent Foundation | System and method for comparison-based image quality assessment |

| US10356343B2 (en) * | 2016-03-18 | 2019-07-16 | Raytheon Company | Methods and system for geometric distortion correction for space-based rolling-shutter framing sensors |

| US9904871B2 (en) * | 2016-04-14 | 2018-02-27 | Microsoft Technologies Licensing, LLC | Deep convolutional neural network prediction of image professionalism |

| US10043240B2 (en) | 2016-04-14 | 2018-08-07 | Microsoft Technology Licensing, Llc | Optimal cropping of digital image based on professionalism score of subject |

| US10043254B2 (en) | 2016-04-14 | 2018-08-07 | Microsoft Technology Licensing, Llc | Optimal image transformation based on professionalism score of subject |

| WO2018033137A1 (en) * | 2016-08-19 | 2018-02-22 | 北京市商汤科技开发有限公司 | Method, apparatus, and electronic device for displaying service object in video image |

| US10360494B2 (en) * | 2016-11-30 | 2019-07-23 | Altumview Systems Inc. | Convolutional neural network (CNN) system based on resolution-limited small-scale CNN modules |

| US10834406B2 (en) * | 2016-12-12 | 2020-11-10 | Netflix, Inc. | Device-consistent techniques for predicting absolute perceptual video quality |

| US11113800B2 (en) | 2017-01-18 | 2021-09-07 | Nvidia Corporation | Filtering image data using a neural network |

| US11537869B2 (en) * | 2017-02-17 | 2022-12-27 | Twitter, Inc. | Difference metric for machine learning-based processing systems |

| WO2018152741A1 (en) * | 2017-02-23 | 2018-08-30 | Nokia Technologies Oy | Collaborative activation for deep learning field |

| CN106920215B (en) * | 2017-03-06 | 2020-03-27 | 长沙全度影像科技有限公司 | Method for detecting registration effect of panoramic image |

| CN107103331B (en) * | 2017-04-01 | 2020-06-16 | 中北大学 | Image fusion method based on deep learning |

| US10529066B2 (en) | 2017-04-04 | 2020-01-07 | Board Of Regents, The University Of Texas Systems | Assessing quality of images or videos using a two-stage quality assessment |

| US10699160B2 (en) * | 2017-08-23 | 2020-06-30 | Samsung Electronics Co., Ltd. | Neural network method and apparatus |

| CN107705299B (en) * | 2017-09-25 | 2021-05-14 | 安徽睿极智能科技有限公司 | Image quality classification method based on multi-attribute features |

| CN107679490B (en) * | 2017-09-29 | 2019-06-28 | 百度在线网络技术(北京)有限公司 | Method and apparatus for detection image quality |

| US10540589B2 (en) * | 2017-10-24 | 2020-01-21 | Deep North, Inc. | Image quality assessment using similar scenes as reference |

| CN108171256A (en) * | 2017-11-27 | 2018-06-15 | 深圳市深网视界科技有限公司 | Facial image matter comments model construction, screening, recognition methods and equipment and medium |

| CN108074239B (en) * | 2017-12-30 | 2021-12-17 | 中国传媒大学 | No-reference image quality objective evaluation method based on prior perception quality characteristic diagram |

| CN108389192A (en) * | 2018-02-11 | 2018-08-10 | 天津大学 | Stereo-picture Comfort Evaluation method based on convolutional neural networks |

| US11216698B2 (en) * | 2018-02-16 | 2022-01-04 | Spirent Communications, Inc. | Training a non-reference video scoring system with full reference video scores |

| CN108259893B (en) * | 2018-03-22 | 2020-08-18 | 天津大学 | Virtual reality video quality evaluation method based on double-current convolutional neural network |

| WO2019194044A1 (en) * | 2018-04-04 | 2019-10-10 | パナソニックIpマネジメント株式会社 | Image processing device and image processing method |

| CN108875904A (en) * | 2018-04-04 | 2018-11-23 | 北京迈格威科技有限公司 | Image processing method, image processing apparatus and computer readable storage medium |

| CN108648180B (en) * | 2018-04-20 | 2020-11-17 | 浙江科技学院 | Full-reference image quality objective evaluation method based on visual multi-feature depth fusion processing |

| CN108596890B (en) * | 2018-04-20 | 2020-06-16 | 浙江科技学院 | Full-reference image quality objective evaluation method based on vision measurement rate adaptive fusion |

| CN108596902B (en) * | 2018-05-04 | 2020-09-08 | 北京大学 | Multi-task full-reference image quality evaluation method based on gating convolutional neural network |

| CN108665460B (en) * | 2018-05-23 | 2020-07-03 | 浙江科技学院 | Image quality evaluation method based on combined neural network and classified neural network |

| KR102184755B1 (en) * | 2018-05-31 | 2020-11-30 | 서울대학교 산학협력단 | Apparatus and Method for Training Super Resolution Deep Neural Network |

| CN108986075A (en) * | 2018-06-13 | 2018-12-11 | 浙江大华技术股份有限公司 | A kind of judgment method and device of preferred image |

| US11704791B2 (en) * | 2018-08-30 | 2023-07-18 | Topcon Corporation | Multivariate and multi-resolution retinal image anomaly detection system |

| JP6925474B2 (en) * | 2018-08-31 | 2021-08-25 | ソニーセミコンダクタソリューションズ株式会社 | Operation method and program of solid-state image sensor, information processing system, solid-state image sensor |

| JP6697042B2 (en) * | 2018-08-31 | 2020-05-20 | ソニーセミコンダクタソリューションズ株式会社 | Solid-state imaging system, solid-state imaging method, and program |

| JP7075012B2 (en) * | 2018-09-05 | 2022-05-25 | 日本電信電話株式会社 | Image processing device, image processing method and image processing program |

| US11055819B1 (en) * | 2018-09-27 | 2021-07-06 | Amazon Technologies, Inc. | DualPath Deep BackProjection Network for super-resolution |

| US11132586B2 (en) * | 2018-10-29 | 2021-09-28 | Nec Corporation | Rolling shutter rectification in images/videos using convolutional neural networks with applications to SFM/SLAM with rolling shutter images/videos |

| CN109685772B (en) * | 2018-12-10 | 2022-06-14 | 福州大学 | No-reference stereo image quality evaluation method based on registration distortion representation |

| US11557107B2 (en) | 2019-01-02 | 2023-01-17 | Bank Of America Corporation | Intelligent recognition and extraction of numerical data from non-numerical graphical representations |

| CN109801273B (en) * | 2019-01-08 | 2022-11-01 | 华侨大学 | Light field image quality evaluation method based on polar plane linear similarity |

| US10325179B1 (en) * | 2019-01-23 | 2019-06-18 | StradVision, Inc. | Learning method and learning device for pooling ROI by using masking parameters to be used for mobile devices or compact networks via hardware optimization, and testing method and testing device using the same |

| CN109871780B (en) * | 2019-01-28 | 2023-02-10 | 中国科学院重庆绿色智能技术研究院 | Face quality judgment method and system and face identification method and system |

| WO2020165848A1 (en) * | 2019-02-14 | 2020-08-20 | Hatef Otroshi Shahreza | Quality assessment of an image |

| US11405695B2 (en) | 2019-04-08 | 2022-08-02 | Spirent Communications, Inc. | Training an encrypted video stream network scoring system with non-reference video scores |

| KR102420104B1 (en) * | 2019-05-16 | 2022-07-12 | 삼성전자주식회사 | Image processing apparatus and operating method for the same |

| US11521011B2 (en) | 2019-06-06 | 2022-12-06 | Samsung Electronics Co., Ltd. | Method and apparatus for training neural network model for enhancing image detail |

| CN110517237B (en) * | 2019-08-20 | 2022-12-06 | 西安电子科技大学 | No-reference video quality evaluation method based on expansion three-dimensional convolution neural network |

| CN110766657B (en) * | 2019-09-20 | 2022-03-18 | 华中科技大学 | Laser interference image quality evaluation method |

| US10877540B2 (en) | 2019-10-04 | 2020-12-29 | Intel Corporation | Content adaptive display power savings systems and methods |

| CN110796651A (en) * | 2019-10-29 | 2020-02-14 | 杭州阜博科技有限公司 | Image quality prediction method and device, electronic device and storage medium |

| KR102395038B1 (en) * | 2019-11-20 | 2022-05-09 | 한국전자통신연구원 | Method for measuring video quality using machine learning based features and knowledge based features and apparatus using the same |

| CN111192258A (en) * | 2020-01-02 | 2020-05-22 | 广州大学 | Image quality evaluation method and device |

| CN111524123B (en) * | 2020-04-23 | 2023-08-08 | 北京百度网讯科技有限公司 | Method and apparatus for processing image |

| CN111833326B (en) * | 2020-07-10 | 2022-02-11 | 深圳大学 | Image quality evaluation method, image quality evaluation device, computer equipment and storage medium |

| US11616959B2 (en) | 2020-07-24 | 2023-03-28 | Ssimwave, Inc. | Relationship modeling of encode quality and encode parameters based on source attributes |

| US11341682B2 (en) | 2020-08-13 | 2022-05-24 | Argo AI, LLC | Testing and validation of a camera under electromagnetic interference |

| KR20220043764A (en) | 2020-09-29 | 2022-04-05 | 삼성전자주식회사 | Method and apparatus for video quality assessment |

| CN112419242B (en) * | 2020-11-10 | 2023-09-15 | 西北大学 | No-reference image quality evaluation method based on self-attention mechanism GAN network |

| CN112784698A (en) * | 2020-12-31 | 2021-05-11 | 杭州电子科技大学 | No-reference video quality evaluation method based on deep spatiotemporal information |

| CN112700425B (en) * | 2021-01-07 | 2024-04-26 | 云南电网有限责任公司电力科学研究院 | Determination method for X-ray image quality of power equipment |

| US11521639B1 (en) | 2021-04-02 | 2022-12-06 | Asapp, Inc. | Speech sentiment analysis using a speech sentiment classifier pretrained with pseudo sentiment labels |

| US11763803B1 (en) | 2021-07-28 | 2023-09-19 | Asapp, Inc. | System, method, and computer program for extracting utterances corresponding to a user problem statement in a conversation between a human agent and a user |

| CN113505854B (en) * | 2021-07-29 | 2023-08-15 | 济南博观智能科技有限公司 | Face image quality evaluation model construction method, device, equipment and medium |

| KR20230073871A (en) * | 2021-11-19 | 2023-05-26 | 삼성전자주식회사 | Image processing apparatus and operating method for the same |

| CN114332088B (en) * | 2022-03-11 | 2022-06-03 | 电子科技大学 | Motion estimation-based full-reference video quality evaluation method |

| CN117152092B (en) * | 2023-09-01 | 2024-05-28 | 国家广播电视总局广播电视规划院 | Full-reference image evaluation method, device, electronic equipment and computer storage medium |

Citations (5)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US20090103813A1 (en) * | 2005-05-25 | 2009-04-23 | Olivier Le Meur | Method for Assessing Image Quality |

| US20120308145A1 (en) * | 2010-01-12 | 2012-12-06 | Industry-University Cooperation Foundation Sogang University | Method and apparatus for assessing image quality using quantization codes |

| US20130156345A1 (en) * | 2010-08-06 | 2013-06-20 | Dmitry Valerievich Shmunk | Method for producing super-resolution images and nonlinear digital filter for implementing same |

| US20130163896A1 (en) * | 2011-12-24 | 2013-06-27 | Ecole De Technologie Superieure | Image registration method and system robust to noise |

| US20150117763A1 (en) * | 2012-05-31 | 2015-04-30 | Thomson Licensing | Image quality measurement based on local amplitude and phase spectra |

Family Cites Families (5)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| AU2002347754A1 (en) | 2002-11-06 | 2004-06-07 | Agency For Science, Technology And Research | A method for generating a quality oriented significance map for assessing the quality of an image or video |

| CN100588271C (en) | 2006-08-08 | 2010-02-03 | 安捷伦科技有限公司 | System and method for measuring video quality based on packet measurement and image measurement |

| EP2137696A2 (en) * | 2007-03-16 | 2009-12-30 | STI Medical Systems, LLC | A method to provide automated quality feedback to imaging devices to achieve standardized imaging data |

| CN102497576B (en) * | 2011-12-21 | 2013-11-20 | 浙江大学 | Full-reference image quality assessment method based on mutual information of Gabor features (MIGF) |

| US20150341667A1 (en) | 2012-12-21 | 2015-11-26 | Thomson Licensing | Video quality model, method for training a video quality model, and method for determining video quality using a video quality model |

2015

- 2015-06-05: US application US14/732,518, publication US9741107B2 (status: Active)

2016

- 2016-06-03: CN application CN201680032412.0A, publication CN107636690B (status: Active)

- 2016-06-03: KR application KR1020177034859A, publication KR101967089B1 (status: IP Right Grant)

- 2016-06-03: JP application JP2017563173A, publication JP6544543B2 (status: Active)

- 2016-06-03: WO application PCT/US2016/035868, publication WO2016197026A1 (status: Application Filing)

- 2016-06-03: EP application EP16804582.1A, publication EP3292512B1 (status: Active)

Non-Patent Citations (12)

| Title |

|---|

| HOU WEILONG ET AL.: "IEEE TRANSACTIONS ON NEURAL NETWORKS AND LEARNING SYSTEMS", vol. 26, IEEE, article "Blind Image Quality Assessment via Deep Learning" |

| JADERBERG ET AL.: "Speeding up convolutional neural networks with low rank expansions.", ARXIV PREPRINT ARXIV:1405.3866, 15 May 2014 (2014-05-15), XP055229678, Retrieved from the Internet <URL:https://arxiv.org/pdf/1405.3866.pdf> * |

| KANG ET AL.: "Convolutional neural networks for no-reference image quality assessment.", PROCEEDINGS OF THE IEEE CONFERENCE ON COMPUTER VISION AND PATTERN RECOGNITION., 28 June 2014 (2014-06-28), XP032649580, Retrieved from the Internet <URL:http://www.cv-foundation.org/openaccess/content_cvpr_2014/papers/Kang_Convolutional_Neural_Networks_2014_CVPRpaper.pdf> * |

| KANG LE ET AL.: "2014 IEEE CONFERENCE ON COMPUTER VISION AND PATTERN RECOGNITION", 23 June 2014, IEEE, article "Convolutional Neural Networks for No-Reference Image Quality Assessment" |

| LECUN ET AL.: "Deep learning tutorial.", TUTORIALS IN INTERNATIONAL CONFERENCE ON MACHINE LEARNING., 16 June 2013 (2013-06-16), Retrieved from the Internet <URL:http://www.cs.nyu.edu/~yann/talks/lecun-ranzato-icm12013.pdf> * |

| LIN ZHANG ET AL.: "IEEE TRANSACTIONS ON IMAGE PROCESSING", vol. 20, 1 August 2011, IEEE SERVICE CENTER, article "FSIM: A Feature Similarity Index for Image Quality Assessment" |

| LIVNI ET AL.: "On the computational efficiency of training neural networks.", ADVANCES IN NEURAL INFORMATION PROCESSING SYSTEMS., 28 October 2014 (2014-10-28), XP055296368, Retrieved from the Internet <URL:https://arxiv.org/pdf/1410.1141.pdf> * |

| MAX JADERBERG ET AL.: "Speeding up Convolutional Neural Networks with Low Rank Expansions", PROCEEDINGS OF THE BRITISH MACHINE VISION CONFERENCE 2014, 15 May 2014 (2014-05-15), ISBN: 978-1-901725-52-0 |

| PATRICK LE CALLET ET AL.: "A Convolutional Neural Network Approach for Objective Video Quality Assessment", HAL ARCHIVES-OUVERTES.FR, 11 June 2008 (2008-06-11), XP055522249, Retrieved from the Internet <URL:http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.692.4056&rep=rep1&type=pdf> |

| SAKANASHI ET AL.: "Comparison of superimposition and sparse models in blind source separation by multichannel Wiener filter.", SIGNAL & INFORMATION PROCESSING ASSOCIATION ANNUAL SUMMIT AND CONFERENCE (APSIPA ASC)., 6 December 2012 (2012-12-06), XP032309832, Retrieved from the Internet <URL:http://www.apsipa.org/proceedings_2012/papers/140.pdf> * |

| See also references of EP3292512A4 |

| ZHANG ET AL.: "FSIM: a feature similarity index for image quality assessment.", IEEE TRANSACTIONS ON IMAGE PROCESSING., 31 January 2011 (2011-01-31), XP011411841, Retrieved from the Internet <URL:http://www4.comp.polyu.edu.hk/-csizhang/IQA/TIP-IQA-FSIM.pdf> * |

Cited By (25)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| EP3540637A4 (en) * | 2017-03-08 | 2020-09-02 | Tencent Technology (Shenzhen) Company Limited | Neural network model training method, device and storage medium for image processing |

| WO2019047949A1 (en) * | 2017-09-08 | 2019-03-14 | 众安信息技术服务有限公司 | Image quality evaluation method and image quality evaluation system |

| JP7179850B2 (en) | 2017-12-14 | 2022-11-29 | インターナショナル・ビジネス・マシーンズ・コーポレーション | Fusing Sparse Kernels to Approximate Full Kernels of Convolutional Neural Networks |

| JP2021507345A (en) * | 2017-12-14 | 2021-02-22 | インターナショナル・ビジネス・マシーンズ・コーポレーションInternational Business Machines Corporation | Fusion of sparse kernels to approximate the complete kernel of convolutional neural networks |

| CN108335289A (en) * | 2018-01-18 | 2018-07-27 | 天津大学 | A kind of full image method for evaluating objective quality with reference to fusion |

| US10887602B2 (en) | 2018-02-07 | 2021-01-05 | Netflix, Inc. | Techniques for modeling temporal distortions when predicting perceptual video quality |

| KR102455509B1 (en) | 2018-02-07 | 2022-10-19 | 넷플릭스, 인크. | Techniques for predicting perceptual video quality based on complementary perceptual quality models |

| US11729396B2 (en) | 2018-02-07 | 2023-08-15 | Netflix, Inc. | Techniques for modeling temporal distortions when predicting perceptual video quality |

| US11700383B2 (en) | 2018-02-07 | 2023-07-11 | Netflix, Inc. | Techniques for modeling temporal distortions when predicting perceptual video quality |

| US10721477B2 (en) | 2018-02-07 | 2020-07-21 | Netflix, Inc. | Techniques for predicting perceptual video quality based on complementary perceptual quality models |

| KR20200116973A (en) * | 2018-02-07 | 2020-10-13 | 넷플릭스, 인크. | Techniques for predicting perceptual video quality based on complementary perceptual quality models |

| WO2019157235A1 (en) * | 2018-02-07 | 2019-08-15 | Netflix, Inc. | Techniques for predicting perceptual video quality based on complementary perceptual quality models |

| WO2019182759A1 (en) * | 2018-03-20 | 2019-09-26 | Uber Technologies, Inc. | Image quality scorer machine |

| US10916003B2 (en) | 2018-03-20 | 2021-02-09 | Uber Technologies, Inc. | Image quality scorer machine |

| CN109360183A (en) * | 2018-08-20 | 2019-02-19 | 中国电子进出口有限公司 | A kind of quality of human face image appraisal procedure and system based on convolutional neural networks |

| CN111105357B (en) * | 2018-10-25 | 2023-05-02 | 杭州海康威视数字技术股份有限公司 | Method and device for removing distortion of distorted image and electronic equipment |

| CN111105357A (en) * | 2018-10-25 | 2020-05-05 | 杭州海康威视数字技术股份有限公司 | Distortion removing method and device for distorted image and electronic equipment |

| CN110033446A (en) * | 2019-04-10 | 2019-07-19 | 西安电子科技大学 | Enhancing image quality evaluating method based on twin network |

| CN110033446B (en) * | 2019-04-10 | 2022-12-06 | 西安电子科技大学 | Enhanced image quality evaluation method based on twin network |

| CN110751649B (en) * | 2019-10-29 | 2021-11-02 | 腾讯科技(深圳)有限公司 | Video quality evaluation method and device, electronic equipment and storage medium |

| CN110751649A (en) * | 2019-10-29 | 2020-02-04 | 腾讯科技(深圳)有限公司 | Video quality evaluation method and device, electronic equipment and storage medium |

| CN111127587B (en) * | 2019-12-16 | 2023-06-23 | 杭州电子科技大学 | Reference-free image quality map generation method based on countermeasure generation network |

| CN111127587A (en) * | 2019-12-16 | 2020-05-08 | 杭州电子科技大学 | Non-reference image quality map generation method based on countermeasure generation network |

| WO2022217496A1 (en) * | 2021-04-14 | 2022-10-20 | 中国科学院深圳先进技术研究院 | Image data quality evaluation method and apparatus, terminal device, and readable storage medium |

| CN118096770A (en) * | 2024-04-29 | 2024-05-28 | 江西财经大学 | Distortion-resistant and reference-free panoramic image quality evaluation method and system independent of view port |

Also Published As

| Publication number | Publication date |

|---|---|

| KR101967089B1 (en) | 2019-04-08 |

| JP2018516412A (en) | 2018-06-21 |

| CN107636690A (en) | 2018-01-26 |

| EP3292512B1 (en) | 2021-01-20 |

| CN107636690B (en) | 2021-06-22 |

| US20160358321A1 (en) | 2016-12-08 |

| JP6544543B2 (en) | 2019-07-17 |

| KR20180004208A (en) | 2018-01-10 |

| EP3292512A4 (en) | 2019-01-02 |

| US9741107B2 (en) | 2017-08-22 |

| EP3292512A1 (en) | 2018-03-14 |

Similar Documents

| Publication | Title | Publication Date |

|---|---|---|

| EP3292512B1 (en) | Full reference image quality assessment based on convolutional neural network | |

| Li et al. | No-reference image quality assessment with deep convolutional neural networks | |

| Kundu et al. | No-reference quality assessment of tone-mapped HDR pictures | |

| Kang et al. | Convolutional neural networks for no-reference image quality assessment | |

| JP2018101406A (en) | Using image analysis algorithm for providing training data to neural network | |

| JP2018516412A5 (en) | ||

| Hu et al. | Blind quality assessment of night-time image | |

| WO2013177779A1 (en) | Image quality measurement based on local amplitude and phase spectra | |

| CN110827269B (en) | Crop growth change condition detection method, device, equipment and medium | |

| Gastaldo et al. | Machine learning solutions for objective visual quality assessment | |

| CN111415304A (en) | Underwater vision enhancement method and device based on cascade deep network | |

| CN108257117B (en) | Image exposure evaluation method and device | |

| CN116543433A (en) | Mask wearing detection method and device based on improved YOLOv7 model | |

| Yi et al. | No-reference quality assessment of underwater image enhancement | |

| CN115457614B (en) | Image quality evaluation method, model training method and device | |

| CN115457015A (en) | Image no-reference quality evaluation method and device based on visual interactive perception double-flow network | |

| Kalatehjari et al. | A new reduced-reference image quality assessment based on the SVD signal projection | |

| Gao et al. | Image quality assessment using image description in information theory | |

| Singh et al. | Performance Analysis of Conditional GANs based Image-to-Image Translation Models for Low-Light Image Enhancement | |

| Kottayil et al. | Learning local distortion visibility from image quality | |

| CN110751632B (en) | Multi-scale image quality detection method based on convolutional neural network | |

| Yin | Multipurpose image quality assessment for both human and computer vision systems via convolutional neural network | |

| Cloramidina et al. | High Dynamic Range (HDR) Image Quality Assessment: A Survey | |

| US20230063209A1 (en) | Neural network training based on consistency loss | |

| Pourebadi | A Deep Learning Approach for Blind Image Quality Assessment |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| | 121 | EP: the EPO has been informed by WIPO that EP was designated in this application | Ref document number: 16804582; Country of ref document: EP; Kind code of ref document: A1 |

| | ENP | Entry into the national phase | Ref document number: 20177034859; Country of ref document: KR; Kind code of ref document: A |

| | WWE | WIPO information: entry into national phase | Ref document number: 2016804582; Country of ref document: EP |

| | ENP | Entry into the national phase | Ref document number: 2017563173; Country of ref document: JP; Kind code of ref document: A |

| | NENP | Non-entry into the national phase | Ref country code: DE |