WO2011073990A1 - Method and system for enhancing an image - Google Patents

- Publication number

- WO2011073990A1 (international application PCT/IL2010/001071)

- Authority

- WO

- WIPO (PCT)

- Prior art keywords

- image

- images

- likelihood

- discrepancy

- pixel

Classifications

- G06T5/50—Image enhancement or restoration using two or more images, e.g. averaging or subtraction

- G01S13/46—Indirect determination of position data

- G01S13/56—Discriminating between fixed and moving objects or between objects moving at different speeds for presence detection

- G01S13/89—Radar or analogous systems specially adapted for specific applications for mapping or imaging

- G01S13/9027—Pattern recognition for feature extraction

- G01S13/904—SAR modes

- G01S7/295—Means for transforming co-ordinates or for evaluating data, e.g. using computers

- G06T5/20—Image enhancement or restoration using local operators

- G06T5/70—Denoising; Smoothing

- G06T7/00—Image analysis

- G06T2207/10044—Radar image

- G06T2207/30184—Infrastructure

Definitions

- This invention relates to image processing. More specifically, it relates to generating enhanced imagery based on a plurality of acquired images.

- Image processing and enhancement of images require different techniques, sometimes taken from other fields of technology.

- Decision-making full reinforcement operators and reinforcement learning are known algorithms that combine fuzzy logic and reinforcement learning.

- The images can be acquired with the help of Synthetic Aperture Radar (SAR).

- The plurality of acquired images can originate from one or more sources (e.g., the images can be acquired by one or more SAR systems during one or more periods of time).

- The method comprises grouping a plurality of acquired images, each comprising a common area of interest, into one or more groups, and processing the images within said one or more groups, wherein the processing comprises: i) for each given pixel within the common area of interest, calculating a likelihood of discrepancy between respective pixels of the images of said one or more groups, thus giving rise to likelihood of discrepancy values characterizing said given pixel; ii) generating a "likelihood of discrepancy matrix" that comprises the likelihood of discrepancy values and characterizes the likelihood of discrepancy for each pixel within the common area of interest; iii) for each of said one or more groups, generating an enhanced image while utilizing said likelihood of discrepancy matrix.

- Generating an enhanced image can comprise improving the contrast of at least one of said plurality of acquired images, reducing noise in at least one of said plurality of acquired images, and/or improving other values characterizing the quality of the respective image(s) and/or of information extracted therefrom.

- Each pixel in the enhanced image can be calculated as a weighted combination between respective pixels of the images in the group, wherein the weights of the respective pixels are defined by their respective values within the likelihood of discrepancy matrix.

- The method can further comprise: (a) grouping said enhanced images into one or more groups; (b) processing the images within said one or more groups, wherein said processing can comprise: i) for each given pixel within the common area of interest, calculating a likelihood of discrepancy between respective pixels of the images of said one or more groups, thus giving rise to likelihood of discrepancy values characterizing said given pixel; ii) generating a "likelihood of discrepancy matrix" that comprises the likelihood of discrepancy values and characterizes the likelihood of discrepancy for each pixel within the common area of interest; iii) for each of said one or more groups, generating an enhanced image while utilizing said likelihood of discrepancy matrix; (c) repeating steps (a) and (b) until a single enhanced image is created.
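
Steps (a) to (c) can be read as a reduction loop. The sketch below is a minimal illustration and not the patent's implementation. It makes several assumptions. Images are registered, equally sized 2-D lists of floats. `merge_group` is a hypothetical callable that stands in for steps i) to iii). An incomplete last group is padded by repeating an image, which is one reading of the "multiplying" of images discussed later for series whose size does not fit the grouping.

```python
from typing import Callable, List, Sequence

Image = List[List[float]]  # registered, equally sized 2-D image (assumption)


def reduce_series(images: Sequence[Image],
                  merge_group: Callable[[Sequence[Image]], Image],
                  group_size: int = 2) -> Image:
    """Group images and merge each group, repeating until one image remains.

    `merge_group` is a hypothetical stand-in for steps i)-iii): it receives
    one group of images and returns the enhanced image generated for it.
    """
    if not images:
        raise ValueError("at least one image is required")
    if group_size < 2:
        raise ValueError("group_size must be at least 2")
    level = list(images)
    while len(level) > 1:
        # Pad an incomplete last group by repeating the last image.
        remainder = len(level) % group_size
        if remainder:
            level.extend([level[-1]] * (group_size - remainder))
        # (a) group the current generation, (b) merge every group.
        level = [merge_group(level[k:k + group_size])
                 for k in range(0, len(level), group_size)]
    return level[0]
```

With eight images and groups of two, the loop runs three generations: four images, then two, then one. This is the same structure as Figure 5.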

- The method can further comprise selecting an image to be enhanced, wherein the pixel of the selected image is given additional weight in the weighted combination, proportional to the likelihood of discrepancy value.
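
The sketch below shows one way to read the weighted combination described above for a single group. The specific weights are an assumption and are not taken from the source. Likelihood of discrepancy values are assumed to lie in [0, 1]. Every image receives an equal share of `1 - L`, and the selected image receives the additional weight `L`. With this choice the weights sum to 1, and the extra weight is proportional to the likelihood of discrepancy. Where `L` is near 0 (no change), the group is simply averaged, which suppresses noise. Where `L` is near 1, the selected image's pixel dominates, so its objects are preserved.

```python
from typing import List, Sequence

Image = List[List[float]]


def weighted_combination(group: Sequence[Image], lod: Image,
                         selected: int) -> Image:
    """Combine one group pixel by pixel, biased toward the selected image.

    Hypothetical weights: each image gets (1 - L) / n and the selected image
    additionally gets L, where L is the pixel's likelihood of discrepancy.
    """
    n = len(group)
    if n < 2:
        raise ValueError("a group needs at least two images")
    if not 0 <= selected < n:
        raise IndexError("selected image is not in the group")
    out: Image = []
    for r, lod_row in enumerate(lod):
        row = []
        for c, likelihood in enumerate(lod_row):
            if not 0.0 <= likelihood <= 1.0:
                raise ValueError("likelihood of discrepancy must be in [0, 1]")
            base = (1.0 - likelihood) / n  # equal share for every image
            value = sum(base * img[r][c] for img in group)
            value += likelihood * group[selected][r][c]  # extra weight
            row.append(value)
        out.append(row)
    return out
```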

- There is further provided a system capable of generating an enhanced image based on a plurality of acquired images.

- The system comprises a processor operatively connected to an image input block and a processing parameters input block, wherein the image input block is configured to receive a plurality of acquired images, each comprising a common area of interest; the processing parameters input block is configured to receive processing parameters; and the processor is configured to group said plurality of acquired images into one or more groups and to process the images within said one or more groups, including at least the following: i) for each given pixel within the common area of interest, calculate a likelihood of discrepancy between respective pixels of the images of said one or more groups, thus giving rise to likelihood of discrepancy values characterizing said given pixel; ii) generate a "likelihood of discrepancy matrix" that comprises the likelihood of discrepancy values and characterizes the likelihood of discrepancy for each pixel within the common area of interest; iii) for each of said one or more groups, generate an enhanced image while utilizing said likelihood of discrepancy matrix.

- The system can further comprise a display, wherein said processor can be further configured to display said enhanced image(s).

- The processor can be further configured to perform at least the following: (a) group said enhanced images into one or more groups; (b) process the images within said one or more groups, including at least the following: i) for each given pixel within the common area of interest, calculate a likelihood of discrepancy between respective pixels of the images of said one or more groups, thus giving rise to likelihood of discrepancy values characterizing said given pixel; ii) generate a "likelihood of discrepancy matrix" that comprises the likelihood of discrepancy values and characterizes the likelihood of discrepancy for each pixel within the common area of interest; iii) for each of said one or more groups, generate an enhanced image while utilizing said likelihood of discrepancy matrix; (c) repeat steps (a) and (b) until a single enhanced image is created.

- For a given group, generating a "likelihood of discrepancy matrix" can comprise: (a) generating a likelihood of discrepancy matrix for at least one possible couple of images within the group; and (b) calculating an average likelihood of discrepancy matrix over all the likelihood of discrepancy matrices generated for said at least one possible couple in the group.
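
The averaging over couples can be sketched as follows. This assumes images are 2-D lists of floats and that `pair_lod` is a hypothetical function returning the likelihood of discrepancy matrix of one couple. By default every possible couple is used, which is n(n-1)/2 couples for n images. The optional `pairs` argument covers the case where only some couples are evaluated.

```python
from itertools import combinations
from typing import Callable, List, Optional, Sequence, Tuple

Image = List[List[float]]


def group_lod(group: Sequence[Image],
              pair_lod: Callable[[Image, Image], Image],
              pairs: Optional[Sequence[Tuple[int, int]]] = None) -> Image:
    """Average the couple-wise likelihood of discrepancy matrices of a group."""
    if pairs is None:
        pairs = list(combinations(range(len(group)), 2))  # all couples
    if not pairs:
        raise ValueError("at least one couple is required")
    rows, cols = len(group[0]), len(group[0][0])
    total = [[0.0] * cols for _ in range(rows)]
    for a, b in pairs:
        couple = pair_lod(group[a], group[b])
        for r in range(rows):
            for c in range(cols):
                total[r][c] += couple[r][c]
    return [[v / len(pairs) for v in row] for row in total]
```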

- The processing parameters can include at least one of the following: (a) an indication of a selected image to be enhanced; (b) a target size parameter, representing the size of an object of interest; (c) a pixel size parameter, representing the size of the area covered by a single pixel in an image.

- Generating a likelihood of discrepancy matrix can include: (a) computing one or more characteristics for each of said respective pixels of the images of said one or more groups, thus giving rise to computed characteristics; and (b) processing said computed characteristics to obtain normalized difference values among said respective pixels, which constitute the likelihood of discrepancy matrix with respect to the one or more characteristics.
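
The following is a minimal sketch of these two steps for one couple of images. The normalization used here, |c_a - c_b| / (|c_a| + |c_b|), is an assumption chosen because it maps every difference into [0, 1]. This passage does not state the normalization. `characteristic` is a hypothetical callable that returns a per-pixel characteristic map, such as local texture or local targetness.

```python
from typing import Callable, List

Image = List[List[float]]


def pairwise_lod(img_a: Image, img_b: Image,
                 characteristic: Callable[[Image], Image]) -> Image:
    """Likelihood of discrepancy of two images from one characteristic.

    (a) compute the characteristic for every pixel of both images;
    (b) normalize the per-pixel difference into [0, 1] (assumed scheme).
    """
    char_a = characteristic(img_a)
    char_b = characteristic(img_b)
    lod: Image = []
    for row_a, row_b in zip(char_a, char_b):
        row = []
        for va, vb in zip(row_a, row_b):
            scale = abs(va) + abs(vb)
            # Two zero-valued pixels show no discrepancy.
            row.append(abs(va - vb) / scale if scale > 0 else 0.0)
        lod.append(row)
    return lod
```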

- The characteristics include at least one of the following: (a) local targetness calculations; (b) local texture calculations; (c) local entropy calculations.

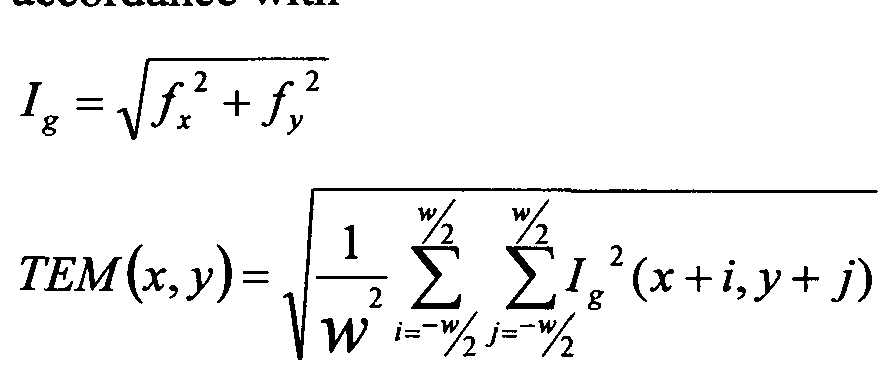

- $f_x$ and $f_y$ are the outputs of a Sobel operator applied to the pixels of each image; $x$ and $y$ denote the central pixel of a local texture sliding window; $i$ and $j$ identify the pixel displacement around the sliding window's central pixel $(x, y)$; and $w$ is the width and height of the local texture sliding window.

- $TEM_a$ is the local texture obtained for Image a within the respective group

- $TEM_b$ is the local texture obtained for Image b within said respective group

- $TEM_{aa} = (TEM_a)^2$

- $TEM_{bb} = (TEM_b)^2$.
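
The local-texture formula itself was lost with the figure above, so the following is only a sketch under stated assumptions. Neither assumed form below is confirmed by the source.

- The local texture TEM at (x, y) is taken to be the mean Sobel gradient magnitude $\sqrt{f_x^2 + f_y^2}$ over the w×w sliding window.
- Borders are handled by replicating edge pixels.
- The texture discrepancy is taken as $(TEM_a - TEM_b)^2 / (TEM_{aa} + TEM_{bb})$. This is one plausible normalized difference that uses the $TEM_{aa}$ and $TEM_{bb}$ terms defined above.

```python
import math
from typing import List, Tuple

Image = List[List[float]]

# Standard 3x3 Sobel masks for horizontal (x) and vertical (y) gradients.
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def _pixel(img: Image, y: int, x: int) -> float:
    """Read a pixel, replicating edge pixels outside the image."""
    y = min(max(y, 0), len(img) - 1)
    x = min(max(x, 0), len(img[0]) - 1)
    return img[y][x]


def sobel(img: Image) -> Tuple[Image, Image]:
    """Return (f_x, f_y), the Sobel responses at every pixel."""
    rows, cols = len(img), len(img[0])
    f_x = [[0.0] * cols for _ in range(rows)]
    f_y = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            for i in range(3):
                for j in range(3):
                    v = _pixel(img, y + i - 1, x + j - 1)
                    f_x[y][x] += SOBEL_X[i][j] * v
                    f_y[y][x] += SOBEL_Y[i][j] * v
    return f_x, f_y


def local_texture(img: Image, w: int = 3) -> Image:
    """Assumed TEM: mean gradient magnitude over a w x w sliding window."""
    if w < 1 or w % 2 == 0:
        raise ValueError("w must be a positive odd number")
    f_x, f_y = sobel(img)
    half = w // 2
    rows, cols = len(img), len(img[0])
    tem = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            total = 0.0
            for i in range(-half, half + 1):      # displacement around (x, y)
                for j in range(-half, half + 1):
                    total += math.hypot(_pixel(f_x, y + i, x + j),
                                        _pixel(f_y, y + i, x + j))
            tem[y][x] = total / (w * w)
    return tem


def texture_discrepancy(tem_a: Image, tem_b: Image) -> Image:
    """Assumed normalized difference (TEM_a - TEM_b)^2 / (TEM_aa + TEM_bb)."""
    out: Image = []
    for row_a, row_b in zip(tem_a, tem_b):
        row = []
        for a, b in zip(row_a, row_b):
            tem_aa, tem_bb = a * a, b * b
            denom = tem_aa + tem_bb
            row.append((a - b) ** 2 / denom if denom > 0 else 0.0)
        out.append(row)
    return out
```

Because texture values are non-negative, this assumed discrepancy stays within [0, 1]. It is 0 where both images have the same texture and 1 where only one of them has any texture.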

- Local targetness calculation can be computed in accordance with

- #i is the number of pixels forming a targetness sliding window

- $G$ is the G value computed for a pixel $i$ in accordance with:

- $T_{diff} = T_a \times (1 - T_b) + T_b \times (1 - T_a)$, wherein $T_a$ and $T_b$ stand for the targetness (T) of corresponding pixels in Image a and Image b, respectively.
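
The $T_{diff}$ expression behaves like a fuzzy exclusive-or: it is high when a pixel is target-like in exactly one of the two images. The following is a minimal sketch. It assumes the targetness values have already been computed and lie in [0, 1]; that range is an assumption and is not stated here.

```python
from typing import List

Image = List[List[float]]


def targetness_discrepancy(t_a: Image, t_b: Image) -> Image:
    """T_diff = T_a * (1 - T_b) + T_b * (1 - T_a), evaluated per pixel."""
    out: Image = []
    for row_a, row_b in zip(t_a, t_b):
        row = []
        for a, b in zip(row_a, row_b):
            if not (0.0 <= a <= 1.0 and 0.0 <= b <= 1.0):
                raise ValueError("targetness values are assumed to lie in [0, 1]")
            row.append(a * (1.0 - b) + b * (1.0 - a))
        out.append(row)
    return out
```

Consider a vehicle present in Image a but absent from Image b ($T_a = 1$, $T_b = 0$): it gives $T_{diff} = 1$. A building present in both images ($T_a = T_b = 1$) gives $T_{diff} = 0$.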

- Figure 1 is a schematic representation of a system for processing images in accordance with one embodiment of the invention.

- Figure 2 is an example of a SAR image and a corresponding enhanced image achieved in accordance with an embodiment of the invention.

- Figure 3 is a schematic example illustrating several temporal images of a scene.

- Figure 4 is a schematic illustration of a composite image based on the images of Figure 3, obtained in accordance with embodiments of the invention.

- Figure 5 is an exemplary diagram illustrating integration while creating an enhanced image, in accordance with an embodiment of the invention.

- Figure 6A is an exemplary diagram illustrating integration while creating an enhanced image, in accordance with one embodiment of the invention.

- Figure 6B is an exemplary diagram illustrating integration while creating an enhanced image, in accordance with another embodiment of the invention.

- Figure 7 is a schematic functional block diagram illustrating the main modules in a system configured to detect discrepancies between two images, in accordance with one embodiment of the invention.

- Figure 8 is a generalized flowchart illustrating one embodiment for rendering an

- Figure 9 is a generalized flowchart describing exemplary operations taken during feature extraction, according to one alternative embodiment of the invention.

- Figure 10 is a functional block diagram schematically illustrating the exemplary modules operative in the feature extraction module, according to the embodiment

- Figure 11 illustrates determining local texture, according to one embodiment of the invention.

- Figure 12 presents two illustrative masks, for horizontal and vertical gradients, to be used with a 3×3 sliding window according to one embodiment of the invention.

- Figure 13 is a functional block diagram schematically illustrating the local texture module, according to one embodiment of the invention.

- Figure 14 is a graph illustrating the higher reflection of objects (and hence targets) compared to the background.

- FIG. 1 is a schematic representation of a system 101 for processing SAR (Synthetic Aperture Radar) images.

- The system may provide enhanced images based on a plurality of acquired images.

- The system 101 may also be configured to detect discrepancies among SAR images.

- The system 101 obtains SAR images as input. It may obtain the input from one or more sources, such as an airborne radar 102 transmitting SAR images to the system 101, a spaceborne radar 103 transmitting SAR images thereto, a ground-positioned radar 104 (e.g., a radar carried by a vehicle) transmitting SAR images to the system, an archive or storage device 105 in which SAR images are stored, etc.

- The sources mentioned herein are non-limiting examples, and system 101 may obtain images from any other appropriate source.

- The system may obtain input from more than one source at a time.

- For example, one SAR image may be obtained from an airborne radar 102, another image from a ground-positioned radar 104, and additional images from archive 105.

- SAR images obtained as input are referred to hereinafter as “acquired SAR images” or shortly, “acquired images”.

- The system 101 can further receive processing parameters as input.

- The processing parameters can include, inter alia:

- a parameter defining the target size, i.e., the size of an object of interest. For example, a municipality can define a requirement to detect constructions of 5 meters by 5 meters (i.e., 25 square meters) or less, i.e., the target size is 5×5 meters;

- a parameter defining the pixel size, i.e., the size of the area covered by a single pixel in the image. For example, if the pixel size is 1 meter, the area covered by a single pixel is 1×1 meter.
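
The two parameters combine into a target size expressed in pixels. In the example above, a 5×5 meter target imaged with 1 meter pixels spans 5×5 pixels. The sketch below computes this value. Two choices in it are assumptions about how such a value might size the sliding windows, not details from the source: the result is rounded up, and the width is forced to be odd so that a sliding window has a central pixel.

```python
import math


def target_size_in_pixels(target_size_m: float, pixel_size_m: float) -> int:
    """Number of pixels spanned by one side of the target (rounded up)."""
    if target_size_m <= 0 or pixel_size_m <= 0:
        raise ValueError("sizes must be positive")
    return max(1, math.ceil(target_size_m / pixel_size_m))


def odd_window_width(target_size_m: float, pixel_size_m: float) -> int:
    """Hypothetical sliding-window width: the target size in pixels, made odd."""
    n = target_size_in_pixels(target_size_m, pixel_size_m)
    return n if n % 2 else n + 1
```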

- The system 101 uses a plurality of acquired images, each comprising a common area of interest, in order to generate one or more enhanced images 106.

- The system 101 can also detect discrepancies among a plurality of acquired images, each of the acquired images comprising a common area of interest.

- The output of system 101 may be provided by any available and applicable means, such as displaying the output on a screen 107, storing it in a storage device and/or archive 108 (which can be identical to or different from archive 105), and/or printing it with the aid of a printer 109, etc.

- Figure 2 is a non-limiting example of an acquired SAR image 201 and an enhanced image 202 that enhances image 201; enhanced image 202 is obtained in accordance with an embodiment of the invention. It is appreciated that enhanced image 202 is clearer than image 201. Image 202 was obtained as the result of a computation performed by system 101; hence it constitutes a "synthetic image", unlike image 201, which was acquired from some source. Note, though, that image 201, if obtained e.g. from an archive (such as archive 105), may be an image that was previously processed by the system. However, because it is presently obtained as input and is used for enhancing another image or is subject to further enhancement, it is considered an acquired image, regardless of its history.

- Figure 3 is a non-limiting schematic example illustrating several temporal images of a scene.

- The images are denoted 301 to 308.

- In this example, the images were all taken from above, e.g., from a standing-off or standing-in airplane, during a leg.

- However, this is non-limiting.

- The images in Figure 3 were not necessarily acquired by the same platform.

- For example, one or more images in a series could have been acquired by an airplane, while one or more other images in the same series could have been acquired by a satellite, etc.

- Furthermore, none, one or more, or even all of the images may be obtained directly from the radar, and likewise none, one or more, or even all of the images may be obtained from an archive where they are stored.

- The images represent registered temporal images of the same scene.

- Image 301 was taken first.

- Then image 302 was taken, followed by images 303, 304, ..., 308.

- Such temporal images constitute, hereinafter, a “series of temporal images”, or shortly, a “series”, and may also be referred to as a “sequence of temporal images", or shortly, as a “sequence”.

- The term “frame” is sometimes used hereinafter to refer to an image in a series. Registration of the images in a series can be done by any appropriate method known per se.

- Total overlap between the acquired images is not mandatory, as long as they share an overlapping portion (including, for example, a common area of interest).

- For example, image 301 was originally larger than the image illustrated in the figure, and so was image 302 (and the other images as well).

- The illustrated images represent only the overlapping portions, following registration and resampling performed by any appropriate method known per se.

- That is, the “series” includes only those portions of the images that overlap.

- Accordingly, the term “acquired image” further refers to all or part of the overlapping portion (for example, the common area of interest), after registration and resampling.
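
Once the images are registered and resampled, restricting them to their overlapping portion is a rectangle intersection. The sketch below assumes that registration has already placed every image on one common pixel grid. It also assumes that each image's position on that grid is known as a (top, left) offset. Both the offsets and the helper names are hypothetical.

```python
from typing import List, Sequence, Tuple

Image = List[List[float]]


def common_overlap(footprints: Sequence[Tuple[int, int, int, int]]
                   ) -> Tuple[int, int, int, int]:
    """Intersect (top, left, height, width) rectangles on the common grid."""
    top = max(f[0] for f in footprints)
    left = max(f[1] for f in footprints)
    bottom = min(f[0] + f[2] for f in footprints)
    right = min(f[1] + f[3] for f in footprints)
    if bottom <= top or right <= left:
        raise ValueError("the images have no overlapping portion")
    return top, left, bottom - top, right - left


def crop_to_overlap(images: Sequence[Image],
                    offsets: Sequence[Tuple[int, int]]) -> List[Image]:
    """Keep only the portion of each image that all images share."""
    footprints = [(top0, left0, len(img), len(img[0]))
                  for img, (top0, left0) in zip(images, offsets)]
    top, left, height, width = common_overlap(footprints)
    return [[row[left - left0:left - left0 + width]
             for row in img[top - top0:top - top0 + height]]
            for img, (top0, left0) in zip(images, offsets)]
```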

- Image 301 illustrates a building 309 and a road 310 leading thereto.

- The image also includes building 311, a neighbor of building 309, and a vehicle 312 (probably a truck) parked nearby.

- In image 302, the same buildings 309 and 311 as well as road 310 appear; however, vehicle 312 is missing therefrom.

- In addition, building 309 is partially occluded (by environmental phenomena and/or an artifact), and therefore part of it does not appear in the image.

- The occluded part 313 of the image is represented by a dotted region.

- Image 303 includes, in addition to building 309 and road 310, another building 314, while building 311 is missing (probably destroyed sometime between the times when images 302 and 303 were taken).

- In image 304 there is a vehicle 315 (e.g., a truck), positioned on road 310 near building 314.

- Image 305 shows that road 310 has now forked into a new road 316, at the end of which there is a new building 317.

- In image 306 there are three vehicles, 318, 319 and 320, parked near building 317.

- In image 307 a fence 321 appears around the buildings, bordering area 322 and delimiting it as a camp.

- The entrance 323 to the camp is via road 310.

- Four vehicles, denoted 324, 325, 326 and 327, are now parked near building

- Image 307, like image 302, also suffers from occlusion, represented by the dotted region, and hence it is unclear.

- Image 308 was probably taken a short time after image 307. It is also occluded. In addition, three of the vehicles appearing in image 307 (vehicles 324, 325 and 327) still appear therein, but vehicle 326 was probably driven away and hence it is missing therefrom.

- Items appearing only in a subset of the images in a series are referred to as “objects” or “object items”, while items that appear in all images of a series are referred to as “background” or “background items”.

- In the example of Figure 3, building 309 and road 310 are part of the background, because they appear in every image of the series.

- Buildings 311, 314 and 317, the vehicles appearing in some of the images (such as 312, 315, 318, 319, 320 and others), fence 321, etc. are objects.

- The term “item” appearing alone (i.e., not as part of a phrase such as “object item” or “background item”) refers to either object items or background items.

- That is, an item is anything that appears in one, two or any other subset of a series, including every image of the series.
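
The object/background distinction depends only on which images of the series contain an item. The following is a minimal sketch. It assumes each item's presence has already been determined and is given as the set of frame indices in which the item appears, from 0 (image 301) through 7 (image 308). The item names and presence data are illustrative and are drawn from the Figure 3 description.

```python
from typing import Dict, Iterable


def classify_items(presence: Dict[str, Iterable[int]],
                   series_length: int) -> Dict[str, str]:
    """Label each item 'background' if it appears in every frame, else 'object'."""
    all_frames = set(range(series_length))
    labels = {}
    for item, frames in presence.items():
        frames = set(frames)
        if not frames:
            raise ValueError(f"{item} does not appear in any frame")
        labels[item] = "background" if all_frames <= frames else "object"
    return labels


# Frames 0..7 stand for images 301..308 of Figure 3 (illustrative data).
presence = {
    "building 309": range(8),
    "road 310": range(8),
    "building 311": {0, 1},   # missing from image 303 onward
    "vehicle 312": {0},       # appears only in image 301
}
```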

- Upon viewing image 308, it may be required to enhance it, thus allowing the viewer to better distinguish the items included therein. For that purpose, the user can indicate that image 308 is to be enhanced.

- For example, image 202 enhances acquired image 201 in that its contrast is higher, i.e., its “objects-to-background ratio” is higher; higher contrast is likely to make the items in an image easier to distinguish.
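
This passage does not give the "objects-to-background ratio" as a formula. One simple reading, used here only as an illustration, is the mean intensity of object pixels divided by the mean intensity of background pixels. The object pixels are given by a hypothetical boolean mask.

```python
from typing import List

Image = List[List[float]]
Mask = List[List[bool]]


def objects_to_background_ratio(image: Image, object_mask: Mask) -> float:
    """Mean object intensity divided by mean background intensity (assumed)."""
    objects, background = [], []
    for row, mask_row in zip(image, object_mask):
        for value, is_object in zip(row, mask_row):
            (objects if is_object else background).append(value)
    if not objects or not background:
        raise ValueError("mask must contain both object and background pixels")
    mean_background = sum(background) / len(background)
    if mean_background == 0:
        raise ZeroDivisionError("background mean is zero")
    return (sum(objects) / len(objects)) / mean_background


mask = [[True, False], [False, False]]
acquired = [[10.0, 2.0], [2.0, 2.0]]
enhanced = [[12.0, 1.0], [1.0, 1.0]]
print(objects_to_background_ratio(acquired, mask))  # 5.0
print(objects_to_background_ratio(enhanced, mask))  # 12.0
```

Under this reading, the second image has the higher contrast.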

- Image 401 in Figure 4 illustrates a non-limiting example of the enhanced image enhancing image 308.

- The items appearing therein are similar to those appearing in image 308; however, they are clearer and easier to distinguish.

- An “enhanced image enhancing a specific acquired image” is a synthetic image whose contrast is higher than that of the specific acquired image, and all the objects appearing therein also appear in the acquired image and vice versa (i.e., all the objects appearing in the acquired image also appear in the synthetic image).

- The synthetic image and the specific acquired image are considered as “corresponding” to each other.

- Although the objects included in the enhanced image all appear in the acquired image, it should be appreciated that the enhanced image is based on a plurality of acquired images, including the corresponding acquired image.

- Figure 4 includes additional schematic images 402 and 403, that are based on processing the series of the temporal images of Figure 3.

- Image 403 includes the background of Figure 3's images as well as every object appearing in one or more thereof.

- An image resulting from the processing of a certain sequence of temporal images and including the joint background and all objects appearing in the images of the sequence is referred to hereinafter as an “inclusive image” of said certain sequence.

- Image 402, on the other hand, illustrates only those items appearing in every one of the series' images.

- An image resulting from the processing of a certain sequence of temporal images and including merely the joint background, i.e., the items appearing in all images of the sequence, is referred to hereinafter as a “background image” of said certain sequence.

- Object items do not appear in the background image.
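
The definitions of the inclusive and background images can be illustrated with a naive pixel-wise stand-in. This is not the likelihood-of-discrepancy procedure of the invention. It assumes registered images in which objects return more energy than the background, as Figure 14 illustrates. Under that assumption, a pixel-wise maximum over the series keeps every object, and a pixel-wise minimum keeps only what is present in all frames. Speckle and occlusion would make this stand-in unreliable on real SAR data.

```python
from typing import Callable, Iterable, List, Sequence

Image = List[List[float]]


def _pixelwise(series: Sequence[Image],
               reduce: Callable[[Iterable[float]], float]) -> Image:
    """Apply `reduce` across the series at every pixel position."""
    rows, cols = len(series[0]), len(series[0][0])
    return [[reduce(img[r][c] for img in series) for c in range(cols)]
            for r in range(rows)]


def naive_inclusive_image(series: Sequence[Image]) -> Image:
    """Stand-in for the inclusive image: keep the brightest return per pixel."""
    return _pixelwise(series, max)


def naive_background_image(series: Sequence[Image]) -> Image:
    """Stand-in for the background image: keep the weakest return per pixel."""
    return _pixelwise(series, min)
```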

- Each one of the acquired images in a series, and each enhanced image enhancing any one of them, represents a certain point in time.

- The background image, however, includes only constant, non-changing items, and therefore it does not correspond to any specific time point in the time range during which the series' images were taken.

- The inclusive image does not correspond to a certain time point either.

- Both the inclusive image and the background image are enhanced images.

- Figure 5 includes a diagram 501 illustrating integration while creating an enhanced image, in accordance with an embodiment of the invention.

- In this example, a series of eight acquired images forms the basis for rendering an enhanced image 516.

- The eight images are denoted by reference numerals 502, 503, 504, 505, 506, 507, 508 and 509.

- The acquired images are divided into groups, for example, groups of two, three, four, etc.

- Here, groups of two acquired images are used for rendering intermediate images, denoted 510, 511, 512 and 513.

- The intermediate images are enhanced images, as their contrast is potentially higher than the contrast of each of the two images they are based on.

- The intermediate images are likewise divided into groups, for example, groups of two, three, four, etc.

- Here, groups of two intermediate images are used for rendering another generation of intermediate images, 514 and 515, whose contrast is higher than the contrast of each of the intermediate images they are based on (510, 511, 512 and 513), and therefore also higher than the contrast of each of the acquired images those intermediate images are based on.

- Finally, the intermediate images (images 514 and 515 in the example of Figure 5) are used for rendering the final enhanced image 516, whose contrast is even higher (in other cases, more than two intermediate images, e.g. three, four, etc., can be used for rendering the final enhanced image).

- Thus, the series of acquired images is integrated into a single enhanced image.

- Images 510 and 511 directly relate to image 514, images 506 and 507 directly relate to image 512, etc.

- Images that are situated more than one generation before an enhanced image relate to it indirectly.

- For example, image 502 indirectly relates to image 514, and so does image 505.

- Image 502 also indirectly relates to image 516. Images relating to each other are considered relatives, whether direct relatives or indirect relatives.
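
The direct and indirect relationships of Figure 5 form a tree that can be walked programmatically. The sketch below keeps the pairings stated in the text: 506 and 507 into 512, and 510 and 511 into 514. The remaining pairings assume that consecutive images are paired. That assumption agrees with the statement that images 502 and 505 both relate indirectly to image 514.

```python
from typing import Dict, Set, Tuple

# Each enhanced image -> the images it was rendered from (Figure 5;
# pairings other than 512 and 514 are assumed to be consecutive images).
FIGURE_5_SOURCES: Dict[int, Tuple[int, ...]] = {
    510: (502, 503), 511: (504, 505), 512: (506, 507), 513: (508, 509),
    514: (510, 511), 515: (512, 513),
    516: (514, 515),
}


def direct_relatives(image: int, sources=FIGURE_5_SOURCES) -> Set[int]:
    """Images exactly one generation before `image`."""
    return set(sources.get(image, ()))


def all_relatives(image: int, sources=FIGURE_5_SOURCES) -> Set[int]:
    """Every earlier image that `image` is based on, directly or indirectly."""
    found: Set[int] = set()
    stack = list(sources.get(image, ()))
    while stack:
        current = stack.pop()
        if current not in found:
            found.add(current)
            stack.extend(sources.get(current, ()))
    return found


def indirect_relatives(image: int, sources=FIGURE_5_SOURCES) -> Set[int]:
    """Images more than one generation before `image`."""
    return all_relatives(image, sources) - direct_relatives(image, sources)
```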

- the invention is not limited, neither by this number (eight) nor by this characteristic (power of two), and any number of acquired images can compose the series, as long as the number is larger than one.

- the number is not a power of two

- the groups of acquired images are groups of two acquired images.

- where the groups of acquired images are groups of more than two (e.g. three, four, five, etc.) acquired images, the same principles apply; only in such cases, more than one image in the series can be multiplied, or one image can be multiplied more than once.

- the acquired images comprising the series may be divided into asymmetrical groups.

- one group may consist, for example, of two acquired images whereas another group may consist, for example, of three acquired images, etc.
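The generation-by-generation integration of Figure 5, including groups larger than two and series whose length is not a power of two, can be sketched as a repeated reduction. This is a minimal sketch under stated assumptions, not the patent's implementation: the function name is hypothetical, the `fuse` callback stands in for whatever LD-based combination renders each enhanced image (a plain mean is used only for illustration), and padding an incomplete group by repeating its last image is just one assumed reading of "multiplied" images.

```python
from typing import Callable, List, Sequence, Tuple

import numpy as np


def integrate_series(
    images: Sequence[np.ndarray],
    fuse: Callable[[List[np.ndarray]], np.ndarray],
    group_size: int = 2,
) -> Tuple[np.ndarray, List[int]]:
    """Fuse a series of co-registered images into one image, generation by generation.

    Returns the final image and the number of images in each generation,
    starting with the acquired series itself.
    """
    if len(images) < 2:
        raise ValueError("the series must contain more than one image")
    if group_size < 2:
        raise ValueError("each group must contain at least two images")
    generation = list(images)
    sizes = [len(generation)]
    while len(generation) > 1:
        next_generation = []
        for start in range(0, len(generation), group_size):
            group = generation[start:start + group_size]
            # Assumption: an incomplete group is padded by repeating its last image.
            while len(group) < group_size:
                group.append(group[-1])
            next_generation.append(fuse(group))
        generation = next_generation
        sizes.append(len(generation))
    return generation[0], sizes


if __name__ == "__main__":
    # Eight constant images fused pairwise with a plain mean (illustration only).
    series = [np.full((4, 4), float(k)) for k in range(8)]
    final, sizes = integrate_series(series, lambda group: np.mean(group, axis=0))
    print(sizes)        # [8, 4, 2, 1]
    print(final[0, 0])  # 3.5
```

With eight images and groups of two, the generation sizes mirror Figure 5 (eight acquired images, four intermediate images, two intermediate images, one final image).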

- an enhanced image is (directly and/or indirectly) based on two or more images.

- the term "two or more" (or "more than one") is referred to hereinafter as "multiple", and therefore an enhanced synthetic image, which is based (directly and/or indirectly) on two or more acquired images, constitutes a "multi look" image, shortly referred to as ML.

- the term multi look is presently used for describing an image which results from processing two or more images. In contrast, as illustrated further below, an alternative computation may be performed according to the invention.

- an enhanced synthetic image obtained in accordance with the invention is an MT image and an ML image, and therefore it constitutes an MTML image.

- an inclusive image is denoted $\mathrm{MTML}_{max}$.

- a background image is denoted $\mathrm{MTML}_{background}$ or, shortly, $\mathrm{MTML}_{back}$.

- $\mathrm{MTML}_i$ is an enhanced image whose objects are identical to the objects included in one of the series' images (the i'th image), while the background is also preserved as in the i'th image's background, but with higher contrast compared thereto.

- Such an enhanced image, enhancing the i'th image, is referred to hereinafter as $\mathrm{MTML}_i$.

- the $\mathrm{MTML}_i$ images enhancing the series' images are $\mathrm{MTML}_1, \mathrm{MTML}_2, \ldots, \mathrm{MTML}_n$.

- the convention demonstrated here for numbering the images (1, ..., n) is non-limiting, and any other applicable convention may be used, such as 0, 1, ..., n-1, or a, b, etc.

- the i'th image constitutes a "selected image" (selected by a user to be enhanced) or a "selected acquired image", while the other images in the series constitute "other images" or "other acquired images".

- An enhanced image relating to the selected image constitutes a "new selected image" or "enhanced selected image", while enhanced images relating only to other images (but not to the selected image) constitute "new other images" or "enhanced other images".

- since each intermediate image is directly related to two images of the previous generation, it can also be considered a two-look image (2-Look image) of those images. In cases where more than two images were used for rendering an intermediate image, it can be considered a three-look image, four-look image, etc., according to the number of images used for rendering it.

- the rendering of enhanced images, including the intermediate images and the final enhanced image, is based on likelihood of discrepancy (shortly referred to as "LD") computations amongst the group of images to which the enhanced image directly relates.


- rendering an enhanced image, whether an intermediate image or a final enhanced image, is based on discrepancy detection amongst the group of images to which the enhanced image directly relates.

- the process below is described with reference to groups of two images. However, in cases where the group comprises more than two images, the calculations can be performed, for example, for at least one couple of images in the group. In such cases, an average value can then be calculated, for example, over the values obtained by the process below for the at least one couple of images in the group.
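For a group of more than two images, the averaging described above can be sketched as follows. This is an assumption-laden illustration: the function name is hypothetical, `pair_ld` is a placeholder for whichever pairwise LD computation is used, and the sketch averages over every couple in the group, whereas the text only requires at least one couple.

```python
from itertools import combinations
from typing import Callable, Sequence

import numpy as np


def group_ld(
    images: Sequence[np.ndarray],
    pair_ld: Callable[[np.ndarray, np.ndarray], np.ndarray],
) -> np.ndarray:
    """Average a pairwise LD map over every couple of images in a group."""
    if len(images) < 2:
        raise ValueError("a group needs at least two images")
    # One LD map per unordered couple (a, b); the group LD is their pixel-wise mean.
    maps = [pair_ld(a, b) for a, b in combinations(images, 2)]
    return np.mean(maps, axis=0)
```

Restricting `combinations` to a subset of couples (for example, only couples that include the selected image) would be an equally valid reading of "at least one couple".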

- Figure 7 is a functional block diagram illustrating the main modules in a system 701 configured to enhance images and detect discrepancies amongst two images, in accordance with one embodiment of the invention.

- two images, 702 and 703 are fed into a "feature extraction module” 704.

- for each pixel, a vector is created representing the pixel's characteristics in its surrounding environment.

- the vector can include a value representing the variability of the pixel, compared to the pixels surrounding it.

- for each pixel, the output of the feature extraction module 704 is two vectors, 705 and 706, corresponding to the two images 702 and 703.

- each vector constitutes a "feature vector”.

- reference numeral 707 denotes a comparator coupled to the feature extraction module 704.

- the comparator obtains the two feature vectors 705 and 706, performs, for example, a context based feature comparison, and computes the difference therebetween.

- the difference is fed into a non-linear filter 708 that is coupled to the comparator 707.

- the filter 708 is used for normalizing the calculated differences to values in the range of 0-1, wherein the value 0 indicates no change, while 1 indicates a significant change. It is noted though that this convention is non-limiting and another embodiment can use the opposite convention, wherein 0 indicates a significant change while 1 stands for no change. It is also possible to use a different range.

- the LD matrix is indicative of the level of change in a sliding window examining the two images 702 and 703. If the normalized difference of most pixels in the window is high, LD will be high, while if most pixels in the window are characterized by a low normalized difference, LD will be low. As described further below, the window's size is variable.
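The chain of Figure 7 (feature extraction 704, comparator 707, non-linear filter 708, sliding-window LD) can be sketched for a single-element feature vector. Every concrete choice here is an assumption for illustration only: the feature is |pixel − mean of its 3x3 neighbourhood| (one possible "variability compared to surrounding pixels"), the non-linear filter is 1 − exp(−d/scale), and LD is the mean normalized difference over a w×w window. The 0 = no change convention follows the text; function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def box_mean(img: np.ndarray, w: int) -> np.ndarray:
    """Mean over a w x w window centred on each pixel (edges replicated)."""
    if w % 2 == 0:
        raise ValueError("window size must be odd")
    padded = np.pad(np.asarray(img, dtype=float), w // 2, mode="edge")
    return sliding_window_view(padded, (w, w)).mean(axis=(-2, -1))


def variability_feature(img: np.ndarray, w: int = 3) -> np.ndarray:
    """Hypothetical one-element feature vector: |pixel - mean of its neighbourhood|."""
    return np.abs(np.asarray(img, dtype=float) - box_mean(img, w))


def ld_matrix(img_a: np.ndarray, img_b: np.ndarray, w: int = 5, scale: float = 1.0) -> np.ndarray:
    """LD map in [0, 1): 0 means no change, values near 1 mean significant change."""
    fa = variability_feature(img_a)           # feature extraction (704)
    fb = variability_feature(img_b)
    diff = np.abs(fa - fb)                    # comparator (707)
    normalized = 1.0 - np.exp(-diff / scale)  # assumed non-linear filter (708)
    return box_mean(normalized, w)            # sliding-window aggregation
```

Because the assumed feature measures local variability rather than raw intensity, a uniform brightness offset between the two images yields zero LD, while a localized new object raises LD around its edges.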

- FIG. 8 is presented, illustrating one embodiment for rendering an MTML image, based on a series of k images, in accordance with one embodiment of the invention.

- k is a power of two.

- however, this is non-limiting.

- image quality is assessed for the k images in the series, while in 802 registration and resampling are performed, bringing all the series' images to the current image plane.

- enhanced images' rendering can begin.

- couples of one generation are processed in order to render enhanced images of the next generation, then forming basis for rendering the next generation and so on (see, e.g., 803 and 804).

- the groups of acquired images are couples of acquired images.

- the groups of acquired images are groups of more than two (e.g. three, four, five, etc.) acquired images, the same principles apply, with some modifications as detailed above.

- Figure 9 is a flowchart describing exemplary operations taken during feature extraction 806, according to one alternative embodiment of the invention, while Figure 10 is a block diagram schematically illustrating exemplary modules operative in the feature extraction module 704, according to the embodiment.

- the feature extraction's products are later used for determining likelihood of discrepancy, as was illustrated with reference to Figure 8.

- three characteristics are determined, namely local texture 901, targetness 902 and local entropy 903. These characteristics are computed by the local texture module 1001, the targetness module 1002 and the local entropy module 1003 illustrated in Figure 10. It is noted, though, that alternative embodiments may apply only a subset of the modules illustrated in Figure 10, and hence it is not obligatory to compute all the characteristics mentioned herewith (in Figure 9). According to the invention, any one or two of the characteristics can be computed. In general, therefore, one or more characteristics can be computed.

- Each characteristic is computed substantially for every pixel in the common area of interest in the image.

- the pixel presently analyzed for computing its characteristics constitutes an "analyzed pixel".

- the analyzed pixel is covered by a sliding window, with the analyzed pixel at the window's center. It is explained, in this connection, that different characteristics may require different sliding windows for their computation.

- analysis may include searching for something specific, namely a "target" in the images.

- 5x5 is only a non-limiting example, and any other window size can be defined as required, such as 3x3, 9x9, etc.

- the sliding window in the first case (5x5 meters) would be at least 5x5 pixels (25 pixels), while in the second case (3x3 meters) it would be at least 3x3 pixels (i.e., 9 pixels).

- the window's size is affected, as was previously explained, by the target's expected size and by the pixel size.

- the described embodiment requires a window whose size is measured by an odd number of pixels; however, this is non-limiting, and other embodiments may allow windows having an even number of pixels as their width and height (e.g. 4x4, 10x10, etc.).
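The window-size rule above can be made concrete. This sketch assumes, as the 5x5-meter example suggests, that the window side in pixels is the target size divided by the pixel size, rounded up, and then bumped to the next odd number when an odd window (with a well-defined central analyzed pixel) is required. The function name and the rounding policy are assumptions.

```python
import math


def window_size_pixels(target_size_m: float, pixel_size_m: float, odd: bool = True) -> int:
    """Smallest square window side (in pixels) that spans the expected target."""
    if target_size_m <= 0 or pixel_size_m <= 0:
        raise ValueError("sizes must be positive")
    side = math.ceil(target_size_m / pixel_size_m)
    if odd and side % 2 == 0:
        side += 1  # keep a central (analyzed) pixel in the window
    return side
```

For a 5 m target at 1 m per pixel this gives a 5x5 window, and for a 3 m target a 3x3 window, matching the two cases in the text; a 4 m target gives 5x5 with `odd=True` and 4x4 with `odd=False`.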

- Figure 11 illustrates how local texture can be determined in 901 according to one embodiment of the invention. It is noted that the embodiment presented with reference to Figure 11 can be implemented, for example, by the local texture module

- the sliding window constitutes a "local texture sliding window".

- the local texture is calculated using the gradient of the image intensity at each point and the Sobel operator used for calculating it, both of which are familiar to those versed in the art of image processing. It is to be noted that this calculation of the local texture is merely one example, and other methods may be used for calculating the local texture.

- a method for obtaining the masks is presented, e.g., in Linda Shapiro and George Stockman's "Computer Vision".

- the output of the Sobel operator is two functions, namely $f_x$ for the horizontal gradient and $f_y$ for the vertical gradient. These functions are combined to obtain the gradient image $I_g$ in accordance with Equation 1:

- Image $I_g$ forms the input for the convolution operation presented in Equation 2:

- TEM is the Texture Energy Measure; according to the embodiment, it is computed for each pixel in every one of the n images in the series;

- x and y stand for the central pixel of the sliding window, and i and j identify the pixel displacement around the local texture sliding window's central pixel (x, y), that is, the analyzed pixel;

- w is the local texture sliding window's width and height.

- the result of operating Equation 2 on each pixel in every image is a set of texture energy images.
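Equations 1 and 2 themselves are not reproduced in this excerpt, so the following is only a sketch under explicit assumptions: $I_g$ is taken to be the usual gradient magnitude $\sqrt{f_x^2 + f_y^2}$, and TEM is taken to be the sum of $|I_g|$ over the w×w local texture sliding window (a Laws-style energy). The Sobel masks are applied as a 3x3 correlation with edge replication, using NumPy only; all names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Standard 3x3 Sobel masks (horizontal and vertical gradients).
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T


def filter3x3(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """3x3 correlation with edge replication (no SciPy dependency)."""
    windows = sliding_window_view(np.pad(img, 1, mode="edge"), (3, 3))
    return np.einsum("ijkl,kl->ij", windows, kernel)


def texture_energy(img: np.ndarray, w: int = 5) -> np.ndarray:
    """Texture energy image: windowed sum of the gradient magnitude (assumed form)."""
    if w % 2 == 0:
        raise ValueError("window size must be odd")
    img = np.asarray(img, dtype=float)
    fx = filter3x3(img, SOBEL_X)  # horizontal gradient f_x
    fy = filter3x3(img, SOBEL_Y)  # vertical gradient f_y
    ig = np.hypot(fx, fy)         # assumed Equation 1: I_g = sqrt(f_x^2 + f_y^2)
    windows = sliding_window_view(np.pad(ig, w // 2, mode="edge"), (w, w))
    return np.abs(windows).sum(axis=(-2, -1))  # assumed Equation 2
```

A flat image yields zero texture energy everywhere, while a step edge yields energy only in windows that overlap the edge.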

- For each pixel of the texture energy images, normalized differences are processed in accordance with Equation 3:

- R is the set of normalized texture differences

- $TEM_a$ is the result obtained by operating Equation 1 on Image a;

- $TEM_b$ is the result obtained by operating Equation 1 on Image b;

- $TEM_{aa}$ is $(TEM_a)^2$;

- $TEM_{bb}$ is $(TEM_b)^2$.

- when the two images are identical, $TEM_a = TEM_b$, hence $TEM_{aa} = TEM_{bb} = TEM_{ab}$, wherein $TEM_{ab}$ is $(TEM_a \times TEM_b)$, and therefore R equals zero.

- the bigger the difference between the images, the smaller the value of $TEM_{ab} = TEM_a \times TEM_b$, and therefore R approaches 1.

- R is the normalized local texture difference, or the likelihood of discrepancy in texture. It reflects the probability that a discrepancy exists between a single pixel within one image and the corresponding pixel in the second image.
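Equation 3 is not reproduced in this excerpt. One normalized difference with exactly the behaviour described (R = 0 when $TEM_a = TEM_b$, R approaching 1 as the product $TEM_{ab}$ becomes small relative to the squares) is $R = 1 - 2\,TEM_{ab} / (TEM_{aa} + TEM_{bb})$, which equals $(TEM_a - TEM_b)^2 / (TEM_a^2 + TEM_b^2)$ and lies in [0, 1] for non-negative energies. The sketch below uses that form as an assumption, not as the patent's formula; the function name is hypothetical.

```python
import numpy as np


def normalized_texture_difference(tem_a, tem_b, eps: float = 1e-12):
    """Assumed form of Equation 3: R = 1 - 2*TEM_ab / (TEM_aa + TEM_bb)."""
    tem_a = np.asarray(tem_a, dtype=float)
    tem_b = np.asarray(tem_b, dtype=float)
    tem_ab = tem_a * tem_b
    tem_aa = tem_a ** 2
    tem_bb = tem_b ** 2
    denom = tem_aa + tem_bb
    # Where both energies vanish, the pixels are treated as identical (R = 0).
    return np.where(denom > eps, 1.0 - 2.0 * tem_ab / np.maximum(denom, eps), 0.0)
```

For example, energies of 1 and 2 give R = 1 − 4/5 = 0.2, and any non-zero energy compared against zero gives R = 1.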

- $G_a$ and $G_b$ are the G values computed in accordance with Equation 4 for pixels in Image a and Image b, respectively;

- $I$ represents the reflection of a pixel, $I > 0$;

- $A$ is a constant.

- for example, $A = 0.3$.

- the extended neighborhood of a pixel covers three times the target size.

- the extended neighborhood is larger in size than the targetness sliding window size as well as the expected target size.

- Equation 4 is a normalizing function, transferring values into a 0-1 range.

- although Equation 4 was presented in connection with Equation 5, it can be used elsewhere as well.

- Equation 1 may form part of the feature extraction module 704, while Equation 2 may form part of the comparator 707.

- the texture energy measure is computed, e.g., in accordance with Equation 1.

- the set of normalized texture differences is processed, e.g., in accordance with Equation 2.

- Figure 13 is a block diagram schematically illustrating the local texture module

- 1301 is the mask generator wherein masks are generated.

- 1302 is the Sobel operator.

- 1303, a texture energy measure module, is coupled to the Sobel operator for obtaining $f_x$ and $f_y$ therefrom. In this module the texture energy measure is computed (see also 1103).

- 1304 is a normalized texture differences module. It is coupled to the texture energy measure module and is configured to process a set of normalized texture differences (see 1104).

- the sliding window used for targetness computation is not necessarily identical to the sliding window used for local texture computation.

- the sliding window respective of targetness computation constitutes a "targetness sliding window".

- the targetness sliding window's size is frequently smaller than the target size and therefore smaller than the local texture sliding window's size. The basis of determining the targetness sliding window's size will be described below.

- FIG. 14 graphically represents the function that normalizes the target's reflection into a 0-1 range, wherein black (low reflection) pixels are represented by 0 while white pixels (higher reflection) are represented by 1. It should be understood that such normalization can be done, for example, in accordance with Equation 4, as appears above.

- the targetness can be computed, e.g., in accordance with Equation 6:

- n is the number of pixels forming the targetness sliding window, and T stands for the targetness value.

- the reinforcement operator in Equation 6 is a fuzzy logic operator characterized as follows: when all inputs are high, e.g. all input values $G_j$ are higher than 0.5 in the normalized range (0, 1), the operator generates a value of T higher than any of the input values. When all inputs are low, e.g. lower than 0.5, the operator generates a value of T lower than any of the input values $G_j$. In general, the full reinforcement operator strengthens high-value pixels and weakens low-value pixels.

- the targetness sliding window size is usually a constant 3x3 pixels. Larger window sizes, such as 5x5 or 7x7 pixels, can be considered, but the 3x3 window is more efficient and is therefore usually employed. However, the window size should always be smaller than the smallest target of interest. For example, if the smallest target of interest is 4x4 pixels, then the targetness sliding window size will preferably be 3x3. If the smallest target is 5x5 pixels, the targetness sliding window size will preferably be 3x3, although 4x4 may be considered as well.

- since T represents the targetness value of a certain pixel, it can be computed separately for each pixel in the image. Furthermore, for two images a and b, $T_a$ represents the targetness value of the certain pixel within image a, while $T_b$ represents the targetness value of the corresponding pixel in image b.
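Equation 6 is not reproduced in this excerpt. A standard fuzzy full reinforcement operator with exactly the properties listed above is $T = \prod_j G_j / (\prod_j G_j + \prod_j (1 - G_j))$: all inputs above 0.5 push T above the largest input, all inputs below 0.5 push it below the smallest. The sketch assumes that form, evaluates it in the algebraically identical log-odds form to avoid underflow, and applies it over a 3x3 targetness sliding window to an already-normalized reflection map G. Names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def full_reinforcement(values, axis: int = -1, eps: float = 1e-9):
    """Assumed form of Equation 6: prod(G) / (prod(G) + prod(1 - G)).

    Computed as a logistic of the summed log-odds, which is algebraically identical.
    """
    g = np.clip(np.asarray(values, dtype=float), eps, 1.0 - eps)
    log_odds = np.sum(np.log(g) - np.log1p(-g), axis=axis)
    return 1.0 / (1.0 + np.exp(-log_odds))


def targetness(g_map: np.ndarray, w: int = 3) -> np.ndarray:
    """Targetness T per pixel from the normalized reflections G in a w x w window."""
    if w % 2 == 0:
        raise ValueError("window size must be odd")
    g_map = np.asarray(g_map, dtype=float)
    windows = sliding_window_view(np.pad(g_map, w // 2, mode="edge"), (w, w))
    return full_reinforcement(windows.reshape(*g_map.shape, w * w))
```

The operator is neutral at 0.5: a window whose inputs are symmetric around 0.5 (for example 0.7 and 0.3) yields T = 0.5.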

- the targetness likelihood of discrepancy, denoted $T_{diff}$, can be calculated, e.g., in accordance with Equation 7:

- $T_{diff} = T_a \times (1 - T_b) + T_b \times (1 - T_a)$
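Equation 7 is a fuzzy exclusive-or: $T_{diff}$ is high only when a pixel looks target-like in exactly one of the two images. A direct NumPy transcription (function name hypothetical) is:

```python
import numpy as np


def targetness_discrepancy(t_a, t_b):
    """Equation 7: T_diff = T_a * (1 - T_b) + T_b * (1 - T_a), element-wise."""
    t_a = np.asarray(t_a, dtype=float)
    t_b = np.asarray(t_b, dtype=float)
    return t_a * (1.0 - t_b) + t_b * (1.0 - t_a)
```

A target present in both images (1, 1) or absent from both (0, 0) gives 0, a target in only one image gives 1, and two undecided pixels (0.5, 0.5) give 0.5.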

- the local entropy between two images, image a and image b, is calculated in accordance with Equation 8:

- $C_{xy}$ is the covariance matrix of image x and image y. It is to be noted that according to the last equation:

- it is also possible to operate a reinforcement operator on the normalized eigenvalues $p_k(i,j)$ according to the following equation:

- Each pixel within H(i,j) represents the local entropy value of the corresponding pixel. The higher the value is, the higher the likelihood of discrepancy is.

- Each pixel within OH(i,j) represents the likelihood of discrepancy based on the normalized eigenvalues of the covariance matrix $C_{xy}$. The higher the value is, the higher the likelihood of discrepancy is.
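The excerpt names the covariance matrix $C_{xy}$, its normalized eigenvalues $p_k(i,j)$ and the local entropy map $H(i,j)$, but Equation 8 itself is not reproduced. The sketch below assumes one common reading: inside a w×w window around each pixel, build the 2x2 covariance of the paired pixel values of image a and image b, normalize its two eigenvalues to sum to one, and take the Shannon entropy in bits. Identical or linearly related windows have one zero eigenvalue and give H = 0; unrelated windows push H toward 1 bit. The window size, base-2 logarithm and names are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_entropy(img_a: np.ndarray, img_b: np.ndarray, w: int = 5, eps: float = 1e-12) -> np.ndarray:
    """Entropy (bits) of the normalized eigenvalues of the local 2x2 covariance C_xy."""
    if w % 2 == 0:
        raise ValueError("window size must be odd")
    pad = w // 2
    wa = sliding_window_view(np.pad(np.asarray(img_a, dtype=float), pad, mode="edge"), (w, w))
    wb = sliding_window_view(np.pad(np.asarray(img_b, dtype=float), pad, mode="edge"), (w, w))
    xa = wa.reshape(*img_a.shape, -1)
    xb = wb.reshape(*img_b.shape, -1)
    xa = xa - xa.mean(axis=-1, keepdims=True)  # centre each window
    xb = xb - xb.mean(axis=-1, keepdims=True)
    n = xa.shape[-1]
    c_aa = (xa * xa).sum(axis=-1) / n
    c_bb = (xb * xb).sum(axis=-1) / n
    c_ab = (xa * xb).sum(axis=-1) / n
    # Per-pixel covariance matrices [[c_aa, c_ab], [c_ab, c_bb]], shape (H, W, 2, 2).
    cov = np.stack([np.stack([c_aa, c_ab], -1), np.stack([c_ab, c_bb], -1)], -2)
    eig = np.clip(np.linalg.eigvalsh(cov), 0.0, None)
    total = eig.sum(axis=-1, keepdims=True)
    p = np.where(total > eps, eig / np.maximum(total, eps), 0.0)  # p_k(i, j)
    logp = np.log2(np.where(p > 0, p, 1.0))  # treats 0 * log(0) as 0
    return -(p * logp).sum(axis=-1)
```

Flat windows in both images have no variance and are assigned H = 0 by this sketch.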

- the exemplary embodiments of the invention presented above compute three characteristics for the purpose of detecting discrepancies between images, namely local texture, entropy and targetness. As noted above, and as a person of ordinary skill in the art can appreciate, fewer, additional and/or other characteristics may be utilized for that purpose.

- the overall likelihood of discrepancy, LD, at each pixel is computed by combining the LD values previously calculated for the different characteristics. For example, when combining local texture and targetness, the overall LD is computed by combining the local texture difference R (see Equation 3 or Equation 5 above) and the local targetness difference $T_{diff}$ (see Equation 7 above), e.g. according to Equation 9 below:

- Equation 9 in fact represents a particular case of Equation 6 for two variables only, namely R and $T_{diff}$.

- where more than two characteristics are combined, Equation 6 above should be used, in which case n is the actual number of characteristics employed to assess the likelihood of discrepancy, LD.
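Since Equation 9 is described as the two-variable case of Equation 6, the combination can be sketched with the same assumed full reinforcement form used for targetness: with two inputs it reads $LD = R\,T_{diff} / (R\,T_{diff} + (1 - R)(1 - T_{diff}))$, and it extends to any number of per-characteristic LD maps. The operator's form is an assumption (Equations 6 and 9 are not reproduced here), and the function name is hypothetical.

```python
import numpy as np


def overall_ld(*ld_maps, eps: float = 1e-9):
    """Combine per-characteristic LD maps with the assumed full reinforcement form.

    With two inputs R and T_diff this is R*T / (R*T + (1 - R)*(1 - T)).
    """
    if not ld_maps:
        raise ValueError("at least one LD map is required")
    stacked = np.clip(np.stack([np.asarray(m, dtype=float) for m in ld_maps]), eps, 1.0 - eps)
    pos = np.prod(stacked, axis=0)        # evidence for a discrepancy
    neg = np.prod(1.0 - stacked, axis=0)  # evidence against a discrepancy
    return pos / (pos + neg)
```

Two agreeing high inputs (0.9 and 0.8) reinforce each other to about 0.97, two low inputs suppress each other, and conflicting inputs symmetric about 0.5 (0.6 and 0.4) cancel to 0.5.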

- an intermediate enhanced image can be calculated, for example according to Equation 10:

- $ml2 = LD \cdot image_a + (1 - LD) \cdot (image_a + image_b) / 2$;

- $ml2$ is the constrained average of the two input images, $image_a$ and $image_b$;

- $image_a$ is the selected image, or the enhanced selected image if such an image is part of the group of images corresponding to the calculated LD.

- the intermediate enhanced image ml2 is a weighted combination between the constrained mean of the two images and image a (which, as noted above, is the selected image or the enhanced selected image if such image is part of the group of images corresponding to the calculated LD).

- $image_a$ is considered a leading image on which the enhancement is based. In the calculation described above, the higher the LD value, the higher the weight of the leading image.
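Equation 10 can be applied per pixel with an LD map of the same shape as the images. In this sketch (function name hypothetical), where the two images agree (LD near 0) the output approaches their two-look mean; where they disagree (LD near 1) the leading image's pixel is kept. Clipping LD to [0, 1] is a defensive assumption.

```python
import numpy as np


def constrained_average(image_a, image_b, ld):
    """Equation 10: ml2 = LD * image_a + (1 - LD) * (image_a + image_b) / 2.

    image_a is the leading (selected) image; ld is a per-pixel LD map in [0, 1].
    """
    image_a = np.asarray(image_a, dtype=float)
    image_b = np.asarray(image_b, dtype=float)
    ld = np.clip(np.asarray(ld, dtype=float), 0.0, 1.0)
    return ld * image_a + (1.0 - ld) * (image_a + image_b) / 2.0
```

For example, with `image_a = [[4, 4]]`, `image_b = [[0, 8]]` and an LD map `[[1, 0]]`, the first pixel keeps the leading value 4 and the second becomes the mean 6.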

- An image displayed using a single primary color or a combination of a sub-set of primary colors constitutes, hereinafter, a "colored image".


- one embodiment uses a combination of red and blue for displaying the $\mathrm{MTML}_{background}$ image, while green is used for displaying the $\mathrm{MTML}_i$ image.

- for two images $\mathrm{MTML}_j$ and $\mathrm{MTML}_k$, $\mathrm{MTML}_j$ is displayed using a combination of red and blue, while $\mathrm{MTML}_k$ is displayed in green.

- background items, i.e., those items appearing in both images, will appear in the black/grey/white scale, object items appearing only in $\mathrm{MTML}_j$ will appear red/pink/blue, and objects appearing only in $\mathrm{MTML}_k$ will appear green.
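The display scheme above amounts to building an RGB composite in which one image drives the red and blue channels and the other drives green. This sketch assumes both inputs are intensity images already scaled to [0, 1]; the function name is hypothetical. Equal intensities give equal R, G and B (grey), content only in $\mathrm{MTML}_j$ gives red plus blue (magenta/pink), and content only in $\mathrm{MTML}_k$ gives green.

```python
import numpy as np


def discrepancy_composite(mtml_j, mtml_k):
    """RGB composite: MTML_j drives red and blue, MTML_k drives green.

    Inputs are assumed to be intensity images scaled to [0, 1].
    """
    j = np.clip(np.asarray(mtml_j, dtype=float), 0.0, 1.0)
    k = np.clip(np.asarray(mtml_k, dtype=float), 0.0, 1.0)
    return np.stack([j, k, j], axis=-1)  # channel order: R, G, B
```

As the text notes, RGB is only one option; the same idea can be mapped onto another color model.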

- RGB is not a limitation of the invention and it is possible to use other color models such as subtractive color models (e.g., CMYK, which stands for Cyan, Magenta, Yellow and Key that is black) or any other model.


- a system according to the invention may be a suitably programmed computer.

- the invention contemplates a computer program being readable by a computer for executing the method of the invention.

- the invention further contemplates a machine-readable memory tangibly embodying a program of instructions executable by the machine for executing the method of the invention.

- a system according to the invention can be hardware. Alternatively, the system can comprise hardware and software components.

Landscapes

- Engineering & Computer Science (AREA)

- Remote Sensing (AREA)

- Radar, Positioning & Navigation (AREA)

- Physics & Mathematics (AREA)

- General Physics & Mathematics (AREA)

- Computer Networks & Wireless Communication (AREA)

- Theoretical Computer Science (AREA)

- Electromagnetism (AREA)

- Computer Vision & Pattern Recognition (AREA)

- Artificial Intelligence (AREA)

- Image Processing (AREA)

- Radar Systems Or Details Thereof (AREA)

- Image Analysis (AREA)

- Apparatus For Radiation Diagnosis (AREA)


Priority Applications (6)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| KR1020127018800A KR101878306B1 (en) | 2009-12-17 | 2010-12-16 | Method and system for enhancing an image |

| AU2010331754A AU2010331754A1 (en) | 2009-12-17 | 2010-12-16 | Method and system for enhancing an image |

| US13/515,617 US8724918B2 (en) | 2009-12-17 | 2010-12-16 | Method and system for enhancing an image |

| EP10837169.1A EP2513665A4 (en) | 2009-12-17 | 2010-12-16 | Method and system for enhancing an image |

| SG2012044657A SG181767A1 (en) | 2009-12-17 | 2010-12-16 | Method and system for enhancing an image |

| BR112012014627A BR112012014627A2 (en) | 2009-12-17 | 2010-12-16 | METHOD FOR COMPUTERIZED GENERATION OF AN ENHANCED IMAGE BASED ON A PLURALITY OF IMAGES, SYSTEM CAPABLE OF GENERATING AN ENHANCED IMAGE BASED ON A PLURALITY OF IMAGES, METHOD FOR COMPUTERIZED OBJECT DETECTION IN RECORDED SAR IMAGES AND COMPUTER PROGRAM |

Applications Claiming Priority (2)

| Application Number | Priority Date | Filing Date | Title |

|---|---|---|---|

| IL202788 | 2009-12-17 | ||

| IL202788A IL202788A (en) | 2009-12-17 | 2009-12-17 | Method and system for enhancing a sar image |

Publications (2)

| Publication Number | Publication Date |

|---|---|

| WO2011073990A1 true WO2011073990A1 (en) | 2011-06-23 |

| WO2011073990A4 WO2011073990A4 (en) | 2011-09-22 |

Family

ID=43570379

Family Applications (1)

| Application Number | Title | Priority Date | Filing Date |

|---|---|---|---|

| PCT/IL2010/001071 WO2011073990A1 (en) | 2009-12-17 | 2010-12-16 | Method and system for enhancing an image |

Country Status (11)

| Country | Link |

|---|---|

| US (1) | US8724918B2 (en) |

| EP (1) | EP2513665A4 (en) |

| KR (1) | KR101878306B1 (en) |

| AU (1) | AU2010331754A1 (en) |

| BR (1) | BR112012014627A2 (en) |

| CL (1) | CL2012001640A1 (en) |

| CO (1) | CO6592055A2 (en) |

| IL (1) | IL202788A (en) |

| PE (1) | PE20130969A1 (en) |

| SG (1) | SG181767A1 (en) |

| WO (1) | WO2011073990A1 (en) |

Families Citing this family (14)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| CN103327326B (en) * | 2013-05-30 | 2016-03-30 | 西安交通大学 | Based on the SAR image transmission method of compressed sensing and channel self-adapting |

| US10230925B2 (en) | 2014-06-13 | 2019-03-12 | Urthecast Corp. | Systems and methods for processing and providing terrestrial and/or space-based earth observation video |

| WO2016153914A1 (en) | 2015-03-25 | 2016-09-29 | King Abdulaziz City Of Science And Technology | Apparatus and methods for synthetic aperture radar with digital beamforming |

| CA2990063A1 (en) | 2015-06-16 | 2017-03-16 | King Abdulaziz City Of Science And Technology | Efficient planar phased array antenna assembly |

| EP3311194A4 (en) * | 2015-06-16 | 2018-06-13 | King Abdulaziz City for Science and Technology | Systems and methods for enhancing synthetic aperture radar imagery |

| US10037477B2 (en) | 2015-08-31 | 2018-07-31 | Massachusetts Institute Of Technology | Combined intensity and coherent change detection in images |

| EP3380864A4 (en) | 2015-11-25 | 2019-07-03 | Urthecast Corp. | Synthetic aperture radar imaging apparatus and methods |

| GB2553284B (en) * | 2016-08-23 | 2020-02-05 | Thales Holdings Uk Plc | Multilook coherent change detection |

| EP3631504B8 (en) | 2017-05-23 | 2023-08-16 | Spacealpha Insights Corp. | Synthetic aperture radar imaging apparatus and methods |

| EP3646054A4 (en) | 2017-05-23 | 2020-10-28 | King Abdulaziz City for Science and Technology | RADAR IMAGING DEVICE WITH SYNTHETIC APERTURE AND METHOD OF MOVING TARGETS |

| WO2019226194A2 (en) | 2017-11-22 | 2019-11-28 | Urthecast Corp. | Synthetic aperture radar apparatus and methods |

| US10580150B2 (en) * | 2018-02-13 | 2020-03-03 | Macau University Of Science And Technology | Method for improving calculations of surface roughness |

| CN115190311B (en) * | 2022-09-08 | 2022-11-15 | 湖北工业大学 | Security monitoring video compression storage method |

| CN116433657B (en) * | 2023-06-08 | 2023-08-25 | 金乡县明耀玻璃有限公司 | Toughened glass scratch area image enhancement method based on computer vision |

Citations (10)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US4636850A (en) * | 1984-09-07 | 1987-01-13 | Adac Laboratories, Inc. | Apparatus and method for enhancement of video images |

| US6529575B1 (en) * | 2002-04-29 | 2003-03-04 | Ge Medical Systems Global Technology Company, Llc | Adaptive projection filtering scheme for noise reduction |

| US20040022438A1 (en) * | 2002-08-02 | 2004-02-05 | Hibbard Lyndon S. | Method and apparatus for image segmentation using Jensen-Shannon divergence and Jensen-Renyi divergence |

| US20040156561A1 (en) * | 2003-02-12 | 2004-08-12 | Inventec Appliances Corporation | Method for producing enhanced-resolution image by use of a plurality of low-resolution images |

| US20050232514A1 (en) * | 2004-04-15 | 2005-10-20 | Mei Chen | Enhancing image resolution |

| US20060228040A1 (en) * | 2003-02-28 | 2006-10-12 | Simon Richard A | Method and system for enhancing portrait image that are processed in a batch mode |

| US20060239550A1 (en) * | 2005-04-22 | 2006-10-26 | Lexmark International Inc. | Method and system for enhancing an image |

| US20070162193A1 (en) * | 2006-01-10 | 2007-07-12 | Harris Corporation, Corporation Of The State Of Delaware | Accuracy enhancing system for geospatial collection value of an image sensor aboard an airborne platform and associated methods |

| US20090179790A1 (en) | 2006-03-31 | 2009-07-16 | Qinetiq Limited | System and method for processing imagery from synthetic aperture systems |

| US20090256741A1 (en) | 2006-08-03 | 2009-10-15 | Pasco Corporation | Disaster countermeasure support method |

Family Cites Families (12)

| Publication number | Priority date | Publication date | Assignee | Title |

|---|---|---|---|---|

| US4975704A (en) | 1990-01-26 | 1990-12-04 | The United States Of America As Represented By The Administrator Of The National Aeronautics And Space Administration | Method for detecting surface motions and mapping small terrestrial or planetary surface deformations with synthetic aperture radar |

| JP4046859B2 (en) * | 1998-07-08 | 2008-02-13 | キヤノン株式会社 | Image processing apparatus and method, and storage medium |

| DE69909635T2 (en) * | 1998-08-28 | 2004-04-15 | Sarnoff Corp. | DEVICE AND METHOD FOR ELECTRONIC IMAGE IMPROVEMENT |

| EP1293925A1 (en) * | 2001-09-18 | 2003-03-19 | Agfa-Gevaert | Radiographic scoring method |

| US7064779B1 (en) | 2001-10-23 | 2006-06-20 | Ess Technology, Inc. | Imaging system combining multiple still images for higher resolution image output |

| JP3671220B2 (en) | 2002-04-30 | 2005-07-13 | 独立行政法人 宇宙航空研究開発機構 | Moving object detection method |

| US6650273B1 (en) | 2002-05-06 | 2003-11-18 | Lockheed Martin Corporation | Change subtraction of synthetic aperture radar data |

| US7295691B2 (en) * | 2002-05-15 | 2007-11-13 | Ge Medical Systems Global Technology Company, Llc | Computer aided diagnosis of an image set |

| US7466848B2 (en) * | 2002-12-13 | 2008-12-16 | Rutgers, The State University Of New Jersey | Method and apparatus for automatically detecting breast lesions and tumors in images |

| IL155034A0 (en) | 2003-03-23 | 2004-06-20 | M A M D Digital Data Proc Syst | Automatic aerial digital photography and digital data processing systems |

| GB0427779D0 (en) | 2004-12-17 | 2005-07-06 | Bae Systems Plc | Imaging system and method |

| US7760956B2 (en) * | 2005-05-12 | 2010-07-20 | Hewlett-Packard Development Company, L.P. | System and method for producing a page using frames of a video stream |


2009

- 2009-12-17 IL IL202788A patent/IL202788A/en active IP Right Grant


2010

- 2010-12-16 EP EP10837169.1A patent/EP2513665A4/en not_active Ceased

- 2010-12-16 PE PE2012000839A patent/PE20130969A1/en not_active Application Discontinuation

- 2010-12-16 BR BR112012014627A patent/BR112012014627A2/en not_active IP Right Cessation

- 2010-12-16 US US13/515,617 patent/US8724918B2/en active Active

- 2010-12-16 AU AU2010331754A patent/AU2010331754A1/en not_active Abandoned

- 2010-12-16 SG SG2012044657A patent/SG181767A1/en unknown

- 2010-12-16 KR KR1020127018800A patent/KR101878306B1/en active IP Right Grant

- 2010-12-16 WO PCT/IL2010/001071 patent/WO2011073990A1/en active Application Filing


2012

- 2012-06-15 CL CL2012001640A patent/CL2012001640A1/en unknown

- 2012-07-17 CO CO12120403A patent/CO6592055A2/en active IP Right Grant

Non-Patent Citations (9)

| Title |

|---|

| ALBERTO MOREIRA: "Improved Multilook Techniques Applied to SAR and SCANSAR Imagery", IEEE TRANSACTIONS ON GEOSCIENCE AND REMOTE SENSING, vol. 29, no. 4, July 1991 (1991-07-01), XP000258531, DOI: doi:10.1109/36.135814 |

| BARBARA ZITOVA; JAN FLUSSER: "Image registration methods: a survey", IMAGE AND VISION COMPUTING, vol. 21, 2003, pages 977 - 1000 |

| E. J. M. RIGNOT ET AL.: "Change Detection Techniques for ERS-1 SAR data", IEEE TRANSACTIONS ON GEOSCIENCE AND REMOTE SENSING, vol. 31, no. 4, 1 July 1993 (1993-07-01), pages 896 - 906, XP055111990, DOI: doi:10.1109/36.239913 |

| L. JIANG ET AL.: "Urban change detection using multitemporal ERS-1/2 InSAR data", PROCEEDINGS OF THE SPIE, vol. 5982, 6 October 2005 (2005-10-06), pages 59821B - 59821B,8 |

| R. Z. SCHNEIDER ET AL.: "Entropy Among a Sequence of SAR Images for Change Detection", IEEE INTERNATIONAL GEOSCIENCE AND REMOTE SENSING SYMPOSIUM, IGARSS 2003, vol. 2, 21 July 2003 (2003-07-21), pages 1389 - 1391, XP010704072, DOI: doi:10.1109/IGARSS.2003.1294118 |

| See also references of EP2513665A4 |

| V. ALBERGA: "Similarity Measures of Remotely Sensed Multi-Sensor Images for Change Detection Applications", REMOTE SENSING, vol. 1, no. 3, 3 July 2009 (2009-07-03), pages 122 - 143, XP055111966, DOI: doi:10.3390/rs1030122 |

| W. M. F. GRAY ET AL.: "Mapping urban change in the UK using satellite radar interferometry", REMOTE SENSING OF ENVIRONMENT, vol. 87, no. 1, 1 September 2003 (2003-09-01), pages 16 - 22, XP055111991, DOI: doi:10.1016/S0034-4257(03)00142-1 |

| WANG: "Image Quality Assessment: from Error Visibility to Structural Similarity", IEEE TRANSACTIONS ON IMAGE PROCESSING, vol. 13, no. 4, April 2004 (2004-04-01), XP011110418, DOI: doi:10.1109/TIP.2003.819861 |

Also Published As

| Publication number | Publication date |

|---|---|

| CL2012001640A1 (en) | 2013-04-05 |

| SG181767A1 (en) | 2012-07-30 |

| WO2011073990A4 (en) | 2011-09-22 |

| PE20130969A1 (en) | 2013-10-02 |

| IL202788A0 (en) | 2010-11-30 |

| AU2010331754A1 (en) | 2012-08-09 |

| EP2513665A1 (en) | 2012-10-24 |

| IL202788A (en) | 2016-08-31 |

| BR112012014627A2 (en) | 2017-09-12 |

| US8724918B2 (en) | 2014-05-13 |

| CO6592055A2 (en) | 2013-01-02 |

| EP2513665A4 (en) | 2014-05-14 |

| KR20130027453A (en) | 2013-03-15 |

| US20130064467A1 (en) | 2013-03-14 |

| KR101878306B1 (en) | 2018-07-13 |

Similar Documents

| Publication | Publication Date | Title |

|---|---|---|

| US8724918B2 (en) | Method and system for enhancing an image | |

| JP4571987B2 (en) | System and method for detecting features from an image of a vehicle | |

| Renza et al. | A new approach to change detection in multispectral images by means of ERGAS index | |

| JP6798854B2 (en) | Target number estimation device, target number estimation method and program | |

| US20090003699A1 (en) | User guided object segmentation recognition | |

| Chen et al. | Edge-guided multiscale segmentation of satellite multispectral imagery | |

| CN101601287A (en) | Apparatus and method for producing photorealistic image thumbnails | |

| CN104160690A (en) | Display method of area extraction results, and image processing device | |

| CN101246593B (en) | Color image edge detection method and apparatus | |

| US7630990B2 (en) | Endmember spectrum database construction method, endmember spectrum database construction apparatus and endmember spectrum database construction program | |

| CN112633185A (en) | Image processing method and device | |

| US20130201342A1 (en) | Estimating a visible vector representation for pixels in an infrared image | |

| CN102760287B (en) | Color correction method for static cameras and apparatus thereof | |

| CN112101260A (en) | Method, device, equipment and storage medium for identifying safety belt of operator | |

| JP3776591B2 (en) | Map information updating method and apparatus | |

| Tullis et al. | Expert system house detection in high spatial resolution imagery using size, shape, and context | |

| CN100566655C (en) | Method for processing an image to determine image characteristics or analysis candidates | |

| CN115294439B (en) | Method, system, equipment and storage medium for detecting air weak and small moving target | |

| CN111798446A (en) | Container image screening method, computing device and storage medium | |

| Sportouche et al. | A processing chain for simple 3D reconstruction of buildings in urban scenes from high resolution optical and SAR images | |

| Masini et al. | Analysis of multiresolution-based fusion strategies for a dual infrared system | |

| JP2016009379A (en) | Object detection device and element selection device | |

| AU2005299436B2 (en) | Virtual grid alignment of sub-volumes | |

| KR102524375B1 (en) | System and method for inspecting pet excreta | |

| JP5313080B2 (en) | Linear component reduction device and pedestrian detection display system | |

Legal Events

| Date | Code | Title | Description |

|---|---|---|---|

| | 121 | EP: the EPO has been informed by WIPO that EP was designated in this application | Ref document number: 10837169; Country of ref document: EP; Kind code of ref document: A1 |

| | DPE1 | Request for preliminary examination filed after expiration of 19th month from priority date (PCT application filed from 20040101) | |

| | WWE | WIPO information: entry into national phase | Ref document number: 13515617; Country of ref document: US |

| | WWE | WIPO information: entry into national phase | Ref document number: 2012001640; Country of ref document: CL. Ref document number: 000839-2012; Country of ref document: PE |

| | NENP | Non-entry into the national phase | Ref country code: DE |

| | WWE | WIPO information: entry into national phase | Ref document number: 1759/MUMNP/2012; Country of ref document: IN |

| | ENP | Entry into the national phase | Ref document number: 20127018800; Country of ref document: KR; Kind code of ref document: A |

| | WWE | WIPO information: entry into national phase | Ref document number: 2010331754; Country of ref document: AU. Ref document number: 12120403; Country of ref document: CO. Ref document number: 2010837169; Country of ref document: EP |

| | ENP | Entry into the national phase | Ref document number: 2010331754; Country of ref document: AU; Date of ref document: 20101216; Kind code of ref document: A |

| | REG | Reference to national code | Ref country code: BR; Ref legal event code: B01A; Ref document number: 112012014627; Country of ref document: BR |

| | ENP | Entry into the national phase | Ref document number: 112012014627; Country of ref document: BR; Kind code of ref document: A2; Effective date: 20120615 |