EP4284023B1 - Hierarchical control system for hearing system - Google Patents

- Publication number

- EP4284023B1 (application EP23168466.3A)

- Authority

- EP

- European Patent Office

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Active

Classifications

- H—ELECTRICITY

- H04—ELECTRIC COMMUNICATION TECHNIQUE

- H04R—LOUDSPEAKERS, MICROPHONES, GRAMOPHONE PICK-UPS OR LIKE ACOUSTIC ELECTROMECHANICAL TRANSDUCERS; DEAF-AID SETS; PUBLIC ADDRESS SYSTEMS

- H04R25/00—Deaf-aid sets, i.e. electro-acoustic or electro-mechanical hearing aids; Electric tinnitus maskers providing an auditory perception

- H04R25/30—Monitoring or testing of hearing aids, e.g. functioning, settings, battery power

- H04R25/50—Customised settings for obtaining desired overall acoustical characteristics

- H04R25/70—Adaptation of deaf aid to hearing loss, e.g. initial electronic fitting

Definitions

- The invention relates to a hierarchical control system with a hearing system including a hearing device.

- The invention further relates to a method, a computer program and a computer-readable medium for controlling the hearing system.

- Hearing devices are generally small and complex devices. Hearing devices can include a processor, microphone, speaker, memory, housing, and other electronic and mechanical components. Some example hearing devices are Behind-The-Ear (BTE), Receiver-In-Canal (RIC), In-The-Ear (ITE), Completely-In-Canal (CIC), and Invisible-In-The-Canal (IIC) devices.

- BTE Behind-The-Ear

- RIC Receiver-In-Canal

- ITE In-The-Ear

- CIC Completely-In-Canal

- IIC Invisible-In-The-Canal

- A user may prefer one of these hearing devices over another based on hearing loss, aesthetic preferences, lifestyle needs, and budget.

- Today's hearing devices mainly work with the settings that the user receives from his or her audiologist or hearing care professional (HCP). Such initial settings typically have to be optimized at least during an initial period in which the hearing device is worn.

- HCP hearing care professional

- Some hearing devices are configured for automatic adjustments.

- These automatic adjustments may be based on sensors or on user feedback.

- However, the user simply receives adjusted settings for the hearing device without any interaction.

- The user may not be aware of what has caused the change, may not even perceive any change, and cannot control this change.

- The user thus also cannot reject the change if he or she would prefer the previous setting.

- Document EP3236673 A1 discloses a method of adjusting a hearing aid, which hearing aid is adapted for processing sound signals based on sound processing parameters stored in the hearing aid, the method comprising: creating a user profile of the hearing aid user in a server device, the user profile comprising at least a user identification and hearing aid information specifying the hearing aid; preparing a list of user interaction scenarios in the server device, based on the user profile; selecting a user interaction scenario from the list with a mobile device of the user communicatively interconnected with the server device; determining one or more hearing aid adjustment parameters based on the selected user interaction scenario; displaying one or more input controls for adjusting the determined one or more hearing aid adjustment parameters with the mobile device; after adjustment of at least one of the hearing aid adjustment parameters with the one or more input controls: deriving sound processing parameters for the hearing aid based on the adjusted one or more hearing aid adjustment parameters; applying the derived sound processing parameters in the hearing aid, such that the hearing aid is adapted for generating optimized sound signals based on the applied sound processing parameters.

- Document US11213680 B2 discloses a system for controlling parameter settings of an auditory device for a user comprising: an auditory device processor; an auditory device output mechanism controlled by the auditory device processor, the auditory device output mechanism including one or more modifiable parameter settings; an auditory input sensor configured to detect an environmental sound and communicate with the auditory device processor; a database in communication with the auditory device processor, the database including a reference bank of environmental sounds and corresponding sound profiles, each sound profile including an associated set of parameter settings; a memory in communication with the auditory device processor and including instructions that, when executed by the auditory device processor, cause the auditory device processor to: while the auditory input sensor is detecting a first environmental sound, repeatedly present a plurality of sound options to the user, wherein each sound option is a set of parameter settings, until the user selects a preferred sound option, wherein the selected sound option is associated with the first environmental sound; store a first sound profile in the reference bank corresponding to the first environmental sound, and wherein the set of parameter settings of the selected sound

- A first aspect of the invention relates to a hierarchical control system with a hearing system including a hearing device, the control system implementing at least two control levels.

- The control system comprises at least one control unit assigned to the control levels.

- Each control unit may be assigned to one of the control levels.

- Such control units may be parts of the hearing device, of a user device such as a smartphone, and/or of further computing devices communicatively connected with the hearing system.

- The hearing system includes a memory storing instructions, and a processor communicatively coupled to the memory and configured to execute at least parts of the control system.

- The control system, and in particular the hearing system, is further configured to execute the following instructions: determining and monitoring a hearing situation of a user of the hearing system based on a status of the hearing system and user-related data, for example stored in the memory; and determining, based on the hearing situation of the user, an updated hearing device setting.

- The updated hearing device setting may also be associated with one of the at least two control levels.

- The hearing device may in particular be one of Behind-The-Ear (BTE), Receiver-In-Canal (RIC), In-The-Ear (ITE), Completely-In-Canal (CIC), and Invisible-In-The-Canal (IIC) devices.

- Such a hearing device typically comprises a housing, a microphone or sound detector, and an output device including e.g. a speaker (also "receiver") for delivering an acoustic signal, i.e. sound, into an ear canal of a user.

- The output device can also be configured, alternatively or in addition, to deliver other types of signals to the user which are representative of audio content and which are suited to evoke a hearing sensation.

- The control system is further configured to generate a notification message about the application of the updated hearing device setting if the hierarchical control system comprises a next higher control level.

- The notification message is sent to the control unit assigned to the next higher control level.

- Subsequently, at least the control unit, and optionally the control entity, assigned to the next higher control level is notified about the application of the updated hearing device setting, if the control system comprises a next higher control level.

- In this way, the user can be protected from changes in the hearing device setting which may not be beneficial, i.e. which may not help the user to compensate his or her hearing loss, and/or which may be dangerous for him or her.

- For example, the volume of a hearing device may not be turned up as high for a user with a mild hearing loss as for a user with a severe hearing loss.

- The control system may include at least three control levels, such as three or four control levels.

- A control unit assigned to a lowest, first control level may be associated with the hearing device, and/or a control unit assigned to a next higher, second control level may be associated with a user of the hearing system, and/or a control unit assigned to a next higher, third control level may be associated with an HCP or a caretaker, and/or a control unit assigned to a next higher, fourth control level may be associated with a regulatory body or a manufacturer.
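The level ordering above can be captured as an ordered enumeration, which makes "the next higher control level, if any" a simple lookup. This is a minimal sketch under assumptions: the names `ControlLevel` and `next_higher` and the integer encoding are hypothetical, and `top` models control systems that implement fewer than four levels.

```python
from enum import IntEnum
from typing import Optional


class ControlLevel(IntEnum):
    # Hypothetical encoding: a larger value means a higher control level.
    HEARING_DEVICE = 1             # lowest, first control level
    USER = 2                       # second control level
    HCP_OR_CARETAKER = 3           # third control level
    REGULATOR_OR_MANUFACTURER = 4  # fourth control level


def next_higher(level: ControlLevel,
                top: ControlLevel = ControlLevel.REGULATOR_OR_MANUFACTURER) -> Optional[ControlLevel]:
    """Return the next higher control level, or None if `level` is already the
    highest level implemented by this particular control system (`top`)."""
    if level >= top:
        return None
    return ControlLevel(level + 1)


# In a three-level system (no regulator/manufacturer level), the HCP level is the top:
print(next_higher(ControlLevel.USER, top=ControlLevel.HCP_OR_CARETAKER).name)  # HCP_OR_CARETAKER
print(next_higher(ControlLevel.HCP_OR_CARETAKER, top=ControlLevel.HCP_OR_CARETAKER))  # None
```

Returning `None` at the top level mirrors the condition "if the control system comprises a next higher control level": no notification is generated in that case.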

- The HCP may also be notified about the recommended and/or accepted changes to the hearing device settings via the corresponding control unit, e.g. a computing device in his or her office.

- The notification can be sent as a message to the HCP, in particular to a device of the HCP such as a computer, whenever the user receives this information.

- Alternatively, the message is stored and the HCP is notified the next time the user is at the HCP's office, e.g. when the hearing device is connected to a device of the HCP.
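The two delivery options (send immediately, or store until the next HCP session) amount to a store-and-forward queue. The sketch below assumes hypothetical names (`Notification`, `HcpNotifier`, `on_hcp_connected`). A plain list stands in for the HCP's computer, and no transport protocol is implied.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Notification:
    setting_id: str  # hypothetical identifier of the applied setting
    text: str        # human-readable summary for the HCP


class HcpNotifier:
    """Hypothetical store-and-forward delivery of notifications to an HCP device."""

    def __init__(self, hcp_online: bool = False):
        self.hcp_online = hcp_online
        self.pending = deque()  # messages waiting for the next HCP connection
        self.delivered = []     # stands in for the inbox on the HCP's computer

    def notify(self, msg: Notification) -> None:
        if self.hcp_online:
            self.delivered.append(msg)  # HCP device reachable: deliver immediately
        else:
            self.pending.append(msg)    # otherwise store until the next session

    def on_hcp_connected(self) -> None:
        """Call when, e.g., the hearing device is connected to the HCP's device."""
        self.hcp_online = True
        while self.pending:
            self.delivered.append(self.pending.popleft())


notifier = HcpNotifier()
notifier.notify(Notification("speech-in-noise", "Program accepted by the user"))
print(len(notifier.delivered))  # 0
notifier.on_hcp_connected()
print(len(notifier.delivered))  # 1
```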

- The control system, and in particular the control units, include digital interfaces for presenting information to and receiving information from one or more of the control entities.

- The control units may also be communicatively coupled to user interfaces for presenting information to and receiving information from one or more of the control entities.

- The query message, the feedback message and/or the notification message may be structured data exchanged between computing devices (such as the control units) via their interfaces.

- These messages may also contain information to be provided to a person via a user interface, e.g. as a text message or a voice message.
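To make "structured data exchanged between control units" concrete, the three message kinds can be modelled as small records with a text field for display on a user interface. Assumptions: the field names are hypothetical, and JSON is used only as an illustrative wire format; the patent does not specify one.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class QueryMessage:
    setting_id: str
    control_level: int   # level whose approval is requested
    display_text: str    # shown to a person via a user interface


@dataclass
class FeedbackMessage:
    setting_id: str
    approved: bool
    comment: Optional[str] = None


@dataclass
class NotificationMessage:
    setting_id: str
    applied_at_level: int
    display_text: str


_KINDS = {cls.__name__: cls for cls in (QueryMessage, FeedbackMessage, NotificationMessage)}


def encode(msg) -> str:
    """Serialize a message for transfer between control units (JSON is an assumption)."""
    return json.dumps({"type": type(msg).__name__, **asdict(msg)})


def decode(raw: str):
    """Rebuild the message object from its serialized form."""
    data = json.loads(raw)
    return _KINDS[data.pop("type")](**data)


q = QueryMessage("noise-reduction-strong", 2, "Apply stronger noise reduction?")
print(encode(q))
```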

- The user interface may comprise a display screen or an electroacoustic transducer in the form of a speaker for communicating visual or acoustic information, respectively, to the user, which is provided or triggered by the message.

- The display screen may be a touchscreen for receiving a user input.

- The user interface may also comprise other input means for receiving a user input, e.g. a button, a separate touch-sensing area (i.e. a touch pad) or an electroacoustic transducer in the form of a microphone for acoustic input.

- The information may be displayed on a screen of the user device or on a device of the HCP or another control entity.

- The status of the hearing system is based on sensor data acquired by the hearing system.

- The status of the hearing system includes a motion status and/or a temperature.

- The hearing situation is determined and monitored by classifying sensor data acquired by the hearing system and, optionally, the user-related data stored in the hearing system, in particular in the hearing device.

- For example, the audio data acquired by the hearing device may be classified to determine what kind of sounds the user is currently hearing. Such hearing situations may distinguish between speech and music.

- Similarly, motion data or position data may be classified according to whether the user is walking, riding or traveling by car.

- The sensor data comprises audio data acquired with a microphone of the hearing system, and the audio data is classified with respect to different sound situations as hearing situations.

- The sensor data comprises position data acquired with a position sensor of the hearing system, and the position data is classified with respect to different locations and/or movement situations of the user as hearing situations.

- The sensor data comprises medical data acquired with a medical sensor of the hearing system, and the medical data is classified with respect to different medical conditions of the user as hearing situations.
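As an illustration of classifying position data into movement situations, the sketch below derives a mean speed from timestamped positions and maps it to walking, riding or car. It is a rule-based stand-in, not the machine learning classifier the patent describes. The sample format, the class labels and all speed limits are hypothetical.

```python
import math
from typing import List, Tuple

# One position sample: (time in s, x in m, y in m) in a local planar frame (assumed).
Sample = Tuple[float, float, float]

# Hypothetical speed limits in m/s for each movement class; not from the patent.
SPEED_CLASSES = [(0.3, "stationary"), (2.5, "walking"), (8.0, "riding")]


def mean_speed(samples: List[Sample]) -> float:
    """Average speed over the window: travelled path length / elapsed time."""
    dist = sum(math.hypot(x2 - x1, y2 - y1)
               for (_, x1, y1), (_, x2, y2) in zip(samples, samples[1:]))
    elapsed = samples[-1][0] - samples[0][0]
    return dist / elapsed if elapsed > 0 else 0.0


def classify_movement(samples: List[Sample]) -> str:
    """Map the mean speed to a movement situation; anything faster counts as 'car'."""
    speed = mean_speed(samples)
    for limit, label in SPEED_CLASSES:
        if speed < limit:
            return label
    return "car"


print(classify_movement([(0.0, 0.0, 0.0), (1.0, 1.4, 0.0), (2.0, 2.8, 0.0)]))  # walking
print(classify_movement([(0.0, 0.0, 0.0), (1.0, 15.0, 0.0)]))                  # car
```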

- The user-related data comprises information on a hearing loss of the user and/or an audiogram of the user.

- The user-related data may also comprise settings of a sound processing of the hearing system, in particular of the hearing device.

- The hearing situation is classified with a first machine learning algorithm.

- The first machine learning algorithm may be executed in the hearing system, in particular in the hearing device.

- The updated hearing device setting is determined with a second machine learning algorithm.

- The second machine learning algorithm may be executed in a control unit associated with one control entity.

- The classification of the hearing situation may be sent to the control unit, which then selects the updated hearing device setting from a database.

- A further aspect of the invention relates to a method for controlling a hearing system and for improving the settings of a hearing device.

- The method may be performed by the control system.

- The method comprises: determining and monitoring a hearing situation of a user of the hearing system based on a status of the hearing system and user-related data stored in the hearing system; and determining, based on the hearing situation of the user, an updated hearing device setting. For example, based on the hearing situation of the user, an updated hearing device setting may be selected from a database of available hearing device settings, each available hearing device setting being associated with one of the at least two control levels.
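The database variant can be sketched as a list of available settings, each tagged with the hearing situations it targets and the control level it is associated with. The selected entry's level then determines which control unit must approve it. All entries, parameter names and the "first match" policy are hypothetical illustration choices.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional


@dataclass
class AvailableSetting:
    setting_id: str
    control_level: int          # control level associated with this setting
    situations: FrozenSet[str]  # hearing situations the setting is meant for
    params: Dict[str, float]    # hypothetical sound-processing parameters


# Hypothetical database content, for illustration only.
DATABASE: List[AvailableSetting] = [
    AvailableSetting("speech-in-noise-mild", 2, frozenset({"speech in noise"}), {"nr_strength": 0.4}),
    AvailableSetting("speech-in-noise-strong", 3, frozenset({"speech in noise"}), {"nr_strength": 0.9}),
    AvailableSetting("music", 2, frozenset({"music"}), {"nr_strength": 0.0}),
]


def select_setting(situation: str,
                   db: List[AvailableSetting] = DATABASE) -> Optional[AvailableSetting]:
    """Return the first stored setting for the classified hearing situation, if any.
    Its control_level tells the control system whom to ask for approval."""
    return next((s for s in db if situation in s.situations), None)


choice = select_setting("speech in noise")
print(choice.setting_id, choice.control_level)  # speech-in-noise-mild 2
```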

- The method further comprises associating the updated hearing device setting with one of the at least two control levels. This may be done by the hearing device, by the user device or by other control units of the control system.

- The method further comprises: generating a query message for approval of the updated hearing device setting and sending the query message to the control unit assigned to the control level associated with the updated hearing device setting; receiving a feedback message in response to the query message from the control unit and, if the feedback message contains an approval of the updated hearing device setting, applying the updated hearing device setting to the hearing device; and, if the control system comprises a next higher control level, generating a notification message about the application of the updated hearing device setting and sending the notification message to the control unit assigned to the next higher control level.
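The query, feedback, apply and notify steps above can be sketched as a single function. Assumptions: control levels are consecutive integers, and each control unit's answer is modelled by a callback (`decide`). The names `Setting`, `ControlUnit` and `handle_update` are hypothetical, and no real messaging layer is involved.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Setting:
    setting_id: str
    control_level: int  # level associated with the updated setting


class ControlUnit:
    """Stand-in for a control unit; `decide` models the control entity's answer."""

    def __init__(self, name: str, decide: Callable[[Setting], bool]):
        self.name = name
        self.decide = decide
        self.inbox: List[str] = []  # notification messages received

    def query(self, setting: Setting) -> bool:
        """Receive a query message and answer with a feedback message (approve or not)."""
        return self.decide(setting)

    def notify(self, text: str) -> None:
        self.inbox.append(text)


def handle_update(setting: Setting, units: Dict[int, ControlUnit],
                  apply: Callable[[Setting], None]) -> bool:
    """Query the unit at the setting's level; on approval apply the setting and
    notify the next higher level, if the system has one. Returns True if applied."""
    unit = units[setting.control_level]
    if not unit.query(setting):
        return False  # rejected: the current setting stays in place
    apply(setting)
    higher: Optional[ControlUnit] = units.get(setting.control_level + 1)
    if higher is not None:
        higher.notify(f"{setting.setting_id} applied after approval by {unit.name}")
    return True


applied: List[Setting] = []
units = {1: ControlUnit("hearing device", lambda s: True),
         2: ControlUnit("user", lambda s: True),
         3: ControlUnit("HCP", lambda s: False)}
handle_update(Setting("nr-strong", 2), units, applied.append)
print(units[3].inbox)  # ['nr-strong applied after approval by user']
```

A rejected setting is simply not applied, which gives the user the possibility, missing in purely automatic adjustment, of keeping the previous setting.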

- A further aspect of the invention relates to a computer program for controlling a hearing system which, when executed by at least one processor, is adapted to carry out the method as described above and below.

- A further aspect of the invention relates to a computer-readable medium in which such a computer program is stored.

- The computer program may be executed in a processor of the control system, the hearing system and/or a hearing device, which hearing device may, for example, be worn by the person behind the ear.

- The computer-readable medium may be a memory of this control system, hearing system and/or hearing device.

- The computer program may also be executed by a processor of a mobile device which is part of the hearing system, and the computer-readable medium may be a memory of the mobile device. It is also possible that some steps of the method are performed by the hearing device and other steps by the mobile device.

- A computer-readable medium may be a floppy disk, a hard disk, a USB (Universal Serial Bus) storage device, a RAM (Random Access Memory), a ROM (Read Only Memory), an EPROM (Erasable Programmable Read Only Memory) or a FLASH memory.

- A computer-readable medium may also be a data communication network, e.g. the Internet, which allows downloading a program code.

- The computer-readable medium may be a non-transitory or transitory medium.

- Fig. 1 schematically shows a hearing system 10 according to an embodiment of the invention.

- The hearing system 10 includes a hearing device 12 and a user device 14 connected to the hearing device 12.

- The hearing device 12 is formed as a behind-the-ear device worn by a user (not shown) of the hearing device 12.

- It should be noted that the hearing device 12 is a specific embodiment and that the method described herein may also be performed with other types of hearing devices, e.g. an in-the-ear device, or with one or two hearing devices of the types mentioned above.

- The user device 14 may be a smartphone, a tablet computer, and/or smart glasses.

- The hearing device 12 comprises a part 15 behind the ear and a part 16 to be put in the ear canal of the user.

- The part 15 and the part 16 are connected by a tube 18.

- The part 15 comprises at least one sound detector 20, e.g. a microphone or a microphone array, a sound output device 22, such as a loudspeaker, and an input means 24, e.g. a knob, a button, or a touch-sensitive sensor such as a capacitive sensor.

- The sound detector 20 can detect a sound in the environment of the user and generate an audio signal indicative of the detected sound.

- The sound detector 20 may be one of the sensors for determining the hearing situation.

- The sound output device 22 can output sound based on the audio signal modified by the hearing device 12 in accordance with the hearing device settings, wherein the sound from the sound output device 22 is guided through the tube 18 to the part 16.

- The input means 24 enables the user to provide input to the hearing device 12, e.g. in order to power the hearing device 12 on or off, and/or to choose a sound program or hearing device settings, or any other modification of the audio signal.

- The user device 14, which may be a smartphone, a tablet computer, or smart glasses, comprises a display 30, e.g. a touch-sensitive display, providing a graphical user interface 32 including a control element 34, e.g. a slider, which may be controlled via a touch on the display 30.

- The control element 34 may be referred to as an input means of the user device 14. If the user device 14 is a pair of smart glasses, it may comprise a knob or button instead of a touch-sensitive display.

- Fig. 2 shows a block diagram of components of the hearing system 10 according to Fig. 1.

- The hearing device 12 comprises a first processing unit 40.

- The first processing unit 40 is configured to receive the audio signal generated by the sound detector 20.

- The hearing device 12 may include a sound processing module 42.

- The sound processing module 42 may be implemented as a computer program executed by the first processing unit 40, which may comprise a CPU for processing the computer program.

- The sound processing module 42 may comprise a sound processor implemented in hardware, or more specifically a DSP (digital signal processor), for modifying the audio signal.

- The sound processing module 42 may be configured to modify, in particular amplify, dampen and/or delay, the audio signal generated by the sound detector 20, e.g.

- The parameters may be one or more of the group of frequency-dependent gain, time constant for attack and release times of compressive gain, time constant for noise canceller, time constant for dereverberation algorithms, reverberation compensation, frequency-dependent reverberation compensation, mixing ratio of channels, gain compression, and gain shape/amplification scheme.

- A set of one or more of these parameters and parameter values may correspond to a predetermined sound program included in hearing device settings.

- A sound program, or hearing device settings, may be defined by parameters and/or parameter values defining the sound processing of the sound processing module 42, such as the parameters described above. Different sound programs or hearing device settings are then characterized by correspondingly different parameters and parameter values.

- A sound program, or hearing device settings, may furthermore comprise a list of sound processing features.

- The sound processing features may for example be a noise cancelling algorithm or a beamformer, whose strength can be increased to improve speech intelligibility, but at the cost of more and stronger processing artifacts.
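A sound program, as described above, is a named bundle of parameter values plus a list of enabled features with their strengths. Comparing two programs parameter by parameter shows exactly what an update would change. The field names, units and the three-band gain layout below are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class SoundProgram:
    """A sound program as a named set of parameter values (names/units hypothetical)."""
    name: str
    gain_db: List[float]  # frequency-dependent gain, one value per band
    attack_ms: float      # attack time constant of the compressive gain
    release_ms: float     # release time constant of the compressive gain
    features: Dict[str, float] = field(default_factory=dict)  # feature -> strength 0..1


def diff(a: SoundProgram, b: SoundProgram) -> Dict[str, Tuple[object, object]]:
    """List the parameters in which two programs differ (the name is ignored)."""
    out = {}
    for key in ("gain_db", "attack_ms", "release_ms", "features"):
        va, vb = getattr(a, key), getattr(b, key)
        if va != vb:
            out[key] = (va, vb)
    return out


calm = SoundProgram("calm", [10.0, 15.0, 20.0], attack_ms=5.0, release_ms=50.0)
speech = SoundProgram("speech in noise", [10.0, 18.0, 22.0], 5.0, 50.0,
                      {"noise_canceller": 0.7})
print(sorted(diff(calm, speech)))  # ['features', 'gain_db']
```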

- The sound output device 22 generates sound from the modified audio signal, and the sound is guided through the tube 18 and the in-the-ear part 16 into the ear canal of the user.

- The hearing device 12 may include a control module 44, which is a control unit.

- The control module 44 may be implemented as a computer program executed by the first processing unit 40.

- The control module 44 may comprise a control processor implemented in hardware, or more specifically a DSP (digital signal processor).

- The control module 44 may be configured to adjust the parameters of the sound processing module 42, e.g. such that an output volume of the sound signal is adjusted based on an input volume.
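One common way to adjust output volume based on input volume is a static compression curve. Below a knee point the full gain is applied. Above it, every extra dB of input adds only 1/ratio dB of output. The sketch assumes this textbook form. The gain, knee and ratio values are hypothetical and are not fitting targets from the patent.

```python
def output_level_db(input_db: float, gain_db: float = 20.0,
                    knee_db: float = 50.0, ratio: float = 3.0) -> float:
    """Static compression curve: full gain below the knee, 1/ratio slope above it.

    All default values are hypothetical illustration values.
    """
    if input_db <= knee_db:
        return input_db + gain_db
    return knee_db + gain_db + (input_db - knee_db) / ratio


print(output_level_db(40.0), output_level_db(80.0))  # 60.0 80.0
```

With these values a soft 40 dB input is raised by 20 dB, while a loud 80 dB input leaves at 80 dB, i.e. it receives no net gain.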

- The user may select a modifier (such as bass, treble, noise suppression, dynamic volume, etc.) and levels and/or values of the modifiers with the input means 24. From this modifier, an adjustment command may be created and processed as described above and below.

- Processing parameters may be determined based on the adjustment command; based on this, for example, the frequency-dependent gain and the dynamic volume of the sound processing module 42 may be changed.
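The path from a user-selected modifier to changed processing parameters can be sketched as follows. The adjustment command carries the modifier and its level, which are then translated into per-band gain offsets. Assumptions: a six-band gain vector, the band mapping in `BANDS` and the 2 dB step size are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AdjustmentCommand:
    modifier: str  # e.g. "bass" or "treble"
    level: int     # user-selected step, e.g. -3 .. +3


# Hypothetical mapping: which gain bands each modifier touches, and dB per step.
BANDS = {"bass": [0, 1], "treble": [4, 5]}
DB_PER_STEP = 2.0


def apply_command(gains_db: List[float], cmd: AdjustmentCommand) -> List[float]:
    """Return new per-band gains with the modifier's offset added (input unchanged)."""
    if cmd.modifier not in BANDS:
        raise ValueError(f"unsupported modifier: {cmd.modifier}")
    new = list(gains_db)
    for band in BANDS[cmd.modifier]:
        new[band] += cmd.level * DB_PER_STEP
    return new


print(apply_command([10.0] * 6, AdjustmentCommand("bass", 2)))
# [14.0, 14.0, 10.0, 10.0, 10.0, 10.0]
```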

- The first memory 50 may be implemented by any suitable type of storage medium, in particular a non-transitory computer-readable medium, and can be configured to maintain, e.g. store, data controlled by the first processing unit 40, in particular data generated, accessed, modified and/or otherwise used by the first processing unit 40.

- The first memory 50 may also be configured to store instructions for operating the hearing device 12 and/or the user device 14 that can be executed by the first processing unit 40, in particular an algorithm and/or software that can be accessed and executed by the first processing unit 40.

- The first memory 50 of the hearing device 12 may be a part of the memory 130 storing instructions according to the present invention, and the first processing unit 40 may be a processor 132 of a control system 100 (see Fig. 4), which comprises the hearing system 10.

- The database 140 may be stored in the first memory 50.

- The hearing system 10 determines and monitors the hearing situation of the user, inter alia, by means of the sound detector 20.

- The hearing device 12 may further comprise a first transceiver 52.

- The first transceiver 52 may be configured for wireless data communication with a remote server 72, which may be part of a control system 100 (see Fig. 4) for the hearing system 10. It should be noted that the control system 100 may also be implemented entirely within the hearing system 10, i.e. without the remote server 72.

- Additionally or alternatively, the first transceiver 52 may be adapted for wireless data communication with a second transceiver 64 of the user device 14.

- The first and/or the second transceiver 52, 64 may each be, e.g., a Bluetooth or RFID radio chip.

- A sound source detector 46 may be implemented in a computer program executed by the first processing unit 40.

- The sound source detector 46 is configured to determine at least one sound source from the audio signal.

- The sound source detector 46 may be configured to determine a spatial relationship between the hearing device 12 and the corresponding sound source. The spatial relationship may be given by a direction and/or a distance from the hearing device 12 to the corresponding audio source, wherein the audio signal may be a stereo signal and the direction and/or distance may be determined from the different arrival times of the sound waves from one audio source at two different sound detectors 20 of the hearing device 12 and/or of a second hearing device 12 worn by the same user.
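The arrival-time approach can be illustrated with a textbook time-difference-of-arrival (TDOA) estimate. The lag that maximizes the cross-correlation of the two detector signals gives the delay Δt. Under a far-field assumption, the angle of arrival is θ = arcsin(c·Δt / d), where d is the detector spacing. This is a generic sketch, not the patent's specific method. The function names, the 15 cm spacing and the sign convention are assumptions.

```python
import math
from typing import Sequence

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 deg C


def best_lag(left: Sequence[float], right: Sequence[float], max_lag: int) -> int:
    """Lag (in samples) maximising sum(left[n] * right[n + lag]).

    A positive lag means the sound reached the `left` detector first.
    """
    def corr(lag: int) -> float:
        return sum(left[n] * right[n + lag]
                   for n in range(len(left)) if 0 <= n + lag < len(right))
    return max(range(-max_lag, max_lag + 1), key=corr)


def direction_deg(left: Sequence[float], right: Sequence[float],
                  fs: float, mic_distance: float) -> float:
    """Far-field angle of arrival: 0 deg = equal arrival times, +90 deg = left side."""
    max_lag = int(math.ceil(mic_distance / SPEED_OF_SOUND * fs))  # physically possible lags
    tdoa = best_lag(left, right, max_lag) / fs
    s = max(-1.0, min(1.0, SPEED_OF_SOUND * tdoa / mic_distance))  # clamp before asin
    return math.degrees(math.asin(s))


# An impulse arriving 3 samples later at the right detector (48 kHz, 15 cm apart):
left = [0.0] * 40
right = [0.0] * 40
left[10], right[13] = 1.0, 1.0
print(round(direction_deg(left, right, fs=48000.0, mic_distance=0.15), 1))
```

For this input the estimate is roughly 8 degrees towards the left detector. Real signals would need windowing and band-limiting before the correlation step.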

- A first classifier 48 may be implemented in a computer program executed by the first processing unit 40.

- The first classifier 48 can be configured to evaluate the audio signal generated by the sound detector 20.

- The first classifier 48 may be configured to classify the audio signal generated by the sound detector 20 by assigning the audio signal to a class from a plurality of predetermined classes.

- The first classifier 48 may be configured to determine a characteristic of the audio signal generated by the sound detector 20, wherein the audio signal is assigned to the class depending on the determined characteristic.

- The first classifier 48 may be configured to identify one or more predetermined classification values based on the audio signal from the sound detector 20.

- The classification may be based on a statistical evaluation of the audio signal and/or on a machine learning algorithm that has been trained to classify the ambient sound, e.g. with a training set comprising a large number of audio signals and associated classes of the corresponding acoustic environment. The machine learning algorithm may thus be trained with several audio signals of acoustic environments, wherein the corresponding classification is

- The first classifier 48 may be configured to identify at least one signal feature in the audio signal generated by the sound detector 20, wherein the characteristic determined from the audio signal corresponds to a presence and/or absence of the signal feature.

- Exemplary characteristics include, but are not limited to, a mean-squared signal power, a standard deviation of a signal envelope, a mel-frequency cepstrum (MFC), a mel-frequency cepstrum coefficient (MFCC), a delta mel-frequency cepstrum coefficient (delta MFCC), a spectral centroid such as a power spectrum centroid, a standard deviation of the centroid, a spectral entropy such as a power spectrum entropy, a zero crossing rate (ZCR), a standard deviation of the ZCR, a broadband envelope correlation lag and/or peak, and a four-band envelope correlation lag and/or peak.

- MFC mel-frequency cepstrum

- MFCC mel-frequency cepstrum coefficient

- The first classifier 48 may determine the characteristic from the audio signal using one or more algorithms that identify and/or use zero crossing rates, amplitude histograms, autocorrelation functions, spectral analysis, amplitude modulation spectra, spectral centroids, slopes, roll-offs, and/or the like.

- The characteristic determined from the audio signal is characteristic of an ambient noise in an environment of the user, e.g. a noise level, and/or of speech, e.g. a speech level.

- The first classifier 48 may be configured to divide the audio signal into a number of segments and to determine the characteristic from a particular segment, e.g. by extracting at least one signal feature from the segment. The extracted feature may be processed to assign the audio signal to the corresponding class.
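The segment-then-extract step can be sketched with three of the characteristics listed above: mean-squared signal power, zero crossing rate (ZCR) and power-spectrum centroid, each computed per segment. The sketch is pure Python and uses a naive DFT for clarity. The segment length and function names are hypothetical, and a real device would use an optimized FFT on the DSP.

```python
import math
from typing import Dict, List, Sequence


def segments(signal: Sequence[float], size: int) -> List[Sequence[float]]:
    """Split the signal into consecutive, non-overlapping segments (tail dropped)."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, size)]


def features(seg: Sequence[float], fs: float) -> Dict[str, float]:
    """Per-segment characteristics; the segment must contain at least 2 samples."""
    n = len(seg)
    power = sum(x * x for x in seg) / n  # mean-squared signal power
    zcr = sum(1 for a, b in zip(seg, seg[1:]) if (a < 0) != (b < 0)) / (n - 1)
    # Power spectrum via a plain DFT (O(n^2), acceptable for a short sketch)
    spectrum = []
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(seg))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(seg))
        spectrum.append(re * re + im * im)
    total = sum(spectrum)
    centroid = sum(k * fs / n * p for k, p in enumerate(spectrum)) / total if total else 0.0
    return {"power": power, "zcr": zcr, "centroid_hz": centroid}


fs = 8000.0
tone = [math.sin(2 * math.pi * 1000 * i / fs) for i in range(200)]  # 1 kHz test tone
first = features(segments(tone, 64)[0], fs)
print(round(first["centroid_hz"]))  # 1000
```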

- The first classifier 48 may further be configured to assign, depending on the determined characteristic, the audio signal generated by the sound detector 20 to a class of at least two predetermined classes.

- The classes may represent a specific content in the audio signal.

- The classes may relate to a speaking activity of the user and/or another person and/or to an acoustic environment of the user.

- Exemplary classes include, but are not limited to, low ambient noise, high ambient noise, traffic noise, music, machine noise, babble noise, public area noise, background noise, speech, nonspeech, speech in quiet, speech in babble, speech in noise, speech in loud noise, speech from the user, speech from a significant other, background speech, speech from multiple sources, calm situation and/or the like.

- The first classifier 48 may be configured to evaluate the characteristic relative to a threshold.

- The classes may comprise a first class assigned to the audio signal when the characteristic is determined to be above the threshold, and a second class assigned to the audio signal when the characteristic is determined to be below the threshold. For example, when the characteristic determined from the audio signal corresponds to ambient noise, a first class representative of a high ambient noise may be assigned to the audio signal when the characteristic is above the threshold, and a second class representative of a low ambient noise may be assigned to the audio signal when the characteristic is below the threshold.

- Similarly, for a speech-related characteristic, a first class representative of a larger speech content may be assigned to the audio signal when the characteristic is above the threshold, and a second class representative of a smaller speech content may be assigned to the audio signal when the characteristic is below the threshold.
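The two-class threshold rule reduces to a single comparison per characteristic. Assumptions: the threshold values and units are hypothetical. A value exactly at the threshold falls into the second class here, a detail the text leaves open.

```python
def classify_by_threshold(value: float, threshold: float,
                          above: str, below: str) -> str:
    """Assign the first class if the characteristic exceeds the threshold, else the second."""
    return above if value > threshold else below


# Hypothetical thresholds: noise level in dB SPL, speech content as a 0..1 ratio.
NOISE_THRESHOLD_DB = 65.0
SPEECH_THRESHOLD = 0.5

noise_class = classify_by_threshold(72.0, NOISE_THRESHOLD_DB,
                                    "high ambient noise", "low ambient noise")
speech_class = classify_by_threshold(0.3, SPEECH_THRESHOLD,
                                     "larger speech content", "smaller speech content")
print(noise_class, "/", speech_class)  # high ambient noise / smaller speech content
```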

- At least two of the classes can be associated with different sound programs, i.e. hearing device settings, in particular with different sound processing parameters, which may be applied by the sound processing module 42 for modifying the audio signal.

- The class assigned to the audio signal, which may correspond to a classification value, may be provided to the control module 44 in order to select the associated audio processing parameters, in particular the associated sound program, which may be stored in the first memory 50.

- The class assigned to the audio signal may thus be used to determine the sound program which may be proposed as updated hearing device settings by the control system 100, in particular depending on the audio signal received from the sound detector 20.
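Turning a class into a proposed sound program can be sketched as a table lookup, with a proposal made only when the class maps to a program different from the active one. The table contents and the name `propose_program` are hypothetical stand-ins for programs stored in the first memory 50.

```python
from typing import Dict, Optional

# Hypothetical class-to-program table, standing in for programs stored in memory.
PROGRAM_FOR_CLASS: Dict[str, str] = {
    "speech in noise": "speech-in-noise",
    "speech in quiet": "calm-speech",
    "music": "music",
}


def propose_program(audio_class: str, current_program: str) -> Optional[str]:
    """Return a program to propose as the updated setting, or None if nothing changes."""
    program = PROGRAM_FOR_CLASS.get(audio_class)
    if program is None or program == current_program:
        return None
    return program


print(propose_program("music", current_program="calm-speech"))  # music
```

Returning `None` for an unchanged or unknown class avoids sending query messages that would ask the user to approve a setting that is already active.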

- Each of the sound processing module 42, the control module 44, the sound source detector 46, and the first classifier 48 may be embodied in hardware or software, or in a combination of hardware and software. Further, at least two of the modules 42, 44, 46, 48 may be consolidated in one single module or may be provided as separate modules.

- the first processing unit 40 may be implemented with a single processor or with a plurality of processors. For instance, the first processing unit 40 may comprise a first processor in which the sound processing module 42 is implemented, and a second processor in which the control module 44 and/or the sound source detector 46 and/or the first classifier 48 are implemented.

- the first processing unit 40 may further comprise the processor 132 for executing the control system 100 as a further processor. Alternatively the one of first or second processor may be used as processor 132.

- the user device 14 which may be connected to the hearing device 12 for data communication, may comprise a second processing unit 60 with a second memory 62, and a second transceiver 64.

- the second processing unit 60 may comprise one or more processors, such as CPUs. If the hearing device 12 is controlled via the user device 14, the second processing unit 60 of the user device 14 may be seen at least in part as a controller of the hearing device 12. In other words, according to some embodiments, the first processing unit 40 of the hearing device 12 and the second processing unit 60 of the user device 14 may form the controller of the hearing device 12.

- a processing unit of the hearing system 10 may comprise the first processing unit 40 and the second processing unit 60. Thus, the first and second processing units may together form the processor 132.

- alternatively, the second processing unit 60 and the second memory 62 may serve as the processor 132 and the memory 130 according to the present invention.

- the database 140 may be stored in the second memory 62.

- the hearing device 12 and the user device 14, and in particular the processing units 40, 60, may communicate data via the first and second transceivers 52, 64, which may be Bluetooth transceivers.

- the hearing device 12 and the user device 14 may be connected for data communication via a wireless data communication connection.

- it is possible that the above-mentioned modifiers and their levels and/or values are adjusted with the user device 14 and/or that an adjustment command is generated with the user device 14 and sent to the hearing device 12.

- This may be performed with a computer program run in the second processing unit 60 and stored in the second memory 62 of the user device 14.

- This computer program may also provide the graphical user interface 32 on the display 30 of the user device 14.

- the graphical user interface 32 may comprise the control element 34, such as a slider.

- an adjustment command may be generated, which will change the sound processing of the hearing device 12.

- the user may adjust the modifier with the hearing device 12 itself, for example via the input means 24.

- the hearing device 12 and/or the user device 14 may communicate with each other and/or with the remote server 72 via the Internet 70.

- the method explained below may be carried out at least in part by the remote server 72.

- processing tasks that require substantial processing resources may be outsourced from the hearing device 12 and/or the user device 14 to the remote server 72.

- the processing units (not shown) of the remote server 72 may be used at least in part as the controller for controlling the hearing device 12 and/or the user device 14.

- the processor 132 for executing the control system 100 of the hearing system 10 as well as the memory 130 may be at least partially located on the remote server 72.

- the user device 14 may comprise a second classifier 66 and/or a further module 68.

- the second classifier 66 may have the same functionality as the first classifier 48 explained above and/or also may be based on a machine learning algorithm.

- the second classifier 66 may be arranged alternatively or additionally to the first classifier 48 of the hearing device 12.

- the second classifier 66 may be configured to classify the acoustic environment of the user and the user device 14 depending on the received audio signal, as explained above with respect to the first classifier 48, wherein the acoustic environment of the user and the user device 14 corresponds to the acoustic environment of the hearing device 12 and wherein the audio signal may be forwarded from the hearing device 12 to the user device 14.

- the system as explained here may thus comprise a certain number of classifiers, for example classifiers for movement, time, noise and environment, to describe the possible hearing situations of the user.

- the control system 100 is described with respect to Fig. 4. The control system comprises the hearing system 10 and a part 102 external to the hearing system 10, such as the remote server 72.

- the remote part 102 is depicted abstractly, solely showing that it comprises one or more computing devices with at least one memory 130, at least one processor 132 and at least one database 140.

- Fig. 4 also shows control units 150, 152, 154, 156, which are in data communication with each other and the hearing system 10.

- a control unit 150, 152 also may be part of the hearing device 12 and/or hearing system 10.

- in step S4, the hearing system 10 determines the hearing situation of the user, in particular with internal and/or external sensors.

- Internal sensors are in particular the sound detector 20 and/or the sound source detector 46.

- further sensors may be motion sensors, health sensors such as blood pressure sensors or heart rate sensors, or temperature sensors, which may be included either in the hearing device 12 or in the user device 14.

- if the user device 14 is, for example, a smartphone, it typically includes a microphone and a motion sensor itself and may have a temperature sensor.

- the hearing situation may be determined by the hearing device 12 alone, by the user device 14 or with both the hearing device 12 and the user device 14.

- if the hearing system includes the first and/or second classifiers 48, 66 or even further classifiers, these may be applied to classify the hearing situation.

- the hearing situation may be classified with a first machine learning algorithm provided by the first classifier 48 and/or the second classifier 66.

- in step S6, the control system 100 selects a hearing device setting from the database 140, as depicted in Fig. 4.

- the database 140 comprises hearing device settings 142, 144. If the selected hearing device setting is equal to the used hearing device setting 142, no change is required, and the loop returns to step S4. If the selected hearing device setting differs from the used one, it is an updated hearing device setting 144, which is selected.
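The decision in step S6 can be sketched as below, assuming the database 140 maps a classified hearing situation to a setting and a setting is a dictionary of parameter values. `DATABASE_140`, its situation keys and `select_update` are hypothetical names chosen for illustration only.

```python
from typing import Dict, Optional

Setting = Dict[str, float]

# Hypothetical database 140: classified hearing situation -> hearing device setting.
DATABASE_140: Dict[str, Setting] = {
    "quiet": {"volume": 0.0, "noise_reduction": 0.0},
    "restaurant": {"volume": 2.0, "noise_reduction": 3.0},
}


def select_update(situation: str, used_setting: Setting) -> Optional[Setting]:
    """Step S6: return an updated setting (144), or None if the selected
    setting equals the used setting (142) and the loop returns to step S4."""
    candidate = DATABASE_140.get(situation)
    if candidate is None or candidate == used_setting:
        return None
    return dict(candidate)  # copy, so later edits do not alter the database entry
```

Returning `None` models the "no change required" branch; any non-`None` result is handed on to step S8.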

- the database 140 also may be stored in the hearing system 10 or in a control unit 150, 152, 154, 156.

- the server 72 may be such a control unit.

- the updated hearing device setting 144 may be determined with a second machine learning algorithm, which is executed in a control unit associated with one control entity.

- the classification of the hearing situation, which has been generated by the first machine learning algorithm, may be sent to the control unit and used there as input to the second machine learning algorithm.

- in step S8, the control system 100 determines which control level the updated hearing device setting is assigned to. Each of the available hearing device settings is assigned to one control level.

- the control system 100 is a hierarchical control system. Typically, in such a hierarchical control framework, inner layers/loops run at a higher frequency than outer layers/loops. Notifications or feedback can happen instantaneously or may be batched (e.g., as a summary report). Notification and feedback can be applied on the hearing device 12 directly or, alternatively, on third devices, such as smartphones that provide a display to highlight and type, or computer terminals that close the control loop via a connection to the cloud, in particular by a message sent to the user or HCP. Feedback allows the recipient to acknowledge, rate, override, oversteer, revert single changes or roll back multiple changes in the history at several layers of the hierarchy.
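The feedback types listed above (acknowledge, rate, override, revert, roll back) can be sketched with a simple change log. This assumes each applied change is stored as a full snapshot of the setting, so reverting a single change means undoing the most recent one; reverting an arbitrary change in the middle of the history is not covered. `SettingHistory` and its methods are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

Setting = Dict[str, float]


@dataclass
class SettingHistory:
    """Hypothetical change log supporting the feedback types named above."""
    initial: Setting
    changes: List[Setting] = field(default_factory=list)   # applied settings, oldest first
    ratings: Dict[int, int] = field(default_factory=dict)  # change index -> rating
    acknowledged: Set[int] = field(default_factory=set)    # acknowledged change indices

    @property
    def current(self) -> Setting:
        return self.changes[-1] if self.changes else self.initial

    def apply(self, setting: Setting) -> int:
        """Record an applied setting; an override is simply a new entry chosen
        by the feedback giver. Returns the index of the change."""
        self.changes.append(dict(setting))
        return len(self.changes) - 1

    def acknowledge(self, index: int) -> None:
        self.acknowledged.add(index)

    def rate(self, index: int, rating: int) -> None:
        self.ratings[index] = rating

    def roll_back(self, steps: int = 1) -> Setting:
        """Undo the most recent `steps` changes (steps=1 reverts a single change)."""
        keep = max(0, len(self.changes) - steps)
        del self.changes[keep:]
        # Drop feedback attached to changes that no longer exist.
        self.ratings = {i: r for i, r in self.ratings.items() if i < keep}
        self.acknowledged = {i for i in self.acknowledged if i < keep}
        return self.current
```

Keeping snapshots rather than deltas makes revert and roll back trivial at the cost of some memory, which seems acceptable for a handful of parameters.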

- Feedback can be triggered by the user directly on the device or indirectly by an actor at a higher layer via a remote connection (via proxies like the cloud, smartphones, or fitting stations at HCPs). It can be actively submitted by the user on the devices as explicit feedback, but may also happen implicitly as the hearing devices observe the user, the user's behavior and interactions with the hearing devices.

- the feedback to the hearing devices can also be given by speech: either actively by the user via voice control or passively by having the hearing devices monitor the user's speech in daily conversations and detect keywords that indicate some reinforcement, rejection or hint regarding an applied functional change or future change still to be applied.
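Passive keyword detection of this kind can be sketched as a mapping from a transcribed utterance to a feedback polarity. The sketch assumes speech has already been transcribed to text by some recognizer (not specified in the source); the keyword sets and `classify_feedback` are hypothetical, and only reinforcement and rejection are modelled, not hints.

```python
import re

# Hypothetical keyword lists; a real system would need a vocabulary tuned to
# the user's language and a speech recognizer running on or near the device.
REINFORCE = {"better", "clearer", "great"}
REJECT = {"worse", "muffled", "annoying"}


def classify_feedback(transcript: str) -> str:
    """Map a transcribed utterance to 'reinforce', 'reject' or 'none'."""
    words = set(re.findall(r"[a-z']+", transcript.lower()))
    positive = len(words & REINFORCE)
    negative = len(words & REJECT)
    if positive > negative:
        return "reinforce"
    if negative > positive:
        return "reject"
    return "none"
```

A tie or the absence of any keyword yields "none", so ordinary conversation does not trigger feedback.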

- in step S10, the control system 100 initiates a query to the control units 150, 152, 154, 156 and, optionally, the control entities 120, 122, 124, 126 (see Fig. 5) assigned to the respective hearing device setting.

- the query is sent as a query message, which is a data structure exchanged between control units 150, 152, 154, 156.

- the control entity 120 may be associated with the control unit 150, which may be the control module 44 of the hearing device 12.

- the control entity 122 is associated with a control unit 152 or 152', wherein the control unit 152 is for example implemented into a smartphone of the user or the control unit 152' is for example implemented in a computer of the user.

- the control entity 124, which may be the HCP, can be associated with the control unit 154, which is implemented in a computing device of the HCP, and the control entity 126 may be associated with a control unit 156, wherein the control entity 126 may be a regulatory body or manufacturer.

- a query message may be sent either via a direct (wireless) connection, as depicted between the user device 14 and the hearing device 12, or via a network connection, e.g. the internet 70, as depicted for the control units 152', 154 and 156.

- upon approval, the hearing system 10 is allowed to apply the updated hearing device setting. If the update is not allowed, the hearing system returns to step S4. The approval is also sent as a message, in this case a feedback message, from the respective control unit 150, 152, 154, 156 to the hearing system 10.

- in step S12, if the control system comprises a next higher control level, the control system 100 subsequently notifies at least the control unit 150, 152, 154, 156 and, optionally, the control entity 120, 122, 124, 126 assigned to the next higher control level about the application of the updated hearing device setting.

- This notification, also in the form of a notification message, can either be sent directly or be batched.
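Direct versus batched notification can be sketched as follows, assuming notifications are plain text and a batch is flushed as one summary report once it reaches a fixed size. The batch size, the summary format and `NotificationBatcher` are hypothetical; a time-based flush would work equally well.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class NotificationBatcher:
    """Hypothetical batcher: sends a notification at once or collects a summary report."""
    batch_size: int
    pending: List[str] = field(default_factory=list)
    sent: List[str] = field(default_factory=list)   # stands in for the outgoing channel

    def notify(self, message: str, immediate: bool = False) -> None:
        if immediate:
            self.sent.append(message)
            return
        self.pending.append(message)
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Send all pending notifications as a single summary report."""
        if self.pending:
            summary = f"Summary of {len(self.pending)} change(s): " + "; ".join(self.pending)
            self.sent.append(summary)
            self.pending.clear()
```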

- in step S14, the routine ends, for example by switching the hearing system off, or returns to step S4 if continuous monitoring is performed.
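Steps S4 to S12 can be summarised in one function. In this minimal sketch each step is an injected callable, control levels are assumed to be consecutive integers (so the next higher level is `level + 1`), and step S14 is left to the caller, which either calls the function again for continuous monitoring or stops. `control_cycle` and its parameters are hypothetical names.

```python
from typing import Callable, Dict, Optional

Setting = Dict[str, float]


def control_cycle(
    determine_situation: Callable[[], str],              # step S4
    select_setting: Callable[[str], Optional[Setting]],  # step S6, None = no change
    level_of: Callable[[Setting], int],                  # step S8
    query: Callable[[int, Setting], bool],               # step S10, True = approved
    apply_setting: Callable[[Setting], None],
    notify: Callable[[int, Setting], None],              # step S12
    highest_level: int,
) -> bool:
    """Run one pass of steps S4 to S12; return True if a setting was applied."""
    situation = determine_situation()
    update = select_setting(situation)
    if update is None:
        return False                  # unchanged: back to step S4
    level = level_of(update)
    if not query(level, update):
        return False                  # not approved: back to step S4
    apply_setting(update)
    if level < highest_level:         # a next higher control level exists
        notify(level + 1, update)
    return True
```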

- the notifications to the next higher control unit and/or entity cover functional changes (e.g., new functions, modes, configuration settings) that have already been applied and are perceivable for the user, or that are suggested by the hearing devices (aka recommender systems) based on user history (e.g., user interaction history) or context (e.g., sensed acoustic environments a user stays in during the day).

- a notification may also comprise several options for the user to choose from (e.g., to apply and test out, possibly rate, and single out the preferred one). These notifications shall improve the transparency of the system towards the user.

- the basic shared control loop as shown in Fig. 3 is a loop between user and at least one hearing device 12.

- the hearing device 12 may comprise at least one processing unit dedicated to AI, like a deep neural network processor, as well as one or several sensors, and may support user interactions. Machine learning may happen during operation on the hearing devices or on a third connected device, based on data sensed by the hearing device sensors or data obtained from the user interacting with the hearing device. Further data may be prescribed, defining preconditions (e.g., severity or type of hearing loss, user type) for learning from the sensed or user/usage data, or may itself be the output of learning algorithms applied offline to other data sources (e.g., deep neural network models trained on other users' data from the manufacturer's database).

- the user and the hearing devices are both involved in steering the system behavior: the user receives notifications from the hearing device or hearing system with information about system functions applied or recommended to be applied, and the hearing system receives feedback from the user (acknowledge, revert and roll back, override), from which it can learn further.

- the control system 100 shows that the processor 132 is communicatively coupled with the memory 130, regardless of whether the processor 132 and the memory 130 are assigned directly to the hearing device or to another device as discussed above.

- the processor communicates with the different control entities 120, 122, 124, 126, which may be for example assigned as control entity 120 being the control module 44, control entity 122 being the user, control entity 124 being the HCP and control entity 126 being the manufacturer or a regulatory body.

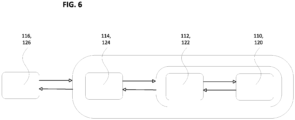

- Figure 6 shows a hierarchical (i.e., layered) shared control topology with an inner control loop, comprising the shared control among the user and the hearing devices according to Figure 3.

- the hearing device constitutes the lowest control level 110 with control entity 120.

- the user constitutes the next higher control level 112 with control entity 122.

- the shared control topology further comprises an outer control loop, comprising the shared control among the hearing care professional (HCP) and the system of the user and the hearing devices, wherein the HCP constitutes a third level 114, the HCP being the third control entity 124.

- the HCP may receive notifications from the system about changes applied or suggested, and can act upon them by triggering recommendations for the user, enabling new functions or features, adjusting configuration settings or enforcing hard bounds on the configuration space for the user as well as the steering units of the hearing devices.

- the optional fourth level 116 is constituted by the control entity 126, which may be, for example, the manufacturer, e.g. limiting the learning and changing of device settings.
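The level ordering of Fig. 6 can be modelled as an ordered enumeration. The reference numerals 110 to 116 are reused as enum values purely for readability, because they happen to increase with the hierarchy; `ControlLevel` and `next_higher` are hypothetical. Passing a shorter `levels` tuple models a system without the optional fourth level.

```python
from enum import IntEnum
from typing import Optional, Sequence


class ControlLevel(IntEnum):
    """Control levels of Fig. 6, from lowest to highest."""
    HEARING_DEVICE = 110   # control entity 120
    USER = 112             # control entity 122
    HCP = 114              # control entity 124
    MANUFACTURER = 116     # optional, control entity 126


def next_higher(level: ControlLevel,
                levels: Sequence[ControlLevel] = tuple(ControlLevel)) -> Optional[ControlLevel]:
    """Return the next higher level among `levels`, or None for the highest one."""
    ordered = sorted(levels)
    i = ordered.index(level)
    return ordered[i + 1] if i + 1 < len(ordered) else None
```

Because every level except the highest has exactly one successor, `next_higher` directly yields the recipient of a notification message in step S12.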

- Figure 7 shows another embodiment of the hierarchical shared control system with three layers and several actors on each layer.

- there may be additional actors on the outermost layer, such as a regulatory body (e.g., a public health authority or the US Food and Drug Administration) or a manufacturer (e.g., a hearing device manufacturer like Sonova), which may receive notifications from the underlying system about applied configuration settings, exercised working modes, provided quality guarantees or compliance with regulations, specifications or best practice guidelines.

- the hearing system as described above provides in the different embodiments one or more of the following benefits:

- the machine learning algorithm is improved by collecting feedback (e.g., ratings, acknowledgments) from users for applied configurations and configuration changes, which can be used as labels for learning (knowledge about true improvements); a bad rating or a user's veto could also indicate uncertainty of the steering unit and a need for gathering more representative data for the given situation.

- the machine learning algorithm learns users' preferences. It can use them for abstraction of user groups/profiles and provide recommendations/predictions to users based on their own and/or other users' feedback, as well as on environmental clues from sensor measurements as part of the user's hearing situation, which helps to increase users' convenience and hearing devices' performance (i.e., continuous adjustments in the field to make up for potentially limited product requirements/verification of the AI system).

- the users interact with the system and thus set their requirements, which leads to user-centered/user-driven customized settings and takes care of personalized requirements quasi a posteriori.

- the inclusion of a machine learning algorithm in the system is particularly beneficial if the AI can learn from the preferences and data of a multitude of users providing heterogeneous data.

- the safety of the system is enhanced by having human control entities at several levels (the user himself, experts like the HCP, the manufacturer, the regulatory body) acknowledge decisions made by the machine learning algorithm, to ensure compliance with medical regulations (medical safety, application/network security) and/or performance as intended, i.e., according to requirements/specification (machine learning algorithm safety as well as machine learning algorithm security: increased users' safety/security and hearing devices' quality, possible verification according to users' requirements to make up for limited product verification of machine learning algorithm systems, and anticipation of unexpected situations as quasi verification of the "black box" in the field through expert instances). It also remedies inherent limitations of the machine learning algorithm, namely missing determinism and transparency (e.g. what exactly has been learnt) and predictions that imply uncertainty.
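The first benefit above, using feedback as labels and treating a veto as a sign that more data is needed, can be sketched as a small conversion step. The event format `(situation, setting, feedback)`, the label values (1 for an acknowledgment, 0 for a veto) and `feedback_to_labels` are assumptions for illustration; other feedback kinds are simply ignored here.

```python
from typing import Dict, Iterable, List, Tuple

Event = Tuple[str, Dict[str, float], str]      # (situation, setting, feedback)
Label = Tuple[str, Dict[str, float], int]      # (situation, setting, 0 or 1)


def feedback_to_labels(events: Iterable[Event]) -> Tuple[List[Label], List[str]]:
    """Turn user feedback into training labels.

    'acknowledge' becomes label 1 and 'veto' becomes label 0. Situations with
    a veto are also returned, in first-seen order, as candidates for gathering
    more representative data.
    """
    labels: List[Label] = []
    needs_data: List[str] = []
    for situation, setting, feedback in events:
        if feedback == "acknowledge":
            labels.append((situation, setting, 1))
        elif feedback == "veto":
            labels.append((situation, setting, 0))
            if situation not in needs_data:
                needs_data.append(situation)
    return labels, needs_data
```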

Landscapes

- Health & Medical Sciences (AREA)

- General Health & Medical Sciences (AREA)

- Otolaryngology (AREA)

- Neurosurgery (AREA)

- Physics & Mathematics (AREA)

- Engineering & Computer Science (AREA)

- Acoustics & Sound (AREA)

- Signal Processing (AREA)

- Circuit For Audible Band Transducer (AREA)

Description

- The invention relates to a hierarchical control system with a hearing system including a hearing device. The invention further relates to a method, a computer program and a computer readable medium for controlling the hearing system.

- Hearing devices are generally small and complex devices. Hearing devices can include a processor, microphone, speaker, memory, housing, and other electronic and mechanical components. Some example hearing devices are Behind-The-Ear (BTE), Receiver-In-Canal (RIC), In-The-Ear (ITE), Completely-In-Canal (CIC), and Invisible-In-The-Canal (IIC) devices.

- A user may prefer one of these hearing devices over another based on hearing loss, aesthetic preferences, lifestyle needs, and budget.

- Today's hearing devices mainly work based on the settings that the user receives from the user's audiologist or hearing care professional (HCP). Such initial settings typically have to be optimized, at least during an initial period in which the hearing device is worn.

- There are several ways to improve this configuration, such as follow-up fittings with the HCP, or adjustments by the user, who can change settings either directly at the hearing device or, e.g., via apps running on a smartphone.

- Furthermore, some hearing devices are configured for automatic adjustments. In the case of automatic adjustments based on sensors or user feedback, the user simply receives adjusted settings for the hearing devices without any interaction. Thus, the user may not be aware of what has caused the change, may not even perceive any change, and cannot control this change. The user therefore also cannot reject the change when he would prefer the previous setting.

- Document EP3236673 A1 discloses a method of adjusting a hearing aid, which hearing aid is adapted for processing sound signals based on sound processing parameters stored in the hearing aid, the method comprising: creating a user profile of the hearing aid user in a server device, the user profile comprising at least a user identification and hearing aid information specifying the hearing aid; preparing a list of user interaction scenarios in the server device, based on the user profile; selecting a user interaction scenario from the list with a mobile device of the user communicatively interconnected with the server device; determining one or more hearing aid adjustment parameters based on the selected user interaction scenario; displaying one or more input controls for adjusting the determined one or more hearing aid adjustment parameters with the mobile device; after adjustment of at least one of the hearing aid adjustment parameters with the one or more input controls: deriving sound processing parameters for the hearing aid based on the adjusted one or more hearing aid adjustment parameters; applying the derived sound processing parameters in the hearing aid, such that the hearing aid is adapted for generating optimized sound signals based on the applied sound processing parameters.

- Document US11213680 B2

- It is an objective of the invention to provide a hearing system which provides an improved user experience. It is a further objective to provide enhanced safety for the user.

- These objectives are achieved by the subject-matter of the independent claim. Further exemplary embodiments are evident from the dependent claims and the following description.

- A first aspect of the invention relates to a hierarchical control system with a hearing system including a hearing device, the control system implementing at least two control levels. The control system comprises at least one control unit assigned to the control levels. Each control unit may be assigned to one of the control levels. Such control units may be parts of the hearing device, of a user device such as a smartphone, and/or of further computing devices communicatively connected with the hearing system. There also may be control entities assigned to the control levels, such as the hearing device, the user, an HCP, etc.

- The hearing system includes a memory storing instructions, and a processor communicatively coupled to the memory and configured to execute at least parts of the control system.

- The control system and in particular the hearing system is further configured to execute the following instructions: determining and monitoring a hearing situation of a user of the hearing system based on a status of the hearing system and user related data, for example stored in the memory; and determining, based on the hearing situation of the user, an updated hearing device setting. The updated hearing device setting also may be associated with one of the at least two control levels.

- The hearing device according to the present invention may in particular be one of Behind-The-Ear (BTE), Receiver-In-Canal (RIC), In-The-Ear (ITE), Completely-In-Canal (CIC), and Invisible-In-The-Canal (IIC) devices. Such a hearing device typically comprises a housing, a microphone or sound detector, and an output device including e.g. a speaker (also "receiver") for delivering an acoustic signal, i.e. sound, into an ear canal of a user. The output device can also be configured, alternatively or in addition, to deliver other types of signals to the user which are representative of audio content and which are suited to evoke a hearing sensation. The output device can in particular be configured to deliver an electric signal for driving an implanted electrode for directly stimulating hearing receptors of the user, as is e.g. the case in a cochlear implant. Output devices of other types of hearing devices are configured to transmit an acoustic signal directly via bone conduction, as is e.g. the case with so-called BAHA devices (Bone Anchored Hearing Aid - BAHA), which transmit the acoustic signal via a pin implanted into the skull of a user. The hearing device may comprise a processor and a memory. The hearing device may further include other electronic and mechanical components, like an input unit or a transmitter and/or one or more sensors.

- The hearing system may further include a mobile device being a user device, for example a smartphone or other connectable device, which can be connected to the hearing device over a network or by a direct, in particular wireless, connection. In such a case, sensors included in the mobile device may be used to collect sensor data to be sent to one or more processors of the hearing system.

- The hearing system is configured to determine and monitor a hearing situation of the user. A hearing situation of the user is to be understood to include factors which change the personal hearing experience of the user, such as the acoustics of the environment, background and foreground noise and sound, as well as medical conditions of the user. The hearing system may take into account measurements from one or more sensors to determine the hearing status.

- A processor for determining, based on the hearing situation of the user, an updated hearing device setting may be a processor internal to the hearing device or a processor of a connected device or of a connected network, e.g. accessible through the internet. The updated hearing device setting may be obtained from a database of available hearing device settings or can be procedurally generated at runtime based e.g. on the current hearing situation and user preferences. The control system may comprise such a database. For example, based on the hearing situation of the user, an updated hearing device setting may be selected from a database of available hearing device settings, each available hearing device setting being associated with one of the at least two control levels. The hearing device settings may include settings for a single parameter, e.g. volume, or settings for a set of several parameters, like filter settings, loudness, balances, etc.

- The available hearing device settings stored in the database may include a control level associated with the respective hearing device setting in which case the step of associating the updated hearing device setting with a control level simply requires obtaining and utilizing the corresponding information of the database. In case the updated hearing device setting is generated at runtime, the step of associating the updated hearing device setting with a control level may include e.g. associating the control level procedurally according to a predefined set of rules or may include obtaining an associated control level e.g. from a lookup table in which control levels are assigned to certain hearing device settings.
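The two ways of associating a control level described above can be combined in one function: use the level stored with a database entry if there is one, otherwise derive it at runtime from a lookup table. The table contents, the rule "highest level required by any contained parameter", and the choice to give unknown parameters the highest level are all assumptions made for this sketch; `LEVEL_LOOKUP` and `associated_level` are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical lookup table: parameter -> control level needed to change it
# (0 = hearing device, 1 = user, 2 = HCP, 3 = manufacturer/regulatory body).
LEVEL_LOOKUP: Dict[str, int] = {"volume": 1, "program": 1, "fitting_gain": 2, "max_output": 3}


def associated_level(setting: Dict[str, float], stored_level: Optional[int] = None) -> int:
    """Return the control level associated with a hearing device setting."""
    if stored_level is not None:
        return stored_level               # setting taken from the database
    top = max(LEVEL_LOOKUP.values())
    # Runtime-generated setting: the most demanding parameter decides; unknown
    # parameters conservatively require the highest level.
    return max((LEVEL_LOOKUP.get(p, top) for p in setting),
               default=min(LEVEL_LOOKUP.values()))
```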

- The control system and/or the hearing system may implement one or more machine learning algorithms, like a trained neural network, which perform the determination and monitoring of the hearing situation and/or the selection of the updated hearing device setting. The algorithm may be executed in the hearing device, in a user or mobile device such as the user's smartphone, or in the cloud, i.e. on one or several remote servers, or in a combination thereof, i.e. by the control system.

- The database may be provided only for one user or may be provided for several users with several hearing devices. Such a database may comprise hearing device settings and their changes for a plurality of users. The device settings may be stored in the database together with information about the hearing situations in which the settings should be applied and for which users, in particular depending on user profiles and medical conditions like the hearing loss type and the amount of hearing loss, i.e. the user related data.

- The control system is further configured to generate a query message for approval of the updated hearing device setting and to send the query message to the control unit assigned to the control level associated with the updated hearing device setting. In such a way, the control unit and/or the associated control entity assigned to the control level associated with the updated hearing device setting may be queried for approval of the updated hearing device setting.

- The control system is further configured to receive a feedback message in response to the query message from the control unit and, if the feedback message contains an approval of the updated hearing device setting, to apply the updated hearing device setting to the hearing device. The control unit may automatically decide whether to approve the update or not. It is also possible that information about the update is presented to a control entity, for example via a user interface, which then approves the update via the user interface. In any case, the control unit generates the feedback message, which contains information on whether the update is approved or not.

- Upon approval by the control unit and optionally control entity, the hearing system is allowed to apply the updated hearing device setting.

- The control system is further configured to generate a notification message about the application of the updated hearing device setting, if the hierarchical control system comprises a next higher control level. The notification message is sent to the control unit assigned to the next higher control level. Subsequently, at least the control unit and optionally the control entity assigned to the next higher control level is notified about the application of the updated hearing device setting, if the control system comprises a next higher control level. In such a way, the user can be protected from changes in the hearing device setting that may not be beneficial, i.e. may not help the user to compensate for his or her hearing loss, and/or that may be dangerous for him or her. For example, the volume of a hearing device may not be turned up as high for a user with a mild hearing loss as for a user with a severe hearing loss.
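The query/feedback exchange described above can be sketched with two message types and a control unit that either decides automatically or defers to its control entity (for example via a prompt on a user interface). The message fields, `ControlUnit`, and the default of not approving when no decision is available are assumptions of this sketch, not requirements stated in the source.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class QueryMessage:
    setting: Dict[str, float]   # the updated hearing device setting
    level: int                  # control level associated with the setting


@dataclass
class FeedbackMessage:
    approved: bool
    from_level: int
    reason: str


class ControlUnit:
    """Hypothetical control unit answering query messages for its level."""

    def __init__(self, level: int,
                 auto_rule: Optional[Callable[[QueryMessage], Optional[bool]]] = None,
                 ask_entity: Optional[Callable[[QueryMessage], bool]] = None) -> None:
        self.level = level
        self.auto_rule = auto_rule      # automatic decision; None result = undecided
        self.ask_entity = ask_entity    # e.g. a prompt shown to the user or HCP

    def handle(self, query: QueryMessage) -> FeedbackMessage:
        if self.auto_rule is not None:
            decision = self.auto_rule(query)
            if decision is not None:
                return FeedbackMessage(decision, self.level, "automatic")
        if self.ask_entity is not None:
            return FeedbackMessage(self.ask_entity(query), self.level, "control entity")
        # Conservative default (an assumption): without a decision, do not approve.
        return FeedbackMessage(False, self.level, "no decision available")
```

In this sketch, the hearing system would apply the setting only if `handle(...).approved` is true.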

- Control entities assigned to a control level can be algorithms, for instance machine learning algorithms, in particular algorithms implementing an artificial intelligence, or persons, e.g. a user, a designated caretaker or an HCP. In such a way, a control unit, being a computing device, may be or may comprise a control entity.

- Control entities can also be collective entities, e.g. a manufacturer or a regulatory body. Algorithms may in particular be control entities assigned to the lowest available control level, whereas a regulatory body and/or manufacturer may be the control entity assigned to the highest available control level.

- There may be several hierarchically ordered control levels with different assigned control entities. "Hierarchical ordering" herein refers to a well-defined ordering of the control levels such that each control level - besides a highest control level - has exactly one next higher control level. The hierarchical ordering can be defined by an authorization that is required to apply a setting associated with the respective control level. Typically, higher control levels are authorized to apply settings of a lower control level whereas lower control levels are not authorized to apply settings associated with a higher control level. For instance, a user may be authorized to apply personalized settings of the hearing device whereas the HCP may be authorized to change personalized settings as well as fitting parameters related to the hearing loss of the user (which the user is not authorized to change). The regulatory body or manufacturer may e.g. be authorized to change hearing device related properties as e.g. a maximum allowable or achievable gain which the HCP is not authorized to change.

- If a certain control level does not have sufficient authority to apply specific settings, such settings may be proposed to a control entity assigned to a higher control level which can approve and apply such settings by way of its higher authority.
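The authorization rule and the escalation described in the two paragraphs above can be sketched as a routing decision. Levels are assumed to be integers (0 = hearing device, 1 = user, 2 = HCP, 3 = manufacturer or regulatory body); a level may apply settings of its own or a lower level, and otherwise the setting is proposed to the lowest higher level with sufficient authority, skipping intermediate levels in this simplified version. `route_setting` is hypothetical.

```python
from typing import Sequence, Tuple


def route_setting(required_level: int, requester_level: int,
                  levels: Sequence[int]) -> Tuple[str, int]:
    """Decide whether the requester may apply a setting or must propose it.

    Returns ("apply", requester_level) if the requester is authorized, or
    ("propose", level) naming the lowest higher level that is authorized.
    """
    if requester_level >= required_level:
        return ("apply", requester_level)
    for level in sorted(levels):
        if level > requester_level and level >= required_level:
            return ("propose", level)
    raise ValueError("no control level is authorized to apply this setting")
```

For instance, a fitting change requiring level 2 that is triggered at the user level 1 would be proposed to the HCP, who can approve and apply it.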

- For safety reasons, the control level associated with a particular setting for one or more parameters may be related to the required deviation from the actual settings in some embodiments. For example, the volume may be increased by the user solely within a specific margin, but within a wider margin by the HCP, etc. The same may apply to other parameters. As such, the control level assigned to a particular setting may be determined at runtime, based on the required deviation from the actual settings. The associated control level can be procedurally determined or can be read e.g. from a lookup table stored in the control system. This enhances the safety of the hearing device by avoiding an increase in the user's hearing loss caused by harmful settings, or by settings that may be harmful because the change is too large or too sudden. Furthermore, when the selection of the settings is done automatically by a control unit, the control unit and/or control entity of the next higher control level receives a corresponding notification and may check whether the applied selection is beneficial. Alternatively, the selected setting can be proposed to the control unit and/or control entity of the next higher control level and may be approved by this control unit and/or control entity.
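The runtime, deviation-based assignment of a control level can be sketched for the volume example. The margins below are invented placeholders, not values from the source; in practice they would come from the fitting and from the lookup table mentioned above. `VOLUME_MARGINS_DB` and `level_for_volume_change` are hypothetical.

```python
from typing import List, Tuple

# Hypothetical margins (dB) within which each level may change the volume:
# 0 = hearing device algorithm, 1 = user, 2 = HCP; anything larger needs level 3.
VOLUME_MARGINS_DB: List[Tuple[int, float]] = [(0, 2.0), (1, 6.0), (2, 15.0)]
HIGHEST_LEVEL = 3


def level_for_volume_change(current_db: float, proposed_db: float) -> int:
    """Return the lowest control level allowed to make this volume change."""
    deviation = abs(proposed_db - current_db)
    for level, margin in VOLUME_MARGINS_DB:   # margins sorted from narrow to wide
        if deviation <= margin:
            return level
    return HIGHEST_LEVEL
```

Larger or more sudden changes thus automatically move up the hierarchy, which matches the safety rationale given above.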

- The machine learning algorithm may be trained as to which settings to propose or to apply in a given hearing situation and/or for a given condition of the user. The machine learning algorithm may be trained with historical data of the user of the hearing device and/or with data from a plurality of users obtained via a data sharing network. For example, the machine learning algorithm may be trained to propose a specific setting with a higher probability when the respective setting is satisfactory for many users in the same or comparable hearing situations.
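The idea of proposing a setting with higher probability when many users were satisfied with it can be made concrete with a simple frequency estimate. Note that this is not a trained machine learning model: it is a deliberately simple stand-in that counts satisfied users per hearing situation and normalizes the counts into proposal probabilities. The record format and the function name `proposal_probabilities` are assumptions.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

# Each record: (hearing situation, setting id, whether the user was satisfied).
Record = Tuple[str, str, bool]


def proposal_probabilities(records: Iterable[Record]) -> Dict[str, Dict[str, float]]:
    """Per hearing situation, weight each setting by how often users were satisfied with it."""
    satisfied: Dict[str, Counter] = defaultdict(Counter)
    for situation, setting, ok in records:
        if ok:
            satisfied[situation][setting] += 1
    probabilities: Dict[str, Dict[str, float]] = {}
    for situation, counts in satisfied.items():
        total = sum(counts.values())
        probabilities[situation] = {s: n / total for s, n in counts.items()}
    return probabilities
```

A learned model would generalize to comparable (not only identical) hearing situations; the counting approach only illustrates the intended bias towards widely accepted settings.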

- New hearing device settings, which may be made by the user, the HCP, etc., can be included in the database and/or an optional additional database, and the machine learning algorithm can evaluate whether these settings are satisfactory in specific situations. The new settings can also be included in the training process of the machine learning algorithm.

- The improvements of the hearing device settings can either be audiological improvements or usability improvements.

- Audiological improvements may comprise preferences of the user in specific environments, based on sensor measurements (microphone, accelerometer, GPS location, biometric sensors, ...), for example when working, travelling, listening to music or doing sports.

- Usability improvements may comprise, e.g., adapting or changing the timing of user interactions (responsivity, etc.) based on a user interaction history (e.g., many corrective adjustments) and/or on the emotional or health state of the user (e.g., shaking or trembling) measured by a biometric sensor.
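One possible reading of adapting the timing of user interactions from the interaction history is to slow down automatic switching when the user frequently corrects it. The sketch below assumes a single hypothetical parameter, the delay before an automatic change takes effect, and uses illustrative thresholds (30 % and 5 % correction rate) and an illustrative factor of 1.5; biometric inputs such as trembling are not modelled.

```python
from dataclasses import dataclass


@dataclass
class InteractionTiming:
    """Hypothetical usability parameter: delay before an automatic change takes effect."""
    switch_delay_s: float = 2.0
    min_delay_s: float = 1.0
    max_delay_s: float = 10.0


def adapt_timing(timing: InteractionTiming, automatic_changes: int, corrections: int) -> InteractionTiming:
    """Slow down automatic switching if the user often corrects it, speed it up otherwise.

    The 30 % / 5 % thresholds and the factor 1.5 are illustrative assumptions.
    """
    if automatic_changes == 0:
        return timing
    correction_rate = corrections / automatic_changes
    delay = timing.switch_delay_s
    if correction_rate > 0.30:
        delay *= 1.5  # many corrective adjustments: be less responsive
    elif correction_rate < 0.05:
        delay /= 1.5  # changes are accepted: be more responsive
    # Keep the delay within the configured bounds.
    delay = max(timing.min_delay_s, min(timing.max_delay_s, delay))
    return InteractionTiming(delay, timing.min_delay_s, timing.max_delay_s)
```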

- According to an embodiment, in the control system, each control unit is associated with a control entity. Thus, there may be, for example, a first, lower-level control unit that is included in or associated with the hearing device. There may be a second, next higher-level control unit, which may be implemented in the user device and which may be associated with the user as control entity. One or more of the control units may implement an algorithm, in particular a machine learning algorithm, which provides the functionality of a control entity, i.e. which is, for example, configured to approve updates of hearing device settings and to generate feedback messages.

- The user may receive a notification with a suggestion for new possible settings for the hearing device, based on the machine learning algorithm and sensor measurements. The user's feedback, i.e. whether the user applies the new setting(s) and whether he or she keeps them in the long run, will then also be used to improve future recommendations. This information may be included in the training of the machine learning algorithm.
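The user's reaction to a suggestion (applied or not, kept long-term or not) could be turned into a training example roughly as follows. This is a hedged sketch: the record fields, the binary labelling scheme and the seven-day threshold for "kept in the long run" are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class RecommendationOutcome:
    situation: str
    setting: str
    applied: bool      # did the user accept the suggested setting?
    kept_days: float   # how long the setting stayed active before being changed


def to_training_example(outcome: RecommendationOutcome,
                        keep_threshold_days: float = 7.0) -> Tuple[str, str, int]:
    """Label 1 if the suggestion was applied and kept long-term, else 0 (threshold is hypothetical)."""
    label = int(outcome.applied and outcome.kept_days >= keep_threshold_days)
    return outcome.situation, outcome.setting, label
```

A graded label (e.g. partial credit for settings applied but later reverted) would be an equally plausible design; the binary form simply keeps the example short.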

- Information in a feedback message and/or a notification message may be provided to the user by a user interface, either in an app, directly by the hearing device (e.g. as a voice message), or by a user device or mobile device. The information may be displayed on the user device.

- According to an embodiment, the control system includes at least three control levels, such as three control levels or four control levels.

- A control unit assigned to a lowest, first control level may be associated with the hearing device, and/or a control unit assigned to a next higher, second control level may be associated with a user of the hearing system, and/or a control unit assigned to a next higher, third control level may be associated with an HCP or a caretaker, and/or a control unit assigned to a next higher, fourth control level may be associated with a regulatory body or a manufacturer.

- The HCP may also be notified about the recommended and/or accepted changes of the hearing device settings via the corresponding control unit, which may be a computing device in his or her office. The notification can be sent as a message to the HCP, in particular to a device of the HCP, e.g. a computer, whenever the user receives this information. Alternatively, the message is stored and the HCP is notified the next time the user visits the HCP's office, e.g. when the hearing device is connected to a device of the HCP.
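The two notification variants (immediate delivery, or storing messages until the hearing device is next connected to a device of the HCP) can be sketched with a small queue. The class name `HcpNotifier`, the `send` callback and the `on_hcp_connection` hook are hypothetical; a real system would hand messages to whatever transport connects the control units.

```python
from collections import deque
from typing import Callable, Deque


class HcpNotifier:
    """Hypothetical sketch: forward notifications immediately or hold them until the next HCP session."""

    def __init__(self, send: Callable[[str], None], immediate: bool) -> None:
        self._send = send
        self._immediate = immediate
        self._pending: Deque[str] = deque()

    def notify(self, message: str) -> None:
        if self._immediate:
            self._send(message)
        else:
            self._pending.append(message)  # stored until the next connection

    def on_hcp_connection(self) -> None:
        """Called when the hearing device connects to a device of the HCP, e.g. at the next visit."""
        while self._pending:
            self._send(self._pending.popleft())
```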

- According to an embodiment, the control system and in particular the control units include digital interfaces for presenting information to and receiving information from one or more of the control entities. The control units may also be communicatively coupled to user interfaces for presenting information to and receiving information from one or more of the control entities. The query message, the feedback message and/or the notification message may be data structures exchanged between computing devices (such as the control units) via their interfaces. These messages may also contain information to be provided to a person via a user interface, such as a text message or a voice message. The user interface may comprise a display screen or an electroacoustic transducer in the form of a speaker for communicating visual or acoustic information, respectively, to the user, which is provided or triggered by the message. The display screen may be a touchscreen for receiving a user input. The user interface may also comprise other input means for receiving a user input, such as a button, a separate touch-sensing area (i.e. a touch pad) or an electroacoustic transducer in the form of a microphone for acoustic input.
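Treating the query, feedback and notification messages as data structures exchanged between control units could look like the sketch below. The field names and the choice of JSON as wire format are assumptions for illustration; the description does not specify an encoding. The `reason` and `text` fields stand for the human-readable content that a user interface may display or speak.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, Optional


@dataclass
class QueryMessage:
    setting_id: str
    parameters: Dict[str, float]
    control_level: int
    reason: str  # human-readable text for a user interface


@dataclass
class FeedbackMessage:
    setting_id: str
    approved: bool
    comment: Optional[str] = None


@dataclass
class NotificationMessage:
    setting_id: str
    applied_at_level: int
    text: str


MESSAGE_TYPES = {cls.__name__: cls for cls in (QueryMessage, FeedbackMessage, NotificationMessage)}


def encode(message: object) -> str:
    """Serialize a message for exchange between control units (JSON is an assumption)."""
    return json.dumps({"type": type(message).__name__, "body": asdict(message)})


def decode(payload: str) -> object:
    """Rebuild the message object from its JSON representation."""
    data = json.loads(payload)
    return MESSAGE_TYPES[data["type"]](**data["body"])
```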

- In particular, the information may be displayed on a screen of the user device or on a device of the HCP or other control entity.

- According to an embodiment, the status of the hearing system is based on sensor data acquired by the hearing system. For example, the status of the hearing system includes a motion status and/or a temperature.

- According to an embodiment, the hearing situation is determined and monitored by classifying sensor data acquired by the hearing system and, optionally, the user related data, which is stored in the hearing system, in particular the hearing device. As an example, the audio data acquired by the hearing device may be classified to determine what kind of sounds the user is currently hearing. Such hearing situations may, for example, distinguish between speech and music. As a further example, motion data or position data may be classified to determine whether the user is walking, riding or travelling by car.

- According to an embodiment, the sensor data comprises audio data acquired with a microphone of the hearing system and the audio data is classified with respect to different sound situations as hearing situations.

- According to an embodiment, the sensor data comprises position data acquired with a position sensor of the hearing system and the position data is classified with respect to different locations and/or movement situations of the user as hearing situations.
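A classification of position data into movement situations can be illustrated with a rule-based stand-in for the classifier. This sketch assumes position samples already converted to a local planar frame in metres, uses mean speed as the only feature, and applies hypothetical speed thresholds; "riding" is interpreted here as, e.g., cycling, which is an assumption. At least one sample is expected.

```python
import math
from typing import List, Tuple

# (time in s, x in m, y in m) in a local planar frame; an assumption for simplicity.
Sample = Tuple[float, float, float]


def mean_speed(samples: List[Sample]) -> float:
    """Path length divided by elapsed time, in m/s."""
    distance = sum(math.dist(a[1:], b[1:]) for a, b in zip(samples, samples[1:]))
    duration = samples[-1][0] - samples[0][0]
    return distance / duration if duration > 0 else 0.0


def classify_movement(samples: List[Sample]) -> str:
    """Rule-based stand-in for the classifier; thresholds in m/s are illustrative."""
    speed = mean_speed(samples)
    if speed < 0.3:
        return "stationary"
    if speed < 2.5:
        return "walking"
    if speed < 8.0:
        return "riding"
    return "car"
```

In practice the classification would more likely be performed by the first machine learning algorithm mentioned in this description, using further features such as accelerometer data.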

- According to an embodiment, the sensor data comprises medical data acquired with a medical sensor of the hearing system and the medical data is classified with respect to different medical conditions of the user as hearing situations.

- According to an embodiment, the user related data comprises information on a hearing loss of the user and/or an audiogram of the user. The user related data also may comprise settings of a sound processing of the hearing system, in particular, the hearing device.

- According to an embodiment, the hearing situation is classified with a first machine learning algorithm. The first machine learning algorithm may be executed in the hearing system, in particular in the hearing device.

- According to an embodiment, the updated hearing device setting is determined with a second machine learning algorithm. The second machine learning algorithm may be executed in a control unit associated with one control entity. The classification of the hearing situation may be sent to the control unit, which then selects the updated hearing device setting from the database.

- A further aspect of the invention relates to a method for controlling a hearing system and for improving the settings of a hearing device. The method may be performed by the control system.

- According to an embodiment, the method comprises: determining and monitoring a hearing situation of a user of the hearing system based on a status of the hearing system and user related data stored in the hearing system; and determining, based on the hearing situation of the user, an updated hearing device setting. For example, based on the hearing situation of the user, an updated hearing device setting may be selected from a database of available hearing device settings, each available hearing device setting being associated with one of the at least two control levels.
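Selecting an updated setting from a database of available settings, each tied to a control level, might be sketched as below. The record layout and in particular the `score` field (standing in for the output of the second machine learning algorithm) are hypothetical; the sketch simply picks the highest-scoring stored setting for the classified hearing situation that differs from the currently active one.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class AvailableSetting:
    setting_id: str
    situation: str                # hearing situation the setting is intended for
    parameters: Dict[str, float]
    control_level: int            # level whose approval the setting requires
    score: float                  # hypothetical ranking score, e.g. from a trained model


def select_setting(database: List[AvailableSetting], situation: str,
                   current_id: Optional[str]) -> Optional[AvailableSetting]:
    """Return the best-scoring setting for the situation, excluding the active one."""
    candidates = [s for s in database
                  if s.situation == situation and s.setting_id != current_id]
    return max(candidates, key=lambda s: s.score, default=None)
```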

- According to an embodiment, the method further comprises: associating the updated hearing device setting with one of the at least two control levels. This may be done by the hearing device, by the user device or by other control units of the control system.

- According to an embodiment, the method further comprises: generating a query message for approval of the updated hearing device setting and sending the query message to the control unit assigned to the control level associated with the updated hearing device setting; receiving a feedback message in response to the query message from the control unit and, if the feedback message contains an approval of the updated hearing device setting, applying the updated hearing device setting to the hearing device; and, if the control system comprises a next higher control level, generating a notification message about the application of the updated hearing device setting and sending the notification message to the control unit assigned to the next higher control level.

- A further aspect of the invention relates to a computer program for controlling a hearing system, which, when being executed by at least one processor, is adapted to carry out the method such as described above and below. A further aspect of the invention relates to a computer-readable medium, in which such a computer program is stored.
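The query, feedback, application and notification steps of the method can be summarized in a compact sketch. To keep it self-contained, message exchange is reduced to function calls: each control unit is modelled as a hypothetical callable that receives the setting and returns the approval contained in its feedback, and notifications are collected in a list instead of being sent. The class and method names are assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# A control unit is modelled as a callable that answers a query with approve/reject.
Approver = Callable[[str, Dict[str, float]], bool]


@dataclass
class ControlSystem:
    approvers: Dict[int, Approver]  # control level -> assigned control unit
    device_settings: Dict[str, float] = field(default_factory=dict)
    notifications: List[str] = field(default_factory=list)

    def next_higher(self, level: int) -> Optional[int]:
        higher = [lvl for lvl in self.approvers if lvl > level]
        return min(higher) if higher else None

    def process(self, setting_id: str, parameters: Dict[str, float], level: int) -> bool:
        # Query the control unit of the associated level and evaluate its feedback.
        approved = self.approvers[level](setting_id, parameters)
        if not approved:
            return False
        self.device_settings.update(parameters)  # apply to the hearing device
        higher = self.next_higher(level)
        if higher is not None:  # notify the next higher level, if one exists
            self.notifications.append(f"level {higher}: {setting_id} applied at level {level}")
        return True
```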

- For example, the computer program may be executed in a processor of the control system, hearing system and/or a hearing device, which hearing device, for example, may be carried by the person behind the ear. The computer-readable medium may be a memory of this control system, hearing system and/or hearing device. The computer program also may be executed by a processor of a mobile device, which is part of the hearing system, and the computer-readable medium may be a memory of the mobile device. It also may be that steps of the method are performed by the hearing device and other steps of the method are performed by the mobile device.

- In general, a computer-readable medium may be a floppy disk, a hard disk, a USB (Universal Serial Bus) storage device, a RAM (Random Access Memory), a ROM (Read Only Memory), an EPROM (Erasable Programmable Read Only Memory) or a FLASH memory. A computer-readable medium may also be a data communication network, e.g. the Internet, which allows downloading a program code. The computer-readable medium may be a non-transitory or transitory medium.

- It has to be understood that features of the method as described in the above and in the following may be features of the computer program, the computer-readable medium and the hearing system as described in the above and in the following, and vice versa.

- These and other aspects of the invention will be apparent from and elucidated with reference to the embodiments described hereinafter.

- Below, embodiments of the present invention are described in more detail with reference to the attached drawings.

- Fig. 1 schematically shows a hearing system according to an embodiment of the invention.

- Fig. 2 shows a block diagram of components of the hearing system according to Fig. 1.

- Fig. 3 schematically shows a basic shared control loop between a user and at least one hearing device.

- Fig. 4 schematically shows a control system according to an embodiment of the invention.

- Fig. 5 schematically shows control units associated with the different control entities.

- Fig. 6 schematically shows a shared control topology.

- Fig. 7 shows a hierarchical shared control system with four layers.

- The reference symbols used in the drawings, and their meanings, are listed in summary form in the list of reference symbols. In principle, identical parts are provided with the same reference symbols in the figures.

- Fig. 1 schematically shows a hearing system 10 according to an embodiment of the invention. The hearing system 10 includes a hearing device 12 and a user device 14 connected to the hearing device 12. As an example, the hearing device 12 is formed as a behind-the-ear device carried by a user (not shown) of the hearing device 12. It has to be noted that the hearing device 12 is a specific embodiment and that the method described herein also may be performed with other types of hearing devices, such as e.g. an in-the-ear device or one or two of the hearing devices 12 mentioned above. The user device 14 may be a smartphone, a tablet computer, and/or smart glasses.

- The